Abstract

Multi-objective evolutionary algorithms (MOEAs) have been the choice for generating a set of Pareto-optimal (PO) solutions in one run. However, these algorithms sometimes suffer slow and poor convergence toward the PO front. One of the remedies to improve their convergence is to couple global search of MOEAs with local search. However, such coupling brings other implementation challenges, such as what, when, and how many solutions can be chosen for local search with MOEAs? In this paper, these challenges are addressed by developing a local search module that can choose solutions for local search using a set of reference lines. The heuristic strategies are also developed with the module for determining the frequency of executing local search and for terminating MOEA adaptively using a statistical performance indicator. The proposed algorithm, which is referred to as \({\text {RM}}^2\)OEA, is tested on 2-objective ZDT and 3-objective DTLZ test problems. Results demonstrate faster and improved convergence of \({\text {RM}}^2\)OEA over a benchmark MOEA from the literature.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

A multi-objective optimization problem (MOOP) can be written as

where \(F_{\text {MOOP}}({\mathbf {x}})\) is the vector of conflicting objectives \(({f_j}({\mathbf {x}}),\forall j = 1, \dots , M)\), \({\mathbf {x}}\) is the vector of decision variables, and S is the feasible search space. Such MOOP can be solved efficiently by using multi-objective evolutionary algorithms (MOEAs) because these algorithms can generate a set of Pareto-optimal (PO) solutions in one run. Therefore, MOEAs have been successfully used for theoretical [8, 29] and engineering optimization problems [1, 19, 23, 24].

Despite of showing success in solving MOOP, MOEAs still suffer slow and poor convergence for some problems [12, 26]. It generally occurs when many solutions of MOEA become non-dominated. In this case, the environmental selection of MOEA cannot differentiate such solutions, thereby reducing the selection pressure [18]. Moreover, the variation operators such as crossover and mutation operators are also becoming ineffective. One of the remedies to address this challenge is to develop the hybrid or memetic MOEAs [12, 15, 25]. These memetic algorithms are developed by coupling global search of MOEA with the local search techniques by converting a MOOP into a single-objective optimization problem (SOOP).

In the literature, many attempts have been made to implement local search with global search of MOEAs. First of all, a MOOP is converted into a SOOP using the weighted-sum method [10, 11], the \(\epsilon -\)constraint method [15, 25], or the scalarizing functions [7, 26]. After this conversion, a set of solutions for performing local search is chosen. In this case, either an appropriate solution [26] or a set of solutions is selected. The set either consists of the current non-dominated solutions [13, 17], or offspring solutions after crossover and mutation [2]. The selection of the appropriate non-dominated solutions is also made heuristic when any solution can be selected with some probability [11, 12]. Moreover, the local search is executed at the beginning on the initial population, during the generations, after finishing the fixed number of generations, or combination of two or more such strategies [11, 12]. These memetic algorithms mostly get terminated after a fixed number of generations [10] or function evaluations [15, 25]. A little focus has been made to terminate these algorithms adaptively such as in [6, 7].

From the literature, it is found that when local search is coupled with MOEAs, a set of implementation challenges emerges that need to be addressed for effective working of memetic MOEAs [11, 26]. The first challenge is the criterion for choosing a solution or a set of solutions for local search. Since MOEAs work on a population, the effectiveness of memetic MOEAs depends on this challenge. The second challenge is the number of solutions for local search since limited local searches on few solutions may not be effective or too much local searches on many solutions can become computationally expensive. The third challenge is when to execute local search? Since MOEAs are generation or iteration-based algorithms, it is important to know the right time for executing local search. Otherwise, many local search computations can be wasted without improving the convergence. The fourth challenge is the termination condition for memetic MOEAs. A fixed number of generations or function evaluations may not be needed since a memetic MOEA might have converged earlier or more number of generations may be needed for better convergence. Addressing all these challenges ensures a balance between local and global searches for better convergence of any memetic algorithm.

This papers focuses on the above challenges by developing a local search module that can be coupled with the existing MOEAs. The following are the contributions of the proposed local search module.

-

Selection of solutions for local search using a set of reference lines: In this procedure, a single solution closest to each reference line is chosen for local search. The number of local search solutions is calculated using Das and Denis method [4].

-

A heuristic strategy for the frequency of executing local search using the statistical performance indicator called modified inverse generalized distance (\({\text {IGD}}^{+}\)) indicator.

-

A heuristic strategy for an adaptive termination criterion for the proposed MOEA using \({\text {IGD}}^{+}\) indicator.

-

Comparative analysis of the proposed MOEA over a set of 2- and 3-objective test problems with the existing MOEA.

The remaining paper is organized in five sections. Section 2 presents the relevant literature survey of hybrid or memetic MOEAs and their strategy for balancing local and global searches. Section 3 presents the proposed reference-lines-steered memetic MOEA with implementation details of the local search module. The proposed MOEA is tested and its results are presented in Sect. 4. The paper is concluded in Sect. 5 with note on the future work.

2 Related literature survey

Multi-objective genetic local search algorithm (MOGLS) [10] is one of the earliest implementations for solving a combinatorial optimization problem. In MOGLS, the weighted-sum method is used to convert a MOOP into a SOOP. All new solutions after crossover and mutation are chosen, and the local search is applied to them after assigning weights. MOGLS is then modified to perform local search on a set of solutions by using tournament selection operator [12]. The solutions for local search are selected using a probability for reducing the number of computations. The algorithm is further extended to S-MOGLS [11] in which the Pareto-ranking for MOEA and the weighted-sum method for local search are used. The selection of solutions for local search is done with a probability using binary tournament selection operator. Another variant called cellular MOGLS is also developed [17] in which local search is applied to all non-dominated solutions in every generation. In another attempt, local search is coupled with the recombination operators [13] in which all offspring solutions are chosen for local search. A Pareto-local search (PLS) is developed [14] for combinatorial MOOPs, which is coupled with MOEA/D [28]. Three population sets are stored, and PLS is applied to one set so that other sets can be updated. During local search, the solutions are perturbed, and a SOOP is formulated using the weight-sum method.

Apart from the weighted-sum method, the \(\epsilon -\)constraint method is also used for converting a MOOP into a SOOP [15, 25]. The non-dominated solutions in every generation are selected for performing local search using the sequential quadratic programming (SQP) method. The local search solutions are mixed with the current solutions, and the best solutions are chosen. The hybrid MOEAs get terminated using a fixed number of function evaluations. Local search is also implemented by determining all promising non-dominated directions and coupled with MOEA [2]. In another attempt, a local search strategy called hill-climbing sidestep is proposed [16] for MOEAs. The direction for local search is found using the geometry of directional cones. The sidestep is performed when the new solution is closer to the (local) PO solution. The local search is executed on every solution of the offspring population. A hybrid framework for MOEAs is proposed [26] in which a local search solution in every generation is selected from the clusters that are made after projecting the solutions on a hyperplane. A scalarizing function is used for performing local search using the SQP method. In another attempt, local search via perturbation of variables of a solution with respect to its neighborhood is coupled with MOEA [3]. The Gaussian mutation operator is used for perturbing local search solutions. The farthest-candidate solution method is used to include better local search solution in a population. A memetic version of MOEA/D [28] is also developed by coupling a heuristic sequential quadratic approximation method. For each sub-problem, local search using Tchebycheff function is executed in every generation.

Nadir point estimation is another area in which local search is used [6, 7]. Since the Nadir point needs to be estimated, only the worst points in each objective from the current population or the extreme solutions of the current non-dominated solutions are found, and local search is executed using the augmented achievement scalarizing function. The SOOP is solved using the SQP method.

The local search has also been used with MOEAs for solving engineering optimization problems. For example, the multi-objective elastic structural topology optimization problem is solved using hybrid or customized MOEA [21,22,23,24]. The current non-dominated solutions are clustered, and the best representative solution from each cluster is chosen for local search in every generation. A problem-specific local search is executed, and the solutions after local search replace its parent solution in the current population. Another example is the optimal soil cutting by bulldozer and its blade [1] in which local search is executed on the obtained non-dominated solutions of MOEA after termination.

From the above literature survey, it can be seen that the studies focused on choosing an appropriate set of local search solutions. The number of local search solutions was decided either heuristically or deterministically. The frequency of executing local search was determined either in every generation or using some functions. The termination of hybrid MOEAs was mostly on a number of generations or functional evaluations. The challenges mentioned in Sect. 1 have been handled uniquely by these studies in order to make a balance between local and global searches. In the following section, the proposed algorithm is described, and details are given for addressing the challenges of implementing local search with MOEA.

3 Proposed memetic multi-objective evolutionary algorithm

The proposed memetic MOEA is developed using a commonly used framework of generational MOEAs, as presented in Algo. 1. It can be seen that a set of input parameters needs to be set at the beginning in Step 1. Thereafter, a random population \(P_0\) of size N is generated in Step 2. The objective functions and constraints are evaluated in Step 3, and the fitness is assigned to each solution. In the standard loop of the generations, a mating pool (\(P_{tmp}\)) is created in Step 5 by using a selection operator to select good and above average solutions. The solutions in the mating are then used for creating an offspring population (\(Q_t\)) in Step 6 using variation operators, such as crossover and mutation. Both the parent and offspring populations are combined in Step 7. The purpose of combining and evaluating the populations is to select the N best solutions, which is done in Step 8. In this step, the fitness is given to each solution, and the best N solutions are selected for the next generation population (\(P_{t+1}\)). At last, the generation counter is increased by one. The loop over generation gets terminated when the counter reaches the maximum number of generations (\(T_{\text {max}}\)).

3.1 The local search module

The local search module is proposed to address challenges mentioned in Sect. 1 so that a balance can be made between local and global searches for better convergence. In order to implement local search, a MOOP needs to be converted into a SOOP. The details are given in the following section.

3.1.1 Formulation for local search

The \(\epsilon -\)constraint method [20] is used for converting a MOOP to a SOOP, which is given as

Here, the parameter, \(\epsilon _j~(\forall j \in \{1,\dots ,M-1\})\), is the upper bound on their respective objective value, \(f_j\). It can be seen that an unconstrained MOOP is converted into a constrained SOOP. In order to solve equation (2), the method of multiplier (MOM) [20] is used that converts the constraint SOOP into an unconstrained optimization problem. Using MOM, the resulting optimization problem is given as

Here, \(P({\mathbf {x}},{\sigma ^t},{\tau ^t})\) is the penalty function, R is the penalty parameter, \(g_j\)’s (\(\forall j=1,\dots , M-1\)) are inequality constraints, \(h_k\)’s (\(\forall k=1,\dots ,K\)) are equality constraints, and t represents iteration counter. The \(\sigma _j^{(t)}\) and \(\tau _k^{(t)}\) penalty parameters are calculated as

where \(\langle a \rangle \) is the bracket operator, which is equal to a when \(a < 0\). Otherwise, it is equal to zero. The initial values of \(\sigma _j^{(0)}\) and \(\tau _k^{(0)}\) are kept zero for all constraints.

The unconstrained problem given in equation (3) is solved using the steepest descent method [20] in which a unidirectional search is performed along the unit vector of the steepest descent direction. The gradient of the penalty function is calculated using the central difference method. The MOM method gets terminated after (\(T_{MOM}\)) iterations or the difference between the new solution and the current solution is less than or equal to a user-defined parameter (\(\epsilon _{\text {MOM}}\)). In each sequence of MOM, the steepest descent method gets terminated after (\(T_{SDM}\)) iterations.

3.1.2 Number and choice for local search solutions

The number and choice of solutions for local search are determined using Das and Dennis method [4]. The number of solutions for local search (\(N_{LS}\)) is found by generating the structured reference points on a unit hyperplane, which is given as

where M is the number of objectives, and p is the number of equal divisions on each objective axis.

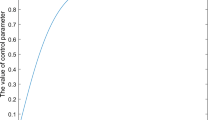

The same reference points are used for choosing local search solutions through the reference lines. These lines are drawn from the origin and the reference points as shown in Fig. 1.

In order to select solutions for local search from the current population, all solutions are first normalized and then, clustered. First, each objective value \(f_j({\mathbf {x}})\) of solution (\({\mathbf {x}}\)) is translated to \({\hat{f}}_j({\mathbf {x}}) = f_j({\mathbf {x}}) - z_j^{*}\), where \(z^{*}_j\) is the \(j-\)th component of the ideal vector (\(z^{*}\)) found from the current population. This translation shifts all solutions in the first quadrant of the objective space. Thereafter, the extreme points are found by minimizing the Achievement Scalarizing Function (\(\text {ASF}({\mathbf {x}},{w_j})\)) given in equation (6).

where \(w_{j,k}(\forall k \in \{1,\dots ,M\})\) is a search vector for \(j-\)th objective. It is defined as \(w_{j,k}=1\) for \(j=k\), or \(w_{j,k}=10^{-6}\), otherwise. The obtained extreme points are then used to construct a plane to find its intercept on each objective axis, that is, \(z^e\). Using the ideal point and the intercepts, all solutions of the current population are normalized using equation (7).

where \({\hat{F}}_j({\mathbf {x}})\) is the \(j-\)th normalized objective value of solution (\({\mathbf {x}}\)).

After normalization, each solution is then clustered with a reference line that is closest to it. It is done by finding the Euclidean distance \((d_1({\mathbf {x}},{\mathbf {w}}))\) between the solution (\({\mathbf {x}}\)) and each reference line \(({\mathbf {w}})\), which is given as

The solution (say \({\mathbf {s}}\)), which has the least \(d_1\) value to a reference line (say \({\mathbf {r}}\)), is included into the cluster of the reference line (\({\mathbf {r}}\)). Similarly, every solution is clustered with one of the reference lines. If any cluster for a reference line is empty, the nearest solution having the least PBI fitness [28] from the clusters of neighboring lines is selected to fill this cluster. The PBI fitness is calculated as

The distance \(d_2\) for a solution (\({\mathbf {x}}\)) along a reference line (\({\mathbf {w}}\)) is calculated as

Once the clusters are made, a solution having the least PBI distance from each cluster is selected for local search. In this way, the number of chosen set of local search solutions is the same as the number of the reference lines. Since local search is steered by a set of the reference lines, the proposed memetic MOEA is referred to as Reference-Lines-Steered Memetic MOEA (\({\text {RM}}^2\)OEA).

3.1.3 Frequency of executing local search

Apart from the number and choice of local search solutions, the frequency of executing local search is an important challenge to address for making a balance between global and local searches. This challenge can be addressed by using the statistical indicator. There exist many indicators such as R2, hypervolume, generalized distance, inverse generalized distance, etc. Among them, hypervolume indicator is used in various studies, which does not need any reference PO solutions. However, it is computationally expensive as compared to other indicators. In this paper, a modified inverse generalized distance (\({\text {IGD}}^{+}\)) indicator [9] is used because its performance is found similar to hypervolume indicator but with less computational requirement. It is calculated as

where P is the set of the obtained non-dominated solutions from MOEA and \({\mathbf {x}}_i\) is one of its solutions, \(P^*\) is the set of the uniformly distributed PO solutions and \({\mathbf {a}}_j\) is one of the PO solutions, \(|P^*|\) is the cardinality of \(P^{*}\), and \(d^{+}({\mathbf {x}}_i, {\mathbf {a}}_j)\) is the Euclidean distance calculated in the normalized objective space. It is calculated as

where \({\mathbf {x}}_i^{(k)}\) and \({\mathbf {a}}_j^{(k)}\) represent \(k-\)th objective value of solutions \({\mathbf {x}}_i \in P\) and \({\mathbf {a}}_j \in P^{*}\). A smaller value of \({\text {IGD}}^{+}\) represents better convergence and distribution of P with respect to \(P^{*}\).

Using \({\text {IGD}}^{+}\), the local search is executed after satisfying the condition given in equation (13).

Here, \({\text {IGD}}_{{\text {max}}}^{+}\), \({\text {IGD}}_{{\text {min}}}^{+}\), and \({\text {IGD}}_{{\text {avg}}}^{+}\) represent maximum, minimum, and average values of \({\text {IGD}}^{+}\) from the past 20 generations of \({\text {RM}}^2\)OEA. From simulations, it is found that once this condition gets satisfied in any generation, it remains the same for subsequent generations. In order to control many local searches, the next local search is allowed after (\(0.1 \times T_{{\text {max}}}\)) of generations.

3.2 Selection and variation operators

In Step 5 of Algo. 1, a random selection operator is used, which picks two solutions randomly for performing crossover. Once a pair of solutions is considered, they are not selected again and other pair of solutions is picked. In this way, a random selection is used for all solutions of the parent population \(P_t\).

Simulated binary crossover (SBX) and polynomial mutation operators [5] are used as variation operators. SBX is performed with a probability of \(p_c\), which is kept high. Mutation is performed with a probability of \(p_m\), which is kept low.

3.3 Environmental selection

Environmental selection of \(\theta -\)DEA [27] is adapted because it is found to be efficient for a large class of MOOPs. Also, it uses reference lines for selecting solutions from the combined population. The \(\theta -\)dominance principle is used for sorting the solutions. First, all solutions of the combined population are divided into clusters as defined in Sect. 3.1.2. Thereafter, the solutions from the same cluster are compared using the PBI distance given in equation (9). The solutions of the same cluster are then sorted using the \(\theta -\)dominance principle. In order to select solutions for the next generation population (\(P_{t+1}\)), the best rank solution from each cluster is copied.

3.4 Adaptive termination of \({\text {RM}}^2\)OEA

The proposed \({\text {RM}}^2\)OEA gets terminated using \({\text {IGD}}^{+}\) indicator defined in Sect. 3.1.3. The \({\text {IGD}}^{+}\) values are stored from the past few generations and an adaptive termination condition is developed, which is given as

Here, \({\text {IGD}}^{+}_{{\text {max}}}\) and \({\text {IGD}}^{+}_{{\text {min}}}\) are the maximum and minimum values of \({\text {IGD}}^{+}\) indicator in the past 20 generations. The terms \(\epsilon \) and \(\epsilon _{{\text {max}}}\) are used for terminating \({\text {RM}}^2\)OEA. The values of these terms are generated using fluctuations in \({\text {IGD}}^{+}\) values. It means that an increase in \({\text {IGD}}^{+}\) value for successive generations considers a fluctuation. After experimenting with different sets of values, the following values are used, which are given in equation (15).

3.5 Scope of local searches

The local search module can be applied at various steps of \({\text {RM}}^2\)OEA presented in Algo. 1. Three variants of \({\text {RM}}^2\)OEA are presented in this paper. For example, when local search module is executed before Step 5 (that is, before selection), it is referred to as \({\text {RM}}^2\)OEA-OP. In this implementation, the local search solutions replace their corresponding parent solutions from the population. The second variant is \({\text {RM}}^2\)OEA-OF in which local search module is executed before Step 7 (that is, after \(Q_t\) is generated). In this implementation, the local search solutions replace their corresponding offspring solutions from the population. The third variant is \({\text {RM}}^2\)OEA-ENV in which local search module is executed before Step 9 (that is, after environmental selection). In this implementation, the local search solutions replace their corresponding solutions from the next generation population (\(P_{t+1}\)).

4 Results and discussion

Three variants of \({\text {RM}}^2\)OEA is now tested for its performance assessment. The details are as follows.

4.1 Test problems

The performance of three variants is tested on 2-objective ZDT1, ZDT2, ZDT3, and ZDT6 problems [29]. ZDT1 is a \(30-\)variable problem, which has the convex PO front. ZDT2 is also a \(30-\)variable problem but the PO front is concave. ZDT3 is also a \(30-\)variable problem, which has several disconnected PO fronts. ZDT6 is a \(10-\)variable problem with a concave PO front but the density of solutions along with the PO front changes.

\({\text {RM}}^2\)OEA is also tested on 3-objective problems, that is, DTLZ1, DTLZ2, DTLZ3, and DTLZ4 problems [8]. DTLZ1 is a multi-modal MOOP, which has the linear PO front. DTLZ2 is a simple MOOP, which has the concave PO front. DTLZ3 is a multi-modal MOOP, which has the concave PO front. DTLZ4 is a biased MOOP, which has the concave PO front.

4.2 Algorithm for comparison

It can be observed from the discussion in Sect. 3.3 that the environmental selection of \(\theta -\)DEA is used. Therefore, three variants of \({\text {RM}}^2\)OEA are tested with \(\theta -\)DEA. Moreover, \(\theta -\)DEA has already shown its out-performance over other benchmark MOEAs [27], the comparison is thus made with this algorithm only for simplicity and clarity. It is important to note that the variants of \({\text {RM}}^2\)OEA are developed using various local search implementations in the literature. \({\text {RM}}^2\)OEA-OF is motivated by MOGLS and its variants [10, 11] in which local search is implemented on the offspring population. MOGLS implemented local search on newly created solutions after crossover and mutation. The weighted-sum method was used for converting the multi-objective problem into the single-objective optimization problem. However, \({\text {RM}}^2\)OEA-OF chooses a few solutions as described earlier from the offspring population, and local search is implemented using the \(\epsilon -\)constraint method.

\({\text {RM}}^2\)OEA-ENV is motivated by the studies [15, 25] in which local search is applied to the solutions after the environmental selection. In these studies, all non-dominated solutions after environmental selection were selected for performing local search using the \(\epsilon -\)constraint method. The solutions after local search were then combined with the current population. However, \({\text {RM}}^2\)OEA-ENV chooses few non-dominated solutions using the approach described earlier. The solutions after local search then replace their corresponding solutions in the current population.

It is noted that local search is executed after every (\(0.1\times T_{\text {max}}\)) generations for \({\text {RM}}^2\)OEA-OP, \({\text {RM}}^2\)OEA-OF, and \({\text {RM}}^2\)OEA-ENV. The adaptive version of any variant signifies execution of local search as per the details given Sect. 3.1.3.

4.3 Performance indicator

The statistical analysis of \({\text {RM}}^2\)OEA is performed using \({\text {IGD}}^{+}\) indicator, which is described in Sect. 3.1.3. A smaller value of this indicator with respect to the PO front (\(P^{*}\)) signifies better convergence and good diversity among the obtained non-dominated solutions (P).

It is important to note that a set of the PO solutions is needed to calculate \({\text {IGD}}^{+}\) value. Since the above problems are mathematical MOOPs, their PO fronts are known. For example, the PO front of ZDT1 is defined by \(f_2 = 1 - \sqrt{f_1}\) and \(f_1 \in [0,1]\). ZDT2 has the PO front defined by \(f_2 = 1 - f_1^2\) and \(f_1 \in [0,1]\). ZDT3 has disconnected PO fronts, which are defined by the ranges in \(f_1\) such as \(f_1 = [0, 0.0830015349]\) \(\cup \) [0.1822287280, 0.2577623634] \(\cup \) [0.4093136748, 0.4538821041] \(\cup \) \([0.61839667944, 0.6525117038]\) \(\cup \) [0.8233317983, 0.8518328654], and \(f_2 = 1 - \sqrt{f_1} - f_1\sin (10\pi f_1)\). ZDT6 has the same PO front as defined for ZDT2, however the range of \(f_1\) is defined as \(f_1 \in [0.2807753191, 1]\). For DTLZ1 problem, the PO front is defined by a linear plane in the first quadrant and having the intercept on each objective axis at 0.5. It is defined by \(\sum _{i=1}^{M}f_i = 0.5, \forall f_i \ge 0\). DTLZ2 to DTLZ4 problems have the PO front defined by \(\sum _{i=1}^{M} f_i^2 = 1, \forall f_i \ge 0\). It is a spherical surface defined in the first quadrant.

Since a set of the reference points is generated as described in Sect. 3.1.2, the reference lines drawn from the origin and the reference points are used to find their points of the intersection with the given PO front. These points of intersection become the PO solutions to a given problem. It can be observed that when a set of non-dominated solutions generated by MOEA is evolved closer to these generated PO solutions, a smaller \({\text {IGD}}^{+}\) value will be observed. It is noted that MOEAs are run for 30 times with different initial populations for performance assessment.

4.4 Experimental settings

In order to run \({\text {RM}}^2\)OEA and \(\theta -\)DEA, some input parameters need to be fixed. For example, Table 1 presents different input parameters for running the MOEAs. It is noted that \({\text {RM}}^2\)OEA gets terminated either by the adaptive termination condition discussed in Sect. 3.4 or by the maximum number of allowed generations (\(T_{max}\)), whichever is satisfied early. The input parameters for running the local search using MOM are given in Table 2. The MOM method gets terminated either by \(T_{\text {MOM}}\) or \(\epsilon _{\text {MOM}}\), whichever is satisfied early. The only parameters left for \(\theta -\)DEA are \(\theta =5\) and the neighborhood size is \(T_{NB}=20\). It uses PBI function for calculating the fitness. It is also noted that all variants of \({\text {RM}}^2\)OEA and \(\theta -\)DEA are run with (\(p=N-1\)) equal divisions for 2-objective MOOP and \(p=12\) equal divisions for 3-objective MOOP for generating the reference points. In the case of 3-objective problems, the number of reference points becomes 91.

4.5 Comparison based on \({\text {IGD}}^{+}\) values

Tables 3, 4, 5, and 6 presents the performance assessment comparison of three variants of \({\text {RM}}^2\)OEA with \(\theta -\)DEA on 2-objective MOOPs. The \(\text {IGD}^{+}\) values are shown for different values of local search solutions (\(N_{\text {LS}}\)). Meaning, a smaller value of \(N_{\text {LS}}\) suggests less number of local search solutions, and vice-versa. Table 3 shows that \({\text {RM}}^2\)OEA-OP and \({\text {RM}}^2\)OEA-OF variants are found to be better than \(\theta -\)DEA for any number of \(N_{\text {LS}}\) for ZDT1 problem. Except for a few \({\text {IGD}}^{+}\) values, \({\text {RM}}^2\)OEA-ENV variant is also found to be better. An interesting observation can be seen at the median \({\text {IGD}}^{+}\) values of all variants \({\text {RM}}^2\)OEA that the local search module shows similar performance irrespective of different \(N_{\text {LS}}\) values. From Table 3, it can also be seen that \({\text {RM}}^2\)OEA-OF is found to be the best among other variants. Therefore, \({\text {RM}}^2\)OEA-OF is made adaptive using the condition described in Sect. 3.1.3. The last column of the same table suggests that adaptive \({\text {RM}}^2\)OEA-OF shows better performance than \(\theta -\)DEA but unable to generate the same quality of solutions as \({\text {RM}}^2\)OEA-OF.

For ZDT2 problem, Table 4 shows that \({\text {RM}}^2\)OEA-OP and \({\text {RM}}^2\)OEA-OF variants are found to be better than \(\theta -\)DEA for any number of \(N_{\text {LS}}\). \({\text {RM}}^2\)OEA-ENV is not found competitive as other variants. Among them, \({\text {RM}}^2\)OEA-OF is found to be the best. However, its adaptive version in the last column of the same table is not as good as \({\text {RM}}^2\)OEA-OF, but better than \(\theta -\)DEA. From the table, it can be seen that \(N_{\text {LS}}\) does not show much impact on the performance of three variants of \({\text {RM}}^2\)OEA.

For ZDT3 problem, Table 5 presents a comparison among MOEAs. Since ZDT3 has disconnected PO fronts, not a single variant is found to be outperforming \(\theta -\)DEA. With \(N_{\text {LS}}=72\), \({\text {RM}}^2\)OEA-OF is found to be better than \(\theta -\)DEA for all three statistical values of \({\text {IGD}}^{+}\). The adaptive version of \({\text {RM}}^2\)OEA-OF seems to be not helping \({\text {RM}}^2\)OEA. It can also be seen from the table that \({\text {RM}}^2\)OEA-OP is unable to generate better \({\text {IGD}}^{+}\) values than \(\theta -\)DEA.

For ZDT6 problem, Table 6 shows a better performance of \({\text {RM}}^2\)OEA-OP, \({\text {RM}}^2\)OEA-OF and adaptive \({\text {RM}}^2\)OEA-OF variants over \(\theta -\)DEA. Except for \(N_{\text {LS}}=36\), \({\text {RM}}^2\)OEA-ENV is unable to perform better than \(\theta -\)DEA. Among all the variants, \({\text {RM}}^2\)OEA-OF is found to better than others.

Tables 7, 8, 9, and 10 presents the performance comparison of all variants of \({\text {RM}}^2\)OEA with \(\theta -\)DEA for 3-objective DTLZ problems. For DTLZ1 problem, \({\text {RM}}^2\)OEA-ENV is found to be the best among all variants. Except for the worst \({\text {IGD}}^{+}\) values for all \(N_{\text {LS}}\), \({\text {RM}}^2\)OEA-OP, \({\text {RM}}^2\)OEA-OF and its adaptive version are found to be better than \(\theta -\)DEA. Regarding \(N_{\text {LS}}\), the performance of all variants of \({\text {RM}}^2\)OEA seems to be similar.

For DTLZ2 problem, Table 8 shows that \({\text {RM}}^2\)OEA-OF and its adaptive version are found to be better than \(\theta -\)DEA. For this problem, adaptive \({\text {RM}}^2\)OEA-OF is found to be the best. \({\text {RM}}^2\)OEA-OP and \({\text {RM}}^2\)OEA-ENV are unable to generate good \({\text {IGD}}^{+}\) values than \(\theta -\)DEA. The performance of \({\text {RM}}^2\)OEA variants seems to be quite similar for different \(N_{\text {LS}}\) values.

For DTLZ3 problem, Table 9 shows better performance of all variants over \(\theta -\)DEA. Among the variants, \({\text {RM}}^2\)OEA-OF is found to be the best. For this example as well, different \(N_{\text {LS}}\) values show the similar performance of variants of \({\text {RM}}^2\)OEA.

For DTLZ4 problem, Table 10 show that only \({\text {RM}}^2\)OEA-OF shows the best performance for different values of \(N_{\text {LS}}\) over \(\theta -\)DEA. \({\text {RM}}^2\)OEA-ENV is better than \(\theta -\)DEA only for \(N_{\text {LS}}=91\). Other variants are unable to perform better than \(\theta -\)DEA. Since this problem is biased, this could be a probable reason for not so good performance of other variants.

From Tables 3, 4, 5, 6, 7, 8, 9, and 10, it can be seen that \({\text {RM}}^2\)OEA-OF is the best variant and shows better \({\text {IGD}}^{+}\) values against \(\theta -\)DEA in most for the problems. The adaptive version of \({\text {RM}}^2\)OEA-OF does not seem to be as effective as \({\text {RM}}^2\)OEA-OF, except for DTLZ2. Moreover, it can also be observed that \(N_{\text {LS}}\) seems to have not much impact on the performance of any variants of \({\text {RM}}^2\)OEA. It can be due to the selection of good solutions for local search that helps global search of MOEA for better convergence.

4.6 Obtained PO solutions

From Sect. 4.5, it can be seen that \({\text {RM}}^2\)OEA-OF is found to be the best among three variants. In this section, the solutions corresponding to the median value of \({\text {IGD}}^{+}\) for both \(\theta -\)DEA and \({\text {RM}}^2\)OEA-OF are shown. Since the performance of all variants is similar for different values of \(N_{\text {LS}}\), the obtained PO solutions for the least \(N_{\text {LS}}\) are shown, that are, \(N_{\text {LS}}=20\) for 2-objective problems and \(N_{\text {LS}}=15\) for 3-objective problems.

Figure 2 shows the obtained PO solutions of ZDT1 problem. The PO front of this problem is non-convex. It can be seen that \(\theta -\)DEA is unable to reach the corner along the \(f_2-\)axis as compared to \({\text {RM}}^2\)OEA-OF. This observation is similar to the outcome of \({\text {IGD}}^{+}\) comparison. Figure 3 shows the obtained PO solutions for ZDT2 problem. The PO front of this problem is convex. For this problem as well, \({\text {RM}}^2\)OEA-OF generates solutions throughout the range of both objectives, which is not visible with \(\theta -\)DEA. Figure 4 shows the obtained PO solutions for ZDT3 problem. This problem has disconnected PO front. The figures shows a better distribution of solutions of \({\text {RM}}^2\)OEA-OF over \(\theta -\)DEA. Figure 5 shows distribution of solutions of both MOEAs for ZDT6 problem. It is noted that this problem has convex PO front. It can be seen from the figure that both MOEAs show similar distribution of solutions.

Figure 6 shows obtained PO solution for DTLZ1 problem which has linear PO front. This problem is difficult to solve because it has many local PO fronts before reaching to the true PO front. It can be seen from the figure that both MOEAs show similar distribution of solutions for DTLZ1 problems. It can be observed that \({\text {RM}}^2\)OEA-OF shows a better performance based on \({\text {IGD}}^{+}\) values, however, the difference among these values is small. Figure 7 shows the obtained PO solutions of both MOEAs for convex DTLZ2 problem. For this problem as well, the distribution of solutions is found similar for both MOEAs. Figure 8 shows the obtained PO solutions for convex DTLZ3 problem. However, this problem is difficult to solve since there exists many local PO fronts. The distribution of solutions is again found similar for both MOEAs. Figure 9 shows the obtained PO solutions for convex DTLZ4 problem. The figure shows similar distribution of solutions of \({\text {RM}}^2\)OEA-OF and \(\theta -\)DEA.

4.7 Convergence details

From Sects. 4.5 and 4.6, it can be observed that \({\text {RM}}^2\)OEA was effective and generated better PO solutions and \({\text {IGD}}^{+}\) values. In this section, the convergence of \({\text {RM}}^2\)OEA-OF is compared with \(\theta -\)DEA. Figure 10 shows the convergence plots for ZDT problems. For ZDT1 problem, \({\text {RM}}^2\)OEA-OF with different \(N_{\text {LS}}\) values shows quicker convergence than \(\theta -\)DEA. In fact, the convergence plots of \({\text {RM}}^2\)OEA-OF get flatten at around 60 generations and are converged after 100 generations as compared to the fixed number of generations, that is 150, for \(\theta -\)DEA. It seems that \({\text {RM}}^2\)OEA-OF with \(N_{\text {LS}}=80\) has the quickest convergence. For ZDT2 problem, \({\text {RM}}^2\)OEA-OF with different \(N_{\text {LS}}\) values again shows quicker convergence. The quickest convergence is observed with \(N_{\text {LS}}=80\) local search solutions, which has converged in 86 generations as compared to the fixed number of generations of \(\theta -\)DEA, that is, 200. For ZDT3 problem again, a quicker convergence of \({\text {RM}}^2\)OEA-OF with different \(N_{\text {LS}}\) values can be seen, which has converged in less than 200 generations against the maximum number of generations allotted, that is, 1000. For ZDT6 problem, \({\text {RM}}^2\)OEA-OF has converged in less than 400 generations. A sharp improvement can be seen after 220 generations. For the above 2-objective ZDT problems, \({\text {RM}}^2\)OEA-OF with \(N_{\text {LS}}=80\) local search solutions is found to be the best for the quickest convergence.

Figure 11 shows the convergence plots for 3-objective DTLZ problems. For DTLZ1 problem, local search seems to be effective after 150 generations. Therefore, \({\text {RM}}^2\)OEA-OF with \(N_{\text {LS}}=36, 91\) values has converged in less than 350 generations as compared to the fixed generations for \(\theta -\)DEA, that is, 400. For DTLZ2 problem, \({\text {RM}}^2\)OEA-OF and \(\theta -\)DEA show similar convergence. For DTLZ3 problem, \({\text {RM}}^2\)OEA-OF with different \(N_{\text {LS}}\) values shows quicker convergence than \(\theta -\)DEA. The quickest convergence is observed with \(N_{\text {LS}}=91\), which has converged in 658 generations. For DTLZ4 problem, \({\text {RM}}^2\)OEA-OF and \(\theta -\)DEA show a similar convergence. However, \({\text {RM}}^2\)OEA-OF gets terminated in less than 370 generations because of the adaptive termination condition described in Sect. 3.4.

Table 11 presents generations required, functional evaluations, and computational time of \({\text {RM}}^2\)OEA and \(\theta -\)DEA for the convergence. For ZDT1 problem, hybridizing \({\text {RM}}^2\)OEA-OF with the given set of local search solutions (\(N_{\text {LS}}\)) has reduced the number of generations. \({\text {RM}}^2\)OEA-OF with \(N_{\text {LS}}=80\) requires the least number of generations that saves more than 50% against \(\theta -\)DEA. However, since several local searches are involved with \({\text {RM}}^2\)OEA-OF, it requires more number of function evaluations and computational time than \(\theta -\)DEA. For ZDT2 problem, \({\text {RM}}^2\)OEA-OF with \(N_{\text {LS}}=80\) requires the least number of generations that saves around 57% of generations than \(\theta -\)DEA. Although the number of function evaluations required by \({\text {RM}}^2\)OEA-OF is more, computational time required by it is relatively less than \(\theta -\)DEA. For ZDT3 problem, \({\text {RM}}^2\)OEA-OF with different \(N_{\text {LS}}\) values requires quite fewer generations than \(\theta -\)DEA that saves the computational time. However, more number of function evaluations is needed by \({\text {RM}}^2\)OEA-OF. For ZDT6 problem, \({\text {RM}}^2\)OEA-OF is needed quite a less number of generations. Therefore, \({\text {RM}}^2\)OEA-OF needs fewer function evaluations for convergence, and computational time is also reduced. For DTLZ1 problem, almost a saving of 50 generations can be seen for \({\text {RM}}^2\)OEA-OF with \(N_{\text {LS}}=36, 91\) values against \(\theta -\)DEA. The number of function evaluations and computation time is more than \(\theta -\)DEA. For DTLZ2 problem, \({\text {RM}}^2\)OEA-OF and \(\theta -\)DEA need all allotted generations. The same observation can be seen in Fig. 10 in which both MOEAs have a similar trend. In this case, \({\text {RM}}^2\)OEA-OF needs more number of function evaluations and computational time than \(\theta -\)DEA. For DTLZ3 problem, except for \(N_{\text {LS}}=51\) case, \({\text {RM}}^2\)OEA-OF requires less number of generations than \(\theta -\)DEA that saves its computational time. However, the number of function evaluations of \({\text {RM}}^2\)OEA-OF is more than \(\theta -\)DEA. For DTLZ4 problem, a good number of generations is saved by \({\text {RM}}^2\)OEA-OF against \(\theta -\)DEA that reduces computational time. However, \({\text {RM}}^2\)OEA-OF needs more function evaluations than \(\theta -\)DEA.

From the above discussion, it can be seen that a larger number of local search solutions always helps \({\text {RM}}^2\)OEA-OF in quicker convergence in most of the problems. Since the number is large, it requires many function evaluations. However, fewer number of local search solutions is equally good in helping \({\text {RM}}^2\)OEA-OF for better convergence, which further reduces functional evaluations and computational time. Although a clear and distinct trend cannot be seen between the convergence and \(N_{\text {LS}}\), this study brings out the fact that fewer number of local search solutions can be useful for better and quicker convergence unless a good and effective set of solutions is selected for local search.

5 Conclusion

The local search module has been proposed, which was steered by the reference lines. It was coupled with a commonly used MOEA framework, and three variants of \({\text {RM}}^2\)OEA were proposed. After solving 2-objective ZDT problems and 3-objective DTLZ problems, it was found that the performance of \({\text {RM}}^2\)OEA-OF variant generated the best values of \(\text {IGD}^{+}\) indicator for most of the problems. Since their \(\text {IGD}^{+}\) values were quite close for \({\text {RM}}^2\)OEA-OF and \(\theta -\)DEA, the obtained PO solutions were qualitatively found similar. However, the convergence plots suggested that \({\text {RM}}^2\)OEA-OF required fewer generations than \(\theta -\)DEA. Since the local search module involved extra computations for the MOM method, the number of function evaluations and computation time were relatively more for \({\text {RM}}^2\)OEA-OF than \(\theta -\)DEA. In some problems, however, \({\text {RM}}^2\)OEA-OF outperformed \(\theta -\)DEA in terms of a number of generations required, which was even less than 50%. One limitation observed from the results of ZDT3 problem is that \({\text {RM}}^2\)OEA and its variants may not be suitable for disconnected PO fronts. From the results, it can be concluded that a fewer local search solutions can also be useful for better and quicker convergence unless a good and effective set of solutions is selected for local search. In future work, the local search module can be extended for constraint MOOPs. The same module can be tested on engineering optimization problems for better convergence. The proposed algorithm can also be extended for solving many-objective optimization problems when the number of objectives is more three.

References

Barakat N, Sharma D (2019) Evolutionary multi-objective optimization for bulldozer and its blade in soil cutting. Int J Manag Sci Eng Manag 14(2):102–112

Bosman PAN (2012) On gradients and hybrid evolutionary algorithms for real-valued multiobjective optimization. IEEE Trans Evol Comput 16(1):51–69

Chen B, Zeng W, Lin Y, Zhang D (2015) A new local search-based multiobjective optimization algorithm. IEEE Trans Evol Comput 19(1):50–73. https://doi.org/10.1109/TEVC.2014.2301794

Das I, Dennis JE (1998) Normal-boundary intersection: a new method for generating the pareto surface in nonlinear multicriteria optimization problems. SIAM J Optim 8(3):631–657. https://doi.org/10.1137/S1052623496307510

Deb K, Agrawal RB (1995) Simulated binary crossover for continuous search space. Complex Syst 9(2):115–148

Deb K, Miettinen K, Chaudhuri S (2010) Toward an estimation of Nadir objective vector using a hybrid of evolutionary and local search approaches. IEEE Trans Evol Comput 14(6):821–841. https://doi.org/10.1109/TEVC.2010.2041667

Deb K, Miettinen K, Sharma D (2009) A hybrid integrated multi-objective optimization procedure for estimating Nadir point. In: Ehrgott M, Fonseca CM, Gandibleux X, Hao JK, Sevaux M (eds) Evolutionary multi-criterion optimization. Springer, Berlin, Heidelberg, pp 569–583

Deb K, Thiele L, Laumanns M, Zitzler E (2005) Scalable test problems for evolutionary multiobjective optimization. In: Abraham A, Jain L, Goldberg R (eds) Evolutionary multiobjective optimization: theoritical advances and applications. Springer, London, pp 105–145

Ishibuchi H, Masuda H, Nojima Y (2015) A study on performance evaluation ability of a modified inverted generational distance indicator. In: Proceedings of the 2015 annual conference on genetic and evolutionary computation. ACM, pp 695–702

Ishibuchi H, Murata T (1996) Multi-objective genetic local search algorithm. IEEE Trans Evol Comput 28:119–124

Ishibuchi H, Narukawa K (2004) Some issues on the implementation of local search in evolutionary multiobjective optimization. In: Deb K (ed) Genetic and evolutionary computation - GECCO 2004. Springer, Berlin, Heidelberg, pp 1246–1258

Ishibuchi H, Yoshida T, Murata T (2003) Balance between genetic search and local search in memetic algorithms for multiobjective permutation flowshop scheduling. IEEE Trans Evol Comput 7(2):204–222

Jaszkiewicz A (2002) Genetic local search for multi-objective combinatorial optimization. Eur J Oper Res 137(1):50–71. https://doi.org/10.1016/S0377-2217(01)00104-7

Ke L, Zhang Q, Battiti R (2014) Hybridization of decomposition and local search for multiobjective optimization. IEEE Trans Cybern 44(10):1808–1820

Kumar A, Sharma D, Deb K (2007) A hybrid multi-objective optimization procedure using pcx based nsga-ii and sequential quadratic programming. In: 2007 IEEE congress on evolutionary computation, pp 3011–3018. https://doi.org/10.1109/CEC.2007.4424855

Lara A, Sanchez G, Coello CAC, Schutze O (2010) HCS: a new local search strategy for memetic multiobjective evolutionary algorithms. IEEE Trans Evol Comput 14(1):112–132. https://doi.org/10.1109/TEVC.2009.2024143

Murata T, Nozawa H, Tsujimura Y, Gen M, Ishibuchi H (2002) Effect of local search on the performance of cellular multiobjective genetic algorithms for designing fuzzy rule-based classification systems. In: Proceedings of the 2002 congress on evolutionary computation, 2002. CEC ’02, vol 1, pp 663–668. https://doi.org/10.1109/CEC.2002.1007005

Purshouse RC, Fleming PJ (2007) On the evolutionary optimization of many conflicting objectives. IEEE Trans Evol Comput 11(6):770–784. https://doi.org/10.1109/TEVC.2007.910138

Ram L, Sharma D (2017) Evolutionary and gpu computing for topology optimization of structures. Swarm Evol Comput 35:1–13. https://doi.org/10.1016/j.swevo.2016.08.004

Ravindran A, Ragsdell K, Reklaitis G (2007) Engineering optimization: methods and applications, 2nd edn. Wiley, Hoboken. https://doi.org/10.1002/9780470117811

Sharma D, Deb K (2014) Generation of compliant mechanisms using hybrid genetic algorithm. J Inst Eng India Ser C 95(4):295–307. https://doi.org/10.1007/s40032-014-0127-z

Sharma D, Deb K, Kishore N (2008) Towards generating diverse topologies of path tracing compliant mechanisms using a local search based multi-objective genetic algorithm procedure. In: IEEE congress on evolutionary computation, 2008. CEC 2008. (IEEE World Congress on Computational Intelligence). IEEE, pp 2004–2011

Sharma D, Deb K, Kishore NN (2011) Domain-specific initial population strategy for compliant mechanisms using customized genetic algorithm. Struct Multidiscip Optim 43(4):541–554

Sharma D, Deb K, Kishore NN (2014) Customized evolutionary optimization procedure for generating minimum weight compliant mechanisms. Eng Optim 46(1):39–60

Sharma D, Kumar A, Deb K, Sindhya K (2007) Hybridization of sbx based nsga-ii and sequential quadratic programming for solving multi-objective optimization problems. In: 2007 IEEE congress on evolutionary computation, pp 3003–3010. https://doi.org/10.1109/CEC.2007.4424854

Sindhya K, Miettinen K, Deb K (2013) A hybrid framework for evolutionary multi-objective optimization. IEEE Trans Evol Comput 17(4):495–511

Yuan Y, Xu H, Wang B, Yao X (2016) A new dominance relation-based evolutionary algorithm for many-objective optimization. IEEE Trans Evol Comput 20(1):16–37. https://doi.org/10.1109/TEVC.2015.2420112

Zhang Q, Li H (2007) Moea/d: a multiobjective evolutionary algorithm based on decomposition. IEEE Trans Evol Comput 11(6):712–731. https://doi.org/10.1109/TEVC.2007.892759

Zitzler E, Deb K, Thiele L (2000) Comparison of multiobjective evolutionary algorithms: empirical results. Evol Comput J 8(2):125–148

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Saikia, R., Sharma, D. Reference-lines-steered memetic multi-objective evolutionary algorithm with adaptive termination criterion. Memetic Comp. 13, 49–67 (2021). https://doi.org/10.1007/s12293-021-00324-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12293-021-00324-x