Abstract

In this paper, we obtain the stability of isoperimetric inequalities with respect to the concentration topology. The concentration topology is weaker than the \(\square \)-topology, which is analogous to the weak topology. As an application, we obtain isoperimetric inequalities on the non-discrete n-dimensional \(l^1\)-cube and \(l^1\)-torus by taking the limits of isoperimetric inequalities of discrete \(l^1\)-cubes and \(l^1\)-tori. The method of this paper is based on introducing an \(\varepsilon \)-relaxed (iso-)Lipschitz order.


1 Introduction

Isoperimetric inequalities are simple and interesting geometric inequalities that have been studied for a long time. Exact solutions are known in some basic spaces, such as the n-dimensional Euclidean space and the n-dimensional sphere. A more detailed list can be found in [8, Appendix H]. Isoperimetric inequalities on metric measure spaces with lower Ricci curvature bounds are studied in [7].

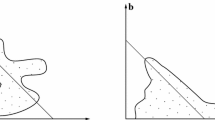

Gromov [12] introduced the Lipschitz order relation on the space of all metric measure spaces and developed a rich theory. In this paper, we focus on applications of the Lipschitz order relation to isoperimetric inequalities. This is a relatively new approach to isoperimetric inequalities. Gromov claimed Corollary 3.21 in Sect. 3, which states that, under some assumptions, an isoperimetric inequality on a continuous metric measure space can be expressed in terms of the Lipschitz order. The main results of this paper are Theorems 1.7 and 1.8, which appear later. Theorem 1.7 is a generalization of Gromov’s claim. Theorem 1.8 expresses the stability of isoperimetric inequalities with respect to the concentration topology. The concentration topology is defined by the observable distance \({{\,\mathrm{{ d}_{\mathrm{conc}}}\,}}\) (Definition 2.21) and is weaker than the \(\square \)-topology (Definition 2.15), which is analogous to the weak topology of measures. These theorems make it possible to treat discrete and continuous spaces in the same way. One of the most important applications is to obtain an isoperimetric inequality on a continuous space as a limit of isoperimetric inequalities on discrete spaces. For example, we obtain the following sharp isoperimetric inequality on the continuous n-dimensional hypercube \([0,1]^n\) with the \(l^1\)-metric \(d_{l^1}\) and the uniform measure \({\mathcal {L}}^n\).

Theorem 1.1

For any closed subset \(\Omega \subset [0,1]^n\) with \({\mathcal {L}}^n(\Omega )>0\), we take the metric ball \(B_\Omega \subset [0,1]^n\) centered at the origin with \({\mathcal {L}}^n(B_\Omega )={\mathcal {L}}^n(\Omega )\). Then we have

\[{\mathcal {L}}^n(U_r(\Omega ))\ge {\mathcal {L}}^n(U_r(B_\Omega ))\]

for any \(r>0\), where \(U_r(A):=\{\,{x\in [0,1]^n\mid d_{l^1}(x,A)< r}\,\}\) is the open r-neighborhood of a subset \(A\subset [0,1]^n\).

Similarly, we obtain the following sharp isoperimetric inequality of the \(l^1\)-torus \(T^n\) by using Corollary 6 in [4]. The \(l^1\)-torus \(T^n\) is the n-fold \(l^1\)-product of the one-dimensional unit sphere \(S^1\) equipped with the uniform measure.

Theorem 1.2

For any closed subset \(\Omega \subset T^n\) with \(m_{T^n}(\Omega )>0\), we take a metric ball \(B_\Omega \) of \(T^n\) with \(m_{T^n}(B_\Omega )=m_{T^n}(\Omega )\). Then we have

\[m_{T^n}(U_r(\Omega ))\ge m_{T^n}(U_r(B_\Omega ))\]

for any \(r>0\), where \(U_r(A)\) is the open r-neighborhood of a subset \(A\subset T^n\) with respect to the \(l^1\)-metric.

There are only a few spaces satisfying the inequalities in Theorems 1.1 and 1.2 because these are required to hold for any \(r>0\). As an earlier result, Lévy’s isoperimetric inequality (Theorem 2.11) also holds for any \(r>0\). It is a sharp isoperimetric inequality on a unit sphere in a Euclidean space. The isoperimetric inequality on the n-dimensional standard Gaussian space [6, 23] is also of the same type.

A usual isoperimetric inequality is stated for small \(r>0\). There are many variants of Theorems 1.1 and 1.2 if they are not required to hold for every \(r>0\). In [22], the isoperimetric profiles of an \(l^p\)-ball in the Euclidean space \(\mathbb {R}^n\) are calculated, where \(1\le p\le 2\). We note that Theorem 1.1 is the sharp isoperimetric inequality on an \(l^\infty \)-ball in the Banach space \({\mathbb {R}}^n\) equipped with the \(l^1\)-metric. In [15], a quantitative isoperimetric inequality on the Banach space \({\mathbb {R}}^2\) equipped with the \(l^1\)-metric is given. We remark that the boundary measure is given by the \(l^1\)-length in [15], whereas it is given by the Minkowski content with respect to the \(l^1\)-metric in Theorem 1.1. In [3], the Cheeger constants of product spaces of metric measure spaces are calculated, where the product spaces are equipped with the \(l^2\)-product metric. In [13], the concentration function on the unit sphere in a uniformly convex vector space is studied. In Sect. 4.3, we calculate the concentration function on the hypercubes \([0,1]^n\) equipped with the \(l^1\)-metric. In [24], an isoperimetric inequality on the product space equipped with Talagrand’s convex distance is studied. That is a generalization of an isoperimetric inequality on the n-dimensional Hamming cube. The Wulff shapes may be related to Theorem 1.1, but it is not obvious that there exists an energy which gives the Minkowski content with respect to the \(l^1\)-metric. The Wulff shape in \({\mathbb {R}}^n\) is studied in [25].

In this paper, we deal with two key concepts, called the “\({{\,\mathrm{ICL}\,}}\) condition” and the “iso-dominant”. Roughly speaking, the “\({{\,\mathrm{ICL}\,}}\) condition” means that an isoperimetric inequality holds for any \(r>0\). The concept of an “iso-dominant” also expresses an isoperimetric inequality, but it is defined by using the (iso-)Lipschitz order. Theorem 1.5 below states that the two concepts are equivalent under some assumptions. However, these assumptions are incompatible with non-continuous spaces. Therefore, we introduce \(\varepsilon \)-relaxed notions (Definitions 1.3 and 1.6) of those concepts. Theorem 1.7, which appears later, expresses their equivalence. The iso-dominant property is preserved under taking limits, as is stated in Theorem 1.8 below.

First, we fix the form of the isoperimetric inequalities we consider. On a general metric measure space, we consider a Lévy type isoperimetric inequality. Namely, we consider isoperimetric inequalities for the open r-neighborhood that hold for any \(r>0\). Let \((X,d_X)\) be a complete separable metric space with a Borel probability measure \(m_X\). We call such a triple \((X,d_X,m_X)\) an mm-space (which is an abbreviation of a metric measure space). If we say that X is an mm-space, the metric and the measure are respectively indicated by \(d_X\) and \(m_X\).

Definition 1.3

(Isoperimetric comparison condition of Lévy type; cf. [20]) We say that an mm-space X satisfies the isoperimetric comparison condition of Lévy type \({{\,\mathrm{ICL}\,}}_\varepsilon (\nu )\) for a Borel probability measure \(\nu \) on \(\mathbb {R}\) and a real number \(\varepsilon \ge 0\) if we have \(V(b)\le m_X(B_{b-a+\varepsilon }(A))\) for any \(a,b\in {{\,\mathrm{supp}\,}}\nu \) with \(a\le b\) and for any Borel subset \(A\subset X\) with \(m_X(A)>0\) and \(V(a)\le m_X(A)\), where \(V(t):=\nu ((-\infty ,t])\) is the cumulative distribution function of \(\nu \). We abbreviate \({{\,\mathrm{ICL}\,}}_0(\nu )\) as \({{\,\mathrm{ICL}\,}}(\nu )\).
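On a finite mm-space, the condition in Definition 1.3 can be tested by brute force. The following is a minimal sketch, assuming the discrete cube \([k]^n\) with the \(l^1\)-metric, the uniform measure, and \(\nu =(d_0)_*m_{[k]^n}\); the function name `satisfies_icl` is a hypothetical helper, and measures are compared through point counts to avoid rounding. It enumerates all nonempty subsets, so it is only feasible for very small k and n.

```python
from itertools import product


def satisfies_icl(k, n, eps=0):
    """Brute-force test of ICL_eps(nu) on [k]^n, where nu = (d_0)_* m.

    Assumptions (illustrative only): l^1-metric, uniform measure m,
    d_0 = distance from the origin, B_r(A) = closed r-neighborhood of A.
    """
    points = list(product(range(k), repeat=n))
    size = len(points)
    dist = [[sum(abs(a - b) for a, b in zip(p, q)) for q in points]
            for p in points]
    support = sorted({sum(p) for p in points})  # supp nu
    # v_count[t] = size * V(t): number of points with d_0 <= t
    v_count = {t: sum(1 for p in points if sum(p) <= t) for t in support}

    for mask in range(1, 1 << size):  # every nonempty subset A
        A = [i for i in range(size) if (mask >> i) & 1]
        d_to_A = [min(dist[i][j] for j in A) for i in range(size)]
        for a in support:
            if v_count[a] > len(A):  # the condition requires V(a) <= m(A)
                continue
            for b in support:
                if b < a:
                    continue
                # size * m(B_{b-a+eps}(A))
                ball = sum(1 for d in d_to_A if d <= b - a + eps)
                if v_count[b] > ball:
                    return False
    return True


if __name__ == "__main__":
    print(satisfies_icl(3, 2))
    print(satisfies_icl(2, 3))
```

Both calls are expected to succeed, in line with the statement later in this section that \([k]^n\) satisfies \({{\,\mathrm{ICL}\,}}((d_0)_*m_{[k]^n})\).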

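We remark that Definition 1.3 is stated only for the case \(\varepsilon =0\) in [20]. The 1-measurement of an mm-space X is defined as

\[{\mathcal {M}}(X;1):=\{\,{\varphi _*m_X\mid \varphi :X\rightarrow \mathbb {R}\text { is 1-Lipschitz}}\,\},\]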

where \(\varphi _*{m_X}\) is the push-forward measure of \({m_X}\) by \(\varphi \) and a 1-Lipschitz function is a Lipschitz continuous function with Lipschitz constant less than or equal to one. We denote by \({\mathcal {P}}(\mathbb {R})\) the set of all Borel probability measures on \(\mathbb {R}\) and we see \({\mathcal {M}}(X;1)\subset {\mathcal {P}}(\mathbb {R})\). In the case that \(\nu \in {\mathcal {M}}(X;1)\), the \({{\,\mathrm{ICL}\,}}(\nu )\) condition for X means a sharp isoperimetric inequality on X. In fact, if X satisfies \({{\,\mathrm{ICL}\,}}(\varphi _*m_X)\) for some 1-Lipschitz function \(\varphi :X\rightarrow \mathbb {R}\), then we have

for any \(t\in {{\,\mathrm{supp}\,}}(\varphi _*m_X)\) and any \(r>0\) with \(t+r\in {{\,\mathrm{supp}\,}}(\varphi _*m_X)\), where \(\Omega \subset X\) is any Borel subset with \(m_X(\Omega )>0\) and \(m_X(\Omega )\ge m_X(\varphi ^{-1}((-\infty , t]))\). This means that the subset \(\varphi ^{-1}((-\infty , t])\subset X\) is an extremal set for any \(t\in {{\,\mathrm{supp}\,}}(\varphi _*m_X)\). Lévy’s isoperimetric inequality can be paraphrased as the statement that \(S^n(1)\) satisfies \({{\,\mathrm{ICL}\,}}(\xi _*m_{S^n(1)})\), where \(\xi :S^n(1)\rightarrow \mathbb {R}\) is the distance function from one point. The set of iso-mm-isomorphism classes of \({\mathcal {P}}(\mathbb {R})\) has an order relation called the iso(perimetrically)-Lipschitz order (see Definitions 3.2, 3.3 and Proposition 3.4).

Gromov defined an iso-dominant using the iso-Lipschitz order and claimed that an iso-dominant recovers the isoperimetric inequality [11].

Definition 1.4

(Iso-dominant [11]) We call a Borel probability measure on \(\mathbb {R}\) an iso-dominant of an mm-space X if it is an upper bound of \({\mathcal {M}}(X;1)\) with respect to the iso-Lipschitz order \(\succ '\). That means \(\nu \succ '\mu \) for all \(\mu \in {\mathcal {M}}(X;1)\).

We have the following relation between an iso-dominant and ICL.

Theorem 1.5

([20]) Let X be an mm-space and \(\nu \) a Borel probability measure on \(\mathbb {R}\) with connected support. Assume that the cumulative distribution function V of \(\nu \) is continuous. Then, X satisfies \({{\,\mathrm{ICL}\,}}(\nu )\) if and only if \(\nu \) is an iso-dominant of X.

Gromov claimed Corollary 3.21 in Sect. 3 without proof in Section 9 of [11]. It is a variant of Theorem 1.5. We focus on the continuity of V in Theorem 1.5. Without the continuity of V, we find the following counterexample to Theorem 1.5. We put \([k]:=\{0,\dots ,k-1\}\) and consider the n-dimensional discrete cube \([k]^n\) equipped with the \(l^1\)-metric and the uniform measure, say \(m_{[k]^n}\). Then, \([k]^n\) satisfies \({{\,\mathrm{ICL}\,}}((d_0)_*m_{[k]^n})\), where \(d_0\) is the distance function from the origin [5]. Since the cumulative distribution function of \((d_0)_*m_{[k]^n}\) is not continuous, we cannot apply Theorem 1.5 with \([k]^n\) as the mm-space X. In fact, \((d_0)_*m_{[k]^n}\) is not an iso-dominant of \([k]^n\). However, we can regard \((d_0)_*m_{[k]^n}\) as an iso-dominant of \([k]^n\) if we allow an error. This is one of our motivations for introducing the iso-Lipschitz order with an error (see Definition 3.23).

The iso-Lipschitz order \(\succ '_{(s,t)}\) with error (s, t) satisfies some beneficial properties such as Theorems 3.26, 3.28, and 3.31 in Sect. 3.2. Now, we define the iso-dominant with an error by using the iso-Lipschitz order with an error.

Definition 1.6

Let \(\varepsilon \ge 0\) be a real number. We call a Borel probability measure \(\nu \) on \(\mathbb {R}\) an \(\varepsilon \)-iso-dominant of an mm-space X if we have \(\nu \succ '_{(\varepsilon ,0)}\mu \) for all \(\mu \in {\mathcal {M}}(X;1)\).

We have the following Theorem 1.7, which explains the relation between \(\varepsilon \)-iso-dominants and \({{\,\mathrm{ICL}\,}}_\varepsilon (\nu )\). Theorem 1.7 is a generalization of Theorem 1.5.

Theorem 1.7

Let X be an mm-space and \(\nu \) a Borel probability measure on \(\mathbb {R}\), and let \(\varepsilon \ge 0\). We define

where \(\delta ^-({{\,\mathrm{supp}\,}}\nu ;a):=\inf \{\,{t>0\mid a-t\in {{\,\mathrm{supp}\,}}\nu }\,\}\). Then we have the following (1) and (2).

(1) If \(\inf {{\,\mathrm{supp}\,}}\nu >-\infty \), we assume \(\nu (\{\inf {{\,\mathrm{supp}\,}}\nu \})\le {m_X}(\{x\})\) for any \(x\in {{\,\mathrm{supp}\,}}{m_X}\). Then, \(\nu \) is an \((\varepsilon +\Delta ({{\,\mathrm{supp}\,}}\nu ))\)-iso-dominant of X if X satisfies \({{\,\mathrm{ICL}\,}}_\varepsilon (\nu )\).

(2) Assume that \({{\,\mathrm{supp}\,}}\nu \) is connected or \(\nu (\{x\})>0\) for any \(x\in {{\,\mathrm{supp}\,}}\nu \). Then, X satisfies \({{\,\mathrm{ICL}\,}}_{2\varepsilon }(\nu )\) if \(\nu \) is an \(\varepsilon \)-iso-dominant of X.

Theorem 1.7 implies that \((d_0)_*m_{[k]^n}\) is a 1-iso-dominant of the \(l^1\)-discrete hypercube \([k]^n\) since \([k]^n\) satisfies \({{\,\mathrm{ICL}\,}}((d_0)_*m_{[k]^n})\) (see Example 3.38). It is important to note that we cannot eliminate the term \(\Delta ({{\,\mathrm{supp}\,}}\nu )\) from (1). This is because \((d_0)_*m_{[k]^n}\) is not a 0-iso-dominant of \([k]^n\), even though \([k]^n\) satisfies \({{\,\mathrm{ICL}\,}}_0((d_0)_*m_{[k]^n})\).
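The failure of 0-iso-dominance can be seen in the smallest nontrivial case. The following worked example is only an illustration under the assumption \(n=k=2\); the choice of the first coordinate projection \(\mathrm{pr}_1\), which is 1-Lipschitz for the \(l^1\)-metric, is ours.

```latex
% [2]^2 = \{0,1\}^2 with the l^1-metric and the uniform measure m.
\begin{align*}
\nu &:= (d_0)_* m
     = \tfrac14\,\delta_0 + \tfrac12\,\delta_1 + \tfrac14\,\delta_2, \\
\mu &:= (\mathrm{pr}_1)_* m
     = \tfrac12\,\delta_0 + \tfrac12\,\delta_1 \in \mathcal{M}([2]^2;1).
\end{align*}
% A monotonically non-decreasing f : \{0,1,2\} \to \{0,1\} with f_*\nu = \mu
% would satisfy \nu(f^{-1}(0)) = 1/2, where f^{-1}(0) is an initial block
% \{0\} or \{0,1\} of supp \nu. These blocks have \nu-mass 1/4 and 3/4, so no
% such f exists and \nu \succ' \mu fails.
```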

We will show that the condition that \(\nu \) is an \(\varepsilon \)-iso-dominant of X is stable under the convergence with respect to the Prokhorov metric \({{\,\mathrm{{ d}_{\mathrm{P}}}\,}}\) and the observable distance function \({{\,\mathrm{{ d}_{\mathrm{conc}}}\,}}\). This property enables us to obtain the isoperimetric inequality of a continuous space by using a discretization. The following Theorem 1.8 is one of the main theorems of this paper and represents the stability of \(\varepsilon \)-iso-dominant.

Theorem 1.8

Let X and \(X_n, n=1,2,\dots \), be mm-spaces, let \(\nu \) and \(\nu _n, n=1,2,\dots \), be Borel probability measures on \(\mathbb {R}\), and let \(\varepsilon _n, n=1,2,\dots \) be non-negative real numbers. We assume that \(\{X_n\}_n\) \({{\,\mathrm{{ d}_{\mathrm{conc}}}\,}}\)-converges to X and \(\{\nu _n\}_n\) weakly converges to \(\nu \), and \(\{\varepsilon _n\}_n\) converges to a real number \(\varepsilon \) as \(n\rightarrow \infty \) and that \(\nu _n\) is an \(\varepsilon _n\)-iso-dominant of \(X_n\) for any positive integer n. Then, \(\nu \) is an \(\varepsilon \)-iso-dominant of X.

We remark that the distance \({{\,\mathrm{{ d}_{\mathrm{conc}}}\,}}\) gives the concentration topology. It is weaker than the \(\square \)-topology which is like the weak topology of measures.

Now, we obtain a sharp isoperimetric inequality of the n-dimensional continuous \(l^1\)-hypercube \([0,1]^n\). The following Theorem 1.9 is one of the applications of Theorem 1.8. The proof of Theorem 1.8 is in Sect. 4.

Theorem 1.9

The measure \((d_0)_*m_{[0,1]^n}\) is the greatest element of \({\mathcal {M}}([0,1]^n;1)\) with respect to the iso-Lipschitz order \(\succ '\), where \(d_0\) is the distance function from the origin.

By Theorems 1.5 and 1.9, the \(l^1\)-hypercube \([0,1]^n\) satisfies \({{\,\mathrm{ICL}\,}}((d_0)_*m_{[0,1]^n})\). This implies Theorem 1.1.
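The discretization behind this limit argument can be illustrated numerically at the level of the measures \(\nu _k\). The sketch below assumes the rescaled discrete cube \(\frac{1}{k}[k]^n\) (the normalization actually used in the proof may differ) and compares the cumulative distribution function of its distance-from-origin distribution with that of \((d_0)_*m_{[0,1]^n}\), which is the distribution of a sum of n independent uniform variables (the Irwin–Hall distribution). The function names are hypothetical.

```python
from math import comb, factorial, floor


def discrete_cube_cdf(k, n, t):
    """V_k(t) = m({x in [k]^n : (x_1 + ... + x_n) / k <= t}), m uniform."""
    counts = [1]  # counts[s] = number of points with coordinate sum s
    for _ in range(n):
        new = [0] * (len(counts) + k - 1)
        for s, c in enumerate(counts):
            for x in range(k):
                new[s + x] += c
        counts = new
    limit = floor(k * t)
    if limit < 0:
        return 0.0
    return sum(counts[: limit + 1]) / k ** n


def irwin_hall_cdf(n, t):
    """CDF of the sum of n independent uniform variables on [0, 1]."""
    if t <= 0:
        return 0.0
    if t >= n:
        return 1.0
    total = sum((-1) ** j * comb(n, j) * (t - j) ** n
                for j in range(floor(t) + 1))
    return total / factorial(n)


if __name__ == "__main__":
    for k in (10, 100, 400):
        gap = abs(discrete_cube_cdf(k, 2, 0.5) - irwin_hall_cdf(2, 0.5))
        print(k, gap)
```

The printed gap shrinks as k grows, which is consistent with the weak convergence \(\nu _k\rightarrow \nu \) required in Theorem 1.8.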

Similarly, we obtain the following Theorem 1.10 by using Corollary 6 in [4].

Theorem 1.10

The measure \(\xi _*m_{T^n}\) is the greatest element of \({\mathcal {M}}(T^n;1)\) with respect to the iso-Lipschitz order \(\succ '\), where \(\xi \) is the distance function from one point.

By Theorems 1.5 and 1.10, the \(l^1\)-torus \(T^n\) satisfies \({{\,\mathrm{ICL}\,}}(\xi _*m_{T^n})\). This yields Theorem 1.2.

Obtaining sharp isoperimetric inequalities by a similar method requires constraints on the spaces. If the 1-measurement \({\mathcal {M}}(M;1)\) of a compact Riemannian homogeneous space M has the greatest element \(\nu \), then M must be a round sphere [19]. Furthermore, a necessary condition for the existence of the maximum of the 1-measurement is given in Theorem 1.9 in [20].

If the 1-measurement \({\mathcal {M}}(X;1)\) of an mm-space X has the greatest element \(\nu \), then we obtain the precise value of the observable diameter \(\mathrm{ObsDiam}(X;-\kappa )\) of X (Definition 2.9) because we have

\[\mathrm{ObsDiam}(X;-\kappa )=\mathrm{diam}(\nu ;1-\kappa ).\]

Hence, we obtain the values of \(\mathrm{ObsDiam}([0,1]^n;-\kappa )\) and \(\mathrm{ObsDiam}(T^n;-\kappa )\) for any \(\kappa \in (0,1]\). As earlier results, the n-dimensional unit sphere is known to be an mm-space whose 1-measurement has the greatest element (see §9 in [11]). The n-dimensional standard Gaussian space is also such an mm-space by an isoperimetric inequality [6, 23]. In Sect. 4.2, we calculate the observable diameters of some spaces as one of the applications of the iso-Lipschitz order with an additive error.

2 Preliminaries

In this section, we present some basics of mm-spaces. We refer to [12, 21] for more details about the contents of this section.

2.1 Some Basics of mm-spaces

Definition 2.1

(mm-space) Let \((X,{d_X})\) be a complete separable metric space and \(m_X\) a Borel probability measure on X. We call such a triple \((X,{d_X},{m_X})\) an mm-space. We sometimes say that X is an mm-space, for which the metric and measure of X are respectively indicated by \({d_X}\) and \({m_X}\). We put \(tX:=(X, td_X,m_X)\) for \(t>0\). Since an mm-space is equipped with a probability measure, it is nonempty.

We denote the Borel \(\sigma \)-algebra over X by \({\mathcal {B}}_X\). For any point \(x\in X\), any two subsets \(A,B\subset X\) and any real number \(r\ge 0\), we define

where \(\inf \emptyset :=\infty \). We remark that \(U_r(\emptyset )=B_r(\emptyset )=\emptyset \) for any real number \(r\ge 0\). The diameter of A is defined by \(\mathrm{diam}A:=\sup _{x,y\in A}d_X(x,y)\) for \(A\ne \emptyset \) and \(\mathrm{diam}\emptyset :=0\).

Let Y be a topological space and let \(p:X\rightarrow Y\) be a measurable map from a measure space \((X,{m_X})\) to a Borel space \((Y,{\mathcal {B}}_Y)\). The push-forward \(p_*m_X\) of \({m_X}\) by the map p is defined as \(p_*{m_X}(A):={m_X}(p^{-1}(A))\) for any \(A\in {\mathcal {B}}_Y\).

Definition 2.2

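(support) Let \((X,d_X)\) be a metric space and \(m_X\) a Borel measure on X. We define the support \({{\,\mathrm{supp}\,}}m_X\) of \(m_X\) by

\[{{\,\mathrm{supp}\,}}m_X:=\{\,{x\in X\mid m_X(U_\varepsilon (x))>0\text { for any }\varepsilon >0}\,\}.\]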

Proposition 2.3

Let \((X,d_X)\) be a metric space and \(m_X\) a Borel measure on X. Let Y be a separable metric space. Let \(f:X\rightarrow Y\) be a continuous map. Then we have

\[{{\,\mathrm{supp}\,}}f_*m_X=\overline{f({{\,\mathrm{supp}\,}}m_X)}.\]

Proof

Since

we have \({{\,\mathrm{supp}\,}}f_*m_X\subset \overline{f({{\,\mathrm{supp}\,}}m_X)}\) because Y is separable.

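Next, let us prove

\[f({{\,\mathrm{supp}\,}}m_X)\subset {{\,\mathrm{supp}\,}}f_*m_X. \tag{2.1}\]

Take any \(y\in f({{\,\mathrm{supp}\,}}m_X)\). There exists \(x\in {{\,\mathrm{supp}\,}}m_X\) such that \(y=f(x)\). Take any positive real number \(\varepsilon >0\). Since f is continuous, there exists \(\delta >0\) such that

\[f(U_\delta (x))\subset U_\varepsilon (y).\]

Then we have

\[f_*m_X(U_\varepsilon (y))=m_X(f^{-1}(U_\varepsilon (y)))\ge m_X(U_\delta (x))>0\]

and obtain (2.1). Because \({{\,\mathrm{supp}\,}}f_*m_X\) is closed, we have

\[\overline{f({{\,\mathrm{supp}\,}}m_X)}\subset {{\,\mathrm{supp}\,}}f_*m_X.\]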
This completes the proof. \(\square \)

Definition 2.4

(mm-isomorphism) Two mm-spaces X and Y are said to be mm-isomorphic if there exists an isometry \(f:{{\,\mathrm{supp}\,}}{m_X}\rightarrow {{\,\mathrm{supp}\,}}{m_Y}\) such that \(f_*{m_X}={m_Y}\), where \({{\,\mathrm{supp}\,}}{m_X}\) is the support of \({m_X}\). Such an isometry f is called an mm-isomorphism. The mm-isomorphism relation is an equivalence relation on the set of mm-spaces. Denote by \({\mathcal {X}}\) the set of mm-isomorphism classes of mm-spaces.

Definition 2.5

(Lipschitz order) Let X and Y be two mm-spaces. We say that X dominates Y and write \(Y\prec X\) if there exists a 1-Lipschitz map \(f:X\rightarrow Y\) satisfying

\[f_*m_X=m_Y.\]

We call the relation \(\prec \) on \({\mathcal {X}}\) the Lipschitz order.

Proposition 2.6

(Proposition 2.11 in [21]) The Lipschitz order \(\prec \) is a partial order relation on \({\mathcal {X}}\).

Definition 2.7

(Transport plan) Let \(\mu \) and \(\nu \) be two Borel probability measures on \(\mathbb {R}\). We say that a Borel probability measure \(\pi \) on \(\mathbb {R}^2\) is a transport plan between \(\mu \) and \(\nu \) if we have \(({{\,\mathrm{pr}\,}}_1)_*\pi =\mu \) and \(({{\,\mathrm{pr}\,}}_2)_*\pi =\nu \), where \({{\,\mathrm{pr}\,}}_1\) and \({{\,\mathrm{pr}\,}}_2\) are the first and second projections, respectively. We denote by \(\Pi (\mu ,\nu )\) the set of transport plans between \(\mu \) and \(\nu \).

2.2 Observable Diameter and Partial Diameter

The observable diameter is one of the most important invariants of mm-spaces. We remark that the 1-measurement appears in the definition of the observable diameter.

Definition 2.8

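(Partial diameter) Let X be an mm-space and let \(\alpha \in [0,1]\) be a real number. We define the \(\alpha \)-partial diameter \(\mathrm{diam}(X;\alpha )\) of X as

\[\mathrm{diam}(X;\alpha ):=\inf \{\,{\mathrm{diam}A\mid A\in {\mathcal {B}}_X,\ m_X(A)\ge \alpha }\,\}.\]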

For any Borel probability measure \(\mu \) on \(\mathbb {R}\), we set

\[\mathrm{diam}(\mu ;\alpha ):=\mathrm{diam}((\mathbb {R},|\cdot |,\mu );\alpha ).\]
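For a finitely supported measure on \(\mathbb {R}\), the partial diameter can be computed with a sliding window. The sketch below assumes the standard definition \(\mathrm{diam}(\mu ;\alpha )=\inf \{\mathrm{diam}A\mid \mu (A)\ge \alpha \}\) with \(\mathrm{diam}\emptyset =0\); the function name `partial_diameter` and the dictionary representation of \(\mu \) are illustrative choices.

```python
from fractions import Fraction


def partial_diameter(mu, alpha):
    """alpha-partial diameter of a finitely supported measure on R.

    mu: dict {atom: weight} with positive weights (Fractions keep sums exact).
    Assumed definition: inf of diam A over sets A with mu(A) >= alpha.
    """
    if alpha <= 0:
        return 0  # the empty set already has measure >= alpha
    xs = sorted(mu)
    best = None
    mass = 0
    i = 0  # left end of the current window of atoms xs[i..j]
    for j, x in enumerate(xs):
        mass += mu[x]
        # shrink the window from the left while it still carries mass >= alpha
        while mass - mu[xs[i]] >= alpha:
            mass -= mu[xs[i]]
            i += 1
        if mass >= alpha:
            width = x - xs[i]
            best = width if best is None else min(best, width)
    return best  # None if the total mass is below alpha


if __name__ == "__main__":
    quarter = Fraction(1, 4)
    uniform4 = {0: quarter, 1: quarter, 2: quarter, 3: quarter}
    print(partial_diameter(uniform4, Fraction(1, 2)))
    print(partial_diameter(uniform4, 1))
```

An optimal set can always be taken to be a block of consecutive atoms, which is why scanning windows suffices in this discrete setting.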

Definition 2.9

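(Observable diameter) Let X be an mm-space. For any real number \(\kappa \in [0,1]\), we define the \(\kappa \)-observable diameter \(\mathrm{ObsDiam}(X;-\kappa )\) of X as

\[\mathrm{ObsDiam}(X;-\kappa ):=\sup \{\,{\mathrm{diam}(\mu ;1-\kappa )\mid \mu \in {\mathcal {M}}(X;1)}\,\}.\]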

Proposition 2.10

(Proposition 2.18 in [21]) Let X and Y be two mm-spaces and \(\kappa \in [0,1]\) a real number. If \(Y\prec X\), then we obtain

\[\mathrm{ObsDiam}(Y;-\kappa )\le \mathrm{ObsDiam}(X;-\kappa ).\]

2.3 Lévy’s Isoperimetric Inequality

Let \(S^n(r)\) be the n-dimensional sphere of radius \(r>0\) centered at the origin in the \((n+1)\)-dimensional Euclidean space \(\mathbb {R}^{n+1}\). Let the distance \(d_{S^n(r)}(x,y)\) between two points x and y in \(S^n(r)\) be the geodesic distance, and let the measure \(m_{S^n(r)}\) on \(S^n(r)\) be the Riemannian volume measure on \(S^n(r)\) normalized as \(m_{S^n(r)}(S^n(r))=1\). Then, \((S^n(r),\,d_{S^n(r)},\,m_{S^n(r)})\) is an mm-space.

Theorem 2.11

(Lévy’s isoperimetric inequality [10, 16]) For any nonempty closed subset \(\Omega \subset S^n(1)\), we take a metric ball \(B_\Omega \) of \(S^n(1)\) with \(m_{S^n(1)}(B_\Omega )=m_{S^n(1)}(\Omega )\). Then we have

\[m_{S^n(1)}(U_r(\Omega ))\ge m_{S^n(1)}(U_r(B_\Omega ))\]

for any \(r>0\).

2.4 Box Distance and Observable Distance

In this section, we briefly describe the box distance function and the observable distance function.

Definition 2.12

(Parameter) Let \(I:=[0,1)\) and let \({\mathcal {L}}^1\) be the Lebesgue measure on I. Let X be a topological space equipped with a Borel probability measure \({m_X}\). A map \(\varphi :I\rightarrow X\) is called a parameter of X if \(\varphi \) is a Borel measurable map such that

\[\varphi _*{\mathcal {L}}^1={m_X}.\]

Lemma 2.13

(Lemma 4.2 in [21]) Any mm-space has a parameter.

Definition 2.14

(Pseudo-metric) A pseudo-metric \(\rho \) on a set S is defined to be a function \(\rho :S\times S\rightarrow [0,\infty )\) satisfying

(1) \(\rho (x,x)=0,\)

(2) \(\rho (y,x)=\rho (x,y),\)

(3) \(\rho (x,z)\le \rho (x,y)+\rho (y,z)\)

for any \(x,y,z\in S\).

If \(\rho \) is a metric, \(\rho (x,y)=0\) implies \(x=y\) for any two points \(x,y\in S\). However, a pseudo-metric does not necessarily satisfy this condition.

Definition 2.15

(Box distance) For two pseudo-metrics \(\rho _1\) and \(\rho _2\) on \(I:=[0,1)\), we define \(\square (\rho _1,\rho _2)\) to be the infimum of \(\varepsilon \ge 0\) such that there exists a Borel subset \(I_0\subset I\) satisfying

(1) \(|\rho _1(s,t)-\rho _2(s,t)|\le \varepsilon \) for any \(s,t\in I_0\),

(2) \({\mathcal {L}}^1(I_0)\ge 1-\varepsilon \).

We define the box distance \(\square (X,Y)\) between two mm-spaces X and Y to be the infimum of \(\square (\varphi ^*d_X,\psi ^*d_Y)\), where \(\varphi :I\rightarrow X\) and \(\psi :I\rightarrow Y\) run over all parameters of X and Y, respectively, and where \(\varphi ^*{d_X}(s,t):={d_X}(\varphi (s),\varphi (t))\) for \(s,t\in I\).

Theorem 2.16

(Theorem 4.10 in [21]) The function \(\square \) is a metric on the set \({\mathcal {X}}\) of mm-isomorphism classes of mm-spaces.

Definition 2.17

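(Prokhorov metric) The Prokhorov metric \({{\,\mathrm{{ d}_{\mathrm{P}}}\,}}\) is defined by

\[{{\,\mathrm{{ d}_{\mathrm{P}}}\,}}(\mu ,\nu ):=\inf \{\,{\varepsilon >0\mid \mu (U_\varepsilon (A))\ge \nu (A)-\varepsilon \text { for any Borel subset }A\subset X}\,\}\]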

for any two Borel probability measures \(\mu \) and \(\nu \) on a metric space X.

Proposition 2.18

(Proposition 4.12 in [21]) For any two Borel probability measures \(\mu \) and \(\nu \) on a complete separable metric space X, we have

\[\square ((X,\mu ),(X,\nu ))\le 2{{\,\mathrm{{ d}_{\mathrm{P}}}\,}}(\mu ,\nu ).\]

Definition 2.19

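(Ky Fan metric) Let \((X,\mu )\) be a measure space. For two \(\mu \)-measurable maps \(f,g:X\rightarrow \mathbb {R}\), we define the Ky Fan metric \(d_{\mathrm{KF}}=d_{\mathrm{KF}}^\mu \) by

\[d_{\mathrm{KF}}(f,g):=\inf \{\,{\varepsilon \ge 0\mid \mu (\{\,{x\in X\mid |f(x)-g(x)|>\varepsilon }\,\})\le \varepsilon }\,\}.\]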
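On a finite measure space the Ky Fan metric can be computed exactly. The sketch below assumes the standard definition \(d_{\mathrm{KF}}(f,g)=\inf \{\varepsilon \ge 0\mid \mu (|f-g|>\varepsilon )\le \varepsilon \}\); the function name `ky_fan` and the list-based encoding of f, g, and \(\mu \) are illustrative. Since \(\varepsilon \mapsto \mu (|f-g|>\varepsilon )\) is a non-increasing step function, it is enough to examine the intervals between consecutive values of \(|f-g|\).

```python
from math import inf


def ky_fan(f_vals, g_vals, weights):
    """Ky Fan distance between two functions on a finite measure space.

    f_vals[i], g_vals[i]: values of f and g at the i-th point,
    weights[i]: mass of the i-th point.
    """
    diffs = [abs(a - b) for a, b in zip(f_vals, g_vals)]
    breakpoints = sorted({0, *diffs})
    for idx, d in enumerate(breakpoints):
        # mu(|f - g| > eps) is constant for eps in [d, next breakpoint)
        tail = sum(w for diff, w in zip(diffs, weights) if diff > d)
        candidate = max(d, tail)  # smallest eps >= d with tail <= eps
        nxt = breakpoints[idx + 1] if idx + 1 < len(breakpoints) else inf
        if candidate < nxt:
            return candidate
    return breakpoints[-1]  # not reached: the last interval always qualifies


if __name__ == "__main__":
    w = [0.25, 0.25, 0.25, 0.25]
    print(ky_fan([0, 0, 0, 0], [0, 0.1, 0.5, 2.0], w))
    print(ky_fan([0, 0, 0, 0], [0, 0, 0, 3], w))
```

The second call shows the typical behaviour of this metric: a large deviation on a set of small measure contributes only that measure to the distance.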

Lemma 2.20

(Lemma 1.26 in [21]) Let \((X,\mu )\) be a measure space. For two \(\mu \)-measurable maps \(f,g:X\rightarrow \mathbb {R}\), we have

\[{{\,\mathrm{{ d}_{\mathrm{P}}}\,}}(f_*\mu ,g_*\mu )\le d_{\mathrm{KF}}(f,g).\]

Definition 2.21

(Observable distance) For a parameter \(\varphi \) of an mm-space X, we define

and

The Hausdorff distance function \(d_{\mathrm{H}}^{\mathrm{KF}}\) is defined by

for two subsets A and B of Borel measurable functions from I, where the open \(\varepsilon \)-neighborhood of A is defined by

We define the observable distance \({{\,\mathrm{{ d}_{\mathrm{conc}}}\,}}\) between two mm-spaces X and Y by

where \(\varphi :I:=[0,1)\rightarrow X\) and \(\psi :I\rightarrow Y\) are two parameters of X and Y respectively.

Theorem 2.22

(Theorem 5.13 in [21]) The function \({{\,\mathrm{{ d}_{\mathrm{conc}}}\,}}\) is a metric on \({\mathcal {X}}\).

Proposition 2.23

(Proposition 5.5 in [21]) For two mm-spaces X and Y, we have \({{\,\mathrm{{ d}_{\mathrm{conc}}}\,}}(X,Y)\le \square (X,Y)\).

3 Isoperimetric Comparison Condition

3.1 Isoperimetric Comparison Condition Without an Error

In this subsection, we investigate the relation between the iso-dominant and the isoperimetric comparison condition. We refer to [20] for more details about the contents of this subsection. The main aim of this subsection is to introduce Theorems 3.5 and 3.7 below, which are extensions of Theorem 1.5. In a continuous space, the isoperimetric problem is expressed in terms of an isoperimetric profile. Let X be an mm-space. The boundary measure of a Borel set \(A \subset X\) is defined to be

\[m_X^+(A):=\limsup _{\varepsilon \rightarrow 0+}\frac{m_X(U_\varepsilon (A))-m_X(A)}{\varepsilon }.\]

Set

\[\mathrm{Im}m_X:=\{\,{m_X(A)\mid A\subset X\text { is a Borel set}}\,\}.\]

The isoperimetric profile \(I_X : \mathrm{Im}m_X \rightarrow [0,+\infty )\) of X is defined by

\[I_X(v):=\inf \{\,{m_X^+(A)\mid A\subset X\text { is a Borel set with }m_X(A)=v}\,\}\]

for \(v \in \mathrm{Im}m_X\). The following isoperimetric comparison condition is a generalization of an isoperimetric inequality. It is a derivative of the \({{\,\mathrm{ICL}\,}}\) condition.

Definition 3.1

(Isoperimetric comparison condition [20]) We say that X satisfies the isoperimetric comparison condition \({{\,\mathrm{IC}\,}}(\nu )\) for a Borel probability measure \(\nu \) on \(\mathbb {R}\) if

where V means the cumulative distribution function of \(\nu \) and \({\mathcal {L}}^1\) the one-dimensional Lebesgue measure on \(\mathbb {R}\).

We define the following iso-Lipschitz order in order to define an iso-dominant (see Definition 1.4).

Definition 3.2

(Iso-Lipschitz order [11, §9]) Let \(\mu ,\nu \in {\mathcal {P}}(\mathbb {R})\). We say that \(\mu \) iso-dominates \(\nu \) if there exists a monotonically non-decreasing 1-Lipschitz function \(f:{{\,\mathrm{supp}\,}}\mu \rightarrow {{\,\mathrm{supp}\,}}\nu \) such that \(f_*\mu =\nu \), where \({{\,\mathrm{supp}\,}}\mu \) is the support of \(\mu \). We write \(\mu \succ '\nu \) if \(\mu \) iso-dominates \(\nu \).
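For finitely supported measures, Definition 3.2 can be decided directly. A monotonically non-decreasing map f with \(f_*\mu =\nu \) must send consecutive blocks of atoms of \(\mu \) onto the atoms of \(\nu \) in increasing order, so the candidate f is unique and only its 1-Lipschitz property remains to be checked. The following is a minimal sketch under that finite-support assumption; the function name `iso_dominates` and the dictionary encoding are hypothetical.

```python
from fractions import Fraction


def iso_dominates(mu, nu):
    """Decide mu >' nu for finitely supported probability measures on R.

    mu, nu: dicts {atom: weight} with positive Fraction weights summing to 1.
    """
    xs, ys = sorted(mu), sorted(nu)
    image = {}
    j = 0
    acc = Fraction(0)
    for x in xs:
        if j == len(ys):
            return False  # atoms of mu left over with no target
        image[x] = ys[j]
        acc += mu[x]
        if acc > nu[ys[j]]:
            return False  # block masses cannot match: no monotone f exists
        if acc == nu[ys[j]]:
            j += 1
            acc = Fraction(0)
    if j != len(ys):
        return False
    # for a monotone map on R it suffices to test consecutive atoms
    return all(image[b] - image[a] <= b - a for a, b in zip(xs, xs[1:]))


if __name__ == "__main__":
    third = Fraction(1, 3)
    mu = {0: third, 1: third, 2: third}
    nu = {0: third, 1: 2 * third}
    print(iso_dominates(mu, nu), iso_dominates(nu, mu))
```

In the example, f collapses the atoms 1 and 2 of \(\mu \) onto the atom 1 of \(\nu \), while no map in the opposite direction can split an atom.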

Definition 3.3

(Iso-mm-isomorphism) Two Borel probability measures \(\mu \) and \(\nu \) on \(\mathbb {R}\) are said to be iso-mm-isomorphic if there exists a real number c such that \(({{\,\mathrm{id}\,}}_\mathbb {R}+c)_*\mu =\nu \), where \({{\,\mathrm{id}\,}}_\mathbb {R}\) is the identity function on \(\mathbb {R}\). The iso-mm-isomorphism relation is an equivalence relation on the set of Borel probability measures on \(\mathbb {R}\).

In the definition of iso-dominant (Definition 1.4), an upper bound of a 1-measurement appears. An upper bound is defined on a partially ordered set. The following Proposition 3.4 asserts that a 1-measurement is a partially ordered set.

Proposition 3.4

The iso-Lipschitz order is a partial order on the set of iso-mm-isomorphism classes of Borel probability measures on \(\mathbb {R}\).

We next state Theorem 3.5, which is an extension of Theorem 1.5. It prepares the ground for the proof of Theorem 1.7, which is a generalization of Theorem 1.5.

Denote by \({\mathcal {V}}\) the set of Borel probability measures on \(\mathbb {R}\) absolutely continuous with respect to the one-dimensional Lebesgue measure \({\mathcal {L}}^1\) and with connected support.

An mm-space X is said to be essentially connected if we have \(m_X^+(A)>0\) for any closed set \(A\subset X\) with \(0<m_X(A)<1\).

Theorem 3.5

([20]) Let X be an essentially connected mm-space and let \(\nu \in {\mathcal {V}}\). Then the following (1), (2), and (3) are all equivalent.

(1) The measure \(\nu \) is an iso-dominant of X.

(2) The space X satisfies \({{\,\mathrm{ICL}\,}}(\nu )\).

(3) The space X satisfies \({{\,\mathrm{IC}\,}}(\nu )\).

Gromov claimed the following Corollary 3.21 without proof in Sect. 9 of [11]. It is a variant of Theorem 3.5. We prepare to state it, and we extend Theorem 3.5 in order to prove it.

Denote by \({\mathcal {F}}(X)\) the set of all closed subsets of X. Put \(m_X({\mathcal {F}}(X)):=\{\,{m_X(A)\mid A\in {\mathcal {F}}(X)}\,\}\). The isoperimetric profile with respect to closed subsets \(I_X^{\mathrm{cl}} : m_X({\mathcal {F}}(X)) \rightarrow [0,+\infty )\) of X is defined by

\[I_X^{\mathrm{cl}}(v):=\inf \{\,{m_X^+(A)\mid A\in {\mathcal {F}}(X),\ m_X(A)=v}\,\}\]

for \(v \in m_X({\mathcal {F}}(X))\).

If we obtain a Borel set \(A_0\subset X\) such that \(I_X(m_X(A_0))=m_X^+(A_0)\), an isoperimetric inequality on X is represented by

\[m_X^+(A)\ge m_X^+(A_0)\]

for any \(A\subset X\) with \(m_X(A)=m_X(A_0)\). The following Definition 3.6 is a variant of Definition 3.1.

Definition 3.6

(Isoperimetric comparison condition with respect to closed subsets) We say that X satisfies the isoperimetric comparison condition with respect to closed subsets \({{\,\mathrm{IC}\,}}^{\mathrm{cl}}(\nu )\) for a Borel probability measure \(\nu \) on \(\mathbb {R}\) if

where V denotes the cumulative distribution function of \(\nu \).

The following Theorem 3.7 is an extension of Theorem 3.5.

Theorem 3.7

Let X be an essentially connected mm-space and let \(\nu \in {\mathcal {V}}\). Then the following (1), (2), (3), and (4) are all equivalent.

(1) The measure \(\nu \) is an iso-dominant of X.

(2) The space X satisfies \({{\,\mathrm{ICL}\,}}(\nu )\).

(3) The space X satisfies \({{\,\mathrm{IC}\,}}(\nu )\).

(4) The space X satisfies \({{\,\mathrm{IC}\,}}^{\mathrm{cl}}(\nu )\).

Let us prove Theorem 3.7. By Theorem 3.5, it suffices to prove that (3) implies (4) and that (4) implies (2). Proposition 3.8 below shows that (3) implies (4), and Theorem 3.15 below shows that (4) implies (2).

Proposition 3.8

Let X be an mm-space and \(\nu \) a Borel probability measure on \(\mathbb {R}\). If X satisfies \({{\,\mathrm{IC}\,}}(\nu )\), then X satisfies \({{\,\mathrm{IC}\,}}^{\mathrm{cl}}(\nu )\).

Proof

This follows from \(I_X\le I_X^{\mathrm{cl}}\) on \(m_X({\mathcal {F}}(X))\). \(\square \)

To prove Theorem 3.15 below, we introduce Definition 3.9 and prepare some lemmas. These lemmas are also used in the proof of Theorem 1.7.

Definition 3.9

(Generalized inverse function) For a monotonically non-decreasing and right-continuous function \(F : \mathbb {R}\rightarrow [ 0, 1 ]\) with

\[\lim _{t\rightarrow -\infty }F(t)=0\quad \text {and}\quad \lim _{t\rightarrow \infty }F(t)=1,\]

we define a generalized inverse function \({\tilde{F}} : [ 0, 1 ] \rightarrow \mathbb {R}\) by

for \(s \in [ 0, 1 ]\).

Lemma 3.10

For any F as in Definition 3.9, \({\tilde{F}}\) takes finite values and \({\tilde{F}}|_{(0,1)}\) is non-decreasing.

Proof

Fix any real number \(s\in (0,1)\) and define \(A:=\{\,{t\in {\mathbb {R}}\mid s\le F(t)}\,\}\). The set A is nonempty because \(\lim _{t\rightarrow \infty }F(t)=1\). Since \(\lim _{t\rightarrow -\infty }F(t)=0\), there exists \(t_0\in \mathbb {R}\) such that \(F(t_0)<s\). For any element \(t\in A\), we have \(F(t_0)<s\le F(t)\). Since F is non-decreasing, the inequality \(t_0<t\) follows. This implies that \(t_0\) is a lower bound of A. Hence, \({\tilde{F}}(s)\) takes finite values. The function \({\tilde{F}}\) is a non-decreasing function on (0, 1) because we have \(\{\,{t\in {\mathbb {R}}\mid s'\le F(t)}\,\}\supset \{\,{t\in {\mathbb {R}}\mid s\le F(t)}\,\}\) for any \(0<s'\le s<1\). This completes the proof. \(\square \)

Lemma 3.11

(cf. [19]) For any F as in Definition 3.9, we have the following (1), (2), and (3).

(1) \(F\circ {\tilde{F}}(s)\ge s\) for any real number s with \(0 \le s < 1\).

(2) \(\tilde{F} \circ F(t) \le t\) for any real number t with \(0<F(t)<1\).

(3) \(\tilde{F}^{-1}((\,-\infty ,t\,]) \setminus \{0,1\}=(0,F(t)]\setminus \{1\}\) for any real number t.

Proof

First we prove (1). If \(s=0\), we have (1) because \(\mathrm{Im}F\subset [0,1]\). Fix a real number \(s\in (0,1)\) and define \(A:=\{\,{t\in {\mathbb {R}}\mid s\le F(t)}\,\}\ne \emptyset \). By the definition of infimum, we have

for any \(t'\in A\). For any \(t'>\inf A\), we have \(t'\in A\) because F is non-decreasing. By this, we have

By the right continuity of F, we obtain

Therefore, we have

Next we prove (2). We take any real number \(t\in {\mathbb {R}}\) with \(0<F(t)<1\), then we have

Last we prove (3). Take any real number \(s\in {\tilde{F}}^{-1}((-\infty ,t])\setminus \{0,1\}\). It follows from \({\tilde{F}}(s)\le t\) and the non-decreasing property of F that \(F\circ {\tilde{F}}(s)\le F(t)\). This implies that \(s\le F(t)\) by (1) and we have \(s\in (0,F(t)]\setminus \{1\}\). Conversely, take any real number \(s\in (0, F(t)]\setminus \{1\}\). Since \(s\le F(t)\), we obtain \({\tilde{F}}(s)\le t\) by the definition of \({\tilde{F}}(s)\). Hence \(s\in {\tilde{F}}^{-1}((-\infty ,t])\setminus \{0,1\}\). This completes the proof. \(\square \)

Remark 3.12

The generalized inverse function \({\tilde{F}}\) of a function F is a Borel measurable function. In fact, \({\tilde{F}}|_{(0,1)}\) is monotonically non-decreasing.

Lemma 3.13

Let \(\mu \) be a Borel probability measure on \(\mathbb {R}\) with cumulative distribution function F. Then we have

\[{\tilde{F}}_*\left( {\mathcal {L}}^1|_{[\,0,1\,]}\right) =\mu ,\]

where \({\mathcal {L}}^1|_{[\,0,1\,]}\) is the one-dimensional Lebesgue measure on \([\,0,1\,]\).

Proof

By Lemma 3.11(3), we have

for any \(t>0\). This completes the proof. \(\square \)
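Lemmas 3.11 and 3.13 can be checked numerically for a finitely supported measure. The following is only a sketch under stated assumptions: it uses the formula \({\tilde{F}}(s)=\inf \{\,t\mid s\le F(t)\,\}\) for \(0<s<1\), as in the proof of Lemma 3.10, and it does not treat the endpoints \(s=0,1\). The helper names `make_cdf`, `generalized_inverse`, and `pushforward_of_lebesgue` are hypothetical and do not come from the paper.

```python
from collections import Counter
from fractions import Fraction


def make_cdf(atoms):
    """Return (F, points) for a finitely supported probability measure.

    `atoms` maps each support point to its mass; F(t) = mu((-inf, t]).
    """
    points = sorted(atoms)

    def F(t):
        return sum((atoms[p] for p in points if p <= t), Fraction(0))

    return F, points


def generalized_inverse(F, points, s):
    """inf{t : s <= F(t)} for 0 < s < 1 (endpoints are not treated here).

    For a finitely supported measure the infimum is attained at the
    first support point whose cumulative mass reaches s.
    """
    if not 0 < s < 1:
        raise ValueError("this sketch only treats 0 < s < 1")
    return next(p for p in points if s <= F(p))


def pushforward_of_lebesgue(F, points, n):
    """Law of the generalized inverse sampled at the midpoints (2j+1)/(2n).

    This agrees exactly with the pushforward of Lebesgue measure on
    [0, 1] whenever every value of F is a multiple of 1/n.
    """
    counts = Counter(
        generalized_inverse(F, points, Fraction(2 * j + 1, 2 * n))
        for j in range(n)
    )
    return {p: Fraction(c, n) for p, c in counts.items()}


# Hypothetical example measure with atoms at 0, 1 and 3.
atoms = {0: Fraction(1, 5), 1: Fraction(1, 2), 3: Fraction(3, 10)}
F, points = make_cdf(atoms)
law = pushforward_of_lebesgue(F, points, 100)
```

In this example `law` coincides with `atoms`, which is the statement of Lemma 3.13 in the atomic case. Exact rational arithmetic is used so that the comparison involves no rounding.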

Lemma 3.14

(Lemma 3.13 in [20]) Let \(g:\mathbb {R}\rightarrow \mathbb {R}\) be a monotonically non-decreasing function, \(f:\mathbb {R}\rightarrow [\,0,+\infty \,)\) a Borel measurable function, and \(A\subset \mathbb {R}\) a Borel set. Then we have

Theorem 3.15

Let X be an essentially connected mm-space and \(\nu \in {\mathcal {V}}\). If X satisfies \({{\,\mathrm{IC}\,}}^{\mathrm{cl}}(\nu )\), then X satisfies \({{\,\mathrm{ICL}\,}}(\nu )\).

Proof

Setting \(E:=({{\,\mathrm{supp}\,}}\nu )^\circ \), we easily see the bijectivity of \(V|_E : E \rightarrow (\,0,1\,)\). We define a function \(\rho : \mathbb {R}\rightarrow \mathbb {R}\) by

for a real number t. We see that \(\rho = V'\) \({\mathcal {L}}^1\)-a.e. and that \(\rho \) is a density function of \(\nu \) with respect to \({\mathcal {L}}^1\). Since \(I_X^{\mathrm{cl}}\circ V \ge \rho \) everywhere on \(V^{-1}(m_X({\mathcal {F}}(X)))\), we have \(I_X^{\mathrm{cl}} \ge \rho \circ (V|_E)^{-1}\) on \(m_X({\mathcal {F}}(X))\setminus \{0,1\}\). To prove \({{\,\mathrm{ICL}\,}}(\nu )\), we take two real numbers \(a,b\in {{\,\mathrm{supp}\,}}\nu \) with \(a\le b\) and a closed set \(A\subset X\) with \(m_X(A)>0\) and \(V(a)\le m_X(A)\). Note that restricting A to closed sets in Definition 1.3 gives a condition equivalent to the original one. We may assume \(m_X(B_{b-a}(A)) < 1\). Let s be any real number with \(0 \le s \le b-a\). Noting that \(m_X(B_s(A))\in m_X({\mathcal {F}}(X))\setminus \{0,1\}\), we see

Setting \(g(s):=m_X(B_s(A))\), we have

and hence

where we remark that \(g'(s) > 0\) by essential connectedness of X. Since \(g(0)=m_X(A)\), we have

if \(V(a)>0\). If \(V(a)=0\), we have \(a=\inf {{\,\mathrm{supp}\,}}\nu \le (V|_E)^{-1}\circ g(0)\) since \((V|_E)^{-1}\circ g(0)\in E=({{\,\mathrm{supp}\,}}\nu )^\circ \). By Lemmas 3.14 and 3.13,

which implies

This completes the proof. \(\square \)

This completes the proof of Theorem 3.7. At the end of this subsection, we prove Corollary 3.21 using Theorem 3.7. To this end, we prepare some definitions and propositions.

Proposition 3.16

([20]) Let X and Y be mm-spaces such that X dominates Y. Then we have

In particular, if X satisfies \({{\,\mathrm{IC}\,}}^{\mathrm{cl}}(\nu )\) for a Borel probability measure \(\nu \) on \(\mathbb {R}\), then Y also satisfies \({{\,\mathrm{IC}\,}}^{\mathrm{cl}}(\nu )\).

Proof

Since X dominates Y, there is a 1-Lipschitz map \(f : X \rightarrow Y\) such that \(f_*m_X= m_Y\). For any closed set \(A\subset Y\), we see \(f^{-1}(B_\varepsilon (A)) \supset B_\varepsilon (f^{-1}(A))\) by the 1-Lipschitz continuity of f, and hence

which implies that, for any \(v\in m_Y({\mathcal {F}}(Y))\),

The rest is easy. This completes the proof. \(\square \)

Definition 3.17

(Dominant [11, §9]) We call a Borel probability measure \(\nu \) on \(\mathbb {R}\) a dominant of an mm-space X if \(\nu \) is an upper bound of \({\mathcal {M}}(X;1)\) with respect to the Lipschitz order \(\succ \). That means \((\mathbb {R},|\cdot |,\nu )\succ (\mathbb {R},|\cdot |,\mu )\) for all \(\mu \in {\mathcal {M}}(X;1)\). The Lipschitz order \(\succ \) is defined in Definition 2.5.

Using Proposition 3.16, we prove the following Proposition 3.18.

Proposition 3.18

(Gromov [11, §9]) If \(\nu \) is a dominant of an mm-space X, then

where \(I_\nu ^{\mathrm{cl}}\) is the isoperimetric profile with respect to closed subsets of \((\mathbb {R},\nu )\).

Proof

We take any real number \(v \in m_X({\mathcal {F}}(X))\) and fix it. If \(v = 0\), then it is obvious that \(v \in \nu ({\mathcal {F}}(\mathbb {R}))\) and \(I_\nu ^{\mathrm{cl}}(v) = 0 = I_X^{\mathrm{cl}}(v)\). Assume \(v > 0\). For any \(\varepsilon >0\) there is a closed set \(A\subset X\) such that \(m_X(A)=v\) and \(m_X^+(A)<I_X^{\mathrm{cl}}(v)+\varepsilon \). Note that A is nonempty because \(v > 0\). Define a function \(f:X\rightarrow \mathbb {R}\) by \(f(x):=d_X(x,A)\). Then f is 1-Lipschitz continuous. Since \(f_*m_X((\,-\infty ,0\,]) = m_X(A) = v\), we have

Since \(\nu \) dominates \(f_*m_X\), Proposition 3.16 implies that \(v\in \nu ({\mathcal {F}}(\mathbb {R}))\) and \(I_\nu ^{\mathrm{cl}}(v)\le I_{f_*m_X}^{\mathrm{cl}}(v)\). We therefore have \(I_\nu ^{\mathrm{cl}}(v)<I_X^{\mathrm{cl}}(v)+\varepsilon \). Since \(\varepsilon >0\) is arbitrary, we obtain \(I_\nu ^{\mathrm{cl}}(v)\le I_X^{\mathrm{cl}}(v)\). This completes the proof. \(\square \)

Definition 3.19

(Iso-simpleness [11, §9]) A Borel probability measure \(\nu \) on \(\mathbb {R}\) is said to be iso-simple if \(\nu \in {\mathcal {V}}\) and if

Remark 3.20

For any Borel probability measure \(\nu \) on \(\mathbb {R}\), we always observe \(I_\nu \circ V\le V'\) \({\mathcal {L}}^1\)-a.e. In fact, we have

\({\mathcal {L}}^1\)-a.e. t.

Gromov [11, §9] stated the following corollary without proof.

Corollary 3.21

(Gromov [11, §9]) Let X be an essentially connected mm-space and \(\nu \) an iso-simple Borel probability measure on \(\mathbb {R}\). Then, we have \(I_\nu ^{\mathrm{cl}} \le I_X^{\mathrm{cl}}\) on \(m_X({\mathcal {F}}(X))\) if and only if \(\nu \) is an iso-dominant of X.

Proof

We assume that \(\nu \) is an iso-dominant of X. By Proposition 3.18, we have \(I_\nu ^{\mathrm{cl}} \le I_X^{\mathrm{cl}}\) on \(m_X({\mathcal {F}}(X))\). Conversely, we assume \(I_\nu ^{\mathrm{cl}} \le I_X^{\mathrm{cl}}\) on \(m_X({\mathcal {F}}(X))\). Then we have \(I_\nu \circ V\le I_\nu ^{\mathrm{cl}}\circ V\le I_X^{\mathrm{cl}}\circ V\) on \(V^{-1}(m_X({\mathcal {F}}(X)))\). Since \(\nu \) is iso-simple, we have

This means that X satisfies \({{\,\mathrm{IC}\,}}^{\mathrm{cl}}(\nu )\). By Theorem 3.7, \(\nu \) is an iso-dominant of X. This completes the proof. \(\square \)

3.2 Iso-Lipschitz Order with an Error

In this subsection, we define the iso-Lipschitz order with an additive error and present some of its properties. To define the iso-Lipschitz order with an error, we use transport plans (Definition 2.7) and the following iso-deviation.

Definition 3.22

(Iso-deviation) We define the iso-deviation \({{\,\mathrm{dev}\,}}_\succ \) of a subset \(S\subset \mathbb {R}^2\) by

\[{{\,\mathrm{dev}\,}}_\succ S:=\sup \{\,{y-y'-\max \{\,{x-x',0}\,\}\mid (x,y),(x',y')\in S}\,\}\]

if S is nonempty. We set \({{\,\mathrm{dev}\,}}_\succ \emptyset :=0\).

The iso-deviation evaluates the deviation from the monotonically non-decreasing and 1-Lipschitz property. The following iso-Lipschitz order with an error is a generalization of the iso-Lipschitz order (Definition 3.2).
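For a finite set S the iso-deviation can be computed by brute force. The sketch below assumes that \({{\,\mathrm{dev}\,}}_\succ S\) is the supremum of \(y-y'-\max \{x-x',0\}\) over pairs of points of S, which is the form used in the proof of Theorem 1.7 (2). The function name `iso_deviation` and the sample sets are hypothetical.

```python
from itertools import product


def iso_deviation(S):
    """Brute-force iso-deviation of a finite subset S of the plane.

    Assumed formula: the maximum of y - y' - max(x - x', 0) over all
    ordered pairs of points of S, and 0 for the empty set.
    """
    S = list(S)
    if not S:
        return 0
    return max(
        y - yp - max(x - xp, 0)
        for (x, y), (xp, yp) in product(S, repeat=2)
    )


# Graph of the non-decreasing 1-Lipschitz function x -> min(x, 2).
monotone_graph = [(x, min(x, 2)) for x in range(5)]

# Two points on a decreasing graph: monotonicity fails.
decreasing_pair = [(0, 0), (1, -1)]

# Two points on a graph of slope 2: the 1-Lipschitz property fails.
steep_pair = [(0, 0), (1, 2)]
```

With this formula the graph of a non-decreasing 1-Lipschitz function has iso-deviation 0, while each of the two violating pairs has iso-deviation 1, once through a failure of monotonicity and once through a failure of the Lipschitz bound.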

Definition 3.23

(Iso-Lipschitz order \(\succ '_{(s,t)}\) with error (s, t)) Let \(\mu \) and \(\nu \) be two Borel probability measures on \(\mathbb {R}\) and \(s,t\ge 0\) two real numbers. We say that \(\mu \) iso-dominates \(\nu \) with error (s, t), and write \(\mu \succ '_{(s,t)}\nu \), if there exist a transport plan \(\pi \in \Pi (\mu ,\nu )\) and a Borel subset \(S\subset \mathbb {R}^2\) such that \({{\,\mathrm{dev}\,}}_\succ S\le s\) and \(1-\pi (S)\le t\).

The following Propositions 3.24 and 3.25 are useful properties of the iso-deviation. By Proposition 3.24, we obtain \({{\,\mathrm{dev}\,}}_\succ \overline{S}\le \varepsilon \) if we check \({{\,\mathrm{dev}\,}}_\succ S\le \varepsilon \) for any real number \(\varepsilon \ge 0\). Proposition 3.25 implies that a subset \(S\subset \mathbb {R}^2\) determines a 1-Lipschitz function if we have \({{\,\mathrm{dev}\,}}_\succ S=0\).

Proposition 3.24

For a subset \(S\subset \mathbb {R}^2\), we have

\[{{\,\mathrm{dev}\,}}_\succ \overline{S}={{\,\mathrm{dev}\,}}_\succ S.\]

Proposition 3.25

Let \(S\subset \mathbb {R}^2\). For any two points \((x,y),(x',y')\in S\), we have

\[|y-y'|\le |x-x'|+{{\,\mathrm{dev}\,}}_\succ S.\]

Proof

Take any \((x,y),(x',y')\in S\). By symmetry, we may assume that \(y\ge y'\). Then we have

This completes the proof. \(\square \)

Theorem 3.26

Let \(\mu \) and \(\nu \) be two Borel probability measures on \(\mathbb {R}\). Then \(\mu \succ '\nu \) if and only if \(\mu \succ '_{(0,0)}\nu \).

Proof

Assume that \(\mu \succ '\nu \). Then, there exists a monotonically non-decreasing 1-Lipschitz function \(f:{{\,\mathrm{supp}\,}}\mu \rightarrow {{\,\mathrm{supp}\,}}\nu \) such that \(f_*\mu =\nu \). We put \(\pi :=({{\,\mathrm{id}\,}}_\mathbb {R},f)_*\mu \in \Pi (\mu ,\nu )\). Let us prove \({{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi =0\). By Proposition 2.3, we have

which implies that

by Proposition 3.24. Hence, it suffices to prove \({{\,\mathrm{dev}\,}}_\succ (({{\,\mathrm{id}\,}}_\mathbb {R}, f)({{\,\mathrm{supp}\,}}\mu ))=0\). Take any two points \((x_1,y_1),(x_2,y_2)\in ({{\,\mathrm{id}\,}}_\mathbb {R},f)({{\,\mathrm{supp}\,}}\mu )\). Then, we have \(x_1,x_2\in {{\,\mathrm{supp}\,}}\mu \) and \(y_1=f(x_1), y_2=f(x_2)\). In the case that \(x_1\ge x_2\), we have

because f is 1-Lipschitz. In the case that \(x_1\le x_2\), we have \(f(x_1)\le f(x_2)\) since f is monotonically non-decreasing. Then we have

Therefore we obtain \({{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi = 0\). It follows that \(\mu \succ '_{(0,0)}\nu \).

Conversely, assume that \(\mu \succ _{(0,0)}'\nu \). Then there exists \(\pi \in \Pi (\mu ,\nu )\) such that \({{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi =0\). Take any point \(x\in {{\,\mathrm{supp}\,}}\mu \). We now claim that there exists a unique point \(y\in {{\,\mathrm{supp}\,}}\nu \) such that \((x,y)\in {{\,\mathrm{supp}\,}}\pi \). Let us prove the existence of y. Take any \(x\in {{\,\mathrm{supp}\,}}\mu \). By Proposition 2.3, we have

Hence, there exists \(\{(x_n,y_n) \}_{n\in \mathbb {N}}\subset {{\,\mathrm{supp}\,}}\pi \) such that \(x_n\) converges to x. By Proposition 3.25, we have

for any positive integers m and n. This means that \(\{y_n \}\) is a Cauchy sequence. Therefore, \(\{y_n \}\) converges to some \(y\in \mathbb {R}\). Since \({{\,\mathrm{supp}\,}}\pi \) is closed, we have \((x,y)\in {{\,\mathrm{supp}\,}}\pi \). In addition, we have

The uniqueness of \(y\in {{\,\mathrm{supp}\,}}\nu \) follows from \({{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi =0\) and Proposition 3.25.

Now, we define a function \(f:{{\,\mathrm{supp}\,}}\mu \rightarrow {{\,\mathrm{supp}\,}}\nu \) by \(f(x):=y\) for \(x\in {{\,\mathrm{supp}\,}}\mu \), where \(y\in {{\,\mathrm{supp}\,}}\nu \) satisfies \((x,y)\in {{\,\mathrm{supp}\,}}\pi \). By \({{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi =0\) and Proposition 3.25, f is a 1-Lipschitz function. Let us prove that f is monotonically non-decreasing. Take any \(x,x'\in {{\,\mathrm{supp}\,}}\mu \) with \(x\le x'\). Then we have

It remains to show \(f_*\mu =\nu \). Let us prove

By the definition of f, we have \({{\,\mathrm{supp}\,}}\pi \supset \{\,{(x,f(x))\mid x\in {{\,\mathrm{supp}\,}}\mu }\,\}\). We now check \({{\,\mathrm{supp}\,}}\pi =\{\,{(x,f(x))\mid x\in {{\,\mathrm{supp}\,}}\mu }\,\}\). Take any point \((x,y)\in {{\,\mathrm{supp}\,}}\pi \). By Proposition 2.3, we have

Because f is well-defined, we have \(y=f(x)\). Thus we have \((x,y)\in \{\,{(x,f(x))\mid x\in {{\,\mathrm{supp}\,}}\mu }\,\}\). Therefore we obtain (3.1).

By (3.1), we have

for any Borel sets A and B of \(\mathbb {R}\). Since

we have \(\pi =(\mathrm {id}_\mathbb {R},f)_*\mu \), which implies \(\nu =(\mathrm {pr}_2)_*\pi =f_*\mu \). This completes the proof. \(\square \)

Proposition 3.27

Let \(d_{l^1}\) be the \(l^1\)-distance \(d_{l^1}((x,y),(x',y')):=|x-x'|+|y-y'|\) on \(\mathbb {R}^2\) and \(d_H\) the Hausdorff distance function with respect to \(d_{l^1}\). For any two closed subsets \(S,S'\subset \mathbb {R}^2\), we have

\[|{{\,\mathrm{dev}\,}}_\succ S-{{\,\mathrm{dev}\,}}_\succ S'|\le 2d_H(S,S').\]

Proof

Take any real number \(\varepsilon >0\) with \(\varepsilon >d_H(S,S')\). We have \(S'\subset U_\varepsilon (S)\). Let us prove \({{\,\mathrm{dev}\,}}_\succ U_\varepsilon (S)\le {{\,\mathrm{dev}\,}}_\succ S+2\varepsilon \). Take a point \((x_i,y_i)\in U_\varepsilon (S)\) for \(i=1,2\). Then there exists \((x'_i,y'_i)\in S\) such that \(d_{l^1}((x_i,y_i),(x'_i,y'_i))<\varepsilon \). Now, we have

Therefore we obtain

This implies \({{\,\mathrm{dev}\,}}_\succ S'-{{\,\mathrm{dev}\,}}_\succ S\le 2d_H(S,S')\). By exchanging S for \(S'\), we also obtain \({{\,\mathrm{dev}\,}}_\succ S-{{\,\mathrm{dev}\,}}_\succ S'\le 2d_H(S,S')\). \(\square \)
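The bound obtained in this proof, \(|{{\,\mathrm{dev}\,}}_\succ S-{{\,\mathrm{dev}\,}}_\succ S'|\le 2d_H(S,S')\), can be tested on finite sets. The following sketch assumes the pairwise formula \(y-y'-\max \{x-x',0\}\) for the iso-deviation. All function names are hypothetical, and the random test is a sanity check on integer points, not a proof.

```python
import random
from itertools import product


def iso_deviation(S):
    """Assumed formula: max of y - y' - max(x - x', 0) over pairs in S."""
    S = list(S)
    if not S:
        return 0
    return max(
        y - yp - max(x - xp, 0)
        for (x, y), (xp, yp) in product(S, repeat=2)
    )


def l1(p, q):
    """l^1 distance between two points of the plane."""
    return abs(p[0] - q[0]) + abs(p[1] - q[1])


def hausdorff_l1(S, T):
    """Hausdorff distance between nonempty finite sets for the l^1 metric."""
    forward = max(min(l1(p, q) for q in T) for p in S)
    backward = max(min(l1(p, q) for p in S) for q in T)
    return max(forward, backward)


def random_set(rng):
    """A small random set of integer points (hypothetical test data)."""
    size = rng.randint(1, 6)
    return [(rng.randint(-5, 5), rng.randint(-5, 5)) for _ in range(size)]


def check_stability(trials=200, seed=0):
    """Return True if the bound holds on every randomly drawn pair of sets."""
    rng = random.Random(seed)
    for _ in range(trials):
        S, T = random_set(rng), random_set(rng)
        if abs(iso_deviation(S) - iso_deviation(T)) > 2 * hausdorff_l1(S, T):
            return False
    return True


S = [(0, 0), (2, 1), (4, 3)]
S_prime = [(0, 1), (2, 0), (4, 3)]
```

Integer coordinates keep every comparison exact. For the two sets above, the iso-deviations are 0 and 1 and the Hausdorff distance is 1, so the bound holds with room to spare.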

Theorem 3.28

Let \(\mu \) and \(\nu \) be two Borel probability measures on \(\mathbb {R}\) and \(s,t\ge 0\). If \(\mu \succ '_{(s+\varepsilon ,t+\varepsilon )}\nu \) for every \(\varepsilon >0\), then we have \(\mu \succ '_{(s,t)}\nu \).

Proof

Suppose that \(\mu \succ '_{(s+\frac{1}{n},t+\frac{1}{n})}\nu \) for any positive integer n. For any positive integer n, there exist \(\pi _n\in \Pi (\mu ,\nu )\) and a closed subset \(S_n\subset \mathbb {R}^2\) such that \({{\,\mathrm{dev}\,}}_\succ S_n\le s+\frac{1}{n}\) and \(\pi _n(S_n)\ge 1-t-\frac{1}{n}\). Due to the weak compactness of \(\Pi (\mu ,\nu )\), we may assume that \(\pi _n\) converges weakly to some Borel probability measure \(\pi \) by taking a subsequence. By Prokhorov’s theorem, for any positive integer m, there exists a compact subset \(K_m\subset \mathbb {R}^2\) such that \(\sup _{n\in \mathbb {N}}\pi _n(K_m^c)\le \frac{1}{m}\) and \(\pi (K_m^c)\le \frac{1}{m}\). We may assume that the sequence \(\{K_m\}\) is monotonically non-decreasing with respect to the inclusion relation. Let \(d_H\) be the Hausdorff distance function of \((\mathbb {R}^2, d_{l^1})\) and \(d_H^m\) the Hausdorff distance function of \((K_m,d_{l^1})\). Since \(K_m\) is compact, \(({\mathcal {F}} (K_m),d_H^m)\) is also compact, where \({\mathcal {F}}(K_m)\) is the set of all closed subsets of \(K_m\). By taking a subsequence \(\{n_i^{(1)}\}_{i\in \mathbb {N}}\subset \mathbb {N}\), we have \(d_H^1(S_{n_i^{(1)}}\cap K_1,S_\infty ^1)\rightarrow 0\) as \(i\rightarrow \infty \) for some \(S_\infty ^1\in {\mathcal {F}}(K_1)\), where \(\mathbb {N}\) is the set of positive integers. Furthermore, we take some subsequence \(\{n_i^{(2)}\}_{i\in \mathbb {N}}\subset \{n_i^{(1)}\}_{i\in \mathbb {N}}\) and we have \(d_H^2(S_{n_i^{(2)}}\cap K_2,S_\infty ^2)\rightarrow 0\) for some \(S_\infty ^2\in {\mathcal {F}}(K_2)\). By repeating this procedure, we take a subsequence \(\{n_i^{(m)}\}_{i\in \mathbb {N}}\subset \{n_i^{(m-1)}\}_{i\in \mathbb {N}}\) and we have \(d_H^m(S_{n_i^{(m)}}\cap K_m,S_\infty ^m)\rightarrow 0\) for some \(S_\infty ^m\in {\mathcal {F}}(K_m)\). Put \(n_i:=n_i^{(i)}\). Since the convergence on \(({\mathcal {F}}(K_m),d_H^m)\) implies the convergence on \(({\mathcal {F}}(\mathbb {R}^2),d_H)\), we obtain

for any positive integer m. Since \(\{K_m\}\) is monotonically non-decreasing with respect to the inclusion relation, \(\{S_\infty ^m\}\) is also monotonically non-decreasing. By Proposition 3.27 and (3.2), we have

Since \(\{\pi _{n_i}\}\) converges weakly to \(\pi \), and by (3.2), we also have

for any positive integer m. Now, we put \(S:=\bigcup _{m=1}^\infty S_\infty ^m \). By (3.3), we have

By (3.4), we have

where we remark that the limit exists because \(\{S^m_\infty \}\) is monotonically non-decreasing. Therefore we obtain \(\mu \succ '_{(s,t)} \nu \). This completes the proof. \(\square \)

The following Theorem 3.31 is a variation of the transitive property. To prove Theorem 3.31, we prepare the following Definition 3.29 and Proposition 3.30.

Definition 3.29

(Subtransport plan) Let \(\mu \) and \(\nu \) be two Borel probability measures on \(\mathbb {R}\). We say that a Borel measure \(\pi \) on \(\mathbb {R}^2\) is a subtransport plan between \(\mu \) and \(\nu \) if we have \(({{\,\mathrm{pr}\,}}_1)_*\pi \le \mu \) and \(({{\,\mathrm{pr}\,}}_2)_*\pi \le \nu \).

Proposition 3.30

Let \(\mu \) and \(\nu \) be two Borel probability measures on \(\mathbb {R}\). Then we have \(\mu \succ '_{(s,t)}\nu \) if and only if there exists a subtransport plan \(\pi \) between \(\mu \) and \(\nu \) such that \({{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi \le s\) and \(1-\pi (\mathbb {R}^2)\le t\).

The proof of the above proposition is easy and omitted.

Theorem 3.31

Let \(\mu _1\), \(\mu _2\), and \(\mu _3\) be three Borel probability measures on \(\mathbb {R}\) and let \(s_i,t_i\ge 0\) for \(i=1,2\). If \(\mu _1\succ '_{(s_1,t_1)}\mu _2\) and if \(\mu _2\succ '_{(s_2,t_2)}\mu _3\), then we have \(\mu _1\succ '_{(s_1+s_2,t_1+t_2)}\mu _3\).

Proof

Suppose that \(\mu _1\succ '_{(s_1,t_1)}\mu _2\) and \(\mu _2\succ '_{(s_2,t_2)}\mu _3\). There exists a subtransport plan \(\pi _i\) between \(\mu _i\) and \(\mu _{i+1}\) such that \({{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi _i\le s_i\) and \(1-\pi _i({{\,\mathrm{supp}\,}}\pi _i)\le t_i\) for \(i=1,2\). Put \(\mu ':=(\mathrm {pr}_2)_*\pi _1\) and \(\mu '':=(\mathrm {pr}_1)_*\pi _2\). By the disintegration theorem (see III-70 in [18] or Theorem 5.3.1 in [1]), there exist two families \(\{(\pi _1)_x\}_{x\in \mathbb {R}}\) and \(\{(\pi _2)_x\}_{x\in \mathbb {R}}\) of Borel probability measures on \(\mathbb {R}\) such that

for any Borel subsets A and B of \(\mathbb {R}\). Now, we put

for any three Borel subsets A, B, and C of \(\mathbb {R}\), where \(\mu '\wedge \mu '':=\mu '-(\mu '-\mu '')_+\) and a measure \((\mu '-\mu '')_+\) is defined by

for any Borel set \(B\subset \mathbb {R}\). Then we have

In particular, \(\pi _{13}\) is a subtransport plan between \(\mu _1\) and \(\mu _3\). Moreover, we obtain \(1-\pi _{13}({{\,\mathrm{supp}\,}}\pi _{13})\le t_1+t_2\) because we have

It remains to show \({{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi _{13}\le s_1+s_2\). By Proposition 3.24 and since

it suffices to prove

Take any \((x_i,z_i)\in \mathrm {pr}_{13}({{\,\mathrm{supp}\,}}\pi _{123})\) for \(i=1,2\). There exists a point \(y_i\in \mathbb {R}\) such that \((x_i,y_i,z_i)\in {{\,\mathrm{supp}\,}}\pi _{123}\). By (3.5), we have

and

This implies that \((x_i,y_i)\in {{\,\mathrm{supp}\,}}\pi _1\) and \((y_i,z_i)\in {{\,\mathrm{supp}\,}}\pi _2\). Now, let us prove

In the case that \(y_1<y_2\), we have

In the case that \(y_1\ge y_2\), we have

Combining (3.7) with \({{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi _2\le s_2\), we obtain

which implies (3.6). This completes the proof. \(\square \)

3.3 Isoperimetric Comparison Condition with an Error

In this subsection, we prove Theorem 1.7, which relates \(\varepsilon \)-iso-dominants to the condition \({{\,\mathrm{ICL}\,}}_\varepsilon \). We also explain the relation between \({{\,\mathrm{IC}\,}}_\varepsilon ^+\) (Definition 3.35) and \({{\,\mathrm{ICL}\,}}_\varepsilon \). The condition \({{\,\mathrm{IC}\,}}_\varepsilon ^+\) is a discretization of \({{\,\mathrm{IC}\,}}\) (Definition 3.1). At the end of this subsection, we give some examples of these conditions.

Proposition 3.32

Let \(\varepsilon \) be a non-negative real number. If a Borel probability measure \(\nu \) on \(\mathbb {R}\) is an \(\varepsilon \)-iso-dominant of an mm-space X, then \((t\cdot \mathrm {id}_\mathbb {R})_*\nu \) is a \(t\varepsilon \)-iso-dominant of tX.

Remark 3.33

By Theorem 3.26, a Borel measure on \(\mathbb {R}\) is a 0-iso-dominant if and only if it is an iso-dominant.

Let A be a subset of \(\mathbb {R}\). We put

for a point \(a\in A\), where we define

if \(\{\,{t>0\mid a-t\in A}\,\}=\emptyset \). We define \(\Delta (A)\) by

If A is a closed set, we have \(a-\delta ^- (A;a)\in A\).

Proof of Theorem 1.7 (1)

Let V be the cumulative distribution function of \(\nu \). Take any 1-Lipschitz function \(f:X\rightarrow \mathbb {R}\) and let \(F:\mathbb {R}\rightarrow [0,1]\) be the cumulative distribution function of \(f_*{m_X}\). We put \(\pi :=({\tilde{V}},{\tilde{F}})_*{\mathcal {L}}^1|_{[0,1]}\) and see \(\pi \in \Pi (\nu ,f_*{m_X})\). It suffices to prove \({{\,\mathrm{dev}\,}}_{\succ }{{\,\mathrm{supp}\,}}\pi \le \varepsilon +\delta \), where \(\delta :=\Delta ({{\,\mathrm{supp}\,}}\nu )\). Take any points \((x_1,y_1),(x_2,y_2) \in {{\,\mathrm{supp}\,}}\pi \). Let us prove

Since \(\{0,1\}\) is a null set with respect to \({\mathcal {L}}^1\), we have

Then, there exists \(\{t_i^n\}_{n=1}^\infty \subset (0,1)\) such that \(x_i=\lim _{n\rightarrow \infty }{\tilde{V}}(t_i^n)\) and \(y_i=\lim _{n\rightarrow \infty }{\tilde{F}}(t_i^n)\) for \(i=1,2\).

If \(x_1>x_2\), we have \(y_1\ge y_2\), which implies (3.8). In fact, we have

We assume \(x_1\le x_2\). Let us prove

for any positive integer n. In the case that \({\tilde{V}}(t_1^n)=\inf {{\,\mathrm{supp}\,}}\nu \), we have

which implies

Since we have

and (3.10), we have

where (3.11) is the assumption of this theorem. By using \({{\,\mathrm{ICL}\,}}_\varepsilon (\nu )\), we obtain

In the case that \({\tilde{V}}(t_1^n)>\inf {{\,\mathrm{supp}\,}}\nu \), we have \(\delta ^-({{\,\mathrm{supp}\,}}\nu ; {\tilde{V}}(t_1^n)) <\infty \). By the definition of \(\delta ^-({{\,\mathrm{supp}\,}}\nu ; {\tilde{V}}(t_1^n))\), there exists a sequence \(\{s_k^n \}_{k=1}^{\infty }\) of positive real numbers such that \(\lim _{k\rightarrow \infty }s_k^n=\delta ^-({{\,\mathrm{supp}\,}}\nu ; {\tilde{V}}(t_1^n))\) and \({\tilde{V}}(t_1^n)-s_k^n\in {{\,\mathrm{supp}\,}}\nu \) for any positive integer k. By the definition of \({\tilde{V}}\), we have \(V({\tilde{V}}(t_1^n)-s)<t_1^n\) for any real number \(s>0\), which implies

By \({{\,\mathrm{ICL}\,}}_\varepsilon (\nu )\), we have

By taking the limit as \(k\rightarrow \infty \), we have

Hence we obtain (3.9).

By using (3.9), we have

By the definition of \({\tilde{F}}(t_2^n)\), we have

By taking the limit as \(n\rightarrow \infty \), we obtain \(y_2-y_1\le x_2-x_1+\delta +\varepsilon \). This completes the proof. \(\square \)
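The transport plan \(\pi =({\tilde{V}},{\tilde{F}})_*{\mathcal {L}}^1|_{[0,1]}\) used in this proof can be written out explicitly when both measures are finitely supported. The sketch below assumes that each generalized inverse on (0, 1) returns the first atom whose cumulative mass reaches s. The names `quantile_coupling` and `marginals` and the example measures are hypothetical.

```python
from fractions import Fraction


def cumulative(atoms):
    """Sorted list of (cumulative mass, atom) for a finitely supported measure."""
    acc, out = Fraction(0), []
    for p in sorted(atoms):
        acc += atoms[p]
        out.append((acc, p))
    return out


def quantile_coupling(nu, mu):
    """Law of (V~(s), F~(s)) for s uniform on (0, 1).

    Both generalized inverses are constant between consecutive values of
    the two distribution functions, so it is enough to evaluate them at
    the right end of each such interval.
    """
    cn, cm = cumulative(nu), cumulative(mu)
    cuts = sorted({c for c, _ in cn} | {c for c, _ in cm})
    plan, left = {}, Fraction(0)
    for right in cuts:
        x = next(p for c, p in cn if right <= c)
        y = next(p for c, p in cm if right <= c)
        plan[(x, y)] = plan.get((x, y), Fraction(0)) + (right - left)
        left = right
    return plan


def marginals(plan):
    """First and second marginal of a finitely supported plan."""
    first, second = {}, {}
    for (x, y), mass in plan.items():
        first[x] = first.get(x, Fraction(0)) + mass
        second[y] = second.get(y, Fraction(0)) + mass
    return first, second


# Hypothetical data: nu is uniform on {0, 1, 2, 3}, mu is uniform on {0, 1}.
nu = {x: Fraction(1, 4) for x in range(4)}
mu = {0: Fraction(1, 2), 1: Fraction(1, 2)}
plan = quantile_coupling(nu, mu)
```

In this example the plan is carried by the four points (0, 0), (1, 0), (2, 1), (3, 1), each with mass 1/4, and its marginals are `nu` and `mu`. This is the finite analogue of the statement \(\pi \in \Pi (\nu ,f_*{m_X})\).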

Proof of Theorem 1.7 (2)

Take any two real numbers \(a,b\in {{\,\mathrm{supp}\,}}\nu \) with \(a\le b\) and any Borel set \(A\subset X\) with \({m_X}(A)>0\) and \({m_X}(A)\ge V(a)\). We define a 1-Lipschitz function \(f:X\rightarrow \mathbb {R}\) by \(f(x):={d_X}(x,A)\) for \(x\in X\). Since \(\nu \) is an \(\varepsilon \)-iso-dominant of X, there exists a transport plan \(\pi \) between \(\nu \) and \(f_*{m_X}\) such that \({{\,\mathrm{dev}\,}}_{\succ }{{\,\mathrm{supp}\,}}\pi \le \varepsilon \). We put

We remark that we have \(a'\le b'\) by the definition of \(a'\) and \(b'\). We claim that we can assume that \(b'<\infty \) because we have

We now check (3.12). First, let us prove

We take any point \((x,y)\in {{\,\mathrm{supp}\,}}\pi \). By \(b'=\infty \), there exists \((x',y')\in {{\,\mathrm{supp}\,}}\pi \) such that \(x\le x'\) and \(y'\le b-a+\varepsilon \). Then we have

because \({{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi \le \varepsilon \). This implies (3.13). Then (3.13) implies

This completes the proof of (3.12).

Now, we have

In particular, we have

because \(V(a')\ge {m_X}(A)>0\). Let us prove \(a\le a'\). By (3.14), we may assume \(a>\inf {{\,\mathrm{supp}\,}}\nu \). If \(a>a'\), then we have \(V(a)>V(a')\) because we have \(\nu (\{a \})>0\) or \({{\,\mathrm{supp}\,}}\nu \) is connected, which implies a contradiction.

Next, let us prove \(b\le b'\). We may assume \(b\ge a'\) because \(b\le a'\le b'\) if \(b\le a'\). Let us prove that

Take any positive integer n. There exists \((s_n,t_n)\in {{\,\mathrm{supp}\,}}\pi \) such that \(a'-1/n<s_n\le a'\) and \(t_n\le 0\). By Proposition 3.25, we have

Since the sequence \(\{t_n\}\) is bounded, there exists a subsequence \(\{n(i)\}\) and \(y_0'\in \mathbb {R}\) such that \(t_{n(i)}\rightarrow y_0'\) as \(i\rightarrow \infty \). We have \(y_0'\le 0\) because \(t_n\le 0\) for any n. Since \((s_{n(i)},t_{n(i)})\rightarrow (a',y_0')\) as \(i\rightarrow \infty \) and \({{\,\mathrm{supp}\,}}\pi \) is closed, we obtain \((a',y_0')\in {{\,\mathrm{supp}\,}}\pi \). Hence (3.15) is proved.

Similarly, let us prove that

By Proposition 2.3,

By (3.17), there exists a sequence \(\{(s_n,t_n)\}\subset {{\,\mathrm{supp}\,}}\pi \) such that \(s_n\rightarrow b\) as \(n\rightarrow \infty \). The sequence \(\{t_n\}\) is bounded because \({{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi \le \varepsilon \). Hence there exists a subsequence \(\{n(i)\}\) and \(y_0\in \mathbb {R}\) such that \(t_{n(i)}\rightarrow y_0\) as \(i\rightarrow \infty \). Since \((s_{n(i)},t_{n(i)})\rightarrow (b,y_0)\) as \(i\rightarrow \infty \) and \({{\,\mathrm{supp}\,}}\pi \) is closed, we obtain \((b,y_0)\in {{\,\mathrm{supp}\,}}\pi \). Hence (3.16) is proved.

Now, we have

since \({{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi \le \varepsilon \). Therefore, we have \((b,y_0)\in {{\,\mathrm{supp}\,}}\pi \cap (\mathbb {R}\times (-\infty ,b-a+\varepsilon ])\), which implies \(b\le b'\) by the definition of \(b'\).

If we have

then we obtain

It remains to prove (3.18). Take any point \((x,y)\in {{\,\mathrm{supp}\,}}\pi \cap ((-\infty ,b']\times \mathbb {R})\). In the case that \(x<b'\), there exists \((x',y')\in {{\,\mathrm{supp}\,}}\pi \cap (\mathbb {R}\times (-\infty ,b-a+\varepsilon ])\) such that \(x'>x\) by the definition of \(b'\). Now, we have \(y-y'=y-y'-\max \{\,{x-x',0}\,\}\le {{\,\mathrm{dev}\,}}_\succ {{\,\mathrm{supp}\,}}\pi \le \varepsilon \). Hence, we obtain \(y\le y'+\varepsilon \le b-a+2\varepsilon \).

In the case that \(x=b'\), then for any positive integer n, there exists a point \((x_n,y_n)\in {{\,\mathrm{supp}\,}}\pi \cap (\mathbb {R}\times (-\infty ,b-a+\varepsilon ])\) such that \(x-1/n<x_n\le x\). By \({{\,\mathrm{dev}\,}}_{\succ }{{\,\mathrm{supp}\,}}\pi \le \varepsilon \), we obtain

Hence we have \((x,y)\in (-\infty ,b']\times (-\infty ,b-a+2\varepsilon ]\). This completes the proof. \(\square \)

The isoperimetric profile is suited to non-discrete spaces. Definition 3.34 below defines an analogous profile for discrete spaces.

Definition 3.34

(\(\varepsilon \)-discrete isoperimetric profile) Let X be an mm-space, and \(\varepsilon \ge 0\) a real number. We define the \(\varepsilon \)-discrete isoperimetric profile \(I_X^\varepsilon \) of X by

where \(\mathrm{Im}{m_X}:=\{\,{m_X(A) \mid A\subset X\text { is a Borel set.} }\,\}\).

The following Definition 3.35 is a discrete version of the condition \({{\,\mathrm{IC}\,}}(\nu )\).

Definition 3.35

(Isoperimetric comparison condition with an error) We say that an mm-space X satisfies the condition \({{\,\mathrm{IC}\,}}_\varepsilon ^+(\nu )\) for a Borel probability measure \(\nu \) on \(\mathbb {R}\) and a real number \(\varepsilon \ge 0\) if we have

for any \(t\in ({{\,\mathrm{supp}\,}}\nu \setminus \{\sup {{\,\mathrm{supp}\,}}\nu \})\cap V^{-1}(\mathrm{Im}{m_X}\setminus \{0\})\), where \(V(t):=\nu ((-\infty ,t])\) is the cumulative distribution function of \(\nu \), and where

The following Propositions 3.36 and 3.37 explain the relation between the condition \({{\,\mathrm{IC}\,}}^+_\varepsilon \) and the condition \({{\,\mathrm{ICL}\,}}\).

Proposition 3.36

Let X be a finite mm-space equipped with the uniform measure, and \(\nu \) a Borel probability measure on \(\mathbb {R}\) with \(N:=\#{{\,\mathrm{supp}\,}}\nu <\infty \). Let \(\varepsilon \) be a non-negative real number. We assume that

If X satisfies \({{\,\mathrm{IC}\,}}_\varepsilon ^+(\nu )\), then it satisfies \({{\,\mathrm{ICL}\,}}_{(N-1)\varepsilon }(\nu )\).

Proof

Suppose that X satisfies \({{\,\mathrm{IC}\,}}_\varepsilon ^+(\nu )\). Take any two real numbers \(a,b\in {{\,\mathrm{supp}\,}}\nu \) with \(a\le b\) and a Borel subset \(A\subset X\) with \({m_X}(A)\ge V(a)\). We remark that \(V(a)>0\) because \(\#{{\,\mathrm{supp}\,}}\nu <\infty \). We may assume \(a<\sup {{\,\mathrm{supp}\,}}\nu \). We inductively define \(\delta ^+_n:\mathbb {R}\rightarrow [0,\infty ]\) by

for any positive integer n. Now, there exists a positive integer \(n_0\) such that \(\delta ^+_{n_0}(a)=b\) and \(n_0\le N-1\). Let us prove by induction

for any positive integer \(n\le n_0\).

First, we consider the case \(n=1\). Since \(m_X\) is the uniform measure and \(\mathrm{Im}\nu \subset (1/\# X) {\mathbb {Z}}\), there exists a Borel set \({\tilde{A}}_1\subset A\) such that \({m_X}({\tilde{A}}_1)=V(a)\) because we have \({m_X}(A)\ge V(a)\). By the definition of \(I_X^{\delta ^+(a)+\varepsilon }\), we have

where we remark that X satisfies \({{\,\mathrm{IC}\,}}^+_\varepsilon (\nu )\).

Next, we assume (3.19) for \(n=k\). Hence, we have

which implies that there exists a Borel subset

such that \({m_X}({\tilde{A}}_k)=V\circ \delta ^+_k(a)\). Therefore we have

if \(k+1\le n_0\). Hence we obtain (3.19). In particular, we have

Therefore we obtain

This completes the proof. \(\square \)

Proposition 3.37

Let X be an mm-space and \(\nu \) a Borel probability measure on \(\mathbb {R}\), and \(\varepsilon \ge 0\) a real number. If X satisfies \({{\,\mathrm{ICL}\,}}_\varepsilon (\nu )\), then it satisfies \({{\,\mathrm{IC}\,}}_\varepsilon ^+(\nu )\).

Proof

Take any \(t\in ({{\,\mathrm{supp}\,}}\nu \setminus \{\sup {{\,\mathrm{supp}\,}}\nu \})\cap V^{-1}(\mathrm{Im}m_X\setminus \{0\})\). Since \(t\in {{\,\mathrm{supp}\,}}\nu \setminus \{\sup {{\,\mathrm{supp}\,}}\nu \}\), we have \(\delta ^+(t)<\infty \). Since \(t\in V^{-1}(\mathrm{Im}m_X\setminus \{0\})\), we have \(V(t)\in \mathrm{Im}m_X\) and \(V(t)>0\). Take any Borel set \(A\subset X\) with \(m_X(A)=V(t)\). By \({{\,\mathrm{ICL}\,}}_\varepsilon (\nu )\), we have

because we have \(t, t+\delta ^+(t)\in {{\,\mathrm{supp}\,}}\nu \). This implies that

by Definition 3.34. This completes the proof. \(\square \)

Example 3.38

Let \(G_1,G_2,\dots ,G_n\) be connected graphs with the same order \(k\ge 2\). Let \(\Pi _{i=1}^n G_i\) be the Cartesian product graph equipped with the path metric and the uniform measure. Let \(d_0:[k]^n\rightarrow \mathbb {R}\) be the \(l^1\)-distance function from the origin. Then \(\Pi _{i=1}^n G_i\) satisfies \({{\,\mathrm{ICL}\,}}((d_0)_*m_{[k]^n})\) by Corollary 14 in [5] because

for any \(a\in {{\,\mathrm{supp}\,}}(d_0)_*m_{[k]^n}\), where

Hence the measure \((d_0)_*m_{[k]^n}\) is a 1-iso-dominant of \(\Pi _{i=1}^n G_i\) by Theorem 1.7 (1). In particular, the measure \((d_0)_*m_{[k]^n}\) is a 1-iso-dominant of the discrete \(l^1\)-cube \([k]^n\).

Example 3.39

We assume that k is a positive even integer. Let \(X:=(\mathbb {Z}/(k\mathbb {Z}))^n\) be the discrete torus equipped with the \(l^1\)-metric and the uniform measure \(m_X\), and \(d_0:X\rightarrow \mathbb {R}\) the \(l^1\)-distance function from the origin. Then it satisfies \({{\,\mathrm{ICL}\,}}((d_0)_*m_X)\) by Corollary 6 in [4] because

for any \(a\in {{\,\mathrm{supp}\,}}(d_0)_*m_X\), where

Hence the measure \((d_0)_*m_X\) is a 1-iso-dominant of X.
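The comparison measures in Examples 3.38 and 3.39 are laws of the \(l^1\)-distance from the origin under the uniform measure, and they can be tabulated by repeated convolution. The sketch below assumes that \([k]\) stands for \(\{0,1,\dots ,k-1\}\) and that the distance from 0 to j in \(\mathbb {Z}/(k\mathbb {Z})\) is \(\min \{j,k-j\}\). The function names are hypothetical.

```python
from collections import defaultdict
from fractions import Fraction


def law_of_l1_distance(coordinate_distances, n):
    """Law of the sum of n independent coordinate distances.

    Each coordinate is uniform over the entries of `coordinate_distances`,
    which lists the distance from the origin of every point of one factor.
    """
    k = len(coordinate_distances)
    law = {0: Fraction(1)}
    for _ in range(n):
        nxt = defaultdict(Fraction)
        for total, mass in law.items():
            for d in coordinate_distances:
                nxt[total + d] += mass / k
        law = dict(nxt)
    return law


def cube_law(k, n):
    """Pushforward of the uniform measure on {0, ..., k-1}^n by d_0."""
    return law_of_l1_distance(list(range(k)), n)


def torus_law(k, n):
    """Pushforward of the uniform measure on (Z/kZ)^n by d_0."""
    return law_of_l1_distance([min(j, k - j) for j in range(k)], n)
```

For instance, `cube_law(2, 3)` is the binomial law with masses 1/8, 3/8, 3/8, 1/8 on 0, 1, 2, 3, and `torus_law(4, 1)` puts masses 1/4, 1/2, 1/4 on 0, 1, 2.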

3.4 Stability of \(\varepsilon \)-Iso-Dominant

The aim of this subsection is to prove Theorem 1.8. We prepare some definitions and lemmas to prove it. The following Definition 3.40 is a generalization of \(\varepsilon \)-iso-dominant (Definition 1.4).

Definition 3.40

((s, t)-iso-dominant) Let s and t be two non-negative real numbers. We call a Borel probability measure \(\nu \) on \(\mathbb {R}\) an (s, t)-iso-dominant of an mm-space X if we have \(\nu \succ '_{(s,t)}\mu \) for all \(\mu \in {\mathcal {M}}(X;1)\).

Definition 3.41

(Distortion from the diagonal) Let \((X,d_X)\) be a metric space. We define the distortion from the diagonal of a subset \(S\subset X\times X\) by

if S is nonempty. We define \({{\,\mathrm{dis}\,}}_\Delta \emptyset :=0\). Let \(\mu \) and \(\nu \) be two Borel probability measures on X. We define the distortion from the diagonal of a transport plan \(\pi \in \Pi (\mu ,\nu )\) between \(\mu \) and \(\nu \) by

where \(S\subset X\times X\) is a closed subset.

Theorem 3.42

(Strassen’s theorem; cf. [26, Corollary 1.28]) Let \(\mu \) and \(\nu \) be two Borel probability measures on a metric space X. Then we have

Lemma 3.43

For a subset \(S\subset \mathbb {R}^2\), we have

$$\begin{aligned} {{\,\mathrm{dev}\,}}_\succ S\le 2{{\,\mathrm{dis}\,}}_\Delta S. \end{aligned}$$

Proof

Take any two points \((x,y),(x',y')\in S\). If \(x-x'\ge 0\), then we have

If \(x-x'<0\), then we have

Hence we obtain \({{\,\mathrm{dev}\,}}_\succ S\le 2{{\,\mathrm{dis}\,}}_\Delta S\). This completes the proof. \(\square \)

Lemma 3.44

Let \(\mu \) and \(\nu \) be two Borel probability measures on \(\mathbb {R}\). If \(d_P(\mu ,\nu )<\varepsilon \), then we have \(\mu \succ '_{(2\varepsilon ,\varepsilon )}\nu \).

Proof

This follows from Theorem 3.42 and Lemma 3.43. \(\square \)

Lemma 3.45

Let \(\mu \) and \(\nu \) be two Borel probability measures on \(\mathbb {R}\), and X an mm-space. If \(\mu \) is an (s, t)-iso-dominant of X and we have \(d_P(\mu ,\nu )<\varepsilon \), then \(\nu \) is an \((s+2\varepsilon ,t+\varepsilon )\)-iso-dominant of X.

Proof

This follows from Lemma 3.44 and Theorem 3.31. \(\square \)

Lemma 3.46

Let X and Y be two mm-spaces, and \(\nu \) a Borel probability measure on \(\mathbb {R}\). If \(\nu \) is an (s, t)-iso-dominant of X and we have \({{\,\mathrm{{ d}_{\mathrm{conc}}}\,}}(X,Y)<\varepsilon \), then \(\nu \) is an \((s+2\varepsilon ,t+\varepsilon )\)-iso-dominant of Y.

Proof

Take any \(g\in {\mathcal {L}}{} { ip}_1(Y)\). By \({{\,\mathrm{{ d}_{\mathrm{conc}}}\,}}(X,Y)<\varepsilon \), there exist two parameters \(\varphi :I\rightarrow X\) and \(\psi :I\rightarrow Y\) such that

Hence there exists \(f\in {\mathcal {L}}{} { ip}_1(X)\) such that \({{\,\mathrm{{ d}_{\mathrm{KF}}}\,}}(\varphi ^*f,\psi ^*g)<\varepsilon \). By Lemma 2.20, we have

Therefore we have \(f_*m_X\succ _{(2\varepsilon ,\varepsilon )}'g_*m_Y\) by Lemma 3.44. Since \(\nu \) is an (s, t)-iso-dominant of X, we have \(\nu \succ _{(s,t)}' f_*m_X\), which implies \(\nu \succ _{(s+2\varepsilon ,t+\varepsilon )}'g_*m_Y\) by Theorem 3.31. \(\square \)

Proof of Theorem 1.8

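Without loss of generality, we assume

$$\begin{aligned} d_P(\nu _n,\nu )<\varepsilon _n \quad \text {and}\quad {{\,\mathrm{{ d}_{\mathrm{conc}}}\,}}(X_n,X)<\varepsilon _n \end{aligned}$$

for every positive integer n.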

Take any positive integer n. Since the measure \(\nu _n\) is an \((s+\varepsilon _n,t+\varepsilon _n)\)-iso-dominant of \(X_n\), the measure \(\nu \) is an \((s+3\varepsilon _n,t+2\varepsilon _n)\)-iso-dominant of \(X_n\) by Lemma 3.45. By Lemma 3.46, the measure \(\nu \) is an \((s+5\varepsilon _n,t+3\varepsilon _n)\)-iso-dominant of X. Hence, we have \(\nu \succ _{(s+5\varepsilon _n,t+3\varepsilon _n)}' f_*m_X\) for any \(f\in {\mathcal {L}}{} { ip}_1(X)\). By Theorem 3.28, we obtain \(\nu \succ _{(s,t)}'f_*m_X\). This completes the proof. \(\square \)
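The parameter bookkeeping in this proof can be traced mechanically. The following Python sketch only reproduces the arithmetic of Lemmas 3.45 and 3.46, each of which turns an (s, t)-iso-dominant into an \((s+2\varepsilon ,t+\varepsilon )\)-iso-dominant. The function names `perturb` and `limit_step` are hypothetical.

```python
from fractions import Fraction


def perturb(params, eps):
    """Effect of Lemma 3.45 or Lemma 3.46 on the pair (s, t):
    an eps-perturbation turns (s, t) into (s + 2*eps, t + eps)."""
    s, t = params
    return (s + 2 * eps, t + eps)


def limit_step(s, t, eps):
    """Parameters for nu as an iso-dominant of X, starting from nu_n being
    an (s + eps, t + eps)-iso-dominant of X_n."""
    start = (s + eps, t + eps)
    after_measure = perturb(start, eps)  # Lemma 3.45: replace nu_n by nu
    return perturb(after_measure, eps)   # Lemma 3.46: replace X_n by X


print(limit_step(Fraction(1), Fraction(0), Fraction(1, 10)))
```

With \(s=1\), \(t=0\), and \(\varepsilon _n=1/10\) the result is \((3/2,3/10)=(s+5\varepsilon _n,t+3\varepsilon _n)\), and both components tend to (s, t) as \(\varepsilon _n\rightarrow 0\), which is the situation handled by Theorem 3.28.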

We apply Theorem 1.8 to the space of pyramids \(\Pi \). The space \(\Pi \) is a natural compactification of the set \({\mathcal {X}}\) of mm-spaces. We refer to [12, 21] for the theory of pyramids.

Definition 3.47

(Pyramid, cf. Definition 6.3 in [21]) A subset \({\mathcal {P}}\subset {\mathcal {X}}\) is called a pyramid if it satisfies the following (1), (2), and (3).

(1) If \(X\in {\mathcal {P}}\) and if \(Y \prec X\), then \(Y\in {\mathcal {P}}\).

(2) For any two mm-spaces \(X, X'\in {\mathcal {P}}\), there exists an mm-space \(Y\in {\mathcal {P}}\) such that \(X\prec Y\) and \(X'\prec Y\).

(3) \({\mathcal {P}}\) is nonempty and \(\square \)-closed.

We denote the set of pyramids by \(\Pi \). The set \(\Pi \) is equipped with the weak Hausdorff convergence (Definition 6.4 in [21]). Regarding the weak Hausdorff convergence, we recall the following useful proposition (cf. Proposition 6.9 in [21]).

Proposition 3.48

(Down-to-earth criterion for weak convergence) For given \(\square \)-closed subsets \({\mathcal {Y}}_n,{\mathcal {Y}}\subset {\mathcal {X}}\), \(n=1,2,\dots \), the following (1) and (2) are equivalent to each other.

(1) \({\mathcal {Y}}_n\) converges weakly to \({\mathcal {Y}}\).

(2) Let \(\underline{{\mathcal {Y}}}_\infty \) be the set of the limits of convergent sequences \(Y_n\in {\mathcal {Y}}_n\), and \(\overline{{\mathcal {Y}}}_\infty \) the set of the limits of convergent subsequences of \(Y_n\in {\mathcal {Y}}_n\). Then we have

$$\begin{aligned} {\mathcal {Y}}=\underline{{\mathcal {Y}}}_\infty =\overline{{\mathcal {Y}}}_\infty \end{aligned}$$

To apply Theorem 1.8 to pyramids, we consider the following Propositions 3.49 and 3.51, and Definition 3.50.

Proposition 3.49

Let X and Y be two mm-spaces. If a Borel probability measure \(\nu \) on \(\mathbb {R}\) is an (s, t)-iso-dominant of X for \(s,t\ge 0\) and \(X\succ Y\), then \(\nu \) is an (s, t)-iso-dominant of Y.

Definition 3.50

Let \({\mathcal {Y}}\subset {\mathcal {X}}\). We say that a Borel probability measure \(\nu \) on \(\mathbb {R}\) is an (s, t)-iso-dominant of \({\mathcal {Y}}\) if \(\nu \) is an (s, t)-iso-dominant of X for any mm-space \(X\in {\mathcal {Y}}\).

Proposition 3.51

Let X be an mm-space, and \(\nu \) a Borel probability measure on \(\mathbb {R}\). Then, \(\nu \) is an (s, t)-iso-dominant of X if and only if \(\nu \) is an (s, t)-iso-dominant of \({\mathcal {P}}_X:=\{\,{Y\in {\mathcal {X}}\mid Y\prec X}\,\}\).

The following Theorem 3.52 states the stability of isoperimetric inequalities under weak Hausdorff convergence. This is a generalization of Theorem 1.8.

Theorem 3.52

Let \({\mathcal {Y}}_n\subset {\mathcal {X}}\) be a \(\square \)-closed subset, and \(\overline{{\mathcal {Y}}}_\infty \) the set of the limits of convergent subsequences of \(Y_n\in {\mathcal {Y}}_n\). We assume that a sequence \(\{\nu _n\}_{n=1}^\infty \) of Borel probability measures on \(\mathbb {R}\) converges weakly to a Borel probability measure \(\nu \), and a sequence \(\{\varepsilon _n\}_{n=1}^\infty \) of non-negative real numbers converges to 0. If \(\nu _n\) is an \((s+\varepsilon _n,t+\varepsilon _n)\)-iso-dominant of \({\mathcal {Y}}_n\) for any positive integer n, then \(\nu \) is an (s, t)-iso-dominant of \(\overline{{\mathcal {Y}}}_\infty \).

Proof

This theorem follows from Theorem 1.8 and Proposition 2.23. \(\square \)

We obtain the following corollary by Proposition 3.48.

Corollary 3.53

Let \(\{{\mathcal {P}}_n\}_{n=1}^\infty \) be a sequence of pyramids, and \(\{\nu _n\}_{n=1}^\infty \) a sequence of Borel probability measures on \(\mathbb {R}\). We assume that \(\{{\mathcal {P}}_n\}_{n=1}^\infty \) converges weakly to a pyramid \({\mathcal {P}}\) and \(\{\nu _n\}_{n=1}^\infty \) converges weakly to a Borel probability measure \(\nu \) on \(\mathbb {R}\), and a sequence \(\{\varepsilon _n\}_{n=1}^\infty \) of non-negative real numbers converges to 0. If \(\nu _n\) is an \((s+\varepsilon _n,t+\varepsilon _n)\)-iso-dominant of \({\mathcal {P}}_n\), then \(\nu \) is an (s, t)-iso-dominant of \({\mathcal {P}}\).

4 Applications of Iso-Lipschitz Order

4.1 Isoperimetric Inequality of Non-discrete \(l^1\)-Cubes

In this subsection, we assume that \([0,1]^n\) is equipped with the \(l^1\)-metric \(d_{l^1}\) and the uniform measure \(m_{[0,1]^n}:={\mathcal {L}}^n|_{[0,1]^n}\), where \({\mathcal {L}}^n\) is the n-dimensional Lebesgue measure. Put \([k]:=\left\{ 0,1,2,\dots ,k-1 \right\} \). We have

We assume that \(\frac{1}{k} [k]^n\) is equipped with the \(l^1\)-metric \(d_{l^1}\) and the uniform measure \(m_{\frac{1}{k}[k]^n}:=\frac{1}{k^n}\sum _{x\in \frac{1}{k}[k]^n} \delta _x\).

Lemma 4.1

The sequence \(\{ m_{\frac{1}{k} [k]^n}\}_{k=1}^\infty \) converges weakly to \(m_{[0,1]^n}\) as \(k\rightarrow \infty \).

Proof

Define a function \(f:[0,1]^n\rightarrow \frac{1}{k} [k]^n\) by \(f((x_i)_{i=1}^n):=(\frac{1}{k} \lfloor k x_i \rfloor )_{i=1}^n\), where \(\lfloor \cdot \rfloor \) is the floor function. We set \(\pi :=({{\,\mathrm{id}\,}}_{[0,1]^n},f)_*m_{[0,1]^n}\). Then we have \(\pi \in \Pi (m_{[0,1]^n},m_{\frac{1}{k} [k]^n})\). Take any point \((x,f(x))\in {{\,\mathrm{supp}\,}}\pi =({{\,\mathrm{id}\,}}_{[0,1]^n},f)([0,1]^n)\) and put \(x:=(x_i)_{i=1}^n\). Since

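$$\begin{aligned} d_{l^1}(x,f(x))=\sum _{i=1}^n\left| x_i-\frac{1}{k}\lfloor kx_i\rfloor \right| \le \frac{n}{k}, \end{aligned}$$

we have \({{\,\mathrm{dis}\,}}_\Delta {{\,\mathrm{supp}\,}}\pi \le \frac{n}{k}\). By Theorem 3.42, we obtain

$$\begin{aligned} d_P(m_{[0,1]^n},m_{\frac{1}{k}[k]^n})\le \frac{n}{k}\rightarrow 0 \end{aligned}$$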

as \(k\rightarrow \infty \). This completes the proof. \(\square \)
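The key estimate in this proof, that the rounding map f moves each point by at most \(n/k\) in the \(l^1\)-metric, can be checked numerically. The following Python sketch samples points of \([0,1)^n\) and records the largest displacement. The helper names and the sample size are assumptions made for the illustration, and a sampling check is of course not a proof.

```python
import math
import random


def floor_map(x, k):
    """The map f from the proof: round each coordinate down to the grid (1/k)[k]."""
    return tuple(math.floor(k * xi) / k for xi in x)


def l1_distance(x, y):
    """l^1-distance between two points of R^n."""
    return sum(abs(a - b) for a, b in zip(x, y))


def max_displacement(n, k, samples=10_000, seed=0):
    """Largest sampled l^1-distance between x and f(x), x uniform on [0,1)^n."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(samples):
        x = tuple(rng.random() for _ in range(n))
        worst = max(worst, l1_distance(x, floor_map(x, k)))
    return worst


for k in (10, 100, 1000):
    print(k, max_displacement(3, k), 3 / k)
```

Each coordinate moves by less than \(1/k\), so the sampled maximum stays below the bound \(n/k\), which tends to 0 as \(k\rightarrow \infty \) for fixed n.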

Proof of Theorem 1.9

We define a function \(d_0:\mathbb {R}^n\rightarrow \mathbb {R}\) by \(d_0((x_i)_{i=1}^n):=\sum _{i=1}^n |x_i|\). By Example 3.38, the measure \((d_0)_*m_{[k]^n}\) is a 1-iso-dominant of \([k]^n\). Hence, the measure \((\frac{1}{k} d_0)_*m_{[k]^n}\) is a \(\frac{1}{k}\)-iso-dominant of \(\frac{1}{k}[k]^n\) by Proposition 3.32. Since \(d_0\) is 1-Lipschitz, we have

as \(k\rightarrow \infty \) by Lemma 4.1. By Theorem 1.8, the measure \((d_0)_*m_{[0,1]^n}\) is an iso-dominant of \([0,1]^n\). This completes the proof. \(\square \)

We obtain Theorem 1.10 in the same way as in the proof of Theorem 1.9 by using Example 3.39.

As another application of Theorem 1.8, we use Theorem 13 in [5] to obtain the following variant of the normal law à la Lévy (see Theorem 2.2 in [21]).

Theorem 4.2

(Normal law à la Lévy on product graphs) Let \(G_1,G_2,\dots ,G_n,\dots \) be connected graphs with the same order \(k\ge 2\). Put

Let \(X_n:=(\prod _{i=1}^n G_i,d_{X_n},m_{X_n})\) be the Cartesian product graph equipped with the path metric \(d_{X_n}\) and the uniform measure \(m_{X_n}\). Put \(Y_n:=(\prod _{i=1}^n G_i,\varepsilon _n\cdot d_{X_n},m_{X_n})\). Let \(\{f_{n_i}\}\) be a subsequence of a sequence of 1-Lipschitz functions \(f_n:Y_n\rightarrow \mathbb {R}, n=1,2,\dots \). If \((f_{n_i})_*m_{Y_{n_i}}\) converges weakly to a Borel probability measure \(\sigma \), then we have \(\gamma ^1\succ '\sigma \), where \(\gamma ^1\) is the 1-dimensional standard Gaussian measure.

In the case \(k=2\), the space \(X_n\) is the n-dimensional Hamming cube. If we replace \(X_n\) by the n-dimensional (non-discrete) \(l^1\)-cube or by the n-dimensional (non-discrete) \(l^1\)-torus, we obtain the corresponding normal law à la Lévy in each case.

Proof of Theorem 4.2

We define a function \(d_0:\mathbb {R}^n\rightarrow \mathbb {R}\) by

By Example 3.38, the measure \((d_0)_*m_{[k]^n}\) is a 1-iso-dominant of \(X_n\), which implies that \((\varepsilon _n \cdot d_0)_*m_{[k]^n}\) is an \(\varepsilon _n\)-iso-dominant of \(Y_n\) by Proposition 3.32. By Proposition 3.51, the measure \((\varepsilon _n \cdot d_0)_*m_{[k]^n}\) is an \(\varepsilon _n\)-iso-dominant of \({\mathcal {P}}_{Y_n}:=\{\,{Y\in {\mathcal {X}}\mid Y\prec Y_n}\,\}\). By the central limit theorem, \((\varepsilon _n \cdot d_0)_*m_{[k]^n}\) converges weakly to \(\gamma ^1\) as \(n\rightarrow \infty \). Putting \({\mathcal {Y}}_n:={\mathcal {P}}_{Y_n}\), we see that \(\gamma ^1\) is an iso-dominant of \(\overline{{\mathcal {Y}}}_\infty \) by Theorem 3.52.

Take any sequence of 1-Lipschitz functions \(f_n:Y_n\rightarrow \mathbb {R}, n=1,2,\dots \). We assume that a subsequence \(\{n(i)\}_{i=1,2,\dots }\) satisfies that \({(f_{n(i)})}_*m_{Y_{n(i)}}\) converges weakly to a measure \(\sigma \) as \(i\rightarrow \infty \). Since \({(f_n)}_*m_{Y_n}\in {\mathcal {P}}_{Y_n}\), we have \(\sigma \in \overline{{\mathcal {Y}}}_\infty \). Then we obtain \(\gamma ^1\succ '\sigma \) because \(\gamma ^1\) is an iso-dominant of \(\overline{{\mathcal {Y}}}_\infty \). This completes the proof. \(\square \)
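The central limit step in this proof can be illustrated numerically. The following Python sketch computes the exact law of the coordinate sum on \([k]^n\) and measures its Kolmogorov distance to the standard Gaussian after normalization. The normalization used here, centering at the mean \(n(k-1)/2\) and dividing by the standard deviation \(\sqrt{n(k^2-1)/12}\), is an assumption standing in for the function \(d_0\) and the scaling \(\varepsilon _n\) of the statement. The function names are hypothetical.

```python
import math
from fractions import Fraction


def law_of_sum(k, n):
    """Exact law of x_1 + ... + x_n for x uniform on [k]^n, by repeated convolution."""
    law = {0: Fraction(1)}
    for _ in range(n):
        new = {}
        for value, prob in law.items():
            for a in range(k):
                new[value + a] = new.get(value + a, 0) + prob / k
        law = new
    return law


def gaussian_cdf(z):
    """Distribution function of the 1-dimensional standard Gaussian measure."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def kolmogorov_distance_to_gaussian(k, n):
    """sup_x |F(x) - Phi(x)| for the centered, variance-normalized sum
    (assumed normalization, see the accompanying text)."""
    law = law_of_sum(k, n)
    mean = Fraction(n * (k - 1), 2)
    std = math.sqrt(n * (k * k - 1) / 12)
    worst, cdf = 0.0, 0.0
    for value in sorted(law):
        z = float(value - mean) / std
        worst = max(worst, abs(cdf - gaussian_cdf(z)))  # left limit at the atom
        cdf += float(law[value])
        worst = max(worst, abs(cdf - gaussian_cdf(z)))  # value at the atom
    return worst


for n in (5, 20, 80):
    print(n, kolmogorov_distance_to_gaussian(3, n))
```

The printed distances shrink as n grows, in line with the weak convergence to \(\gamma ^1\) used above.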

4.2 Comparison Theorem for Observable Diameter

In this subsection, we evaluate the observable diameter by using the iso-dominant.

Proposition 4.3

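Let \(\mu \) and \(\nu \) be two Borel probability measures on \(\mathbb {R}\). If \(\mu \succ '_{(s,t)}\nu \), then we have

$$\begin{aligned} \mathrm{diam}(\nu ;1-\kappa -t)\le \mathrm{diam}(\mu ;1-\kappa )+s \end{aligned}$$

for any \(\kappa >0\).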

Proof

By \(\mu \succ '_{(s,t)}\nu \), there exist \(\pi \in \Pi (\mu ,\nu )\) and a Borel set \(S\subset \mathbb {R}^2\) such that \({{\,\mathrm{dev}\,}}_\succ S\le s\) and \(1-\pi (S)\le t\). Take any Borel set \(A\subset \mathbb {R}\) with \(\mu (A)\ge 1-\kappa \). Put \(B:={{\,\mathrm{pr}\,}}_2(S\cap ({{\,\mathrm{pr}\,}}_1)^{-1}(A))\). Since

we have \(\mathrm{diam}(\nu ;1-\kappa -t)\le \mathrm{diam}B\). By Proposition 3.25, we have \(\mathrm{diam}B\le \mathrm{diam}A+{{\,\mathrm{dev}\,}}_\succ S\), which implies \(\mathrm{diam}(\nu ;1-\kappa -t)\le \mathrm{diam}A+s\). Then we obtain \(\mathrm{diam}(\nu ;1-\kappa -t)\le \mathrm{diam}(\mu ;1-\kappa )+s\). This completes the proof. \(\square \)
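For a finitely supported measure on \(\mathbb {R}\), the partial diameter appearing in this proposition can be computed directly. The following Python sketch assumes the usual definition \(\mathrm{diam}(\mu ;\alpha )=\inf \{\mathrm{diam}A\mid \mu (A)\ge \alpha \}\) with \(0<\alpha \le 1\). The function name `partial_diameter` is hypothetical.

```python
from fractions import Fraction


def partial_diameter(atoms, alpha):
    """diam(mu; alpha) for a finitely supported measure mu on R.

    `atoms` maps points to masses. On the real line it suffices to test
    intervals [x_i, x_j] between atoms, since replacing a set A by the
    interval [inf A, sup A] keeps the diameter and cannot lose mass.
    Returns None if no set has mass at least alpha.
    """
    points = sorted(atoms)
    best = None
    for i, left in enumerate(points):
        mass = 0
        for right in points[i:]:
            mass += atoms[right]
            if mass >= alpha:
                # Shortest admissible interval with left endpoint `left`.
                width = right - left
                best = width if best is None else min(best, width)
                break
    return best


mu = {x: Fraction(1, 4) for x in range(4)}  # uniform measure on {0, 1, 2, 3}
for alpha in (Fraction(1, 2), Fraction(3, 4), Fraction(1)):
    print(alpha, partial_diameter(mu, alpha))
```

For the uniform measure on \(\{0,1,2,3\}\) the values at \(\alpha =1/2, 3/4, 1\) are \(1, 2, 3\), which shows how the partial diameter grows as the required mass \(1-\kappa \) increases.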

Proposition 4.4

Let \(\{\mu _n\}_{n=1}^\infty \) be a sequence of Borel probability measures on \(\mathbb {R}\) and \(\kappa \) a positive real number. We assume that \(\{\mu _n\}_{n=1}^\infty \) converges weakly to a Borel probability measure \(\mu \) on \(\mathbb {R}\) and that the function \(t\mapsto \mathrm{diam}(\mu ;1-t)\) is continuous at \(\kappa \). Then we have

Proof