Abstract

The aim of this paper is to present a novel two-step iterative algorithm, named the PV algorithm, for determining the fixed points of weak contractions in Banach spaces. It is proved that the PV iterative algorithm converges strongly to the fixed point of a weak contraction and that the iteration is almost stable for weak contractions. A data dependence result is also obtained. Furthermore, it is proved that the rate of convergence of the PV iterative algorithm is faster than that of the Picard, Mann, Ishikawa, S, normal-S, Varat, and F* algorithms. Examples are given to support the main results. The results of this paper are original and will further enrich the existing literature.

1 Introduction

Throughout this article, we assume that \(Z_{+}\) represents the set of all nonnegative integers, and we consider the mapping \(H: V^* \rightarrow V^*\), where \(V^*\) is a nonempty, convex, and closed subset of a Banach space \(B^*\). We denote by \(\text {Fix}(H)\) the set of all fixed points of H.

Several nonlinear problems can be formulated mathematically as fixed-point equations of the form \(A(x) = x\) for a self-mapping A. Such mappings may exhibit various properties, such as contraction or continuity. Banach’s contraction mapping principle is a celebrated result in the literature on fixed points. However, a question arises as to what can be said about self-mappings when the contraction condition is relaxed.

In response to this question, Berinde [1] introduced a new concept known as weak contraction, also referred to as almost contraction. He established that the class of weak contractions is more general than the classes of contraction mappings, Kannan mappings [2], Chatterjea mappings [3], Zamfirescu mappings [4], etc. He also established an existence and uniqueness theorem for fixed points of these weaker contractions. Owing to the wide range of applications of fixed point theory, numerous researchers have examined and proposed iterative algorithms for various classes of mappings (e.g., see [5,6,7,8,9]). Additionally, many researchers [10,11,12] have expanded the scope of this theory by obtaining several extensions of fixed point theory. The iterative algorithms listed below are known as the Picard [13], Mann [14], Ishikawa [15], S [16], normal-S [17], Varat [18], and \(F^*\) [19] algorithms, respectively, for the self-mapping H defined on \(V^*\). Here \(\{r_m\}\), \(\{s_m\}\), and \(\{t_{m}\}\) are sequences in the interval (0, 1).

A natural question arises from the above discussion: is it possible to construct a two-step iterative algorithm whose rate of convergence is faster than that of the \(F^{*}\) iterative algorithm (1.7) and of the other iterative algorithms listed above?

In this paper, a novel two-step iterative algorithm, the PV algorithm, is introduced. For a mapping \(H: V^* \rightarrow V^*\), where \(V^*\) is a nonempty, closed and convex subset of a Banach space \(B^*\), the sequence \(\{p_{m}\}\) is defined by:

where \(\{r_{m}\}\) is a sequence in (0, 1).

The main results are proved in Section 2 using the PV iterative algorithm for weak contractions which satisfy (1.10) on an arbitrary Banach space.

Now, we recall the definition of weak contraction.

Definition 1.1

[1] (Weak contraction): A map \(H: B^* \rightarrow B^*\), where \(B^*\) is a Banach space, is called a weak contraction if there exist constants \(\delta \in (0,1)\) and \(L\ge 0\) such that:

Berinde [1] proved the following theorem on the existence and uniqueness of a fixed point of such mappings.

Theorem 1.1

[1] Let \(H: B^* \rightarrow B^*\), where \(B^*\) is a Banach space, be a weak contraction with \(\delta \in (0,1)\) and \(L\ge 0\) that also satisfies

Then, the mapping H has a unique fixed point in \(B\text {*}\).

Ostrowski [20] defined the notion of stability as follows.

Definition 1.2

Let \(H: B^* \rightarrow B^*\), where \(B^*\) is a Banach space, and let \(p \in \text {Fix}(H)\). Assume that \(p_0 \in B^*\) and that \(p_{m+1} = g(H, p_m)\) is an iterative algorithm for some function g. For the sequence \(\{p_m\}\) in \(B^*\), let \(\{y_m\}\) be an approximate sequence, and define \(\alpha _m = \Vert y_{m+1} - g(H, y_m)\Vert \). Then, the iterative algorithm \(p_{m+1} = g(H, p_m)\) is called H-stable if

Using Definition 1.2, Harder [21, 22] proved the stability of several iterative algorithms for various contractive-type operators. Moreover, Osilike [23, 24] proved the stability of the Ishikawa and Mann iterative schemes for operators of contractive type. Ostrowski [20] provides the following definition.

Definition 1.3

Let \(H: B^* \rightarrow B^*\), where \(B^*\) is a Banach space, be a weak contraction, and \(p \in \text {Fix}(H)\). Assume that \(p_0 \in B^*\) and \(p_{m+1} = g(H, p_m)\), \(m \in Z_+\), is an iterative algorithm for some function g. For a sequence \(\{p_m\}\) in \(B^*\), let \(\{y_m\}\) be an approximate sequence and define \(\alpha _m = \Vert y_{m+1} - g(H, y_m)\Vert \). Then, the iterative algorithm \(p_{m+1} = g(H, p_m)\) is said to be almost H-stable if:

To compare the rates of convergence of two iterative algorithms, Berinde [25] provides the following definitions.

Definition 1.4

Let \(\{\eta _m\}\) and \(\{\theta _m\}\) be two sequences in the set of positive real numbers that converge to \(\eta \) and \(\theta \), respectively. Suppose that:

(i) If \(l = 0\), then \(\{\theta _m\}\) converges to \(\theta \) faster than \(\{\eta _m\}\) converges to \(\eta \).

(ii) If \(0< l < \infty \), then \(\{\theta _m\}\) and \(\{\eta _m\}\) have the same rate of convergence.

Definition 1.5

Let \(\{p_m\}\) and \(\{q_m\}\) be two iterative sequences, both converging to the same point p, with error estimates \(\theta _m\) and \(\eta _m\) (the best ones available), where \(\theta _m, \eta _m \rightarrow 0\), satisfying

If \(\lim _{m \rightarrow \infty } \frac{\theta _m}{\eta _m} = 0\), then \(\{p_m\}\) converges faster than \(\{q_m\}\).

In the context of Banach spaces, we aim to define and quantify the speed at which sequences or iterative algorithms converge to a common limit point. To achieve this, we introduce a new definition and establish its consistency with Definition 1.5.

Given \(p\in X\), where X is a Banach space, we denote the \(\epsilon \)-neighborhood of p by \(V_\epsilon (p)=\{x\in X \mid \Vert x-p\Vert \le \epsilon \}\).

Let \(\{p_m\}\) and \(\{q_{m}\}\) be two sequences in a Banach space X that converge to the same fixed point p. Our new definition states that the sequence \(\{p_m\}\) is faster than \(\{q_{m}\}\) if, after a certain number of steps, \(\{p_m\}\) approaches p more closely than \(\{q_{m}\}\) does. In other words, after a certain number of steps, \(\{p_m\}\) always lies inside a smaller neighborhood of p compared to \(\{q_{m}\}\).

Definition 1.6

Let \(\{p_m\}\) and \(\{q_{m}\}\) be two sequences in a Banach space X that both converge to the same point p. We say that \(\{p_m\}\) converges to p faster than \(\{q_m\}\) if, for any \(\epsilon _2 > 0\), there exist \(\epsilon _1 > 0\) and \(a \in {\mathbb {N}}\) such that \(\epsilon _1 < \epsilon _2\), \(\Vert p_m - p\Vert < \epsilon _1\), and \(\Vert q_m - p\Vert < \epsilon _2\) for all \(m \ge a\).

Now, we demonstrate that Definition 1.6 is consistent with Definition 1.5. Consider \(\{p_m\}\), \(\{q_m\}\), \(\theta _m\), and \(\eta _{m}\) as in Definition 1.5.

As \(\lim _{m \rightarrow \infty }\frac{\theta _m}{\eta _m} = 0\), for any fixed \(0< \epsilon _{0} < 1\) there exists \(m_{0} \in {\mathbb {N}}\) such that for all \(m \ge m_{0}\), we have \(\frac{\theta _m}{\eta _m}< \epsilon _{0} \implies \theta _m < \epsilon _{0}\eta _m\).

Now, since \(\eta _m \rightarrow 0\), for any \(\epsilon _2 > 0\) there exists \(m_2 \in {\mathbb {N}}\) such that \(0< \eta _m < \epsilon _{2}\) for all \(m \ge m_{2}\).

Consequently, \(\theta _m< \epsilon _{0}\eta _m < \epsilon _{0}\epsilon _{2} = \epsilon _1\) (say) for all \(m \ge K\), where \(K = \max \{m_{0},m_{2}\}\), and \(\epsilon _1 = \epsilon _{0}\epsilon _{2} < \epsilon _{2}\) since \(0< \epsilon _{0} < 1\).

Hence, for all \(m \ge K\), \(\Vert p_m - p\Vert \le \theta _m < \epsilon _1\) and \(\Vert q_m - p\Vert \le \eta _m < \epsilon _2\), where \(\epsilon _1 < \epsilon _{2}\). Therefore, \(\{p_m\}\) converges faster than \(\{q_m\}\) in the sense of Definition 1.6.
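The consistency argument above can be traced numerically. The following is a minimal sketch under assumed data: the error estimates \(\theta _m = \delta ^{3m}\) and \(\eta _m = \delta ^{m}\) with \(\delta = 0.5\), as well as the values of \(\epsilon _0\) and \(\epsilon _2\), are hypothetical choices made only for illustration and are not taken from the paper.

```python
# Hypothetical error estimates (assumptions for illustration only):
# theta_m = delta**(3*m) and eta_m = delta**m with delta = 0.5.
delta = 0.5


def theta(m):
    return delta ** (3 * m)


def eta(m):
    return delta ** m


def first_index(pred, limit=10_000):
    """Return the smallest m in [0, limit) at which pred(m) holds.

    Both sequences used here decrease monotonically, so once the
    predicate holds it keeps holding for all larger m.
    """
    for m in range(limit):
        if pred(m):
            return m
    raise ValueError("predicate never satisfied within the search limit")


eps0 = 0.5    # any fixed number in (0, 1)
eps2 = 1e-3   # the given tolerance for the slower sequence

m0 = first_index(lambda m: theta(m) / eta(m) < eps0)  # ratio below eps0
m2 = first_index(lambda m: eta(m) < eps2)             # eta below eps2
K = max(m0, m2)
eps1 = eps0 * eps2                                    # strictly smaller than eps2

print("m0 =", m0, "m2 =", m2, "K =", K, "eps1 =", eps1)
print("theta_K =", theta(K), "eta_K =", eta(K))
```

From index K onward the faster sequence stays inside the smaller neighborhood of radius \(\epsilon _1\), while the slower one is only guaranteed to stay inside the neighborhood of radius \(\epsilon _2\).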

Definition 1.7

Consider two self-operators K and H on a nonempty subset \(V^*\) of a Banach space \(B^*\). If, for all \(x \in V^*\) and for a fixed \(\epsilon > 0\), we have \(\Vert Hx - Kx \Vert \le \epsilon \), then the operator K is said to be an approximate operator for H.

The following lemma plays an essential role in proving the main results of this paper.

Lemma 1.2

[26] Let \(\{a_{m}\}\) and \(\{b_{m}\}\) be two sequences of nonnegative real numbers and let \(t \in [0,1)\) be such that \(a_{m+1}\le ta_{m} + b_{m}\) for all \(m\ge 0\). Then,

Another useful lemma is stated in [19].

Lemma 1.3

[19] Let \(\{\alpha _m\}\) be a sequence in \({\mathbb {R}}_{+}\), and suppose there exists \(N \in {\mathbb {Z}}_{+}\) such that for all \(m \ge N\), \(\{\alpha _m\}\) satisfies the following inequality:

where \(\mu _m \in (0, 1)\) for all \(m \in {\mathbb {Z}}_{+}\), such that \(\sum _{m=0}^{\infty } \mu _m = \infty \) and \(\eta _m \ge 0\) is a bounded sequence. Then:

2 Main results

We present a novel two-step iterative algorithm, named the PV algorithm, which is given below.

For a mapping \(H: V^* \rightarrow V^*\), where \(V^*\) is a nonempty, closed and convex subset of a Banach space \(B^*\), the sequence \(\{p_{m}\}\) is defined by:

where \(\{r_{m}\}\) is a sequence in (0, 1).

Now, we prove the main results using the PV iterative algorithm for weak contractions which satisfy (1.10) on an arbitrary Banach space. We begin with the following result on strong convergence.

Theorem 2.1

Let \(V^*\) be a nonempty, closed and convex subset of a Banach space \(B^*\), and let H be a self-map on \(V^*\) which is a weak contraction also satisfying (1.10). Then, the sequence \(\{p_{m}\}\) defined by the PV iterative algorithm (2.1) converges to the unique fixed point of H.

Proof

Let \(p \in \text {Fix}(H)\). By condition (1.10), we have:

Now, by PV iterative algorithm (2.1), we have

Inductively, we get

Now, since \(0<\delta <1\), \(\{p_{m}\}\) converges strongly to p. \(\square \)

The next theorem shows the almost H-stability of the PV iterative algorithm (2.1).

Theorem 2.2

Let H be a weak contraction from \(V^*\) to \(V^*\) which also satisfies (1.10), where \(V^*\) is a nonempty, closed and convex subset of a Banach space \(B^*\). Then, the PV iterative algorithm (2.1) is almost H-stable.

Proof

Let \(\{y_{m}\}\) be an arbitrary sequence in \(V^*\), let the sequence constructed by the PV algorithm be \(p_{m+1} = g(H, p_{m})\), and let \(\sigma _{m}=\Vert y_{m+1} - g(H,y_{m})\Vert \), where m is in \(Z_{+}\). Now, we will show:

Let \(\sum _{m=0}^{\infty }\sigma _{m} < \infty \). Then, by the PV algorithm, we have

Let \(u_{m}=\Vert y_{m} -p\Vert \) and \(q=\delta ^{3}\). Then, we have \(u_{m+1} \le \sigma _{m} + q u_{m}\). Since \(\delta \in (0,1)\), we have \(0<q<1\), and \(\sum _{m=0}^{\infty }\sigma _{m} < \infty \implies \sigma _{m} \rightarrow 0\). Therefore, \(u_{m} \rightarrow 0\) by Lemma 1.2. \(\square \)
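The last step of the proof relies on the recursion \(u_{m+1} \le \sigma _{m} + q u_{m}\) with \(q = \delta ^{3}\). The following is a small numerical sketch of that step, taking the worst case in which the inequality holds with equality. The values \(\delta = 0.9\), \(u_0 = 1\) and the summable perturbation \(\sigma _m = 1/(m+1)^2\) are assumptions chosen for illustration, not data from the paper.

```python
def worst_case_errors(delta, sigma, u0, steps):
    """Iterate u_{m+1} = sigma(m) + q * u_m with q = delta**3.

    This is the equality case of the bound used in the proof, so any
    sequence satisfying the inequality is dominated by the output.
    """
    q = delta ** 3
    u = [u0]
    for m in range(steps):
        u.append(sigma(m) + q * u[-1])
    return u


# Hypothetical inputs: delta = 0.9 and a summable perturbation sequence.
errors = worst_case_errors(
    delta=0.9,
    sigma=lambda m: 1.0 / (m + 1) ** 2,
    u0=1.0,
    steps=2000,
)

for m in (0, 10, 100, 1000, 2000):
    print(m, errors[m])
```

Even with \(\delta \) close to 1, the dominating sequence decays towards zero once the perturbations \(\sigma _m\) become small, which is the behaviour the lemma is used for.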

In the next theorem, we demonstrate that the PV algorithm is faster than the other iterative algorithms.

Theorem 2.3

Let \(H: V^* \rightarrow V^*\) be a weak contraction also satisfying (1.10), where \(V^*\) is a nonempty, closed and convex subset of a Banach space \(B^*\). Let the sequences \(\{p_{1,m}\},\, \{p_{2,m}\},\, \{p_{3,m}\},\, \{p_{4,m}\},\, \{p_{5,m}\},\, \{p_{6,m}\},\, \{p_{7,m}\}\) and \(\{p_{m}\}\) be defined by the Picard, Mann, Ishikawa, S, normal-S, Varat, \(F^{*}\) and PV iterative algorithms, respectively, and suppose they all converge to the same point \(p \in \text {Fix}(H)\). Then, the PV algorithm converges faster than all the other algorithms mentioned above.

Proof

Because of inequality (2.2) in Theorem 2.1, we have:

As proved in [27]:

Then:

Now, since \(0<\delta <1\), we have

Hence, the sequence \(\{p_{m}\}\) converges to p faster than \(\{p_{1,m}\}\). Now, for the normal-S algorithm, as proved by Ali [19], we get:

Now, since \(0<\delta <1\), we have

Hence, the sequence \(\{p_{m}\}\) converges to p faster than \(\{p_{5,m}\}\).

As proved by Sintunavarat and Pitea [18],

Now, since \(0<\delta <1\), we have

Hence, the sequence \(\{p_{m}\}\) converges to p faster than \(\{p_{6,m}\}\).

Now, for \(\{p_{7,m}\}\), by Ali [19] we have

Now, since \(0<\delta <1\), we have

Hence, the sequence \(\{p_{m}\}\) converges to p faster than \(\{p_{7,m}\}\). \(\square \)

Also, Ali [19] showed that the \(F^{*}\) algorithm converges faster than the Varat, Mann, Ishikawa, and normal-S algorithms in the case of weak contractions. Thus, the PV iterative algorithm converges faster than every iterative algorithm from (1.1) to (1.7).

Example 2.1

Let \(B^* = {\mathbb {R}}\) be the Banach space of real numbers with the usual norm and let \(V^* = [0,\frac{\pi }{2}]\). Let \(f: V^* \rightarrow V^*\) be defined by \(f(x)=\frac{x}{4} + \cos (x)\). Then f is a contraction mapping, and hence a weak contraction satisfying (1.10), and it has exactly one fixed point, which is approximately 0.865. Here the control sequences are taken as

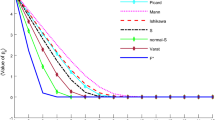

Taking an initial guess of 0.44933229232998 and using Python, we can see that the PV iteration converges to the fixed point (approximately 0.865) faster than the Picard [13], Mann [14], Ishikawa [15], S [16], normal-S [17], Varat [18] and F* [19] iterative algorithms, as shown in Table 1 and Fig. 1.

Behaviour of convergence for the sequences defined by various iterative algorithms for Example 2.1

Behaviour of convergence with error for the sequences defined by various iterative algorithms for Example 2.1
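For readers who wish to experiment with Example 2.1, the following is a minimal Python sketch of three of the classical schemes (Picard, Mann and Ishikawa, in their standard textbook forms) applied to \(f(x)=\frac{x}{4}+\cos (x)\) from the initial guess used above. The control sequences of the example are not reproduced in the text here, so the constant values `r = 0.5` and `s = 0.5` are assumptions made purely for illustration; the PV scheme (2.1) itself is not implemented, and the function names are hypothetical.

```python
import math


def f(x):
    """The mapping of Example 2.1 on [0, pi/2]."""
    return x / 4 + math.cos(x)


def picard(H, p0, steps):
    """Picard iteration: p_{m+1} = H(p_m)."""
    p = p0
    for _ in range(steps):
        p = H(p)
    return p


def mann(H, p0, steps, r=0.5):
    """Mann iteration with an assumed constant control value r."""
    p = p0
    for _ in range(steps):
        p = (1 - r) * p + r * H(p)
    return p


def ishikawa(H, p0, steps, r=0.5, s=0.5):
    """Ishikawa iteration with assumed constant control values r and s."""
    p = p0
    for _ in range(steps):
        q = (1 - s) * p + s * H(p)
        p = (1 - r) * p + r * H(q)
    return p


p0 = 0.44933229232998  # initial guess used in Example 2.1
schemes = [("Picard", picard), ("Mann", mann), ("Ishikawa", ishikawa)]
results = {name: scheme(f, p0, 60) for name, scheme in schemes}

for name, p in results.items():
    # Report the approximate fixed point and the residual |f(p) - p|.
    print(name, p, abs(f(p) - p))
```

With these assumed parameters all three schemes settle near 0.865, in agreement with the fixed point reported in the example. The relative speeds printed by such a sketch depend on the chosen control values and need not match Table 1.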

Example 2.2

Weak contraction which is not a contraction

Since f is not continuous at x = 0.5, f cannot be a contraction, but it can be easily verified that f is a weak contraction with \(\delta =\frac{1}{2}\) and \(L=1\), and that its fixed point is 0. Hence, all the conditions of Theorems 2.1 and 2.3 are satisfied. Taking the control sequences \(r_{m} = s_{m} = t_{m} = 0.4790527832595\) and using Python, we can show that the sequence defined by the PV iterative algorithm (2.1) converges to the unique fixed point p = 0 of the mapping f faster than the algorithms (1.1) to (1.7), as shown in Table 2 and Fig. 3.

Behaviour of convergence for the sequences defined by various iterative algorithms for Example 2.2

Behaviour of convergence with error for the sequences defined by various iterative algorithms for Example 2.2

Now, we prove a result which will be used in the Application section.

3 Result on data dependence

Data dependence of fixed points has recently become a key research area in fixed point theory. Noteworthy researchers who have contributed to this field are Markin [28], Muresan [29], Berinde [1, 30, 31], Soltuz [32], Soltuz and Grosan [33] and Olatinwo [34]. We now prove a theorem on the data dependence of fixed points.

Theorem 3.1

Let H be a weak contraction also satisfying (1.10), let K be an approximate operator of H, and let \(\{p_{m}\}\) be the sequence generated by the PV iterative algorithm (2.1) for H. Now, generate a sequence \(\{u_{m}\}\) for K as follows:

where \(\{r_{m}\}\) is a sequence in (0, 1) satisfying \(0.5 \le r_{m}\) for all m in \(Z_{+}\), so that \(\sum _{m=0}^{\infty }r_{m}=\infty \). If \(Hp = p\) and \(Kq = q\) such that \(u_{m} \rightarrow q\) and \(Ku_{m} \rightarrow q\) as \(m\rightarrow \infty \), then we have:

where \( \epsilon >0\) is a fixed number.

Proof

Using (3.1), (1.10) and (2.1), we get

One can show that

Now, substituting (3.3), (3.4) and (3.5) into (3.2), we get

Now, we consider

Now, using the fact that \(0.5 \le r_{m}\), and thus \(1 - r_{m}\le r_{m}\), together with \(\delta \in (0, 1)\), we obtain

Let

Now, as \(r_{m}\in (0,1)\) and \(\delta \in (0,1)\), we have \(\mu _{m}\in (0,1)\); moreover, \(r_{m} \ge 0.5\) forces \(\sum _{m=0}^{\infty }r_{m}=\infty \), and hence \(\sum _{m=0}^{\infty }\mu _{m}=\infty \), as Lemma 1.3 requires. With \(\theta _{m}\), \(\mu _{m}\) and \(\eta _{m}\) as defined above, all the conditions of Lemma 1.3 are satisfied; hence we have

Substituting the value of \(\eta _{m}\) in (3.6) above and using the fact that \(\Vert Hp_{m} -p_{m}\Vert \rightarrow 0\) and \(\Vert Hu_{m} -u_{m}\Vert \le \epsilon \), we get

\(\square \)

The following example supports the above theorem.

Example 3.1

Consider

One can easily show that this mapping is a weak contraction with \(\delta =\frac{16}{25}\).

Now, consider

thus here \(\epsilon =0.032\).

The fixed point of the function H is \(q=-0.002\), and \(u_{m}\rightarrow q\) as well. Since H is continuous, \(u_{m}\rightarrow H(q)=q\).

Let us take \(K(t) = 0.127+0.0631(t-0.2)-0.04(t-0.2)^2\), \(r_{m} =0.49\) and \(u_{0} =u \in Y\).

From Table 3, we can see that \(\{u_{m}\}\) converges to the fixed point \(q=-0.0022\) of K.

Now, using Theorem 3.1, we have

For this example, we have \(L =0\) and \(\delta =\frac{16}{25}\); thus we get

Substituting \(\epsilon =0.0032\) and \(\delta =\frac{16}{25}\), we have

Thus, from the theorem we have \(\Vert p-q\Vert \le 0.30356\), while in fact \(\Vert p-q\Vert = 0.0022\).

4 Application

In this section, we apply our results to solve nonlinear matrix equations; applications of this kind can be found in papers such as [35] and [36]. To this end, we introduce the following terminology.

\(\Vert .\Vert _{tr}\) represents the trace norm. \(\Vert A\Vert _{tr}\), also written as tr(A), is obtained by adding the singular values of A, where the singular values of A are the square roots of the eigenvalues of \(A^{*}A\).

\(\Vert .\Vert \) represents the spectral norm.

\(\Vert A\Vert = \sqrt{\lambda ^{+}(A^{*}A)}\), where \(\lambda ^{+}(A^{*}A)\) is the largest eigenvalue of \(A^{*}A\).

\(M_{k}\) represents the set of \(k \times k \) matrices.

\(H_{k}\) represents the set of \(k \times k \) Hermitian matrices.

\(P_{k}\) represents the set of \(k \times k \) positive semi-definite matrices.

\(X _1\ge 0\) means \(X _1\in P_{k}\).

\(X _1>X_2\) means \(X _1-X_2 > 0\).

\(X _1 \ge X_2\) means \(X _1 - X_2 \ge 0\).

Remark [37] \(P_{k}\subseteq H_{k}\subseteq M_{k}\), and (\(H_{k},\le \)) is a partially ordered set. Moreover, \(H_{k}\) with the trace norm is a complete metric space and hence a Banach space.

Lemma 4.1

[38] If \(X_2\ge 0\) and \(X_1\ge 0\) then \( 0 \le tr(X_2X_1)\le \Vert X_1\Vert tr(X_2)\).
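The inequality of Lemma 4.1 can be checked by hand on a small instance. The sketch below does this in plain Python for two real symmetric positive semi-definite \(2 \times 2\) matrices; the matrices and the helper names are hypothetical choices for illustration, and the closed-form eigenvalue formula used for the spectral norm is valid only for symmetric positive semi-definite \(2 \times 2\) matrices.

```python
import math


def matmul(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
        for i in range(2)
    ]


def trace(a):
    return a[0][0] + a[1][1]


def spectral_norm_sym_psd(a):
    """Largest eigenvalue of a real symmetric 2x2 matrix.

    For a positive semi-definite matrix this equals the spectral norm.
    """
    mean = (a[0][0] + a[1][1]) / 2
    radius = math.hypot((a[0][0] - a[1][1]) / 2, a[0][1])
    return mean + radius


# Hypothetical test matrices, both positive semi-definite.
X1 = [[2.0, 1.0], [1.0, 2.0]]  # eigenvalues 1 and 3
X2 = [[1.0, 0.0], [0.0, 3.0]]  # eigenvalues 1 and 3

lhs = trace(matmul(X2, X1))                  # tr(X2 X1)
rhs = spectral_norm_sym_psd(X1) * trace(X2)  # ||X1|| tr(X2)

print("tr(X2 X1) =", lhs)
print("||X1|| tr(X2) =", rhs)
print("0 <= tr(X2 X1) <= ||X1|| tr(X2):", 0 <= lhs <= rhs)
```

For this instance the left-hand side is 8 and the bound is 12, so the inequality holds with room to spare.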

Consider the following non-linear matrix equation

where each \(A_{i}\) is an arbitrary \(k \times k \) matrix for \(i = 1, 2, \ldots , m\), \(Q_{1}\) is a positive definite Hermitian matrix, and F is an order-preserving continuous map from \(P_{k}\) into \(P_{k}\). Recall that \(H_{k}\), endowed with the trace norm, is a Banach space and hence a complete metric space. Let \(G: P_{k} \rightarrow P_{k}\) be a continuous order-preserving self-map such that

for all \(X \in P_{k}\). Clearly, a fixed point of G is a solution of the above equation.

Define \(\textit{C}=\{ tQ_{1}+(1-t)X_{0} \mid t\in [0,1] \}\).

Lemma 4.2

Let G be as defined above, with \(G(Q_{1}), G(X_{0}) \in C\) for some \(X_{0}\), and suppose that F satisfies \(F(tX+(1-t)Y) = tF(X) + (1-t)F(Y)\) for all \(X, Y \in C\) and \(t \in [0,1]\).

Then G is a mapping from C to C.

Proof

Let \(A \in \textit{C}\). Then \(A=tQ_{1}+(1-t)X_{0}\) for some \(t\in [0,1]\).

Now,

Substituting the value of A above, we get

Now, since \(G(Q_{1})\) and \(G(X_{0})\) belong to C and C is a convex set, we have \(G(A)=tG(Q_{1}) +(1-t)G(X_{0})\in C\). \(\square \)

Theorem 4.3

Let (4.1) be the nonlinear matrix equation given above. Now consider

Assume that there exists \(X_{0}\) such that \(G(X_{0}) \le X_{0}\). Let \(\textit{C} = \{ tQ_{1} + (1-t)X_{0} \mid t \in [0,1] \}\). Let \(F:P_{k}\rightarrow P_{k}\) be a nonlinear function such that \(F(tX_{0}+(1-t)Q_{1})=tF(X_{0})+(1-t)F(Q_{1})\) for all \(t\in [0,1]\), and let \(G(X_{0}), G(Q_{1}) \in C\). By Lemma 4.2, we have \(G:C\rightarrow C\), where C is a closed and convex subset of \(H_{k}\), which is a Banach space under the trace norm. Further, assume that the following conditions hold.

(i) \(\Vert F(X_{1})-F(Y_{1})\Vert _{tr} \le \beta (\Vert X_{1}-G(X_{1})\Vert _{tr} + \Vert Y_{1}-G(Y_{1})\Vert _{tr})\), where \(\beta \in [1, \frac{3}{2}]\).

(ii) \(\Vert \sum _{i=1}^{m}A^{*}_{i}A_{i}\Vert \le \alpha \), where \(\alpha \in [0, \frac{1}{4}]\).

Proof

Let \(X_{1},Y_{1}\in C\). Without loss of generality, assume that \(X_{1}\ge Y_{1}\), as all elements in C are comparable.

Now,

Now, since F is an order-preserving map, \(X_{1}\ge Y_{1} \implies F(X_{1})\ge F(Y_{1})\); thus \(A^{*}_{i}(F(X_{1})- F(Y_{1}))A_{i}\ge 0\), and hence \(\sum _{i=1}^{m}A^{*}_{i}(F(X_{1})-F(Y_{1}))A_{i}\ge 0\).

Now, applying Lemma 4.1, we get

Thus, \(\textit{G}\) is a Kannan map from \(\textit{C}\) to \(\textit{C}\). Since every Kannan mapping is a weak contraction, we can apply Theorem 2.1 to obtain the fixed point of \(\textit{G}\), which is the solution of equation (4.1). \(\square \)

We now give an example to support the above result.

Example 4.1

Consider the matrix difference equation

Let m = 2, \(\textit{C}=\{ tQ_{1}+(1-t)X_{0} \mid t\in [0,1] \}\), \(Q_{1}=\begin{pmatrix} 5 &{} 0 \\ 0 &{} 5 \end{pmatrix}\), \(F(X)=X+Q_{1}\), \(A_{1}=\begin{pmatrix} \frac{\sqrt{3}}{4} &{} 0 \\ 0 &{} \frac{\sqrt{3}}{4} \end{pmatrix}\) and \(A_{2}=\begin{pmatrix} \frac{1}{4} &{} 0 \\ 0 &{} \frac{1}{4} \end{pmatrix}\). Then one can easily show that \(F:C\rightarrow C\) satisfies

Also, we have \(\Vert A^{*}_{1}A_{1}+A^{*}_{2}A_{2}\Vert =\frac{1}{4}\) and \(G(X)= Q_{1} +\frac{1}{4}(X+Q_{1})\).

Now, consider

Using the formula for G(X) and G(Y)

Thus, all the conditions of Theorem 4.3 are satisfied; hence we can apply Theorems 2.1 and 4.3 to obtain the solution of the matrix difference equation.
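The data of Example 4.1 can be explored with a short computation. The following sketch is written under two assumptions: first, that G has the form \(G(X) = Q_{1} + \sum _{i} A_{i}^{*}F(X)A_{i}\) with \(F(X) = X + Q_{1}\), which is chosen so that it agrees with the closed form \(G(X)= Q_{1} +\frac{1}{4}(X+Q_{1})\) stated in the example; second, that plain Picard iteration is used as a stand-in for the PV scheme (2.1), which is not reproduced here. Helper names are hypothetical.

```python
import math


def matmul(a, b):
    """Product of two 2x2 matrices stored as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
        for i in range(2)
    ]


def transpose(a):
    """Transpose; for real matrices this is the conjugate transpose."""
    return [[a[j][i] for j in range(2)] for i in range(2)]


def add(a, b):
    return [[a[i][j] + b[i][j] for j in range(2)] for i in range(2)]


Q1 = [[5.0, 0.0], [0.0, 5.0]]
A1 = [[math.sqrt(3) / 4, 0.0], [0.0, math.sqrt(3) / 4]]
A2 = [[0.25, 0.0], [0.0, 0.25]]


def F(X):
    return add(X, Q1)


def G(X):
    """Assumed form G(X) = Q1 + sum_i A_i^* F(X) A_i."""
    out = Q1
    for A in (A1, A2):
        out = add(out, matmul(matmul(transpose(A), F(X)), A))
    return out


# S = A1^* A1 + A2^* A2; for this data it is one quarter of the identity.
S = add(matmul(transpose(A1), A1), matmul(transpose(A2), A2))

# Picard iteration X_{k+1} = G(X_k), started from Q1 (an arbitrary choice).
X = Q1
for _ in range(60):
    X = G(X)

print("A1^*A1 + A2^*A2 =", S)
print("approximate fixed point of G:", X)
```

Solving \(X = Q_{1} + \frac{1}{4}(X + Q_{1})\) by hand gives \(X = \frac{25}{3}I\), and the iterates of the sketch approach this matrix.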

5 Conclusion

In this paper, we have presented a novel two-step iterative algorithm for determining fixed points of weak contractions in Banach spaces. As demonstrated by Theorem 2.3, this algorithm converges faster than the Picard, Mann, Ishikawa, S, normal-S, Varat and F* iterative algorithms. Additionally, in Theorem 2.2, we have proved that the PV iterative algorithm is almost H-stable. Our claims are supported by Examples 2.1 and 2.2. Furthermore, we have obtained a result on data dependence, and an example illustrates the validity of this result. Lastly, we have approximated the solution of a nonlinear matrix difference equation. However, a few natural questions arise in this field which may be investigated further:

(Q1) Is it possible to define an iterative technique whose convergence rate is faster than that of the PV iterative procedure for the class of weak contractions in a Banach space?

(Q2) Does the PV iteration strongly converge to the fixed point of weak contractions in spaces with weaker conditions than a Banach space, such as a metric space or quasi-Banach space?

(Q3) Does the PV iterative algorithm converge for other classes of mappings, such as enriched contractions or quasi-nonexpansive mappings?

Availability of data

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

References

Berinde, V.: On the approximation of fixed points of weak contractive mappings. Carpathian J. Math. 19, 7–22 (2003)

Kannan, R.: Some results on fixed points. Bull. Cal. Math. Soc. 60, 71–76 (1968)

Chatterjea, S.K.: Fixed-point theorems. Doklady Bolgarskoi Akademiya Nauk 25 (1972)

Zamfirescu, T.: Fix point theorems in metric spaces. Arch. Math. 23(1), 292–298 (1972)

Mohd, J., Javid, A., Santosh, K.: Estimating fixed points via new iterative scheme with an application. J. Funct. Spaces (2022). https://doi.org/10.1155/2022/3740809

Mohammad, I., Sahu, D.R., Santosh, K.: Fixed point theorems in symmetrizable topological spaces. Nonlinear Anal. Forum 9, 97–107 (2004)

Singh, V.K., Santosh, K.: Iteration process with errors for local strongly h-accretive type mappings. Fixed Point Theory 9, 351–362 (2008)

Santosh, K.: Some fixed point theorems for iterated contraction maps. J. Appl. Function. Anal. 10, (2015)

Anju, P., Reena, M., Kumar, S.: Fixed points of nonexpansive mappings using mp iterative process. Adv. Theory Nonlinear Anal. Appl. 6(2), 229–245 (2022)

Pragati, G., Santosh, K., Swapnil, V., Soumya, G.: On some w - interpolative contractions of suzuki-type mappings in quasi-partial b-metric space. J. Funct. Spaces 2022, 1–12 (2022). https://doi.org/10.1155/2022/9158199

Pragati, G., L.M., S.R., Swapnil, V., Gauri, G.: Common fixed point results on generalized weak compatible mapping in quasi-partial b-metric space. Journal of Mathematics 2021, 1–10 (2021). https://doi.org/10.1155/2021/5526801

Pragati, G., Swapnil, V.: Fixed point via implicit contraction mapping on quasi-partial b-metric space. J. Anal. (2021). https://doi.org/10.1007/s41478-021-00309-6

Picard, E.: Memoire sur la theorie des equations aux derivees partielles et la methode des approximations successives. J. Math. 6, 145–210 (1890)

Mann, W.R.: Mean value methods in iteration. Proc. Am. Math. Soc. 4(3), 506–510 (1953)

Ishikawa, S.: Fixed points by a new iteration method. Proc. Am. Math. Soc. 44(1), 147–150 (1974)

Agarwal, R.P., O’Regan, D., Sahu, D.R.: Iterative construction of fixed points of nearly asymptotically nonexpansive mappings. J. Nonlinear Convex Anal. 8(1), 61 (2007)

Sahu, D.R.: Applications of the s-iteration process to constrained minimization problems and split feasibility problems. Fixed Point Theory 12(1), 187–204 (2011)

Sintunavarat, W., Pitea, A.: On a new iteration scheme for numerical reckoning fixed points of Berinde mappings with convergence analysis. J. Nonlinear Sci. Appl. 9(5), 2553–2562 (2016)

Faeem, A., Javid, A.: Convergence, stability, and data dependence of a new iterative algorithm with an application. Comput. Appl. Math. 39, 267 (2020). https://doi.org/10.1007/s40314-020-01316-2

Ostrowski, A.: The round-off stability of iterations. ZAMM-J. Appl. Math. Mech. 47(2), 77–81 (1967)

Harder, A.M.: Fixed point theory and stability results for fixed point iteration procedures. Ph.D. Thesis, Univ of Missouri-Rolla (1987)

Harder, A.M.: Fixed point theory and stability results for fixed point iteration procedures (1988)

Osilike, M.: Stability results for the Ishikawa fixed point iteration procedure. Indian J. Pure Appl. Math. 26, 937–946 (1995)

Osilike, M.: Stability of the Mann and Ishikawa iteration procedures for \(\varphi \)-strong pseudocontractions and nonlinear equations of the \(\varphi \)-strongly accretive type. J. Math. Anal. Appl. 227(2), 319–334 (1998)

Berinde, V.: Picard iteration converges faster than Mann iteration for a class of quasi-contractive operators. Fixed Point Theory Appl. 2004(2), 1–9 (2004)

Berinde, V.: Generalized Contractions and Applications. Editura cub press Baia Mare, Romania (1997)

Khan, S.H.: A Picard–Mann hybrid iterative process. Fixed Point Theory Appl. 2013(1), 1–10 (2013)

Markin, J.: Continuous dependence of fixed point sets. Proc. Am. Math. Soc. 38(3), 545–547 (1973)

Muresan, S.: Data dependence of the fixed points set of weakly Picard operators. Studia Univ. Babes-Bolyai Math. 43(1), 79–83 (1998)

Rus, I.A., Petruşel, A., Sıntămărian, A.: Data dependence of the fixed point set of some multivalued weakly Picard operators. Nonlinear Anal.: Theory Methods Appl. 52(8), 1947–1959 (2003)

Espinola, R., Petrusel, A.: Existence and data dependence of fixed points for multivalued operators on gauge spaces. J. Math. Anal. Appl. 309, 420–432 (2005). https://doi.org/10.1016/j.jmaa.2004.07.006

Soltuz, S.M.: Data dependence for Ishikawa iteration. Lect. Mat. 25(2), 149–155 (2004)

Şoltuz, Ş, Grosan, T.: Data dependence for Ishikawa iteration when dealing with contractive-like operators. Fixed Point Theory Appl. 2008, 1–7 (2008)

Olatinwo, M.: Some results on the continuous dependence of the fixed points in normed linear space. Fixed Point Theory 10(1), 151–157 (2009)

Pragati, G., Chanpreet, K.: Fixed points of interpolative matkowski type contraction and its application in solving non-linear matrix equations. Rendiconti del Circolo Matematico di Palermo (2022). https://doi.org/10.1007/s12215-022-00789-w

Pragati, G., Singh, S.R., Santosh, K., Swapnil, V.: On nonunique fixed point theorems via interpolative chatterjea type suzuki contraction in quasi-partial b-metric space. J. Math. 2022, 1–10 (2022). https://doi.org/10.1155/2022/2347294

André, R., Martine, R.: A fixed point theorem in partially ordered sets and some application to matrix equations. Proc. Am. Math. Soc. 132, 1435–1443 (2004). https://doi.org/10.1090/S0002-9939-03-07220-4

Jian-hui, L., Xi-yan, H., Lei, Z.: On the Hermitian positive definite solution of the nonlinear matrix equation X + A*X^{-1}A + B*X^{-1}B = I. Bull. Braz. Math. Soc. 39, 371–386 (2008)

Author information

Contributions

Both the authors contributed equally and significantly. Both the authors have read and approved the final manuscript.

Ethics declarations

Conflict of interest

Both the authors declare that they do not have a conflict of interests.

Consent to participate

Not applicable.

Consent for publication

Both the authors give their consent to the publisher to publish their research findings.

Ethics approval

This research doesn’t contain any studies performed on humans or animals as participants.

About this article

Cite this article

Gautam, P., Vineet Convergence and stability of a novel iterative algorithm for weak contraction in banach spaces. Rend. Circ. Mat. Palermo, II. Ser 73, 1537–1554 (2024). https://doi.org/10.1007/s12215-023-00991-4

DOI: https://doi.org/10.1007/s12215-023-00991-4