Abstract

Flying an aircraft is a mentally demanding task where pilots must process a vast amount of visual, auditory and vestibular information. They have to control the aircraft by pulling, pushing and turning different knobs and levers, while knowing that mistakes in doing so can have fatal outcomes. Therefore, attempts to improve and optimize these interactions should not increase pilots’ mental workload. By utilizing pilots’ visual attention, gaze-based interactions provide an unobtrusive solution to this. This research is the first to actively involve pilots in the exploration of gaze-based interactions in the cockpit. By distributing a survey among 20 active commercial aviation pilots working for an internationally operating airline, the paper investigates pilots’ perception and needs concerning gaze-based interactions. The results build the foundation for future research, because they not only reflect pilots’ attitudes towards this novel technology, but also provide an overview of situations in which pilots need gaze-based interactions.

1 Introduction

Human factors in general and human–computer interaction in particular have a longstanding history in aviation research [30]. Research starting as early as the 1950s was already concerned with “[...] whether (and how) to integrate information on a single display, how to arrange displays, how displays, controls, and the comprehension of spatial information are related, [...] what types of displays are best for certain functions [...]” [30, p. 772], and how to allocate “[...] tasks to humans and automation [...]” [30, p. 778].

These and other newly evolving topics make continuous research on human–computer interaction in aviation necessary. In particular, the growing role of automation in modern aircraft introduces challenges that necessitate intensive research with regard to workload, crew coordination, error management, vigilance [30, 50], as well as situation awareness [17]. Simultaneously, the introduction of the so-called “[...] glass cockpits, replacing nearly all electromechanical ‘steam gauges’ with graphical displays” [30, p. 778] radically enlarged the design space and increased the complexity of creating cockpit displays. Considering the potentially fatal outcomes of misperceptions involving human–computer interaction in the cockpit, the importance of this research becomes even more evident [27].

A powerful technology to explore and alleviate the impact of the previously mentioned challenges is eye tracking. Eye tracking can provide an unobtrusive way to observe, analyze and utilize a person’s visual attention [15]. We assume that, in the near future, it will be possible and allowed to integrate and reliably utilize eye tracking technology in the cockpit. By accepting enhanced flight vision systems in general and head-up displays in particular, the Federal Aviation Administration also “[...] recognized emerging technologies and placed within the rule, provisions for the use of an ‘equivalent display. [...]” [5, p. 4]. Thus, the regulations can be interpreted as to indicate that the use of head-mounted devices in the cockpit will no longer be prohibited in the near future [5]. This will allow the use of head-mounted displays such as augmented reality glasses, possibly with built-in mobile eye trackers. Also, remote eye tracking installations that integrate well into cockpits have been suggested [11].

In non-aviation related human–computer interaction research, different gaze-based interaction techniques have been proposed that are potentially also relevant for the cockpit: in explicit gaze-based interaction the user expresses the wish to trigger an interaction by using gaze as a pointer, either by fixating (e.g., [34]), or by moving the eyes to produce a smooth pursuit (e.g., [47]). Implicit approaches, on the other hand, record and interpret the user’s visual attention for estimating her information needs and adapting the interface (e.g., [6, 25]). Over the last few years, a trend towards increasing pervasiveness of gaze-based interaction can be observed [9], enabling gaze-based interaction with public displays [51], mobile phones [19, 28], smart watches [20], and display-free interaction with urban spaces [3].

In the context of aviation research, eye tracking is used to explore the correlation between pilots’ scanning behavior and their performance [13, 31, 42], expertise [44], mental workload [7, 16], vigilance [40], and for evaluating (existing and novel) displays, as well as the cockpit setup [36, 43]. Ziv [52] conducts a literature review on eye tracking studies in aviation, while Hollomon et al. [22] review ongoing gaze-based interaction research in general, and in relation to aviation. For the latter, they state that, even though research for both implicit and explicit gaze-based interactions exists, implicit solutions are missing. Dehais et al. [12] utilize implicit gaze to create an assistant system that improves pilots’ situation awareness, while Alonso et al. [2] explore its use to support air traffic control. Merchant and Schnell [35] use explicit gaze-based interactions in combination with audio inputs to control a flight simulator, while Pauchet et al. [38] do so to allow eyes-free interactions with touch surfaces. Thomas et al. [46] compare solutions for point-and-select tasks in the cockpit, and both Rudi et al. [41] and Peysakhovich et al. [39] explore different eye movement visualization techniques for the use in pilot training. Despite the lack of implicit gaze-based interactions, Hollomon et al. conclude that multi-modal solutions utilizing those should be preferred.

None of the preceding research evaluates how pilots perceive gaze-based interactions in the cockpit; that is, whether pilots can imagine working with such a technology and what potential applications in the cockpit they see. Understanding the needs of a user group should, however, be the first step when studying it. This holds in particular for aviation, where the environment restricts the design and implementation of interfaces. The restrictions include the limited access to simulators and pilots for evaluating systems, the strict governmental regulations, the long development cycles of aircraft manufacturers, and the complex and confined cockpit. Moreover, understanding pilots’ future gaze-based interaction needs contributes to the design and layout of future cockpits.

This article fills that gap and is the first to consult active professional airline pilots from an internationally operating airline. The responses are analyzed to determine the overall attitude among pilots, and to identify use cases that reflect pilots’ needs and should be the focus of future research. For this purpose, we chose to employ a survey, which allows us to identify potential applications of gaze-based interactions in the cockpit. It provides insights on key components of any human–computer interaction, i.e., the action (of the pilot) and reaction (by the system) [33]. This type of study is particularly useful in the cockpit environment, where extensive tests and modifications in a real cockpit or a full-flight simulator are difficult to conduct. Moreover, surveys allow collecting a diverse set of information in a more structured way than the alternatives of observations or interviews. They are also less time-consuming, which matters given the limited availability of airline pilots.

In summary, we make the following contributions based on the survey results:

Evaluate how professional airline pilots perceive gaze-based interactions.

Evaluate flight scenarios, for which pilots believe they can benefit from gaze-based interactions.

Infer directions for future research on gaze-based interactions in the cockpit.

In particular, the future directions on gaze-based interactions comprise a summary of the most promising gaze-based interactions identified by the pilots in Sect. 7.

The remainder of this article is structured as follows: first, we explain the existing interactions in today’s glass cockpits, by introducing the corresponding terminology, the visual attention distribution and pilots’ tasks and challenges, before presenting the research questions considered in this article. Then, we elaborate on the applied methodology, i.e., on the study procedure, distributed questionnaires and participating pilots. Afterwards, the results and a comprehensive discussion of those are presented, followed by the conclusions and outlook.

Fig. 1 a Frontal view of the A320 cockpit with its most important AOIs. b The details of the PFD. The description of the abbreviations used in the figures can be found in Table 1 and Section 2.1

2 Interactions in the cockpit

This section helps to understand the flight scenarios (in the following referred to simply as scenarios) that pilots identified for gaze-based interactions in the cockpit. A scenario describes a flight during which pilots encounter a sequence of different events, such as a change of the wind direction. The cockpit setup and abbreviations are introduced in the next section, as a basis for discussions in the rest of the article. The subsequent sections present the existing interactions in the cockpit, the visual attention distribution, and the tasks and challenges pilots face on a daily basis, and thereby provide insights into pilots’ motivations and needs. Based on these aspects, the research questions are defined.

The aviation domain is separated into civil and military aviation. Within civil aviation, there is the category of scheduled air transport, which is concerned with the commercial transportation of passengers or goods. This article focuses on this aviation category, which is commonly also referred to as commercial aviation. In particular, all pilots that participated in our study are certified for a widely used aircraft type in commercial aviation, the Airbus 320 (A320) [1]. The A320 family is a typical example of an aircraft whose glass cockpit has not undergone any major changes since the first generation was shipped during the late 1980s. For simplicity, we refer to the commercial aviation pilots as pilots.

2.1 Cockpit setup

To better understand the pilots’ situation, it is important to know the environment they are working in. Therefore, we introduce and explain the most important areas of interest (AOIs) in the A320 cockpit, as well as their abbreviations. These AOIs are used in the subsequent analyses, and were selected based on the previous work by Anders [4] and a discussion with our domain expert. Figure 1a shows a frontal view of the A320 cockpit including the AOIs. The abbreviations are explained in Table 1 and this section.

After entering the cockpit, pilots first adjust their seats to be able to look out the aircraft window. This allows them to look straight outside and to the left or right, depending on their seat (OUT). An unobstructed view to the outside is important during the takeoff and landing phase, as well as during ground operations, and if visual meteorological conditions hold, also during the cruise phase. The commander usually sits on the left seat, while the first officer sits on the right (see Fig. 2).

Fig. 2 Pilots in an A320 full flight simulator without a HUD. Also depicted is some of the eye tracking equipment (red circles, i.e., two cameras and one infrared emitter) that pilots had experience with (refer to the section on the study procedure), before filling in our survey. This setup only monitors the first officer to the right. The commander sits to the left

The A320 can be equipped with a head-up display (HUD), which is a see-through display containing the same information as shown on the primary flight display. Its advantage is that due to its position within the pilot’s outside view (to the front), there is no need to look down into the cockpit anymore, thus minimizing the number of attention switches. This is of particular interest in the landing phase during which the pilot looks out the window at the runway, and simultaneously tries to monitor the flight parameters of the primary flight display (described in detail below). Both pilots have a laptop or tablet called electronic flight bag (EFB) at their disposal. It is located beneath the left (for the commander) or right window (for the first officer). The EFB contains information, such as the destination airport’s takeoff and landing routes, meteorological reports or administrative information.

Underneath the window is the glareshield (GSc), which describes the area containing the flight control units (FCUs), navigation display controls, as well as some other control knobs. Except for the FCUs, the elements are mirrored for both pilots. The FCUs allow the pilot to control the aircraft automation, which includes controlling the altitude (i.e., the flight height), heading (i.e., the yaw of the aircraft), speed (i.e., the forward motion speed), vertical speed (i.e., the vertical motion speed) and autopilots (i.e., whether or not the aircraft is controlled by the pilot using the side-stick).

The main panel (MPn) contains the primary flight display (PFD) and the navigation display (ND) (mirrored for both pilots), the electronic centralized aircraft monitors (ECAMs), the gear controls, as well as other control knobs.

The PFD (see Fig. 1b) can be considered the most important display in the cockpit. It contains all the flight parameters that the pilot needs to monitor for the aviating task. These include the speed (SPD), attitude (ATT—i.e., the orientation of the aircraft; pitch/roll), altitude (ALT), flight mode annunciators (FMAs—describing the aircraft’s automation state), vertical speed (V/S), heading (HDG), barometric information (QNH—i.e., the outside air pressure, which is important for the altitude computation) and frequency information (FRQ—i.e., the frequency used for communication with the air traffic controller). The latter is configured using the radio management panel (RMP).

The ND, situated next to the PFD, is a multi-modal display used during the navigating task. That is, it is possible to change its mode of representation or blend in additional information (such as terrain). Amongst others, it displays both the aircraft’s current position in relation to the set of pre-defined waypoints along the flight route (including the start point and destination), as well as meteorological information such as the wind direction and speed, the most imminent weather hazards and the topography of the area underneath the aircraft.

The ECAMs are multi-modal displays situated in the center of the cockpit. The upper ECAM is called the engine/warning display (EWD) and contains both information on the engine status and warning or checklist information, as well as other aircraft information, such as the amount of fuel. The lower ECAM is the system display (SD), which contains either additional information from/for the EWD or other aircraft parameters.

Finally, the center pedestal (CP) is the name for the area between the two pilots. It contains two multifunction control display units (MCDUs), which are mirrored for both pilots. The MCDUs allow pilots to set the flight route and different long-term flight parameters. The computer system of an MCDU is called the flight management guidance computer (FMGC). In addition to the MCDUs, the center pedestal contains the thrust lever, flaps control, speed brakes, RMP, and other aircraft control knobs.

2.2 Interactions and visual attention

Figures 1 and 2, even though only depicting the most relevant parts of the cockpit, already demonstrate its complexity. Most of the depicted knobs can be pushed, some can be turned, others can be pulled and few allow all of these actions. Different combinations of these interactions may lead to different outcomes. Other knobs might start blinking and thus change their representation mode, requiring the pilot to interact. Moreover, all input devices provide haptic feedback to the pilot, i.e., all knobs, levers and even the foot pedals. The pilot continuously modifies these throughout the flight using the FCUs and MCDU. They allow the pilot to configure the automation parameters, plan the flight route, perform computations, etc. The levers (flaps, gear, brakes) on the main panel and center pedestal are used primarily during takeoff and landing phases, while the remaining knobs and levers are used less frequently. Not depicted is the over-head console, with the knobs, switches and levers, which are used in emergencies, to change the cockpit lighting and to communicate with the passengers.

Some of the displays are multi-modal, i.e., they provide different representations and require an additional interaction step to retrieve information. Each display has its own specific representation(s). The pilot needs to read and process the information from all of these. More precisely, the pilot either has directly accessible information on a single display, needs to combine different displays, or to interact with the aircraft to change a display’s mode. Even though the intensity of the interaction changes with the scenario at hand, Anders [4] shows that the PFD and ND are used the most throughout the flight. This is reasonable, considering that those two are the key references for a pilot when aviating and navigating. The FCU, MCDU, EFB and OUT areas complement the aviating and navigating tasks, while the ECAMs are used for system management.

Finally, the pilot needs to process the aircraft’s audio notifications, the air traffic controller (ATC) call-outs, the other pilot’s and possibly the cabin crew’s feedback, alongside the usual engine sounds of an aircraft. Consequently, the pilot’s interaction space is not only complex, but her cognitive capacities are also challenged both visually and auditorily throughout the flight.

2.3 Tasks and challenges

Scheduled air transport aircraft are usually manned with two pilots: the pilot flying (PF) and the pilot monitoring (PM). The former is concerned with the main control of the aircraft (e.g., steering), while the latter performs supplementary tasks (e.g., letting the gear down), and double-checks and confirms the actions of the PF. Among the PF’s tasks is also the crew resource management, which includes communicating with the PM (e.g., by giving the order to let the gear down) and the cabin crew (e.g., announcing upcoming turbulence) [29]. In most cases, the commander and first officer agree upon who acts as PF to the destination and who does so for the flight back to the origin.

Pilots take different actions to master the tasks and challenges they encounter during a flight. Wickens [49] suggests a categorization of aviation tasks by: aviating, navigating, communicating and systems management. The most important task for flying an airplane can be considered that of aviating, which is defined as staying up in the air throughout the flight. This is followed by navigating, i.e., reaching the destination while avoiding hazardous obstacles. The tasks of communicating and systems management include the exchange with the crew within the cockpit and with the air traffic controller or other airplanes outside of the cockpit, and reading and understanding the different aircraft systems.

Fig. 3 Overview of the different flight phases and the percentage of fatal accidents that occurred during those phases along with the percentage of onboard fatalities for the time period of 2008–2017 (adapted from [8]). The blue column contains the phase separation as introduced in the section on tasks and challenges, and considered for RQ4

Moreover, these four aviation tasks need to be managed by the pilots throughout all flight phases, which include taxi, load/unload, park, tow, takeoff, initial climb, climb, cruise, descent, initial approach, final approach, and landing (see Fig. 3). For simplicity we summarize these as ground operations (including taxi, load/unload, park, tow), takeoff (including initial climb and climb), cruise, and landing (including descent, initial approach and final approach).
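The summarization of flight phases described above can be written as a simple lookup. The following Python sketch is only illustrative: the names `PHASE_GROUPS` and `summarize_phase` are hypothetical, and it assumes that the takeoff and landing phases themselves belong to the summarized groups of the same name.

```python
# Summarized phase -> detailed flight phases, following the grouping in the text.
# Assumption: "takeoff" and "landing" are members of their own groups.
PHASE_GROUPS = {
    "ground operations": ["taxi", "load/unload", "park", "tow"],
    "takeoff": ["takeoff", "initial climb", "climb"],
    "cruise": ["cruise"],
    "landing": ["descent", "initial approach", "final approach", "landing"],
}

# Inverted lookup: detailed flight phase -> summarized phase.
PHASE_TO_GROUP = {
    phase: group for group, phases in PHASE_GROUPS.items() for phase in phases
}


def summarize_phase(phase: str) -> str:
    """Return the summarized phase for a detailed flight phase name."""
    return PHASE_TO_GROUP[phase.strip().lower()]
```

A scenario reported for, e.g., the initial approach would then be counted under landing when the responses are aggregated by phase.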

2.4 Research questions

The cockpit is a special environment, for which gaze-based interactions differ from those employed in public or at home. In particular, an aircraft is a highly automated system controlled by only two persons, and incorrect actions can have fatal outcomes. To preserve situation awareness, the pilots need to perceive the elements in the environment, understand their current situation, and anticipate the state they will be in [17]. Thus, they need to process a vast amount of information. Any solution that supports this process can help increase flight safety. We believe that using eye tracking in an unobtrusive way, i.e., recording pilots’ eyes and computing their point of regard in the cockpit, to create novel gaze-based interactions constitutes such a solution.

We aim at answering the following research questions:

RQ1 Do pilots anticipate that they will benefit from gaze-based interactions?

RQ2 Where in the cockpit do pilots anticipate that they will need gaze-based interactions?

RQ3 How do pilots want to interact with the aircraft using gaze-based interactions?

RQ4 When do pilots anticipate that they will benefit from gaze-based interactions?

3 Methodology

In this section we describe our methodology. This includes an overview of the involved participants (i.e., the pilots), a description of the questionnaires that were distributed and an explanation of the procedure. The latter includes the study execution and the methods chosen for the analyses of the pilots’ feedback.

3.1 Participants

Our survey was distributed among a total of 68 pilots. All pilots are active A320 pilots of an internationally operating airline. They were recruited using the airline’s internal communication platform. As compensation, the day the pilots participated was counted as a regular working day, but they were free to spend it as they wished before and after participating in the study. Since participation was not mandatory, 20 of the 68 pilots completed our survey. One of those 20 forgot to record the participant number and was therefore only considered for the main analyses and not for the following demographic statistics.

The 19 pilots for whom the demographic information is available, had an average age of 29.6 years (SD: 5.7, MIN: 25, MAX: 45, MED: 28). Two of them were captains and 17 were first officers. Two of them were female and 17 were male. The pilots had a flight experience of 6.5 years on average (SD: 4.6, MIN: 1.5, MAX: 21, MED: 5) and an average total number of flight hours of 3047.4 (SD: 2405.6, MIN: 1200, MAX: 12100, MED: 2000). 18 of the pilots were active A320 pilots, while one was a Boeing 777 pilot, who recently changed the certification from the A320. 7 pilots were certified A320 instructors.

3.2 Questionnaires

Two questionnaires were distributed among the pilots: a standard demographics questionnaire including questions regarding their flight experience, and a novel survey designed for this study. The survey was created to answer research questions RQ2–RQ4. It introduced the study aims, explained the concept of gaze-based interactions and gave pilots an example scenario for such an interaction (described below). For each of the requested gaze-based interaction aspects, the pilots received a definition and an example as well. The survey concluded with questions to assess pilots’ subjective impression of gaze-based interactions in the cockpit, i.e., to determine whether they believe that they benefit from those and thus to answer RQ1.

Among the gaze-based interaction aspects were the name and description of the scenario, which allow us to understand the pilots’ intention. To simplify a systematic analysis and to provide the pilots with an easy-to-use template, they were asked to describe the scenarios as follows (see Fig. 4 for a screenshot):

What part of the cockpit they want to look at to initiate the gaze-based interaction. This was called the Trigger and requested in plain text.

When they wish a particular piece of information should be made available. This was called the Activator. The type of activator could be chosen from a predefined set of options:

voice command, e.g., after saying “speed”,

gesture, e.g., after showing a thumbs up,

time limit, e.g., after 3 s,

external device, e.g., touching a cuff link,

other, i.e., an activator we did not consider.

In particular, pilots were asked to provide the activator command as well, i.e., the gesture, time, device, etc. For example, “speed” for the voice command.

Which information they actually want to receive. This was called the Information and requested in plain text.

How they want the information to be provided to them. This was called the Representation. The type could be chosen from a predefined set of options:

audio, e.g., hearing “speed two hundred knots”,

adapted display, e.g., the engine/warning display (EWD) changing to a primary flight display (PFD) when looking at the system display,

tactile, e.g., wearing a belt that vibrates twice to indicate an engine failure,

external device, e.g., a smartwatch beeping twice to indicate a speed of 200 knots,

augmented reality, e.g., to see a small PFD representation when looking at the EWD,

other, i.e., a representation we did not consider.

Similar to the activator, pilots had to provide the representation details. For example, “speed two hundred knots” for the audio.

Where the information should be made available. This was called the Location and was requested in plain text.

An image of the A320 cockpit was provided as well, such that pilots could mark the position of the trigger and activator. They could also add a remark, if they believed that it was necessary to understand the scenario and their intentions.

As a reference and to support the comprehension process, an example scenario was given. This scenario involves a miniature representation of the PFD that is projected to the left of the EWD with the help of augmented reality. This is to be done whenever the pilot is looking at the EWD for more than 2 s. The motivation for this is that the PFD is no longer visible when looking at the EWD, but contains important information that needs continuous observations nonetheless. The decomposition of this scenario looks as follows and is also included in Fig. 4 (in red color):

Name Miniature PFD when looking at EWD.

Description Additional information when looking at EWD.

Trigger EWD.

Activator Time limit: 2 s after looking at trigger.

Information Miniature PFD.

Representation Augmented reality.

Location Hovering left of the EWD.

Remark None.
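For a systematic analysis, each survey response can be stored as one structured record that mirrors the template above. The following Python sketch is an illustration under stated assumptions and not the tooling used in this study: the class and field names are hypothetical, and the example scenario does not specify representation details, so that field is left empty.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ActivatorType(Enum):
    """Predefined activator options offered in the survey."""
    VOICE_COMMAND = "voice command"
    GESTURE = "gesture"
    TIME_LIMIT = "time limit"
    EXTERNAL_DEVICE = "external device"
    OTHER = "other"


class RepresentationType(Enum):
    """Predefined representation options offered in the survey."""
    AUDIO = "audio"
    ADAPTED_DISPLAY = "adapted display"
    TACTILE = "tactile"
    EXTERNAL_DEVICE = "external device"
    AUGMENTED_REALITY = "augmented reality"
    OTHER = "other"


@dataclass(frozen=True)
class Scenario:
    """One gaze-based interaction scenario as described by a pilot."""
    name: str
    description: str
    trigger: str                      # plain text: where the pilot looks
    activator_type: ActivatorType
    activator_details: str            # e.g., the spoken word or the time span
    information: str                  # plain text: what is requested
    representation_type: RepresentationType
    location: str                     # plain text: where it is shown
    representation_details: str = ""  # e.g., the spoken phrase for audio
    remark: Optional[str] = None


# The example scenario given to the pilots, encoded with this record type.
EXAMPLE = Scenario(
    name="Miniature PFD when looking at EWD",
    description="Additional information when looking at EWD",
    trigger="EWD",
    activator_type=ActivatorType.TIME_LIMIT,
    activator_details="2 s after looking at trigger",
    information="Miniature PFD",
    representation_type=RepresentationType.AUGMENTED_REALITY,
    location="Hovering left of the EWD",
)
```

Keeping the two predefined option sets as enumerations while leaving trigger, information and location as free text reflects the mix of closed and open questions in the survey.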

As the last part of the questionnaire, pilots responded to three questions to determine their subjective impression of gaze-based interactions in the cockpit (refer to RQ1). The first question asked them to rate whether they believe that they can benefit from the gaze-based interactions they provided. This was done on a 7-point Likert scale from “totally disagree” to “totally agree”. The second and third questions asked the pilots to list the potential advantages and disadvantages they see for gaze-based interactions in the cockpit. Note that we chose this order of questioning, i.e., from explicit scenarios to the more general question concerning gaze-based interactions, to ensure that pilots had already given some thought to the topic.

3.3 Procedure

We differentiate between the study execution and the evaluation of the questionnaires. The former describes the preparation and execution of the study, while the latter explains how particularly the novel survey questionnaire was evaluated.

3.3.1 Study execution

All of the 20 pilots who participated in our study had previous experience with eye tracking technology, either as PF or as instructor. That is, the PFs had flown scenarios in an A320 full flight simulator and had their gaze recorded using the Smart Eye Pro remote eye tracking system [45] (see Fig. 2), while the instructors accompanied them. In particular, all pilots received a detailed explanation of what eye tracking is and how it works. More precisely, they knew that cameras record a pilot’s eyes and compute her point of regard and that this information is used to know where she spent her visual attention at what point in time. The pilots were aware of the fact that the technology can sometimes suffer from imprecision and/or inaccuracy and that it may work better for some pilots than for others. Moreover, they had an understanding of the eye tracking hardware setup, which included information on how such a system can be integrated into a full flight simulator, how it is tested with regard to the precision and accuracy of the eye movements, and a rudimentary explanation of how technical and algorithmic countermeasures can be introduced to improve those. In summary, all pilots had a theoretical understanding of eye tracking and its limitations, and had gathered some hands-on experience with it. None of the pilots had first-hand experience with gaze-based interactions.

Pilots were given the novel survey questionnaire, the concept of gaze-based interactions was introduced, and an example provided. Then, it was explained that the aim of the underlying study is to explore how gaze-based interactions in the cockpit can be used to support pilots with their everyday tasks. Pilots were asked to identify and describe scenarios in the cockpit, for which they anticipated that gaze-based interactions would be useful. To ensure that pilots’ creativity was not bound by technical limitations and to emphasize the focus on future cockpits, it was explained that the premise of the study is that there are no technological limitations with regard to the available interactions. More precisely, this referred to existing technologies, such as eye tracking, as well as voice recognition, gesture recognition, tactile displays and augmented reality. Pilots should assume that these are all available in the cockpit and functioning. The basic concept of gaze-based interactions was introduced by asking them to think of moments in their career, in which they looked at a position in the cockpit (e.g., a knob, lever, instrument, etc.) and thought it would be useful to receive some information. Pilots could fill in the survey either in the facilities of an aviation training company or at home.

3.3.2 Questionnaire evaluation

Due to the complex environment of a cockpit (and full flight simulator) and its limited availability, as well as the limited resources (pilots) in the field of aviation, extensive experiments are difficult to conduct. To nonetheless uncover research potential for gaze-based interactions in the cockpit, qualitative analyses such as the one applied here are well suited. This study employs both the directed and summative content analysis approaches [23] to answer the research questions. The following sections are separated by those research questions and describe which approaches are utilized to answer them and what aspects of the data are used.

RQ1—Summative content analysis To answer RQ1, i.e., if pilots anticipate that they will benefit from gaze-based interactions, we do a summative content analysis. That is, we analyzed the survey with regard to the advantages and disadvantages the pilots identified.

The advantages reflect pilots’ visions and expectations for gaze-based interactions, as well as their acceptance of this novel technology. The disadvantages emphasize the possible pitfalls and limitations they see for such a system.

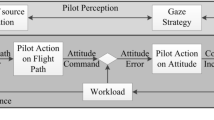

RQ2—Directed and summative content analysis For answering RQ2, i.e., where in the cockpit do pilots anticipate that they will need gaze-based interactions, we first employ the directed content analysis approach. We utilize existing research and knowledge on the interactions in the cockpit and human–computer interaction technologies to analyze the survey questionnaire. More precisely, we analyze the survey by the key components of any human–computer interaction, i.e., the action (of the pilot) and reaction (by the system) [33]. Figure 5 shows an overview of these aspects under which the survey data are analyzed (some are explained in the next section). For explicit gaze-based interactions, the action needs a point of regard, i.e., the position of the trigger, and the reaction needs a location where the requested information is shown, i.e., the position of the information. Both at the trigger and information positions, pilots perceive some content. The trigger helps to identify cockpit areas, which are critical with regard to the existing interactions, while the information pilots request and the location they choose for it, reflect their needs.

The positions are represented by the underlying AOIs. The content of the trigger is either derived from the content of the underlying AOI or (if possible) from the pilots’ text, while the content of the information is what the pilots explicitly requested. Neither content is represented by its underlying AOI (e.g., main panel (MPn) instead of ‘Flaps setting’), to emphasize the pilots’ information needs.

To some extent we extend the directed content analysis into the summative content analysis, as we use the positions of the trigger and the information, as well as their respective contents, for further in-depth analyses. The resulting measures include the distance between the trigger and the information positions, the logic relation between the current and requested content, and the accessibility of the requested content. These aspects allow us to refine our assessments of the pilots’ intentions and the potential improvements of the interactions. In particular, all of these aspects provide indications of the needed effort. For example, a short distance indicates that the pilot wishes to quickly and frequently access some content, the complexity of the logic relation reflects the effort of the interaction, and the accessibility of the requested content shows how many interaction steps can be saved. Moreover, any combination of these aspects provides an outlook on the potential improvements.

The distance is determined from the position of the trigger \(p_t\) and that of the requested content \(p_i\), and only for ‘augmented reality’ and ‘adapted display’ representations. In some cases, details about the position are lost with the assignment to an AOI, such as for looking out the window (i.e., the ‘OUT’ position), which is also comparably large. In those cases, we consider the pilots’ original statements in the assessment. The distance between \(p_t\) and \(p_i\) is separated into four topological categories. The category is ‘overlay’ if \(p_i\) partially or completely overlaps \(p_t\), with or without opacity [e.g., the primary flight display (PFD) shows taxi information after looking at the vertical speed (V/S)], and \(p_i\) is not bigger than \(p_t\). The category ‘near peripheral vision’ is assigned if \(p_i\) overlaps \(p_t\) but is bigger than it, or if the area of \(p_i\) touches that of \(p_t\) (e.g., showing the ground speed as augmented reality next to the V/S). The category ‘distant peripheral vision’ implies that \(p_i\) is not next to \(p_t\), but visible in the pilot’s peripheral vision (e.g., changes to the HUD when looking straight outside), and ‘outside peripheral vision’ means that the pilot needs to move her head to see \(p_i\) (e.g., changes to the HUD when looking at the PFD).
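The four topological categories can be read as a small decision procedure. The following Python sketch is only an illustration of that reading, not the authors' tooling: the `Placement` fields and the function name are hypothetical, the spatial relations are assumed to have been judged beforehand (e.g., from the pilots' descriptions), and the sketch assumes that an overlapping \(p_i\) that is bigger than \(p_t\) falls into ‘near peripheral vision’.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    """Hypothetical summary of how p_i (information) relates to p_t (trigger)."""
    overlaps: bool              # p_i partially or completely overlaps p_t
    touches: bool               # the area of p_i touches that of p_t
    info_larger: bool           # p_i is bigger than p_t
    in_peripheral_vision: bool  # p_i is visible without moving the head


def distance_category(p: Placement) -> str:
    """Assign one of the four topological distance categories."""
    if p.overlaps and not p.info_larger:
        return "overlay"
    if p.overlaps or p.touches:
        # Assumption: an overlapping but larger p_i counts as 'near'.
        return "near peripheral vision"
    if p.in_peripheral_vision:
        return "distant peripheral vision"
    return "outside peripheral vision"


# Ground speed shown as augmented reality next to the V/S indicator.
next_to_vs = Placement(overlaps=False, touches=True,
                       info_larger=False, in_peripheral_vision=True)
print(distance_category(next_to_vs))
```

Scenarios that fit more than one category (for example, OUT as trigger and the HUD as location) would simply be classified once per plausible placement.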

The logic relation is determined by comparing the current content \(c_t\) and the requested content \(c_i\) before categorizing them. The categories are ‘combine’, ‘surveil’, ‘warn’ and ‘manipulate’, and are assigned depending on what the pilots intend to do with \(c_i\). Combine is assigned whenever a pilot wants to combine \(c_i\) with \(c_t\) and use that to infer her current situation, e.g., receiving information about the wind and combining it with the current flight parameters to anticipate the aircraft’s flight path. Surveil is assigned if a pilot wants to continue monitoring a particular piece of information while being concerned with some other part of the cockpit, e.g., seeing the PFD while looking at the EWD (described in Sect. 3.2). The warn category is used whenever a pilot needs to be notified that she is not paying attention to some information that might be important for the given scenario (‘warn-attention’) or that she is doing something wrong (‘warn-mistake’). Finally, the manipulate category indicates that the pilot intends to manipulate the aircraft using her gaze in combination with an audio command.

The accessibility of the content \(c_i\) is categorized, too, i.e., whether the content is visible somewhere else (called ‘available’), visible after an interaction (called ‘accessible’), or not accessible at all, i.e., a novel piece of information which is not available in the cockpit today (called ‘new’). If \(c_i\) is set to ‘N/A’, no accessibility can be determined and the scenario is assigned to the category ‘N/A’.
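As a minimal sketch, the accessibility rule can be written as a short function. The function and parameter names are hypothetical, and the two boolean judgments are assumed to come from a domain expert's knowledge of the current cockpit rather than from any automatic procedure.

```python
def accessibility_category(requested_content: str,
                           visible_elsewhere: bool,
                           visible_after_interaction: bool) -> str:
    """Categorize the accessibility of the requested content c_i."""
    if requested_content == "N/A":
        return "N/A"          # no requested content was given
    if visible_elsewhere:
        return "available"    # already shown somewhere in the cockpit
    if visible_after_interaction:
        return "accessible"   # reachable through an interaction
    return "new"              # not available in the cockpit today


# Altitude and vertical speed are always visible on the primary flight display.
print(accessibility_category("Altitude, Vertical Speed", True, False))
```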

RQ3—Directed content analysis To answer RQ3, i.e., how pilots want to interact with the aircraft using gaze-based interactions, we do a directed content analysis. That is, we analyze the survey by the activators and representations the pilots provided to us. The activator reflects a pilot’s input, and the representation her favored output format.

The activator enables a future interaction system to avoid unintentional reactions, while the representation ensures a pilot-intended response by such a system. The unintentional initiation of an interaction using gaze is known as the Midas touch problem [26], named after King Midas, who turned everything into gold as soon as he touched it. The graphical representation is also closely connected to the visual attention distribution in today’s cockpits [4].

RQ4—Summative content analysis Answering RQ4, i.e., when pilots anticipate they will benefit from gaze-based interactions, we conduct a summative content analysis, which includes the analysis of the data according to the tasks and challenges introduced in the corresponding section. More precisely, we analyzed the survey with regard to the number of crew members involved, the flight task at hand, the flight phase and the flight condition.

Crew resource management is one of the important tasks in the cockpit [29], i.e., the coordination between the pilots and with the cabin crew. Therefore, the scenarios are analyzed with regard to the number of crew members involved. The focus is on the number of crew members, for which the eyes would need to be simultaneously tracked and validated.

The pilots’ tasks in the cockpit are categorized as suggested by Wickens [49], i.e., aviation, navigation, communication and systems management. Based on this assignment it is possible to infer the motivations and challenges of each scenario. In combination with the gaze-based interaction needs, potential deficiencies in today’s cockpits can be identified.

The flight phase at hand influences a pilot’s tasks and challenges. Refer to Fig. 3 for an overview of the flight phases under analysis (marked in blue). The ‘all’ category represents scenarios that cannot be uniquely assigned to a specific flight phase. An example of such a scenario is “Pilot sees different augmented information, such as city names when looking outside”. A pilot can always look out the window, and the information the pilot listed can be shown both while on the ground and in the air. The separation into flight phases allows evaluating possible correlations with the number of fatal accidents. Furthermore, it is possible to identify deficiencies in today’s cockpits concerning human–computer interaction.

The flight condition, i.e., whether a scenario is abnormal or normal, is an indicator of the imminence of the situation. Abnormal conditions are those where it is no longer possible to continue with normal procedures, but no lives are in imminent danger. Such scenarios include a fire on board, fuel loss, etc.

3.3.3 Expert involvement

According to Hsieh and Shannon [23], the validity of the results can be ensured by using an auditor or expert to validate both the survey (directed content analysis) and the interpretations (summative content analysis). In this study, our domain expert took this role. All of the questionnaires and the later interpretations thereof were discussed and developed in collaboration with the domain expert. That is, the domain expert reviewed the questionnaire for plausibility and was involved in the design iterations. Furthermore, the domain expert reviewed the scenario assessments conducted by the authors. Our domain expert is both an experienced A320 pilot (with 2000 flight hours during 5 years of flying) and a certified A320 instructor.

4 Results

20 pilots provided 39 scenarios for which they anticipated they can benefit from introducing gaze-based interactions. On average each pilot provided 1.95 scenarios (SD: 0.67). One pilot did not provide a gaze-based interaction scenario, but a general interaction scenario, which is excluded from our analysis. Moreover, three pilots forgot to fill in the part of the questionnaire concerned with their subjective impression and are not part of the corresponding evaluation. In summary, we had 38 scenarios from 20 pilots and the subjective impressions of 17 pilots.

Before the results can be reported, some data corrections were necessary. To do so, all available fields were consulted and if possible the correct information substituted. All corrections were discussed with the domain expert. In the following, the details on the corrections are given for each aspect.

Note that the total number of associations can be larger than the number of scenarios, because in some cases a scenario is associated with multiple categories of an aspect. For example, consider a scenario describing the trigger to be on the multifunction control display unit (MCDU) and the location as ‘overlaying or beneath the MCDU’. This scenario is counted twice with the trigger position MCDU, once with the MCDU and once with the larger center pedestal (CP) as the position of the requested information. Multiple categories can also apply to a single aspect. For example, a scenario describing the outside view (OUT) as trigger and the HUD as location can be categorized as either near or distant peripheral vision, depending on where the pilot is looking. In this case we associate the scenario with both. Moreover, the number of associations can differ between aspects. For example, a scenario describing the trigger to be OUT and the information to be ‘city names, airport names, etc.’ is only associated with the trigger position OUT, but with different contents (‘city names’, ‘airport names’, etc.). This is, however, not the case for aspects that are compared to each other, such as the positions and contents of the triggers and information. In some cases, associations were therefore added to ensure an equal number for two aspects and thus to allow a comparison.
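The counting rule for compared aspects can be illustrated with a short Python sketch. It is only one possible reading: the helper name is hypothetical, and repeating the last entry of the shorter list is an assumption about how the equal number of associations was reached.

```python
from collections import Counter


def paired_associations(triggers: list[str], infos: list[str]) -> list[tuple[str, str]]:
    """Pair trigger and information positions of one scenario.

    Assumption: the shorter (non-empty) list is padded by repeating its
    last entry so that both aspects end up with the same number of
    associations.
    """
    n = max(len(triggers), len(infos))
    padded_triggers = triggers + [triggers[-1]] * (n - len(triggers))
    padded_infos = infos + [infos[-1]] * (n - len(infos))
    return list(zip(padded_triggers, padded_infos))


# Trigger on the MCDU, information 'overlaying or beneath the MCDU'.
pairs = paired_associations(["MCDU"], ["MCDU", "CP"])
trigger_counts = Counter(trigger for trigger, _ in pairs)
print(pairs)
print(trigger_counts)
```

With this rule, the single MCDU scenario contributes two trigger associations, which is why the totals per aspect can exceed the number of scenarios.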

To explain the following analyses, a reference scenario provided to us by a pilot is introduced. The results are reported in conformance to the previously introduced analytical procedure, i.e., with regard to the research questions. For each research question, we introduce the aspects that are analyzed to answer it. Then, the corrections (if any existed) are presented, because their outcome influences the results, which are given afterwards. The results include the outcome of the analysis concerning the aspects at hand for the reference example. This is concluded by the findings that can be derived from the results in light of the research question.

Based on these results, we identified and summarized the most promising gaze-based interactions in Table 3, to be included in future research.

4.1 Reference example

The scenario given in Table 2, provided by one pilot, is used as a reference to explain the different analyses. It describes a typical scenario during takeoff and landing. The pilot flying makes the callout “Gear Up” or “Gear Down” and expects the pilot monitoring to act accordingly and pull the gear lever up or down. The gear lever is next to the system display; thus, when the pilot monitoring looks at it, her attention is moved away from the primary flight display. Therefore, the pilot describing this scenario suggested showing the most relevant flight indicators next to the lever using augmented reality. A short description of this scenario would be: “Pilot sees augmented flight information when looking at gear lever during takeoff and landing”. For readability, scenarios are reported only by their short description.

4.2 RQ1. Do pilots anticipate that they will benefit from gaze-based interactions?

For RQ1, the pilots’ answers on the advantages and disadvantages are summarized into appropriate categories.

4.2.1 Results

The analysis of how pilots perceive gaze-based interactions, and whether they believe that they are of use to them in the scenarios they provided, indicates their acceptance of this type of novel technology. On a 7-point Likert scale (with 7 representing full agreement), pilots rated the statement that they would benefit from gaze-based interactions with 5.4 (SD: 1.4) (not depicted in a separate figure). Figure 6 shows the advantages (a) and disadvantages (b) of gaze-based interactions in the cockpit that pilots identified. The plain text descriptions were summarized and categorized by their key statements to eliminate ambiguities. The categorization was validated by our domain expert. Note that pilots could state more than one advantage or disadvantage. Pilots provided an average of 1.8 (SD: 0.6) advantages and 2.1 (SD: 1) disadvantages.

4.2.2 Findings

To assess pilots’ attitude towards gaze-based interactions in the cockpit, we do not only consider the feedback from the (Likert-scale based) question, but analyze the advantages and disadvantages they provided to us.

These findings answer pilots’ thoughts on whether they anticipate that they will benefit from gaze-based interactions:

- F1.1:

32.3% of the pilots explained that they believe they are able to access information faster assuming they had the novel interactions available. Looking at the derived measures and comparing the distances for the old and new cockpit setup, this notion can be confirmed. As a consequence, pilots will be able to perceive the information faster. Given that they comprehend the situation faster as well, it can be assumed that the situation awareness will increase, as 12.9% of the pilots state.

- F1.2:

19.4% anticipate that their system overview will increase, and 9.7% that they will be supported during abnormal scenarios, but that depends on the actual realization of the interactions.

- F1.3:

43.8% of the pilots are concerned that the new content leads to an information overload in the cockpit. This is a known design challenge for cockpits [50] and since pilots are highly trained professionals who are aware of the prevailing issues in today’s cockpits, this is not surprising. The pilots’ caution can also be related to Airbus’ philosophy of counseling pilots more intensively than other aircraft manufacturers (trust the automation vs. trust the pilot) [37]. This results in a comparably more salient feedback from the aircraft, which pilots do not want to extend. Nonetheless, information overload is a valid concern, which is considered in this paper by including questions in the survey asking about what information must be provided how and when.

- F1.4:

15.6% of the pilots mention possible technical restrictions as a disadvantage. While being a valid concern today, this aspect can be neglected when considering future systems and practical applications, where the technology has progressed and been appropriately tested before it is integrated into a cockpit.

- F1.5:

9.4% point out the individuality of pilots, i.e., that each pilot might need different gaze-based interactions, maybe even with a different configuration. This was also mentioned during the informal discussions with the pilots. The study considers this aspect by not going into detail about the exact parametric values, but concentrating on the overall considerations needed to employ gaze-based interactions in the cockpit. Moreover, we generally do not discuss how the interactions should be realized in detail, but simply identify potential application areas within the cockpit. The remaining concerns can be answered similarly to the preceding ones.

The average response on the benefits of gaze-based interactions in the cockpit is positive, and the absolute numbers of advantages and disadvantages, in total and per pilot, are balanced. Weighing the most frequently mentioned disadvantage and advantage against each other, one might argue that the risk of information overload outweighs the possibility of faster visual access to information, in particular considering that faster access does not necessarily mean that the perception speed increases as well. However, the actual answer is: it depends on the interaction and its realization. Novel interactions must follow existing guidelines and be designed to fit the respective scenario, e.g., by taking the context into account and using an appropriate representation and activator. For the resulting interactions, the perceived cognitive workload can be measured, e.g., by utilizing questionnaires such as the NASA TLX [21].

4.3 RQ2. Where in the cockpit do pilots anticipate that they will need gaze-based interactions?

To answer RQ2, the trigger and information fields and the distance and accessibility categories, which were introduced in Sect. 3.3.2 (see also Fig. 5) are analyzed.

4.3.1 Corrections

Although it was emphasized that the triggers are required and have to be at a position pilots look at before receiving information, some pilots described scenarios that do not include them or described them incorrectly. These are assigned to the category ‘N/A’. There are a total of 9 scenarios that do not include gaze-based triggers. Two scenarios use voice as a trigger instead. One trigger is negated, i.e., it is bound to when pilots do not look at a particular position, and six scenarios include aircraft-event-based triggers. The aircraft-event-based triggers are: turning a knob; an engine failure; the 50ft callout of the aircraft during landing; exceeding a speed limit; and when the thrust lever is set to ‘take off/go-around thrust’. Consequently, the current content, which is based on the trigger position, could not be determined for any of these scenarios and was assigned to ‘N/A’, too.

The position of the information cannot be identified in 5 scenarios, because pilots chose ‘audio’ (four times) and ‘tactile’ (once) as the representation and therefore did not define a location. The ‘Pilot’ AOI position refers to a scenario that requested the information to be presented in the pilot’s field of view. This can however be anywhere in the cockpit and therefore, it was decided to create that pseudo-AOI, binding the information to the pilot. As with the triggers, the content of these positions was assigned to the category ‘N/A’.

In 12 cases the distance cannot be estimated, because either the trigger position or the information position is missing. For 6 scenarios no accessibility category can be assigned, because the requested content was not given. In 2 scenarios, both the current and requested content were ‘N/A’, so that no logic relation category could be determined.

4.3.2 Results

The charts of Fig. 7 show the positions pilots prefer for both the trigger (a) and the information (b) and the assigned distance categories between them (c). The charts (a) and (b) of Fig. 8 show the content that pilots see when looking at the trigger positions in the current A320 cockpit and the content that pilots requested. Chart (c) depicts the logic relation between the contents, while (d) represents the accessibility of the requested content.

For the reference example in Table 2, both the trigger and the information position are on the main panel, while the distance for the reference example is ‘near peripheral vision’, because the information is shown next to the lever. The current content is ‘Gear setting’ and ‘Altitude, Vertical Speed’ are the requested contents. The logic relation is ‘combine’, because the pilot needs the information to decide on the interaction with the lever. The accessibility of the requested content is ‘available’, since both the altitude and vertical speed are always visible on the primary flight display.

4.3.3 Findings

Findings related to RQ2 are primarily derived from the positions of the triggers, because they describe where the pilots’ attention is, when they request assistance. The position of the requested information helps in categorizing the distances and thus to draw conclusions on pilots’ motivation for the interaction, i.e., to answer why pilots need assistance. For example, looking at the PFD, but requesting the information to be presented outside the peripheral vision, indicates that a pilot does not need assistance with the current, but the next task. In particular, this influences the answer on where pilots wish to receive support, as that position is no longer where the pilots currently look, but elsewhere. The current and requested content, as well as their logic relation and accessibility add to those insights on the pilots’ motivation.

Ignoring the scenarios categorized as ‘N/A’, we found the following concerning where pilots anticipate that they will need gaze-based interactions, i.e., they want to:

- F2.1:

access the key flight parameters, i.e., the primary flight display (PFD) and navigation display (ND), for areas that are away from the related displays. 87.5% of the triggers are defined on unrelated areas. More precisely, 40.0% of the triggers are defined on the outside view (OUT), 12.5% on the electronic centralized aircraft monitors, 12.5% on the glareshield (GSc), 10.0% on the multifunction control display units (MCDUs), 7.5% on the center pedestal (CP), and 2.5% each on the electronic flight bag and the main panel (MPn). This is supported by the fact that 77.1% of the previously mentioned areas were also categorized with a distance of ‘overlay’ or ‘near peripheral vision’. Additionally, 60.0% of the requested information is related to the existing key flight parameters, thus supporting our interpretation.

- F2.2:

enhance the OUT area in the cockpit with novel spatial and flight route related data. In the current cockpit, this area does not contain any flight information, especially when flying under instrument meteorological conditions. Evaluating the position of the requested content, we find that in 38.6% of the cases, the position of the requested content is OUT. In particular, pilots do not only request available or accessible information to be depicted (e.g., to see the flight parameters), but out of the 26.0% of the requested content that can be considered novel, 76.9% are related to OUT.

- F2.3:

enhance the PFD area (given as trigger in 12.5% of the cases). More precisely, 60% of the requested information for the PFD area (7.5% of the PFD-related triggers) aim at doing so, i.e., to create a view for the aircraft’s position in the airport, highlight important parameters in an engine failure and show the ground speed value next to the vertical speed.

81.1% of the distances are categorized as ‘overlay’ or ‘near peripheral vision’ and there are no big changes between the distributions of the positions of the triggers and information (see Fig. 7), which supports our focus on the trigger position for answering RQ2. The three most salient changes between the positions are with the GSc, MCDU and HUD. The former two are easily explained, because pilots requested the information to be shown on areas next to those, i.e., OUT/MPn for the GSc and CP for the MCDU. The HUD is given as the position for the information in 11.4% of the cases. However, it is safe to assume that this choice is based on the same reasoning as for the OUT area, i.e., to enhance the space in the cockpit that currently does not contain any information (as most aircraft of the airline do not have a HUD). Stating HUD instead of OUT can be traced back to the fact that pilots have a stronger awareness of that technology.
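The trigger shares reported in F2.1 and F2.3 can be cross-checked with a few lines of Python. The sketch assumes that the electronic flight bag (abbreviated here as EFB) and the main panel account for 2.5% each; the dictionary keys are shorthand labels chosen for this illustration.

```python
import math

# Trigger shares on areas unrelated to the PFD/ND, in percent (from F2.1).
unrelated_trigger_shares = {
    "OUT": 40.0,
    "ECAM": 12.5,
    "GSc": 12.5,
    "MCDU": 10.0,
    "CP": 7.5,
    "EFB": 2.5,   # assumption: 2.5% each for EFB and MPn
    "MPn": 2.5,
}
pfd_trigger_share = 12.5  # from F2.3

unrelated_total = sum(unrelated_trigger_shares.values())
overall_total = unrelated_total + pfd_trigger_share

print(f"unrelated areas: {unrelated_total:.1f}%")
print(f"including PFD:   {overall_total:.1f}%")
```

Under that assumption the individual shares add up to the reported 87.5%, and together with the PFD triggers they cover all trigger associations.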

4.4 RQ3. How do pilots want to interact with the aircraft using gaze-based interactions?

The activator and representation fields are analyzed for RQ3.

4.4.1 Corrections

Pilots described several scenarios with activators, which we summarize as ‘aircraft events’, i.e., the interaction is initiated based on the aircraft’s state (change). These scenarios include: turning a knob; an engine failure; reaching a particular speed; an interaction with the flight management guidance computer; an interaction with the navigation system; reaching a certain distance from an airport; changing the thrust. For some scenarios pilots chose the category ‘other’, but all of these can be assigned to one of the existing categories or ‘aircraft event’.

For the representations, the associations to the ‘other’ category are all corrected to one of the existing categories, too. A few scenarios are corrected from ‘adapted display’ to ‘augmented reality’, because the pilots clearly described an augmented reality setup.

4.4.2 Results

Figure 9a provides an overview of the activators the pilots chose. As external devices, pilots mentioned a push button, a button on augmented reality glasses, and touching a lever in the cockpit. As gestures, the pilots mentioned blinking with the eyes; in one scenario the gesture was not specified any further. Figure 9b shows an overview of the preferred representations. Warnings were to be represented with audio signals or tactile feedback, where the tactile feedback was requested to be delivered via a belt.

The reference example in Table 2 is categorized as ‘voice’ for the activator and ‘augmented reality’ as representation.

4.4.3 Findings

As described earlier, the interaction is composed of the pilot’s action and the system’s reaction. Thus, the findings related to RQ3 are derived from the activator, which represents the pilot’s action and the representation, which is the system’s reaction. In particular, the activator poses as a countermeasure to the Midas touch problem [26], i.e., the unintentional initiation of an interaction.

The following findings answer how pilots want to interact with the aircraft using gaze-based interactions, i.e., they:

- F3.1:

prefer that the interactions are initiated ‘automatically’, i.e., based on a time threshold (37.5%) or aircraft event (22.9%). These types of interactions are cognitively less demanding compared to those requiring an external device or gesture, which is probably also the pilots’ motivation for choosing those categories.

- F3.2:

want to interact with the system using their voice (22.9%), which is a common approach pilots are familiar with when they for example interact with the assistant systems on their smartphone. Moreover, pilots are continuously using audio as a communication between each other and with air traffic control.

- F3.3:

prefer to utilize augmented reality (54.2%) and adapted display (33.3%) technology as the modes of representation. This can have a number of reasons. Augmented reality and adapted displays can be used analogously, by utilizing the possibility to completely overlay an area within the pilot’s field of view. In informal discussions, the pilots emphasized that most of them have an open mind with regard to novel technologies such as augmented reality. Furthermore, both augmented reality and adapted displays can represent a variety of information, thus enabling pilots to think beyond the limitations of the displays in current cockpits. In particular, augmented reality in combination with head-worn devices does not have any spatial limitation on where the information can be displayed, e.g., one can show the weather information on an empty area in the cockpit. To some extent that also holds true for adapted displays, e.g., being able to adapt the engine/warning display to mirror the content of the primary flight display. Moreover, augmented reality can be used without interfering with the existing systems in the cockpit, i.e., it can extend the existing information without having to completely re-design the existing cockpit. Finally, augmented reality is explained thoroughly in the questionnaire to ensure that pilots are aware of its capabilities, and the reference example given in the survey used augmented reality as the representation. The latter two influences are, however, unavoidable if one wants to ensure that pilots understand the capabilities of augmented reality, and because an example is always needed.
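The time-threshold activator from F3.1 is commonly realized as a dwell-time trigger. The following Python sketch shows the idea under stated assumptions: the class name, the AOI labels, and the 0.5 s threshold are hypothetical, gaze samples are assumed to arrive already mapped to AOIs, and suitable thresholds for a cockpit would have to be determined empirically.

```python
from typing import Optional


class DwellActivator:
    """Fire once when the gaze rests on the same AOI for a minimum time."""

    def __init__(self, threshold_s: float) -> None:
        self.threshold_s = threshold_s
        self._aoi: Optional[str] = None
        self._since = 0.0
        self._fired = False

    def update(self, t: float, aoi: Optional[str]) -> Optional[str]:
        """Process one gaze sample; return the AOI if an activation occurs."""
        if aoi != self._aoi:
            # Gaze moved to another AOI (or off all AOIs): restart the timer.
            self._aoi, self._since, self._fired = aoi, t, False
            return None
        if aoi is not None and not self._fired and t - self._since >= self.threshold_s:
            self._fired = True  # avoid re-triggering while the gaze stays put
            return aoi
        return None


activator = DwellActivator(threshold_s=0.5)  # hypothetical threshold
samples = [(0.0, "gear lever"), (0.3, "gear lever"), (0.6, "gear lever"),
           (0.9, "gear lever"), (1.0, "PFD")]
events = [activator.update(t, aoi) for t, aoi in samples]
print(events)
```

Short glances never reach the threshold and therefore do not activate anything, which is how a dwell time counteracts the Midas touch problem; the price is a delay before every intended activation.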

4.5 RQ4. When do pilots anticipate that they will benefit from gaze-based interactions?

To answer RQ4, the scenarios are categorized by the number of crew members, the flight task, the flight phase and the flight condition.

4.5.1 Results

Figure 10a shows the number of crew members involved in the given scenarios. However, no scenario involves the cabin crew and only two scenarios describe tasks that necessitate tracking both pilots’ eyes, while the remaining scenarios involve only one pilot. Chart (b) reflects the flight task associated with the scenarios and chart (c) the flight phase at hand. Chart (d) represents the flight condition. Only one scenario is categorized as abnormal, while the remaining scenarios constitute normal conditions.

The reference example in Table 2 involves one pilot, because even though the pilot flying (PF) is commanding the pilot monitoring (PM), the gaze-based interaction is only concerned with the PM. The task is ‘aviation’, because the PM, who is considered here, is manipulating the aircraft’s gear setting with the aim of changing the aerodynamic drag. The flight phase was associated with ‘takeoff’ and ‘landing’, because gear interactions take place in those phases. The flight condition is ‘normal’.

4.5.2 Findings

To identify the states for which pilots anticipate that they will need gaze-based interactions, we analyzed the survey questionnaire with regard to the tasks and challenges they face every day. That is, we look at the crew constellation, the flight condition, task and phase.

The following findings answer when pilots anticipate that they will need gaze-based interactions, i.e., they wish:

- F4.1:

to receive support during the aviation task in 70.7% of the cases, which is not surprising and reflects the common ordering of the tasks’ importance.

- F4.2:

to continuously utilize gaze-based interactions, because in 52.4% of the cases the flight phase was associated with ‘all’. One of the key contributors to this is most likely the finding from RQ2 that pilots wish to keep the key flight parameters within sight while they are concerned with areas in the cockpit farther away from the primary flight display (PFD) or navigation display (ND). Moreover, pilots primarily wish to surveil and combine the requested information (76.8%), which indicates that even though they wish to see the information, they will not necessarily actively use it throughout the flight.

- F4.3:

for support during the landing phase (26.2%), which is presumably due to the pilots’ awareness of the numbers of accidents per flight phase presented in Fig. 3.

Only one pilot describes scenarios involving both pilots. This might be the result of the existing training and the clear structures in the cockpit when it comes to crew resource management, which do not necessitate changes, but also simply because the pilots did not consider a setup involving the tracking of both pilots or even the cabin crew. Although 9.7% of the pilots stated they believed gaze-based interactions could support them in abnormal scenarios (see finding F1.2), only one pilot described an abnormal scenario. The most probable explanation is that, even though pilots are aware of the difficulties, they could not think of an explicit scenario to report.

5 Discussion

Research question RQ1, i.e., whether pilots anticipate that they will benefit from gaze-based interactions, resulted in a balanced outcome without significant differences between the perceived advantages and disadvantages. The average number of disadvantages (i.e., 2.1) given per pilot was higher than that of the advantages (i.e., 1.8), but a Spearman rank-order correlation revealed no significant differences. Nonetheless, finding F1.3 reveals that the concern of having too much information and consequently an increased mental workload in the cockpit is strong. To avoid such effects, key factors in the representation of information for novel gaze-based interactions are the considerations from information processing [48] and the inclusion of existing insights from avionics display design [10]. Both Imbert et al. [24] and Peysakhovich et al. [39] extensively discuss the design requirements, pitfalls and processes associated with the integration of eye tracking technology into cockpits and should be considered to avoid the technical restrictions addressed in finding F1.4. Moreover, when utilizing head-mounted devices for augmented reality in the cockpit, previous insights by Arthur III et al. [5] and Foyle et al. [18] should be considered. The former provides a good overview of NASA’s extensive research on head-worn devices, their solutions, and challenges. The latter can serve as a guideline not only for augmented taxiing solutions, but also regarding the interplay of augmentation and situation awareness.

Research question RQ2, i.e., where in the cockpit pilots anticipate that they will need gaze-based interactions, was answered by different findings. The pilots’ wish to access the key flight parameters when their attention is away from the corresponding displays (finding F2.1) is simply explained by the fact that these flight parameters are the pilots’ main point of reference for flying the aircraft and thus for ensuring safe travel. Having these parameters within sight throughout all interactions with the cockpit aims at providing the pilots with an additional level of safety, so that they do not miss anything essential while being occupied with other tasks. On the other hand, these parameters require a certain amount of the pilots’ attention, which raises the question of whether the benefits outweigh the potentially added cognitive load. Answering this question is beyond the scope of this work and needs to be explored in further detail in a separate study.

The fact that pilots want to enhance the outside view (OUT) (finding F2.2), which currently does not contain any auxiliary information, is not surprising, especially considering the development of the HUD, which is also placed in front of the outside view, and the ongoing research to develop head-worn devices that enable an augmented reality enhanced view out the window [5].

The idea to enhance the PFD’s functionality (finding F2.3) is presumably the most critical, because the PFD is the most important display in the cockpit and is highly refined. There is ongoing research concerning visualizations for taxiing, but including the ground speed or highlighting some parameters on the PFD during an engine failure needs to be researched in further detail.

Analyzing the responses to answer RQ3, i.e., how pilots want to interact with the aircraft utilizing gaze-based interactions, it becomes clear that pilots wish to lessen their cognitive load and therefore prefer interactions that activate automatically (finding F3.1). This reflects finding F1.3 for RQ1, i.e., the pilots’ fear of an increased cognitive workload, which can potentially be avoided by not having to think of actively initiating the interaction. This means, of course, that the timing thresholds have to be evaluated extensively, and that pilots have to be trained on the events under which the system provides assistance, in order to avoid both the Midas touch problem and pilots being surprised by the aircraft’s behavior.
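
The role of such a timing threshold can be illustrated with a minimal sketch of dwell-based activation, one common way to mitigate the Midas touch problem. The sketch is not part of the survey or of any system evaluated here; the `GazeSample` type, the `dwell_activations` function, and the AOI labels are hypothetical names chosen for illustration, and the threshold value is exactly the kind of parameter that would have to be evaluated with pilots.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class GazeSample:
    """One gaze sample (hypothetical format, for illustration only)."""
    timestamp_s: float   # time of the sample in seconds
    aoi: Optional[str]   # area of interest under the gaze, or None


def dwell_activations(
    samples: Iterable[GazeSample], threshold_s: float
) -> List[Tuple[float, str]]:
    """Return (time, AOI) pairs at which an uninterrupted dwell on one AOI
    first reaches threshold_s. Shorter glances never trigger anything."""
    activations: List[Tuple[float, str]] = []
    current_aoi: Optional[str] = None
    dwell_start = 0.0
    fired = False
    for sample in samples:
        if sample.aoi != current_aoi:
            # Gaze moved to another AOI (or off all AOIs): restart the dwell.
            current_aoi = sample.aoi
            dwell_start = sample.timestamp_s
            fired = False
        elif (
            current_aoi is not None
            and not fired
            and sample.timestamp_s - dwell_start >= threshold_s
        ):
            # Fire at most once per uninterrupted dwell.
            activations.append((sample.timestamp_s, current_aoi))
            fired = True
    return activations
```

With a longer threshold, fewer brief glances are misread as intent, but the assistance also appears later, which is the trade-off that the evaluation of timing thresholds would need to resolve.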

The wish to use voice commands as input (finding F3.2) stems from the pilots’ existing experience with such technology, i.e., they are familiar with the voice-controlled assistant system on their smartphone. The maturity of the existing technology is also the advantage of this approach, i.e., when creating novel interactions utilizing voice as input, it is possible to employ existing frameworks. However, a potential disadvantage of audio commands, though not mentioned by pilots, may stem from technical issues resulting from the existing verbal interactions (e.g., with air traffic control) and the noise in the cockpit (e.g., from the engine). Although less popular, other technologies, such as external devices and gestures, have been noted in some cases (see Fig. 9a). In recent years, gestures have found their way into cars (e.g., [14]), and similar solutions are conceivable for the cockpit. However, as in the automotive setting, their application should focus on less critical interactions, such as activating the intercom for talking to air traffic control. These premises also hold true for the use of an external device, such as a cuff link or belt.

Finding F3.3, that pilots prefer augmented reality and adapted displays as representations, emphasizes the importance of research in the direction of Arthur III et al. [5]. More precisely, with the capability of modern augmented reality glasses to provide non-opaque overlaying visualizations, the scenarios involving adapted displays can also be realized with augmented reality. Audio and tactile representations have been mentioned as well (see Fig. 9b). In fact, both 3D audio and tactile displays have received significant attention in aviation research [32]. Nonetheless, their potential use in combination with gaze-based interactions needs further exploration.

For research question RQ4, i.e., when pilots anticipate that they will benefit from gaze-based interactions, we find that pilots wish to utilize gaze-based interactions throughout the flight (finding F4.2) and during the landing phase (finding F4.3). This is presumably a result of findings F2.1 and F2.2 from RQ2 and not so much an indication of an existing information deficiency (finding F2.3) in the cockpit necessitating the development of a novel display. Any realization, however, has to take care not to present too much information, a concern the pilots raised in the context of finding F1.3. Moreover, pilots wish for assistance while aviating (finding F4.1). Considering the importance of this task together with finding F1.3 (avoid too much information), this may have influenced finding F3.1 (automatically triggered interactions), which ultimately aims at reducing their cognitive load.

5.1 Limitations

This paper’s main limitation is the missing user study to validate the results. However, in this article we do not aim at designing and evaluating the actual interactions, but rather at giving directions for future research or development. By following the guidelines supplied by Hsieh and Shannon [23] and involving a domain expert in the evaluation of the study outcome, we included a second level of validation. Nonetheless, the final proof of validity can only result from an empirical evaluation of the scenarios. The authors are planning to utilize these insights in future work, but it should be noted that this constitutes a large and long-term study.

It is difficult to assess the impact of pilots’ experience with eye tracking technology on the results in detail. However, as described in the procedure section, the pilots received a similar introduction on the topic and as discussed below, pilots have a good understanding of the limitations and capabilities of technology. Thus, it can be assumed that the pilots have a similar knowledge base and understood the issues of eye tracking, especially with regard to precision, accuracy and the Midas touch problem. Nonetheless, overcoming these limitations is the focus of ongoing eye tracking research and should be the focus of researchers and practitioners creating gaze-based interaction solutions.

We have conducted a study in the flight simulator in which eye tracking was used for analytical purposes (instead of for interaction). There, we were able to reach an appropriate precision and accuracy (around 1 degree of visual angle) in the difficult environment of a full flight simulator, i.e., a level at which we were able to separate pilot gazes on the different sub-components of the PFD. Moreover, we included the ‘activators’ in our study to circumvent the Midas touch problem.

Asking pilots to think of flight scenarios where they can benefit from gaze-based interactions, while assuming that there are no technical limitations, might appear like a contradiction considering the insights on, e.g., the eye tracking limitations described above. However, pilots as regular consumers are familiar with common technologies such as voice recognition (e.g., on their smartphones), and are aware that the solutions as they exist today do have technical limitations. This can cause them to exclude scenarios or gaze-based interaction solutions that they think are not possible with today’s technologies or that do not work perfectly yet (such as voice recognition), even though these might be of interest for future research. That particular sentence was included to ensure that pilots do not think about the details of a possible realization, but focus on their needs in a future cockpit. It referred only to the existing technologies covered in the questionnaire, which included eye tracking, voice recognition, gesture recognition, tactile displays, augmented reality, etc. Pilots should assume that these are all available in the cockpit and functioning well, while not ignoring the given environment and its natural (e.g., physical) limitations. Looking at the feedback, we find that pilots neither provided unrealistic scenarios nor neglected the special environment of the cockpit and its limitations. A summary of the most promising solutions derived from the answers is given in Sect. 7.

Some pilots may have had difficulties with the questionnaire design (see Fig. 4), although our domain expert previously looked at the form and agreed with the layout. We did not receive any explicit feedback indicating this, but considering the necessary corrections, there appear to be some ambiguities. Although the overall survey structure is good and simplifies the analyses, we would suggest including more examples, maybe as video demonstrations. The name field may be omitted, because it can be extracted from the description. Both the trigger and location fields may be omitted, because their positions can be marked in the cockpit image.

6 Conclusions

This paper explored the potential of gaze-based interactions in the cockpit with the help of a qualitative user study. A set of questionnaires was distributed among pilots of an internationally operating airline. The aim was to determine their attitude towards gaze-based interactions in the cockpit, identify challenges and needs, and manifest them in directions for future research.

Twenty pilots participated in the study. Applying a mixture of directed and summative content analysis [23] reveals that pilots perceive gaze-based interactions as overall positive. A detailed analysis of the 39 situations they described as appropriate for gaze-based interactions indicates that pilots wish to continuously monitor the key flight parameters (e.g., the altitude, speed, and vertical speed), enhance areas of sparse information density (e.g., the outside view) and receive assistance during complex tasks (such as systems management). Moreover, pilots prefer that interactions are initiated automatically and based on the context, and want to utilize augmented reality and audio as means of feedback. Finally, they explained that gaze-based interactions are needed the most for the task of aviating and not necessarily for a particular flight phase.

The study relied on A320 pilots and provided them with an A320 layout as reference. The results are nonetheless generalizable. Glass cockpit layouts and interactions do not significantly differ across manufacturers. In particular, the prevailing differences do not impact the scenarios provided in Table 3.

Consequently, pilots will not only accept technologies such as eye tracking and augmented reality for gaze-based interactions in future cockpits, but it is safe to assume that they will also be able to increase their situation awareness. Researchers can use the results of this paper to design novel gaze-based interactions for situations, and with technologies, that pilots will accept and benefit from.

7 Outlook

The authors believe that extending the survey and distributing it to more pilots could provide even more insights and enable the development of guidelines for the research and development of gaze-based interactions in the cockpit of the future. Furthermore, future research will involve a study that evaluates explicit gaze-based interaction solutions based on the directions given in Table 3. In particular, the study will evaluate the perceived cognitive load, the usability, the performance, throughput and situation awareness of pilots.

The results support the definition of the most promising gaze-based interactions to include in future research. All in all, the gaze-based interaction scenarios in Table 3 are summarized and derived from those given by the pilots and are very promising for future in-depth analyses.

In a first step, we broke down the scenarios to a simple description of their underlying tasks, e.g., ‘flaps interaction’. Then, we looked at the AOIs involved in the task (given in the Trigger column). Based on these two aspects, we traversed all scenarios and looked for similarities. We summarized the similar scenarios by identifying their least common denominator. For example, scenarios involving interactions with flaps and gears are summarized in the first scenario of Table 3. Afterwards, we chose appropriate names, descriptions, information and locations that describe all involved scenarios. The activators and representations contain all of those given in the scenarios. They are, however, refined, e.g., we introduced the call-out “sky, assist”. Finally, the remarks include important details that were removed during the identification of the least common denominator.
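
The bookkeeping behind these steps can be sketched in a few lines of code. The sketch below is only an illustration under simplifying assumptions: the similarity judgement, which was made manually, is replaced by an exact match on a task label and the set of trigger AOIs, and the field names (`id`, `task`, `trigger_aois`, `activators`, `representations`) as well as the function name `summarize_scenarios` are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def summarize_scenarios(scenarios: List[dict]) -> List[dict]:
    """Group scenarios that share a task label and the same trigger AOIs,
    then merge the activators and representations of each group."""
    groups: Dict[tuple, List[dict]] = defaultdict(list)
    for scenario in scenarios:
        # Assumption: exact matching stands in for the manual similarity check.
        key = (scenario["task"], frozenset(scenario["trigger_aois"]))
        groups[key].append(scenario)

    summaries = []
    for (task, aois), members in groups.items():
        summaries.append({
            "task": task,
            "trigger_aois": sorted(aois),
            # A summary keeps every activator and representation that
            # appears in any of the scenarios it covers.
            "activators": sorted(
                {a for m in members for a in m["activators"]}
            ),
            "representations": sorted(
                {r for m in members for r in m["representations"]}
            ),
            "sources": [m["id"] for m in members],
        })
    return summaries
```

Choosing names, descriptions and remarks for each summarized scenario remains a manual step that the sketch does not attempt to automate.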