Abstract

This paper contains an infeasible interior-point method for \(P_*(\kappa )\) horizontal linear complementarity problem based on a kernel function. The kernel function is used to determine the search directions. These search directions differ from the usually used ones in some interior-point methods, and their analysis is more complicated. Main feature of our algorithm is that there is no calculation of the step size, i.e, we use full-Newton steps at each iteration. The algorithm constructs strictly feasible iterates for a sequence of perturbations of the given problem, close to its central path. Two types of full-Newton steps are used, feasibility steps and centering steps. The iteration bound matches the best-known iteration bound for these types of algorithms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In 1984, Karmarkar [9] introduced an interior-point method (IPM) for linear programming (LP). Karmarkar used the efficiency of the simplex method with the theoretical advantages of the ellipsoid method to create his efficient polynomial algorithm. This algorithm is based on projective transformations and the use of Karmarkar’s primal potential function. This new algorithm sparked much research, creating a new direction in optimization—the field of IPMs. Unlike the simplex method, which travels from vertex to vertex along the edges of the feasible region, IPMs follow approximately a central path in the interior of the feasible region and reaches the optimal solution only asymptotically. As a result of finding the optimal solution in this fashion, the analysis of the IPMs become substantially more complex than that of the simplex method. Since the first IPM was developed, many researchers have proposed and analyzed various IPMs for LP and a large amount of results have been reported [5, 7, 19]. IPMs have been very successful in solving wide classes of optimization problems such as linear complementarity problem (LCP) [10, 11, 15, 26], horizontal linear complementarity problem (HLCP) [2, 3, 8, 14, 22], semi-definite optimization (SDO) [16, 21] and convex quadratic programming (CQP) [20, 30].

Two interior-point approaches are proposed: feasible and infeasible interior-point methods (IIPMs). The above mentioned papers mostly focus on feasible methods. The most important class is the feasible path following method: the algorithm starts with a strictly feasible point neighbor the central path and generates a sequence of iterates which satisfy the same conditions. These conditions are an expensive process in general. Most existing codes make use of a starting point that satisfy strict positivity but not the equality condition. For this reason, the authors tried to develop algorithms which start with any strict positive point not necessarily feasible. More recently, algorithms that do not require feasible starting point have been the focus of active research [8, 15, 16, 23]. These algorithms are called IIPMs. Roos [23] introduced the first full-Newton step IIPM for LP. Mansouri et al. extended Roos’s algorithm to SDO, LCP and \(P_*(\kappa )\)-HLCP problems [3, 15, 16].

The majority of primal-dual IPMs, say the above mentioned ones, are based on the classical logarithmic barrier function, which leads to the usual Newton search directions. However, some authors, e.g Wang et al. [25] and Lee et al. [13], presented some different kernel function based IPMs for the \(P_*(\kappa )-\)HLCP, which reduce the gap between the practical behavior of the algorithms and their theoretical performance results. Darvay [6] presented a new technique for finding a class of search directions. The search direction of his algorithm which is called Darvay’ direction, was introduced by using an algebraic equivalent transformation (form) of the nonlinear equations which defined the central path and then applying Newton’s method for the new system of equations. Based on this direction, Darvay proposed a full-Newton step primal-dual path following IPM for LP. Later on, Achache [1], Wang and Bai [27–29] and Mansouri et al. [2, 18] extended Darvay’s algorithm for LP to CQP, SDO, second orde cone optimization (SOCO), symmetric cone optimization (SCO), monotone LCP and \(P_*(\kappa )\)-HLCPs, respectively.

In this paper, we use some different search directions from usual Newton directions and Darvay’s directions, to analyze the full-Newton step IIPM of Roos [23] for the \(P_*(\kappa )\)-HLCPs. These directions are based on a kernel function that used by Liu and Chen [12] for LP. The analysis of these directions is more complicated compared to the classical Newton directions and Darvay’s directions. This analysis shows the way of treatment with some directions which differ from the usually used directions in IPMs. We present a full-Newton step IIPM for HLCP based on the mentioned directions and prove that the number of iterations of our algorithm coincides with the best obtained bound for \(P_*(\kappa )\)-HLCPs.

The objective of the HLCP problem is to find a vector pair in a finite dimensional real vector space that satisfies a certain system of equations. More precisely, for a given vector \(b\in {\mathbb {R}}^{n}\) and a pair of matrixes \(Q,R\in {\mathbb {R}}^{n\times n}\), we want to find a vector pair \(\left( x,\,s\right) \in {\mathbb {R}}^{n}\times {\mathbb {R}}^{n}\) (or show that no such vector pair exists), such that the following inequalities and equations are satisfied:

Note that the linear complementarity problem (LCP) is obtained by taking \(R = -I\). The HLCP is not an optimization problem. However, it is closely related to optimization problems, because Kurush–Kuhn–Tucker (KKT) optimality conditions for many optimization problems can be formulated as LCP. For example, KKT conditions of linear and quadratic optimization problems can be formulated as LCP and the HLCP provides a convenient general framework for the equivalent formulations of LCP [4].

In this paper, we are focusing on \(P_*(\kappa )\)-HLCP, in the sense that

where \(\kappa \) is a nonnegative constant and \({\mathcal {I}^+}\!\!\!=\{i : u_iv_i>0\}\) and \({\mathcal {I}^-}\!\!\!=\{i : u_iv_i<0\}\). A \(P_*(\kappa )\)-HLCP is a general optimization problem, containing many aforementioned problems such as LP, \(P_*(\kappa )\)-LCP and CQP problems. Therefore dealing with this problem, remove the necessity of handling many of its subproblems, separately.

The paper is organized as follows. In Sect. 2, we introduce the central path of the \(P_*(\kappa )\)-HLCP as well as its perturbed problem. Moreover, we present two types of search directions. In Sect. 3, we give the new search directions for full-Newton step IIPMs, these directions can be interpreted as a kind of steepest descent direction of a separate function, which is constructed by using the kernel function on coordinates. The full-Newton step IIPM based on the new directions is also presented in this section. In Sect. 4, we investigate some useful tools that will be used in the analysis of the IIPM proposed in this paper. Section 5 is about the centering steps.

In Sect. 6, we analyze the feasibility step used in the algorithm.

We derive the complexity for the algorithm in Sect. 7; the complexity analysis shows that the complexity bound of the algorithm coincides with the best-known iteration complexity for IIPMs. Finally, some conclusions and remarks are given in Sect. 8.

Throughout the paper, we use the following notations. \({\mathbb {R}}^{n}_{ +}({\mathbb {R}}^{n}_{ ++})\) is the nonnegative (positive) orthant of \({\mathbb {R}}^n\). The 2-norm and the infinity norm are denoted by \(\Vert \cdot \Vert _2\) and \(\Vert \cdot \Vert _{\infty }\), respectively. If \(x,s\in {\mathbb {R}}^n\), then \(xs\) denotes the componentwise (or Hadamard) product of the vectors \(x\) and \(s\). \(v_{\min }\) and \(v_{\max }\) denote the minimal and maximal components of the vector \(v\), respectively.

2 Preliminaries

2.1 The central path for \(P_*(\kappa ){-}\hbox {HLCP}\)

Solving \(P_*(\kappa ){-}\hbox {HLCP}\) is equivalent with finding a solution of the following system of equations

The general idea is to solve (2) using Newton’s method. However, Newton’s method can “get stuck” at the complementarity equation \(xs = 0\). Therefore, the main idea of IPMs is to replace the last equation in (2), the so called complementarity equation, with the parameterized equation \(xs = \mu e\), with parameter \(\mu > 0\). So we consider the following system

Here \(e\) is the all-one vector. In [24] is established that if HLCP satisfies the interior-point condition (IPC), i.e., there exists \((x,s)>0\) with \(Qx+Rs=b\), then the above system has a unique solution for each \(\mu >0\), that is denoted by \((x(\mu ),\,(s(\mu ))\) and is called the \(\mu -\)center of HLCP. We call the solution set \(\{(x(\mu ), s(\mu )) | \mu > 0\}\) the central path of the HLCP. If \(\mu \rightarrow 0\), then the limit of the central path exists [24] and since the limit point satisfies \(xs=0\), the limit yields the optimal solution for HLCP.

2.2 The perturbed problem

Let \(\left( x^{0},s^{0}\right) >0\) such that \({x^{0}}s^{0}=\mu ^{0} e\) for some (positive) number \(\mu ^{0}\). We denote the initial value of the residual as \(r^0\), as

For any \(\nu \) with \(0<\nu \le 1\), we consider the perturbed problem \((P_{\nu })\), defined by

Note that if \(\nu =1\) then \(\left( x,\,s\right) =\left( x^{0},\,s^{0}\right) \) yields a strictly feasible solution of \(\left( P_{\nu }\right) \). We conclude that if \(\nu =1\) then \(\left( P_{\nu }\right) \) satisfies the IPC.

Lemma 1

Let the original problem \(\left( P\right) \) be feasible, then the perturbed problem \((P_{\nu })\) satisfies the IPC.

Proof

The proof is similar to the proof of Lemma 4.1 in [15]. \(\square \)

2.3 The central path of the perturbed problem

We assume that \((P)\) is feasible. Assuming \(0<\nu \le 1\), Lemma 1 implies that the problem \(P_{\nu }\) satisfies the IPC, and hence its central path exists. This means that the system

has a unique solution, for every \(\mu >0\). In the sequel, this unique solution is denoted by \((x(\mu ,\nu ),\,s(\mu ,\nu ))\). It is the \(\mu \)-center of the perturbed problem \(\left( P_{\nu }\right) \). Note that since \(x^{0}s^{0}=\mu ^{0}e\), \((x^{0},\,s^{0})\) is the \(\mu ^{0}\)-center of the perturbed problem \(\left( P_{1}\right) \). In other words, \(\left( x\left( \mu ^{0},1\right) ,\,s\left( \mu ^{0},1\right) \right) =\left( x^{0},s^{0}\right) \). In the sequel the parameters \(\mu \) and \(\nu \) always satisfy the relation \(\mu =\nu \mu ^{0}\).

2.4 Search directions

2.4.1 Centering search directions

We are given a positive feasible pair \((x,s)\) for \((P_{\nu })\) and some \(\mu >0\). Our aim is to define centering search directions \(\varDelta x,\varDelta s\) that move in the direction of the \(\mu \)-center \((x(\mu ,\nu ),\,s(\mu ,\nu ))\). In fact, we want the new iterates \(x+\varDelta x,\,s+\varDelta s\) to satisfy system (5) and be positive with respect to \(\mu \). After substitution, this yields the following conditions on \(\varDelta x,\varDelta s\):

If we neglect for the moment the inequality constraints and ignore the quadratic term \(\varDelta x\varDelta s\), since \(b-Qx-Rs=\nu r^{0}\), this system can be rewritten as follows

In [11] is showed that If \((Q,R)\) is a \(P_*(\kappa )\)-pair, then the coefficient matrix in the above linear system is nonsingular. Hence this system uniquely defines the search directions \(\varDelta x,\,\varDelta s\) for any \(x > 0\) and \(s > 0\). These directions follow the \(\mu \)-center \((x(\mu ,\nu ),\,s(\mu ,\nu ))\). We call them centering search directions. Let

Then system (6) can be expressed as

where

2.4.2 Feasibility search directions

According to the definition of \(P_{\nu }\), the feasibility equation for \(\left( P_{\nu ^+}\right) \) is given by

To get iterates that are feasible for \(\left( P_{\nu ^+}\right) \), we need search directions \(\varDelta ^{f}x\) and \(\varDelta ^{f}s\) such that

Let \(\nu ^+=(1-\theta )\nu \), where \(\theta \) is the barrier update parameter in the algorithm. Since \(\left( x,\,s\right) \) is feasible for \((P_{\nu })\), it follows that \(\varDelta ^{f}x\) and \(\varDelta ^{f}s\) should satisfy

Thus, if we neglect for the moment the inequality constraints and ignore the quadratic term \(\varDelta ^f x\varDelta ^f s\), then \((\varDelta ^f x,\,\varDelta ^f s)\) can be defined by the following system

This system uniquely defines the search directions \(\varDelta ^{f}x,\,\varDelta ^{f}s\) for any \(x > 0\) and \(s > 0\). Since these displacements are designed to find feasible iterates for \(\left( P_{\nu ^+}\right) \), we call them feasibility search directions. Let

where \(v\) is defined in (7), then the system (11) can be expressed as

where \(\bar{Q}\) and \(\bar{R}\) are as defined in (9).

3 The new search directions

Consider the kernel function \(\psi (t):{\mathbb {R}}_{++}\rightarrow {\mathbb {R}}_{+}\) and its barrier function \(\varPsi (v):{\mathbb {R}}^{n}_{++}\rightarrow {\mathbb {R}}_{+}\) as follows

Note that, this kernel function is a special case of the kernel function \(\phi (t)\) as follows

which is applied for linear and semidefinit programming by Peng et al. [21]. Noticing that \(\psi '(t)=t-\frac{1}{t^3}\) and doing the transformation \(d_x+d_s=-\bigtriangledown \varPsi (v)\) in (8), we obtain the following equivalent system of equations:

By this transformation, we obtain the following equivalent system of equations for the centering search directions \(\varDelta x,\varDelta s\):

Similarly, doing transformation \(d_x^f+d_s^f=-\bigtriangledown \varPsi (v)\) in (13), we obtain the following equivalent system of equations for finding the scaled search directions \(d^f_x\) and \(d^f_s\):

We get the following system for the feasibility search directions \(\varDelta ^f x\) and \(\varDelta ^f s\):

3.1 Proximity function

We need a proximity measure to check how close we are to the \(\mu \)-center. So, to measure the quality of any approximation \((x, s)\) of \((x(\mu , \nu ), s(\mu ,\nu ))\), we introduce a norm-based proximity function as follows:

Note that

Therefore, this proximity function vanishes if and only if the pair \((x,\,s)\) coincides with the \(\mu \)-center \((x(\mu , \nu ), s(\mu ,\nu ))\).

3.2 Description of the full-Newton step IIPMs

Note that if \(\nu =1\) and \(\mu =\mu ^{0}\), then \(\left( x,\,s\right) =\left( x^{0},\,s^{0}\right) \) is the \(\mu \)-center of the perturbed problem \(P_{\nu }\). These are our initial iterates.

We measure proximity to the \(\mu \)-center of the perturbed problem \(P_{\nu }\) by the quantity \(\delta (v)\) as defined in (18). Initially we thus have \(\delta (v)=0\). In the sequel we assume that at the start of each iteration, just before the \(\mu \)-update, \(\delta (v)\le \tau \) for a (small) threshold value \(\tau >0\). So this is certainly true at the start of the first iteration.

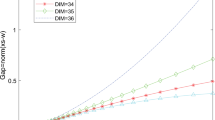

One (main) iteration of our algorithm works as follows. Suppose that for some \(\mu \in \left( 0,\,\mu ^{0}\right) \) we have \(\left( x,s\right) \) satisfying the feasibility condition (5) for \(\nu =\frac{\mu }{\mu ^{0}}\), and \(\delta (v)\le \tau \). We reduce \(\mu \) to \(\mu ^{+}=\left( 1-\theta \right) \mu \), with \(\theta \in \left( 0,1\right) \), and find new iterates \(\left( x^{+},\,s^{+}\right) \) that satisfy (5), with \(\mu \) replaced by \(\mu ^{+}\) and \(\nu \) by \(\nu ^{+}=\frac{\mu ^+}{\mu ^0}\), and such that \(x^{T}s\le n\mu ^{+}\) and \(\delta \left( x^{+},\,s^{+};\,\mu ^{+}\right) \le \tau \). Note that \(\nu ^{+}=\left( 1-\theta \right) \nu \). For this mean we accomplish first one feasibility step and then a few centering steps. The feasibility step serves to get iterates \(\left( x^{f},\,s^{f}\right) \) that are strictly feasible for \((P_{\nu ^{+}})\), and close to its \(\mu \)-center \(\left( x\left( \mu ^{+},\nu ^{+}\right) ,\,s\left( \mu ^{+},\nu ^{+}\right) \right) \). Then the centering steps improve the closeness of \(\left( x^{f},\,s^{f}\right) \) to the centeral path of \((P_{\nu ^{+}})\) and obtain a pair \((x,\,s)\) that satisfies the condition \(\delta \left( x,\,s;\,\mu \right) \le \tau \).A more formal description of the algorithm is given in Fig. 1.

4 Some useful tools

Through the paper, we assume that \(\delta :=\delta (v):=\delta \left( x,s;\mu \right) \). Our first lemma in this section provides a lower and an upper bound for the coordinates of \(v\) in terms of \(\delta \).

Lemma 2

Let \(\delta :=\delta (v)\), as defined by (18). Then

Proof

The lemma is trivial if \(v_{\min }\ge 1\) and \(v_{\max }\le 1\). Consider the case that \(v_{\min } < 1\). From (18) we derive

which implies

On the other hand, if \(v_{max} > 1\) then we find

The lemma follows directly from the above inequalities. \(\square \)

By the lemma just made, it is clear that for \(i=1,\,\ldots ,\,n\):

Using the next lemma we obtain some bounds for the unique solution of system (14).

Lemma 3

(Lemma 2 in [8]) Let \((Q, R)\) in the HLCP be a \(P_*(\kappa )\)-pair, then for any \((x,s)\in {\mathbb {R}}^{n}_{++}\times {\mathbb {R}}^{n}_{++}\) and any \(a\in {\mathbb {R}}^n\) the unique solution \((u,v)\) of the linear system

satisfies

where \(D=X^{-\frac{1}{2}}S^{\frac{1}{2}}\) and \(\tilde{a}=(xs)^{-\frac{1}{2}}~a.\)

Corollary 1

The unique solution \((d_x,d_s)\) of the linear system (14) satisfies

Proof

Comparing system (15) with (21) and let \(a=\mu v\left( v^{-3}-v\right) \). Then we have \(\tilde{a}=\frac{a}{\sqrt{\mu }~v}=\sqrt{\mu }\left( v^{-3}-v\right) \). So we may write

where the last inequality follows from (20). Using Lemma 3 we derive that

Since \(D\varDelta x=\sqrt{\mu }~d_x\), \(D^{-1}\varDelta s=\sqrt{\mu }~d_s\) and \(\left( \varDelta x\right) ^T\varDelta s=\mu \left( d_x\right) ^Td_s\), the corollary follows. \(\square \)

Let

Then we have

Lemma 4

Let \(\omega \) be as defined in (22). Then the following holds:

Proof

Using the definition of \({\omega }\) and corollary 1 , the lemma follows. \(\square \)

Lemma 5

(Lemma A.1 in [17]) Let \(f_i={\mathbb {R}}_+\rightarrow {\mathbb {R}}, ~i=1,\cdots ,n\), be a convex function. Then for any nonzero vector \(z\in {\mathbb {R}}^{n}_+\), the following inequality holds

5 Analysis of the centering steps

We denote the result of the full-Newton step given by system (15) at \((x, s)\) by \((x^+ , s^+ )\), i.e.,

5.1 The feasibility of the centering steps

Using (7) and system (15), we may write

In the following lemma we state a feasibility condition for the centering steps.

Lemma 6

The iterates \(\left( x^+,s^+\right) \) are strictly feasible if and only if \(v^{-2}+d_xd_s>0\).

Proof

If \(x^+\) and \(s^+\) are positive, then the equality (25) makes it clear that \(v^{-2}+d_xd_s>0\). For the prove of the converse implication, we introduce a step length \(\alpha \in [0,1]\) and we define

Then we have \(x^0 = x\), \(x^1 = x^+\) and similar relations for s. Hence we obtain \(x^0s^0 = xs > 0\). Using (25), we can write

So \(x^{\alpha }s^{\alpha } > 0\) for \(\alpha \in [0,1]\). Thus, none of the entries of \(x^{\alpha }\) and \(s^{\alpha }\) vanishes for \(\alpha \in [0,1]\). Since \(x^0\) and \(s^0\) are positive, and \(x^{\alpha }\) and \(s^{\alpha }\) are depend linearly on \(\alpha \), this implies that \(x^{\alpha }>0\) and \(s^{\alpha }>0\) for \(\alpha \in [0,1]\). Hence, \(x^1\) and \(s^1\) must be positive, which proves that \(x^+\) and \(s^+\) are positive. \(\square \)

Corollary 2

The iterates \((x^+, s^+)\) are strictly feasible if \(\Vert d_xd_s\Vert _{\infty }<\frac{1}{1+\delta }\).

Proof

From (19) and Lemma 6, the corollary follows. \(\square \)

5.2 The effect of a full-Newton step on the proximity function

The following lemma investigates the effect of a full-Newton step on the proximity measure.

Lemma 7

Let \(\delta \le \frac{1}{2\sqrt{1+2\kappa }}\). Then after a full-Newton step, we have

Proof

Let \(v^+:=\sqrt{\frac{x^+s^+}{\mu }}\). Then from (25), we have \(\left( v^+\right) ^2=v^{-2}+d_xd_s\). We may write

where the inequality is due to (24). For each \(i=1,\cdots ,n\), we define the function

Note that this function is convex in \(z_i\). Taking \(z_i=2\omega _i^2\), using Lemma 5, we have

Notice that if \(\delta \le \frac{1}{2\sqrt{1+2\kappa }}\), then from (20) and Lemma 4, we may write for \(1\le j\le n\):

Therefore the above inequalities may continue as follows:

where in the third and forth inequality, we used (20) and Lemma 4 respectively. The last inequality, is due to the assumption \(\delta \le \frac{1}{2\sqrt{1+2\kappa }}\). \(\square \)

5.3 The value of \(x^Ts\) after doing the step

The next lemma gives an upper bound for the quantity \(x^Ts\) after each full-Newton step.

Lemma 8

One has

Proof

From (25), (20) and Corollary 1 we derive:

which proves the lemma. \(\square \)

6 Analysis of the feasibility step

We denote the resulting vectors of the feasibility step given by system (17) at \((x, s)\) by \((x^f , s^f )\), i.e.,

6.1 The feasibility of the feasibility step

From (16) we may write

Lemma 9

The iterates \(\left( x^f,s^f\right) \) are strictly feasible if and only if

Proof

The proof of this lemma is the same as that of Lemma 6.\(\square \)

Corollary 3

The iterates \((x^f, s^f)\) are strictly feasible if \(\Vert d^f_xd^f_s\Vert _{\infty }<\frac{1}{1+\delta }\).

Proof

From (20) and Lemma 9 the corollary follows.\(\square \)

Let

Then similar to (23) and (24), we have:

Using this, in the next subsection we investigate the effect of the feasibility step on the proximity function.

6.2 An upper bound for proximity function after a feasibility step

Lemma 10

Let \(\mu ^+=(1-\theta )\mu \), \(v^f=\sqrt{\frac{x^fs^f}{\mu ^+}}\) and \(\delta \le \frac{1}{2\sqrt{1+2\kappa }}\) , then we have

Proof

Let \(u=v^{-2}+d^f_xd^f_s\). Using (26) we derive

then, we may write

Note that, using (28) and (29), we have

By the same way as in the proof of Lemma 7, we may derive

So the proof is completed. \(\square \)

Note that in the algorithm, for using the result of Lemma 7 after performing the feasibility step, we need the following condition be satisfied

Using Lemma 10, this is certainly true, if

By some elementary calculations, we obtain that if

then the inequality (31) is satisfied.

6.3 An upper bound for \(\omega ^f\)

We start by finding some bounds for the unique solution of the linear system (17).

Lemma 11

(Lemma 3.3 in [8]) Let \((Q, R)\) in the HLCP be a \(P_*(\kappa )\)-pair, then the linear system

has a unique solution \(w=(u,v)\), for any \(z=(x,s)\in R^{n}_{++}\times {\mathbb {R}}^{n}_{++}\) and any \(a,\tilde{b}\in R^{n}\), such that the following inequality is satisfied:

Here

and \(\tilde{a}\) and \(D\) are defined in Lemma 3.

Comparing system (35) with (17) and considering \(w=(u,v)=(\varDelta ^{f}x,\varDelta ^{f}s)\), \(a=\mu \left( v^{-2}-v^2\right) \), \(\tilde{b}=\theta \nu r^0\), \(z=(x,s)\) in system (35), we have

From (20) and the definition of \(\delta \) in (18), we also have

Also by the definition of \(\xi (z,\tilde{b})\), it is obvious that

Furthermore, noticing the definitions of \(\varDelta ^f x\) and \(\varDelta ^f s\), we may write \( D\varDelta ^f_x=\sqrt{\mu }~d^f_x\) and \(D^{-1}\varDelta ^f_s=\sqrt{\mu }~d^f_s.\) Substituting the above equations in (36), we have

Now we specify our initial iterates \(\left( x^0,\,s^0\right) \). Assuming that \(\rho _p\) and \(\rho _d\) are such that

for some optimal solutions \(\left( x^*,\,s^*\right) \) of \((P)\), as usual we start the algorithm with

The lemma below achieves a bound for the quantity \(\xi (z,r^0)\) in (37).

Lemma 12

(Lemma 4.6 in [3]) Let \(\xi (\cdot , \cdot )\) be as defined in Lemma 11. Then the following holds:

Thanks to the next Lemmas, we will obtain some upper bounds for \(\Vert x\Vert _1\) and \(\Vert s\Vert _1\).

Lemma 13

(Lemma 4.7 in [3]) Let \(\left( x,\,s\right) \) be feasible for the perturbed problem \(P_{\nu }\) and \(\left( x^0,\,s^0\right) \) as defined in (39). Then for any optimal solution \(\left( x^*,\,s^*\right) \), we have

Lemma 14

Let \(\left( x,\,s\right) \) be feasible for the perturbed problem \((P_{\nu })\) and \(\left( x^0,\,s^0\right) \) as defined in (39). Then we have

Proof

Since \(x^0=\rho _p\,e,\,s^0=\rho _d\, e,\,\Vert x^*\Vert _\infty \le \rho _p\) and \(\Vert s^*\Vert _\infty \le \rho _d\), we derive

Also \(\left( x^0\right) ^Ts^0=n\,\rho _p\,\rho _d\). Substituting these values in Lemma 13 and noting that \(\mu ^0=\rho _p\rho _d\), using (19), we have

Since \(x^0,\,s^0,\,x\) and \(s\) are positive, we obtain

Due to \(x^0=\rho _p\,e\) and \(s^0=\rho _d\,e\), these statements follow the lemma. \(\square \)

Applying (40) and (41) in Lemma (12), using (19), we derive

Now substituting (42) in (37), we obtain

6.4 The values for \(\theta \) and \(\tau \)

In this subsection, we specify some values for the algorithm’s parameters \(\tau \) and \(\theta \) such that our main targets are achieved. We have found that \(\delta (x^f, s^f; \mu ^+)<\frac{1}{2\sqrt{1+2\kappa }}\) holds if the inequalities (32), (33) and (34) are satisfied. Then by (43), inequality (32) holds if

Obviously, the left side of the above inequality is increasing in \(\delta \). Using this, one may easily verify that the above inequality is satisfied if

which are in agreement with (32) and (33). Note that by Corollary 3, the feasibility step is strictly feasible if \(\Vert d_x^fd_s^f\Vert _{\infty }<\frac{1}{1+\delta }\). From 29 we find that \(\Vert d_x^fd_s^f\Vert _{\infty }\le 2(\omega ^f)^2\). Therefore \((x^f,s^f)\) are strictly feasible if \((\omega ^f)^2<\frac{1}{2(1+\delta )}\). Using (43), this inequality is certainly satisfied whenever

As is mentioned above, the left side of this inequality is monotonically increasing with respect to \(\delta \) while the right side is monotonically decreasing. So substituiting \(\delta \) by \(\tau \) gives an upper bound for its left side and a lower bound for its right side. Consequently \((x^f,s^f)\) are strictly feasible if

But with the values of \(\tau \) and \(\theta \) in (44), an upper bound for the left side of (45) is 0.1220 while a lower bound for its right side is 0.6742. Therefore (42) also guarantees the strict feasibility of the feasibility step.

7 Complexity analysis

Let \(\delta \left( x^f,\,s^f;\,\mu ^+\right) \le \frac{1}{2\sqrt{1+2\kappa }}\). Starting at \((x^f,\,s^f)\) we repeatedly apply full-Newton steps until the \(k-\)iterate, denoted as \(\left( x^+,\,s^+\right) :=(x^k,\,s^k)\), satisfies \(\delta \left( x^k,\,s^k;\,\mu ^+\right) \le \tau \). We can estimate the required number of centering steps by using Lemma 7. Let \(\delta (v^k)=\delta \left( x^k,s^k;\mu ^+\right) \), \(\delta (v^0)=\delta \left( x^f,s^f;\mu ^+\right) \) and \(\gamma =5.4247\sqrt{1+2\kappa }\). It then follows that

Using the definition of \(\gamma \) and \(\delta \left( v^0\right) \le \frac{1}{2\sqrt{1+2\kappa }}\), this gives

Hence we certainly have \(\delta \left( x^+,s^+;\mu ^+\right) \le \tau \), if

Taking logarithms at both sides, this reduces to

Thus we find that after no more than

centering steps, we have iterates \(\left( x^+,s^+\right) \) that satisfy \(\delta \left( x^+,\,s^+;\,\mu ^+\right) \le \tau \).

Substituting the value of \(\tau \) from (44) in (47) implies that at most

centering steps are needed in our algorithm.

Note that in each main iteration of the algorithm, both the value of \(x^Ts\) and the norm of the residual are reduced by the factor \(1-\theta \). Hence, the total number of main iterations is bounded above by

Due to (44), we may state without further proof, the main result of the paper:

Theorem 1

If \((P)\) has an optimal solution \(\left( x^*,\,s^*\right) \) such that \(\Vert x^*\Vert \le \rho _p\) and \(\Vert s^*\Vert \le \rho _d\), then after at most

iterations, the algorithm finds an \(\varepsilon \)-solution of \(P_*(\kappa )-HLCP\).

8 Concluding remarks and further research

We generated the new search directions for full-Newton step IIPM based on a kernel function for \(P_*(\kappa )\)-HLCP. The complexity bound for the algorithm admits the best-known result for IIPMs. In general, the algorithm belongs to a kind of small-update IIPMs. According to the default value for \(\theta \) in (44), for a \(P_*(\kappa )\)-HLCP with large data, one can not expect a good performance for the algorithm. Further research may focus on to design the kernel function based large-update IIPMs.

References

Achache, M.: A new primal-dual path-following method for convex quadratic programming. Comput. Appl. Math. 25(1), 97110 (2006)

Asadi, S., Mansouri, H.: Polynomial interior-point algorithm for \(P_*(\kappa )\) horizontal linear complementarity problem. Numer. Algorithms 63(2), 385–398 (2013)

Asadi, S., Mansouri, H.: A full-Newton step infeasible-interior-point algorithm for \(P_*(\kappa )\)- horizontal linear complementarity problems. Yugoslav J. Oper. Res. 23(3), (2013) doi:10.2298/YJOR130515034A

Anitescu, M., Lesaja, G., Potra, F.A.: An infeasible-interior-point predictor-corrector algorithm for the \(P_0\)-Geometric LCP. Appl. Math. Optim. 36(2), 203–228 (1997)

Anstreicher, K.M., Bosch, R.A.: A new infinity-norm path following algorithm for linear programming. SIAM J. Optim. 5(2), 236–246 (1995)

Darvay, Zs: New interior-point algorithms in linear programming. Adv. Model. Optim. 5(1), 51–92 (2003)

Ghami, M.El, Ivanov, I., Melissen, J.B.M., Roos, C., Steihaug, T.: A polynomial-time algorithm for linear optimization based on a new class of kernel functions. J. Comput. Appl. Math. 224(2), 500–513 (2009)

Gurtuna, F., Petra, C., Potra, F.A., Shevehenko, O., Vancea, A.: Corrector-Predictor methods for sufficient linear complementarity problems. Comput. Optim. Appl. 48(3), 453–485 (2011)

Karmarkar, N.K.: A new polynomial-time algorithm for linear programming. Combinatorica 4(4), 373–395 (1984)

Kojima, M., Mizuno, S., Yoshise, A.: A polynomial-time algorithm for a class of linear complementarity problems. Math. Program. 44(1), 126 (1989)

Kojima, M., Megiddo, N., Noma, T.: A unified approach to interior point algorithms for linear complementarity problems. In: Lecture Notes in Computer Science, Springer, Berlin (1991)

Liu, Z., Chen, Y.: A full-Newton step infeasible interior-point algorithm for linear programming based on a self-regular proximity. J. Appl. Math. Inform. 29(1–2), 119–133 (2011)

Lee, Y.H., Cho, Y.Y., Cho, G.M.: Kernel function based interior-point methods for horizontal linear complementarity problems. J. Inequal. Appl. 1, 1–15 (2013). doi:10.1186/1029-242X-2013-215

Mansouri, H., Asadi, S.: A quadratically convergent \(O{\sqrt{n}}\) interior-point algorithm for the \(P_*(\kappa )\)-matrix horizontal linear complementarity problem. J. Sci. Islam. Repub. Iran 20(4), 432–438 (2012)

Mansouri, H., Zangiabadi, M., Pirhaji, M.: A full-Newton step \(O(n)\) infeasible interior-point algorithm for linear complementarity problems. Nonlinear Anal. Real World Appl. 12(1), 545–561 (2011)

Mansouri, H., Roos, C.: A new full-Newton step on infeasible interior-point algorithm for semidefinite optimization. Numer. Algorithms 52(2), 225–255 (2009)

Mansouri, H., Roos, C.: Simplifed \(O(nL)\) infeasible interior-point algorithm for linear optimization using full-Newton step. Optim. Methods Softw. 22(3), 519–530 (2007)

Mansouri, H., Pirhaji, M.: A polynomial interior-point algorithm for monotone linear complementarity problems. J. Optim. Theory Appl. 157(2), 451–461 (2012)

Mizuno, S., Todd, M.J., Ye, Y.: On adaptive-step primal-dual interior-point algorithms for linear programming. Math. Oper. Res. 18(4), 964–981 (1993)

Nesterov, Y.E., Nemirovski, A.S.: Interior Point Polynomial Methods in Convex Programming: Theory and Algorithms. SIAM Publications. SIAM, Philadelphia (1993)

Peng, J., Roos, C., Terlaky, T.: Self-regular functions and new search directions for linear and semidefinite optimization. Math. Program. 93(1), 129–171 (2002)

Potra, F.A.: Primal-dual affine scaling interior point methods for linear complementarity problems. SIAM J. Optim. 19(1), 114–143 (2008)

Roos, C.: A full-Newton step \(O(n)\) infeasible interior-point algorithm for linear optimization. SIAM J. Optim. 16(4), 1110–1136 (2006)

Stoer, J., Wechs, M.: Infeasible-interior-point paths for sufficient linear complementarity problems and their analyticity. Math. Program. Ser. A 83(3), 407–423 (1998)

Wang, G.Q., Bai, Y.Q.: Polynomial interior-point algorithms for \(P_*(\kappa )\)-horizontal linear complementarity problem. J. Comput. Appl. Math. 233(2), 248–263 (2009)

Wang, G.Q., Yu, C.J., Teo, K.L.: A full-Newton step feasible interior-point algorithm for \(P_*(\kappa )\)-linear complementarity problem. J. Global Optim. 59(1), 81–99 (2014)

Wang, G.Q., Bai, Y.Q.: A new primal-dual path-following interior-point algorithm for semidenite optimization. J. Math. Anal. Appl. 353(1), 339–349 (2009)

Wang, G.Q., Bai, Y.Q.: A primal-dual path-following interior-point algorithm for second-order cone optimization with full Nesterov-Todd step. Appl. Math. Comput. 215(3), 1047–1061 (2009)

Wang, G.Q., Bai, Y.Q.: A new full Nesterov-Todd step primal-dual path-follwing interior-point algorithm for symmetric optimization. J. Optim. Theory Appl. 154(3), 966–985 (2012)

Ye, Y., Tse, E.: An extension of Karmarkar’s projective algorithm for convex quadratic programming. Math. Program. 44(1–3), 157–179 (1989)

Acknowledgments

Authors would like to thank for the financial grant from Shahrekord University. They were also partially supported by the Center of Excellence for Mathematics, University of Shahrekord, Shahrekord, Iran.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Asadi, S., Zangiabadi, M. & Mansouri, H. An infeasible interior-point algorithm with full-Newton steps for \(P_*(\kappa )\) horizontal linear complementarity problems based on a kernel function. J. Appl. Math. Comput. 50, 15–37 (2016). https://doi.org/10.1007/s12190-014-0856-4

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12190-014-0856-4

Keywords

- Horizontal linear complementarity problem

- Central path

- Infeasible full-Newton step method

- Kernel function