Abstract

This paper presents a difference scheme based on cubic B-spline quasi-interpolation for the numerical solution of a fourth-order time-fractional integro-differential equation with a weakly singular kernel. The fractional derivative in the equation is defined in the Caputo sense. The time-fractional derivative is approximated by a scheme of order \(O(\tau ^{2-\alpha })\), and the Riemann–Liouville fractional integral term is discretized by the fractional trapezoidal formula. The spatial second derivative is approximated by the second derivative of the cubic B-spline quasi-interpolant. At each time step, the discrete scheme requires the solution of a system of linear equations. We show that the proposed scheme is stable and convergent with order \(O(\tau ^{2-\alpha }+h^{2})\). Finally, several numerical examples are presented to demonstrate the effectiveness and validity of the numerical scheme.

1 Introduction

Partial integro-differential equations with a weakly singular kernel have many applications in science and engineering, such as heat conduction in materials with memory, viscoelasticity, reactor dynamics, biomechanics, and pressure in porous media [1,2,3,4]. Several numerical methods have been used to solve integro-differential equations with a weakly singular kernel. For example, Wang et al. [5] proposed a high-order compact alternating direction implicit scheme for two-dimensional time-fractional integro-differential equations with a weak singularity near the initial time. Qiu et al. [6] introduced and analyzed a Sinc–Galerkin method for the fourth-order partial integro-differential equation with a weakly singular kernel. In [7], the Sinc-collocation approach combined with the double exponential transformation was employed to solve a class of variable-order fractional integro-partial differential equations. Fakhar-Izadi [8] derived a space-time spectral-Galerkin method for one- and two-dimensional fourth-order time-fractional partial integro-differential equations with a weakly singular kernel. Zhang et al. [9] proposed a quintic B-spline collocation method for fourth-order partial integro-differential equations with a weakly singular kernel. Dehestani et al. [10] applied Legendre–Laguerre functions and the collocation method to variable-order time-fractional partial integro-differential equations. Hashemizadeh et al. [11] presented a spectral method based on Genocchi polynomials for nonlinear Volterra integral equations with a weakly singular kernel. Biazar and Sadri [12] presented an operational approach based on shifted Jacobi polynomials for a class of weakly singular fractional integro-differential equations.

Fractional calculus has proved to be a valuable tool for modeling materials and processes with memory and hereditary properties in many applied sciences, such as biology, biomechanics, and electrochemistry [13,14,15,16]. Various numerical schemes have been proposed for fractional partial differential equations, including finite difference [17,18,19,20,21,22], spectral [23,24,25], meshless [26, 27], and finite element [28, 29] methods.

In this paper, we consider the fourth-order time-fractional integro-differential equation with a weakly singular kernel as follows [30]:

where \(\Omega =(0,L)\times (0,T]\), \(0<\alpha ,\beta <1\), f(x, t) is the source term, and \(u^{0}(x)\) is a given smooth function. Problem (1) is equivalent to

In (2), \(_{C}{\mathcal {D}}_{0,t}^\alpha\) denotes the Caputo fractional derivative operator, and \({\mathcal {I}}^{(\beta )}\) is defined as follows

where \(\Gamma (\cdot )\) is the Gamma function.
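As a hedged illustration, assume the standard Riemann–Liouville form \({\mathcal {I}}^{(\beta )}\varphi (t)=\frac{1}{\Gamma (\beta )}\int _0^t (t-s)^{\beta -1}\varphi (s)\,ds\). This form is consistent with the weakly singular kernel and the Gamma function mentioned above. The substitution \(w=(t-s)^{\beta }\) gives \({\mathcal {I}}^{(\beta )}\varphi (t)=\frac{1}{\Gamma (\beta +1)}\int _0^{t^{\beta }}\varphi (t-w^{1/\beta })\,dw\), whose integrand is smooth, so a plain midpoint rule suffices. The Python sketch below checks this against the closed form \({\mathcal {I}}^{(\beta )}t^{\mu }=\frac{\Gamma (\mu +1)}{\Gamma (\mu +1+\beta )}t^{\mu +\beta }\). The function names are hypothetical and do not come from the paper.

```python
import math


def rl_integral(phi, t, beta, n=2000):
    """Approximate (1/Gamma(beta)) * int_0^t (t - s)**(beta - 1) * phi(s) ds.

    The substitution w = (t - s)**beta turns the weakly singular integral into
    (1/Gamma(beta + 1)) * int_0^{t**beta} phi(t - w**(1/beta)) dw,
    whose integrand is smooth for smooth phi; the composite midpoint rule is used.
    """
    if t == 0.0:
        return 0.0
    dw = t ** beta / n
    total = sum(phi(t - ((m + 0.5) * dw) ** (1.0 / beta)) for m in range(n))
    return total * dw / math.gamma(beta + 1.0)


def rl_integral_power(mu, t, beta):
    """Closed form of the same integral for phi(s) = s**mu (mu > -1)."""
    return math.gamma(mu + 1.0) / math.gamma(mu + 1.0 + beta) * t ** (mu + beta)


beta, t = 0.5, 1.0
approx = rl_integral(lambda s: s ** 2, t, beta)
exact = rl_integral_power(2.0, t, beta)
print(f"numerical {approx:.10f}  exact {exact:.10f}")
```

The substitution is only a convenient way to evaluate the operator for testing. The scheme itself discretizes \({\mathcal {I}}^{(\beta )}\) with the fractional trapezoidal formula of Lemma 2.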

Equation (1) arises in the modeling of floor systems, window glasses, airplane wings, and bridge slabs [31, 32]. More generally, fourth-order spatial derivative operators appear in models of heat flow in materials with memory, strain gradient elasticity, and phase separation in binary mixtures [33,34,35].

Fourth-order fractional equations have recently attracted the attention of researchers. For example, in [36], weakly singular kernel fractional fourth-order partial integro-differential equations were studied by means of the optimum q-homotopy analysis method (q-HAM). Tariq and Akram developed a quintic spline technique for time-fractional fourth-order partial differential equations [32]. Heydari and Avazzadeh used orthonormal Bernstein polynomials to solve nonlinear variable-order time-fractional fourth-order diffusion-wave equations with a nonsingular fractional derivative [37]. Abdelkawy et al. [38] derived a highly accurate technique for fourth-order distributed-order time-fractional sub-diffusion equations. Yang et al. [39] introduced a quasi-wavelet based numerical method for fourth-order partial integro-differential equations with a weakly singular kernel. Roul and Goura proposed a high-order numerical method for time-fractional fourth-order partial differential equations [40].

Cubic B-spline quasi-interpolation has been applied in several papers; see [41,42,43,44,45,46,47]. The fundamental benefit of B-spline quasi-interpolants is that they can be constructed directly, without solving any system of linear equations, and they approximate smooth functions well. Furthermore, they are local: the value of a B-spline quasi-interpolant at a given point depends only on the values of the function in a neighborhood of that point. Sablonniere [48] found that the first derivative of the cubic B-spline quasi-interpolant is more accurate than the corresponding finite difference approximation. Among the numerical methods proposed so far for time-fractional integro-differential equations, B-spline quasi-interpolation has rarely been used. This motivates us to construct a numerical scheme based on cubic B-spline quasi-interpolation for equation (1).

In this paper, we construct a difference method based on cubic B-spline quasi-interpolation for problem (1). The temporal Caputo derivative is approximated by the \(L_1\) formula, and the operator \({\mathcal {I}}^{(\beta )}\) is approximated by a second-order formula. We then prove the stability and convergence of the difference method and show that the scheme converges with order \(2-\alpha\) in time and order 2 in space. Numerical examples confirm the accuracy of the proposed method. The method is flexible, simple to implement, and computationally inexpensive.

The remainder of the paper is organized as follows. Section 2 introduces definitions and preliminaries on fractional calculus and cubic B-spline quasi-interpolation. Section 3 derives the difference scheme for the fourth-order time-fractional integro-differential equation with a weakly singular kernel. The stability and convergence of the method are investigated in Sections 4 and 5, respectively. Section 6 presents numerical examples that illustrate the theoretical results, and Section 7 concludes the paper.

2 Some definitions and preliminaries

The domain is discretized by a uniform grid of mesh points \((x_j,t_k)\) with \(x_{j}=jh\), \(h=\frac{L}{M}\), \(0\le j\le M\), and \({t_k} = k\tau\), \(\tau =\frac{T}{N}\), \(0\le k\le N\). The values of the function u at the grid points are denoted by \(u(x_{i},t_{k})\), and \(U_{i}^{k}\) is the approximate solution at the point \((x_{i},t_{k})\).

Definition 1

The left- and right-sided Riemann–Liouville integrals of a suitably smooth function f(x) on (a, b) are defined by [31, 49, 50]

respectively.

Definition 2

The left- and right-sided Riemann–Liouville derivatives of order \(\alpha\) are defined by [31, 49, 51]

and

respectively, where n is a positive integer satisfying \(n-1<\alpha \le n\).

Definition 3

The left- and right-sided Caputo derivatives of order \(\alpha\) are defined by [16, 31, 49]

and

respectively, where n is a positive integer satisfying \(n-1<\alpha \le n\).
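For \(0<\alpha <1\) (so n = 1) and a = 0, Definitions 1–3 have well-known closed forms on power functions. First, \(_{RL}{\mathcal {I}}_{0,t}^{\alpha }t^{\mu }=\frac{\Gamma (\mu +1)}{\Gamma (\mu +1+\alpha )}t^{\mu +\alpha }\). Second, the left Riemann–Liouville derivative of \(t^{\mu }\) is \(\frac{\Gamma (\mu +1)}{\Gamma (\mu +1-\alpha )}t^{\mu -\alpha }\). Third, the left Caputo derivative agrees with it for \(\mu >0\) but vanishes for \(\mu =0\). These identities can be convenient when building source terms for test problems whose exact solutions are powers of t. The Python sketch below (hypothetical helper names) highlights the difference that matters here: the Caputo derivative of a constant is zero, while the Riemann–Liouville derivative of a constant is not.

```python
import math


def rl_integral_power(mu, t, alpha):
    """Left Riemann-Liouville integral (a = 0) of t**mu, for mu > -1."""
    return math.gamma(mu + 1.0) / math.gamma(mu + 1.0 + alpha) * t ** (mu + alpha)


def rl_derivative_power(mu, t, alpha):
    """Left Riemann-Liouville derivative (a = 0, 0 < alpha < 1) of t**mu, for mu >= 0."""
    return math.gamma(mu + 1.0) / math.gamma(mu + 1.0 - alpha) * t ** (mu - alpha)


def caputo_derivative_power(mu, t, alpha):
    """Left Caputo derivative (a = 0, 0 < alpha < 1) of t**mu, for mu >= 0.

    The Caputo derivative acts on u' rather than u, so a constant (mu = 0) maps
    to 0; for mu > 0 it coincides with the Riemann-Liouville derivative.
    """
    if mu == 0:
        return 0.0
    return rl_derivative_power(mu, t, alpha)


alpha, t = 0.5, 1.0
print(rl_derivative_power(0.0, t, alpha))      # 1/Gamma(1/2) = 1/sqrt(pi), about 0.5642
print(caputo_derivative_power(0.0, t, alpha))  # 0.0
```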

Lemma 1

(\(L_1\) approximation) Let \(\alpha \in (0,1)\) and \(u(\cdot ,t)\in C_t^2([0,T])\). Then the following approximation formula holds [52, 53]

in which

where C is a positive constant given by
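The display of Lemma 1 is not reproduced in this copy. The Python sketch below assumes the classical L1 formula as in [52]:

\(_{C}{\mathcal {D}}_{0,t}^{\alpha }u(t_k)\approx \frac{1}{\mu }\sum _{j=0}^{k-1}b_j\left( u^{k-j}-u^{k-j-1}\right)\), with \(b_j=(j+1)^{1-\alpha }-j^{1-\alpha }\) and \(\mu =\tau ^{\alpha }\Gamma (2-\alpha )\).

Here \(\mu\) is the same constant as in Section 3. The sketch evaluates the formula and estimates its observed order. The formula is exact for linear functions, and for \(u=t^2\) the error should decay like \(\tau ^{2-\alpha }\). The helper name `l1_caputo` is hypothetical.

```python
import math


def l1_caputo(u_hist, tau, alpha):
    """L1 approximation of the Caputo derivative of order alpha at t_k = k * tau.

    u_hist = [u(t_0), ..., u(t_k)].  Returns
    (1/mu) * sum_{j=0}^{k-1} b_j * (u^{k-j} - u^{k-j-1}),
    with b_j = (j+1)**(1-alpha) - j**(1-alpha) and mu = tau**alpha * Gamma(2 - alpha).
    """
    k = len(u_hist) - 1
    mu = tau ** alpha * math.gamma(2.0 - alpha)
    total = 0.0
    for j in range(k):
        b_j = (j + 1) ** (1.0 - alpha) - j ** (1.0 - alpha)
        total += b_j * (u_hist[k - j] - u_hist[k - j - 1])
    return total / mu


# Observed order for u(t) = t**2 at t = 1; the exact value is 2 / Gamma(3 - alpha).
alpha = 0.5
exact = 2.0 / math.gamma(3.0 - alpha)
errors = []
for N in (200, 400):
    tau = 1.0 / N
    errors.append(abs(l1_caputo([(k * tau) ** 2 for k in range(N + 1)], tau, alpha) - exact))
print("observed order:", math.log2(errors[0] / errors[1]))
```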

Lemma 2

Let \(\beta \in (0,1)\) and let \(u(\cdot , t)\) be suitably smooth on (0, T). Then the operator \({\mathcal {I}}^{(\beta )}\) satisfies [49]

where
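Lemma 2 is the fractional trapezoidal rule, \({\mathcal {I}}^{(\beta )}u(t_k)\approx \sum _{j=0}^{k}a_{k,j}u(t_j)\). Its weights are not displayed in this copy. The sketch below assumes the standard product-trapezoidal weights, obtained by integrating the piecewise linear interpolant of u exactly against the kernel. The same weights appear in the corrector of the Adams-type predictor–corrector method of Diethelm, Ford, and Freed. With \(c=\tau ^{\beta }/\Gamma (\beta +2)\), they are:

- \(a_{k,0}=c\,[(k-1)^{\beta +1}-(k-1-\beta )k^{\beta }]\);
- \(a_{k,j}=c\,[(k-j+1)^{\beta +1}-2(k-j)^{\beta +1}+(k-j-1)^{\beta +1}]\) for \(1\le j\le k-1\);
- \(a_{k,k}=c\).

It is an assumption that this normalization matches the \(a_{k,k}\) entering \(l_1\) in Section 3. The rule is exact for linear functions and second-order accurate for smooth u.

```python
import math


def frac_trapezoid_weights(k, beta, tau):
    """Product-trapezoidal weights a_{k,j}, j = 0..k, for the Riemann-Liouville
    integral of order beta at t_k = k * tau (piecewise linear interpolation of u)."""
    if k == 0:
        return [0.0]  # empty integration interval
    p = beta + 1.0
    w = [0.0] * (k + 1)
    w[0] = (k - 1) ** p - (k - 1 - beta) * k ** beta
    for j in range(1, k):
        m = k - j
        w[j] = (m + 1) ** p - 2.0 * m ** p + (m - 1) ** p
    w[k] = 1.0
    scale = tau ** beta / math.gamma(beta + 2.0)
    return [scale * x for x in w]


def frac_trapezoid(u_hist, tau, beta):
    """Approximate I^(beta) u(t_k) from samples u_hist = [u(t_0), ..., u(t_k)]."""
    a = frac_trapezoid_weights(len(u_hist) - 1, beta, tau)
    return sum(a_j * u_j for a_j, u_j in zip(a, u_hist))


beta, tau, k = 0.6, 0.05, 20
linear = [j * tau for j in range(k + 1)]
# For u(t) = t the rule is exact: I^(beta) t = t^(1+beta) / Gamma(2+beta) at t = 1.
print(frac_trapezoid(linear, tau, beta), 1.0 / math.gamma(2.0 + beta))
```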

We now introduce B-splines and the univariate B-spline quasi-interpolants used in the next section. To define B-splines, we need the concept of a knot sequence.

Definition 4

A knot sequence \(\varvec{\xi }\) is a nondecreasing sequence of real numbers,

The elements \(\xi _i\) are called knots.

Provided that \(m\ge p+2\), we can define B-splines of degree p over the knot sequence \(\varvec{\xi }\).

Definition 5

Suppose for a nonnegative integer p and some integer j that \(\xi _{j-p-1}\le \xi _{j-p}\le \cdots \le \xi _{j}\) are \(p+2\) real numbers taken from a knot sequence \(\varvec{\xi }\). The j-th B-spline \(B_{j,p,\varvec{\xi }}:{\mathbb {R}}\rightarrow {\mathbb {R}}\) of degree p is identically zero if \(\xi _{j-p-1}=\xi _{j}\) and otherwise defined recursively by [54]

starting with

A B-spline of degree 3 is also called a cubic B-spline. Using the relation (14), the cubic B-splines \(B_{j,3,\varvec{\xi }}\) are given by
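The recursion of Definition 5 is not displayed here. The Python sketch below assumes it has the standard Cox–de Boor form. The sketch uses 0-based list indexing, in which the i-th B-spline of degree p is supported on \([t_i,t_{i+p+1}]\). This corresponds to \(B_{i+p+1,p,\varvec{\xi }}\) of Definition 5 when \(t_i=\xi _i\). Terms with a zero-length denominator are dropped, matching the convention that a B-spline over coincident knots is identically zero. On the uniform knots 0, 1, 2, 3, 4, the cubic B-spline takes the values 1/6, 2/3, and 1/6 at x = 1, 2, 3.

```python
def bspline(i, p, t, x):
    """Value at x of the i-th B-spline of degree p on the knot list t (Cox-de Boor).

    B_i is supported on [t[i], t[i+p+1]]; degree-0 pieces use half-open
    intervals [t[i], t[i+1]), so the right end of the knot list is excluded.
    """
    if p == 0:
        return 1.0 if t[i] <= x < t[i + 1] else 0.0
    value = 0.0
    if t[i + p] > t[i]:  # skip the 0/0 term for coincident knots
        value += (x - t[i]) / (t[i + p] - t[i]) * bspline(i, p - 1, t, x)
    if t[i + p + 1] > t[i + 1]:
        value += (t[i + p + 1] - x) / (t[i + p + 1] - t[i + 1]) * bspline(i + 1, p - 1, t, x)
    return value


uniform = [0.0, 1.0, 2.0, 3.0, 4.0]
print([bspline(0, 3, uniform, x) for x in (1.0, 2.0, 3.0)])  # approximately [1/6, 2/3, 1/6]
```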

Following [54], suppose that for integers \(n>p\ge 0\) a knot sequence

is given. This knot sequence allows us to define a set of \(n+p\) B-splines of degree p, namely

We consider the space of splines spanned by the B-splines in (16) over the interval \([\xi _{0},\xi _{n}]\),

We now introduce two properties of knot sequences that are crucial for splines.

Definition 6

A knot sequence \(\varvec{\xi }\) is called \((p+1)\)-regular if \(\xi _{i-p-1}<\xi _{i}\) for \(i=1,\cdots ,n+p\). Such a knot sequence ensures that none of the B-splines in (16) is identically zero [54].

Definition 7

A knot sequence \(\varvec{\xi }\) is called \((p+1)\)-open on an interval [a, b] if it is \((p+1)\)-regular and its end knots have multiplicity \(p+1\), i.e., [54]
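Consider a uniform grid with n intervals. A (p+1)-open knot sequence on it repeats each end point p+1 times and uses each interior grid point once. This gives n + 2p + 1 knots, \(\xi _{-p},\dots ,\xi _{n+p}\), and hence n + p B-splines. The short Python sketch below (hypothetical helper names) builds such a sequence and checks the regularity condition of Definition 6. Because the list starts at \(\xi _{-p}\), list index k stands for \(\xi _{k-p}\). The condition \(\xi _{i-p-1}<\xi _{i}\) therefore becomes knots[i-1] < knots[i+p].

```python
def open_knots(a, b, n, p):
    """(p+1)-open uniform knot list xi_{-p}, ..., xi_{n+p} on [a, b] with n intervals."""
    interior = [a + i * (b - a) / n for i in range(1, n)]
    return [a] * (p + 1) + interior + [b] * (p + 1)


def is_regular(knots, p):
    """Definition 6: xi_{i-p-1} < xi_i for i = 1, ..., n+p (list index k <-> xi_{k-p})."""
    num_splines = len(knots) - p - 1  # equals n + p for an open sequence
    return all(knots[i - 1] < knots[i + p] for i in range(1, num_splines + 1))


knots = open_knots(0.0, 1.0, 4, 3)
print(knots, len(knots) - 3 - 1, is_regular(knots, 3))  # 7 cubic B-splines, regular
```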

Suppose \(\{B_{j,p,\varvec{\xi }}\}_{j=1}^{n+p}\) form a basis for \({\mathcal {S}}_{p,\varvec{\xi }}\). For each \(j=1,\cdots ,n+p,\) let \(\lambda _{j}\) be a linear functional defined on C[a, b] that can be computed from values of f at some set of points in [a, b]. We have the following definition.

Definition 8

A formula of the form

is called a B-spline quasi-interpolation formula of degree p [55].

According to [54, 56], the error of a quasi-interpolant satisfies

where \(S_{\varvec{\xi }}^{p}=[\xi _{0},\xi _{n}]\), \(S_{x}\) is the union of the supports of all B-splines \(B_{i,p,\varvec{\xi }}\) with \(i\sim x\), i.e., of the B-splines that do not vanish at x, \(\Vert f^{(p+1)}\Vert _{\infty ,S_{x}}\) denotes the maximum norm of \(f^{(p+1)}\) on \(S_{x}\), and \(\Delta (x)=\max _{y\in S_{x}}|y-x|\). If the local mesh ratio is bounded, i.e., if the quotients of the lengths of adjacent knot intervals are \(\le r_{y}\), then the error of the derivatives on the knot intervals in \((\xi _{0},\xi _{n})\) can be estimated by

for \(j\le p\).

Suppose \(a=x_{0}<\dots <x_{n}=b\) are equally spaced points in the interval [a, b]. We have the following theorem.

Theorem 1

Given a function f defined on [a, b], let

Then (19) defines a linear operator mapping C[a, b] into \({\mathcal {S}}_{3,\varvec{\xi }}\) with \(Q_{3}s=s\) for all cubic polynomials s [55].
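The functionals \(\lambda _j\) of Theorem 1 are not displayed in this copy. For illustration, we assume a common choice for cubic splines on uniform nodes with a 4-open knot sequence, the Sablonnière-type discrete quasi-interpolant:

- \(\lambda _1f=f_0\);
- \(\lambda _2f=\frac{1}{18}(7f_0+18f_1-9f_2+2f_3)\);
- \(\lambda _jf=\frac{1}{6}(-f_{j-3}+8f_{j-2}-f_{j-1})\) for \(3\le j\le n+1\);
- \(\lambda _{n+2}f=\frac{1}{18}(2f_{n-3}-9f_{n-2}+18f_{n-1}+7f_n)\);
- \(\lambda _{n+3}f=f_n\).

This choice requires n ≥ 3. The Python sketch below evaluates \(Q_3f\) with these coefficients and checks that it reproduces a cubic polynomial, as Theorem 1 states. Only function values at the nodes are used, and no linear system is solved. All function names are hypothetical.

```python
def open_knots(a, b, n):
    """Cubic 4-open uniform knots: a repeated 4 times, interior nodes, b repeated 4 times."""
    interior = [a + i * (b - a) / n for i in range(1, n)]
    return [a] * 4 + interior + [b] * 4


def bspline(i, p, t, x):
    """Cox-de Boor recursion; the last non-empty span is closed so that x = b is covered."""
    if p == 0:
        if t[i] <= x < t[i + 1]:
            return 1.0
        return 1.0 if (x == t[-1] and t[i] < t[i + 1] == t[-1]) else 0.0
    value = 0.0
    if t[i + p] > t[i]:
        value += (x - t[i]) / (t[i + p] - t[i]) * bspline(i, p - 1, t, x)
    if t[i + p + 1] > t[i + 1]:
        value += (t[i + p + 1] - x) / (t[i + p + 1] - t[i + 1]) * bspline(i + 1, p - 1, t, x)
    return value


def qi_coefficients(f):
    """Spline coefficients lambda_1 f, ..., lambda_{n+3} f from samples f_0..f_n (n >= 3)."""
    n = len(f) - 1
    c = [0.0] * (n + 3)
    c[0] = f[0]
    c[1] = (7 * f[0] + 18 * f[1] - 9 * f[2] + 2 * f[3]) / 18
    for j in range(3, n + 2):  # lambda_j for j = 3, ..., n + 1 (stored at c[j - 1])
        c[j - 1] = (-f[j - 3] + 8 * f[j - 2] - f[j - 1]) / 6
    c[n + 1] = (2 * f[n - 3] - 9 * f[n - 2] + 18 * f[n - 1] + 7 * f[n]) / 18
    c[n + 2] = f[n]
    return c


def q3(samples, a, b, x):
    """Evaluate Q_3 f(x) = sum_j (lambda_j f) B_j(x) on the open uniform knots."""
    n = len(samples) - 1
    t = open_knots(a, b, n)
    c = qi_coefficients(samples)
    return sum(c[i] * bspline(i, 3, t, x) for i in range(n + 3))


def cubic(x):
    return x ** 3 - 2 * x + 1


a, b, n = 0.0, 1.0, 8
nodes = [a + i * (b - a) / n for i in range(n + 1)]
samples = [cubic(x) for x in nodes]
for x in (0.0, 0.13, 0.5, 1.0):
    print(x, q3(samples, a, b, x) - cubic(x))  # differences at rounding level
```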

To approximate the derivatives of f by those of \(Q_{3}f\), with errors of order \(h^{3}\) for \(f'\) and \(h^{2}\) for \(f''\) by the derivative estimate above with p = 3, we evaluate \(f'\) and \(f''\) at \(x_{j}\) through \((Q_{3}f)'(x)=\sum \nolimits _{j = 1}^{n+3}(\lambda _{j}f)B^{'}_{j,3,\varvec{\xi }}(x)\) and \((Q_{3}f)''(x)=\sum \nolimits _{j= 1}^{n+3}(\lambda _{j}f)B^{''}_{j,3,\varvec{\xi }}(x)\). We set \(Y=(f_{0},f_{1},\dots ,f_{n})^{T}\), \(Y'=(f'_{0},f'_{1},\dots ,f'_{n})^{T}\), and \(Y''=(f''_{0},f''_{1},\dots ,f''_{n})^{T}\), where \(f_{j}^{'}=(Q_{3}f)'(x_{j})\) and \(f_{j}^{''}=(Q_{3}f)''(x_{j})\), \(j=0,\dots ,n\). The first and second derivatives of \(Q_3(f)\) are calculated as

where \(B^{'}_{i,p,\varvec{\xi }}(x)\) and \(B^{''}_{i,p,\varvec{\xi }}(x)\) are obtained from (15) with \(\xi _j=x_j\) for \(j=0,1,\dots ,n\). Now, according to (23) and (24), we have

and

Therefore, the approximations of \(f'\) and \(f''\) can be written in the following matrix form

where \(D_{1},D_{2}\in {\mathbb {R}}^{(n+1)\times (n+1)}\) are obtained as follows:

3 Description of the difference scheme

In this section, we construct a difference scheme for solving (1).

Considering (2) at the point \((x_i,t_k)\), one has

Using (10), (13), (23), and (24), equation (26) can be approximated by

where \(|(R_1)_i^k|\le C(\tau ^{2-\alpha }+h^2)\) and \(|(R_2)_i^k|\le Ch^2\).

After simplification we obtain

where \(\mu =\tau ^{\alpha }\Gamma (2-\alpha )\).

Ignoring \((R_1)_i^k,(R_2)_i^k\) and replacing the functions \(u_i^k\) and \(v_i^k\) with their numerical approximations \(U_i^k\) and \(V_i^k\) in (29), we obtain the following difference scheme

Setting \(l_1=\mu +\mu a_{k,k}\) and \(l_{2}=\frac{\mu }{h^2}\), at each time step we obtain the following system of linear equations

where

where B and C are pentadiagonal matrices and I is the identity matrix.

4 Stability analysis

In this section, we analyze the stability of the scheme (31)–(33) by the Fourier method [57]. We assume that the exact solution u is continuous and that its derivative is square integrable. Let \(\tilde{U}^{k}_{j}\) be an approximate (perturbed) solution of the scheme, and define

\(\zeta ^{k}_{j}={U}^{k}_{j}-\tilde{U}^{k}_{j}, \qquad 1\le j\le M-1, \quad 1\le k\le N,\)

with corresponding vector

So that

Set

then we have

Next, we define the grid functions as follows:

We can expand \(\zeta ^{k}(x)\) into a Fourier series

where

Denoting

and using the Parseval equality

one has
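The argument above relies on Parseval's equality. As a hedged illustration of its discrete analogue (not the paper's own construction of \(\zeta ^{k}(x)\)), take \(x_j=jh\) and \(h=L/M\). Define Fourier coefficients \(c_l=\frac{h}{L}\sum _{j=0}^{M-1}\zeta _je^{-\mathrm{i}\sigma _xjh}\) with \(\sigma _x=2\pi l/L\). Then \(h\sum _j|\zeta _j|^2=L\sum _l|c_l|^2\). The Python sketch below checks this with NumPy's FFT. It also shows that a single mode \(\zeta _j=e^{\mathrm{i}\sigma _xjh}\), the form assumed in the stability analysis, has exactly one nonzero coefficient. The function name is hypothetical.

```python
import numpy as np


def fourier_coefficients(zeta, L):
    """c_l = (h / L) * sum_j zeta_j * exp(-2*pi*i*l*x_j / L), with x_j = j*h, h = L / M."""
    M = len(zeta)
    h = L / M
    return (h / L) * np.fft.fft(zeta)


L, M = 1.0, 64
h = L / M
rng = np.random.default_rng(0)
zeta = np.zeros(M)
zeta[1:] = rng.standard_normal(M - 1)  # zeta_0 = 0, like an error vanishing at the boundary
coeffs = fourier_coefficients(zeta, L)
lhs = h * np.sum(np.abs(zeta) ** 2)
rhs = L * np.sum(np.abs(coeffs) ** 2)
print(lhs, rhs)  # equal up to rounding
```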

We can expand \(\zeta _{j}^{k}\) into a Fourier series. Because the difference equations are linear, the behavior of the total error can be analyzed by tracking an arbitrary Fourier component [58]. We therefore assume that the solution of (37) has the following form

where \(\sigma _{x}=2\pi l/L\). Substituting the above expression into (37), we obtain

where

Theorem 2

Let \(d_{k}\) \((1\le k\le N-1)\) be defined by (44). Then

\(\vert d_{k} \vert \le C_k \vert d_{0} \vert , \quad k=1,2,\cdots ,N-1.\)

Proof

We prove the claim by mathematical induction. For \(k=1\), we show that there exists a constant \(C_1\) such that

For this purpose, we have

and

We take the limit of \(\frac{1+rs'a_{0,1}}{z}\) as \(\tau \rightarrow 0\) and \(h\rightarrow 0\) while keeping the ratio \(\frac{\mu }{h^2}=\frac{\tau ^{\alpha }\Gamma (2-\alpha )}{h^2}\) equal to a fixed constant H. Then

As a result, there exists a positive constant \(C_1\), independent of N and M, such that

Assume that

We have

Now assume that

then, similarly to the case \(k=1\), we obtain

This completes the proof. \(\square\)

Theorem 3

The finite difference scheme (31)–(35) is unconditionally stable for \(\alpha \in (0,1).\)

Proof

By Theorem 2 and Parseval’s equality, we obtain

so that

which indicates that the numerical scheme is stable. \(\square\)

5 Convergence

In this section, we prove the convergence of the difference scheme (31)–(35). As in the previous section, let \(e_{j}^{k}=u_{j}^{k}-U_{j}^{k}\), \(1\le j\le M-1\), \(0\le k\le N-1\), and denote \(e^{k}=(e^{k}_{1},e^{k}_{2},\ldots ,e^{k}_{M-1})^{T}\), \({\textbf{R}}^{k}=(R^{k}_{1},R^{k}_{2},\ldots ,R^{k}_{M-1})^{T}\), \(0\le k\le N-1\).

From (31)–(35), \(R_{j}^{k+1}=O(\tau ^{2-\alpha }+h^{2})\), and \(e_{j}^{0}=0\), we obtain, similarly to (37),

Following the stability analysis, we define the functions

and

We expand \(e^{k}(x)\) and \(R^{k}(x)\) into the Fourier series

where

Applying the Parseval equality

and

we have

Now, we suppose that

where \(\sigma _{x}=\frac{2l\pi }{L}\). Substituting the above relations into (46) leads to

Lemma 3

(Discrete Gronwall inequality) Let \(\{y_n\}\) and \(\{g_n\}\) be nonnegative sequences and let b be a nonnegative constant. If [59]

then
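The displayed inequality of Lemma 3 is missing from this copy. A commonly used form states: if \(y_n\le b+\sum _{k=0}^{n-1}g_ky_k\) for all n, then \(y_n\le b\exp \big (\sum _{k=0}^{n-1}g_k\big )\). We assume this form for illustration only; the paper's statement may differ in detail. The extremal sequence, for which the hypothesis holds with equality, is \(y_n=b\prod _{k<n}(1+g_k)\). The bound then follows from \(1+g\le e^{g}\). The Python sketch below checks this numerically for hypothetical data.

```python
import math


def extremal_sequence(b, g):
    """y_0, ..., y_N with y_n = b + sum_{k<n} g_k y_k (equality in the hypothesis)."""
    y = []
    for n in range(len(g) + 1):
        y.append(b + sum(g[k] * y[k] for k in range(n)))
    return y


def gronwall_bound(b, g, n):
    """Right-hand side b * exp(sum_{k<n} g_k) of the assumed conclusion."""
    return b * math.exp(sum(g[:n]))


b = 2.0
g = [0.1 * (k % 3) for k in range(20)]  # hypothetical nonnegative data
y = extremal_sequence(b, g)
print(all(y[n] <= gronwall_bound(b, g, n) for n in range(len(y))))  # True
```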

Theorem 4

If \(\eta _{k}\) is the solution of Equation (54), then there is a positive constant C such that

Proof

Since the series on the right-hand side of Equation (53) converges, there exists a positive constant \(C_{2}\) such that

By Equations (54) and (56) and Theorem 2, we have

This completes the proof. \(\square\)

Theorem 5

The difference scheme (31)–(35) is convergent, and the order of convergence is \(O(\tau ^{2-\alpha } +h^{2})\).

Proof

By Theorem 4 and Equation (56), we obtain

Furthermore, there exists a positive constant \(C_1\) such that

Hence,

where \(C'=C\sqrt{L}\). This completes the proof. \(\square\)

6 Numerical experiments

In this section, five test problems are presented to check the effectiveness, validity, stability, and convergence orders of the present method. The domain in all examples is \(\Omega =[0,1]\times [0,1]\). All computations are implemented with MATLAB R2020b. The error norms used in this section are as follows:

where \(e_{j}^{k}=u(x_{j},t_{k})-U_{j}^{k}\). In all examples, the convergence rates are computed by the following formulas:
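The displayed norms and rate formulas are not reproduced in this copy. The Python sketch below assumes the usual choices:

- the discrete \(L_2\) norm \(\big (h\sum _j|e_j^N|^2\big )^{1/2}\);
- the maximum norm \(\max _j|e_j^N|\);
- the observed order \(\log (E_1/E_2)/\log (h_1/h_2)\) between successive step sizes, with \(\tau\) in place of h for temporal rates.

The error values in the usage are synthetic, not data from the paper's tables, and the function names are hypothetical.

```python
import math


def error_norms(exact, approx, h):
    """Discrete L2 norm sqrt(h * sum e_j^2) and maximum norm of e_j = u(x_j, t_N) - U_j^N."""
    e = [u - U for u, U in zip(exact, approx)]
    l2 = math.sqrt(h * sum(ej * ej for ej in e))
    linf = max(abs(ej) for ej in e)
    return l2, linf


def observed_orders(steps, errors):
    """Order between consecutive runs: log(E_{i-1}/E_i) / log(s_{i-1}/s_i)."""
    return [math.log(errors[i - 1] / errors[i]) / math.log(steps[i - 1] / steps[i])
            for i in range(1, len(errors))]


# Synthetic errors E = 3 h^2 recover order 2 for every pair of step sizes.
hs = [1 / 10, 1 / 20, 1 / 40, 1 / 80]
print(observed_orders(hs, [3.0 * h ** 2 for h in hs]))  # each entry approximately 2
```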

Example 1

For the first example, consider the following problem:

with the initial condition \(u^0(x)=0\). The source term is

The exact solution is

Tables 1 and 3 record the error norms and convergence orders in the spatial direction for different values of \(\alpha\) and \(\beta\), and Table 2 reports the orders of convergence in time. For each value of \(\alpha\) and \(\beta\), we use the spatial step sizes \(h=1/10,1/20,\dots ,1/320\) with a fixed time step \(\tau\) to obtain the numerical convergence rates in space, which agree well with our theoretical results. Table 4 compares our results with those of [30], and Table 5 presents results for large times t.

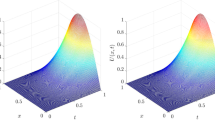

Figure 1 compares the exact and numerical solutions computed by the difference scheme with \(\tau =\frac{1}{55}\) and \(h=\frac{1}{320}\). The pointwise errors and the contour plot of the numerical solution at \(t=1\), for the same \(\tau\) and h, are shown in Figure 2, and Figure 3 compares the numerical and exact solutions at \(t=1\). Tables 1 and 3 show that the errors decrease as the spatial step size decreases. For Tables 1, 2, and 3, the CPU time is about 2 seconds. The figures show that the numerical scheme is efficient and effective.

Figure 1. The exact (left) and numerical (right) solutions at \(\tau =1/55\) and \(h=1/320\) with \(\alpha =\beta =0{.}1\) for Example 1.

Figure 2. Surface of the absolute pointwise errors for \(\tau =1/55\), \(h=\frac{1}{320}\) and contour plot of the numerical solution for Example 1.

Figure 3. Comparison between the numerical and exact solutions (left) and pointwise absolute error (right) with \(\tau =1/55\) and \(h=\frac{1}{320}\) at \(t=1\) for Example 1.

Example 2

Consider the problem (1) with exact solution \(u(x,t) = t^{\beta }\sin (\pi x),(x,t)\in \Omega\). The source term is taken as

Figure 4. The exact (left) and numerical (right) solutions at \(\tau =1/277\) and \(h=1/640\) with \(\alpha =0.8,\beta =0.6\) for Example 2.

Figure 5. Comparison between the numerical and exact solutions (left) and pointwise absolute error (right) with \(\tau =1/277\) and \(h=\frac{1}{640}\) at \(t=1\) for Example 2.

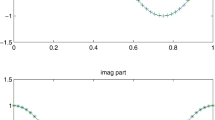

Figure 6. Pointwise errors for Example 2: top left \((\alpha =0{.}8,\beta =0{.}6,\tau =1/10,h=1/640)\), top right \((\alpha =0{.}1,\beta =0{.}3,\tau =1/277,h=1/640)\), bottom left \((\alpha =0{.}4,\beta =0{.}5,\tau =1/10,h=1/640)\), bottom right \((\alpha =0{.}6,\beta =0{.}9,\tau =1/5,h=1/640)\).

Table 6 lists the L2-norm errors and experimental orders of convergence of the difference scheme. Here we take \(\tau =\frac{1}{277}\) and choose different spatial step sizes for different values of \(\alpha\) and \(\beta\). The convergence rate in space is about 2, and the CPU time is less than 30 seconds. Table 7 compares our results with those of [30], and Table 8 presents results for large times t. Figure 4 shows the exact and numerical solutions, and Figure 5 presents the exact and numerical solutions together with the absolute error at \(t=1\). Figure 6 depicts the pointwise errors for different values of \(\alpha ,\beta ,\tau ,h\). The tables and figures show that the numerical scheme works well.

Example 3

In this example, the exact solution of problem (1) is \(u(x,t) = t^3x^3(1-x)^3\), and the inhomogeneous term is

Tables 9 and 10 give the L2-norm errors and convergence orders of the present method; the numerical solutions agree well with the exact ones. Table 11 presents results for large times t. The CPU time is less than 12 seconds. Figure 7 shows the numerical and exact solution curves, and Figure 8 shows surfaces of the pointwise error for different \(\alpha ,\beta ,\tau ,h, T\). In Figure 9, \(u(x_j,t_k)\) and \(U_j^k\) are compared at \(t=1\). The short CPU times indicate that the proposed scheme is fast.

Figure 7. The exact (left) and numerical (right) solution curves at \(\tau =1/95\) and \(h=1/1280\) with \(\alpha =0{.}7,\beta =0{.}7\) for Example 3.

Figure 8. Pointwise errors for Example 3: top left \((\alpha =0{.}7,\beta =0{.}7,\tau =1/95,h=1/1280,T=1)\), top right \((\alpha =0{.}9,\beta =0{.}1,\tau =0{.}02,h=1/640,T=2)\), bottom left \((\alpha =0{.}9,\beta =0{.}1,\tau =0{.}04,h=1/640,T=4)\), bottom right \((\alpha =0{.}8,\beta =0{.}4,\tau =0{.}08,h=1/640,T=8)\).

Figure 9. Comparison between the numerical and exact solutions (left) and absolute error (right) with \(\tau =1/277,h=\frac{1}{1280}\) and \(\alpha =0{.}7,\beta =0{.}7\) at \(t=1\) for Example 3.

Example 4

Consider the fourth-order time-fractional integro-differential equation with a weakly singular kernel (1) with the exact solution \(u(x,t) = t^2e^xx^3(1-x)^3,(x,t)\in \Omega\). The source term is taken as

Figure 10. The exact (left) and numerical (right) solutions at \(\tau =1/40\) and \(h=1/1280\) with \(\alpha =0{.}8,\beta =0{.}5\) for Example 4.

Figure 11. The pointwise error (left) and comparison between the numerical and exact solutions (right) for Example 4.

Table 12 shows that decreasing h yields more accurate results and that the method attains a good order of convergence. Table 13 reports the L2-norm errors for \(\alpha =0{.}3,0{.}8\). The CPU time is less than 12 seconds. Figure 10 shows that the exact and numerical solutions are visually indistinguishable. The pointwise error and a comparison between the numerical and exact solutions at \(t=1\) are illustrated in Figure 11. The tables and figures show that the present difference scheme is accurate.

Example 5

In the last example, we consider the exact solution \(u(x,t)=(x^2-x)^4\sin (\pi x)t^\alpha\).

Table 14 reports the L2-norm errors for \(\alpha =\beta =0.1,0.3,0.7\), and Table 15 presents numerical results for large times \(t\) (\(T=2,4,6,10\)). Tables 14 and 15 confirm the efficiency of the proposed method.

7 Conclusions

In this paper, we presented a difference scheme based on cubic B-spline quasi-interpolation for the numerical solution of a fourth-order time-fractional integro-differential equation with a weakly singular kernel. The time-fractional derivative is approximated by a scheme of order \(O(\tau ^{2-\alpha })\), the spatial derivative is replaced by a second-order approximation, and the fractional integral is approximated by the fractional trapezoidal formula. The method is easy to implement and fast. We proved the stability and convergence of the numerical method with order of convergence \(O(\tau ^{2-\alpha }+h^2)\). Five test problems were solved to demonstrate the convergence orders, applicability, and capability of the method. All numerical computations were performed in MATLAB R2020b.

Abbreviations

- \(_{RL}{\mathcal {I}}_{a,x}^{\alpha }\): Riemann–Liouville integral
- \(_{C}{\mathcal {D}}_{a,x}^{\alpha }\): Caputo fractional derivative

References

Renardy M, Nohel J A and Lodge A S 1985 Viscoelasticity and Rheology. Academic Press

Olmstead W E, Davis S H, Rosenblat S and Kath W L 1986 Bifurcation with memory. SIAM J. Appl. Math. 46(2): 171–188

Sanz-Serna J M 1988 A numerical method for a partial integro-differential equation. SIAM J. Numer. Anal. 25(2): 319–327

Chen C and Shih T 1998 Finite element methods for integrodifferential equations. World Scientific

Wang Z, Cen D and Mo Y 2021 Sharp error estimate of a compact L1-ADI scheme for the two-dimensional time-fractional integro-differential equation with singular kernels. Appl. Numer. Math. 159: 190–203

Qiu W, Xu D and Guo J 2021 The Crank-Nicolson-type Sinc-Galerkin method for the fourth-order partial integro-differential equation with a weakly singular kernel. Appl. Numer. Math. 159: 239–258

Babaei A, Banihashemi S and Cattani C 2021 An efficient numerical approach to solve a class of variable-order fractional integro-partial differential equations. Numer. Methods Partial Differ. Equ. 37(1): 674–689

Fakhar-Izadi F 2020 Fully spectral-Galerkin method for the one-and two-dimensional fourth-order time-fractional partial integro-differential equations with a weakly singular kernel. Numer. Methods Partial Differ. Equ. 38(2): 160–176

Zhang H, Han X and Yang X 2013 Quintic B-spline collocation method for fourth order partial integro-differential equations with a weakly singular kernel. Appl. Math. Comput. 219(12): 6565–6575

Dehestani H, Ordokhani Y and Razzaghi M 2020 Numerical solution of variable-order time fractional weakly singular partial integro-differential equations with error estimation. Math. Model. Anal. 25(4): 680–701

Hashemizadeh E, Ebadi M A and Noeiaghdam S 2020 Matrix Method by Genocchi Polynomials for Solving Nonlinear Volterra Integral Equations with Weakly Singular Kernels. Symmetry 12(12): 2105

Biazar J and Sadri K 2019 Solution of weakly singular fractional integro-differential equations by using a new operational approach. J. Comput. Appl. Math. 352: 453–477

Allahviranloo T 2020 Fuzzy fractional differential operators and equations. Studies in fuzziness and soft computing series. Springer Nature

Vaidyanathan S, Azar A T and Radwan AG 2018 Mathematical Techniques of Fractional Order Systems. Elsevier Science

Matouk A Z ed 2020 Advanced Applications of Fractional Differential Operators to Science and Technology. IGI Global

Yang X J, Ju X and Gao F 2020 General Fractional Derivatives with Applications in Viscoelasticity. Academic Press

Cen D, Wang Z and Mo Y 2021 Second order difference schemes for time-fractional KdV-Burgers’ equation with initial singularity. Appl. Math. Lett. 112: 106829

Cen D and Wang Z 2022 Time two-grid technique combined with temporal second order difference method for two-dimensional semilinear fractional sub-diffusion equations. Appl. Math. Lett. 129: 107919

Ou C, Cen D, Vong S and Wang Z 2022 Mathematical analysis and numerical methods for Caputo-Hadamard fractional diffusion-wave equations. Appl. Numer. Math. 177: 34–57

Abdi N, Aminikhah H and Refahi Sheikhani A H 2021 High-order rotated grid point iterative method for solving 2D time fractional telegraph equation and its convergence analysis. Comput. Appl. Math. 40: 1–26

Abdi N, Aminikhah H and Refahi Sheikhani A H 2021 On rotated grid point iterative method for solving 2D fractional reaction-subdiffusion equation with Caputo-Fabrizio operator. J. Differ. Equ. Appl. 27: 1134–60

Abdi N, Aminikhah H and Refahi Sheikhani A H 2022 High-order compact finite difference schemes for the time-fractional Black-Scholes model governing European options. Chaos Solit. Fractals. 162: 112423

Taghipour M and Aminikhah H 2022 Application of Pell collocation method for solving the general form of time-fractional Burgers equations. Math. Sci. 1–19

Taghipour M and Aminikhah H 2022 A fast collocation method for solving the weakly singular fractional integro-differential equation. Comput. Appl. Math. 41: 142

Taghipour M and Aminikhah H 2022 A spectral collocation method based on fractional Pell functions for solving time-fractional Black-Scholes option pricing model. Chaos Solit. Fractals. 163: 112571

Lin J, Bai J, Reutskiy S and Lu J 2022 A novel RBF-based meshless method for solving time-fractional transport equations in 2D and 3D arbitrary domains. Eng. Comput. 24: 1–8

Molaee T and Shahrezaee A 2022 Numerical solution of an inverse source problem for a time-fractional PDE via direct meshless local Petrov-Galerkin method. Eng. Anal. Bound. Elem. 138: 211–218

Wang H, Xu D, Zhou J and Guo J 2021 Weak Galerkin finite element method for a class of time fractional generalized Burgers’ equation. Numer. Methods Partial Differ. Equ. 37(1): 732–749

Zheng Y and Zhao Z 2020 The time discontinuous space-time finite element method for fractional diffusion-wave equation. Appl. Numer. Math. 150: 105–116

Xu D, Qiu W and Guo J 2020 A compact finite difference scheme for the fourth-order time-fractional integro-differential equation with a weakly singular kernel. Numer. Methods Partial Differ. Equ. 36(2): 439–458

Podlubny I 1998 Fractional differential equations. Elsevier

Tariq H and Akram G 2017 Quintic spline technique for time fractional fourth-order partial differential equation. Numer. Methods Partial Differ. Equ. 33(2): 445–466

Engel G, Garikipati K, Hughes T J R, Larson M J, Mazzei L and Taylor R L 2002 Continuous/discontinuous finite element approximations of fourth-order elliptic problems in structural and continuum mechanics with applications to thin beams and plates, and strain gradient elasticity. Comput. Methods Appl. Mech. Eng. 191(34): 3669–3750

Miller R K 1978 An integrodifferential equation for rigid heat conductors with memory. J. Math. Anal. Appl. 66(2): 313–332

Bazgir H and Ghazanfari B 2019 Spectral solution of fractional fourth order partial integro-differential equations. Comput. methods differ. equ. 7(2): 289–301

Baleanu D, Darzi R and Agheli B 2017 New study of weakly singular kernel fractional fourth-order partial integro-differential equations based on the optimum q-homotopic analysis method. J. Comput. Appl. Math. 320: 193–201

Heydari M H and Avazzadeh Z 2021 Orthonormal Bernstein polynomials for solving nonlinear variable-order time fractional fourth-order diffusion-wave equation with nonsingular fractional derivative. Math. Methods Appl. Sci. 44(4): 3098–3110

Abdelkawy M A, Babatin M M and Lopes A M 2020 Highly accurate technique for solving distributed-order time-fractional-sub-diffusion equations of fourth order. Comput. Appl. Math. 39(2): 1–22

Yang X, Xu D and Zhang H Quasi-wavelet based numerical method for fourth-order partial integro-differential equations with a weakly singular kernel. Int. J. Comput. Math. 88(15): 3236–3254

Roul P and Goura V P 2020 A high order numerical method and its convergence for time-fractional fourth order partial differential equations. Appl. Math. Comput. 366: 124727

Zhu C G and Kang W S 2010 Numerical solution of Burgers-Fisher equation by cubic B-spline quasi-interpolation. Appl. Math. Comput. 216(9): 2679–2686

Zhu C G and Wang R H 2009 Numerical solution of Burgers’ equation by cubic B-spline quasi-interpolation. Appl. Math. Comput. 208(1): 260–272

Zhang J, Zheng J and Gao Q 2018 Numerical solution of the Degasperis-Procesi equation by the cubic B-spline quasi-interpolation method. Appl. Math. Comput. 324: 218–227

Taghipour M and Aminikhah H 2021 A B-Spline Quasi Interpolation Crank-Nicolson Scheme for Solving the Coupled Burgers Equations with the Caputo-Fabrizio Derivative. Math. Probl. Eng.

Sun Z 2009 The Method of Order Reduction and Its Application to the Numerical Solutions of Partial Differential Equations. Science Press, Beijing

Mittal R C, Kumar S and Jiwari R 2020 A cubic B-spline quasi-interpolation method for solving two-dimensional unsteady advection diffusion equations. Int. J. Numer. Methods Heat Fluid Flow. 30(9): 4281–4306

Mittal R C, Kumar S and Jiwari R 2021 A Comparative Study of Cubic B-spline-Based Quasi-interpolation and Differential Quadrature Methods for Solving Fourth-Order Parabolic PDEs. Proc. Natl. Acad. Sci. India - Phys. Sci. 91(3): 461–474

Sablonniere P 2005 Univariate spline quasi-interpolants and applications to numerical analysis. Rend. Semin. Mat. Univ. 63(3): 211–222

Li C and Cai M 2019 Theory and numerical approximations of fractional integrals and derivatives. SIAM

Yang X J 2019 General Fractional Derivatives: Theory, Methods and Applications. Chapman and Hall/CRC

Yang X J 2019 General Fractional Derivatives: Theory, Methods and Applications. Chapman and Hall/CRC

Lin Y M and Xu C J 2007 Finite difference/spectral approximations for the time-fractional diffusion equation. J. Comput. Phys. 225: 1533–1552

Sun L Y and Zhu C G 2020 Cubic B-spline quasi-interpolation and an application to numerical solution of generalized Burgers-Huxley equation. Adv. Mech. Eng. 12(11): 1687814020971061

Kunoth A, Lyche T, Sangalli G and Serra-Capizzano S 2018 Splines and PDEs: From approximation theory to numerical linear algebra. Cetraro: Springer

Schumaker L 1981 Spline functions: basic theory. Wiley Interscience

Hollig K and Horner J 2013 Approximation and modeling with B-splines. SIAM

Chen C, Liu F and Burrage K 2008 Finite difference methods and a Fourier analysis for the fractional reaction–subdiffusion equation. Appl. Math. Comput. 198: 754–769

Bernatz R 2010 Fourier series and numerical methods for partial differential equations. New York: Wiley

Holte J M 2009 Discrete Gronwall lemma and applications, MAA-NCS Meeting at the University of North Dakota

Acknowledgements

We sincerely appreciate the anonymous referees’ insightful comments, which helped to strengthen the final text.

Cite this article

Taghipour, M., Aminikhah, H. A difference scheme based on cubic B-spline quasi-interpolation for the solution of a fourth-order time-fractional partial integro-differential equation with a weakly singular kernel. Sādhanā 47, 253 (2022). https://doi.org/10.1007/s12046-022-02005-y
