Abstract

Firefly algorithm is one of the most promising population-based meta-heuristic algorithms. It has been successfully applied in many optimization problems. Several modifications have been proposed to the original algorithm to boost the performance in terms of accuracy and speed of convergence. This work proposes a partition cum unification based genetic firefly algorithm to explore the benefits of both the algorithms in a novel way. With this, the initial population is partitioned into two compartments based on a weight factor. An improved firefly algorithm runs in the first compartment, whereas, the genetic operators like selection, crossover, and mutation are applied on the relatively inferior fireflies in the second compartment giving added exploration abilities to the weaker solutions. Finally, unification is applied on the subsets of fireflies of the two compartments before going to the next iterative cycle. The new algorithm in three variants of weightage factor have been compared with the two constituents i.e. standard firefly algorithm and genetic algorithm, additionally with some state-of-the-art meta-heuristics namely particle swarm optimization, cuckoo search, flower pollination algorithm, pathfinder algorithm and bio-geography based optimization on 19 benchmark objective functions covering different dimensionality of the problems viz. 2-D, 16-D, and 32-D. The new algorithm is also tested on two classical engineering optimization problems namely tension-compression spring and three bar truss problem and the results are compared with all the other algorithms. Non-parametric statistical tests, namely Wilcoxon rank-sum tests are conducted to check any significant deviations in the repeated independent trials with each algorithm. Multi criteria decision making tool is applied to statistically determine the best performing algorithm given the different test scenarios. The results show that the new algorithm produces the best objective function value for almost all the functions including the engineering problems and it is way much faster than the standard firefly algorithm.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Meta-heuristic algorithms are approximate techniques for solving an optimization problem. They capitalize on intuitions along with randomness property in their search for improving solutions at hand through successive iterations. Nature has inspired the development of many meta-heuristic algorithms [1, 2]. The earliest of this kind, in the form of genetic algorithm (GA) [3, 4] was motivated by Darwin’s survival of the fittest by means of natural selection. Several researchers have shown there keen interests in this beautiful domain since then. As a result, numerous meta-heuristic algorithms have emerged over years [5, 6].

Different creatures communicate with each other through various unique modes of communication. Fireflies use their flashing lights of varying intensity to communicate for attracting mates or warning predators. A suitable mate would either communicate back mimicking the same pattern of flash or would produce response in a different flashing pattern. The intensity of the light which is the key source of attraction also depends on the distance between the source and the observer because of absorption in air. Environmental conditions like wind, darkness or fogginess also regulate the intensity. This phenomenon besides soothing the eyes of nature lovers in the darkness of summer nights has become the epitome of inspiration for many scientific researchers mainly dealing with optimization problems.

1.1 Standard firefly algorithm

Fireflies may be conceived as candidate solutions in the search space. The attraction and movements offer heuristic goals to achieve brighter fireflies i.e. better solutions. The standard firefly algorithm (FA) which has been developed by Yang [7] has two parts in the position updates as shown in Equation (1),

where, \(x_i\) is the position of the firefly that is to be updated based on the difference of position of another firefly denoted by \(x_j\); in the first part, which is driven by attraction heuristic, \(\beta _0\) is the light intensity when the distance between them is 0, \(\gamma \) is the absorption coefficient and \(r_{ij}\) is the Euclidean distance between them; in the second part, which models random movement, \(\alpha \) is the coefficient of randomness and \(\varepsilon ()\) yields a random value in [0, 1].

1.2 Modifications of standard FA

FA is prone to premature convergence triggering efforts to relax the constant parameters used therein. Studies also show that this is comparatively slow and often suffers from problems of falling into local minima [8]. The movement update function solely depends on the present light intensity or fitness and no previous memory is preserved. Furthermore, the algorithm parameters are fixed for all the successive iterations making the step length of learning, constant, thereby foregoing the needed exploitation especially at the later stages. Enormous efforts have been channelled to boost the performance of the standard FA [9, 10]. The various modifications, in accordance with some broad categories are as follows.

1.2a Based on adaptive parameters: In this category, the parameters i.e. the user defined constants, used in like any other meta-heuristic algorithms, have been updated in the successive iterations. These constants control the degree of exploitation and exploration. The randomness coefficient \(\alpha \) has been tuned based on the current iteration number by Shakarami and Sedaghati [11]. Kavousi-Fard et al [12] have improvised the randomness part using crossover and mutation operators. Modification of attraction related parameters (\(\beta _0\), \(\gamma \) and \(r_{ij}\)) have been also explored by many researchers. Lin et al [13] have used a new concept of virtual distance, \(r'\), and computed the intensity of light \(\beta \) as \(\beta _0\gamma (1-r')\) to update the position of fireflies. Gandomi et al [14] have employed 12 different chaos functions which have non-repetition and ergodicity properties for updating the constant values of the algorithm (\(\beta \) and \(\gamma \)). Sulaiman et al [15] have introduced minimum variance distance replacing the Cartesian distance for the attraction part. The random part has been modified with a mutation operator. Wang et al [16] have handled the premature convergence by regulating all the constant parameters based on the difference of light intensity measured by a formula dependent on number of iteration. Yu et al [17] have proposed wise step length control specific to individual firefly based on its personal and global best position for updating its current position. A practical problem of optimizing droplet ejection speed in electro-hydrodynamic inkjet printing has been reportedly solved by using an improved FA [18]. The authors have used a new rule for updating the brightness instead of a constant initial brightness coefficient for getting comparatively better results.

Keeping in view the simple modifications in this approach, the results have been really encouraging. It has been proved that keeping updating strategy same may lead to stagnation. None of the cases in this line have considered the need for changing updating strategy in general. This method has also not considered the past memory. This method has not been able to reduce the search space to speed-up the convergence.

1.2b Based on update strategy: The second class of approaches for modification deals with changing the strategy of updating the position of the fireflies. Opposition based approach has been introduced by Roy et al [19] for solving high dimensional problems where opposite or reverse set of regularly achieved population has been determined in each iteration. The brightest one has been updated by \(x_{min} + x_{max} - x\) so that it can still improve from the initial local extremes. This method to some extent mimics the mutation operator in genetic algorithm which has eventually initiated many experiments with different methods and levels of incorporation. Yu et al [20] have defined a criterion of hazardous condition based on which a mutation can be operated on the weaker solutions to improve them. Additionally, the authors have preserved the historical performance in memory so that hitherto best cases are not left-out. Kazemzadeh-Persi [21] has added k newborn generations in each iteration with the help of mutation. The authors also have suggested updating the position based on the average directions of movement dictated by all the brighter fireflies. Another line of modification has been approached [12, 22] by employing five crossover operators and three mutation operators along with adaptive \(\alpha \). Three random solutions have been considered for generating the mutated solutions, one by applying \(x_{mute1} = x_{q1} + \varepsilon (x_{q2} - x_{q3})\), and another for any iteration number t by applying \(x_{mute2} = x_{mute1} + \varepsilon t(x_b - x_w)\). In other similar works [11, 23], the value of \(\alpha \) has been made adaptive by chaotic mapping or applying mutation operator. Mohammadi et al [23] have proposed the update of the position with respect to the brighter firefly \(x_j\), thereby adding more exploration capability. Hassanzadeh and Kanan [24] have used fuzzy attraction function represented by a Cauchy distribution from the selected top k brighter fireflies to impose the influence of a collection rather than an individual in order to explore towards the global best. In some literature [25, 26] , mutation has been used replacing the updating formula in Equation (1) or as an additional operator immediately after using the same updating formula. Wang et al [27] have introduced Cauchy distribution for performing mutation on the initially fitter fireflies known as good nodes set to obtain improved results on benchmark functions. In another recent work by Peng et al [28], a novel courtship learning strategy has been adopted where the updating of the position of the lower intensity male fireflies have been accomplished using the guidance from the relatively superior female fireflies. Tong et al [29] have proposed using several diverse sub-groups of FA and exchanging information for learning collectively about the global best more effectively. Zhou et al [30] have adopted partial attraction model which have been used to protect swarm diversity and utilize full individual information. Baykasouglu et al [31] have proposed a modification to FA to solve dynamic multidimensional knapsack problem by replacing pair-wise comparison by a specialized comparison based on a dynamic variable in each iteration. The authors have also used a probabilistic switch to decide whether to update the position or not for the firefly to avoid early convergence and reduce computational time.

This method of modification has been deemed very useful to specific problems. This has not considered the already improvement areas like adaptive control or changing the randomness characteristics for better explorations. This method has been not able to reduce the search space and the time complexity of convergence.

1.2c Based on solution space and randomness control: In the third category of approaches, two types of modification have been tried. Firstly, changing the solution space to an easy search space has been explored by Liu et al [32]. They have used quaternion representation for \(x_i(k) = \) \((y_1^i, y_2^i, y_3^i, y_4^i)\) for all components k. The update of position takes place in the quadruple-folded search space, while the computation of brightness is done in actual solution space using a norm transform function. Fister et al [33] have also used quaternion representation of the solution space for enhancing performance and avoiding stagnation. Wang et. al. [34] have used a predefined neighbourhood to overcome the problem of oscillation based on too much attractions happening during the search. Secondly, the randomness behaviour of the fireflies has been controlled by following certain probability distribution functions. For example, Farahani et al [35] have introduced Gaussian distribution, whereas Yang [36] has used Levy distribution for generating component wise product with the original random fraction. The randomness property has been improved by adding direction of movement of the fireflies using an additional sign vector in case of the later. Tighzert et al [37] have presented a set of new compact firefly algorithms involving levy functions, elitism or preservation of previous performances and opposition-based learning. They have tested on benchmark functions considering 30 dimensions. Wu et al [38] have also used adaptive logarithmic Levy distribution to improve over the global searching characteristics of FA using a probabilistic switch.

The best part about this method is the reduction of search space thereby minimizing the computational cost of the algorithm. This approach of modification has been proved beneficial in most of the cases though the use of other lines of modifications like adaptive control and changing the updating strategy along with it would have clearly achieved more robustness.

1.2d Hybrid approaches: There have been a few attempts of hybridization of the FA with other evolutionary algorithms. Luthra and Pal [39] have used genetic operators like crossover along with the standard FA for solving a monoalphabatic substitution cipher problem. Rahmani and MirHassani [40] have applied genetic crossover operator on the two fittest fireflies and mutation on the whole population based on the mutation probability after the pass of standard algorithm in each iteration. They have used this for solving a capacitated facility location problem. Sarangi et al [41] have modified the FA by integrating the formula used in particle swarm optimization (PSO). They have retained best positions for both individual and group in all successive iterations and have used it for the additional velocity component calculation. Aydilek et al [42] have enhanced hybrid FA and PSO by incorporating chaotic functions. Yelghi and Köse [43] have successfully incorporated the concept of tidal force for modifying the attraction component of the standard FA. Tomas et al [44] have introduced the concept of orthogonal learning to the hybridized FA and PSO algorithms to affect better performances than that obtained from the original hybridization. Huang et al [45] have used a hybridization of GA, FA and differential evolution (DE) to enable the maximum power point tracking for photovoltaic systems under partial shading conditions. The authors have created the hybridization framework by implementing DE mutation followed by FA attraction and finally carrying out GA crossover in each generation.

The hybrid methods of FA have been able to successfully make inroads to the hitherto unseen solution space. Nevertheless, a generic framework of integration of different independent meta-heuristics has not been standardized. There has been no such work reported which has partitioned the population and considered running different meta-heuristic algorithms in sub-groups.

1.3 Need and novelty of new algorithm

There have been numerous efforts for improving the standard FA to solve multiple optimization problems. At the same time they have added precious knowledge base to the literature in the process of different approaches of modifications. The key aspects that can be highlighted from the rigorous literature review are as follows:

-

Randomness part can be modified with controlled direction using genetic crossover and/or mutation or following other probability distributions for better exploration.

-

Records of past solutions and performance avoids probable loss of eligible solutions.

-

Adaptive \(\alpha \) comes handy especially at later stages for intensive exploitation.

Giving due respect to the previous modification efforts, it has been observed that there is no formal integration with any full fledged evolutionary algorithm as such. Genetic operators in parts have been tried as an alternate or additional update strategy of the standard FA. Their application has been targeted mainly on the fittest fireflies [40] for getting even better solutions. In some cases it has been purely application specific implementation of crossover using dominant genomes [39]. The modified algorithm in such case can not be used for solving any general optimization problems. If in any scenario it has been used to evolve the weaker solutions alone, additional evaluations of objective function fitness have been performed incurring more computational cost.

1.4 Motivation and inspiration

A single method of modification may not capture all the needed improvements of the existing FA. This may raise issues with premature convergence in problems with higher dimensionality. A very handful cases have concentrated on combining different approaches of modifications. A very few of the previous works reported has targeted hybridization of different meta-heuristics in true sense. Hence, this work aims to capitalize on the strengths of two algorithms viz. faster convergence of GA and better accuracy of FA so as to achieve a better performing algorithm.

In this approach, a novel methodology is formulated in the form of partition cum unification based genetic - FA (PUBG-FA) by integrating FA with GA. The initial population is partitioned into two compartments based on a weight factor, w set by user, which determines how much of the algorithm is dominated by FA. The first compartment which has the superior fireflies based on light intensity (fitness) runs a little improved version of standard FA, whereas the second chamber consisting of the weaker solutions runs the entire GA encompassing selection, crossover and mutation. After each iteration these two populations are again unified and the intensities of the new fireflies are computed. Based on that, the consolidated population is sorted and trimmed before going to the next iterative cycle for further partition, update and unification. The new algorithm in three variants of different w (30\(\%\), 50\(\%\) and 70\(\%\)) is tested and compared with the two constituents i.e. standard firefly and genetic algorithm and additionally with some state-of-the-art meta-heuristics namely PSO [46], cuckoo search (CS) [47, 48], flower pollination algorithm (FPA) [49], pathfinder algorithm (PFA) [50], bio-geography based optimization (BBO) [51] on 19 benchmark objective functions of different complexity, continuity, differentiability, mode, separability and scalability. This testing is carried-out considering several dimensionality of the problems viz. 2-D, 16-D, and 32-D. Further, the new algorithm is tested and compared with others on two real engineering optimization problems namely tension-compression spring [52, 53] and 3-bar truss problem [53, 54]. Non-parametric statistical tests, namely Wilcoxon rank-sum tests are conducted on the objective function values for each dimensional (scalability) test for all the algorithms from repeated independent experiments to statistically rule-out any significant deviations. One of the extensively used multi-criteria decision making (MCDM) tools, namely, technique for order of preference by similarity to ideal solution (TOPSIS) [55] is applied to statistically determine the best performing algorithm given the different multi-dimensional test scenarios and two assessment criteria - running time and objective function value.

Thus, the primal novel prospects and contributions of this article can be summarized as follows:

-

Seeking key improvement areas of FA from the past literature and combining them together for getting added advantages.

-

Envisaging a genetic strategy so as to achieve a faster convergence rate than conventional FA.

-

Partitioning the population for running two algorithms in two compartments and unification of the population derived from respective compartments.

-

Keeping the algorithmic parameter to tune to a single weight factor indicating the dominance of one algorithm over another.

-

Utilizing MCDM tool to strategically decide the final ranks of the competing optimization algorithms.

The rest of the paper is segmented as follows: section 2, where the methodology is described, presents the new algorithm elaborately and describes the objective functions and engineering optimization problems considered for testing. Results of all the testing are discussed in section 3. Finally, some concluding remarks are drawn in section 4.

2 Methodology

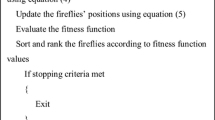

The basic idea of the new algorithm PUBG-FA is pretty simple. Dimmer fireflies get attracted towards the brighter ones and by virtue of their positions getting updated, their light intensities or objective fitness values are improved. The weaker section having feeble flashing intensity, instead of waiting for their turn for improving up the ladder of intensity through generations, are moved into a separate genetic compartment. In this space they get opportunities for further and faster improvement through explorations by applying standard genetic operators, namely selection, crossover and mutation. The remaining best part of the population having brighter fireflies undergo a little improved version of the standard FA in the primary compartment. Memory of the candidate solutions, random movement of the brightest firefly and adaptive \(\alpha \) constitute the improvement spectrum of FA as already successfully experimented in some previously reported works [19, 20, 36]. Figure 1 shows the flowchart of the new algorithm. After each iteration, the populations from two compartments along with the past memory are unified and ranked according to the light intensity meaning the objective fitness value before venturing for the next cycle. In the pseudo code presented in Algorithm 1, the new algorithm can be easily comprehended. Key improvement areas and features of PUBG-FA are discussed in the following subsection.

2.1 Features of PUBG-FA

2.1a Memory of past records: In the standard FA, the positions of all the fireflies are updated in a stochastic goal direction. Naturally, it may so happen that the intensity of the light meaning the objective fitness deteriorates from the previous value. Hence, it is changed to keep memory of the past records so that eligible candidates once found are never lost.

2.1b Random movement of the brightest: The position of the brightest firefly is not updated by the standard FA as because only dimmer fireflies update positions with respect to the brighter ones. Therefore, the algorithm is modified so that the brightest one is updated by the following Equation (2) involving only random term.

2.1c Adaptive random coefficient: It has been realized by the past researchers that an adaptive \(\alpha \) improves the exploration in the beginning and exploitation in the final stages of the FA. The following Equation (3) is used to update \(\alpha \) based on the value of initial randomness coefficient \(\alpha _0\) value and current generation/iteration counter iter.

2.1d Partitioning the population: The initial population of size pop with randomly assigned positions is partitioned into two compartments based on w, which actually says \(w \times pop\) would go into FA compartment and the rest i.e. \((1-w) \times pop\) would go into the GA counterpart. This essentially makes the number of total functional evaluations same as that of the standard FA while improving the exploration capability by applying integrated GA.

2.1e Genetic operators: The specific implementations of genetic operators immensely influence the performance of GA. In this real coded GA, RouletteWheel selection mechanism is adopted. Uniform crossover as shown in Equations (4) and (5), is implemented. with a crossover probability of 0.8. Mutation operator is implemented by taking average of a random fraction \(\varepsilon ()\) in [0, 1] and previous value on the same dimension of position vector with a mutation probability of 0.2 as presented in Equation (6).

2.1f Unification before ranking: The position-updated populations from the two compartments are unified and ranked according to their newly computed light intensities or fitness values. This approach is better than parallel processing of FA and GA in two compartments without mixing. This is because the current scheme i.e. unification creates more chances to the newly evolved fireflies from GA compartment to come to the mainstream FA and thereby converge rapidly by following a local direction of search.

2.2 Objective functions for testing

The new algorithm in three variants with 30%, 50% and 70% of w is tested on 19 benchmark functions [56, 57] as illustrated in table 1 and the results are compared with that of the standard FA and GA and some five state-of-the-art meta-heuristic algorithms, namely PSO, CS, FPA, PFA, and BBO. Here, the different variants of this algorithm is used to study the impact of w on the overall performance. In this study, three main indices used for comparison are mean CPU time, mean number of functional evaluations and mean value of objective function presented by the best solution. The population size and maximum generation for termination for the 2-D functions (function 1 to 10 in table 1) are kept at 50 and 100 respectively. The respective values are chosen as 200 and 300 for all 16-D and 32-D functions (function 11 to 19 in table 1) for the enhanced complexity of the problems. The number of independent repeated trials is set at 30 for all the functions. The parameter settings for all the algorithms are fixed as per the values in table 2. To make the comparative study really unbiased, all the algorithms are started with the same initial random population set.

2.3 Engineering case study problems for testing

The algorithms have been also tested on some real world, engineering case study problems which are described in the following sub-sections:

3.1a Design optimization of tension/compression helical spring: In this case, the objective is to minimize the weight of a helical spring under tension/compression. Design variables for this case study are wire diameter (d), mean coil diameter (D), and the number of active coils (N) (figure 2). Here, the constraints on shear stress, surge frequency, and minimum deflection should be satisfied during the weight optimization. The objective function and the constraints of this problem can be formulated as follows:

Instead of choosing strict death penalty which often suffers stagnation, this problem has been formulated by a modified version of barrier penalty approach [58], which works by adding weighted penalty values computed from the deviations from all the constraints to the objective function value. The initial population has been generated considering the feasible solution space defined by only the individual bounds of the decision variables. The final solution has also been checked for feasibility. The candidate which lies in the last generation of solutions matching all the constraints with the best objective function value has been selected as the solution.

3.1b Design optimization of 3-bar truss structure: This problem can be regarded as one of the most studied cases in constrained optimization works for bench-marking. Figure 3 illustrates the shape of the formulated 3-bar truss and the related forces acting on this structure. Here, the design space constitutes of two parameters: the area of bars 1 and 3, which is \(A_1\) and the area of bar 2, which is \(A_2\). The objective of this problem is to minimize the total weight of the structure. While solving this, the optimal design has to satisfy several constraints including stress, deflection, and buckling.This problem can be formulated mathematically as follows:

This problem has been similarly modelled by adding penalty values to the specific objective function value. The initial population has been generated considering the feasible solution space defined by both the individual bounds of the decision variables and all the constraints to be satisfied. The final solution has been checked for feasibility defined by the bounds and constraints.

2.4 Statistical tests

As per the recommendation by Derrac et al [59], the non-parametric Wilcoxon rank-sum statistical test with 95% degree of confidence (5% degree of significance) is performed along with experimental evaluations to detect any significant differences between the attained results of different algorithms.

TOPSIS is one of the most popular MCDM tool and has been successfully utilized for decision making across many engineering domains. There are grossly two criteria for relative comparison of all the meta-heuristic algorithms - mean CPU time and mean objective function value computed from 30 independent repeated runs with a specific algorithm. The lower the time and value, the better is the algorithm. The respective mean values for all the dimensions (2-D, 16-D and 32-D) including the engineering problems are used for comparative study.

3 Results and discussions

It should be mentioned that other than the improvement areas mentioned earlier all the remaining equivalent design and implementation is kept unaltered for all the algorithms in test. The user defined constant parameters are also same for all the algorithms. All the respective parameters and their values are shown in table 2. The results for benchmark objective functions and real engineering problems are discussed in the following subsections.

3.1 Results for benchmark objective functions

The population size and maximum number of iteration have been kept 50 and 100 respectively, for all the meta-heuristic algorithms for this lower dimension tests. It is clearly visible from comparison results of tests on 2D objective functions tabulated in table 3, the new PUBG-FA performs better in comparison with the standard FA and GA and it outperforms most of the state-of-the-art algorithms tested in this study. In case of Beale and Schaffer(6), FA3 has been found to be most accurate optimizer, while for Zettl function FA2 has been the topmost performer. For rest of the 2D functions, FA4 with the maximum (70%) weightage/dominance of FA has been proved to be the most suitable blender. It performs satisfactorily well for all the multimodal problems and specifically for functions like Carrom Table and Easom with nearly flat like surfaces containing very small central nadir area. In case of Acley (1) and Zakharov, PFA produces the least objective function value with the new algorithm closely following it. Figures 5 to 14 show the convergence plots of the algorithms for the 2-D objective functions. The convergence plots of the Carrom Table function and Easom function reveal that standard GA and standard FA get trapped at local minima, respectively. This clearly raises concerns about using any one specific standard algorithm for the two optimization problems. However, the new algorithm performs well and finds the global minima, converging pretty faster for both the optimization problems. It is clear from the plots that all the algorithms start with the initial same fitness dictated by the same initial population set design. Working with the higher dimensional 16-D benchmark function tests, the new algorithm is found to outperform all the algorithms except for Rastrigin function (table 4). Testing with the same group of functions on 32-D, has produced similar kind of results in favour of the new algorithm except for Rastrigin and Step (2) functions (table 5). The minimum objective values (global minimums) for all the functions tested are 0. For complexly scattered multi-modal functions like Rastrigin and Rosenbrock functions, where all the standard algorithms find it hard to converge to the global minima, the new PUBG-FA shows encouraging results. Figures 15 to 33 show the convergence plots for the 16-D and 32-D objective functions. Competitiveness of different algorithms for Alpine(1) and Rastrigin functions is studied from the respective graphs. They are complex, uniformly distributed multi-modal functions with a single global minimum at 0. The standard optimization algorithms are trapped at local minima whereas the proposed FA variants avoid trapping into local minima and converge rapidly. The impressive result is due to the seamless hybridization of the two standard algorithms enhancing both exploration and exploitation. The same happens with Step (2) and Sum Squares function, where the PUBG-FA is able to find competitive results while most of the other algorithms including the standard FA and GA seem to suffer from local optimization traps. In case of Rastrigin function alone when tested on 16-D, the new algorithm is preceded by FPA, PSO and BBO in the ascending order of superiority. In the further higher dimension of Rastrigin and Step (2) functions, BBO and FPA has been found the best performer respectively. In all the functions of higher dimensions too, PUBG-FA has outperformed the standard algorithms FA and GA quite consistently. The fast convergence rate of the new algorithm is very much evident from all the convergence plots. The success of PUBG-FA is due to the continuous exchange of useful information between two complete sets of different algorithms.

Even though, the variant with highest w (70%) as represented by FA4 is the overall best performer among all, FA2 with the least weightage of FA (30%) shows commendable performance when considered in terms of both accuracy and speed of convergence. FA2 has seen comparatively higher success rates in the higher dimensions. The most remarkable result is achieved while optimizing the 16-D and 32-D Sphere, Step (2) and Sum Squares functions, where it could draw a clear demarcation line of quality between itself and the two standard algorithms used as the constituents - FA and GA.

The average time taken and standard deviations are also far less than that of the standard FA and for lesser w variant it is very close to GA. The number of functional evaluations for all the algorithms is logged and found same for all the algorithms and it matches with the theoretically obtainable values (\(D\times N\)) i.e. 5000 and 60000 for the 2-D and higher dimensional functions (16-D and 32-D) respectively.

The time complexity of the new algorithm is studied by analytical method alongside plotting with a close watch on the reported time of execution in logs. Considering N as the population size the time complexity for the standard GA and FA are in the order of \(O(\frac{9N}{2} + 2N\log {(2N)})\) and \(O(\frac{N}{2}\times (N-1) + N\log (N))\) respectively. The newly developed PUBG-FA maintains an intermediate order of time as regards the standard algorithms, which can be computed as \(O(\frac{9N}{4} + \frac{N}{4}\times (\frac{N}{2}-1) + 2N\log {(2N)})\).

Figure 4 shows the plotting of time complexity of the new algorithm (FA3) along with that of the constituents used. It is evident from the mean CPU time tabulated in the table 4, all the variants of the new algorithm has an intermediate time complexity between standard GA and FA1 (standard FA). The fitness convergence plots against elapsed computation time for all the function tests and case studies are separately available as supplementary files (Figures. S1 to S30).

3.2 Results of engineering case study problems

The new algorithm has shown clear supremacy in dealing with constrained optimization problems when entrusted to solve two real engineering case studies. Population size and maximum number of iterations have been set as 200 and 300 respectively for the tension-compression spring problem having three variables. The FA4 variant of PUBG-FA has been able to expose the best solution with optimal weight satisfying all the constraints. The comparison results obtained in this study are tabulated in table 6. Similarly, the 3-bar truss optimization problem has been solved by all the algorithms using population size and maximum iteration number as 50 and 100 respectively. Here also, FA4 has been the topmost performer producing the least objective value. The results shown in table 7 indicate a total supremacy of all the variants of the new algorithm in the context of this particular case study. The performance plots for the engineering case studies are shown in figures 33 and 34. This proves that the new algorithm is aptly suitable in handling real world constraints besides achieving global optimization.

3.3 Results of statistical tests

The results of non-parametric Wilcoxon rank-sum tests are tabulated in tables 8, 9, and 10 for 2-D, 16-D and 32-D benchmark functions respectively. The p-values are all below 0.05 (with 5% significance) and thus confirm that there is no significance differences in the results obtained in independent trials. The final ranks obtained by applying TOPSIS considering all the test scenarios are shown in table 11. It shows that FA2 and FA3 variants of PUBG-FA are the two top performing algorithms followed by PSO and GA. The other variant of the new algorithm - FA4 is assigned the 5th overall rank. Equal weights are given for all the objective functions and problems whereas ratio of weights of computation time to objective value is chosen as 1:3.

4 Conclusions

In this paper, a new algorithm PUBG-FA is proposed, which first partitions the population in two compartments for running a slightly modified FA and standard GA. Then it unifies the worked-upon sub-populations of two compartments and finally ranks the fireflies before going into next generation. The new algorithm is experimented with three variants of weightage, w which determines the percentage of total population belonging to FA chamber. This is tested on 19 different benchmark optimization functions, the first 10 of which are used as 2-D functions and the rest are run separately as 16-D and 32-D functions. The results, when compared with the standard FA and GA, show the algorithm is significantly more efficient with faster convergence than the standard FA and lower minimal values than the two. The new algorithm is also compared with some of the state-of-the-art meta-heuristic algorithms on the same functions and encouraging results are observed. A popular MCDM tool TOPSIS reveals that the new variants are very competitive and successfully outperform some renowned algorithms in majority of the cases.

Meta-heuristic algorithms are not panacea. It has been exhibited that different optimization problems suit different techniques ranging from simple classical optimization methods to complex meta-heuristic and population-based evolutionary algorithms. The methodology adopted in this study can be seen as a new approach of dealing with multiple population based meta-heuristic algorithms collaboratively for solving a particular optimization problem. This work has some prompting directions pertaining to the future works as follows:

-

The same hybridization schema can be rigorously explored in future considering other combination of some of the top performing evolutionary algorithms like PSO, FPA, BBO, etc.

-

The new PUBG-FA can be further tailored for new practical problems associated with social and engineering domain, e.g., preservation of privacy in social networks, the multicast vehicle routing, and parameter tuning in training of machine learning and artificial intelligence models.

References

Xin-She Yang. Nature-inspired metaheuristic algorithms. Luniver press, 2010

Ilhem Boussaïd, Julien Lepagnot, and Patrick Siarry. A survey on optimization metaheuristics. Information sciences, 237:82, 2013

John H Holland. Genetic algorithms and adaptation. In Adaptive Control of Ill-Defined Systems, pp 317. Springer, 1984

Kalyanmoy Deb. An introduction to genetic algorithms. Sādhanā, 24(4-5):293, 1999

Tansel Dokeroglu, Ender Sevinc, Tayfun Kucukyilmaz, and Ahmet Cosar. A survey on new generation metaheuristic algorithms. Computers & Industrial Engineering, 137:106040, 2019

Kashif Hussain, Mohd Najib Mohd Salleh, Shi Cheng, and Yuhui Shi. Metaheuristic research: a comprehensive survey. Artificial Intelligence Review, 52(4):2191, 2019

Xin-She Yang. Firefly algorithms for multimodal optimization. In International symposium on stochastic algorithms, p 169. Springer, 2009

Waqar A Khan, Nawaf N Hamadneh, Surafel L Tilahun, and Ngnotchouye J M. A review and comparative study of firefly algorithm and its modified versions. Optimization Algorithms-Methods and Applications, p 281, 2016

Iztok Fister, Iztok Fister Jr, Xin-She Yang, and Janez Brest 2013. A comprehensive review of firefly algorithms. Swarm and Evolutionary Computation, 13:34, 2013

Nilanjan Dey. Applications of firefly algorithm and its variants. Springer, Berlin, 2020

Mahmood Reza Shakarami and Reza Sedaghati. A new approach for network reconfiguration problem in order to deviation bus voltage minimization with regard to probabilistic load model and dgs. Int. J. Electr. Comput. Energ. Electr. Commun. Eng., 8(2):430, 2014

Abdollah Kavousi-Fard, Haidar Samet, and Fatemeh Marzbani. A new hybrid modified firefly algorithm and support vector regression model for accurate short term load forecasting. Expert systems with applications, 41(13):6047, 2014

Xiaoyu Lin, Yiwen Zhong, and Hui Zhang. An enhanced firefly algorithm for function optimisation problems. International Journal of Modelling, Identification and Control, 18(2):166, 2013

Amir Hossein Gandomi, X-S Yang, S Talatahari, and Amir Hossein Alavi. Firefly algorithm with chaos. Communications in Nonlinear Science and Numerical Simulation, 18(1):89, 2013

Mohd Herwan Sulaiman, Hamdan Daniyal, and Mohd Wazir Mustafa. Modified firefly algorithm in solving economic dispatch problems with practical constraints. In 2012 IEEE International Conference on Power and Energy (PECon), pp 157. IEEE, 2012

Bin Wang, Dong-Xu Li, Jian-Ping Jiang, and Yi-Huan Liao. A modified firefly algorithm based on light intensity difference. Journal of Combinatorial Optimization, 31(3):1045, 2016

Shuhao Yu, Shoubao Su, Qingping Lu, and Li Huang. A novel wise step strategy for firefly algorithm. International Journal of Computer Mathematics, 91(12):2507, 2014

Amit Kumar Ball, Shibendu Shekhar Roy, Dakshina Ranjan Kisku, Naresh Chandra Murmu, and Leandro dos Santos Coelho. Optimization of drop ejection frequency in ehd inkjet printing system using an improved firefly algorithm. Applied Soft Computing, 94:106438, 2020

Abhishek Ghosh Roy, Pratyusha Rakshit, Amit Konar, Samar Bhattacharya, Eunjin Kim, and Atulya K Nagar. Adaptive firefly algorithm for nonholonomic motion planning of car-like system. In 2013 IEEE Congress on Evolutionary Computation, pp 2162. IEEE, 2013

Shuhao Yu, Shenglong Zhu, Yan Ma, and Demei Mao. Enhancing firefly algorithm using generalized opposition- based learning. Computing, 97(7):741, 2015

MJ Kazemzadeh-Parsi. A modified firefly algorithm for engineering design optimization problems. Iranian Journal of Science and Technology. Transactions of Mechanical Engineering, 38(M2):403, 2014

Mohammad Javad Kazemzadeh-Parsi, Farhang Daneshmand, Mohammad Amin Ahmadfard, and Jan Adamowski. Optimal remediation design of unconfined contaminated aquifers based on the finite element method and a modified firefly algorithm. Water Resources Management, 29(8):2895, 2015

Sirus Mohammadi, Babak Mozafari, Soodabeh Solimani, and Taher Niknam. An adaptive modified firefly optimisation algorithm based on hong’s point estimate method to optimal operation management in a microgrid with consideration of uncertainties. Energy, 51:339, 2013

Tahereh Hassanzadeh and Hamidreza Rashidy Kanan. Fuzzy fa: a modified firefly algorithm. Applied Artificial Intelligence, 28(1):47, 2014

Sankalap Arora, Sarbjeet Singh, Satvir Singh, and Bhanu Sharma. Mutated firefly algorithm. In 2014 International Conference on Parallel, Distributed and Grid Computing, p 33. IEEE, 2014

Sankalap Arora and Satvir Singh. Performance research on firefly optimization algorithm with mutation. In International conference, computing & systems, 2014

Wen-chuan Wang, Lei Xu, Kwok-wing Chau, and Dong-mei Xu. Yin-yang firefly algorithm based on dimensionally cauchy mutation. Expert Systems with Applications, 150:113216, 2020

Hu Peng, Wenhua Zhu, Changshou Deng, and Zhijian Wu. Enhancing firefly algorithm with courtship learning. Information Sciences, 543:18, 2021

Nan Tong, Qiang Fu, Caiming Zhong, and Pengjun Wang. A multi-group firefly algorithm for numerical optimization. In Journal of Physics: Conference Series, vol 887, p 012060. IOP Publishing, 2017

Lingyun Zhou, Lixin Ding, Maode Ma, and Wan Tang. An accurate partially attracted firefly algorithm. Computing, 101(5):477, 2019

Adil Baykasoğlu and Fehmi Burcin Ozsoydan. An improved firefly algorithm for solving dynamic multidimensional knapsack problems. Expert Systems with Applications, 41(8):3712, 2014

Jingsen Liu, Yinan Mao, Xiaozhen Liu, and Yu Li. A dynamic adaptive firefly algorithm with globally orientation. Mathematics and Computers in Simulation, 2020

Iztok Fister, Xin-She Yang, Janez Brest, and Iztok Fister Jr. Modified firefly algorithm using quaternion representation. Expert Systems with Applications, 40(18):7220, 2013

Hui Wang, Wenjun Wang, Xinyu Zhou, Hui Sun, Jia Zhao, Xiang Yu, and Zhihua Cui. Firefly algorithm with neighborhood attraction. Information Sciences, 382:374, 2017

Sh M Farahani, AA Abshouri, B Nasiri, and MR2011 Meybodi. A gaussian firefly algorithm. International Journal of Machine Learning and Computing, 1(5):448, 2011

Xin-She Yang. Firefly algorithm, levy flights and global optimization. In Research and development in intelligent systems XXVI, p 209. Springer, 2010

Lyes Tighzert, Cyril Fonlupt, and Boubekeur Mendil. A set of new compact firefly algorithms. Swarm and Evolutionary Computation, 40:92, 2018

Jinran Wu, You-Gan Wang, Kevin Burrage, Yu-Chu Tian, Brodie Lawson, and Zhe Ding. An improved firefly algorithm for global continuous optimization problems. Expert Systems with Applications, 149:113340, 2020

Jitin Luthra and Saibal K Pal. A hybrid firefly algorithm using genetic operators for the cryptanalysis of a monoalphabetic substitution cipher. In 2011 World congress on information and communication technologies, p 202. IEEE, 2011

A Rahmani and SA MirHassani. A hybrid firefly-genetic algorithm for the capacitated facility location problem. Information Sciences, 283:70, 2014

Shubhendu Kumar Sarangi, Rutuparna Panda, Sabnam Priyadarshini, and Archana Sarangi. A new modified firefly algorithm for function optimization. In 2016 International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT), p 2944. IEEE, 2016

İbrahim Berkan Aydilek, İzzettin Hakan Karaçizmeli, Mehmet Emin Tenekeci, Serkan Kaya, and Abdülkadir Gümüşçü. Using chaos enhanced hybrid firefly particle swarm optimization algorithm for solving continuous optimization problems. Sādhanā, 46(2):1, 2021

Aref Yelghi and Cemal Köse. A modified firefly algorithm for global minimum optimization. Applied Soft Computing, 62:29, 2018

Kadavy Tomas, Pluhacek Michal, Viktorin Adam, and Senkerik Roman. Firefly algorithm enhanced by orthogonal learning. In Computer Science On-line Conference, p 477. Springer, 2018

Yu-Pei Huang, Xiang Chen, and Cheng-En Ye. A hybrid maximum power point tracking approach for photovoltaic systems under partial shading conditions using a modified genetic algorithm and the firefly algorithm. International Journal of Photoenergy, 2018, 2018

Russell Eberhart and James Kennedy. Particle swarm optimization. In Proceedings of the IEEE international conference on neural networks, volume 4, pages 1942–1948. Citeseer, 1995

Xin-She Yang and Suash Deb. Cuckoo search via lévy flights. In 2009 World congress on nature & biologically inspired computing (NaBIC), p 210. IEEE, 2009

R Indumathy, S Uma Maheswari, and G Subashini. Nature-inspired novel cuckoo search algorithm for genome sequence assembly. Sādhanā, 40(1):1, 2015

Xin-She Yang. Flower pollination algorithm for global optimization. In International conference on unconventional computing and natural computation, p 240. Springer, 2012

Hamza Yapici and Nurettin Cetinkaya. A new meta-heuristic optimizer: pathfinder algorithm. Applied soft computing, 78:545, 2019

Dan Simon. Biogeography-based optimization. IEEE transactions on evolutionary computation, 12(6):702, 2008

Singiresu S Rao. Engineering optimization: theory and practice. John Wiley & Sons, 2019

Amir Parnianifard, Ratchatin Chancharoen, Gridsada Phanomchoeng, and Lunchakorn Wuttisittikulkij. A new approach for low-dimensional constrained engineering design optimization using design and analysis of simulation experiments. International Journal of Computational Intelligence Systems, 13(1):1663, 2020

Jasbir Singh Arora. Introduction to optimum design. Elsevier, 2004

Young-Jou Lai, Ting-Yun Liu, and Ching-Lai Hwang. Topsis for modm. European journal of operational research, 76(3):486, 1994

Momin Jamil and Xin-She Yang. A literature survey of benchmark functions for global optimization problems. arXiv preprintarXiv:1308.4008, 2013

Sudhanshu K Mishra. Some new test functions for global optimization and performance of repulsive particle swarm method. Available at SSRN 926132, 2006

Carlos A Coello Coello. Use of a self-adaptive penalty approach for engineering optimization problems. Computers in Industry, 41(2):113, 2000

Joaquín Derrac, Salvador García, Daniel Molina, and Francisco Herrera. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm and Evolutionary Computation, 1(1):3, 2011

Author information

Authors and Affiliations

Corresponding author

Supplementary Information

Below is the link to the electronic supplementary material.

Abbreviations

Abbreviations

- GA:

-

Genetic algorithm

- FA:

-

Firefly algorithm

- PUBG-FA:

-

Partition cum unification based genetic - FA

- PSO:

-

Particle swarm optimization

- CS:

-

Cuckoo search

- FPA:

-

Flower pollination algorithm

- PFA:

-

Pathfinder algorithm

- BBO:

-

Bio-geography based optimization

- MCDM:

-

Multi-criteria decision making

- TOPSIS:

-

Technique for order of preference by similarity to ideal solution

Rights and permissions

About this article

Cite this article

Gupta, D., Dhar, A.R. & Roy, S.S. A partition cum unification based genetic- firefly algorithm for single objective optimization. Sādhanā 46, 121 (2021). https://doi.org/10.1007/s12046-021-01641-0

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12046-021-01641-0