Abstract

Urban transportation network design and traffic control problems fall within the scope of infrastructural engineering sciences which become increasingly more important in ever-growing societies of today. In highly populated old cities where establishing new links are facing many human-related, social, economic, and political problems, a workaround for addressing traffic problems is to expand the capacity of existing links, so as to not only control the traffic, but also reduce the urban environmental pollutions caused by vehicles stuck in traffic and decrease the time wasted in traffic to accelerate routines of the society. In the present research, an urban transportation network design model is presented with the aim of enhancing travel time reliability by expanding the capacity of existing network links at minimum possible cost. A significant assumption taken in the present study is that demands in normal condition and peak traffic hours are treated separately, so as to prevent possible problems by congestion management. In the present study, the uncertainty associated with demand for travel, travel time, and the flow passing through different links are taken into consideration. Travel time reliability calculations are carried out assuming that the demand for travel and travel time follow lognormal distributions. In order to solve this bi-level model, particle swarm optimization algorithm was used. Incorporation of the inertial coefficients dynamics, personal learning, and communal learning into the algorithm contributes to the convergence of this algorithm for solving the bi-level model.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

A characteristic problem faced by transportation engineers and planners because of the growth in demand for transportation is the expansion of transportation network. Urban transportation planners are facing a number of limitations for solving such problems. For this purpose, they should overcome social, economic, political, and environmental problems as well as limitations in usable urban space. The planner should make decisions that not only maximize advantages of such capacity expansion, but also minimize associated costs with such an expansion, so as to keep the expenditures within the available budget while respecting social well-being, political, and environmental limitations.

In this respect, there are two types of problem to solve: (1) expanding the capacity of existing links, and (2) establishing new links. Such problems may be classified under urban transportation network design problems (UTNDP). Given spatial limitations as well as economic constraints and so on, the present research is focused on the capacity expansion of existing links across urban transportation networks.

When it comes to decision-making and planning for the problem of capacity expansion of existing links across an urban transportation network, there are two main decision-makers. The first decision-makers are the government or urban transportation and traffic administration, which seek expanding the capacity of the existing links to realize such goals as enhancing social well-being, attenuating urban traffic, reducing air pollution, decreasing waiting time along urban paths, and minimizing the associated costs with such expansion. The second major decision-makers are the people or passengers who tend to select a path through which they can arrive at destination in minimum possible time. Therefore, given that the two major decision-makers interact with one another and the decisions made by the former decision-maker affects those by the latter one, we are dealing with a bi-level programming problem.

Depending on its status, the upper-level model delivers information to the lower-level model, and the lower-level model proceeds to decision-making and selection based on its own preferences. In the next step, the lower-level model reports a feedback of its decisions to the upper-level model and the upper-level model is adjusted to achieve a globally optimal decision considering its own decisions. Bi-level programming not only considers its local interests, but also takes into consideration the global interests; this is a characteristic of this type of modeling.

Another point to note in urban transportation problems is the subject matter of uncertainty. It is obvious that no absolute certainty exists in real world problems, i.e., any and all problems are facing degrees of uncertainty. In UTNDPs, the uncertainty is associated with demand for travel, flows through links, total travel time, link capacities, etc. Lack of certainty results in unreliability in responding to or solving the problems, raising the subject-matter of network reliability assessment or measurement. In order to assess reliability of a transportation network, three criteria should be evaluated: (1) connectivity reliability, (2) travel time reliability, and (3) capacity reliability [1].

Connectivity reliability: This type of reliability refers to the probability that nodes across a network are connected to one another. In fact, this probability investigates the presence of a path between a particular origin-destination pair. A network may be assessed as efficient if there is at least one usable and active path for any origin-destination pair.

Travel time reliability: The travel time reliability expresses the probability that a trip is successfully accomplished between a given pair of origin and destination within a particular period of time at a given level of service. This type of reliability usually gives an indication of the difference between anticipated travel time based on plans or average travel time and actual travel time resulted from traffic congestion or demand variations.

Capacity reliability: The probability that capacity of a network can successfully serve a particular level of origin-destination demand at an acceptable level of service is commonly referred to as transportation network capacity reliability, which is an important criterion for performance evaluation of transportation systems.

Urban transportation network programming problems are bi-level in nature (due to the presence of two types of decision-makers). However, each of the decision-makers may follow different objectives that may prove conflicting with those of the others (similar to any multi-objective problem in other contexts). Based on the nature of multi-objective problems, the problems may have different solutions which form Pareto fronts rather than a unique optimal solution. A Pareto front consists of the solutions dominating other solutions of a problem. A common methodology for solving such problems is the multi-objective particle swarm optimization (MOPSO), which returns a dominated set of solutions as optimal solution.

In the present research, an attempt has been made to get as close to real world as possible and take uncertainties into consideration when modeling and solving the problem. As such, in the present research, the demand for travel, total travel time through the links, and the flow passing through each link were assumed to be uncertain. Based on this assumption, the aim is to present a bi-level model for an urban transportation network in such a way that, the decision-maker at the first level follows two objectives: (1) expanding the capacity of existing links across the network in an attempt to enhance travel time reliability, thereby not only facilitating the traffic and flows through the links at peak hours, but also reducing the traffic load and air pollution, and (2) contributing to economy of the city, i.e., expanding the capacity at lowest possible cost for the government or transportation and traffic administration. The decision-makers at second level of the problem, i.e., general public or passengers traveling through the urban transportation network, seek to choose a path, subject to the decision made by the administration or government, via which they can achieve the shortest travel time or, considering the uncertainties associated with travel time, smallest diversion from the predicted travel time. Once such model was presented, a particle swarm optimization (PSO) algorithm is presented which strives to reach a logical and good solution for the model. The particle swarm optimization (PSO), which is a population-based algorithm, was inspired by the social behavior of animals such as fish schooling and bird flocking. Similar to other population-based algorithms, such as evolutionary algorithms, PSO can solve a variety of difficult optimization problems, and has shown a faster convergence rate than other evolutionary algorithms on some problems. Another advantage of PSO is that it has very few parameters to adjust, which makes it particularly easy to implement [2].

One of the aspects considered in this paper is the correlation of the links along a path. The flow passing through a path goes through every single link comprising that path. Therefore, the links comprising each path depend on one another, and this dependence is not limited to the adjacent links, but rather encompasses all of the links comprising the path. As such, despite the fact that this tends to make the problem much more complicated, but it was considered when calculating the travel time reliability of the network in an attempt to keep the model as close to reality as possible.

This paper is organized in six sections. Section 2 presents a review on related literatures. Section 3 explains a bi-level multi-objective model for the problem considered in this research. Then in section 4, a metaheuristic solution method using PSO is presented for the model presented in section 3. In section 5, in order to implement the presented model and solution algorithm, numerical examples of two networks are investigated. Section 7 gives conclusion and sets out recommendations for future research.

2 Literature review

LeBlanc was the first to present a network design formulation in 1975 [3]. Later on in 1979, Abdulaal and LeBlanc extended the formulation into a complete version. This equilibrium allocation-dependent network design problem with continuous investment variables was formulated as an unconstrained nonlinear optimization problem. Hook–Jeeves pattern search algorithm and Powell’s algorithm were applied to solve this model on a medium-sized realistic network [4]. Following the above-mentioned research works, the network design problem experienced numerous developments and extensive studies were performed on this subject. Optimization of road tariffs under congestion and queue conditions [5, 6], optimization of reserved capacity of a signal control network [6, 7], and estimation of travel matrix [8] were some of the developments performed on the network design problems. In general, these problems are formulated in the form of bi-level programming problems where equilibrium of definitive or probable user is formulated at lower level, while various types of optimal system design problems with specific decision variables are formulated at higher level. There have been also attempts to convert such problems into equivalent single-level problems, such as [9] who presented an outstanding equivalent single-level continuous optimization model for a conventional bi-level continuous network design problem and applied the so-called Lagrangian algorithm to have the model solved.

Compared to deterministic networks, stochastic networks have been the subject of lower studies on network optimization. Most of the stochastic networks considered in the transportation-related literature have been studied in terms of travel time, cost, and link failure (lack of connectivity). The cause of uncertainty in travel time is the delay during the trip that in study [10] discusses a practice-ready methodology that allows states to easily calculate planning-level estimates of these recurring and nonrecurring delays for freeway corridors and state department of transportation (DOT) districts. This process can also be fully automated to make it repeatable. Based on the user’s risk preferences, the objective functions used in the literature on optimum stochastic routing can be categorized into two classes: (1) risk-prone objectives, and (2) risk-averse objectives. The risk-averse objective functions (the most important of which are presented in literature review) do not involve change measurements [11,12,13,14,15,16,17,18]. In the studies related to this category of objectives, it is assumed that users select the minimum expected travel time, but are not sensitive to changes in the travel time. Nevertheless, many experimental studies have concluded that, there are cases where users prefer travel time reliability rather than the travel time itself [19,20,21,22,23]. Following this line of reasoning, other studies have adopted risk-prone objective functions. It has been shown that schedule coordination is more beneficial when headways are larger (e.g., more than 10 min or even half an hour) [24, 25], and that passengers perceives the value of waiting time twice as much as the in-vehicle travel time [26]. As a result, the extra waiting time costs caused by the transfer failure event will greatly change the relative utility between different routes, such that some passengers would modify their routes as response to the status of transfer coordination. With the provision of traffic information or route guidance along the trip, dynamic rerouting behaviour is prevalent under stochastic travel conditions [27, 28]. As indicated in the empirical study by Kim et al [29], passengers would present lower stickiness on the same route with the increase in the number of alternative routes. The performance of transit operation is highly related to the temporal and spatial passenger flow distribution and running fleet [30,31,32].Based on the measurement of adopted changes, the risk-prone objective functions are categorized into two main classes: (1) probability-based objectives, and (2) variance-based objectives. The probability-based methods extensively measure reliability of stochastic on time arrival [33,34,35,36,37] and α – reliability [1, 38]. Reliability in this context is defined as ‘‘certainty of service aspects compared with the schedule (such as travel time (including waiting), arrival time and seat availability) as perceived by the user’’ [39]. Traditionally measured in terms of service-oriented indicators (such as on-time performance, headway regularity [40]), lately there has been a shift toward passenger-oriented measures, as they can better capture the effectiveness of reliability improvement strategies by including the end-user perspective [41]. A review of the existing passenger-oriented reliability measures can be found in Gittens and Shalaby [42] and in Currie et al [43] .

Reliability may be measured in terms of travel time regularity (consistency of experienced travel times) or punctuality (deviation from the scheduled arrival time/travel time). Cats notes that in case of urban high-frequency services, passengers arrive randomly without consulting the schedule, making travel time regularity more relevant than punctuality [44]. This study is based on dense high-frequency urban transit networks, so reliability has been measured in terms of regularity of travel time. Determination of the reliability criterion requires a comprehensive knowledge of travel time distribution of the link and path. Evaluation of such distributions and associated parameters requires compressed information which can be challenging both statistically and computationally. In addition, in many studies, travel times of the links of a path are assumed to be independent of one another. The studies where the correlation of travel times of the links are considered investigate distributions with appropriate analytical characteristics such as normal and lognormal distributions [36, 37]. An example of such research works is the one reported by Mingxin [45] who used lognormal distribution for travel demand and link capacities under correlation between adjacent links to estimate travel time. Also Dixit et al [46] in their study by using a consistent method for all journeys using all available transit modes, reliability can be compared between any route for any origin anddestination (OD) pair in a multimodal transit network. The developed metric can be used to study the reliability impacts of policies affecting multiple transit modes. Additionally, it could also be used as an input to behavioral models such as mode, route, or departure time choice models. Recently, Liu and Ceder [47] integrated the timetable synchronization and vehicle scheduling using deficit function. The successful implementation of this methodology largely depends on developing efficient solution algorithms. A new DF-based solution method has been developed to handle transportation network design problem for practical implementations. Fonseca et al [48] proposed a matheuristic approach to address the integration of timetabling and vehicle scheduling with the objective to minimize transfer cost and operation cost. Therefore, Weitiao et al [49] has developed a more generalized schedule coordination model into which the transfer reliability and the respective behavioural reactions (i.e., rerouting) are explicitly incorporated. They have stated that users given a set of headways and slack times, will choose acceptable paths prior to starting their journey and reroute in case of missed connection. Likewise, given a set of flow patterns selected by users, system designers can re-allocate the vehicle resources to avoid extra costs caused by congestion. Then, users will choose the new trip chains again. Therefore, they can use this iterative strategy between the upper-level and lower-level decisions to reach the optimal solution gradually. The method of successive averages (MSA) is used to address the demand assignment problem under a given schedule design.

In order to cope with the problems related to probability-based criteria, the use of variance (standard deviation) as a variable has been also accepted by other researchers. These studies include a wide spectrum of problems with different objective function formulations [50,51,52,53,54,55]. In 1994, Sivakumar and Batta introduced a variance constraint for standard least expected time (LET) path problems and formulated it as an integer programming problem assuming independent links. Similar formulation was presented by [52] in presence of a correlation structure with covariance cycle characteristics. However, performance of the proposed branch-and-bound method was not tested on an actual large-scale network. [50, 51] presented a multi-criterion applied function that included travel time variance as a part of the objective. [51] showed that, this class of problems is NP-Hard even for simple second-degree functions with independent link travel time.

Among other objective factors which are based on variance-based criteria, a conical combination of mean and standard deviation of travel time has been widely used in related literature. Rational of such objectives is that, users tend to prefer risk-proneness, and consider not only lower mean travel time, but also low variability. In absence of correlation between the links, objective functions based on minimization of mean and standard deviation of travel time can be found in the studies by [56, 57]. Furthermore, optimization by considering correlation between the links has been practiced in recent studies such as those by [36, 37, 45, 53, 58,59,60] presented a quick heuristic algorithm for such problems when Cholesky coefficients of the covariance matrix of the links were positive. They solved the considered problem as a multi-objective shortest path problem of m in size (where m is the number of links) by revising the previous formulations and determining Pareto set of paths. [55] proposed a Lagrangian-based heuristic algorithm for solving the two classes of the problems (with and without considering the correlation between the links) based on approximations based on real samples. Results of their calculation indicated a mean error of about 5.4%. The study presented by [59] developed a model for finding the shortest time path based on mean and standard deviation of travel time considering the correlation between links, and then solved it using an efficient LET path algorithm.

There are also studies where simulations are adopted for monitoring and investigating urban traffic with the purpose of expanding the existing links capacity. For instance, the study presented by [61] proposed a simulation-based optimization for investigating the state of link capacities and controlling traffic signal. An innovation incorporated into their algorithm is that, at each iteration, a particular optimization algorithm is used depending on the conditions realized during the simulation. Zhen et al [62] in his study, focused on how to effectively model the flow-based accessibility measured and reformulated the extremely critical user behaviors through considering the trade-off between individual travel time budgets and actual congestion levels. This study summarizes as follows.

-

(1)

To characterize the accessibility, the flow-based accessibility measure is firstly proposed in the considered transportation network, which is defined as the total accessible traffic flow over different OD pairs when the psychological expectation of travelers is balanced with the actual traffic congestion level.

-

(2)

Two classes of mathematical models, i.e., bi-level programming models and two-stage stochastic programming models, are proposed for the network design problems with deterministic and stochastic travel demands, respectively.

-

(3)

To handle the proposed model, they develop an effective algorithm to solve the approximate optimal solutions. This part aims to design some heuristics to effectively solve the proposed models. They design a heuristic algorithm based on the ideas of the random search and implicit enumeration method, called Probability Search Algorithm (PSA). In this algorithm, the PSA is employed to specify the network design strategies in the upper level, and the classical Frank–Wolfe algorithm will be adopted to solve the corresponding flow assignment plan in the lower level. In addition, based on the algorithmic procedures for lower level problem they propose the simulation-based PSA to solve two-stage stochastic programming models.

Ben-Aved and Blair [63] proved that, bi-level programming problems are strictly NP-Hard. Various deterministic algorithms have been developed for solving such problems, which are generally classified under three classes: (A) vertex enumeration approach [64,65,66,67], (B) Kuhn-Tucker approach [64, 68,69,70]; and (C) penalty function approach [64, 71]. Moreover, a number of algorithms have been developed for special bi-level problems, such as exact penalty method [72], disjunctive cuts method [73], and parametric programming algorithm [74].

Today’s problems are engaged with large scales and excessive nonlinearity, and surely planning for such systems requires multi-level programming which is characterized by large scale and nonlinearity. For example, in a recent study Zhen et al [62] two deterministic bi-level programming models are firstly formulated to maximize the network accessible flow. The up- per level focuses on choosing the potential links in the pre-specified candidate set, and the lower level assigns all the flows to the super network with principles of user equilibrium or system optimization with travel time budgets. Moreover, to handle uncertain potential demands in reality, the problem of interest is further formulated as two-stage stochastic programming models. To solve these proposed models, efficient heuristic algorithms are designed on the basis of probability search algorithm, Frank–Wolfe algorithm and Monte Carlo simulation method.

Particle swarm optimization (PSO) is a population-based exploratory algorithm which was first introduced by [75], being inspired from social behavior of communities of fishes, birds, etc. In the following years, some developments were performed on the PSO algorithm, including those in the definitions of coefficient of inertia and personal and communal learning, which were proved by [76, 77]. Given that PSO is a low-computational cost method in terms of both the required memory and speed [75], it has exhibited a very good performance in terms of convergence and proved successful in many contexts. In relation to the solution of multi-level problems, [78] developed a PSO algorithm for linear bi-level programming problems wherein decision-makers at different levels share constraints. [79] presented an intelligent hybrid algorithm by combining PSO with chaos search technique (CST) for solving nonlinear bi-level programming problems. [80, 81] applied PSO algorithm for solving bi-level planning models in relation to pricing problems across supply chain and strategic competition optimization in electricity market, respectively. Nevertheless, PSO-based algorithms for solving multi-level problems are limited to some special problems (such as linear formulations and special applications) and small-scale bi-level problems. One of the studies performed to further develop the PSO algorithm was the one reported by [82] who considered dynamic coefficient of inertia and personal and communal learning to impose large contributions into enhancement of the algorithm. They compared their proposed method against three other PSO-based algorithms and ended up concluding that the proposed methodology provided a faster convergence to the solution for the considered examples.

Considering the literature review, investigation of the state of link capacities with the aim of enhancing the network reliability and passengers’ satisfaction by shortening their travel time is of paramount importance, especially in large cities which are frequently facing peak demand for transportation. Inspired by the current state of urban transportation and two research works performed by [83] on link capacity change and [82] on solving the presented model, the present paper proposes a model for such problems. In the research by [83], a bi-level model was used to determine the required expansion in link capacities, where the link capacity expansion, as an upper-level decision variable, was evaluated in such a way to decrease travel time through different paths, with the lower-level decision variable related to the choice of the LET path between origin-destination pairs. Novelty of the proposed model in the present research comes in the form of considering travel time reliability in presence of dependent network links and stochastic demands, link flows, and travel times. The novelty also comes in the form of the fact that the considered model in this research is a bi-level one with the second level being inspired by the study by [59] for selecting the path with the lowest mean and standard deviation of total travel time. In addition, even though the presented solving method in this research is basically inspired by the research by [82], but innovations have been adopted in terms of personal and communal learning, which are thoroughly explained in section 4.

3 Presenting a model for urban transportation network link expansion problem

3.1 Problem description and model explanation

In an urban transportation network, once the transportation facilities are provided, passengers choose their travel mode considering then-current traffic conditions. This involves interactions between transportation administration and general public (i.e., passengers), making the so-called bi-level programming model a suitable approach to the solution of urban transportation network design problems.

Considering what was mentioned in the previous section, the present paper aims at presenting an appropriate model for the bi-level problem faced in urban transportation network. Accordingly, at the upper level, the aim is to maximize travel time reliability across the network while minimizing the associated cost. At the lower level, the passengers try to select the path to the destination, with minimum mean and standard deviation of travel time. General form of the modeling is presented as follows:

In the above general model, \( TTR_{a} \) is travel time reliability of the path a, \( Cost_{a} \) is the cost of expanding the capacity of the links comprising the path a, Zy is the objective function of the upper-level problem with its decision variable being the capacity expansion vector of the links comprising the considered network (y), \( E(t_{a} ) \) and \( \delta \left( {t_{a} } \right) \) are mean and standard deviation of the path a, and Zx is objective function of the lower-level problem with its decision variable being the paths selected by passengers (x).

In this problem, the upper-level decision-maker is the urban transportation administration, while passengers are the lower-level decision-makers. The administration seeks maximizing the reliability of the appropriate path between origin-destinations pairs at minimum possible cost; on the other hand, considering the administration’s decision, the passengers look to select the path with minimum travel time and the lowest time variance.

3.1a Upper-level problem: In the upper-level problem, the decision-maker is so-called leader under whose decision the lower-level problem should make the best choice from its own point of view. In this study, the upper-level problem is a multi-objective one that follows maximizing travel time reliability along paths while minimizing the associated cost with such enhancement in the reliability. Increasing the time travel reliability along different paths depends on the capacity of and the flow passing through the links comprising the paths. It is obvious that, the links with capacities less than the flow passing through them will end up with heavy traffic and increased travel time. Subsequently, the paths going through the links will be also end up with increased travel time; at the end of the day, considering other links comprising the paths, overall travel time reliability decreases. Therefore, in order to prevent from such a decrease in reliability or even increase travel time reliability of different paths, one should expand the capacity of the links for which the passing flow exceeds the link capacity. On the other hand, such an expansion incurs some cost to the administration, which should be minimized.

3.1b Lower-level problem: The lower-level problem (follower) makes decisions based on the decision adopted by the leader. In the specific problem discussed in this research, the upper-level problem increases the link capacities and provides the updated capacities to the low-level problem (passengers are added during peak traffic hours). Accordingly, among the available paths between each origin-destination pair, the passenger selects the path with minimum travel time and variability. Following this approach, the flows passing through the links are obtained and provided to the upper-level problem by the lower-level problem to calculate travel time reliability across the paths.

3.2 Modeling the urban transportation network optimization problem for maximizing travel time reliability while minimizing the travel time

The important thing in the present research is that, the flow passing through the links as well as the demand for origin-destination pairs are associated with some degrees of uncertainty, making the travel time through a particular origin-destination pair a stochastic variable which can, however, follow a particular distribution. The notation and terminologies used in this paper are as follows (table 1):

The mathematical model as a bi-level multi-objective model is as follows:

The above model is a bi-level multi-objective model. Equations (1-a) and (1-b) present objective functions of the higher-level problem which seeks to expand the link capacities in such a way to maximize reliability of the paths selected by passengers (which is determined by solving the lower-level problem) at minimum possible cost. Evaluation of Equation (1-a) is explained at the end of this section. Equations (1-c) through (1-h) express constraints of the upper-level problem. Equation (1-c) ensures that the current capacity is equal to the constant initial capacity plus the added capacity; Equation (1-d) sets out that sum of the flows passing through all of the paths connecting each origin-destination pair is equal to the demand for that origin-destination pair; Equation (1-f) refers to greatness or equality of the flows passing through links; finally, Equations (1-g) and (1-h) set out upper and lower bounds of capacity expansion, respectively. Equation (1-i) is the objective function of the lower-level problem, and determines optimal, for passengers added during peak traffic hours, paths connecting each origin-destination pair in such a way to achieve minimum mean travel time and standard deviations. Equations (1-j), (1-k), and (1-l) are the set of constraints applied to the lower-level problem, and ensure that, the demands raised during peak traffic hours are flown through the passengers-selected paths, only one path is selected for each particular origin-destination pair, and decision variable of the upper-level problem is also binary in nature, respectively.

As can be observed, the model presented in this section is an NP-Hard, nonlinear complex binary model.

The first thing which comes to mind regarding this model is the travel time reliability of the path. Some information about travel time through a path is required before this type of reliability can be calculated.

Distribution of travel time of a link can be modeled based on the function presented by United States Department of Road and Traffic [45], which is known as a credible model among researchers in this field, as in Equation (2):

Equation (3) gives free flow travel time (\( ft_{i} \)) through ith link where Lei is the length of the ith link and Vi is the allowable velocity at the ith link. The fti obtained from Equation (3) is substituted into Equation (2) to through the calculate the travel time ith link (\( tt_{i} \)), where fi is the flow passing through the ith link, cpi is the capacity of the ith link, and α and β are specific constants whose default values are 0.15 and 4, respectively, or rather evaluated based on the particular set of real data [84]. When calculating the initial flows, cpi is equal to the base capacity of each link. During the optimization stage, however, cpi refers to the base capacity plus the expanded capacity to the link, i.e. new capacity of the ith link. Total travel time (tti) is composed of two parts: (1) free flow travel time (fti), and (2) travel time delay of the ith link (dti), which are well evident in Equation (2). Equation (2) is a uniformly increasing convex function which depends on the flows passing through that particular link.

In the present research, considering the studies reported by [37], it is assumed that travel time of the links follows translated lognormal distribution, which requires taking the presumption that probability distribution of demand for travel is a lognormal distribution. Also in this research, it is assumed that passengers during normal traffic hours, have no accurate information about different paths connecting different origin-destination pairs, so that the population of passengers are distributed randomly yet uniformly among several shorter paths (assuming that the other paths are unreasonably long). Of course, it is worth noting that, for each origin-destination pair, the number of selected paths are generated randomly. Therefore, given the uniform distribution of demands over different paths and the fact that each path is composed of several links and knowing that sum of several lognormal distributions is also a lognormal distribution, the passing flow through ith link (\( f_{i} \)) at each demand also follows a lognormal distribution with the parameters \( \varphi_{1}^{{f_{i} }} \) and \( \varphi_{2}^{{f_{i} }} \). As such, considering these assumptions and Equation (2), the following relationship can be formulated:

The Equation (4) shows that, travel time delay through the ith link (\( dt_{i} \)) exhibits a lognormal distribution. Therefore, distribution of total travel time at ith link (\( tt_{i} \)) would be a translated lognormal distribution, \( SLN(\varphi_{1}^{{t_{i} }} ,\varphi_{2}^{{t_{i} }} ,\varphi_{3}^{{t_{i} }} ) \), with the following parameters:

From Equations 5 to 7, are obtained the total travel time distribution parameters of the ith link, which follows the shifted log-normal distribution.

Regarding probability distribution of travel time along a path connecting an origin-destination pair, since the path is composed of several links, the obtained distribution is the sum of probability distributions of the links comprising the path. When the random variable of travel time through links follows a translated log-normal distribution, cumulative probability function of travel time through the path, which is composed of a set of links, does not have a closed form, and the travel time through the kth path connecting the wth origin-destination (\( TT^{{p_{w}^{k} }} \)) is estimated using a translated lognormal distribution (Fenton–Wilkinson’s approach [85]) with the parameters \( \varphi_{2}^{{p_{w}^{k} }} \) and \( \varphi_{3}^{{p_{w}^{k} }} \) (\( SLN(\varphi_{1}^{{p_{w}^{k} }} ,\varphi_{2}^{{p_{w}^{k} }} ,\varphi_{3}^{{p_{w}^{k} }} ) \)), with its mean and variance values obtained from the following relationships:

From equations (8) and (9) calculate the mean and variance of the travel time distribution respectively the kth path connecting the wth origin-destination pair.where \( \rho_{ij}^{{p_{w}^{k} }} \) is the correlation coefficient of travel time through the ith link to that through the jth link along the kth path connecting the wth origin-destination pair. Therefore, travel time reliability of the kth path connecting the wth origin-destination pair is calculated used CFD of distribution of the estimated total travel time through the path, \( TT^{{p_{w}^{k} }} \,\sim\,SLN(\varphi_{1}^{{p_{w}^{k} }} ,\varphi_{2}^{{p_{w}^{k} }} ,\varphi_{3}^{{p_{w}^{k} }} ) \).

By definition, travel time reliability of a path refers to the probability that total travel time (\( TT^{{p_{w}^{k} }} \)) of the path is smaller or equal to a predetermined threshold, Thw. The threshold Thw indicates expected travel time plus an additional time considered to ensure on-time termination of the travel between the wth origin-destination pair. Therefore:

Equation (10) is a stochasticity definition of the kth path connecting the wth origin-destination pair travel time reliability.

Considering the law of large numbers, the above probability for the kth path connecting the wth origin-destination pair (expressed as a vector of the links comprising the path) is calculated using standard normal distribution, as follows:

Equation (11) shows how to calculate the probability expressed in Equation (10).

Once finished with reliability calculations for substituting into the model, other relationships could be calculated as well; some of the relationships are also already evaluated when calculating the reliability. Therefore, the next section explains the approach followed to solve the model.

4 Solution of the optimization model

As explained in the Literature Review, various methods have been proposed for solving a bilevel multi-objective problem encountered in the design of an urban transportation network; for instance, the meta-heuristic algorithms, including GA, simulated annealing (SA) algorithm, particle swarm optimization (PSO) algorithm, extremal optimization (EO) algorithm, and adaptive large neighborhood search (ALNS) are commonly used to solve large-scale NP-hard problems [86].

The main cause behind using the MOPSO technique for solving the presented model in this research was its good convergence. Moreover, this algorithm has only few parameters to set, which lowers the time complexity of implementing it. A major drawback associated with the MOPSO algorithm is the possible entrapment in local optimal. The MOPSO cannot guarantee global convergence in solving discrete problems. In the present work, we lightly modified the procedures for calculating and updating the algorithm parameters to not only attenuate the mentioned drawback but also add to its convergence rate. Although this study has not been aimed at comparing various problem-solving methods and algorithms, but one should notice that the studies where heuristic and metaheuristic methodologies have been adopted to address multi-objective bilevel problems are too many, a few of which are cited in the Literature Review.

Finally, it was decided to use the PSO algorithm in this article. The PSO algorithm is not only associated with low computational cost in terms of both the required memory and convergence rate, but also exhibits much homogeneous performance and has been successfully implemented in many contexts. As such, when it comes to the solution of multi-level programming problems, PSO algorithms has been developed for solving bi-level linear programming problems wherein decision-makers at different levels share constraints; in newer developments, the linearity problem was addressed, so that the algorithm could be further applied to nonlinear problems. Considering flexibility and high convergence rate of PSO algorithm, it is used to solve the bi-level model presented in section 2.3 of the present research. In this section, assuming that the reader is adequately familiar with PSO algorithm (interested readers can refer to the research by [87]), initial explanations on the algorithm is neglect and rather proceed to implementation of the algorithm and the modifications that have been made. In the following, different steps of the solving model are described in a step-by-step approach, with explanations given for each step.

Step 1. Receiving network inputs: In the first step, the model was provided with network inputs including the number of nodes, links connecting the ith node to the jth node, origin-destination pairs, length of the links, current capacity of the links, allowable velocity through the links, cost per unit expansion of link capacity, mean and standard deviation of demand, the demand raised during emergencies or peak traffic hours, and the time duration considered by the decision-maker for traveling from the origin to destination of each pair.

Step 2. Finding all available paths connecting the origin-destination pairs: In this step, the model lists all available paths connecting each origin-destination pair.

Step 3. Defining features of the variables of the upper-level problem for PSO: Given that the decision variable of the upper-level problem is the added capacity to the links, then end up with equal number of decision variables to the number of links across the network. Given continuous nature of PSO, initially consider a value within [0, 1] for each variable, while assuming that the other decision variables form a vector of the size equal to the number of links across the network.

Step 4. Determining the parameters of PSO for upper-level problem: In this step, number of iterations and population size (which are variable depending on the problem size and decision-maker’s attitude) are determined. Moreover, minimum and maximum values of the coefficient of inertia (\( w_{\hbox{min} } \) and \( w_{\hbox{max} } \), respectively) are evaluated at this step.

Step 5. Initializing the PSO for the upper-level problem: At this step, a position and an initial velocity are defined for each particle. Considering the population size defined in Step 3, initial position of each particle is generated as a random number of the uniform distribution of [0, 1] for each variable, and initial velocity of all particles is set to zero. Then, cost function is evaluated for each particle, and finally, the best memory of each particle is taken as its current position, and the best position among all particles is that that of the particle for which the cost function is minimized; the positions are then stored for subsequent comparisons.

Step 6. Performing main PSO calculations for the number of iterations: This step is repeated for the number of iterations defined in step 4. For each particle, this step is briefly explained in the following.

Step 6.1. Updating particle velocity and position of the upper-level problem: According to PSO algorithm, velocity of each particle is updated via the following relationship:

And the particle positions are updated as follows:

In both of the above relationships, \( v_{i}^{t + 1} \) denotes the velocity of the ith particle at (t + 1)th iteration, r1 and r2 are uniform random number in the range [0, 1], \( x_{i}^{t} \)is the positon of the ith particle at tth iteration, \( x_{i,best} \)is the best personal memory of the ith particle up to current, and \( x_{gbest} \) is the best position experienced by all particles up to current. Furthermore, personal learning coefficient (c1), communal learning coefficient (c2), and coefficient of inertia (w) are the three parameters of paramount importance in this research, with their calculation method, which introduces a novelty of the present research, explained at the end of this section.

Step 6.2. Checking whether position of the upper-level decision variable is in range: At this step, it is checked that whether the updated position of each particle at step 6.1 remains within the allowable range previously defined in step 3; i.e., in case the updated position exceeds the upper bound of the particle position or falls below the lower bound of the particle position, the dimension of the updated velocity of the particle which is responsible for this outage is inversed (mirrored/reversed).

Step 6.3. Calculating cost function of the upper-level problem

Step 6.3.1. Transforming the particle position to integer decision variable within the considered range: As was mentioned in step 5, each dimension of the particle position falls in the range of [0, 1]. However, variables of the problem considered herein are integer values in the range of [0, m], so that the transformation is performed through the equation (14):

where Q is decision variable of the upper-level problem and x is the position established at Step 6.1.

Step 6.3.2. Updating link capacities considering the decision variable and calculating the cost of link capacity expansion (second objective function in the upper-level problem): At this step, expanded capacities (Q) are added to the capacities given in Step 1 followed by calculating the capacity expansion cost.

Step 6.3.3. Calculating mean and standard deviation of travel time through paths based on the new link capacities using Equations (2) through (9).

Step 6.3.4. Optimizing the lower-level problem considering the decision variable and constraints of the upper-level problem: At this step, the second-level problem is optimized through several steps, as briefly explained in the following.

Step 6.3.4.1. Among available path connecting each origin-destination pair, the path with minimum mean and standard deviation of travel time is selected. Output of this step is a vector wherein each element denotes optimal path connecting a particular origin-destination pair. These paths are those selected by the passengers if the added demand for traveling between their target origin-destination pairs.

Step 6.3.4.2. Adding the demand raised at peak traffic hours to the flow passing through the selected paths for each origin-destination path by the passengers, and then updating the mean and standard deviation of travel time through each link and hence each path.

Step 6.3.5. Calculating travel time reliability of the selected paths by the lower-level problem using the information obtained from the lower-level problem (the value of the first objective function in the lower-level problem).

Step 6.3.6. Summing the values of the two objective functions with appropriate weights using weighted sum method, and taking the result as the value of the cost function. For this purpose, given that one of the objective functions seeks maximizing the travel time reliability while the others tries to minimize the capacity expansion cost, the summation is performed via the following relationship:

Where \( w_{1} \) and \( w_{2} \) are the weights of the first and second objective functions that determined by the upper-level decision maker, also \( TTR^{\hbox{max} } \) is the sum of the best reliability values than can be realized across the network. This value is realized when all of the links adopt their upper bound of capacity expansion regardless of the associated cost. Moreover, \( TTR^{\hbox{min} } \) is the lowest network reliability that is realized when zero link capacity expansion is undertaken. \( Cost^{\hbox{max} } \) is the maximum cost that can be incurred by the administration for enhancing the capacity, while \( Cost^{\hbox{min} } \) is the minimum capacity expansion cost.

Step 6.4. At each iteration, firstly, for each particle, it is checked whether or not the value of the cost function of the particle is better than the best cost function experienced by the particle up to current; and if a better value was obtained, the value of cost function and current position of the particle replace the previous best cost function and position of the particle; then it is checked whether this function is better than the best cost function of the entire pool of particles; if yes, the value of this cost function and current position replace the previous best cost function and position, respectively.

Step 6.5. If the number of iterations is smaller than the allowable number of iterations considered in Step 4, after updating the parameters of the upper-level problem, one should proceed to Step 6.1. Otherwise, optimal solution of the problem is the best cost function and position among the entire pool of particles.

The important thing in this solution approach is the appropriate choice of the parameters depending on the particular problem in question. Numerous research works have been performed on this subject, including the study reported by [87] where efficiency of PSO for different problems was subjected to the proper choice of the PSO parameters.

One of the objectives and novelties presented in this research is the presentation of an improved version of the PSO algorithm by which one can enhance convergence rate of the algorithm while avoiding local optima as much as possible for bi-level problems faced in transportation network. Among others, some features of this improved PSO algorithm are that, it will not add to temporal and spatial complexities of the problem and it is easy to implement with its simple structure and the few parameters to be adjusted.

In the present research, the followings are applied to the previously mentioned steps of the PSO algorithm:

(A) The value of the coefficient of inertia of the first iteration (at Step 6.1) is set to its maximum value, i.e., wmax. Furthermore, the coefficient of inertia should successively at each iteration [77]. For this purpose, a damping factor called w_damp was used. The value of this damping factor was selected in such a way to achieve a final coefficient of inertia of wmin. Therefore, we end up with the following relationship:

Using Equation (16), the value of the decreasing inertia coefficient is obtained.

(B) At Step 6.5, should there was a need for a repetition, before going back to step 6.1, the value of the coefficient of inertia was updated as follows:

The inertia coefficient is adjusted using Equation (17).

(C) The values of personal and communal learning coefficients were updated at each iteration. The idea was that, at early iterations, in order to avoid diverging toward local optima, it is better to keep the personal learning coefficient higher than the communal one, while at final iterations, the opposite holds true. To this end, according to the study reported by [82], the following relationships should be established:

Therefore, firstly, initial values were defined for c1 and c2. According to [76], initial value of c1 was set to 2.9924, and based on Equation (18), initial value of c2 was given as Equation (19).

where rand values are random numbers in the range of [0, 1].

(D) At each iteration, the value of c1 is reduced in such a way to achieve a value of 1 by the final iteration. As such, the damping factor (c1_damp) that should be multiplied by c1 at each iteration is obtained as follows:

Therefore, at Step 6.5, should there was a need for a repetition, before going back to Step 6.1, the value of c1 was updated as per Equation (21):

The value of \( {\text{c}}_{2} \)was also updated using Equation (19).

The optimal value of the parameters \( \hbox{max} \_it \), \( nPop \), \( w_{\hbox{min} } \)and \( w_{\hbox{max} } \) are obtained by design of experiment.

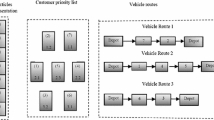

Figure 1 shows the flow of evaluating the cost function for a particle at a particular iteration.

5 Presentation of a numerical case study

The model proposed in this research is investigated here for two networks.

5.1 Investigation of a hypothetical simple network with 4 nodes and 10 links

In this section, a small network with 4 nodes and 10 links is considered, as demonstrated in figure 2. Characteristics of the network are tabulated in table 2.

Properties of the considered origin-destination pairs are given in table 3.

The upper-level decision-maker aims at enhancing reliability of the above-described network by expanding the link capacities at minimum possible cost. Moreover, the lower-level decision maker is seeking to find a path with minimum mean and standard deviation of travel time.

Using the proposed PSO algorithm, the presented model for the considered network was modeled, simulated and finally solved in MATLAB R2017a Software running on a PC powered by a CPU Core(TM) i5-2410M 2.30 GHz. The parameters considered for solving the network included wmin = 0.01, wmax = 0.9, max_it = 200, and nPop = 40.

Solutions of the problem for the considered network are given in table 4. Presented in this table are the optimal path connecting each origin-destination pair, capacity expansion of each link, and cost of capacity expansion.

According to table 4, cost of link capacity expansion was found to be 4,170 unit currency and total travel time for the entire pool of origin-destination pairs was found to be 1 hour and 5 minutes and 19 seconds. The capacity expansion of each link is presented separately. Moreover, upper bound of link capacity expansion was set to 100. If the link capacity is not expanded, total travel time for the entire pool of origin-destination pairs was found to be 10 hours and 38 minutes and 40 seconds, i.e., 10 times longer than the corresponding total travel time to optimal link capacity expansion.

Algorithm running time for this example was 38 seconds.

5.2 Investigation of the transportation network across the city of Sioux Falls, South Dakota, USA (adapted from the study by [83])

Investigated in this section is the urban transportation network of the city of Sioux Falls. The network has been studied in many contributions, including the study by [83]. The considered network is composed of 24 nodes and 76 connecting links, as demonstrated in figure 3, with the network information detailed at https://github.com/bstabler/TransportationNetworks/tree/master/SiouxFalls. In the study by [83], 10 links were candidate for capacity expansion, including (6-8), (7-8), (8-6), (8-7), (9-10), (16-10), (24-13), (10-16), and (24-13). In the present research, the same 10 links were considered as candidates for capacity expansion at the following link capacity expansion costs, respectively: (245, 260, 245, 260, 226, 226, 351, 231, 351, and 231 unit currencies). The difference between the investigation reported in the study by [83] and the present contribution is that, in the present research, it is assumed that, under normal condition, each origin-destination pair is associated with a demand with a mean equal to the demand provided in the network information, and a randomly generated standard deviation proportional to its mean (as detailed in Appendix 1), in such a way that the demands follow lognormal distribution. Furthermore, during peak traffic hours, a randomly generated demand in proportion to the demand under normal condition is added to the normal condition demand (as detailed in Appendix 1) and the result is referred to as peak traffic demand. The problem begins with distributing the randomly generated demand among several paths of shorter length uniformly, with the number of the paths being generated randomly based on a uniform distribution. Using the generated distributions, the flows passing through all links are calculated followed by finding an optimal path for the demands during peak traffic hours, while in the study by [83] capacities of the candidate links increased to an optimal value considering the existing demands (under normal conditions and regardless of probable demands).

The Sioux Falls network with 24 nodes and 76 links ([83]).

In the present research, the flow passing through the links are calculated under normal demand, with the results tabulated in table 5. As can be observed, among the total of 76 links, the flow passing through 66 links exceeds the corresponding link capacity, which are marked in bold. Moreover, the candidate links for capacity expansion are marked in italic.

The considered network using the model presented in section 3, where the upper-level decision-maker seeks to enhance travel time reliability of the network by expanding the capacity of the 10 selected links at minimum possible cost, and the lower-level decision-maker aims at finding the path with minimum mean and standard deviation of travel time for traveling from the origin to the target destination in peak traffic hours. In the present research, given that the flow passing through about 87% of the links under normal conditions in terms of demand exceeds the corresponding link capacity (at an average of 17,000 units difference between the capacity of the links engaged with capacity shortage and the flow passing through them) and assuming a limited budget for link capacity expansion, maximum capacity expansion per link was set to 15,000 units. In this problem, there are a total of 528 origin-destination pairs; mean and standard deviation of the demand for these pairs under normal condition and peak traffic hours are reported in Appendix 1.

In order to solve the model using the method presented in section 4, MATLAB R2017a Software running on a PC powered by a CPU Core(TM) i5-2410M 2.30 GHz was used. In order to adjust the algorithm parameters, response surface methodology (RSM) was implemented in Design Expert 10.0.7. Adjustable ranges of the parameters are presented in table 6. On this basis, a total of 21 experiments were performed and the software gave 30 optimal designs, of which we selected and run 6 designs. The results are presented in table 7.

Among the above-proposed designs, the Design #2 was selected as it returned the lowest value of cost function.

In this network, number of the paths connecting any origin-destination pair is high. For instance, 3,412 and 4,027 paths connect the origin-destination pairs (4-1) and (15-1), respectively. Moreover, there are 407 origin-destination pairs for which the model has succeeded to find optimal path and travel time during peak traffic hours at a reliability of 90% (solution of lower-level problem); some of such solutions are presented in Appendix 2. Decision variables of the upper-level problem are reported in table 8.

In the considered network, in case of failure to expand the capacity of the candidate links, total travel time between all of the origin-destination pairs was, on average, 820,000 minutes; but in case the capacity expansion was realized to the level mentioned in table 8, the corresponding total travel time decreased to 510,751 minutes (i.e., 38% reduction), but instead, the upper-level decision-maker incurs a cost of 11,311,638 unit currencies.

Algorithm running time for this example was 45 minutes and 29 seconds.

6 Discussion

Upon implementing and solving the presented model of transportation network for the city of Sioux Falls, the travel times changed significantly, increasing the passengers’ satisfaction with the transportation system and traffic. Moreover, in the mid-term, the shortened travel time would improve the passengers’ mental state as they would spend less time for transportation and rather more time for working at higher efficiency and productivity. In terms of urban planning, the improvement in travel time reliability would not only reduce the traffic-induced expenses, but also lower the fuel consumption and hence the environmental and acoustic pollutions. Although increasing the capacity at particular links would incur some cost to the system, but rather was anticipated to reduce the travel times by 38% on average, leading to a reliability of 90%. As a solution, increasing the network link capacities can be used to mitigate the traffic problem in metropolitans effectively. In a metropolitan, the presence of old links, especially those within the core texture of the city, makes its extremely costly to increase a new link. In addition, there are places where it is practically impossible to establish a new link. In such cases, the link capacity can be increased by various methods while preserving the existing streets, including the widening of the driveway or establishing such regulations as parking prohibitions to turn the ex-parking lots into driveway.

7 Conclusion

In this study, the model was evaluated on two networks of different sizes. In this respect a smaller hypothetical network along with a real network (the one across the Sioux Falls city) were considered. Specifications of these networks were detailed in previous sections. Upon modeling, the increase in the link capacities was calculated, as reported in table 9.

After solving the problem described in section 5, the values of the objective function of the first-level problem in three optimal cases, without increasing the capacity of the links and with the maximum increase in the capacity of the links, are presented in table 9 and the changes are expressed relative to the optimal state.

According to the results of table 9, the improved reliability of travel is clearly evident.

As is evident from the results, in case of failure to increase the link capacities at an anticipated cost of 11,311,638 unit-costs, total travel time would increase by 39% (i.e., the reliability reduces) while an increase in the cost by 3 folds would shorten the travel time by no more than 13% considering all origin-destination pairs.

In the management of urban transportation and traffic, network reliability is an important indicator of the system efficiency and productivity assessment, which is important both from the point of view of management and the users of the transportation system. As stated before, in this research, the reliability of travel times were considered as the system assessment criteria. Implementing the model on a part of Sioux Falls urban transportation network, by increasing the capacity of some lines in the network, the reliability was significantly increased.

Considering the bi-level and nonlinear nature of the problem the presented model was solved using the proposed PSO algorithm on two case studies of a small and a relatively large networks. Given computational and temporal complexity of the problem, the presented solutions were not exact optimal solutions, but rather rational and close to the optimal solution. For the urban network across the city of Sioux Falls, the model parameters were set using RSM as the design method, largely contributing to the convergence to optimal solution.

The proposed model not only was computationally complex, but also suffered from intensive temporal complexity, with the complexity increasing exponentially with increasing the number of origin-destination pairs, links, or nodes across the network. For example, in order to solve the Sioux Falls network problem with adjusted parameters, the processing time was found to be 276 hours and 22 minutes and 57 seconds, which is so long; the parameters were adjusted using systems of identical properties in a parallel arrangement. The following list provides recommendations for future research in this field:

-

1.

Considering the objective of environmental pollution minimization in the upper-level problem.

-

2.

Given that decision variable of the upper-level problem is link capacity expansion; involvement of capacity reliability maximization can contribute to enhancement of overall network reliability.

-

3.

Adopting other appropriate solution methods such as Quantum Particle Swarm Optimization (QPSO), multi-objective hybrid algorithms comprised of PSO and genetic algorithm to decrease the processing time or even converge to better solutions, the interested readers can refer to [88].

-

4.

Presenting a heuristic solution method to reduce computational and temporal complexity of the problem.

References

Chen A, Zhou Z, Chootinan P, Ryu S, Yang C and Wong S C 2011 Transport network design problem under uncertainty: a review and new developments. Transp. Rev. 31: 743–768

Jiang T and Li J 2016 Research on the task scheduling algorithm for cloud computing on the basis of particle swarm optimization. Int. J. Simul. Syst. Sci. Technol. 17: 1–5

Leblanc L J 1975 An algorithm for the discrete network design problem. Transp. Sci. 9: 183–199

Abdulaal M and LeBlanc L J 1979 Continuous equilibrium network design models. Transp. Res. Part B Methodol. 13: 19–32

Yan H and Lam W H K 1996 Optimal road tolls under conditions of queueing and congestion. Transp. Res. Part A Policy Pract. 30: 319–332

Yin Y 2000 Genetic-algorithms-based approach for bilevel programming models. J. Transp. Eng. 126: 115–120

Wong S C 1997 Group-based optimisation of signal timings using parallel computing. Transp. Res. Part C Emerg. Technol. 5: 123–139

Maher M J, Zhang X and Van Vliet D 2001 A bi-level programming approach for trip matrix estimation and traffic control problems with stochastic user equilibrium link flows. Transp. Res. Part B Methodol. 35: 23–40

Meng Q, Yang H and Bell M G H 2001 An equivalent continuously differentiable model and a locally convergent algorithm for the continuous network design problem. Transp. Res. Part B Methodol. 35: 83–105

Lan C L, Venkatanarayana R and Fontaine M D 2019 Development of a methodology for determining statewide recurring and nonrecurring freeway congestion: virginia case study. Transp. Res. Rec. 2673: 566–578

Cheung R K 1998 Iterative methods for dynamic stochastic shortest path problems. Nav. Res. Logist. (NRL) 45: 769–789

Fan Y Y, Kalaba R E and Moore Ii J E 2005 Shortest paths in stochastic networks with correlated link costs. Comput. Math. Appl. 49: 1549–1564

Gao S 2005 Optimal adaptive routing and traffic assignment in stochastic time-dependent networks. Department of Civil and Environmental Engineering, Massachusetts Institute of Technology, Cambridge

Hall R W 1986 The fastest path through a network with random time-dependent travel times. Transp. Sci. 20: 182–188

Miller-Hooks E and Mahmassani H 2003 Path comparisons for a priori and time-adaptive decisions in stochastic, time-varying networks. Eur. J. Oper. Res. 146: 67–82

Polychronopoulos G H and Tsitsiklis J N 1996 Stochastic shortest path problems with recourse. Netw. Int. J. 27: 13–-143

Pretolani D 2000 A directed hypergraph model for random time dependent shortest paths. Eur. J. Oper. Res. 123: 315–324

Waller S T and Ziliaskopoulos A K 2002 On the online shortest path problem with limited arc cost dependencies. Netw. Int. J. 40: 216–227

Bates J, Polak J, Jones P and Cook A 2001 The valuation of reliability for personal travel. Transp. Res. Part E Logist. Transp. Rev. 37: 191–229

Brownstone D, Ghosh A, Golob T F, Kazimi C and Van Amelsfort D 2003 Drivers’ willingness-to-pay to reduce travel time: evidence from the San Diego I-15 congestion pricing project. Transp. Res. Part A Policy Pract. 37: 373–387

Carrion C and Levinson D 2012 Value of travel time reliability: a review of current evidence. Transp. Res. Part A Policy Pract. 46: 720–741

Recker W, Chung Y, Park J, Wang L, Chen A, Ji Z, Liu H, Horrocks M, and Oh JS 2005 Considering risk-taking behavior in travel time reliability. California PATH Program, Institute of Transportation Studies University of California

Small K A 1999 Valuation of travel-time savings and predictability in congested conditions for highway user-cost estimation. Transportation Research Board, Washington, DC

Ting C-J and Schonfeld P 2005 Schedule coordination in a multiple hub transit network. J. Urban Plan. Dev. 131: 112–124

Wu Y, Yang H, Tang J and Yu Y 2016 Multi-objective re-synchronizing of bus timetable: model, complexity and solution. Transp. Res. Part C Emerg. Technol. 67: 149–168

Quarmby D A 1967. Choice of travel mode for the journey to work: some findings. J. Transp. Econ. Policy 1: 273–314

Xu H, Yang H, Zhou J and Yin Y 2017 A route choice model with context-dependent value of time. Transp. Sci. 51: 536–548

Zhao X, Wan C, Sun H, Xie D and Gao Z 2017 Dynamic rerouting behavior and its impact on dynamic traffic patterns. IEEE Trans. Intell. Transp. Syst. 18: 2763–2779

Kim J, Corcoran J and Papamanolis M 2017 Route choice stickiness of public transport passengers: measuring habitual bus ridership behaviour using smart card data. Transp. Res. Part C Emerg. Technol. 83: 146–164

Liu R and Sinha S 2007 Modelling urban bus service and passenger reliability. In: The Third International Symposium on Transportation Network Reliability

Wu W, Liu R and Jin W 2017 Modelling bus bunching and holding control with vehicle overtaking and distributed passenger boarding behaviour. Transp. Res. Part B Methodol. 104: 175–197

Wu W, Liu R and Jin W 2018 Integrating bus holding control strategies and schedule recovery: simulation-based comparison and recommendation. J. Adv. Transp. 2018: 1–13

Fan Y Y, Kalaba R E and Moore J E 2005 Arriving on time. J. Optim. Theory Appl. 127: 497–513

Frank H 1969 Shortest paths in probabilistic graphs. Oper. Res. 17: 583–599

Nie Y M and Wu X 2009 Shortest path problem considering on-time arrival probability. Transp. Res. Part B Methodol. 43: 597–613

Seshadri R and Srinivasan K K 2010 Algorithm for determining most reliable travel time path on network with normally distributed and correlated link travel times. Transp. Res. Rec. 2196: 83–92

Srinivasan K K, Prakash A A and Seshadri R 2014 Finding most reliable paths on networks with correlated and shifted log–normal travel times. Transp. Res. Part B Methodol. 66: 110–128

Chen A and Ji Z 2005 Path finding under uncertainty. J. Adv. Transp. 39: 19–37

van Oort N 2016 Incorporating enhanced service reliability of public transport in cost-benefit analyses. Publ. Transp. 8: 143–160

Kittelson, Associates I 2003. Urbitran, Inc., LKC Consulting Services, Inc., MORPACE International, Inc., Queensland University of Technology, and Y. Nakanishi. Transportation Research Board of the National Academies Washington

Bagherian M, Cats O, van Oort N and Hickman M 2016 Measuring passenger travel time reliability using smart card data. In: The 9th Triennial Symposium on Transportation Analysis

Gittens A and Shalaby A 2015 Evaluation of bus reliability measures and development of a new composite indicator. Transp. Res. Rec. 2533: 91–99

Currie G, Douglas N J and Kearns I 2012 An assessment of alternative bus reliability indicators. In: Australasian Transport Research Forum (ATRF), 35th

Cats O 2014 Regularity-driven bus operation: principles, implementation and business models. Transp. Policy 36: 223–230

Li M, Zhou X and Rouphail N M 2017 Quantifying travel time variability at a single bottleneck based on stochastic capacity and demand distributions. J. Intell. Transp. Syst. 21: 79–93

Dixit M, Brands T, van Oort N, Cats O and Hoogendoorn S 2019 Passenger travel time reliability for multimodal public transport journeys. Transp. Res. Rec. 2673: 149–160

Liu T and Ceder A A 2018 Integrated public transport timetable synchronization and vehicle scheduling with demand assignment: a bi-objective bi-level model using deficit function approach. Transp. Res. Part B Methodol. 117: 935–955

Fonseca J P, van der Hurk E, Roberti R and Larsen A 2018 A matheuristic for transfer synchronization through integrated timetabling and vehicle scheduling. Transp. Res. Part B Methodol. 109: 128–149

Wu W, Liu R, Jin W and Ma C 2019 Stochastic bus schedule coordination considering demand assignment and rerouting of passengers. Transp. Res. Part B Methodol. 121: 275–303

Mirchandani P B and Soroush H 1985 Optimal paths in probabilistic networks: a case with temporary preferences. Comput. Oper. Res. 12: 365–381

Nikolova E, Brand M and Karger D R 2006 Optimal route planning under uncertainty. In: Proceedings of the Sixteenth International Conference on International Conference on Automated Planning and Scheduling

Sen S, Pillai R, Joshi S and Rathi A K 2001 A mean-variance model for route guidance in advanced traveler information systems. Transp. Sci. 35: 37–49

Seshadri R and Srinivasan K K 2012 An algorithm for the minimum robust cost path on networks with random and correlated link travel times. In: Network Reliability in Practice. Springer; pp. 171–208

Sivakumar R A and Batta R 1994 The variance-constrained shortest path problem. Transp. Sci. 28: 309–316

Xing T and Zhou X 2011 Finding the most reliable path with and without link travel time correlation: a Lagrangian substitution based approach. Transp. Res. Part B Methodol. 45: 1660–1679

Chen A and Zhou Z 2010 The α-reliable mean-excess traffic equilibrium model with stochastic travel times. Transp. Res. Part B Methodol. 44: 493–513

Ji Z, Kim Y S and Chen A 2011 Multi-objective α-reliable path finding in stochastic networks with correlated link costs: A simulation-based multi-objective genetic algorithm approach (SMOGA). Exp. Syst. Appl. 38: 1515–1528

Nicholson A 2012 Estimating travel time reliability: can we safely ignore correlation. Delft University, Netherlands

Prakash A A and Srinivasan K K 2018. Pruning algorithms to determine reliable paths on networks with random and correlated link travel times. Transportation Science 52:80-101

Zockaie A, Nie Y, Wu X and Mahmassani H S 2013 Impacts of correlations on reliable shortest path finding: a simulation-based study. Transp. Res. Rec. 2334: 1–9

Osorio C and Selvam K K 2017 Simulation-based optimization: achieving computational efficiency through the use of multiple simulators. Transp. Sci. 51: 395–411

Di Z, Yang L, Qi J and Gao Z 2018 Transportation network design for maximizing flow-based accessibility. Transp. Res Part B Methodol. 110: 209–238

Ben-Ayed O and Blair C E 1990. Computational difficulties of bilevel linear programming. Oper. Res. 38: 556–560

Bard J F 2013 Practical bilevel optimization: algorithms and applications. Springer, Berlin

Bialas W and Karwan M 1982. On two-level optimization. IEEE Trans. Autom. Control 27: 211–214

Shi C, Lu J and Zhang G 2005 An extended Kth-best approach for linear bilevel programming. Appl. Math. Comput. 164: 843–855

Zhang G, Shi C and Lu J 2008 An extended K th-Best approach for referential-uncooperative bilevel multi-follower decision making. Int. J. Comput. Intell. Syst. 1: 205–214

Bard J F, Falk J E 1982 An explicit solution to the multi-level programming problem. Comput. Oper. Res. 9: 77–100

Hansen P, Jaumard B and Savard G 1992 New branch-and-bound rules for linear bilevel programming. SIAM J. Sci. Stat. Comput. 13: 1194–1217

Shi C, Lu J and Zhang G 2005 An extended Kuhn–Tucker approach for linear bilevel programming. Appl. Math. Comput. 162: 51–63

White D J, Anandalingam G 1993 A penalty function approach for solving bi-level linear programs. J. Glob. Optim. 3: 397–419.

Ankhili Z and Mansouri A 2009 An exact penalty on bilevel programs with linear vector optimization lower level. Eur. J. Oper. Res. 197: 36–41

Audet C, Haddad J and Savard G 2007 Disjunctive cuts for continuous linear bilevel programming. Optim. Lett. 1: 259–267

Faísca N P, Dua V, Rustem B, Saraiva P M and Pistikopoulos E N 2007 Parametric global optimisation for bilevel programming. J. Glob. Optim. 38: 609–623

Kennedy J and Eberhart R 1995 Particle swarm optimization. In: IEEE Proceedings of ICNN’95—International Conference on Neural Networks

Clerc M and Kennedy J 2002 The particle swarm-explosion, stability, and convergence in a multidimensional complex space. IEEE Trans. Evol. Comput. 6: 58–73

Shi Y and Eberhart R 1998 A modified particle swarm optimizer. In: International Conference on Evolutionary Computation Proceedings. IEEE, pp. 69–73

Kuo R J and Huang C C 2009 Application of particle swarm optimization algorithm for solving bi-level linear programming problem. Comput. Math. Appl. 58: 678–685

Wan Z, Wang G and Sun B 2013 A hybrid intelligent algorithm by combining particle swarm optimization with chaos searching technique for solving nonlinear bilevel programming problems. Swarm Evolut. Comput. 8: 26–32

Gao Y, Zhang G, Lu J and Wee H-M 2011 Particle swarm optimization for bi-level pricing problems in supply chains. J. Glob. Optim. 51: 245–254

Zhang G, Zhang G, Gao Y and Lu J 2010 Competitive strategic bidding optimization in electricity markets using bilevel programming and swarm technique. IEEE Trans. Ind. Electron. 58: 2138–2146

Yang Q, Tian J and Si W 2017 An improved particle swarm optimization based on difference equation analysis. J. Differ. Equ Appl. 23: 135–152

Mathew T V and Sharma S 2009 Capacity expansion problem for large urban transportation networks. J. Transp. Eng. 135: 406–415

Guessous Y, Aron M, Bhouri N and Cohen S 2014 Estimating travel time distribution under different traffic conditions. Transp. Res. Procedia 3: 339–348