Abstract

Alzheimer’s disease (AD) is characterized by gradual neurodegeneration and loss of brain function, especially for memory during early stages. Regression analysis has been widely applied to AD research to relate clinical and biomarker data such as predicting cognitive outcomes from MRI measures. Recently, multi-task based feature learning (MTFL) methods with sparsity-inducing \( \ell _{2,1} \)-norm have been widely studied to select a discriminative feature subset from MRI features by incorporating inherent correlations among multiple clinical cognitive measures. However, existing MTFL assumes the correlation among all tasks is uniform, and the task relatedness is modeled by encouraging a common subset of features via sparsity-inducing regularizations that neglect the inherent structure of tasks and MRI features. To address this issue, we proposed a fused group lasso regularization to model the underlying structures, involving 1) a graph structure within tasks and 2) a group structure among the image features. To this end, we present a multi-task feature learning framework with a mixed norm of fused group lasso and \( \ell _{2,1} \)-norm to model these more flexible structures. For optimization, we employed the alternating direction method of multipliers (ADMM) to efficiently solve the proposed non-smooth formulation. We evaluated the performance of the proposed method using the Alzheimer’s Disease Neuroimaging Initiative (ADNI) datasets. The experimental results demonstrate that incorporating the two prior structures with fused group lasso norm into the multi-task feature learning can improve prediction performance over several competing methods, with estimated correlations of cognitive functions and identification of cognition-relevant imaging markers that are clinically and biologically meaningful.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Alzheimer’s disease (AD) is the most common cause of dementia, which mainly affects memory function, and progress ultimately culminate in a state of dementia where all cognitive functions are affected. The disease poses a serious challenge to the aging society. The worldwide prevalence of AD is predicted to quadruple from 46.8 million in 2016 (Alzheimer’s Association et al. 2016) to 131.5 million by 2050 according to ADI’s World Alzheimer Report (Batsch and Mittelman 2015). Dementia also has a huge economic impact. Today, the total estimated worldwide cost of dementia of US is $818 billion, and it will achieve a trillion dollar disease by 2018.

Predicting cognitive performance of subjects from neuroimaging measures and identifying relevant imaging biomarkers are important focuses of the study of Alzheimer’s disease. Magnetic resonance imaging (MRI) allows the direct observation of brain changes such as cerebral atrophy or ventricular expansion (Castellani et al. 2010). Previous work showed that brain atrophy detected by MRI is correlated with neuropsychological deficits (Frisoni et al. 2010). The relationships between commonly used cognitive measures and structural changes detected by MRI have been previously studied using regression models. Many analyses have demonstrated a relationship between baseline MRI features and cognitive measures. The most commonly used cognitive measures are the Alzheimer’s Disease Assessment Scale cognitive total score (ADAS), the Mini Mental State Exam score (MMSE), and the Rey Auditory Verbal Learning Test (RAVLT). ADAS is the gold standard in AD drug trials for cognitive function assessment, and is the most popular cognitive testing instrument to measure the severity of the most important symptoms of AD. MMSE measures cognitive impairment, including orientation to time and place, attention and calculation, immediate and delayed recall of words, language and visuo-constructional functions. RAVLT measures episodic memory and is used for the diagnosis of memory disturbances, including eight recall trials and a recognition test.

Early studies focused on traditional regression models to predict cognitive outcomes one at a time. To achieve more appropriate predictive models of performance and identify relevant imaging biomarkers, many previous works formulated the prediction of multiple cognitive outcomes as a multi-task learning problem and developed regularized multi-task learning methods to model disease cognitive outcomes (Wan et al. 2012, 2014; Zhou et al. 2013; Wang et al. 2012). Multi-task learning (MTL) (Caruana 1998) describes a learning paradigm that seeks to improve the generalization performance of a learning task with the help of other related tasks. The fundamental hypothesis of the MTL methods is to assume that if tasks are related then learning of one task can benefit from the learning of other tasks. Learning multiple related tasks simultaneously has been theoretically and empirically shown to significantly improve performance. The key of MTL is how to exploit correlation among tasks via an appropriate shared representation. Two popular shared representations to model task relatedness are model parameter sharing (Argyriou et al. 2008; Jebara 2011) and feature representation sharing (Evgeniou and learning 2004; Yu et al. 2005; Xue et al. 2007). There are inherent correlations among different cognitive scores. Therefore, the prediction of different types of cognitive scores can be modeled as an MTL formulation, and the tasks are related in the sense that they all share a small set of features, which is multi-task feature learning (MTFL) problem. To solve MTFL problems, regularization has been introduced to produce better performance than traditional solution using single-task learning. The most commonly used regularization is \(\ell _{2,1} \)-norm (Liu et al. 2009), which is employed to extract features that impact all or most clinical scores, since the assumption is that a given imaging marker can affect multiple cognitive scores and only a subset of brain regions (region-of-interest, ROI) are relevant. (Wang et al. 2011) and (Zhang and Yeung 2012a) employed multi-task feature learning strategies to select biomarkers that could predict multiple clinical scores. Specifically, (Wang et al. 2011) employed an \( \ell _{1} \)-norm regularizer to impose sparsity among all elements and proposed the use of a combined \( \ell _{2,1} \)-norm and \( \ell _{1} \)-norm regularizations to select features. (Zhang et al. 2012) proposed a multi-task learning with \( \ell _{2,1} \)-norm to select a common subset of relevant features for multiple variables from each modality.

A major limitation of existing MTFL methods is that complex relationships among imaging markers and among cognitive outcomes are often ignored. The correlating of multiple prediction models assumes that all tasks shared the same feature subset. This is not a realistic assumption, since it treats all cognitive outcomes (response) and MRI features (predictors) equally and neglects underlying correlations between the cognitive tasks and structure within MRI features. Specifically, 1) for the cognitive outcomes, each assessment typically yields multiple evaluation scores from a set of relevant cognitive tasks, and thus these scores are inherently correlated. An example would be the scores of TOTAL and TOT6 in the RAVLT. Different assessments can evaluate different cognitive functions, resulting in low correlation and preferring different brain regions. For example, the tasks in TRAILS aim to test a combination of visual, motor, and executive functions, while the set of RAVLT aims testing of verbal learning memory. It is reasonable to assume that correlations among tasks are not equal, and some tasks may be more closely related than others in assessment tests of cognitive outcomes. 2) On the other hand, for MRI data, many MRI features are interrelated and together reveal brain cognitive functions (Yan et al. 2015). In our data, multiple shape measures (volume, area, and thickness) from the same region provide a comprehensively quantitative evaluation of cortical atrophy, and tend to be selected together as joint predictors. Our previous study proposed a model in which prior knowledge guided multi-task feature learning model. Using group information to enforce intra-group similarity has been demonstrated to be an effective approach (Liu et al. 2017). Overall, it is important to explore and utilize such interrelated structures and select important and structurally correlated features together.

To address these model limitations, we designed a novel multi-task feature learning that models a common representation with respect to MRI features across tasks as well as the local task structure with respect to brain regions. Specifically, we designed novel mixed structured sparsity norms, called fused group lasso, to capture the underlying structures at the level of tasks and features. This regularizer is based on the natural assumption that if some tasks are correlated, they should have a small similar weight vector and similar selected brain regions. It penalizes differences between prediction models of highly correlated tasks, and encourages similarity in the selected features of highly correlated tasks. To discover such dependent structures among the cognitive outcomes, we employed the Pearson correlation coefficient to uncover the interrelations among cognitive measures and estimated the correlation matrix of all tasks. In our work, all the cognitive measures (20 in total) in the ADNI dataset were used to exploit the relationship. To the best of our knowledge, our approach is the first work to analyze all cognitive measures in the ADNI dataset and their relationships. With estimated task correlation, we employ the idea of fused lasso to capture the dependence of response variables. At the same time, taking into account the group structure among predictors, prior group information is incorporated into the fused lasso norm, promoting intra-group similarity with group sparsity. By incorporated fused group lasso into the MTFL model, we can better understand the underlying associations of prediction tasks of cognitive measures, allowing more stable identification of cognition-relevant imaging markers. The resulting formulation is challenging to solve due to the use of non-smooth penalties including the fused group lasso and the \( \ell _{2,1} \)-norm. An effective ADMM algorithm is proposed to tackle the complex non-smoothness.

Through empirical evaluation and comparison with different baseline methods and recently developed MTL methods using data from ADNI, we illustrate that the proposed FGL-MTFL method outperforms other methods. Improvements are statistically significant for most scores (tasks). The results demonstrate that incorporation of the fused group lasso into the traditional MTFL formulation improves predictive performance relative to traditional machine learning methods. We discuss the most prominent ROIs and task correlations identified by FGL-MTFL. We found that the results corroborate previous studies in neuroscience. Our previous works also formulate prediction tasks with a multi-task learning scheme. The algorithm SMKMTL (Sparse multi-kernel based multi-task learning) in (Cao et al. 2017) exploits a nonlinear prediction model based on multi-kernel learning. However, it assumes that correlations among tasks are equal and the features are independent. Although the GSGL-MTL (Group-guided Sparse Group Lasso regularized multi-task learning) algorithm (Liu et al. 2017) exploits the group structure of features by incorporating a priori group information, it does not consider the complex relationships among cognitive outcomes.

The rest of the paper is organized as follows. In “Preliminary Methodology”, we provide a description of the preliminary methodology: multi-task learning (MTL), \( \ell _{2,1} \)-norm, group lasso norm, and fused lasso norm. A detailed mathematical formulation and optimization of FGL-MTFL is provided in “Fused Group Lasso Regularized Multi-Task Feature Learning, FGL-MTFL”. In “Experimental Results and Discussions”, we present the experimental results and evaluate the performance of FGL-MTFL using data from the ADNI-1 dataset. The conclusions are presented in “Conclusion”.

Preliminary Methodology

Multi-Task Learning

Consider a multi-task learning (MTL) setting with k tasks. Let p be the number of covariates, shared across all the tasks, and let n be the number of samples. Let \(X \in \mathbb {R}^{n \times p}\) denote the matrix of covariates, \(Y \in \mathbb {R}^{n \times k}\) be the matrix of responses with each row corresponding to a sample, and \({\Theta } \in \mathbb {R}^{p \times k}\) denote the parameter matrix, with column \(\theta _{.m} \in \mathbb {R}^{p}\) corresponding to task m, \(m = 1,\ldots ,k\), and row \(\theta _{j.} \in \mathbb {R}^{k}\) corresponding to feature j, \(j = 1,\ldots ,p\). The MTL problem can be constructed by estimating the parameters based on suitable regularized loss function. In order effectively to associate imaging markers and cognitive measures, the MTL model minimizes the following objective:

where \(L(\cdot )\) denotes the loss function and \(R(\cdot )\) is the regularizer. In the current context, we assume the loss to be the square loss, i.e.,

where \(\mathbf {y}_{i} \in \mathbb {R}^{1 \times k}, \mathbf {x}_{i} \in \mathbb {R}^{1 \times p}\) are the i-th rows of \(Y,X\), respectively corresponding to the multi-task response and covariates for the i-th sample. We note that the MTL framework can be easily extended to other loss functions. Clearly, different choices of the penalty \( R({\Theta }) \) may present quite different multi-task methods. Using some prior knowledge, we then add penalty \( R({\Theta }) \) to encode relatedness among tasks.

ℓ 2,1-norm

One appealing property of the \( \ell _{2,1} \)-norm regularization is that it encourages multiple predictors from different tasks to share similar parameter sparsity patterns. The MTFL model via the \( \ell _{2,1} \)-norm regularization considers

and is suitable for the simultaneous prioritization of sparsity over features for all tasks.

The key point of Eq. 3 is the use of \( \ell _{2} \)-norm for \( \theta _{j.} \), which forces grouping of the weights corresponding to the j-th feature across multiple tasks and tends to select features based on the joint strength of k tasks jointly (See Fig. 1a). There is a correlation among multiple cognitive tests. A relevant imaging predictor typically may have more or less influence on all these scores, and it is possible that only a subset of brain regions are relevant to each assessment. By employing MTFL, the correlation among different tasks can be incorporated into the model to build a more appropriate predictive model and identify a subset of features. The rows of \( {\Theta } \) are equally treated in MTFL, which implies that the underlying structures among predictors are ignored.

The illustration of three different regularizations. Each column of Θ is corresponding to a single task and each row represents a feature dimension. The MRI features in each region belong to a group. We assume the p features to be divided into g disjoint groups \( \mathcal G_{\ell },\ell = 1,\ldots , g \), with each group having νℓ features respectively. For each element in Θ, white color means zero-valued elements and color indicates non-zero values

G 2,1-norm

Despite the above achievements, few regression models take into account the covariance structure among predictors. To achieve a certain function, brain imaging measures are often correlated with each other. For MRI data, groups correspond to specific regions-of-interest (ROIs) in the brain, e.g., entorhinal and hippocampus. Individual features are the specific properties of those regions, e.g., cortical volume and thickness. In this study, for each region (group), multiple features were extracted to measure the atrophy information of each ROI involving cortical thickness, surface area, and volume from gray matter and white matter. Multiple shape measures from the same region provide a comprehensively quantitative evaluation of cortical atrophy, and tend to be selected together as joint predictors.

We assume the p covariates to be divided into g disjoint groups \({\mathcal {G}}_{\ell }, \ell = 1,\ldots ,g\), with each group including \(\nu _{\ell }\) covariates respectively. In the context of AD, each group corresponds to a region-of-interest (ROI) in the brain, and the covariates in each group correspond to specific features of that region. For AD, the number of features in each group, \(\nu _{\ell }\), is 1 or 4, and the number of groups g can be in the hundreds. We then introduce a \( G_{2,1} \)-norm according to the relationship between brain regions (ROIs) and cognitive tasks, and encourage a task-specific subset of ROIs (See Fig. 1b). The \( G_{2,1} \)-norm \( \| {\Theta } \|_{G_{2,1}} \) is defined as:

where \(w_{\ell } = \sqrt {\nu _{\ell }}\) is the weight for each group and \(\theta _{{\mathcal {G}}_{\ell },m} \in \mathbb {R}^{\nu _{\ell }}\) is the coefficient vector for group \({\mathcal {G}}_{\ell }\) and task m.

Fused Lasso

Fused lasso is one of these variants, where pairwise differences between variables are penalized using the \( \ell _{1} \) norm, which results in successive variables being similar. The Fused lasso norm is defined as:

where \({\mathcal {H}}\) is a \((k-1) \times k\) sparse matrix with \({\mathcal {H}}_{m,m} = 1\), and \({\mathcal {H}}_{m,m + 1} = -1\).

It encourages \(\theta _{.m}\) and \(\theta _{.m + 1}\) to take the same value by shrinking the difference between them toward zero. This approach has been employed to incorporate temporal smoothness to model disease progression. In longitudinal model, it is assumed that the difference of the cognitive scores between two successive time points is relatively small.

Fused Group Lasso Regularized Multi-Task Feature Learning, FGL-MTFL

Formulation

There are two limitations of traditional MTFL. When regularized by the \( \ell _{2,1} \)-norm in Eq. 3, sparsity is achieved by treating each task equally, which ignores the underlying structures among predictors. On the other hand, for some highly correlated features, the traditional MTFL tends to identify one and ignore the others, which was inadequate for yielding a biologically meaningful interpretation. Our previous work exploited relationships of features in the learning procedure, which motivated us to consider the underlying structure of these relationships. To address the limitations of MTFL with \( \ell _{2,1} \)-norm, we consider the structure of task and features instead of assuming that all tasks have similar weights and commonly selected features. More specifically, we propose a new regularization for multi-task feature learning, called the fused group lasso. The underlying idea is that if tasks are highly correlated according to the task interrelations, the tasks are supposed to share common brain regions, but tasks with low correlation are more likely to have different brain regions.

To model the task-feature relationships, we first construct a graph \( \mathbb {G} \) to model the task correlation, as shown in Fig. 2 (left). Let \(\mathbb {G}=(V,E)\) be an undirected graph where V represents the set of vertices and E is the set of edges. Each task is treated as a graph node, and each edge (pairwise link) \( e_{m,l}(m,l) \in E\) in \(\mathbb {G}\) corresponds to an edge from the \( m \)-th task to the \( l \)-th task, and \(|r_{m,l}|\) encodes the strength of the relationship between the \( m \)-th task and the \( l \)-th task. In this model, we adopt a simple and commonly-used approach to infer \( \mathbb {G} \) from data. In this approach, we first compute pairwise Pearson correlation coefficients for each pair of tasks, and then connect two nodes with an edge only if their correlation coefficient is above a given threshold \(\tau \).

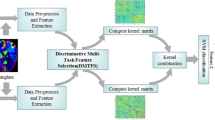

The illustration of the FGL-MTFL method. The method involves two steps: 1) estimation of task correlation and construct an undirected graph (Left); and 2) joint learning of all regression models in a fused group lasso regularized multi-task feature learning (FGL- MTFL) formulation based on the estimated correlation matrix (Right)

Brain imaging measures are often correlated with each other, so incorporation of the covariance of MRI features can improve the performance of traditional MTL methods. Thus, to identify biologically meaningful markers, we utilize prior knowledge of interrelated structure to group related features together to guide the learning process. The benefit of this strategy was also revealed in our previous work (Liu et al. 2017) , in which we proposed a group lasso multi-task learning algorithm that explicitly models the correlation structure within features, and achieved good performance in the prediction of cognitive scores from imaging measures.

Once the graph of task correlation \(\mathbb {G}\) is constructed, we then integrate the group structure of features into the fused lasso norm and propose a new fused group lasso regularized multi-task feature learning (FGL-MTFL) model as follows.

where \(|r_{m,l}|\) indicates the strength weight of the correlation between two tasks connected by an edge, \(\lambda _{1}\) and \(\lambda _{2}\) represent regularization parameters that determine the amount of two separate penalties. In the new fused group lasso norm, if the two tasks m and l are highly correlated, the difference between the two corresponding regression coefficients \(\theta _{.m}\) and \(\theta _{.l}\) will be penalized more than differences for other pairs of tasks with weaker correlation. The regularization tends to flatten the values of regression coefficients for each feature across multiple highly correlated tasks, so that the strength of the influence of each task becomes more similar across those tasks.

The FGL-MTFL model includes two regularization processes: (1) all tasks are regularized by the \( \ell _{2,1} \)-norm regularizer, which captures global relationships by encouraging multiple predictors across tasks to share similar parameter sparsity patterns. This ensures that a small subset of features will be selected for all regression models from a global perspective. (2) Two local structures (graph structures within tasks and group structures within features) are considered from a local perspective by the proposed fused group lasso (FGL) regularizer, which combines two structured sparsity regularizers (fused lasso and group lasso) to capture the specific task-ROI specific structures. This encourages the matching of related tasks to similar selected brain regions.

This new model not only preserves the strength of \( \ell _{2,1} \)-norm to require similarity across multiple scores from a cognitive test, but also considers the complex graph structure among responses and the interrelated group structure of imaging predictors. To the best of our knowledge, no sparsity-based algorithm has been described that includes both global and local task correlation for the prediction of cognitive outcomes in AD.

We used a symmetric correlation matrix \({\mathcal {D}} \in {\mathbb R}^{k \times k}\) to describe the correlation in the fusion penalty, which is defined as:

where \(m,l = 1,2,\ldots ,k \). We normalize the matrix by \(\kappa \), which is the number of edges in E, defined as \(\mathcal Z = (1/\kappa ) \mathcal D\). Then the formulation can be written into the following matrix form:

The framework of the proposed method is illustrated in Fig. 2.

Efficient Optimization for FGL-MTFL

ADMM

In recent years, ADMM has become popular since it is often easy to parallelize distributed convex problems. In ADMM, the solutions to small local subproblems are coordinated to identify the globally optimal solution (Boyd et al. 2011).

The variant augmented Largrangian of ADMM method is formulated as follows:

where f and g are convex functions, and variables \(A \in {\mathbb R}^{p \times n}, x \in {\mathbb R}^{n}, B \in {\mathbb R}^{p \times m}, z \in {\mathbb R}^{m}, c \in {\mathbb R}^{p}\). u is a scaled dual augmented Lagrangian multiplier, and \(\rho \) is a non-negative penalty parameter. In each iteration of ADMM, this problem is solved by alternating minimization \(L_{\rho }(x,z,u)\) over \(x,z,~\text {and}~u\). At the \((k + 1)\)-th iteration, the update of ADMM is carried out by:

Efficient Optimization for FGL-MTFL

We developed an efficient ADMM-based algorithm to solve the objective function in Eq. 8, which is equivalent to the following constrained optimization problem:

where \(Q, S\) are slack variables. Then Eq. 9 can be solved by ADMM. The augmented Lagrangian is

where U and V are augmented Lagrangian multipliers.

Update\({\Theta }\): From the augmented Lagrangian in Eq. 10, the update of \({\Theta }\) at \((t + 1)\)-th iteration is carried out by:

which is the closed form and can be derived by setting Eq. 11 to zero.

Note that \({\mathcal Z}\) is a symmetric matrix. We define \({\Phi } = {\mathcal Z}{\mathcal Z}\), where \({\Phi }\) is also a symmetric matrix with \({\Phi }_{m,l}\) denoting the value of weight \((m,l)\). With this linearization, the value for \({\Theta }\) can be updated in parallel by the individual \(\theta _{.m}\). Thus in the \((t + 1)\)-th iteration, \(\theta _{.m}^{(t + 1)}\) can be updated efficiently using Cholesky factorization.

The above optimization problem is quadratic. The optimal solution is given by \( \theta _{.m}^{(t + 1)} = F_{m}^{-1} b_{m}^{(t)} \), where

The computation of \(\theta _{.m}^{(t + 1)}\) involves solving a linear system, which is the most time-consuming part of the whole algorithm. To efficiently compute \(\theta _{.m}^{(t + 1)}\) efficiently, we can compute the Cholesky factorization of F at the beginning of the algorithm:

Note that F is a constant and positive definite matrix. Using Cholesky factorization, we only need to solve the following two linear systems for each iteration:

Since \(A_{m}\) is an upper triangular matrix, it is very efficient to solve these two linear systems.

UpdateQ: The update for Q effectively needs to solve the following problem

which is equivalent to the following problem:

where \(O^{(t + 1)} = {\Theta }^{(t + 1)} + \frac {1}{\rho }U^{(t)}\). It is clear that Eq. 18 can be decoupled into

where \(q_{i.}\) and \(o_{i.}\) are the i-th row of \(Q^{(t + 1)}\) and \(O^{(t + 1)}\), respectively. Since \(\phi (q_{i.})\) is strictly convex, we conclude that \(q_{i.}^{(t + 1)}\) is its unique minimizer. Then we introduce the following lemma (Liu et al. 2009) to solve the Eq. 19.

Lemma 1

For any \(\lambda _{1} \geq 0\) , we have

UpdateS: The update for S effectively needs to solve the following problem

which is effectively equivalent to computation of the proximal operator for \(G_{2,1}\)-norm. In particular, the problem can be written as

where \({\Pi }^{(t + 1)} = {\Theta }^{(t + 1)}{\mathcal Z} + \frac {1}{\rho }V^{(t)}\). Since the groups \({\mathcal {G}}_{\ell }\) used in our work are disjointed, the Eq. 22 can be decoupled into

where \(s_{{\mathcal {G}}_{\ell } m}\), \(\pi _{{\mathcal {G}}_{\ell } m}\) are rows in group \({\mathcal {G}}_{\ell }\) for task m of \(S^{(t + 1)}\) and \({\Pi }^{(t + 1)}\), respectively. Then we introduce the following lemma (Yuan et al. 2013).

Lemma 2

For any \(\lambda _{2} \geq 0\) , we have

Dual Update forU andV: Following standard ADMM dual update, the update for the dual variables according to our setting is as follows:

The dual updates can be performed in an element-wise parallel manner. Algorithm 1 summarizes the whole algorithm. The MATLAB codes of the proposed algorithm are available at: https://bitbucket.org/XIAOLILIU/fgl-mtfl.

Convergence

The convergence of the Algorithm 1 is shown in the following theorem

Theorem 3

Suppose there exists at least one solution\({\Theta }^{*}\)of Eq. 8. Assume\(L({\Theta })\)isconvex,\(\lambda _{1} > 0,~ \lambda _{2} > 0\).Then the following property for FGL-MTFL iteration in Algorithm 1 holds:

Furthermore,

whenever Eq. 8 has a unique solution.

Note that the condition that allowed convergence in Theorem 3 is quite easy to satisfy. \(\lambda _{1}, \lambda _{2}\) are regularization parameters and should always be larger than zero. The detailed proof is discussed in (Cai et al. 2009). Unlike Cai et al., we do not require \(L({\Theta })\) to be differentiable, and explicitly treat the non-differentiability of \(L({\Theta })\) by using its subgradient vector \(\partial L({\Theta })\), similar to the strategy used by (Ye and Xie 2011).

Experimental Results and Discussions

In this section, we present experimental results to demonstrate the effectiveness of the proposed FGL-MTFL to characterize AD progression using a dataset from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) (Weiner et al. 2010).

Experimental Setup

MR images and data used in this work were obtained from the Alzheimers Disease Neuroimaging Initiative (ADNI) database (adni.loni.ucla.edu) (Weiner et al. 2010). The primary goal of ADNI is to test whether serial MRI, PET, other biological markers, and clinical and neuropsychological assessments can be combined to measure the progression of MCI and early AD. Approaches to characterize AD progression will help researchers and clinicians develop new treatments and monitor their effectiveness. Further, a better understanding of disease progression will increase the safety and efficacy of drug development and potentially decrease the time and cost of clinical trails. In ADNI, all participants received 1.5 Tesla (T) structural MRI. The MRI features used in our experiments are based on imaging data from the ADNI database processed by a team from UCSF (University of California at San Francisco), who performed cortical reconstruction and volumetric segmentations with the FreeSurfer image analysis suite (http://surfer.nmr.mgh.harvard.edu/) according to the atlas generated in (Desikan et al. 2006). The FreeSurfer software was employed to automatically label the cortical and subcortical tissue classes for the structural MRI scan of each subject and to extract thickness measures of the cortical regions of interests (ROIs) and volume measures of cortical and subcortical regions.

Briefly, this processing includes motion correction and the averaging (Reuter et al. 2010) of multiple volumetric T1-weighted images (when more than one is available), removal of non-brain tissue using a hybrid watershed/surface deformation procedure (Segonne et al. 2004), automated Talairach transformation, segmentation of the subcortical white matter and deep gray matter volumetric structures (including hippocampus, amygdala, caudate, putamen, and ventricles) (Fischl et al. 2002, 2004), intensity normalization (Sled et al. 1998), tessellation of the gray matter white matter boundary, automated topology correction (Fischl et al. 2001; Ségonne et al. 2007), and surface deformation following intensity gradients to optimally place the gray/white and gray/cerebrospinal fluid borders at the location where the greatest shift in intensity defines the transition to the other tissue class (Dale et al. 1999; Dale and Sereno 1993).

In total, 48 cortical regions and 44 subcortical regions were generated, , with typically 1 or 4 features in each group. The names of the cortical and subcortical regions are listed in Tables 1 and 2. For each cortical region, the cortical thickness average (TA), standard deviation of thickness (TS), surface area (SA), and cortical volume (CV) were calculated as features. For each subcortical region, the subcortical volume was calculated as a feature. The separate SA values for the left and right hemisphere and the total intracranial volume (ICV) were also included. This yielded a total of \(p = 319\) MRI features extracted from cortical/subcortical ROIs in each hemisphere (Tables 1 and 2). Details of the analysis procedure are available at http://adni.loni.ucla.edu/research/mri-post-processing/.

The ADNI project is a longitudinal study, with data collected repeatedly over a 6-month or 1-year interval. The scheduled screening date for subjects becomes baseline after approval and the time point for the follow-up visits is denoted by the duration starting from the baseline. In our current work, we investigated the prediction performance of our method to infer cognitive outcomes based on a number of neuropsychological assessments at the time of the initial baseline. In this work, we further performed the following preprocessing steps:

-

remove features with more than 10% missing entries (for all patients and all time points);

-

remove the ROI whose name is “unknown;

-

remove the instances with missing value of cognitive scores;

-

exclude patients without baseline MRI records;

-

complete the missing entries using the average value.

This yields a total of \(n = 788\) subjects, who were then categorized into three baseline diagnostic groups: Cognitively Normal (CN, \(n_{1} = 225\)), Mild Cognitive Impairment (MCI, n2 = 390), and Alzheimer’s Disease (AD, \(n_{3} = 173\)). Table 3 lists the demographics information of all subjects, including age, gender, and education. We used 10-fold cross validation to evaluate our model and conducted the comparison. In each of ten trials, a 5-fold nested cross validation procedure was employed to tune the regularization parameters. The data were z-scored before applying the regression methods. The range of each parameter varied from \( 10^{-1} \) to \( 10^{3} \). The average (avg) and standard deviation (std) of performance measures were calculated and shown as avg \(\pm \) std for each experiment. For each run, all methods received exactly the same train and test set. The reported results are the best results of each method with the optimal parameters. For predictive modeling, all the cognitive assessments (a total of 20 tasks) in Table 4 were examined. To the best of our knowledge, no previous works have used all the cognitive scores to train and evaluate their MTL models.

For the quantitative performance evaluation, we employed the metrics of Correlation Coefficient (CC) and Root Mean Squared Error (rMSE) between the predicted clinical scores and the target clinical scores for each regression task. To evaluate the overall performance for all tasks, the normalized mean squared error (nMSE) (Argyriou et al. 2008; Zhou et al. 2013) and weighted R-value (wR) (Stonnington et al. 2010) were used. The rMSE, CC, nMSE and wR are defined as follows:

where \(\mathrm {y}\) is the ground truth of the target at a single task and \(\hat {\mathrm {y}}\) is the corresponding prediction according to a prediction model, cov is the covariance, and \(\sigma \) is the standard deviation.

where \( Y \) and \( \hat {Y} \) are the ground truth cognitive scores and the predicted cognitive scores, respectively.

Smaller values of nMSE and rMSE and larger values of CC and wR indicate better regression performance. We report the mean and standard deviation based on 10 experimental iterations on different splits of data for all comparable experiments. A Student’s t-test at a significance level of 0.05 is performed to determine whether the performances difference are significant.

Comparison with Baseline Comparable Methods

In this section, we conduct empirical evaluation for the proposed methods by comparison with two single-task learning methods: Lasso and Ridge. Both methods were applied independently to each task, and compared to representative multi-task learning methods:

-

1.

Multi-task feature learning (MTFL): \( \underset {\Theta }{\min }~ \frac {1}{2} \|Y - X {\Theta }\|_{F}^{2}+ \lambda \|{\Theta }\|_{2,1}\).

-

2.

Multi-task feature learning combined with lasso (SGL-MTFL): \( \underset {\Theta }{\min }~ \frac {1}{2} \|Y - X {\Theta }\|_{F}^{2}+\lambda _{1} \|{\Theta }\|_{2,1} + \lambda _{2} \|{\Theta } \|_{1} \).

-

3.

Fused lasso regularized multi-task learning (FL-MTL): \( \underset {\Theta }{\min }~ \frac {1}{2} \|Y - X {\Theta }\|_{F}^{2}+\lambda \|{\Theta } {\mathcal Z}\|_{1} \).

-

4.

Fused group lasso regularized multi-task learning (FGL-MTL): \(\underset {\Theta }{\min }~ \frac {1}{2} \|Y - X {\Theta }\|_{F}^{2}+\lambda \|{\Theta } {\mathcal Z}\|_{G_{2,1}} \).

-

5.

Fused lasso regularized multi-task feature learning (FL-MTFL): \( \underset {\Theta }{\min }~ \frac {1}{2} \|Y - X {\Theta }\|_{F}^{2}+\lambda _{1} \|{\Theta }\|_{2,1} + \lambda _{2} \|{\Theta } {\mathcal Z}\|_{1} \).

-

6.

Group lasso regularized multi-task feature learning (GL-MTFL): \( \underset {\Theta }{\min }~ \frac {1}{2} \|Y - X {\Theta }\|_{F}^{2}+\lambda _{1} \|{\Theta }\|_{2,1} + \lambda _{2} \| {\Theta } \|_{G_{2,1}}\).

-

7.

Fused lasso regularized SGL-MTL (FSGL-MTL):\( \underset {\Theta }{\min }~ \frac {1}{2}\)\( \|Y - X {\Theta }\|_{F}^{2}+\lambda _{1} \|{\Theta }\|_{1} + \lambda _{2} \|{\Theta } {\mathcal Z}\|_{G_{2,1}} \).

-

8.

Fused group lasso regularized MTFL (FGL-MTFL): \( \underset {\Theta }{\min }~ \frac {1}{2} \|Y - X {\Theta }\|_{F}^{2}+\lambda _{1} \|{\Theta }\|_{2,1} +\lambda _{2} \|{\Theta } {\mathcal Z}\|_{G_{2,1}} \).

The average and standard deviation of performance measures were calculated by 10-fold cross validation on different splits of data, and are shown in Tables 5 and 6. It is worth noting that the same training and testing data were used across experiments for all methods for fair comparison. Note that all pairwise links between tasks were incorporated into both the fused lasso and fused group lasso regularized methods, and the graph was created using only the training data.

From the experimental results in Tables 5 and 6, we observe the following:

-

1.

FL-MTFL and FGL-MTFL both demonstrated an improved performance over the other baseline methods in terms of nMSE and wR, while FGL-MTFL performed the best among all competing methods. The t-test results show that the FGL-MTFL significantly outperforms all the other methods in terms of nMSE and all the other methods except FL-MTFL in terms of CC. Thus, simultaneously exploiting the structure among the tasks and features resulted in significantly better prediction performance.

-

2.

The norm of both the fused lasso and fused group lasso can improve the performance of the traditional MTFL, which demonstrates that considering the local structure within the tasks can improve the overall prediction performance. A fundamental step is to estimate the true relationships among tasks, thus promoting the most appropriate information sharing among related tasks while avoiding the use of information from unrelated tasks.

-

3.

We investigated the effect of group penalty in our model. The traditional MTFL consideres only the sparsity of regression coefficients, thus failing to capture the group structure of features in the data.

From the Tables 5 and 6, we can find that both FL-MTFL and FGL-MTFL are better than traditional MTFL. This is because the assumption in MTFL is a strict constraint and may affect the flexibility of the multi-task model as described in “ℓ2,1-norm”. The extraction of multiple features to measure the atrophy of each imaging biomarker can further improve prediction performance by capturing inherent feature structures.

GL-MTFL is similar to FGL-MTFL but the regularization term of \( G_{2,1} \)-norm ignore the structure of tasks. This approach can flexibly take into account the complex relationships among imaging markers in a fused lasso regularization rather than relying on a simple grouping scheme. Moreover, compared with GL-MTFL, our FGL-MTFL can flexibly consider the complex relationships among outcomes in a group format rather than within a simple group lasso scheme. Overall, FGL-MTFL achieved better performances than FL-MTFL, demonstrating the benefit of employing group structural information among the features.

-

4.

All compared multi-task learning methods improve predictive performance over the independent regression algorithm (Ridge, Lasso, GOSCAR, and ncFGS). This justifies the motivation to learn multiple tasks simultaneously.

Additionally, we show the scatter plots for the predicted values versus the actual values for ADAS, MMSE, TOTAL, and ANIM for the testing data in the cross-validation in Fig. 3.

To investigate the influence of edge weight on performance, we compared our proposed FGL-MTFL with an unweighted FGL-MTFL (\( \lambda _{1} \|{\Theta }\|_{2,1} + \lambda _{2} {\sum }_{m = 1}^{k} \|\theta _{.m} - {\text {sign}}(r_{m,l}) \theta _{.l}\|_{G_{2,1}} \)), which only considers the graph topology of tasks and treats all links equally. The result is shown in Table 7, and shows obvious improvement with the weighted FGL-MTFL compared to the unweighted one.

Comparison with the State-of-the-Art MTL Methods

To illustrate how well our FGL-MTFL works by means of modeling the correlation among the tasks, we comprehensively compared our proposed methods with several popular state-of-the-art related methods. The representative multi-task learning algorithms used for comparison include the following models:

-

1.

Robust multi-Task Feature Learning (RMTL) (Chen et al. 2011):

RMTL (\(\min _{\Theta } L(X,Y,{\Theta }) + \lambda _{1} \|P\|_{*} + \lambda _{2} \|S\|_{2,1},\)\(\text {subject to}~ {\Theta } = P + S\)), which assumes that the model \( {\Theta } \) can be decomposed into two components: a shared feature structure \( P \) that captures task relatedness and a group-sparse structure \( S \) that can detect outliers.

-

2.

Clustered multi-Task Learning (CMTL) (Zhou et al. 2011):

CMTL (\( \min _{\Theta ,M:M^{\mathrm {T}}M=I_{c}}~L(X,Y,{\Theta }) + \lambda _{1} (\text {Tr}({\Theta }^{\mathrm {T}}\)\({\Theta }) -\text {Tr}(M^{\mathrm {T}}{\Theta }^{\mathrm {T}}{\Theta } M)) + \lambda _{2} \text {Tr}({\Theta }^{\mathrm {T}}{\Theta })\), where \(M \in \mathbb {R}^{c \times k}\) is an orthogonal cluster indicator matrix, and the tasks are clustered into \(c < k\) clusters) incorporates a regularization term to induce clustering between tasks and then shares information only to tasks belonging to the same cluster. In CMTL, the number of clusters is set to 11 because the 20 tasks belong to 11 sets of cognitive functions.

-

3.

Trace-Norm Regularized multi-Task Learning (Trace) (Ji and Ye 2009): Assumes that all models share a common low-dimensional subspace (\( \min _{\Theta }~L(X,Y,{\Theta }) + \lambda \|{\Theta }\|_{*} \)).

-

4.

Sparse regularized multi-task learning formulation (SRMTL) (Zhou):

SRMTL (\( \min _{\Theta }~L(X,Y,{\Theta }) + \lambda _{1} \|{\Theta } \mathcal Z\|^{2}_{F} + \lambda _{2}\)\(\|{\Theta }\|_{1}~ \), where \(\mathcal Z \in {\mathbb R}^{k \times k}\)) contains two regularization processes: (1) all tasks are regularized by their mean value, and therefore knowledge obtained from one task can be utilized by other tasks via the mean value; (2) sparsity is enforced in the learning with \( \ell _{1} \) norm.

-

5.

Multi-task Sparse Structure Learning from tasks parameters (p-MSSL) (Goncalves et al. 2014):

p-MSSL (\( \min _{\Theta ,{\Omega } \succ 0}~L(X,Y,{\Theta }) - \frac {k}{2}\log |{\Omega }| + \text {Tr}({\Theta } {\Omega } {\Theta }^{T}) + \lambda _{1} \|{\Omega }\|_{1}+\lambda _{2}\|{\Theta }\|_{1}\), where \({\Omega } \in \mathbb {R}^{k\times k}\) is a matrix that captures the task relationship structure.

-

6.

Group-Sparse Multi-task Regression and Feature Selection (G-SMuRFS) (Yan et al. 2015):

G-SMuRFS (\( \min _{\Theta }~L(X,Y,{\Theta }) +\lambda _{1}\|{\Theta }\|_{2,1}+\lambda _{2}{\sum }_{l = 1}^{q} w_{l} \)\(\sqrt {{\sum }_{j \in {\mathcal {G}}_{l}} \| \theta _{j.} \|_{2}} \)) takes into account coupled features and group sparsity across tasks. Parameters for these three methods are set following the same approach as that used in the baseline comparable methods. Tables 8 and 9 show the results of the comparable MTL methods in terms of rMSE, CC, nMSE and wR.

-

7.

Multi-task relationship learning (MTRL) (Zhang and Yeung 2012b):

MTRL (\( \min _{W,b,{\Omega }}~{\sum }_{I = 1}^{m} \frac {1}{n_{i}} {\sum }_{j = 1}{n_{i}}({y_{j}^{i}} -{w_{i}^{T}} {x_{j}^{i}} - b_{i})^{2} + \frac {\lambda _{1}}{2} \text {tr}(WW^{T})+\frac {\lambda _{2}}{2} \text {tr}(W{\Omega }^{-1}W^{T})\), \(~\mathrm {s.t.}~{\Omega } \succeq 0, \text {tr}({\Omega })= 1\)) can simultaneously model positive task correlation, describe negative task correlation, and identify outlier tasks based on the same underlying principle.

From the experimental results in Tables 8 and 9, we observe that the proposed method significantly outperforms the other recently developed algorithms. Specifically, the t-test results indicate that FGL-MTFL significantly outperforms all other methods significantly in terms of CC and all but FL-MTRL in terms of nMSE. Compared with the other multi-task learning that utilize different assumptions, G-SMuRFS and our proposed methods, both multi-task feature learning methods with sparsity-inducing norms, have an advantage. Since not all the brain regions are associated with AD, many of the features are irrelevant and redundant. Thus, sparse-based MTL methods involving FGL-MTFL and G-SMuRFS are more appropriate to predict cognitive measures with better performance than the non-sparse based MTL methods. Unlike G-SMuRFS, the group regularization \( G_{2,1} \)-norm in FGL-MTFL decouples the group sparse regularization across tasks to provide more flexibility.

Identification of Correlation among Cognitive Outcomes

A fundamental component of our FGL-MTFL is to estimate the relationship structure among tasks, thus promoting appropriate information sharing among related tasks. In this section, we investigate and evaluate the estimated task relationships. Figure 4 shows the normalized estimated correlation matrix \({\mathcal Z} \).

Using the previous results obtained by our FGL-MTFL, the constructed graph \(\mathbb G \) includes all pairwise links for each pair of tasks. The correlation of these links may be weak or not actually correlated due to bad estimation of the correlation matrix. To clearly analyze the influence of the estimated task relationships to the prediction performance of cognitive outcomes, we constructed graphs \(\mathbb G \) that vary the value of the threshold \(\tau \) based on the estimated correlation matrix \({\mathcal Z} \). The range of the \(\tau \) value is \( {0.1,~0.3,~0.5, \text { and }0.7} \). The graphs of these four examples are shown in Fig. 5. In this experiment, rather than including all pairwise links, a specific threshold value was applied to the correlation matrix \({\mathcal Z} \), and the performance with different threshold values is presented in Table 10. In this case, by thresholding the estimated correlation matrix with a higher \( \tau \), we can construct a sparse undirected graph to represent only the most reliable correlation. From the data shown in Fig. 5, we can find that with an increase in the threshold value, the graph of tasks becomes less dense. When \(\tau = 0.1\), only one link is removed, and when \(\tau \) increases to 0.3 and 0.5, the numbers of remaining links are only 133 and 48, respectively. When \(\tau = 0.7\), there are only eight highly correlated cognitive scores. We found these remaining cognitive outcomes (including ADAS, MMSE, and RAVLT) are the most commonly used in the multi-task learning to predict cognitive outcomes (Yan et al. 2013, 2015; Wan et al. 2012; Li et al. 2012). Moreover, as shown in the Fig. 5d, ADAS is the most important of the cognitive outcome tests, with the most edges to other tasks, especially when the threshold is large, i.e., 13 links remains when \(\tau = 0.5\) and 4 links remains when \(\tau = 0.7\).

Additionally, we evaluated the performance of FL-MTL, FGL-MTL and FGL-MTFL methods in response to changing the thresholds in terms of CC and wR, as presented in Table 10. The symbol “–” indicates that no threshold was used. The performance of both FL-MTL and FGL-MTL increased with increased threshold value. However, FGL-MTFL achieved better results as the threshold value decreased. For values of \(\tau \) less than 0.3, FGL-MTFL obtains the best result and is stable, which indicates that many edges do not contribute to performance in Fig. 5a.

In order to investigate the correlation of multiple cognitive tests, we constructed a graph to show the estimated correlation of inter-cognitive assessment tests, such as ADAS and MMSE, and intra-cognitive assessment tests, such as TOTAL and TOT6 in RAVLT. From Fig. 6 and Table 11, we can observe strong correlation of all intra cognitive assessment tests. Correlation analysis of inter-cognitive assessment tests shows that ADAS is an important test with strong correlations with other tests.

Identification of MRI Biomarkers

Key goals of studies of Alzheimer’s disease are better cognitive score prediction and identification of which brain areas are more affected by the disease to help diagnose early stages of the disease and determine how it spreads. One focus of this work was the identification of MRI biomarkers. Our FGL-MTFL is a group sparse model that can identify a compact set of relevant neuroimaging biomarkers at the region level due to the group lasso on the features, allowing better interpretability of the brain region. The top ten selected MRI brain regions are shown in Table 12, as determined by calculating the overall weights for all cognitive tasks.

Some important brain regions are identified by our FGL-MTFL (see Fig. 7), such as Middle Temporal (Yan et al. 2015; Xu et al. 2016; Visser et al. 2002; Zhu et al. 2016), Hippocampus (Zhu et al. 2016) and Entorhinal (Yan et al. 2015), regions that are highly relevant to cognitive impairment. These findings are in accordance with current understanding of the pathological pathway of AD, and reports that these identified brain regions are highly related to clinical functions. For example, the hippocampus is located in the temporal lobe of the brain and participates in memory and spatial navigation. The Entorhinal cortex is the first area of the brain to be affected in Alzheimer’s disease, and is typically subjected to the most heavy damage with the progression of Alzheimer’s disease (Hoesen et al. 1991).

Conclusion

Many clinical/cognitive measures have been designed to evaluate patient cognitive status for use as criteria for clinical diagnosis of probable AD. In this paper, we propose a multi-task learning framework for predictive modeling of cognitive measures based on MRI data from ADNI. The existing MTL approach neglects the relationships between outcomes and between features. We consider the multi-task learning problem assuming unequal correlation of the tasks and effects of different correlated tasks on different brain regions. Based on the intuitive motivation that tasks should be related to a group of features, we exploited the global task-common structure as well as task-ROI specific structure, and present a novel fused group lasso regularized multi-task learning method (FGL-MTFL). Experiments and comparisons of this model with baseline methods illustrate that FGL-MTFL offers consistently better performance than several currently applied multi-task learning algorithms. In the current work, only a priori group information is incorporated into the multi-task predictive model, but there is no ability to automatically learn the feature groups. In future work, we will investigate other structures in features, such as graph structure, which can provide additional insights to understand and interpret data. Our current work is based on linear methods, but kernel methods can model the cognitive scores as nonlinear functions of neuroimaging measures. Recently, many kernel-based classification or regression methods with faster optimization speed or stronger generalization performance have been investigated by theoretically and experimentally. Our future work will focus on kernel-based multi-task learning to better capture the complex but more flexible relationship between cognitive scores and the neuroimaging measures.

Information Sharing Statement

Source code is available at: https://bitbucket.org/XIAOLILIU/fgl-mtfl. Both the source code and documentation are available on request. Contact: neuxiaoliliu@gmail.com and caopeng@cse.neu.edu.cn.

References

Alzheimer’s Association, & et al. (2016). Alzheimer’s disease facts and figures. Alzheimer’s & Dementia, 12(4), 459–509.

Argyriou, A., Evgeniou, T., Pontil, M. (2008). Convex multi-task feature learning. Machine Learning, 73(3), 243–272.

Batsch, N.L., & Mittelman, M.S. (2015). World Alzheimer Report 2012. Overcoming the stigma of dementia. Alzheimer’s Disease International (ADI), p. 5.

Boyd, S., Parikh, N., Chu, E., Peleato, B., Eckstein, J. (2011). Distributed optimization and statistical learning via the alternating direction method of multipliers. Foundation and Trends in Machine Learning, 3(1), 1–122.

Cai, J.-F., Osher, S., Shen, Z. (2009). Split bregman methods and frame based image restoration. Multiscale modeling & simulation, 8(2), 337–369.

Cao, P., Liu, X., Yang, J., Zhao, D., Zaiane, O. (2017). Sparse multi-kernel based multi-task learning for joint prediction of clinical scores and biomarker identification in alzheimer’s disease. In International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, pp. 195–202.

Caruana, R. (1998). Multitask learning. In Learning to learn. Springer, pp. 95–133.

Castellani, R.J., Rolston, R.K., Smith, M.A. (2010). Alzheimer disease. Disease-a-month: DM, 56(9), 484.

Chen, J., Zhou, J., Ye, J. (2011). Integrating low-rank and group-sparse structures for robust multi-task learning.

Dale, A.M., Fischl, B., Sereno, M.I. (1999). Cortical surface-based analysis. I. Segmentation and surface reconstruction. NeuroImage, 9, 179–194.

Dale, A.M., & Sereno, M.I. (1993). Improved localization of cortical activity by combining EEG and MEG with MRI cortical surface reconstruction: a linear approach. Journal of Cognitive Neuroscience, 5(2), 162–176.

Desikan, R.S., Ségonne, F., Fischl, B., Quinn, B.T., Dickerson, B.C., Blacker, D., Buckner, R.L., Dale, A.M., Maguire, R.P., Hyman, B.T. (2006). An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. NeuroImage, 31(3), 968–980.

Evgeniou, T., & learning, M.P. (2004). Regularized multi–task. In Proceedings of the 10th ACM SIGKDD international conference on Knowledge discovery and data mining. ACM, pp. 109–117.

Fischl, B., Liu, A., Dale, A.M. (2001). Automated manifold surgery: constructing geometrically accurate and topologically correct models of the human cerebral cortex. IEEE Transactions on Medical Imaging, 20, 70–80.

Fischl, B., Salat, D.H., Busa, E., Albert, M., Dieterich, M., Haselgrove, C., van der Kouwe, A., Killiany, R., Kennedy, D., Klaveness, S., Montillo, A., Makris, N., Rosen, B., Dale, A.M. (2002). Whole brain segmentation: automated labeling of neuroanatomical structures in the human brain. Neuron, 33, 341–355.

Fischl, B., Salat, D.H., van der Kouwe, A.J., Makris, N., Segonne, F., Quinn, B.T., Dale, A.M. (2004). Sequence-independent segmentation of magnetic resonance images. NeuroImage, 23, S69–S84.

Frisoni, G.B., Fox, N.C., Jack, C.R., Scheltens, P., Thompson, P.M. (2010). The clinical use of structural MRI in Alzheimer disease. Nature Reviews Neurology, 6(2), 67–77.

Goncalves, A., Das, P., Chatterjee, S., Sivakumar, V., Zuben, F.J.V., Banerjee, A. (2014). Multi-task sparse structure learning. In In CIKM, pp. 451–460.

Jebara, T. (2011). Multitask sparsity via maximum entropy discrimination. Journal of Machine Learning Research, 12(Jan), 75–110.

Ji, S., & Ye, J. (2009). An accelerated gradient method for trace norm minimization. In Proceedings of the 26th annual international conference on machine learning. ACM, pp. 457–464.

Liu, J., Ji, S., Ye, J. (2009). Multi-task feature learning via \(\ell _{2,1}\)-norm minimization. In Proceedings of the 25th conference on uncertainty in artificial intelligence. AUAI Press, pp. 339–348.

Liu, X., Cao, P., Zhao, D., Zaiane, O., et al. (2017). Group guided sparse group lasso multi-task learning for cognitive performance prediction of alzheimer’s disease. In International Conference on Brain Informatics. Springer, pp. 202–212.

Liu, X., Goncalves, A.R., Cao, P., Zhao, D., Banerjee, A., et al. (2017). Modeling Alzheimer’s disease cognitive scores using multi-task sparse group lasso. Computerized Medical Imaging and Graphics, 66, 100–114.

Reuter, M., Rosas, H.D., Fischl, B. (2010). Highly accurate inverse consistent registration: A robust approach. NeuroImage, 53(4), 1181–1196.

Segonne, F., Dale, A.M., Busa, E., Glessner, M., Salat, D., Hahn, H.K., Fischl, B. (2004). A hybrid approach to the skull stripping problem in MRI. NeuroImage, 22, 1060–1075.

Ségonne, F., Pacheco, J., Fischl, B. (2007). Geometrically accurate topology-correction of cortical surfaces using nonseparating loops. IEEE Transactions on Medical Imaging, 26(4), 518–529.

Li, S., Saykin, A.J., Risacher, S.L., Kim, S., Fang, S., Rao, B.D., Li, T., Yan, J., Zhang, Z., Wan, J. (2012). Sparse bayesian multi-task learning for predicting cognitive outcomes from neuroimaging measures in alzheimer.

Sled, J.G., Zijdenbos, A.P., Evans, A.C. (1998). A nonparametric method for automatic correction of intensity nonuniformity in MRI data. IEEE Transactions on Medical Imaging, 17, 87–97.

Stonnington, C.M., Chu, C., Klöppel, S., Jack, C.R., Ashburner, J., Frackowiak, R.S.J. (2010). Alzheimer disease neuroimaging initiative predicting clinical scores from magnetic resonance scans in Alzheimer’s disease. NeuroImage, 51(4), 1405–1413.

Hoesen, G.W.v., Hyman, B.T., Damasio, A.R. (1991). Entorhinal cortex pathology in Alzheimer’s disease. Hippocampus, 1(1), 1–8.

Visser, P.J., Verhey, F.R.J., Hofman, P.A.M., Scheltens, P., Jolles, J. (2002). Medial temporal lobe atrophy predicts Alzheimer’s disease in patients with minor cognitive impairment. Journal of Neurology Neurosurgery & Psychiatry, 72(4), 491–497.

Wan, J., Zhang, Z., Rao, B.D., Fang, S., Yan, J., Saykin, A.J., Li, S. (2014). Identifying the neuroanatomical basis of cognitive impairment in Alzheimer’s disease by correlation-and nonlinearity-aware sparse Bayesian learning. IEEE transactions on medical imaging, 33(7), 1475–1487.

Wan, J., Zhang, Z., Yan, J., Li, T., Rao, B.D., Fang, S., Kim, S., Risacher, S.L., Saykin, A.J., Li, S. (2012). Sparse Bayesian multi-task learning for predicting cognitive outcomes from neuroimaging measures in Alzheimer’s disease. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 940–947.

Wang, H., Nie, F., Huang, H., Risacher, S., Ding, C., Saykin, A.J., Li, S. (2011). ADNI Sparse Multi-task regression and feature selection to identify brain imaging predictors for memory performance. In International Conference on Computer Vision, pp. 6–13.

Wang, H., Nie, F., Huang, H., Yan, J., Kim, S., Risacher, S., Saykin, A., Li, S. (2012). High-order multi-task feature learning to identify longitudinal phenotypic markers for Alzheimer’s disease progression prediction. In Advances in Neural Information Processing Systems, pp. 1277–1285.

Weiner, M.W., Aisen, P.S., Jack, C.R. Jr, Jagust, W.J., Trojanowski, J.Q., Shaw, L., Saykin, A.J., Morris, J.C., Cairns, N., Beckett, L.A., Toga, A., Green, R., Walter, S., Soares, H., Snyder, P., Siemers, E., Potter, W., Cole, P.E., Schmidt, M. (2010). The Alzheimer’s disease neuroimaging initiative: progress report and future plans. Alzheimer’s & Dementia, 6, 202–211.

Xu, L., Wu, X., Li, R., Chen, K., Long, Z., Zhang, J., Guo, X., Yao, L. (2016). Prediction of progressive mild cognitive impairment by multi-modal neuroimaging biomarkers. Journal of Alzheimer’s Disease, 51 (4), 1045–1056.

Xue, Y., Liao, X., Carin, L., Krishnapuram, B. (2007). Multi-task learning for classification with dirichlet process priors. Journal of Machine Learning Research, 8(Jan), 35–63.

Yan, J., Huang, H., Risacher, S.L., Kim, S., Inlow, M., Moore, J.H., Saykin, A.J., Shen, L. (2013). Network-guided sparse learning for predicting cognitive outcomes from MRI measures. In International Workshop on Multimodal Brain Image Analysis. Springer, pp. 202–210.

Yan, J., Li, T., Wang, H., Huang, H., Wan, J., Nho, K., Kim, S., Risacher, S.L., Saykin, A.J., Shen, L., et al. (2015). Cortical surface biomarkers for predicting cognitive outcomes using group \(\ell _{2,1}\) norm. Neurobiology of aging, 36, S185–S193.

Ye, G.-B., & Xie, X. (2011). Split bregman method for large scale fused lasso. Computational Statistics & Data Analysis, 55(4), 1552–1569.

Yu, K., Tresp, V., Schwaighofer, A. (2005). Learning gaussian processes from multiple tasks. In Proceedings of the 22nd international conference on Machine learning. ACM, pp. 1012–1019.

Yuan, L., Liu, J., Ye, J. (2013). Efficient methods for overlapping group lasso. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(9), 2104–2116.

Zhang, D., Shen, D., Alzheimer’s Disease Neuroimaging Initiative. (2012). Multi-modal multi-task learning for joint prediction of multiple regression and classification variables in Alzheimer’s disease. NeuroImage, 59(2), 895–907.

Zhang, Y., & Yeung, D.-Y. (2012a). A convex formulation for learning task relationships in multi-task learning. In Conference on Uncertainty in Artificial Intelligence (UAI2010) 2010, pp. 733–742.

Zhang, Y., & Yeung, D.-Y. (2012b). A convex formulation for learning task relationships in multi-task learning. arXiv:1203.3536.

Zhou, J., Chen, J., Ye, J. (2011). Clustered multi-task learning via alternating structure optimization. In Advances in neural information processing systems, pp. 702–710.

Zhou, J., Liu, J., Narayan, V.A., Ye, J., Alzheimer’s Disease Neuroimaging Initiative. (2013). Modeling disease progression via multi-task learning. NeuroImage, 78, 233–248.

Zhou, J.Y. Multi-task learning in crisis event classification. Technical report, Tech. Rep., http://www.public.asu.edu/jzhou29.

Zhu, X., Suk, H.-I., Lee, S.-W., Shen, D. (2016). Subspace regularized sparse multitask learning for multiclass neurodegenerative disease identification. IEEE Transactions on Biomedical Engineering, 63(3), 607–618.

Acknowledgements

This research was supported by the National Science Foundation for Distinguished Young Scholars of China under Grant (No.71325002 and No.61225012), the National Natural Science Foundation of China (No.61502091), the Fundamental Research Funds for the Central Universities (No.N161604001 and No.N150408001).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Liu, X., Cao, P., Wang, J. et al. Fused Group Lasso Regularized Multi-Task Feature Learning and Its Application to the Cognitive Performance Prediction of Alzheimer’s Disease. Neuroinform 17, 271–294 (2019). https://doi.org/10.1007/s12021-018-9398-5

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12021-018-9398-5