Abstract

Industrial robot positioning technology is a key component of industrial automation and intelligent manufacturing. Accurate positioning can effectively promote industrial development. Existing positioning technologies such as Monte Carlo positioning methods still suffer from inaccurate positioning in complex environments. Therefore, a localization method for industrial robots based on an improved Monte Carlo algorithm was proposed. Meanwhile, this method was optimized by combining scanning matching technology. Finally, simulation experiments were conducted to verify it. These experiments confirmed that the average positioning error of the proposed improved Monte Carlo algorithm in a simple environment was 1.55 cm. In complex environments such as obstacle edges and narrow corridors, the optimization method combining scanning matching further reduced the average positioning error to 0.35 cm, demonstrating superior positioning performance. In addition, the optimization method combining scanning matching maintained a positioning accuracy of over 95.00% in complex environments, far higher than traditional positioning methods. Moreover, it maintained low error and high positioning accuracy when facing various motion paths. Its error still did not exceed 1.00 cm, and the attitude angle error was less than 0.005 rad. In summary, compared with the existing methods, the positioning accuracy and accuracy of the proposed industrial robot positioning method are significantly improved, showing the positioning effect that is highly compatible with the target path. By providing more precise robot positioning, the robot’s motion path and task execution can be optimized, reducing errors and collisions, thereby increasing productivity. Therefore, the precise robot positioning technology proposed in this study has important strategic significance for promoting the modernization of manufacturing industry, promoting scientific and technological progress and realizing sustainable development.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In the era of Industry 4.0, the integration of artificial intelligence and robotics technology is fundamentally changing traditional manufacturing. Industrial robots play a role in industrial automation and intelligent manufacturing on highly repetitive assembly lines. These robots also play a crucial role in precision machining, quality inspection, material handling, and even complex operational tasks [1, 2]. As one of the key technologies in industrial automation, precise positioning of robots is crucial for achieving efficient and flexible automated production [3]. In complex industrial environments, robots need to accurately perceive their own position and posture to perform precise control and path planning [4]. However, with the increasing complexity of factory environments and the increasing demand for production flexibility and response speed, traditional positioning methods are no longer able to meet the needs of modern industry [5]. For example, positioning systems that rely on preset markers or infrastructure often lack the necessary flexibility and scalability. These systems may require expensive reconfiguration in the event of environmental changes or device upgrades [6]. Therefore, researchers have been exploring more advanced and reliable positioning technologies. The technique of using Monte Carlo methods to estimate the state of robots in the environment has advantages in dealing with nonlinear and non-Gaussian noise problems [7]. However, standard Monte Carlo positioning techniques still have some limitations in practical applications, such as particle degradation, limited positioning accuracy, and insufficient adaptability to complex environments [8].

The positioning of industrial robots plays an important role in modern manufacturing. Accurate positioning technology enables robots to adapt to changing work environments and task requirements, quickly adjust work strategies, and enhance flexibility. In addition, robot positioning is a foundation for achieving device interconnection, data analysis, and intelligent decision-making. Therefore, numerous scholars around the world conducted research on positioning technology. Guan et al. proposed a loosely coupled visible light positioning inertial fusion method based on visible light positioning technology to meet the demand for precise positioning in indoor positioning services and robotics. By combining an Inertial Measurement Unit (IMU) and a rolling shutter camera, the robustness of positioning was improved in the event of insufficient or interrupted light sources [9]. Kammel et al. proposed a method for determining position by integrating standard passive ultra-high frequency radio frequency fingerprint recognition technology with robot odometer data. This improved the absolute positioning accuracy of the mobile robot platform in indoor environments, effectively enhancing the robot positioning accuracy [10]. Akai proposed a novel probability model to address the uncertainty issue of regressing depth values from camera images in mobile robot localization. This solved the uncertainty of processing depth regression results while positioning the robot pose, thereby ignoring inaccurate depth regression results and enhancing the robustness of positioning [11]. Li et al. proposed a method that combined global visual and semantic information as landmarks in Bayesian filter observation models. This solved the dependency on local, global visual or semantic information in robot visual localization. By introducing an improved Gaussian process into an observation model with visual information, the localization accuracy was improved [12]. Xu et al. proposed a hybrid colored Kalman filter and a colored extended unbiased finite impulse response filter to address the problems of declining positioning accuracy and algorithm divergence in indoor mobile robot positioning, and developed an adaptive filter algorithm using a filter bank combining the average field of view, thereby improving the robot positioning accuracy [13]. Aiming at the challenge of real-time positioning of human joint in dynamic environment, Jha et al. proposes a human pose estimation method using Kinect depth sensor and MediaPipe framework, calculates joint Angle through inverse kinematics, and controls humanoid robot through Python-Arduino communication. Thus, the real-time positioning accuracy of human joints is improved [14].

In mobile robot positioning, Kalman filters and particle filters may perform poorly or exhibit divergence due to interference or measurement loss. Therefore, Lee et al. proposed an improved nonlinear finite memory estimation algorithm that reduced the adverse effects of interference, including linearization errors, thereby improving the localization accuracy [15]. Nguyen et al. proposed an ultra wideband assisted multi-robot positioning system that did not rely on loop closure and only required odometer data from neighboring robots to provide more accurate local and global positioning estimates. In wireless sensor networks, there are issues with measurement data loss and robot abduction in mobile robot localization [16]. Therefore, Suh et al. proposed a limited memory interactive multi-model estimation algorithm based on nearest finite measurements. This ensured the positioning accuracy even when the mobile robot suddenly changed speed and direction [17]. Güler et al. proposed an onboard relative localization framework for multi-robot systems to address the issue that robot populations could not rely on positioning infrastructure to obtain global positions. This experiment introduced a filtering method to improve the estimation accuracy of tag robots’ position. Meanwhile, the maneuvering of tag robots was captured with acceptable accuracy, achieving high-precision positioning [18]. Lajoie and Beltrame proposed an open source collaborative simultaneous positioning and map building system designed to be scalable, flexible, decentralized and sparse, aiming at the key problem of multi-robot cooperative operation in indoor, underground or underwater environments without external positioning systems. It also supports LiDAR, stereo vision, RGB-D sensing and a novel robot loop closure priority technology, thus realizing robot positioning in special environments [19]. Gonzalez et al. proposed an adaptive deburring method based on industrial computer vision to address the challenges of operator influence, workpiece variability adaptation, and burr shape recognition during mechanical deburring. By analyzing simple images in real time, the path and conditions of machining tools can be dynamically adjusted to rapidly and accurately locate and detect edges and burrs. It provides a new perspective for robot positioning [20].

In summary, the research on industrial robot positioning technology is constantly advancing, improving the accuracy, robustness, and real-time performance of robots in dynamic environments. However, few scholars have conducted research on Monte Carlo localization methods. Therefore, this study proposes an Improved Monte Carlo Localization Algorithm (IMCLA). By adaptively adjusting the particles, introducing random particles, and improving the sampling strategy, the accuracy of localization is improved combined with scanning matching. This study aims to provide new solutions for autonomous navigation and precise control of industrial robots, promoting industrial automation and intelligent manufacturing. The innovation of the research lies in its ability to dynamically adjust the number of particles and introduce random particles to enhance the algorithm's recovery ability in the face of abnormal situations such as kidnapping. Meanwhile, combining with the map environment, while improving positioning accuracy, the algorithm's adaptability to complex environments is enhanced.

2 Methods and materials

Firstly, based on a detailed explanation of the Monte Carlo localization method, this section proposes improvements to its three shortcomings and proposes IMCLA. Then, IMCLA is combined with scanning matching to perform secondary optimization on the positioning method of industrial robots, further improving the positioning accuracy. The technical process of the study is shown in Fig. 1.

2.1 Improved Monte Carlo localization algorithm

Some traditional robot localization methods typically combine Extended Kalman Filter (EKF) for nonlinear system state estimation with IMU that provides raw data on robot motion. However, this method does not consider external environmental information and only relies on internal sensor information of the robot as the localization basis, resulting in low accuracy [21, 22]. Monte Carlo can integrate laser data to effectively consider the external environment. Therefore, the study proposes IMCLA based on the improvement of Monte Carlo. Monte Carlo represents the confidence of robot pose as a set of particles, rather than a single estimate. Figure 2 shows the Monte Carlo localization method.

In Fig. 2, the Monte Carlo localization method mainly consists of three steps, namely particle set initialization, particle sampling, and particle resampling. When initializing the particle set, there are two situations: the initial pose of the robot is unknown and known[23]. For unknown situations, Monte Carlo localization uniformly distributes particles with the same weight. For unknown situations, only a portion of particles are randomly selected from the pose prior coins and assigned the same weight. The initial pose \(x_{0}\) of the robot is represented by Eq. (1).

In Eq. (1), \(M\) represents the quantity of particles. \(x_{0}^{i}\) represents the \(i\) th particle in the particle set representing the initial pose. \(\frac{1}{M}\) represents a particle weight. In particle sampling, the Monte Carlo localization method adjusts the pose of the robot at the next moment based on the control inputs in the motion model. Therefore, the predicted pose particle set and the weight of each particle are obtained, represented by Eq. (2).

In Eq. (2), \(X_{t + 1}\) and \(w_{t + 1}^{i}\) represent the particle set and weights at time \(t + 1\), respectively. \(u_{t + 1}\) represents the input control at time \(t + 1\). \(X_{t}\) represents the particle set at time \(t\). \(z_{t + 1}\) represents the observation data at time \(t + 1\). \(sample\left( \cdot \right)\) and \(p\left( \cdot \right)\) represent the sampling function and likelihood function, respectively. Particle resampling is performed on particles in \(X_{t + 1}\) based on \(w_{t + 1}^{i}\). Particles with higher weights have a higher probability of being sampled. The result of resampling is represented by Eq. (3).

In Eq. (3), \(resample\left( \cdot \right)\) represents the resampling function. \(X_{t}^{\prime }\) is the posterior particle set. The particles in this collection integrate information from laser sensors, more accurately reflecting the robot's state in the environment. At this point, the pose \(x_{t}\) of the robot is represented by Eq. (4).

In Eq. (4), \(x_{t}^{\prime i}\) represents the \(i\) th particle in the posterior particle set at this time. \(W_{i}\) represents the weight of \(x_{t}^{\prime i}\). By continuously applying motion and measurement models, the Monte Carlo localization method can dynamically update the particle set, thereby tracking the state changes of the robot. The improvement of Monte Carlo in research includes three aspects in Fig. 3.

In Fig. 3, improvements were made in three aspects: particle quantity, kidnapping problem, and sampling error. The particle quantity problem refers to the need for a large number of particles in Monte Carlo to ensure the accuracy of localization. However, it will increase the computational burden and cause resource waste. The kidnapping problem refers to the situation where the algorithm may not be able to accurately track the pose of a robot when it is moved to a new position, as resampling may result in the loss of correct particles [24]. Sampling error refers to the limitation of positioning accuracy on the accuracy of motion and measurement models. Moreover, errors in sensors such as odometers can affect the final positioning results. This study proposes a method of "adaptive particle quantity" to solve the particle quantity problem. By adjusting the Kullback–Leibler Divergence (KLD) of particles, the particle set can adaptively change as needed. A histogram \(H\) is set to represent the occupied space of particles. If the quantity of vacancies occupied by particles in \(H\) is \(k\), the upper limit of the particle set is represented by Eq. (5).

In Eq. (5), \(M_{x}\) represents the upper limit quantity of particles. \(\varepsilon\) represents the margin of error. \(z_{1 - \delta }\) represents the quantile of a normal distribution. \(1 - \delta\) represents the sampling frequency of KLD. Specifically, when initializing, \(H\) has more vacancies and \(k\) is larger, resulting in a larger \(M_{x}\). After determining the robot pose, the particle set converges, resulting in fewer vacancies in \(H\), smaller \(k\), and a decrease in \(M_{x}\), avoiding wasting computing resources. This study proposes to solve the kidnapping problem by introducing random particles, which involves setting a probability \(c\), represented by Eq. (6).

In Eq. (6), \(w_{f}\) represents the long-term mean change in particle weights. \(w_{s}\) represents the short-term mean change in particle weights. The updates of these two are represented by Eq. (7).

In Eq. (7), \(\alpha_{f}\) and \(\alpha_{s}\) represent the long-term decay rate and short-term decay rate, respectively. \(w_{a}\) represents the mean weight of particles. In each iteration of resampling, the particles quantity increases by probability DD, rather than increasing by a fixed value. This can introduce new pose particles when positioning fails, helping Monte Carlo recover from incorrect poses and enhancing its stability. In response to sampling errors, the study adopts an improved sampling method. The traditional Monte Carlo positioning algorithm uses wheel odometer data for sampling, resulting in significant errors. The improved sampling method also utilizes LiDAR data to correct the predicted pose of EKF, achieving the re fusion of pose data and laser data, thereby improving positioning accuracy. Figure 4 shows the IMCLA process proposed in the study.

In Fig. 4, after IMCLA initialization, an EKF motion model is used to sample and process the robot pose information from the previous moment. Subsequently, the particle set was updated with the data obtained from the laser data model processing to obtain the robot pose information. Furthermore, IMCLA updates \(w_{f}\) and \(w_{s}\), determines the probability \(c\), and uses it for resampling to add random particles. After adding random particles, IMCLA uses KLD sampling to control the maximum number of particles in the particle set. Finally, conditional judgment is performed. When the particles in the particle set reach \(M_{x}\), sampling is stopped. Meanwhile, the robot’s optimal pose output at the current moment is obtained. It is worth noting that IMCLA does not stop after completing a robot pose update, but instead returns to the prediction update step to cycle the entire process and achieve real-time positioning of the robot.

Based on the above content, three optimization methods are summarized as follows. Firstly, the adaptive particle number adjustment method is proposed to dynamically adjust the total number of particles by KLD to adapt to the requirements of positioning accuracy in different environments. This method increases the number of particles when the initial pose of the robot is uncertain to ensure that a wider search space is covered, and reduces the number of particles when the pose information becomes clear to reduce the computational cost, thus effectively balancing the positioning accuracy and the use of computational resources. Secondly, in order to solve the kidnapping problem, the concept of random particles is introduced. In the resample process, a new particle representing the possible pose is introduced according to the set probability value to help the algorithm recover quickly and maintain the accuracy of the position when the pose of the robot changes suddenly. Finally, to solve the problem of sampling error, the sampling strategy is improved, which no longer relies solely on wheel odometer data, but integrates LiDAR data and extended Kalman filter to predict the pose. This method of multi-sensor data fusion improves the accuracy of robot pose prediction and reduces the influence of sensor error and environmental factors on the positioning accuracy.

2.2 Localization optimization combining IMCLA and scanning matching

The final output of IMCLA is the weighted mean of the particle set. However, when the robot is in a complex environment, due to the influence of obstacles and other environmental factors, there is a certain deviation between the robot’s pose obtained by IMCLA and its true pose. Therefore, for positioning in complex environments, a method combining IMCLA with scanning matching is proposed to further optimize positioning technology and improve positioning accuracy. Before performing scan matching, it is necessary to first construct a map of the robot's environment. Therefore, this study chooses Gmapping to complete the establishment of the map. Gmapping mainly includes four steps: particle sampling, weight calculation, selective resampling, and map updating. Figure 5 shows the specific process.

In Fig. 4, the particle sampling and weight calculation in Gmapping are similar to IMCLA, and the particle pose is updated using IMCLA. When performing selective resampling, a parameter \(E\) is set in Gmapping to represent the effectiveness of the particle swarm, represented by Eq. (8).

In Eq. (8), \(w_{i}\) represents the weight of the \(i\) th particle in the particle swarm. \(N\) represents the total number of particles. The threshold set for the study is \(N/2\). When \(E\) is less than \(N/2\), resample the particle swarm [25]. On the contrary, no sampling is performed. This approach can effectively solve the particle degradation caused by frequent resampling. Finally, the map is updated, represented by Eq. (9).

In Eq. (9), \(\widehat{x}_{t}^{\left( i \right)}\) represents the map information around the \(i\) th particle at time \(t\). \(m_{t - i}^{\left( i \right)}\) represents the motion data of the \(i\) th particle at time \(t - 1\). \(z_{t}\) represents the observation data obtained at time \(t\). \(x^\circ\) represents the initial value of the particle set. The foundation of IMCLA implementation is to configure laser scanning equipment for robots [26]. This study uses the maps obtained from Gmapping to represent them using a grid network map. Therefore, the positioning of robots with laser devices in grid network maps can be transformed into a matching optimization problem, represented by Eq. (10).

In Eq. (10), \(\zeta\) and \(\zeta^{*}\) represent robot’s estimated and actual pose, respectively. \(S_{i} \left( \zeta \right)\) represents the coordinates of the endpoint of the robot laser equipment’s radiation in the grid network map. \(M\left( {S_{i} \left( \zeta \right)} \right)\) represents the occupancy probability of the map at coordinate \(S_{i} \left( \zeta \right)\). \(S_{i} \left( \zeta \right)\) is represented by Eq. (11).

In Eq. (11), \(\zeta_{x}\) and \(\zeta_{y}\) represent the coordinates of the robot in the grid network map. \(\theta\) represents robot’s heading angle. \(x_{laser}\) and \(y_{laser}\) represent the laser equipment’s coordinates on a robot. \(\theta_{laser}\) represents an angle between the laser equipment’s heading angle and a robot. The goal of scanning matching in the study is to minimize the deviation \(\Delta \zeta\) between \(\zeta\) and \(\zeta^{*}\). The objective function \(F\) is represented by Eq. (12).

Gaussian Newton iteration is used to optimize \(F\). The weighted mean of the particle set output by IMCLA is taken as \(\zeta\). The Taylor series expansion is used to approximate the nonlinear regression model, ultimately achieving the minimum. At this point, the laser beam endpoint can match the edges of obstacles in the real map, thereby improving the positioning accuracy of the robot in complex environments. On this basis, the study introduces Discrete Fourier Transform (DFT) to further eliminate errors caused by possible measurement anomalies. Figure 6 shows the basic idea of DFT.

Figure 6a shows that the robot is at the center of the measurement environment. Figure 6b shows that the robot's pose has shifted, indicating that its estimated pose is moving upwards to the right. The laser distance in Fig. 6a is set to \(d_{mn}\), which represents the distance obtained by the laser scanner, i.e. the true distance. The laser distance in Fig. 6b is set to \(d_{vn}\), which represents the virtual laser measurement value, i.e. the estimated distance. The function \(f = d_{mn} - d_{vn}\) is constructed, with a sine shaped curve. The distance offset between the actual pose and the estimated pose is reflected in the amplitude of the function \(f\). The directional shift is reflected in the phase of the function \(f\) [27]. Furthermore, the amplitude and phase of \(f\) are taken as the first input \(D_{1}\) of DFT, represented by Eq. (13).

In Eq. (13), \(S\) represents the number of points scanned by the laser. \(s\) represents the \(s\) th laser point. \(i\) represents imaginary units. Assuming that the error between the actual pose of the robot and the estimated pose is small, it is represented by Eq. (14).

In Eq. (14), \(x_{vn}\) and \(y_{vn}\) represent the estimated pose. \(x_{mn}\) and \(y_{mn}\) represent the true pose. \(x_{err}\) and \(y_{err}\) represent pose errors. According to Eq. (14), Eq. (15) can be obtained.

Therefore, according to Eq. (15), the pose offset of the robot can be obtained, and abnormal measurement values can be eliminated based on the pose offset. Figure 7 shows a localization optimization method combining IMCLA and scan matching.

In Fig. 7, the output of IMCLA, which is the weighted mean of the particle set, is used as the input for the optimization localization method. Meanwhile, the convergence of the particle set is first judged. If it converges, scanning matching is performed. Subsequently, after using Gaussian Newton iteration to match the laser beam endpoint with the edge of the obstacle, a preliminary estimate of the pose was obtained. Further filtering operations are completed using the DFT module to eliminate the deviation between the estimated pose and the actual pose. Finally, the robot pose output at this time is compared with the robot pose obtained by IMCLA. If the former converges, the pose is optimized and used as the final robot pose output. On the contrary, it indicates optimization failure, and the results are abandoned.

3 Results

The proposed IMCLA localization method and the optimized localization method combining IMCLA with scanning matching were validated. The Robot Operation System (ROS) was used as the software platform to conduct repeated positioning experiments and motion positioning experiments. The experimental results were analyzed in detail.

3.1 Experimental and analysis of repeated positioning methods for industrial robots

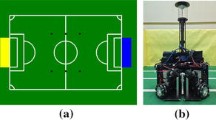

ROS was chosen as the software platform for the study. Gazebo 3D physical simulation platform was chosen to create a simulated real environment. A robot with sensors, LiDAR and other equipment was designed as the experimental object. The comparative methods selected for the study are global positioning system-based positioning method, artificial sideline-based positioning method, and vision-based positioning method. These three comparative methods were named M1, M2, and M3 in sequence. A method combining IMCLA with scanning matching was named IMCLA-Plus. Based on previous experience, reference to existing literature, and continuous experiments, the algorithm parameters were set as follows: the total number of particles was 500, the initial weight of particles was 1/500, the sampling frequency of KLD was 1 Hz, the error limit was 0.10 m, the probability of adding random particles was 0.08, and the number of iterations was 50. The convergence threshold is set to 10–4, the number of laser scanning points and frequency range of DFT are 200 and 1 Hz, respectively, and the matching threshold of scanning matching is 0.05m.

In the repeated positioning experiment, three points were selected as the target points in the simulated map, named after points 1–3. Point 1 is located in an open and simple environment, point 2 is located around obstacles, and point 3 is located in a narrow corridor environment. This robot was controlled to start from the origin and pass through three points in sequence, repeating 10 times. Figure 8 shows the data for point 1.

In Fig. 8a, the maximum absolute error of M1 positioning reached 5.16 cm. The maximum absolute error of M2 positioning reached 4.02 cm. The maximum absolute error during M3 positioning reached 3.94 cm. The proposed IMCLA and IMCLA-Plus had an error range of within 1.00 cm. In Fig. 8b, the localization accuracy of the proposed IMCLA and IMCLA-Plus was both above 95.00%, while the three comparison methods were all below 95.00%. Overall, for point 1 in a simple environment, the positioning performance of IMCLA and IMCLA-Plus was much better than the comparison method, but the difference was not significant. Table 1 shows the positioning results of point 2.

In Table 1, the average positioning error of M1 was 4.28 cm. The average positioning error of M2 was 3.64 cm. The average positioning error of M1 was 2.95 cm. The average positioning error of the proposed IMCLA was 1.55 cm, which was significantly improved compared to the error in point 1. The average positioning error of IMCLA-Plus was only 0.35 cm, which was not much different from the error in point 1. This indicated that the error of IMCLA in locating robots at the edge of obstacles was much lower than the comparison algorithms’. After combining with scanning matching, the positioning accuracy of IMCLA-Plus was further improved, indicating the effectiveness of the research content. Figure 9 shows the positioning simulation of point 3.

In Fig. 9a, the positioning distance of these three comparison methods was relatively far from the target point. The distribution of 10 positions was relatively scattered, with M1 being particularly prominent. This is because the global positioning system has weak indoor positioning capabilities, and point 3 is located in a narrow aisle, resulting in poor positioning performance. In Fig. 9b, the localization effect of IMCLA and IMCLA-Plus was significantly affected by the three comparison methods. The overlap between IMCLA-Plus and the target point reached 90%, further indicating that after optimizing IMCLA, its positioning performance was greatly improved in complex environments.

3.2 Experiment and analysis of motion positioning methods for industrial robots

In the motion localization experiment, simple, pile around, and complex motion paths were designed. In addition, based on the results of repeated positioning experiments, the study selected M3 with better positioning performance as the comparative method. Figure 10 shows the localization results of various methods on a simple motion path.

In Fig. 10a, the fit degree with the designed simple motion path, from high to low, was IMCLA-Plus, IMCLA, and M3, respectively. Figure 10b shows the positioning errors of three methods with simple motion paths. The maximum error of M3 was 2.98 cm, and the minimum was 1.27 cm. The error of IMCLA was between 0.00 cm and 1.52 cm. The error of IMCLA-Plus still did not exceed 1.00 cm. Figure 11 shows the positioning results of various methods on the path of pile movement.

In Fig. 11a, in the positioning of the motion path around the pile, the IMCLA-Plus was positioned close to a straight line, and its attitude angle changed less. M3 and IMCLA both had varying degrees of angular displacement in positioning, and their paths exhibited curves. Figure 11b shows the attitude angle error. The average attitude angle error of M3 reached 0.034 rad, IMCLA was 0.13 rad, and IMCLA-Plus was less than 0.05 rad, making its positioning of robot posture more accurate. Figure 12 shows the result in complex motion paths.

In Fig. 12, when facing complex motion paths in a complex environment, the positioning effect of M3 was greatly reduced, and even a significant positioning deviation appeared in the upper right corner, which did not match the path. IMCLA improved positioning performance compared to M3, with its positioning path following the target path and the deviation occurring within the acceptable range. The positioning effect of IMCLA-Plus was the best, and its positioning path fit was almost the same as the target path, which matched the previous experimental results. This is due to the addition of Gmapping, matching scanning, and DFT modules on top of IMCLA.

4 Discussion and conclusion

There are problems with low accuracy of industrial robot positioning in complex environments and the number of particles in Monte Carlo positioning methods. Therefore, the study improved the Monte Carlo method using three methods: “adaptive particle quantity”, “introducing random particles”, and “improved sampling method”. IMCLA was proposed and combined with scanning matching to further optimize the robot positioning method. These experiments confirmed that in the repeated positioning experiment at point 1, the error range of IMCLA and IMCLA-Plus was within 1.00 cm, while the errors of traditional methods were 5.16 cm, 4.02 cm, and 3.94 cm, respectively. At point 2, the average positioning error of IMCLA was 1.55 cm, while IMCLA-Plus was reduced to 0.35 cm, which was significantly improved compared to traditional methods. In the narrow corridor environment at point 3, IMCLA-Plus had the best positioning effect, with a 90% overlap with the target point. In addition, for simple motion paths, the maximum error of M3 was 2.98 cm, the minimum was 1.27 cm. The error of IMCLA was between 0.00 cm and 1.52 cm. The error of IMCLA-Plus still did not exceed 1.00 cm. For the path around the pile, the average attitude angle error of M3 reached 0.034 rad, the average IMCLA was 0.13 rad, and the average IMCLA-Plus was less than 0.05 rad. For complex motion paths, the positioning results of IMCLA-Plus were more closely aligned with the target path. Overall, IMCLA and its optimized version IMCLA-Plus have practical application value in improving the positioning accuracy of industrial robots and adapting to complex environments. Further exploration can be conducted on the application of this method in real industrial scenarios. Integration with other sensor technologies can be considered to achieve wider applications and higher positioning performance.

Highlights of the study are as follows:

1. An improved Monte Carlo positioning algorithm is proposed, which effectively solves the limitations of traditional Monte Carlo algorithm in positioning accuracy and adaptability through adaptive adjustment of particle number, introduction of random particles and improvement of sampling strategy. Where, adaptively adjusts the number of particles so that the size of the particle set ADAPTS to changes as needed. When positioning fails, adding random particles can introduce new attitude particles that help Monte Carlo recover from the wrong attitude. The improved sampling strategy uses liDAR data to correct the EKF predicted attitude, and realizes the fusion of attitude data and laser data, thus improving the positioning accuracy.

2. Combining the proposed improved Monte Carlo positioning algorithm with scan matching optimization method, Gauss–Newton iteration and DFT module are used to further improve the positioning accuracy of robots in complex environments.

3. Compared with traditional methods, the proposed method has shown higher positioning accuracy and real-time performance in repeated positioning and motion positioning experiments. In addition, the study also explores the application potential of improved Monte Carlo positioning algorithms in real industrial scenarios, and proposes the prospect of integrating with other sensor technologies to achieve a wider range of applications, providing important technical support for the development of industrial automation and intelligent manufacturing.

References

Bo, L.I., Wei, T., Zhang, C., Fangfang, H.U.A., Guangyu, C.U.I., Yufei, L.I.: Positioning error compensation of an industrial robot using neural networks and experimental study. Chin. J. Aeronaut. 35(2), 346–360 (2022)

Li, Z., Li, S., Luo, X.: An overview of calibration technology of industrial robots. IEEE/CAA J. Autom. Sin. 8(1), 23–36 (2021)

Sanneman, L., Fourie, C., Shah, J.A.: The state of industrial robotics: Emerging technologies, challenges, and key research directions. Found. Trends® in Robot.. 8(3), 225–306 (2021)

Truong, L.V., Huang, S.D., Yen, V.T., Cuong, P.V.: Adaptive trajectory neural network tracking control for industrial robot manipulators with deadzone robust compensator. Int. J. Control. Autom. Syst. 18(9), 2423–2434 (2020)

Goodwin, J., Saldaña, C.: Vision-based localization for cooperative robot-CNC hybrid manufacturing. Int. J. Adv. Manuf. Technol. 126(1), 241–258 (2023)

Chiang, T.H., Sun, Z.H., Shiu, H.R., Lin, K.C.J., Tseng, Y.C.: Magnetic field-based localization in factories using neural network with robotic sampling. IEEE Sens. J. 20(21), 13110–13118 (2020)

Josef, S., Degani, A.: Deep reinforcement learning for safe local planning of a ground vehicle in unknown rough terrain. IEEE Robot. Automat. Lett. 5(4), 6748–6755 (2020)

Shule, W., Almansa, C.M., Queralta, J.P., Zou, Z., Westerlund, T.: Uwb-based localization for multi-uav systems and collaborative heterogeneous multi-robot systems. Proced. Comput. Sci. 175(1), 357–364 (2020)

Guan, W., Huang, L., Hussain, B., Yue, C.P.: Robust robotic localization using visible light positioning and inertial fusion. IEEE Sens. J. 22(6), 4882–4892 (2021)

Kammel, C., Kögel, T., Gareis, M., Vossiek, M.: A cost-efficient hybrid UHF RFID and odometry-based mobile robot self-localization technique with centimeter precision. IEEE J. Radio Freq. Identif. 6(1), 467–480 (2022)

Akai, N.: Mobile robot localization considering uncertainty of depth regression from camera images. IEEE Robotics and Autom. Lett. 7(2), 1431–1438 (2022)

Li, J., Hu, S., Li, Q., Chen, J., Leung, V.C., Song, H.: Global visual and semantic observations for outdoor robot localization. IEEE Transact. Netw. Sci. Eng. 8(4), 2909–2921 (2020)

Xu, Y., Shmaliy, Y.S., Bi, S., Chen, X., Zhuang, Y.: Extended Kalman/UFIR filters for UWB-based indoor robot localization under time-varying colored measurement noise. IEEE Internet Things J. 10(17), 15632–15641 (2023)

Jha, P., Yadav, G.P.K., Bandhu, D., Bandhu, D., Hemalatha, N., Mandava, R.K., Adin, M.Ş, et al.: Human–machine interaction and implementation on the upper extremities of a humanoid robot. Discov. Appl. Sci. 6(4), 152 (2024)

Lee, S.S., Lee, D.H., Lee, D.K., et al.: Improved nonlinear finite-memory estimation approach for mobile robot localization. IEEE/ASME Trans. Mechatron. 27(5), 3330–3338 (2022)

Nguyen, T.H., Nguyen, T.M., Xie, L.: Flexible and resource-efficient multi-robot collaborative visual-inertial-range localization. IEEE Robot. Automat. Lett. 7(2), 928–935 (2021)

Suh, D., Lee, D.K., Pak, J.M., Ahn, C.K.: Distributed frobenius-norm finite memory interacting multiple model estimation for mobile robot localization. IEEE Access 10(1), 124193–124205 (2022)

Güler, S., Abdelkader, M., Shamma, J.S.: Peer-to-peer relative localization of aerial robots with ultrawideband sensors. IEEE Trans. Control Syst. Technol. 29(5), 1981–1996 (2020)

Lajoie, P.Y., Beltrame, G.: Swarm-slam: Sparse decentralized collaborative simultaneous localization and mapping framework for multi-robot systems. IEEE Robot. Autom. Lett. 9(1), 475–482 (2023)

González, M., Rodríguez, A., López-Saratxaga, U., Pereira, O., de Lacalle, L.N.L.: Adaptive edge finishing process on distorted features through robot-assisted computer vision. J. Manuf. Syst. 74, 41–54 (2024)

Li, M.G., Zhu, H., You, S.Z., Tang, C.Q.: UWB-based localization system aided with inertial sensor for underground coal mine applications. IEEE Sens. J. 20(12), 6652–6669 (2020)

Yousuf, S., Kadri, M.B.: Information fusion of GPS, INS and odometer sensors for improving localization accuracy of mobile robots in indoor and outdoor applications. Robotica 39(2), 250–276 (2021)

Ghazal, M.T., Al-Ghadhanfari, M., Waisi, N.Z.: Simulation of autonomous navigation of turtlebot robot system based on robot operating system. Bull. Electr. Eng. Inform. 13(2), 1238–1244 (2024)

Sousa, R.B., Sobreira, H.M., Moreira, A.P.: A systematic literature review on long-term localization and mapping for mobile robots. J. Field Robot. 40(5), 1245–1322 (2023)

Liu, J., Liu, Z.: The vision-based target recognition, localization, and control for harvesting robots: a review. Int. J. Precis. Eng. Manuf. 25(2), 409–428 (2024)

Jiang, Z., Bi, Y., Zhou, M., Hu, Y., Burke, M., Navab, N.: Intelligent robotic sonographer: mutual information-based disentangled reward learning from few demonstrations. Int. J. Robot. Res. 43(7), 981–1002 (2024)

Marzoa Tanco, M., Trinidad Barnech, G., Andrade, F., et al.: Magro dataset: a dataset for simultaneous localization and mapping in agricultural environments. Int. J. Robot. Res. 43(5), 591–601 (2024)

Funding

The research is supported by: 2022 The Project of Quality Engineering Project of Anhui Province (2022cxtd017) and 2023 The Project of Quality Engineering Project of Anhui business and technology college (2023xjjy24).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, C., Zhang, C. Industry and artificial intelligence: industrial robot localization based on improved monte carlo algorithm. Int J Interact Des Manuf (2024). https://doi.org/10.1007/s12008-024-02085-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12008-024-02085-6