Abstract

Models are increasingly applied to improve the thermo-elastic behavior of machine tools. The most universal models are those with physically based approaches. They are used for analysis in the design process of the machines and for determining correction values in machine control during operation. In order to achieve sufficient accuracy, the models must be adjusted with metrological support. This is because some model parameters combine a high degree of uncertainty with a large overall effect. Because of the large number of parameters with very different characteristics, determining the parameters relevant for the adjustment is manually and computationally time-consuming. This article presents a systematic method of parameter selection that reduces this effort. The procedure is demonstrated using the thermo-elastic model of a hexapod strut axis.

1 Introduction

The machining industry continues to be confronted with demands for higher accuracy. A large part of the accuracy-limiting machining errors of today’s machine tools is due to their thermo-elastic behaviour. Therefore, mastering the thermo-elastic machine behaviour is particularly relevant. Numerous measures are known to control this behaviour [1]. They can be divided into measures for compensation and correction [2]. Compensatory measures act through constructive modifications with the aim of minimizing the displacements of the Tool Center Point (TCP). Corrective measures, in contrast, predict the remaining thermo-elastic deformations with control-integrated models and correct them by inverse movements of the feed axes. With these measures, machine tools become resilient and self-adaptable to thermal loads from inside the machine and from its surroundings. These are attributes of the worry-free productivity in an Industry 4.0 factory [3].

Thermal models are increasingly used for these measures. Compensatory measures are incorporated during the concept and design phase of the machine tool. This is an interactive process. In this process, various virtual prototypes, representing promising design options, are generated. Thermal models are part of the virtual prototypes and are used to analyse the thermo-elastic behaviour. Based on this, decisions about the machine design are made. An example is the analysis of a latent heat storage device [4]. The device temporarily stores the feed drive heat losses using paraffin-based phase-change materials. The aim is to reduce the temperature rise caused by the drive activity and, with it, the accuracy-relevant, temperature-dependent deformation of the machine structure. A simulation is used to predict the impact of the devices on the temperature field under typical load cycles. Based on this, predictions for the efficiency of these devices during deployment are made.

In the case of correction measures, the models are used for the internal calculation of correction values for the motion axes. The models compute the thermo-elastic machine behaviour mainly with internal signals of the numerical control as input signals. The effectiveness of the correction approach has been verified, for example, on a current 5-axis machine tool, where the thermal error induced by internal heat sources of the rotary axes was reduced by up to 85% [5].

2 Structure based models

Thermal behaviour models can be divided into correlative and structure-based models. Correlative models use empirical approaches, in which all parameters must be identified by a metrologically supported adjustment. This enables a high model quality, but in principle also limits its validity to the load regimes on which the measurements are based. This is especially suitable for the repetitive load regimes in series production. Structure-based models, in contrast, use physically founded approaches. They therefore have a larger range of validity [6]. They are suitable for the broad variety of load regimes that is typical for single-item production. Furthermore, they are appropriate for a wide range of applications.

This is demonstrated in [7]. A structure-based model is developed and used alongside the life cycle of a machine tool. Beginning in the concept phase, fundamental design decisions are made based on coarse models of the general machine structure. In the design phase, machine components are constructively developed. The models get gradually adapted to the development progress. In the accompanying analyses, detailed design variants are compared. Finally, in the operating phase, the model can be used for the control-integrated correction. In this application, the model computes the correction values during the operation of the machine.

One particular problem of structure-based thermal models is the high uncertainty of results. The cause is typically not the model structure, because the available model approaches are mostly adequate. In addition, most parameters can be estimated with sufficient accuracy from design data of the computer-aided engineering process. Only some of the parameters show very high uncertainties and at the same time high impact. This leads to large model deviations compared to real behaviour [8]. Especially for models used for correction, the resulting accuracy is not sufficient. Therefore, a model adjustment based on measurements of the thermal machine behaviour is necessary. So far, this has required considerable time, personnel and measurement effort. The aim is therefore to reduce this effort.

3 Tasks of the model adjustment process

In order to find approaches for reducing the effort, a systematic analysis of the procedure for parameter adjustment is carried out. The analysis delivered the workflow of the parameter adjustment shown in Fig. 1 consisting of 7 steps. The steps are underlaid with specific subtasks. The parameter adjustment can be divided into three phases: the planning phase for planning the load tests and measurements, the experimental phase for carrying them out on the machine and the adjustment phase for non-linear optimization of the parameters and the final verification.

Generalized procedure for parameter adjustment (according to [8])

The need for support of individual subtasks varies considerably. The need for non-linear optimization of the parameters in the adjustment phase, for example, is relatively low. However, it is more evident in the planning and experimental phase. There is particularly large potential for increasing efficiency in the planning phase, because not only the planning effort itself can be reduced, but also the effort in the test and comparison phase.

The effort in the planning phase results in particular from the fact that the adjustment must be specifically adapted in each case due to the always individual characteristics of the machine, their operating conditions and the model. In the first step of this phase, the basis for a successful adjustment is laid by conditioning the model. An essential sub-task is the selection of the parameters to be adjusted from the large number of total parameters. The work is currently characterized by an intuitive approach, requires in-depth thermal expertise and involves a great deal of manual and computational effort. A systematic methodology for determining the parameters to be calibrated has been developed to reduce these requirements.

4 Thermo-elastic model of a hexapod strut

The methodology is presented using the example of the correction model of a hexapod. The correction model describes the accuracy-relevant thermo-elastic behaviour of 6 identical rod axes of a hexapod [7]. The thermo-elastic behaviour is characterized by processes that extend over several physical domains. These processes form the thermo-elastic chain of effects and are shown in Fig. 2 in the form of a cause-effect relationship. The model uses a so-called structure-based approach, in which primarily physically justified model approaches are used.

Accuracy relevant behaviour of the thermo-elastic chain of effects (according to [9])

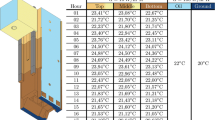

The thermal behaviour extends over several physical domains and shows several non-linear effects within. Digital block simulation (DBS) network models are well suited to simulate this. Therefore, the correction model is implemented in this form. Figure 3 shows the part of the model for simulation of the temperature field. It contains different types of simulation objects: Assemblies are finite element (FE) models to represent temperature fields of solids. Contact elements describe thermal connections between two assembly objects and between assembly objects and the environment. This includes the non-linear effects of heat radiation, convection and heat transport by mass transfer. The contact elements also represent relative movements between the assembly objects, e.g. for the simulation of moving axis assemblies of the machine tools. There are also simulation objects for temperature restraints and source heat flows. They describe the boundary conditions of assemblies such as measured ambient temperatures and friction-induced heat losses. Another model part not shown here determines the thermo-elastic deformation of the components based on the calculated temperature field of the strut axes. It is determined by the free longitudinal thermal expansion based on the mean temperature of each machine part.

Thermal network model of a hexapod strut axis (corresponding to [7])
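The deformation part of the model described above can be illustrated with a minimal sketch. It assumes the usual relation for free linear expansion, Δl = α · l · (T_mean − T_ref), and that the parts lie in series along the strut axis so that their expansions add up. The function names, the reference temperature of 20 °C and all numerical values are hypothetical and are not taken from the hexapod model.

```python
def free_thermal_expansion(length_m, alpha_per_k, mean_temp_c, ref_temp_c=20.0):
    """Free longitudinal expansion of one part in metres: alpha * l * (T_mean - T_ref)."""
    return alpha_per_k * length_m * (mean_temp_c - ref_temp_c)


def strut_elongation(parts):
    """Sum the expansions of all parts, assuming they lie in series along the strut axis."""
    return sum(free_thermal_expansion(**part) for part in parts)


# Hypothetical parts; the mean temperatures would come from the temperature-field model.
parts = [
    {"length_m": 0.8, "alpha_per_k": 12e-6, "mean_temp_c": 25.0},
    {"length_m": 0.5, "alpha_per_k": 12e-6, "mean_temp_c": 23.0},
]

elongation_um = strut_elongation(parts) * 1e6  # metres -> micrometres
print(f"strut elongation: {elongation_um:.1f} um")
```

Using only the mean temperature per part keeps the deformation calculation very cheap, which fits the real-time requirement of a correction model.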

Conventionally, FE models of machine tools approximate the fine geometry of the components with high geometric resolution. They therefore have a very high number of degrees of freedom and are very computationally intensive. In order to achieve the real-time capability necessary for the correction model, the assembly objects consist of order-reduced FE models. To generate these reduced models, geometric high-resolution FE models are created based on CAD data of the components using FE tools. These are subsequently transformed into compact models, without significant loss of accuracy. For this purpose, adapted and further enhanced model order reduction (MOR) methods of the Krylov type [10] are used. The enhancements support mainly the simulation of relative movements between axis assemblies and other substantial nonlinearities [7]. As a result, the ratio of real time to simulation time for the model of the hexapod axis strut using a conventional PC is about 100 to 1.

5 Principle procedure for parameter selection

Physically based thermal models have a high number of parameters. Some of these parameters have a high uncertainty and a high overall impact on the machine behaviour and have to be adjusted. Both the uncertainty and the impact of the parameters are initially unknown and thus also the parameters to be adjusted. When determining the parameters to be adjusted, the following characteristic boundary conditions must be considered:

- Structure-based models are comparatively large. The number of parameters is typically well above 100, i.e. a high number of model parameters has to be investigated.
- As a result of the large heat capacities of the machine parts, the impact of the parameters is strongly delayed. The effect of the parameters can therefore only be determined by computationally expensive transient model calculations.
- The effect of the parameters is very diverse. For example, the time-delayed effect of the parameters depends on the time constant of the components, the parameter type and the machine's movement.
- The uncertainty of many individual parameters of model approaches for convection, thermal radiation and power dissipation is unknown. In most cases, only the overall uncertainty of model approaches can be estimated.
- The structure of the models varies considerably due to the wide variety of machine types and existing modelling approaches.
- The thermal behaviour of the machines depends on the varying operating and ambient conditions of the machines.

A methodology for parameter selection was developed with the aim of systematically using prior knowledge about the model and typical boundary conditions to reduce the manual and computational effort. It covers five stages:

1. Determination of the parameter characteristics and categorisation for efficient use in the further procedure,
2. Reduction of the number of parameters by combining the individual parameters of sub-models into condensed characteristic values,
3. Further reduction of the number of parameters by pre-selecting parameters with significant uncertainty,
4. Determination of realistic (manufacturing, operating and environmental conditions of the machine) and time-optimal load regimes for parameter stimulation and
5. Selection of the parameters to be adjusted using quantitative results of simulative sensitivity analyses.

After the parameter selection, the metrologically assisted identification of the parameters contained in the characteristic values can take place.

6 Relevant parameter characteristics and categorisation

Efficient and successful parameter selection is only possible if the characteristics of the parameters are systematically considered. In the first stage of parameter selection, the primary characteristics of the parameters of the model are therefore determined and categorized for efficient use in further procedures. The essential characteristics are uncertainty, temporal course of effects and location of effects.

With regard to the uncertainties, there are clear differences between physically based and empirical model approaches. The parameters of physically based models describe material properties and geometry. These can typically be determined with very little uncertainty. However, some sub-models are empirical in nature. They are used as a simplified representation of complex processes such as fluid dynamics and elastohydrodynamics, for which physically based models are too computationally expensive. Empirical models approximate the behaviour much more coarsely. They therefore have significantly higher uncertainties.

The result of an analysis of the application of these two types of model abstraction within physically based thermal models is shown in Fig. 4. With respect to uncertainties, the parameters can be divided into the categories of the basic thermal quantities. These are capacity C, conductance L, heat source \( {\dot{\text{Q}}} \) and deformation u. It turns out that the parameters for calculating thermal capacities have very low uncertainties because they stem from physically based models. The parameters for heat sources, on the other hand, are mostly empirical in nature. They have very high uncertainties. The parameters for thermal conductance of solids and heat transfer require further differentiation. They are classified into their behavioural domains, since typical characteristics for their uncertainty can be found there. For example, the behavioural domain of solid-state conduction is described in a physically based way, while heat transfer processes like convection are represented empirically. The models for the deformation calculation are physically based and therefore show minor uncertainties.

With regard to the temporal course of thermal effects, the parameters are also divided into the basic thermal quantities. They show a different character in their temporal effect. This is clearly shown by the example of a minimal thermal machine model [11]. The capacity of the machine body is concentrated in one point. The body has a uniform temperature and is connected to the environment via heat transfer h at the surface A. When the body gets constantly heated by an internal heat source \( {\dot{\text{Q}}} \), the response of the temperature T corresponds to the behaviour of a 1st order delay element (Fig. 5a):

a Step response of a uniformly heated body; b influences of the uncertainties of the basic thermal variables capacity C, conductance L and heat load \( {\dot{\text{Q}}} \) (corresponding to [11])

The temporal effect of the uncertainty of the parameters can be determined by the total differential. Figure 5b shows the proportions of uncertainties with respect to the total uncertainty over time. It is assumed that the uncertainty of the basic quantities is equal and the total uncertainty uT can be determined according to the error propagation law:
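The two relations referred to in this passage are not reproduced in this text. As a sketch only, they can be written in the standard lumped-capacity form. The notation is partly assumed: \( T_U \) denotes the ambient temperature, \( L = hA \) the thermal conductance to the environment, \( \tau = C/L \) the thermal time constant, and \( u_C \), \( u_L \), \( u_{\dot{Q}} \) the uncertainties of the basic quantities.

```latex
% Step response of a uniformly heated body (first-order delay element)
% and Gaussian error propagation for uncorrelated uncertainties.
% Assumed notation: T_U ambient temperature, L = hA, tau = C / L.
\begin{align}
  T(t) &= T_U + \frac{\dot{Q}}{L}\left(1 - e^{-t/\tau}\right),
  \qquad \tau = \frac{C}{L}, \quad L = hA \\
  u_T &= \sqrt{\left(\frac{\partial T}{\partial C}\,u_C\right)^{2}
             + \left(\frac{\partial T}{\partial L}\,u_L\right)^{2}
             + \left(\frac{\partial T}{\partial \dot{Q}}\,u_{\dot{Q}}\right)^{2}}
\end{align}
```

The second line assumes uncorrelated uncertainties of the three basic quantities.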

As can be seen in Fig. 5b, the influence of the conductance component increases over time. The capacity component goes in the opposite direction. It is constantly decreasing. The influence of the heat source portion, on the other hand, remains constant.
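The trends described above can be checked numerically with a small sketch. It assumes the lumped model \( \Delta T(t) = \dot{Q}/L \cdot (1 - e^{-tL/C}) \) and evaluates the relative sensitivities \( (p/\Delta T)\,\partial \Delta T/\partial p \) for \( p \in \{C, L, \dot{Q}\} \), which weight equal relative uncertainties. How Fig. 5b normalizes the proportions is not stated in the text, so this is an illustration rather than a reproduction of the figure. The function name and the values of C and L are hypothetical.

```python
import math


def relative_sensitivities(t, C, L):
    """Relative sensitivities (p / dT) * d(dT)/dp of dT(t) = Q/L * (1 - exp(-t*L/C)).

    The result does not depend on Q. Requires t > 0.
    """
    x = t * L / C                                # time in units of tau = C / L
    g = x * math.exp(-x) / (-math.expm1(-x))     # x * e^-x / (1 - e^-x), falls from 1 to 0
    return {"C": -g, "L": g - 1.0, "Q": 1.0}


# Hypothetical values: C in J/K, L in W/K, giving a time constant of about 1 h.
C, L = 5000.0, 1.4
times_h = [0.1, 1.0, 3.0, 8.0]
rows = [relative_sensitivities(t * 3600.0, C, L) for t in times_h]

for t, row in zip(times_h, rows):
    print(f"t = {t:4.1f} h  |S_C| = {abs(row['C']):.3f}  "
          f"|S_L| = {abs(row['L']):.3f}  |S_Q| = {abs(row['Q']):.3f}")
```

In this sketch the magnitudes of the capacity and conductance sensitivities always add up to one, so the capacity influence is handed over to the conductance as the body approaches its steady state, while the heat source sensitivity stays at one.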

A further classification is required with regard to the local effect, since the parameters show a locally higher influence mainly due to insulating heat transfer mechanisms around certain machine areas. The classification of the local effect of the parameters is done via the sub-model or the model object (e.g. assembly, contact, environment) in which the parameter is located.

7 Aggregation of parameters to characteristic values

A general approach to reduce the high manual and simulative effort for the analysis of the many individual parameters during parameter selection is to aggregate individual parameters. This enables the combined handling of parameters, which significantly reduces the number of required partial analyses during the whole parameter adjustment process.

In order to allow the characteristics of the parameters to be used during selection of parameters, only parameters with the same characteristics should be combined. This can be achieved by aggregating only parameters of the same category. The fact that the sub-models of the existing model structure each contain parameters with a similar effect character can be used here. For example, the sub-model for heat capacity of a machine part contains the parameters for specific heat capacity, density and volume (see Fig. 4). These parameters are united by the effect character of the thermal delay at the given location. The greater the resulting capacity value, the greater the thermal time constant of the machine part. According to these correlations, the parameters can be structured on the level of the sub-models. The result variables of the sub-models represent the effect of several individual parameters. They are therefore called condensed parameters.
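The heat capacity example can be sketched as follows. The class name, the `scale` attribute and the material values are hypothetical. The sketch only illustrates that one result value, C = c · ρ · V, stands in for three individual parameters, and that a single factor applied to the sub-model output varies all of them together.

```python
from dataclasses import dataclass


@dataclass
class CapacitySubModel:
    """Heat capacity sub-model of one machine part (illustrative only)."""
    specific_heat: float  # J/(kg K)
    density: float        # kg/m^3
    volume: float         # m^3
    scale: float = 1.0    # hypothetical factor applied to the condensed parameter

    def capacity(self) -> float:
        """Condensed parameter: thermal capacity in J/K."""
        return self.scale * self.specific_heat * self.density * self.volume


# Hypothetical steel part.
part = CapacitySubModel(specific_heat=470.0, density=7850.0, volume=0.002)
nominal = part.capacity()

# Varying the condensed parameter by +7 % replaces three separate variations.
part.scale = 1.07
varied = part.capacity()
print(nominal, varied)
```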

If the model contains geometrically or physically high-resolution sub-models with the same characteristics, it is useful to combine several of these sub-models. An example is the heat conduction in solids. The conduction is often modelled with a locally very high-resolution FE mesh. The FE elements of this mesh can be interpreted as sub-models. The parameters of these sub-models are thermal conductivity and geometric parameters. They have a similar effect, because they represent the thermal conduction over the structure. Furthermore, the material properties described by these parameters hardly vary within the components. Accordingly, the sub-models can be combined over larger local areas. Another example is the power loss of a sealed rolling bearing. There is often a high physical resolution of the power loss description with sub-models for the seal friction and sub-models for the friction of the rolling contacts. The sub-models have the same thermal characteristics of heat sources and a similarly high uncertainty. They are arranged locally close to each other and thus act approximately at the same location in the machine. Here it is also useful to combine the parameters of the sub-models.

Figure 6 shows the grouping of the parameters to condensed values using the example of the strut axis model. The local mapping of the parameters is given by the model objects of the network plan. The parameters of the reduced FE models of the assembly objects are combined to condensed parameters which represent the capacities and heat conduction of the respective solids. The contact model objects contain model approaches for heat transfer and friction heat sources. The heat transfer represents joints, gaps and fluid-filled interiors. They are modelled with approaches for convection, heat radiation and heat transport by mass transfer. The condensed parameters represent the parameters of the respective models. The same applies to the heat flow model objects, which represent boundary conditions for assembly objects with sub-models for friction and electrical losses.

8 Preselection of parameters with significant uncertainty

In order to further reduce the computational effort of the sensitivity analysis, parameters with significant uncertainty are pre-selected. For this purpose, uncertainty values from previous uncertainty analyses [8] are used, which are briefly summarized below.

Statements on uncertainties can be found in particular for material and geometry parameters of physically based solid models. From this, overall uncertainties of partial models can be estimated. They can be estimated as ± 7% for approaches for the determination of heat capacities, ± 10% for heat conduction and ± 10% for thermal expansion.

There is little information on the uncertainties of empirical model approaches for heat transfers and heat sources at machine tools. There are isolated literature references to the uncertainty of the model approaches themselves, e.g. for convection. However, these uncertainties refer to idealized boundary conditions which do not exist at machine tools. For example, approaches for convective heat transfer at component surfaces assume undisturbed conditions of the oncoming airflow and homogeneous temperature fields of surfaces. In reality, the inflow conditions are affected by surrounding components and forced flows, and the temperature fields of the components are distinctly inhomogeneous. Therefore, further literature searches as well as simulative and experimental analyses on uncertainties of the model approaches were carried out. As boundary conditions, realistic operating and environmental conditions of machine tools were considered. The investigations allow a rough quantitative estimation of the uncertainties. They range from about ± 20% to − 70/+ 200%.

These high uncertainty values of the empirical approaches lead to the assumption of insufficient model accuracy in correction applications. The pre-selection of the parameters for the sensitivity analysis therefore includes these approaches.
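The pre-selection can be sketched as a simple filter over the estimated uncertainty bounds. The bounds for the physically based approaches are the values quoted above. The assignment of ± 20% and − 70/+ 200% to particular empirical approaches, the parameter names and the threshold of 10% are assumptions made only for this illustration.

```python
def preselect(uncertainties, threshold=0.10):
    """Keep the condensed parameters whose widest relative bound exceeds the threshold.

    uncertainties maps a parameter name to (lower, upper) relative bounds,
    e.g. (-0.70, 2.00) for -70 % / +200 %. The threshold is an assumed value.
    """
    return [name for name, (lower, upper) in uncertainties.items()
            if max(abs(lower), abs(upper)) > threshold]


uncertainties = {
    "heat_capacity": (-0.07, 0.07),         # physically based, from the text
    "heat_conduction": (-0.10, 0.10),       # physically based, from the text
    "thermal_expansion": (-0.10, 0.10),     # physically based, from the text
    "friction_heat_source": (-0.20, 0.20),  # empirical, hypothetical assignment
    "convection": (-0.70, 2.00),            # empirical, hypothetical assignment
}

selected = preselect(uncertainties)
print(selected)
```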

9 Procedure for screening the condensed parameters

The further selection of the parameters is carried out by means of a sensitivity analysis. It is intended to rank the condensed parameters by the impact of their uncertainty. There are numerous methods of sensitivity analysis. They differ quite considerably in the information content of the sensitivity measures, which characterize the parameter impact. A common classification of sensitivity analyses is local and global as well as qualitative and quantitative [12]. Local methods determine the sensitivity of a parameter at one point in its parameter space with fixed values of all other parameters. The sensitivity measure is determined by partial derivatives or correlation coefficients. Global methods additionally determine the relationship between individual parameters. Therefore, several model parameters are varied simultaneously. Compared to qualitative methods, quantitative methods may consider the statistical distribution of the uncertainty of the parameters in their parameter space. Variance-based methods are usually used for this purpose. Table 1 shows a comparison of three representative and frequently used methods of sensitivity analysis. The methods roughly cover the described range of sensitivity analyses.

As Table 1 indicates, variance-based quantitative methods are computationally too intensive for the sensitivity analysis of the preselected parameters. Local methods, on the other hand, are relatively fast, but do not provide sufficient information for the subsequent parameter adjustment. Therefore, the global qualitative method by Morris is chosen. The method is computationally less demanding and allows at least a good estimation of the ranking of the parameter impact.

The Morris method determines the sensitivity by so-called elementary effects. The mean elementary effect \( \mu^{*} \) for a parameter [14] serves as the global sensitivity measure. It is determined from several elementary effects with simultaneous variation of the other parameters. The standard deviation of the elementary effects serves as a further sensitivity measure. It reflects the interdependencies of the parameters (e.g. temperature-dependent heat transitions, which depend on the magnitude of the input power loss) as well as the non-linearities in the effect of the parameters.
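The idea of elementary effects can be illustrated with a simplified sketch. It is not the implementation used for the strut model: parameters are scaled to the unit interval, each trajectory only takes upward steps of size Δ, and the function name, the number of trajectories and the toy model are assumptions. Here \( \mu^{*} \) is computed as the mean of the absolute elementary effects and σ as their standard deviation.

```python
import random
import statistics


def morris_screening(model, k, r=20, levels=4, seed=0):
    """Simplified Morris screening for k parameters scaled to [0, 1].

    Runs r one-at-a-time trajectories on a grid with an even number of levels.
    Returns (mu_star, sigma): mean absolute elementary effect and standard
    deviation of the elementary effects per parameter.
    """
    rng = random.Random(seed)
    delta = levels / (2.0 * (levels - 1))
    # Start values chosen so that x + delta stays inside [0, 1].
    base_values = [i / (levels - 1) for i in range(levels // 2)]
    effects = [[] for _ in range(k)]
    for _ in range(r):
        x = [rng.choice(base_values) for _ in range(k)]
        y = model(x)
        order = list(range(k))
        rng.shuffle(order)                 # random order of parameter moves
        for i in order:
            x_new = list(x)
            x_new[i] = x[i] + delta        # move one parameter, keep the rest
            y_new = model(x_new)
            effects[i].append((y_new - y) / delta)
            x, y = x_new, y_new            # continue from the new point
    mu_star = [statistics.fmean(abs(e) for e in eff) for eff in effects]
    sigma = [statistics.stdev(eff) for eff in effects]
    return mu_star, sigma


def toy_model(x):
    """Hypothetical model: x[0] acts linearly, x[1] and x[2] interact, x[3] is inert."""
    return 2.0 * x[0] + x[1] * x[2]


mu_star, sigma = morris_screening(toy_model, k=4, r=20, seed=1)
for i, (m, s) in enumerate(zip(mu_star, sigma)):
    print(f"parameter {i}: mu* = {m:.3f}, sigma = {s:.3f}")
```

In the toy model the linear parameter shows a constant effect and therefore a vanishing σ, whereas the two interacting parameters show a clearly non-zero σ. Each trajectory costs k + 1 model runs, so the total effort is r · (k + 1) runs, which is why reducing the number of parameters beforehand pays off for expensive transient simulations.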

10 Determination of appropriate load regimes for parameter excitation

The parameter selection is based on a realistic stimulation of the parameters, i.e. it corresponds to the use of the correction model under realistic machine tool operating conditions. The stimulation is performed by load regimes which describe the temporal course of the input data of the thermo-elastic model (Fig. 4). Input data are mostly technological data such as positions {x} and velocities \( \left\{ {\dot{x}} \right\} \) of the axes. However, they also contain measurement data, such as the temperature of surrounding air TU of the machines, for the acquisition of ambient conditions.

A realistic stimulation of the machine tool means a thermal load under typical operating and environmental conditions. The conditions depend on the application scenarios of the machine tools, which can be very different. Additionally the application scenarios cover long time periods. This is associated with computationally expensive simulations. It is therefore analysed to what extent the scenarios influence the parameter effect and how realistic stimulation can be achieved with the lowest possible computational load. Three fundamentally different scenarios are examined using the hexapod as an example (Fig. 7):

- Manufacturing of individual parts is characterized by operating phases with changing working areas and long breaks for workpiece change, programming and set-up.
- Machining in series production runs almost uninterrupted (short workpiece exchange times can be neglected) with movements around the axis center, because the machine tools are typically tailored to the manufacturing task.
- Lastly, an artificial “step response” scenario applies a constant load of continuous full axis strokes followed by cooling at standstill. The load is applied until an approximately steady state is reached in order to achieve a balanced effect of the basic thermal parameters C, L and \( {\dot{\text{Q}}} \) (see Fig. 3).

All scenarios simulate an 8-h shift of a working day and are performed at average axis speed.

The evaluation of the parameter stimulation is done by a sensitivity analysis according to Morris. The parameters are considered in the form of condensed parameters. The sub-models of the thermal model, which represent the condensed parameters, are provided with a scaling factor. This factor is varied in the sensitivity analyses and thus scales the effect of the respective parameter. The sensitivity is determined with respect to the accuracy relevant displacement of the TCP. The longitudinal expansion of the strut axis serves as the characteristic quantity for this, whereby the individual values are averaged over the simulation time.

The comparison of the impacts of the parameters for different application scenarios of the machine shows different absolute values. This is caused by different thermal loads, e.g. by the varying length of the motion cycles. However, the parameters should be selected based on the relative effect of the parameters compared to each other. The effects are therefore normalized. The mean elementary effects of the individual parameters \( \mu_{k}^{*} \) are thus evaluated in relation to the sum of all mean elementary effects \( \sum \mu_{i}^{*} \) of the respective application scenario. Figure 8 shows a comparison of the normalized effects. There are clear differences between the application scenarios for some parameters. Overall, however, the effects are quite similar and the ranking order of the parameters hardly differs. In principle, therefore, each of the load regimes examined can be used for parameter selection.
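The normalization described above can be sketched in a few lines. The parameter names and the effect values of the two scenarios are invented for illustration. Only the operation itself, dividing each \( \mu_{k}^{*} \) by \( \sum \mu_{i}^{*} \) of the same scenario and comparing the resulting rankings, follows the text.

```python
def normalize_effects(mu_star):
    """Divide each mean elementary effect by the sum over all parameters."""
    total = sum(mu_star.values())
    return {name: value / total for name, value in mu_star.items()}


def ranking(mu_star):
    """Parameter names ordered from highest to lowest effect."""
    return sorted(mu_star, key=mu_star.get, reverse=True)


# Hypothetical mean elementary effects in micrometres for two scenarios.
series_production = {"bearing_friction": 90.0, "convection": 60.0, "gap_transfer": 30.0}
step_response = {"bearing_friction": 150.0, "convection": 110.0, "gap_transfer": 40.0}

norm_series = normalize_effects(series_production)
norm_step = normalize_effects(step_response)
same_ranking = ranking(norm_series) == ranking(norm_step)
print(norm_series, norm_step, same_ranking)
```

Because the normalization divides by a positive constant per scenario, it leaves the ranking within a scenario unchanged and only makes scenarios with different thermal load levels comparable.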

The computational effort of the load regimes depends on the machine time to be simulated. The investigated load regimes each require 8 h. However, the step response load regime can be shortened without noticeably changing the parameter effect. The reason for this lies in the thermal time constant of the strut axis. The time constant results in an approximate steadiness after 3 h of constant load. After 3 h of heating and 3 h of cooling, there is no longer any relevant parameter effect with this load regime. It can be terminated. Because of this, the step response load regime is to be preferred for parameter selection.

11 Selection of the parameters to be adjusted

In the last stage, the parameters for adjustment can finally be selected based on a sensitivity analysis. The sensitivity analysis according to Morris and uncertainty values as defined in [7] are used. The result of the analysis ranks the parameter impacts in the form of elementary effects (Fig. 8). The elementary effects can be understood as the variation of the strut axis elongation if the analysed parameter is varied over its entire value range. This implicitly assumes that all parameter values within the range of uncertainty are equally likely, which corresponds to a uniform distribution. This only roughly reflects reality and yields rather high and unlikely values. The elementary effects can therefore be interpreted as an upper limit for the impact considered.

Based on the analysis, the parameters for the adjustment process can be selected. As can be seen in Fig. 9, the elementary effects of the first four parameters have values above 50 µm. These values are relatively high with regard to the desired motion accuracy of the hexapod. If the uncertainties are significantly reduced by a parameter adjustment, an acceptable residual error of the model can be expected for the correction application. These four parameters are therefore selected for subsequent optimization.
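The selection step amounts to ranking the condensed parameters and keeping those above an accuracy-related limit. In the following sketch the limit of 50 µm is the value mentioned above, while the parameter names and effect values are purely hypothetical.

```python
def select_for_adjustment(mu_star_um, limit_um=50.0):
    """Return the parameters whose elementary effect exceeds the limit, highest first."""
    ranked = sorted(mu_star_um.items(), key=lambda item: item[1], reverse=True)
    return [name for name, effect in ranked if effect > limit_um]


# Hypothetical elementary effects of condensed parameters in micrometres.
mu_star_um = {
    "motor_loss": 140.0,
    "bearing_friction": 95.0,
    "spindle_nut_friction": 70.0,
    "convection_housing": 55.0,
    "gap_transfer": 20.0,
    "radiation": 8.0,
}

selected = select_for_adjustment(mu_star_um)
print(selected)
```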

The values of the standard deviation σ determined in the sensitivity analysis also provide essential information for the subsequent optimization of the parameters. In the present case, the values are of relevant magnitude. Thus, dependencies between the condensed parameters and/or nonlinear effects of the condensed parameters must be assumed. This behaviour must be analysed in greater depth in the further course of the parameter adjustment and, if necessary, taken into account. If, for example, dependencies exist between the characteristic values, these characteristic values cannot be optimized independently of each other.

12 Summary and outlook

Thermal machine models contain parameters with high uncertainties. For applications with high accuracy requirements, such as control-based correction, these must be metrologically adjusted. An essential part of the effort for the adjustment occurs in the work stages for the selection of parameters to be adjusted. For this purpose, a 5-step procedure was presented in this article and exemplarily implemented using the thermal model of a hexapod strut axis:

1. Categorisation of the parameters with regard to their character of impact,
2. Reduction of the number of parameters by combining them into condensed parameters,
3. Further reduction by preselection of parameters with significant uncertainty,
4. Determination of realistic and time-optimal load regimes for parameter stimulation and
5. Selection of the parameters to be adjusted via simulative sensitivity analyses.

The 5-stage parameter selection represents a consistently well-founded methodology. It allows a sound selection of the parameters and thus represents the prerequisite for achieving a sufficient model quality in the following parameter adjustment.

An efficient workflow was developed for the individual stages and a computationally favourable method of sensitivity analysis was selected. This reduces the necessary computational effort.

The procedure of parameter selection was systematically elaborated. This provides an operating scheme and thus minimizes the necessary expert knowledge. Furthermore, it forms the basis for the development of support tools that automate parts of the work. This can reduce the manual effort.

The procedure consistently takes the given prior knowledge into account. For example, the character of the parameters is considered. This makes it possible to condense individual parameters, enables time-optimised load regimes and allows the preselection of relevant parameters with significant uncertainty. As a result, a significant reduction of the computational effort could be achieved.

The selection of parameters considered in this article is followed by numerous further stages of the parameter adjustment. The approach of treating the parameters in bundled form as condensed parameters can be pursued further. This means that the parameter adjustment is initially carried out with the condensed parameters. Only if higher model accuracy is needed is the adjustment shifted to the level of individual parameters.

References

Mayr, J., et al.: Thermal issues in machine tools. CIRP Ann. 61(2), 771–791 (2012)

Großmann, K.: Thermo-Energetische Gestaltung von Werkzeugmaschinen. Zeitschrift für wirtschaftlichen Fabrikbetrieb (ZWF) 107(5), 307–314 (2012)

Lee, J., Bagheri, B., Kao, H.A.: Cyber-physical systems architecture for Industry 4.0-based manufacturing systems. Manuf. Lett. 3, 18–23 (2015). ISSN 2213-8463

Voigt, I., Winkler, S., Bucht, A., Drossel, W.-G., Werner, R.: Thermal error compensation on linear motor based on latent heat storages. In: Conference on Thermal Issues in Machine Tools, pp. 117–126. Auerbach/Vogtl (2018)

Gebhardt, M.: Thermal behaviour and compensation of rotary axes in 5-axis. Doctoral Thesis (2014)

Großmann, K., Wunderlich, B., Szatmari, S.: Progress in accuracy and calibration of parallel kinematics. Parallel kinematic machines in research and practice. In: Proceedings of the 4th Chemnitz Parallel Kinematics Seminar PKS 2004, 20–21 April 2004, Chemnitz (Germany), pp. 49–68. Reports from the IWU, 24, Verlag Wissenschaftliche Skripten, Zwickau (2004)

Schroeder, S., Galant, A., Beitelschmidt, M., Kauschinger, B.: Efficient modelling and computation of structure-variable thermal behaviour of machine tools. In: Conference on Thermal Issues in Machine Tools, pp. 13–22. Auerbach/Vogtl (2018)

Kauschinger, B., Schroeder, S.: Uncertain parameters in thermal machine-tool models and methods to design their metrological adjustment process. Appl. Mech. Mater. 794, 379–386 (2015)

Kauschinger, B., Schroeder, S.: Uncertainties in heat loss models of rolling bearings of machine tools. In: Procedia CIRP 7th HPC 2016—CIRP Conference on High Performance Cutting (2016)

Krylov, A.N.: On the numerical solution of the equation by which in technical questions frequencies of small oscillations of material systems are determined. Izvestaja AN SSSR (News of Academy of Sciences of the USSR) 7(4), 491–539 (1931)

Jungnickel, G.: Simulation des thermischen Verhaltens von Werkzeugmaschinen. Schriftenreihe des Lehrstuhls für Werkzeugmaschinen, Dresden (2000)

Pianosi, F., et al.: Sensitivity analysis of environmental models: a systematic review with practical workflow. Environ. Model. Softw. 79, 214–232 (2016)

Morris, M.D.: Factorial sampling plans for preliminary computational experiments. Technometrics 33(2), 161 (1991)

Campolongo, F., Cariboni, J., Saltelli, A.: An effective screening design for sensitivity analysis of large models. Environ. Model. Softw. 22(10), 1509–1518 (2007)

Sobol, I.: Global sensitivity indices for nonlinear mathematical models and their Monte Carlo estimates. Math. Comput. Simul. 55(1–3), 271–280 (2001)

Saltelli, A., Annoni, P., Azzini, I., Campolongo, F., Ratto, M., Tarantola, S.: Variance based sensitivity analysis of model output. Design and estimator for the total sensitivity index. Comput. Phys. Commun. 181(2), 259–270 (2010)

Acknowledgements

The German Science Foundation (DFG) funded this research within the CRC 96 “Thermo-energetic design of machine tools” project B04.


Cite this article

Schroeder, S., Kauschinger, B., Hellmich, A. et al. Identification of relevant parameters for the metrological adjustment of thermal machine models. Int J Interact Des Manuf 13, 873–883 (2019). https://doi.org/10.1007/s12008-019-00529-y
