Abstract

Purpose of Review

This review focuses on the current advancements in optimizing patient response to cardiac resynchronization therapy (CRT).

Recent Findings

It is well known that not every patient derives benefit from CRT, and among those who do, the degree of response varies. Optimizing CRT begins well before device implant and involves appropriate patient selection and an understanding of the underlying substrate. After implant, several CRT device programming options can be enabled to help overcome the barriers that keep a patient from responding.

Summary

Given the multifaceted components of optimizing CRT and the complex patient population, multi-subspecialty clinics have been developed that bring together specialists in heart failure, electrophysiology, and imaging. Early data on whether this approach improves response rates and outcomes are promising.

Introduction

The beneficial effects of cardiac resynchronization therapy (CRT) in the heart failure population with intraventricular conduction delays (IVCDs) have been well known since the early 2000s. The presence of an IVCD represents electromechanical inefficiency in a failing heart. Typically, late activation of the left ventricular (LV) lateral wall occurs and contributes to worsening hemodynamics. Asynchronous ventricular contraction results in a decline in systolic function, increased end-systolic and end-diastolic volumes (ESV and EDV), and increased wall stress [1]. Some patients also experience worsened mitral insufficiency due to abnormal papillary muscle function. Overall, this discoordinated ventricular conduction results in inefficiency and promotes adverse ventricular remodeling.

A pacemaker-mediated therapy aimed at re-establishing synchrony was a novel and significant development in the treatment of heart failure. CRT not only improves cardiac output in the short term but can also lead to reverse ventricular remodeling in the long term. These improvements translate into better survival and fewer heart failure hospitalizations [2]. Many patients who meet current guidelines for CRT implant nevertheless appear not to respond, and hence a large body of research has been devoted to identifying predictors of favorable outcomes [3]. Response rates to CRT differ based on how one defines response [4]. When response is defined by outcomes such as 6-min hall walk distance or improvement in quality of life scores, response rates appear higher than when it is defined by more objective outcomes such as heart failure hospitalization or death [4]. The notion of labelling a patient a CRT “responder” vs. “non-responder” has been called into question by many in the electrophysiology and heart failure communities, as it is difficult to determine the disease course in any individual patient. For example, a lack of apparent improvement with CRT may represent the arrest of a downhill trajectory and hence be a favorable response. Gold and colleagues have termed such a patient a “non-progressor.” Other patients are not simply “non-responders” to CRT but are actually made worse by the device [5]. Lastly, there is a group of patients who develop dramatic improvements in ejection fraction following CRT, sometimes to the point of normalization. Such patients have been termed “super-responders” [6]. In addition, when there is a bradycardia indication in the setting of a diminished LV ejection fraction (LVEF), the goal of CRT is often to protect LV function from deterioration with right ventricular pacing rather than to improve LVEF [7]. Such patients fall outside the scope of a discussion of response.

Regardless of whether one accepts the term “responder,” all patients receiving CRT devices require close follow-up to ensure they are on the best possible trajectory. The term “optimization” in the setting of CRT has traditionally referred to echocardiographically guided selection of optimal AV and VV delays. As CRT has matured, the term CRT optimization has evolved beyond an echo-guided strategy to encompass a multidisciplinary, algorithmic approach to selecting the interventions best suited to each individual CRT patient.

Appropriate Patient Selection

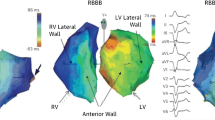

One of the first keys to optimizing outcomes in CRT patients is to determine the underlying electrical substrate. If the baseline QRS morphology prior to CRT is unknown, it is imperative to suppress pacing when feasible and to perform a 12-lead ECG of the underlying rhythm, as response varies significantly with QRS duration and morphology. Much has been written about the role of CRT in patients with a non-left bundle branch block (non-LBBB) pattern (right bundle branch block or non-specific intraventricular conduction delay) [8,9,10]. While certain patients with a non-LBBB pattern may benefit from CRT, the chances of benefit are significantly lower than in patients with an LBBB or RV-paced pattern [8, 9]. In patients with a non-LBBB pattern, QRS duration may play a role in determining the chances of response [11]. It is thought that most patients with a non-LBBB pattern and a QRS duration < 150 ms have insignificant amounts of left ventricular dyssynchrony and hence may not benefit from CRT. In a subgroup analysis from the MADIT-CRT trial, not only was there no benefit with CRT in these patients, but there was also a strong trend towards harm [10]. When the QRS duration is 150 ms or more, LV dyssynchrony may be present and hence the likelihood of response to CRT higher [12, 13]. Patients with a right bundle branch block (RBBB) and concomitant left-sided delay have been described as having “RBBB masking LBBB” [12]. Such patients could reasonably be expected to benefit from CRT [12].

In addition, newer data suggest that not all LBBBs are created equal. Classically, to be considered an LBBB, the QRS duration must be ≥ 120 ms with a QS or rS pattern in lead V1 and an R wave in leads V6 and I [14]. The AHA, ACC, and HRS criteria also include broad notching or slurring of the R wave in leads I, aVL, V5, and V6 [15]. In fact, slurring or notching in the lateral leads may be more representative of true electrical conduction delay, whereas LBBB without lateral lead notching may be more representative of increased LV mass [16]. This distinction has implications for CRT responsiveness: emerging data show that response to CRT is more favorable in the subset of LBBB patients with QRS notching [17].
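The morphology criteria described above can be written down as a simple rule check. The following is a minimal illustrative sketch only, not a validated diagnostic tool: the `QrsFindings` container, its field names, and the returned labels are hypothetical, and the inputs are assumed to come from a clinician's manual reading of the 12-lead ECG.

```python
from dataclasses import dataclass


@dataclass
class QrsFindings:
    """Hypothetical container for manually read 12-lead ECG findings."""
    qrs_duration_ms: float
    v1_qs_or_rs: bool        # QS or rS pattern in lead V1
    r_wave_v6_and_i: bool    # R wave present in leads V6 and I
    lateral_notching: bool   # broad notching/slurring of R wave in I, aVL, V5, V6


def classify_lbbb(findings: QrsFindings) -> str:
    """Apply the classic LBBB criteria from the text, then split by notching."""
    classic = (
        findings.qrs_duration_ms >= 120
        and findings.v1_qs_or_rs
        and findings.r_wave_v6_and_i
    )
    if not classic:
        return "not LBBB by classic criteria"
    if findings.lateral_notching:
        # Subset reported to respond more favorably to CRT [17]
        return "LBBB with lateral notching"
    return "LBBB without lateral notching"


if __name__ == "__main__":
    example = QrsFindings(qrs_duration_ms=160, v1_qs_or_rs=True,
                          r_wave_v6_and_i=True, lateral_notching=True)
    print(classify_lbbb(example))
```

Keeping the classic criteria and the notching flag as separate steps mirrors the text, which treats notching as a refinement within LBBB rather than as part of the classic definition.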

Optimizing CRT

Once the appropriateness of CRT implant has been determined and the underlying QRS complex and morphology delineated, employing an algorithmic approach in optimizing outcomes in CRT patients is advised. Such an algorithm is usually derived from three main subsets of cardiology: heart failure/comorbidity management, EP device management, and imaging analysis. All patients receiving a CRT device with the goal of LVEF improvement should have an echocardiogram at least 3 months after device implant with 6 months likely being optimal. Echocardiographic follow-up gives the clinician a sense of which trajectory the patient may be on and can guide the appropriateness of various interventions. Optimization also starts with a comprehensive physical exam to determine volume status and adequate device healing.

Heart Failure Management

Current CRT guidelines stipulate that CRT patients be taking optimal medical therapy for heart failure prior to device implant [18]. In clinical practice, the 6-month follow-up visit after CRT implant offers an opportunity to improve medical management, for example by uptitrating medications, adding new medications, or substituting one medication for another. This visit also provides an opportunity for the clinician to gauge patient compliance with medications. Heart failure patients are well known to have a higher degree of cognitive impairment than patients with many other disease states [19], and heart failure regimens are often complicated and cumbersome. An assessment of cognitive status is therefore reasonable to ensure that patients are capable of medical compliance. Ensuring that patients are receiving and taking optimal medical therapy for heart failure is imperative to maximizing outcomes. In addition, patients with a robust response to CRT occasionally require diuretic downtitration to prevent hypovolemia.

An assessment of comorbidity burden is also useful. It has been shown that overall comorbidity burden plays a role in lack of CRT responsiveness [20]. Screening for obstructive sleep apnea, with treatment if indicated, may be useful. Assessing blood glucose control, ensuring adequate coronary revascularization when necessary, and optimizing renal function may improve outcomes. In CRT patients who are doing poorly at follow-up, wireless pulmonary artery (PA) pressure monitoring may be an appropriate intervention to stave off heart failure hospitalizations. In the CHAMPION trial, the use of a wireless radiofrequency PA pressure monitor reduced heart failure hospitalizations by 37% and improved quality of life compared to controls [21].

EP Device Management

An electrophysiologic analysis of the device provides the second facet of patient optimization. At optimization, a full device check should be performed looking at overall device and lead function, percent biventricular pacing, atrial fibrillation burden, PVC burden, and the presence of anodal stimulation. Achieving as close to 100% biventricular pacing as possible in appropriate patients is an important goal, as small differences in biventricular pacing percentage translate into differences in mortality [22]. A posteroanterior (PA) and lateral chest X-ray (CXR) is an important tool in assessing lead position. While CXRs certainly have limitations, a general sense of lead position is useful. Apical lead positions have been shown to be inferior to non-apical positions [23]. In general, anterior leads are also thought to be inferior to non-anterior leads, although the data for this are less clear [24].

Recently, the electrical delay between the onset of the QRS and the local electrogram on the LV lead (QLV) has been evaluated as a marker of improved outcomes with CRT. While QLV is typically measured in the EP lab, an estimate can be obtained on device check at the time of optimization and may aid in decision making when lead repositioning is considered. The SMART-AV QLV substudy examined the relationship between QLV and clinical outcomes [25]. The study found that heart failure patients with an observed QLV > 95 ms had the greatest improvements in EF, ESV, EDV, and quality of life (QOL) measurements at 6-month follow-up [25]. Taken together, QLV and suboptimal lead position can be used to support LV lead revision in selected patients.
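As a worked illustration of the QLV measurement described above, the sketch below computes the interval from two timestamps and compares it with the 95 ms cut-off from the SMART-AV substudy [25]. The function names are hypothetical, and the choice of fiducial point on the LV electrogram is assumed to have been made by whoever supplies the timestamps; this is an arithmetic sketch, not a device algorithm.

```python
QLV_THRESHOLD_MS = 95.0  # cut-off reported in the SMART-AV QLV substudy [25]


def qlv_ms(qrs_onset_ms: float, lv_egm_ms: float) -> float:
    """Return QLV: time from surface QRS onset to the local LV electrogram.

    Both arguments are timestamps in milliseconds on a shared time axis
    (an assumption of this sketch).
    """
    if lv_egm_ms < qrs_onset_ms:
        raise ValueError("LV electrogram cannot precede QRS onset in this sketch")
    return lv_egm_ms - qrs_onset_ms


def is_long_qlv(qlv: float) -> bool:
    """True when QLV exceeds the 95 ms threshold associated with best outcomes."""
    return qlv > QLV_THRESHOLD_MS


if __name__ == "__main__":
    measured = qlv_ms(qrs_onset_ms=10.0, lv_egm_ms=120.0)
    print(measured, is_long_qlv(measured))
```

A QLV at or below the threshold does not by itself mandate lead revision; in the text it is one input considered alongside radiographic lead position.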

It has long been known that right ventricular pacing can be deleterious in patients with systolic dysfunction [26]. LV-only pacing, however, has been shown to be non-inferior to biventricular pacing [27]. More recently, LV fusion pacing algorithms have been introduced in which the LV-paced wavefront fuses with intrinsic conduction rather than simply pre-empting intrinsic AV conduction [28]. Such pacing has been shown to be non-inferior to traditional biventricular pacing, and a superiority trial is currently enrolling [28]. In patients without clinical response in whom such algorithms are available but not in use, a switch to fusion pacing may be reasonable.

A newer technology becoming increasingly available in CRT devices is multipoint pacing (MPP). In this pacing algorithm, pacing stimuli are delivered from more than one LV pacing vector. The rationale for MPP is based on the idea that pacing from multiple sites in the LV may result in a more physiologic depolarization of the ventricle and in turn improve synchrony and CRT response. In the MultiPoint Pacing Trial, MPP was compared to conventional biventricular pacing and found to be non-inferior [29•]. The study also found that the non-responder rate was about 5% lower in the MPP group compared to the conventional pacing group. It is hypothesized that MPP may be particularly useful in overcoming areas of scar in the LV. The one major drawback to this technology is that it will drain the device battery faster. In selected suboptimal responders to CRT, a trial of MPP may be reasonable.

Lastly, in CRT patients with a significant atrial fibrillation (AF) burden, the percent biventricular pacing reported in device diagnostics is often misleading as to the amount of true biventricular pacing delivered. In a Holter study by Kamath and colleagues, significant amounts of fusion and pseudofusion beats were noted despite device diagnostics showing near 100% biventricular pacing [30]. Such beats are unlikely to result in a synchronized contraction. There are several management options: improving rate control of the AF, re-establishing normal sinus rhythm, or, in refractory cases, AV node ablation. A novel CRT programming algorithm has been developed that recognizes and discriminates effective CRT pacing from pseudofusion. When the amount of pseudofusion pacing is above a certain threshold, the CRT device increases the pacing rate to a certain extent in order to overcome the intrinsic depolarization from AF. This algorithm has been shown to increase effective CRT pacing during AF from 80 to 87% [31•]. Data as to whether this leads to improved CRT response and better outcomes are still forthcoming.
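The rate-adjustment idea described above can be sketched as a simple feedback step. The text gives no numeric thresholds, step sizes, or rate limits, so every parameter below (`ineffective_threshold`, `step_bpm`, `max_rate_bpm`) is a hypothetical placeholder, and the per-beat classification is assumed to be supplied by some upstream detector. This is not the manufacturer's algorithm, only an illustration of the control logic.

```python
from typing import Sequence


def next_pacing_rate(
    beats_effective: Sequence[bool],
    current_rate_bpm: int,
    ineffective_threshold: float = 0.2,  # hypothetical: tolerated fraction of ineffective beats
    step_bpm: int = 5,                   # hypothetical: rate increment per adjustment
    max_rate_bpm: int = 110,             # hypothetical: upper rate limit
) -> int:
    """Return the pacing rate to use for the next monitoring window.

    beats_effective holds one flag per paced beat in the last window:
    True for effective CRT capture, False for pseudofusion/ineffective pacing.
    """
    if not beats_effective:
        return current_rate_bpm  # nothing observed, leave the rate unchanged
    ineffective = sum(1 for ok in beats_effective if not ok)
    ineffective_fraction = ineffective / len(beats_effective)
    if ineffective_fraction > ineffective_threshold:
        # Pace slightly faster to get ahead of conducted AF beats, within limits
        return min(current_rate_bpm + step_bpm, max_rate_bpm)
    return current_rate_bpm


if __name__ == "__main__":
    window = [True] * 7 + [False] * 3  # 30% ineffective beats
    print(next_pacing_rate(window, current_rate_bpm=70))
```

The sketch only ever raises the rate; a real implementation would presumably also need a way to step the rate back down, which the text does not describe.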

Cardiac Imaging

In addition to providing an objective measure of response in the form of a follow-up echocardiogram, cardiac imaging represents the third arm of CRT optimization. In clinical studies, optimization of the AV interval has largely been a disappointment [32]. In the SMART-AV trial, echocardiographic or device-based AV optimization was no better than standard out-of-the-box settings [32]. Similarly, optimization of the VV interval has not been shown to result in significant clinical improvement [33]. That said, in the small RESPONSE-HF study, which enrolled non-responders, a small benefit of combined AV and VV optimization was noted [34]. In clinical practice, AV and VV optimization is reasonable in CRT non-responders. Small changes in the AV interval are unlikely to result in meaningful benefits. Reduction of the diastolic filling pattern when achievable is a desirable goal.

Imaging may play a role in determining whether the left ventricular lead is located in a late-activated area. In the TARGET trial, 220 patients were randomized to LV lead positioning at the latest site of peak contraction free of scar based on speckle-tracking echocardiography vs. LV lead placement based on operator preference [35]. The study found that targeted LV lead positioning based on speckle tracking yielded improved reverse ventricular remodeling compared to non-targeted positioning. In the STARTER trial, 187 patients were randomized to a targeted approach using radial strain derived from echocardiographic speckle tracking vs. lead positioning based on physician preference. Patients in the targeted arm had a reduction in the combined risk of death and heart failure hospitalization [36]. Taken together, the data from these trials may aid in deciding whether to attempt LV lead repositioning. In non-responders, pacing can be suppressed and a determination made as to whether the LV lead is at a site of late activation free of scar. In selected cases, moving the LV lead to an alternative location based on these data may be a reasonable strategy. The underlying electrical substrate is also an important factor in this consideration.

CRT Optimization Clinics

Given the multifaceted components involved in CRT optimization, clinics have arisen that are specifically dedicated to employing an algorithmic approach to optimizing outcomes in these patients. There are two varieties of such clinics: dedicated non-responder clinics and care pathway clinics [37, 38]. In a non-responder clinic, patients are referred because of a perceived lack of benefit from the device. The advantage is that such clinics are usually small and less resource intensive. The drawback is that, because they depend on referrals, they will miss many patients who could benefit. In a care pathway approach, all patients, regardless of response, are seen at a set time after device implant and all receive optimization. This approach is unlikely to miss many patients but is resource intensive. That said, patients enrolled in a clinic of this kind have been shown to have better outcomes than non-enrolled patients [38].

Conclusions and Future Directions

CRT is an evolving therapy for the heart failure population. The definition of CRT optimization has changed from an echo-guided therapy to a multifaceted, algorithmic approach that draws on data from heart failure, electrophysiology, and cardiac imaging. Specialized CRT clinics have been developed to streamline the integration of these specialties. In the future, targeted device therapy that tailors lead location to the individual patient and guides device programming offers significant promise. Electrocardiographic imaging (ECGI), which measures electrical LV activation in real time, is an exciting technology that could revolutionize how CRT patients are treated both at implant and at follow-up.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance

Leclercq C, Kass DA. Retiming the failing heart: principles and current clinical status of cardiac resynchronization. J Am Coll Cardiol. 2002;39(2):194–201.

Rickard J, Cheng A, Spragg D, Bansal S, Niebauer M, Baranowski B, et al. Durability of survival effect of cardiac resynchronization therapy by level of left ventricular functional improvement: fate of “non-responders”. Heart Rhythm. 2014;11(3):412–6.

Rickard J, Michtalik H, Sharma R, Berger Z, Iyoha E, Green AR, et al. Predictors of response to cardiac resynchronization therapy: a systematic review. Int J Cardiol. 2016;225:345–52.

Birnie DH, Tang AS. The problem of non-response to cardiac resynchronization therapy. Curr Opin Cardiol. 2006;219:20–6.

Rickard J, Jackson G, Spragg DD, Cronin EM, Baranowski B, Tang WHW, et al. QRS prolongation induced by cardiac resynchronization therapy correlates with deterioration in left ventricular function. Heart Rhythm. 2012;9(10):1674–8.

Rickard J, Kumbhani DJ, Popovic Z, Verhaert D, Manne M, Sraow D, et al. Characterization of super-response to cardiac resynchronization therapy. Heart Rhythm. 2010;7(7):885–9.

Curtis AB, Worley SJ, Chung ES, et al. Improvement in clinical outcomes with biventricular versus right ventricular pacing: the BLOCK HF study. J Am Coll Cardiol. 2016;18:2148–57.

Rickard J, Kumbhani DJ, Gorodeski EZ, Baranowski B, Wazni O, Martin DO, et al. Cardiac resynchronization therapy in non-left bundle branch block morphologies. Pacing Clin Electrophysiol. 2010;33(5):590–5.

Wokhlu A, Rea RF, Asirvatham SJ, Webster T, Brooke K, Hodge DO, et al. Upgrade and de novo cardiac resynchronization therapy: impact of paced or intrinsic QRS morphology on outcomes and survival. Heart Rhythm. 2009;6:1439–47.

Zareba W, Klein H, Cygankiewicz I, Hall WJ, McNitt S, Brown M, et al. Effectiveness of cardiac resynchronization therapy by QRS morphology in the multicenter automatic defibrillator implantation trial-cardiac resynchronization therapy (MADIT-CRT). Circulation. 2011;123:1061–72.

Rickard J, Bassiouny M, Cronin EM, Martin DO, Varma N, Niebauer MJ, et al. Predictors of response to cardiac resynchronization therapy in patients with a non-left bundle branch block morphology. Am J Cardiol. 2011;108(11):1576–80.

Fantoni C, Kawabata M, Massaro R, et al. Right and left ventricular activation sequence in patients with heart failure and right bundle branch block: a detailed analysis using three-dimensional non-fluoroscopic electroanatomic mapping system. J Cardiovasc Electrophysiol. 2005;16(2):112–9.

Varma N. Left ventricular conduction delays and relation to QRS configuration in patients with left ventricular dysfunction. Am J Cardiol. 2009;103(11):1578–85.

Nikoo MH, Aslani A, Jorat MV. LBBB: state-of-the-art criteria. Int Cardiovasc Res J. 2013;7(2):39–40.

Surawicz B, Childers R, Deal BJ, Gettes LS, Bailey JJ, Gorgels A, et al. AHA/ACCF/HRS recommendations for the standardization and interpretation of the electrocardiogram: part III: intraventricular conduction disturbances: a scientific statement from the American Heart Association electrocardiography and arrhythmias committee, council on clinical cardiology; the American College of Cardiology Foundation; and the Heart Rhythm Society. Endorsed by the International Society for Computerized Electrocardiology. J Am Coll Cardiol. 2009;53(11):976–81.

Strauss DG, Selvester RH, Wagner GS. Defining left bundle branch block in the era of cardiac resynchronization therapy. Am J Cardiol. 2011;107(6):927–34.

Migliore F, Baritussio A, Stabile G, Reggiani A, D’Onofrio A, Palmisano P, et al. Prevalence of true left bundle branch block in current practice of cardiac resynchronization therapy implantation. J Cardiovasc Med. 2016;17(7):462–8.

Epstein AE, DiMarco JP, Ellenbogen KA, American College of Cardiology Foundation; American Heart Association task force on practice guidelines, Heart Rhythm Society, et al. 2012 ACCF/AHA/HRS focused update incorporated into the ACCF/AHA/HRS 2008 guidelines for device-based therapy of cardiac rhythm abnormalities: a report of the American College of Cardiology Foundation/American Heart Association task force on practice guidelines and the Heart Rhythm Society. J Am Coll Cardiol. 2013;61:6–75.

Kim J, Shin MS, Hwang SY, Park E, Lim YH, Shim JL, et al. Memory loss and decreased executive function are associated with limited functional capacity in patients with heart failure compared to patients with other medical conditions. Heart Lung. 2018;47(1):61–7.

Verbrugge FH, Dupont M, Rivero-Ayerza M, de Vusser P, van Herendael H, Vercammen J, et al. Comorbidity significantly affects clinical outcome after cardiac resynchronization therapy regardless of ventricular remodeling. J Card Fail. 2012;18(11):845–53.

Abraham WT, Adamson PB, Bourge RC, Aaron MF, Costanzo MR, Stevenson LW, et al. Wireless pulmonary artery haemodynamic monitoring in chronic heart failure: a randomised controlled trial. Lancet. 2011;377:658–66.

Hayes DL, Boehmer JP, Day JD, Gilliam FR III, Heidenreich PA, Seth M, et al. Cardiac resynchronization therapy and the relationship of percent biventricular pacing to symptoms and survival. Heart Rhythm. 2011;8(9):1469–75.

Singh JP, Klein HU, Huang DT, Reek S, Kuniss M, Quesada A, et al. Left ventricular lead position and clinical outcome in the multicenter automatic defibrillator implantation trial-cardiac resynchronization therapy (MADIT-CRT). Circulation. 2011;123(11):1159–66.

Wilton SB, Shibata MA, Sondergaard R, Cowan K, Semeniuk L, Exner DV. Relationship between left ventricular lead position using a simple radiographic classification scheme and long-term outcome with resynchronization therapy. J Interv Card Electrophysiol. 2008;23(3):219–27.

Gold MR, Birgersdotter-Green U, Singh JP, et al. The relationship between ventricular electrical delay and left ventricular remodeling with cardiac resynchronization therapy. Eur Heart J. 2011;32(20):2516–24.

Wilkoff BL, Cook JR, Epstein AE, Greene HL, Hallstrom AP, Hsia H, et al. Dual chamber and VVI implantable defibrillator trial investigators. Dual-chamber pacing or ventricular backup pacing in patients with an implantable defibrillator: the dual chamber and VVI implantable defibrillator (DAVID) trial. JAMA. 2002;288(24):3115–23.

Boriani G, Kranig W, Donal E, Calo L, Casella M, Delarche N, et al. A randomized double-blind comparison of biventricular versus left ventricular stimulation for cardiac resynchronization therapy: the biventricular versus left Univentricular pacing with ICD back-up in heart failure patients (B-LEFT HF) trial. Am Heart J. 2010;159(6):1052–8.

Martin DO, Lemke B, Birnie D, Krum H, Lee KL, Aonuma K, et al. Adaptive CRT study investigators. Investigation of a novel algorithm for synchronized left-ventricular pacing and ambulatory optimization of cardiac resynchronization therapy: results of the adaptive CRT trial. Heart Rhythm. 2012;9(11):1807–14.

• Tomassoni G, Baker J, Corbisiero R, et al. Rationale and design of a randomized trial to assess the safety and efficacy of MultiPoint Pacing (MPP) in cardiac resynchronization therapy: The MPP Trial. Ann Noninvasive Electrocardiol. 2017;22(6). https://doi.org/10.1111/anec.12448. This study is ongoing and will help to answer whether MPP results in improved CRT response rates.

Kamath GS, Cotiga D, Koneru JN, Arshad A, Pierce W, Aziz EF, et al. The utility of 12-lead holter monitoring in patients with permanent atrial fibrillation for the identification of nonresponders after cardiac resynchronization therapy. J Am Coll Cardiol. 2009;53(12):1050–5.

• Plummer CJ, Frank CM, Bari Z, et al. A novel algorithm increases the delivery of effective cardiac resynchronization therapy during atrial fibrillation: the CRTee randomized crossover trial. Heart Rhythm. 2017;S1547-5271(17):31240–7. This study shows that with this novel algorithm, there is an increase in effective CRT pacing in patients with AF. More data is needed to see if this means improved response to CRT and clinical outcomes.

Ellenbogen KA, Gold MR, Meyer TE, Fernández Lozano I, Mittal S, Waggoner AD, et al. Primary results from the SmartDelay determined AV optimization: a comparison to other AV delay methods in cardiac resynchronization therapy (SMART-AV) trial: a randomized trial comparing empirical, echocardiography-guided, and algorithmic atrioventricular delay programming in cardiac resynchronization therapy. Circulation. 2010;122(25):2660–8.

Boriani G, Muller CP, Seidl KH, et al. Randomized comparison of simultaneous biventricular stimulation versus optimized interventricular delay in cardiac resynchronization therapy. The resynchronization for the HemodYnamic treatment for heart failure management II implantable cardioverter defibrillator (RHYTHM II ICD) study. Am Heart J. 2006;151(5):1050–8.

Weiss R, Malik M, Dinerman J, et al. VV optimization in cardiac resynchronization therapy non-responders: RESPONSE-HF trial results. Abstract AB12–5. Denver (CO): HRS. 2010.

Khan FZ, Virdee MS, Palmer CR, Pugh PJ, O'Halloran D, Elsik M, et al. Targeted left ventricular lead placement to guide cardiac resynchronization therapy. J Am Coll Cardiol. 2012;59(17):1509–18.

Saba S, Marek J, Schwartzman D, Jain S, Adelstein E, White P, et al. Echocardiography-guided left ventricular lead placement for cardiac resynchronization therapy. Circ Heart Fail. 2013;6(3):427–34.

Mullens W, Grimm RA, Verga T, Dresing T, Starling RC, Wilkoff BL, et al. Insights from a cardiac resynchronization optimization clinic as part of a heart failure disease management program. J Am Coll Cardiol. 2009;53(9):765–73.

Altman RK, Parks KA, Schlett CL, et al. Multidisciplinary care of patients receiving cardiac resynchronization therapy is associated with improved clinical outcomes. Eur Heart J. 2012;17:2181–8.

Ethics declarations

Conflict of Interest

Adam Grimaldi and Eiran Z. Gorodeski declare no conflicts of interest. John Rickard reports personal fees from Medtronic and personal fees from Boston Scientific, outside the submitted work.

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Additional information

This article is part of the Topical Collection on Pharmacologic Therapy

Cite this article

Grimaldi, A., Gorodeski, E.Z. & Rickard, J. Optimizing Cardiac Resynchronization Therapy: an Update on New Insights and Advancements. Curr Heart Fail Rep 15, 156–160 (2018). https://doi.org/10.1007/s11897-018-0391-y

DOI: https://doi.org/10.1007/s11897-018-0391-y