Abstract

In this article, we accumulate theories and computing procedures to find eigenvalue bounds for interval matrices. Also, we have pointed out the computational complexity and applications of interval eigenvalue problems. Computing exact eigenvalue for the general interval matrices is an NP-hard problem. Various eigenvalue bounds for symmetric, non-symmetric, and complex interval matrices are included here. The article attempts to present some of the important methods proposed by various authors in one place, analyze them, and then final observations are made. In this respect, each of the methods has been addressed by taking simple example problems of interval matrices so that readers may understand the philosophy of those methods. Further, the increase of tightness of the solutions is also discussed. The interlinks of their development and gaps among them are highlighted.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Uncertainty is an integral part of measurements, and we can classify it by various genres like random uncertainty, fuzzy uncertainty, bounded uncertainty depending upon their appearances. When the dispersion of uncertainty is bounded, they can be enclosed by the compact interval, and computations of compact intervals lead to interval arithmetic [15].

The irrational number \(\pi\) has a non-recurring, non-terminating expression; Archimedes used an inscribed polygon and a circumscribing polygon, each of size n of a unit circle. The area of the inscribed polygon gives a lower bound of the value of \(\pi\), and the area of circumscribing polygon gives an upper bound of the value of \(\pi\). By increasing n; we get smaller connected compact intervals containing \(\pi\) up to the desired number of the accuracy of the digits of \(\pi\).

The computers are truncating floating-point numbers by rounding up to certain digits, depending upon the required precision. Rump [65] demonstrates by examples that the rounding up can cause a significant amount of errors, propagate over time and become hugged. He introduced the verified inclusions method to compute the exact value of the function in a guaranteed way. This method deals with intervals motivated to study interval arithmetic.

Nurturing many bounded but inexact data at once leads us to focus on the problems of linear algebra with interval data. A linear transformation is one of the important topics in linear algebra, and we know that each linear transformation corresponds to a matrix vice-versa. This influences us to study different kinds of problems with interval data corresponding to interval matrices like interval eigenvalue problems, interval systems of linear and non-linear equations [1, 5, 29, 63, 66, 67], etc. Computational complexity is the main challenge for interval problems, even computing determinant of an interval matrix is an NP-hard problem [27]. Two crucial aspects of interval computation are the propagation of uncertainty and uncertainty quantification; our focus is to minimize the second one.

There is no way to calculate the exact interval eigenvalues of a general interval matrix. Our goal is to minimize the overestimation of the interval eigenvalue bounds. If we look into the interval eigenvalue problems, there are formulae for exact eigenvalue bounds for symmetric interval matrix(exponential computational time). Deif [4] solved the interval eigenvalue problem with the condition of consistent sign pattern of the eigenvectors computed for the center matrix of the interval matrix. Hertz [7] enclosed the eigenvalues of a symmetric interval matrix exactly by calculating the eigenvalues of vertex matrices. Hertz [8] found the formula for computing maximal eigenvalue for a special class of symmetric interval matrices. Rohn [31] estimated eigenvalue bounds for any interval matrix. Further Rohn [32], Leng and He [20] gave eigenvalue bounds for symmetric interval matrix. Based on the continuity property of characteristic polynomial, Leng et al. [24] computed bounds for real eigenvalues of real interval matrices. Hladik et al. [45] imparted bounds on real eigenvalues and singular values of interval matrices. Hladik et al. [46] proposed a filtering method that can tight the exiting outer eigenvalue bounds. Using sufficient conditions of Rex and Rohn [17], Leng [19] computed eigenvalue bounds for both standard and generalized interval eigenvalue problems. One can find other methods for evaluating eigenvalue bounds for interval matrices in [23, 47, 53]. Further, parametric interval eigenvalue problems came to light by Kolev [39], and regularity radius of parametric interval matrices are derived in [40].

Hertz [9] derived formulae for both real and imaginary parts of interval eigenvalues of complex interval matrices. Hladik [41] proposed eigenvalue bounds for complex interval matrices, which depends on the eigenvalue bounds of certain symmetric interval matrices. Matcovschi et al. [51] estimated eigenvalues bound for complex interval eigenvalue problems. Roy et al. [13] deduces sufficient conditions for regularity for complex interval matrices and consequently enclosed interval eigenvalues.

In different application problems the eigenvalue problem may also appear as generalized interval eigenvalue problems [19, 21, 71, 75], polynomial interval eigenvalue problems [64], nonlinear interval eigenvalue problems [55].

The applications of interval computations are described in [59]. The interval eigenvalue problem has plenty of applications, specifically in spring-mass systems [20], dynamical systems [7], robustness in control theory, where they directly use techniques of interval analysis. The solution and stability of the interval differential equations are analyzed in [52] using Lyapunov inequality. Eigenvalue bounds of interval Hermitian matrices appear in fractional order time-invariant systems are studied in [25]. In general, interval eigenvalue problems arise in the structural analysis [76], sensitivity analysis [74] of various systems with uncertainty. Guaranteed pathing for a robot under constraints with bounded uncertainty has been developed in [48]. Electrical circuit analysis using interval arithmetic in the presence of fluctuate of inputs modeled in [36]. Global optimization problems were handled using interval techniques [3, 12]. In this regard, Kepler’s conjecture has been proved with the help of interval arithmetic [68].

In the other context, inefficient numerical computations cause major accidents, and a guaranteed solution can play a major role in preventing these disasters [11]. Useful resources for interval computations are also available in [58].

The following section contains the notations and preliminaries corresponding to interval eigenvalue problems. In the Sect. 3 we have discussed the computational complexity of different interval eigenvalue problems. We have started discussing various eigenvalue bounds for the symmetric interval eigenvalue problems in Sect. 4. Eigenvalue bounds for general interval matrices and complex interval matrices have been discussed in Sects. 5 and 6 respectively. Applications of interval methods for eigenvalue bounds in different uncertain problems are highlighted in Sect. 7. Finally, concluding remarks and future directions have been made in Sect. 8.

2 Preliminaries

In this section, we have presented basic notations and definitions corresponding to interval computations [16, 60]. Here we denote an interval by the boldface symbol. Also, interval means a connected and compact subset of the set real numbers \(\mathbb {R}\). We denote an interval by the symbol \(\varvec{a}\) and it is determined by the lower and upper values \(\underline{a}\), \(\overline{a}\) respectively, so \(\varvec{a} = \{a \in \mathbb {R} : \underline{a} \le a \le \overline{a}\}\). A real number a can be considered as the trivial interval [a, a]. The center, width, and absolute values of an interval \(\varvec{a}\) are respectively denoted by \(a_c\), \(a_\triangle\), \(|\varvec{a}|\) and defined by

Let \(\varvec{a} = [\underline{a}, \overline{a}]\) and \(\varvec{b} = [\underline{b}, \overline{b}]\) be two intervals, then basic arithmetic operations of intervals are defined as following,

The set of all intervals in \(\mathbb {R}\) is denoted by the symbol \(\mathbb {IR}\), let \(\varvec{a}\), \(\varvec{b}\), \(\varvec{c}\) belongs to \(\mathbb {IR}\), then we have

Let \(\varvec{A} = \{A \in M_n(\mathbb {R}) : \underline{A} \le A \le \overline{A}\}\) be an interval matrix, where \(\underline{A} = (\underline{a}_{ij})\) and \(\overline{A} = (\overline{a}_{ij})\) are \(n \times n\) lower and upper real matrices respectively, the inequalities are taken component wise. Alternatively, we can write the interval matrix as \(\varvec{A} = (\varvec{a}_{ij})_{n \times n}\), where \(\varvec{a}_{ij} = [\underline{a}_{ij}, \overline{a}_{ij}]\). The center, radius, and absolute matrices of an interval matrix are defined as follows,

A complex interval \(\varvec{c}\) is an ordered pair of two real intervals \(\varvec{a}\) and \(\varvec{b}\) such that \(\varvec{c} = \varvec{a} + i\varvec{b}\). The set of all complex intervals is denoted by \(\mathbb {IC}\). Similarly, the complex interval matrix \(\varvec{C}\) is an ordered pair of two real interval matrices \(\varvec{A}\) and \(\varvec{B}\), i.e. \(\varvec{C} = \varvec{A} + i\varvec{B}\). The center, radius, and absolute matrices of \(\varvec{C}\) are defined as follows,

Definition 1

The symmetric interval matrix corresponding to an interval matrix \(\varvec{A}\) is defined as \(\varvec{A}^s = \{A \in \varvec{A} : A = A^T\}\).

Definition 2

The matrices of the form \(A = \{(a_{ij}) : a_{ij} = \underline{a}_{ij} \text{ or } \overline{a}_{ij}\}\) are called vertex matrices in an interval matrix \(\varvec{A}\).

Definition 3

A matrix norm \(\Vert \cdot \Vert\) is said to be consistent if \(\Vert Ax\Vert \le \Vert A\Vert \cdot \Vert x\Vert\), for all \(A \in \mathbb {R}^{n \times n}\) and \(x \in \mathbb {R}^n\).

Definition 4

The spectral radius \(\varvec{\rho }(\varvec{A})\) of an interval matrix \(\varvec{A}\) is the set \(\{\rho (A) : A \in \varvec{A}\}\), where \(\rho (A)\) is the spectral radius of a matrix A.

We denote the minimum and maximum of the set \(\{\rho (A) : A \in \varvec{A}\}\) by \({\underline{\rho }}(\varvec{A})\) and \({\overline{\rho }}(\varvec{A})\), respectively. The computational complexities for computing \({\underline{\rho }}(\varvec{A})\) and \({\overline{\rho }}(\varvec{A})\) are mentioned in the Table 1.

The exact real eigenvalue set of a real interval matrix \(\varvec{A}\) is defined as \(\varvec{\Lambda } = \{\lambda : Ax = \lambda x, x \ne 0, A \in \varvec{A}\}\) is a compact subset in \(\mathbb {R}\) [24, 44]. We can express the eigenvalue set \(\varvec{\Lambda }\) as the union of n intervals as follows,

\(\varvec{\Lambda } = \bigcup _{i = 1}^{n} \varvec{\lambda }_i\), where \(\varvec{\lambda }_i = [{\underline{\lambda }}_i, {\overline{\lambda }}_i] \in \mathbb {IR}\) with \({\underline{\lambda }}_i\) and \({\overline{\lambda }}_i\) are the lower and upper end-points, respectively of the \(i^{th}\) interval eigenvalue \(\varvec{\lambda }_i\) of \(\varvec{A}\) for \(i = 1,\dots ,n\). In the cases of an interval matrix with purely imaginary eigenvalues, \(\varvec{\lambda }_i\) can be empty for some i; one such example is described in [45].

The exact eigenvalue set of a complex interval matrix \(\varvec{C} = \varvec{A} + i\varvec{B}\) is defined as \(\varvec{\Lambda } = \{\lambda + i\mu : Cz = (\lambda + i\mu ) z, z \ne 0, C \in \varvec{C}\}\) is a compact subset in \(\mathbb {C}\). We can bound the eigenvalue set \(\varvec{\Lambda }\) as a subset of a complex intervals as follows,

\(\varvec{\Lambda }\) \(\subseteq\) \([{\underline{\lambda }}, {\overline{\lambda }}] + i[{\underline{\mu }}, {\overline{\mu }}])\), where \([{\underline{\lambda }}, {\overline{\lambda }}] + i[{\underline{\mu }}, {\overline{\mu }}] \in \mathbb {IC},\) with \({\underline{\lambda }}\), \({\overline{\lambda }}\) are the lower and upper end-points for the real part, respectively and \({\underline{\mu }}\), \({\overline{\mu }}\) are the lower and upper end-points, respectively for the imaginary part of the interval eigenvalues of \(\varvec{C}\).

Computing exact interval eigenvalue set \(\varvec{\Lambda }\) corresponding to an interval matrix is an NP-hard problem, so our focus is to compute outer bounds of \(\varvec{\Lambda }\) as small as possible. Very few studies have been done on the inner approximation of interval eigenvalues set \(\varvec{\Lambda }\) [54]. Since interval arithmetic is based on reliability so we will concentrate on the methods to find an outer approximation of \(\varvec{\Lambda }.\)

Besides standard interval eigenvalue problems, different authors have explored real generalized interval eigenvalue problems [19, 37], complex generalized interval eigenvalue problems, polynomial interval eigenvalue problems, and non-linear interval eigenvalue problems.

The exact interval eigenvalue set of real generalized interval eigenvalue problem

is defined by the set \(\varvec{\Lambda }{_{gnz}} = \{ \lambda : Ax = \lambda Bx, x \ne 0, A \in \varvec{A}, B \in \varvec{B}\}\), where \(\varvec{A}\) and \(\varvec{B}\) are two real interval matrices.

The exact interval eigenvalue set of complex generalized interval eigenvalue problem

is defined by the set \(\varvec{\Lambda }{_{gnz}} = \{ \lambda + i\mu : Cz = (\lambda + i \mu )Dz, z \ne 0, C \in \varvec{C}, D \in \varvec{D}\}\), where \(\varvec{C}\) and \(\varvec{D}\) are two complex interval matrices.

Non-linear interval eigenvalue problem [64] is defined as,

where \(\varvec{A}_i\), for \(i = 0,1,2,\dots ,n\) are interval matrices. The exact eigenvalues set \(\varvec{\Lambda }{_{nonl}} = \{\lambda : (A_0 + \lambda A_1 + \lambda ^2 A_2 + \dots + \lambda ^n A_n)x = 0, x \ne 0, A_i \in \varvec{A}_i,\) for \(i = 0,1,2,\dots ,n\}\). More non-linear interval eigenvalue problems can be found in [55].

Now, we will be familiar with the computational complexity of the different problems correlated to the interval eigenvalue problems. Numerous approaches have been developed to overcome the computational complexities for finding bounds of different interval problems in a reasonable time; the same for interval eigenvalue problems are discussed in the upcoming sections.

3 Computational complexity

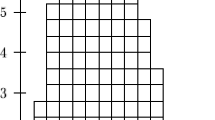

Computational complexity is one of the prime challenges for the interval techniques modeled for different interval problems. Different complexity level can be described by Fig. 1, and one can find computational complexity levels for interval problems in [28], also, specific attention has been given to symmetric interval matrices in [42]. The computational complexity of interval eigenvalue-related problems are included in Table 1.

Computing exact eigenvalue bounds for interval matrices is an NP-hard problem. There is no unified way to compute it for different interval matrices, that is why the focus is to compute the tighter bounds of the interval eigenvalues.

4 Eigenvalue bounds of real symmetric interval matrices

All eigenvalues of symmetric matrices are real, so the interval eigenvalues of symmetric interval matrices are also real and can be ordered. For the symmetric interval matrix \(\varvec{A}^s\), the center and radius matrices \(A_c\) and \(A_\triangle\), respectively, are also symmetric. Let the eigenvalues of \(A_c\) are \(\lambda _i\) for \(i = 1,\dots ,n\) and ordered as \(\lambda _n \le \dots \le \lambda _1\). Also, let \(x^i = (x_1^i,\dots ,x_n^i)^T\) be the eigenvector corresponding to the eigenvalues \(\lambda _i\) of \(A_c\) for \(i = 1,\dots ,n\). The following theorem guides us to compute exact eigenvalue bounds for real symmetric interval matrices; a strong sufficient condition for checking the constant sign pattern of eigenvectors of \(A_c\) stated in Theorem 9.

Theorem 1

[4] If \(\varvec{A}{^s}\) is a real symmetric interval matrix, and \(S^i = diag(sgn(x_1^i),...,sgn(x_n^i))\), for \(i = 1,\dots ,n,\) taken at \(A_c\), is constant over \(\varvec{A}{^s}\), then the interval eigenvalues \(\varvec{\lambda }_i = [\lambda _i(A_{c} - S^i A_\triangle S^i), \lambda _i(A_{c} + S^i A_\triangle S^i)]\), for \(i = 1,\dots ,n.\)

Example 1

Let us consider the symmetric interval matrix

Here,

Using Theorem 1, we get \(\varvec{\lambda }_1 = [6.2195, 14.1803]\) and \(\varvec{\lambda }_2 = [-15.2066\), \(-4.8102]\). These bounds are exact.

We can see that although the Theorem 1 produces an exact eigenvalue bound, it is hard to check the constant sign pattern of the eigenvectors of \(A_c\). The next theorem gives us the exact bound for the whole interval eigenvalue spectrum of real symmetric interval matrices by computing the eigenvalues of two sets of vertex matrices each of cardinality \(2^{n-1}\). This method is based on the behavior of quadratic form over the orthants of \(\mathbb {R}^n\).

Now, by Rayleigh quotient we have,

The quadratic form \(x^TAx\) can be expressed as,

Let us consider the closed unit ball centered as origin \({B}^n = \{ x \in \mathbb {R}^n : \Vert x\Vert = 1\}\). There are \(2^n\) orthants in \(\mathbb {R}^n\), in the orthants signs of the elements of \(x = (x_1,\dots ,x_n)^T\) are preserved. By seeing the expression in (5), we need to consider sign preserving orthants for \(x_ix_j\), with \(i \ne j\). As example, for \(n = 2\), we have \(x = (x_1, x_2)^T\), and there are four orthants whose sign pattern are

Since, the first(\(+ +\)) and the third(\(- -\)) quadrants produces same sign preserving orthants for \(x_ix_j\), with \(i \ne j\) and also the second and the fourth quadrants, so it is sufficient to consider the first and the second quadrant only. This is true for all n, and therefore we need to consider only \(2^{n-1}\) orthants, which we denoted by \(O_q\), for \(q = 1,\dots ,2^{n-1}\).

Let \(B_q = {B}^n \cap O_q\), for \(q = 1,\dots ,2^{n-1}\), and also, let

It can be easily computed by the stated procedure in the following theorem, and consequently, we can find the maximum eigenvalue \({\overline{\lambda }}(\varvec{A}^s)\) of the symmetric interval matrix \(\varvec{A}^s\).

Theorem 2

[7] \({\overline{\lambda }}(\varvec{A}^s)\) = \(\underset{1 \le q \le 2^{n-1}}{max}\) \({\overline{\lambda }}^q\), where \({\overline{\lambda }}^q\) = \(\underset{x \in {B}^n}{max}\) \(x^T\overline{A}^qx\), \(\overline{A}^q\) = \([\overline{a}_{kl}^q] \in V(\varvec{A})\),

for \(q = 1,\dots ,2^{n-1}.\)

Similarly, we have

Let

It can be easily computed by the stated procedure in the following theorem, and consequently, we can find the minimum eigenvalue \({\underline{\lambda }}(\varvec{A}^s)\) of the symmetric interval matrix \(\varvec{A}^s\).

Theorem 3

[7] \({\underline{\lambda }}(\varvec{A}^s)\) = \(\underset{1 \le q \le 2^{n-1}}{min}\) \({\underline{\lambda }}^q\), where \({\underline{\lambda }}^q\) = \(\underset{x \in {B}^n}{min}\) \(x^T\underline{A}^qx\), \(\underline{A}^q\) = \([\underline{a}_{kl}^q] \in V(\varvec{A})\),

for \(q = 1,\dots ,2^{n-1}.\)

Here, each q corresponds to an orthant of \(\mathbb {R}^n\) and the x belongs to that orthant has its distinct sign pattern(described for n = 2 in expression (6)), according to those sign patterns of x the \(\overline{A}^q\), \(\underline{A}^q\) are calculated. This way, for each q, we have different \(\overline{A}^q\), \(\underline{A}^q\) depending upon that orthant of \(\mathbb {R}^n\).

Example 2

Let us consider the symmetric interval matrix

By equation (8), we compute

Now, \({{\overline{\lambda }}^1} = 16,\) and \({{\overline{\lambda }}^2} = 9.4031\), Therefore by Theorem 2, we get \(\varvec{{{\overline{\lambda }}}({A^s})} = max\{16,9.4031\} = 16.\)

Similarly, with the help of Eq. (11), we compute

Now, \({{\underline{\lambda }}^1} = -16.8924\), and \({{\underline{\lambda }}^2} = -11.0623.\) Therefore by Theorem 3, we get \({\underline{\lambda }}(\varvec{A}^s) = min\{-16.8924,-11.0623\} = -16.8924.\) Hence, the eigenvalue bound for \(\varvec{A}^s\) is \([-16.8924, 16].\)

The following theorem can compute the exact maximal eigenvalue of a class of real symmetric interval matrices by computing the maximal eigenvalue of a single vertex matrix instead of \(2^{n-1}\) vertex matrices stated in the preceding theorem.

We consider the symmetric interval matrix \(\varvec{A}^s\) such that \(|\underline{a}_{ij}| \le \overline{a}_{ij}\), for \(i < j\), and define \(\varvec{A}^\ltimes = \{A : A \in \varvec{A}^s\}\). Now, the maximal eigenvalue of \(\varvec{A}^\ltimes\) can be expressed as,

where \(B^n = \{x \in \mathbb {R}^n : \Vert x\Vert = 1\}.\) This is not easy to calculate, the next theorem helps us to calculate maximal eigenvalue of \(\varvec{A}^\ltimes\).

Theorem 4

[8] \({{\overline{\lambda }}(\varvec{A}^\ltimes ) = {\overline{\lambda }}(\overline{A}).}\)

Example 3

Let us consider the symmetric interval matrix

Here,

and \({\overline{\lambda }}(\overline{A}) = 28.6015\), therefore by Theorem 4 we have \({\overline{\lambda }}(\varvec{A}^\ltimes ) = 28.6015\).

This can be verified by the following way; now, by using the method described in [7] to calculate \({\overline{\lambda }}(\varvec{A}^\ltimes )\), we need to consider the matrices,

We have \({{\overline{\lambda }}^1} = 28.6015,\) \({{\overline{\lambda }}^2} = 27.9505,\) \({{\overline{\lambda }}^3} = 27.3180,\) \({{\overline{\lambda }}^4} = 27.4382\), thus \({\overline{\lambda }}(\varvec{A}^\ltimes )\) = \(max\{28.6015, 27.9505, 27.3180, 27.4382\}\) = 28.6015, which is equal to \({\overline{\lambda }}(\overline{A}).\)

The following counterexample will demonstrate that the Theorem 4 not necessarily produces the maximal eigenvalue for any symmetric interval matrix.

Counterexample: Consider the symmetric interval matrix

Here,

and \({\overline{\lambda }}(\overline{A}) = 9.2426.\)

Let us take

The matrix \(M \in \varvec{A}{^s}\) and \({\overline{\lambda }}(M) = 10.0902\), which is greater than \({\overline{\lambda }}(\overline{A})\), as \(|\underline{a}_{ij}| \nleq \overline{a}_{ij}\), for \(i < j\).

One can get bounds separately for each interval eigenvalue of a symmetric interval matrix by the next theorem quickly, which is based on the eigenvalues of the center matrix and spectral radius of the radius matrix of the symmetric interval matrix. The \(i^{th}\) interval eigenvalue of a symmetric interval matrix \(\varvec{A}^s\) is denoted by \(\varvec{\lambda }_i(\varvec{A}^s)\).

Theorem 5

[32] If \(\varvec{A}^s\) is a symmetric interval matrix. Then

The proof of this theorem can be found in [45].

Example 4

For the symmetric interval matrix

the equation (13) produces \(\varvec{\lambda }_2(\varvec{A}^s) \subseteq [-15.2361, -4.7639]\) and \(\varvec{\lambda }_1(\varvec{A}^s) \subseteq [4.7639, 15.2361]\).

Let \({\underline{\lambda }}_i(\varvec{A}^s)\), \({\overline{\lambda }}_i(\varvec{A}^s)\) be the lower and upper value of the \(i^{th}\) interval eigenvalue of \(\varvec{A}^s\) respectively, for \(i = 1,\dots ,n\). Here, we consider the ordering of the eigenvalues of a symmetric matrix A as \(\lambda _n \le \dots \le \lambda _1\). The set of all vertex matrices in \(\varvec{A}^s\) is denoted by \(V(\varvec{A}^s)\). The following example shows that the end points \({\underline{\lambda }}_i(\varvec{A}^s)\) and \({\overline{\lambda }}_i(\varvec{A}^s)\), for \(i = 2,\dots ,(n-1)\) are not necessarily obtained by the eigenvalues of vertex matrices of the symmetric interval \(\varvec{A}^s\). Even \({\overline{\lambda }}_n(\varvec{A}^s)\) and \({\underline{\lambda }}_1(\varvec{A}^s)\) can not be necessarily computed by the eigenvalues of vertex matrices are shown in [43].

Example 5

Let us consider the symmetric interval matrix

Now, computing eigenvalues using MATLAB of \(2^6\) symmetric vertex matrices in \(\varvec{A}^s\), we have \({\overline{\lambda }}_2({V(\varvec{A}^s)}) = 9.2101\). But we can easily see that for the matrix

in \(\varvec{A}^s\), we have \(\lambda _2(B) = 10\), which is greater than \({\overline{\lambda }}_2({V(\varvec{A}^s)})\).

The following proposition sometimes produced a better upper bound for symmetric interval matrix as compared to the bound produced by equation (13).

Proposition 1

[45] For a symmetric interval matrix \(\varvec{A}^s\), we have \({\overline{\lambda }}_1(\varvec{A}^s) \le \lambda _1(|\varvec{A}{^s}|)\).

Example 6

If we consider the symmetric interval matrix

We have

and \(\lambda _1(|\varvec{A}{^s}|) = 8.4721\). By proposition 1, 8.4721 is an upper bound for \({\overline{\lambda }}_1(\varvec{A}{^s})\), where as equation (13) produces an upper bound for \({\overline{\lambda }}_1(\varvec{A}{^s})\) as 8.5311.

Lemma 1

[45] (Diagonal maximization) For each \(i \in \{1,\dots ,n\}\), there is some matrix \(A \in \varvec{A}^s\) with diagonal entries \(a_{jj} = \overline{a}_{jj}\) such that \(\lambda _i{(A)} = {\overline{\lambda }}_i(\varvec{A}^s)\).

This lemma guaranteed that for computing an upper bound for \({\overline{\lambda }}_i(\varvec{A}^s)\), it is sufficient to consider the \(i^{th}\) eigenvalue of the set \({\{(a_{ij}) \in \varvec{A}^s : a_{jj} = \overline{a}_{jj}, \forall j = 1,\dots ,n\}}\).

The following is a nice theorem that can bind eigenvalues of the sum of two real symmetric matrices in terms of the sum of their individual eigenvalues.

Theorem 6

[6, 34, 56, 57] (Weyl, 1912) Let A and B be two symmetric matrices. Then

A simpler form of the Weyl’s theorem is as bellow,

Theorem 7

[18] Let A, B, C be three real symmetric matrices and \(C = A + B\). The eigenvalues of A, B, C are denoted and ordered as:

respectively, then

Leng and He proposed the following theorem by employing the Gershgorin disk theorem for symmetric matrices, which can produce bound for eigenvalues of symmetric interval matrix with the help of Theorem 7.

Theorem 8

[20] Let \(\varvec{A}^s\) be a real symmetric interval matrix. For \(A \in \varvec{A}^s\), the eigenvalues of A are denoted and ordered as \(\lambda _n \le \dots \le \lambda _1\). Then \(\lambda _1 \le \underset{i=1,\dots ,n}{max}(\underset{j \ne i}{\sum }|\varvec{a}_{ij}| + |\varvec{a}_{ii}|)\) and \(-\underset{i=1,\dots ,n}{max}(\underset{j \ne i}{\sum }|\varvec{a}_{ij}| + |\varvec{a}_{ii}|) \le \lambda _n\).

Example 7

Let us consider the symmetric interval matrix

Now,

Let \(\mu _1\) and \(\mu _2\) be maximum and minimum eigenvalues of \(A_\triangle\). Then from Theorem 8, we have \(\mu _1 \le (4 + 4) = 8\) and \(-8 = -(4 + 4) \le \mu _2\). Now, the eigenvalues of \(A_c\) are \(-10\) and 10. Therefore by Theorem 7, we have eigenvalues bound in the Table 2 of the symmetric interval matrix \(\varvec{A}^s\).

Up to this point, we have seen several eigenvalue bounds, including the exact bound for symmetric interval matrices, have been presented by different studies. Next, we will dive into the eigenvalue bounds of general interval matrices, and bounds are obtained for both the real and imaginary parts of the eigenvalues.

5 Eigenvalue bounds of general real interval matrices

An interval matrix can be represented by a center matrix and a radius matrix, here we follow the sign pattern of eigenvectors of the center matrix. In [4], Deif gives theorems for finding the exact eigenvalues of symmetric interval matrices with the consideration of constant sign pattern of the eigenvectors of the center matrix \(A_c\). The following theorem guaranteed that \(A_c\) and \(A_c + \delta A\) have eigenvectors with same sign component wise for any \(\delta A \in [-A_\triangle , A_\triangle ]\). Let the eigenvalues of a general matrix A are of the form \(\lambda + {i}\mu\), i.e. \(\lambda\) and \(\mu\) are respectively real and imaginary parts of the eigenvalues.

Theorem 9

[4] If \(A_\triangle\) satisfies \(|T|(I-\frac{|T^{-1}|A_\triangle |T|}{\theta -\phi })^{-1}(\frac{|T^{-1}|A_\triangle |T|+\phi I}{\theta -\phi }) \le |T|,\) then \(A_c\) and \(A_c+\delta A\) have eigenvectors with same sign component wise. Where T is the modal matrix of \(A_c\), i.e. \(T^{-1}A_cT\) would be a diagonal matrix containing the eigenvalues of \(A_c\), \(\theta\) is the separation between the eigenvalues of \(A_c\), that is \(\theta = \underset{i \ne j}{min}|\lambda _i - \lambda _j|\) and \(\phi\) is a bound on the shifts in the eigenvalues of \(A_c\) under the perturbation \(\delta A\), it is taken as \(\phi \le ||~|T^{-1}|A_\triangle |T|~||.\)

This mechanism also be implemented for general interval matrices. Let \(x{^l}\) \(= (x_{r1}^l + {i}x_{y1}^l,\dots ,x_{rn}^l + {i}x_{yn}^l)^T\) and \(y{^l}\) \(= (y_{r1}^l + {i}y_{y1}^l,\dots ,y_{rn}^l + {i}y_{yn}^l)^T\) are the eigenvector and left eigenvector of \(A_c + \delta A\), is normalized such that \((y{^l})^*x{^l} = 1,\) for \(l = 1,\dots ,n\), then the following theorem provides exact eigenvalue bound for both real and imaginary part. Here, \(S^l = (s_{kj}^l)\) for \(l = 1,\dots ,n\) and ‘\(\circ\)’is the Hadamard product of two matrices.

Theorem 10

[4] If \(\varvec{A}\) is an interval matrix and \(s_{kj}^l = sgn(y_{rk}^lx_{rj}^l+y_{yk}^lx_{yj}^l)\) for \(j,k = 1,\dots ,n\), computed at \(A_c\) is constant over \(\varvec{A}\). Then real part of eigenvalues are \(\varvec{\lambda }_l = [\lambda _l(A_c-A_\triangle \circ S^l), \lambda _l(A_c+A_\triangle \circ S^l)], \textit{ for } l = 1,\dots ,n\).

Theorem 11

[4] If \(\varvec{A}\) is an interval matrix and \(s_{kj}^l = sgn(y_{rk}^lx_{yj}^l - y_{yk}^lx_{rj}^l)\) for \(j,k = 1,\dots ,n\), computed at \(A_c\) is constant over \(\varvec{A}\). Then imaginary part of eigenvalues are \(\varvec{\mu }_l = [\mu _l(A_c-A_\triangle \circ S^l), \mu _l(A_c+A_\triangle \circ S^l)], \textit{ for } l = 1,\dots ,n\).

These theorems are undoubtedly hard to follow in computation. Thus different easily calculable ways have been developed for computing tighter eigenvalue bounds for interval matrices, which are discussed here.

The below theorem can bind real eigenvalues whose corresponding eigenvector obey a certain condition of a real interval matrix. Correlated to an interval matrix \(\varvec{A}\), the set defined as

is the set of real eigenvalues whose corresponding eigenvector x follows \(Sx > 0\) for a particular diagonal matrix S whose entries either are 1 or \(-1\). Now, we define

A bound of \(L_S\) can be computed by Theorem 12.

Theorem 12

[33] Let \(q = (q_j)\) and \(p = (p_j)\) be non-negative nonzero vectors, satisfying \((\forall j)(q_j = 0 \implies (\underline{A}_S^Tq)_j \ge 0)\) and \((\forall j)(p_j = 0 \implies (\overline{A}_S^Tp)_j \le 0)\), with \({\underline{\lambda }}_S = min\{{\frac{(\underline{A}_S^Tq)_j}{q_j}} : q_j > 0\}\) and \({\overline{\lambda }}_S = max\{{\frac{(\overline{A}_S^Tp)_j}{p_j}} : p_j > 0\}\). Then \(L_S \subset [{\underline{\lambda }}_S, {\overline{\lambda }}_S].\)

Example 8

Let us consider the interval matrix

Here,

Now, if we fix

then by the Theorem 12, we have \(L_S \subset [-4, 16]\) corresponding to \(q = (1, 1)^T\) and \(p = (1, 1)^T\).

The following theorem tells us that these bounds are exact with some conditions, which is as follows:

Theorem 13

[33] If the matrix \(A_c - SA_\triangle S\) has an eigenvalue \({\underline{\lambda }}_S \in L_S\) related to a left eigenvector y with \(Sy{^T} > 0\), then \({\underline{\lambda }}_S = \text {min } L_S\). And if the matrix \(A_c + SA_\triangle S\) has an eigenvalue \({\overline{\lambda }}_S \in L_S\) related to a left eigenvector y with \(Sy{^T} > 0\), then \({{\overline{\lambda }}_S} = \text {max } L_S\).

Now, for any square interval matrices, theoretical bounds of eigenvalues are represented in [31] by the following theorem. This approach does not need any conditions on the eigenvectors; also, it can produce bounds for both real and imaginary parts of the eigenvalues separately.

Theorem 14

[31] Let \(\varvec{A}\) be an interval matrix. Then for each eigenvalue \(\lambda + i\mu\) of each matrix \(A \in \varvec{A}\), we have

where

\(\underline{r} = \underset{\Vert x\Vert _2 = 1}{min}(x^TA_cx - |x|^TA_\triangle |x|),\)

\(\overline{r} = \underset{\Vert x\Vert _2 = 1}{max}(x^TA_cx + |x|^TA_\triangle |x|),\)

\(\underline{i} = \underset{\Vert (x_1, x_2)\Vert _2 = 1}{min}\) \((x_1^T(A_c - A_c^T)x_2 - A_\triangle \circ |x_1x_2^T - x_2x_1^T|),\)

\(\overline{i} = \underset{\Vert (x_1, x_2)\Vert _2 = 1}{max}\) \((x_1^T(A_c - A_c^T)x_2 + A_\triangle \circ |x_1x_2^T - x_2x_1^T|).\)

But we can see that computationally it is not easy to calculate. For that, Rohn [31] provided very easy formulae stated in the following theorem for the bounds of the eigenvalues of each matrix in \(\varvec{A}\).

Theorem 15

[31] Let \(\varvec{A}\) be an interval matrix. Consider \(Ax = (\lambda + i\mu )x\), where \(A \in \varvec{A}\) and \(x \in {\mathbb {C}^n\setminus \{0\}}\). Then

where

\(A_{c}^\prime\) = \(\frac{1}{2}\)(\(A_{c}\) + \(A_{c}^T\)), and \(A_{\triangle }^\prime\) = \(\frac{1}{2}\)(\(A_{\triangle }\) + \(A_{\triangle }^T\)),

\(A_{c}^{\prime \prime }\) = \(\begin{pmatrix} 0 &{} \frac{1}{2}(A_{c} - A_{c}^T)\\ \frac{1}{2}(A_{c}^T - A_{c}) &{} 0\\ \end{pmatrix}\), and \(A_{\triangle }^{\prime \prime }\) = \(\begin{pmatrix} 0 &{} A_{\triangle }^\prime \\ A_{\triangle }^\prime &{} 0\\ \end{pmatrix}\).

Example 9

Let us consider the interval matrix

Therefore by equations (23) and (24), we have \({\lambda } \in [-15.2361, 15.2361]\) and \({\mu } \in [-5.2361, 5.2361]\).

The underneath theorem has built on the continuity property of characteristic polynomial corresponding to a real interval matrix for bounding real eigenvalues. Also, the procedure is based on discarding the interval which does not contain eigenvalue.

Theorem 16

[24] Let \(\lambda ^0\) be an eigenvalue of a matrix \(A=(a_{ij}) \in \varvec{A}\) and \(\lambda ^0 \in [m, n]\), then

where \(P(\lambda , a_{ij})\) is the characteristic polynomial of A.

We choose an interval [m, n]; if this interval does not satisfy the equation (25), then we can conclude by Theorem 16 that the interval [m, n] does not contain eigenvalues of \(\varvec{A}\), consequently we will remove the interval [m, n] and choose another interval from the remaining real line and repeating the procedure we will get a tight interval or union of intervals as eigenvalues for the interval matrix \(\varvec{A}\). This procedure is theoretically strong but computationally hard; the next theorems and propositions can easily produce good bounds for the general interval matrices.

The following theorem will provide formulae (Proposition 2) for eigenvalue bounds of general interval matrices with the condition that the center matrix of the interval matrix is diagonalizable. We denoted the condition number of a matrix V with respect to p-norm by \(\kappa _p(V)\) and maximum singular value by \(\sigma _{max}(V)\).

Theorem 17

[14] If \(A, B \in \mathbb {R}^{n \times n}\) and A is diagonalizable i.e. \(V^{-1}AV = diag({\zeta _1,\dots ,\zeta _n})\) for some \(V \in \mathbb {C}^{n\times n}\) and \(\zeta _1,\dots ,\zeta _n\) \(\in \mathbb {C}\). Then for every eigenvalue \(\lambda + {i}\mu\) of \(A + B,\) there exist an index \(j \in \{1,\dots ,n\}\) such that \(|(\lambda + {i}\mu ) - \zeta _j| \le \kappa _p(V) \cdot \Vert B\Vert _p\).

In most cases we use \(p = 2\), in the following proposition also. Here \(\varvec{\lambda }_i = [{\underline{\lambda }}_i, {\overline{\lambda }}_i]\) for \(i = 1,\dots ,n\).

Proposition 2

[45] Let \(A_c\) be diagonalizable i.e. \(V^{-1}A_cV\) is diagonal for some \(V \in \mathbb {C}^{n\times n}\). Then the set of real eigenvalues \(\varvec{\Lambda } \subseteq (\cup _{i = 1}^{n} \varvec{\lambda }_i)\), where for each \(i = 1,\dots ,n,\)

provided that \(\mu _i(A_c)^2 \le (\kappa _2(V) . \sigma _{max}(A_\triangle ))^2\); otherwise \(\varvec{\lambda }_i = \phi.\)

In the case, when the center of the interval matrix is not diagonalizable, then we have the following generalization of the Bauer and Fike theorem and the successive proposition for the interval eigenvalue bound.

Theorem 18

[35] Let \(A, B \in \mathbb {R}^{n \times n}\) and \(V^{-1}AV = J\) be the Jordan canonical form of A. For every eigenvalue \({\lambda + {i}\mu }\) of \(A + B\), there exists an index \(j \in \{1,\dots ,n\}\) such that \(|(\lambda + {i}\mu ) - \lambda _j(A)| \le max\{\Theta _2,\Theta _2^{\frac{1}{q}}\}\), where \(\Theta _2 = \sqrt{\frac{q(q + 1)}{2}} \cdot \kappa _2(V) \cdot \Vert B\Vert _2\) and q is the maximal dimension of the Jordan’s blocks in J.

Proposition 3

[45] Let \(V^{-1}A_cV = J\) be the Jordan canonical form of \(A_c\), and q be the dimension of maximal Jordan’s block in J. Then the set of real eigenvalues \(\varvec{\Lambda }\) \(\subseteq (\cup _{i = 1}^{n}\) \(\varvec{\lambda }_i)\), where

for \(i = 1,\dots ,n\) and \(\Theta _2 = \sqrt{\frac{q(q + 1)}{2}} \cdot \kappa _2(V) \cdot \sigma _{max}(A_\triangle )\), \(\Theta = max\{\Theta _2, \Theta _2^\frac{1}{q}\}\) provided that \(\mu _i(A_c)^2 \le \Theta ^2\); otherwise \(\varvec{\lambda }_i = \phi\).

The following theorem sometimes produces good bounds for real eigenvalues of real interval matrices, which can be useful.

Theorem 19

[44] Let \(A \in \mathbb {R}^{n \times n}\). Then \(\lambda _{min}(\frac{A + A^T}{2}) \le \lambda (A) \le \lambda _{max}(\frac{A + A^T}{2})\) for every eigenvalue \({\lambda + {i}\mu } \in \mathbb {C}\) of A.

Example 10

Let us consider the interval matrix

The center and radius matrices of \(\varvec{A}\) are respectively

The eigenvalues of \(A_c\) are \(-0.2103\), 1.3924, and 27.3179. Here, \(A_c\) is diagonalizable, therefore by Proposition 2, we have \({\varvec{\Lambda }} \subseteq [-1.7103, 1.2897] \cup [-0.1076, 2.8924]\cup [25.8179, 28.8179]\).

Example 11

Let

The eigenvalues of \(A_c\) are \(2.5000 + 1.9365i\) and \(2.5000 - 1.9365i\).

If we take

then \(\kappa _2(V) = 2.1075\) and \(\sigma _{max}(A_\triangle ) = 0.02\). Since \({\mu _i(A_c)^2 > (\kappa _2(V) \cdot \sigma _{max}(A_\triangle ))^2}\), therefore by Proposition 2, \(\varvec{\lambda }_i = \phi\), for \(i = 1,2\), consequently \(\varvec{\Lambda } = \phi\). On the other hand by Rohn’s [31] outer approximation, we have \(\varvec{\Lambda } \subseteq [0.8989, 4.1011],\) which is an overestimation.

If we take

then \(\sigma _{max}(A_\triangle ) = 2\), Since \(\mu _i(A_c)^2 < (\kappa _2(V).\sigma _{max}(A_\triangle ))^2,\) therefore by Proposition 2, \(\varvec{\lambda }_i = [-1.2438, 6.2438]\), for \(i = 1,2\), consequently \(\varvec{\Lambda } \subseteq [-1.2438, 6.2438]\); where as Rohn’s [31] outer approximation gives \(\varvec{\Lambda } \subseteq [-1.0811, 6.0811]\), which is a better overestimation.

In [46] the authors presented the following theorem, based on this, they developed an algorithm that can tight an eigenvalue bound computed by other methods.

Theorem 20

[46] Let \(\lambda ^0 \notin \varvec{\Lambda }(\varvec{A})\) and define \(\varvec{M} = \varvec{A} - \lambda ^0 I\). Then \((\lambda ^0 + \lambda ) \notin \Lambda (\varvec{A})\) for all \(\lambda\) satisfying,

where \(Q \in \mathbb {R}^{n \times n} \setminus \{O\}\) is an arbitrary matrix.

Example 12

Let us consider the interval matrix

Applying filtering algorithm [46], we have \({\lambda } \in [-16.9416, 16.2391]\), where as Rohn [31] produces \({\lambda } \in [-17.1231, 17.1231].\)

An interval matrix is said of regular if all matrices belong to that interval matrices are regular(non-singular). The following theorems present sufficient conditions for an interval matrix to be regular. These theorems play a key role in computing tighter eigenvalue bounds for interval matrices.

Theorem 21

[17] If \(\rho (|A_{c}^{-1}| A_{\triangle }) < 1\), then \([A_c - A_\triangle , A_c + A_\triangle ]\) is regular.

Theorem 22

[17] If \(\lambda _{max}(A_{\triangle }^TA_{\triangle }) < \lambda _{min}(A_{c}^TA_{c})\), then \([A_{c} - A_{\triangle }, A_{c} + A_{\triangle }]\) is regular.

Theorem 23

[17] If for some consistent matrix norm \(\Vert .\Vert\) the matrix \(A_{c}^TA_{c} - \Vert A_{\triangle }^TA_{\triangle }\Vert I\) is positive definite, then \([A_{c} - A_{\triangle }, A_{c} + A_{\triangle }]\) is regular.

Example 13

Let us consider the interval matrix

Using the algorithms in [19], which are based on Theorem 21, we have \({\lambda } \in [-16.8928, 16.0004]\). Here, we can see that even this bound is tighter than the bound obtained by the filtering method in the previous example.

Naturally, the studies have advanced into eigenvalue bounds for complex interval matrices. Interestingly approaches have been made to compute eigenvalue bounds for complex interval matrices, which involves eigenvalues bound for symmetric interval matrices discussed in the following section.

6 Eigenvalue bounds for complex interval matrices

Researchers have developed methods for computing enclosures of the eigenvalue clusters in the complex plane of complex interval matrices. In parallel to the reliability, the focus of these methods is on the tightness of the enclosures. Their usefulness is discussed in the application section to determine the systems\('\) stability. Hertz presented the following theorem that can bound separately real and imaginary parts of the eigenvalues of complex interval matrices.

Theorem 24

[9] Let \(\varvec{A} +i\varvec{B}\) be a complex interval matrix. Then for each eigenvalue \(\lambda +i\mu\), we have

and

Example 14

Let us consider the complex interval matrix \(\varvec{A} + i\varvec{B}\), with

Hertz formulae give \(\lambda \in [-18.5864,18.5864]\) and \(\mu \in [-18.5864,18.5864]\); we portrayed a simulation of eigenvalues of \(\varvec{A} + i\varvec{B}\) and Hertz [9] bound in Fig. 2 to see the tightness.

Now, using Courant-Fischer theorem [6, 56], Hladik [41] proposed the following theorem for enclosing the eigenvalues of complex interval matrix. The efficiency of this theorem depends upon the solver for the symmetric interval matrices given by different authors.

Theorem 25

[41] Let \(\varvec{A}\), \(\varvec{B}\) \(\in\) \(\mathbb {IR}^{n \times n}\). Then for every eigenvalue \(\lambda + i\mu \in \varvec{\Lambda }(\varvec{A} + i\varvec{B})\), we have

Example 15

Let us consider the complex interval matrix \(\varvec{A} + i\varvec{B}\), with

We computed eigenvalue bounds of \(\varvec{A} + i\varvec{B}\) by Hertz [9] formulae and also, by Hladik’s formulae [41] with the help of Rohn [32] formula for eigenvalue bounds of symmetric interval matrices; these bounds and a simulation of eigenvalues of \(\varvec{A} + i\varvec{B}\) are portrayed in Fig. 3. We can see in this Figure that Hladik’s method produced tighter bound than the Hertz.

As with real interval matrices, the following theorems came up with sufficient conditions for the regularity of complex interval matrices.

Theorem 26

[13] If R be an arbitrary complex matrix of order \(n \times n\) and \(\varvec{C} = \varvec{A}+i\varvec{B}\) such that

then \(\varvec{C}\) is regular.

Corollary 1

[13] If \(R = C_c^{-1}\), then \(\rho (\vert C_c^{-1} \vert C_\triangle ) < 1\) implies \(\varvec{C}\) is regular.

Theorem 27

[13] Let ‘* ’ denote the conjugate transpose and for \(M_\triangle = \begin{pmatrix} A_\triangle &{} B_\triangle \\ B_\triangle &{} A_\triangle \end{pmatrix}\)

satisfies then \(\varvec{C} = \varvec{A+iB}\) is regular.

Theorem 28

[13] Let \(\varvec{\lambda } +i \varvec{\mu }\) = \([{\underline{\lambda }}, {\overline{\lambda }}] + i[{\underline{\mu }}, {\overline{\mu }}]\) \(\in\) \({\mathbb {IC}}\) and \(\varvec{C}\) be a complex interval matrix. If the complex interval matrix \(\varvec{P} = \varvec{C} - (\varvec{\lambda } + i\varvec{\mu })I\) is regular then \((\varvec{\lambda } + i\varvec{\mu }) \cap \varvec{\Lambda }(\varvec{C}) = \phi\).

Hereto we have discussed several methods with examples for finding exact or tighter eigenvalue bounds for symmetric, real, and complex interval matrices. Now, we will exhibit some applications of these methods to the problems in different areas together with bounded uncertainty.

7 Applications

In practical problems, uncertainty arises due to different causes like measurement errors, deterioration of materials, etc. We need to consider all these errors in a certain problem; one way to do this is to enclose these errors by the closed and bounded intervals. Consequently, interval computations evolved as a reliable technique for handling bounded uncertain systems. Eigenvalue problems are intrinsic of the different mathematical problems, in real-life situations in the presence of bounded uncertainty, we need to deal with interval eigenvalue problems. In the preceding sections, we can see that various methods have been developed for finding tighter eigenvalue bounds for interval matrices as it produces better solutions for the problems with bounded uncertainty.

As an application of computing tighter eigenvalue bounds for different interval problems, here we included structural problems with bounded uncertain parameters [26, 50], stability of discrete and continuous dynamical systems in a bounded uncertain environment [30, 38, 62, 72].

7.1 Spring-mass system with bounded uncertainty

A spring-mass system of four degree of freedom with measurement uncertainty in the masses and the stiffness of the strings is pictured in Fig. 4. Here, four masses are attached to one another and with the walls by five strings.

The spring-mass system without measurement uncertainty can be described by the equation

where M, K are the mass and stiffness matrices(symmetric), respectively and where X(t) is the column vector of displacements of the masses from their origin positions along with time t. In the presence of measurement errors in determining the exact masses and stiffness of the system or due to damage of materials over time, we have to calculate the natural frequencies of the system with the interval matrices \(\varvec{M}{^s}\) and \(\varvec{K}{^s}\). They are the square root of the eigenvalues of the generalized interval eigenvalue problem \(\varvec{K{^s}x} = \varvec{\lambda M{^s}x}\), where both \(\varvec{M}{^s}\) and \(\varvec{K}{^s}\) are symmetric interval matrices. We consider the following example to see the applicability and computational complexity of the various interval eigenvalue bounds for the spring-mass system with bounded uncertainty.

Example 16

Let us consider the symmetric interval mass and stiffness matrices corresponding to an uncertain spring-mass system as

respectively.

Since we consider all four masses as unity, therefore the mass matrix \(\varvec{M}{^s} = \varvec{I},\) the interval identity matrix(real identity matrix), then the generalized interval eigenvalue problem corresponding to the spring-mass system becomes a standard symmetric interval eigenvalue problem. Eigenvalue bounds obtained by different methods are listed in Table 3.

Rohn [32] produces eigenvalue bounds for each interval eigenvalue which needs to calculate only the eigenvalues of the center matrix and the spectral radius of the radius matrix. Leng and He [20] produce cheap bounds which need to compute the eigenvalues of the center matrix and bounds for the eigenvalues of the radius matrix, and consequently, bounds for each interval eigenvalue; which is less tight than Rohn bounds [32]. Hertz [7] method produces exact eigenvalue bounds, but it’s computation time is exponential with the dimension of the matrix.

In this case, when the stiffness interval matrix is narrow, i.e., the maximum of the elements of the radius matrix is small. The following example will demonstrate the efficiency of the various eigenvalue bounds for the narrow interval matrix.

Example 17

Let us consider the symmetric interval matrices

The eigenvalue bounds by various methods are placed in Table 4. We can see that Rohn [32] produces a better bound than Leng and He [20] method. Hertz [7] computed exact eigenvalue bounds; the computational complexity of this method is exponential.

For further reading related to the problem of spring-mass systems with bounded uncertainty, we suggest the readers go through these references [49, 69]. Next, we will analyze the stability of the dynamical systems with bounded uncertainty.

7.2 Linear dynamical systems with bounded uncertainty

The appearances of the continuous and discrete time-invariant system with the bounded uncertainty environment are expected in the physical situation; to check the system’s stability over time, we need to compute tighter eigenvalue bounds of the corresponding interval matrix.

Let us consider the linear time-invariant analog uncertain system

and the linear time-invariant discrete system with uncertainty

The solution of the above systems can be determined by computing the interval eigenvalues of the interval matrix \(\varvec{A}\) and also, the stability of the systems can be determined, here two kinds of stability are discussed next. An interval matrix \(\varvec{A}\) is said to have some property \(\wp\) if each matrix belongs to the interval matrix \(\varvec{A}\) has the same property \(\wp\), i.e., an interval matrix \(\varvec{A}\) is said to be Hurwitz or Schur stable if all matrix in \(\varvec{A}\) are Hurwitz or Schur stable respectively.

7.2.1 Hurwitz stability:

An interval matrix, \(\varvec{A}\) appears in the above systems is said to be Hurwitz stable if all eigenvalues lie on the left open half-plane of \(\mathbb {C}\). For checking Hurwitz stability of the symmetric interval matrices, we need to compute maximum eigenvalues of a special set of \(2^{n-1}\) vertex matrices out of total \(2^{(n^2+n)/2}\) symmetric vertex matrices, where n is the order of the interval matrix. We can choose these vertex matrices by Theorem 2, and one example has been discussed there to find the exact maximum eigenvalue of the symmetric interval matrix. On the other hand, there is no single method for finding the exact maximum eigenvalue for general interval matrices, so we have to compute tighter eigenvalue bounds with the help of different approaches to determine the Hurwitz stability of the general interval matrices.

7.2.2 Schur stability:

If all eigenvalues of an interval matrix lie in the open unit disk centered at the origin, then the corresponding system is Schur stable over time. The eigenvalues of real symmetric matrices are real, so we need to check whether all the interval eigenvalues of a symmetric interval matrix lie in the open interval \((-1, 1)\). For checking Schur stability of the symmetric interval matrices, we need to compute the eigenvalues of two special sets; each contains \(2^{n-1}\) vertex matrices out of total \(2^{(n^2+n)/2}\) symmetric vertex matrices, where n is the order of the interval matrix; we will choose these vertex matrices by Theorems 2 and 3. In the case of general interval matrices, there is no method for finding exact interval eigenvalues, so we have to use various methods to find tighter eigenvalue bounds for checking the Schur stability of the corresponding systems.

In the following subsection, we will see the utilization of computing tighter eigenvalue bounds for interval matrices in checking the Hurwitz and Schur stability in a bounded uncertain environment.

7.3 Linear differential equations with bounded uncertainty

Let us consider the linear differential equation with interval uncertainty

Put \(x^\prime (t) = y(t)\) in equation (42), we have

We get the system of equations as

Since, \(\alpha \in \varvec{\alpha }\) and \(\beta \in \varvec{\beta }\), the coefficient matrix becomes an interval matrix \(\begin{pmatrix} \varvec{0} &{} \varvec{1}\\ -\varvec{\beta } &{} -\varvec{\alpha } \end{pmatrix}\), so by computing eigenvalues of the interval matrix we can get the solution of the uncertain linear differential equation [52], and also, determined the stability of the system, studied in the next examples.

Example 18

Let us consider the interval matrix

The real part of eigenvalues of \(\varvec{A}\) lies in \([-1.2529, 0.1029]\) and imaginary part lies in \([-0.8354, 0.8354]\), produced by both Rohn [31] and Hertz [9] methods. By Hladik [41] with Hertz [7] method we have the real part of the eigenvalues of \(\varvec{A}\) lies in \([-1.2500, 0.0542]\) and the imaginary part lies in \([-0.8254, 0.8254].\) From these bounds one can not conclude Hurwitz stability of \(\varvec{A}\) from this bound, but from the characteristic polynomial of any matrix in \(\varvec{A}\) we can conclude that it has all eigenvalues lies in the left open half of the complex plane, so \(\varvec{A}\) is Hurwitz stable. A simulation of the eigenvalues and both the bounds are depicted in Fig. 5 which shows us that all the eigenvalues of \(\varvec{A}\) are lies in the open left half part of the complex plane.

The upcoming example shows that tighter eigenvalue bounds are decisive for checking the Schur stability of complex interval matrices.

Example 19

Let us consider the complex interval matrix \(\varvec{A} + i\varvec{B}\), where

By Hertz’s [9] method we have \(\lambda\) \(\in\) \([-0.7199, 0.6499]\) and \(\mu\) \(\in\) \([-0.7532, 0.7532],\) on the other hand by Hladik’s [41] method we have \(\lambda\) \(\in\) \([-0.6874, 0.6174]\) and \(\mu\) \(\in\) \([-0.6901, 0.6901]\). A pictorial depiction of the simulation of eigenvalues and bounds by Hertz and Hladik methods are in Fig. 6, we can see that as Hertz bounds do not lie on the open unit disc centered at the origin so from this bound, we can not conclude the Schur stability of the interval matrix \(\varvec{A} + i\varvec{B}\), but a tighter bound by Hladik can conclude the Schur stability of the interval matrix.

One can follow the references [10, 70, 73] for more problems on stability of dynamical systems with bounded uncertainty. Next, we will study a nine-bar structure in a bounded uncertain ambience.

7.4 A nine-bar truss with bounded uncertainty

The structure consists of nine identical bars, construction as in Fig. 7. In the presence of measurement errors, the Young’s moduli of the bars vary with intervals; inevitably, the dynamic matrix related to the structure becomes a symmetric interval matrix. We assumed that the length, area of cross-section, the density of the bars are constant. We considered an example and computed its eigenvalue bounds by different methods.

Example 20

Let us consider the symmetric interval matrix correlated to the nine-bar truss

We have computed eigenvalue bounds of the symmetric interval matrix \(\varvec{A}^s\) related to the nine-bar truss by Rohn’s [32], Leng and He’s [20], and Hertz’s [7] methods. From Table 5, we can observe that the first two methods are produced the same eigenvalue bounds, whereas Hertz’s [7] produces only the exact right endpoint of the maximum interval eigenvalue and exact left endpoint of the minimum interval eigenvalue. However, this is an exponential method with the dimension of the matrix.

Similarly, other structural problems in the practical ambiance can be handled with interval techniques, and we suggest these references for further studies related to different structural problems with interval uncertainty [2, 22, 61].

8 Conclusions and future directions

Bounded error is inhabitable in practical problems, and interval computation is a suitable technique to deal with it. Interval analysis evolved in the seventeenth decade of the twentieth century. There is no soloist theoretical or computational concept to compute exact interval eigenvalues, which is an NP-hard problem. In this paper, we have discussed various methods for the interval eigenvalue problems for finding tighter interval eigenvalue bounds to understand the development. We have investigated the uses of techniques of classical mathematical tools in interval eigenvalue problems. Also, we have demonstrated wide applications of interval eigenvalue problems in different areas. This paper will helps researchers to gain vast knowledge about eigenvalue problems with bounded uncertainty and the development of their tighter solutions. This paper will influence researchers to work on critical problems like interval complex generalized eigenvalue problems and interval sparse eigenvalue problems, which are challenging and have plenty of applications in control theory, structure analysis, biological wastewater management, etc.

References

Neumaier A (1990) Interval methods for systems of equations. Cambridge University Press, Cambridge

Sofi A, Muscolino G, Elishakoff I (2015) Natural frequencies of structures with interval parameters. J Sound Vib 347:79–95

Voshchinin AP, Sotirov GR (1989) Optimization under uncertainty. MEI-Tekhnika, Moscow, Sofia

Deif AS (1991) The interval eigenvalue problem. ZAMM—J Appl Math Mech/Zeitschrift für Angewandte Mathematik und Mechanik 71(1):61–64

Jansson C (1997) Calculation of exact bounds for the solution set of linear interval systems. Linear Algebra Appl 251:321–340

Meyer CD (2000) Matrix analysis and applied linear algebra. Society for Industrial and Applied Mathematics, Philadelphia

Hertz D (1992) The extreme eigenvalues and stability of real symmetric interval matrices. IEEE Trans Autom Control 37(4):532–535

Hertz D (1993) The maximal eigenvalue and stability of a class of real symmetric interval matrices. IEEE Trans Circ Syst I: Fundam Theory Appl 40(1):56–57

Hertz D (2009) Interval analysis: Eigenvalue bounds of interval matrices. Encycl Optim 2:1689–1697

Petkovski DB (1988) Stability analysis of interval matrices: improved bounds. Int J Control 48(6):2265–2273

Arnold DN (1998) Some disasters attributable to bad numerical computing. https://www-users.cse.umn.edu/~arnold/disasters/disasters.html

Hansen E, Walster GH (2003) Global optimization using interval analysis: revised and expanded, vol 264. CRC Press, Boca Raton

Roy F, Gupta DK (2018) Sufficient regularity conditions for complex interval matrices and approximations of eigenvalues sets. Appl Math Comput 317:193–209

Bauer FL, Fike CT (1960) Norms and exclusion theorems. Numer Math 2(1):137–141

Alefeld G, Mayer G (2000) Interval analysis: theory and applications. J Comput Appl Math 121(1):421–464

Alefeld G, Herzberger J (2012) Introduction to interval computation. Academic Press, New York

Rex G, Rohn J (1998) Sufficient conditions for regularity and singularity of interval matrices. SIAM J Matrix Anal Appl 20(2):437–445

Stewart G, Sun JG (1990) Matrix perturbation theory. Academic Press, New York

Leng H (2014) Real eigenvalue bounds of standard and generalized real interval eigenvalue problems. Appl Math Comput 232:164–171

Leng H, He Z (2007) Computing eigenvalue bounds of structures with uncertain-but-non-random parameters by a method based on perturbation theory. Commun Numer Methods Eng 23(11):973–982

Leng H, He Z (2010) Computation of bounds for eigenvalues of structures with interval parameters. Appl Math Comput 216(9):2734–2739

Leng H, He Z (2010) Computation of bounds for eigenvalues of structures with interval parameters. Appl Math Comput 216(9):2734–2739

Leng H, He Z (2017) Eigenvalue bounds for symmetric matrices with entries in one interval. Appl Math Comput 299:58–65

Leng H, He Z, Yuan Q (2008) Computing bounds to real eigenvalues of real-interval matrices. Int J Numer Meth Eng 74(4):523–530

Ahn HS, Chen YQ, Podlubny I (2007) Robust stability test of a class of linear time-invariant interval fractional-order system using lyapunov inequality. Appl Math Comput 187(1):27–34

Chen J, Liu Y, Wang D, Zhang W, Liu X (2017) Research on natural frequency of structure considering elastic joint with interval uncertainty. Vibroeng Procedia 14:197–202

Horáček J, Hladík M, Matějka J (2018) Determinants of interval matrices. Electr J Linear Algebra 33:99–112

Horáček J, Hladík M, Černý M (2017) Interval linear algebra and computational complexity. Appl Comput Matrix Anal 192:37–66

Rohn J (1989) Systems of linear interval equations. Linear Algebra Appl 126:39–78

Rohn J (1992) Stability of interval matrices: the real eigenvalue case. IEEE Trans Autom Control 37(10):1604–1605

Rohn J (1998) Bounds on eigenvalues of interval matrices. ZAMM—J Appl Math Mech/Zeitschrift für Angewandte Mathematik und Mechanik 78(S3):1049–1050

Rohn J (2005) A handbook of results on interval linear problems. http://www.cs.cas.cz/rohn/handbook/

Rohn J, Deif AS (1992) On the range of eigenvalues of an interval matrix. Computing 47:373–377

Wilkinson JH (1988) The algebraic eigenvalue problem. Oxford University Press Inc, Oxford

Chu KE (1986) Generalization of the Bauer-Fike theorem. Numer Math 49(6):685–691

Kolev L (1993) Interval methods for circuit analysis, vol 1. World Scientific, Singapore

Kolev L (2008) Determining the range of real eigenvalues for the interval generalized eigenvalue problem. COMPEL—Int J Comput Math Electr Electr Eng 27(6):1463–1480

Kolev L, Petrakieva S (2005) Assessing the stability of linear time-invariant continuous interval dynamic systems. IEEE Trans Autom Control 50(3):393–397

Kolev LV (2006) Outer interval solution of the eigenvalue problem under general form parametric dependencies. Reliable Comput 12(2):121–140

Kolev LV (2014) Regularity radius and real eigenvalue range. Appl Math Comput 233:404–412

Hladík M (2013) Bounds on eigenvalues of real and complex interval matrices. Appl Math Comput 219(10):5584–5591

Hladík M (2015) Complexity issues for the symmetric interval eigenvalue problem. Open Math 13(1):157–164

Hladík M, Daney D, Tsigaridas E (2011) Characterizing and approximating eigenvalue sets of symmetric interval matrices. Comput Math Appl 62(8):3152–3163

Hladik M, Daney D, Tsigaridas EP (2009) Bounds on eigenvalues and singular values of interval matrices. Research report, HAL-Inria

Hladík M, Daney D, Tsigaridas E (2010) Bounds on real eigenvalues and singular values of interval matrices. SIAM J Matrix Anal Appl 31(4):2116–2129

Hladík M, Daney D, Tsigaridas E (2011) A filtering method for the interval eigenvalue problem. Appl Math Comput 217(12):5236–5242

Hladík M, Daney D, Tsigaridas EP (2011) An algorithm for addressing the real interval eigenvalue problem. J Comput Appl Math 235(8):2715–2730

Kieffer M, Jaulin L, Walter E, Meizel D (February 1999) Guaranteed mobile robot tracking using interval analysis. In: MISC’99, Workshop on Application of Interval Analysis to System and Control. Girona, Spain, pp 347–360

Modares M, Mullen RL (2006) Uncertainty analysis on dynamics of mechanical systems. J Passenger Car Mech Syst J 115(6):54–61

Modares M, Mullen RL (2014) Dynamic analysis of structures with interval uncertainty. J Eng Mech 140(4):04013011

Matcovschi MH, Pastravanu O (2014) Novel estimations for the eigenvalue bounds of complex interval matrices. Appl Math Comput 234:645–666

Mizukoshi MT, Lodwick WA (2021) The interval eigenvalue problem using constraint interval analysis with an application to linear differential equations. Fuzzy Sets Syst 419:141–157

Yuan Q, He Z, Leng H (2008) An evolution strategy method for computing eigenvalue bounds of interval matrices. Appl Math Comput 196(1):257–265

Yuan Q, He Z, Leng H (2008) An evolution strategy method for computing eigenvalue bounds of interval matrices. Appl Math Comput 196(1):257–265

Chiappinelli R (2018) "What do you mean by nonlinear eigenvalue problems"? Axioms 7(2):39

Horn RA, Johnson CR (1985) Matrix analysis. Cambridge University Press, Cambridge

Horn RA, Johnson CR (1994) Topics in matrix analysis. Cambridge University Press, Cambridge

Kearfott RB (1996) Interval computations: introduction, uses, and resources. Euromath Bull 2(1):95–112

Kearfott RB, Kreinovich V (1996) Applications of interval computations: an introduction. Springer, Boston, pp 1–22

Moore RE, Kearfott RB, Cloud MJ (2009) Introduction to interval analysis. SIAM

Muhanna RL, Mullen RL (2001) Uncertainty in mechanics problems-interval-based approach. J Eng Mech 127(6):557–566

Białas S (1983) A necessary and sufficient condition for the stability of interval matrices. Int J Control 37(4):717–722

Chakraverty S, Hladík M, Mahato NR (2017) A sign function approach to solve algebraically interval system of linear equations for nonnegative solutions. Fund Inform 152(1):13–31

Chakraverty S, Mahato NR (2018) Nonlinear interval eigenvalue problems for damped spring-mass system. Eng Comput Int J Comput-Aided Eng 35(6):2272–2286

Rump SM (1988) Algebraic computation, numerical computation and verified inclusions. Trends Comput Algebra. Lecture Notes in Computer Science. Springer, Berlin.

Shary SP (1997) Algebraic approach in the “outer problem’’ for interval linear equations. Reliable Comput 3(2):103–135

Shary SP (2002) A new technique in systems analysis under interval uncertainty and ambiguity. Reliable Comput 8(5):321–418

Hales TC (2005) A proof of the Kepler conjecture. Ann Math 162(3):1065–1185

Chen X, Shen Z, Liu X (2019) Structural dynamics model updating with interval uncertainty based on response surface model and sensitivity analysis. Inverse Probl Sci Eng 27(10):1425–1441

Daoyi X (1985) Simple criteria for stability of interval matrices. Int J Control 41(1):289–295

Huang Y, Liu Y (2018) An analytical model for solving generalized interval eigenvalue problem. Appl Math Model 61:18–28

Soh YC, Evans RJ (1988) Stability analysis of interval matrices-continuous and discrete systems. Int J Control 47(1):25–32

Juang YT, Shao CS (1989) Stability analysis of dynamic interval systems. Int J Control 49(4):1401–1408

Qiu Z, Hu J, Yang J, Lu Q (2008) Exact bounds for the sensitivity analysis of structures with uncertain-but-bounded parameters. Appl Math Model 32(6):1143–1157

Qiu Z, Wang X (2005) Several solution methods for the generalized complex eigenvalue problem with bounded uncertainties. Int J Solids Struct 42(9):2883–2900

Qiu Z, Wang X, Friswell MI (2005) Eigenvalue bounds of structures with uncertain-but-bounded parameters. J Sound Vib 282(1):297–312

Acknowledgements

The first author is grateful to the Council of Scientific and Industrial Research (CSIR), India, for the financial support to perform this work. The authors are thankful to anonymous reviewers for their valuable suggestions, which have improved the quality of the article.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Maiti, S., Chakraverty, S. Evolution of Interval Eigenvalue Problems and its Applications to the Uncertain Dynamic Problems. Arch Computat Methods Eng 30, 1167–1186 (2023). https://doi.org/10.1007/s11831-022-09837-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11831-022-09837-1