Abstract

Isogeometric shape optimization has now been studied for over a decade. This contribution aims at compiling the key ingredients of this promising framework, with particular attention to sensitivity analysis. Based on the body of research on isogeometric shape optimization, we present a global overview of the process that has emerged. Its principal feature is the use of two refinement levels of the same geometry: a coarse level where the shape updates are imposed and a fine level where the analysis is performed. We explain how these two models interact during the optimization, and especially during the sensitivity analysis. We present new theoretical developments, algorithms, and quantitative results regarding the analytical calculation of discrete adjoint-based sensitivities. To highlight the versatility of this sensitivity analysis method, we perform eight benchmark optimization examples with different types of objective functions (compliance, displacement field, stress field, and natural frequencies), different types of isogeometric elements (2D and 3D standard solids, and a Kirchhoff–Love shell), and different types of structural analysis (static and vibration). The numerical performance of the analytical sensitivities is compared with that of approximate sensitivities. The results in terms of accuracy and numerical cost suggest that the presented method is a viable strategy for building a robust framework for shape optimization.

1 Introduction

Structural shape optimization was one of the earliest applications of IsoGeometric Analysis (IGA), whose seminal paper is Hughes et al. [42]. Wall et al. [87] quickly highlighted its benefits for shape optimization, because IGA uses models that combine an accurate geometric description with strong analysis capabilities. Indeed, IGA employs spline-based geometric models to perform the analysis. More precisely, IGA is a Finite Element Method that uses a spline model not only to describe the domain geometry but also to represent the numerical solution of the problem, following the isoparametric paradigm [19, 42]. Even in its original version, IGA draws on advanced and well-established technologies from the field of Computer-Aided Design (CAD), such as NURBS models. Nowadays, a wide range of spline technologies (T-Splines, LR B-Splines, etc.) is available for simulation [28, 69]. The growing interest in IGA does not come only from the possibility of having models with high-quality geometries. These spline functions have also shown strong performance in numerical simulation, and especially an increased per-degree-of-freedom accuracy compared with standard FEM [29]. IGA has successfully tackled demanding problems and has become a method of choice in specific fields, for example fluid–structure interaction and biomedical applications [45, 60, 64].

Shape optimization is one of the fields that can be pushed forward by the isogeometric principle, and this has already started. The reason is quite straightforward. Shape optimization requires a suitable combination of an accurate geometric description and an efficient analysis model. Even more importantly, a close link between the geometric and the analysis models is highly desirable, since they communicate repeatedly during the resolution. This is where current standard approaches to structural shape optimization, based on classical FE models, face difficulties. Numerical approaches for the shape optimization of structures are not new, and several strategies were presented in the eighties and early nineties [9, 13, 35, 41, 43, 71]. These developments led to two principal classes of methods: node-based approaches and CAD-based approaches. The node-based method uses the nodes of an analysis model (i.e. the finite element mesh) as design parameters. Conversely, the CAD-based method uses two different models of the same structure: a parametrized CAD model that describes the geometry, and a FE model to perform the structural analysis. Having these two separate models has shown great benefits [13], and this approach was preferred over node-based methods for quite some time [41]. However, a major drawback has restricted its deployment in design offices: it requires a close link to handle the delicate task of transferring information between the design model and the analysis model. To this end, dedicated programs need to be developed [10]. For complex structures, the link between the geometric model and the finite element mesh is far from straightforward. In addition, repeated mesh generation during the resolution burdens the optimization process. Thus, more recently, node-based methods have regained interest and efficient approaches have been developed [27, 40, 54, 78].
In node-based optimization, the difficulties lie in the treatment of the large number of design parameters, since the number of nodes of a FE mesh can be significant. Adequate strategies must therefore be put in place to process all the data. In particular, special care (known as sensitivity filtering) is needed to exploit the results of the FE analysis so that appropriate shape updates are imposed [7].

Using IGA, we now have models that are suitable for both shape modeling and simulation. This key feature was highlighted by Wall et al. [87] and is confirmed by the increasing number of papers dealing with IGA-based shape optimization [15, 23, 32, 38, 39, 46, 50, 56, 59, 65,66,67, 75, 83, 88, 91, 93]. This concerns not only structural shape optimization but also other fields such as heat conduction [92], electromagnetics [20, 68], fluid mechanics [73], and many other optimization problems. A general procedure, improved over the years, is commonly adopted [21, 89]. It is based on a multilevel design concept, which consists in choosing different refinement levels of the same spline-based geometry to define both the optimization and the analysis spaces [38, 50, 67, 88]. Shape updates are represented by altering the spatial location of the control points (and in some cases the weights [67, 75]) on the coarse level. The finer level defines the analysis model and is chosen to ensure a good quality of the numerical solution. The optimization and analysis refinement levels are chosen independently, which allows a problem-adapted choice of both spaces.

This contribution aims to synthesize previous research on isogeometric shape optimization of structures. We present a general formalism where each step is detailed, from the setting and modeling of a shape optimization problem to its resolution. We deal with both theoretical and practical aspects. In particular, we compile several benchmark examples that may be of interest for further research and for the investigation of new approaches. We do not restrict ourselves to a specific context, in order to highlight the generality of the presented framework. Indeed, several types of objective functions are considered. Moreover, we investigate two common structural analyses: static linear analysis and natural frequency analysis. Finally, we deal with 2D and 3D solid isogeometric elements as introduced by Hughes et al. [42], and also with the isogeometric Kirchhoff–Love shell of Kiendl et al. [49]. Practical information, quantitative data, and discussions of the results are provided. Furthermore, this contribution offers a new perspective on how analytical sensitivities can be achieved in the context of isogeometric shape optimization. It extends existing work on this issue, for instance Fußeder et al. [32] and Qian [75]. We show how analytical discrete adjoint-based sensitivities can be computed, and all the examples tackled in this work are solved using this analytical method for the sensitivity analysis. The method applies to standard solid elements as well as to shells. In addition, it is not limited to compliance: it can be used for many response functions, for example stress-based criteria. We provide algorithms to illustrate how it can be implemented efficiently. For each numerical example, quantitative results on the sensitivities are given, which, to the authors’ knowledge, are to a large extent missing in the literature.
The performance of this new sensitivity analysis is compared with approximate calculations. It is often stated in the literature that analytical sensitivities are difficult to implement. This work provides the necessary ingredients to overcome this difficulty and shows the efficiency of the approach in terms of computational time.

This new framework for isogeometric shape optimization of structures is presented as follows. First, Sect. 2 presents generalities about structural optimization and describes the structural design problems tackled in this work. Then, in Sect. 3, the different steps of the sensitivity analysis in IGA-based shape optimization are explained; we illustrate how information is shared from the design variable level to the analysis model and vice versa. Section 4 contains the main theoretical developments: we present how the principal derivatives required by the sensitivity analysis can be computed analytically. As already mentioned, both standard solid and shell elements are considered. Finally, the results of the optimization problems are presented and discussed in Sect. 5, and concluding remarks on our observations and findings are given in Sect. 6.

2 IsoGeometric Shape Optimization

2.1 Structural Optimization

General Mathematical Formulation The mathematical formulation of an optimization problem involves different quantities. The objective function f quantifies the performance of the studied system. It is usually formulated such that the best solution is the one that returns the smallest value of f. The objective depends on several characteristics of the system, called variables or unknowns. In the specific case of structural optimization, we set design parameters that vary the geometry of the structure. We call these parameters design variables and gather them in a vector of unknowns x. In this work, we only consider continuous variables. Finally, we describe the space in which the optimal solution is sought through a set of constraints \(c_{i}\). Mathematically, the constraints are scalar functions of x and commonly take the form of equations or inequalities. With these notations in hand, an optimization problem can be formulated as follows:
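Written in the standard form used in nonlinear programming (the convention \(c_{i}\ge 0\) for inequality constraints and the number n of design variables are notations assumed here for illustration), such a problem reads:

\[
\min_{\varvec{x}\in\mathbb{R}^{n}} f(\varvec{x})
\quad\text{subject to}\quad
\begin{cases}
c_{i}(\varvec{x}) = 0, & i\in{\mathscr {E}},\\
c_{i}(\varvec{x}) \ge 0, & i\in{\mathscr {I}},
\end{cases}
\]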

where \({\mathscr {E}}\) and \({\mathscr {I}}\) are the sets of indices of the equality and inequality constraints, respectively.

Structural Design In structural optimization, the goal is to improve the mechanical behavior of the structure. Thus, the expressions of the objective function and/or the constraints involve quantities that describe the behavior of the structure. For instance, in the static analysis of structures, the objective function is generally expressed as an explicit function of the design variables x and of the displacement field u. Moreover, in computational mechanics, the analysis is performed with an approximation method such as FEM. In this work, we consider the discrete approach for shape optimization, which means that the discretization step happens prior to the formulation of the optimization problem [85]. In this case, the objective function is expressed using the state variables \(\varvec{u}\):

In the case of static linear analysis, the state variables \(\varvec{u}\) (namely the displacement Degrees Of Freedom) depend implicitly on the design variables x through a linear system of equations:
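A standard way of writing the response function and this state equation, using the notation of the surrounding text, is:

\[
f\big(\varvec{x},\varvec{u}(\varvec{x})\big)
\quad\text{with}\quad
\mathbf{K}(\varvec{x})\,\varvec{u}(\varvec{x}) = \varvec{F}(\varvec{x}).
\]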

Indeed, the stiffness matrix \(\mathbf {K}\) and the load vector \(\varvec{F}\) are built through domain integrals. It means that their expressions depend on the shape of the structure, and consequently on the design variables x.

Let us also mention the case of natural frequency analysis which is also widely encountered in computational structural analysis. The discretization step results in an eigenvalue problem:
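In the usual generalized form, with both matrices depending on the design variables through the geometry, this eigenvalue problem reads:

\[
\mathbf{K}(\varvec{x})\,\varvec{v} = \lambda\,\mathbf{M}(\varvec{x})\,\varvec{v}.
\]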

where \(\mathbf {M}\) denotes the mass matrix. As before, the governing equations implicitly link the eigenvalues \(\lambda\) and their corresponding eigenvectors \(\varvec{v}\) to the design variables. The objective function may then involve these quantities:

In this work, we present a global framework that is not limited to one type of analysis. In the numerical experiments section, we consider both static linear analysis and structural vibration analysis.

Optimization Algorithm Numerous algorithms can solve constrained optimization problems of form (1), each usually designed for a specific class of problems. In structural shape optimization, gradient-based algorithms are often used. This is possible when the objective and constraint functions are differentiable w.r.t. the design variables. For large problems with hundreds to thousands of design variables, the use of gradients is practically unavoidable to build an algorithm that converges in an acceptable amount of time. The information provided by the gradients enables the algorithm to make suitable decisions during the resolution [72]. For large-scale problems, gradient-free algorithms may require many more evaluations of the objective and constraint functions, which drastically increases the overall computational time.

The computation of the sensitivities is a key step of the resolution for any gradient-based algorithm. This work addresses this issue in the context of isogeometric shape optimization. We show how fully analytical sensitivities can be obtained for several objective functions and several types of element formulation (2D and 3D solids, and the widely used Kirchhoff–Love shell).

2.2 Targeted Numerical Examples

Let us now describe the optimization problems considered in this work. Through the examples, we alternate between standard IGA solid elements (2D or 3D) and a Kirchhoff–Love shell element. For all the numerical examples, we focus on the computation of the sensitivities. Furthermore, this work aims at motivating the use of isogeometric analysis for shape optimization. Some of the presented examples have not yet been addressed in the context of isogeometric shape optimization, and thus broaden its scope of application.

Compliance Taking the compliance as the objective function is the most common choice in structural optimization. It can be expressed as follows:

We will tackle three optimization problems that consist in minimizing the compliance under a given volume constraint:

-

Plate with a hole (2D solid),

-

Square roof (shell),

-

3D beam (3D solid).

Displacement Another possible choice for the objective function concerns the minimization of the displacement at a prescribed location (e.g. at point \({\varvec{M}}\)). Such an objective function can be expressed as follows:

where \(R_{k}\) denote the basis functions associated with the discretization. Instead of considering the displacement at a specific location, one may want to minimize the maximal deflection of a structure. Solving min-max problems is known to be difficult due to the non-differentiability of the max function [33, 58]. This issue can be avoided by replacing the max function with an alternative continuous and differentiable function [3, 86]. In this work, we employ the P-norm:

where P is a positive integer. As P grows, the function \(\varPhi _{f}\) approaches the maximal value returned by function f at the \(n_{pts}\) selected locations. Alternative aggregation functions exist, for instance the Kreisselmeier-Steinhauser function [3, 17, 86]. In this work, we perform two examples with \(f_{\text {u}}\) and \(\varPhi {}_{\text {u}}\), respectively, as the objective functions:

-

2D cantilever beam (2D solid),

-

Square roof (shell).
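The P-norm aggregation, together with its partial derivatives (needed when the aggregated quantity enters the right-hand side of an adjoint problem), can be sketched as follows. This is a minimal illustration with hypothetical function names, not the authors' implementation; the sampled values \(f_{k}\) stand for, e.g., displacement magnitudes at the \(n_{pts}\) selected locations.

```python
import numpy as np


def p_norm(values, P):
    """P-norm aggregation (sum_k |f_k|^P)^(1/P) of the sampled values f_k."""
    v = np.abs(np.asarray(values, dtype=float))
    vmax = v.max()
    if vmax == 0.0:
        return 0.0
    # Scale by the largest value so that v**P cannot overflow for large P.
    return vmax * np.sum((v / vmax) ** P) ** (1.0 / P)


def p_norm_gradient(values, P):
    """Partial derivatives dPhi/df_k = sign(f_k) * (|f_k| / Phi)^(P - 1)."""
    f = np.asarray(values, dtype=float)
    phi = p_norm(f, P)
    if phi == 0.0:
        return np.zeros_like(f)
    return np.sign(f) * (np.abs(f) / phi) ** (P - 1)


if __name__ == "__main__":
    samples = [1.0, 2.0, 3.0]
    for P in (1, 2, 8, 32):
        # The aggregate decreases towards max|f_k| = 3 as P grows.
        print(P, p_norm(samples, P))
```

The choice of P is a trade-off: a large P approximates the maximum closely but makes the aggregate (and its gradient) increasingly sharp, which can slow down a gradient-based optimizer.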

Stress Field In order to prevent the failure of a structure, one may seek to reduce the maximal stresses caused, for example, by stress concentrations. Even if taking the compliance as the objective function tends to reduce the overall magnitude of the stress field, local stress peaks can appear [24, 97]. Adding the maximal stress to an optimization problem raises several difficulties due to its local nature [3, 86]. As for the maximal deflection, one solution consists in using an aggregation function that measures the maximal stress. In this work, this is done through the P-norm:

We consider two examples with \(\varPhi {}_{\sigma }\) as the objective function:

-

2D fillet (2D solid),

-

Catenary arch (shell).

For the catenary arch problem, the objective is to minimize the bending moment along the arch. For the fillet problem, the objective is to minimize the von Mises stresses in the structure.

Natural Frequencies We deal with one last objective function. The possibility addressed here is to maximize the lowest natural frequency \(\lambda _{1}\) of the structure. This is done through the minimization of the following objective function:

However, such an objective function may not be differentiable at specific configurations due to mode switching [66, 76, 96]. In this case, the algorithm has difficulty reaching convergence. To define a differentiable objective function, one possibility is to aggregate (as for the stress-based optimization; see Eq. (9)) a group of \(n_{\lambda }\) frequencies within which mode switching occurs:

We will present one optimization example with \(\varPhi _{\lambda }\) as the objective function:

-

Elephant trunk (3D solid).

The analytical sensitivities will be developed in the context of static analysis. The case of natural frequency analysis will only be mentioned when dealing with this last optimization problem. However, we will see that the computation of the sensitivities is very similar in both contexts and involves the same steps and quantities. Again, this example is presented to highlight the generality of the present framework.

2.3 A Multi-level Approach

The present framework builds on previous research on isogeometric shape optimization. A general procedure, improved over the years, is commonly adopted [21, 89]. The key feature and asset of isogeometric shape optimization lies in the possibility of choosing both the optimization and the analysis spaces appropriately [32, 50, 65,66,67, 75]. A fine discretization is introduced as the analysis model to ensure good-quality computations. Conversely, the optimization model (also called the design model) is defined to impose suitable shape variations. Both models describe exactly the same geometry and are initially obtained through different refinement levels of the same geometric model [38].

During the optimization process, both models interact successively. Naturally, these two models are therefore also involved in the sensitivity analysis. Figure 1 illustrates the role of these models and how they communicate during the optimization process. In what follows, we present how each step depicted in Fig. 1 is formulated. It starts with the definition of a shape parametrization that links the design variables to the design model. Then, we need to clarify the link between the design model and the analysis model, which concerns not only the shape update but also the sensitivity propagation. These first two parts are explained in Sect. 3. The main step of the sensitivity analysis takes place on the analysis model, where the gradients are computed; we deal with this issue in Sect. 4.

Fig. 1 Computation steps during the sensitivity analysis: the design variables act on the optimization model. The analysis model is a refined version of the optimization model (representing exactly the same geometry) on which the structural analysis is performed. This fine model is also used to compute the sensitivities. Using the refinement relation and the definition of the shape parametrization, the sensitivities are then propagated back to the design variable level.

3 Shape Update and Sensitivity Propagation

3.1 Adjoint-Based Discrete Sensitivities

In this work, we compute adjoint-based discrete sensitivities. The starting point is the differentiation of a response function of the form (2). The total derivative of f w.r.t. a design variable \(x_{i}\) reads:

The term \({d\varvec{u}}/{dx_{i}}\) is not explicitly known. It can be identified by differentiating the discrete state equations (3), which gives:

Then, we can substitute Eq. (13) into Eq. (12):

One can see that the inverse \(\mathbf {K}{}^{ -1}\) is involved in the expression of the derivative. In practice, this means that additional linear systems must be solved. There are two ways of dealing with this issue, namely the adjoint and the direct approaches. In the adjoint method, one first solves the adjoint problem:

The adjoint problem does not involve any particular design variable: its right-hand side depends only on the response function. Consequently, the same adjoint solution \(\varvec{u}^{*}\) is reused for every design variable. Finally, the complete expression of the total derivative reads as follows:
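Gathered in one place, and assuming a symmetric stiffness matrix and the sign convention \(\mathbf{K}\varvec{u}^{*} = (\partial f/\partial \varvec{u})^{T}\) for the adjoint problem (other sign conventions are common and flip the sign of \(\varvec{u}^{*}\)), the derivation reads:

\[
\begin{aligned}
\frac{df}{dx_{i}} &= \frac{\partial f}{\partial x_{i}} + \frac{\partial f}{\partial \varvec{u}}\,\frac{d\varvec{u}}{dx_{i}},
\qquad
\mathbf{K}\,\frac{d\varvec{u}}{dx_{i}} = \frac{d\varvec{F}}{dx_{i}} - \frac{d\mathbf{K}}{dx_{i}}\,\varvec{u},\\
\frac{df}{dx_{i}} &= \frac{\partial f}{\partial x_{i}} + \frac{\partial f}{\partial \varvec{u}}\,\mathbf{K}^{-1}\left(\frac{d\varvec{F}}{dx_{i}} - \frac{d\mathbf{K}}{dx_{i}}\,\varvec{u}\right),\\
\mathbf{K}\,\varvec{u}^{*} &= \left(\frac{\partial f}{\partial \varvec{u}}\right)^{T}
\;\Longrightarrow\;
\frac{df}{dx_{i}} = \frac{\partial f}{\partial x_{i}} + \varvec{u}^{*T}\left(\frac{d\varvec{F}}{dx_{i}} - \frac{d\mathbf{K}}{dx_{i}}\,\varvec{u}\right).
\end{aligned}
\]

For the compliance \(f = \varvec{F}^{T}\varvec{u}\), for example, the adjoint right-hand side is \(\varvec{F}\), so that \(\varvec{u}^{*} = \varvec{u}\) and no additional solve is needed.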

As an alternative to the adjoint method, one can adopt the direct approach. It consists in solving several linear systems with the so-called pseudo-load vectors as right-hand sides:

Once the solution \(\varvec{v}_{i}\) is computed, one can get the total derivative:

Thus, in the direct sensitivity analysis, there are potentially as many systems to solve as there are design variables. Adjoint sensitivity analysis is therefore often preferred as long as the number of response functions involved in the optimization problem (objective and constraints) is smaller than the number of design variables.
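The difference in cost between the two approaches can be seen on a toy problem. The sketch below uses a hypothetical two-spring model (not one of the paper's examples) whose stiffnesses play the role of design variables, and the compliance \(f = \varvec{F}^{T}\varvec{u}\) as response. It assumes the sign convention \(\mathbf{K}\varvec{u}^{*} = (\partial f/\partial \varvec{u})^{T}\): the adjoint gradient needs one extra solve in total, the direct gradient one solve per design variable, and a central finite-difference gradient (an approximate sensitivity) serves as a check.

```python
import numpy as np

# Hypothetical toy model: two springs in series, with stiffnesses x[0] and x[1]
# acting as design variables. The load F does not depend on x.
F = np.array([0.0, 1.0])


def stiffness(x):
    k1, k2 = x
    return np.array([[k1 + k2, -k2],
                     [-k2, k2]])


def stiffness_derivatives(x):
    # K is linear in x, so each dK/dx_i is a constant matrix.
    return [np.array([[1.0, 0.0], [0.0, 0.0]]),
            np.array([[1.0, -1.0], [-1.0, 1.0]])]


def compliance(x):
    u = np.linalg.solve(stiffness(x), F)
    return F @ u  # f = F^T u


def adjoint_gradient(x):
    K = stiffness(x)
    u = np.linalg.solve(K, F)
    df_du = F                             # partial derivative of F^T u w.r.t. u
    u_star = np.linalg.solve(K.T, df_du)  # a single adjoint solve for all variables
    # df/dx_i = (explicit term) + u*^T (dF/dx_i - dK/dx_i u); the explicit
    # term and dF/dx_i both vanish in this toy model.
    return np.array([-u_star @ (dK @ u) for dK in stiffness_derivatives(x)])


def direct_gradient(x):
    K = stiffness(x)
    u = np.linalg.solve(K, F)
    grad = []
    for dK in stiffness_derivatives(x):
        du_dx = np.linalg.solve(K, -dK @ u)  # one pseudo-load solve per variable
        grad.append(F @ du_dx)
    return np.array(grad)


def finite_difference_gradient(x, h=1e-6):
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = h
        grad[i] = (compliance(x + step) - compliance(x - step)) / (2.0 * h)
    return grad


if __name__ == "__main__":
    x = np.array([2.0, 4.0])
    print(adjoint_gradient(x), direct_gradient(x), finite_difference_gradient(x))
```

Here the compliance equals \(1/x_{1} + 1/x_{2}\), so at \(x = (2, 4)\) all three gradients should approach \((-0.25, -0.0625)\).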

One can see that, in both the direct and the adjoint approaches, the derivatives of the stiffness matrix and of the load vector w.r.t. the design variables are involved. However, the quantities \(\mathbf {K}\) and \({\varvec{F}}\) are defined on the analysis model, while the design variables act on the optimization model. In other words, intermediate steps separate the design variables from the element operators. To compute the aforementioned derivatives, the idea is to apply the chain rule of differentiation several times, according to the steps that link the design variables to the element operators (i.e. the steps taking place during the shape update; see again Fig. 1).

3.2 Shape Representation and Parametrization

The first step is the shape parametrization that defines the design variables. Indeed, until now, we have not clearly defined what these design variables are.

NURBS Geometric Modeling In the context of isogeometric shape optimization, the whole process is based on geometric models. On the one hand, these geometric models represent the shape of the structure. On the other hand, they are also used to perform the analysis. Historically, isogeometric analysis was introduced using NURBS models (see Hughes et al. [42]), but it is not restricted to them. In this work, we only use NURBS models, but the approach can be extended to other types of analysis-suitable geometric models.

There is a large literature on NURBS modeling and related geometric modeling techniques. The interested reader can refer to Cohen et al. [16], Cottrell et al. [19], Farin [30] and Piegl and Tiller [74], to name just a few. Let us only introduce the very basics here. NURBS is the acronym for Non-Uniform Rational Basis Spline. It is today the most commonly used technology in CAD. It describes complex geometric objects in parametric form through piecewise rational functions (see for example Piegl and Tiller [74] for more information). More precisely, a NURBS surface \({\varvec{S}}\) and a NURBS volume \({\varvec{V}}\) in 3D space are multivariate vector-valued functions of the form:
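In the standard notation of the NURBS literature (the index mapping \(k \leftrightarrow (i,j)\), the univariate B-Spline functions \(N_{i}\), \(M_{j}\) and the weights \(w_{ij}\) are notations introduced here for illustration), these functions read:

\[
\varvec{S}(\theta_{1},\theta_{2}) = \sum_{k=1}^{n_{cp}} R_{k}(\theta_{1},\theta_{2})\,\varvec{P}_{k},
\qquad
\varvec{V}(\theta_{1},\theta_{2},\theta_{3}) = \sum_{k=1}^{n_{cp}} R_{k}(\theta_{1},\theta_{2},\theta_{3})\,\varvec{P}_{k},
\]

where, for a surface,

\[
R_{k}(\theta_{1},\theta_{2}) = \frac{N_{i}(\theta_{1})\,M_{j}(\theta_{2})\,w_{ij}}{\sum_{a}\sum_{b} N_{a}(\theta_{1})\,M_{b}(\theta_{2})\,w_{ab}},
\]

and the volume case adds a third univariate factor in the same way.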

The parameters \(\theta _{i}\) take real values in closed intervals (which form the parameter space \({\bar{\Omega }}\)), usually [0, 1]. Each control point \(\varvec{P}_{k}\) is associated with a multivariate rational basis function \(R_{k}\). These multivariate rational basis functions are built by tensor products and weighting of univariate piecewise polynomial basis functions (the B-Spline functions). Finally, these univariate basis functions are defined by a polynomial degree and a knot vector. An example of a NURBS surface is given in Fig. 2. This surface represents one quarter of a square plate with a hole. The main input is the control net, formed by the linear interpolation of the control points. To impose shape variations, an adequate choice consists in modifying the control point coordinates: moving the control points alters the shape of the structure. This is a common choice in IGA-based shape optimization, which takes up the idea behind CAD-based shape optimization (see for example Braibant and Fleury [13], Hsu [41] and Imam [43]). Owing to the weighting in the NURBS formulation, it is also possible to act on the weights associated with the control points to impose shape modifications [65,66,67, 75]. This option is not investigated in this work.

Shape Parametrization There are infinitely many possibilities for defining the shape parametrization. A very simple parametrization consists in defining one independent design variable per movable control point, each variable moving its associated control point in a specific direction:

Similarly, several design variables can be associated with a single control point: for example, one variable moves the control point in the x-direction and a second variable in the y-direction. Furthermore, it can be useful to link one specific variable to multiple control points, for example to preserve geometric continuity or a symmetry. This more general shape parametrization takes the form:
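A linear form consistent with this description is given below; the initial position \(\varvec{P}_{k}^{0}\) and the fixed direction of motion \(\varvec{d}_{ik}\) are notations introduced here for illustration, and the simple one-variable-per-point case corresponds to each \({\mathcal {D}}_{k}\) containing a single variable:

\[
\varvec{P}_{k}(\varvec{x}) = \varvec{P}_{k}^{0} + \sum_{i\in{\mathcal {D}}_{k}} x_{i}\,\varvec{d}_{ik},
\qquad
\frac{\partial \varvec{P}_{k}}{\partial x_{i}} =
\begin{cases}
\varvec{d}_{ik} & \text{if } i\in{\mathcal {D}}_{k},\\
\varvec{0} & \text{otherwise.}
\end{cases}
\]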

where \({\mathcal {D}}_{k}\) is the set of design variables acting on the kth control point. An example of design parametrization is given in Fig. 2 for the problem of a plate with a hole. Mathematically, this shape parametrization reads as:

where \({\varvec{e}}_{i}\) are the Cartesian basis vectors. More complex shape parametrizations than those of the form (22) can be formulated, for example rotating a group of control points about an axis.

The point to emphasize is that the shape parametrization links a group of design variables to the control point coordinates. The sensitivity analysis therefore involves the differentiation of this shape parametrization. To highlight this point, let us denote by \(P_{jk}\) the \(j^{th }\) Cartesian component of the control point \(\varvec{P}_{k}\). With these notations, we can express the derivatives w.r.t. the design variables through the derivatives w.r.t. the control points of the optimization model:

where the symbol \({\bullet }\) denotes either a component of the stiffness matrix or of the load vector (or any quantity to be differentiated). The term \({\partial {\varvec{P}}}/{\partial {x_{i}}}\) represents the differentiation of the shape parametrization: it contains the derivatives of the control points w.r.t. the design variables. For a shape parametrization of the form (22), this operator reads:

In what follows, we view this operator as a matrix of size \(n_{\text {cp}}\times {}3\). Furthermore, this operator is very sparse, and this sparsity should be exploited for numerical efficiency, since one design variable usually moves only a few control points.
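One way to exploit this sparsity in the chain rule (24) is to store, for each design variable, only the nonzero rows of \({\partial {\varvec{P}}}/{\partial {x_{i}}}\). The sketch below assumes a linear parametrization in which each variable moves some control points along fixed directions; the data layout `links` and the function names are hypothetical, and the scalar test function stands in for a component of the stiffness matrix or load vector.

```python
import numpy as np

# Hypothetical sparse storage of dP/dx: links[i] lists the (control point k,
# direction d_ik) pairs moved by design variable x_i, i.e. the nonzero rows of dP/dx_i.
links = [
    [(1, np.array([1.0, 0.0, 0.0]))],                                  # x_0 moves point 1 along e_1
    [(2, np.array([0.0, 1.0, 0.0])), (3, np.array([0.0, 1.0, 0.0]))],  # x_1 moves points 2 and 3 along e_2
]


def apply_parametrization(P0, x, links):
    """Control points of the design model: P_k = P_k^0 + sum_{i in D_k} x_i d_ik."""
    P = P0.copy()
    for xi, pairs in zip(x, links):
        for k, d in pairs:
            P[k] += xi * d
    return P


def design_gradient(dbullet_dP, links):
    """Chain rule d(.)/dx_i = sum_{k,j} d(.)/dP_jk * dP_jk/dx_i.

    dbullet_dP is an (n_cp, 3) array; only the nonzero rows of dP/dx_i are visited.
    """
    grad = np.zeros(len(links))
    for i, pairs in enumerate(links):
        for k, d in pairs:
            grad[i] += dbullet_dP[k] @ d
    return grad


if __name__ == "__main__":
    P0 = np.arange(12.0).reshape(4, 3)
    x = np.array([0.3, -0.2])
    P = apply_parametrization(P0, x, links)
    # For the test function g(P) = sum(P**2), dg/dP = 2P.
    print(design_gradient(2.0 * P, links))
```

The cost of the loop scales with the number of moved control points rather than with \(n_{\text {cp}}\), which is the point of keeping the operator sparse.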

For the example of the plate with a hole (see Fig. 2 and Eq. (23)), the derivatives of the shape parametrization are:

where the dots \((\ldots )\) indicate that the remaining components are zero; they correspond to the fixed control points.

3.3 From the Design Model to the Analysis Model

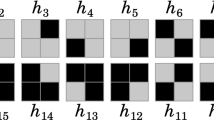

Spline Refinement As presented in Sect. 2.3, a major benefit of IGA-based shape optimization lies in the multilevel approach, where the analysis model is a refined version of the design model. This is made possible by the refinement strategies available for B-Splines and NURBS. The first possibility consists in inserting new knots into the knot vectors. This process, called knot insertion, increases the number of elements in the discretization, as shown in Fig. 3. It is also possible to elevate the degree of the underlying basis functions. Degree elevation keeps the same element density and the same regularity at the knots but increases the number of control points (and thus enriches the approximation space). Generally, these two refinement strategies are combined, which offers great flexibility for the construction of the analysis model. This leads to the so-called k-refinement [19], in which the degree, the regularity, and the element density are all increased. For example, in Fig. 3, the degree is elevated to three in both directions and the number of elements is increased such that the final surface has \(4\times {}4\) elements. We refer the interested reader to Cottrell et al. [18, 19] and Hughes et al. [42] for more information on spline refinement.

Interestingly, these refinement procedures are linear maps: the control points \({\varvec{Q}}\) of the finer model are obtained through a linear relation of the form:

The refinement matrix \(\mathbf {R}\) is a sparse rectangular matrix of size \(m_{cp}\times {}n_{cp}\), \(m_{cp}\) being the number of control points of the analysis model. Equation (26) involves homogeneous coordinates: considering \(\varvec{P}_{i} = (x_{i},y_{i},z_{i})\) with associated weight \(w_{i}\), then \(\varvec{P}_{i}^{w} = (x_{i}w_{i},y_{i}w_{i},z_{i}w_{i})\). In other words, we have:

where \(\mathrm {diag}({\varvec{w}}_{P})\) is a diagonal matrix whose diagonal contains the \(n_{cp}\) weights associated to the control points \({\varvec{P}}\). The expression of the refinement matrices in case of the knot insertion and degree elevation can be found in Lee and Park [55] and Piegl and Tiller [74], for example. The degree elevation is done in three steps: Bézier decomposition (knot insertion), degree elevation on each Bézier segment, and combination of the refined Bézier segments (knot removal). These three steps can be combined in order to form the refinement matrix \(\mathbf {R}\).
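To make the linear map (26) concrete, the sketch below builds the refinement matrix for a single knot insertion on a B-spline curve, following the standard knot insertion formula (see e.g. Piegl and Tiller [74]), and checks that the refined curve is geometrically identical. Weights are taken equal to one, so homogeneous and Cartesian coordinates coincide. Function names are hypothetical; degree elevation and surfaces or volumes (where univariate matrices are combined by tensor products) are not covered.

```python
import numpy as np


def bspline_basis(i, p, U, t):
    """Cox-de Boor recursion for the B-spline basis function N_{i,p}(t)."""
    if p == 0:
        return 1.0 if U[i] <= t < U[i + 1] else 0.0
    value = 0.0
    if U[i + p] > U[i]:
        value += (t - U[i]) / (U[i + p] - U[i]) * bspline_basis(i, p - 1, U, t)
    if U[i + p + 1] > U[i + 1]:
        value += (U[i + p + 1] - t) / (U[i + p + 1] - U[i + 1]) * bspline_basis(i + 1, p - 1, U, t)
    return value


def curve_point(P, p, U, t):
    """Point at parameter t of the B-spline curve with control points P (rows)."""
    return sum(bspline_basis(i, p, U, t) * P[i] for i in range(len(P)))


def knot_insertion_matrix(U, p, t):
    """Refinement matrix R (Q = R @ P) and new knot vector for inserting t once."""
    U = np.asarray(U, dtype=float)
    n = len(U) - p - 1                           # number of control points
    k = np.searchsorted(U, t, side="right") - 1  # span index: U[k] <= t < U[k+1]
    R = np.zeros((n + 1, n))
    for i in range(n + 1):
        if i <= k - p:
            R[i, i] = 1.0                        # points before the span are kept
        elif i >= k + 1:
            R[i, i - 1] = 1.0                    # points after the span are shifted
        else:
            alpha = (t - U[i]) / (U[i + p] - U[i])
            R[i, i] = alpha
            R[i, i - 1] = 1.0 - alpha
    return R, np.insert(U, k + 1, t)


if __name__ == "__main__":
    U = [0.0, 0.0, 0.0, 0.5, 1.0, 1.0, 1.0]
    P = np.array([[0.0, 0.0], [1.0, 2.0], [3.0, 2.0], [4.0, 0.0]])
    R, U_fine = knot_insertion_matrix(U, 2, 0.25)
    Q = R @ P
    for t in (0.1, 0.3, 0.6, 0.9):
        print(curve_point(P, 2, U, t), curve_point(Q, 2, U_fine, t))
```

Each row of R sums to one, so every refined control point is an affine combination of coarse ones; repeated insertions are obtained by multiplying the successive matrices.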

Sensitivity Propagation The transition from the design model to the analysis model is also involved in the sensitivity analysis. Indeed, after the introduction of the shape parametrization, we need to differentiate the stiffness matrix and the load vector w.r.t. the control point coordinates of the optimization model (i.e. the term \({\partial { {\bullet }}}/{\partial {\varvec{P}}}\) in Eq. (24)). However, these operators are built on the analysis model, not on the design model. Therefore, to compute these derivatives, we express them in terms of derivatives w.r.t. the control points of the analysis model. To that end, we apply the chain rule once again:

The link between the control points \(\varvec{P}\) of the optimization model and the control points \(\varvec{Q}\) of the analysis model is given by the refinement matrix \(\mathbf {R}\). More precisely, Eq. (26) enables us to write:

Thus, the chain rule (28) takes the form:

The gradients \({\partial { {\bullet }}}/{\partial { \varvec{P}^{w} }}\) and \({\partial { {\bullet }}}/{\partial {\varvec{Q}^{w}}}\) must have appropriate shapes for Eq. (30) to make sense. We suggest viewing them as matrices of respective sizes \(n_{cp}\times {3}\) and \(m_{cp}\times {3}\). Recalling Eqs. (27), (28), and (30), we obtain the following link between the derivatives w.r.t. the control points of the analysis and design models:

In the rest of the document, we omit the weighting terms (\(\mathrm {diag}({\varvec{w}}_{P})\) and \(\mathrm {diag}({\varvec{w}}_{Q})\)) for the sake of clarity. Note also that in most examples of this work the weights are equal to one (B-Splines instead of NURBS), which makes these matrices equal to the identity.

Finally, note that the refinement matrix does not change as long as the refinement levels of the optimization and analysis models remain unchanged. The matrix is therefore commonly built once at the beginning of the optimization and reused throughout the process. In addition, this matrix is sparse and of moderate size (compared with the stiffness matrix, for instance). As a result, the transition between both models is computationally cheap.
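The pull-back from the analysis level to the design level can be made concrete with a minimal Python/NumPy sketch of the simplest case. It refines a non-rational (B-Spline) curve by a single knot insertion, so the weight matrices reduce to the identity and \(\varvec{Q} = \mathbf {R}\varvec{P}\). A gradient w.r.t. the analysis control points is then mapped to the design control points with \(\mathbf {R}^{T}\). The helper `knot_insertion_matrix` is a hypothetical name implementing the standard single knot insertion rule (Boehm's rule, see e.g. Piegl and Tiller [74]). Degree elevation and repeated insertions are not shown; their matrices are multiplied together. For NURBS, the same matrix would act on the weighted (homogeneous) coordinates.

```python
import numpy as np

def knot_insertion_matrix(U, p, u):
    """Hypothetical helper: refinement matrix R such that Q = R @ P after
    inserting the knot u once into the open knot vector U of a degree-p
    B-Spline (Boehm's rule)."""
    U = np.asarray(U, dtype=float)
    n = len(U) - p - 1                              # number of design control points
    k = np.searchsorted(U, u, side="right") - 1     # knot span: U[k] <= u < U[k+1]
    R = np.zeros((n + 1, n))
    for i in range(n + 1):
        if i <= k - p:
            alpha = 1.0                             # control point kept as is
        elif i >= k + 1:
            alpha = 0.0                             # control point shifted by one index
        else:
            alpha = (u - U[i]) / (U[i + p] - U[i])  # affine combination of P_{i-1}, P_i
        if i < n:
            R[i, i] += alpha
        if i >= 1:
            R[i, i - 1] += 1.0 - alpha
    return R, np.insert(U, k + 1, u)

# Design model: quadratic B-Spline curve with 4 control points in 2D.
U = [0.0, 0.0, 0.0, 0.5, 1.0, 1.0, 1.0]
P = np.array([[0.0, 0.0], [1.0, 2.0], [3.0, 2.0], [4.0, 0.0]])
R, U_fine = knot_insertion_matrix(U, p=2, u=0.25)
Q = R @ P                                           # analysis control points

# A gradient available on the analysis model (one row per analysis control
# point, random here) is pulled back to the design model with R^T.
dW_dQ = np.random.default_rng(0).normal(size=Q.shape)
dW_dP = R.T @ dW_dQ
```

Each row of \(\mathbf {R}\) sums to one because knot insertion only forms affine combinations of neighbouring control points. For realistic models, \(\mathbf {R}\) would be stored as a sparse matrix (for example with scipy.sparse) and built once before the optimization loop.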

Substituting Eqs. (31) and (24) into the expression of the total derivative (16) yields:

In order to describe the above term in brackets, let us introduce a function W that takes as input arguments the state variables \(\varvec{u}\), the adjoint variables \(\varvec{u}^{*}\), and the control points \(\varvec{Q}\) of the analysis model. We define this function as:

Even though we have not yet given the expressions of the stiffness matrix and of the load vector, let us recall that the governing equations (3) come from the virtual work principle. Consequently, W can be seen as the total virtual work in which the virtual displacement field is the adjoint solution. In what follows, the function \(\text {W}\) is therefore referred to as the adjoint work.

The load vector and the stiffness matrix depend on the computational domain, and hence on the control point coordinates, since these define the shape of the domain. The full computation of the sensitivities therefore requires the partial derivative of the adjoint work \({\text {W}}\) w.r.t. the control point coordinates of the analysis model. With these notations, Eq. (32) reads:

with

We show in the next section how to compute this missing derivative \({\partial {\text {W}}}/{\partial {\varvec{Q}}}\). Depending on the formulation of the response function f, the partial derivative \({\partial {f}}/{\partial {x_{i}}}\) may also have to be computed through the same chain rule. In this case, the sensitivity reads:

For clarity and due to the large number of equations, we summarize in Algorithm 1 the main steps for the computation of the sensitivity. Once again, all these steps are illustrated in Fig. 1.
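The adjoint procedure can be tried out on a deliberately small problem before splines are involved. The following sketch rests on simplifying assumptions of our own. It uses a 1D bar of linear two-node elements whose nodal coordinates X stand in for the control points \(\varvec{Q}\). Node 0 is clamped, and the load vector does not depend on X. The sketch solves the state and adjoint problems. It then evaluates the gradient as the derivative of the adjoint work \(\varvec{u}^{*T}(\varvec{F} - \mathbf {K}\varvec{u})\) with \(\varvec{u}\) and \(\varvec{u}^{*}\) held fixed, using the sign convention \(\mathbf {K}^{T}\varvec{u}^{*} = \partial f/\partial \varvec{u}\). All function names are hypothetical.

```python
import numpy as np

def assemble(X, EA=1.0):
    """Stiffness K and its derivatives dK[j] = dK/dX_j for a 1D bar whose
    nodal coordinates X play the role of the control points."""
    n = len(X)
    K = np.zeros((n, n))
    dK = np.zeros((n, n, n))
    for e in range(n - 1):
        s = slice(e, e + 2)                  # the two active nodes of element e
        L = X[e + 1] - X[e]
        ke = EA / L * np.array([[1.0, -1.0], [-1.0, 1.0]])
        K[s, s] += ke
        dK[e + 1, s, s] += -ke / L           # dke/dL with dL/dX_{e+1} = +1
        dK[e, s, s] += ke / L                # dke/dL with dL/dX_e = -1
    return K, dK

def adjoint_sensitivity(X, F, df_du, EA=1.0):
    """df/dX by the adjoint method; node 0 clamped, F independent of X."""
    K, dK = assemble(X, EA)
    free = np.arange(1, len(X))
    Kff = K[np.ix_(free, free)]
    u = np.zeros(len(X))
    u[free] = np.linalg.solve(Kff, F[free])                # state problem
    u_adj = np.zeros(len(X))
    u_adj[free] = np.linalg.solve(Kff.T, df_du(u)[free])   # adjoint problem
    # dW/dX_j = u_adj^T (dF/dX_j - dK/dX_j u), with dF/dX_j = 0 here
    grad = np.array([-u_adj @ dK[j] @ u for j in range(len(X))])
    return u, grad

X = np.array([0.0, 1.0, 2.5, 3.0])
F = np.array([0.0, 1.0, 0.0, 1.0])
u, grad = adjoint_sensitivity(X, F, lambda v: np.eye(len(v))[-1])  # f = tip displacement
print(u[-1], grad)   # tip displacement ≈ 4, gradient ≈ [-2, 1, 0, 1]
```

Here each element adds its axial force to the derivative at its right node and subtracts it at its left node. The gradient of the tip displacement is therefore \([-2, 1, 0, 1]\), which a central finite-difference check reproduces. Passing `lambda v: F` as `df_du` gives the compliance. In that case the adjoint solution coincides with the state solution.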

4 Differentiating the Element Formulation

This section deals with the computation of the derivatives \({\partial {\text {W}}}/{\partial {\varvec{Q}}}\) through Eq. (35). These derivatives depend on the element formulation. We present the case of the isogeometric standard solid element and the case of an isogeometric Kirchhoff–Love shell element.

4.1 Element Formulations Using Local Coordinates

A convenient way to compute the derivatives involved in Eq. (35) is to formulate the element using the curvilinear formalism. Compared with the classical formulation based on Cartesian coordinates, this formalism makes it easier to identify the geometric quantities involved in the element formulation. Moreover, it is applicable, and even required, for many element formulations, including beams, shells and solids. We thus provide a unified framework usable for a wide range of element formulations. Very similar calculation steps are involved for the two types of isogeometric element considered in this work: the standard solid element (2D or 3D) and a Kirchhoff–Love shell formulation.

In this context, the position vector associated with a material point is constructed from curvilinear coordinates \(\theta _{i}\) instead of Cartesian coordinates \(X_{i}\). This formalism makes it possible to describe curved shapes, and it is therefore widely used in shell theory [5]. Cylindrical and spherical coordinate systems are typical examples of curvilinear coordinate systems. For instance, locating a point on a cylinder is simplified by the use of cylindrical coordinates, i.e. a radial coordinate r, an angular coordinate \(\theta\), and a height z:

where \(\varvec{X}\) is the position vector associated with the material point, and \({\varvec{E}}_{i}\) are the Cartesian base vectors. Curvilinear coordinates can be seen as a generalization of such geometric transformations.

In this context, the gradient operator is written as [5]:

where the comma subscript represents the partial derivatives w.r.t. the curvilinear coordinates, \(\otimes\) denotes the tensor product, and \({\varvec{G}}^{k}\) are the contravariant basis vectors associated to the reference configuration. Einstein’s summation convention applies here.

4.2 Solid Formulation

4.2.1 Continuum Formulation

The linearized Green-Lagrange strain tensor \(\varvec{\varepsilon }\) in curvilinear coordinates reads as:

where the covariant components \(\varvec{\varepsilon }_{ij}\) are given by:

The covariant basis vectors \(\varvec{G}_{i}\) are tangent to the coordinate lines and are defined as:

The contravariant basis vectors can be expressed in terms of the covariant basis vectors as follows:

where \(G^{ij}\) are the contravariant metric coefficients. These coefficients are commonly obtained by inverting the matrix of covariant metric coefficients:

Finally, the covariant metric coefficients \(G_{ij}\) are computed as scalar products of the covariant basis vectors:

More information on these geometric quantities can be found in Echter [25] and Kiendl [48], and in the underlying references cited therein.
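The following NumPy sketch evaluates these geometric quantities for the cylindrical coordinates introduced above. It computes the covariant basis vectors \(\varvec{G}_{i}\), the covariant metric \(G_{ij}\) (Eq. (44)), its inverse \(G^{ij}\) (Eq. (43)), the contravariant basis vectors and the Jacobian as a triple product. The \(\varvec{G}_{i}\) are obtained here by central differences of the mapping. This is an assumption made only to keep the example self-contained; in IGA they come from the spline derivatives. Function names are hypothetical.

```python
import numpy as np

def cylinder(theta):
    """Position vector X(r, phi, z) in cylindrical coordinates."""
    r, phi, z = theta
    return np.array([r * np.cos(phi), r * np.sin(phi), z])

def covariant_basis(mapping, theta, h=1e-6):
    """Columns G_i = dX/dtheta_i, approximated by central differences."""
    theta = np.asarray(theta, dtype=float)
    G = np.empty((3, 3))
    for i in range(3):
        d = np.zeros(3)
        d[i] = h
        G[:, i] = (mapping(theta + d) - mapping(theta - d)) / (2.0 * h)
    return G

def metric_quantities(G):
    G_cov = G.T @ G                               # G_ij = G_i . G_j, Eq. (44)
    G_con = np.linalg.inv(G_cov)                  # G^ij from the 3x3 inverse, Eq. (43)
    G_dual = G @ G_con                            # columns G^i = G^ij G_j
    jac = G[:, 0] @ np.cross(G[:, 1], G[:, 2])    # |J| = G_1 . (G_2 x G_3)
    return G_cov, G_con, G_dual, jac

G = covariant_basis(cylinder, (2.0, 0.3, 1.0))
G_cov, G_con, G_dual, jac = metric_quantities(G)
```

For r = 2, one expects \(G_{ij} = \mathrm {diag}(1, r^{2}, 1) = \mathrm {diag}(1, 4, 1)\), \(|J| = r = 2\) and \(\varvec{G}^{i}\cdot \varvec{G}_{j} = \delta ^{i}_{j}\), up to finite-difference error.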

There is already an interesting point to notice in the expression of the covariant strain components (40): the geometric information is easy to locate, since all the geometric quantities are contained in the covariant basis vectors only. When using Cartesian coordinates, the linearized strain components would read:

Here, the geometric information is harder to identify: it is contained in the differential operators. Without giving further details at this stage, one can already sense that the curvilinear formalism will ease the subsequent differentiation of the element formulation w.r.t. the control point coordinates.

Let us return to the element formulation. In the case of small displacements, the second Piola–Kirchhoff stress tensor is approximated by the linearized Cauchy stress tensor \(\varvec{\sigma }\). Furthermore, under the assumption of linear elastic material behavior, the material law is given by Hooke’s law:

where : denotes the scalar product of second-order tensors (same convention as in [5]). The fourth-order elasticity tensor is given by:

The material parameters \(\lambda\) and \(\mu\) are the Lamé constants. Thus, the stress tensor as defined by (46) is usually represented through its contravariant components:

with Einstein’s summation convention.
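Eq. (47) is not reproduced here, so the sketch below assumes the usual isotropic form of the contravariant elasticity tensor, \(C^{ijkl} = \lambda G^{ij}G^{kl} + \mu (G^{ik}G^{jl} + G^{il}G^{jk})\). It computes \(\sigma ^{ij} = C^{ijkl}\varepsilon _{kl}\) with numpy.einsum. With a Cartesian metric (\(G^{ij} = \delta ^{ij}\)), this reduces to Hooke's law \(\varvec{\sigma } = \lambda \,\mathrm {tr}(\varvec{\varepsilon })\mathbf {I} + 2\mu \varvec{\varepsilon }\). Function names are hypothetical.

```python
import numpy as np

def elasticity_contravariant(G_con, lam, mu):
    """Assumed isotropic form: C^{ijkl} = lam G^ij G^kl + mu (G^ik G^jl + G^il G^jk)."""
    return (lam * np.einsum("ij,kl->ijkl", G_con, G_con)
            + mu * (np.einsum("ik,jl->ijkl", G_con, G_con)
                    + np.einsum("il,jk->ijkl", G_con, G_con)))

def stress_contravariant(C, eps_cov):
    """sigma^{ij} = C^{ijkl} eps_{kl} (summation over k and l)."""
    return np.einsum("ijkl,kl->ij", C, eps_cov)

# Cartesian check: the metric is the identity, so covariant = Cartesian components.
lam, mu = 1.2, 0.8
eps = np.array([[1.0, 0.2, 0.0], [0.2, -0.5, 0.1], [0.0, 0.1, 0.3]])
sigma = stress_contravariant(elasticity_contravariant(np.eye(3), lam, mu), eps)
hooke = lam * np.trace(eps) * np.eye(3) + 2.0 * mu * eps
```

The same check can be made with a non-orthogonal basis. One maps a Cartesian strain to covariant components \(\varepsilon _{ij} = \varvec{G}_{i}\cdot \varvec{\varepsilon }\varvec{G}_{j}\) and maps the result back with \(\sigma ^{ij}\varvec{G}_{i}\otimes \varvec{G}_{j}\). This recovers Hooke's law, which illustrates that the curvilinear element is the standard one written differently.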

The internal virtual work reads as:

where the integration variables are directly the curvilinear coordinates \(\theta _{i}\) (i.e. \({{\text{d}}{\bar{\Omega }}}={\text{d}}\theta _{1}{\text {d}}\theta _{2}{\text {d}}\theta _{3}\)). The Jacobian |J| can be computed through the following triple product:

In addition to the strain components, the curvilinear formalism leads to an expression of the internal virtual work (49) in which the geometric quantities are straightforward to identify. For comparison, the reader can consult [1] or [37] to see the transformation steps required to isolate the geometric part of the internal work when Cartesian coordinates are used. The control point coordinates act on these geometric quantities. A compact expression of them, as provided by the curvilinear formalism, therefore simplifies the calculation of the required derivatives \(\partial {\text {W}{}}/\partial {{\varvec{Q}}}\).

Finally, let us give the expression of the external virtual work:

where \({\varvec{t}}\) are the external surface forces, and \({\varvec{F}}\) are the body forces.

4.2.2 Isogeometric Solid Element

The isogeometric framework fits naturally with the use of curvilinear coordinates: the curvilinear coordinates can be defined as the parameters of the spline. The position vector \(\varvec{X}\) is then defined by a trivariate mapping:

The covariant basis vectors are then obtained by differentiation with respect to the parameters \(\theta _{i}\), which gives:

With these vectors, one can compute the covariant metric coefficients \(G_{ij}\) using Eq. (44). The contravariant metric coefficients \(G^{ij}\) are then obtained by inverting a 3-by-3 matrix, as given by Eq. (43). These are the main calculation steps of the formulation. Using curvilinear coordinates is therefore not a complex task, especially with IGA.

For the rest, everything is identical to classical Finite Element formulations. The displacement field is approximated using the basis functions coming from the discretization of the geometry:

It leads to the following expression of the discretized strain components:

which can be stored into a vector by using, for example, the Voigt notation:

where the \(\mathbf {B}_{k}\) are strain matrices that contain the terms in brackets in Eq. (55). Similarly, the discretized stress is given by:

where \(\mathbf {H}\) is the matrix representation of the fourth-order material tensor given in Eq. (47).

Finally, introducing these approximated quantities into the virtual works (49) and (51) leads to the stiffness matrix \(\mathbf {K}\) and the load vector \({\varvec{F}}\), which are obtained by assembling elementary matrices of the form:

The integral is computed numerically using a quadrature rule. Even though the intermediate steps differ slightly, one should keep in mind that the solid element written in curvilinear form is strictly the same as the standard solid element: the stiffness matrices obtained with both approaches are identical. However, we will see that the curvilinear formalism provides a suitable way to compute analytically the derivatives involved in the sensitivities [see Eq. (35)].

4.2.3 Differentiating the Standard IGA Operators

According to Eq. (35), we now need to compute the derivatives of the external and internal works:

The stiffness matrix and the load vector are built element-wise. The sensitivity analysis will be performed similarly, and we focus here mainly on the stiffness matrix because it is the most challenging part. Summing over each element gives:

An important point to notice is that only the control points associated with the current element e give non-zero derivatives in the term \({\partial {\mathbf{K}^{e}}}/{\partial{\varvec{Q}}}\). Thus, for each element of the analysis model, only the few corresponding components of the gradient are updated. Moreover, building the large matrix \({\partial {\mathbf {K}^{e}}}/{\partial { \varvec{Q} }}\) for each element would be numerically inefficient. Instead, we use the following development:

where k and l denote two control point indices associated to the current element e. Using Eq. (58), the derivatives of the components of the elementary stiffness matrices w.r.t. the control points are given by:

Substituting this last equation into Eq. (62) and commuting the double sum and the integral, we get:

Three terms can be identified:

1. Derivative of the Jacobian:

$$\begin{aligned} \left( \varvec{\varepsilon }^{*}:\varvec{\sigma }\right) \dfrac{\partial { |J| }}{\partial { \varvec{Q} }} \end{aligned} \tag{65}$$

where

$$\begin{aligned} \varvec{\varepsilon }^{*} = \sum _{k} \mathbf {B}_{k}\varvec{u}_{k}^{*}, \quad \varvec{\sigma }= \sum _{l} \mathbf {H}\mathbf {B}_{l}\varvec{u}_{l}; \end{aligned} \tag{66}$$

2. Derivative of the (adjoint) Strains:

$$\begin{aligned} \dfrac{\partial { \varvec{\varepsilon }^{*} }}{\partial { \varvec{Q} }} = \sum _{k} \dfrac{\partial { \mathbf {B}_{k} }}{\partial { \varvec{Q} }} \varvec{u}_{k}^{*}; \end{aligned} \tag{67}$$

3. Derivative of the Stresses:

$$\begin{aligned} \dfrac{\partial { \varvec{\sigma }}}{\partial { \varvec{Q} }} = \sum _{l} \left( \dfrac{\partial { \mathbf {H} }}{\partial { \varvec{Q} }} \mathbf {B}_{l} + \mathbf {H}\dfrac{\partial { \mathbf {B}_{l} }}{\partial { \varvec{Q} }} \right) \varvec{u}_{l}. \end{aligned} \tag{68}$$

We finally end up with a generic expression of the analytical sensitivities. However, in the author’s view, the partial derivatives w.r.t. the control points are more accessible when the element formulation is based on curvilinear coordinates, as shown in what follows.

Derivative of the Jacobian Differentiating the Jacobian w.r.t. the control points leads to:

Recall that the partial derivative \(\partial /\partial {\varvec{Q}}\) contains the partial derivatives w.r.t. the three components of all the active control points (denoted previously \(Q_{ja}\), which corresponds to the \(j{\text {th}}\) component of control point number a). Differentiating the covariant basis vectors \(\varvec{G}_{i}\) gives:

and finally, the complete expression for the derivatives of the Jacobian is:

The derivative \({\partial { |J| }}/{\partial { \varvec{Q}_{a} }}\) is a vector with three components. The gradient \({\partial { |J| }}/{\partial { \varvec{Q} }}\) in Eq. (64) collects these derivatives, where the index a runs over the active control points (those of the current element e).
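Below is a minimal sketch of the Jacobian derivative at one quadrature point of a solid element. It assumes that the active control points \(\varvec{Q}_{a}\) (rows of `Q`) and the parametric derivatives \(N_{a,i}\) of their basis functions (rows of `dN`) are given. Since \(\varvec{G}_{i} = \sum _{a} N_{a,i}\varvec{Q}_{a}\) and \(|J| = \varvec{G}_{1}\cdot (\varvec{G}_{2}\times \varvec{G}_{3})\), differentiating the triple product gives \(\partial |J|/\partial \varvec{Q}_{a} = N_{a,1}(\varvec{G}_{2}\times \varvec{G}_{3}) + N_{a,2}(\varvec{G}_{3}\times \varvec{G}_{1}) + N_{a,3}(\varvec{G}_{1}\times \varvec{G}_{2})\). This is our own derivation from the triple product, offered as a sketch and not as a transcription of Eq. (71). The function name is hypothetical.

```python
import numpy as np

def jacobian_and_gradient(Q, dN):
    """|J| and d|J|/dQ_a at one quadrature point of a solid element.

    Q  : (m, 3) coordinates of the m active control points of the element
    dN : (m, 3) parametric derivatives N_{a,i} of their basis functions
    """
    G = Q.T @ dN                                  # column i is G_i = sum_a N_{a,i} Q_a
    G1, G2, G3 = G[:, 0], G[:, 1], G[:, 2]
    jac = G1 @ np.cross(G2, G3)                   # |J| = G_1 . (G_2 x G_3)
    # d|J|/dQ_a = N_{a,1} (G_2 x G_3) + N_{a,2} (G_3 x G_1) + N_{a,3} (G_1 x G_2)
    crosses = np.stack([np.cross(G2, G3), np.cross(G3, G1), np.cross(G1, G2)])
    djac = dN @ crosses                           # (m, 3): one 3-vector per control point
    return jac, djac
```

The formula only uses the fact that \(\varvec{G}_{i}\) is linear in the control points. Random arrays can therefore stand in for spline data when checking it against central finite differences. Only the m active control points of the element are touched, and their contributions are then scattered into the global gradient.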

Derivative of the Strains It is computationally inefficient to build the matrices \({\partial { \mathbf {B}_{k} }}/{\partial { \varvec{Q} }}\) and then sum them as expressed in Eq. (67). A closer look at the expression shows that the derivative and the sum can be commuted. For instance, let us take the case of a specific strain component \(\varvec{\varepsilon }_{ij}^{h}\):

We already know the derivatives of the covariant vectors w.r.t. the control points (see Eq. (70)). Hence, the term in Eq. (64) involving the derivative of the (adjoint) strains is not as hard to compute as it may seem. One has to compute quantities of the following form:

where the index a corresponds to the active control points, and \(\mathbf {u}^{*}{,_{i}}\) reads as:

The result given by Eq. (73) should be seen as a vector with three components (derivatives w.r.t. each of the three components of \(\varvec{Q}_{a}\)). We thus end up with a compact expression of the term involving the derivatives of the strain components: one simply computes the derivatives of the adjoint field as expressed in Eq. (74) and then inserts these results into Eq. (73). Qian [75], who uses Cartesian coordinates, does not provide such a compact expression. Instead, several intermediate results are given, since combining them leads to a long and impractical expression.
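In the same spirit, the derivative of the covariant adjoint strains can be sketched with NumPy. The sketch assumes the linearized covariant strains \(\varepsilon ^{*}_{ij} = \tfrac{1}{2}(\varvec{G}_{i}\cdot \varvec{u}^{*}_{,j} + \varvec{G}_{j}\cdot \varvec{u}^{*}_{,i})\) with \(\varvec{u}^{*}_{,i} = \sum _{b} N_{b,i}\varvec{u}^{*}_{b}\). Only the basis vectors depend on the control points, so \(\partial \varepsilon ^{*}_{ij}/\partial \varvec{Q}_{a} = \tfrac{1}{2}(N_{a,i}\varvec{u}^{*}_{,j} + N_{a,j}\varvec{u}^{*}_{,i})\). This is the kind of compact expression meant in Eqs. (73) and (74), and no matrix \(\partial \mathbf {B}_{k}/\partial \varvec{Q}\) is ever built. The array layout and the function name are hypothetical.

```python
import numpy as np

def adjoint_strain_and_gradient(Q, dN, U_adj):
    """Covariant adjoint strains eps*_ij and their derivatives w.r.t. Q.

    Q, U_adj : (m, 3) active control points and adjoint DOF of the element
    dN       : (m, 3) parametric derivatives N_{a,i}
    Returns eps (3, 3) and deps (m, 3, 3, 3) with deps[a, c] = d eps / d Q_{ca}.
    """
    G = Q.T @ dN                          # columns G_i
    du = U_adj.T @ dN                     # columns u*_{,i} = sum_b N_{b,i} u*_b
    eps = 0.5 * (G.T @ du + du.T @ G)     # eps*_ij = (G_i . u*_{,j} + G_j . u*_{,i}) / 2
    # Only G_i depends on Q (dG_i/dQ_{ca} = N_{a,i} E_c), hence
    # d eps*_ij / dQ_{ca} = (N_{a,i} u*_{,j}[c] + N_{a,j} u*_{,i}[c]) / 2
    deps = 0.5 * (np.einsum("ai,cj->acij", dN, du)
                  + np.einsum("aj,ci->acij", dN, du))
    return eps, deps
```

Since the strains are linear in \(\varvec{Q}\), a central finite difference reproduces `deps` up to round-off. This makes it a convenient unit test for an implementation of Eq. (73).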

Derivative of the Stresses The derivatives of the strains are a first step towards computing those of the stresses. Again, Eq. (68) is not used as such; instead, we commute the derivative and the sum. For a given stress component, differentiating w.r.t. the control points gives:

with Einstein’s summation convention.

The derivatives of the material tensor \({\partial { {C}^{ijkl} }}/{\partial { \varvec{Q} }}\) are obtained by differentiating Eq. (47). Applying the chain rule leads to:

Thus, the derivatives of the contravariant metrics w.r.t. the control points are involved, and we have not yet computed them. To this end, recall that these metrics are obtained by inverting a 3-by-3 matrix whose components are the covariant metrics [see Eq. (43)]. The following relation holds:

where \(\mathbf {I}\) denotes here the identity matrix of size 3. Differentiating this last equation leads to:

Then, we multiply this result on the right by the matrix of contravariant metrics in order to isolate the derivatives of the contravariant metrics. Using Eq. (77) finally yields:

This expresses the derivatives of the contravariant metrics as functions of the derivatives of the covariant metrics:

The covariant metrics are obtained by dot products between the covariant vectors, as given in Eq. (44). Thus, their derivatives w.r.t. the control points are straightforward:

and by introducing Eq. (70), we obtain the required result:

We now have all the ingredients to compute the derivatives of the stresses w.r.t. the control points of the analysis model, i.e. the terms involved in Eq. (75). As for the strain components, the curvilinear formalism yields a compact expression of the derivatives of the stress components. This expression involves the derivatives of the contravariant metrics contained in the components of the material tensor. The equations to be used are (76), (80) and (82).
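The two metric derivatives can be sketched as follows, reusing the same assumed inputs `Q` and `dN` as in the strain sketch. From the dot products of Eq. (44), \(\partial G_{ij}/\partial \varvec{Q}_{a} = N_{a,i}\varvec{G}_{j} + N_{a,j}\varvec{G}_{i}\). Differentiating \(G^{ik}G_{kl} = \delta ^{i}_{l}\), as described above, gives \(\partial G^{ij} = -G^{ik}\,\partial G_{kl}\,G^{lj}\). numpy.einsum handles the index contractions, and the function name is hypothetical.

```python
import numpy as np

def metric_gradients(Q, dN):
    """Covariant/contravariant metrics and their derivatives w.r.t. Q.

    Returns G_cov, G_con (3, 3) and dG_cov, dG_con (m, 3, 3, 3), where index
    [a, c, i, j] is the derivative w.r.t. component c of control point a.
    """
    G = Q.T @ dN                                   # columns G_i
    G_cov = G.T @ G                                # G_ij = G_i . G_j
    G_con = np.linalg.inv(G_cov)                   # G^ij
    # dG_ij/dQ_{ca} = N_{a,i} G_j[c] + N_{a,j} G_i[c]
    dG_cov = (np.einsum("ai,cj->acij", dN, G)
              + np.einsum("aj,ci->acij", dN, G))
    # From G^ik G_kl = delta^i_l:  dG^ij = -G^ik dG_kl G^lj
    dG_con = -np.einsum("ik,ackl,lj->acij", G_con, dG_cov, G_con)
    return G_cov, G_con, dG_cov, dG_con
```

The contravariant derivatives follow from the covariant ones by two small matrix products per control point component, without differentiating a matrix inverse numerically. These arrays are the inputs needed to differentiate the material tensor through Eq. (76).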

4.2.4 Summary and Generalization

Despite the large number of equations, we end up with a general expression of the required derivatives (formulated initially in Eq. (35)). It is the derivative, w.r.t. the control point coordinates, of the total work evaluated with the displacement and adjoint fields:

where the derivatives of the internal work are given by:

and the derivatives of the external work are given by:

Regarding the implementation, the derivatives should be computed as given by these equations. Algorithm 2 presents the main steps for implementing them and summarizes the principal equations required to implement the gradient.

4.3 Shell Formulation

4.3.1 Continuum Formulation

The Kirchhoff–Love formulation has been extensively studied in the literature, both for analysis and for shape optimization. We only recall here the key theoretical points needed to present the analytical sensitivities. The starting point consists in invoking specific kinematic assumptions, namely the Kirchhoff–Love hypotheses. These hypotheses, introduced by Kirchhoff [51] and Love [61], state that the normals to the mid-surface in the reference configuration remain normal and unstretched in the deformed configuration.

There are different strategies to impose the kinematic assumptions. In this work, we follow the strategy of Kiendl et al. [49] based on the direct approach. The shell is regarded from the beginning as a two-dimensional surface (often called a Cosserat surface), and proper kinematic assumptions representing the three-dimensional behavior are postulated. Thus, the shell continuum is described by its mid-surface \(\varvec{S}\) and director vectors:

where t is the shell thickness. With Kirchhoff–Love kinematics, the director vector is taken as the normal at each point of the mid-surface:

where \(\varvec{A}_{\alpha },\,\alpha =1,2\) are the covariant vectors associated to the mid-surface.

By introducing the aforementioned kinematic assumptions, the displacement field of the entire body can be described only by the displacement \(\mathbf {u}\) of the mid-surface:

In this work, we use the linearized difference vector \({\varvec{w}}^{\text {lin}}\), which is valid under the assumption of small displacements [26, 49]. This vector is also expressed in terms of the displacement of the mid-surface. The kinematic assumptions also imply that the transverse strains (through the thickness) vanish. More details can be found, for example, in Kiendl [48].

Let us directly give the expression of the virtual works for the Kirchhoff–Love shell formulation. It reads as:

where A is given in (88), \(\varvec{p}\) denotes distributed loads per unit area applied on the mid-surface \(\Omega _{0}\), and \(\varvec{t}\) denotes axial forces per unit length applied on the edges \(\Gamma _{0}\) of the patch. The internal work is the sum of two contributions: the membrane part and the bending part. The membrane and bending strains are formulated using local coordinates, as done for the standard formulation in Sect. 4.2. More precisely, the covariant membrane components are:

Greek indices \((\alpha , \beta )\) take the values 1 or 2. The covariant bending components are:

In the expression of the virtual work (90), \(\varvec{n}\) and \(\varvec{m}\) denote the normal forces and the bending moments respectively. They are expressed as follows:

with:

The constitutive tensor \(\mathbf {C}_{0}\) includes the plane-stress condition through condensation of the material equations [5]. It reads as:

where \({\bar{\lambda }} = {2\lambda \mu }/{(\lambda +2\mu )}\).
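The condensed parameter \({\bar{\lambda }}\) can be checked against the classical plane-stress law. The sketch assumes that Eq. (96) has the same isotropic structure as the 3D tensor, with \(\lambda\) replaced by \({\bar{\lambda }}\) and the surface metric \(A^{\alpha \beta }\): \(C_{0}^{\alpha \beta \gamma \delta } = {\bar{\lambda }}A^{\alpha \beta }A^{\gamma \delta } + \mu (A^{\alpha \gamma }A^{\beta \delta } + A^{\alpha \delta }A^{\beta \gamma })\). For a Cartesian metric, \(C_{0}^{1111}\) should equal \(E/(1-\nu ^{2})\), \(C_{0}^{1122}\) should equal \(E\nu /(1-\nu ^{2})\), and \(C_{0}^{1212}\) should equal the shear modulus \(E/(2(1+\nu ))\), since \({\bar{\lambda }} = E\nu /(1-\nu ^{2})\). Function names are hypothetical.

```python
import numpy as np

def lame_constants(E, nu):
    """Lamé constants from Young's modulus E and Poisson's ratio nu."""
    lam = E * nu / ((1.0 + nu) * (1.0 - 2.0 * nu))
    mu = E / (2.0 * (1.0 + nu))
    return lam, mu

def plane_stress_tensor(A_con, lam, mu):
    """Assumed form: C0^{abgd} = lam_bar A^ab A^gd + mu (A^ag A^bd + A^ad A^bg)."""
    lam_bar = 2.0 * lam * mu / (lam + 2.0 * mu)   # condensed (plane-stress) parameter
    return (lam_bar * np.einsum("ab,gd->abgd", A_con, A_con)
            + mu * (np.einsum("ag,bd->abgd", A_con, A_con)
                    + np.einsum("ad,bg->abgd", A_con, A_con)))

E, nu = 210.0, 0.3
lam, mu = lame_constants(E, nu)
C0 = plane_stress_tensor(np.eye(2), lam, mu)      # Cartesian metric A^{ab} = delta
print(C0[0, 0, 0, 0], E / (1 - nu**2))            # both ≈ 230.77
print(C0[0, 0, 1, 1], E * nu / (1 - nu**2))       # both ≈ 69.23
print(C0[0, 1, 0, 1], E / (2 * (1 + nu)))         # both ≈ 80.77
```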

4.3.2 Isogeometric Kirchhoff–Love Element

The Kirchhoff–Love NURBS element is obtained by discretizing the mid-surface with a NURBS surface. This discretization is also used to approximate the mid-surface displacement field:

Then, the discretized membrane and bending strains take the following forms:

The expression of the membrane strain matrices \(\mathbf {B}_{k}^{m}\) and of the bending strain matrices \(\mathbf {B}_{k}^{b}\) can be inferred from Eqs. (92) and (93).

The stiffness matrix of the Kirchhoff–Love shell formulation can be built through 3-by-3 matrices of the form:

where k and l are indices of two control points related to element e, and the matrix \(\mathbf {H}_{0}\) reads as:

and where the components \(H_{0}^{\alpha \beta \gamma \delta }\) are given by Eq. (96). The integral is later computed using numerical integration. The load vector, which expresses the external virtual work (91) once the displacement field is discretized, reads as:

4.3.3 Differentiating IGA Kirchhoff–Love Operators

For the Kirchhoff–Love shell formulation, we follow the same logic as for the standard IGA formulation (see Sect. 4.2) and skip redundant calculation steps. In particular, the counterpart of Eq. (64) for the Kirchhoff–Love shell formulation is obtained by the same reasoning. One can show that the derivatives of the adjoint internal work w.r.t. the control point coordinates are given by:

Several terms can be identified: we need to compute the derivatives of the Jacobian, the derivatives of the (adjoint) strains (membrane and bending), and the derivatives of the stress resultants (membrane and bending).

Derivative of the Jacobian The derivatives of the Jacobian w.r.t. the control points were already given for a volume in Eq. (71). For a surface, they are given by:

One has to differentiate Eq. (88) to get this result.

Derivative of the Membrane Strains and Stresses The expressions of the membrane strains \(\varvec{e}_{\alpha \beta }\) and the membrane forces \(\varvec{n}^{\alpha \beta }\) of the Kirchhoff–Love shell are essentially similar to the strain and stress fields of the standard solid element (2D problem). We therefore give only the final results here, which can be recovered by following the steps presented for the solid formulation. For the derivatives of the membrane strains, we obtain:

where the adjoint solution is built using the bi-variate basis functions and takes the same form as Eq. (74).

The derivatives of the membrane forces are obtained using the constitutive Eq. (94). We have:

The derivatives of the material tensor \({\partial { C_{0}^{\alpha \beta \gamma \delta } }}/{\partial { \varvec{Q} }}\) can be computed similarly to what has been done for the 3D constitutive law (76).

Since the membrane part of the analytical sensitivities for the Kirchhoff–Love formulation is very similar to classical 2D problems (e.g. taking a thickness equal to one and assuming a plane-stress state), interested readers may start by implementing the analytical sensitivities in that context.

Derivative of the Bending Strains and Stresses The bending part of the sensitivity (104) requires additional developments, but the core idea remains the same. The chain rule is applied until we obtain an expression involving quantities whose derivatives w.r.t. the control points are known. To describe the derivatives, we split the expression of the bending strain (93) into three terms as follows:

where

Thus, the derivatives of the bending strains w.r.t. the control points are given by:

The derivatives \({\partial { {{\varvec{\kappa }}}_{\alpha \beta }^{1} }}/{\partial { \varvec{Q} }}\) can be written as follows:

where some circular shifts have been performed in the scalar triple products. Similarly, the other term \({\partial { {{\varvec{\kappa }}}_{\alpha \beta }^{2} }}/{\partial { \varvec{Q} }}\) is given by:

with:

We already know the expressions of the derivatives of the covariant basis vectors \({\partial { \varvec{A}_{\alpha } }}/{\partial { \varvec{Q} }}\) [see Eq. (70)] and of the derivatives of the Jacobian \({\partial { A }}/{\partial { \varvec{Q} }}\) [see Eq. (105)]. Nonetheless, some additional derivatives are involved in the differentiation of the bending strains. The derivatives of the inverse of the Jacobian read:

The derivatives of the director vector appear multiple times. After a few developments, one obtains the following formula:

Scalar products between these derivatives \({\partial { \varvec{A}_{3} }}/{\partial { \varvec{Q} }}\) and various vectors are involved. Let us give a general expression for this type of quantity:

where \({\varvec{v}}\) denotes any required vector. Lastly, let us give the following results:

We now have all the ingredients to compute the derivatives of the bending strains w.r.t. the control points of the analysis model. The resulting expression contains many terms, so it is worth identifying repeated terms in order to save computations in the implementation. For instance, several cross and dot products are better computed once at the beginning rather than for each control point \(\varvec{Q}_{a}\).
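The sketch below illustrates such an implementation at one quadrature point. It computes the mid-surface Jacobian \(A = |\varvec{A}_{1}\times \varvec{A}_{2}|\), the director \(\varvec{A}_{3}\) and their derivatives w.r.t. the active control points. The cross product \(\varvec{A}_{1}\times \varvec{A}_{2}\) is computed once and reused for every control point. The sketch relies on the identity \(\mathrm {d}(\varvec{a}/|\varvec{a}|) = (\mathrm {d}\varvec{a} - \varvec{A}_{3}(\varvec{A}_{3}\cdot \mathrm {d}\varvec{a}))/|\varvec{a}|\). This is our own derivation, not a transcription of the paper's equations, and the array layout and function name are hypothetical.

```python
import numpy as np

def director_and_gradient(Q, dN):
    """Mid-surface Jacobian A, director A_3 and their derivatives w.r.t. Q.

    Q  : (m, 3) active control points of the shell element
    dN : (m, 2) parametric derivatives N_{a,1}, N_{a,2}
    Returns A, A3 (3,), dA (m, 3) and dA3 (m, 3, 3) with dA3[a, c] = dA3/dQ_{ca}.
    """
    A1, A2 = Q.T @ dN[:, 0], Q.T @ dN[:, 1]       # covariant vectors A_alpha
    a = np.cross(A1, A2)                          # computed once, reused below
    A = np.linalg.norm(a)                         # Jacobian A = |A_1 x A_2|
    A3 = a / A                                    # unit normal (director)
    E = np.eye(3)
    # d(A_1 x A_2)/dQ_{ca} = N_{a,1} (E_c x A_2) + N_{a,2} (A_1 x E_c)
    da = (np.einsum("a,cj->acj", dN[:, 0], np.cross(E, A2))
          + np.einsum("a,cj->acj", dN[:, 1], np.cross(A1, E)))
    dA = da @ A3                                  # dA = A_3 . d(A_1 x A_2)
    dA3 = (da - dA[..., None] * A3) / A           # derivative of the normalized vector
    return A, A3, dA, dA3
```

The six cross products \(\varvec{E}_{c}\times \varvec{A}_{2}\) and \(\varvec{A}_{1}\times \varvec{E}_{c}\) are also shared by all control points. Only the scalar factors \(N_{a,1}\) and \(N_{a,2}\) change with a, which is the kind of saving mentioned above.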

Finally, the derivatives of the bending moments are computed by differentiating the constitutive Eq. (95):

At this point, we know how to compute all terms, i.e. the derivatives of the material tensor and the derivatives of the bending strains.

Implementation For the Kirchhoff–Love shell formulation, the partial derivatives of the adjoint work w.r.t. the control point coordinates are implemented in the same way as for the standard solid element. We therefore refer the interested reader to Algorithm 2 for a global view of the implementation.

5 Numerical Investigation

We now present the results obtained for the examples already mentioned in Sect. 2.2. The goal is to collect a wide range of benchmark results (spanning various structural analyses and objective functions) that could be of interest when developing new methods for isogeometric shape optimization. The focus is on the sensitivities. For each example, we display the gradients and give detailed values in tables. The gradients are represented by 3D fields of arrows. On the fine analysis models, we depict the quantities denoted \(\partial {\text {W}}/\partial \varvec{Q}\) throughout this document. On the design models, we plot the quantities \(\partial {\text {W}}/\partial \varvec{P}\), which are obtained after the first propagation step. These quantities do not depend on the shape parametrization: even with shape parametrizations different from those of this work, one could rely on the presented results. For several examples, we give in tables detailed values of the full sensitivities (which do depend on the shape parametrization). We verify the correctness of the presented analytical sensitivities by comparison with approximate ones, and we discuss their numerical efficiency. In this work, we use the SLSQP solver available in the NLopt library as the gradient-based algorithm to solve the optimization problems [44, 53].

5.1 Compliance as the Objective Function

Autoadjoint Problem Compliance is arguably the most common choice of objective in structural optimization. Minimizing the compliance makes the structure stiffer, in the sense that it deforms less. The compliance is a special case in which the adjoint solution can be inferred directly from the state solution. Indeed, the partial derivatives of the compliance (6) w.r.t. the design variables and the displacement DOF are respectively given by:

Thus, the adjoint problem (15) reads, in the case of the compliance, as:

For the three examples tackled in this section, the load vector does not depend on the design variables, so the terms involving the differentiation of the load vector can be omitted during the sensitivity analysis. Otherwise, the partial derivatives of the compliance w.r.t. the design variables would be computed first on the analysis model and then pulled back to the design level, as explained in Sect. 3 [see more specifically Eq. (36)]. Here, we omit them and only consider the derivatives of the adjoint internal work during the sensitivity analysis.

Plate with a Hole The problem of the plate with a hole has been tackled multiple times in papers dealing with isogeometric shape optimization, see for instance Fußeder et al. [32], Hassani et al. [36], Qian [75] and Wall et al. [87]. The plate is subjected to a bi-axial loading. Initially, the shape of the hole is a square. It is known that the optimal shape for this problem consists in a circular hole, see Wall et al. [87]. The settings for this problem are given in Fig. 2. Due to symmetry, only one quarter of the plate is considered. Plane strain state is assumed. The optimization model is built using a single NURBS surface with \(2\times {1}\) quadratic elements. The weights are set such that the circular hole can be exactly described as done in Qian [75]. We consider several refinement levels to define the analysis model.

More precisely, we first discretize the analysis model with \(8\times {8}\) quadratic elements. Figure 4 shows several shape updates. For each shape update, we depict the solution of the structural analysis and the gradients of the compliance at both the analysis and design levels. The algorithm requires about 10 iterations to recover the optimal shape. In Table 1, we compare the analytical sensitivities (AN) with approximate sensitivities for the initial geometry (i.e. the square hole). More specifically, Table 1 gives the sensitivities obtained by global Finite Differences (FD) and those obtained by the semi-analytical approximation (sAN), as done, for example, in Kiendl et al. [50]. The sAN sensitivity uses a finite difference scheme to approximate the derivatives of the element operators in Eq. (16). In Table 1, a forward scheme is used, and we vary the perturbation step to highlight its influence on the sensitivities. The first point we want to underline is the correctness of the presented analytical sensitivities. They recover the FD results, which are unlikely to suffer from implementation errors owing to the simplicity of the FD scheme. The differences appear only beyond a certain number of digits and come from the approximation scheme. Indeed, varying the perturbation step shows that only the first decimals of the approximate sensitivities are correct. This is especially true for the sAN sensitivities. The inaccuracy of the sAN scheme is well known, and strategies for obtaining exact semi-analytical sensitivities have been proposed for standard FEM [7, 12, 31, 71, 85, 88]. A central difference scheme can also be used to improve the accuracy of the approximate sensitivities. Finally, one can observe that the analytical sensitivities satisfy the following relations:

The exact sensitivity satisfies these equations because of the symmetry of the problem. The fact that we recover them demonstrates the good accuracy of the analytical sensitivity. This is not the case for the FD or sAN sensitivities (except FD with a perturbation step equal to \(10^{-4}\)).

Regarding the accuracy of the analytical sensitivity, we study in Fig. 5 and Table 2 the influence of the refinement level of the analysis model. These results show that the sensitivities are strongly affected by the refinement level. This is especially true for the initial configuration with the square hole, because of the singularities at the corners of the hole. The analysis model should therefore be chosen not only to ensure accurate results in the structural analysis but also to ensure accurate sensitivities.

Finally, it is interesting to point out that only the control points associated with the domain boundary seem to give non-zero values in the gradients (see Fig. 4). In fact, the interior control points lead to small values in \(\partial {\text {W}}/\partial \varvec{Q}\) and \(\partial {\text {W}}/\partial \varvec{P}\) compared with the boundary control points. The reason is that the interior control points do not modify the physical domain (from a continuum point of view). Ideally, therefore, their positions have no influence on the internal and external works (as long as no geometrical singularities such as overlaps are introduced). Because of numerical errors in the analysis, this is not exactly observed; it is well known that mesh distortion affects the quality of FEM-based simulations. However, during the sensitivity analysis, one may choose to compute only the terms coming from the boundary control points and to set all the terms associated with the interior control points to zero. Instead of computing \(\partial {\text {W}}/\partial \varvec{Q}\), one can compute the quantity \(\partial {\text {W}}/\partial \tilde{\varvec{Q}}\), whose components are given by:

This choice reduces the numerical cost of the sensitivity analysis. Instead of performing a loop over every element plus a loop over every active control point (as explained in Algorithm 2), we only need to integrate over the support of the basis functions associated with the boundary control points and to compute partial derivatives w.r.t. these control points only. In general, however, using \(\partial {\text {W}}/\partial \varvec{Q}\) or \(\partial {\text {W}}/\partial \tilde{\varvec{Q}}\) does not lead to exactly the same gradient (again because of discretization errors). Computing all the terms (i.e. using \(\partial {\text {W}}/\partial \varvec{Q}\)) gives the same gradient as Finite Differences, as already shown in Table 1. When only the boundary control points are considered during the sensitivity analysis, the result is slightly different.
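The bookkeeping behind \(\partial {\text {W}}/\partial \tilde{\varvec{Q}}\) can be sketched as follows. The sketch assumes that the analysis-level partial derivatives are stored with one row per analysis control point, that a boolean mask flags the boundary control points, and that the analysis control points depend linearly on the design control points, \(\varvec{Q} = \mathbf{R}\varvec{P}\), through a refinement matrix \(\mathbf{R}\) (true for knot insertion on B-Splines, and for NURBS with fixed weights through a weight-dependent matrix). Under that assumption the chain rule gives the design-level gradient as \(\mathbf{R}^T\) times the analysis-level one. The sketch only shows the zeroing of interior terms, not the reduced integration that actually saves time; `design_gradient` is a hypothetical helper.

```python
import numpy as np

def design_gradient(dW_dQ, boundary_mask, R=None):
    """Gradient w.r.t. the design control points, keeping boundary terms only.

    dW_dQ:         (n_analysis_cp, dim) partial derivatives at the analysis level
    boundary_mask: (n_analysis_cp,) bool, True for control points on the domain boundary
    R:             (n_analysis_cp, n_design_cp) refinement matrix with Q = R @ P (assumed)
    """
    dW_dQ_tilde = np.where(boundary_mask[:, None], dW_dQ, 0.0)  # interior rows set to zero
    if R is None:
        return dW_dQ_tilde
    return R.T @ dW_dQ_tilde                                   # chain rule: dW/dP = R^T dW/dQ

# Toy refinement: a linear B-Spline with 2 control points, knot 0.5 inserted -> 3 control points,
# Q0 = P0, Q1 = (P0 + P1) / 2, Q2 = P1.
R = np.array([[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]])
dW_dQ = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])        # made-up analysis-level values
mask = np.array([True, False, True])                           # middle control point is interior
print(design_gradient(dW_dQ, mask, R))
print(R.T @ dW_dQ)                                             # all control points kept
```

Comparing the two printed arrays shows, on this made-up data, how dropping interior terms changes the design-level gradient, which is the effect discussed for Table 3.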

Table 3 highlights this issue: we consider the two calculation methods for the initial configuration (square hole) and the optimal configuration (circular hole). The difference is significant, especially for the square hole, because the singularities in the solution are poorly captured by the chosen analysis model (\(8\times {}8\) quadratic elements); the discretization error is certainly large here. With a finer analysis model, the difference between using all the control points and using only the boundary control points becomes much smaller. With an analysis model of \(64\times {}64\) cubic elements, the sensitivities for the plate with a circular hole are identical up to the sixth decimal. Thus, both approaches lead to the same optimal shape. Finally, this remark on the influence of interior control points on the sensitivity analysis is closely related to the question of how to update the positions of these control points during the optimization. Depending on the mesh density of the design model, the interior control points need to be moved to prevent the appearance of geometrical singularities such as element overlaps. This issue is beyond the scope of this article, and the interested reader is referred to [63, 77]. Let us nevertheless mention that, for each example presented in this work, we were able to formulate shape parametrizations that automatically ensure a correct regularity of the geometries. Moreover, in what follows, we always consider all control points during the sensitivity analysis.

Square Shell Roof The optimization problem of the square shell roof is presented in Fig. 6. This example can be found in Hirschler et al. [38], and similar problems have been tackled by Bletzinger et al. [11] and Kegl and Brank [47], for example. The roof is fixed at its corners and subjected to a vertical load (given per unit area). The load does not change with the shape updates of the roof; the load vector can be built from the case of a square plate subjected to uniform pressure. The same idea can be found for the optimal arch problem in Kiendl et al. [50].

The results presented in Fig. 6 and Table 4 are obtained with a design model of \(4\times {}4\) quadratic B-Spline elements and an analysis model of \(32\times {}32\) quadratic B-Spline elements. The Kirchhoff–Love shell formulation is used to model the behavior of the roof. The shape parametrization moves the control points of the design model in the z-direction (see Fig. 6). The control points located at the corners of the roof are kept fixed, which leads to a total of 32 design variables. The roof problem has several symmetries. Consequently, the sensitivity has repeated terms: some design variables have an identical influence on the compliance. For the initial geometry given in Fig. 6, there are only 5 unique values among the 32 components of the sensitivity. These terms are given in Table 4, where we compare the presented analytical sensitivity with several approximate sensitivities. More specifically, forward and central finite difference schemes are used to obtain either total FD sensitivities or sAN sensitivities. The forward scheme leads to quite significant differences with respect to the analytical sensitivity: only the first two decimals are correct. With another perturbation step, the results can be somewhat improved. However, this highlights one difficulty of approximate sensitivities: how should the perturbation step be chosen? A common way to limit its influence is to scale the problem so that, for example, the design variables vary between 0 and 1. The objective functions and the constraints should also be scaled, for instance by their initial values. Even so, there is no guarantee that a given perturbation step will suit every problem. It can be even trickier: a given perturbation step can be suitable for the initial configuration but may lead to poor approximations of the sensitivities after some shape updates.
More significantly, the most adequate perturbation step (the one that leads to the lowest error) can differ from one design variable to another. This is not identifiable in practice, and usually a single perturbation step is chosen for all design variables and kept the same during the whole optimization. It may be worthwhile to perform several sensitivity analyses of the initial configuration with different perturbation steps in order to select an appropriate one. For all the examples tackled in this work, this preliminary procedure was sufficient to limit the influence of the perturbation step: the optimization process always converged toward the same optimal shape, in a similar number of iterations, whether approximate or analytical sensitivities were used. Finally, note that even though the analytical sensitivity removes the need for a perturbation step, the refinement level of the analysis model still has to be chosen when setting up the optimization problem (see the previous discussion of the plate with a hole). It can be interesting to adapt the refinement level during the optimization by using, for example, advanced tools such as error estimators [2, 4, 14].
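The preliminary procedure described above can be automated in several ways. The sketch below uses one common heuristic, which is an assumption and not necessarily the procedure used in this work: the design variables are scaled to [0, 1], forward-difference gradients are computed for steps spanning several decades, and the step retained is the one at which the gradient changes least when the step is reduced by one more decade. The objective `J_phys` and its bounds are hypothetical stand-ins for a structural response.

```python
import numpy as np

def fd_gradient(J, s, h):
    """Forward-difference gradient of J at the scaled design variables s."""
    J0 = J(s)
    g = np.empty_like(s)
    for k in range(s.size):
        s_pert = s.copy()
        s_pert[k] += h
        g[k] = (J(s_pert) - J0) / h
    return g

def pick_step(J, s, steps=10.0 ** -np.arange(1, 11)):
    """Heuristic: keep the step at which the FD gradient changes least when the step
    is reduced by one more decade (the plateau between truncation and round-off errors)."""
    grads = [fd_gradient(J, s, h) for h in steps]
    changes = [np.linalg.norm(grads[k + 1] - grads[k]) for k in range(len(steps) - 1)]
    k_best = int(np.argmin(changes)) + 1
    return steps[k_best], grads[k_best]

# Hypothetical objective in physical design variables p, with bounds used for scaling to [0, 1].
lower = np.array([0.0, -1.0])
upper = np.array([2.0, 1.0])
J_phys = lambda p: np.sum(np.exp(p))
J = lambda s: J_phys(lower + s * (upper - lower))    # objective in scaled variables

s0 = np.array([0.25, 0.5])                           # i.e. p0 = [0.5, 0.0]
h_best, g_best = pick_step(J, s0)
g_exact = np.exp(lower + s0 * (upper - lower)) * (upper - lower)   # chain rule through dp/ds
print(h_best, g_best, g_exact)
```

Large steps are dominated by truncation error and tiny steps by round-off, so the gradient is most stable in between; as noted in the text, a step chosen this way at the initial design may still degrade after shape updates.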

We also give in Table 4 the computational time of the different sensitivities, scaled by that of the analytical sensitivity. Unsurprisingly, the FD sensitivities take the longest to compute because, for each design variable, system (3) needs to be built and solved. With the central FD scheme, this is even done twice per design variable, which is why the central FD takes twice the computational time of the forward FD (see again Table 4). This computational time could certainly be reduced by storing redundant quantities and by using dedicated strategies such as structural reanalysis [22, 52]. However, this example has only 32 design variables; for more complex examples with thousands of design variables, FD sensitivities may simply be intractable [85]. Interestingly, the computational times of the sAN sensitivities are also much higher than that of the AN sensitivity. Indeed, a factor roughly equal to the number of design variables (i.e. 32 here) is obtained when the forward finite difference scheme is used in the approximate part of the sAN sensitivity. Again, the computational time is doubled for the central sAN in comparison with the forward sAN (see again Table 4). The fact that the ratio between the computational times of the sAN and AN sensitivities tends to the number of design variables can be understood theoretically from Algorithm 2. The main calculation step of the AN sensitivity is the computation of the partial derivatives of the total adjoint work w.r.t. the control point coordinates, \({\partial {\text {W}}}/{\partial {\varvec{Q}}}\), as previously explained. These terms take the form of an integral over the computational domain. In Algorithm 2, the steps that involve the most arithmetic operations are those from line 6 to line 10. The loop starting at line 12 essentially adds terms to an array in which every involved quantity has already been computed, so this loop is fast. Removing lines 12 to the end of Algorithm 2 gives the steps required to compute the total adjoint work (33). This means that computing the derivatives of the total adjoint work \({\partial {\text {W}}}/{\partial {\varvec{Q}}}\) takes a comparable amount of time to computing the total adjoint work itself. Now, during the sAN sensitivity analysis, one has to evaluate the total adjoint work as many times as there are design perturbations (i.e. as many times as the number of design variables with the forward scheme, and twice as many with the central scheme). Because this is the most time-consuming step of the overall sensitivity analysis, the computational time of sAN grows linearly with the number of design variables. Let us summarize what has been observed:

This observation leads us to conclude that the time saved by using AN instead of sAN sensitivities is of the order of the number of design variables:

Moreover, the computational time of the AN sensitivity is (quasi) independent of the number of design variables. This is a major benefit of this sensitivity analysis method, especially when a large number of design variables is involved.
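The timing argument above can be condensed into a rough cost model. It is a simplification that ignores the state and adjoint solves shared by both methods; \(t_{\text{W}}\) (the time for one integration of the total adjoint work), \(n_d\) (the number of design variables) and the constant \(\alpha\) are symbols introduced here for illustration only. The forward sAN needs one baseline evaluation of the total adjoint work plus one per design variable, the central sAN two per design variable, and the AN sensitivity a single integration pass of comparable cost:

\[
\begin{aligned}
t_{\text{AN}} &\approx \alpha\, t_{\text{W}}, \qquad \alpha = \mathcal{O}(1),\\
t_{\text{sAN}}^{\text{forward}} &\approx (n_d + 1)\, t_{\text{W}} \approx n_d\, t_{\text{W}},\\
t_{\text{sAN}}^{\text{central}} &\approx 2\, n_d\, t_{\text{W}},\\
\frac{t_{\text{sAN}}^{\text{forward}}}{t_{\text{AN}}} &\approx \frac{n_d}{\alpha}.
\end{aligned}
\]

With \(n_d = 32\) and \(\alpha\) close to one, this model recovers the factor of about 32 reported for the roof.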

Volumetric Beam The presented analytical sensitivity method can also be applied to 3D solid models. To highlight this point, we perform the shape optimization of a volumetric beam. The problem settings are described in Fig. 7. One end of the beam is fixed, and a uniform pressure is applied over its top surface. The goal is to modify the cross section in order to maximize the stiffness of the structure. The final volume should not exceed the initial volume. Additional geometrical constraints are set to prevent undesirable shapes, as described in Fig. 7. The design model is built using a B-Spline trivariate with 16 elements along the x-direction. The degree is quadratic in this direction and linear in the other two; thus, the design model has \(18\times {}2\times {}2\) control points. Only the 36 control points associated with the bottom surface (depicted in blue in Fig. 7) are movable, and two design variables are assigned to each of them, giving a total of 72 design variables. After refinement, the analysis model has \(32\times {}8\times {}8\) quadratic elements.
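The control-point and design-variable counts quoted for the roof and the beam follow from simple B-Spline bookkeeping. The sketch assumes open knot vectors with uniformly spaced, non-repeated interior knots, so that a direction with \(n\) elements of degree \(p\) has \(n + p\) control points. It also recovers the 5 distinct sensitivity values of the roof, assuming the roof problem is invariant under the eight symmetries of the square (rotations by multiples of 90° and reflections), so that control points in the same symmetry class share one sensitivity value. Function names are illustrative.

```python
def n_control_points(n_elements, degree):
    # Open knot vector with simple interior knots: n_elements + degree basis functions.
    return n_elements + degree

def symmetry_classes(n, exclude_corners=True):
    """Group the n x n control grid of a square patch by the 8 symmetries of the square."""
    last = n - 1
    maps = [
        lambda i, j: (i, j), lambda i, j: (j, last - i),                # identity, rotation by 90 deg
        lambda i, j: (last - i, last - j), lambda i, j: (last - j, i),  # rotations by 180 and 270 deg
        lambda i, j: (last - i, j), lambda i, j: (i, last - j),          # mirrors about the midlines
        lambda i, j: (j, i), lambda i, j: (last - j, last - i),          # mirrors about the diagonals
    ]
    corners = {(0, 0), (0, last), (last, 0), (last, last)}
    classes = {}
    for i in range(n):
        for j in range(n):
            if exclude_corners and (i, j) in corners:
                continue
            key = min(m(i, j) for m in maps)  # canonical representative of the symmetry class
            classes.setdefault(key, []).append((i, j))
    return classes

# Square shell roof: 4 x 4 quadratic elements, fixed corners, one z-variable per control point.
n_roof = n_control_points(4, 2)
roof_classes = symmetry_classes(n_roof)
roof_vars = sum(len(members) for members in roof_classes.values())

# Volumetric beam: 16 quadratic elements along x, one linear element in each transverse direction.
beam_grid = (n_control_points(16, 2), n_control_points(1, 1), n_control_points(1, 1))
bottom_points = beam_grid[0] * beam_grid[1]   # control points on the bottom face
beam_vars = 2 * bottom_points                 # two design variables per movable control point

print(n_roof, roof_vars, len(roof_classes))   # 6 32 5
print(beam_grid, bottom_points, beam_vars)    # (18, 2, 2) 36 72
```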

The results of the optimization are depicted in Fig. 7. The final geometry deforms much less, which leads, in this case, to a significant reduction of the maximal von Mises stress (by a factor of 25). We also give in Fig. 7 the sensitivities for several iterations of the resolution. Other 3D examples of isogeometric shape optimization can be found, for example, in Blanchard et al. [6], Hassani et al. [36], Li and Qian [57], Lian et al. [59], and Wang et al. [91]. To the authors’ knowledge, only Lian et al. [59] give quantitative results concerning the sensitivity analysis step. We hope that the presented beam example provides additional useful results that could help extend isogeometric shape optimization toward real-world applications.

5.2 Displacement as the Objective Function

In this section, we use the displacement field as the objective function. For example, it can be interesting to adapt the shape of a structure so that it deforms in a prescribed manner. In structural topology optimization, compliant mechanisms are a typical application designed with such response functions [81, 98].

Adjoint Problem In the simple case described by Eq. (7), the partial derivatives of the objective function w.r.t. the design variables and the DOF read respectively as:

Recalling the expression of the discrete displacement \(\mathbf {u}^{h}\) [see again Eq. (7)], we get the expression of the missing derivatives involved in the right-hand side (127) of the adjoint problem:
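To make the structure of this adjoint problem concrete, the sketch below uses a toy chain of three springs in series (an illustrative assumption, not the isogeometric model), with stiffness \(k_e = x_e^2\) and a unit load at the free end. It assumes the objective is a fixed linear combination of the DOF, \(J = \mathbf{c}^T \mathbf{u}\), for instance basis-function values at a fixed parametric point, so that \(\partial J/\partial \mathbf{u} = \mathbf{c}\) and the explicit derivative w.r.t. the design variables vanishes; whether this matches Eq. (7) exactly depends on how the evaluation point is defined. The adjoint vector then solves \(\mathbf{K}^T \boldsymbol{\lambda} = \mathbf{c}\), and each gradient component is \(-\boldsymbol{\lambda}^T (\partial \mathbf{K}/\partial x_e)\,\mathbf{u}\) when the load does not depend on the design. All names are hypothetical.

```python
import numpy as np

def assemble(k):
    """Free-DOF stiffness of springs in series; spring e joins node e to node e+1, node 0 clamped."""
    n = len(k)
    K = np.zeros((n, n))
    for e in range(n):
        K[e, e] += k[e]
        if e > 0:
            K[e - 1, e - 1] += k[e]
            K[e - 1, e] -= k[e]
            K[e, e - 1] -= k[e]
    return K

def objective(x, f, c):
    return c @ np.linalg.solve(assemble(x ** 2), f)

def displacement_gradient(x, f, c):
    """Adjoint gradient of J = c @ u subject to K(x) u = f, with the toy law K(x) = assemble(x**2)."""
    K = assemble(x ** 2)
    u = np.linalg.solve(K, f)                 # state problem
    lam = np.linalg.solve(K.T, c)             # adjoint problem: K^T lam = dJ/du = c
    g = np.empty_like(x)
    for e in range(len(x)):
        dk = np.zeros_like(x)
        dk[e] = 2.0 * x[e]                    # d(k_e)/d(x_e) for k_e = x_e**2
        g[e] = -lam @ (assemble(dk) @ u)      # no explicit term: c and f do not depend on x
    return g

x = np.array([1.0, 0.8, 1.2])
f = np.array([0.0, 0.0, 1.0])                 # unit load at the free end
c = np.array([0.0, 1.0, 0.0])                 # J = displacement of the middle free node
g = displacement_gradient(x, f, c)

# Check against central finite differences (two extra solves per design variable).
h = 1e-6
g_fd = np.array([(objective(x + h * d, f, c) - objective(x - h * d, f, c)) / (2 * h)
                 for d in np.eye(len(x))])
print(g, g_fd)
```

Only one extra linear solve (the adjoint problem) is needed regardless of the number of design variables; the last spring does not affect the middle-node displacement, so its gradient component is zero.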