Abstract

In this paper, we introduce a new algorithm of inertial form for solving monotone variational inequalities (VI) in real Hilbert spaces. Motivated by the subgradient extragradient method, we incorporate the inertial technique to accelerate the convergence of the proposed method. Under standard and mild assumption of monotonicity and Lipschitz continuity of the VI associated mapping, we establish the weak convergence of the scheme. Several numerical examples are presented to illustrate the performance of our method as well as comparing it with some related methods in the literature.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we are concerned with solving the classical Variational Inequality (VI) of Fichera [21, 22] and Stampacchia [38] (see also Kinderlehrer and Stampacchia [27]) in real Hilbert space. This problem stands at the core of many theoretical and applied areas, such as in transportation, economics, financial equilibrium problems, engineering mechanics and many more, see for example, [4, 5] and [6, 14, 15, 24].

Let \(C\subseteq {\mathcal {H}}\) be a nonempty, closed and convex subset of a real Hilbert space \({\mathcal {H}}\) and \({\mathcal {F}}:{\mathcal {H}}\rightarrow {\mathcal {H}}\) be a given mapping. The variational inequality with \({\mathcal {F}}\) and C, for short, VI(\({\mathcal {F}},C\)), is defined as

During the last decades, many iterative methods have been developed for solving (1.1), see for example the excellent book of Facchinei and Pang [19] and the many references therein. One of the earliest gradient-type methods for solving (1.1) is introduced by Korpelevich [28] and is known as the Extragradient Method, shortly, EM (see also [3]). Given the current iterate \(x_n\in C\), calculate the next iterate by the following:

where \(\lambda \) is some positive constant.

Despite the mild assumptions needed for the convergence of the extragradient method, which are monotonicity and Lipschitz continuity of \({\mathcal {F}}\), there is the need to evaluate \({\mathcal {F}}\) twice at \(x_n\) and \(y_n\) and compute two orthogonal projections onto the VI’s feasible set C per each iteration, that is \(P_C(\cdot )\). This of course could affect seriously the computational effort and applicability of the method in case that \({\mathcal {F}}\) and C have complex and/or general structures. One proposed extragradient method extension is the Subgradient Extragradient Method (shortly, SEM) [11,12,13]. In this method, a constructible set \(T^n\) is introduced per each iteration and then the second orthogonal projection onto C in (1.2) is replaced by this easily computable projection. Given the current iterate \(x_n\in {\mathcal {H}}\), calculate the next iterate by the following:

again \(\lambda \) is some positive constant.

Since \(T^n\) is a half-space in case that \(x_n-\lambda {\mathcal {F}}x_n\ne y_n\), the second projection in (1.3) is inherently explicit, see formula (2.2) below. Observe that similar to the extragradient method, the subgradient extragradient method still requires the evaluation of \({\mathcal {F}}\) twice per each iteration. To overcome this obstacle, but still with two metric projections per each iteration, Popov [37] introduced the modified extragradient method. Recently, Malitsky and Semenov [32] extended the modified extragradient method in [37] and proposed the Modified Subgradient Extragradient Method (shortly, MSEM). For our purposes, we present next which also uses projection onto \(T^n\) but as Popov, one evaluation of \({\mathcal {F}}\) per each iteration.

Choose \(x_0,~y_0\in C\), and set \( x_1=P_C(x_0-\lambda {\mathcal {F}}y_0),~ y_{1}=P_{C}(x_1-\lambda {\mathcal {F}}y_0)\). Given the iterates \(x_n,~y_n,~y_{n-1}\in {\mathcal {H}}\), calculate the next iterates by the following:

where \(\lambda \) is some positive constant.

Now, we wish to recall the inertial-type algorithm which originates from the heavy ball method of the second-order dynamical systems in time [1, 2, 36] and speed up the original algorithm without the inertial effects. In recent years, this technique has been studied intensively and applied successfully to many problems, see for example, [7, 8, 16,17,18, 29, 30, 33, 36]. In particular, Alvarez and Attouch [2] applied it to obtain an inertial proximal method for finding zeros of maximal monotone operators. The algorithm’s iterative rule can be formulated as follows. Choose two sequences \(\left\{ \theta _n\right\} \subset [0,1), \left\{ \lambda _n\right\} \subset (0,+\infty )\), and starting points \(x_{-1},~x_0 \in {\mathcal {H}}\). Given the iterates \(x_n,~x_{n-1}\in {\mathcal {H}}\), calculate the next iterate by the following:

Using the resolvent \(J_{\lambda _n}^{\mathcal {F}}\) of \({\mathcal {F}}\) with parameter \(\lambda _n>0\), (1.5) can be rewritten in the following compact form:

So, motivated and inspired by the above methods and results, we introduce a new inertial-type modified subgradient extragradient method for solving VIs in real Hilbert spaces. Under mild and standard assumptions, we present the weak convergence theorem. We also provide several numerical examples which illustrate the behavior of our algorithm and demonstrate its potential applicability compared with related results in the literature.

The outline of the paper is as follows. In Sect. 2, we present definitions and notions that are needed for the rest of the paper. In Sect. 3, the new algorithm is presented and analyzed. Finally, in Sect. 4, several numerical examples are presented.

2 Preliminaries

Let \({\mathcal {H}}\) denote a real Hilbert space with the inner product \(\langle \cdot ,\cdot \rangle \) and the induced norm \(\Vert \cdot \Vert \). Let C be a nonempty, closed and convex subset of \({\mathcal {H}}\). Recall that the metric projection operator \(P_C:{\mathcal {H}}\rightarrow C\) is defined, for each \(x\in {\mathcal {H}}\), by

Since C is nonempty, closed and convex set, \(P_C(x)\) exists and is unique. A useful and important case in which the orthogonal projection has a closed formula is the following. Given \(x\in {\mathcal {H}}\) and \(v\in {\mathcal {H}}\), \(v\ne 0\) and let \(T=\left\{ z\in {\mathcal {H}}: \left\langle v, z-x\right\rangle \le 0\right\} \) be a half-space. Then, for all \(u\in {\mathcal {H}}\), the projection of u onto the half-space T, denoted by \(P_T(u)\), is defined by

From the definition of \(P_C\) (2.1), it is easy to show that \(P_C\) has the following characteristic properties, see for example [23] for more details.

Lemma 2.1

-

(i)

\(\left\langle P_C(x)-P_C(y),x-y \right\rangle \ge \left\| P_C (x)-P_C (y)\right\| ^2,~\forall x,y\in {\mathcal {H}}.\)

-

(ii)

\(\left\| x-P_C (y)\right\| ^2+\left\| P_C (y)-y\right\| ^2\le \left\| x-y\right\| ^2, \forall x\in C, y\in {\mathcal {H}}.\)

-

(iii)

\(z=P_C (x) \Leftrightarrow \left\langle x-z,y-z \right\rangle \le 0,\quad \forall y\in C.\)

Lemma 2.2

For all \(x,y\in {\mathcal {H}}\) and constant \(\alpha \in {\mathbb {R}}\), the following equality holds:

Next, we present the monotone and Lipschitz continuous concepts of a mapping \({\mathcal {F}}:{\mathcal {H}} \rightarrow {\mathcal {H}}\).

- (i):

The mapping \({\mathcal {F}}\) is called monotone on C if

$$\begin{aligned} \left\langle {\mathcal {F}}x-{\mathcal {F}}y, x-y\right\rangle \ge 0, \quad \forall x,y\in C; \end{aligned}$$- (ii):

The mapping \({\mathcal {F}}\) is called L - Lipschitz continuous on C if there exists \(L>0\) such that

$$\begin{aligned} ||{\mathcal {F}}x-{\mathcal {F}}y||\le L||x-y||,~\forall x,y\in C. \end{aligned}$$

3 The algorithm

In this section, we introduce our new algorithm which is constructed around the projection method and the inertial computational technique.

Observe that the main computational effort of Algorithm 3.1 is one mapping’s evaluation, \({\mathcal {F}}y_n\) and one orthogonal projection onto C to calculate \(y_{n+1}\), per each iteration. This of course suggests that the complexity of Algorithm 3.1 is close to the classical gradient method. The term \(\theta (x_{n+1}-x_n)\) is called the inertial effect. Throughout this section, we suppose that the algorithm stopping rule does not meet and hence the algorithm generates infinite sequences.

To study the asymptotic behavior of Algorithm 3.1, we assume the following conditions:

Condition 3.2

The mapping \({\mathcal {F}}\) is monotone on C.

Condition 3.3

The mapping \({\mathcal {F}}\) is L-Lipschitz continuous on C.

Condition 3.4

The solution set of the VI (1.1) is nonempty.

We also consider the following assumptions:

Condition 3.5

\(\delta :=1-\lambda L(3+2\theta )>0\).

Condition 3.6

\(\delta (1+\theta ^2)-2\theta (2+\theta )>0\).

Observe that \(\lambda \) and \(\theta \) can always be chosen such that Conditions 3.5 and 3.6 are satisfied, for example

3.1 Convergence

To start our analysis, for each \(p\in VI({\mathcal {F}},C)\), sequences \(\{x_n\}\), \(\{y_n\}\) and \(\{w_n\}\) generated by Algorithm 3.1 and constants \(\lambda ,L\) as above, we define the sequence

and prove the following useful result:

Lemma 3.7

For each \(p\in VI({\mathcal {F}},C)\) and \(n\ge 0\), the following inequality holds:

where \(\Xi =\theta (1+\theta )+\frac{\delta }{2}\theta (1-\theta )\) and \(\Gamma =\frac{\delta }{2}(1-\theta )-2\lambda L \theta (1+\theta )\).

Proof

Since \(x_{n+1}=P_{T^n}(w_n-\lambda {\mathcal {F}}y_n)\), by the projection characteristic, Lemma 2.1(iii), we obtain

Since \(C\subset T^n\), we choose \(x=p\) and obtain \(2\left\langle p-x_{n+1}, w_n-x_{n+1}\right\rangle \le 2\lambda \left\langle p-x_{n+1},{\mathcal {F}}y_n\right\rangle .\) Using the equality \(2\left\langle a,b\right\rangle =||a||^2+||b||^2-||a-b||^2\), we get

From the definition of \(T^n\) and the fact \(x_{n+1}\in T^n\), we see that

which yields the inequality \(2\left\langle w_n-y_{n},x_{n+1}-y_n\right\rangle \le 2\lambda \left\langle {\mathcal {F}}y_{n-1},x_{n+1}-y_n\right\rangle \). Thus, by applying the above equality for \(2\left\langle a,b\right\rangle \), one obtains

Adding up both right hand sides of (3.2) and (3.3), we get

where the last inequality follows from the fact \(p\in VI({\mathcal {F}},C)\) and the monotonicity of \({\mathcal {F}}\).

On the other hand, using the L-Lipschitz continuity of \({\mathcal {F}}\) and the Cauchy–Schwarz inequality, we get

which together with the inequality \(||y_n-y_{n-1}||^2\le 2||y_n-w_n||^2+2||w_n-y_{n-1}||^2\) implies that

Combining the relations (3.4) and (3.7), we obtain

Adding the term \(2\lambda L||y_n-w_{n+1}||^2\) to both sides of (3.8), we get that

Since \(w_n=x_n+\theta (x_n-x_{n-1})=(1+\theta )x_n-\theta x_{n-1}\) and using Lemma 2.2, we obtain

Similarly, from \(w_{n+1}=x_{n+1}+\theta (x_{n+1}-x_n)\), we also get

Combining (3.9)–(3.11), we obtain

Thus

Note that \( 1-2\lambda L> 1-\lambda L(3+2\theta ) =: \delta >0. \) Thus, from (3.12) and the definition of \(\Omega _n(p)\) in (3.1), one obtains

Finally, we have the following estimation:

Thus, from (3.13) and the definitions of \(\Gamma \) and \(\Xi \) in Lemma 3.7, the desired result is obtained and the proof is complete. \(\square \)

Now, we are ready to prove the convergence theorem of Algorithm 3.1.

Theorem 3.8

Assume that Conditions 3.2–3.4 and 3.5–3.6 hold. Then, any sequences \(\left\{ x_n\right\} \), \(\left\{ y_n\right\} \) and \(\left\{ w_n\right\} \) generated by Algorithm 3.1 converge weakly to some point p which solves the VI (1.1).

Proof

For each \(n\ge 0\) and solution p of \(VI({\mathcal {F}},C)\), define \(\Phi _n(p)\) by

Now, we divide the proof of Theorem 3.8 into three steps (claims). \(\square \)

Claim 1

The limit of \(\left\{ \Phi _n(p)\right\} \) exists and \(||x_{n+1}-x_n||\rightarrow 0\) as \(n\rightarrow \infty \).

Indeed, from the definition of \(\Omega _n(p)\) and \(\Phi _n(p)\) we see that \(\Phi _n(p)=\Omega _n(p)+\Xi ||x_n-x_{n-1}||^2\). Thus, from Lemma 3.7, we obtain

Note that \( 1-2\lambda L (1+\theta )=1-\lambda L (2+2\theta )> 1-\lambda L(3+2\theta ) =: \delta >0, \) which implies that \(2\lambda L (1+\theta )<1-\delta \). Thus, from the definition of \(\Gamma \) and \(\Xi \) in Lemma 3.7, we obtain

It follows from (3.15) and (3.16) that the sequence \(\left\{ \Phi _n(p)\right\} \) is nonincreasing. Moreover

So we have that \(\Xi =\theta (1+\theta )+\frac{\delta }{2}\theta (1-\theta )=\theta \left( 1+\frac{2\theta +\delta (1-\theta )}{2}\right) \). Thus, by denoting by \(k:=\frac{2}{2\theta +\delta (1-\theta )}>0\), we get that

On the other hand, from the Cauchy–Schwarz and the Cauchy inequality, we obtain

Now

Combining (3.17) and (3.19), and then using (3.18), we get that

Observing that

and \( \left[ \delta (1-\theta )^2-2\theta ^2\right] -\left[ \delta (1+\theta ^2)-2\theta (2+\theta )\right] = 2\theta ^2+2\theta (2-\delta )\ge 0\) and thus also

where the last inequality follows from Condition 3.6. From (3.21), (3.22) and the fact \(0<\delta <1\), we get that \(1-\theta (1+k)>0\), showing that together with (3.20), \(\Phi _n(p)\ge 0\) for all \(n\ge 0\). Since \(\left\{ \Phi _n(p)\right\} \) is nonincreasing, the limit of \(\left\{ \Phi _n(p)\right\} \) exists. Now, passing to the limit in (3.15) as \(n\rightarrow \infty \) and noting that \(\Gamma -\Xi >0\), we obtain \(||x_{n+1}-x_n||\rightarrow 0\).

Claim 2

\(\lim _{n\rightarrow \infty }||w_n-y_{n}||=\lim _{n\rightarrow \infty }||x_n-y_n||=\lim _{n\rightarrow \infty }||y_n-y_{n+1}||=\lim _{n\rightarrow \infty }||w_n-y_{n-1}||=0\), and the sequences \(\left\{ x_n\right\} \), \(\left\{ y_n\right\} \), \(\left\{ w_n\right\} \) are bounded.

Indeed, from the definition of \(\Omega _n(p)\) and \(\Phi _n(p)\), we see that \(\Omega _n(x^*)=\Phi _n(x^*)-\Xi ||x_n-x_{n-1}||^2\). This together with Claim 1 implies that the limit of \(\left\{ \Omega _n(x^*)\right\} \) exists. Thus, from (3.13) and Claim 1, we get that

meaning that \(\lim _{n\rightarrow \infty }||w_n-y_{n}||=\lim _{n\rightarrow \infty }||y_n-x_{n+1}||=0\) since \(\delta >0\). Hence, from \(||x_n-x_{n+1}||\rightarrow 0\) and the inequality \( ||x_{n}-y_{n}||\le ||x_n-x_{n+1}||+||x_{n+1}-y_n||, \) we also obtain \(\lim _{n\rightarrow \infty }||x_n-y_{n}||=0\). Moreover, since

we have \(\lim _{n\rightarrow \infty }||y_n-y_{n+1}||=0\). Since \(||w_n-y_{n-1}||\le ||w_n-y_n||+||y_n-y_{n-1}||\rightarrow 0\), we also obtain that \(\lim _{n\rightarrow \infty }||w_n-y_{n-1}||=0\). By (3.20), Claim 1 and the fact \(1-\theta (1+k)>0\), we see that the sequence \(\left\{ x_n\right\} \) is bounded. Thus, the boundedness of \(\left\{ y_n\right\} \) and \(\left\{ w_n\right\} \) follows immediately from the limits in Claim 2.

Claim 3

The sequences \(\left\{ x_n\right\} \), \(\left\{ y_n\right\} \), \(\left\{ w_n\right\} \) converge weakly to the same point, which solves \(VI({\mathcal {F}},C)\).

Indeed, we first prove that every weak cluster point of \(\left\{ x_n\right\} \) solves \(VI({\mathcal {F}},C)\). From the definition of \(y_{n+1}\), we have

for all \(y\in C\). Thus, it follows from the monotonicity of \({\mathcal {F}}\) that

Now, assume that \(x^\dagger \) is a weak cluster point of \(\left\{ x_n\right\} \), i.e., there exists a subsequence \(\left\{ x_m\right\} \) of \(\left\{ x_n\right\} \) converging weakly to \(x^\dagger \). Since \(||x_m-y_m||\rightarrow 0\), \(y_m\rightarrow x^\dagger \). Since C is a convex and closed subset of \({\mathcal {H}}\), it is weakly closed and hence \(x^\dagger \in C\) since \(\left\{ y_m\right\} \subset C\). Now, passing to the limit as \(n=m\rightarrow \infty \) in (3.23), and using Claim 2, we get that \(\left\langle {\mathcal {F}}y, y-x^\dagger \right\rangle \ge 0\) for all \(y\in C\). This implies that \(x^\dagger \) solves \(VI({\mathcal {F}},C)\).

Finally, to complete the proof of Theorem 3.8, we show that the all sequence \(\left\{ x_n\right\} \) converges weakly to \(x^\dagger \). Indeed, assume that there exists a different subsequence \(\left\{ x_k\right\} \) of \(\left\{ x_n\right\} \) converging weakly to \({\bar{x}}\), i.e., \(x_k\rightharpoonup {\bar{x}}\), and \({\bar{x}}\ne x^\dagger \). Using similar arguments as above, we get that \({\bar{x}}\) also solves \(VI({\mathcal {F}},C)\).

Then

Multiplying (3.25) by \(\theta \), and then subtracting its both sides by (3.24), we obtain

where \(\Delta _n(p)\) is defined as \(\Delta _n(p)=||x_n-p||^2-\theta ||x_{n-1}-p||^2\) for each \(n\ge 0\) and p solves \(VI({\mathcal {F}},C)\). By Claim 2, that is, \(||w_n-y_{n-1}||\rightarrow 0\) and the fact \(\Delta _n(p)=\Omega _n(p)-2\lambda L ||y_{n-1}-w_n||^2\), we see that the limit of \(\left\{ \Delta _n(p)\right\} \) exists for each \(p\in VI({\mathcal {F}},C)\). Thus, from relation (3.26) and noting that both points \(x^\dagger \) and \({\bar{x}}\) are in \(VI({\mathcal {F}},C)\), we see that the limit of the sequence \(\left\{ \left\langle x_n-\theta x_{n-1},{\bar{x}}-x^\dagger \right\rangle \right\} \) exists and, hence, we denote it by l, i.e.,

Now, passing to the limit as \(n=m=k\rightarrow \infty \) in (3.27), we obtain

Thus, either \((1-\theta )||{\bar{x}}-x^\dagger ||^2=0\) or \(x^\dagger ={\bar{x}}\), since \(\theta \in [0,1)\). This implies that the all sequence \(\left\{ x_n\right\} \) converges weakly to \(x^\dagger \). From Claim 2, it is easy to see that the sequences \(\left\{ y_n\right\} \) and \(\left\{ w_n\right\} \) also converge weakly to \(x^\dagger \) and the proof of Theorem 3.8 is complete.

4 Computational experiments

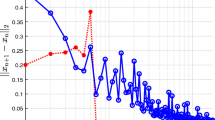

In this section, we present several numerical experiments which illustrate the behavior of Algorithm 3.1 (shortly, IMSEM) with inertial effects. The performances are also compared with four related algorithms, namely the Modified Subgradient Extragradient Method (MSEM) (Algorithm 3.1 for \(\theta =0\), also see [32]), the Subgradient Extragradient Method (SEM) in [11,12,13], the Extragradient Method (EM) in [28], and the Projected Reflected Gradient Method (PRGM) in [31].

Due to the fixed-point characteristic of the solutions of VIs, that is, \(x=P_C(x-{\mathcal {F}}x)\) for each \(x \in VI({\mathcal {F}},C)\), we use the function \(D(x)=||x-P_C(x-{\mathcal {F}}x)||^2\) to illustrate the computational performance of the aforementioned algorithms. The convergence of \(\left\{ D(x_n)\right\} \) to 0 as \(n\rightarrow \infty \) implies that the sequence \(\left\{ x_n\right\} \) converges to the solution of the problem. We describe the behavior of \(D(x_n)\) generated by each algorithm when the execution time elapses in seconds and the number of projections on C is performed. The starting points are \(x_{-1}=x_0=y_0=(1,1,\ldots ,1)\in {\mathbb {R}}^m\). We take the stepsize \(\lambda =\frac{1}{5L}\) for IMSEM and also choose the best stepsize (possibly) for each algorithm used to compare, namely \(\lambda =\frac{1}{3.01L}\) for MSEM, \(\lambda =\frac{1}{1.01L}\) for EM and SEM, \(\lambda =\frac{0.4}{L}\) for PRGM. The feasible set is a polyhedral convex set, given by \( C=\left\{ x\in {\mathbb {R}}^m_+: Ex\le f\right\} \) where E is a random matrix of size \(l\times m\) (\(l=10,~m=150\) or \(m=200\)) with entries in \((-2,2)\) and \(f\in {\mathbb {R}}^l_{+}\). All the programs are written on 7.0 Matlab and computed on a PC Desktop Intel(R) Core(TM) i5-3210M CPU @ 2.50 GHz, RAM 2.00 GB.

Example 1

This example considers a linear VI, [25]. Let \({\mathcal {F}}:{\mathbb {R}}^m\rightarrow {\mathbb {R}}^m\) which is defined by \({\mathcal {F}}(x)=Mx+q\), where

q is a vector in \({\mathbb {R}}^{m}\), N is a \(m\times m\) matrix, S is a \(m\times m\) skew-symmetric matrix with entries being generated in \((-2,2)\) and D is a \(m\times m\) diagonal matrix, whose diagonal entries are positive in (0, 2). The Lipschitz constant of \({\mathcal {F}}\) is \(L=||M||\). The numerical results for this example are shown in Figs. 1 and 2.

Example 2

Now, we consider a nonlinear VI with \({\mathcal {F}}:{\mathbb {R}}^m\rightarrow {\mathbb {R}}^m\) which is defined as \({\mathcal {F}}(x)=Mx+F(x)+q\), with M a \(m\times m\) symmetric and positive semidefinite matrix, q is a vector in \({\mathbb {R}}^{m}\) and F(x) is the proximal mapping of the function \(g(x)=\frac{1}{4}||x||^4\), i.e.,

Now, we prove that the proximal mapping F(x) is monotone. Indeed, take two points \(x_1,~x_2\in {\mathbb {R}}^m\) and set \(y_1=F(x_1),~y_2=F(x_2)\). From the definition of F, we have \(||y_1||^2y_1+y_1-x_1=0,~||y_2||^2y_2+y_2-x_2=0\) or

Thus

This together with the definition of \({\mathcal {F}}\) implies that

Hence, the operator \({\mathcal {F}}\) is monotone. Moreover, \({\mathcal {F}}\) is Lipschitz continuous with the constant \(L=||M||+1\). For experiment, all the entries of M and q are also generated randomly as in the previous example. The numerical results are shown in Figs. 3 and 4.

Example 3

Finally, we consider the VI with \({\mathcal {F}}:{\mathbb {R}}^{m}\rightarrow {\mathbb {R}}^{m}\) (where \(m=2k\)) defined by

Now, we prove that \({\mathcal {F}}\) is monotone. Indeed, for all \(x,~y\in {\mathbb {R}}^{m}\) (with \(m=2k\)), we have

where the last inequality follows from the fact that \(\left| \sin a -\sin b\right| \le |a-b|\) for all \(a,~b\in {\mathbb {R}}\). Thus, \({\mathcal {F}}\) is monotone. On the other hand, for all \(x,~y\in {\mathbb {R}}^m\), we have

This implies that \({\mathcal {F}}\) is Lipschitz continuous with \(L=\sqrt{10}\). The behaviors of the sequences \(D(x_n)\) generated by all the algorithms are described in Figs. 5 and 6.

Remark 4.1

The techniques used in the convergence proof in this paper depend strictly on Conditions 3.5 and 3.6. Thanks to the referee’s comments, we observe that due to Condition 3.5, our Algorithm 3.1 has a disadvantage over algorithms EGM and SEGM since the interval for possible stepsize \(\lambda \) is smaller. While this might be an issue for small-sized problems, for huge problems, the evaluation of \({\mathcal {F}}\) is much more expansive since it could require more computational resources. This could suggest that the EGM and SEGM, even when using the optimal stepsize, require more computational efforts due to the extra evaluation of the associated operator per each iteration (also, see [31, Sect. 5]).

Condition 3.6 is also restrictive for the inertial parameter \(\theta \) (a small interval of \(\theta \)). We assumed this condition since it was needed for the algorithm’s analysis which is completely new technique. In our numerical experiments, we have tried inertial parameters outside their theoretical bounds to see their limitations. The experimental results illustrate the computational advantages and complexity of Algorithm 3.1 with these inertial effects over other related algorithms. This suggests to study further and provide a more general proof with these choices of parameters and hence weaken Condition 3.6. Due to the importance and interest of this matter and to provide a general result as possible with no pressure, we plan to study this in a forthcoming work.

In the aforementioned experiments, the parameters are chosen outside their theoretical bounds. Now, we perform some experiments to show the behavior of the proposed algorithm (IMSEGM) where the stepsize \(\lambda \) and the inertial parameter \(\theta \) satisfy Conditions 3.5 and 3.6. In fact, Conditions 3.5 and 3.6 can be rewritten as follows:

Figure 7 describes the applicable area (black) of \(\lambda \) and \(\theta \) where Conditions 3.5 and 3.6 hold. The stepsize \(\lambda \) here depends strictly on the inertial parameter \(\theta \). For the experiment, we choose \(\lambda =0.9f(\theta )/L\) and \(\theta \in \left\{ 0.05,~0.10,~0.15,~0.20,~0.23\right\} \). The numerical results are shown in Figs. 8, 9, and 10. It was seen in the previous experiments, with a fixed stepsize \(\lambda \), the better the IMSEM, the larger the inertial parameter \(\theta \). Here, when \(\theta \) increases, then \(\lambda \) is small. This affects the numerical performance of IMSEM as shown in Figs. 8, 9, and 10. This remark suggests to study a forthcoming work where the theoretical bounds of \(\lambda \) and \(\theta \) can be extended and they do not depend on each other. Moreover, other numerical results on other test problems should be performed to check the better convergence of the proposed algorithm over existing methods.

5 Conclusions

This article presents a new inertial-type subgradient extragradient method for solving monotone and Lipschitz continuous variational inequalities (VI) in real Hilbert spaces. The algorithm requires only one orthogonal projection onto the feasible set of the VI as well as one mapping evaluation per each iteration. These computational properties make it attractable and comparable with the classical gradient method. Moreover, several numerical experiments suggest the advantages of the new proposed methods compared with recent related results in the literature.

Our results can be extended in many promising directions, such as multi-valued variational inequality [20], VIs combined with fixed point problems [10], system of VIs and mixed equilibrium problem [26], general VIs [35], strong convergence in Hilbert spaces as well as extensions to Banach spaces [9, 34] and this is surely our future goals.

References

Alvarez, F.: Weak convergence of a relaxed and inertial hybrid projection-proximal point algorithm for maximal monotone operators in Hilbert space. SIAM J. Optim. 14, 773–782 (2004)

Alvarez, F., Attouch, H.: An inertial proximal method for maximal monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 9, 3–11 (2001)

Antipin, A.S.: On a method for convex programs using a symmetrical modification of the Lagrange function. Ekonomika i Mat. Metody. 12, 1164–1173 (1976)

Aubin, J.P., Ekeland, I.: Applied Nonlinear Analysis. Wiley, New York (1984)

Baiocchi, C., Capelo, A.: Variational and Quasivariational Inequalities. Applications to Free Boundary Problems. Wiley, New York (1984)

Blum, E., Oettli, W.: From optimization and variational inequalities to equilibrium problems. Math. Program. 63, 123–145 (1994)

Bot, R.I., Csetnek, E.R., Hendrich, C.: Inertial Douglas–Rachford splitting for monotone inclusion problems. Appl. Math. Comput. 256, 472–487 (2015)

Bot, R.I., Csetnek, E.R., Laszlo, S.C.: An inertial forward–backward algorithm for the minimization of the sum of two nonconvex functions. EURO J. Comput. Optim. 4, 3–25 (2016)

Buong, N.: Strong convergence theorem of an iterative method for variational inequalities and fixed point problems in Hilbert spaces. Appl. Math. Comput. 217, 322–329 (2010)

Ceng, L.-C., Yao, J.-C.: An extragradient-like approximation method for variational inequality problems and fixed point problems. Appl. Math. Comput. 190, 205–215 (2007)

Censor, Y., Gibali, A., Reich, S.: The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 148, 318–335 (2011)

Censor, Y., Gibali, A., Reich, S.: Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Methods Softw. 26, 827–845 (2011)

Censor, Y., Gibali, A., Reich, S.: Extensions of Korpelevich’s extragradient method for the variational inequality problem in Euclidean space. Optim. 61, 1119–1132 (2012)

Dafermos, S.C.: Traffic equilibria and variational inequalities. Transp. Sci. 14, 42–54 (1980)

Dafermos, S.C., McKelvey, S.C.: Partitionable variational inequalities with applications to network and economic equilibria. J. Optim. Theory Appl. 73, 243–268 (1992)

Dong, Q.L., Lu, Y.Y., Yang, J.: The extragradient algorithm with inertial effects for solving the variational inequality. Optim. 65, 2217–2226 (2016)

Dong, Q.L., Gibali, A., Jiang, D., Tang, Y.: Bounded perturbation resilience of extragradient-type methods and their applications. J. Inequal. Appl. (2017). https://doi.org/10.1186/s13660-017-1555-0

Dong, Q.L., Gibali, A., Jiang, D., Ke, S.H.: Convergence of projection and contraction algorithms with outer perturbations and their applications to sparse signals recovery. J. Fixed Point Theory Appl. (2018). https://doi.org/10.1007/s11784-018-0501-1

Facchinei, F., Pang, J.S.: Finite-Dimensional Variational Inequalities and Complementarity Problems. Springer, Berlin (2003)

Fang, C., Chen, S.: A subgradient extragradient algorithm for solving multi-valued variational inequality. Appl. Math. Comput. 229, 123–130 (2014)

Fichera, G.: Sul problema elastostatico di Signorini con ambigue condizioni al contorno. Atti Accad. Naz. Lincei, VIII. Ser., Rend., Cl. Sci. Fis. Mat. Nat. 34, 138–142 (1963)

Fichera, G.: Problemi elastostatici con vincoli unilaterali: il problema di Signorini con ambigue condizioni al contorno. Atti Accad. Naz. Lincei, Mem., Cl. Sci. Fis. Mat. Nat., Sez. I, VIII. Ser. 7, 91–140 (1964)

Goebel, K., Reich, S.: Uniform Convexity, Hyperbolic Geometry, and Nonexpansive Mappings. Marcel Dekker, New York and Basel (1984)

Hartman, P., Stampacchia, G.: On some non-linear elliptic diferential-functional equations. Acta Math. 115, 271–310 (1966)

Hieu, D.V., Anh, P.K., Muu, L.D.: Modified hybrid projection methods for finding common solutions to variational inequality problems. Comput. Optim. Appl. 66, 75–96 (2017)

Kazmi, K.R., Rizvi, S.H.: A hybrid extragradient method for approximating the common solutions of a variational inequality, a system of variational inequalities, a mixed equilibrium problem and a fixed point problem. Appl. Math. Comput. 218, 5439–5452 (2012)

Kinderlehrer, D., Stampacchia, G.: An Introduction to Variational Inequalities and Their Applications. Academic Press, New York (1980)

Korpelevich, G.M.: The extragradient method for finding saddle points and other problems. Ekonomika i Mat. Metody 12, 747–756 (1976)

Maingé, P.E.: Inertial iterative process for fixed points of certain quasi-nonexpansive mappings. Set Valued Anal. 15, 67–79 (2007)

Maingé, P.E.: Convergence theorems for inertial KM-type algorithms. J. Comput. Appl. Math. 219, 223–236 (2008)

Malitsky, Y.V.: Projected reflected gradient methods for monotone variational inequalities. SIAM J. Optim. 25, 502–520 (2015)

Malitsky, Y.V., Semenov, V.V.: An extragradient algorithm for monotone variational inequalities. Cybern. Syst. Anal. 50, 271–277 (2014)

Moudafi, A.: Second-order differential proximal methods for equilibrium problems. J. Inequal. Pure Appl. Math. 4, 1–7 (2003)

Nakajo, K.: Strong convergence for gradient projection method and relatively nonexpansive mappings in Banach spaces. Appl. Math. Comput. 271, 251–258 (2015)

Noor, M.A.: Some developments in general variational inequalities. Appl. Math. Comput. 152, 199–277 (2004)

Polyak, B.T.: Some methods of speeding up the convergence of iterative methods. Zh.Vychisl. Mat. Mat. Fiz. 4, 1–17 (1964)

Popov, L.D.: A modification of the Arrow-Hurwicz method for searching for saddle points. Mat. Zametki 28, 777–784 (1980)

Stampacchia, G.: Formes bilineaires coercitives sur les ensembles convexes. C. R. Acad. Sci. Paris 258, 4413–4416 (1964)

Acknowledgements

The authors would like to thank the associate editor and two anonymous referees for their valuable comments and suggestions which helped us very much in improving the original version of this paper. This work was supported by Vietnam National Foundation for Science and Technology Development (NAFOSTED) under the project 101.01-2017.315.

Author information

Authors and Affiliations

Corresponding authors

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Gibali, A., Hieu, D.V. A new inertial double-projection method for solving variational inequalities. J. Fixed Point Theory Appl. 21, 97 (2019). https://doi.org/10.1007/s11784-019-0726-7

Published:

DOI: https://doi.org/10.1007/s11784-019-0726-7

Keywords

- Subgradient extragradient method

- inertial effect

- variational inequality

- monotone operator

- Lipschitz continuity