Abstract

This paper focuses on the online distributed optimization problem based on multi-agent systems. In this problem, each agent can only access its own cost function and a convex set, and can only exchange local state information with its current neighbors through a time-varying digraph. In addition, the agents do not have access to the information about the current cost functions until decisions are made. Different from most existing works on online distributed optimization, here we consider the case where the cost functions are strongly pseudoconvex and real gradients of the cost functions are not available. To handle this problem, a random gradient-free online distributed algorithm involving the multi-point gradient estimator is proposed. Of particular interest is that under the proposed algorithm, each agent only uses the estimation information of gradients instead of the real gradient information to make decisions. The dynamic regret is employed to measure the proposed algorithm. We prove that if the cumulative deviation of the minimizer sequence grows within a certain rate, then the expectation of dynamic regret increases sublinearly. Finally, a simulation example is given to corroborate the validity of our results.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In recent years, distributed optimization has attracted extensive attention in various fields [1,2,3,4,5,6]. This is due to its wide range of practicability in numerous fields such as distributed resource allocation [1], distributed economic dispatch [2], distributed machine learning [3], distributed coordination control [4], etc.

Distributed optimization in static environments has been widely studied in [7,8,9]. However, in practical applications, the scenarios that distributed optimization occurs are often dynamic. In recent years, online distributed optimization has been extensively studied [10,11,12,13,14]. For example, in [10], an online distributed push-sum algorithm is proposed for the unconstrained problem, and an online distributed coordinated algorithm based on the gradient descent method is developed in [11]. In [12], an online distributed saddle point algorithm is developed for optimization problem with a global set constraint, an online distributed mirror descent algorithm is proposed in [13], and an online distributed dual averaging algorithm is designed in [14].

It is worth pointing out that all the above works rely on real gradient information. However, it is not feasible or costly to calculate the gradient information accurately in practical applications. For example, in the Internet of Things [15], fog computing can not get the closed expression of delay since its online decision-making needs to adapt to the user preferences and the availability of resources is temporarily unpredictable. Moreover, in bandit optimization [16], agents can only observe the values of the cost functions, not the specific cost functions. In these cases, using zero-order information is desirable for distributed optimization. Recently, zeroth-order random online distributed optimization has been investigated in [17,18,19,20]. In [17, 18], zeroth-order random online distributed algorithms are proposed, where the zeroth-order information of cost functions is used. Furthermore, in [19, 20], two different random gradient-free algorithms are proposed for online distributed optimization under time-varying networks.

It is worth noting that most of the mentioned articles are applied to convex optimization problems. However, the problems of pseudoconvex optimization exist widely in reality. For example, in computer vision [21], the geometric expressions are usually modeled by pseudoconvex functions. Also, in portfolio planning [22] and fractional programming problems [23], cost functions are commonly formulated as pseudoconvex functions. Pseudoconvex optimization has a wider application range than convex optimization, as it can also be applied to some nonconvex cases. In fact, distributed optimization problems with pseudoconvex functions have only been studied in [24, 25], where real gradient information of cost functions is required.

Motivated by [19, 20, 24,25,26], we study the online distributed optimization problems with strongly pseudoconvex cost functions and random gradient-free method in this paper. Compared with [24, 25], where agents need to achieve real gradient information, here agents only use estimation of gradients instead of real gradient information to make decisions. To solve this problem, an online distributed algorithm with random gradient-free method is proposed, where a multi-point gradient estimator is used to estimate the gradients of local cost functions. Different from [11,12,13,14,15,16,17], which are based on the fact that the cost functions are convex, here the cost functions are considered to be strongly pseudoconvex. In [19, 20], gradient-free method and the convexity of cost functions are used to analyze the convergence of the proposed algorithms. Different from them, here we employ the strong pseudomonotonicity of cost functions and the Karush–Kuhn–Tucker (KKT) condition associated with pseudoconvex optimization to guarantee the effectiveness. We prove that if the graph is B-strongly connected, and the cumulative deviation of the minimizer sequence grows with a certain rate, then the expectation of dynamic regret increases sublinearly.

This paper is organized as follows. In Sect. 2, we formulate the problem and propose an algorithm. In Sect. 3, the main results are presented and the detailed proofs are given. A simulation example is given in Sect. 4. Section 5 is the conclusion of the full paper.

Notations We use \(\nabla \psi (u)\) to denote the gradient of function \(\psi \) at point u. \(\lfloor T\rfloor \) is defined as set \(\{0, 1, \ldots , T\}\) for any \(T\in {\mathbb {N}}.\) For vectors \({{\varvec{u}}},{{\varvec{v}}}\in {\mathbb {R}}^{m}\) and matrix \({{\varvec{W}}}\in {\mathbb {R}}^{m\times m},\) we denote \([{{\varvec{u}}}]_i\) represents the ith element of \({{\varvec{u}}},\) \( \Vert {{{\varvec{u}}}} \Vert =\sqrt{{{\varvec{u}}}^{\textrm{T}}{{\varvec{u}}}},\) \(\langle {{{\varvec{u}}},{{\varvec{v}}}}\rangle _{{\varvec{W}}}=\langle {{{\varvec{W}}}{{\varvec{u}}}}, {{\varvec{v}}}\rangle ,\) \(\Vert {{\varvec{u}}}\Vert _{{\varvec{W}}} ^{2}= {{\varvec{u}}}^{\textrm{T}} {{{\varvec{W}}}{{\varvec{u}}}}.\) We use \({\mathbb {E}}\{{{\varvec{u}}}\}\) to denote the expectation of random variable \({{\varvec{u}}}.\)

2 Problem formulation

2.1 Basic graph theory

A time-varying directed communication graph is defined as \({\mathcal {G}}(t)=({\mathcal {V}}, {\mathcal {E}}(t), {{\varvec{W}}}(t)),\) where \({\mathcal {V}}=\{1, \ldots ,\) \( n\}\) is a vertex set, \({\mathcal {E}}(t) \subset {\mathcal {V}}\times {\mathcal {V}}\) denotes an edge set and \({{\varvec{W}}}(t)=(w_{ij})_{n\times n}\) is a non-negative matrix to represent the weight of adjacent edges. The neighbor set of agent i is defined as \({\mathcal {N}}_{i}(t)=\{j\in {\mathcal {V}}\vert \) \(( j,i)\in {\mathcal {E}}\},\) where agent i can receive information from agent j. For digraph \({\mathcal {G}}(t)\) and some \(B>0,\) \({\mathcal {E}}_B=\) \(\bigcup _{k=tB}^{(t+1)B-1}{{\mathcal {E}}}(k)\) denotes the B-edge set. Based on the above conditions, if digraph \({\mathcal {G}}(t)\) and the edge set \({\mathcal {E}}_B(t)\) are all strongly connected for any \(t\ge 0.\) Then, \({\mathcal {G}}(t)\) is called B-strongly connected graph.

In this paper, the following assumption is made for the communication graph \({\mathcal {G}}(t).\)

Assumption 1

For any \(t\ge 0,\) \({\mathcal {G}}(t)\) is B-strongly connected graph and matrix \({{\varvec{W}}}(t)\) is doubly stochastic.

For distributed optimization, \({{\varvec{W}}}(t)\) is an essential part in facilitating agents to achieve consensus [25]. For any \(t\ge k\ge 0,\) we define

Lemma 1

[25] Based on Assumption 1, for any \(i,j \in {\mathcal {V}}\) and \(t\ge k,\) there exist certain \(D>0\) and \(0< \rho <1\) satisfying

2.2 Online distributed optimization

In this paper, we consider a multi-agent system with n agents, where agents exchange local information via graph \({{\mathcal {G}}(t)}.\) For any \(i\in {\mathcal {V}},\) a sequence of cost functions is given by \(\{\psi _i^1,\ldots ,\psi _i^{\textrm{T}}\},\) where T is a finite time horizon but is unknown by agents. For any \( t\in \lfloor T\rfloor ,\) \(\psi ^t_i: \varOmega \rightarrow {\mathbb {R}}\) is the cost function of agent i at time t and \(\varOmega \subset {\mathbb {R}}^m.\) Then, all agents aim to collaboratively solve the following optimization problem with a set constraint, which has the form:

Here, we make some basic assumptions for the problem.

Assumption 2

Constraint set \(\varOmega \) is nonempty, convex, and compact.

Assumption 3

(Strong pseudomonotonicity) For any \(m,~n\in \varOmega ,\) if \(\langle \nabla {\psi ^t(n)},m-n\rangle \ge 0,\) then \(\langle \nabla {\psi ^t(m)},m-n\rangle \) \(\ge \) \(\beta \Vert m-n\Vert ^2\) for some \(\beta >0.\)

It follows from [27] that if \(\nabla \psi ^t\) is strong pseudomonotone, then \(\psi ^t\) is strongly pseudoconvex. The definitions of strongly pseudoconvex functions are given as follows.

Definition 1

For a differentiable function \(\psi (\cdot ):{\mathbb {R}}^m\rightarrow {\mathbb {R}}\) and a convex set \(\varOmega \in {\mathbb {R}}^m,\) \(\psi (\cdot )\) is named a pseudoconvex function if \(\langle \nabla \psi (n),m-n\rangle \ge 0\) implies \(\psi (m)-\psi (n)\ge 0\) for each pair of different points \(m,n\in \varOmega .\) Moreover, if \(\langle \nabla \psi (n),m-n\rangle \ge 0\) implies \(\psi (m)-\psi (n)\ge \beta \Vert m-n\Vert ^2\) for some \(\beta >0\) and each pair of different points \(m,n\in \varOmega ,\) then \(\psi (\cdot )\) is named a strongly pseudoconvex function on \(\varOmega .\)

Assumption 4

(Lipschitz continuous gradient) \(\Vert \nabla \psi _i^t(m)-\nabla \psi _i^t(n)\Vert \le L_1\Vert m-n\Vert , ~\forall m, n\in \varOmega \) for some \(L_1>0.\)

In this paper, we are committed to developing a gradient-free method for solving problem (3). From [28], the smoothed version of \(\psi ^t\) is defined as

where \(\mu >0 \) is the smoothing parameter of function \(\psi _{\mu }^t(z).\) And the multi-point unbiased gradient estimation is defined as

where \(\hat{{{\varvec{g}}}}(z_i(t))\) ia an unbiased estimator of \(\nabla \psi _{\mu _i}^t(z_i(t)),\) \(\mu _i>0\) is the smoothing parameter and the random sequence \(\xi _{q_i}(t)\) is locally generated from an i.i.d. standard Gaussian distribution for any \(q\in Q,\) where \(Q\in {\mathbb {N}}^+\) is the number of multi-point estimation. Based on results in [29], we know that if \(\psi ^t(\cdot )\) is differentiable, then \(\psi _{\mu }^t(\cdot )\) is also differentiable, the gradient of \(\psi _{\mu }^t(\cdot )\) is defined as

Any online algorithm should mimic the performance of its offline counterpart, and the gap between them is called regret [25]. The regret with most stringent offline benchmark is the dynamic regret, whose offline benchmark is to minimize \(\psi ^t(z)\) at each time. The definition of dynamic regrets is given by

where \(z^*(t) =\text {argmin}_{z\in \varOmega }\psi ^t(z)\) for any \(t\in \lfloor T\rfloor .\) An online optimization algorithm is announced “good” if regret (7) increases sublinearly, i.e., \(\lim _{T\rightarrow \infty }{\mathcal {R}}_i^d(T)/T=0.\) Unfortunately, using dynamic regret will cause the problem to become insolvable when the minimizer of the cost function changes rapidly. Inspired by [25], the difficulty is described by the deviation of the minimization sequence \(\{z^* (t)\}_{t=0}^{T}\) :

2.3 Online distributed gradient-free algorithm

Now, we consider an offline and centralized optimization problem, defined as

where objective function \(\psi \) is strongly pseudoconvex, and constraint set \(\varOmega \) satisfies Assumption 2. The KKT condition of pseudoconvex optimization in terms of variational inequality is given in the following lemma.

Lemma 2

[25] Suppose function \(\psi : {\mathbb {R}}^m \rightarrow {\mathbb {R}}\) is pseudoconvex and differentiable and constraint set \(\varOmega \) is convex. Then, \(z^*\) is a minimum point of \(\psi \) on \(\varOmega \) if it can satisfy the following variational inequality

Based on Lemma 2, we can know problem (9) is solved if there exists a point \(z \in \varOmega \) satisfying

Combining with multi-point unbiased gradient estimation, we construct an auxiliary problem, defined as

where \(z_0\in \varOmega ,\) \(\alpha >0\) and matrix \(P\in {\mathbb {R}}^{m\times m}\) is positive definite and symmetric. Based on KKT condition, \(z^*\in \varOmega \) is the solution to (12) if and only if

By comparing (11) and (13), we can achieve that \(z^*\) is also the solution to (9) when \(z^* =z_0.\) By replacing \(z_0\) with z(t) and \(z^*\) with \(z(t+1),\) we propose the following auxiliary optimization strategy :

Note that if \(z(t+1)=z(t)\) in (14), then problem (9) is solved, which means that the solution of problem (9) is the equilibrium point of problem (14). Let matrix \(P={{\varvec{I}}}_m,\) if \(\psi ^t_i \) is a convex function, then we have \(\arg \min _{z\in \varOmega }\{ z^{\textrm{T}}Pz+\langle \alpha (t)\hat{{{\varvec{g}}}}(z(t))-2Pz(t), z\rangle \}=z(t)-\frac{\alpha (t)}{2}\hat{{{\varvec{g}}}}(z(t)).\) Thus, (14) can be regarded as an extension of the gradient descent algorithm. The detailed proofs of the convergence for algorithm (14) can be found in [30, 31].

To solve problem (3), a random gradient-free online distributed algorithm is proposed

where \(z_i(t)\) is the state of agent i at time t with \(z_i(0)\in \varOmega \) and \(\alpha (t)>0\) is a diminishing learning rate with initial value \(\alpha (0)=\alpha _0.\) Motivated by the multi-point gradient estimation method, consensus algorithm, and the auxiliary optimization strategy, we propose algorithm (15). When running algorithm (15), each agent updates status at each time only using the information received from its neighbors and its own gradient estimation at past time. Therefore, (15) is an online and distributed algorithm.

3 Main results

In this part, we will elaborate the main results of this paper and give their concrete proof.

Theorem 1

Based on Assumptions 1–4, if the learning rate \(\alpha (t)=\frac{c}{\sqrt{t+1}}\) for some \(c >0,\) then for any \(i\in {\mathcal {V}}\) and \(T\in {\mathbb {N}},\) the following inequality holds

where \(\varGamma =\frac{(4{\hat{\rho }}_1/(c \upsilon )+ 2\hat{{\mathcal {K}}}_1))+3c^2(4{\hat{\rho }}_2/(c \upsilon )+2\hat{{\mathcal {K}}}_2)}{\rho (1-\rho )\ln 2}+ \frac{6nc L_0^2}{\upsilon \varepsilon }\) \(+\frac{4d}{c \upsilon \ln 2},\) \(\hat{{\mathcal {K}}}_1={\mathcal {C}}^2+\frac{{\mathcal {C}}L_0 Dn\sqrt{m}}{\varepsilon (1-\rho )} (m+ 4)L_0,\) \({\hat{\rho }}_2= \frac{n(5M{\mathcal {K}}_2+\sqrt{m+4}L_0(kL_1+L_0)Dn\sqrt{m})}{\varepsilon },\) \(\hat{{\mathcal {K}}}_2\!=\!\frac{mn^2 H^2}{4\varepsilon ^2(1\!-\!\rho )}(m\) \(+4)^2L_0^2,\) \({\hat{\rho }}_1= n(5M\hat{{\mathcal {K}}}_1+(kL_1+L_0)c {\mathcal {C}}),\) \(F_1=L_1 kc(m+3)^{\frac{3}{2}},\) \(L_0=\sup _{t\in \lfloor T\rfloor ,i\in {\mathcal {V}},z\in \varOmega }\Vert \nabla \psi _i^t(z)\Vert ,\) \(d=Mk^2,\) \(\varepsilon =\lambda _{\min }( {P}),\) \({\mathcal {C}}=D\sqrt{m} \textstyle \sum \limits _{i=1}^{n}\Vert z_i(0)\Vert _1,\) \(\beta _1=\sup _{t\in \lfloor T\rfloor ,i\in {\mathcal {V}},z\in \varOmega }\Vert \hat{{{\varvec{g}}}}(z_i(t))\Vert ,\) \(M=n\lambda _{\max }({P}),\) \(L_1 =\sup _{t\in \lfloor T\rfloor ,i\in {\mathcal {V}},z\in \varOmega }\Vert \nabla ^2 f_i^t(z)\Vert ,\) and \(h=\sup _{z\in \varOmega }\Vert z\Vert .\)

By Theorem 1, we know that both \(\varTheta _T\) and \(\mu _i\) play important roles in the bound of dynamic regret expectation. Note that if \(\mu _i=T^{-\frac{1}{2}-\varrho }\) for any \(\varrho >0\) and \(\varTheta _T\) grows sublinearly with \(\frac{\sqrt{T+1}}{\ln (T+1)},\) then \({\mathbb {E}}\{{\mathcal {R}}_i^d(T)\}\) increase sublinearly with T, which implies the performance of online distributed algorithm (15) is “good”. Therefore, algorithm (15) can be employed to solve some strongly pseudoconvex optimization problems, where the specific gradient information is unavailable or expensive to obtain. If the fluctuation of minimizer sequence \(\{z^* (t)\}_{t=0}^{T}\) is dramatical, \({\varTheta }_T\) might become linear with \(\frac{\sqrt{T+1}}{\ln (T+1)},\) then the problem becomes insolvable. It is a natural phenomenon, even in online convex optimization.

Before proving Theorem 1, some useful lemmas are need to be presented. First, we use \({\mathcal {F}}_t\) to denote the \(\sigma \)-field generated by the entire history of the random variable as

Based on the above definition, the following lemma can be achieved.

Lemma 3

[19, 28] If \(\nabla \psi _i^t\) is \(L_1\)-Lipschitz continuous on \(\varOmega ,\) then

To prove Theorem 1, first, we present the following lemma, which gives the upper bound of the discrepancy between each agent’s state and their average state at each update.

Lemma 4

Based on Assumptions 1–4, for any \(i \in {\mathcal {V}},\)

and

where \({\bar{z}}(t)=\frac{1}{n} \textstyle \sum \limits _{i=1}^n z_i(t) ,\) \({\mathcal {K}}_1={\mathcal {C}}^2+\frac{{\mathcal {C}}Dn\sqrt{m}\beta _1}{\varepsilon (1-\rho )},\) \({\mathcal {K}}_2=\frac{m(n D\beta _1)^2}{4\varepsilon ^2(1-\rho )}.\)

Proof

See Appendix 1. \(\square \)

Next, we give the expectation for the upper bound of the cumulative square error between agents’ optimal state and their average state at each iteration time.

Lemma 5

Under Assumptions 1–4, we have

Proof

See Appendix 1. \(\square \)

Proof of Theorem 1

Based on Lemmas 4–5, we can achieve

Let \(\alpha (t)=\frac{c}{\sqrt{t+1}},\) there holds

Based on Jensen’s inequality, we have

Now, by taking expectation on both sides of inequality (24), we can obtain

Based on (22), (23), and (25), we have

Note that \(\nabla \psi _i ^t\) is bounded by \(L_0\) for any \(i \in {\mathcal {V}}\) and \(t \in \lfloor T\rfloor .\) Thus,

Substituting (26) into (27) yields (16). Thus, Theorem 1 is proved. \(\square \)

4 A simulation example

In this part, we illustrate the validity of proposed algorithm by using a numerical example. Assume a multi-agent system with six agents, labeled as \({\mathcal {V}}=\{1,2,\ldots ,6\}.\) Each agent exchange information with its neighbors via a time-varying digraph as shown in Fig. 1. For any \(i\in {\mathcal {V}},\) cost function of agent i is given by

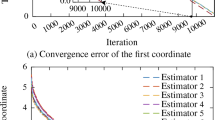

where \(z\in \varOmega .\) In this simulation, parameters are selected as \(p_1=0.6,\) \(p_2=0.8,\) \(p_3=p_6=1,\) \(p_4=0.5,\) \(p_5=0.1,\) \(q_i(t)\) is randomly selected from \([-i,i]\) and subject to a uniform distribution. Moreover, \(\varOmega =\{z\vert -10\le z\le -1\}\) is the constraint set of the objective function. Initial states of agents are selected as \(z_1(0)=0.3,\) \(z_2(0)=-0.5,\) \( z_3(0)=-0.5,\) \(z_4(0)=0.4,\) \(z_5(0)=-0.1\) and \(z_6(0)=-0.2.\) Algorithm (15) is applied to solving this problem. Let \(\alpha (t)={1}/{\sqrt{200t+500}},\) \(\mu _i =0.05\) and \(Q=1.\) The trajectories of \(x_i(t), i=1,\ldots ,6\) are represented in Fig. 2 and Fig. 3 displays the trajectories of the average regrets of all agents. From Fig. 2, it can be clearly seen that all agents’ states gradually approach to the optimal solution \(z^*(t).\) Furthermore, by observing Fig. 3, one can find that average regret of each agent gradually diminishes to zero. These observations indicate the correctness of achieved theoretical results.

The state trajectories of all agents under algorithm (15)

The average regret trajectories of all agents under algorithm (15)

5 Conclusions

This paper deals with the zeroth-order online distributed optimization problem where the sum of local cost functions is strongly pseudoconvex. To solve this problem, a random gradient-free algorithm based on multi-point gradient estimation method and auxiliary optimization strategy is proposed. Under this algorithm, each agent updates state at each time only using the information received from its neighbors at the current moment and the information of its own gradient estimation at previous time.The proposed algorithm is measured by the dynamic regret. The results indicate that if the communication graph is B-strongly connected, and the deviation of the minimizer sequence grows with a certain rate, then the expectation of dynamic regret increases with some sublinear bound. The simulation example in the previous section has verified its validity. The cases of communication delays and packet losses will be our future work, which means that there will be new difficulty for the online distributed pseudoconvex optimization problems.

Data Availability

Data sharing is not applicable to this article as no new data were created or analyzed in this study.

References

Deng, Z., & Chen, T. (2022). Distributed algorithm design for constrained resource allocation problems with high-order multi-agent systems. Automatica, 144, 110492. https://doi.org/10.1016/j.automatica.2022.110492

Yang, T., Lu, J., Wu, D., Wu, J., Shi, G., Meng, Z., & Johansson, K. H. (2016). A distributed algorithm for economic dispatch over time-varying directed networks with delays. IEEE Transactions on Industrial Electronics, 64(6), 5095–5106. https://doi.org/10.1109/TIE.2016.2617832

Lee, S., Kim, J. K., Zheng, X., Ho, Q., Gibson, G. A., & Xing, E. P. (2014). On model parallelization and scheduling strategies for distributed machine learning. Advances in Neural Information Processing Systems, 27, 2834–2842.

Lu, K., & Zhu, Q. (2020). Distributed algorithms involving fixed step size for mixed equilibrium problems with multiple set constraints. IEEE Transactions on Neural Networks and Learning Systems, 32(11), 5254–5260. https://doi.org/10.1109/TNNLS.2020.3027288

Lu, K., Zhu, Q., & Yan, X. (2022). Distributed ergodic algorithms for mixed equilibrium problems: Absent of cut property. Automatica, 141, 110297. https://doi.org/10.1016/j.automatica.2022.110297

Fu, Q., Xu, F., Shen, T., & Takai, K. (2020). Distributed optimal energy consumption control of HEVs under MFG-based speed consensus. Control Theory and Technology, 18, 193–203. https://doi.org/10.1007/s11768-020-0021-6

Zhu, Y., Yu, W., Wen, G., Chen, G., & Ren, W. (2018). Continuous-time distributed subgradient algorithm for convex optimization with general constraints. IEEE Transactions on Automatic Control, 64(4), 1694–1701. https://doi.org/10.1109/TAC.2018.2852602

Xiong, H., Han, J., Nian, X., & Li, S. (2021). Distributed projection subgradient algorithm for two-network zero-sum game with random sleep scheme. Control Theory and Technology, 19(3), 405–417. https://doi.org/10.1007/s11768-021-00055-x

Lü, Q., Li, H., & Xia, D. (2017). Distributed optimization of first-order discrete-time multi-agent systems with event-triggered communication. Neurocomputing, 235, 255–263. https://doi.org/10.1016/j.neucom.2017.01.021

Akbari, M., Gharesifard, B., & Linder, T. (2015). Distributed online convex optimization on time-varying directed graphs. IEEE Transactions on Control of Network Systems, 4(3), 417–428. https://doi.org/10.1109/TCNS.2015.2505149

Mateos-Núnez, D., & Cortés, J. (2014). Distributed online convex optimization over jointly connected digraphs. IEEE Transactions on Network Science and Engineering, 1(1), 23–37. https://doi.org/10.1109/TNSE.2014.2363554

Koppel, A., Jakubiec, F. Y., & Ribeiro, A. (2015). A saddle point algorithm for networked online convex optimization. IEEE Transactions on Signal Processing, 63(19), 5149–5164. https://doi.org/10.1109/TSP.2015.2449255

Shahrampour, S., & Jadbabaie, A. (2017). Distributed online optimization in dynamic environments using mirror descent. IEEE Transactions on Automatic Control, 63(3), 714–725. https://doi.org/10.1109/TAC.2017.2743462

Hosseini, S., Chapman, A., & Mesbahi, M. (2016). Online distributed convex optimization on dynamic networks. IEEE Transactions on Automatic Control, 61(11), 3545–3550. https://doi.org/10.1109/TAC.2016.2525928

Chen, T., & Giannakis, G. B. (2018). Bandit convex optimization for scalable and dynamic IoT management. IEEE Internet of Things Journal, 6(1), 1276–1286. https://doi.org/10.1109/JIOT.2018.2839563

Agarwal, A., Dekel, O., & Xiao, L. (2010). Optimal algorithms for online convex optimization with multi-point bandit feedback. In The 23rd Conference on Learning Theory (pp. 28–40).

Xiong, Y., Li, X., You, K., & Wu, L. (2022). Distributed online optimization in time-varying unbalanced networks without explicit subgradients. IEEE Transactions on Signal Processing, 70, 4047–4060. https://doi.org/10.1109/TSP.2022.3194369

Tang, Y., Zhang, J., & Li, N. (2020). Distributed zero-order algorithms for nonconvex multiagent optimization. IEEE Transactions on Control of Network Systems, 8(1), 269–281. https://doi.org/10.1109/TCNS.2020.3024321

Yuan, D., & Ho, D. W. (2014). Randomized gradient-free method for multiagent optimization over time-varying networks. IEEE Transactions on Neural Networks and Learning Systems, 26(6), 1342–1347. https://doi.org/10.1109/TNNLS.2014.2336806

Pang, Y., & Hu, G. (2019). Randomized gradient-free distributed optimization methods for a multiagent system with unknown cost function. IEEE Transactions on Automatic Control, 65(1), 333–340. https://doi.org/10.1109/tac.2019.2914025

Poudel, P., & Shirvaikar, M. (2010). Optimization of computer vision algorithms for real time platforms. In 2010 42nd Southeastern Symposium on System Theory (SSST) (pp. 51–55). IEEE. https://doi.org/10.1109/SSST.2010.5442803

Liu, Q., Guo, Z., & Wang, J. (2012). A one-layer recurrent neural network for constrained pseudoconvex optimization and its application for dynamic portfolio optimization. Neural Networks, 26, 99–109. https://doi.org/10.1016/j.neunet.2011.09.001

Bian, W., Ma, L., Qin, S., & Xue, X. (2018). Neural network for nonsmooth pseudoconvex optimization with general convex constraints. Neural Networks, 101, 1–14. https://doi.org/10.1016/j.neunet.2018.01.008

Xu, H., & Lu, K. (2022). Continuous-time distributed optimization with strictly pseudoconvex objective functions. Journal of the Franklin Institute, 359(2), 1483–1502. https://doi.org/10.1016/j.jfranklin.2021.11.034

Lu, K., Jing, G., & Wang, L. (2019). Online distributed optimization with strongly pseudoconvex-sum cost functions. IEEE Transactions on Automatic Control, 65(1), 426–433. https://doi.org/10.1109/TAC.2019.2915745

Lu, K., & Wang, L. (2023). Online distributed optimization with nonconvex objective functions via dynamic regrets. IEEE Transactions on Automatic Control, 68(11), 6509–6524. https://doi.org/10.1109/TAC.2023.3239432

Hu, X., & Wang, J. (2006). Solving pseudomonotone variational inequalities and pseudoconvex optimization problems using the projection neural network. IEEE Transactions on Neural Networks, 17(6), 1487–1499. https://doi.org/10.1109/TNN.2006.879774

Hajinezhad, D., Hong, M., & Garcia, A. (2019). ZONE: Zeroth-order nonconvex multiagent optimization over networks. IEEE Transactions on Automatic Control, 64(10), 3995–4010. https://doi.org/10.1109/TAC.2019.2896025

Nesterov, Y., & Spokoiny, V. (2017). Random gradient-free minimization of convex functions. Foundations of Computational Mathematics, 17, 527–566. https://doi.org/10.1007/s10208-015-9296-2

El Farouq, N. (2001). Pseudomonotone variational inequalities: Convergence of the auxiliary problem method. Journal of Optimization Theory and Applications, 111(2), 305. https://doi.org/10.1023/A:1012234817482

Pang, J. S., & Chan, D. (1982). Iterative methods for variational and complementarity problems. Mathematical Programming, 24(1), 284–313. https://doi.org/10.1007/BF01585112

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

This work was supported by the National Natural Science Foundation of China (Nos. 62103169, 51875380) and the China Postdoctoral Science Foundation (No. 2021M691313).

Appendix

Appendix

1.1 Proof of Lemma 4

Note that \(z_i(t+1)\) generated by algorithm (15) is the solution to the following optimization:

Then according to the KKT condition, we can have

for any \(z\in \varOmega .\) Note that \(\nu _i(t)\in \varOmega .\) Let \(z= \nu _i(t),\) based on \(2\varepsilon \Vert z_i(t+1)-\nu _i(t)\Vert ^2\le \Vert z_i(t+1)-\nu _i(t)\Vert _P^2\) and \(\Vert \hat{{{\varvec{g}}}}(z_i(t))\Vert \le \beta _1,\) the following inequality holds

For any \(i\in {\mathcal {V}},\) \(r_i(t)\) is defined as \(r_i(t)=z_i(t+1)-\nu _i(t).\) From inequality (30), one can obtain \(\Vert r_i(t)\Vert \le \beta _1\alpha (t)/(2\varepsilon ).\) Moreover,

For any \(i\in {\mathcal {V}},\) vector \({\tilde{z}}_s(t)\in {\mathbb {R}}^n\) and \({\tilde{r}}_s(t)\in {\mathbb {R}}^n \) are defined as a superposition of the sth entry of \(z_i(t)\) and \(r_i(t)\), respectively. Then, it follows that

which means the following equation holds

where the definition of \(\varPhi (t,k)\) can be found in (1). Since matrix \(\varPhi (t,k)\) is doubly stochastic, Eq. (33) can be further expressed

Combining (33) and (34), one can obtain

for any \(i\in {\mathcal {V}}.\) Based on inequality (2), one has

This inequality directly proves the validity of inequality (19). \(\square \)

Furthermore, we can have

Note that \(0<\rho <1\) and learning rate \(\alpha (t)\) is non-increasing, we can obtain

By Cauchy–Schwarz inequality, we can obtain

Combining (38) and (39), we can obtain the validity of inequality (20).\(\square \)

Furthermore, we can achieve

1.2 Proof of Lemma 5

To prove Lemma 5, an auxiliary lemma is given as follows.

Lemma 6

Based on Assumptions 1–4, for \(t\in \lfloor T\rfloor \) and any \(u\in {\mathbb {R}}^m,\)

Proof of Lemma 6

Note that

It is not hard to prove that \( \sum _{i=1}^n P\nu _i(t) = \sum _{i=1}^n Pz_i(t),\) Then, \(\big \langle \sum _{i=1}^n\Big (z_i(t+1)-\nu _i(t)\Big ), u-{\bar{z}}(t+1)\big \rangle _P=0.\) Using Young’s inequality and the fact that \(\sum _{i=1}^n\Vert z_i(t)-\nu _i(t)\Vert ^2\le 4\sum _{i=1}^n\Vert z_i(t)-{\bar{z}}(t)\Vert ^2,\) we can have

Combining with (20) in Lemma 4, it immediately implies (41). \(\square \)

Proof of Lemma 5

Note that

Now, we denote \({\mathcal {O}}(t)=\frac{1}{2}\sum _{i=1}^n\Vert z_i(t)^*-z_i(t)\Vert ^2_ P.\) Then,

Based on KKT condition, one can obtain

Using (41) in Lemma 6, we have

Based on Lemma 2 and Assumption 3, the following inequality can be achieved

Note that \(\Vert \nabla ^2 \psi _i^t\Vert \le L_1\) for any \(z\in \varOmega ,\) it implies \(\Vert \nabla \psi _i^t(z_i(t))-\nabla \psi _i^t({\bar{z}}(t))\Vert \le L_1 \Vert z_i(t)-{\bar{z}}(t)\Vert \) for any \(i \in {\mathcal {V}}.\) By combining the boundedness of \(\varOmega \) in Assumption 2, one can obtain

Thus, the following inequality can be achieved

Moreover, we can also achieve that

Note that

Substituting (50) and (51) into (52), using (c) in Lemma 3, then taking the total expectation for the achieved inequality after taking the conditional expectation over \({\mathcal {F}}_t,\) so we can obtain

By (45), (47), and (53), using (25), we have

Due to \({\mathcal {O}}(t) \ge 0\) for any \(t\in \lfloor T\rfloor ,\) we can have \(-\sum _{t=0}^T \nabla {\mathcal {O}}(t)={\mathcal {O}}(0)-{\mathcal {O}}(T)\le {\mathcal {O}}(0)\le d/2.\) By summing from \(t=0\) to T on both sides of inequality (54), the validity of Lemma 5 is verified. \(\square \)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yan, X., Li, C., Lu, K. et al. Random gradient-free method for online distributed optimization with strongly pseudoconvex cost functions. Control Theory Technol. 22, 14–24 (2024). https://doi.org/10.1007/s11768-023-00181-8

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11768-023-00181-8