Abstract

Fabric defect detection has been successfully implemented in the quality quick response system for textile manufacturing automation. Detecting fabric defects automatically is challenging because of the complexity of the images and the variety of patterns in textiles. This study presents a deep learning-based improved Mask RCNN (IM-RCNN) for sequentially identifying image defects in patterned fabrics. First, images are gathered from the HKBU database and pre-processed with a contrast-limited adaptive histogram equalization (CLAHE) filter to eliminate noise artifacts. Then, the Sobel edge detection algorithm is used to extract pertinent attention features from the pre-processed images. Lastly, the proposed IM-RCNN classifies defective fabric into six classes, namely Stain, Hole, Carrying, Knot, Broken end, and Netting multiple, based on the segmented region of the fabric. Evaluated using the true-positive rate and false-positive rate, the proposed IM-RCNN yields an accuracy of 0.978 on the dataset. The proposed IM-RCNN improves overall accuracy by 6.45%, 1.66%, 4.70%, and 3.86% over MobileNet-2, U-Net, LeNet-5, and DenseNet, respectively.

1 Introduction

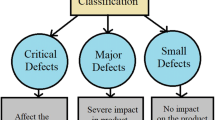

Fabric defect classification is a crucial step in the quality assurance process that looks for and identifies fabric flaws [1]. Fabric defects can be found using hybrid, geometric, spectral, model-based, learning, and structural approaches [2]. The majority of automatic fabric inspection systems rely on computer vision algorithms for image capture and defect segmentation [3]. To detect flaws in gray cloth and lattice fabric more successfully, the YOLOv3 detection layer is applied to feature maps of different sizes [4]. Over 70 different types of fabric defects are recognized in the textile industry. The six common defect types are shown in Fig. 1 [5].

The image acquisition procedure, which is largely responsible for obtaining digital images of damaged samples, may often be carried out using a line-scan charge-coupled device (CCD) [6]. To locate and categorize the defective locations, the defect detection process is used, and occasionally, it also incorporates quantitative analysis [7].

All operations that follow defect detection, such as the classification of defect types and the evaluation of defect grades, are referred to as the final procedure [8]. To manage flaws of various magnitudes, a Gaussian pyramid creates the inputs to the network at each scale [9]. Automated inspection has been studied since the mid-1990s and is widely used in industry, particularly in the textile sector, where defect detection is vital for lowering costs and increasing customer satisfaction [10]. Evaluated on plain, patterned, and rotated fabric images, an autoencoder offers significant advantages over hand-crafted approaches, principal component analysis (PCA), and other state-of-the-art deep learning algorithms [11]. Computer vision-based automatic defect detection (ADD) can avoid the limitations of conventional human inspection by repeatedly detecting departures from a pre-defined visual reference [12]. In time-sensitive fashion supply chains, a deep learning system such as a CNN may be used to decrease the chance of waste and supply chain interruption [13, 14]. Given its high level of automation and continued reliance on human labor for quality control, the textile industry is a prime candidate for the Industry 4.0 transition. A study on the transition of the textile industry to Industry 4.0 predicts a 10–16% improvement in yield [15]. The major objective of this work is to present a deep learning approach, an improved Mask RCNN (IM-RCNN), for fabric defect identification. The main contributions of the proposed technique are as follows:

- The first step in pre-processing the collected images is to utilize the CLAHE method to remove noise from the images.
- The fully connected (FC) layer uses the regions of interest generated by the region proposal network (RPN) to generate bounding boxes and segmentation masks for the targeted regions.
- The categorization is performed by feeding the outcomes from the fully convolutional network (FCN), which uses all of the features, through the soft-pool layer.
- The quantitative analysis of the recommended approach uses the attributes of accuracy, specificity, precision, recall, and F1 score.

The remainder of this paper is organized as follows. The literature review is described in Sect. 2, the proposed method using the IM-RCNN model is described in Sect. 3, the results and analysis are described in Sect. 4, and the conclusion and recommendations for further research are provided in Sect. 5.

2 Literature survey

In recent years, the majority of traditional research projects on fabric defect identification have been focused on fabrics, including plain and twill fabrics. A brief summary of some of those research publications is provided in this section.

Deep learning model-based fabric defect detection was introduced by Jing et al. [2] in 2020. This method has been employed to improve product quality and production efficiency. It has also proven to be an effective tool for classification and segmentation. Public fabric datasets and independently created datasets have been used to assess the MobileNet-2 suggested model. Images are 256 × 256 pixels in size. Of these, 70% are used as training sets and 30% are used as test sets.

In 2021, Huang et al. [16] presented a deep learning neural network approach for different fabric defects. It consists of two parts, segmentation and detection. Only about 50 defective samples need to be analyzed to obtain segmentation results, and defects can be detected in real time at 25 frames per second (FPS).

In 2021, Voronin et al. [17] presented a two-step solution. The first step applies block-based alpha rooting, a domain-based image enhancement technique; the second step uses a contemporary neural network architecture. This system detects flaws more precisely than deep learning and typical machine learning techniques.

In 2021, Rong-qiang et al. [18] proposed the CU-net, an enhanced convolutional neural network for fabric defect detection that improves on the traditional U-Net. The network introduces an attention mechanism and is trained with size compression. The reported accuracy scores are 98.3% and 92.7%, improvements of 4.8% and 2.3% over earlier detection methods.

In 2021, Xiang Jun et al. [19] proposed a learning-based technique for automatically detecting fabric defects, followed by an enhanced histogram comparison. A LeNet-5 model serves as a voting model to classify the type of defect in the fabric, after which the VI-model is used to predict flaws in the local area. The trained classification model achieves an accuracy of 96%.

In 2021, Samit et al. [20] developed convolutional neural networks (CNNs) to classify the faults in images acquired from textiles and compared them with the VGG, DenseNet, Inception, and Xception deep learning networks. For automatic defect detection (ADD), a VGG-based model was shown to be more beneficial than a straightforward CNN model. The CNN model had a training accuracy of 96% and a validation accuracy of 62%.

In 2021, Parul et al. [21] proposed an information set theory-based approach for detecting faults in fabric texture. Using information set theory, five features are implemented: effective information, energy, sigmoid, Shannon transform, and composite transform. These features are computed for the collected fabric samples. Then, the closest algorithm is utilized to classify the fabric flaws. The Shannon transform features (STF) achieve 99% accuracy.

In 2020, Feng Li et al. [22] improved a convolutional neural network detection technique with three measures that increase precision: multi-scale training, the dimension clusters approach, and soft non-maximum suppression in place of the conventional one, which prevents defects of the same category from being eliminated by repeated detections. Together, these measures increased the mean average precision (mAP) of fabric defect detection by 9.8%.

In 2020, Boshan Shi et al. [23] proposed an advanced fabric defect detection method based on low-rank decomposition with gradient information and a structured graph algorithm. The low-rank decomposition model yields a matrix that indicates the probability of defects in the fabric and thereby identifies and represents the defective areas. The structured graph algorithm is applied in accordance with the characteristics of the fabric defect image. The overall TPR and FPR of this method are 87.3% and 1.21%, respectively.

In 2021, Tong et al. [24] addressed fabric defect detection with an efficient deep convolutional neural network (DCNN) architecture, the efficient defect detector (EDD). To increase computational efficiency without lowering image resolution, EDD uses a lightweight design. To identify defects, the L-shaped feature pyramid network (L-FPN) uses high-level semantic features. With 8.59 M parameters and 31.78 B FLOPs, the reported reductions are 39.8% and 49.0%, respectively.

These studies served as the basis for the accuracy comparison, which was calculated using a range of deep learning techniques. The suggested technique used an IM-RCNN to classify the defective fabrics into holes, oil spots, thin bars, thick bars, thread errors, and broken ends.

Ghost Net’s high level of accuracy is demonstrated by the feature extraction methods using Sobel edge.

3 Mask RCNN

In this section, semantic segmentation with a Mask RCNN model is proposed to classify the various flaws in a single image. An attention-fused lightweight CNN module replaces the Mask RCNN backbone. The max-pooling layer is replaced with a soft-pooling layer to increase detection rates. Data preparation is a vital procedure that handles the variations in the input images while eliminating noise. The noise in the images is reduced using the CLAHE filter, which also enhances the quality of the incoming data and lessens undesired distortions. A schematic representation of the Mask RCNN is shown in Fig. 2.

3.1 Dataset description

The HKBU fabric image database is provided by the Industrial Automation Research Laboratory. It contains 50 images of star-patterned fabric and 60 images of dot-patterned fabric. For each of these two patterns, half of the images are defect-free and half are defective. The box-patterned fabric collection comprises 50 images, 30 of which are defect-free. Every image is 256 × 256 pixels. Each defective image has a ground truth in which the defective regions are shown in white and the defect-free regions in black.

3.2 CLAHE filter

Pre-processing prepares the input images by adjusting their contrast and lowering noise. CLAHE was primarily created for medical imaging, and it limits the noise amplification that ordinary histogram equalization produces in homogeneous areas. When pre-processing digital images, it can be used to improve the image while suppressing noise. Rather than operating on the complete image, CLAHE works on small regions of the picture called tiles.

CLAHE is an improved form of histogram equalization (HE), a quick and efficient technique for enhancing images that improves contrast by redistributing the gray levels. Figure 3 displays the results of HE and CLAHE processing on images. The most common image sizes in the datasets are 2560 × 1920 or 1984 × 1488 pixels, as recommended in OpenCV. For color images, CLAHE can be applied to the three channels; here, the input images are converted to grayscale.
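The contrast-limiting idea can be illustrated on a single tile. The following is a minimal sketch, not the authors' implementation: the function name `clipped_equalize`, the `clip_limit` value, and the toy tile are assumptions made for illustration. A full CLAHE additionally interpolates bilinearly between the mappings of neighboring tiles, which is omitted here.

```python
def clipped_equalize(tile, clip_limit, levels=256):
    """Equalize one tile using a clipped histogram (the core step of CLAHE).

    tile       : 2-D list of integer gray values in [0, levels)
    clip_limit : maximum count allowed per histogram bin (assumed parameter)
    """
    flat = [p for row in tile for p in row]

    # 1. Histogram of the tile.
    hist = [0.0] * levels
    for p in flat:
        hist[p] += 1

    # 2. Clip each bin and collect the excess counts.
    excess = 0.0
    for g in range(levels):
        if hist[g] > clip_limit:
            excess += hist[g] - clip_limit
            hist[g] = clip_limit

    # 3. Redistribute the excess uniformly over all bins.
    bonus = excess / levels
    hist = [h + bonus for h in hist]

    # 4. Build the gray-level mapping from the cumulative distribution.
    total = len(flat)
    lut, running = [], 0.0
    for g in range(levels):
        running += hist[g]
        lut.append(round((levels - 1) * running / total))

    return [[lut[p] for p in row] for row in tile]


# Toy tile: a nearly uniform dark region with two bright pixels.
tile = [[10, 10, 10, 10],
        [10, 10, 10, 10],
        [10, 10, 10, 200],
        [10, 10, 10, 200]]
limited = clipped_equalize(tile, clip_limit=4)
plain = clipped_equalize(tile, clip_limit=16)  # limit never reached: plain HE
```

With the clip limit in effect, the dominant gray level of the homogeneous region is pushed less far up the gray scale than under plain HE, which is how the clipping restrains noise amplification in flat areas.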

After defect detection, all operations that come after it, such as the classification of defect types and the evaluation of defect grades, are referred to as the last procedure. MSCDAE architecture is used.

Defect recognition is often sensitive to changes in illumination: when the image is excessively bright or dark, some information may be lost. CLAHE addresses this issue by preventing the information loss caused by extreme brightness and darkness. Figure 4 illustrates CLAHE. As the figure shows, reflected light causes the excessive brightness in the upper left corner of the image. Furthermore, the defect information in the CLAHE-normalized image is important.

In this study, features are extracted from the gray-level co-occurrence matrix (GLCM). The co-occurrence matrix P, of size M × M, is defined as follows:

The features of a digital image are described below using the GLCM. Four of them form the feature vector: homogeneity, energy, contrast, and correlation. The angular second moment (energy) is defined as:

Contrast measures the local variation of the gray levels, that is, the texture of the image. If the gray level fluctuates considerably within a local neighborhood, the contrast is high. It is calculated as follows:

Texture correlation quantifies the linear relationship between the gray levels of neighboring pixels. It is determined as follows:

Homogeneity measures the local similarity of a pair of pixels. The homogeneity is high if the gray levels of each pair of pixels are similar. It is computed as follows:
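The four GLCM features can be sketched in a few lines. This example assumes the commonly used definitions, which may differ in detail from the ones the authors used: energy \(\sum_{i,j} P(i,j)^2\), contrast \(\sum_{i,j} (i-j)^2 P(i,j)\), homogeneity \(\sum_{i,j} P(i,j)/(1+(i-j)^2)\), and correlation \(\sum_{i,j} (i-\mu_i)(j-\mu_j) P(i,j)/(\sigma_i \sigma_j)\). The function names, the pixel offset, and the toy image are illustrative assumptions.

```python
import math


def glcm(image, levels, dx=1, dy=0):
    """Normalized, symmetric co-occurrence matrix P for pixel offset (dx, dy)."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                counts[j][i] += 1  # count the pair in both directions
    total = sum(map(sum, counts))
    return [[v / total for v in row] for row in counts]


def glcm_features(P):
    """Energy, contrast, homogeneity, and correlation of a normalized GLCM."""
    idx = range(len(P))
    cells = [(i, j, P[i][j]) for i in idx for j in idx]

    energy = sum(p * p for _, _, p in cells)
    contrast = sum((i - j) ** 2 * p for i, j, p in cells)
    homogeneity = sum(p / (1 + (i - j) ** 2) for i, j, p in cells)

    mu_i = sum(i * p for i, _, p in cells)
    mu_j = sum(j * p for _, j, p in cells)
    var_i = sum((i - mu_i) ** 2 * p for i, _, p in cells)
    var_j = sum((j - mu_j) ** 2 * p for _, j, p in cells)
    sd = math.sqrt(var_i * var_j)
    # Assumed convention: a constant image (zero variance) has correlation 1.
    correlation = (
        sum((i - mu_i) * (j - mu_j) * p for i, j, p in cells) / sd if sd > 0 else 1.0
    )
    return {"energy": energy, "contrast": contrast,
            "homogeneity": homogeneity, "correlation": correlation}


# Toy 4-level image (hypothetical data) and its horizontal-neighbor GLCM.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 2, 2, 2],
         [2, 2, 3, 3]]
P = glcm(image, levels=4)
features = glcm_features(P)
```

The resulting four numbers form the feature vector for one offset; in practice several offsets and angles are often computed and concatenated.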

3.3 Improved Mask RCNN

Mask RCNN extends Faster RCNN with a second branch that predicts a pixel-by-pixel segmentation mask for each RoI. The network inputs and outputs of Faster RCNN are not intended to be aligned pixel-for-pixel. Faster RCNN produces two outputs: the candidate window and the classification label. Unlike these two outputs, the third output, the mask, requires the extraction of a much finer spatial layout. To achieve pixel-level classification, Mask RCNN therefore follows Faster RCNN with a fully convolutional network.

A set of image augmentation and enhancement techniques that have been empirically selected are included. The enhanced Mask RCNN, which was created by utilizing the best-performing network, is also shown in this study together with the proposed defect detection process.

For each of the feature maps, regions of interest with anchors are created using the region proposal network (RPN). The layer then produces bounding boxes and a segmentation mask for the targeted regions, using the regions of interest produced by the RPN layer. For target box regression, categorization, and instance segmentation, the features of each region of interest (RoI) must be collected from each image and sent to the fully convolutional network (FCN). The FCN employs all of the features and the output of the soft-pool layer to perform the categorization. The architecture of the Mask RCNN is shown in Fig. 5.
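The text does not spell out how the soft-pool layer is computed. One common formulation, assumed here purely for illustration, weights every activation in a pooling window by its softmax weight, so that large activations dominate without discarding the others as max pooling does. The function name `soft_pool_2d` and the toy feature map are hypothetical.

```python
import math


def soft_pool_2d(feature_map, size=2, stride=2):
    """Softmax-weighted pooling over size x size windows (assumed formulation)."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, stride):
        pooled_row = []
        for c in range(0, cols - size + 1, stride):
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            peak = max(window)  # subtract the max for numerical stability
            weights = [math.exp(a - peak) for a in window]
            weighted_sum = sum(w * a for w, a in zip(weights, window))
            pooled_row.append(weighted_sum / sum(weights))
        pooled.append(pooled_row)
    return pooled


# Toy 2 x 4 feature map: one constant window and one with a single peak.
fmap = [[1.0, 1.0, 0.0, 4.0],
        [1.0, 1.0, 0.0, 0.0]]
pooled = soft_pool_2d(fmap)
```

For the second window the result lies between the average (1.0) and the maximum (4.0), which is the intended behavior: the strongest response dominates while weaker responses still contribute.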

3.3.1 Feature extraction network

Feature extraction using deep learning is an important step for obtaining relevant features from fabric images. ResNet-50, combined with a feature pyramid network, is applied to all of the fabric images. ResNet-50 extracts the features, which Mask RCNN combines with the region proposals. To create feature maps, the input data are fed into the CNN. ResNet-50 stacks convolutional layers, adds a pooling layer, and maintains residual connections. The network consists of five convolutional blocks: the first block uses a 7 × 7 convolution layer, and the second through fifth blocks use 1 × 1, 3 × 3, and 1 × 1 convolutional layers, respectively. Accordingly, semantic and spatial information can be obtained from fabric images of various sizes. Cross-entropy is employed as the loss function. It is expressed as follows:

where V is the observations over the class n, the probability N is the number of classes, and C is the class labels.

Specifically, mini-batch gradient descent is defined as

where η is the learning rate, \(y^{(j)}\) and \(z^{(j)}\) are the training examples, and n is the size of the mini-batch. The resulting features are then passed to the pooling layer and the fully convolutional layer.
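For reference, the standard forms of the two expressions discussed in this subsection are sketched below. These are the textbook definitions and may differ in notation from the authors' own equations. The symbols \(t_c\) (one-hot target for class \(c\)), \(p_c\) (predicted probability of class \(c\)), \(\theta\) (network parameters), and \(J\) (objective) are assumptions introduced here; \(\eta\), \(y^{(j)}\), \(z^{(j)}\), \(n\), and \(N\) follow the text.

```latex
% Cross-entropy loss over N classes
L_{CE} = -\sum_{c=1}^{N} t_c \log p_c

% Mini-batch gradient descent update with learning rate \eta and batch size n
\theta \leftarrow \theta - \eta \, \nabla_{\theta} J\left(\theta;\; y^{(j:j+n)},\; z^{(j:j+n)}\right)
```

Each update uses only the n training examples of the current mini-batch, which trades some gradient noise for much cheaper steps than full-batch gradient descent.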

3.3.2 Region proposal network

The regions of interest and anchors for each feature map are created using the region proposal network (RPN). Depending on the size of the target object, the RPN can then extract the RoI features from different levels of the feature pyramid. This simple change to the network structure substantially enhances the detection of small objects and improves accuracy and speed without considerably increasing the amount of computation. A small network slides over the convolutional feature maps and outputs a set of rectangular region proposals, each with a score. In this manner, the foreground and background of the fabric images are distinguished. The RPN resembles a class-agnostic object detector with a sliding window and is built on a convolutional neural network architecture. Anchor boxes are generated at each sliding-window position. The anchor box concept was introduced to avoid image or filter pyramids. The sizes and positions of the RPN predictions are adjusted to the bounds of the fabric image. Finally, the layer creates bounding boxes and segmentation masks for the targeted regions of the fabric images using the regions of interest produced by the RPN layer.
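Anchor generation at each sliding-window position can be sketched as follows. The stride, scales, and aspect ratios below are illustrative assumptions, not values reported in the paper, and the helper names `generate_anchors` and `clip_box` are hypothetical.

```python
def generate_anchors(feat_h, feat_w, stride, scales, ratios):
    """Return anchor boxes (x1, y1, x2, y2) in image coordinates.

    One anchor is placed per (scale, ratio) pair at the center of every
    feature-map cell; ratio is width / height and the area is scale ** 2.
    """
    anchors = []
    for row in range(feat_h):
        for col in range(feat_w):
            cx = (col + 0.5) * stride  # cell center in image coordinates
            cy = (row + 0.5) * stride
            for scale in scales:
                for ratio in ratios:
                    w = scale * ratio ** 0.5
                    h = scale / ratio ** 0.5
                    anchors.append((cx - w / 2, cy - h / 2,
                                    cx + w / 2, cy + h / 2))
    return anchors


def clip_box(box, img_w, img_h):
    """Clip a box to the image bounds, as done for RPN predictions."""
    x1, y1, x2, y2 = box
    return (max(0.0, x1), max(0.0, y1),
            min(float(img_w), x2), min(float(img_h), y2))


# A 2 x 2 feature map with stride 16 covers a 32 x 32 image.
anchors = generate_anchors(2, 2, stride=16, scales=[32], ratios=[0.5, 1.0, 2.0])
clipped = clip_box(anchors[1], img_w=32, img_h=32)
```

Because several scales and ratios share one feature map, objects of different sizes can be proposed without building an image pyramid or a pyramid of filters.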

3.3.3 Fully convolutional layer (FCN) network

For target box regression, categorization, and instance segmentation, the relevant features of each RoI must be extracted from each image and sent to the fully convolutional network. Before the convolution begins, RoI Align is used to resize each RoI to conform to the FC layer parameters. RoI Align replaces the quantization step of RoI pooling in Mask RCNN and uses bilinear interpolation to extract the features of each RoI from the feature maps. The target mask is created in a multi-branch computation phase that consists of three prediction branches: an FC layer for classification prediction, a regression layer for bounding box refinement, and an FCN for pixel-level classification and segmentation.
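The difference from RoI pooling is that RoI Align never rounds coordinates: each output bin averages a few points sampled at fractional positions by bilinear interpolation. The sketch below is a simplified single-channel version under assumed conventions (feature values located at integer coordinates, `samples` points per bin side); the function names are hypothetical.

```python
def bilinear_sample(fmap, y, x):
    """Bilinearly interpolate a 2-D feature map at fractional position (y, x)."""
    rows, cols = len(fmap), len(fmap[0])
    y = min(max(y, 0.0), rows - 1)
    x = min(max(x, 0.0), cols - 1)
    y0, x0 = int(y), int(x)
    y1, x1 = min(y0 + 1, rows - 1), min(x0 + 1, cols - 1)
    dy, dx = y - y0, x - x0
    top = fmap[y0][x0] * (1 - dx) + fmap[y0][x1] * dx
    bottom = fmap[y1][x0] * (1 - dx) + fmap[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def roi_align(fmap, roi, out_size=2, samples=2):
    """Pool an RoI (y1, x1, y2, x2), given in unrounded feature-map
    coordinates, to an out_size x out_size grid."""
    y1, x1, y2, x2 = roi
    bin_h = (y2 - y1) / out_size
    bin_w = (x2 - x1) / out_size
    output = []
    for by in range(out_size):
        out_row = []
        for bx in range(out_size):
            acc = 0.0
            for sy in range(samples):
                for sx in range(samples):
                    # Regularly spaced sampling points inside the bin.
                    y = y1 + (by + (sy + 0.5) / samples) * bin_h
                    x = x1 + (bx + (sx + 0.5) / samples) * bin_w
                    acc += bilinear_sample(fmap, y, x)
            out_row.append(acc / (samples * samples))
        output.append(out_row)
    return output


# Horizontal ramp: the value equals the column index.
ramp = [[0.0, 1.0, 2.0, 3.0] for _ in range(4)]
center = bilinear_sample([[0.0, 1.0], [2.0, 3.0]], 0.5, 0.5)
aligned = roi_align(ramp, roi=(0.0, 0.5, 3.0, 2.5))
```

On the ramp, each output bin returns the exact value at its center even though the RoI boundaries fall between pixels, which is the misalignment that quantized RoI pooling would introduce.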

Mask RCNN computes the overall loss as the sum of the individual losses at each stage of the network, with one loss term assigned to each task. The classification head, the bounding box head, and the mask head all take the RoI Align features as inputs to perform classification, bounding box regression, and segmentation. The results from the FCN layer, which makes use of all the features, are fed to the soft-max layer to perform the categorization.
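In the original Mask RCNN formulation, this sum is the multi-task loss defined on each sampled RoI. It is written out below for reference; whether the improved model weights the terms differently is not stated in the text, so equal weights are an assumption.

```latex
% Multi-task loss per sampled RoI
L = L_{cls} + L_{box} + L_{mask}
```

Here \(L_{cls}\) is the classification loss, \(L_{box}\) is the bounding box regression loss, and \(L_{mask}\) is the average per-pixel binary cross-entropy computed on the mask predicted for the ground-truth class.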

3.3.4 Sobel edge detection algorithm (SED)

The SED algorithm estimates the gradient of an image's gray scale using a discrete differential operator; the higher the gradient, the more likely an edge is present. The Sobel operator can enhance surface texture and shape features while reducing background noise interference, because it smooths the filtered image. The Sobel method uses two 3 × 3 kernels, which are convolved with the image from top to bottom along the y-axis and from left to right along the z-axis. To estimate the horizontal and vertical brightness differences, \({S}_{y}\) and \({S}_{x}\) denote the gray values of the horizontal and vertical edge detection of the image, respectively. If the gray value at the image coordinate (y, z) is f(y, z), the grayscale values are determined as follows:

The estimated horizontal and vertical grayscale values at each pixel are squared and summed, and the square root of the sum gives the estimated gradient magnitude M; the grayscale gradient direction q at each point is obtained from their ratio.

If the gradient magnitude M exceeds a certain threshold, the point (y, z) is considered an edge point.
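The Sobel steps described above can be sketched with the standard 3 × 3 kernels. The threshold value, the variable names, and the toy image are assumptions for illustration; `g_h` and `g_v` play the role of the horizontal and vertical responses, and border pixels are simply left at zero.

```python
import math

# Standard Sobel kernels: KERNEL_H responds to horizontal intensity changes
# (vertical edges), KERNEL_V to vertical intensity changes (horizontal edges).
KERNEL_H = [[-1, 0, 1],
            [-2, 0, 2],
            [-1, 0, 1]]
KERNEL_V = [[-1, -2, -1],
            [0, 0, 0],
            [1, 2, 1]]


def sobel_edges(image, threshold):
    """Return gradient magnitude M, direction q, and a binary edge map."""
    rows, cols = len(image), len(image[0])
    magnitude = [[0.0] * cols for _ in range(rows)]
    direction = [[0.0] * cols for _ in range(rows)]
    edges = [[0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            g_h = sum(KERNEL_H[i][j] * image[r + i - 1][c + j - 1]
                      for i in range(3) for j in range(3))
            g_v = sum(KERNEL_V[i][j] * image[r + i - 1][c + j - 1]
                      for i in range(3) for j in range(3))
            m = math.hypot(g_h, g_v)          # sqrt(g_h^2 + g_v^2)
            magnitude[r][c] = m
            direction[r][c] = math.atan2(g_v, g_h)
            edges[r][c] = 1 if m > threshold else 0
    return magnitude, direction, edges


# Toy image with a vertical step edge between columns 2 and 3.
step = [[0, 0, 0, 10, 10, 10] for _ in range(5)]
M, q, edge_map = sobel_edges(step, threshold=20)
```

On the step image, only the two columns adjacent to the intensity jump exceed the threshold, so the edge map marks exactly the boundary between the dark and bright regions.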

4 Results and discussion

This section evaluates the proposed IM-RCNN using several metrics, including recall, accuracy, specificity, and precision. The efficiency of the proposed model is assessed from a variety of perspectives. The block diagram of fabric classification is shown in Fig. 6.

The figure displays the six different kinds of common defects, namely Stain, Hole, Carrying, Knot, Broken end, and Netting multiple, based on the segmentation region of the fabric using the Sobel edge detection algorithm.

4.1 Performance analysis

The following statistical metrics, such as accuracy, precision, recall, specificity, and F1 score, are used to evaluate the success of the classification technique.

where TP and TN represent true-positive and true-negative results, and FP and FN represent false-positive and false-negative results.
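The five metrics follow directly from the four confusion-matrix counts. The sketch below uses their standard definitions; the counts in the usage example are hypothetical and are not taken from the paper's tables.

```python
def safe_div(numerator, denominator):
    """Return 0.0 instead of raising when a denominator is zero."""
    return numerator / denominator if denominator else 0.0


def classification_metrics(tp, fp, tn, fn):
    """Standard metrics computed from confusion-matrix counts."""
    precision = safe_div(tp, tp + fp)
    recall = safe_div(tp, tp + fn)          # also the true-positive rate
    specificity = safe_div(tn, tn + fp)     # 1 - false-positive rate
    return {
        "accuracy": safe_div(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": safe_div(2 * precision * recall, precision + recall),
    }


# Hypothetical counts for one defect class treated as the positive class.
metrics = classification_metrics(tp=90, fp=10, tn=85, fn=15)
```

For a six-class problem, these counts are typically obtained per class in a one-versus-rest fashion and the resulting metrics are averaged; the true-positive rate and false-positive rate used for an ROC curve are the recall and one minus the specificity.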

Table 1 provides an illustration of the classification of different classes in fabric defect detection with specific parameters. The accuracy, F1 score, precision, recall, and specificity of the proposed improved Mask RCNN are 95.98%, 96.8%, 95.56%, 94.5%, and 95.29%, respectively. Figure 7 shows the six-class categorization receiver operating characteristic (ROC) curve that was produced. The dataset that can be evaluated using the TPR and FPR parameters yields a higher accuracy of 0.978 for the proposed improved Mask RCNN. The classes of fabric defects are accurately classified by the ghost net model with high accuracy.

In order to achieve the highest level of testing accuracy, the study first calculated the number of training epochs required. The classification accuracy of the IM-RCNN was, according to the results, attained at 10 and 100 epochs with a testing accuracy of 95.98%, respectively. Figure 8 depicts the training and testing accuracy graph, and Fig. 9 shows the loss graph.

4.2 Comparative analysis

In this section, the proposed model is compared with current deep learning models. Precision, specificity, recall, accuracy, and F1 score are used to compare the performance of the current approaches and to show that the proposed strategy is more effective. Table 2 shows the comparison of the proposed model with deep neural networks such as MobileNet-2, U-Net, LeNet-5, and DenseNet.

Compared with MobileNet-2, U-Net, LeNet-5, and DenseNet, respectively, the proposed IM-RCNN improves overall specificity by 7.14%, 1.36%, 4.89%, and 3.47%; precision by 11.88%, 6.96%, 9.61%, and 8.77%; recall by 12.19%, 7.21%, 12.12%, and 10.61%; F1 score by 7.06%, 4.74%, 6.03%, and 7.59%; and accuracy by 6.45%, 1.66%, 4.70%, and 3.86%. The effectiveness of the proposed network is evaluated on these parameters, as shown in Fig. 10.

In the experimental analysis, the proposed model was also compared with existing methods on the HKBU fabric image database. According to Table 3, the proposed IM-RCNN improves overall accuracy by 6.26%, 7.4%, and 11.4% over U-Net, CNN, and DCNN, respectively, and thus yields higher accuracy than the existing methods.

These results show that the proposed fabric defect detection technique classifies the six classes, Stain, Hole, Carrying, Knot, Broken end, and Netting multiple, with higher accuracy.

5 Conclusion

In this study, a deep learning-based IM-RCNN was proposed for sequentially identifying image defects in patterned fabrics. First, images are gathered from the HKBU database and pre-processed using CLAHE to eliminate noise artifacts. The Sobel edge detection algorithm is then used to extract relevant features from the pre-processed images. Lastly, the proposed IM-RCNN classifies defective fabric into six classes, namely Stain, Hole, Carrying, Knot, Broken end, and Netting multiple, based on the segmented region of the fabric. The experimental findings on the HKBU dataset show that the proposed technique achieves better detection results than other approaches. The proposed IM-RCNN improves overall accuracy by 6.45%, 1.66%, 4.70%, and 3.86% over MobileNet-2, U-Net, LeNet-5, and DenseNet, respectively. In future work, we will collect more fabric images from the textile sector and create sizable databases to validate the proposed technique for practical applications.

Data availability

Data sharing is not applicable to this article as no new data were created or analyzed in this Research.

References

Liu, J., Wang, C., Su, H., Du, B., Tao, D.: Multistage GAN for fabric defect detection. IEEE Trans. Image Process. 29, 3388–3400 (2019). https://doi.org/10.1109/TIP.2019.2959741

Jing, J., Wang, Z., Rätsch, M., Zhang, H.: Mobile-Unet: an efficient convolutional neural network for fabric defect detection. Text. Res. J. 92(1–2), 30–42 (2022). https://doi.org/10.1177/0040517520928604

Ouyang, W., Xu, B., Hou, J., Yuan, X.: Fabric defect detection using activation layer embedded convolutional neural network. IEEE Access 7, 70130–70140 (2019). https://doi.org/10.1109/ACCESS.2019.2913620

Jing, J., Zhuo, D., Zhang, H., Liang, Y., Zheng, M.: Fabric defect detection using the improved YOLOv3 model. J. Eng. Fibers Fabr. 15, 1558925020908268 (2020). https://doi.org/10.1177/1558925020908268

Jing, J.F., Ma, H., Zhang, H.H.: Automatic fabric defect detection using a deep convolutional neural network. Color. Technol. 135(3), 213–223 (2019). https://doi.org/10.1111/cote.12394

Huang, Y., Xiang, Z.: RPDNet: automatic fabric defect detection based on a convolutional neural network and repeated pattern analysis. Sensors 22(16), 6226 (2022). https://doi.org/10.3390/s22166226

Liu, Q., Wang, C., Li, Y., Gao, M., Li, J.: A fabric defect detection method based on deep learning. IEEE Access 10, 4284–4296 (2022). https://doi.org/10.1109/ACCESS.2021.3140118

Lu, Z., Zhang, Y., Xu, H., Chen, H.: Fabric defect detection via a spatial cloze strategy. Text. Res. J. 93(7–8), 1612–1627 (2023). https://doi.org/10.1177/00405175221135205

Mei, S., Wang, Y., Wen, G.: Automatic fabric defect detection with a multi-scale convolutional denoising autoencoder network model. Sensors 18(4), 1064 (2018). https://doi.org/10.3390/s18041064

Silvestre-Blanes, J., Albero, T., Miralles, I., Pérez-Llorens, R., Moreno, J.: A public fabric database for defect detection methods and results. Autex Res. J. 19(4), 363–374 (2019). https://doi.org/10.2478/aut-2019-0035

Zhou, H., Chen, Y., Troendle, D., Jang, B.: One-class model for fabric defect detection. arXiv preprint arXiv:2204.09648 (2022). https://doi.org/10.48550/arXiv.2204.09648

Talu, M.F., Hanbay, K., Varjovi, M.H.: CNN-based fabric defect detection system on loom fabric inspection. Text. Appar. 32(3), 208–219 (2022). https://doi.org/10.32710/tekstilvekonfeksiyon.1032529

Chakraborty, S., Moore, M., Parrillo-Chapman, L.: Automatic defect detection for fabric printing using a deep convolutional neural network. Int. J. Fash. Des., Technol. Educ. 15(2), 142–157 (2022). https://doi.org/10.1080/17543266.2021.1925355

Fang, B., Long, X., Sun, F., Liu, H., Zhang, S., Fang, C.: Tactile-based fabric defect detection using convolutional neural network with attention mechanism. IEEE Trans. Instrum. Meas. 71, 1–9 (2022)

Dakshina, D.S., Jayapriya, P., Kala, R.: Saree texture analysis and classification via deep learning framework. Int. J. Data Sci. Artifi. Intell. 01(01), 20–25 (2023)

Huang, Y., Jing, J., Wang, Z.: Fabric defect segmentation method based on deep learning. IEEE Trans. Instrum. Meas. 70, 1–15 (2021). https://doi.org/10.1109/TIM.2020.3047190

Voronin, V., Sizyakin, R., Zhdanova, M., Semenishchev, E., Bezuglov, D., Zelemskii, A.: Automated visual inspection of fabric image using deep learning approach for defect detection. In Automated Visual Inspection and Machine Vision IV, vol.11787, pp. 174–180 (2021). https://doi.org/10.1117/12.2592872

Rong-qiang, L., Ming-hui, L., Jia-chen, S., Yi-bin, L.: Fabric defect detection method based on improved U-Net. In Journal of Physics: Conference Series, vol. 1948(1), pp. 012160 (2021). https://doi.org/10.1088/1742-6596/1948/1/012160

Jun, X., Wang, J., Zhou, J., Meng, S., Pan, R., Gao, W.: Fabric defect detection based on a deep convolutional neural network using a two-stage strategy. Text. Res. J. 91(1–2), 130–142 (2021). https://doi.org/10.1177/0040517520935984

Wu, J., Le, J., Xiao, Z., Zhang, F., Geng, L., Liu, Y., Wang, W.: Automatic fabric defect detection using a wide-and-light network. Appl. Intell. 51(7), 4945–4961 (2021). https://doi.org/10.1007/s10489-020-02084-6

Arora, P., Hanmandlu, M.: Detection of defects in fabrics using information set features in comparison with deep learning approaches. J. Text. Inst. 113(2), 266–272 (2022). https://doi.org/10.1080/00405000.2020.1870326

Li, F., Li, F.: Bag of tricks for fabric defect detection based on cascade R-CNN. Text. Res. J. 91(5–6), 599–612 (2021). https://doi.org/10.1177/0040517520955229

Shi, B., Liang, J., Di, L., Chen, C., Hou, Z.: Fabric defect detection via low-rank decomposition with gradient information and structured graph algorithm. Inf. Sci. 546, 608–626 (2021). https://doi.org/10.1016/j.ins.2020.08.100

Zhou, T., Zhang, J., Su, H., Zou, W., Zhang, B.: EDDs: a series of efficient defect detectors for fabric quality inspection. Measurement 172, 108885 (2021). https://doi.org/10.1016/j.measurement.2020.108885

Acknowledgements

The authors would like to thank the reviewers for all of their careful, constructive, and insightful comments in relation to this work.

Funding

No Financial support.

Contributions

The authors confirm contribution to the paper as follows: GR and RK were involved in study conception and design, data collection, analysis and interpretation of results, and draft manuscript preparation. All authors reviewed the results and approved the final version of the manuscript.

Ethics declarations

Conflict of interest

This paper has no conflict of interest for publishing.

Ethical approval

My research guide reviewed and ethically approved this manuscript for publishing in this Journal.

Human and animal rights

This article does not contain any studies with human or animal subjects performed by any of the authors.

Informed consent

I certify that I have explained the nature and purpose of this study to the above-named individual, and I have discussed the potential benefits of this study participation. The questions the individual had about this study have been answered, and we will always be available to address future questions.

About this article

Cite this article

Revathy, G., Kalaivani, R. Fabric defect detection and classification via deep learning-based improved Mask RCNN. SIViP 18, 2183–2193 (2024). https://doi.org/10.1007/s11760-023-02884-6

DOI: https://doi.org/10.1007/s11760-023-02884-6