Abstract

For the physical problem of sparse system identification, this brief proposes a filter proportionate least mean square algorithm based on the arctangent framework (FP-ALMS). The arctangent LMS (ALMS) algorithm is highly robust against impulsive noise, while the filter proportionate concept, when combined with the ALMS, exploits the sparsity of the system to accelerate convergence. As a result, the FP-ALMS algorithm offers both robustness and fast convergence in impulsive environments. Simulation results demonstrate that the proposed FP-ALMS algorithm outperforms existing algorithms in terms of robustness to impulsive noise, convergence rate, and steady-state error for sparse system identification.



1 Introduction

Unknown systems to be identified are often sparse: only a small portion of the coefficients of their impulse response are nonzero (dominant), while most of them are zero or nearly zero. Such systems arise in many real-world applications, including digital TV transmission channels [1], acoustic echo cancelers [2], and wireless multipath channels [3].

Conventional algorithms based on the gradient descent technique, such as the least mean square (LMS) [4], least mean square/fourth (LMS/F) [5], normalized least mean square (NLMS) [6], and least mean kurtosis (LMK) [7] algorithms, were developed for a variety of applications. Lyapunov adaptive filtering (LA) algorithms [8, 9] were later proposed to overcome drawbacks of gradient descent-based techniques, such as the local minima problem and slow convergence; however, these algorithms do not exploit the sparsity of the system. Researchers have therefore developed adaptive algorithms tailored to the identification of sparse systems [10,11,12,13,14,15,16,17]. A system is said to be sparse when it has many more small-magnitude coefficients than large-magnitude ones. Sparsity-aware adaptive algorithms fall into two general categories: sparsity norm-based algorithms and proportionate-type algorithms [13]. The sparsity norm-based approach adds a sparsity-promoting regularization term that pushes the small coefficients toward zero. The proportionate-type approach accelerates convergence by assigning each coefficient a gain proportional to its magnitude, so that active (large) coefficients receive larger gains than inactive ones. This study adopts the proportionate-type approach because the regularization factor of sparsity norm-based algorithms is difficult to choose and because such algorithms cannot handle systems that are not exactly sparse but still have a reasonably sparse structure.

Most proportionate adaptive algorithms rely on the Gaussian assumption and are built on the mean square error (MSE) criterion. In [11], a filter proportionate normalized least mean square (FPNLMS) algorithm with a variable step size was proposed to adapt sparse systems for a compressed input signal, yielding better performance than existing algorithms such as the PNLMS [12], improved PNLMS (IPNLMS) [14], and µ-law PNLMS (MPNLMS) [15] algorithms. In practice, however, the noise is frequently impulsive, and conventional methods struggle in this situation. The maximum correntropy criterion (MCC), a robust optimality criterion, has recently been used effectively in adaptive filtering [18, 19]. Because correntropy is robust to outliers for a suitable kernel width, the MCC cost is an effective choice in impulsive interference environments. To counter impulsive noise in sparse systems, proportionate-type adaptive filtering approaches based on the MCC [20] and the proportionate minimum error entropy (MEE) algorithm [21] were developed. An improved proportionate MCC (IP-MCC) algorithm was presented to identify systems with time-varying sparsity under impulsive noise [22]; however, the double-sum operations and exponential terms give these techniques a high computational cost. Adaptive filters based on the maximum versoria criterion (MVC) have recently attracted considerable interest because they perform better than competing approaches under non-Gaussian noise while requiring fewer computations [23]. The proportionate MVC (P-MVC) algorithm subsequently combined the robustness of the MVC to impulsive noise with the proportionate concept to exploit sparsity in sparse system identification [24].

The robustness of adaptive algorithms can be enhanced by exploiting the saturation property of nonlinearities, such as the arctangent, applied to the error. Based on this property of the arctangent function, a cost function framework was introduced in which a conventional cost function is embedded within an arctangent. This led to a family of robust arctangent-based algorithms, such as the arctangent LMS (ALMS) and arctangent least mean fourth (ALMF) [25] algorithms and the arctangent LMS/F (ALMS/F) algorithm [26], for system identification. However, these algorithms are sparsity-agnostic.

In response to the facts and drawbacks listed above, this brief applies the filter proportionate (FP) adaptation concept to the ALMS algorithm, yielding the filter proportionate arctangent LMS (FP-ALMS) algorithm. The key contributions of the paper are as follows:

1. FP adaptation ideas are applied to the ALMS algorithm, resulting in the FP-ALMS algorithm, which exploits the sparseness of the system.
2. The effect of the proportionate factor on the steady-state excess mean square error (EMSE) and on the stability bound of the step size is investigated.
3. The steady-state EMSE of the FP-ALMS algorithm is derived, and its computational complexity is analyzed.
4. The performance of the proposed algorithm is tested on several sparse systems in the presence of impulsive noise.

The rest of the brief is organized as follows. Sect. 2 develops the FP-ALMS algorithm. Sect. 3 analyzes its performance. Sect. 4 analyzes its steady-state performance and computational complexity. Sect. 5 presents the simulation results, and Sect. 6 concludes the brief.

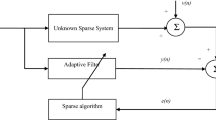

2 Proposed FP-ALMS algorithm

Consider an unknown sparse system with input vector \({\varvec{x}}\left( k \right) = [x\left( k \right), x\left( {k - 1} \right), \ldots , x\left( {k - M + 1} \right)]^{T}\) of length \(M\). The unknown system is represented by the \(M \times 1\) weight vector \({\varvec{w}}_{0}\), and the desired signal is \(d\left( k \right) = {\varvec{w}}_{0}^{T} {\varvec{x}}\left( k \right) + \eta \left( k \right)\), where \(\eta \left( k \right)\) is the noise signal. The output error \(e\left( k \right)\) is

\[ e(k) = d(k) - \hat{y}(k) \tag{1} \]

where \(\hat{y}\left( k \right) = \hat{\user2{w}}^{T} \left( k \right){\varvec{x}}\left( k \right)\) is the output of the adaptive filter and \(\hat{\user2{w}}\left( k \right) = [\hat{w}_{1} \left( k \right), \hat{w}_{2} \left( k \right), \ldots , \hat{w}_{M} \left( k \right)]^{T}\) is its weight vector. The cost function of the ALMS algorithm is given as [25]

\[ J(k) = \tan^{-1}\left[ \gamma \zeta(k) \right] \tag{2} \]

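where \(\zeta \left( k \right) = E\left[ {e^{2} \left( k \right)} \right]\) is the cost function of the LMS algorithm [4] and \(\gamma > 0\) controls the steepness of the arctangent framework. Using the gradient descent technique with the instantaneous estimate \(\zeta \left( k \right) \approx e^{2} \left( k \right)\), the weight update of the ALMS algorithm is written as [25]

\[ \hat{\user2{w}}(k+1) = \hat{\user2{w}}(k) - \frac{\mu^{\prime}}{2}\frac{\partial J(k)}{\partial \hat{\user2{w}}(k)} \tag{3} \]

where \(\mu^{\prime }\) is the step size. Combining Eqs. (1) and (3) yields

\[ \hat{\user2{w}}(k+1) = \hat{\user2{w}}(k) + \mu \frac{e(k)\varvec{x}(k)}{1 + \gamma^{2} e^{4}(k)} \tag{4} \]

with the overall step-size factor \(\mu = \mu^{\prime } \gamma\). Equation (4) can be written as

\[ \hat{\user2{w}}(k+1) = \hat{\user2{w}}(k) + \mu f\left(e(k)\right)\varvec{x}(k) \tag{5} \]

where the nonlinear function \(f\left( {e\left( k \right)} \right)\) is

\[ f\left(e(k)\right) = \frac{e(k)}{1 + \gamma^{2} e^{4}(k)} \tag{6} \]
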
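The saturating behavior of the nonlinearity in Eq. (6) can be checked numerically. The short Python sketch below, which assumes real-valued scalar errors and uses a hypothetical helper name `alms_nonlinearity`, shows that \(f(e)\) tracks \(e\) for small errors (LMS-like behavior) and decays toward zero for large, impulsive errors.

```python
def alms_nonlinearity(e: float, gamma: float) -> float:
    """Error nonlinearity of Eq. (6): f(e) = e / (1 + gamma^2 * e^4)."""
    return e / (1.0 + gamma**2 * e**4)


if __name__ == "__main__":
    gamma = 0.6
    for e in (0.01, 0.1, 1.0, 10.0, 100.0):
        # Small |e|: f(e) is close to e. Large |e|: f(e) decays roughly like 1/(gamma^2 e^3).
        print(f"e = {e:7.2f}  f(e) = {alms_nonlinearity(e, gamma):.6g}")
```

With \(\gamma = 0\) the function returns \(e\) unchanged, which matches the statement that the ALMS algorithm reduces to the LMS algorithm as \(\gamma \to 0\).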
For large values of \(\left| {e\left( k \right)} \right|\), the nonlinear function \(f\left( {e\left( k \right)} \right)\) tends to 0, which makes the ALMS algorithm robust to impulsive noise. When \(\gamma \to 0\), the ALMS algorithm reduces to the LMS algorithm, whereas when \(\gamma \to \infty\), \(f\left( {e\left( k \right)} \right)\) approaches 0, which slows convergence. Using filter proportionate adaptation, the ALMS algorithm can exploit the sparsity of the system by multiplying the weight update term by a proportionate gain matrix \({\varvec{Q}}\left( k \right)\), thereby accelerating convergence. The weight update of the proposed filter proportionate ALMS (FP-ALMS) algorithm is

\[ \hat{\user2{w}}(k+1) = \hat{\user2{w}}(k) + \mu \varvec{Q}(k) f\left(e(k)\right)\varvec{x}(k) \tag{7} \]

where \({\varvec{Q}}\left( k \right) = {\text{diag}}\left( {q_{1} \left( k \right),q_{2} \left( k \right), \ldots ,q_{M} \left( k \right)} \right)\). The gain factors are given as [14]

\[ q_{l}(k) = \frac{1 - \theta}{2M} + (1 + \theta)\frac{\left| \hat{w}_{l}(k) \right|}{2\left\| \hat{\user2{w}}(k) \right\|_{1} + \varepsilon} \tag{8} \]

where \(l = 1,2, \ldots ,M\), \(- 1 \le \theta \le 1\), and \(\varepsilon\) is a small positive constant that prevents division by zero in Eq. (8). The step size in Eq. (7) is adapted according to the filter coefficients and is written as

where

Because all filter coefficients are zero initially, \(l_{\infty } \left( k \right)\) is slightly modified to prevent the step size from stalling and is written as

where \(\delta\) is a small positive value that has no effect after the first iteration [11].
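A minimal plain-Python sketch of one possible FP-ALMS implementation, combining Eqs. (1) and (6) to (8), is given below. It assumes a fixed step size \(\mu\); the step-size adaptation of Eqs. (9) to (11) is not reproduced. The function names (`ipnlms_gains`, `fp_alms`, `run_demo`) and the toy two-tap sparse system are hypothetical and only illustrate the update structure.

```python
import random


def ipnlms_gains(w_hat, theta, eps=1e-2):
    """Proportionate gains q_l(k) following the rule of Eq. (8)."""
    M = len(w_hat)
    l1 = sum(abs(w) for w in w_hat)
    return [(1.0 - theta) / (2 * M) + (1.0 + theta) * abs(w) / (2.0 * l1 + eps)
            for w in w_hat]


def fp_alms(x, d, M, mu, gamma, theta, eps=1e-2):
    """FP-ALMS with a FIXED step size mu (assumption: Eqs. (9)-(11) omitted)."""
    w_hat = [0.0] * M
    for k in range(M - 1, len(x)):
        xk = [x[k - i] for i in range(M)]                    # x(k) = [x(k), ..., x(k-M+1)]
        e = d[k] - sum(w * v for w, v in zip(w_hat, xk))     # Eq. (1)
        f = e / (1.0 + gamma**2 * e**4)                       # Eq. (6)
        q = ipnlms_gains(w_hat, theta, eps)                   # Eq. (8)
        w_hat = [w + mu * qi * f * v for w, qi, v in zip(w_hat, q, xk)]  # Eq. (7)
    return w_hat


def run_demo(seed=0, M=16, N=4000, P=0.05):
    """Identify a hypothetical 2-tap sparse system in Gaussian + impulsive noise.

    Returns the final squared deviation ||w_hat - w0||^2.
    """
    rng = random.Random(seed)
    w0 = [0.0] * M
    w0[2], w0[9] = 1.0, -0.5
    x = [rng.gauss(0.0, 1.0) for _ in range(N)]
    d = []
    for k in range(N):
        y = sum(w0[i] * x[k - i] for i in range(M) if k - i >= 0)
        noise = rng.gauss(0.0, 0.1)                          # variance 0.01
        if rng.random() < P:
            noise += rng.gauss(0.0, 1000.0 ** 0.5)           # Bernoulli-Gaussian impulse
        d.append(y + noise)
    w_hat = fp_alms(x, d, M, mu=0.2, gamma=0.6, theta=0.0)
    return sum((a - b) ** 2 for a, b in zip(w_hat, w0))


if __name__ == "__main__":
    print(f"final squared deviation: {run_demo():.2e}")
```

With \(\varepsilon = 0\) and a nonzero weight vector, the gains of Eq. (8) sum to one, so the proportionate matrix redistributes a fixed adaptation budget among the coefficients rather than enlarging it.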

3 Performance analysis

This section analyzes the performance of the proposed FP-ALMS algorithm. Using the transformed domain model [24], it derives the step-size bound required for convergence and examines how the proportionate terms affect the steady-state behavior. The analysis uses the transformation matrix \({\varvec{Q}}^{\frac{1}{2}} \left( k \right)\) given as

\[ \varvec{Q}^{\frac{1}{2}}(k) = \text{diag}\left( \sqrt{q_{1}(k)}, \sqrt{q_{2}(k)}, \ldots, \sqrt{q_{M}(k)} \right) \tag{12} \]

together with the transformed input vector \({\varvec{x}}_{t} \left( k \right) = {\varvec{Q}}^{\frac{1}{2}} \left( k \right){\varvec{x}}\left( k \right)\) and the transformed filter coefficients \(\hat{\user2{w}}_{t} \left( k \right) = {\varvec{Q}}^{{ - \frac{1}{2}}} \left( k \right)\hat{\user2{w}}\left( k \right)\). Because \({\varvec{Q}}^{\frac{1}{2}} \left( k \right)\) is diagonal and hence symmetric, \(\hat{\user2{w}}_{t}^{T} \left( k \right){\varvec{x}}_{t} \left( k \right) = \hat{\user2{w}}^{T} \left( k \right){\varvec{x}}\left( k \right)\). Defining the weight error vector as \(\tilde{\user2{w}}\left( k \right) = {\varvec{w}}_{0} - \hat{\user2{w}}\left( k \right)\), its transformed counterpart is \(\tilde{\user2{w}}_{t} \left( k \right) = {\varvec{Q}}^{{ - \frac{1}{2}}} \left( k \right)\left( {{\varvec{w}}_{0} - \hat{\user2{w}}\left( k \right)} \right) = {\varvec{Q}}^{{ - \frac{1}{2}}} \left( k \right){\varvec{w}}_{0} - \hat{\user2{w}}_{t} \left( k \right)\). Therefore, \(e\left( k \right)\) can be written as

\[ e(k) = e_{a}(k) + \eta(k) \tag{13} \]

where \(e_{a} \left( k \right) = \tilde{\user2{w}}^{T} \left( k \right){\varvec{x}}\left( k \right) = \tilde{\user2{w}}_{t}^{T} \left( k \right){\varvec{Q}}^{\frac{1}{2}} \left( k \right){\varvec{Q}}^{{ - \frac{1}{2}}} \left( k \right){\varvec{x}}_{t} \left( k \right) = \tilde{\user2{w}}_{t}^{T} \left( k \right){\varvec{x}}_{t} \left( k \right)\) is the a priori error. Expressing Eq. (7) in terms of the transformed weight error vector \(\tilde{\user2{w}}_{t} \left( k \right)\) and assuming, as in [22, 24], that \({\varvec{Q}}\left( k \right)\) varies slowly, we obtain

\[ \tilde{\user2{w}}_{t}(k+1) = \tilde{\user2{w}}_{t}(k) - \mu f\left(e(k)\right)\varvec{x}_{t}(k) \tag{14} \]

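The transformed-domain identities above can be verified numerically for a fixed diagonal \({\varvec{Q}}\). The plain-Python sketch below, using arbitrary random values and a hypothetical function name `check_transform`, checks that \(\hat{\user2{w}}_{t}^{T} {\varvec{x}}_{t} = \hat{\user2{w}}^{T} {\varvec{x}}\), that \(e_{a} = \tilde{\user2{w}}_{t}^{T} {\varvec{x}}_{t}\), that Eq. (13) holds, and that one FP-ALMS step of Eq. (7) matches Eq. (14) when \({\varvec{Q}}\) is held constant.

```python
import math
import random


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def check_transform(M=8, mu=0.1, gamma=0.6, seed=1):
    """Return the largest absolute deviation among the checked identities."""
    rng = random.Random(seed)
    q = [rng.uniform(0.01, 1.0) for _ in range(M)]           # diagonal of Q, held fixed
    x = [rng.gauss(0.0, 1.0) for _ in range(M)]
    w0 = [rng.gauss(0.0, 1.0) for _ in range(M)]
    w_hat = [rng.gauss(0.0, 1.0) for _ in range(M)]
    eta = rng.gauss(0.0, 0.1)

    x_t = [math.sqrt(qi) * xi for qi, xi in zip(q, x)]         # x_t = Q^{1/2} x
    w_hat_t = [wi / math.sqrt(qi) for qi, wi in zip(q, w_hat)]  # w_hat_t = Q^{-1/2} w_hat
    w_til_t = [(a - b) / math.sqrt(qi) for qi, a, b in zip(q, w0, w_hat)]

    e = dot(w0, x) + eta - dot(w_hat, x)                       # Eq. (1)
    e_a = dot([a - b for a, b in zip(w0, w_hat)], x)            # a priori error
    f = e / (1.0 + gamma**2 * e**4)                            # Eq. (6)

    w_next = [wi + mu * qi * f * xi for wi, qi, xi in zip(w_hat, q, x)]  # Eq. (7)
    w_til_t_next = [(a - b) / math.sqrt(qi) for qi, a, b in zip(q, w0, w_next)]
    pred = [wt - mu * f * xt for wt, xt in zip(w_til_t, x_t)]  # Eq. (14)

    return max(abs(dot(w_hat_t, x_t) - dot(w_hat, x)),
               abs(e_a - dot(w_til_t, x_t)),
               abs(e - (e_a + eta)),
               max(abs(a - b) for a, b in zip(w_til_t_next, pred)))


if __name__ == "__main__":
    print(f"max deviation: {check_transform():.1e}")
```

Only rounding-level deviations are expected; with a time-varying \({\varvec{Q}}\left( k \right)\), Eq. (14) holds only approximately, which is why the slow-variation assumption is needed.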
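The mean square performance of the FP-ALMS algorithm is evaluated using the energy conservation relation, obtained by taking the squared norm and the expectation of both sides of Eq. (14):

\[ E\left[ \left\| \tilde{\user2{w}}_{t}(k+1) \right\|^{2} \right] = E\left[ \left\| \tilde{\user2{w}}_{t}(k) \right\|^{2} \right] - 2\mu E\left[ \tilde{\user2{w}}_{t}^{T}(k)\varvec{x}_{t}(k) f\left(e(k)\right) \right] + \mu^{2} E\left[ \left\| \varvec{x}_{t}(k) \right\|^{2} f^{2}\left(e(k)\right) \right] \tag{15} \]
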
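Substituting \(e_{a} \left( k \right) = \tilde{\user2{w}}_{t}^{T} \left( k \right){\varvec{x}}_{t} \left( k \right)\) into Eq. (15) and assuming that \(\left\| {{\varvec{x}}_{t} \left( k \right)} \right\|^{2}\) is asymptotically independent of \(f^{2} \left( {e\left( k \right)} \right)\), we obtain

\[ E\left[ \left\| \tilde{\user2{w}}_{t}(k+1) \right\|^{2} \right] = E\left[ \left\| \tilde{\user2{w}}_{t}(k) \right\|^{2} \right] - 2\mu E\left[ e_{a}(k) f\left(e(k)\right) \right] + \mu^{2} E\left[ \left\| \varvec{x}_{t}(k) \right\|^{2} \right] E\left[ f^{2}\left(e(k)\right) \right] \tag{16} \]
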
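At steady state, i.e., as \(k \to \infty\),

\[ \lim_{k \to \infty} E\left[ \left\| \tilde{\user2{w}}_{t}(k+1) \right\|^{2} \right] = \lim_{k \to \infty} E\left[ \left\| \tilde{\user2{w}}_{t}(k) \right\|^{2} \right] \tag{17} \]
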
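Thus, for the mean square weight error in Eq. (16) to be nonincreasing, the step size \(\mu\) must satisfy

\[ 0 < \mu \le \frac{2E\left[ e_{a}(k) f\left(e(k)\right) \right]}{E\left[ \left\| \varvec{x}_{t}(k) \right\|^{2} \right] E\left[ f^{2}\left(e(k)\right) \right]} \tag{18} \]
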
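Assuming that \({\varvec{Q}}\left( k \right)\) is independent of the input vector \({\varvec{x}}\left( k \right)\), we have

\[ E\left[ \left\| \varvec{x}_{t}(k) \right\|^{2} \right] = E\left[ \varvec{x}^{T}(k)\varvec{Q}(k)\varvec{x}(k) \right] = \text{Tr}\left( E\left[ \varvec{Q}^{\frac{1}{2}}(k)\varvec{R}_{x}\varvec{Q}^{\frac{1}{2}}(k) \right] \right) \]
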
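Defining \({\mathbf{S}}\left( k \right) = E\left[ {{\mathbf{Q}}^{\frac{1}{2}} \left( k \right){\varvec{R}}_{{\varvec{x}}} {\mathbf{Q}}^{\frac{1}{2}} \left( k \right)} \right]\), this becomes

\[ E\left[ \left\| \varvec{x}_{t}(k) \right\|^{2} \right] = \text{Tr}\left( \mathbf{S}(k) \right) \tag{19} \]
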
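where \({\varvec{R}}_{x} = E\left[ {{\varvec{x}}\left( k \right){\varvec{x}}^{T} \left( k \right)} \right]\) is the input autocorrelation matrix and \({\text{Tr}}\left( \cdot \right)\) is the trace operator. Substituting Eq. (19) into Eq. (18) gives

\[ 0 < \mu \le \frac{2E\left[ e_{a}(k) f\left(e(k)\right) \right]}{\text{Tr}\left( \mathbf{S}(k) \right) E\left[ f^{2}\left(e(k)\right) \right]} \tag{20} \]
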
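Equation (20) shows that the stability bound coincides with that of the ALMS algorithm when \({\varvec{Q}}\left( k \right) = {\varvec{I}}\), since \({\mathbf{S}}\left( k \right)\) then reduces to \({\varvec{R}}_{x}\). Equation (20) can also be written compactly as

\[ 0 < \mu \le \mu_{m} \tag{21} \]

where \(\mu_{m}\), the right-hand side of Eq. (20), is the upper limit of the step size.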
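To illustrate how the proportionate gains enter Eqs. (19) to (21), the sketch below computes \({\text{Tr}}\left( {{\varvec{Q}}^{\frac{1}{2}} {\varvec{R}}_{x} {\varvec{Q}}^{\frac{1}{2}} } \right)\) for a deterministic diagonal \({\varvec{Q}}\) by explicit matrix products. It assumes \({\varvec{Q}}\) is fixed rather than averaged over its distribution, and the helper names are hypothetical. Because \({\varvec{Q}}\) is diagonal, the result equals \(\sum_{l} q_{l} r_{ll}\), so for \({\varvec{Q}} = {\varvec{I}}\) it reduces to \({\text{Tr}}\left( {{\varvec{R}}_{x} } \right)\), the ALMS case.

```python
import math


def matmul(A, B):
    """Plain-Python product of two list-of-lists matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def trace_S(q, R):
    """Tr(Q^{1/2} R Q^{1/2}) for a deterministic diagonal Q = diag(q)."""
    M = len(q)
    Qh = [[math.sqrt(q[i]) if i == j else 0.0 for j in range(M)] for i in range(M)]
    S = matmul(matmul(Qh, R), Qh)
    return sum(S[i][i] for i in range(M))


if __name__ == "__main__":
    M, var_x = 16, 1.0
    R = [[var_x if i == j else 0.0 for j in range(M)] for i in range(M)]  # white input
    print(trace_S([1.0] * M, R))       # Q = I (ALMS case): Tr(R_x) = 16.0
    print(trace_S([1.0 / M] * M, R))   # gains summing to one: 1.0
```

If the expectation terms in Eq. (20) were the same for both algorithms, the FP-ALMS bound would exceed the ALMS bound by the factor \({\text{Tr}}\left( {{\varvec{R}}_{x} } \right)/{\text{Tr}}\left( {{\mathbf{S}}\left( k \right)} \right)\), which is \(M\) in this white-input example.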

4 Steady-state performance

This section investigates the steady-state EMSE under impulsive noise and the impact of the proportionate gain factor on the steady-state EMSE. The steady-state EMSE is defined as \(\mathop {\lim }\limits_{k \to \infty } E\left[ {e_{a}^{2} \left( k \right)} \right]\). Substituting Eq. (17) into Eq. (16) and using Eq. (19), we get

\[ 2E\left[ e_{a}(k) f\left(e(k)\right) \right] = \mu\, \text{Tr}\left( \mathbf{S}(k) \right) E\left[ f^{2}\left(e(k)\right) \right], \quad k \to \infty \tag{22} \]

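The nonlinear error function is expanded in a Taylor series around \(\eta \left( k \right)\) as

\[ f\left(e(k)\right) = f\left(\eta(k)\right) + f^{\prime}\left(\eta(k)\right) e_{a}(k) + \frac{1}{2} f^{\prime\prime}\left(\eta(k)\right) e_{a}^{2}(k) + O\left(e_{a}^{3}(k)\right) \tag{23} \]

where \(O\left( {e_{a}^{3} \left( k \right)} \right)\) denotes the third- and higher-order terms of \(e_{a} \left( k \right)\), and \(f^{\prime}\left( {\eta \left( k \right)} \right)\) and \(f^{\prime\prime}\left( {\eta \left( k \right)} \right)\) are the first- and second-order derivatives of \(f\left( {\eta \left( k \right)} \right)\), obtained from Eq. (6) as

\[ f^{\prime}\left(\eta(k)\right) = \frac{1 - 3\gamma^{2}\eta^{4}(k)}{\left(1 + \gamma^{2}\eta^{4}(k)\right)^{2}} \tag{24} \]

and

\[ f^{\prime\prime}\left(\eta(k)\right) = \frac{4\gamma^{2}\eta^{3}(k)\left(3\gamma^{2}\eta^{4}(k) - 5\right)}{\left(1 + \gamma^{2}\eta^{4}(k)\right)^{3}} \tag{25} \]
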
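Eqs. (24) and (25) can be sanity-checked against central finite differences of Eq. (6). The sketch below, with hypothetical function names, reports the largest discrepancy over a grid of error values between -4 and 4; a small value indicates that the closed-form derivatives are consistent with Eq. (6).

```python
def f(e, gamma):
    return e / (1.0 + gamma**2 * e**4)                        # Eq. (6)


def f_prime(e, gamma):
    u = gamma**2 * e**4
    return (1.0 - 3.0 * u) / (1.0 + u) ** 2                   # Eq. (24)


def f_second(e, gamma):
    u = gamma**2 * e**4
    return 4.0 * gamma**2 * e**3 * (3.0 * u - 5.0) / (1.0 + u) ** 3  # Eq. (25)


def max_fd_error(gamma=0.6, h=1e-4):
    """Largest gap between Eqs. (24)-(25) and central finite differences."""
    worst = 0.0
    for i in range(-40, 41):
        e = 0.1 * i
        d1 = (f(e + h, gamma) - f(e - h, gamma)) / (2.0 * h)
        d2 = (f(e + h, gamma) - 2.0 * f(e, gamma) + f(e - h, gamma)) / h**2
        worst = max(worst, abs(d1 - f_prime(e, gamma)), abs(d2 - f_second(e, gamma)))
    return worst


if __name__ == "__main__":
    print(f"max finite-difference discrepancy: {max_fd_error():.1e}")
```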
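The left-hand side of Eq. (22) then becomes

\[ 2E\left[ e_{a}(k) f\left(e(k)\right) \right] = 2E\left[ e_{a}(k) f\left(\eta(k)\right) + f^{\prime}\left(\eta(k)\right) e_{a}^{2}(k) + \frac{1}{2} f^{\prime\prime}\left(\eta(k)\right) e_{a}^{3}(k) + O\left(e_{a}^{4}(k)\right) \right] \tag{26} \]
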
Assuming that the noise \(\eta \left( k \right)\) is independent and identically distributed (i.i.d.) with zero mean and independent of the input signal \({\varvec{x}}\left( k \right)\), that the a priori error \(e_{a} \left( k \right)\) has zero mean and is independent of \(\eta \left( k \right)\), and neglecting the higher-order terms [22, 24], Eq. (26) becomes

\[ 2E\left[ e_{a}(k) f\left(e(k)\right) \right] \approx 2E\left[ f^{\prime}\left(\eta(k)\right) \right] E\left[ e_{a}^{2}(k) \right] \tag{27} \]

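Similarly, expanding \(f^{2} \left( {e\left( k \right)} \right)\) with Eq. (23), the cross term vanishes because \(e_{a} \left( k \right)\) has zero mean and is independent of \(\eta \left( k \right)\), and the right-hand side of Eq. (22) becomes

\[ \mu\, \text{Tr}\left( \mathbf{S}(k) \right) E\left[ f^{2}\left(e(k)\right) \right] \approx \mu\, \text{Tr}\left( \mathbf{S}(k) \right) \left\{ E\left[ f^{2}\left(\eta(k)\right) \right] + E\left[ f^{\prime 2}\left(\eta(k)\right) + f\left(\eta(k)\right) f^{\prime\prime}\left(\eta(k)\right) \right] E\left[ e_{a}^{2}(k) \right] \right\} \tag{28} \]
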
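Equating Eqs. (27) and (28), solving for the EMSE, and using the derivatives in Eqs. (24) and (25), the steady-state EMSE of the FP-ALMS algorithm is obtained as

\[ \text{EMSE} = \lim_{k \to \infty} E\left[ e_{a}^{2}(k) \right] = \frac{\mu\, \text{Tr}\left( \mathbf{S}(k) \right) E\left[ f^{2}\left(\eta(k)\right) \right]}{2E\left[ f^{\prime}\left(\eta(k)\right) \right] - \mu\, \text{Tr}\left( \mathbf{S}(k) \right) E\left[ f^{\prime 2}\left(\eta(k)\right) + f\left(\eta(k)\right) f^{\prime\prime}\left(\eta(k)\right) \right]} \tag{29} \]
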
The expectations in Eq. (29) can be evaluated by integration over the noise distribution [17]. Equation (29) gives the steady-state EMSE of the FP-ALMS algorithm and has the same form as the steady-state EMSE of the ALMS algorithm [27], which supports the validity of the derivation.
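As a sketch of how the expectations in Eq. (29) might be evaluated, the code below replaces the integration with Monte Carlo averaging over noise samples generated as in Experiment-I (Sect. 5.1): white Gaussian noise of variance 0.01 plus Bernoulli-Gaussian impulses. The value of \({\text{Tr}}\left( {{\mathbf{S}}\left( k \right)} \right)\) is passed in as an assumed input rather than computed from \({\varvec{Q}}\left( k \right)\), and all function names are hypothetical.

```python
import random


def f(e, g):
    return e / (1.0 + g * g * e**4)                                   # Eq. (6)


def f_prime(e, g):
    u = g * g * e**4
    return (1.0 - 3.0 * u) / (1.0 + u) ** 2                           # Eq. (24)


def f_second(e, g):
    u = g * g * e**4
    return 4.0 * g * g * e**3 * (3.0 * u - 5.0) / (1.0 + u) ** 3      # Eq. (25)


def mixed_noise(n, var_w=0.01, P=0.05, var_i=1000.0, seed=0):
    """Gaussian noise plus Bernoulli-Gaussian impulses (Experiment-I style)."""
    rng = random.Random(seed)
    out = []
    for _ in range(n):
        v = rng.gauss(0.0, var_w ** 0.5)
        if rng.random() < P:
            v += rng.gauss(0.0, var_i ** 0.5)
        out.append(v)
    return out


def emse_theory(mu, tr_S, gamma, eta):
    """Evaluate Eq. (29), replacing each expectation over eta(k) by a sample mean."""
    n = len(eta)
    E_f2 = sum(f(v, gamma) ** 2 for v in eta) / n
    E_fp = sum(f_prime(v, gamma) for v in eta) / n
    E_c = sum(f_prime(v, gamma) ** 2 + f(v, gamma) * f_second(v, gamma) for v in eta) / n
    den = 2.0 * E_fp - mu * tr_S * E_c
    if den <= 0.0:
        raise ValueError("denominator of Eq. (29) is non-positive for this step size")
    return mu * tr_S * E_f2 / den


if __name__ == "__main__":
    print(emse_theory(mu=0.01, tr_S=1.0, gamma=0.6, eta=mixed_noise(20000)))
```

Without impulses and for a small step size, \(f\left( \eta \right) \approx \eta\) and \(f^{\prime}\left( \eta \right) \approx 1\), so the expression approaches the familiar LMS-type value \(\mu {\text{Tr}}\left( {\mathbf{S}} \right)\sigma_{\eta }^{2} /2\).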

Table 1 compares the computational complexity of the PMCC, IP-MCC, P-MVC, and proposed algorithms in terms of the number of additions, multiplications, divisions, and exponential operations required. As Table 1 shows, the proposed FP-ALMS algorithm requires 2 additions, 7 multiplications, and 1 division more than the P-MVC algorithm.

5 Simulation study

Three distinct experiments were conducted to evaluate the proposed approach, in which the FP-ALMS algorithm was compared with the PMCC, IP-MCC, and P-MVC algorithms. Performance is measured by the mean square deviation, \({\text{MSD}}\left( {\text{dB}} \right) = 20\log_{10} \left\| {\hat{\user2{w}}\left( k \right) - {\varvec{w}}_{0} } \right\|_{2}\).
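For reference, the MSD metric above can be computed as in the following minimal sketch (plain Python; the function name `msd_db` is hypothetical). Note that \(20\log_{10} \left\| \cdot \right\|_{2}\) equals \(10\log_{10}\) of the squared norm.

```python
import math


def msd_db(w_hat, w0):
    """MSD(dB) = 20 log10 ||w_hat - w0||_2 (undefined if w_hat equals w0 exactly)."""
    dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(w_hat, w0)))
    return 20.0 * math.log10(dist)


if __name__ == "__main__":
    print(msd_db([0.1, 0.0], [0.0, 0.0]))   # a deviation of norm 0.1 gives -20 dB
```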

5.1 Experiment-I

The unknown system to be identified has 120 coefficients; its impulse response \(h\left( n \right)\) is shown in Fig. 1. It is generated as \(h\left( n \right) = \exp \left( { - \beta n} \right)r\left( n \right)\), where \(r\left( n \right)\) is a sequence uniformly distributed between −0.5 and 0.5 and \(\beta\) determines the decay rate of the envelope. The input signal is a zero-mean random signal with unit variance. The system noise \(\eta \left( n \right) = v_{w} \left( n \right) + v_{i} \left( n \right)\) is a mixture of zero-mean white Gaussian noise \(v_{w} \left( n \right)\) with variance 0.01 and impulsive noise \(v_{i} \left( n \right)\). The impulsive noise is generated as \(v_{i} \left( n \right) = B\left( n \right)I\left( n \right)\), where \(B\left( n \right)\) is a Bernoulli process with success probability \({\text{Pr}}\left( {B\left( n \right) = 1} \right) = P\) and \(I\left( n \right)\) is a zero-mean Gaussian process with variance 1000. The simulation parameters of the algorithms in this experiment are: PMCC \(\left( {\mu = 0.001,\; p = 0.75,\;\sigma = 1.25} \right)\), IPMCC \(\left( {\mu = 0.001,\; p = 0.75,\;\sigma = 1.25,\;\epsilon = 0.01} \right)\), PMVC \(\left( {\mu = 0.001,\; p = 1,\;\tau = 0.1} \right)\), and proposed \(\left( {\mu = 0.001,\; p = 0.75,\;\gamma = 0.6} \right)\). Figure 2 depicts the MSD curves for \(P = 0.05\). The proposed FP-ALMS algorithm converges in about 793 iterations, whereas the PMVC, IPMCC, and PMCC algorithms converge in about 1692, 2600, and 648 iterations, respectively. Although the PMCC algorithm converges fastest, it reaches a much higher MSD. The MSD values attained by the PMCC, IP-MCC, P-MVC, and proposed algorithms are −12.84, −20, −19.99, and −20 dB, respectively. Hence, the proposed approach attains the lowest MSD (equal to that of IP-MCC) while converging considerably faster than the IP-MCC and P-MVC algorithms, which makes it preferable to the other algorithms.
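The signal model of Experiment-I can be sketched as follows. This is an illustrative plain-Python reconstruction, not the authors' simulation code: the decay rate `beta = 0.05` is a hypothetical value (the brief does not state \(\beta\)), the unit-variance input is assumed to be Gaussian, and the function names are hypothetical.

```python
import math
import random


def exp1_system(M=120, beta=0.05, seed=0):
    """h(n) = exp(-beta*n) r(n), n = 0..M-1, r(n) ~ U(-0.5, 0.5); beta is hypothetical."""
    rng = random.Random(seed)
    return [math.exp(-beta * n) * rng.uniform(-0.5, 0.5) for n in range(M)]


def exp1_noise(N, P=0.05, var_w=0.01, var_i=1000.0, seed=1):
    """eta(n) = v_w(n) + B(n) I(n); returns the noise and the number of impulses."""
    rng = random.Random(seed)
    eta, impulses = [], 0
    for _ in range(N):
        v_w = rng.gauss(0.0, math.sqrt(var_w))       # white Gaussian, variance 0.01
        B = 1 if rng.random() < P else 0             # Bernoulli, Pr(B = 1) = P
        I = rng.gauss(0.0, math.sqrt(var_i))         # Gaussian, variance 1000
        impulses += B
        eta.append(v_w + B * I)
    return eta, impulses


def exp1_data(N=2000, seed=2):
    """Desired signal d(n) = sum_i h(i) x(n - i) + eta(n) for a unit-variance input."""
    h = exp1_system()
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in range(N)]      # Gaussian input assumed
    eta, _ = exp1_noise(N)
    d = [sum(h[i] * x[n - i] for i in range(len(h)) if n >= i) + eta[n] for n in range(N)]
    return h, x, d


if __name__ == "__main__":
    eta, k = exp1_noise(20000)
    print(f"impulse rate: {k / 20000:.4f} (target P = 0.05)")
```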

Figure 3 compares the simulated and theoretical steady-state MSE of the proposed FP-ALMS algorithm for step sizes varied from 0.2 to 0.6; the theoretical values are obtained from Eq. (29).

5.1.1 Choice of parameters γ and θ

To examine the effect of the parameters \(\gamma\) and \(\theta\) on the performance of the proposed algorithm, MSD curves are obtained under the conditions of Experiment-I; the results for different values of \(\gamma\) and \(\theta\) are plotted in Figs. 4 and 5, respectively. Figure 4 shows that the FP-ALMS algorithm converges slowly for \(\gamma = 6\) but converges fast for both \(\gamma = 1\) and \(\gamma = 0.6\). For Experiment-I, \(\gamma = 0.6\) is chosen because it achieves a lower MSD (−20.05 dB) than \(\gamma = 1\) (−19.9 dB). Figure 5 shows that the FP-ALMS algorithm converges slightly faster for \(\theta = 0.75\) than for \(\theta = 0.9\) and \(\theta = - 0.75\), while the MSD remains almost the same for all values of \(\theta\). The values of \(\gamma\) and \(\theta\) for Experiment-II are likewise tuned through simulation.

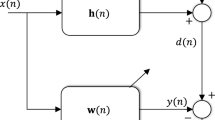

5.2 Experiment-II

An experiment is carried out to estimate the acoustic transfer function of a room in order to compare the robustness of the FP-ALMS algorithm with that of the PMCC, IP-MCC, and P-MVC algorithms. Figure 6 shows the block diagram of the experimental setup. The setup is built around a dSPACE MicroLab Box, a compact laboratory development system that combines high performance and versatility with small size and affordability; it is used in signal processing and in other research areas such as medical and vehicle engineering. The MicroLab Box provides numerous analog-to-digital converter (ADC) and digital-to-analog converter (DAC) ports, which are programmed using MATLAB-Simulink. A random noise signal from one of the DAC ports drives the speaker through a reconstruction filter and a power amplifier, producing acoustic noise. The speaker excitation signal serves as the input of the unknown transfer function, and the microphone signal serves as its output. The speaker and the microphone are placed 65 cm apart inside a laboratory room, and the sampling frequency of the input signal is 10 kHz. The room impulse response is estimated by the LMS algorithm running in real time on the same MicroLab Box for about 5 min [11]; the resulting impulse response is shown in Fig. 7. The input signal used for identifying the room transfer function is a zero-mean random signal with a variance of 4, and the system noise is generated as in Experiment-I.
The simulation parameters of the algorithms in this experiment are: PMCC \(\left( {\mu = 0.001,\; p = 0.5,\;\sigma = 1.25} \right)\), IPMCC \(\left( {\mu = 0.001,\; p = 0.5,\;\sigma = 1.25,\;\epsilon = 0.01} \right)\), PMVC \(\left( {\mu = 0.001,\; p = 4,\;\tau = 0.1,\;p = 0.5} \right)\), and proposed \(\left( {\mu = 0.001,\; p = 0.5,\;\gamma = 0.9} \right)\). Using the measured room response as the unknown system, the transfer function is identified with the PMCC, IP-MCC, P-MVC, and proposed algorithms, and Fig. 8 shows the resulting MSD curves. The MSD values are −25.8 dB for the PMCC algorithm, −20.95 dB for the IP-MCC algorithm, −24.45 dB for the P-MVC algorithm, and −26.07 dB for the proposed algorithm; thus the proposed algorithm attains the lowest MSD among the compared algorithms.

6 Conclusion

This brief introduced a filter proportionate least mean square algorithm based on the arctangent framework (FP-ALMS) for identifying sparse systems. The step size of the FP-ALMS algorithm is adjusted in proportion to the filter coefficients. To evaluate the algorithm, its steady-state EMSE was derived using a Taylor expansion approach. Simulations demonstrate that the FP-ALMS algorithm outperforms other state-of-the-art algorithms in terms of robustness in an impulsive noise environment.

Availability of data and materials

No public dataset is used.

References

[1] Wu, Y., Qing, Z., Ni, J., Chen, J.: Block-sparse sign algorithm and its performance analysis. Digit. Signal Process. 128, 103620 (2022)

[2] Yu, Y., Yang, T., Chen, H., Lamare, R.C.D., Li, Y.: Sparsity-aware SSAF algorithm with individual weighting factors: performance analysis and improvements in acoustic echo cancellation. Signal Process. 178, 107806 (2021)

[3] He, R., Ai, B., Wang, G., Yang, M., Huang, C., Zhong, Z.: Wireless channel sparsity: measurement, analysis, and exploitation in estimation. IEEE Wirel. Commun. 28(4), 113–119 (2021)

[4] Haykin, S.O.: Adaptive Filter Theory, 4th edn. Prentice-Hall, Upper Saddle River (2002)

[5] Patnaik, A., Nanda, S.: The variable step-size LMS/F algorithm using nonparametric method for adaptive system identification. Int. J. Adapt. Control Signal Process. 34(12), 1799–1811 (2020)

[6] Luis Perez, F., André Pitz, C., Seara, R.: A two-gain NLMS algorithm for sparse system identification. Signal Process. 200, 108 (2022)

[7] Bershad, N.J., Bermudez, J.C.M.: Stochastic analysis of the least mean kurtosis algorithm for Gaussian inputs. Digit. Signal Process. 54, 35 (2016)

[8] Seng, K.P., Man, Z., Wu, H.R.: Lyapunov-theory-based radial basis function networks for adaptive filtering. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 49(8), 1215–1220 (2002)

[9] Mengüç, E.C., Acır, N.: An augmented complex-valued Lyapunov stability theory based adaptive filter algorithm. Signal Process. 137, 10–21 (2017)

[10] Ma, W., Duan, J., Cao, J., Li, Y., Chen, B.: Proportionate adaptive filtering algorithms based on mixed square/fourth error criterion with unbiasedness criterion for sparse system identification. Int. J. Adapt. Control Signal Process. 32(11), 1644–1654 (2018)

[11] Rosalin, N.K.R., Das, D.P.: Filter proportionate normalized least mean square algorithm for a sparse system. Int. J. Adapt. Control Signal Process. 33(11), 1695–1705 (2019)

[12] Duttweiler, D.L.: Proportionate normalized least-mean-squares adaptation in echo cancelers. IEEE Trans. Speech Audio Process. 8(5), 508–518 (2000)

[13] Patnaik, A., Nanda, S.: Convex combination of nonlinear filters using improved proportionate least mean square/fourth algorithm for sparse system identification. J. Vib. Eng. Technol. (2023). https://doi.org/10.1007/s42417-023-00885-w

[14] Benesty, J., Gay, S.L.: An improved PNLMS algorithm. In: 2002 IEEE International Conference on Acoustics, Speech, and Signal Processing, Orlando, FL, USA (2002)

[15] Liu, L., Fukumoto, M., Saiki, S.: An improved mu-law proportionate NLMS algorithm. In: 2008 IEEE International Conference on Acoustics, Speech and Signal Processing, Las Vegas, NV, USA (2008)

[16] Rakesh, P., Kishore Kumar, T., Albu, F.: Novel sparse algorithms based on Lyapunov stability for adaptive system identification. Radioengineering 27(1), 270 (2018)

[17] Yoo, J.: An improved least mean kurtosis (LMK) algorithm for sparse system identification. Int. J. Inf. Electron. Eng. (2012). https://doi.org/10.7763/IJIEE.2012.V2.246

[18] Singh, A., Principe, J.C.: Using Correntropy as a cost function in linear adaptive filters. In: 2009 International Joint Conference on Neural Networks, Atlanta, GA, USA (2009)

[19] Chen, B., Xing, L., Zhao, H., Zheng, N., Príncipe, J.C.: Generalized correntropy for robust adaptive filtering. IEEE Trans. Signal Process. 64(13), 3376–3387 (2016)

[20] Ma, W., Zheng, D., Zhang, Z., Duan, J., Chen, B.: Robust proportionate adaptive filter based on maximum correntropy criterion for sparse system identification in impulsive noise environments. Signal Image Video Process. 12(1), 117–124 (2017)

[21] Wu, Z., Peng, S., Chen, B., Zhao, H., Principe, J.: Proportionate minimum error entropy algorithm for sparse system identification. Entropy 17(12), 5995–6006 (2015)

[22] Gogineni, V.C., Mula, S.: Improved proportionate-type sparse adaptive filtering under maximum correntropy criterion in impulsive noise environments. Digit. Signal Process. 79, 190–198 (2018)

[23] Huang, F., Zhang, J., Zhang, S.: Maximum versoria criterion-based robust adaptive filtering algorithm. IEEE Trans. Circuits Syst. II Express Briefs 64(10), 1252–1256 (2017)

[24] Radhika, S., Albu, F., Chandrasekar, A.: Proportionate maximum versoria criterion-based adaptive algorithm for sparse system identification. IEEE Trans. Circuits Syst. II Express Briefs 69(3), 1902–1906 (2022)

[25] Kumar, K., Pandey, R., Bora, S.S., George, N.V.: A robust family of algorithms for adaptive filtering based on the arctangent framework. IEEE Trans. Circuits Syst. II Express Briefs 69(3), 1967–1971 (2022)

[26] Patnaik, A., Nanda, S.: Arctangent framework based least mean square/fourth algorithm for system identification. In: Proceedings of International Conference on Robotics, Control and Computer Vision: IRCCV (2023)

[27] Jia, W., et al.: Steady-state performance analysis of the arctangent LMS algorithm with Gaussian input. IEEE Trans. Circuits Syst. II Express Briefs (2023). https://doi.org/10.1109/TCSII.2023.3248222

Funding

No funding was received.

Author information


Contributions

The first author proposed the idea and wrote the manuscript text. The second author simulated the results.


Ethics declarations

Conflict of interest

The authors declare that they have no competing interests.

Ethical approval

No studies involving humans or animals were conducted.


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Rosalin, Patnaik, A. A filter proportionate LMS algorithm based on the arctangent framework for sparse system identification. SIViP 18, 335–342 (2024). https://doi.org/10.1007/s11760-023-02729-2
