Abstract

Many methods have been proposed for image denoising. Among them, non-local means (NLM) denoising is widely used because it fully exploits the self-similarity of natural images. NLM denoising uses the similarity between clean image patches as weights, but because the patches are corrupted by noise, this similarity is difficult to estimate accurately. As a result, most existing NLM denoising approaches over-smooth the restored image and lose many details, especially at high noise levels. To tackle this, a novel singular value decomposition (SVD)-based similarity measure is proposed that effectively reduces the disturbance of noise. First, the singular values of two image patches extracted from the noisy image are computed and vectorized, and the Euclidean distance and cross-angle between the two vectors are calculated. Second, the geometric mean of the Euclidean-distance and cross-angle similarities is used as a noise-resistant measure of the similarity between the two patches. Third, the proposed similarity measure is applied to NLM denoising to compute the similarity of noisy image patches. Experimental results show that, compared with classical denoising algorithms, the proposed method effectively eliminates noise and restores more details, with higher peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) index values.

1 Introduction

An image is usually degraded during capture, storage, processing, and reproduction, which may impair its visual quality. Evaluating the quality of corrupted images is therefore an important problem. Many methods have been proposed for this purpose, and they are generally grouped into two categories: subjective and objective assessment [1]. Subjective assessment is acknowledged as the most accurate way to evaluate quality: many observers score an image under varying conditions, and the average score is taken as the final rating. However, subjective assessment requires demanding experimental conditions and is difficult to implement. The scores obtained by subjective evaluation are therefore generally used as benchmarks for testing and validating objective assessments [2, 3]. Objective assessment is widely used in image processing, as it can be performed by computers and is free of the influence of subjective human opinion. Objective assessment is usually divided into three categories: full-reference (FR) [4, 5], reduced-reference (RR) [6], and no-reference (NR) assessment [7].

FR assumes that the undegraded image is available, and the quality score is the similarity between the degraded and undegraded images. Mean square error (MSE) and PSNR are typical FR methods, accepted for their simple mathematical expressions and clear physical meanings. However, MSE and PSNR are error sensitivity-based quality measures, and their scores do not always agree with human perception [8, 9]. In [10], based on the assumption that the human eye is sensitive to variations in image structure, the SSIM index is proposed as a measure associated with perceptual sensitivity. SSIM separates the measurement into three components, luminance, contrast, and structure, and combines them to measure similarity. More recently, Tan et al. [11] simplified the mathematical expression of SSIM under an additive distortion model, which greatly reduces its complexity. Md [12] uses joint statistics of luminance and disparity to assess natural stereo scene images. Under the assumption that semantically significant objects are emphasized by the human visual system, Wang [13] detects object features using speeded-up robust features (SURF) and pools their differences to assess quality similarity. RR assumes that partial information about the perfect reference image is known, and the similarity score is obtained by comparing features. For NR, no information about the reference image is available, so it is difficult to obtain an accurate quality estimate for corrupted images. Feature description is a good approach to no-reference image quality evaluation. In [14], Oszust applies image statistics to capture perceptual features and analyzes them with support vector regression to derive a prediction. In [15], a local feature descriptor is designed to extract distorted-image features, and the features are mapped to a subjective opinion score to estimate the quality.
Choosing good features for NR is difficult, as insufficient features may lead to inaccurate evaluation. With the development of machine learning, learning methods have been used to extract features for image quality assessment [16, 17]. Vega [18] adopts deep learning techniques to assess live video stream quality, allowing accurate real-time judgement. Bosse [19] trains an end-to-end deep neural network to extract features, a purely data-driven approach that can handle both FR and NR problems.

Image denoising is a fundamental and indispensable low-level step in many image processing tasks [20,21,22,23,24]. Images are often degraded by noise during capture, transmission, and storage. Many image denoising algorithms have been proposed [25,26,27], among which NLM denoising is a popular and widely used technique [25, 28,29,30]. NLM denoising is based on the self-similarity assumption for natural images: similar patches recur many times at different spatial locations [31]. Evaluating the similarity of two patches is therefore central to NLM algorithms. In NLM denoising, however, the patches are corrupted by noise, and conventional similarity measures cannot estimate their similarity accurately.

In this paper, we propose a novel singular value decomposition (SVD)-based method to estimate the similarity of noisy image patches, which effectively alleviates the influence of noise. For two image patches, we first compute and vectorize their singular values. Then, the Euclidean distance and cross-angle between the two vectors are calculated and combined through their geometric mean to evaluate the similarity of the two patches, and the noise robustness of the proposed metric is analyzed. Finally, the metric is applied to NLM denoising. Experimental results show that, compared with several denoising algorithms, the proposed singular value decomposition-based NLM (SVDNLM) denoising method greatly improves performance.

2 Related works

2.1 SVD

SVD reveals the structure of a matrix and is widely used in many image processing tasks, such as image denoising [32, 33], sparse representation [34], and image quality assessment [35, 36]. SVD factorizes a matrix into the product of three matrices. Let A be an \(m\times n\) matrix. Its SVD is \(A=USV^T\), where U and V are orthogonal matrices of dimensions \(m\times m\) and \(n\times n\) whose columns are the left and right singular vectors, respectively, and S is a matrix of the form \(\bigl ( {\begin{matrix} \Sigma &{} 0 \\ 0 &{} 0 \end{matrix}} \bigr )\). Here \(\Sigma =diag(\sigma _1, \sigma _2,\ldots ,\sigma _r)\) is a diagonal matrix whose diagonal elements are the singular values of A, with \(\sigma _1\ge \sigma _2\ge \cdots \ge \sigma _r>0\), where r is the rank of A. In principal component analysis (PCA), the SVD of the centered data matrix is closely related to the eigendecomposition of the covariance matrix and can be used to compute the PCA solution. PCA helps suppress noise in data by discarding the components associated with the smaller eigenvalues. For an image corrupted by additive white Gaussian noise (AWGN), structure usually corresponds to the larger singular values and noise to the smaller ones. A common approach is thus to discard the smaller singular values and keep the larger ones, which preserves the structure and removes much of the noise [32, 33].
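The idea that structure is carried by the large singular values and noise by the small ones can be illustrated with a minimal NumPy sketch. This shows the generic truncated-SVD principle only, not the specific algorithms of [32, 33]. The helper name `truncated_svd_denoise`, the retained rank k, the synthetic rank-1 test matrix, and the noise level are all illustrative assumptions.

```python
import numpy as np


def truncated_svd_denoise(a, k):
    """Keep the k largest singular values of `a` and set the rest to zero."""
    u, s, vt = np.linalg.svd(a, full_matrices=False)  # s is in descending order
    s_kept = np.where(np.arange(s.size) < k, s, 0.0)  # drop the smaller singular values
    return (u * s_kept) @ vt                          # same as u @ diag(s_kept) @ vt


rng = np.random.default_rng(0)
# Synthetic rank-1 "structure" (each row is constant) plus AWGN with sigma = 20.
clean = np.outer(np.linspace(50.0, 200.0, 32), np.ones(32))
noisy = clean + rng.normal(0.0, 20.0, clean.shape)
restored = truncated_svd_denoise(noisy, k=1)

mse_noisy = np.mean((noisy - clean) ** 2)
mse_restored = np.mean((restored - clean) ** 2)
print(f"MSE before: {mse_noisy:.1f}, after rank-1 truncation: {mse_restored:.1f}")
```

Choosing k is the hard part in practice. Here k = 1 is correct only because the test structure is known to be rank one.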

2.2 NLM

NLM is a spatial filter based on the assumption that image content tends to repeat itself at different spatial positions and scales. It was first proposed for image denoising, where each target pixel value is calculated as a weighted average of the pixels of the whole image [25]. Later, several improved NLM denoising algorithms emerged that modify the weight calculation or optimize the hyper-parameters [29, 30, 37]. Glasner [31] counted how often similar patches recur in natural images and confirmed the validity of the self-similarity assumption underlying NLM. Matan [38] connects the self-similarity within a single image with the similarity across image sequences to generate a high-resolution image. NLM has since been widely used in single-image super-resolution [39, 40]. Deng [41] uses NLM as a regularization term to improve single-image resolution. Zhang [39] combines NLM with steering kernel regression to recover a high-quality image.

3 Proposed method

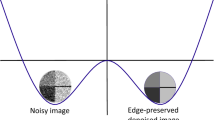

In this section, we detail our novel SVD-based technique for measuring the similarity of noisy image patches. Yuan [35] applies the cross-angle to assess the similarity of image patches. This works well around edges but not in smooth areas. Consider four image patches in which all pixels within a patch have the same value, but the value differs from patch to patch. Figure 1 shows the cross-angles between the singular-value vectors of these four patches. Figure 1a–d shows patches with pixel values 50, 100, 150, and 200, respectively. Relative to the patch in Fig. 1a, the patches in Fig. 1b–d all have the same cross-angle, which would indicate that all four patches are identical, although they clearly are not. The reason is that a constant \(n\times n\) patch with value \(c>0\) has rank one, so its singular-value vector is \((nc,0,\ldots ,0)^T\). All such vectors are parallel, and their cross-angle is 0. Furthermore, smooth image patches degraded by noise may lead to highly inaccurate similarity estimates. In a natural image, especially when the patch size is small, a large number of smooth patches can be found. To tackle this problem, we combine the cross-angle with the Euclidean distance between the singular-value vectors and map the resulting similarity to the range [0, 1]. This allows intuitive interpretation and convenient use in NLM denoising. A value of 0 indicates minimum similarity, and a value of 1 indicates maximum similarity.
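The degenerate case behind Fig. 1 can be reproduced with a short NumPy sketch. The helper names `singular_vector` and `cross_angle` are hypothetical. `cross_angle` computes the angle between the singular-value vectors of two patches, as described in the text. A zero patch, whose singular-value vector has zero norm, is not handled.

```python
import numpy as np


def singular_vector(patch):
    """Singular values of a patch, in descending order."""
    return np.linalg.svd(np.asarray(patch, dtype=float), compute_uv=False)


def cross_angle(p, q):
    """Angle (radians) between the singular-value vectors of two patches."""
    s, t = singular_vector(p), singular_vector(q)
    cos_c = s @ t / (np.linalg.norm(s) * np.linalg.norm(t))
    return float(np.arccos(np.clip(cos_c, -1.0, 1.0)))  # clip guards against rounding


# Constant 3x3 patches with the pixel values used in Fig. 1.
patches = [np.full((3, 3), v) for v in (50.0, 100.0, 150.0, 200.0)]
print(singular_vector(patches[0]))                        # ~[150, 0, 0] up to rounding
print([cross_angle(patches[0], p) for p in patches[1:]])  # all ~0
```

Every constant patch yields a vector parallel to (1, 0, 0). The cross-angle alone therefore cannot separate these four patches.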

3.1 SVD-based image patch similarity measurement

To address this limitation of the cross-angle method, this section details our method for comparing image patches. Let p and \({\hat{p}}\) be image patches of size \(n\times n\), and let \(S=(s_1,s_2,\ldots ,s_n)^T\) and \({\hat{S}}=({\hat{s}}_1,{\hat{s}}_2,\ldots ,{\hat{s}}_n)^T\) be the column vectors of singular values of p and \({\hat{p}}\), respectively. The cross-angle between S and \({\hat{S}}\) is defined as follows:

\[ C=\arccos \left( \frac{S^T{\hat{S}}}{\Vert S\Vert _2\,\Vert {\hat{S}}\Vert _2}\right) \tag{1} \]

where C is the cross-angle. Since the singular values of a matrix are nonnegative, C lies in \([0,\pi /2]\). A value of 0 implies the greatest similarity, and \(\pi /2\) the least. By convention, however, a similarity score is smaller for less similar patches and larger for more similar ones. We therefore normalize out the factor \(\pi \) and map the cross-angle, in reverse order, to the range [0, 1]:

The range of \(Q_1\) is [0, 1]. A value of 0 implies minimum similarity, and a value of 1 indicates maximum similarity. To overcome the insufficiency of the cross-angle metric, the Euclidean distance between the singular-value vectors is also used to measure the similarity of image patches. The Euclidean distance between S and \({\hat{S}}\) is defined as follows:

\[ D=\Vert S-{\hat{S}}\Vert _2=\sqrt{\sum _{i=1}^{n}(s_i-{\hat{s}}_i)^2} \tag{3} \]

To be consistent with the cross-angle measurement, D should be mapped to the range [0, 1]. This gives a Euclidean-distance-based similarity measure between the image patches:

where h is a relaxation factor. When D tends to \(\infty \), \(Q_2\) tends to 0, and when D is 0, \(Q_2\) is 1. \(Q_2\) decreases as D increases. A straightforward way to combine the cross-angle and Euclidean-distance similarity measures is the arithmetic mean of \(Q_1\) and \(Q_2\):

\[ Q=\frac{Q_1+Q_2}{2} \tag{5} \]

From (5), if \(Q_1\) is large, Q may not reflect the true similarity even when \(Q_2\) is very small. Consider two \(3\times 3\) image patches with constant pixel values 1 and 255, respectively. Here \(Q_1\) equals 1, and \(Q_2\) tends to 0 when h is small enough. Q is then approximately 0.5, which is clearly not in line with human perception. We want Q to be small whenever either \(Q_1\) or \(Q_2\) is very small. Thus, we adopt the geometric mean of \(Q_1\) and \(Q_2\) to measure the similarity:

\[ Q=\sqrt{Q_1 Q_2} \tag{6} \]

Since \(Q_1\) and \(Q_2\) both lie in [0, 1], Q in (6) also lies in [0, 1]. A value of 0 implies the lowest similarity, and 1 the highest. If either \(Q_1\) or \(Q_2\) is 0, Q is 0. Q can be large only if both \(Q_1\) and \(Q_2\) are large.
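The sketch below computes C, D, and the two combinations (5) and (6) for a pair of patches. The exact forms of (2) and (4) are not given in the text above, so both are assumptions. It uses Q1 = 1 - 2C/π, a linear map of [0, π/2] onto [1, 0], and Q2 = exp(-D²/h²), which satisfies the limits stated for Q2. These are illustrative choices and not necessarily the authors' formulas. The helper name `svd_similarity` is hypothetical.

```python
import numpy as np


def svd_similarity(p, q, h):
    """Return (arithmetic mean, geometric mean) of Q1 and Q2 for two patches.

    ASSUMED forms: Q1 = 1 - 2*C/pi and Q2 = exp(-D**2 / h**2).
    """
    s = np.linalg.svd(np.asarray(p, dtype=float), compute_uv=False)
    t = np.linalg.svd(np.asarray(q, dtype=float), compute_uv=False)
    cos_c = s @ t / (np.linalg.norm(s) * np.linalg.norm(t))
    c = np.arccos(np.clip(cos_c, 0.0, 1.0))  # cross-angle C, Eq. (1)
    d = np.linalg.norm(s - t)                # Euclidean distance D, Eq. (3)
    q1 = 1.0 - 2.0 * c / np.pi               # assumed mapping to [0, 1]
    q2 = np.exp(-(d / h) ** 2)               # assumed mapping to (0, 1]
    return float((q1 + q2) / 2.0), float(np.sqrt(q1 * q2))  # Eqs. (5), (6)


# The 3x3 example from the text: constant patches with values 1 and 255.
arith, geo = svd_similarity(np.full((3, 3), 1.0), np.full((3, 3), 255.0), h=6.0)
print(arith, geo)  # arithmetic mean ~0.5, geometric mean ~0
```

Here h = 6 corresponds to 0.2σ at σ = 30. The arithmetic mean stays near 0.5 because Q1 is 1. The geometric mean drops to 0 because Q2 does.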

Both the proposed similarity measure and NR assessment operate without reference images. The proposed method evaluates the similarity of clean patches from their noisy counterparts by suppressing the influence of noise. NR assessment, by contrast, evaluates the similarity of a restored image to the unknown original image.

3.2 Robustness analysis

In a natural image, noise mainly resides in the high-frequency components. Some SVD-based denoising algorithms assume that noise is associated with the smaller singular values and remove it by discarding some of them [32, 33]. Writing the cross-angle C defined in (1) in terms of the singular values gives

\[ C=\arccos \left( \frac{\sum _{i=1}^{n}s_i{\hat{s}}_i}{\sqrt{\sum _{i=1}^{n}s_i^2}\,\sqrt{\sum _{i=1}^{n}{\hat{s}}_i^2}}\right) \tag{7} \]

The numerator and denominator are both sums of products of singular values. The products of the smaller singular values account for only a small fraction of these sums and therefore have little effect on the cross-angle. Thus, the effect of noise is suppressed in the cross-angle C, and hence in \(Q_1\). Similarly, the distance D defined in (3) is built from the squared differences between corresponding singular values of p and \({\hat{p}}\), and the smaller singular values contribute little to this sum. Thus, the distance-based measure \(Q_2\) defined in (4) also alleviates the impact of noise, and the combined measure Q proposed in (6) is robust to noise.

4 Application to NLM denoising

The NLM filter can be regarded as an extension of the bilateral filter. It assumes that local structures tend to repeat themselves at different spatial locations in a natural image. Mathematically, the NLM filter is the solution of a weighted least-squares problem [38]:

\[ {\hat{x}}=\arg \min _{x}\sum _{i,j}\sum _{(k,l)\in P(x_{ij})}w_{klij}\,(x_{ij}-y_{kl})^2 \tag{8} \]

where \(x_{ij}\) and \(y_{kl}\) are pixels of the clean and noisy images at positions (i, j) and (k, l), respectively. \(P(x_{ij})\) is the neighborhood of \(x_{ij}\), and \(w_{klij}\) is the weight describing the correlation between \(x_{ij}\) and \(y_{kl}\). Setting the derivative of (8) with respect to \(x_{ij}\) to zero gives the NLM filter:

\[ x_{ij}=\frac{\sum _{(k,l)\in P(x_{ij})}w_{klij}\,y_{kl}}{\sum _{(k,l)\in P(x_{ij})}w_{klij}} \tag{9} \]

From (9), the value of \(x_{ij}\) is the weighted average of the \(y_{kl}\) in the corresponding neighborhood. In the traditional NLM denoising method, the weights are computed from the MSE between patches. This measure is widely used because it is easy to compute, but it does not agree well with human perception, especially when the image is corrupted by noise. In this paper, we calculate the weights using the proposed patch similarity measure Q, which correlates well with human vision and effectively suppresses the influence of noise. To reduce computation and improve performance, the similarity is evaluated only for patches whose MSE is less than a threshold T, and the weights of the other patches are set to 0. The mathematical expression is as follows:

where \(Y_{kl}\) and \(Y_{ij}\) are the image patches centered at \(y_{kl}\) and \(y_{ij}\), \(Q_{klij}\) is the similarity of \(Y_{kl}\) and \(Y_{ij}\), \(MSE_{klij}\) is the mean square error between \(Y_{kl}\) and \(Y_{ij}\), T is a predefined threshold, and \(M\times N\) is the number of pixels. The proposed algorithm is detailed as follows:

5 Experimental results and analysis

In this section, ten classical images, “Lena,” “house,” “parrot,” “couple,” “Barbara,” “boat,” “man,” “peppers,” “monarch,” and “airplane,” are used to verify the effectiveness of our algorithm. The experiments are performed in MATLAB 2018 on Windows 10 with an Intel(R) Core i7-8700 CPU and 16 GB of memory. Two image quality metrics, PSNR [9] and SSIM [10], are used to evaluate the quality of the restored images.
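PSNR follows directly from the MSE between the reference and restored images. A minimal NumPy sketch is shown below, assuming 8-bit images with peak value 255; the helper name `psnr` is illustrative. For SSIM [10], an existing implementation such as `skimage.metrics.structural_similarity` in scikit-image can be used rather than re-implementing it.

```python
import numpy as np


def psnr(reference, restored, peak=255.0):
    """PSNR in dB between a reference image and a restored image."""
    diff = np.asarray(reference, dtype=float) - np.asarray(restored, dtype=float)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(peak ** 2 / mse))


ref = np.zeros((4, 4))
print(psnr(ref, ref + 1.0))  # MSE = 1, so PSNR = 20*log10(255), about 48.13 dB
```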

After experimenting with different values of the relaxation factor h defined in (4), we set h to \(0.2\sigma \). Patch size has an important impact on the restoration result. We restored images with several patch sizes and found that \(3\times 3\) patches give the best performance, so the patch size is set to \(3\times 3\) in this paper. The choice of the threshold T directly affects the success of noise removal. If T is too small, only a few pixels are left to estimate the clean pixel, and residual noise remains. Conversely, too large a value of T over-smooths the image and increases the computational cost and running time. Table 1 shows the PSNR and SSIM of three restored images, “house,” “parrot,” and “peppers,” with \(\sigma =30\). When T is 500 or 1000, the three restored images obtain higher PSNR and SSIM values. For two noisy image patches, a larger noise standard deviation is usually accompanied by a larger MSE, and a larger MSE implies less similarity. For NLM denoising, the estimate of each restored pixel should use enough center pixels of highly similar patches. Thus, as the noise standard deviation increases, T should increase as well. We therefore set T to 500 when the standard deviation is no greater than 30 and to 1000 when it is greater than 30. To reduce the computational cost, we do not search the entire image for similar patches. Instead, we search a \(15\times 15\) rectangular neighborhood centered at the pixel.
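The settings above can be combined with (6), (9), and the MSE pre-filter into a minimal NumPy sketch of SVDNLM. It is illustrative only and not the authors' MATLAB implementation. The forms of Q1 and Q2 and the use of Q directly as the weight are assumptions (Q1 = 1 - 2C/π, Q2 = exp(-D²/h²)). The function name `svdnlm_denoise`, its parameters, and the reflective border handling are also hypothetical. Under the assumed Q2, the reported h = 0.2σ makes the weights very small once D exceeds a few multiples of 0.2σ, so h can be overridden.

```python
import numpy as np


def svdnlm_denoise(noisy, sigma, h=None, T=None, patch=3, search=15):
    """Hypothetical minimal SVDNLM sketch (not the authors' implementation).

    Defaults follow the reported settings: h = 0.2 * sigma, 3x3 patches,
    T = 500 for sigma <= 30 and 1000 otherwise, and a 15x15 search window.
    ASSUMED forms: Q1 = 1 - 2C/pi, Q2 = exp(-D**2 / h**2), weight = Q.
    Borders are handled by reflection. Requires sigma > 0 when h is None.
    """
    h = 0.2 * sigma if h is None else h
    T = (500.0 if sigma <= 30 else 1000.0) if T is None else T
    img = np.asarray(noisy, dtype=float)
    r, w = patch // 2, search // 2
    pad = np.pad(img, r + w, mode="reflect")
    out = np.empty_like(img)

    for i in range(img.shape[0]):
        for j in range(img.shape[1]):
            ci, cj = i + r + w, j + r + w  # position of pixel (i, j) in `pad`
            ref = pad[ci - r:ci + r + 1, cj - r:cj + r + 1]
            s_ref = np.linalg.svd(ref, compute_uv=False)
            num = den = 0.0
            # Candidate centres (k, l) in the search window around (ci, cj).
            for k in range(ci - w, ci + w + 1):
                for l in range(cj - w, cj + w + 1):
                    cand = pad[k - r:k + r + 1, l - r:l + r + 1]
                    if np.mean((cand - ref) ** 2) >= T:
                        continue  # MSE pre-filter: this weight is 0
                    s = np.linalg.svd(cand, compute_uv=False)
                    norms = np.linalg.norm(s_ref) * np.linalg.norm(s)
                    cos_c = 1.0 if norms == 0 else np.clip(s_ref @ s / norms, 0.0, 1.0)
                    q1 = 1.0 - 2.0 * np.arccos(cos_c) / np.pi
                    q2 = np.exp(-(np.linalg.norm(s_ref - s) / h) ** 2)
                    weight = np.sqrt(q1 * q2)  # Q of Eq. (6)
                    num += weight * pad[k, l]   # numerator of Eq. (9)
                    den += weight               # denominator of Eq. (9)
            out[i, j] = num / den if den > 0 else img[i, j]
    return out


# Usage on a small synthetic step image. search=7 and h=100 keep the demo fast
# and suit the ASSUMED Q2 form; they are not the paper's settings.
rng = np.random.default_rng(1)
clean = np.full((16, 16), 50.0)
clean[:, 8:] = 150.0
noisy = clean + rng.normal(0.0, 10.0, clean.shape)
restored = svdnlm_denoise(noisy, sigma=10.0, h=100.0, search=7)
print(np.mean((noisy - clean) ** 2), np.mean((restored - clean) ** 2))
```

The pixel loops are slow in pure Python and suit only small test images. Here the MSE pre-filter is what keeps pixels from the other side of the step out of the average.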

The restored images are compared with those of several algorithms: the mean filter (MF), bilateral filter (BF), and NLM [25]. Figure 2 shows the results for the image “parrot” with standard deviation \(\sigma =30\). Visually, all the algorithms eliminate noise well, and the SVDNLM result retains more details. Quantitatively, NLM achieves higher PSNR and SSIM values than MF and BF, and SVDNLM achieves the highest PSNR and SSIM values. Figure 3 shows the restoration results of several denoising algorithms for the image “Barbara” with standard deviation \(\sigma =50\). MF leaves much noise at such a high noise level. BF and NLM both over-smooth the image, and many details are lost. The proposed SVDNLM effectively eliminates noise and preserves edges. Figure 4 shows the results for the image “monarch” with standard deviation \(\sigma =60\). MF and NLM over-smooth the image, and a large amount of detail is lost. The bilateral filter cannot effectively eliminate noise at such a high standard deviation, so noise remains. SVDNLM has the best visual quality, effectively eliminating noise while preserving details.

Table 2 shows the average PSNR of the ten classical images at different noise levels. When the noise standard deviation \(\sigma \) is no more than 40, BF achieves higher values than MF. When \(\sigma \) is larger than 40, BF yields lower PSNR values than MF. This is because, at such a high noise level, weights computed from the spatial distance and the absolute intensity difference between two pixels are no longer meaningful. NLM behaves similarly to BF. In an image with high-level noise, the mean of the noise within a patch can deviate greatly from zero, so the weights cannot reflect the true similarity of patches. SVDNLM works better for images with both low and high noise levels. Table 3 shows the mean SSIM values for the ten classical images, which follow the same trends as PSNR. When \(\sigma \) is small, NLM achieves higher values than MF and BF. When \(\sigma \) is large, NLM, MF, and BF all achieve similar values. SVDNLM performs best across the whole range of noise levels.

Figure 5 shows the method noise of several methods for the image “parrot” with noise standard deviation \(\sigma =30\). All methods effectively eliminate the noise at this level. Owing to over-smoothing, however, MF, BF, and NLM all lose many details, whereas SVDNLM loses few details, which indicates the superiority of our method. Figure 6 shows the method noise of the restored image “peppers” with noise standard deviation \(\sigma =60\). BF leaves some noise and loses many details at such a high noise level, while MF and NLM over-smooth the restored images. As in Fig. 5, SVDNLM achieves the best performance.
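Method noise, following Buades et al. [25], is the difference between an input image and its denoised output. Ideally it contains only noise and no image structure. The NumPy sketch below applies a 3×3 mean filter, as a stand-in for MF, to a noise-free synthetic step image. This isolates the structure the filter removes. The helper names and the edge-replicating border handling are illustrative assumptions.

```python
import numpy as np


def mean_filter(img, size=3):
    """Box (mean) filter with edge replication at the borders."""
    img = np.asarray(img, dtype=float)
    r = size // 2
    padded = np.pad(img, r, mode="edge")
    out = np.zeros_like(img)
    for di in range(size):
        for dj in range(size):
            out += padded[di:di + img.shape[0], dj:dj + img.shape[1]]
    return out / size ** 2


def method_noise(image, restored):
    """Method noise: input image minus its denoised version."""
    return np.asarray(image, dtype=float) - np.asarray(restored, dtype=float)


step = np.full((16, 16), 50.0)
step[:, 8:] = 150.0
mn = method_noise(step, mean_filter(step))
print(np.abs(mn).max(axis=0))  # nonzero only in columns 7 and 8, at the edge
```

The mean filter leaves flat regions untouched but removes part of the edge. That edge energy appears in the method noise as image structure, which is the over-smoothing signature visible in method-noise figures such as Figs. 5 and 6.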

6 Conclusion

This paper proposes a novel method for evaluating the similarity of noisy image patches. The method combines the cross-angle and Euclidean distance between the singular-value vectors of the patches and effectively alleviates the interference of noise. It is then applied to NLM denoising. Experimental results show that, compared with several classical denoising algorithms, SVDNLM effectively eliminates noise, retains more details, achieves higher PSNR and SSIM values, and greatly improves the performance of NLM.

References

Fang, Y., Yan, J., Liu, J., Wang, S., Li, Q., Guo, Z.: Objective quality assessment of screen content images by uncertainty weighting. IEEE Trans. Image Process. 26, 2016–2027 (2017)

Zhang, Y., Ngan, K.N.: Objective quality assessment of image retargeting by incorporating fidelity measures and inconsistency detection. IEEE Trans. Image Process. 26(12), 5980–5993 (2017)

Bampis, C.G., Li, Z., Moorthy, A.K., Katsavounidis, I., Aaron, A., Bovik, A.C.: Study of temporal effects on subjective video quality of experience. IEEE Trans. Image Process. 26(11), 5217–5231 (2017)

Claudio, E.D.D., Jacovitti, G.: A detail-based method for linear full reference image quality prediction. IEEE Trans. Image Process. 27(1), 179–193 (2018)

Wang, H., Fu, J., Lin, W., Hu, S., Kuo, C.C.J., Zuo, L.: Image quality assessment based on local linear information and distortion-specific compensation. IEEE Trans. Image Process. 26(2), 915–926 (2016)

Zhang, C., Cheng, W., Hirakawa, K.: Corrupted reference image quality assessment of denoised images. IEEE Trans. Image Process. 28(4), 1732–1747 (2019)

Rohil, M.K., Gupta, N., Yadav, P.: An improved model for no-reference image quality assessment and a no-reference video quality assessment model based on frame analysis. Signal Image Video Process. 14, 205–213 (2020)

Wang, Z., Bovik, A.C.: Mean squared error: love it or leave it? A new look at signal fidelity measures. IEEE Signal Process. Mag. 26(1), 98–117 (2009)

Horé, A., Ziou, D.: Image quality metrics: PSNR vs. SSIM. In: 2010 International Conference on Pattern Recognition, IEEE Computer Society, pp. 2366–2369 (2010)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Tan, H.L., Li, Z., Tan, Y.H., Rahardja, S., Yeo, C.: A perceptually relevant MSE-based image quality metric. IEEE Trans. Image Process. 22(11), 4447–4459 (2013)

Md, S.K., Appina, B., Channappayya, S.S.: Full-reference stereo image quality assessment using natural stereo scene statistics. IEEE Signal Process. Lett. 22(11), 1985–1989 (2015)

Wang, F., Sun, X., Guo, Z., Huang, Y., Fu, K.: An object-distortion based image quality similarity. IEEE Signal Process. Lett. 22(10), 1534–1537 (2015)

Oszust, M.: No-reference image quality assessment using image statistics and robust feature descriptors. IEEE Signal Process. Lett. 24(11), 1656–1660 (2017)

Oszust, M.: Local feature descriptor and derivative filters for blind image quality assessment. IEEE Signal Process. Lett. 26(2), 322–326 (2019)

Ma, K., Liu, W., Liu, T., Wang, Z., Tao, D.: dipIQ: blind image quality assessment by learning-to-rank discriminable image pairs. IEEE Trans. Image Process. 26(8), 3951–3964 (2017)

Ma, K., Liu, W., Zhang, K., Duanmu, Z., Wang, Z., Zuo, W.: End-to-end blind image quality assessment using deep neural networks. IEEE Trans. Image Process. 27(3), 1202–1213 (2018)

Vega, M.T., Mocanu, D.C., Famaey, J., Stavrou, S., Liotta, A.: Deep learning for quality assessment in live video streaming. IEEE Signal Process. Lett. 24(6), 736–740 (2017)

Bosse, S., Maniry, D., Müller, K.R., Wiegand, T., Samek, W.: Deep neural networks for no-reference and full-reference image quality assessment. IEEE Trans. Image Process. 27(1), 206–219 (2018)

Shan, W., Liu, P., Mu, L., Cao, C., He, G.: Hyperspectral image denoising with dual deep CNN. IEEE Access 7, 171297–171312 (2019)

Yang, Y., Ping, Z., Ma, F., Wang, Y.: Fusion of hyperspectral and multispectral images with sparse and proximal regularization. IEEE Access 7, 186352–186363 (2019)

Zhang, J., Lu, Z., Li, M., Wu, H.: GAN-based image augmentation for finger-vein biometric recognition. IEEE Access 7, 183118–183132 (2019)

Xue, S., Qiu, W., Liu, F.: Faster image super-resolution by improved frequency-domain neural networks. Signal Image Video Process. 14, 257–265 (2020)

Wang, Y., Wang, J., Song, X., Han, L.: An efficient adaptive fuzzy switching weighted mean filter for salt-and-pepper noise removal. IEEE Signal Process. Lett. 23(11), 1582–1586 (2016)

Buades, A., Coll, B., Morel, J.M.: A review of image denoising algorithms, with a new one. SIAM J. Multiscale Model. Simul. 4(2), 490–530 (2005)

Chen, J., Zhan, Y., Cao, H.: Adaptive sequentially weighted median filter for image highly corrupted by impulse noise. IEEE Access 7, 158545–158556 (2019)

Liu, X., Chen, Y.: NLTV-Gabor-based models for image decomposition and denoising. Signal Image Video Process. 14, 305–313 (2020)

Lu, L., Jin, W., Wang, X.: Non-local means image denoising with a soft threshold. IEEE Signal Process. Lett. 22(7), 833–837 (2015)

Souidene, W., Megrhi, S., Beghdadi, A., Amar, C.B.: Perceptual non local means (P-NLM) denoising. In: International Symposium on Communications, Control and Signal Processing (2012)

Lai, R., Dou, X.: Improved non-local means filtering algorithm for image denoising. Int. Cong. Image Signal Process. 2, 720–722 (2010)

Glasner, D., Bagon, S., Irani, M.: Super-resolution from a single image (2009)

Rajwade, A., Rangarajan, A., Banerjee, A.: Image denoising using the higher order singular value decomposition. IEEE Trans. Pattern Anal. Mach. Intell. 35(4), 849–862 (2013)

Jha, S.K., Yadava, R.D.S.: Denoising by singular value decomposition and its application to electronic nose data processing. IEEE Sens. J. 11(1), 35–44 (2011)

Aharon, M., Elad, M., Bruckstein, A.: K-SVD: an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 54(11), 4311–4322 (2006)

Yuan, F., Huang, L.-F., Yao, Y.: Algorithm for image quality measurement using singular value decomposition. In: IET Conference on Wireless, Mobile and Sensor Networks (2007)

Mansouri, A., Aznaveh, A.M., Azar, F.T., Jahanshahi, J.A.: Image quality assessment using the singular value decomposition theorem. Opt. Rev. 16(2), 49–53 (2009)

Van De Ville, D., Kocher, M.: SURE-based non-local means. IEEE Signal Process. Lett. 16(11), 973–976 (2009)

Matan, P., Michael, E., Hiroyuk, T., Peyman, M.: Generalizing the nonlocal-means to super-resolution reconstruction. IEEE Trans. Image Process. 18(1), 36–51 (2009)

Zhang, K., Gao, X., Tao, D., Li, X.: Single image super-resolution with non-local means and steering kernel regression. IEEE Trans. Image Process. 21(11), 4544–4556 (2012)

Romano, Y., Protter, M., Elad, M.: Single image interpolation via adaptive nonlocal sparsity-based modeling. IEEE Trans. Image Process. 23(7), 3085–3098 (2014)

Deng, C., Tian, W., Wang, S., et al.: Structural similarity based single image super-resolution with nonlocal regularization. Optik 125, 4005–4008 (2014)

About this article

Cite this article

Wang, Y., Song, X., Chen, K. et al. A novel singular value decomposition-based similarity measure method for non-local means denoising. SIViP 16, 403–410 (2022). https://doi.org/10.1007/s11760-021-01948-9