Abstract

Direction-of-arrival (DOA) estimation of multiple emitters with sensor arrays has been a hot topic in the area of signal processing during the past decades. Among the existing DOA estimation methods, the subspace-based ones have attracted a lot of research interest, mainly due to their satisfying performance in direction estimation precision and super-resolution of temporally overlapping signals. However, subspace-based DOA estimation methods usually contain procedures of covariance matrix decomposition and refined spatial searching, which are computationally much demanding and significantly deteriorate the computational efficiency of these methods. Such a drawback in heavy computational load of the subspace-based methods has further blocked the application of them in practical systems. In this paper, we follow the major process of the subspace-based methods to propose a new DOA estimation algorithm, and devote ourselves to reduce the computational load of the two procedures of covariance matrix decomposition and spatial searching, so as to improve the overall efficiency of the DOA estimation method. To achieve this goal, we first introduce the propagator method to realize fast estimation of the signal-subspace, and then establish a DOA-dependent characteristic polynomial equation (CPE) with its order equaling the number of incident signals (which is generally much smaller than that of array sensors) based on the signal-subspace estimate. The DOA estimates are finally obtained by solving the low-dimensional CPE. The computational loads of both the subspace estimation and DOA calculation procedures are thus largely reduced when compared with the corresponding procedures in traditional subspace-based DOA estimation methods, e.g., MUSIC. Theoretical analyses and numerical examples are carried out to demonstrate the predominance of the proposed method in both DOA estimation precision and computational efficiency over existing ones.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The problem of direction-of-arrival (DOA) estimation with sensor arrays is widely studied in various areas [1,2,3,4], including radar, sonar, astronomy and seismic detection. Various methods have been proposed to deal with this problem, which can be coarsely separated into three categories, i.e., the spectral ones [5,6,7,8,9,10,11,12], the subspace-based ones [13,14,15,16,17,18,19] and the sparsity-inducing ones [20,21,22,23,24,25,26,27,28]. The methods based on spatial spectrum analyzing were proposed much earlier before the others, but they perform not very well in DOA estimation precision and simultaneous signal resolution [5,6,7,8,9,10,11,12]. Thus, they were replaced by the subspace-based ones quickly after the proposal of MUSIC [13]. The sparsity-inducing methods have attracted much research attention recently. They have been demonstrated in various numerical experiments to surpass their counterparts in DOA estimation precision and adaption to demanding signal environments with low signal-to-noise ratio (SNR) or limited snapshots [20,21,22,23,24,25,26,27,28]. However, their shortcoming in heavy computational load is also very significant.

The subspace-based methods perform appropriately between the spectrum and the sparsity-based ones in DOA estimation precision and computational efficiency, and they are preferred by most researchers among the existing methods in the past decades, mainly due to their conciseness in implementation, moderate performance in direction estimation precision and super-resolution of spatially adjacent emitters [13,14,15,16,17,18,19]. Although the traditional subspace-based DOA estimation methods, e.g., MUSIC [5], are computationally far more efficient than the sparsity-inducing ones [20,21,22,23,24,25,26,27,28], they still contain two computationally consuming procedures, i.e., eigen-decomposition of the array output covariance matrix and refined spatial searching. The computational loads of the two procedures aggravate rapidly with the increase in array sensor number and enhancement of required DOA estimation precision. Sufficient literatures have contributed much to improve the computational efficiencies of these two procedures. A large amount of them make use of special geometries of particular arrays and use numerical algorithms to replace refined spatial searching, such as root-MUSIC [14] and ESPRIT [15], so as to improve the efficiency of the DOA calculation procedure. For reducing the computational load of the subspace estimation procedure, the most widely studied technique is the propagator method (PM) [29], which substitutes algebraic calculation for matrix decomposition to obtain estimates of the signal- and/or noise-subspace of the array outputs.

Although the existing fast methods have gone some steps ahead in saving the computational load of DOA estimation, they still have an obvious shortcoming. That is, they generally consider only one of the two computationally consuming procedures, and the computational load for DOA estimation is not saved as a whole. A straightforward idea for solving such a problem is to combine two algorithms which aim at fast subspace estimation and DOA calculation, respectively, so that the computational efficiencies of both procedures can be improved all together, and the DOA estimation is thus implemented in shorter time. By now, such a combinational idea has been successfully applied to solve the 2-D DOA estimation problem in [30, 31], which introduce both PM and ESPRIT to realize fast 2-D direction finding. But this method increases largely the dimension of the array output when estimating the propagator, and thus, the computational loads of the successive subspace estimation and numerical DOA calculation are aggravated unexpectedly. In order to further improve the computational efficiency of DOA estimation, we present a PM-based low-dimensional (LD) equation rooting method in this paper. The propagator is used to realize fast signal-subspace estimation, and the signal-subspace estimate is then exploited to establish a characteristic polynomial (CP) equation with a very low order, which equals the number of the incident signals. The DOA estimates are finally obtained by solving this low-dimensional equation. By following such an implementation flow, the computational loads within both procedures of subspace estimation and DOA calculation are significantly saved, and the computational efficiency for DOA estimation is improved as a whole. The rest of the paper mainly consists of four parts. Section 2 formulates the DOA estimation problem and reviews the implementation process of traditional subspace-based DOA estimation methods. Section 3 proposes the new DOA estimation method step by step with detailed derivations and implementations and also compares the computational efficiency of the proposed method with that of the existing methods theoretically. Section 4 demonstrates the predominance of the proposed method over existing ones via numerical examples, and Sect. 5 concludes the whole paper.

2 Problem formulation

Suppose that K signals impinge onto an M-element uniform linear array (ULA) consisting of omnidirectional sensors from directions of \(\varTheta =[\theta _1,\ldots ,\theta _K]\) simultaneously, the array output at time t is

where \(\mathbf A (\varTheta )=[\mathbf a (\theta _1),\ldots ,\mathbf a (\theta _K)]\) is the array responding matrix, each matrix column \( \mathbf a (\theta _k)=[1,\hbox {e}^{j\psi _k},\ldots ,\hbox {e}^{j(M-1)\psi _k}]^\mathrm{T} \) is the array responding vector of a single signal, \( \psi _k=2{\pi }D{\sin }\theta _k/{\lambda } \) represents the phase shift of the kth signal when propagating between adjacent array sensors, D is the spacing between adjacent array sensors, \( \lambda \) is the signal wavelength, which is assumed to be equal for different signals as there is usually a spectral filter before the digital sampler letting in only signals of identical frequencies, \( \mathbf s (t)=[s_1(t),\ldots ,s_K(t)]^\mathrm{T} \) is the signal waveform vector consisting of the K signal samples at time t and \( \mathbf v (t)=[v_1(t),\ldots ,v_M(t)]^\mathrm{T} \) is the additive array noise, which is generally assumed to be independent between different array sensors and identically Gaussian distributed.

If N snapshots, denoted by \( \mathbf X =[\mathbf x (1),\ldots ,\mathbf x (N)] \), are collected by the array receiver during the observation interval, the array output covariance can be estimated as follows [13]:

The estimation precision of the covariance matrix improves when more snapshots are collected, i.e., as N increases. When N approaches infinity, the estimate is disturbance-free as follows:

where \( \mathbf R _s=\lim _{N \rightarrow +\infty } \) \({\frac{1}{N}\sum _{t=1}^{N}{} \mathbf s (t)\mathbf s ^H(t)} \) is the disturbance-free covariance matrix of the signal waveform vector, \( \sigma ^2 \) is the variance of the additive noise, \( \mathbf I _M \) is the unit matrix with dimension \( M \times M \).

When N is moderately large, \( \hat{\mathbf{R }} \) well approximates \( \mathbf R \), and the randomness of the additive noise is significantly reduced from the array covariance matrix in the statistical sense, as is shown in the expression of \( \mathbf R \). The effect of the array noise in the covariance matrix estimate can thus be well separated from the signals. Based on the fast developing of digital samplers, we are now able to collect a great amount of samples even for temporally very short signals, such as radar pulses which may be as short as several microseconds.

As the signal components are statistically separated from the additive noise in \( \mathbf R \), and \( \hat{\mathbf{R }} \) well approximates \( \mathbf R \) when the snapshot number is adequately large, researchers have been trying different ways to extract the signal components from the noisy covariance matrix estimate and then obtain DOA estimates based on them. Subspace-based methods, such as MUSIC [13], achieve this goal by decomposing the covariance matrix into signal- and noise-subspaces, which are orthogonal to each other and the signal-subspace spans a hypersurface identical to that spanned by the array responding vectors.

In the subspace-based methods, \( \hat{\mathbf{R }} \) is eigen-decomposed to estimate the signal-subspace \( \hat{\mathbf{U }}_\mathrm{s} \) and noise-subspace \( \hat{\mathbf{U }}_\mathrm{n} \), i.e.,

where \( \hat{\varSigma }_\mathrm{s} \) and \( \hat{\varSigma }_\mathrm{n} \) are diagonal matrices with K larger and \( M-K \) smaller eigenvalues on their diagonals in the descending order.

It can be concluded through theoretical derivations that [13]

where \( \mathbf U _\mathrm{s} \) and \( \mathbf U _\mathrm{n} \) are signal- and noise-subspaces of \( \mathbf R \), they can also be deemed as perturbation-free counterparts of \( \hat{\mathbf{U }}_\mathrm{s} \) and \( \hat{\mathbf{U }}_\mathrm{n} \), respectively, \( \hbox {span}\{\mathbf{Z }\} \) represents the space spanned by the columns of \( \mathbf Z \).

When adequately many snapshots are collected, \( \hat{\mathbf{R }} \) approximates \( \mathbf R \) well and \( \hat{\mathbf{U }}_\mathrm{n} \) also approximates \( \mathbf U _\mathrm{n} \) well; thus, \( \mathbf A (\varTheta ) \) is orthogonal to \( \hat{\mathbf{U }}_\mathrm{n} \) with minute deviations. Such an approximate orthogonality between the array responding vectors of the incident signals and the estimated noise-subspaces is then exploited for DOA estimation as follows:

The cost function within the brace in (6) is calculated by checking the candidate directions within the potential spatial domain of the incident signals. When \( \theta \) approaches one of the K signal directions, the orthogonality between \( \mathbf a (\theta ) \) and \( {\hat{\mathbf{U }}}_\mathrm{n} \) is enhanced, and the cost function is maximized locally. Since the space spanned by \( \hbox {span}\{\mathbf{U }_\mathrm{s}\} \) has a dimension of \( M-K \), its orthogonal space has a dimension of K, thus the cost function will not maximize at directions other than the K ones corresponding to the incident signals.

In order to catch the maxima in (6) with satisfying precision, and also obtain refined DOA estimates, the spatial search procedure in (6) is generally implemented with a very small directional step, which may be as small as 0.1\(^{\circ }\) or 0.01\(^{\circ }\). The smaller the directional step is, the more testings are required to complete spatial searching, and the heavier the computational load of this procedure will be. In addition, the eigen-decomposition procedure is also computationally consuming, especially when the array sensor number is very large. The two procedures thus aggravate the computational load of the subspace-based methods significantly. In order to overcome the shortcoming of the subspace-based methods in heavy computational load, researchers have made great efforts to propose more efficient optional methods. PM [29] and root-MUSIC [14] are two representative ones among the most widely studied fast DOA estimation methods, and they can be combined together to further reduce the computational load for DOA estimation [30, 31]. But as root-MUSIC requires the solving of a (\(2 M -2\))th-order equation, its computational load is still significant when the array is large.

3 Computationally efficient direction finding

In this section, we first exploit the technique of PM to obtain a signal-subspace estimate of the array output covariance matrix, and then establish a Kth-order DOA-dependent equation for DOA calculation based on the signal-subspace estimate.

3.1 Fast subspace estimation using PM

The technique of PM realizes fast subspace estimation via numerical calculations by making use of the array output covariance matrix, which avoids the computationally heavy procedure of matrix eigen-decomposition. In the previous literatures, the noise-subspace is considered more often than the signal-subspace, because the former can be exploited straightforwardly for successive DOA estimation in subspace-based direction finding methods.

The noise-subspace estimate given by PM is [29]

where \( \hat{\mathbf{P }}=(\hat{\mathbf{R }}_1^H\hat{\mathbf{R }}_1)^{-1}\hat{\mathbf{R }}_1^H\hat{\mathbf{R }}_2 \) with \( \hat{\mathbf{R }}_1 \) and \( \hat{\mathbf{R }}_2 \) being the submatrices consisting of the first K and last \( M-K \) columns of \( \hat{\mathbf{R }} \) and \( \mathbf I _{M-K} \) stands for the \( (M-K)\times (M-K) \) identity matrix.

The noise-subspace estimate obtained from (7) is then used for DOA estimation by exploiting certain orthogonality testing method such as refined spatial searching, and the DOA estimates are given by

It should be noted that further orthogonalization of the columns of \( \hat{\mathbf{Q }}_\mathrm{n} \) in (7) helps to improve the precision of the noise-subspace estimate and the final DOA estimates, and the variant method is named orthogonal propagator method (OPM) [29]. But as the orthogonalization process aggravates the computational load of PM, which deviates from the major goal of the research in this paper, we do not take it into account.

In our method, it is the signal-subspace, instead of the noise-subspace, that will be used for successive DOA estimation; thus, we extend the PM technique mentioned above for signal-subspace estimation. According to the orthogonality between the signal- and the noise-subspaces, the signal-subspace estimate can be easily obtained based on (7) as follows:

where \( \hat{\mathbf{P }} \) is defined in the same way as that in (7) and \( \mathbf I _K \) represents the \( K \times K \) identity matrix.

For notational convenience, we also denote the perturbation-free counterpart of \( \hat{\mathbf{Q }}_\mathrm{s} \) by \( \mathbf Q _\mathrm{s} \).

3.2 Fast DOA estimation via low-dimensional equation rooting

After estimating the signal-subspace according to (9), our idea of fast DOA estimation is implemented by establishing and solving a low-dimensional equation with roots of \( \hbox {e}^{j\psi _k} (k=1,\ldots ,K) \). Denote the coefficients of such an equation by \( b_{K-1},\ldots ,b_0 \), i.e.,

Also denote \( \mathbf b =[b_0,b_1,\ldots ,b_{K-1}]^\mathrm{T} \) and

By setting \( \alpha =\hbox {e}^{j\psi _k} \) for \( k=1,\ldots ,K \) in (10) and multiplying both sides with \( \hbox {e}^{jJ\psi _k} \), one can yield the following system of homogeneous equations,

In can then be concluded by combining (11), (12) and the formulation of \( \mathbf A (\varTheta ) \) that

As \( \mathbf Q _\mathrm{s} \) is a substitution of \( \mathbf A (\varTheta ) \), it also holds that

Similarly, by setting \( \alpha =\hbox {e}^{j\psi _k} \) for \( k=1,\ldots ,K \) in (10) and multiplying both sides with \( \hbox {e}^{-j(J+K)\psi _k} \), one can yield another system of homogeneous equations as follows:

Thus, the equalities of \( \mathbf B \tilde{\mathbf{A }}(\varTheta )=\mathbf 0 _{(M-K) \times 1} \) also hold similar to (15), which can be deemed as a conjugate form of the result presented in [32], together with the following equalities,

where \( \tilde{\mathbf{A }}(\varTheta ) \) and \( \tilde{\mathbf{Q }}_\mathrm{s} \) are variants of \( \mathbf A (\varTheta ) \) and \( \mathbf Q _\mathrm{s} \) by reversing the order of the rows and conjugating the matrix elements.

Denote the column vector formed by the mth row elements of \( \mathbf Q _\mathrm{s} \) by \( \mathbf q _m \), i.e., \( \mathbf q _m=[\mathbf Q _\mathrm{s}(m,1),\ldots ,\mathbf Q _\mathrm{s}(m,K)]^\mathrm{T} \), and then, (12) can be rewritten as follows

or more compactly as

where \( \hbox {vec}(\varGamma ) \) stands for the column vector established by concatenating the columns of \( \varGamma \).

Similarly, (15) can be rewritten as follows

where \( (~)^* \) is the conjugate operator.

Denote \( \varSigma _l=[\mathbf q _l,\mathbf q _{l+1},\ldots ,\mathbf q _{M-K+l-1}] \) and \( \varSigma _l^{'}=[\mathbf q _{M-K+l-1}^{*},\ldots ,\mathbf q _l^{*}] \), and then, the following equations can be obtained by combining (18) and (19),

In order to simplify the notations, we further denote \( \varPsi =[\hbox {vec}([\varSigma _1,\varSigma _{K+1}^{'}]),\ldots ,\hbox {vec}([\varSigma _K,\varSigma _2^{'}])] \) and \( \gamma =\hbox {vec}([\varSigma _{K+1},\varSigma _1^{'}]) \), and then, an estimate of the coefficients of the equation in (10) can be derived from (20) as follows:

As only finite snapshots can be collected in practical applications, the signal-subspace estimate is always perturbation contaminated, and thus, only approximate equation coefficients can be obtained. We denote the perturbated counterpart of (21) as follows:

where \( \hat{\varPsi }=[\hbox {vec}([\hat{\varSigma }_1,\hat{\varSigma }_{K+1}^{'}]),\ldots ,\hbox {vec}([\hat{\varSigma }_K,\hat{\varSigma }_2^{'}])] \), \( \hat{\gamma }=\hbox {vec}([\hat{\varSigma }_{K+1},\hat{\varSigma }_1^{'}]) \), \( \hat{\varSigma }_l=[\hat{\mathbf{q }}_l,\hat{\mathbf{q }}_{l+1},\ldots ,\hat{\mathbf{q }}_{M-K+l-1}] \), \( \hat{\varSigma }_l^{'}=[\hat{\mathbf{q }}_{M-K+l-1}^{*},\ldots ,\hat{\mathbf{q }}_l^{*}] \) and \( \hat{\mathbf{q }}_m=[\hat{\mathbf{Q }}_\mathrm{s}(m,1),\ldots ,\hat{\mathbf{Q }}_\mathrm{s}(m,K)]^\mathrm{T} \).

The coefficient estimates can then be used to establish the characteristic equation as

Solving this equation yields its K roots \( \hat{\alpha }_k \) for \( k=1,\ldots ,K \), the signal directions are then estimated according to

where \( \hbox {Ang}(\cdot ) \) means taking the angle of a complex scalar.

3.3 Computational load analysis

It can be concluded from the implementation process of the new method that, with the signal-subspace being estimated using the PM technique, only numerical calculations as shown in (22) are needed to establish the Kth-order characteristic equation, which is then solved with efficient algorithms to obtain the signal DOA estimates. The estimation of the signal-subspace requires \( KM^2+K^2(M-K)+O(K^3) \) complex multiplications, the estimation of the equation coefficients requires \( 2K(K+1)(M-K)+O(K^3) \) complex multiplications, and the solving of the Kth-order equation requires only O(K) calculations by using numerical methods such as the gradient descent algorithm. As the number of array sensors is generally far larger than that of incident signals, i.e., \( M \gg K \), the computational complexity of the proposed method can be approximated as \( KM^2 \) complex multiplications in total by neglecting the lower-order items.

According to similar analyses, it can also be concluded that the original MUSIC algorithm requires approximately \( O(M^3) \) complex multiplications to estimate the noise-subspace and much more calculations to scan the potential spatial scope with a refined step to estimate the signal directions. The method of root-MUSIC is computationally more efficient than MUSIC, as it replaces the spatial scanning procedure with much simpler equation rooting, but it still needs to eigen-decompose the array output covariance to estimate the noise-subspace. Its computation load is about \( O(M^3) \) complex multiplications, which is much heavier than that of the proposed method as \( M \gg K \).

4 Numerical examples

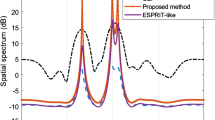

This section carries out two groups of simulations, so as to compare the performance of the proposed method (denoted by PM + LD\(~-~\)rMUSIC) and the method combining PM and root-MUSIC (denoted by PM + rMUSIC) in detection and DOA estimation. Signal environments with different signal-to-noise ratios (SNR) and inter-signal angular distances are considered.

Assume that two equally powered signals impinge onto an eight-element half-wavelength interspaced ULA, 1000 snapshots are collected in each simulation, and 1000 independent trials are carried out for performance analysis in each scenario. As both methods are numerical ones, the cases when the derivations of both DOA estimates from the true signal directions are smaller than 1\(^\circ \) are deemed as correct detection, and only these cases are considered for DOA estimation precision analysis.

In the first group of simulations, we fix the directions of the two incident signals at 5\(^\circ \) and 10\(^\circ \) and vary the SNR of each signal from 5dB to 15dB, and the correct detection probabilities of PM + rMUSIC and PM + LD\(~-~\)rMUSIC are given in Fig. 1, and the root mean square errors (RMSE) of the DOA estimates of both methods for the 5\(^\circ \) signal are presented in Fig. 2, while that for the 10\(^\circ \) signal is not listed as it is similar to that of the 5\(^\circ \) one.

In the second group of simulations, we fix the SNR of both signals at 10 dB and the direction of the first source at 5\(^\circ \) and vary the angular distance between the two sources from 3\(^\circ \) to 7\(^\circ \), and the correct detection probabilities and the DOA estimation RMSE of the 5\(^\circ \) signal of both PM + rMUSIC and PM + LD\(~-~\)rMUSIC are shown for comparison in Figs. 3 and 4.

Figures. 1 and 3 show that the proposed method surpasses the method of PM + rMUSIC in detection performance, especially when the SNR is low and the signals are spatially much adjacent. And when the SNR is lower than 10 dB or the angular distance between the two signals is smaller than 5\(^\circ \), the proposed method obtains DOA estimates of higher precision than PM + rMUSIC. But as the SNR further increases or the signal angular distance becomes larger, PM + rMUSIC exceeds the proposed method in DOA estimation precision, as shown in Figs. 2 and 4.

As only the cases with correct detection are considered when obtaining the RMSE statistics of the DOA estimates, the predominance of the proposed method in detection is excluded in Figs. 2 and 4. Even so, the proposed method still shows a more satisfying (or comparable in some cases) DOA estimation precision when compared with PM + rMUSIC.

5 Conclusions

In order to improve the computational efficiency of DOA estimation as much as possible, this paper introduces the PM technique to give a fast signal-subspace estimation of the array outputs and then exploits the signal-subspace estimate to establish a low-dimensional characteristic equation, whose order equals the number of the incident signals, and the signal directions are finally obtained by solving this equation. By taking all the DOA estimation procedures into consideration, the proposed method improves the computational efficiency for DOA estimation as a whole. Two groups of simulations are carried out in different scenarios of varying SNR and inter-signal angular distance to compare the performance of the proposed method and another computational efficient one, which combines PM and root-MUSIC directly, in terms of detection probability and DOA estimation precision. The proposed method surpasses the method of PM + rMUSIC in performance in most of the scenarios, especially when the SNR is low and the inter-signal angular distance is small.

References

Han, Y., Tran, V.: Recursive Bayesian beamforming with uncertain projected steering vector and strong interferences. Signal Image Video Process 10(5), 975–982 (2016)

Tao, J., Fan, Q., Yu, F.: Stokes parameters and DOAs estimation of partially polarized sources using a EM vector sensor. Signal Image Video Process. 10(8), 1–8 (2016)

Ta, S., Wang, H., Chen, H.: Two-dimensional direction-of-arrival estimation and pairing using L-shaped arrays. Signal Image Video Process. 10(8), 1511–1518 (2016)

Wu, L., Liu, Z., Jiang, W.: A direction finding method for spatial optical beam-forming network based on sparse Bayesian learning. Signal Image Video Process. 11(2), 203–209 (2017)

Capon, J.: High-resolution frequency-wavenumber spectrum analysis. Proc. IEEE 57(8), 1408–1418 (1969)

Marzetta, T.L.: A new interpretation for Capon’s maximum likelihood method of frequency-wavenumber spectrum estimation. IEEE Trans. Acoust. Speech Signal Process. 31, 445–449 (1983)

Burg, J.P.: Maximum entropy spectral analysis. In: Proceeding of 37th Annual International SEG meeting, Oklahoma City (1967)

Capon, J.: Maximum-likelihood spectral estimation. In: Haykin, S. (ed.) Nonlinear Methods of Spectral Analysis, pp. 155–179. Springer, New York (1979)

Cox, H., Zeskind, R.M., Owen, M.M.: Robust adaptive beamforming. IEEE Trans. Acoust. Speech Signal Process. ASSP 35, 1365–1376 (1987)

Li, J., Stoica, P., Wang, Z.: On robust Capon beamforming and diagonal loading. IEEE Trans. Signal Process. 51(7), 1702–1715 (2003)

Stoica, P., Wang, Z., Li, J.: Robust Capon beamforming. IEEE Signal Process. Lett. 10(6), 172–175 (2003)

Somasundaram, S.D.: Linearly constrained robust Capon beamforming. IEEE Trans. Signal Process. 60(11), 5845–5856 (2012)

Schmidt, R.O.: Multiple emitter location and signal parameter estimation. IEEE Trans. Antennas Propag. AP 34, 276–280 (1986)

Barabell, A.J.: Improving the resolution performance of eigenstructure-based direction-finding algorithms. In: Proceedings of the International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Boston, MA, pp. 336–339 (1983)

Roy, R., Kailath, T.: ESPRIT—estimation of signal parameters via rotational invariance techniques. IEEE Trans. Acoust. Speech Signal Process. 37(7), 984–995 (1989)

Rao, B.D., Hari, K.V.S.: Performance analysis of Root-MUSIC. IEEE Trans. Acoust. Speech Signal Process. 37(12), 1939–1949 (1989)

Stoica, P., Nehorai, A.: MUSIC, maximum likelihood, and Cramer–Rao bound. IEEE Trans. Acoust. Speech Signal Process. 37(5), 720–741 (1989)

Wang, H., Kaveh, M.: On the performance of signal-subspace processing—part I: narrow-band systems. IEEE Trans. Acoust. Speech Signal Process. 34(5), 1201–1209 (1986)

Mestre, X., Lagunas, M.A.: Modified subspace algorithms for DoA estimation with large arrays. IEEE Trans. Signal Process. 56(2), 598–614 (2008)

Liu, Z.-M., Huang, Z.-T., Zhou, Y.-Y.: Direction-of-arrival estimation of wideband signals via covariance matrix sparse representation. IEEE Trans. Signal Process. 59(9), 4256–4270 (2011)

Liu, Z.-M., Huang, Z.-T., Zhou, Y.-Y.: An efficient maximum likelihood method for direction-of-arrival estimation via sparse Bayesian learning. IEEE Trans. Wireless Commun. 11(10), 3607–3617 (2012)

Liu, Z.-M., Zhou, Y.-Y.: A unified framework and sparse Bayesian perspective for direction-of-arrival estimation in the presence of array imperfections. IEEE Trans. Signal Process. 61(15), 3786–3798 (2013)

Malioutov, D., Cetin, M., Willsky, A.S.: A sparse signal reconstruction perspective for source localization with sensor arrays. IEEE Trans. Signal Process. 53(8), 3010–3022 (2005)

Hyder, M.M., Mahata, K.: Direction-of-arrival estimation using a mixed L2,0 norm approximation. IEEE Trans. Signal Process. 58(9), 4646–4655 (2010)

Liu, Z.-M., Huang, Z.-T., Zhou, Y.-Y.: Sparsity-inducing direction finding for narrowband and wideband signals based on array covariance vectors. IEEE Trans. Wireless Commun. 12(8), 3896–3907 (2013)

Wang, L., Zhao, L., Bi, G., Wan, C., Zhang, L., Zhang, H.: Novel wideband DOA estimation based on sparse Bayesian learning with dirichlet process priors. IEEE Trans. Signal Process. 64(2), 275–289 (2016)

Hawes, M., Mihaylova, L., Septier, F., Godsill, S.: Bayesian compressive sensing approaches for direction of arrival estimation with mutual coupling effects. IEEE Trans. Antennas Propag. 65(3), 1357–1368 (2017)

Liu, Z.-M., Guo, F.-C.: Azimuth and elevation estimation with rotating long-baseline interferometers. IEEE Trans. Signal Process. 63(9), 2405–2419 (2015)

Marcos, S., Marsal, A., Benidir, M.: The propagator method for source bearing estimation. Sig. Process. 42, 121–138 (1995)

Tayem, N., Kwon, H.M.: L-shape 2-dimensional arrival angle estimation with propagator method. IEEE Trans. Antennas Propag. 53(5), 1622–1630 (2005)

Shu, T., Liu, X., Lu, J.: Comments on L-shape 2-dimensional arrival angle estimation with propagator method. IEEE Trans. Antennas Propag. 56(5), 1502–1503 (2008)

Stoica, P., Sharman, K.C.: Novel eigenanalysis method for direction estimation. IEE Proc. F Radar Signal Process. 137(1), 19–26 (1990)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Shiying, T., Hongqiang, W. Propagator-based computationally efficient direction finding via low-dimensional equation rooting. SIViP 12, 83–90 (2018). https://doi.org/10.1007/s11760-017-1133-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11760-017-1133-4