Abstract

This paper examines the performance of improvement search as a function of the quality of the starting solution in the planar (or continuous) p-median problem. We show that using optimal solutions of the analogue discrete p-median problem as the starting solution for heuristic improvement algorithms, as recommended in the literature, can actually lead to inferior performance. That is, good starting solutions obtained in the discrete space with a fraction of the effort can actually be better, a counter-intuitive result that illustrates in a different context the less is more principle recently advocated in the literature.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

An efficient way to solve many evaluation and optimization problems is through discretization of the continuous model. For example, integration of a continuous function is evaluated by the Simpson rule or Gaussian quadrature formulas (Abramowitz and Stegun 1972). Ordinary differential equations are solved by the Runge–Kutta method (Runge 1895; Kutta 1901; Ince 1926). Many models that are based on normal distributions are solved by discretizing the distribution (e.g. Drezner and Zerom 2016). Aboolian et al. (2007) solved a competitive location model with concave demand by converting the continuous function to a sequence of discrete lines termed the TLA (tangent line approximation) method. Brimberg et al. (2017a) solved the p-median problem by injecting potential locations for the facility in an iterative approach. There are exceptions to this rule. For example, integer linear programming solutions may not be close to the continuous ones. However, it is reasonable to expect that solving multiple facility location problems by discretizing the problem formulation will be an effective approach. In this paper we investigate the application of discrete p-median solutions as starting solutions for solving the continuous p-median problem. We show that using the best discrete solutions as starting solutions for continuous optimization may not be the best strategy for planar p-median problems.

The continuous p-median problem (Drezner et al. 2016; Drezner and Salhi 2017), also known as the multi-source Weber problem (Brimberg et al. 2000; Kuenne and Soland 1972; Hansen et al. 1998), or continuous location-allocation problem (Love et al. 1988; Brimberg et al. 2008), requires finding p sites for facilities in Euclidean space in order to minimize a weighted sum of distances from a set of demand points (fixed points, existing facilities, customers) to their closest facility. Let \( X_{i} \) denote the location of facility \(i \in \{1,\ldots , p\}\), and \(A_j\) the known location of demand point \(j \in N=\{1,\ldots , n\}\). In the typical scenario, which is assumed here, the \(X_i = (x_i, y_i)\) and \(A_j = (a_j, b_j)\) for all i, j are points in the plane. As well, distances are assumed to be measured by the Euclidean norm, so that

The weights (or demands) at the \(A_j\)’s are given by \(w_j > 0, j \in \{1,\ldots , n\}\). We formalize the planar p-median problem as follows:

where \(X = \{X_1,\ldots , X_p\}\) denotes the set of location variables.

This model was originally proposed by Cooper (1963, 1964), who also observed that the objective function f(X) is non-convex, and may contain several local optima. The problem was later shown to be NP-hard (Megiddo and Supowit 1984; Garey and Johnson 1979; Kariv and Hakimi 1979). For recent reviews of the discrete p-median problem see Daskin and Maass (2015), and for the planar p-median problem see Brimberg and Hodgson (2011).

The single facility 1-median problem termed the Weber problem (Weber 1909) has a long history dating back to the French mathematician Pierre De Fermat of the 1600s. Recent reviews of the Weber problem are Wesolowsky (1993); Church (2019); Drezner et al. (2002).

The connection between the discrete p-median problem, where potential facility locations are restricted to a given set of nodes (or demand points), and its continuous counterpart, where the facility sites are modeled as unknown points in the plane, has been a topic of study since the introduction of the continuous problem by Cooper (1963, 1964). Hansen et al. (1998) propose a heuristic that first finds an optimal solution of the discrete problem, and then performs one step of continuous adjustment starting from this solution to obtain the final one. Very good computational results are reported, but as observed in Brimberg et al. (2000), the CPU time to find an optimal solution to the discrete p-median becomes prohibitively high for larger problem instances. In this paper we show, through extensive computational tests, a rather counter-intuitive result that good discrete starting solutions can actually be better than optimal (or best-known) ones. The implication is that heuristics that interface between discrete and continuous versions of the p-median (e.g., Brimberg et al. 2014), should not focus efforts on obtaining exact solutions in the discrete phase, but rather introduce some randomness by using multiple good discrete solutions as starting points for the continuous phase. This will save considerable execution time, and may, depending on the improving search, produce as good or better continuous solutions.

The remainder of the paper is organized as follows. In Sect. 2, a binary linear program is formulated to find the optimal solution to the discrete p-median problem. The formulation is extended to find the k best solutions to the discrete p-median problem using CPLEX recursively. A genetic algorithm is also presented to find ‘good quality’ discrete solutions, but still, notably inferior to the solutions to the discrete p-median problem. Section 3 presents extensive computational results followed by some discussion in Sect. 4. A summary of conclusions is presented in Sect. 5. The main conclusion is that, although good discrete starting solutions result in better continuous solution quality than random discrete solutions (i.e., poor ones), there is a point reached where further improvement of the discrete solution can be counter-productive in the continuous phase. That is, “good” can sometimes be better than “best”. This also corroborates the ‘less is more’ philosophy adopted in some recent papers on heuristic design (e.g., Mladenović et al. 2016; Brimberg et al. 2017b).

2 Generating starting solutions

We investigate an approach suggested in the literature (e.g., Hansen et al. 1998; Brimberg et al. 2014) of finding the best selection of p demand points out of n given points, to form the starting solution for locating p facilities in the plane \({\mathbb {R}}^2\). Let \(d_{ij}\) be the distance between demand points i and j. We minimize the value of the discrete objective function by selecting set P containing p out of the n demand points. The standard formulation for the discrete p-median problem is:

We also extended this idea by finding the second best, third best, etc., until say the \(k=100 \text {th}\) best, and then using each of these as starting solutions to solve the continuous p-median problem. Two approaches are tested: (i) finding the k best solutions exactly by a binary linear program, and (ii) finding heuristically good solutions by a genetic algorithm.

2.1 A binary linear programming formulation

The following formulation for finding the best set is similar to the one in ReVelle and Swain (1970); Kalczynski and Drezner (2020); Daskin (1995); Daskin and Maass (2015). Let \(x_j\in \{0,1\}\) be a binary variable. \(x_j=1\) if demand point j is selected for locating a facility, and zero otherwise. \(y_{ij}=1\) if selected demand point j is the closest facility to demand point i. The BLP (binary linear programming) optimization problem is:

For the mixed version MBLP (mixed binary linear program), \(0\le y_{ij}\le 1\) are continuous variables for \(i=1,\ldots , n\), \(j=1,\ldots ,n\). In the computational experiments we applied BLP as it required less CPU time than MBLP. In formulation (4) there are \(n^2+n\) variables, and \(n^2+n+1\) constraints not including the last three.

2.2 Finding the best k discrete solutions

Let \(P^{(1)}\) be the optimal set. In order to get the second best solution add the constraint

getting the second best set \(P^{(2)}\). Once the best \(k\ge 1\) sets are found, the \((k+1)^{th}\) best solution is obtained by adding to the original problem (4) k constraints:

2.3 A genetic algorithm

We create a population of pop solutions, such as \(pop=100\), and once the genetic algorithm terminates, we have a sorted list of solutions by the objective function value of the discrete p-median. We can apply up to pop of the best population members as starting solutions. We propose a variant of a genetic algorithm (Goldberg 2006; Holland 1975), which is similar to such algorithms that were designed and successfully applied to problems of selecting p items out of n with various objective functions (Alp et al. 2003; Berman et al. 2003; Drezner et al. 2020).

2.3.1 Outline of the genetic algorithm

Every population member is a list of p demand points where facilities are located. The iterations are repeated until the best population member is not improved in a pre-specified number of consecutive iterations N. In most experiments we used \(N=2p\) or 5p.

-

1.

A population of pop (we used \(pop=100\)) sets of p demand points is either randomly generated or generated by a construction algorithm (described below). Set \(iter=0\).

-

2.

Two population members are selected by the parents selection rule detailed below.

-

3.

An offspring is produced by the merging process detailed below.

-

4.

If the offspring is worse than the worst population member, or is identical to an existing population member, go to Step 7.

-

5.

If the offspring is better than the best population member, set \(iter=0\).

-

6.

The offspring replaces the worst population member.

-

7.

Set \(iter=iter+1\), and if \(iter\le N\), go to Step 2.

-

8.

The final population is the desired list of starting solutions. It can be sorted by the value of the discrete objective function, which is actually the same as the continuous objective function value.

The following parents selection rule was proposed in Drezner and Marcoulides (2003). It is based on the biological concepts of inbreeding and outbreeding depression (Edmands 2007; Fenster and Galloway 2000; Drezner and Drezner 2020). A successful offspring is formed in nature when the parents are not too close genetically, such as siblings, (inbreeding depression) or too dissimilar (outbreeding depression). We assume that \(2p<n\).

The Parents Selection Rule:

Define:

- s::

-

Number of potential second parents (a parameter). In Drezner and Marcoulides (2003), and later applications, \(s=2\) or 3 provided the best performance. A large value of s may lead to outbreeding depression. \(s=1\) is the “standard” parent selection rule of randomly selecting two parents. The selected individual is typically neither the most similar nor the most different member of the population. In nature, breeding with individuals that are genetically very similar (e.g. siblings, parents) tends to produce inferior offspring, termed inbreeding depression (Freeman et al. 2014). Conversely, breeding with individuals that are very different, also tends to be disadvantageous. This is termed outbreeding depression (Edmands 2007; Fenster and Galloway 2000).

- c::

-

Similarity count between two population members. It is the number of demand points that are included in both members.

The process:

-

1.

One population member is randomly selected as the first parent.

-

2.

\(s\ge 1\) potential second parents are randomly selected from the remaining \(pop-1\) population members.

-

3.

For each potential second parent, the similarity count with the first parent, c, is found.

-

4.

The potential second parent with the smallest value of c is selected with ties broken arbitrarily, so that the parents are as dissimilar as possible,.

The Merging Process:

-

1.

The combined set of demand points in the two parents is created. c points are common to the two parents; so there are \(2p-c\) points in the combined set.

-

2.

Randomly select c points, which are not in the combined set (out of the remaining \(n-(2p-c)\) points), and add them to the combined set. This is done to avoid the possibility that a “good” demand point is not present in any population member and thus will not be present in future generations. The extended set has 2p members.

-

3.

A reverse descent algorithm is applied on the extended set:

-

(a)

Select the point in the set, not among the c common points, whose removal increases the objective function the least.

-

(b)

The removal of points is repeated for p iterations, so that the set is reduced to p points defining the offspring.

-

(a)

2.3.2 Efficient calculation of the merging process

-

1.

The set P of 2p demand points for facility locations is given. The c members that are common to the two parents are moved to the beginning of the list and duplicates removed. Set the number of demand points in P to \(m=2p-c\).

-

2.

A vector D of the distances \(D_i\) between demand point i and the closest facility is found for \(i=1,\ldots ,n\) and the objective function F for the m-median problem is calculated.

-

3.

Set the facility to be removed \(j=c+1\), and the minimum increase in the objective function \(\Delta F\) to a large number.

-

4.

The increase in the value of the objective function \(\Delta F_j\) by removing facility j from P is calculated as follows:

-

(a)

Set \(\Delta F_j=0\), \(i=1\).

-

(b)

If \(d_{ij}>D_i\) go to Step 4D

-

(c)

If \(d_{ij}=D_i\), find the minimum distance \({\hat{D}}_i\) to the demand points in \(P-\{j\}\) and set \(\Delta F_j=\Delta F_j+w_i({\hat{D}}_i-D_i)\).

-

(d)

Set i to \(i+1\).

-

(e)

If \(i\le n\) go to Step 4b.

-

(a)

-

5.

If \(\Delta F_j<\Delta F\) set \(\Delta F=\Delta F_j\) and record the value of \({\hat{j}}=j\).

-

6.

Set \(j=j+1\).

-

7.

If \(j\le m\) go to Step 4

-

8.

Remove Facility \({\hat{j}}\) from P; set \(m=m-1\). Update the vector D: not changing it when \(d_{ij}>D_i\), and if \(d_{ij}=D_i\) replace \(D_i\) by \({\hat{D}}_i\) as in Step 4c.

-

9.

Update \(F=F+\Delta F\).

-

10.

If \(m>p\) go to Step 3. Otherwise, stop with the set P and its objective function F.

If the merging process is performed in a straightforward way, i.e., calculating the value of the objective function for each potential removal of demand point j from the set P, there are \(O(p^2)\) such evaluations and each requires O(np) operations for a total complexity of \(O(np^3)\). In the efficient calculations: Step 4b requires O(1) operations, and Step 4c requires O(p) operations but is performed on average only \(\frac{1}{p}\) of the time. Therefore, each evaluation of the objective function is of complexity O(n). The number of the objective function calculations remains the same, reducing the complexity to \(O(np^2)\).

2.4 Starting solutions for the genetic algorithm

We tested two starting solution approaches for the discrete problem: a random selection of p demand points (RAND), and the construction algorithm (CONS) suggested in Brimberg and Drezner (2020); Kalczynski et al. (2020b, 2020a). The construction algorithm, which applies the GRASP approach (Feo and Resende 1995), is outlined as follows:

-

1.

The first two demand points are randomly selected.

-

2.

The demand point with the largest minimum distance to the already selected points is selected with probability \(\frac{2}{3}\), and the one with the second-largest minimum distance is selected with probability \(\frac{1}{3}\).

-

3.

Repeat Step 2 until p demand points are selected.

2.4.1 Improving the starting solution

Once the starting solutions were determined, we applied the RATIO algorithm (Brimberg and Drezner 2020), which is a small modification of the IALT algorithm (Brimberg and Drezner 2013), on the set of starting solutions. Cooper (1963, 1964) suggested the ALT (alternating) algorithm. Since each demand point gets its services from the closest facility, each facility attracts a subset of demand points. The algorithm alternates between determining the subsets attracted by each facility, and re-locating the facilities to the optimal location for each subset, until stabilization. Once Cooper’s algorithm terminates, the IALT algorithm finds for each demand point the difference between the distances to the closest facility and the second closest, and considers re-assigning the demand points with the L smallest differences (we use \(L=20\)) individually from the closest facility to the second closest. Another iteration of Cooper’s algorithm is then performed on the two adjusted subsets. If no improvement for all the transfers is found, the process terminates. The RATIO algorithm selects the L smallest ratios between the distances rather than differences. It was found in Brimberg and Drezner (2020) that this small modification significantly improves the performance of the IALT algorithm.

Since the merging process consumes most of the calculation time, and is performed about p times, the complexity of the genetic algorithm using the efficient calculations is \(O(np^3)\) rather than \(O(np^4)\). The complexity of the RATIO procedure (Brimberg and Drezner 2020) is the same as the IALT algorithm (Brimberg and Drezner 2013), which is \(O(np^2)\) per iteration.

3 Computational experiments

The FORTRAN programs used double precision arithmetic. They were compiled by an Intel 11.1 FORTRAN Compiler with no parallel processing. They were run on a desktop with the Intel i7-6700 3.4GHz CPU processor and 16GB RAM. Only one processor was used. The BLP was run on IBM’s CPLEX (CPLEX and IBM ILOG 2009) Optimization Studio 12.8 environment. We used the default CPLEX MIP solver settings. The solvers were run on a virtualized Windows environment with 16 vCPUs and 128GB of vRAM. The physical server used was a 2 CPU (8 cores each) PowerEdge R720 Intel E5-2650 CPUs with 128 GB RAM using shared storage on MD3620i via 10GB interfaces.

We first tested the approaches on the 50 randomly generated instances of problems used in Brimberg and Drezner (2020). The number of demand points is \(n=100,200,\ldots ,1000\), and the number of facilities is \(p=5,10,15,20,25\), for a total of 50 instances. We also tested problems that were extensively investigated in the literature, and report results on two of them with \(n=654, 1060\) demand points (Reinelt 1991), for a total of 58 instances. In total, 108 instances were tested.

The starting solutions were found in two ways: (i) the best 100 discrete solutions found optimally by the binary linear program; and (ii) the final population members of the genetic algorithm.

3.1 Testing randomly generated instances

In Table 1 we report the continuous solution found by starting at the optimal (best) discrete solution. The best discrete solution was obtained by solving (4) using CPLEX. Some of the largest problem sets required several days of computing time. Applying RATIO on the best discrete solution required a negligible fraction of one second by a FORTRAN program. The results are disappointing. The best known (BK) continuous solution was found for only 12 of the 50 instances. The average percentage above the best known solution was 0.048%.

For comparison, we report in Table 2 the analogous results obtained from repeating the genetic algorithm 10 and 100 times using \(N=2p\), \(s=1,2\ldots ,5\) second parents, and the best 10 members of each final population. When 100 runs are performed, the best known continuous solution was found for all 50 instances by any number of parents, except in two cases where it was found in 49 instances. All variants performed about equally well. The best performing variant (with 100 replications) used random starting solutions, and \(s=2\) second parents.

As noted above, the best known continuous solution was not obtained in many cases using the optimal discrete solution as the starting solution for RATIO. We further investigated this phenomenon on six instances of \(n=100,\ldots ,600\), and \(p=5\). The 1000 best discrete solutions for these instances were found by total enumeration with a FORTRAN program. The number of feasible combinations ranges from about 75 million for \(n=100\) to 637 billion for \(n=600\). The value of the objective function for each combination was calculated, and the sorted vector of the 1000 best objective function values created. Run times for these instances ranged from about 3 seconds for \(n=100\) to about 61 hours for \(n=600\).

In Table 3 we report the 10 highest ranked (best) discrete solutions, and the 1000\(^{\text{ th }}\) one. We examined all 1000 of them, but it is not reasonable to report on all of them. The best found genetic discrete solution was ranked several times even worse than the 1000\(^{\text{ th }}\) one. In Table 4 the ranks of the best found genetic solution for \(n=100,200\) are reported. For example, the best genetic solution using \(s=3\) second parents for \(n=100\) was the 266\(^{\text{ th }}\) best discrete solution. For \(n\ge 300\) all solutions were ranked higher than the 1000\(^{\text{ th }}\) one. It is interesting and perhaps surprising that even though the values of the discrete objective function of the genetic algorithm solutions were rather inferior, they resulted in excellent continuous solutions (see Table 2).

In Table 5 we report the distribution of the 1000 continuous objectives obtained by RATIO starting at the best 1000 discrete starting solutions for \(n\le 600\), and \(p=5\). For \(n=200,300,500\), the best known continuous solution was obtained from the best discrete solution, as was also reported in Table 1. For \(n=500\) all 1000 best discrete solutions yielded the best continuous solution. A different behavior is observed for \(n=100,400,600\). For \(n=100\), the best continuous solution was obtained for the 15\(^{\text{ th }}\) discrete solution, meaning that the best 14 ones did not yield the best continuous solution. For \(n=400\) the 3\(^{\text{ rd }}\), and for \(n=600\) the 5\(^{\text{ th }}\), found the best known continuous solution. The solution obtained by the best discrete solution is \(0.010\%\) above the best known for \(n=100\), and 0.003%, 0.007% for \(n=400,600\). For \(n=100\), ten different solutions were obtained, while for \(n=600\) there were seventeen different solutions.

In Table 6 we report on the continuous solutions found using the top 10 discrete solutions obtained by CPLEX with (4) and additional constraints (5). The improvement in the results is disappointing, compared with the results starting at the best discrete solution reported in Table 1. The best known continuous solution was obtained at least once in 21 of the 50 instances, an increase of only 9 instances. The average best result was 0.035% above the best known solution, down from 0.048%. There are also some unusual results. In eleven instances the same continuous solution was found for all ten discrete starting solutions, but it was not the best known solution. In conclusion, for this set of test problems, the best discrete solutions are not recommended as starting solutions.

3.2 Testing the \(n=654\) and 1060 Instances

To further investigate the conclusion that optimal, or close to optimal, discrete starting solutions do not perform well for the planar p-median problem, we tested the procedures on the \(n=50, 287, 654, 1060\) instances, with various values of p, which were tested in many papers. The \(n=50, 287\) (Eilon et al. 1971; Bongartz et al. 1994) instances, which were solved optimally by Krau (1997), are quite easy. Therefore, we report the results for the \(n=654, 1060\) (Reinelt 1991) instances. The best known solutions for the \(n=654, 1060\) problems were reported in Drezner and Drezner (2020). In Table 7 we compare the best known continuous objective to the optimal discrete objective for the \(n=654,~1060\) instances.

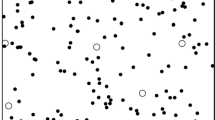

The distribution of the demand points for the \(n=654, 1060\) instances is definitely not uniformly random (see Figs. 1,2) as in the instances examined in Sect. 3.1. In Tables 8,9 we report the best continuous result obtained by applying RATIO on the best 100 discrete solutions for the \(n=654,~1060\) instances, compared with the results of applying RATIO on the genetic algorithm final population members. In Fig. 1, the optimal discrete solution and best known continuous solution for \(n=654,~p=10\) facilities are depicted. The best discrete solution is quite far from the continuous one. In fact, all 100 best discrete solutions resulted in the same inferior continuous solution by applying RATIO, as reported in Table 8. In Fig. 2, the best known continuous and optimal discrete solutions for \(n=1060\), \(p=10\) are depicted. The solution points are closer to each other for \(n=1060\).

3.2.1 Analysis and discussion of the \(n=654,~p=10\) instance

The optimal discrete objective of 115788.7512 is 0.39% above the best known continuous objective (BK). The 100\(^{th}\) best discrete solution is 0.44% above BK. All these 100 starting solutions resulted in the same continuous objective of 115384.6427, which is 0.04% above BK.

When using the genetic results as starting solutions with \(N=2p\), out of 25,000 starting solutions (5,000 starting solutions for each of the five second parents), 2,318 resulted in BK. The distribution of the discrete values of these 2,318 starting solutions is: the best one is 117595.2606 which is 1.96% above BK, the 100\(^{th}\) best of these is 3.98% above BK, and the last one on the list of 2318 is 152.55% above BK!

When N in the genetic algorithm was increased from 2p to 5p, the results were better. There were 6,327 BK’s, with the best discrete objective among these results of 116042.5786, which is 0.61% above BK, number 100 is 0.90% above BK, and the last one, number 6,327, is 95.45% above BK. In conclusion, the best continuous solutions can often be found using moderately good discrete starting solutions, and in some cases even bad ones. If the discrete starting solutions are optimal or near optimal, the continuous results may be inferior.

In Table 10, various statistical results and run times of various approaches are depicted for the \(n=654, 1060\) instances. In the genetic variants, all 100 final population members were used as starting solutions.

Referring to Table 10, the following observations may be made:

-

1.

When RATIO is applied directly to random discrete solutions, the best obtained continuous objective values for each p are bad. The average for the deviation of the best solution for each p is 12.33% for \(n = 654\), and 3.15% for \(n = 1060\). Note that these averages are based on best found results from 5000 starting solutions for each instance. The results improve somewhat for CONS starting solutions (9.19% for \(n = 654\), and 1.93% for \(n = 1060\)). We conclude that the application of the genetic algorithm to obtain “good” discrete starting solutions is well worth the extra effort, as seen by the substantial improvement in the averages of best results.

-

2.

The best overall solution quality reported in Table 10 is obtained by using the 100 best discrete solutions as starting solutions. (Further analysis reported in Table 11 shows a better percentage above the best known solution of 0.052% for \(n=654\) and 0.043% for \(n=1060\) by a very shallow genetic algorithm). However, the reduction in percent deviation over the genetic results is of the order of 0.005%, which is quite negligible in practical terms. Furthermore, as seen in Tables 8 and 9, the improvement applies only to the larger instances (larger values of p), while the genetic starting solutions gave better results for the smaller ones.

3.2.2 Analyzing the depth of the genetic algorithm

Define the depth of the genetic algorithm as: \(depth=\frac{N}{p}\). The deeper the genetic algorithm, the greater are the number of iterations executed, and the longer are the run times (approximately proportional to the depth). Therefore, the final population of the genetic algorithm improves with depth. If we wish to have approximately the same genetic algorithms run time, the number of repetitions of the genetic algorithm should be inversely proportional to the depth.

We selected the value of the depth multiplied by the number of populations equal 240, so there are 20 integer values of the depth and number of populations, see Table 11. Note that for each tested population, 100 starting solutions are created for RATIO. Each instance was run five times applying five different random seeds. The average of the five best found solutions by each of the five random seeds is reported. Graphs showing the percentage above the best known solution as a function of \(\log (depth)\), obtained from Table 11, are depicted in Fig. 3.

The total run time of the procedures consists of generating the starting solutions, running the genetic algorithms, and running RATIO. Generating starting solutions and running RATIO are performed the same number of times for each p (second column in Table 11). We estimated the run time of generating 5000 starting solutions and 5000 runs of RATIO as 0.51 min for \(n=654\), and 4.71 min for \(n=1060\) (Table 10). For example, (referring to Table 11) for depth=1 and \(n=1060\), the procedure required an average of 61.49 min. Generating 24000 starting solutions and running RATIO 24000 times required \(24000\times \frac{4.71}{5000}=22.61\) min. Therefore, the genetic algorithms (that were repeated 240 times) required 61.49-22.61=38.88 min, which is about 10 sec per each genetic algorithm. In contrast, one run of the genetic algorithm using depth=240 required 27.57 min.

We calculated simple regression lines, with the dependent variable of the average percentage above the best known solution for \(n=654, 1060\), on the 20 points for each n depicted in Fig. 3. As the independent variable we tested: (i) depth, (ii) log(depth), and (iii) \(\left[ \log (depth)\right] ^\lambda \), for the \(\lambda \) that best fits the data (maximum R value). We found, by the Solver in Excel, that the best value for \(\lambda \) is 1.5 for \(n=640\), and 3.4 for \(n=1060\). The results are depicted in Table 12. All regression lines are statistically significant with very small p-values. This means that the quality deteriorates significantly as the genetic algorithm provides better objective values of the starting solution, when the number of runs are controlled by the run time of the genetic algorithm. More populations with lower quality discrete starting solutions yield better continuous objectives.

4 Discussion

When all the locations of the facilities in the optimal continuous solution coincide with demand points, the optimal discrete solution is the same as the continuous one. How likely is it that a facility is located at a demand point? In the optimal solution (continuous or discrete), the set of demand points is partitioned by a Voronoi diagram (Suzuki and Okabe 1995; Okabe et al. 2000; Voronoï 1908; Aurenhammer et al. 2013) to p non-intersecting subsets, and each has a facility located at the optimal location for the subset minimizing the weighted sum of distances. Therefore, the two solutions will be the same if every single facility solution for a subset is located at a demand point.

When the number of demand points increases, one would expect that the probability that the Weber solution point is on a demand point converges to 1. There is “no space” left between demand points, when the number of demand points increases to infinity. However, Drezner and Simchi-Levi (1992) showed that when n demand points are randomly generated in a unit circle, then the probability that the Weber solution with Euclidean distances is on a demand point is approximately \(\frac{1}{n}\). This counter-intuitive result was verified by simulation. Consider the \(n=654\), \(p=10\) case. For \(n=654\) and one facility, the probability that the solution is on a demand point is about 0.15%. When 10 about equally sized subsets are created, the probability that the location of the facility serving a subset is on a demand point is 1.5%. The probability that all 10 facilities are located at demand points is basically zero (\(10^{-18}\)). The numbers for \(n=1060\) are even lower. The optimal discrete will hardly ever be the same as the optimal continuous.

The subsets are separated by perpendicular bisectors between the facilities. If the facilities are moved, the perpendicular bisectors can change quite a bit, even for small perturbations. The partitioned subsets for the optimal continuous solution can be significantly different from those of the optimal discrete solution. This may explain why using the optimal discrete solution as a starting solution is not the “best” choice, as we often observed in the computational results.

On the other hand, even if the optimal discrete solution leads to the optimal (or best known) continuous one, there are likely many other discrete solutions of varying quality that will also produce the same result. That is, there may be several discrete solutions that map onto the same continuous solution (local optimum obtained by the RATIO algorithm). For example, consider the extreme case, instance \(n = 500\), \(p = 5\) in Table 5, where all 1000 best discrete solutions map onto the same (best known) continuous solution.

The computational results show that using “good” discrete starting solutions from our genetic algorithm often yield better continuous solutions than those obtained by optimal or near-optimal discrete solutions. Not only do we get better solutions, but the CPU time is also reduced by one or more orders of magnitude. However, this is not uniformly the case. As noted above, the optimal and near-optimal discrete solutions were able to beat the genetic starting solutions for several larger instances (see Tables 8 and 9). Consider, for example, the \(n = 654\), \(p = 85\) instance, where the percent deviation obtained with the 100 best discrete solutions and the genetic solutions were, respectively, 0.18% and 0.54%, a difference of 0.36% in favor of the optimal/near optimal discrete solutions.

Similar conclusions are drawn by Drezner and Drezner (2016). They investigated sequential location of two facilities. Once the first facility is located, the second facility is located at its optimal location considering the location of the first one (termed the conditional location model (Minieka 1980; Drezner 1995)). They found that for the 2-median and 2-center problems, random location of the first facility is better, while for locating two new competing facilities, the optimal location for the first facility is better.

5 Conclusions

In this paper we examined the use of discrete starting solutions for solving the continuous p-median problem. One would expect that better discrete starting solutions should result in better continuous solutions. However, we found that this is not the case. Moderately good starting solutions can often yield better continuous solutions. We tested using as starting solutions: (i) the optimal discrete solution, (ii) the 100 best discrete solutions, and (iii) the final population of a genetic algorithm designed to find good discrete solutions.

The 100 best discrete solutions provided quite good continuous solutions. However, run time was two orders of magnitude longer than the time required by the genetic algorithm. If we would allow the genetic algorithm to consume that much run time, it is clear that best continuous solutions obtained would be much improved from the same population of starting solutions. Actually, the best performance was observed when many populations of a very short genetic algorithm, whose results are not so good, were applied. We also evaluated the effect of the quality of the genetic algorithm on the final outcome. It is clear that when using about the same run time, better genetic final populations (fewer final populations though), perform worse. Statistical analysis shows that this inferiority is statistically significant, as evidences by extremely small p-values.

We found that a diverse set of “good” discrete starting solutions is a better option than relying on a single optimal (or near-optimal) discrete solution. Furthermore, this diverse set may be obtained with less computational effort. Our research also shows that at some point, further improvement in quality of the discrete starting solution may actually result in inferior continuous solutions. This turning point would depend on the local search being implemented in the continuous space. This notion of a “turning point” may provide some insight on what constitutes a good set of starting solutions. If the current best continuous solution is reached from starting solution \(X^*\), and there is no improvement obtained from several better quality starting solutions, we might surmise that the current set of starting solutions is a good one for the applied heuristic. This could also become an interesting direction for future research.

Future research should investigate other continuous search algorithms, such as the standard Cooper method (which is faster but less powerful than the RATIO algorithm we used), to determine the effect on our conclusions of the quality of the local search. Also, it would be useful to investigate using discrete starting solutions for solving other continuous multiple facility location problems, such as the p-center, and finding whether “best” discrete solutions perform better or worse than “good” discrete solutions.

References

Aboolian R, Berman O, Krass D (2007) Competitive facility location model with concave demand. Eur J Oper Res 181:598–619

Abramowitz M, Stegun I (1972) Handbook of Mathematical Functions. Dover Publications Inc., New York

Alp O, Drezner Z, Erkut E (2003) An efficient genetic algorithm for the \(p\)-median problem. Ann Oper Res 122:21–42

Aurenhammer F, Klein R, Lee D-T (2013) Voronoi Diagrams and Delaunay Triangulations. World Scientific, New Jersey

Berman O, Drezner Z, Wesolowsky GO (2003) Locating service facilities whose reliability is distance dependent. Comput Oper Res 30:1683–1695

Bongartz I, Calamai PH, Conn AR (1994) A projection method for \(\ell _p\) norm location-allocation problems. Math Program 66:238–312

Brimberg J, Drezner Z (2013) A new heuristic for solving the \(p\)-median problem in the plane. Comput Oper Res 40:427–437

Brimberg J, Drezner Z (2020) Improved starting solutions for the planar \(p\)-median problem. Yugoslav J Oper Res. https://doi.org/10.2298/YJOR2003

Brimberg J, Hodgson MJ (2011) Heuristics for location models. In: Eiselt HA, Marianov V (eds) Foundations of Location Analysis: International Series in Operations Research & Management Science, vol 155. Springer, New York, pp 335–355

Brimberg J, Hansen P, Mladenović N, Taillard E (2000) Improvements and comparison of heuristics for solving the uncapacitated multisource Weber problem. Oper Res 48:444–460

Brimberg J, Hansen P, Mladonovic N, Salhi S (2008) A survey of solution methods for the continuous location allocation problem. Int J Oper Res 5:1–12

Brimberg J, Drezner Z, Mladenović N, Salhi S (2014) A new local search for continuous location problems. Eur J Oper Res 232:256–265

Brimberg J, Drezner Z, Mladenovic N, Salhi S (2017a) Using injection points in reformulation local search for solving continuous location problems. Yugoslav J Oper Res 27:291–300

Brimberg J, Mladenović N, Todosijević R, Urošević D (2017b) Less is more: solving the max-mean diversity problem with variable neighborhood search. Inf Sci 382:179–200

Church RL (2019) Understanding the Weber location paradigm. In: Eiselt HA, Marianov V (eds) Contributions to Location Analysis - In Honor of Zvi Drezner’s 75th Birthday. Springer Nature, Switzerland, pp 69–88

Cooper L (1963) Location-allocation problems. Oper Res 11:331–343

Cooper L (1964) Heuristic methods for location-allocation problems. SIAM Rev 6:37–53

CPLEX, IBM ILOG (2009). V12. 1: User’s Manual for CPLEX. In: International Business Machines Corporation, Incline Village, NV, 46(53):157

Daskin MS, Maass KL (2015). The p-median problem. In: Laporte G, Nickel S, da Gama FS (eds.) Location science. Springer, New York, pp 21–45

Daskin MS (1995) Network and Discrete Location: Models, Algorithms, and Applications. John Wiley & Sons, New York

Drezner Z (1995) On the conditional \(p\)-median problem. Comput Oper Res 22:525–530

Drezner T, Drezner Z (2016) Sequential location of two facilities: Comparing random to optimal location of the first facility. Ann Oper Res 246:1–15

Drezner Z, Drezner TD (2020) Biologically inspired parent selection in genetic algorithms. Ann Oper Res 287:161–183

Drezner Z, Marcoulides GA (2003) A distance-based selection of parents in genetic algorithms. In: Resende MGC, de Sousa JP (eds) Metaheuristics: Computer Decision-Making. Kluwer Academic Publishers, Boston, pp 257–278

Drezner Z, Salhi S (2017) Incorporating neighborhood reduction for the solution of the planar \(p\)-median problem. Ann Oper Res 258:639–654

Drezner Z, Simchi-Levi D (1992) Asymptotic behavior of the Weber location problem on the plane. Ann Oper Res 40:163–172

Drezner Z, Zerom D (2016) A simple and effective discretization of a continuous random variable. Commun Stat Simul Comput 45:3798–3810

Drezner Z, Klamroth K, Schöbel A, Wesolowsky GO (2002) The Weber problem. In: Drezner Z, Hamacher HW (eds) Facility Location: Applications and Theory. Springer, Berlin, pp 1–36

Drezner Z, Brimberg J, Salhi S, Mladenović N (2016) New local searches for solving the multi-source Weber problem. Ann Oper Res 246:181–203

Drezner T, Drezner Z, Kalczynski P (2020) Directional approach to gradual cover: the continuous case. CMS. https://doi.org/10.1007/s10287-020-00378-1

Edmands S (2007) Between a rock and a hard place: evaluating the relative risks of inbreeding and outbreeding for conservation and management. Mol Ecol 16:463–475

Eilon S, Watson-Gandy CDT, Christofides N (1971) Distribution Management. Hafner, New York

Fenster CB, Galloway LF (2000) Inbreeding and outbreeding depression in natural populations of Chamaecrista fasciculata (Fabaceae). Conserv Biol 14:1406–1412

Feo TA, Resende MG (1995) Greedy randomized adaptive search procedures. J Global Optim 6:109–133

Freeman S, Harrington M, Sharp JC (2014) Biological Science. Second Canadian Edition, Pearson, Toronto

Garey MR, Johnson DS (1979). Computers and intractability: A guide to the theory of NP-completeness. freeman, San Francisco

Goldberg DE (2006) Genetic algorithms. Pearson Education, Delhi

Hansen P, Mladenović N, Taillard É (1998) Heuristic solution of the multisource Weber problem as a \(p\)-median problem. Oper Res Lett 22:55–62

Holland JH (1975) Adaptation in Natural and Artificial Systems. University of Michigan Press, Ann Arbor

Ince EL (1926) Ordinary Differential Equations. Reprinted in 1956 by Dover Publications, Inc., USA

Kalczynski P Drezner Z (2020). The obnoxious facilities planar \(p\)-median problem. In review, arXiv:2004.03038 [math.OC]

Kalczynski P, Brimberg J, Drezner Z (2020a). The importance of good starting solutions in the minimum sum of squares clustering problem. In review, arXiv:2004.04593 [cs.LG]

Kalczynski P, Goldstein Z, Drezner Z (2020b). Partitioning items into mutually exclusive groups. In review, arXiv:2002.11536 [math.OC]

Kariv O, Hakimi SL (1979) An algorithmic approach to network location problems. II: The \(p\)-medians. SIAM J Appl Math 37:539–560

Krau S (1997). Extensions du problème de Weber. PhD thesis, École Polytechnique de Montréal

Kuenne RE, Soland RM (1972) Exact and approximate solutions to the multisource Weber problem. Math Program 3:193–209

Kutta W (1901) Beitrag zur näherungweisen integration totaler differentialgleichungen. Z Angew Math Phys 46:435–453

Love RF, Morris JG, Wesolowsky GO (1988) Facilities Location: Models & Methods. North Holland, New York

Megiddo N, Supowit K (1984) On the complexity of some common geometric location problems. SIAM J Comput 18:182–196

Minieka E (1980) Conditional centers and medians on a graph. Networks 10:265–272

Mladenović N, Todosijević R, Urošević D (2016) Less is more: basic variable neighborhood search for minimum differential dispersion problem. Inf Sci 326:160–171

Okabe A, Boots B, Sugihara K, Chiu SN (2000) Spatial Tessellations: Concepts and Applications of Voronoi Diagrams. Wiley Series in Probability and Statistics. John Wiley, Hoboken

Reinelt G (1991) TSLIB a traveling salesman library. ORSA J Comput 3:376–384

ReVelle CS, Swain RW (1970) Central facilities location. Geograph Anal 2:30–42

Runge C (1895) Über die numerische auflösung von differentialgleichungen. Math Ann 46:167–178

Suzuki A, Okabe A (1995) Using Voronoi diagrams. In: Drezner Z (ed) Facility Location: A Survey of Applications and Methods. Springer, New York, pp 103–118

Voronoï G (1908) Nouvelles applications des paramètres continus à la théorie des formes quadratiques. deuxième mémoire. recherches sur les parallélloèdres primitifs. Journal für die reine und angewandte Mathematik 134:198–287

Weber A (1909) Über den Standort der Industrien, 1. Teil: Reine Theorie des Standortes. English Translation: on the Location of Industries. University of Chicago Press, Chicago, IL. Translation published in 1929

Wesolowsky GO (1993) The Weber problem: History and perspectives. Locat Sci 1:5–23

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Kalczynski, P., Brimberg, J. & Drezner, Z. Less is more: discrete starting solutions in the planar p-median problem. TOP 30, 34–59 (2022). https://doi.org/10.1007/s11750-021-00599-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11750-021-00599-w