Abstract

We develop a method originally proposed by R. A. Fisher into a general procedure, called tailoring, for deriving goodness-of-fit tests that are guaranteed to have a \(\chi ^{2}\) asymptotic null distribution. The method has a robustness feature that it works correctly in testing a certain aspect of the model while some other aspect of the model may be misspecified. We apply the method to small area estimation. A connection, and difference, to the existing specification test is discussed. We evaluate performance of the tests both theoretically and empirically, and compare the performance with several existing methods. Our empirical results suggest that the proposed test is more accurate in size, and has either higher or similar power compared to the existing tests. The proposed test is also computationally less demanding than the specification test and other comparing methods. A real-data application is discussed.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Goodness-of-fit tests for mixed models, or mixed effects models, have received considerable attention in recent literature (e.g., Jiang 2001; Claeskens and Hart 2009; Dao and Jiang 2016). Such tests are relevant to many practical problems. For example, mixed effects models are extensively used in small area estimation (SAE; e.g., Rao and Molina 2015). Here the term small area typically refers to a population for which reliable statistics of interest cannot be produced based on direct sampling from the population due to certain limitations of the available data. Examples of small areas include a geographical region (e.g., a state, county, municipality), a demographic group (e.g., a specific age \(\times \) sex \(\times \) race group), a demographic group within a geographic region, etc. Statistical models, especially mixed effects models, have played key roles in improving small area estimates by borrowing strength from relevant sources. It is known, however, that in case of model misspecification, the traditional empirical best linear unbiased prediction (EBLUP) method may lose efficiency. See, for example, Jiang et al. (2011). In case of model misspecification, an alternative method, known as observed best prediction (OBP), is shown to be more accurate than the EBLUP. On the other hand, when the underlying model is correctly specified, EBLUP is known to be more efficient than OBP (e.g., Jiang et al. 2011, 2015). Therefore, it is important, in practice, to know whether or not the assumed model is appropriate in order to come up with a more efficient SAE strategy.

A standard assumption for mixed effects models, in general (e.g., Jiang 2007), is that the random effects are normally distributed. This assumption has had substantial impact on many aspects of the inference. For example, estimation of the mean squared prediction errors of small area predictors is an important issue in SAE (e.g., Rao and Molina 2015). The well-known Prasad-Rao method (Prasad and Rao 1990) depends on the normality assumption and may not be accurate if the assumption fails (e.g., Lahiri and Rao 1995). Also, prediction interval obtained via parametric bootstrap methods (e.g., Chatterjee et al. 2008) depends heavily on the normality assumption. The normality assumption is even more critical for inference about generalized linear mixed models (GLMMs; e.g., Jiang 2007). See, for example, Jiang and Nguyen (2009). Although there are strategies that are less dependent on the normality assumption, those strategies are often less efficient than the normality-based method when the normality assumption actually holds, or approximately holds. Thus, it is important to check the validity of the normality assumption so that an appropriate, or more efficient, method can be used for the inference.

In the literature of mixed effects models, such problems as discussed above have to do with mixed model diagnostics; see, for example, Pierce (1982), sec. 2.4.1 of Jiang (2007), Claeskens and Hart (2009). Jiang (2001) proposed a \(\chi ^{2}\)-type goodness-of-fit test for linear mixed model (LMM) diagnostics, whose asymptotic null distribution is a weighted \(\chi ^{2}\), where the weights are eigenvalues of some nonnegative definite matrix. Claeskens and Hart (2009) proposed an alternative approach to the \(\chi ^{2}\) test for checking the normality assumption in LMM. The authors considered a class of distributions that include the normal distribution as a reduced, special case. The test is based on the likelihood-ratio test (LRT) that compares the “estimated distribution” and the null distribution (i.e., normal). A model selection procedure via the information criteria is used to determine the larger class of distributions for the LRT. In particular, the asymptotic null distribution is in the form of the distribution of \(\sup _{l\ge 1}\{2Q_{l}/l(l+3)\}\), where \(Q_{l}=\sum _{q=1}^{l}\chi _{q+1}^{2}\), l is the order of polynomial, and \(\chi _{2}^{2}, \chi _{3}^{2},\dots \) are independent such that \(\chi _{j}^{2}\) has a \(\chi ^{2}\) distribution with j degrees of freedom, \(j\ge 2\).

The \(\chi ^{2}\)-type tests depend on the choice of cells, based on which the observed and expected cell frequencies are evaluated. As noted by Jiang and Nguyen (2009), performance of the \(\chi ^{2}\) test is sensitive to the choice of the cells, and there is no “optimal choice” of such cells known in the literature. On the other hand, the Claeskens-Hart test depends on the choice of the information criterion. As is well known, there are different versions of the information criteria, such as AIC (Akaike 1956), BIC (Schwarz 1978), HQ (Hannan and Quinn 1979). The difference in the performance of the test by different information criteria is unclear. Furthermore, the weighted-\(\chi ^{2}\) asymptotic null distribution of Jiang (2001) depends on eigenvalues of a certain matrix, whose expressions are complicated, and involve unknown parameters. These parameters need to be estimated in order to obtain the critical values of the tests. Due to such a complication, Jiang (2001) suggests to use a Monte-Carlo method to compute the critical value; but, by doing so, the usefulness of the asymptotic result may be undermined. Similarly, the asymptotic distribution of the Claeskens-Hart test is not simple and involves supreme of normalized partial sums of \(\chi ^{2}\) random variables.

It might be argued that, in today’s computer era, having a \(\chi ^{2}\) asymptotic distribution is, perhaps, not as important as in the past. However, there are, still, attractive features of the \(\chi ^{2}\) limiting distribution that are worth pursuing. First, the \(\chi ^{2}\) distribution corresponds to the right standardization–it is the “square” of the norm of a standard multivariate normal random vector. In this regard, anything other than \(\chi ^{2}\) leaves, at least, some room for improvement. In other words, if the limiting distribution is not a (central) \(\chi ^{2}\), the test statistic has not been completely standardized. Note that, while there is only one way of a complete standardization, there are many, if not infinitely many, ways of incomplete standardization, so it may not be convincing why one way is preferred over the others. Second, having a computer-driven, non-analytic asymptotic distribution makes it difficult to study properties of the limiting distribution. For example, how does the reduction of complexity of the model under the null hypothesis play a role? It may not be easy to tell if all one gets are a bunch of numbers. A related issue is regarding direction of improvement. This may not be easy to see without a simple analytic expression for the asymptotic distribution.

In Sect. 2, we generalize a method initiated by Fisher (1922) in deriving goodness-of-fit tests (GoFTs) that are guaranteed to have asymptotic \(\chi ^{2}\) null distributions. A robust feature of the proposed test is that it can be used to test a certain aspect of the assumed model while another aspect of the model is misspecified. We also discuss a connection, and difference, between our GoFT and the specification test based on the generalized method of moments (GMM; e.g., Hall 2005) that is known in the econometrics literature.

In Sect. 3, we apply our generalized procedure to SAE to derive a goodness-of-fit test under the Fay-Herriot model (Fay and Herriot 1979). The test is developed under a predictive consideration that incorporates the special interest of SAE. In Sect. 4, we evaluate performance of the proposed GoFT via simulation studies. We compare our GoFT with several competing methods, including the specification test. The results show that our GoFT is more accurate in term of the size, and has higher or similar power compared to the competing methods. Our GoFT is also computationally less demanding than the specification test. A real data example is discussed in Sect. 5. Some concluding remarks and future directions are given in Sect. 6. Proofs and technical details are deferred to Appendix. Computer codes are provided as supplementary materials.

2 Tailoring

In this section, we describe a general approach to obtaining a test statistic that has an asymptotic \(\chi ^{2}\) distribution under the null hypothesis. The original idea can be traced back to R. A. Fisher (1922), who used the method to obtain an asymptotic \(\chi ^{2}\) distribution for Pearson’s \(\chi ^{2}\)-test, when the so-called minimum chi-square estimator is used. However, Fisher did not put forward the method that he originated under a general framework, as we do here. Suppose that there is a sequence of s-dimensional random vectors, \(B(\vartheta )\), which depend on a vector \(\vartheta \) of unknown parameters with dimension r such that, when \(\vartheta \) is the true parameter vector, one has \(\mathrm{E}\{B(\vartheta )\}=0\), \(\mathrm{Var}\{B(\vartheta )\}=I_{s}\), and, as the sample size increases,

where \(|\cdot |\) denotes the Euclidean norm. However, because \(\vartheta \) is unknown, one cannot use (1) for GoFT. What is typically done, such as in Pearson’s \(\chi ^{2}\)-test, is to replace \(\vartheta \) by an estimator, \({\hat{\vartheta }}\). Question is: what is \({\hat{\vartheta }}\)? The ideal scenario would be that, after replacing \(\vartheta \) by \({\hat{\vartheta }}\) in (1), one has a reduction of degrees of freedom (d.f.), which leads to

where \(\nu =s-r>0\). This is the famous “subtract one degree of freedom for each parameter estimated” rule taught in many elementary statistics books (e.g., Rice 1995, p. 242). However, as is well known (e.g., Moore 1978), depending on what \({\hat{\vartheta }}\) is used, (2) may or may not hold, regardless of what degrees of freedom are actually involved. In fact, the only method that is known to achieve (2) without restriction on the distribution of the data is Fisher’s minimum \(\chi ^{2}\) method. In a way, the method allows one to “cut-down” the d.f. of (1) by r, and thus convert an asymptotic \(\chi ^{2}_{s}\) to an asymptotic \(\chi ^{2}_{\nu }\). For such a reason, we have dubbed the method, under the more general setting below, tailoring. We develop the method with a heuristic derivation, with the rigorous justification given in the Appendix.

The “right” estimator of \(\vartheta \) for tailoring is supposed to be the solution to an estimating equation of the following form:

where \(A(\vartheta )\) is an \(r\times s\) non-random matrix that plays the role of tailoring the s-dimensional vector, \(B(\vartheta )\), to the r-dimensional vector, \(C(\vartheta )\). The specification of A will become clear at the end of the derivation. Throughout the derivation, \(\vartheta \) denotes the true parameter vector. For notation simplicity, we use A for \(A(\vartheta )\), \({\hat{A}}\) for \(A({\hat{\vartheta }})\), etc, where \({\hat{\vartheta }}\) is the solution of (3). Under regularity conditions, one has the following expansions, which can be derived from the Taylor series expansion and large-sample theory (e.g., Jiang 2010):

Because \(\mathrm{E}_{\vartheta }\{B(\vartheta )\}=0\) [see above (1)], one has

where \(U=\mathrm{E}_{\vartheta }(\partial B/\partial \vartheta ')\). We assume that A is chosen such that

Then, it is easy to verify that \(I_{s}-U(AU)^{-1}A\) is symmetric and idempotent. If we further assume that the following limit exists:

then P is also symmetric and idempotent. Thus, assuming that \(B{\mathop {\rightarrow }\limits ^\mathrm{d}}N(0,I_{s})\), which is typically the argument leading to (1), one has, by (7), \({\hat{B}}{\mathop {\rightarrow }\limits ^\mathrm{d}}N(0,P)\), hence (e.g., Searle 1971, p. 58) \(|{\hat{B}}|^{2}{\mathop {\rightarrow }\limits ^\mathrm{d}}\chi ^{2}_{\nu }\), where \(\nu =\mathrm{tr}(P)=s-r\). This is exactly (2).

It remains to answer one last question: Is there such a non-random matrix \(A=A(\vartheta )\) that satisfies (8) and (9)? We show that, not only the answer is yes, there is an optimal one. Let \(A=N^{-1}U'W\), where W is a symmetric, non-random matrix to be determined, and N is a normalizing constant that depends on the sample size. By (4) and the fact that \(\mathrm{Var}_{\vartheta }(B)=I_{s}\) [see above (1)], we have

by, for example, Lemma 5.1 of Jiang (2010). The equality on the right side of (10) holds when \(W=I_{s}\), giving the optimal A:

The A given by (11) clearly satisfies (8) [which is \(U(U'U)^{-1} U'\)]. It will be seen in the next section that, with \(N=m\), (9) is expected to be satisfied. It should be noted that the solution to (3), \({\hat{\vartheta }}\), does not depend on the choice of N.

Remark 1

A basic assumption for the tailoring method to work is that \(\mathrm{E}\{B(\vartheta )\}=0\) when \(\vartheta \) is the true parameter vector. However, from the proof of the result (see Appendix) it is seen that the condition “\(\vartheta \) is the true parameter vector” is not critical. For example, in case there is a model misspecification, a “true parameter vector” may not exist. Nevertheless, what is important is that there is some parameter vector, \(\vartheta \), which is not necessarily the true parameter vector, such that the equation

holds. This equation holds, of course, when \(\vartheta \) is the true parameter vector, but it can also hold when the true parameter vector does not exist, such as under model misspecification. In fact, in the latter case, one may define the “true parameter vector” as the unique \(\vartheta \), assumed exist, that satisfies (12). Note that the number of equations in (12) is the same as the dimension of \(\vartheta \); thus, one expect that a solution exists and is unique, under some regularity conditions. To see that (12) is the key, note that under (12), (3) is equivalent to \(A(\vartheta )[B(\vartheta )-\mathrm{E}\{B(\vartheta )\}]=0\), where the expectation is with respect to the true underlying distribution. It follows that one can replace \(B(\vartheta )\) by \(B(\vartheta )-\mathrm{E}\{B(\vartheta )\}\), which has mean zero, and all of the arguments in the proof go through. This property has given tailoring some unexpected robustness feature, that is, it can work correctly in spite of some model misspecification. We illustrate more specifically in the next section.

Remark 2

There is a connection between the tailoring method and the specification test (ST) based on GMM (e.g., Hall 2005). However, there is also a difference. The difference is that ST is equivalent to (3) with \(A(\vartheta )\) given by (11) without the expectation, but for tailoring the expectation is taken first before using it in (3). One may compare this difference to that between the observed Fisher information and expected one in maximum likelihood (ML) estimation (Efron and Hinkley 1978). Although it may be argued that, asymptotically, this difference may be of lower order—in fact, ST may also be viewed as an extension of the original idea of Fisher (1922), and it has the same asymptotic null distribution as tailoring—finite-sample performance may differ. We demonstrate this difference in our simulation study in Sect. 4. Furthermore, because, after taking the expectation, some terms in \(\partial B'/\partial \vartheta \) in (11) may vanish, the form of \(A(\vartheta )\) in (3) may be substantially simplified. One may, again, compare this to Fisher scoring in ML. For example, McCullagh and Nelder (1989, p. 42) developed the well-known GLM algorithm and noted that it often simplifies the numerical computation of the ML estimator. In our simulation study, we have also observed that tailoring is computationally less demanding than ST, apparently also due to the simplification of taking the expectation. See Sect. 4 for more detail.

Remark 3

The asymptotic covariance matrix of \({\hat{\vartheta }}\), that is, the left side of (10), has a “sandwich” expression, which is similar to the well-known sandwich estimator of the (asymptotic) covariance matrix of a GEE (generalized estimating equations) estimator. See, for example, Kauermann and Carroll (2001), who studied impact of the sandwich estimator in terms of relative efficiency and coverage probability of the resulting confidence interval. The sandwich estimator provides robust estimation of the variation of the GEE estimator when the variance-covariance structure of the data is misspecified. The robustness feature of tailoring is, in a way, more general in that it is not necessarily with respect to misspecification of the variance-covariance structure. For example, in the next section we are mainly concerned with misspecification of the mean function.

3 Applying tailoring to SAE

As noted, we intend to develop a GoFT that takes into account the special interests in SAE problems. The development is based on an appropriate objective function in conjunction with the tailoring method. We shall focus on area-level models (Fay and Herriot 1979); extension of the method to other types of SAE models, such as the nested-error regression model (Battese et al. 1988), is fairly straightforward.

There are different aspects of the model that are subject to model checking. Although the focus here is on testing for the normality assumption of the random effects, the method can be easily extended to testing other aspects of the assumed model. As noted (see Remark 1 of the previous section), the proposed test has a robustness feature that it can be used to test one aspect of the model assumption, here normality, while other aspects of the model, for example, the mean function, may be misspecified.

The Fay-Herriot (FH) model may be expressed as that (i) \((y_{i},\theta _{i}), i=1,\dots ,m\) are independent; (ii) \(y_{i}|\theta _{i}\sim N(\theta _{i}, D_{i})\); and (iii) \(\theta _{i}\sim N(x_{i}'\beta ,\sigma ^{2})\). Here, \(y_{i}\) is the direct estimate from the ith area, \(\theta _{i}\) is the small area mean, \(x_{i}\) is a vector of observed covariates, \(\beta \) is a vector of unknown parameters, \(\sigma ^{2}\) is an unknown variance, and \(D_{i}\) is a sampling variance that is assumed known. The normality assumption has to do with (iii). The reason that this is not an issue with (ii) is because, in practice, \(y_{i}\) is typically a sample summary such as a sample mean or proportion; as a result, the normality assumption in (ii) often holds approximately due to the central limit theorem (CLT). However, there is no obvious reason to believe that the CLT should hold for (iii). Thus, we consider a broader class of distributions, namely, the skewed normal distribution (SN; Azzalini and Capitanio 2014), which includes the normal distribution as a special case. Under the SN distribution, (iii) is replaced by (iii) \(\theta _{i}\sim \mathrm{SN}(x_{i}'\beta ,\sigma ^{2},\alpha )\), which denotes the SN distribution with mean \(x_{i}'\beta \), variance \(\sigma ^{2}\), and skewness parameter \(\alpha \) (see below), noting that \(\alpha =0\) leads to the normal distribution. We denote the model parameters as \(\psi =(\beta ',\sigma ^{2},\alpha )'\).

Suppose that, under the null hypothesis, there is a reduction in the dimension of the parameter vector such that \(\gamma =\gamma _{0}\) under the null hypothesis, where \(\gamma \) is a sub-vector of \(\psi \) and \(\gamma _{0}\) is known. Let \(\vartheta \) denote the vector of parameters in \(\psi \) other than \(\gamma \). In this section, notation such as \(\mathrm{E}_{\vartheta }\), etc. will be understood as expectation, etc. under the null hypothesis.

The LRT is often used in the context of goodness-of-fit. However, because, in SAE, the primary interest is prediction of mixed effects (e.g., Jiang 2007; Rao and Molina 2015), it is reasonable to develop something that is closely related to the predictive interest. To motivate something that is in a similar spirit of LRT, but takes into account the SAE interest, let us consider the problem from a “Bayesian” perspective.

In general, let \(\theta \) be a vector of unobserved quantities that one wishes to predict (e.g., small area mean \(\theta _i\) in FH model), \(\psi \) be the vector of parameters involved in either \(f(y|\theta )\) or \(f(\theta )\), and y a vector of observations. The likelihood function may be viewed, using a Bayesian term, as a marginal likelihood with the distribution of \(\theta \), \(f(\theta )\), treated as a prior, that is,

The likelihood is used for estimation of fixed parameters, which are associated with either \(f(\theta )\) or \(f(y|\theta )\) or both. To come up with a predictive version of the likelihood, we may simply replace the prior in (13) by its “posterior”, that is, the conditional pdf of \(\theta \) given y, \(f(\theta |y)\). With this replacement, we obtain

We call (14) the predictive likelihood, or PL. The reason is that, if parameter estimation is of primary interest, one uses the prior, \(f(\theta )\), to obtain the (marginal) likelihood (13). Now, because we replace \(f(\theta )\) by \(f(\theta |y)\), which is the main outcome for the prediction of \(\theta \), and then go through the same operation, the output (14) should be called a predictive likelihood. It should be noted that the predictive likelihood is not necessarily a likelihood, as it does not always possess some of the well-known properties of the likelihood. However, we can, at least, adjust the score equation of the PL to make it unbiased.

The adjusted PL score is given by

We call the estimator of \(\psi \) obtained by solving the adjusted PL score equation, \(s_{\mathrm{a}}(\psi )=0\), or, equivalently, the following equation:

maximum adjusted PL estimator, or Maple, in view of its analogy to the MLE.

Under the FH model, it is easy to show that \(f(\theta |y,\psi )=\prod _{i=1}^{m} f(\theta _{i}|y_{i},\psi )\) where \(\theta =(\theta _1,...,\theta _m).\) Thus, we have

where \(f(y_i|y_i,\psi )=\int f(y_i|\theta _i,\psi ) f(\theta _i|y_i,\psi )\mathrm{d} \theta _i\). Without the null hypothesis that the random effects are normal, that is, \(\alpha =0\), we have \(y_i|\theta _i \sim N(\theta _i,D_i)\) and \(\theta _i\sim \mathrm{SN}(x'_i \beta , \sigma ^{2}, \alpha )\). It is then shown in the Appendix that

where \(\alpha _{si}=(4-s)\sigma \alpha /\sqrt{\{(4-s)\sigma ^{2}+D_i\}\{(4 -s)\sigma ^{2}+(1+\alpha ^{2})D_i\}}\), \(s=2,3\), \(B_{i}=\sigma ^{2}/(\sigma ^{2} +D_{i})\), and \(\varPhi (\cdot ), \phi (\cdot )\) denote the cdf, pdf of N(0, 1), respectively. Note that, when \(\alpha =0\), (18) reduces to that under normality. Also note that \(f(y_i|y_i,\psi )\ne f(y_{i},\psi )\).

By (17), the PL can be expressed as \(\prod _{i=1}^{m}f(y_{i}|y_i, \psi )\). To test \(\mathrm{H}_0: \alpha =0\), let

One can derive the adjusted PL equation, (16), as follows:

where \(a_i(\sigma ^{2})=(1-B_i)^2/D_i(1+B_i)\), \(b_i(\sigma ^{2})= (1-B_i)^3(3+B_i)/2D^2_i(1+B_i)^2\), \(c_i(\sigma ^{2})=(1-B_i)^2(3 +B_i)/2D_i (1+B_i)^2\), and \(d_i(\sigma ^{2})=\sqrt{2/\pi }\sigma (1 -B_i)^2/D_i(1+B_i)\).

Let \(\vartheta \) denote the true \(\vartheta \). If the model is correctly specified under the null hypothesis, then, under the null hypothesis, \(\sum _{i=1}^{m}b_{i}(y_{i},\vartheta )\) is a sum of independent random vectors with mean zero. On the other hand, if there is some misspecification in the mean function that the true \(\beta \), hence the true \(\vartheta \), does not exist (under the null hypothesis), we again define the “true \(\vartheta \)” as the unique solution to (12). Then, all of the arguments in the derivation of Sect. 2 go through by replacing \(\sum _{i=1}^{m}b_{i}(y_{i},\vartheta )\) with \(\sum _{i=1}^{m} [b_{i}(y_{i},\vartheta )-\mathrm{E}\{b_{i}(y_{i},\vartheta )\}]\). Furthermore, we have \(V_{b}(\vartheta )=\mathrm{Var}_{\vartheta }\{\sum _{i=1}^{m}b_{i}(y_{i}, \vartheta )\}=\sum _{i=1}^{m}\mathrm{Var}_{\vartheta }\{b_{i}(y_{i},\vartheta )\}\), where

with \(g_i(\sigma ^{2})=(1-B_i)^3/D_i(1+B_i)^2\) and \(h_i(\sigma ^{2})=(1 -B_i)^4(3+B_i)^2/2D^2_i(1+B_i)^4\).

Thus, if we let \(B(\vartheta )=V_{b}^{-1/2}(\vartheta )\sum _{i=1}^{m}b_{i}( y_{i},\vartheta )\), we have \(B(\vartheta ){\mathop {\longrightarrow }\limits ^\mathrm{d}}N(0, I_{s})\), where \(s=\mathrm{dim}(\psi )=3\). It follows that (1) holds. Because \(r=\mathrm{dim}(\vartheta )=2<s\), the tailoring method applies to yield (2) with \(\nu =s-r =1\). In particular, we have

where \(b_{i}\) is defined above and

This gives \(A(\vartheta )\) for solving the tailoring equation (3).

4 Simulation study

We carry out a simulation study to evaluate performance of the tailoring methods based on Maple, described in the previous section, and compare it with existing methods. Specifically, we compare our method with those of Pierce (1982), Jiang (2001), Claeskens and Hart (2009), and ST based on GMM (e.g., Hall 2005). For Pierce (1982), the test statistic under the FH model for \(\mathrm{H}_0: \alpha =0\) is given by \({\hat{F}}\equiv m{\hat{T}}^2_m/V\), where

with \(P=\lim \mathrm{E}(\partial T_m/\partial \psi )\). The asymptotic null distribution of the test statistic is \(\chi ^2_{1}\). In the current case, it can be shown that

In the case of Jiang (2001), one has the test statistic

where \(N_k=\sum _{i=1}^{m}1_{(y_i \in C_k)}=\#\{1 \le i \le m: y_i \in C_k\},\) and \(p_k(\psi )=\sum _{i=1}^{m}P_{\psi }(y_i \in C_k)= \sum _{i=1}^{m}p_{ik}(\psi ).\) More specifically, the cells, \(C_{k}\), \(1 \le k \le K\) are defined as follows: \(C_1=(-\infty ,c_1], C_k=(c_{k-1}, c_k], 2 \le k \le K-1,\) and \(C_K=(c_{K-1},\infty )\). Regarding the choice of K and \(c_k\)’s, by Jiang (2001), we may choose \(K=\max (p+2,[m^{1/5}])\), where p is the dimension of \(\beta \). Once K is chosen, the \(c_k\)’s are chosen so that there are equal number of \(y_i\)’s within each \(C_k, 1\le k \le K\). It then follows that \(N_k=m/K, 1 \le k \le K\). Finally, the \(p_{ik}(\psi )\) have the following expressions:

We then use a Monte-Carlo method (e.g., bootstrapping) to compute the critical values, as suggested by Jiang (2001).

In the case of Claeskens and Hart (2009), one uses the test statistic

where \(\log L\) is the log-likelihood and M is the order of polynomial which plays the role of a smoothing parameter. The test is based on the LRT which compares the estimated distribution (\(M>0\)) and the null distribution (\(M=0\); i.e., normal). Similar to the Jiang (2001), one needs to use replications from the test statistic above to approximate the critical values. We consider \(M=2\) in our simulation study.

As noted (see Remark 2 in Sect. 2), the ST is simply tailoring with the expectation sign in (11) removed. In the case of Maple, to obtain the A corresponding to ST, we have \(\partial b_{i}'/\partial \vartheta =(a_{ist})_{1 \le s\le 2, 1\le t\le 3}\), where

with

and \(\partial d_i(\sigma ^2)/\partial \sigma ^2=-2b_i(\sigma ^2)\sqrt{2/\pi } \sigma +a_i(\sigma ^2)/\sqrt{2\pi }\sigma \).

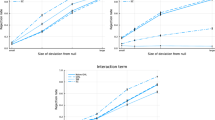

To evaluate and compare performance of the aforementioned methods, let \({\hat{B}}^2_{\mathrm{PL}}\), \({\hat{F}}\), \({\hat{\chi }}^{2}_{\mathrm{J}}\), \({\hat{\chi }}^2_{\mathrm{CH}},\) and \({\hat{B}}^2_{\mathrm{ST}}\) represent the test statistics for tailoring/Maple, Pierce (1982), Jiang (2001), Claeskens and Hart (2009), and ST, respectively [for notation simplicity we write \(|B({\hat{\vartheta }})|^2\) as \({\hat{B}}^2\)]. We consider two situations where the assumed model is either correct or misspecified. The assumed model is a FH model:

however, the data are generated under the following FH model:

where \(m=2n,D_i=D_{i1}\) for \(1 \le i \le n\) and \(D_i=D_{i2}\) for \(n+1 \le i \le m\). We choose \(\sigma ^2=10\), noting that \(v_i \sim SN(0, \sigma ^2,\alpha )\) and \(e_i \sim N(0,D_i)\). The \(D_{i1}\) are generated from the uniform distribution between 3.5 and 4.5. There are two scenarios for \(D_{i2}\), one generated from U(3.5, 4.5) and the other from U(0.5, 1.5). Let \(\beta _1=1\), and \(\beta _2=1\) or 3; and the true value of \(\alpha \) is 0 under the null hypothesis, and 0.5 under the alternative. The \(x_i\)’s are generated from the uniform distribution between 0 and 1, and fixed during the simulation study. Note also that the \(D_i\)’s are fixed during the simulation study. It is seen that, when \(\beta _{1}\ne \beta _{2}\), the underlying model is misspecified.

We consider testing \(H_0: \alpha =0\) with three different levels of significance, 0.01, 0.05, 0.10, and four different sample sizes, \(m=50, 100, 200\), and 500. We run \(R=5,000\) simulations to calculate \({\hat{B}}^2_{\mathrm{PL}},{\hat{F}},{\hat{\chi }}^2_{\mathrm{J}}\), \({\hat{\chi }}^2_{\mathrm{CH}}\), and \({\hat{B}}^2_{\mathrm{ST}}\). In particular, we generate response variable \(y_i^{(r_1)}=\beta _1 x_i+v^{(r_1)}_i+e^{(r_1)}_i, (1 \le i \le n; r_1=1,...,R)\), and \(y_i^{(r_1)}=\beta _{2}x_i+v^{(r_1)}_i+e^{(r_1)}_i, (n+1 \le i \le m; r_1 =1,...,R)\), where \(v^{(r_1)}_i \sim SN(0,\sigma ^2,\alpha =0)\) and \(e^{(r_1)}_i \sim N(0,D_{i1})\) for \(1 \le i \le n\) and \(e^{(r_1)}_i \sim N(0,D_{i2})\) for \(n +1 \le i \le m\). For each simulated dataset, we estimate \(\sigma ^2\) and \(\beta _{1}\) for \(\hat{B}^2_{\mathrm{PL}}\), \({\hat{F}}\), \({\hat{\chi }}^2_{\mathrm{J}}\), \(\hat{\chi }^2_{\mathrm{CH}}\), and \({\hat{B}}^2_{\mathrm{ST}}\), where \(r=2\) and \(s=3\). Note that for \({\hat{B}}^2_{\mathrm{PL}}\), we use tailoring to estimate the model parameters; we use the Prasad-Rao approach for \({\hat{F}}\) and \({\hat{\chi }}^2_{\mathrm{J}}\) as it is computationally faster, the MLE for \({\hat{\chi }}^2_{\mathrm{CH}}\), and GMM for \({\hat{B}}^2_{\mathrm{ST}}\).

Also, we use 1000 replications to obtain the critical values, in each simulation run, for \({\hat{\chi }}^2_{\mathrm{J}}\), and 100 replication run for \({\hat{\chi }}^2_{\mathrm{CH}}\) (due to the fact that the latter is computationally more intensive). To obtain the sizes of the tests, we count the number of times (out of R) that \({\hat{B}}^{2(r_1)}_{\mathrm{PL}}\), \({\hat{F}}^{(r_1)}\), and \({\hat{B}}^{2(r_1)}_{\mathrm{ST}}\) exceed the critical values for the three different levels of significance, namely, \(\chi ^2_{(0.01)}(1)=6.63\), \(\chi ^2_{(0.05)}(1)= 3.84\), \(\chi ^2_{(0.10)}(1)=2.70\), and divide those numbers by R. In the cases of Jiang (2001) and Claeskens and Hart (2009), \({\hat{\chi }}^{2(r_1)}_{\mathrm{J}}\) and \({\hat{\chi }}^{2(r_1)}_{\mathrm{CH}}\) are compared with their corresponding critical values obtained using the bootstrap approach (i.e., 1000 replications for Jiang test and 100 replications for CH test under the null hypothesis), in each simulation run. The powers of the tests are obtained the same way, the only difference being that the sizes are computed when the data are generated under the null hypothesis \(\alpha =0\), while the powers under the alternative of \(\alpha =0.5\).

The empirical size and power for different levels of significance, different scenarios, and different methods are reported in Tables 1, 2 and 3. It seems that, with the increasing sample size (m) and for all three different levels of significance, \({\hat{B}}^2_{\mathrm{PL}}\) and \({\hat{\chi }}^{2}_{\mathrm{J}}\) have approximately the right size under different scenarios. However, in the case of \({\hat{F}}\), the test does not seem to have the right size if there are misspecifications in the mean and significant change in the range of the sampling variances for the small areas. The size also does not seem to improve for \({\hat{\chi }}^2_{\mathrm{CH}}\) with increasing sample size. As for \({\hat{B}}^2_{\mathrm{ST}}\), it seems that the test does not have the right size until \(m=500\). Regarding the power, \({\hat{B}}^2_{\mathrm{PL}}\) seems to perform very well under all scenarios. The power performance of \({\hat{\chi }}^2_{\mathrm{J}}\) seems to be poor compared to \({\hat{B}}^2_{\mathrm{PL}}\), while the power performance of \({\hat{F}}\) and \({\hat{B}}^2_{\mathrm{ST}}\) is similar to that of \({\hat{B}}^2_{\mathrm{PL}}\). It appears that the power of \({\hat{\chi }}^2_{\mathrm{CH}}\) does not also improve with increasing sample size.

Note, in particular, that \({\hat{B}}^2_{\mathrm{ST}}\) performs poorly in size unless \(m=500\). To further investigate the possible reason for this, we provide in Table 4 median estimates of \({\sigma ^2}\) over the simulation runs R under different sample sizes and scenarios, for PL (tailoring) and ST (GMM). It is seen that the estimate of \(\sigma ^{2}\) by GMM performs poorly until \(m=500\).

It has also been observed that PL (tailoring) is computationally much less demanding than ST (GMM). For example, the rate of convergence for the parameter estimates GMM/ST, in terms of the number of iterations needed for the Newton-Raphson procedure to achieve a given level of accuracy (the larger m the slower convergence), was much lower than that for the corresponding Maple/tailoring method.

5 Median income data

We discuss two applications of the tailoring method regarding the median income data of four-person families at the state level in the USA (Ghosh et al. 1996). The first application has to do for choosing an appropriate model; the second is about checking the normality assumption. The data has been analyzed by several researchers using different set-ups. In this analysis, the response variable \(y_i\) is the four-person median income from the sample survey at state i in year 1989, and \(x_i\) is the census four-person median income at state i in year 1979 (\(i=1,...,m=51\)).

5.1 Choosing an appropriate model

An inspection of the scatter plot (Fig. 1) suggests that a quadratic model may fit the data well. As a starting point, we test whether a quadratic mixed model rather than linear mixed model fits the data well. That is,

where the \(v_i\)’s are state-specific random effects and \(e_i\)’s are sampling errors. It is assumed that \(v_i\) and \(e_i\) are independent with \(v_i \sim N(0, \sigma ^2)\) and \(e_i \sim N(0, D_i )\) with known \(D_i\). We now test \(H_0: \beta _2=0\) vs \(H_1: \beta _2 \ne 0\). In the case of Maple/tailoring approach, the model parameter estimates are \({\hat{\beta }}_0=4503.2, {\hat{\beta }}_1=1.60, {\hat{\sigma }}^2=1.9\times 10^{7}\) which result in rejecting \(H_0\) as \({\hat{B}}^2_{PL}=5.36>3.84\) [\(= \chi ^2_{0.05}(1)\)]. Thus, the test suggests that the linear model is inappropriate.

Based on the above result, we can also evaluate the cubic model

or a quadratic-outlying (Q-O) model (due to the point at the right side of the scatterplot of \(y_i\) vs \(x_i\); see Jiang et al. 2011), as

In the case of Maple/tailoring approach, the model parameter estimates are \({\hat{\beta }}_0=-72375.1, {\hat{\beta }}_1=845.1, {\hat{\beta }}_{2}=-1.50, {\hat{\sigma }}^2=16982730\), which cannot reject \(H_0\) as \({\hat{B}}^2_{PL}=1.19<3.84\) [\(=\chi ^2_{0.05}(1)\)]. Thus, the test confirms the quadratic model is an appropriate model for this data rather than the cubic model.

Finally, we consider the Q-O model. To evaluate the Q–O model, we use Maple in conjunction with tailoring to test \(H_0: \beta _3=0\) in model (21). In the case of Maple/tailoring approach, the model parameter estimates are \({\hat{\beta }}_0=-72375.1, {\hat{\beta }}_1=849.1, {\hat{\beta }}_2=-1.50, {\hat{\sigma }}^2=16982730\), which cannot reject the \(H_0\) as \({\hat{B}}^2_{PL}=1.31< 3.84\) [\(=\chi ^2_{0.05}(1)\)]. Thus, the test confirms that the quadratic model as an appropriate model for this data rather than the Q-O model.

Overall, we conclude that the quadratic model is a good fit for the data.

It should be noted that we also applied the methods of Pierce (1982), Jiang (2001), and Claeskens and Hart (2009) to this data. None of these tests were able to reject the linear model null hypothesis. This seems to be consistent with the pattern observed in our simulation study in Sect. 4 that these tests appear to have lower power than our tests.

5.2 Checking the normality assumption

It is known that income data are typically not normal. In this application, our goal is to check the normality assumption for median incomes of four-person families at the state level in the USA (Ghosh et al. 1996). Following Sect. 5.1, we consider the quadratic model (19).

We consider testing \(\mathrm{H}_0: \alpha =0\) vs \(\mathrm{H}_1: \alpha \ne 0\). First, we apply the Maple approach in conjunction with tailoring. The parameter estimates are \({\hat{\beta }}_1=2.07, {\hat{\beta }}_2=-1.2\times 10^{-5}, {\hat{\sigma }}^{2}=1.9\times 10^{7}\), which result in \({\hat{B}}^2_{\mathrm{PL}}=4.67> 2.70=\chi ^2_{1}(0.90)\), rejecting \(\mathrm{H}_0\) at the 10% significance level.

Next, we apply the ST method to this data. In this case, the GMM estimates are \({\hat{\beta }}_1=2.07, {\hat{\beta }}_2=-1.3\times 10^{-5}, {\hat{\sigma }}^2=1.9\times 10^{7}\), which result \({\hat{B}}^2_\mathrm{ST}=4.93>2.70\), also rejecting \(H_0\) at the 10% significance level.

Note that, although the values of tailoring and GMM estimates are very close, the GMM equation for ST is more complicated than the tailoring one due to not taking the expected value of the A matrix (see Remark 2 in Sect. 2). This may explain the poor performance of ST in terms of the size (when m is moderate) and computational efficiency in our simulation study reported in Sect. 4.

We also applied the methods of Pierce (1982), Jiang 2001, and Claeskens and Hart (2009) to test the hypothesis. None of these tests were able to reject the normality assumption. For the latter two methods, this seems to be consistent with the pattern observed in our simulation study in Sect. 4 that these tests appear to be less powerful.

6 Discussion

There are multiple ways of checking for goodness-of-fit. A main reason that PL is considered in the context of SAE is due to an intuitive fact that it gets the predictive distribution of \(\theta \), the vector of small area means, involved in the process. To illustrate with a simple example, consider the following James-Stein example. Suppose that \(y_{i}=\theta _{i}+e_{i}\), \(i=1,\dots ,m\), where \(\theta _{i}, e_{i}, i=1,\dots ,m\) are independent such that \(\theta _{i}\sim N(\mu ,A)\), \(e_{i}\sim N(0,1)\). The model is a special case of the Fay-Herriot model. From a Bayesian perspective, \(\theta _{i}\) has a prior distribution, which is normal with mean \(\mu \) and variance A. However, the predictive distribution of \(\theta _{i}\), given the data \(y=(y_{i})_{1\le i\le m}\), is normal with mean \(w\mu +(1-w)y_{i}\) and variance wA, where \(w=(A+1)^{-1}\). It is clear that the data has an impact on understanding the distribution of \(\theta \), going from the prior distribution to the predictive distribution. This is what we want to check with our goodness-of-fit test. In contrast, the traditional likelihood corresponds to using the prior distribution of \(\theta \) instead of the predictive distribution [compare (13) and (14)]. The data has no impact on the prior distribution; in other words, the prior distribution is not sensitive to how one predicts the distribution of \(\theta \) using the data. Therefore, intuitively, the likelihood based method has little to do with the main interest of SAE, that is, the prediction of \(\theta \).

The next question is how this intuition makes a difference. This has to do with the main objective of using a statistical model. Is the model used for interpretation or prediction? If the model is used for interpretation, then perhaps one can ignore a few outliers because, here, the focus is the main trend, or big picture. However, if the main objective is prediction, the outliers may not be ignored. In practice, ignoring a few outliers can result in the cost of millions of dollars, if not billions of dollars. The objective is taken seriously, for example, in our real-data example (see Sect. 5.1). Here, a single point on the right side appears to be an outlier. If one uses a non-predictive goodness-of-fit test, such as Pierce (1982), Jiang (2001), and Claeskens and Hart (2009), none of these tests have rejected the linear model null hypothesis (see the last paragraph of Sect. 5.1). This suggests that these tests tend to look at the big picture, and therefore ignore the “outlier”. On the other hand, our predictive-based test, that is, PL/tailoring, is able to reject the null hypothesis. This means that PL is taking the “outlier” more seriously by considering its potential impact on the prediction.

Regarding extension of the proposed method to other SAE models, note that tailoring is a general method that can be implemented as long as one has a base function, \(B(\vartheta )\), in hand that satisfies certain conditions (see the first paragraph of Sect. 2). For example, to extend our GoFT to the nested-error regression (NER; Battese et al. 1988) model, we need to (I) set up a framework for GoFT; and (II) construct an appropriate base function. We discuss these two parts below. (I) An NER model can be expressed as \(y_{ij}=x_{ij}'\beta +v_{i}+e_{ij}\), \(i=1,\dots ,m\), \(j=1,\dots ,n_{i}\), where \(y_{ij}\) is the outcome variable, \(x_{ij}\) is a known vector of auxiliary variables, \(\beta \) is a vector of fixed effects, \(v_{i}\) is an area-specific random effect, and \(e_{ij}\) is an error. The standard assumptions are that the random effects and errors are independent with (i) \(v_{i}\sim N(0,\sigma _{v}^{2})\) and (ii) \(e_{ij}\sim N(0,\sigma _{e}^{2})\). Note that, unlike in the Fay-Herriot model, here, it may not be reasonable to assume that the distribution of \(e_{ij}\) is normal, because the central limit theorem (CLT) may not apply. Thus, to set up the GoFT framework, we may replace both (i) and (ii) by the skewed normal distribution family. (II) The general principle of PL (see the middle part of Sect. 3) still applies to this case. We just need to derive the resulting base function following the general steps and, with the base function, the resulting GoFT by the tailoring method. We will develop the details, and study performance of the resulting test in our future work.

Supplementary Materials The supplementary materials contain R codes and corresponding “readme” files for the simulation and application conducted in this work.

References

Akaike H (1956) Information theory as an extension of the maximum likelihood principle. In: Petrov BN, Csaki F (eds) Second international symposium on information theory. Akademiai Kiado, Budapest, pp 267–281

Azzalini A, Capitanio A (2014) The skew-normal and related families. Cambridge University Press, New York

Battese GE, Fuller WA, Harter RM (1988) An error-components model for prediction of county crop areas using survey and satellite data. J Am Stat Assoc 80:28–36

Chatterjee S, Lahiri P, Li H (2008) Parametric bootstrap approximation to the distribution of EBLUP, and related prediction intervals in linear mixed models. Ann Stat 36:1221–1245

Claeskens G, Hart JD (2009) Goodness-of-fit tests in mixed models (with discussion). TEST 18:213–239

Dao C, Jiang J (2016) A modified Pearson’s \(\chi ^{2}\) test with application to generalized linear mixed model diagnostics. Ann Math Sci Appl 1:195–215

Datta GS, Hall P, Mandal A (2011) Model selection by testing for the presence of small-area effects, and application to area-level data. J Am Stat Assoc 106:362–374

Efron B, Hinkley DV (1978) Assessing the accuracy of the maximum likelihood estimator: observed versus expected Fisher information. Biometrika 65:457–487

Fay RE, Herriot RA (1979) Estimates of income for small places: an application of James-Stein procedures to census data. J Am Stat Assoc 74:269–277

Fisher RA (1922) On the interpretation of chi-square from contingency tables, and the calculation of P. J R Stat Soc 85:87–94

Foutz RV (1977) On the unique consistent solution to the likelihood equation. J Am Stat Assoc 72:147–148

Ganesh N (2009) Simultaneous credible intervals for small area estimation problems. J Mult Anal 100:1610–1621

Ghosh M, Nangia N, Kim D (1996) Estimation of median income of four-person families: A Bayesian time series approach. J Am Stat Assoc 91:1423–1431

Hall AR (2005) Generalized method of moments (advanced texts in econometrics). Oxford University Press, Oxford

Hannan EJ, Quinn BG (1979) The determination of the order of an autoregression. J R Stat Soc B 41:190–195

Jiang J (2001) Goodness-of-fit tests for mixed model diagnostics. Ann Stat 29:1137–1164

Jiang J (2007) Linear and generalized linear mixed models and their applications. Springer, New York

Jiang J (2010) Large sample techniques for Statistics. Springer, New York

Jiang J, Nguyen T (2009) Comments on: Goodness-of-fit tests in mixed models by G Claeskens and JD Hart. TEST 18:248–255

Jiang J, Nguyen T, Rao JS (2010) Fence method for nonparametric small area estimation. Surv Method 36:3–11

Jiang J, Nguyen T, Rao JS (2011) Best predictive small area estimation. J Am Stat Assoc 106:732–745

Jiang J, Nguyen T, Rao JS (2015) Observed best prediction via nested-error regression with potentially misspecified mean and variance. Surv Method 41:37–55

Kauermann G, Carroll RJ (2001) A note on the efficiency of sandwich covariance matrix estimation. J Am Stat Assoc 96:1387–1396

Lahiri P, Rao JNK (1995) Robust estimation of mean squared error of small area estimators. J Am Stat Assoc 90:758–766

McCullagh P, Nelder JA (1989) Generalized linear models, 2nd edn. Chapman and Hall, London

Moore DS (1978) Chi-square tests, in Studies in Statistics (RV Hogg, edn.). Mathematical Society of America, Providence

Morris CN, Christiansen CL (1995) Hierarchical models for ranking and for identifying extremes with applications, in Bayes Statistics 5. Oxford University Press, Oxford

Pierce D (1982) The asymptotic effect of substituting estimators for parameters in certain types of statistics. Ann Stat 10:475–478

Prasad NGN, Rao JNK (1990) The estimation of mean squared error of small-area estimators. J Am Stat Assoc 85:163–171

Rao JNK, Molina I (2015) Small area estimation, 2nd edn. Wiley, New York

Rice JA (1995) Mathematical statistics and data analysis, 2nd edn. Duxbury Press, Belmont

Schwarz G (1978) Estimating the dimension of a model. Ann Stat 6:461–464

Searle SR (1971) Linear models. Wiley, New York

Acknowledgements

Constructive comments and suggestions of two referees, which led to an improved version of this article, are greatly appreciated. The research of Jiming Jiang is supported by the NSF grants SES-1121794 and DMS-1713120. The research of Mahmoud Torabi is supported by a grant from the Natural Sciences and Engineering Research Council of Canada (NSERC).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

This appendix provides justification for the heuristic derivation given in Sect. 2 regarding the asymptotic null distribution as well as other details.

1.1 A.1 Notation and regularity conditions

Let \(\vartheta _{0}\) denote the true \(\vartheta \); \(\Vert M\Vert =\sqrt{\lambda _{\max }(M'M)}\) the spectral norm of matrix M, where \(\lambda _{\max }\) denotes the largest eigenvalue; \(\lambda _{\min }\) the smallest eigenvalue; and \(|v|=\sqrt{v'v}\) the Euclidean norm of vector v. Note that the matrices \(A(\vartheta ), B(\vartheta )\), etc. in Sect. 2 depend on the sample size, m, although the dependence will be implicit in notation.

Suppose that \(B(\vartheta )\) in (1) can be expressed as \(B(\vartheta )= V^{-1/2}(\vartheta )\sum _{i=1}^{m}b_{i}(Y_{i},\vartheta )\), where \(Y_{1}, \dots ,Y_{m}\) are independent random vectors, \(\mathrm{E}_{\vartheta }\{ b_{i}(Y_{i},\vartheta )\}=0\), \(\mathrm{Var}_{\vartheta }\{b_{i}(Y_{i},\vartheta ) \}\) exists, and \(V(\vartheta )=\sum _{i=1}^{m}\mathrm{Var}_{\vartheta }\{b_{i}( Y_{i},\vartheta )\}\) is nonsingular. Then, with \(N=m\) and \(A(\vartheta )\) given by (11), the \(C(\vartheta )\) in (3) can be expressed as

with \(c_{m,i}(\vartheta )\) defined in an obvious way. Denote \(\varDelta C( \vartheta )=(\partial /\partial \vartheta ')C(\vartheta )\). We assume the following regularity conditions as \(m\rightarrow \infty \):

A1. The parameter space of \(\vartheta \), \(\varTheta \), is an open subset of \(R^{r}\), and \(c_{m,i}\) is continuously differentiable with respect to \(\vartheta \) for each \(1\le i\le m\).

A2. With probability tending to one, \(\varDelta C(\vartheta _{0})\) is nonsingular.

A3. \(D(\vartheta )=\lim _{m\rightarrow \infty }\mathrm{E}\{\varDelta C(\vartheta )\}\) exists, and there is a constant \(\delta >0\) such that

1.2 A.2 Asymptotic behavior of \({\hat{\vartheta }}\)

In this subsection, we state a result regarding existence, uniqueness, and consistency of \({\hat{\vartheta }}\), the solution to the tailoring equation (3) which is our estimator of \(\vartheta \) used in (2). The proof is very similar to that of Theorem 2 of Foutz (1977), and therefore is omitted.

Lemma 1. Under assumptions A1–A3, there exists a sequence of estimators, \({\hat{\vartheta }}\), such that \(C(\hat{\vartheta })=0\) with probability tending to one, and \(\hat{\vartheta }{\mathop {\longrightarrow }\limits ^\mathrm{P}}\vartheta _{0}\). Furthermore, if \({\tilde{\vartheta }}\) also satisfies the above, then \(\mathrm{P}({\tilde{\vartheta }}={\hat{\vartheta }})\rightarrow 1\) as \(m \rightarrow \infty \).

1.3 A.3 Asymptotic distribution of \(B({\hat{\vartheta }})\)

We assume the following additional regularity conditions:

-

A4. there is a full rank matrix, Q, such that

$$\begin{aligned} \frac{1}{\sqrt{m}}\mathrm{E}_{\vartheta _{0}}\left( \frac{\partial B}{\partial \vartheta '}\right) \longrightarrow Q\;\;\mathrm{and}\;\; \frac{1}{\sqrt{m}}\left\{ \frac{\partial B}{\partial \vartheta '}-\mathrm{E}_{\vartheta _{0}}\left( \frac{\partial B}{\partial \vartheta '}\right) \right\} {\mathop {\longrightarrow }\limits ^\mathrm{P}}0, \end{aligned}$$where \(\partial B/\partial \vartheta '\) is evaluated at \(\vartheta _{0}\).

-

A5. There is a compact subspace of \(\varTheta _\mathrm{c}\subset \varTheta \) that contains \(\vartheta _{0}\) as an interior point such that the \(\sup _{\vartheta \in \varTheta _{\mathrm{c}}}\Vert \cdot \Vert \) of \(V(\vartheta )/m\) and of its up to second partial derivatives (with respect to \(\vartheta \)) are bounded, and \(\liminf [\inf _{\vartheta \in \varTheta _\mathrm{c}}\lambda _{\min }\{V(\vartheta )/m \}]>0\).

-

A6. For the same \(\varTheta _{\mathrm{c}}\), the \(\sup _{\vartheta \in \varTheta _{\mathrm{c}}}\Vert \cdot \Vert \) of \(m^{-1}\sum _{i=1}^{m}\mathrm{E}_{\vartheta } (\partial b_{i}/\partial \theta ')\) and of its up to second partial derivatives (with respect to \(\vartheta \)) are bounded; and the \(\sup _{\vartheta \in \varTheta _{\mathrm{c}}}\Vert \cdot \Vert \) of \(m^{-1}\sum _{i=1}^{m} b_{i}\) and its up to second partial derivatives with respect to \(\vartheta \) are bounded in probability.

-

A7. \(\forall \epsilon >0\), \(\max _{1\le i\le m}\mathrm{E}_{\vartheta _{0}}\{b_{i}^{2}1_{(|b_{i}|>\epsilon m)}\}\rightarrow 0\) as \(m\rightarrow \infty \), where \(b_{i}=b_{i}(Y_{i},\vartheta _{0})\).

Theorem 1

Let \({\hat{\vartheta }}\) denote the estimator in Lemma 1. Under assumptions A1–A7, we have \(B({\hat{\vartheta }}){\mathop {\longrightarrow }\limits ^\mathrm{d}}N(0,P)\), where \(P=I_{s}-Q(Q'Q)^{-1}Q'\) is idempotent with rank \(\nu =s-r\).

Proof

First, by assumptions A5, A7, and the central limit theorem for an array of independent random variables (e.g., Theorem 6.12 of Jiang 2010), it follows that

Next, by the Taylor series expansion, we have

where \(\partial C/\partial \vartheta '\) is evaluated at \(\vartheta _{0}\), and \(\partial ^{2}C_{(k)}/\partial \vartheta \partial \vartheta '\) denotes the kth component of C evaluated at some \(\vartheta _{(k)}\) that lies between \(\vartheta _{0}\) and \({\hat{\vartheta }}\). By assumptions A4–A6, it follows that

Similarly, by assumptions A5 and A6, it can be shown that

By (A.2)–(A.4), and Lemma 1, we have \(0=C(\vartheta _{0})+\{Q'Q+o_\mathrm{P}(1)\}({\hat{\vartheta }}-\vartheta _{0})\), or

On the other hand, again by the Taylor series expansion, we have

where \(\partial B/\partial \vartheta '\) is evaluated at \(\vartheta _{0}\), and \(\partial ^{2}B_{(k)}/\partial \vartheta \partial \vartheta '\) denotes the kth component of B evaluated at some \(\vartheta _{(k)}\) that lies between \(\vartheta _{0}\) and \({\hat{\vartheta }}\). By assumption A4 and (A.1), it is easy to see that \(C(\vartheta _{0})=O_{\mathrm{P}}(m^{-1/2})\). It follows by (A.5) that \({\hat{\vartheta }}-\vartheta _{0}=O_{\mathrm{P}}(m^{-1/2})\). Therefore, by assumptions A5 and A6, it can be shown that the last term on the right side of (A.6) is \(o_{\mathrm{P}}(1)\). Furthermore, by assumption A4, we have

Combining (A.5)–(A.7), we have

and \(P=I_{s}-Q(Q'Q)^{-1}Q'\) is idempotent with \(\mathrm{rank}(P)=s-r\).

Corollary 1

Under the conditions of Theorem 1, we have \(|B({\hat{\vartheta }})|^{2}{\mathop {\longrightarrow }\limits ^\mathrm{d}}\chi _{s-r}^{2}\).

1.4 A.4 Derivation of (18)

We have \(f(\theta _i|y_i)=f(y_i|\theta _i)f(\theta _i)/\int f(y_i|\theta _i) f(\theta _i) \mathrm{d}\theta _i=I_{i1}/I_{i2}\). For \(I_{i1}\), we have

Next, we can show, after some simplification, that

where \(\mu _i=(D_i x'_i \beta +\sigma ^{2}y_i)/(\sigma ^{2}+D_i)\) and \(\sigma ^2_i=B_i D_i\) with \(B_i=\sigma ^{2}/(\sigma ^{2}+D_i)\). It follows that \(I_{i1}\) can be expressed as

Thus, we obtain the expression \(f(\theta _i|y_i)=\)

where \(d_i=(y_i-x'_i \beta )/\sqrt{\sigma ^{2}+D_i}\), and we have used the following fact:

Azzalini and Capitanio (2014): Let \(\phi (\cdot )\) and \(\varPhi (\cdot )\) denote pdf and cdf of the standard normal distribution. Then for all constants \(a, b \in R\) and real value u, we have \(\int _{-\infty }^{+\infty }\phi (u)\varPhi (a+bu)\mathrm{d}u=\varPhi (a/\sqrt{1+b^2})\).

To calculate \(f(y_i|y_i),\) we have

It can now be shown, after some simplification, that

where \(\mu _{\theta i}=(D_i \mu _i +\sigma ^2_i y_i)/(\sigma ^2_i+D_i)\) and \(\sigma ^2_{\theta i}=\sigma ^2_i D_i/(\sigma ^2_i+D_i)\). Thus, we have

where \(f_i=\{2\sigma /(2\sigma ^{2} +D_i)\}(y_i-x'_i \beta )\). From here it is easy to derive (18).

Rights and permissions

About this article

Cite this article

Jiang, J., Torabi, M. Goodness-of-fit test with a robustness feature. TEST 31, 76–100 (2022). https://doi.org/10.1007/s11749-021-00772-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11749-021-00772-0