Abstract

We investigate errors-in-covariates issues in a generalized partially linear model. Unlike the usual literature (Ma and Carroll in J Am Stat Assoc 101:1465–1474, 2006), we consider the case where the measurement error occurs in the covariate that enters the model nonparametrically, while the precisely observed covariates enter the model parametrically. To avoid deconvolution-type operations, which can suffer from very slow convergence rates, we use a B-spline representation to approximate the nonparametric function and convert the problem into a parametric form for operational purposes. We then use a parametric working model in place of the distribution of the unobservable variable, and devise an estimating equation to estimate both the model parameters and the functional dependence of the response on the latent variable. The estimation procedure is devised under the functional model framework without assuming any distributional structure for the latent variable. We further derive the large sample properties of our estimator. Numerical simulation studies are carried out to evaluate the finite sample performance, and the practical performance of the method is illustrated through a data example.


1 Introduction

Generalized partially linear models have been widely used in statistics. Such models enrich the more classic generalized linear models by allowing a covariate to enter the link function through a nonparametric form. This is useful when the dependence of the response on some covariates, even after transformation through a suitable link function, is still not linear and is difficult to specify. At the same time, the model retains the more classic generalized linear dependence on the other covariates. Many works in the literature address estimation and inference for generalized partially linear models; see, for example, Carroll et al. (1995), Liang et al. (2009), Apanasovich et al. (2009), and Yu and Ruppert (2012).

When one of the covariates in the generalized partially linear model cannot be measured precisely, the problem becomes much more difficult. In fact, most of the work on measurement error in the generalized partially linear model considers only the case where the measurement error occurs in a covariate of the linear component (Ma and Carroll 2006; Liu et al. 2017; Liang and Ren 2005; Liu 2007; Liang and Thurston 2008). When the model degenerates to the generalized linear model, an even larger literature exists on handling measurement error (Carroll et al. 2006; Stefanski and Carroll 1985, 1987; Huang and Wang 2001; Ma and Tsiatis 2006; Buonaccorsi 2010; Xu and Ma 2015). When the problem is handled properly, the parameters can be shown to be estimable at the root-n convergence rate despite the presence of the measurement error and the possible presence of a nonparametric function in the model. However, the situation is different when the covariate inside the nonparametric function is itself measured with error. We conjecture that this is because, as soon as the covariate inside an unknown function is subject to error, the problem falls into the general framework of nonparametric measurement error models, where the standard practice for estimation and inference is deconvolution. Deconvolution methods are widely used to handle latent components, and analyses based on them have shown that nonparametric regression with errors in covariates can have a very slow convergence rate. Possibly because of these inherent difficulties, generalized partially linear models with errors in the covariate inside the nonparametric function have not been studied systematically.

We tackle this difficult problem, where the error occurs in the covariate inside the nonparametric component of the generalized partially linear model, through a novel approach that avoids deconvolution completely. Two key ideas make this possible. The first is to use a B-spline expansion to approximate the nonparametric function of the latent covariate. The B-spline representation allows us to write down the approximating form without performing the estimation at the same time. This differs from kernel-based nonparametric estimation, where approximation and estimation are integrated and inseparable. The second idea is the recognition that, after the B-spline approximation, the error-free model is effectively a parametric model, at least operationally; hence, the only nonparametric component in the measurement error model is the distribution of the latent covariate. This implies that the semiparametric approach of Tsiatis and Ma (2004) can be adopted here to help establish the estimation procedure. Encouragingly, not only can we bypass the estimation difficulties caused by a nonparametric function of a covariate measured with error, but we also prove that the procedure retains the root-n convergence rate for the parameter estimation in the original model.

The structure of this paper is as follows. We describe the model and the estimation methodology in Sect. 2, followed by the large sample properties of the parameter estimation in Sect. 3. Simulation studies are conducted in Sect. 4, and we analyze the AIDS Clinical Trials Group (ACTG) study in Sect. 5. We finish the paper with some discussion in Sect. 6. All technical details and proofs are provided in the Appendix.

2 Main results

2.1 The model

We work in a measurement error model framework, sometimes also referred to as an errors-in-covariates model. It differs from the standard regression model in that at least one of the covariates is not directly observable; instead, only an error-contaminated measurement of this covariate is observed. In a standard regression problem, we would observe independent and identically distributed (i.i.d.) observations \((X_i, \mathbf{Z}_i, Y_i), i=1, \dots , n\), where \((X_i, \mathbf{Z}_i)\) is the covariate and \(Y_i\) is the response. Then, given a specific model of \(Y_i\) conditional on \(X_i\) and \(\mathbf{Z}_i\), we can proceed to estimate the unknown components of this regression relation. In a measurement error model, however, \(X_i\) is no longer available; only an error-contaminated version of \(X_i\), say \(W_i\), is observed. Thus, the goal is still to estimate the parameters in the model of \(Y_i\) given \((X_i, \mathbf{Z}_i)\), but using the \((W_i, \mathbf{Z}_i, Y_i)\)’s instead of the \((X_i, \mathbf{Z}_i, Y_i)\)’s.

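In this paper, we study the generalized partially linear model

$$f_{Y\mid X,\mathbf{Z}}(y,x,\mathbf{z}) = f\{y,\mathbf{z}^{\mathrm{T}}{\varvec{\beta }}+g(x),{\varvec{\alpha }}\}. \qquad (1)$$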

Here, Y is the univariate response variable, and X and \(\mathbf{Z}\) are covariates. We assume the univariate variable X to be compactly supported; without loss of generality, let the support be \([0, 1]\). We assume \(\mathbf{Z}\in {{\mathscr {R}}}^{p_z}, p_z\ge 1\). The unknown components in (1) are \({\varvec{\beta }}\in {\mathscr {R}}^{p_z}\) and \({\varvec{\alpha }}\), whose estimation and inference are of main interest to us, and the nuisance function \(g(\cdot )\), which gives rise to the name “partially linear.” The link function \(f(\cdot )\) is assumed to be known. Here, the parameter \({\varvec{\beta }}\) describes the linear effect of the covariates in \(\mathbf{Z}\), \(g(\cdot )\) describes the unspecified effect of X, and \({\varvec{\alpha }}\) arises according to the link function f. For example, \(f(\cdot )\) can be the inverse logit link function \(f(\cdot )=1-1/\{\exp (\cdot )+1\}\) or the normal link function \(f(\cdot )=\exp \{ -(\cdot )^2/(2\alpha ^2)\}/\{(2\pi \alpha ^2)^{1/2}\}\). Note that in the logistic example the parameter \({\varvec{\alpha }}\) does not appear, while in the normal example \(\alpha \) is the standard deviation of Y given \((X,\mathbf{Z})\). Although Y and \(\mathbf{Z}\) are observable, X is not: it is measured with error. Thus, in lieu of observing X, we observe W, where

$$W = X + U, \qquad (2)$$

and U is a normal random error, independent of X and \(\mathbf{Z}\), with mean zero and variance \(\sigma _U^2\). For ease of presentation, we assume \(\sigma _U^2\) is known. When \(\sigma _U^2\) is unknown, a common approach is to first estimate it from repeated measurements and then plug in the estimate. The observed data are \((W_i, \mathbf{Z}_i, Y_i), i=1, \dots , n\), which are i.i.d. Our goal is to estimate \({\varvec{\alpha }}\) and \({\varvec{\beta }}\), together with \(g(\cdot )\), and hence to understand the dependence of Y on the covariates \((X,\mathbf{Z})\).
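To make the two example families concrete, the sketch below evaluates \(f(y,t,{\varvec{\alpha }})\) at the index \(t=\mathbf{z}^{\mathrm{T}}{\varvec{\beta }}+g(x)\). It assumes, as our reading of the examples above, that in the logistic case f is the Bernoulli probability of y built from the inverse logit \(H(t)=1-1/\{\exp (t)+1\}\), and that in the normal case the displayed density is evaluated at \(y-t\) with \(\alpha \) as the standard deviation. The helper names are hypothetical.

```python
import numpy as np
from scipy.special import expit


def inv_logit(t):
    """Inverse logit H(t) = 1 - 1/{exp(t) + 1}, computed stably via expit."""
    return expit(t)


def f_logistic(y, t):
    """Bernoulli probability of binary y with success probability H(t)."""
    p = inv_logit(t)
    return np.where(y == 1, p, 1.0 - p)


def f_normal(y, t, alpha):
    """Normal density of y with mean t and standard deviation alpha."""
    r = y - t
    return np.exp(-r**2 / (2.0 * alpha**2)) / np.sqrt(2.0 * np.pi * alpha**2)


def index(z, beta, g, x):
    """Index t = z^T beta + g(x), one value per row of the covariate matrix z."""
    return z @ beta + g(x)
```

For instance, `f_logistic(y, index(z, beta, g, x))` gives the conditional probability of each observed binary response under model (1) when X is known.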

2.2 Efficient score derivation

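For preparation, we first approximate g(x) with a B-spline representation, i.e., \(g(x)\approx \mathbf{B}(x)^{\mathrm{T}}{\varvec{\gamma }}\), where \(\mathbf{B}(x)\) is the vector of B-spline basis functions and \({\varvec{\gamma }}\) is the corresponding coefficient vector. Under this approximation, model (1) becomes

$$f_{Y\mid X,\mathbf{Z}}(y,x,\mathbf{z},\varvec{\theta }) = f\{y,\mathbf{z}^{\mathrm{T}}{\varvec{\beta }}+\mathbf{B}(x)^{\mathrm{T}}{\varvec{\gamma }},{\varvec{\alpha }}\}, \qquad (3)$$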

which is a completely parametric model with unknown parameters \(\varvec{\theta }\equiv ({\varvec{\alpha }}^{\mathrm{T}},{\varvec{\beta }}^{\mathrm{T}},{\varvec{\gamma }}^{\mathrm{T}})^{\mathrm{T}}\). This model falls within the general framework of Tsiatis and Ma (2004); hence, their estimation procedure can be adopted here. Specifically, the joint distribution of the observed variables conditional on \(\mathbf{Z}\) is

with the conditional distribution \(f_{X\mid \mathbf{Z}}(x,\mathbf{z})\) being a nuisance parameter. The nuisance tangent space \(\varLambda \) and its orthogonal complement \(\varLambda ^\perp \) can be written as

The efficient score for \(\varvec{\theta }\) is the residual of its score vector \(\mathbf{S}_{\varvec{\theta }}(y,w,\mathbf{z})\) after projecting it onto the nuisance tangent space \(\varLambda \), denoted by

where \( \mathbf{S}_{\varvec{\theta }}(y,w,\mathbf{z},\varvec{\theta })\equiv {\partial \log f_{W,Y\mid \mathbf{Z}}(y,w,\mathbf{z},\varvec{\theta })}/{\partial \varvec{\theta }}. \) Here, “\(_{\mathrm{res}}\)” stands for residual. The detailed form of \(\mathbf{S}_{\mathrm{res}}(y,w,\mathbf{z},\varvec{\theta })\) is given as

where \(\mathbf{a}(X,\mathbf{Z},\varvec{\theta })\) satisfies

Because the above derivation is based on the approximate model (3), some further analysis is needed. Separating the components corresponding to \({\varvec{\alpha }}, {\varvec{\beta }}\) and \({\varvec{\gamma }}\) in \(\varvec{\theta }\), we can write \(\mathbf{S}_{\varvec{\theta }}(y,w,\mathbf{z},\varvec{\theta })=\{ \mathbf{S}_{{\varvec{\alpha }},{\varvec{\beta }}}(y,w,\mathbf{z},\varvec{\theta })^{\mathrm{T}}, \mathbf{S}_{{\varvec{\gamma }}}(y,w,\mathbf{z},\varvec{\theta })^{\mathrm{T}}\}^{\mathrm{T}}\), which leads to the following corresponding relation:

The estimating equation of the approximate model can be written as

Recall that our original model contains an unknown function g(x). Thus, for the estimation of \({\varvec{\alpha }}, {\varvec{\beta }}\), it is beneficial to first treat g as an additional nuisance parameter and estimate \({\varvec{\alpha }}, {\varvec{\beta }}\) by profiling. We then plug in the estimated values of \({\varvec{\alpha }}\) and \({\varvec{\beta }}\) and estimate g via the B-spline approximation. Of course, in addition to g, the distribution of the unobservable covariate conditional on the observable covariate \(\mathbf{Z}\) is also a nuisance component and still has to be taken into account.
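For orientation, the generic objects used earlier in this subsection take the following form in the functional measurement error framework of Tsiatis and Ma (2004). This is our restatement for the approximate model (3), offered as a sketch rather than as the authors' exact displays; it assumes nondifferential error with \(U\sim N(0,\sigma _U^2)\) independent of \((X,\mathbf{Z},Y)\), and \(\phi \) denotes the standard normal density.

```latex
\begin{aligned}
f_{W,Y\mid \mathbf{Z}}(w,y,\mathbf{z},\boldsymbol{\theta})
  &= \int \frac{1}{\sigma_U}\,\phi\!\left(\frac{w-x}{\sigma_U}\right)
     f\{y,\mathbf{z}^{\mathrm{T}}\boldsymbol{\beta}+\mathbf{B}(x)^{\mathrm{T}}\boldsymbol{\gamma},\boldsymbol{\alpha}\}\,
     f_{X\mid \mathbf{Z}}(x,\mathbf{z})\,dx,\\
\Lambda &= \bigl[E\{\mathbf{h}(X,\mathbf{Z})\mid Y,W,\mathbf{Z}\}:\ E\{\mathbf{h}(X,\mathbf{Z})\mid \mathbf{Z}\}=\mathbf{0}\bigr],\\
\Lambda^{\perp} &= \bigl[\mathbf{h}(Y,W,\mathbf{Z}):\ E\{\mathbf{h}(Y,W,\mathbf{Z})\mid X,\mathbf{Z}\}=\mathbf{0}\bigr],\\
\mathbf{S}_{\mathrm{res}}(y,w,\mathbf{z},\boldsymbol{\theta})
  &= \mathbf{S}_{\boldsymbol{\theta}}(y,w,\mathbf{z},\boldsymbol{\theta})
     - E\{\mathbf{a}(X,\mathbf{Z},\boldsymbol{\theta})\mid Y=y,W=w,\mathbf{Z}=\mathbf{z}\},\\
E\bigl[E\{\mathbf{a}(X,\mathbf{Z},\boldsymbol{\theta})\mid Y,W,\mathbf{Z}\}\mid X,\mathbf{Z}\bigr]
  &= E\{\mathbf{S}_{\boldsymbol{\theta}}(Y,W,\mathbf{Z},\boldsymbol{\theta})\mid X,\mathbf{Z}\}.
\end{aligned}
```

The last line is what makes the residual lie in \(\varLambda ^\perp \): taking \(E(\cdot \mid X,\mathbf{Z})\) of \(\mathbf{S}_{\mathrm{res}}\) gives zero.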

Let \({\varvec{\delta }}\equiv ({\varvec{\alpha }}^{\mathrm{T}},{\varvec{\beta }}^{\mathrm{T}})^{\mathrm{T}}\) be a p-dimensional parameter. We propose to solve for \({\varvec{\gamma }}\) from \(\sum _{i=1}^n{\mathbf{S}_{\mathrm{res}}}_2(Y_i,W_i,\mathbf{Z}_i,\varvec{\theta })=\mathbf{0}\) to obtain \(\widehat{{\varvec{\gamma }}}({\varvec{\delta }})\) first. Now from

we can construct the nuisance tangent space as \(\varLambda =\varLambda _{f_X}+\varLambda _g\), where

where \(s(y,t,{\varvec{\alpha }}) \equiv \partial \log f(y,t,{\varvec{\alpha }})/\partial t\). Note that \(\varLambda _{f_X}\) and \(\varLambda _g\) are not orthogonal to each other. We can further verify that

The efficient score for \({\varvec{\delta }}\) is now the residual of the score vector \(\mathbf{S}_{{\varvec{\delta }}}\) after projecting it onto the nuisance tangent space \(\varLambda \), denoted by

Its explicit form is given as

where \(\mathbf{a}(X,\mathbf{Z})\) and \(\mathbf{b}(X)\) satisfy

We can then form the estimating equation \(\sum _{i=1}^n\mathbf{S}_{\mathrm{eff}}\{Y_i, W_i, \mathbf{Z}_i, {\varvec{\delta }},\widehat{{\varvec{\gamma }}}({\varvec{\delta }})\}=\mathbf{0}\) to solve for \(\widehat{{\varvec{\delta }}}\) as the estimator, where \(\mathbf{a}(X,\mathbf{Z}), \mathbf{b}(X)\) are the solutions to the integral equations in (8).
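The score \(s(y,t,{\varvec{\alpha }})=\partial \log f(y,t,{\varvec{\alpha }})/\partial t\), which enters the nuisance tangent space above, has a simple closed form for the two example families of Sect. 2.1: \(s=y-H(t)\) in the Bernoulli/inverse-logit case and \(s=(y-t)/\alpha ^2\) in the normal case. A minimal sketch, assuming those readings of f (helper names are hypothetical), checks both against a central finite difference:

```python
import numpy as np
from scipy.special import expit


def s_logistic(y, t):
    """d/dt log f(y, t) for the Bernoulli/inverse-logit model: y - H(t)."""
    return y - expit(t)


def s_normal(y, t, alpha):
    """d/dt log f(y, t, alpha) for the normal model: (y - t) / alpha^2."""
    return (y - t) / alpha**2


def log_f_logistic(y, t):
    # log H(t) = -log(1 + exp(-t)) and log{1 - H(t)} = -log(1 + exp(t)).
    return -y * np.logaddexp(0.0, -t) - (1 - y) * np.logaddexp(0.0, t)


def log_f_normal(y, t, alpha):
    return -(y - t) ** 2 / (2 * alpha**2) - 0.5 * np.log(2 * np.pi * alpha**2)


def numeric_score(log_f, t, eps=1e-6):
    """Central finite difference of log_f with respect to the index t."""
    return (log_f(t + eps) - log_f(t - eps)) / (2 * eps)


# Compare closed forms with finite differences at a few points.
for y in (0.0, 1.0):
    for t in (-2.0, 0.0, 1.5):
        num = numeric_score(lambda u: log_f_logistic(y, u), t)
        print(y, t, num, s_logistic(y, t))
```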

2.3 Estimation under working model

The above derivations are based on the efficient score and hence would yield the efficient estimator. However, the procedure is not practical as it stands, because its implementation relies on the unknown function \(f_{X\mid \mathbf{Z}}(x,\mathbf{z})\). Thus, our estimator needs to be calculated under a posited working model \(f_{X\mid \mathbf{Z}}^*(x,\mathbf{z})\). The procedure is described below, where we use \(^*\) to denote a quantity calculated using \(f_{X\mid \mathbf{Z}}^*(x,\mathbf{z})\) instead of \(f_{X\mid \mathbf{Z}}(x,\mathbf{z})\).

- 1.

Posit a working model \(f_{X\mid \mathbf{Z}}^*(x,\mathbf{z})\).

- 2.

Solve for \({\varvec{\gamma }}\) from \(\sum _{i=1}^n{\mathbf{S}_{\mathrm{res}}}_2^*(Y_i,W_i,\mathbf{Z}_i,\varvec{\theta })=\mathbf{0}\) to obtain \(\widehat{{\varvec{\gamma }}}({\varvec{\delta }})\).

- 3.

Calculate the score function \(\mathbf{S}_{{\varvec{\delta }}}^*(Y, W, \mathbf{Z},{\varvec{\delta }},g)\) under the working model \(f_{X\mid \mathbf{Z}}^*(x,\mathbf{z})\).

- 4.

Solve the integral equations (8) to get \(\mathbf{a}(X, \mathbf{Z})\) and \(\mathbf{b}(X)\).

- 5.

Calculate the approximate efficient score function \(\mathbf{S}_{\mathrm{eff}}^*(Y, W, \mathbf{Z}, {\varvec{\delta }},\widehat{g})\) following (7), where \(\widehat{g}(\cdot )=\mathbf{B}(\cdot )^{\mathrm{T}}\widehat{{\varvec{\gamma }}}({\varvec{\delta }})\).

- 6.

Solve the estimating equation \(\sum _{i=1}^n\mathbf{S}_{\mathrm{eff}}^*(Y_i, W_i, \mathbf{Z}_i, {\varvec{\delta }},\widehat{g})=\mathbf{0}\) to obtain \(\widehat{{\varvec{\delta }}}\).
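Steps 2 to 6 amount to a nested (profiled) root-finding problem: for each trial \({\varvec{\delta }}\), the \({\varvec{\gamma }}\) equation is solved first, and the resulting \(\widehat{{\varvec{\gamma }}}({\varvec{\delta }})\) is substituted into the \({\varvec{\delta }}\) equation. A minimal sketch using `scipy.optimize.root`, in which the two summed estimating functions are hypothetical user-supplied callables (building them requires steps 3 to 5):

```python
import numpy as np
from scipy.optimize import root


def profile_solve(ee_gamma, ee_delta, delta0, gamma0):
    """Profiled root finding for (delta, gamma).

    ee_gamma(delta, gamma): summed estimating function for gamma (step 2),
        e.g. sum_i S*_res,2(Y_i, W_i, Z_i, theta); length d_gamma.
    ee_delta(delta, gamma): summed estimating function for delta (step 6),
        e.g. sum_i S*_eff(Y_i, W_i, Z_i, delta, B^T gamma); length p_delta.
    Both callables are hypothetical placeholders supplied by the user.
    """
    gamma_start = np.asarray(gamma0, dtype=float)

    def gamma_hat(delta):
        # Inner step: solve the gamma equation with delta held fixed.
        sol = root(lambda g: ee_gamma(delta, g), gamma_start)
        if not sol.success:
            raise RuntimeError("inner solve for gamma failed: " + sol.message)
        return sol.x

    def outer(delta):
        # Outer step: plug gamma_hat(delta) into the delta equation.
        return ee_delta(delta, gamma_hat(delta))

    sol = root(outer, np.asarray(delta0, dtype=float))
    if not sol.success:
        raise RuntimeError("outer solve for delta failed: " + sol.message)
    return sol.x, gamma_hat(sol.x)
```

Because the outer solver differentiates through the inner solve numerically, the inner tolerance should be tighter than the outer one; warm-starting the inner solve from the previous \(\widehat{{\varvec{\gamma }}}\) is a natural refinement.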

To calculate \(\mathbf{a}(X,\mathbf{Z})\) at each observed \(\mathbf{z}\) value and to calculate \(\mathbf{b}(X)\), we discretize the distribution of X on m equally spaced points on the support of \(f_{X\mid \mathbf{Z}}(x,\mathbf{z})\) and calculate the probability mass function \(\pi _j(\mathbf{Z})\) at each of the m points. We normalize the \(\pi _j(\mathbf{Z})\) to ensure \(\sum _{j=1}^m\pi _j(\mathbf{Z})=1\). Note that using the discretization,

Further, \(\mathbf{S}_{{\varvec{\delta }}}^*(Y, W, \mathbf{Z},{\varvec{\delta }},g)\), \(E^*\{\mathbf{a}(X,\mathbf{Z}) | Y, W, \mathbf{Z}\} \) and \(E^*[s\{Y,\mathbf{Z}^{\mathrm{T}}{\varvec{\beta }}+g(X,{\varvec{\delta }}),{\varvec{\alpha }}\}\mathbf{b}(X)| Y, W, \mathbf{Z}]\) can be approximated by

Let \(\mathbf{A}(X,\mathbf{Z})\equiv \{\mathbf{a}(x_1, \mathbf{Z}),\dots , \mathbf{a}(x_m,\mathbf{Z})\}^{\mathrm{T}}\) and \(\mathbf{B}(X)\equiv \{\mathbf{b}(x_1),\dots , \mathbf{b}(x_m)\}^{\mathrm{T}}\). Let \(\mathbf{M}_1(X,\mathbf{Z})\equiv \{\mathbf{m}_1(x_1, \mathbf{Z}),\dots ,\mathbf{m}_1(x_m,\mathbf{Z})\}^{\mathrm{T}}\) be an \(m\times p_{{\varvec{\delta }}}\) matrix, where \(p_{{\varvec{\delta }}}\) is the length of \({\varvec{\delta }}\) and \(\mathbf{m}_1(x_i,\mathbf{Z})\equiv E\{\mathbf{S}_{{\varvec{\delta }}}^*(Y, W, \mathbf{Z},{\varvec{\delta }},g)\mid x_i, \mathbf{Z}\}\). Further, let \(\mathbf{M}_2(X,\mathbf{Z})\equiv \{\mathbf{m}_2(x_1, \mathbf{Z}),\dots ,\mathbf{m}_2(x_m,\mathbf{Z})\}^{\mathrm{T}}\) be an \(m\times p_{{\varvec{\delta }}}\) matrix, where we define \(\mathbf{m}_2(x_i,\mathbf{Z})\equiv E\left[ \mathbf{S}_{{\varvec{\delta }}}^*(Y, W, \mathbf{Z},{\varvec{\delta }},g)s\{Y,\mathbf{Z}^{\mathrm{T}}{\varvec{\beta }}+g(x_i)\}\mid x_i, \mathbf{Z}\right] \). Finally, let \(\mathbf{C}(X,\mathbf{Z})\) be an \(m \times m\) matrix with the (i, j) block equal to

let \(\mathbf{D}(X,\mathbf{Z})\) be an \(m \times m\) matrix with the (i, j) block equal to

let \(\mathbf{F}(X,\mathbf{Z})\) be an \(m \times m\) matrix with the (i, j) block equal to

and let \(\mathbf{G}(X,\mathbf{Z})\) be an \(m \times m\) matrix with the (i, j) block equal to

We can then get \(\mathbf{a}(x_i,\mathbf{Z})\) and \(\mathbf{b}(x_i)\) by solving
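The discretization can be sketched as follows. The sketch assumes a truncated normal working model for X on [0, 1] that does not depend on \(\mathbf{Z}\) (as in Sect. 4), reads "m equally spaced points" as a grid that includes both endpoints, and takes a binary response with inverse-logit link. Under these assumptions, Bayes' rule on the grid gives \(E^*\{h(X)\mid Y,W,\mathbf{Z}\}\) as a weighted sum, with weights proportional to \(f\{Y,\mathbf{Z}^{\mathrm{T}}{\varvec{\beta }}+g(x_j)\}\,f_U(W-x_j)\,\pi _j\). All function names are hypothetical.

```python
import numpy as np
from scipy.special import expit
from scipy.stats import norm


def working_pmf(m, mu, sd):
    """Grid x_1..x_m on [0, 1] and normalized normal-density masses pi_j."""
    x = np.linspace(0.0, 1.0, m)
    w = norm.pdf(x, loc=mu, scale=sd)
    return x, w / w.sum()                     # normalization handles truncation


def posterior_weights(y, w_obs, lin, g, x, pi, sigma_u):
    """Weights P*(X = x_j | Y, W, Z): one row per observation, one column per x_j.

    y, w_obs, lin: arrays of length n, with lin holding Z_i^T beta.
    g: callable g(x); x, pi: grid and working pmf; sigma_u: sd of U.
    """
    t = lin[:, None] + g(x)[None, :]                          # n x m index values
    p = expit(t)
    lik_y = np.where(y[:, None] == 1, p, 1.0 - p)             # f{Y, Z^T beta + g(x_j)}
    lik_w = norm.pdf(w_obs[:, None] - x[None, :], scale=sigma_u)  # f_U(W - x_j)
    num = lik_y * lik_w * pi[None, :]
    return num / num.sum(axis=1, keepdims=True)


def cond_expect(h_vals, weights):
    """E*{h(X) | Y, W, Z} for h evaluated on the grid (length m, or m x k)."""
    return weights @ h_vals
```

With these weights, each conditional expectation in the integral equations becomes a finite sum over the grid, so the equations reduce to linear systems in the grid values of \(\mathbf{a}\) and \(\mathbf{b}\).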

3 Asymptotic properties

Let \({\mathbf{S}_{\mathrm{res}}}_2(Y_i, W_i, \mathbf{Z}_i, {\varvec{\alpha }},{\varvec{\beta }}, g)\) denote \({\mathbf{S}_{\mathrm{res}}}_2(Y_i, W_i, \mathbf{Z}_i,{\varvec{\alpha }},{\varvec{\beta }}, {\varvec{\gamma }})\) with every appearance of \(\mathbf{B}(X)^{\mathrm{T}}{\varvec{\gamma }}\) replaced by g(X). We first list the regularity conditions required for establishing the large sample properties of our estimator.

- (C1)

The true density \(f_X(x)\) is bounded with compact support. Without loss of generality, we assume the support of \(f_X(x)\) is [0, 1].

- (C2)

The function \(g(x) \in C^q([0, 1])\), \(q>1\), is bounded.

- (C3)

The spline order \(r \ge q\).

- (C4)

Define the knots \(t_{-r+1} = \dots = t_0 = 0< t_1< \dots< t_N < 1 = t_{N+1} = \dots = t_{N+r}\), where N is the number of interior knots, satisfying \(N \rightarrow \infty \), \(N^{-1}n(\log {n})^{-1} \rightarrow \infty \) and \(Nn^{-1/(2q)} \rightarrow \infty \) as \(n \rightarrow \infty \). Denote the number of spline basis functions by \(d_{{\varvec{\gamma }}}\), i.e., \(d_{{\varvec{\gamma }}}=N+r\).

- (C5)

Let \(h_j\) be the distance between the jth and \((j-1)\)th interior knots. Let \(h_b = \max _{1\le j \le N} h_j\) and \(h_s = \min _{1\le j \le N} h_j\). There exists a constant \(c_h \in (0, \infty )\) such that \(h_b/h_s < c_h\). Hence, \(h_b = O_p(N^{-1})\) and \(h_s = O_p(N^{-1})\).

- (C6)

\({\varvec{\gamma }}_0\) is a \(d_{{\varvec{\gamma }}}\)-dimensional spline coefficient vector such that \(\sup _{x \in [0, 1]}|\mathbf{B}(x)^{\mathrm{T}}{\varvec{\gamma }}_0 - g(x)| = O_p(h_b^q)\).

- (C7)

The equation set

$$\begin{aligned} E\{\mathbf{S}_{\mathrm{eff}}^*(Y_i, W_i, \mathbf{Z}_i, {\varvec{\delta }},{\varvec{\gamma }})\}= & {} \mathbf{0}, \\ E\{{\mathbf{S}_{\mathrm{res}}}_2^*(Y_i, W_i, \mathbf{Z}_i, {\varvec{\delta }}, {\varvec{\gamma }})\}= & {} \mathbf{0} \end{aligned}$$

has a unique root for \(\varvec{\theta }\) in a neighborhood of \(\varvec{\theta }_0\). Recall that \(\varvec{\theta }=({\varvec{\alpha }}^{\mathrm{T}}, {\varvec{\beta }}^{\mathrm{T}}, {\varvec{\gamma }}^{\mathrm{T}})^{\mathrm{T}}\) and \({\varvec{\delta }}=({\varvec{\alpha }}^{\mathrm{T}},{\varvec{\beta }}^{\mathrm{T}})^{\mathrm{T}}\). The derivative of the left-hand side with respect to \(\varvec{\theta }\) is a smooth function of \(\varvec{\theta }\), with singular values bounded and bounded away from \(\mathbf{0}\). Let the unique root be \(\varvec{\theta }^*\). Note that \(\varvec{\theta }_0\) and \(\varvec{\theta }^*\) depend on N; that is, for any sufficiently large N, there is a unique root \(\varvec{\theta }^*\) in the neighborhood of \(\varvec{\theta }_0\).

- (C8)

The maximum absolute row sum of the matrix \(\partial \mathbf{S}_{\mathrm{eff}}^*(Y_i, W_i, \mathbf{Z}_i, {\varvec{\delta }}_0, {\varvec{\gamma }}_0)/\partial {\varvec{\gamma }}_0^{\mathrm{T}}\), i.e., \(\Vert \partial \mathbf{S}_{\mathrm{eff}}^*(Y_i, W_i, \mathbf{Z}_i, {\varvec{\delta }}_0, {\varvec{\gamma }}_0)/\partial {\varvec{\gamma }}_0^{\mathrm{T}}\Vert _{\infty }\), is integrable.
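Conditions (C4) to (C6) describe a clamped knot sequence, a quasi-uniform mesh, and a coefficient vector achieving a uniform approximation error of order \(h_b^q\). A minimal sketch using `scipy.interpolate.BSpline`, assuming equally spaced interior knots (so \(h_b/h_s=1\) in (C5)); the helper names are hypothetical, and the least-squares fit on a fine grid is only a stand-in for \({\varvec{\gamma }}_0\) in (C6). Note that the spline order r in (C3) is the polynomial degree plus one, so quadratic splines have r = 3.

```python
import numpy as np
from scipy.interpolate import BSpline


def clamped_knots(n_interior, order):
    """t_{-r+1}=...=t_0=0 < t_1 < ... < t_N < 1=t_{N+1}=...=t_{N+r}, equally spaced."""
    interior = np.linspace(0.0, 1.0, n_interior + 2)[1:-1]
    return np.concatenate([np.zeros(order), interior, np.ones(order)])


def bspline_basis(x, n_interior, order):
    """len(x) x d_gamma matrix of B-spline basis functions, with d_gamma = N + r."""
    t = clamped_knots(n_interior, order)
    degree = order - 1
    n_basis = len(t) - order              # = N + r
    # An identity coefficient matrix makes BSpline return every basis function.
    return BSpline(t, np.eye(n_basis), degree)(x)


def sup_error(g, n_interior, order, n_grid=2001):
    """Sup-norm error of the least-squares spline fit to g on a fine grid."""
    x = np.linspace(0.0, 1.0, n_grid)
    basis = bspline_basis(x, n_interior, order)
    gamma, *_ = np.linalg.lstsq(basis, g(x), rcond=None)
    return np.max(np.abs(basis @ gamma - g(x)))
```

Calling `sup_error` with increasing `n_interior` shows the approximation error shrinking as the mesh \(h_b\) decreases, which is the behavior (C6) postulates.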

The conditions listed above are standard boundedness and smoothness conditions on the functions involved, together with classical conditions on the spline order and the number of knots. They are commonly used in the spline approximation and semiparametric regression literature. We now establish the consistency of \(\widehat{{\varvec{\delta }}}_n\) and \(\widehat{{\varvec{\gamma }}}_n\), as well as the asymptotic distribution of \(\widehat{{\varvec{\delta }}}_n\).

Theorem 1

Assume Conditions \((\mathrm {C}1){-}(\mathrm {C}7)\) hold. Let \(\widehat{\varvec{\theta }}_n \) satisfy

Then, \(\widehat{\varvec{\theta }}_n - \varvec{\theta }_0=o_p(1)\) element-wise.

The result in Theorem 1 is used to further establish the asymptotic properties of the estimator of the parameters of interest \(\widehat{{\varvec{\delta }}}_n\) and the estimator of the function of interest \(\mathbf{B}(\cdot )^{\mathrm{T}}\widehat{{\varvec{\gamma }}}_n\).

Theorem 2

Assume Conditions \((\mathrm {C}1)-(\mathrm {C}8)\) hold, and let

Then,

Consequently, \(\sqrt{n}(\widehat{{\varvec{\delta }}}_n-{\varvec{\delta }}_0)\rightarrow N(\mathbf{0}, \mathbf{V})\) in distribution when \(n\rightarrow \infty \), where

Theorem 2 indicates that \({\varvec{\delta }}\) is estimated at the root-n rate. The proofs of Theorems 1 and 2 are given in the Appendix. Because the B-spline estimation of \(g(\cdot )\) proceeds at a rate slower than root-n, the estimation of \({\varvec{\delta }}\) does not affect the first-order asymptotic properties of \(\widehat{g}\). Thus, when analyzing the asymptotic properties of \(\widehat{g}\), we can treat \({\varvec{\delta }}\) as known, and the proof of Theorem 2 in Jiang and Ma (2018) applies directly. We skip the details of the proof and state the specific convergence properties of the estimator of g in Theorem 3.

Theorem 3

Assume Conditions \((\mathrm{C}1)-(\mathrm{C}8)\) hold, and let

Then, \(\Vert \widehat{{\varvec{\gamma }}}_n - {\varvec{\gamma }}_0\Vert _2 = O_p\{(nh_b)^{-1/2}\}\). Further,

Consequently, \(\widehat{g}(x)=\mathbf{B}(x)^{\mathrm{T}}\widehat{{\varvec{\gamma }}}_n\) satisfies \(\sup _{x \in [0, 1]} |\widehat{g}(x) - g(x)|= O_p\{(nh_b)^{-1/2}\}\). Specifically, \(\text{ bias }\{\widehat{g}(x)\} = E\{\widehat{g}(x) - g(x)\} = O(h_b^{q-1/2})\) and

4 Numerical study

In our first simulation, we generated the observations \((W_i, \mathbf{Z}_i, Y_i)\) from the model

where \(W=X+U\) and \(U\sim \mathrm{Normal}(0,0.03)\). The true function is \(g(x)=-5\exp \{-\,0.8(x-2.5)^2\}\), and H(t) is the inverse logit link function. We set \(\beta _1=1\), \(\beta _2=0.5\), \(\beta _3=1\) and \(\beta _4=-0.3\). The sample size is 1000, and we run 1000 simulations. \(X_i\) is generated from a truncated normal distribution on [0, 1] with mean 0.5 and variance 1/36, independently of \(\mathbf{Z}_i\). We implemented our method using a normal working model, which corresponds to a correctly specified working model. To investigate the performance of our method under a misspecified working model, we also performed another study, in which \(X_i\) is generated from a truncated Student t distribution with 5 degrees of freedom. Covariates \(Z_{1i}\), \(Z_{2i}\) and \(Z_{4i}\) are generated from the standard normal distribution, and \(Z_{3i}\) is generated from a uniform distribution on \([-1,1]\). In both studies, we estimated the parameters \(\beta _1\), \(\beta _2\), \(\beta _3\), \(\beta _4\) and the function g(x).

In the second simulation, we set the true g function to be \(g(x)= -5\exp (-0.2x^2)+5\), with all other settings unchanged. As in the first simulation, we compared the performance under a correct working model and under a misspecified working model in estimating \(\beta _1\), \(\beta _2\), \(\beta _3\), \(\beta _4\) and g(x).

In the third simulation, we increase the sample size to 2000 to examine the performance of our method when the g function is more nonlinear. Specifically, we set \(g(x)=\sin (2\pi x)\) and keep all other settings unchanged.
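The three data-generating designs can be sketched as follows. Because the model display for this section is not reproduced here, the sketch assumes \(\mathrm{logit}\,P(Y=1\mid X,\mathbf{Z})=\sum _{k=1}^4 Z_k\beta _k+g(X)\), reads "Normal(0, 0.03)" as a variance of 0.03, and covers only the correctly specified (truncated normal) case, since the location and scale of the truncated t design are not stated. The names `simulate`, `BETA` and `G_FUNCS` are hypothetical.

```python
import numpy as np
from scipy.special import expit
from scipy.stats import truncnorm

BETA = np.array([1.0, 0.5, 1.0, -0.3])

G_FUNCS = {
    1: lambda x: -5.0 * np.exp(-0.8 * (x - 2.5) ** 2),
    2: lambda x: -5.0 * np.exp(-0.2 * x**2) + 5.0,
    3: lambda x: np.sin(2.0 * np.pi * x),
}


def simulate(n, design, rng, var_u=0.03):
    """Draw (W, Z, Y) under the assumed logistic model, plus the latent X."""
    mu, sd = 0.5, 1.0 / 6.0                        # variance 1/36
    a, b = (0.0 - mu) / sd, (1.0 - mu) / sd        # truncate X to [0, 1]
    x = truncnorm.rvs(a, b, loc=mu, scale=sd, size=n, random_state=rng)
    z = np.column_stack([
        rng.standard_normal(n),                    # Z1
        rng.standard_normal(n),                    # Z2
        rng.uniform(-1.0, 1.0, n),                 # Z3
        rng.standard_normal(n),                    # Z4
    ])
    eta = z @ BETA + G_FUNCS[design](x)
    y = rng.binomial(1, expit(eta))
    w = x + rng.normal(0.0, np.sqrt(var_u), n)     # W = X + U
    return w, z, y, x
```

For example, `simulate(2000, 3, np.random.default_rng(0))` produces one data set for the third design; the latent `x` is returned only for checking and would not be passed to the estimator.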

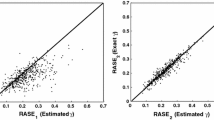

In simulations 1, 2 and 3, we discretized the distribution of X on [0, 1] into \(m=15\) equal segments and used the truncated normal distribution discussed earlier as our working model. We used quadratic splines with seven equally spaced knots on [0, 1] to estimate g(x). The number of knots is chosen to be larger than \(n^{1/4}\) to reflect condition (C4). Increasing the number of knots further does not change the results much. The simulation results are shown in Tables 1, 2 and Figs. 1, 2 and 3.
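The knot-count rule can be checked with a few lines of arithmetic. We assume q = 2 here, which is our reading of why \(n^{1/4}\) appears (it equals \(n^{1/(2q)}\) in (C4)); since (C4) constrains only growth rates, comparing against these quantities at a fixed n is a heuristic rather than a check of the condition itself.

```python
import math


def knot_lower_rate(n, q):
    """n^{1/(2q)}: the rate the number of interior knots N must exceed in (C4)."""
    return n ** (1.0 / (2.0 * q))


def knot_upper_rate(n):
    """n / log n: the rate N must stay below in (C4)."""
    return n / math.log(n)


# Sample sizes used in the simulations; seven knots sit between the two rates.
for n in (1000, 2000):
    print(n, round(knot_lower_rate(n, 2), 2), round(knot_upper_rate(n), 1))
```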

The results in Tables 1 and 2 show little bias in the \({\varvec{\beta }}\) estimates, regardless of whether a correct or a misspecified working model is used. Figures 1, 2 and 3 show that the estimators of g(x) have somewhat large bias near the boundary under both working models, which is expected given the boundary effect. The estimation of g(x) is satisfactory in the interior of the domain. The simulation results show no substantial difference between the correct and the misspecified working model for \(f_X(x)\), which agrees with our theory that the estimator is consistent in both cases.

5 Data analysis

The data set we analyzed is from an AIDS Clinical Trials Group (ACTG) study. The goal of this study was to compare four different treatments, “ZDV,” “ZDV+ddI,” “ZDV+ddC” and “ddC,” in HIV-infected adults whose CD4 cell counts were between 200 and 500 per cubic millimeter. We label these treatments as treatments 1, 2, 3 and 4. We used treatment 1 as the base treatment because it is a standard treatment. There were 1036 patients enrolled in the study, none of whom were receiving antiretroviral therapy at enrollment. The criterion we used to compare the four treatments is whether a patient’s CD4 count drops below 50%, which is an important indicator of an HIV-infected patient’s risk of developing AIDS or dying. We set \(Y=1\) if a patient’s CD4 count drops below 50%, and \(Y=0\) otherwise.

Our model has the form:

where \(W=X+U\) and \(U\sim \mathrm{Normal}(0,\sigma ^2_U)\). The covariates \(Z_1\), \(Z_2\) and \(Z_3\) are binary indicators: \(Z_{1i}=Z_{2i}=Z_{3i}=0\) indicates that the ith individual receives treatment 1, the base treatment; \(Z_{1i}=1\) and \(Z_{2i}=Z_{3i}=0\) indicates that the ith individual receives treatment 2; \(Z_{1i}=0\), \(Z_{2i}=1\) and \(Z_{3i}=0\) indicates that the ith individual receives treatment 3; and \(Z_{1i}=Z_{2i}=0\) and \(Z_{3i}=1\) indicates that the ith individual receives treatment 4. The covariate X is the baseline log(CD4 count) before the start of treatment. Because the CD4 count cannot be measured precisely, X is treated as the unobservable covariate. We use the average of the two available measurements of \(\log \)(CD4 count) as W.

First, we estimated the variance of U using the two repeated measurements, which gave \(\widehat{\sigma }^2_U=0.3\). Then, we constructed our working model for the unobservable variable X. We assumed that X follows a truncated normal distribution and estimated its variance by \(\widehat{\sigma }^2_X=\widehat{\sigma }^2_W-\widehat{\sigma }^2_U\).
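A minimal sketch of these two moment estimates, assuming the replicate model \(W_{ij}=X_i+U_{ij}\) (j = 1, 2) with independent errors of common variance. Because W is the average of the two replicates, its error variance is half the per-replicate variance; the text does not state which of the two the reported 0.3 refers to, so the sketch returns both. The function name is hypothetical.

```python
import numpy as np


def replicate_variances(w1, w2):
    """Moment estimates from two replicates W_ij = X_i + U_ij, j = 1, 2.

    Returns (per-replicate error variance, error variance of the average W,
    variance of X), using Var(W_i1 - W_i2) = 2 * sigma^2.
    """
    w1 = np.asarray(w1, dtype=float)
    w2 = np.asarray(w2, dtype=float)
    sigma2_rep = np.mean((w1 - w2) ** 2) / 2.0   # the difference has mean zero
    sigma2_u_bar = sigma2_rep / 2.0              # error variance of (W_i1 + W_i2) / 2
    w_bar = (w1 + w2) / 2.0
    sigma2_x = np.var(w_bar, ddof=1) - sigma2_u_bar
    return sigma2_rep, sigma2_u_bar, sigma2_x
```

The last line is the \(\widehat{\sigma }^2_X=\widehat{\sigma }^2_W-\widehat{\sigma }^2_U\) step, applied to the averaged measurement.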

Table 3 shows that treatments 2, 3 and 4 are more effective than the baseline treatment, i.e., treatment 1, at the 10% significance level according to the p-values of \(\beta _1\), \(\beta _2\) and \(\beta _3\). The estimated function g(x) is shown in Fig. 4. We generated 1000 bootstrap samples and calculated the bootstrap mean, median and 90% confidence band for g(x). The figure shows that g(x) is a decreasing function, indicating that a larger baseline CD4 count corresponds to a smaller risk of developing AIDS or having the CD4 count drop below 50%. Thus, our analysis indicates that, in general, the alternative treatments and a higher baseline CD4 count are beneficial to a patient.
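The bootstrap summaries reported for g(x) (mean, median and 90% band) can be sketched as follows, assuming subjects are resampled with replacement and the band is formed pointwise from the 5% and 95% percentiles of the bootstrap curves. `fit_curve` is a hypothetical placeholder for refitting the full procedure to each bootstrap sample.

```python
import numpy as np


def bootstrap_band(fit_curve, data, grid, n_boot, rng, level=0.90):
    """Pointwise bootstrap mean, median and percentile band of a fitted curve.

    fit_curve(sample, grid) -> array of curve values on grid (hypothetical
    stand-in for the estimation procedure applied to a resampled data set).
    data: array whose rows are subjects, resampled with replacement.
    """
    n = len(data)
    curves = np.empty((n_boot, len(grid)))
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)           # resample subjects
        curves[b] = fit_curve(data[idx], grid)
    tail = (1.0 - level) / 2.0
    lower, upper = np.quantile(curves, [tail, 1.0 - tail], axis=0)
    return curves.mean(axis=0), np.median(curves, axis=0), lower, upper
```

The band is pointwise, so it covers g at each fixed x with roughly the nominal probability rather than covering the whole curve simultaneously.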

6 Discussion

We devised a consistent and locally efficient procedure to estimate both parameters and functions in a generalized partially linear model where the covariate inside the nonparametric function is subject to measurement error. The method makes no assumption on the distribution of the covariate measured with error other than its compact support, which is easily satisfied in practice. The method is efficient in estimating the model parameters if a correct working model is used, and it retains its consistency even if the working model is misspecified. The estimation procedure breaks free from the deconvolution approach, which is standard practice for nonparametric problems with measurement errors. Instead, it combines B-spline approximation with semiparametric methods in a novel way to carry out the analysis.

Many possible extensions can be explored further, including handling multivariate covariates measured with error via multivariate B-splines, and incorporating index modeling approaches or additive structures. Although our method is developed conceptually for generalized linear models, we did not really make use of the linear structure; hence, any model of the form \(f(Y, g(X), \mathbf{Z}, {\varvec{\beta }})\) can be treated in a similar way. In this regard, the continuous Y case typically involves normal error and has been widely studied, while the binary response case is studied in the main text of this work. When Y is count data, many computational issues arise that merit careful further investigation.

We have assumed that the measurement error U either has a known distribution or has model parameters that can be estimated from replicate observations. Any other available information that identifies the parameters of the measurement error distribution also works, and the plug-in procedure is largely “blind” to how the parameter is estimated. Of course, the estimated distributional parameter will alter the estimation variability of \({\varvec{\delta }}\), which can be taken into account in a standard way (Yi et al. 2015).

References

Apanasovich TV, Carroll RJ, Maity A (2009) SIMEX and standard error estimation in semiparametric measurement error models. Electron J Stat 3:318–348

Buonaccorsi JP (2010) Measurement error: models, methods, and applications. Chapman & Hall/CRC, New York

Carroll RJ, Fan J, Gijbels I, Wand MP (1995) Generalized partially linear single-index models. J Am Stat Assoc 92:477–489

Carroll RJ, Ruppert D, Stefanski LA, Crainiceanu C (2006) Measurement error in nonlinear models: a modern perspective, 2nd edn. CRC Press, London

Huang Y, Wang CY (2001) Consistent functional methods for logistic regression with errors in covariates. J Am Stat Assoc 96:1469–1482

Jiang F, Ma Y (2018) A spline-assisted semiparametric approach to nonparametric measurement error models. arXiv:1804.00793

Liang H, Ren H (2005) Generalized partially linear measurement error models. J Comput Graph Stat 14:237–250

Liang H, Thurston SW (2008) Additive partial linear models with measurement errors. Biometrika 95:667–678

Liang H, Qin Y, Zhang X, Ruppert D (2009) Empirical likelihood-based inferences for generalized partially linear models. Scand J Stat 36:433–443

Liu L (2007) Estimation of generalized partially linear models with measurement error using sufficiency scores. Stat Probab Lett 77:1580–1588

Liu J, Ma Y, Zhu L, Carroll RJ (2017) Estimation and inference of error-prone covariate effect in the presence of confounding variables. Electron J Stat 11:480–501

Ma Y, Carroll RJ (2006) Locally efficient estimators for semiparametric models with measurement error. J Am Stat Assoc 101:1465–1474

Ma Y, Tsiatis AA (2006) Closed form semiparametric estimators for measurement error models. Stat Sin 16:183–193

Stefanski LA, Carroll RJ (1985) Covariate measurement error in logistic regression. Ann Stat 13:1335–1351

Stefanski LA, Carroll RJ (1987) Conditional scores and optimal scores in generalized linear measurement error models. Biometrika 74:703–716

Tsiatis AA, Ma Y (2004) Locally efficient semiparametric estimators for functional measurement error models. Biometrika 91:835–848

Xu K, Ma Y (2015) Instrument assisted regression for errors in variables models with binary response. Scand J Stat 42:104–117

Yi G, Ma Y, Spiegelman D, Carroll RJ (2015) Functional and structural methods with mixed measurement error and misclassification in covariates. J Am Stat Assoc 110:681–696

Yu Y, Ruppert D (2012) Penalized spline estimation for partially linear single-index models. J Am Stat Assoc 97:1042–1054

Additional information

Yang’s research was supported by the National Natural Science Foundation of China Grants 11471086 and 11871173, the National Social Science Foundation of China Grant 16BTJ032, the National Statistical Scientific Research Center Projects 2015LD02, and the Fundamental Research Funds for the Central Universities 19JNYH08. Ma’s work is partially supported by NSF and NIH.

Appendix

A.1 Proof of Theorem 1

From the definitions of \(\mathbf{S}_{\mathrm{eff}}^*(Y_i, W_i,\mathbf{Z}_i, {\varvec{\delta }}, g)\) and \({\mathbf{S}_{\mathrm{res}}}_2^*(Y_i, W_i, \mathbf{Z}_i, {\varvec{\delta }},{\varvec{\gamma }})\), we have

where the subscript \(a\) here and throughout the text stands for “approximate,” and \(E_a\) denotes the expectation calculated with \(g(\cdot )\) replaced by the approximate model \(\mathbf{B}(\cdot )^{\mathrm{T}}{\varvec{\gamma }}_0\). Taking another expectation, we get

Using Condition \((\mathrm {C}6)\), we further get

component-wise. Condition \((\mathrm {C}7)\) ensures that \([E\{\mathbf{S}_{\mathrm{eff}}^*(Y_i, W_i, \mathbf{Z}_i, {\varvec{\delta }}, {\varvec{\gamma }})\}^{\mathrm{T}}, E\{{\mathbf{S}_{\mathrm{res}}}_2^*(Y_i, W_i, \mathbf{Z}_i, {\varvec{\delta }}, {\varvec{\gamma }})\}^{\mathrm{T}}]^{\mathrm{T}}\) is invertible near its zero \(\varvec{\theta }^*\) as a vector function of \(\varvec{\theta }\), and the first derivative of the inverse function is bounded in the neighborhood of \(\varvec{\theta }^*\). Therefore, \(\Vert \varvec{\theta }^* - \varvec{\theta }_0\Vert _2 = o_p(1)\). On the other hand, since

we have

component-wise. Using exactly the same argument as above, we also obtain \(\Vert \widehat{\varvec{\theta }}_n -\varvec{\theta }^*\Vert _2=o_p(1)\). Hence, by the triangle inequality, \(\Vert \widehat{\varvec{\theta }}_n - \varvec{\theta }_0\Vert _2 \le \Vert \widehat{\varvec{\theta }}_n - \varvec{\theta }^*\Vert _2 + \Vert \varvec{\theta }^* - \varvec{\theta }_0\Vert _2 = o_p(1)\). \(\square \)
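In the proof above, \(E_a\) is computed under the approximate model in which \(g(\cdot )\) is replaced by the spline expansion \(\mathbf{B}(\cdot )^{\mathrm{T}}{\varvec{\gamma }}_0\). The following is a minimal Python sketch of evaluating such an expansion, assuming cubic B-splines on clamped, equally spaced knots over \([0,1]\); the degree, the knot layout, and the helper names `clamped_knots`, `bspline_basis`, and `spline_value` are illustrative choices, not the paper's specification. The basis vector \(\mathbf{B}(x)\) is computed with the Cox-de Boor recursion.

```python
def clamped_knots(num_interior, degree, lo=0.0, hi=1.0):
    """Clamped knot vector on [lo, hi] with equally spaced interior knots."""
    h = (hi - lo) / (num_interior + 1)  # knot spacing (illustrative analogue of h_b)
    interior = [lo + h * (k + 1) for k in range(num_interior)]
    return [lo] * (degree + 1) + interior + [hi] * (degree + 1)


def bspline_basis(x, knots, degree):
    """Evaluate every B-spline basis function of `degree` at x (Cox-de Boor).

    Returns len(knots) - degree - 1 values; x must lie in [knots[0], knots[-1]].
    """
    # Degree 0: indicator of the half-open interval [t_i, t_{i+1}).
    b = [1.0 if knots[i] <= x < knots[i + 1] else 0.0
         for i in range(len(knots) - 1)]
    if x == knots[-1]:
        # Close the last nonempty interval so the right end point is covered.
        last = max(i for i in range(len(knots) - 1) if knots[i] < knots[i + 1])
        b[last] = 1.0
    # Raise the degree one step at a time; terms with a zero denominator are 0.
    for d in range(1, degree + 1):
        nxt = []
        for i in range(len(knots) - 1 - d):
            left_den = knots[i + d] - knots[i]
            right_den = knots[i + d + 1] - knots[i + 1]
            left = (x - knots[i]) / left_den * b[i] if left_den > 0 else 0.0
            right = ((knots[i + d + 1] - x) / right_den * b[i + 1]
                     if right_den > 0 else 0.0)
            nxt.append(left + right)
        b = nxt
    return b


def spline_value(x, knots, degree, gamma):
    """Evaluate the approximation B(x)^T gamma of the nonparametric function."""
    return sum(bj * gj for bj, gj in zip(bspline_basis(x, knots, degree), gamma))


if __name__ == "__main__":
    knots = clamped_knots(num_interior=4, degree=3)
    print(len(bspline_basis(0.5, knots, 3)))  # 8 cubic basis functions
```

With 4 interior knots the cubic basis has 8 functions. They are nonnegative and sum to one on \([0,1]\), so setting every coefficient in \({\varvec{\gamma }}\) to the same constant reproduces that constant.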

A.2 Proof of Theorem 2

We first write

where

where

and \(\widetilde{{\varvec{\delta }}}_n\) lies on the line segment connecting \({\varvec{\delta }}_0\) and \(\widehat{{\varvec{\delta }}}_n\).

We further expand \(\mathbf{T}_1\) as a function of \(\widehat{{\varvec{\gamma }}}_n({\varvec{\delta }}_0)\) about \({\varvec{\gamma }}_0({\varvec{\delta }}_0)\) to obtain

where

and \(\widetilde{{\varvec{\gamma }}}_n({\varvec{\delta }}_0)\) lies on the line segment connecting \(\widehat{{\varvec{\gamma }}}_n({\varvec{\delta }}_0)\) and \({\varvec{\gamma }}_0({\varvec{\delta }}_0)\).

Because of the consistency of \(\mathbf{B}(x)^{\mathrm{T}}\widetilde{{\varvec{\gamma }}}_n\) for g(x), which follows from Condition \((\mathrm {C}6)\) and Theorem 1, and the weak law of large numbers, for an arbitrary \(d_{{\varvec{\gamma }}}\times p\) matrix \(\mathbf{G}\) with \(\Vert \mathbf{G}\Vert _2 = 1\), we have

where

Here, as before, \(f(y_i, w_i, \mathbf{z}_i, {\varvec{\delta }}_0, {\varvec{\gamma }}, f_X)\) stands for \(f(y_i, w_i, \mathbf{z}_i, {\varvec{\delta }}_0, g, f_X)\) with \(g(\cdot )\) replaced by \(\mathbf{B}(\cdot )^{\mathrm{T}}{\varvec{\gamma }}\), and \(\mathbf{S}_{a, {\varvec{\gamma }}}(y_i, w_i, \mathbf{z}_i, {\varvec{\delta }}_0, {\varvec{\gamma }}_0)\equiv \partial \log f(y_i, w_i, \mathbf{z}_i, {\varvec{\delta }}_0, {\varvec{\gamma }}, f_X)/\partial {\varvec{\gamma }}\big |_{{\varvec{\gamma }}={\varvec{\gamma }}_0}\). The second equality holds by condition \((\mathrm {C}6)\).

The third equality holds because \(\Vert \partial \mathbf{S}_{\mathrm{eff}}^*(y_i, w_i,\mathbf{z}_i, {\varvec{\delta }}_0, {\varvec{\gamma }}_0)/\partial {\varvec{\gamma }}_0^{\mathrm{T}}\Vert _{\infty }\) is integrable by condition \((\mathrm {C}8)\) and \(f(y_i, w_i, \mathbf{z}_i, {\varvec{\delta }}_0,{\varvec{\gamma }}_0,f_X)\) is absolutely integrable. The fourth equality also holds by condition \((\mathrm {C}6)\). The fifth equality holds because \(E\{\mathbf{S}_{\mathrm{eff}}^*(Y_i, W_i, \mathbf{Z}_i, {\varvec{\delta }}_0, g)\} = \mathbf{0}\). For the last equality, we note that

By Condition \((\mathrm {C}6)\) and the definitions of \(\varLambda _{g}\) and \(\varLambda _{a, {\varvec{\gamma }}}\), for any \(d_{{\varvec{\gamma }}} \times p\) matrix \(\mathbf{G}\), there exists a function \(\mathbf{h}(y_i, w_i, \mathbf{z}_i, {\varvec{\delta }}_0, g) \equiv E[s\{y_i, \mathbf{z}_i^{\mathrm{T}}{\varvec{\beta }}_0+g(X),{\varvec{\alpha }}_0\} \mathbf{G}^{\mathrm{T}}\mathbf{B}(X) \mid y_i,w_i,\mathbf{z}_i] \in \varLambda _{g}\) such that

Further, \(\mathbf{S}_{\mathrm{eff}}^*(y_i, w_i, \mathbf{z}_i, {\varvec{\delta }}_0, g)\) is orthogonal to every function in \(\varLambda _{g}\), so the last equality holds. Hence, we obtain \(\Vert \mathbf{T}_{12}\{\widetilde{{\varvec{\gamma }}}_n({\varvec{\delta }}_0)\} \Vert _2= O_p(h_b^q) \).
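The bound \(O_p(h_b^q)\) is what makes this term negligible. As a rough check, assume (our reading of the omitted display, not a statement taken from the paper) that the second term in the expansion of \(\mathbf{T}_1\) has the form \(n^{1/2}\mathbf{T}_{12}\{\widetilde{{\varvec{\gamma }}}_n({\varvec{\delta }}_0)\}\{\widehat{{\varvec{\gamma }}}_n({\varvec{\delta }}_0)-{\varvec{\gamma }}_0({\varvec{\delta }}_0)\}\). Combined with the rate \(O_p\{(nh_b)^{-1/2}\}\) for \(\widehat{{\varvec{\gamma }}}_n({\varvec{\delta }}_0)\) quoted from Jiang and Ma (2018) in the next paragraph, the orders work out as

\[
\begin{aligned}
n^{1/2}\,\Vert \mathbf{T}_{12}\{\widetilde{{\varvec{\gamma }}}_n({\varvec{\delta }}_0)\}\{\widehat{{\varvec{\gamma }}}_n({\varvec{\delta }}_0)-{\varvec{\gamma }}_0({\varvec{\delta }}_0)\}\Vert _2
&\le n^{1/2}\,\Vert \mathbf{T}_{12}\{\widetilde{{\varvec{\gamma }}}_n({\varvec{\delta }}_0)\}\Vert _2\,\Vert \widehat{{\varvec{\gamma }}}_n({\varvec{\delta }}_0)-{\varvec{\gamma }}_0({\varvec{\delta }}_0)\Vert _2\\
&= n^{1/2}\,O_p(h_b^{q})\,O_p\{(nh_b)^{-1/2}\} = O_p(h_b^{q-1/2}),\\
\frac{h_b^{q-1/2}}{n^{1/2}h_b^{q}} &= (nh_b)^{-1/2} \longrightarrow 0 \quad \text{whenever } nh_b \to \infty .
\end{aligned}
\]

For instance, under the purely illustrative choice \(h_b \asymp n^{-\nu }\), we get \(n^{1/2}h_b^q \asymp n^{1/2-\nu q}\to 0\) when \(\nu > 1/(2q)\) and \(nh_b \asymp n^{1-\nu }\to \infty \) when \(\nu < 1\). Because \(q>1\), such \(\nu \) exist. This example is not meant to restate Conditions \((\mathrm {C}4)\) and \((\mathrm {C}5)\).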

Based on the asymptotic results of Proposition 4 in Jiang and Ma (2018), we have \(\Vert \widehat{{\varvec{\gamma }}}_n({\varvec{\delta }}_0) - {\varvec{\gamma }}_0({\varvec{\delta }}_0)\Vert _2 = O_p\{(nh_b)^{-1/2}\}\). Then, we have

Further, by \((\mathrm {C}6)\) we have \(\mathbf{T}_{11} = n^{-1/2}\sum _{i=1}^{n}\mathbf{S}_{\mathrm{eff}}^*(Y_i, W_i, \mathbf{Z}_i, {\varvec{\delta }}_0, g)+O_p(n^{1/2}h_b^q)\). Since \(h_b^{q-1/2} = o_p(n^{1/2}h_b^q)\) and \(n^{1/2}h_b^q= o_p(1)\) by conditions \((\mathrm {C}4)\) and \((\mathrm {C}5)\), we obtain

We next consider each term in \(\mathbf{T}_2(\widetilde{{\varvec{\delta }}}_n)\). Since \(\widehat{{\varvec{\gamma }}}_n(\cdot )\) satisfies

for any \({\varvec{\delta }}\),

Then,

where

Hence,

By the consistency of \(\widetilde{{\varvec{\delta }}}_n\) for \({\varvec{\delta }}_0\) and of \(\mathbf{B}(x)^{\mathrm{T}}\widehat{{\varvec{\gamma }}}_n\) for g(x), we have

and

From (A.1), we also have

By the proof of Proposition 4 in Jiang and Ma (2018), \(\Vert \mathbf{T}_{23}(\widetilde{{\varvec{\delta }}}_n)^{-1}\Vert _2 = O_p(h_b^{-1})\). Therefore, \(\mathbf{T}_{22}(\widetilde{{\varvec{\delta }}}_n) \{\mathbf{T}_{23}(\widetilde{{\varvec{\delta }}}_n)\}^{-1} \mathbf{T}_{24}(\widetilde{{\varvec{\delta }}}_n)=O_p(h_b^{q-1})\), which is \(o_p(1)\) because \(q>1\) by condition \((\mathrm {C}2)\) and \(h_b\to 0\). Thus,

Therefore,

Since \(n^{-1/2}\sum _{i=1}^{n}\mathbf{S}_{\mathrm{eff}}^*(Y_i, W_i, \mathbf{Z}_i, {\varvec{\delta }}_0, g)\) is a normalized sum of independent zero-mean random vectors, the central limit theorem implies that it converges in distribution to a multivariate normal distribution with mean \(\mathbf{0}\) and covariance matrix \(\mathbf{V}\) given in Theorem 2. \(\square \)
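The last step of the proof rests on the central limit theorem. The Python simulation below only illustrates that step. It is not the paper's estimator: the bivariate summands, their covariance \(((1, 0.5), (0.5, 1.25))\), and the function names are hypothetical stand-ins for \(\mathbf{S}_{\mathrm{eff}}^*\) and \(\mathbf{V}\). The summands are deliberately skewed (centered exponentials), yet the normalized sum \(n^{-1/2}\sum _{i=1}^{n}\mathbf{s}_i\) has sample mean near \(\mathbf{0}\), sample covariance near the covariance of one summand, and roughly normal coverage.

```python
import math
import random


def simulate_normalized_sums(n, reps, seed=0):
    """Draw `reps` copies of n^{-1/2} * sum_{i=1}^n s_i for iid zero-mean 2-vectors.

    Hypothetical stand-in for the score summands: s_i = A u_i, where u_i has two
    independent centered Exp(1) entries (mean 0, variance 1), so
    Cov(s_i) = A A^T = [[1, 0.5], [0.5, 1.25]].
    """
    rng = random.Random(seed)
    a11, a21, a22 = 1.0, 0.5, 1.0  # entries of the lower-triangular matrix A
    scale = 1.0 / math.sqrt(n)
    draws = []
    for _ in range(reps):
        t1 = t2 = 0.0
        for _ in range(n):
            u1 = rng.expovariate(1.0) - 1.0  # skewed, mean 0, variance 1
            u2 = rng.expovariate(1.0) - 1.0
            t1 += a11 * u1
            t2 += a21 * u1 + a22 * u2
        draws.append((scale * t1, scale * t2))
    return draws


def mean_and_cov(draws):
    """Sample mean vector and unbiased sample covariance matrix of 2-vectors."""
    m = len(draws)
    m1 = sum(d[0] for d in draws) / m
    m2 = sum(d[1] for d in draws) / m
    c11 = sum((d[0] - m1) ** 2 for d in draws) / (m - 1)
    c12 = sum((d[0] - m1) * (d[1] - m2) for d in draws) / (m - 1)
    c22 = sum((d[1] - m2) ** 2 for d in draws) / (m - 1)
    return (m1, m2), ((c11, c12), (c12, c22))


if __name__ == "__main__":
    draws = simulate_normalized_sums(n=200, reps=2000, seed=1)
    mean, cov = mean_and_cov(draws)
    print("mean", mean)  # should be close to (0, 0)
    print("cov", cov)  # should be close to ((1, 0.5), (0.5, 1.25))
```

Because the limiting covariance equals the covariance of a single summand, a plug-in estimate of \(\mathbf{V}\) in practice would average outer products of estimated summands. That remark is a general property of such sums, not a description of how the paper estimates \(\mathbf{V}\).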

Cite this article

Wang, Q., Ma, Y. & Yang, G. Locally efficient estimation in generalized partially linear model with measurement error in nonlinear function. TEST 29, 553–572 (2020). https://doi.org/10.1007/s11749-019-00668-0

Keywords

- B-splines

- Efficient score

- Errors in variables

- Generalized linear models

- Instrumental variables

- Measurement errors

- Partially linear models

- Semiparametrics