Abstract

This paper deals with the estimation and prediction of spatio-temporal processes using state-space methodology. The spatio-temporal process is represented through an infinite moving average decomposition. This expansion is well known in time series analysis and extends straightforwardly to space–time. Such an approach allows an easy implementation of the Kalman filter procedure for estimation and prediction of linear time processes exhibiting both short- and long-range dependence, together with a spatial dependence structure across locations. Furthermore, we consider a truncated state-space equation, which allows us to calculate an approximate likelihood for large data sets. The performance of the proposed Kalman filter approach is evaluated by means of several Monte Carlo experiments implemented under different scenarios, and it is illustrated with two applications.


1 Introduction

In the last decade, there has been increasing interest in modeling spatio-temporal data that result from dynamic processes evolving in both space and time. Geostatistics has been used to deal with such spatio-temporal processes by providing covariance models to analyze the dependence structure. Since the eighties, a wealth of papers have proposed a variety of spatio-temporal models that have been used in many scientific areas, such as hydrology, environmental sciences, geology, astronomy, neuroscience, ecology, atmospheric sciences, oceanography, and economics; this list is virtually endless [see, for instance, Eynon and Switzer (1983), Bilonick (1985), Oehlert (1993), among others]. Usually, the proposed models exhibit a hierarchical structure incorporating spatial or spatio-temporal dependencies. Indeed, approaches based on hierarchical spatio-temporal structures have been used to achieve flexible models that capture the dynamic behavior of the data. This is the case of Brown et al. (1994), who applied a hierarchical model to a relatively low-dimensional space–time air pollution problem. Similarly, Handcock and Wallis (1994) used a Bayesian kriging approach for space–time modeling of meteorological fields. Waller et al. (1997) employed hierarchical Bayesian space–time models for mapping disease rates. Wikle et al. (1998) used this class of models in the analysis of monthly averaged maximum temperature data distributed in space and time, and Hughes et al. (1999) used hidden Markov models with unobserved weather states to model space–time atmospheric precipitation. Applications can also be found in environmental pollution problems; for example, Ippoliti (2001) analyzed the levels of sulfur dioxide in Milan (Italy) through a spatio-temporal state-space representation. Fasso et al. (2007) combined the state-space method with calibration techniques and applied them to spatio-temporal particulate matter (PM\(_{10}\)) data. Cameletti et al. (2013) considered a hierarchical spatio-temporal model for particulate matter concentrations, involving a Gaussian field affected by measurement error and a state process characterized by a first-order autoregressive dynamic model with spatially correlated innovations. Another interesting spatio-temporal application, the interpolation of daily rainfall data using state-space models, was proposed by Militino et al. (2015). For an overview of hierarchical dynamical spatio-temporal models, the book by Cressie and Wikle (2011) provides an excellent starting point for researchers in this area.

In these modeling exercises, the Kalman filter algorithm has proved to be a powerful tool for the statistical treatment of state-space models, providing estimates of the parameters (given in the state vector) and predictions of unobserved values at specific locations. For instance, Huang and Cressie (1996) and Wikle (2003) developed empirical Bayesian space–time Kalman filter models for the investigation of snow water equivalent and monthly precipitation. Mardia et al. (1998) considered a hybrid of the Kalman filter algorithm and kriging methodology (the kriged Kalman filter), in which the state equation incorporates different forms of temporal dynamics to model space–time interactions. Wikle and Cressie (1999) presented an approach to space–time prediction that achieves dimension reduction through a spatio-temporal Kalman filter. Xu and Wikle (2007) proposed a spatio-temporal dynamic model formulation with parameter matrices restricted on the basis of prior scientific knowledge, and developed a generalized expectation–maximization (GEM) algorithm to carry out the estimation. Stroud et al. (2010) applied a dynamic state-space model to a sequence of SeaWiFS satellite images of Lake Michigan, where a large amount of sediment was observed after a major storm. In that study, the authors implemented a variant of the Kalman filter, the ensemble Kalman filter, which can handle the nonlinearities and high dimensionality inherent in satellite images, and they obtained maps of sediment concentrations with uncertainties in space and time. For forecasting spatio-temporal processes, Zes (2014) used a state-space system and a time-varying parameter least squares autoregressive system, together with their respective solution algorithms, the Kalman filter and autoregressive adaptive least squares (ALS). More recently, Bocquet et al. (2015) discussed the methods available for data assimilation in atmospheric models, including the ensemble Kalman filter. A common feature of the above-mentioned papers is that the unobserved state vector is responsible for capturing the temporal dependence through a Markovian temporal evolution, with autoregressive or vector autoregressive models. This means that these approaches belong to the class of short-memory models for time series data.

Our approach considers a more general framework. Specifically, it captures the temporal dependence of both short- and long-memory processes while also modeling the spatial dependence. We use the Kalman filter algorithm for estimation and prediction of spatio-temporal processes, but on the basis of a new updating scheme for the unobserved state vector, which differs from the above-mentioned proposals in the following respects:

- We propose the use of an infinite moving average expansion (MA\((\infty )\)) to represent the linear processes underlying spatio-temporal models.
- Our proposal includes short- and long-memory models, such as ARMA(p, q) and ARFIMA(p, d, q), to capture the temporal dependence.
- Instead of computing the exact likelihood of the spatio-temporal process, we propose an approximation to the likelihood function based on a truncated state-space representation. This reduces the memory required and eases the computational burden.
- Finally, we propose a methodology for imputing missing observations.

The paper is organized as follows. Section 2 discusses a class of spatio-temporal processes and their MA(\(\infty \)) representations. Section 3 presents the state-space models and the Kalman filter algorithm for estimating the parameters involved in the temporal dependence and the spatial structure. In Sect. 4, a simulation study assesses the performance of our proposal under a variety of practical scenarios. Section 5 applies our proposal to global total column ozone levels and Irish wind speed data. The paper ends with some conclusions and a discussion.

2 A class of spatio-temporal processes

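Consider the class of spatio-temporal processes given by the infinite moving average expansion

$$\begin{aligned} Y_{t}(\mathbf{s})&={{\varvec{M}}}_{t}(\mathbf{s}){\varvec{\beta }}+\varepsilon _{t}(\mathbf{s}), \qquad (1)\\ \varepsilon _{t}(\mathbf{s})&=\sum _{j=0}^{\infty }\psi _j\,\eta _{t-j}(\mathbf{s}), \qquad (2) \end{aligned}$$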

for \(t=1,\ldots ,T\), where \(\mathbf{s}\) represents a location in the spatial domain \(D\subset \mathbb {R}^{2}\), \({\varvec{\beta }}=[\beta _1,\ldots , \beta _{p}]^{\top }\) is a vector of parameters, \({{\varvec{M}}}_{t}\left( \textstyle \mathbf{s}\right) =[M_{t}^{(1)}(\mathbf{s}),\ldots , M_{t}^{(p)}(\mathbf{s})]\) is a p-dimensional vector of non-stochastic regressors, \(\{\psi _j\}\) is a sequence of coefficients satisfying \(\sum _{j=0}^\infty \psi _j^2<\infty \), and \(\{\eta _t(\mathbf{s})\}\) is a sequence of temporally independent and spatially stationary Gaussian processes with \({{\mathrm{\mathbb {E}}}}(\eta _t(\mathbf{s}))=0\) for all \(\mathbf{s}\in D\) and covariance function

$$\begin{aligned} {{\mathrm{cov}}}(\eta _t(\mathbf{s}), \eta _t(\mathbf{s}'))=C^{\eta }(||\mathbf{s}-\mathbf{s}'||; {\varvec{\theta }}), \quad \mathbf{s}, \mathbf{s}'\in D. \qquad (3) \end{aligned}$$

Here, \(C^{\eta }:[0, \infty ) \rightarrow \mathbb {R}\) is such that the composition \(C^{\eta } \circ ||\cdot || : \mathbb {R}^{2} \rightarrow \mathbb {R}\) is an isotropic covariance function (Daley and Porcu 2014), where \(||\cdot ||\) is the Euclidean norm, \(\circ \) denotes composition, and \({\varvec{\theta }}\) is a parameter vector. Equation (2) is the spatio-temporal analogue of the well-known MA\((\infty )\) decomposition, applied here to the error sequence \(\{\varepsilon _{t}(\mathbf{s})\}\). A stationary process with lag-h temporal covariance \(\kappa (h)\) is said to have short memory if \(\sum _{h=-\infty }^{\infty } \left| \kappa (h)\right| < \infty \), in which case the process in (2) will be called a short-memory process. If instead \(\sum _{h=-\infty }^{\infty } \left| \kappa (h)\right| = \infty \), the process in (2) will be called a long-memory process. Another characterization is based directly on the MA\((\infty )\) decomposition (2): the process \(\{\varepsilon _{t}(\mathbf{s})\}\) has short memory if \(\psi _j \sim \exp (-a j)\) for \(j\ge 1\), with a a positive constant, and long memory if \(\psi _j \sim j^{d-1}\) for some \(d\in (0,1/2)\) [see Palma et al. (2013)]. Here, \(\sim \) means that the ratio of both sides tends to one. We now consider the spatial and temporal dependence of the process \(Y_{t}(\mathbf{s})\). Since the \(\eta _t(\cdot )\) are independent over time, only pairs of terms with \(t-j=t'-j'\) contribute to the covariance of the MA\((\infty )\) decomposition (1)–(2), so that the covariance across both space and time is given by

$$\begin{aligned} {{\mathrm{cov}}}(Y_{t}(\mathbf{s}), Y_{t'}(\mathbf{s}'))=C^{\eta }(\xi ; {\varvec{\theta }})\,\kappa (h), \qquad \kappa (h)=\sum _{j=0}^{\infty }\psi _j\psi _{j+|h|}, \end{aligned}$$

where \(\xi =||\mathbf{s}-\mathbf{s}'||\), \(\mathbf{s}, \mathbf{s}' \in D\), \(h=t-t'\), \(t, t' \in \mathbb {Z_+}\), and \(\kappa (\cdot )\) is a temporal covariance function. Thus, the spatio-temporal covariance function of the process \(Y_{t}(\mathbf{s})\) is the product of a purely spatial and a purely temporal covariance function; in such a case, we say that \(Y_{t}(\mathbf{s})\) has a separable spatio-temporal covariance function (Gneiting 2002). For a self-contained exposition, we discuss some examples below.
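Because of separability, the covariance matrix of the data stacked time by time, \((Y_1(\mathbf{s}_1),\ldots ,Y_1(\mathbf{s}_n),\ldots ,Y_T(\mathbf{s}_n))\), is a Kronecker product of a \(T\times T\) temporal matrix with entries \(\kappa (|t-t'|)=\sum _j\psi _j\psi _{j+|t-t'|}\) and an \(n\times n\) spatial matrix with entries \(C^{\eta }(||\mathbf{s}_i-\mathbf{s}_j||)\). The following minimal Python sketch assumes a finite (truncated) list of weights \(\psi _0,\ldots ,\psi _m\) and, only for concreteness, an exponential \(C^{\eta }\); the function names are hypothetical.

```python
import numpy as np

def temporal_cov(psi, max_lag):
    """kappa(h) = sum_j psi_j psi_{j+h}, h = 0..max_lag, for a finite list of MA weights."""
    psi = np.asarray(psi, dtype=float)
    return np.array([psi[: psi.size - h] @ psi[h:] if h < psi.size else 0.0
                     for h in range(max_lag + 1)])

def separable_cov(psi, coords, T, sigma2=1.0, rho=1.0):
    """Covariance of (Y_1(s_1..s_n), ..., Y_T(s_1..s_n)): kappa(|t - t'|) * C(||s - s'||)."""
    kappa = temporal_cov(psi, T - 1)
    lags = np.abs(np.subtract.outer(np.arange(T), np.arange(T)))
    K = kappa[lags]                                   # T x T temporal covariance
    dist = np.linalg.norm(coords[:, None, :] - coords[None, :, :], axis=-1)
    C = sigma2 * np.exp(-rho * dist)                  # n x n spatial covariance (assumed exponential)
    return np.kron(K, C)                              # entry (t*n + i, t'*n + j) = K[t, t'] * C[i, j]

coords = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]])
Sigma = separable_cov([1.0, 0.5, 0.25], coords, T=2)
```

The Kronecker structure is what makes separable models cheap to store; the state-space approach of Sect. 3 avoids forming this \(nT\times nT\) matrix altogether.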

Example 1

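As an example of a short-memory process, we consider the regression model (1) with autoregressive moving average errors, denoted by ARMA(p, q), and defined as

$$\begin{aligned} \varepsilon _{t}(\mathbf{s})=\sum _{j=1}^{p}\phi _j\varepsilon _{t-j}(\mathbf{s})+\sum _{j=1}^{q}\theta _j\eta _{t-j}(\mathbf{s})+\eta _{t}(\mathbf{s}), \end{aligned}$$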

for \(t=1,2,\ldots , T\). When \(p=q=1\), i.e., \(\varepsilon _{t}(\mathbf{s})= \phi \varepsilon _{t-1}(\mathbf{s}) + \theta \eta _{t-1}(\mathbf{s}) + \eta _{t}(\mathbf{s})\), we obtain a special case of the errors in (2) with coefficients \(\psi _j=\left( \phi +\theta \right) \phi ^{j-1}\) for \(j\ge 1\) and \(\psi _0=1\) in the MA\((\infty )\) process (hereafter, we assume that \(\psi _0=1\) unless specified otherwise). When \(q=0\), we have an autoregressive AR(p) process, \(\varepsilon _{t}(\mathbf{s})= \sum _{j=1}^{p} \phi _j \varepsilon _{t-j}(\mathbf{s}) + \eta _{t}(\mathbf{s})\), for \(t=1,2,\ldots , T\). If \(p=1\), then \(\varepsilon _{t}(\mathbf{s})= \phi \varepsilon _{t-1}(\mathbf{s}) + \eta _{t}(\mathbf{s})\), which is a special case of the errors in (2) with coefficients \(\psi _j=\phi ^j\). Straightforward calculations show that the temporal covariance function of an AR(1) process is \(\kappa (h)= \frac{\phi ^{h}}{1-\phi ^2}\) for \(h>0\); see Mikosch et al. (1995) for other MA\((\infty )\) representations of ARMA models.
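The ARMA(1, 1) weights and the AR(1) covariance can be checked numerically. The Python sketch below is illustrative only: the function names are hypothetical, and the second function simply matches coefficients in \(\psi (z)(1-\phi z)=1+\theta z\), which gives \(\psi _1=\phi +\theta \) and \(\psi _j=\phi \psi _{j-1}\) for \(j\ge 2\).

```python
import numpy as np

def arma11_psi(phi, theta, m):
    """psi_0..psi_m of eps_t = phi eps_{t-1} + theta eta_{t-1} + eta_t: psi_j = (phi + theta) phi^(j-1)."""
    j = np.arange(1, m + 1)
    return np.concatenate(([1.0], (phi + theta) * phi ** (j - 1)))

def arma11_psi_recursive(phi, theta, m):
    """Same weights by matching coefficients: psi_1 = phi + theta, psi_j = phi psi_{j-1} for j >= 2."""
    psi = np.zeros(m + 1)
    psi[0] = 1.0
    for j in range(1, m + 1):
        psi[j] = phi * psi[j - 1] + (theta if j == 1 else 0.0)
    return psi

# AR(1) check (theta = 0): psi_j = phi^j and kappa(h) approaches phi^h / (1 - phi^2).
phi, h = 0.6, 3
psi_ar = arma11_psi(phi, 0.0, 500)
kappa_h = psi_ar[: psi_ar.size - h] @ psi_ar[h:]
print(kappa_h, phi ** h / (1 - phi ** 2))   # both approximately 0.3375
```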

Example 2

Another example of the regression model (1) has stationary fractional noise (FN) errors (2), with infinite moving average coefficients \(\psi _{j}=\frac{\varGamma (j+d)}{\varGamma (j+1)\varGamma (d)}\), where \(\varGamma (\cdot )\) is the Gamma function and d is the long-memory parameter. The expected value is \({{\mathrm{\mathbb {E}}}}(Y_{t}(\mathbf{s}))= {{\varvec{M}}}_{t}(\mathbf{s}){\varvec{\beta }}\), while the temporal covariance function is \(\kappa (h)=\sigma ^2\frac{\varGamma (1-2d)\varGamma (h+d)}{\varGamma (1-d) \varGamma (d)\varGamma (h+1-d)}\), for \(h>0\), with \(d\in (0,1/2)\). A natural extension of the FN model is the stationary autoregressive fractionally integrated moving average ARFIMA(p, d, q) process, defined by \(\varPhi \left( B\right) \varepsilon _{t}(\mathbf{s})=\varTheta \left( B\right) \left( 1-B \right) ^{-d}\eta _{t}(\mathbf{s})\), for \(t=1,2,\ldots ,T\), where B is the backward shift operator, \(\varPhi \left( B\right) =1+\phi _{1}B+\cdots +\phi _{p}B^{p}\) is the autoregressive polynomial, and \(\varTheta \left( B \right) =1+\theta _1B+\cdots +\theta _{q}B^{q}\) is the moving average polynomial. The infinite moving average coefficients \(\psi _{j}\) satisfy the relation

$$\begin{aligned} \sum _{j=0}^{\infty }\psi _j z^{j}=\frac{\varTheta (z)}{\varPhi (z)}\sum _{j=0}^{\infty }\pi _j(d)\,z^{j}, \end{aligned}$$

where the weights \(\pi _j(d)\) are given by \(\pi _{j}(d)=\frac{\varGamma (j+d)}{\varGamma (j+1)\varGamma (d)}\), for \(0<d <1/2\); see Kokoszka and Taqqu (1995) for more details.
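The relation above says that the \(\psi _j\) are the Cauchy product of the coefficients of \(\varTheta (z)/\varPhi (z)\) with the weights \(\pi _j(d)\). A minimal Python sketch, assuming the sign convention of \(\varPhi (B)\) and \(\varTheta (B)\) as written above (so an AR(1) written as \(\varepsilon _t=\phi \varepsilon _{t-1}+\cdots \) corresponds to passing \(-\phi \)); the function names are hypothetical. The weights \(\pi _j(d)\) are computed with the recursion \(\pi _j=\pi _{j-1}(j-1+d)/j\), which avoids overflow in the Gamma functions.

```python
import numpy as np
from scipy.special import gammaln

def fn_weights(d, m):
    """pi_0(d)..pi_m(d) with pi_j(d) = Gamma(j+d) / (Gamma(j+1) Gamma(d)),
    computed by the stable recursion pi_j = pi_{j-1} (j - 1 + d) / j."""
    j = np.arange(1, m + 1)
    return np.concatenate(([1.0], np.cumprod((j - 1 + d) / j)))

def arma_weights(ar, ma, m):
    """First m+1 coefficients a_j of Theta(z) / Phi(z), with Phi(z) = 1 + ar[0] z + ...
    and Theta(z) = 1 + ma[0] z + ... (sign convention of the text)."""
    a = np.zeros(m + 1)
    for j in range(m + 1):
        # Phi(z) a(z) = Theta(z) gives a_j = theta_j - sum_{k>=1} phi_k a_{j-k}
        val = 1.0 if j == 0 else (ma[j - 1] if j <= len(ma) else 0.0)
        for k, phi_k in enumerate(ar, start=1):
            if j - k >= 0:
                val -= phi_k * a[j - k]
        a[j] = val
    return a

def arfima_weights(d, ar, ma, m):
    """psi_0..psi_m: coefficients of Theta(z)/Phi(z) * (1 - z)^(-d), i.e. a Cauchy product."""
    return np.convolve(arma_weights(ar, ma, m), fn_weights(d, m))[: m + 1]

# Check the recursion against the Gamma-function formula.
d, m = 0.3, 50
jj = np.arange(m + 1)
direct = np.exp(gammaln(jj + d) - gammaln(jj + 1) - gammaln(d))
print(np.max(np.abs(fn_weights(d, m) - direct)))   # close to machine precision
```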

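For the spatial covariance structure defined in (3), we consider the general class of Matérn covariance models (Matérn 1986) given by

$$\begin{aligned} C^{\eta }(\xi ; {\varvec{\theta }})=\sigma ^2\frac{2^{1-\nu }}{\varGamma (\nu )}(\rho \xi )^{\nu }K_{\nu }(\rho \xi ), \quad \xi >0, \qquad C^{\eta }(0; {\varvec{\theta }})=\sigma ^2, \qquad {\varvec{\theta }}=(\sigma ^2, \rho , \nu )^{\top }, \qquad (4) \end{aligned}$$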

where \(\rho >0\), \(\nu >0\), \(\sigma ^2 >0\), and \(K_{\nu }\) is the modified Bessel function of the second kind of order \(\nu \). Special cases are presented in detail in Sect. 4.
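The Matérn function can be evaluated with SciPy's modified Bessel function of the second kind, `scipy.special.kv`. The sketch below assumes the parameterization \(C^{\eta }(\xi )=\sigma ^2\frac{2^{1-\nu }}{\varGamma (\nu )}(\rho \xi )^{\nu }K_{\nu }(\rho \xi )\) with \(C^{\eta }(0)=\sigma ^2\), in which \(\rho \) acts as an inverse range; under this assumption, \(\nu =1/2\) and \(\nu =3/2\) reproduce Models 1 and 2 of Sect. 4, which the last two lines check. The function name is hypothetical.

```python
import numpy as np
from scipy.special import gamma, kv

def matern(xi, sigma2=1.0, rho=1.0, nu=0.5):
    """C(xi) = sigma2 * 2^(1-nu) / Gamma(nu) * (rho xi)^nu * K_nu(rho xi), with C(0) = sigma2."""
    xi = np.atleast_1d(np.asarray(xi, dtype=float))
    out = np.full(xi.shape, float(sigma2))          # limit at xi = 0
    pos = xi > 0
    u = rho * xi[pos]
    out[pos] = sigma2 * 2.0 ** (1 - nu) / gamma(nu) * u ** nu * kv(nu, u)
    return out

xi = np.linspace(0.0, 5.0, 51)
exp_model = matern(xi, sigma2=2.0, rho=0.7, nu=0.5)     # should equal 2 exp(-0.7 xi)
nu32_model = matern(xi, sigma2=2.0, rho=0.7, nu=1.5)    # should equal 2 (1 + 0.7 xi) exp(-0.7 xi)
```

The explicit branch at \(\xi =0\) is needed because \((\rho \xi )^{\nu }K_{\nu }(\rho \xi )\) is an indeterminate product there, even though its limit is finite.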

3 State-space representation

Before deriving the state-space (SS hereafter) representation of model (1), we recall a general version of the SS system for spatio-temporal processes, given by

where \(Y_{t}(\mathbf{s})\) is the observation at time t and location \(\mathbf{s}\in D\), \(G_{t}(\mathbf{s})\) is an observation operator, \({{\varvec{M}}}_{t}(\mathbf{s})\) is a vector of exogenous or predetermined variables, \([X_{t}(\mathbf{s}) \quad {\varvec{\beta }}_t(\mathbf{s})]^{\top }\) is the state vector, and \(W_{t}(\mathbf{s})\) is an observation noise with variance \({R_{W}}\). In addition, \(F_{t}(\cdot )\) is a state transition operator, \(I_r\) denotes the \(r\times r\) identity matrix (here and hereafter), H is a linear operator, and \(V_{t}(\mathbf{s})\) is a spatially colored, temporally white, zero-mean Gaussian process with covariance function \({{\mathrm{cov}}}(V_{t}(\mathbf{s}), V_{t}(\mathbf{s}')) =C^{V}(\xi ; {\varvec{\theta }})\), which is uncorrelated with \(W_{t}(\mathbf{s})\), i.e., \({{\mathrm{\mathbb {E}}}}(W_{t}(\mathbf{s})V_{t'}(\mathbf{s}))=0\) for all \(\mathbf{s}\in D\) and all \(t, t' \in \mathbb {Z_+}\).

The process (1) can be represented by an SS system as above by generalizing the infinite-dimensional equations given by Hannan and Deistler (1988) and Ferreira et al. (2013) to the spatio-temporal case. This is achieved by setting the \((j + 1)\)th component of the state vector equal to the j-step lag of the process \(\{\eta _{t}(\mathbf{s})\}\) that drives the model error \(\{\varepsilon _{t}(\mathbf{s})\}\), i.e., \(X_{t}^{(j+1)} (\mathbf{s})=\eta _{t-j}(\mathbf{s})\) for \(j=0,1,\ldots \). In this case, the process specified by (1) can be represented by the following infinite-dimensional state-space system

for \(t=1,\ldots ,T\), where \(G=[1, \psi _1, \psi _2, \ldots ]\), \(I_{\infty }=\text {diag}\{1,1,\ldots \}\), and \({R_{W}}=0\), i.e., we assume that the observed data are measured without additive error. From these equations, observe that \(H=[1,0, 0, \ldots ]^{\top }\) and \(V_{t}(\mathbf{s})=\eta _{t+1}(\mathbf{s})\). Estimation techniques based on (6) carry a notorious computational burden; for this reason, we truncate the expansion in (2) at lag \(m \in \mathbb {Z_{+}}\), so that an approximation for \(\{Y_{t}(\mathbf{s})\}\) can be written as

$$\begin{aligned} Y_{t}(\mathbf{s})\approx {{\varvec{M}}}_{t}(\mathbf{s}){\varvec{\beta }}+\sum _{j=0}^{m}\psi _j\,\eta _{t-j}(\mathbf{s}), \qquad (7) \end{aligned}$$


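for some positive integer m. We therefore consider the finite-dimensional SS representation of model (7), with observation and state equations

$$\begin{aligned} Y_{t}(\mathbf{s})&={{\varvec{M}}}_{t}(\mathbf{s}){\varvec{\beta }}+G X_{t}(\mathbf{s}),\\ X_{t+1}(\mathbf{s})&=F X_{t}(\mathbf{s})+H\eta _{t+1}(\mathbf{s}), \qquad (8) \end{aligned}$$

where \(X_{t}(\mathbf{s})=[\eta _{t}(\mathbf{s}), \eta _{t-1}(\mathbf{s}), \ldots , \eta _{t-m}(\mathbf{s})]^{\top }\), \(G=[1, \psi _1, \ldots , \psi _m]\), \(F=\left[ \begin{array}{cc} \mathbf{0}_{m}^{\top } & 0\\ I_m & \mathbf{0}_{m} \end{array}\right] \), and \(H=[1,0,\ldots ,0]^{\top }\). The matrices involved in the truncated Kalman equations have the following dimensions. Denoting by \(\mathbb {M}_{\mathbb {R}(p \times q)}\) the space of real-valued matrices of dimension \(p \times q\), we have \(G \in \mathbb {M}_{\mathbb {R}(1 \times (m+1))}\), \(X_{t}(\mathbf{s})\in \mathbb {M}_{\mathbb {R}((m+1)\times 1)}\), \(F \in \mathbb {M}_{\mathbb {R}((m+1)\times (m+1))}\), and \(H \in \mathbb {M}_{\mathbb {R}((m+1)\times 1)}\). Proposition 1 below establishes the asymptotic magnitude of the truncation error when approximating (1) by (7).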

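The truncated system matrices can be assembled and checked numerically. The sketch below assumes the state ordering \(X_t(\mathbf{s})=(\eta _t(\mathbf{s}),\ldots ,\eta _{t-m}(\mathbf{s}))^{\top }\) implied by \(X_{t}^{(j+1)}(\mathbf{s})=\eta _{t-j}(\mathbf{s})\), a single location, \({\varvec{\beta }}=\mathbf{0}\), and hypothetical weights \(\psi _j\); it verifies that iterating the state equation and applying G reproduces the truncated sum \(\sum _{j=0}^{m}\psi _j\eta _{t-j}(\mathbf{s})\).

```python
import numpy as np

def truncated_ss(psi):
    """G (1 x (m+1)), F ((m+1) x (m+1)) and H ((m+1) x 1) for X_t = (eta_t, ..., eta_{t-m})."""
    psi = np.asarray(psi, dtype=float)
    k = psi.size                                  # m + 1
    G = psi.reshape(1, k)
    F = np.eye(k, k, -1)                          # ones on the first subdiagonal: shifts the lags down
    H = np.zeros((k, 1))
    H[0, 0] = 1.0
    return G, F, H

rng = np.random.default_rng(0)
psi = np.array([1.0, 0.8, 0.5, 0.2])             # hypothetical weights, m = 3
G, F, H = truncated_ss(psi)
eta = rng.standard_normal(50)                    # shocks at one location
X = np.zeros((psi.size, 1))                      # pre-sample shocks set to zero
y_ss, y_direct = [], []
for t in range(eta.size):
    X = F @ X + H * eta[t]                       # X now holds (eta_t, ..., eta_{t-m})
    y_ss.append((G @ X).item())                  # observation equation with beta = 0
    lags = eta[max(0, t - psi.size + 1): t + 1][::-1]
    y_direct.append(psi[: lags.size] @ lags)     # direct truncated MA sum
```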

Proposition 1

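For given \(m\in \mathbb {Z_{+}}\), \(t\in \mathbb {Z}\) and \(\underline{\mathbf{s}}=(\mathbf{s}_1, \mathbf{s}_2, \ldots , \mathbf{s}_{n})^{\top }\), let \({\varvec{\eta }}_{t}(\underline{\mathbf{s}}) = (\eta _{t}(\mathbf{s}_1), \ldots , \eta _{t}(\mathbf{s}_n))^{\top }\) and let \(r_{m}(\underline{\mathbf{s}}) \in \mathbb {M}_{\mathbb {R}(n \times n)}\) be the error variance matrix, defined as \(r_{m}(\underline{\mathbf{s}})={{\mathrm{Var}}}(\sum _{j=0}^\infty \psi _j{\varvec{\eta }}_{t-j}(\underline{\mathbf{s}})- \sum _{j=0}^{m} \psi _j {\varvec{\eta }}_{t-j}(\underline{\mathbf{s}}))\). Then, for large m and n, with \(a>0\) and \(0<d<1/2\), we have the following:

$$\begin{aligned} ||r_{m}(\underline{\mathbf{s}})||_{\infty }&=\mathcal {O}\big (n\,e^{-2am}\big ) \quad \text {for a short-memory process with } \psi _j\sim \exp (-aj),\\ ||r_{m}(\underline{\mathbf{s}})||_{\infty }&=\mathcal {O}\big (n\,m^{2d-1}\big ) \quad \text {for a long-memory process with } \psi _j\sim j^{d-1}, \end{aligned}$$

where \(||A||_{\infty }= \max _{1\le i \le n}\sum _{j=1}^{n} \mid a_{ij} \mid \) is the infinity norm of an \(n\times n\) matrix \(A=(a_{ij})\).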

Proof

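For an infinite moving average process \(\{Y_{t}(\underline{\mathbf{s}})\}\), the temporal independence of \(\{{\varvec{\eta }}_{t}(\underline{\mathbf{s}})\}\) implies that the error variance matrix for the truncated series is

$$\begin{aligned} r_{m}(\underline{\mathbf{s}})=\Big (\sum _{j=m+1}^{\infty }\psi _j^2\Big )\,\mathbf{c}_n = b_m\,\mathbf{c}_n, \qquad \mathbf{c}_n=\big [C^{\eta }(||\mathbf{s}_i-\mathbf{s}_j||; {\varvec{\theta }})\big ]_{i,j=1}^{n}, \end{aligned}$$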

where the coefficients satisfy \(\psi _{m}\sim \frac{\varTheta (1) }{\varPhi (1)} \frac{m^{ d-1}}{\varGamma (d)}\) as \(m \rightarrow \infty \), with d the long-memory parameter; see Corollary 3.1 of Kokoszka and Taqqu (1995). In particular, if \(\{Y_{t}(\mathbf{s})\}\) is an FN(d) process with \(\psi _{m}=\frac{\varGamma (m+d)}{\varGamma (m+1)\varGamma (d)}\), Stirling's approximation gives \(\psi _{m} \sim \frac{m^{d-1}}{\varGamma (d)}\) as \(m\rightarrow \infty \). Furthermore, using Lemma 3.3 of Palma (2007), we get

$$\begin{aligned} b_m=\sum _{j=m+1}^{\infty }\psi _j^2\sim \Big (\frac{\varTheta (1)}{\varPhi (1)}\Big )^{2}\frac{m^{2d-1}}{(1-2d)\varGamma (d)^2}, \quad m\rightarrow \infty , \qquad (9) \end{aligned}$$

so that (9) implies \(b_m= \mathcal {O}(m^{2d-1})\) as \(m \rightarrow \infty \). On the other hand, since \(|C^{\eta }(\xi ; {\varvec{\theta }})|\le C^{\eta }(0; {\varvec{\theta }})\) for any covariance function, \(||\mathbf{{c}}_n||_{\infty } = \max _{1\le i \le n}\sum _{j=1}^{n} \mid C^{\eta }(||\mathbf{s}_i-\mathbf{s}_j||)\mid \le n\sigma ^2 \), where \(\sigma ^2=C^{\eta }(0; {\varvec{\theta }})\). Thus, \(||\mathbf{{c}}_n||_{\infty } = \mathcal {O}(n)\) for large n, which proves the result. The proof for a short-memory process is similar, using \(\psi _{m}\sim \exp {(-am)}\) as \( m \rightarrow \infty \), so that \(b_m=\mathcal {O}(e^{-2am})\).
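The two rates in Proposition 1 can be checked numerically. For FN(d) errors, \(\varTheta (1)/\varPhi (1)=1\) and the tail sum \(b_m=\sum _{j>m}\psi _j^2\) should behave like \(m^{2d-1}/((1-2d)\varGamma (d)^2)\); for AR(1) weights \(\psi _j=\phi ^j\), the tail is exactly \(\phi ^{2(m+1)}/(1-\phi ^2)\), an exponential rate. The Python sketch below truncates the infinite FN sum at an arbitrarily chosen large number of terms, so agreement is only approximate; the function names are hypothetical.

```python
import numpy as np
from scipy.special import gamma

def fn_tail(d, m, n_terms=2_000_000):
    """Approximate b_m = sum_{j>m} psi_j^2 for FN(d), summing up to j = n_terms."""
    j = np.arange(1, n_terms + 1)
    psi = np.concatenate(([1.0], np.cumprod((j - 1 + d) / j)))
    return np.sum(psi[m + 1:] ** 2)

def ar1_tail(phi, m):
    """Exact b_m for AR(1) weights psi_j = phi^j: phi^(2(m+1)) / (1 - phi^2)."""
    return phi ** (2 * (m + 1)) / (1 - phi ** 2)

d = 0.2
limit = 1.0 / ((1 - 2 * d) * gamma(d) ** 2)   # predicted limit of b_m / m^(2d - 1)
ratios = {m: fn_tail(d, m) / m ** (2 * d - 1) for m in (100, 200, 400)}
print(ratios, limit)   # the ratios should be close to the limit
```

The slow, polynomial decay of \(b_m\) in the long-memory case is what makes a larger truncation level m necessary there than in the short-memory case.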

Some comments are in order. First, space–time asymptotics is still an open problem in the geostatistical literature. Most contributions follow one of two approaches. On the one hand, one might fix the number of spatial sites and let the number of temporal observations tend to infinity, as done, for instance, by Li et al. (2008). Under this framework, evaluating the performance of our estimators amounts to applying, mutatis mutandis, the results in Chan and Palma (1998). On the other hand, one might consider increasing-domain asymptotics (Guyon 1995), in which case space–time asymptotics would be covered by the results in Guyon (1995). A very tempting approach would be to consider infill asymptotics in space and increasing domain in time. To the best of the authors' knowledge, this approach is not available in the literature and represents a major challenge.

3.1 Derivation of the Kalman filter algorithm

We now develop the Kalman filter algorithm for the spatio-temporal process defined in Eq. (7), with the associated SS representation given in (8). The Kalman filter is a powerful tool for making inferences about the state vector; it yields the conditional mean and covariance matrix of the state vector \(\left[ X_{t}(\mathbf{s}), {\varvec{\beta }}(\mathbf{s})\right] ^{\top }\). For simplicity, we restrict our attention to the parameter estimates of the error process \(\{\varepsilon _{t}(\cdot )\}\) in the regression model (1), i.e.,

$$\begin{aligned} Y_{t}(\mathbf{s})=\varepsilon _{t}(\mathbf{s}), \quad t=1,\ldots ,T; \qquad (10) \end{aligned}$$

in this case, the state vector reduces to \(X_{t}(\mathbf{s})\). The Kalman filter recursions are well known, but we present them here to set up notation and for a self-contained exposition. First, define the \(n\times 1\) vector \(\mathbf{{Y}}_t=[Y_t(\mathbf{s}_1), \ldots , Y_{t}(\mathbf{s}_n)]^{\top }\) containing the data values at the n spatial locations \(\{\mathbf{s}_i\}_{i=1}^{n}\) at time t, and let \(\mathbf{{X}}_{t}=[X_t(\mathbf{s}_1), \ldots , X_{t}(\mathbf{s}_n)]^{\top }\) be the unobservable spatio-temporal state process at the n locations, where each component of this state vector is an \((m+1)\)-dimensional vector. Let \(\widehat{X}_{t}(\mathbf{s})= {{\mathrm{\mathbb {E}}}}(X_{t}(\mathbf{s})| \mathbf{{Y}}_1,\ldots , \mathbf{{Y}}_{t-1} )\) be the best linear unbiased predictor (BLUP) of the unobserved state \(X_{t}(\mathbf{s})\) given the observations up to time \(t-1\), and let \(\varOmega _{t}(\mathbf{s},\mathbf{s}') = {{\mathrm{cov}}}(X_{t}(\mathbf{s})-\widehat{X}_{t}(\mathbf{s}), X_{t}(\mathbf{s}')-\widehat{X}_{t}(\mathbf{s}') )\) be the state prediction error variance–covariance matrix. Finally, the initial state vector has mean \(\widehat{X}_1(\mathbf{s})={{\mathrm{\mathbb {E}}}}([\eta _1(\mathbf{s}), \eta _0(\mathbf{s}), \ldots , \eta _{1-m}(\mathbf{s})]^{\top })=\mathbf{{0}}_{((m+1) \times 1)}\) and covariance matrix

$$\begin{aligned} \varOmega _{1}(\mathbf{s},\mathbf{s}')=C^{\eta }(||\mathbf{s}-\mathbf{s}'||; {\varvec{\theta }})\, I_{m+1}, \end{aligned}$$

which is an \((m+1) \times ( m+1)\) matrix. The Kalman filter estimates the state vector \({X}_{t+1}(\mathbf{s})\), \(\mathbf{s}\in D\), and its prediction error on the basis of the information available at time t. These estimates are given by

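where \(\varTheta ^{\top }_{t}(\mathbf{s})\in \mathbb {M}_{\mathbb {R}((m+1) \times n)}\) and \(C^{HV}(\mathbf{s},\mathbf{s}') \in \mathbb {M}_{\mathbb {R}((m+1)\times ( m+1))}\). Let \({\varvec{\theta }}\) be the parameter vector specifying model (10). Then, up to a constant, the log-likelihood function can be obtained from (11) as

$$\begin{aligned} \mathcal {L}({\varvec{\theta }})=-\frac{1}{2}\sum _{t=1}^{T}\Big [\log \det {\varvec{\Delta }}_{t}({\varvec{\theta }})+{\varvec{\epsilon }}_{t}({\varvec{\theta }})^{\top }{\varvec{\Delta }}_{t}({\varvec{\theta }})^{-1}{\varvec{\epsilon }}_{t}({\varvec{\theta }})\Big ], \end{aligned}$$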

where \({\varvec{\epsilon }}_{t}({\varvec{\theta }}) = \mathbf{{Y}}_{t}- {{\widehat{\mathbf{Y}}}}_{t}\) is the innovation vector and \({\varvec{\Delta }}_{t}({\varvec{\theta }})\) is the innovation covariance matrix at time t, both obtained using the parameter value \({\varvec{\theta }}\). Hence, the approximate maximum likelihood estimate (MLE) provided by the Kalman equations (11) is \(\widehat{{\varvec{\theta }}} = \arg \max _{{\varvec{\theta }} \in {\varvec{\varTheta }}} \mathcal {L}({\varvec{\theta }})\), where \({\varvec{\varTheta }}\) is the parameter space. Note that the Kalman equations (11) can be applied directly to the general state-space representation (5), yielding in that case an exact MLE. To obtain predictions of unobserved values at a location \(\mathbf{s}_0\), we define the best linear predictor

$$\begin{aligned} \widehat{Y}_{T+k}(\mathbf{s}_0)={{\mathrm{\mathbb {E}}}}\big (Y_{T+k}(\mathbf{s}_0)\,|\,\mathbf{{Y}}_1,\ldots ,\mathbf{{Y}}_T\big ), \end{aligned}$$

which is the k-step-ahead out-of-sample predictor based on the finite past, for \(k=1, \ldots , K\). These forecasts are obtained from the Kalman recursive equations (11) as follows

Their mean squared prediction errors are computed using the following recursive relations

Furthermore, the prediction error variance \({\varvec{\Delta }}_{T+k}(\mathbf{s}_0,\mathbf{s}_0)\) satisfies
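The filtering, likelihood and forecasting steps of this subsection can be sketched in Python for the truncated model with \({\varvec{\beta }}=\mathbf{0}\). The sketch is a standard two-step (update, then predict) Kalman filter rather than the combined form with \(\varTheta _t\) used in the paper; it stacks the states of all n sites into one vector of length \(n(m+1)\), includes the constant \(-\frac{n}{2}\log 2\pi \) so that it can be compared with a direct Gaussian likelihood, and forecasts by propagating the state without updates. The function names, weights and toy covariance are hypothetical.

```python
import numpy as np
from scipy.stats import multivariate_normal

def ss_matrices(psi, C):
    """Stacked matrices for n sites; state = (eta_t, eta_{t-1}, ..., eta_{t-m}), blocks of size n."""
    n, k = C.shape[0], len(psi)
    Z = np.kron(np.asarray(psi, dtype=float).reshape(1, k), np.eye(n))  # n x nk observation matrix
    Fn = np.kron(np.eye(k, k, -1), np.eye(n))                            # block shift (transition)
    Q = np.zeros((n * k, n * k))
    Q[:n, :n] = C                                                        # only eta_{t+1} is new
    return Z, Fn, Q

def kalman_filter(Y, psi, C):
    """Log-likelihood of Y (T x n) and the state prediction (mean, covariance) for time T + 1."""
    T, n = Y.shape
    Z, Fn, Q = ss_matrices(psi, C)
    x = np.zeros(Fn.shape[0])                 # E(X_1) = 0
    P = np.kron(np.eye(len(psi)), C)          # Var(X_1) = I_{m+1} (x) C
    loglik = 0.0
    for t in range(T):
        innov = Y[t] - Z @ x                  # innovation vector
        Delta = Z @ P @ Z.T                   # innovation covariance (no measurement error)
        L = np.linalg.cholesky(Delta)
        alpha = np.linalg.solve(L, innov)
        loglik -= 0.5 * (n * np.log(2 * np.pi) + 2 * np.log(np.diag(L)).sum() + alpha @ alpha)
        gain = P @ Z.T @ np.linalg.inv(Delta)
        x, P = x + gain @ innov, P - gain @ Z @ P       # filtered state at time t
        x, P = Fn @ x, Fn @ P @ Fn.T + Q                # predicted state for t + 1
        P = 0.5 * (P + P.T)                             # keep P numerically symmetric
    return loglik, x, P

def forecast(x, P, psi, C, K):
    """k-step-ahead means and covariances, k = 1..K, propagating the state without updates."""
    Z, Fn, Q = ss_matrices(psi, C)
    means, covs = [], []
    for _ in range(K):
        means.append(Z @ x)
        covs.append(Z @ P @ Z.T)
        x, P = Fn @ x, Fn @ P @ Fn.T + Q
    return np.array(means), np.array(covs)

# Toy check against the exact Gaussian likelihood of the truncated model.
rng = np.random.default_rng(1)
coords = rng.uniform(0, 5, size=(3, 2))
C = np.exp(-0.5 * np.linalg.norm(coords[:, None] - coords[None], axis=-1))  # exponential, sigma2 = 1
psi = np.array([1.0, 0.5, 0.2])                # hypothetical weights, m = 2
T = 20
kappa = np.array([psi[: psi.size - h] @ psi[h:] if h < psi.size else 0.0 for h in range(T)])
Sigma = np.kron(kappa[np.abs(np.subtract.outer(np.arange(T), np.arange(T)))], C)
Y = rng.multivariate_normal(np.zeros(3 * T), Sigma).reshape(T, 3)
ll_kf, x_next, P_next = kalman_filter(Y, psi, C)
ll_direct = multivariate_normal(np.zeros(3 * T), Sigma).logpdf(Y.reshape(-1))
means, covs = forecast(x_next, P_next, psi, C, K=5)
```

Because the truncated model is an MA(m) in time, forecasts more than m steps ahead revert to the zero mean and the prediction covariance settles at \(\big (\sum _{j=0}^{m}\psi _j^2\big )\) times the spatial covariance matrix.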

3.2 Missing observations

The analysis of missing observations in time series has been treated by several authors; see Harvey (1989) and Durbin and Koopman (2012), among others. The SS method and its associated Kalman filter algorithm provide a simple methodology for handling missing values.

To describe this procedure, assume that the observations \(Y_{t}(\mathbf{s})\) are missing for \(t=n+1, \ldots , T-n\). For these time points, the vector \({\varvec{\epsilon }}_{t}(\mathbf{s})\) and the matrix \(\varTheta _{t}(\mathbf{s})\) of the Kalman filter are set to zero, and the Kalman updates become

for \(t=n+1, \ldots , T-n\). This imputation procedure also provides an alternative way of computing the forecasts of \(Y_{T+k}(\mathbf{s})\) together with their forecast errors: it suffices to treat \(Y_{t}(\mathbf{s})\) for \(t=T+1, \ldots , T+k\) as missing observations and to continue the Kalman filter beyond \(t=T\) with \({\varvec{\epsilon }}_{t}(\mathbf{s})=0\) and \(\varTheta _{t}(\mathbf{s})=0\) for \(t>T\). This way of forecasting is an elegant feature of SS methods for time series analysis.
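Skipping the update step at missing time points amounts to a small change in a standard Kalman filter. The Python sketch below assumes that a whole time step is missing at once (all n sites, flagged by NaN), uses the truncated model with \({\varvec{\beta }}=\mathbf{0}\), and checks the result against the Gaussian likelihood of the observed rows only; the function name, weights and covariance are hypothetical.

```python
import numpy as np
from scipy.stats import multivariate_normal

def loglik_with_missing(Y, psi, C):
    """Kalman log-likelihood of Y (T x n); rows containing NaN are treated as missing,
    i.e. the innovation and the gain are set to zero and only the prediction step is run."""
    T, n = Y.shape
    k = len(psi)
    Z = np.kron(np.asarray(psi, dtype=float).reshape(1, k), np.eye(n))
    Fn = np.kron(np.eye(k, k, -1), np.eye(n))
    Q = np.zeros((n * k, n * k))
    Q[:n, :n] = C
    x, P = np.zeros(n * k), np.kron(np.eye(k), C)
    loglik = 0.0
    for t in range(T):
        if not np.isnan(Y[t]).any():                       # observed: usual update step
            innov = Y[t] - Z @ x
            Delta = Z @ P @ Z.T
            _, logdet = np.linalg.slogdet(Delta)
            loglik -= 0.5 * (n * np.log(2 * np.pi) + logdet + innov @ np.linalg.solve(Delta, innov))
            gain = P @ Z.T @ np.linalg.inv(Delta)
            x, P = x + gain @ innov, P - gain @ Z @ P
        x, P = Fn @ x, Fn @ P @ Fn.T + Q                    # prediction step is always run
        P = 0.5 * (P + P.T)
    return loglik

rng = np.random.default_rng(3)
n, T = 2, 15
C = np.array([[1.0, 0.3], [0.3, 1.0]])
psi = np.array([1.0, 0.7, 0.4, 0.1])                       # hypothetical weights, m = 3
kappa = np.array([psi[: psi.size - h] @ psi[h:] if h < psi.size else 0.0 for h in range(T)])
Sigma = np.kron(kappa[np.abs(np.subtract.outer(np.arange(T), np.arange(T)))], C)
Y = rng.multivariate_normal(np.zeros(n * T), Sigma).reshape(T, n)
Y[6:9] = np.nan                                            # three time steps missing at all sites
obs_rows = np.flatnonzero(~np.isnan(Y).any(axis=1))
idx = (obs_rows[:, None] * n + np.arange(n)).ravel()      # positions in the time-major stacking
ll_kf = loglik_with_missing(Y, psi, C)
ll_direct = multivariate_normal(np.zeros(idx.size), Sigma[np.ix_(idx, idx)]).logpdf(Y[obs_rows].ravel())
```

Appending rows of NaN after time T and reading off the predicted mean and covariance at those steps gives the forecasts described above.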

4 Simulation studies

For the simulation studies, we used the free software R (R Core Team 2017) and C subroutines connected to R (Peng and de Leeuw 2002) through the .C interface. The numerical optimization of the Gaussian log-likelihood function to obtain the QML estimates was carried out using the nlminb command of R. This method makes use of the subroutine "BFGS" corresponding to a quasi-Newton method (Broyden 1969; Fletcher 1970; Goldfarb 1970). We used nlminb because this optimizer reached convergence even when the sample size was small, and because it is less sensitive to initial values than other optimizers. We use Monte Carlo experiments to analyze the finite-sample behavior of the Kalman filter estimator for both short- and long-memory spatio-temporal processes, as described in Sect. 2. In particular, we consider two models: an ARMA(1, 1), a short-memory case, and an ARFIMA(0, d, 1), a long-memory case.

4.1 Short-memory case

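Consider an ARMA(1, 1) model for the errors defined by (1), with

$$\begin{aligned} \varepsilon _{t}(\mathbf{s})= \phi \varepsilon _{t-1}(\mathbf{s}) + \theta \eta _{t-1}(\mathbf{s}) + \eta _{t}(\mathbf{s}), \qquad (15) \end{aligned}$$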

where the \(\{\eta _{t}(\cdot )\}\) are independent over time and follow a stationary, zero-mean Gaussian spatial random process with covariance function given by Eq. (3). We consider the general class of Matérn covariance models defined by Eq. (4); in particular, we use two covariance functions, giving rise to two models:

- Model 1: \(\nu =\frac{1}{2}\), corresponding to the exponential model

$$\begin{aligned} C^{\eta }(\xi ; {\varvec{\theta }})=\sigma ^2 \exp \{-\rho \xi \}, \quad \xi \ge 0, \quad {\varvec{\theta }}=(\sigma ^2, \rho , 1/2)^{\top }; \end{aligned}$$

- Model 2: \(\nu =\frac{3}{2}\), which leads to

$$\begin{aligned} C^{\eta }(\xi ; {\varvec{\theta }})=\sigma ^2\left( 1+ \rho \xi \right) \exp \{-\rho \xi \} , \quad \xi \ge 0, \quad {\varvec{\theta }}=(\sigma ^2, \rho , 3/2)^{\top }. \end{aligned}$$

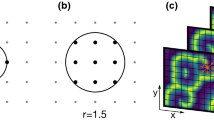

The choice of \(\nu \) in \(C^{\eta }\) determines the mean square (m.s.) differentiability of the associated random field. For Model 1, the associated Gaussian random field is a.s. continuous but not m.s. differentiable; for Model 2, it is m.s. differentiable. We assume that we observe a spatio-temporal process \(\{\varepsilon _{t}({\mathbf{s}}_{i}): i=1, \ldots ,N; t=1, \ldots , T\}\) on a regular rectangular grid of \(n\times n=N\) spatial locations in \([1,n]^2\), at equidistant time points. For the data generation scheme, the process is generated recursively from (15) with initial values \(\eta _{1}(\mathbf{s}) \sim N(0, C^{\eta }(0))\) and \(\varepsilon _{1}(\mathbf{s})= C^{\eta }(0)/(1-\phi ^2) + \eta _{1}(\mathbf{s})\). Each realization has length \(T=100\) or \(T=250\). In addition, the parameters of the covariance function are taken as constant, with different values for \(\sigma ^2\) and \(\rho \). Finally, we simulate each process 100 times, and the Kalman filter estimates are evaluated by the relative bias (RelBias) and the mean square error (MSE), defined as \(\text{ RelBias }(\theta )=\frac{1}{100}\sum _{i=1}^{100}\left( \widehat{\theta }_{i}/\theta - 1\right) \) and \(\text{ MSE }(\theta )=\frac{1}{100}\sum _{i=1}^{100}\left( \widehat{\theta }_{i}-\theta \right) ^{2}\), where \(\theta \) is a generic component of \({\varvec{\theta }}\) and \(\widehat{\theta }_{i}\) is its Kalman filter estimate in the ith realization. A preliminary issue in the SS approach is the choice of the truncation level, which influences the parameter estimates. Figure 1 plots the estimated MSE for an AR(1) model for different values of \(\phi \) and \(\rho \) as a function of the truncation level m of the MA\((\infty )\) decomposition. Figure 2 displays the MSE for different values of d and \(\rho \) as a function of m, based on an FN(d) model.
In both cases, we use Model 1 as the spatial covariance function, with \(\sigma ^2=1\), \(T=250\), and \(N=100\) spatial locations. In these graphs, darker regions represent the smallest empirical MSE, while lighter regions indicate larger MSE values. Note that, for the long-memory case, the MSE improves once the truncation parameter reaches \(m \ge 10\). Similar results are obtained in Chan and Palma (1998), with \(m> 6\), when considering only the temporal domain. The short-memory case requires a lower truncation level (\(m=5\)) in the state-space representation to guarantee good performance of the truncated MLE. In light of this evidence and due to space constraints, only a subset of the results (\(m=5,10\)) is presented; other results and code are available from the authors upon request.
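The short-memory data-generating scheme and the two performance measures can be sketched as follows: a regular grid in \([1,n]^2\), the exponential covariance of Model 1, and the ARMA(1, 1) recursion \(\varepsilon _t=\phi \varepsilon _{t-1}+\theta \eta _{t-1}+\eta _t\). Unlike the initialization described above, this Python sketch discards a burn-in period so that the simulated field is approximately stationary; the burn-in, the seed and the function names are our assumptions. RelBias and MSE follow the definitions above.

```python
import numpy as np

def simulate_arma11_field(T, n_side, phi, theta, sigma2, rho, burn_in=200, seed=None):
    """eps_t(s) = phi eps_{t-1}(s) + theta eta_{t-1}(s) + eta_t(s) on an n_side x n_side grid
    in [1, n_side]^2, with eta_t independent over time and covariance sigma2 exp(-rho dist)."""
    rng = np.random.default_rng(seed)
    g = np.arange(1, n_side + 1, dtype=float)
    coords = np.array([(a, b) for a in g for b in g])               # N = n_side^2 locations
    dist = np.linalg.norm(coords[:, None] - coords[None], axis=-1)
    L = np.linalg.cholesky(sigma2 * np.exp(-rho * dist))
    N = coords.shape[0]
    eps, eta_prev = np.zeros(N), np.zeros(N)
    out = np.empty((T, N))
    for t in range(burn_in + T):
        eta = L @ rng.standard_normal(N)                             # spatially correlated shock
        eps = phi * eps + theta * eta_prev + eta
        eta_prev = eta
        if t >= burn_in:                                             # keep post-burn-in draws only
            out[t - burn_in] = eps
    return coords, out

def rel_bias(estimates, true_value):
    """RelBias = mean(theta_hat_i / theta - 1) over replications."""
    return np.mean(np.asarray(estimates, dtype=float) / true_value - 1.0)

def mse(estimates, true_value):
    """MSE = mean((theta_hat_i - theta)^2) over replications."""
    return np.mean((np.asarray(estimates, dtype=float) - true_value) ** 2)

coords, eps = simulate_arma11_field(T=250, n_side=10, phi=0.45, theta=0.3,
                                    sigma2=0.5, rho=0.35, seed=0)
# Stationary marginal variance: sigma2 * (1 + (phi + theta)^2 / (1 - phi^2))
var_theory = 0.5 * (1 + 0.75 ** 2 / (1 - 0.45 ** 2))
```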

Table 1 shows the parameter estimates for two truncation levels, \(m = 5, 10\), and \(N=100\) spatial locations. The combinations of parameter values, listed in Table 1, reproduce situations widely encountered in practical analyses. Note that the estimates are very close to their theoretical counterparts. Furthermore, the performance measures, namely the standard deviation (SD), bias, and \(\sqrt{{\hbox {MSE}}}\), are very similar across the two truncation levels. Indeed, with truncation parameter \(m=5\), the truncated Kalman filter already works very well for both sample sizes.

4.2 Long-memory case

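Consider now the stationary ARFIMA(0, d, 1) model defined by

$$\begin{aligned} \varepsilon _{t}(\mathbf{s})=(1+\theta B)(1-B)^{-d}\eta _{t}(\mathbf{s})=\sum _{j=0}^{\infty }\psi _j\,\eta _{t-j}(\mathbf{s}), \qquad \psi _0=1, \quad \psi _{j}=\frac{\varGamma (j+d)}{\varGamma (j+1)\varGamma (d)}+\theta \frac{\varGamma (j-1+d)}{\varGamma (j)\varGamma (d)}, \end{aligned}$$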

for \(j\ge 1\), where \(\varGamma (\cdot )\) is the Gamma function, d is the long-memory parameter, with \(0<d<1/2\), and \(\theta \) is a moving average coefficient satisfying \(|\theta | < 1\). The innovations \(\{\eta _{t}(\mathbf{s})\}\) were generated with the same spatial structure as in the short-memory case. Samples from this ARFIMA process are generated using the innovations algorithm; see Brockwell and Davis (1991), p. 172. In this setting, the temporal covariance of the process \(\{\varepsilon _{t}(\mathbf{s})\}\) is given by

for \(h>0\). We consider several combinations of parameter values commonly encountered in the analysis of data sets with long-range dependence. Table 2 reports the results of the Monte Carlo simulations for these parameter values and two truncation levels, \(m=5,10\). The simulations are based on sample sizes \(T=100, 250\) and 100 replications. As in the short-memory case, the observed means of the estimates are close to their true values. In contrast to the short-memory case, however, a long-memory process requires a higher level of truncation in the state-space representation to guarantee efficient performance of the truncated MLE.
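A minimal single-location sketch of the simulation step described above follows. It assumes unit-variance Gaussian innovations and ignores the spatial correlation of \(\eta _t(\mathbf{s})\). The ARFIMA(0, d, 1) autocovariance is written as that of \(W_t+\theta W_{t-1}\), with \(W_t\) fractional noise FN(d), i.e. \(\gamma (h)=(1+\theta ^2)\gamma _W(h)+\theta \{\gamma _W(h-1)+\gamma _W(h+1)\}\) with \(\gamma _W(h)=\sigma ^2\varGamma (1-2d)\varGamma (h+d)/\{\varGamma (d)\varGamma (1-d)\varGamma (h+1-d)\}\). A path is then generated with the innovations recursions of Brockwell and Davis (1991, Sect. 5.2). The function names are hypothetical.

```python
import math
import random

def fn_acvf(h, d, sigma2=1.0):
    """Autocovariance of fractional noise FN(d), 0 < d < 1/2, at integer lag h."""
    h = abs(h)
    # Gamma(h + d) / Gamma(h + 1 - d) via log-gamma to avoid overflow at large lags
    ratio = math.exp(math.lgamma(h + d) - math.lgamma(h + 1 - d))
    return sigma2 * math.gamma(1 - 2 * d) * ratio / (math.gamma(d) * math.gamma(1 - d))

def arfima0d1_acvf(h, d, theta, sigma2=1.0):
    """Autocovariance of X_t = W_t + theta W_{t-1}, W_t ~ FN(d)."""
    return ((1 + theta ** 2) * fn_acvf(h, d, sigma2)
            + theta * (fn_acvf(h - 1, d, sigma2) + fn_acvf(h + 1, d, sigma2)))

def innovations(gamma, n):
    """Innovations algorithm for a stationary series with autocovariances gamma[0..n-1].

    Returns coef[k][j] = theta_{k,j} (1 <= j <= k) and the one-step prediction
    error variances v[0..n-1].
    """
    v = [gamma[0]]
    coef = [[]]
    for k in range(1, n):
        row = [0.0] * (k + 1)
        for i in range(k):  # fills theta_{k,k-i} for i = 0, ..., k-1
            s = sum(coef[i][i - j] * row[k - j] * v[j] for j in range(i))
            row[k - i] = (gamma[k - i] - s) / v[i]
        coef.append(row)
        v.append(gamma[0] - sum(row[k - j] ** 2 * v[j] for j in range(k)))
    return coef, v

def simulate_arfima0d1(n, d, theta, sigma2=1.0, seed=None):
    """Exact Gaussian ARFIMA(0, d, 1) path of length n at a single location."""
    rng = random.Random(seed)
    gamma = [arfima0d1_acvf(h, d, theta, sigma2) for h in range(n)]
    coef, v = innovations(gamma, n)
    x, u = [], []  # u[t] = x[t] - xhat[t] are the uncorrelated innovations
    for k in range(n):
        xhat = sum(coef[k][j] * u[k - j] for j in range(1, k + 1))
        u.append(math.sqrt(v[k]) * rng.gauss(0.0, 1.0))
        x.append(xhat + u[-1])
    return x

path = simulate_arfima0d1(100, d=0.15, theta=0.45, seed=1)
```

The recursions cost \(O(n^3)\) operations here, which is adequate for series of length \(T=100\) or 250.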

Another important aspect to assess is the out-of-sample predictive ability of our methodology. To this end, we simulated datasets under ARMA(1, 1) and ARFIMA(0, d, 1) models, in both cases using Model 1 as the spatial covariance structure. To each simulated dataset we fitted three models, AR(1), ARMA(1, 1) and ARFIMA(0, d, 1), and compared them by cross-validation. Cross-validation is implemented by deleting the last K time points at each location \(\mathbf{s}\), fitting the model to the remaining data \(\{Y_{t}(\mathbf{s}_i): t=1,\ldots , T-K ; i=1,\ldots ,n \}\), and then predicting \(\{\widehat{Y}_{t_k}(\mathbf{s}_i)\}_{k=1}^{K}\). We used the cross-validation statistics suggested by Carroll and Cressie (1997), given by

where \(\widehat{Y}_{t_k}(\mathbf{s}_i)\) is the prediction of the process at location \(\mathbf{s}_i\) and time \(t_{k} ~ (k=1,\ldots , K)\), and \({\varvec{\Delta }}_{t_k}(\mathbf{s}_i)\) is the corresponding prediction variance. These values are obtained from (13) and (14), respectively. CR\(_1\) assesses the unbiasedness of the predictor and should be approximately equal to zero. CR\(_2\) checks the accuracy of the standard deviation of the prediction error and should be approximately equal to one. CR\(_3\) measures the goodness of prediction; small values indicate that the predicted values are close to the true values. For data generation, the ARMA(1, 1) model uses parameters \((\phi , \theta , \sigma ^2, \rho )=(0.45, 0.3,0.5,0.35)\), whereas the ARFIMA(0, d, 1) model uses \((d, \theta , \sigma ^2, \rho )=(0.15, 0.45, 1.5, 0.55)\), with \(T=250\), \(N=25\) locations and truncation level \(m=10\). Table 3 displays the cross-validation statistics averaged over locations, based on predictions for 5 days, i.e., \(K=5\). All these measures favor the model that generated the data.
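For concreteness, the sketch below computes the three statistics at one location and then averages them over locations, as in Table 3. It assumes the common form \(\hbox {CR}_1=K^{-1}\sum _k e_k/\varDelta _k^{1/2}\), \(\hbox {CR}_2=\{K^{-1}\sum _k e_k^2/\varDelta _k\}^{1/2}\) and \(\hbox {CR}_3=\{K^{-1}\sum _k e_k^2\}^{1/2}\), with \(e_k=Y_{t_k}(\mathbf{s}_i)-\widehat{Y}_{t_k}(\mathbf{s}_i)\) and \(\varDelta _k\) the prediction variance; the equation in the paper may differ in details such as the square root in CR\(_2\). The function names are hypothetical.

```python
import math

def cr_statistics(y, yhat, pvar):
    """CR1, CR2, CR3 at one location from K held-out values.

    y: observed values Y_{t_k}(s_i); yhat: predictions; pvar: prediction variances.
    """
    k = len(y)
    std_err = [(obs - pred) / math.sqrt(var) for obs, pred, var in zip(y, yhat, pvar)]
    cr1 = sum(std_err) / k                                   # target: about 0
    cr2 = math.sqrt(sum(e * e for e in std_err) / k)         # target: about 1
    cr3 = math.sqrt(sum((obs - pred) ** 2 for obs, pred in zip(y, yhat)) / k)  # smaller is better
    return cr1, cr2, cr3

def average_over_locations(per_location):
    """Average each statistic over locations, as reported in Table 3."""
    n = len(per_location)
    return tuple(sum(stats[i] for stats in per_location) / n for i in range(3))
```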

4.3 Estimation with a small number of observations and missing data

This section assesses the performance of the Kalman filter estimator when the time series are short and contain missing observations. For this case, we use an AR(1) model for the temporal structure and, for the spatial dependence, a third model defined as

-

Model 3: \(\nu =\infty \), corresponding to the Gaussian model

$$\begin{aligned} C^{\eta }(\xi ; {\varvec{\theta }})=\sigma ^2 \exp \{-\rho ^2 \xi ^2\}, \quad \xi \ge 0, \quad {\varvec{\theta }}=(\sigma ^2, \rho , \infty )^{\top }. \end{aligned}$$

We consider a regular grid on the square \([0,10]^2\) and truncation level \(m= 5\). In addition, we introduce \(10\%\) and \(20\%\) of missing values, randomly selected in each simulation at 10 locations with Cartesian coordinates (i, 8), \(i=1,\ldots ,10\). For the AR(1) case, we consider \(\phi =0.65\), variance \(\sigma ^2= 1\) and spatial correlation parameter \(\rho =0.5\). Finally, we work with sample sizes \(T=15, 50\) and 100 replications.
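To make Model 3 concrete for this design, the sketch below builds the \(10\times 10\) covariance matrix of the locations (i, 8), \(i=1,\ldots ,10\), with \(\sigma ^2=1\) and \(\rho =0.5\), and draws one vector of spatially correlated innovations through a Cholesky factor. The function names and the seed are hypothetical; with \(\rho =0.5\), neighboring locations one unit apart have correlation \(\exp (-0.25)\approx 0.78\).

```python
import math
import random

def gaussian_cov(xi, sigma2=1.0, rho=0.5):
    """Model 3 (Gaussian covariance): C(xi) = sigma^2 * exp(-rho^2 * xi^2)."""
    return sigma2 * math.exp(-(rho ** 2) * xi ** 2)

def cholesky(a):
    """Lower-triangular L with L L^T = a, for a symmetric positive definite matrix."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][i] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low

# The 10 locations at Cartesian coordinates (i, 8), i = 1, ..., 10
sites = [(float(i), 8.0) for i in range(1, 11)]
cov = [[gaussian_cov(math.dist(s, u)) for u in sites] for s in sites]
low = cholesky(cov)

# One draw of spatially correlated innovations eta_t(s) = L z, with z ~ N(0, I)
rng = random.Random(2024)
z = [rng.gauss(0.0, 1.0) for _ in sites]
eta = [sum(low[i][k] * z[k] for k in range(i + 1)) for i in range(len(sites))]
```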

Table 4 shows the estimates of the parameters and the mean square errors. Note that these estimates are very close to their theoretical counterparts. As expected, the precision of the estimates worsens as the percentage of missing data increases.
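The way the Kalman filter copes with missing observations can be sketched for a single location with an AR(1) state. When \(Y_t\) is missing, the update step is skipped and only the prediction step is applied, so the likelihood is accumulated from the observed innovations alone and no imputation is needed. This is a simplified, hypothetical illustration (scalar state, no spatial structure, and an optional measurement-error variance `tau2` that defaults to zero), not the authors' multivariate implementation.

```python
import math

def ar1_kalman_loglik(y, phi, sigma2, tau2=0.0):
    """Gaussian log-likelihood of a stationary AR(1) state x_t = phi x_{t-1} + eta_t,
    observed as y_t = x_t + e_t with Var(e_t) = tau2 (hypothetical; 0 means exact
    observation). Entries of y equal to None are treated as missing."""
    a = 0.0                          # predicted state mean
    p = sigma2 / (1.0 - phi ** 2)    # predicted state variance (stationary initialisation)
    loglik = 0.0
    for obs in y:
        if obs is not None:
            f = p + tau2             # innovation variance
            v = obs - a              # innovation
            loglik -= 0.5 * (math.log(2.0 * math.pi * f) + v * v / f)
            gain = p / f
            a += gain * v            # filtered mean
            p -= gain * p            # filtered variance
        # Missing y_t: no update; the prediction step below simply propagates a and p
        a = phi * a
        p = phi ** 2 * p + sigma2
    return loglik

print(ar1_kalman_loglik([0.5, None, 0.3, -0.1], phi=0.65, sigma2=1.0))
```

With no missing values and `tau2=0`, the result coincides with the exact AR(1) likelihood; with a gap, the next observation is predicted two steps ahead, with variance \(\sigma ^2(1+\phi ^2)\).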

5 Real data applications

5.1 TOMS data

The Kalman filter algorithm presented in Sect. 3.1 is now applied to Level-3 Total Ozone Mapping Spectrometer (TOMS) data. TOMS Level-3 data have been analyzed in several recent papers, including Jun and Stein (2008) and Porcu et al. (2015); we refer to these papers for a detailed description of the data. TOMS data are located on a regular grid of \(1^{\circ }\) latitude by \(1.25^{\circ }\) longitude away from the poles, covering latitudes in \([-89.5,89.5]\) and longitudes in \([-180,180]\). We focus our analysis on a selection of 140 spatial locations with complete temporal records, for a total of 2100 space–time observations. We converted coordinates from longitude/latitude to Universal Transverse Mercator (UTM) coordinates using spTransform from the rgdal package (Bivand et al. 2015), which relies on the PROJ.4 projection library.

The temporal covariance was analyzed using three structures, namely AR(1), AR(2) and ARMA(1,1) models, and the spatial covariance using Models 1, 2 and 3 as described in the previous section. Table 5 reports the parameter estimates obtained with the Kalman filter and truncation level \(m=5\). Table 5 shows no marked differences in estimation performance among these combinations. On the other hand, the parameters \(\phi _2\) and \(\theta \) contribute relatively little compared with the other parameters, suggesting an AR(1)-type temporal correlation structure. We computed the cross-validation statistics CR\(_1\)–CR\(_3\) for predicting the last day of measurement, i.e., \(K = 1\); their averages are presented in Table 6. The differences among these measures are small; nevertheless, CR\(_3\) is smallest for the combination of Model 1 and the AR(1) model.
Figure 3 displays the performance of this model in terms of the marginal spatial semivariograms and the marginal sample autocorrelation function (ACF). As shown in this figure, the selected model, estimated with the Kalman filter, fits the TOMS data well.
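A marginal spatial semivariogram of the kind shown in Figure 3 can be computed as a binned empirical semivariogram at time lag zero, pooled over time, \(\widehat{\gamma }(h)=\{2|N(h)|\}^{-1}\sum \{Y_t(\mathbf{s}_i)-Y_t(\mathbf{s}_j)\}^2\), where \(N(h)\) collects the space–time pairs whose distance falls in the bin around h. The binning scheme, the pooling over time and the function name below are assumptions for illustration; coordinates are taken to be projected (e.g. UTM) so that Euclidean distance is meaningful. For a stationary model the theoretical counterpart is \(C(0)-C(h)\).

```python
import math

def empirical_semivariogram(coords, data, bin_edges):
    """Marginal (time lag 0) empirical semivariogram pooled over time.

    coords: list of (x, y) in projected units; data[t][i] = observation at time t,
    location i; bin_edges: increasing distances defining bins [e_k, e_{k+1}).
    Returns (mean distance, semivariance, number of space-time pairs) per non-empty bin.
    """
    nb = len(bin_edges) - 1
    sums, dsum, counts = [0.0] * nb, [0.0] * nb, [0] * nb
    n = len(coords)
    for i in range(n):
        for j in range(i + 1, n):
            dist = math.dist(coords[i], coords[j])
            for k in range(nb):
                if bin_edges[k] <= dist < bin_edges[k + 1]:
                    for row in data:  # pool squared differences over all times
                        sums[k] += (row[i] - row[j]) ** 2
                        counts[k] += 1
                    dsum[k] += dist * len(data)
                    break
    return [(dsum[k] / counts[k], sums[k] / (2 * counts[k]), counts[k])
            for k in range(nb) if counts[k] > 0]
```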

5.2 Irish wind data

Wind energy has grown significantly in developed countries, and supplying electricity from wind depends on methods for predicting wind speed at specific locations. The Irish wind speed data have been studied by several authors, in particular Haslett and Raftery (1989), Gneiting (2002), Stein (2005) and Bevilacqua et al. (2012). Following Haslett and Raftery (1989), we omitted the Rosslare station, applied a square root transformation and then removed the seasonal component. The seasonal component was estimated by averaging the square roots of the daily means over all years and stations for each day of the year, and regressing the result on a set of annual harmonics. We refer to their paper for a detailed description of these data. Also following Haslett and Raftery (1989), we use a long-memory process to model the temporal dependence, and an exponential model for the spatial covariance, defined as

where \({\varvec{\theta }}=(\sigma ^2, \rho )^{\top }\), with \(\sigma ^2 \in (0, 1]\) and \(\rho >0\). Accordingly, we consider three different models for the temporal dependence, namely FN(d), ARFIMA(1, d, 0) and ARFIMA(2, d, 0). To evaluate a possible short-memory structure in the temporal covariance, ARMA(1, 1) and AR(1) models are also considered. The models were fitted to all data except the last week, which is held out as a validation set. The Kalman filter estimation uses a truncation level \(m=10\). The estimated parameters are as follows:

-

For the AR(1) model, \(\widehat{\phi }=0.8564\), \(\widehat{\sigma }^2=0.7654\) and \(\widehat{\rho }=0.00438\), whereas for the ARMA(1, 1), \(\widehat{\phi }=0.6523\), \(\widehat{\theta }=0.3812\), \(\widehat{\sigma }^2=0.5841\) and \(\widehat{\rho }= 0.00817\).

-

For the FN(d) model, the estimates are: \(\widehat{d}=0.3373\), \(\widehat{\sigma }^2= 0.98799\) and \(\widehat{\rho }=0.00137\).

-

For the ARFIMA(1, d, 0) model, we have that \(\widehat{d}= 0.3137\), \(\widehat{\phi }= 0.04386\), \(\widehat{\sigma }^2= 0.98878\) and \(\widehat{\rho }= 0.00147\).

-

For the ARFIMA(2, d, 0) model, \(\widehat{d}= 0.3251\), \(\widehat{\phi }_1= 0.0101 \), \(\widehat{\phi }_2= -0.0599\), \(\widehat{\sigma }^2= 0.99786\) and \(\widehat{\rho }= 0.00164\).
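The deseasonalization step described at the start of this section can be sketched as follows, under stated assumptions: 365 days per year (leap days dropped), a hypothetical choice of three annual harmonics, and records supplied as (day of year, daily mean speed) pairs pooled over years and stations. Haslett and Raftery (1989) should be consulted for the exact specification. Because the harmonics are orthogonal on a complete, equally spaced annual cycle, the least-squares coefficients reduce to discrete Fourier sums.

```python
import math

PERIOD = 365  # assumption: leap days dropped, day of year indexed 0..364

def day_of_year_means(records):
    """Average of sqrt(daily mean speed) for each day of year, over all years and stations.

    records: iterable of (day_of_year, daily_mean_speed); every day must appear.
    """
    sums, counts = [0.0] * PERIOD, [0] * PERIOD
    for day, speed in records:
        sums[day] += math.sqrt(speed)
        counts[day] += 1
    return [s / c for s, c in zip(sums, counts)]

def fit_annual_harmonics(day_means, n_harmonics=3):
    """Least-squares fit on 1, cos(2 pi k d / 365), sin(2 pi k d / 365), k = 1..n_harmonics.

    On a complete annual cycle these regressors are orthogonal, so the OLS
    coefficients are the discrete Fourier sums below (valid for k < 365 / 2).
    """
    mu = sum(day_means) / PERIOD
    coefs = []
    for k in range(1, n_harmonics + 1):
        w = 2 * math.pi * k / PERIOD
        a = 2 / PERIOD * sum(y * math.cos(w * d) for d, y in enumerate(day_means))
        b = 2 / PERIOD * sum(y * math.sin(w * d) for d, y in enumerate(day_means))
        coefs.append((a, b))
    return mu, coefs

def seasonal(day, mu, coefs):
    """Fitted seasonal mean on the square-root scale for a given day of year."""
    return mu + sum(a * math.cos(2 * math.pi * k * day / PERIOD)
                    + b * math.sin(2 * math.pi * k * day / PERIOD)
                    for k, (a, b) in enumerate(coefs, start=1))

def deseasonalize(speed, day, mu, coefs):
    """Square-root transform one daily mean speed and remove the seasonal component."""
    return math.sqrt(speed) - seasonal(day, mu, coefs)
```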

To choose the best model, we use the cross-validation statistics defined previously, with predictions for 7 days, i.e., \(K=7\). Table 7 shows that CR\(_3\) is smallest for the ARFIMA(1, d, 0) model. Given these results, we focus on the goodness-of-fit analysis of the long-memory models. Figure 4 has two panels showing the correlations between stations and the marginal sample ACF. In panel (b), all the marginal sample ACFs decay slowly, confirming long-memory behavior. The dashed line represents the FN(d) model, the continuous line the ARFIMA(1, d, 0) model, and the dotted line the ARFIMA(2, d, 0) model. The ARFIMA(1, d, 0) model appears to offer a better fit to the temporal sample ACF, whereas the spatial correlations of the three models are very similar.
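The contrast between slowly and geometrically decaying correlations can be made concrete with the theoretical ACFs. The sketch below, with hypothetical function names, evaluates the FN(d) autocorrelation \(\varGamma (1-d)\varGamma (h+d)/\{\varGamma (d)\varGamma (h+1-d)\}\) at \(\widehat{d}=0.3373\) and the AR(1) autocorrelation \(\phi ^h\) at \(\widehat{\phi }=0.8564\), and includes a plain sample ACF for comparison with the data at a single station. It is illustrative only and does not reproduce Figure 4.

```python
import math

def sample_acf(x, max_lag):
    """Sample autocorrelations r(0), ..., r(max_lag) with the usual 1/n divisor."""
    n = len(x)
    mean = sum(x) / n
    c0 = sum((v - mean) ** 2 for v in x) / n
    return [sum((x[t] - mean) * (x[t + h] - mean) for t in range(n - h)) / n / c0
            for h in range(max_lag + 1)]

def fn_acf(h, d):
    """ACF of FN(d): Gamma(1 - d) Gamma(h + d) / (Gamma(d) Gamma(h + 1 - d))."""
    return math.exp(math.lgamma(1 - d) + math.lgamma(h + d)
                    - math.lgamma(d) - math.lgamma(h + 1 - d))

def ar1_acf(h, phi):
    """ACF of an AR(1): phi^h."""
    return phi ** h

# Estimates reported above for the Irish wind data
for h in (1, 5, 20, 50):
    print(h, round(fn_acf(h, 0.3373), 4), round(ar1_acf(h, 0.8564), 4))
```

The FN(d) correlations decay like \(h^{2d-1}\), so at large lags they remain well above the geometrically decaying AR(1) values even though the AR(1) lag-one correlation is larger.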

6 Discussion

In this article, we have proposed a state-space methodology to model spatio-temporal processes. In particular, we have proposed to model short- and/or long-memory temporal dependence through the infinite moving average representation MA\((\infty )\). In this context, we have used ARFIMA models to quantify the temporal correlation and Matérn covariance models to characterize the spatial correlation of spatio-temporal processes. For estimation, we have proposed an approximation to the likelihood function via truncation, which provides an efficient means of calculating the MLE. Simulation studies showed that the proposed approach can be extremely efficient for small truncation levels. Furthermore, this approach reduces the computational burden and substantially reduces the memory required when dealing with large spatio-temporal datasets.

In addition, we used the Kalman filter algorithm to obtain k-step-ahead predictions and to handle missing values without any additional assumption or imputation procedure. These features provide clear advantages over alternative procedures for spatio-temporal models.

An interesting direction for future research is to use state-space models based on the MA\((\infty )\) expansion to incorporate non-stationarity, by introducing time-varying and/or location-dependent processes in the observation operator \(G_{t}(\mathbf{s})\). Although this would imply a significant increase in the number of parameters to be estimated, it would require only minor changes to the algorithms presented here. Rao (2008) proposed a local least squares method to estimate the parameters of a spatio-temporal model with location-dependent parameters, which describe spatial non-stationarity, and we have recently begun to work on combining these two ideas.

References

Bevilacqua M, Gaetan C, Mateu J, Porcu E (2012) Estimating space and space–time covariance functions for large data sets: a weighted composite likelihood approach. J Am Stat Assoc 107(497):268–280

Bilonick RA (1985) The space–time distribution of sulfate deposition in the northeastern United States. Atmos Environ (1967) 19(11):1829–1845

Bivand R, Keitt T, Rowlingson B (2015) rgdal: bindings for the geospatial data abstraction library. R Foundation for Statistical Computing, Vienna

Bocquet M, Elbern H, Eskes H, Hirtl M, Zabkar R, Carmichael G, Flemming J, Inness A, Pagowski M, Pérez Camaño J et al (2015) Data assimilation in atmospheric chemistry models: current status and future prospects for coupled chemistry meteorology models. Atmos Chem Phys 15(10):5325–5358

Brockwell PJ, Davis RA (1991) Time series: theory and methods, 2nd edn. Springer, New York

Brown PJ, Le ND, Zidek JV (1994) Multivariate spatial interpolation and exposure to air pollutants. Can J Stat 22(4):489–509

Broyden CG (1969) A new double-rank minimization algorithm. Not Am Math Soc 16:670

Cameletti M, Lindgren F, Simpson D, Rue H (2013) Spatio-temporal modeling of particulate matter concentration through the SPDE approach. AStA Adv Stat Anal 97(2):109–131

Carroll SS, Cressie N (1997) Spatial modeling of snow water equivalent using covariances estimated from spatial and geomorphic attributes. J Hydrol (Amst) 190(1):42–59

Chan NH, Palma W (1998) State space modeling of long-memory processes. Ann Stat 26(2):719–740

Cressie N, Wikle CK (2011) Statistics for spatio-temporal data. Wiley, New York

Daley D, Porcu E (2014) Dimension walks and Schoenberg spectral measures. Proc Am Math Soc 142(5):1813–1824

Durbin J, Koopman SJ (2012) Time series analysis by state space methods. Number 38 in Oxford statistical science series. Oxford University Press, Oxford

Eynon B, Switzer P (1983) The variability of rainfall acidity. Can J Stat 11:11–24

Fasso A, Cameletti M, Nicolis O (2007) Air quality monitoring using heterogeneous networks. Environmetrics 18(3):245–264

Ferreira G, Rodríguez A, Lagos B (2013) Kalman filter estimation for a regression model with locally stationary errors. Comput Stat Data Anal 62:52–69

Fletcher R (1970) A new approach to variable metric methods. Comput J 13:317–322

Gneiting T (2002) Nonseparable, stationary covariance functions for space–time data. J Am Stat Assoc 97(458):590–600

Goldfarb D (1970) A family of variable metric methods derived by variational means. Math Comput 24:23–26

Guyon X (1995) Random fields on a network: modeling, statistics, and applications. Springer, New York

Handcock MS, Wallis JR (1994) An approach to statistical spatial-temporal modeling of meteorological fields. J Am Stat Assoc 89(426):368–378

Hannan EJ, Deistler M (1988) The statistical theory of linear systems. Wiley, New York

Harvey AC (1989) Forecasting structural time series and the Kalman filter. Cambridge University Press, Cambridge

Haslett J, Raftery AE (1989) Space–time modelling with long-memory dependence: assessing Ireland’s wind power resource. J R Stat Soc C Appl 38(1):1–50

Huang H-C, Cressie N (1996) Spatio-temporal prediction of snow water equivalent using the Kalman filter. Comput Stat Data Anal 22(2):159–175

Hughes JP, Guttorp P, Charles SP (1999) A non-homogeneous hidden Markov model for precipitation occurrence. J R Stat Soc C Appl 48(1):15–30

Ippoliti L (2001) On-line spatio-temporal prediction by a state space representation of the generalized space time autoregressive model. Metron Int J Stat LIX:157–169

Jun M, Stein ML (2008) Nonstationary covariance models for global data. Ann Appl Stat 2(4):1271–1289

Kokoszka PS, Taqqu MS (1995) Fractional ARIMA with stable innovations. Stoch Proc Appl 60(1):19–47

Li B, Genton MG, Sherman M (2008) On the asymptotic joint distribution of sample space–time covariance estimators. Bernoulli 14(1):228–248

Mardia KV, Goodall C, Redfern EJ, Alonso FJ (1998) The kriged Kalman filter. Test 7(2):217–282

Matérn B (1986) Spatial variation, volume 36 of lecture notes in statistics. Springer, Berlin

Mikosch T, Gadrich T, Klüppelberg C, Adler RJ (1995) Parameter estimation for ARMA models with infinite variance innovations. Ann Stat 23(1):305–326

Militino A, Ugarte M, Goicoa T, Genton M (2015) Interpolation of daily rainfall using spatiotemporal models and clustering. Int J Climatol 35(7):1453–1464

Oehlert GW (1993) Regional trends in sulfate wet deposition. J Am Stat Assoc 88(422):390–399

Palma W (2007) Long-memory time series: theory and methods. Wiley series in probability and statistics. Wiley, Hoboken

Palma W, Olea R, Ferreira G (2013) Estimation and forecasting of locally stationary processes. J Forecast 32(1):86–96

Peng RD, de Leeuw J (2002) An introduction to the .C interface to R. UCLA, Academic Technology Services, Statistical Consulting Group

Porcu E, Bevilacqua M, Genton MG (2015) Spatio-temporal covariance and cross-covariance functions of the great circle distance on a sphere. J Am Stat Assoc. doi:10.1080/01621459.2015.1072541

R Core Team (2017) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna

Rao SS (2008) Statistical analysis of a spatio-temporal model with location-dependent parameters and a test for spatial stationarity. J Time Ser Anal 29(4):673–694

Stein ML (2005) Space–time covariance functions. J Am Stat Assoc 100(469):310–321

Stroud JR, Stein ML, Lesht BM, Schwab DJ, Beletsky D (2010) An ensemble Kalman filter and smoother for satellite data assimilation. J Am Stat Assoc 105(491):978–990

Waller LA, Carlin BP, Xia H, Gelfand AE (1997) Hierarchical spatio-temporal mapping of disease rates. J Am Stat Assoc 92(438):607–617

Wikle CK (2003) Hierarchical models in environmental science. Int Stat Rev 71(2):181–199

Wikle CK, Cressie N (1999) A dimension-reduced approach to space-time Kalman filtering. Biometrika 86(4):815–829

Wikle CK, Berliner LM, Cressie N (1998) Hierarchical Bayesian space–time models. Environ Ecol Stat 5(2):117–154

Xu K, Wikle CK (2007) Estimation of parameterized spatio-temporal dynamic models. J Stat Plan Inference 137(2):567–588

Zes D (2014) Facile spatio-temporal modeling, forecasting with adaptive least squares and the Kalman filter. J Environ Stat 6(1). http://jes.stat.ucla.edu/v06/i01

Acknowledgements

The first author would like to express his thanks for the support from DIUC 215.014.024-1.0, established by the Universidad de Concepción, and from a Postdoctoral scholarship from Conicyt, Chile, 2014 (Folio 74150023). Jorge Mateu’s research was supported by Grant MTM2013-43917-P from the Spanish Ministry of Science and Education, and by Grant P1-1B2015-40. Emilio Porcu’s research was supported by a Fondecyt Regular Project from the Ministry of Science and Education, Chile.


Cite this article

Ferreira, G., Mateu, J. & Porcu, E. Spatio-temporal analysis with short- and long-memory dependence: a state-space approach. TEST 27, 221–245 (2018). https://doi.org/10.1007/s11749-017-0541-7
