Abstract

Applications, such as military and disaster response, can benefit from robotic collectives’ ability to perform multiple cooperative tasks (e.g., surveillance, damage assessments) efficiently across a large spatial area. Coalition formation algorithms can potentially facilitate collective robots’ assignment to appropriate task teams; however, most coalition formation algorithms were designed for smaller multiple robot systems (i.e., 2–50 robots). Collectives’ scale and domain-relevant constraints (i.e., distribution, near real-time, minimal communication) make coalition formation more challenging. This manuscript identifies the challenges inherent to designing coalition formation algorithms for very large collectives (e.g., 1000 robots). A survey of multiple robot coalition formation algorithms finds that most are unable to transfer directly to collectives, due to the identified system differences; however, auctions and hedonic games may be the most transferable. A simulation-based evaluation of five total algorithms from two combinatorial auction families and one hedonic game family, applied to homogeneous and heterogeneous collectives, demonstrates that there are collective compositions for which no evaluated algorithm is viable; however, the experimental results and literature survey suggest paths forward.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Robotic collectives, defined here as > 50 robots with limited sensing and computational capabilities as compared to a typical robot in a smaller multiple robot system, are increasingly relevant for military (e.g., building or area surveillance) (Defense Advanced Research Projects Agency, 2019) and disaster response (e.g., damage assessments, search and rescue) (Hildmann & Kovacs, 2019) applications. A fundamental problem is coalition formation for task allocation, or partitioning robots into teams for task performance (Gerkey & Matarić, 2004; Korsah et al., 2013). Effective coalition formation can enable collectives to perform multiple cooperative tasks distributed over large environments efficiently; however, collectives’ scale and the application domains’ constraints make this problem challenging.

Military and disaster response applications require high-quality coalition formation solutions for hundreds to thousands of robots in near real-time (e.g., < 5 min). Low-bandwidth, deployed networks (e.g., mobile ad hoc networks) will often be utilized, due to damaged permanent infrastructure or remote deployment locations (Klinsompus & Nupairoj, 2015). Minimal, distributed communication is required to reserve bandwidth for mission critical communications (Jahir et al., 2019; Legendre et al., 2011; Shah et al., 2019). Additionally, frequent communication with a central entity is infeasible, as collectives can be highly distributed (Berman et al., 2009a; Hamann, 2018).

Many prior collective coalition formation algorithms assign approximate fractions of collectives to tasks (e.g., Berman et al., 2009b; Mather & Hsieh, 2011), or determine when robots participate in continuous tasks (e.g., Castello et al., 2013; Pang et al., 2019). These algorithms have been applied to large collectives, but do not address common robot coalition formation requirements, including optimizing solutions based on task utilities (i.e., numbers representing how important tasks are to a mission) and allowing team size specification. Many tasks require a minimum team size (e.g., cordon, area coverage), or an exact team size (e.g., moving a piece of debris, guarding a building entrance). This manuscript’s focus is exact team sizes, as assigning more than the minimum robots unnecessarily reduces the resources available for new tasks, which can emerge frequently in highly dynamic domains.

Other existing coalition formation incorporates exact and approximation (e.g., Aziz et al., 2021; Dutta & Asaithambi, 2019; Service & Adams, 2011a; Zhang & Parker, 2013), auction-based (e.g., Chen & Sun, 2011; Guerrero et al., 2017; Oh et al., 2017), biologically-inspired (e.g., Agarwal et al., 2014; Mouradian et al., 2017; Yeh & Sugawara, 2016; Haque et al., 2013), and, recently, hedonic game-based (e.g., Czarnecki & Dutta, 2019; Jang et al., 2019) algorithms. However, software agent algorithms (e.g., Liemhetcharat & Veloso, 2014; Michalak et al., 2010; Rahwan & Jennings, 2008; Rahwan et al., 2009; Shehory & Kraus, 1995; Sless et al., 2014) are not directly transferable to collectives due to differences between software agents and embodied robots (Vig & Adams, 2007). Additionally, robot coalition formation algorithms were evaluated predominantly for smaller multiple robot systems, not collectives, and few evaluations consider practical communication requirements, so it is unknown if the algorithms will scale. Thus, existing algorithms either are not, or have not been demonstrated to be, viable for collectives.

This manuscript assesses robot coalition formation algorithms’ viability. A simulation-based evaluation with up to one thousand robots demonstrates that multiple robot coalition formation algorithms do not fully satisfy the target domains’ solution quality, runtime, and communication requirements when applied to collectives. In fact, there are collective compositions for which no existing algorithm is suitable; however, potential avenues for addressing these collective compositions are suggested.

1.1 Coalition formation for task allocation

Coalition formation for task allocation partitions agents into teams for task performance (Service & Adams, 2011b). The input is a set of \(n\) agents, \(A = \{a_1, \dots , a_n\}\), a set of \(m\) tasks, \(T = \{t_1, \dots , t_m\}\), and a characteristic function, \(f: (t_j, C_j) \rightarrow \mathbb {R}\), where \(C_j \subseteq A\) is the set of agents assigned to task \(t_j\) (Service & Adams, 2011a). Characteristic functions vary by application; however, \(f(t_j, C_j)\) generally represents the inherent value of coalition \(C_j\) completing task \(t_j\) (Service & Adams, 2011a; Zhang & Parker, 2013).

A coalition formation for task allocation problem assumes that a coalition’s value can be calculated considering only agents in the coalition (i.e., not considering agents assigned to other tasks) (Rahwan et al., 2009). The optimal solution is a coalition structure, \(CS = \{(t_1, C_1), \dots (t_m, C_m)\}\), that satisfies:

This manuscript considers a characteristic function where \(f(t_j, C_j)\) is equal to task \({t_j}'s\) utility (i.e., representing the inherent value to the overall mission of completing \(t_j\)) if \(C_j\) provides all of \({t_j}'s\) required capabilities, and 0 otherwise. Maximizing the utility enables the collective to maximize its achievement of mission objectives; thus, the goal is for a collective coalition formation to achieve as close to 100% of the total mission utility as possible. However, deriving an optimal solution, or a provably reasonable approximation, is NP-hard (Service & Adams, 2011a; Sandholm et al., 1999). Specifically, no polynomial time algorithm can produce an \(O(|C|^{1-\epsilon })\) or \(O(m^{1-\epsilon })\) approximation, where \(C\) is the set of non-zero valued coalitions and \(\epsilon >0\), unless \({\text{P}}={\text{NP}}\) (Service & Adams, 2011a; Sandholm et al., 1999). Additionally, the establishment of any bound on solution quality requires considering at least \(O(2^{n-1})\) coalition structures (Sandholm et al., 1999). This computational complexity hinders producing high-utility coalition structures for large numbers of agents.

2 Coalition formation for multiple versus collective robot systems

Coalition formation has received much attention in the software multi-agent and multiple robot communities, where a software multi-agent system comprises two or more software agents, and a multiple robot system is two to fifty robots. Software multi-agent coalition formation cannot be transferred directly to robotic systems, as robots have different constraints and practical considerations (Vig & Adams, 2007). As embodied agents, robots have kinematic and dynamic constraints and relatively static, nontransferable capabilities (i.e., sensors and actuators) (Vig & Adams, 2007). Robots are also likely to have power constraints (Diehl & Adams, 2021b) and less available communication (Diehl & Adams, 2021a), which coalition formation must accommodate.

Multiple robot coalition formation considers embodied agents; however, transferring algorithms to robotic collectives can be complicated by differences in scale, capabilities, and communication. Key differences between collective and multiple robot systems must be considered when designing collective coalition formation algorithms, as summarized in Table 1.

2.1 Agent architecture

Robotic collectives typically incorporate simple control models (Brambilla et al., 2013) (e.g., repulsion, attraction, orientation (Hartman & Beneš, 2006), while multiple robot system architectures are often more sophisticated (e.g., belief-desire-intention). A reason for this difference is design choice. Robotic collectives were inspired by biological collectives, which achieve complex tasks through cooperation, rather than individual sophistication (Brambilla et al., 2013). Another reason is scale. Robotic collectives have more robots, so the allowable monetary and time costs per robot are lower; thus, even as robot technology and collective design improve, collective robots are likely to be less capable than their multiple robot system counterparts and have less computation available for coalition formation.

2.2 System capabilities

System capabilities are the tasks that a multiple robot system or collective can perform as a product of the individual robots’ capabilities and interactions with other robots and the environment. Capabilities are relatively static when they correspond to hardware (e.g., sensors and actuators) (Vig & Adams, 2007), but emergent behaviors can vary dynamically (Beni, 2005).

Multiple robot systems can exhibit emergent behaviors, especially as more advanced artificial intelligence is incorporated (e.g., Costa et al., 2019); however, emergent behavior is intrinsic to collectives. Biologically-inspired collective capabilities (e.g., target selection Reina et al., 2015) typically rely on emergent behaviors, and the use of reactive architectures (see Sect. 2.1) renders interactions with the environment and other robots especially impactful (Hartman & Beneš, 2006). Collective coalition formation must adapt task assignments quickly as emergent capabilities change.

2.3 System composition

Collective and multiple robot systems may be homogeneous (i.e., robots have identical capabilities) or heterogeneous (i.e., some robots have different capabilities). Collective research has primarily considered homogeneous systems (e.g., Reina et al., 2015; Prorok et al., 2017; Van Der Blom & Bäck, 2018), while multiple robot coalition formation has considered heterogeneity extensively (e.g., Guerrero et al., 2017; Sen & Adams, 2013b; Zhang & Parker, 2012). Heterogeneous collectives enable more diverse applications (Defense Advanced Research Projects Agency, 2019; Clark et al., 2021; Prabhakar et al., 2020); thus, collective coalition formation must also account for heterogeneity.

Multiple robot systems can be highly heterogeneous, as each robot may be manufactured with unique capabilities (Hamann, 2018), while collectives’ larger scale means that robots are likely to be manufactured in batches, where each robot in a given batch has the same set of capabilities. Collective coalition formation algorithms may be able to leverage this reduced heterogeneity to decrease the required computation (Service & Adams, 2011a).

2.4 Communication

Coalition formation often assumes fully connected communication networks in which each robot can communicate with every other robot directly (e.g., Jang et al., 2018; Tang, 2006). This assumption can be feasible for multiple robot systems, if the deployment domains have good network coverage (Diehl & Adams, 2021b); however, robotic collectives, like their biological counterparts, are likely to rely on distributed, local communication to facilitate scalability (Berman et al., 2009a; Hamann, 2018). Individuals in spatial swarms (e.g., bird flocks (Ballerini et al., 2008), fish schools (Couzin et al., 2005)) often communicate with only a subset of their neighbors (Ballerini et al., 2008; Haque et al., 2016; Couzin et al., 2002; Strandburg-Peshkin et al., 2013), while colonies (e.g., bees (Seeley, 2010), ants (Gordon, 1999)) generally have fully connected communication topologies within a central hub and no, limited, or spatial swarm-like, communication outside the hub (Reina et al., 2015). Spatial swarms and colonies can theoretically approximate fully connected topologies by using local communication to propagate messages, but at the cost of delays and solution quality degradation; thus, collective robot coalition formation needs to rely primarily on local communication.

Many collective and multiple robot system applications will use temporary, deployable communication networks (e.g., ad hoc networks), due to damaged permanent infrastructure or remote deployment locations (Jahir et al., 2019; Legendre et al., 2011; Shah et al., 2019). Temporary network nodes (e.g., ground and aerial robots, satellites, balloons, blimps, tablets, or smart phones (Jahir et al., 2019; Pandey & De, 2017)) cannot replace permanent infrastructure fully, given their comparatively limited power and bandwidth (Muralidhar & Madhavi, 2018). Thus, multiple robot systems may send only medium-sized, rich messages, while collective robots may send only small, simple messages, due to the collectives’ scale (e.g., thousands of robots, large area coverage). Collective coalition formation will need to accommodate limited message sizes.

3 Background and related work

Collective robots and coalition formation have, until recently (Dutta et al., 2021; Czarnecki & Dutta, 2021), been treated as distinct research areas, leaving collective coalition formation relatively unstudied. Collective and multiple robot coalition formation algorithms are discussed, as well as additional background on coalition formation.

3.1 The services model

Coalition formation requires determining if a coalition is capable of completing a task, given the coalition members’ capabilities (Vig & Adams, 2007). There is a longstanding distinction between robot and software agent capability models, as hardware capabilities are not instantaneously transferable (Vig & Adams, 2007). Robots’ software capabilities can also be more difficult to transfer, as software may be hardware-dependent (e.g., sensor-dependent controllers), and only limited communication may be available if a transfer is viable.

The robot services model considers high-level robot behaviors (e.g., surveillance) called services (Service & Adams, 2011a; Vig & Adams, 2007). Each robot has a service vector specifying the services that it can perform, and each task has a vector specifying the number of robots required to perform each service. A coalition can perform a task if there are sufficient robots to provide all required services, where each robot performs a single service at a time (Service & Adams, 2011a). This model is efficient when different sensors or actuators produce the same behavior, which supports scalability (Service & Adams, 2011a). Additionally, the services abstraction facilitates task design by users who are less familiar with robot hardware (e.g., tacticians (Defense Advanced Research Projects Agency, 2019)).

Three other common robot capabilities models exist. The robot types model, which considers sets of robots with identical capabilities, can be converted to the services model (Service & Adams, 2011a). The resources model considers tasks’ resource (i.e., sensor and actuator) requirements and if resources are collocated or provided by different robots (Vig & Adams, 2007). Certain problems (e.g., identifying the lowest cost coalition for a task) are more computationally difficult than with the services model (Service & Adams, 2011a), and more knowledge of robot hardware is needed for task design. The schema model determines the information flow from robots’ environmental sensors to code modules to actuators (Tang & Parker, 2005). This model allows for very flexible task performance, but considers only motion-based (i.e., not processing or sensing) tasks. This manuscript focuses on applying the services model.

3.2 Collective coalition formation

Coalition formation algorithms for robotic collectives, as opposed to smaller multiple robot systems, typically do not solve coalition formation as defined in Sect. 1.1. Instead, the algorithms solve biologically-inspired, reduced difficulty problems that consider inexact team sizes, which can be a fraction of the collective or depend on mission conditions (Huang & Robinson, 1992; Theraulaz et al., 1998). The resulting problem formulations generally do not optimize mission utility. Recently, hedonic games have also been applied to collective coalition formation to solve problems more similar to Sect. 1.1, optimizing utility and specifying target team sizes. Approaches with inexact team sizes and leveraging hedonic games are surveyed.

3.2.1 Inexact team sizes

Many inexact team size coalition formation approaches model biological collectives’ behaviors. Stimulus–response is based on entomology (Theraulaz et al., 1998) and sociology (Granovetter, 1978), in which each individual chooses from a set of mutually exclusive options based on a combination of individual preference and external stimuli. For example, individuals may or may not participate in a continuous resource-gathering task based on how close the collective is to its desired resource level (Castello et al., 2014; Krieger et al., 2000; Rosenfeld et al., 2006; Yongming et al., 2010) or the amount of interference between robots (Pang et al., 2019). Stimulus–response algorithms have also been used for continuous cooperative monitoring tasks (Low et al., 2004), and to distribute approximate numbers of robots to distinct task sites (Kanakia et al., 2016). These algorithms can scale well, as they require little to no communication, and the computational requirements depend on the various specified thresholds, not the collective size; however, these models are not directly transferable to the target collective coalition formation optimization problem, as there is not an existing mapping from coalition formation’s characteristic function to stimulus–response models’ thresholds and stimuli.

Algorithms that distribute approximate fractions of a collective among tasks have also incorporated the activator/inhibitor model that represents labor division in bee colonies (Huang & Robinson, 1992; Xiao & Wang, 2018). The algorithms’ agents are modeled as having an internal activator that causes them to progress through a task sequence, while progression can be inhibited by factors, such as the number of robots per task (Zahadat et al., 2015; Zahadat & Schmickl, 2016). These algorithms require relatively simple computation and rely predominantly on distributed local communication; however, they do not solve the desired optimization problem, as they assume that all tasks will be assigned robots without considering task utility. Recall that optimizing task utility is important in the target domain, as it enables the collective to maximize its achievement of mission objectives.

Another notable algorithm family with inexact team sizes uses ordinary differential equations modeling the robots’ transition rates between tasks (e.g., Berman et al., 2009b; Elamvazhuthi et al., 2018; Hsieh et al., 2008; Mather & Hsieh, 2011). Individual robot controllers are derived from these equations and determine robots’ stochastic transitions between tasks. Equilibrium is reached when each task is assigned approximately the desired fraction of the collective. A key advantage of these algorithms is that the complexity of the robots’ controllers does not increase with the collective size, facilitating scaling (Berman et al., 2009b). Some algorithms were evaluated with as many as 2,000 (Mather & Hsieh, 2011), and even 20,000 (Hsieh et al., 2008) simulated point-based robots. Additionally, algorithm performance is distributed and requires no communication between robots when visually estimating the number of robots currently assigned to each task is possible. Otherwise, the robots at a task site must communicate with each other. However, the majority of these algorithms consider only homogeneous collectives, and none consider the services model. A service extension will likely be difficult, as the number of transitions that must be considered increase substantially with the number of services and services per robot. These algorithms also do not transfer directly to the coalition formation optimization problem, as they assign fractions of the collective to tasks without addressing task utilities. Finally, a key limitation is that robots travel between task sites during coalition formation. Quadcoptors can potentially travel quickly, but doing so wastes their already very limited battery lives (Diehl & Adams, 2021b). Conversely, ground robots generally have longer battery lives, but may require substantial time to travel between tasks, as the area of operation for the target applications can be large and highly deconstructed; thus, this family of algorithms is excluded from the empirical evaluation.

3.2.2 Hedonic games

Recent collective coalition formation leverages anonymous hedonic games, where coalition size determines utility, and each robot tries to optimize its individual utility by joining and leaving coalitions until Nash stability is reached (i.e., robots cannot benefit by individually changing coalitions) (Drèze & Greenberg, 1980). Centralized hedonic games (Czarnecki & Dutta, 2019, 2021) are not well-suited to collectives, as they require frequent communication with a centralized computer (Sect. 2.4). Distributed algorithms are most relevant.

The distributed algorithms (Jang et al., 2019, 2018; Dutta et al., 2021) derived from the GRoup Agent Partitioning and Placing Event (GRAPE) algorithm (Jang et al., 2018), in which each robot joins its preferred coalition, sends the resulting coalition structure to its network neighbors, and updates its coalition structure based on neighbors’ messages. The GRAPE algorithm variations allow for homogeneous systems (Jang et al., 2018), variations in the performance of a single service (Jang et al., 2019), or heterogeneity under the resources model (Dutta et al., 2021); however, no services model variant exists.

The GRAPE variations are fast (i.e., milliseconds with 50 robots) and use local communication (Jang et al., 2019, 2018; Dutta et al., 2021); however, evaluation with large, heterogeneous collectives is an open problem. Additionally, individual robot utilities are not equivalent to coalition utilities, and differences may create suboptimal solutions (Jang et al., 2019, 2018).

3.3 Multiple robot coalition formation

Multiple robot coalition formation or ST-MR task allocation (i.e., a robot performs a Single Task and a task is performed by Multiple Robots) is well-studied (Gerkey & Matarić, 2004; Korsah et al., 2013). The pros and cons of the primary algorithms are outlined.

3.3.1 Exact and approximation algorithms

Exact algorithms return optimal solutions, while approximation algorithms return solutions within a known factor of optimal. These solution quality guarantees can be advantageous; however, exact and approximation algorithms have not been favored historically for multiple robot coalition formation, due to coalition formation’s high computational complexity (Service & Adams, 2011a; Zhang & Parker, 2013).

These algorithm types are more feasible if the search space is constrained. Dynamic programming with a limited number of robot types, \(j < O(n)\), produces optimal solutions with a \(O(n^{2j}m)\) computational complexity (Service & Adams, 2011a), compared to the \(O(3^n)\) otherwise required (Rothkopf et al., 1998). Limited robot types (see Sect. 2.3) can be a valid assumption; however, the dynamic programming algorithm is centralized, which is less compatible with collectives (see Sect. 2.4).

Adaptations of Shehory and Kraus’s distributed software multi-agent algorithm incorporate a maximum coalition size constraint, \(k < O(n)\) (Shehory & Kraus, 1995, 1998). The algorithms have two stages (Vig & Adams, 2007, 2006b). First, each robot calculates its possible coalitions’ values. Next, robots broadcast their best coalitions. The best overall coalition is greedily added to the coalition structure until all tasks have been assigned or no coalitions can perform the remaining tasks. The base computational complexity is \(O(n^km)\), and the approximation ratio depends on the heuristic used to identify the best coalition (Shehory & Kraus, 1995, 1998).

There are three common heuristics. Maximum utility selects the highest utility coalition and finds solutions within a factor \(k+1\) of the optimal utility (Service & Adams, 2011a). Minimum cost selects the lowest cost (i.e., 1/utility) coalition and finds solutions within a factor \(O(k/\log k)\) of the optimal cost (Shehory & Kraus, 1998). Average utility finds the coalition with the highest utility to size ratio, producing solutions within \(2k\) of the optimal utility (Zhang & Parker, 2013). This heuristic is useful when smaller coalitions are favored, and the utility does not depend on coalition size.

The resource-centric heuristic aims to prevent robots that are needed for many tasks from being assigned early (Zhang & Parker, 2013). The heuristic ranks coalitions based on utility and the degree to which their selection will limit future task allocation. The approximation factor is (\(2k+2\)), and the computational complexity is \(O(\min (n,m)m^2\left( {\begin{array}{c}n\\ k\end{array}}\right) ^2)\) (Zhang & Parker, 2013).

Any algorithm variation can incorporate the Fault Tolerance Coefficient to balance assigning redundant robots, in case some fail to perform tasks, with the cost of using extra robots (Vig & Adams, 2006b). Additionally, other algorithm variations exist. Service and Adams incorporated the services model and bipartite matching, reducing the computational complexity to \(O(n^{3/2}m)\) (Service & Adams, 2011a). Dutta et al.’s one-to-many bipartite matching-based algorithm has a \(O(nm)\) computational complexity and a \((1/(1+\max (k-\Delta )))\) cost approximation ratio, where \(\Delta \in \{0, 1\}\); however, it only allows for homogeneous systems (Dutta & Asaithambi, 2019).

The advantage of the greedy approximation algorithms is that they provide solution quality guarantees, are flexible in the objective functions that they can optimize, and facilitate heterogeneity. However, their reasonable computational complexities rely on a small maximum coalition size, \(k\), which is unlikely given collectives’ scale. The algorithms also rely on all-to-all broadcasts to communicate coalition values among the robots, which may be infeasible with deployed disaster response and military networks. Thus, existing variations cannot be easily applied to collective coalition formation.

3.3.2 Auction algorithms

Auctions are a market-based resource allocation method, where buyers and sellers exchange information about the price at which they are willing to buy and sell goods (Phelps et al., 2008). A seller aims to obtain a high price, while a buyer’s goal is to obtain goods for a low price. Auction algorithms are popular for multiple robot coalition formation, because they are an intuitive method of distributing resources. Existing algorithms vary based on the method of mapping tasks and robots to buyers and sellers, as well as the auction type.

Some algorithms have robots bid directly on tasks. First-price, one round auction-based algorithms auction off tasks in the order that they are received (Gerkey & Matarić, 2002; Sujit et al., 2008). An auctioneer broadcasts a task’s required resources to the robots, robots reply with the cost of their participation, and the auctioneer chooses the lowest cost coalition that can perform the task. The advantage is fast runtimes relative to other auction mechanisms; however, a less valuable task received before a more valuable task is given precedence. Double auctions address this limitation by auctioning off all tasks at once (Guerrero et al., 2017; Xie et al., 2018). Robots in Guerrero et al.’s double auction algorithm send task auctioneers information about their relevant abilities, and task auctioneers send back bids based on the utility of the tasks they represent (Guerrero et al., 2017), while in Xie et al.’s algorithm, the tasks start the auction process (Xie et al., 2018). These algorithms allow tasks to compete for resources, at the cost of additional communication.

Robots can also bid on tasks through intermediaries. Project Manager-Oriented Coalition Formation has robots elect project managers for each task, which select coalitions of their neighbors in the network topology (Oh et al., 2017). This approach ensures that robots in a coalition can communicate, but severely restricts the solution space. The Automated Synthesis of Multi-robot Task solutions through software Reconfiguration (ASyMTRe) algorithm variants have robots form coalitions that bid on tasks (Zhang & Parker, 2012; Tang & Parker, 2007). All ASyMTRe variations use the schema model. Finally, other algorithms have tasks bid on robot capabilities. The Robot Allocation through Coalitions Using Heterogeneous Non-Cooperative Agents (RACHNA) approach uses an ascending auction, with tasks bidding on services via service agents (Vig & Adams, 2006a). A limitation is that robots can be unnecessarily reassigned. A simultaneous descending auction addresses this limitation, at the cost of an empirically higher runtime (Service et al., 2014).

Overall, auction-based approaches are more applicable to collectives than exact and approximation algorithms, as they do not make assumptions about coalition size or require communication with a central computer. However, the requirement that all robots communicate with the task auctioneers (or task agents with service agents) may be difficult for military and disaster response networks (Vig & Adams, 2006a; Service et al., 2014). The communication required is substantial and can increase at least linearly with the collective size, potentially exceeding deployed network’s limited bandwidth. Additionally, algorithms were generally not evaluated with large collectives, so it is necessary to assess scalability.

3.3.3 Biologically-inspired algorithms

Early scalable robot coalition formation leveraged biologically-inspired optimization mechanisms. The simulated Annealing inspired ANT colony optimization (sA-ANT) algorithm, which leverages ant colony optimization and simulated annealing, allocates only one task at a time (Sen & Adams, 2013b). Double-layered ant colony optimization addresses this limitation (Yeh & Sugawara, 2016). Additionally, particle swarm optimization and the Pareto Archived Evolution Strategy-based algorithm addressed multi-objective coalition formation (Agarwal et al., 2014; Mouradian et al., 2017).

Most algorithms were evaluated in simulation at or near the scale of collectives (Agarwal et al., 2014; Mouradian et al., 2017; Sen & Adams, 2013b); however, the algorithms are centralized and either allocate a single task at a time (Mouradian et al., 2017; Sen & Adams, 2013b) or their experimental run times indicate requiring over 15 min for even small collectives (Agarwal et al., 2014; Yeh & Sugawara, 2016).

Haque et al. proposed a decentralized algorithm based on alliance formation between male bottlenose dolphins (Haque et al., 2013); however, this algorithm restricts coalition sizes, which is not well suited to collectives.

3.4 Summary

The algorithm types most likely to be suitable for collectives, given the coalition formation optimization problem, are hedonic games and auctions. Hedonic games produce solutions quickly with multiple robot systems and can be distributed; however, distributed hedonic games have not been evaluated with large heterogeneous collectives (e.g., 1000 robots), and communication requirements have generally not been evaluated. Auctions are promising, given that some existing algorithms (e.g., Zhang & Parker, 2012; Xie et al., 2018; Vig & Adams, 2006a) are decentralized and consider heterogeneous systems; however, auctions also have not been evaluated at the scale of collectives and may require excessive communication.

4 Algorithms

Hedonic game and auction coalition formation algorithms were evaluated for the viability of their use with collectives. Homogeneous GRAPE, a distributed hedonic game-based algorithm, is evaluated, as there is no services model variant (Jang et al., 2018). The distributed auction-based algorithms are RACHNA (Vig & Adams, 2007, 2006a) and simultaneous descending auction (Service et al., 2014), which incorporate common auction protocols.

4.1 GRAPE

GRAPE incorporates an anonymous hedonic game, meaning that each robot joins the most individually profitable coalition, as determined based on coalition size, not the coalition members’ identities (Jang et al., 2018). During each iteration of GRAPE, robots select their highest valued coalition, broadcast their beliefs, about all robots’ current task assignments, and update their belief states based on messages from neighboring robots in the network topology. A robot’s message is given precedence if the robot’s belief state has been updated more times, or the same number of times and more recently, than the receiving robot’s. This precedence system serves as a distributed mutex that allows only a single robot to alter the valid coalition assignments during each iteration. The algorithm completes when a Nash stable partition is derived.

GRAPE requires \(O(m)\) computation and \(O(n)\) communication per iteration on a single robot, where \(m\) is the number of tasks, and \(n\) is the collective size (Jang et al., 2018). A Nash stable partition can be found in \(O(n^2)\) iterations, given a fully connected network topology, resulting in \(O(n^2m)\) computational and \(O(n^3)\) communication complexities (Jang et al., 2018). Any connected network topology with a diameter \(d_G\) has a \(O(n^2md_G)\) computational complexity with a \(O(n^3d_G)\) communication complexity (Jang et al., 2018).

These complexities require that each robot’s reward for any given task decreases as coalition size increases (Jang et al., 2018). This manuscript uses a peaked reward, or a system reward that is highest when a task is assigned a coalition with exactly the desired number of robots, divided evenly among coalition members (Jang et al., 2018). An individual robot’s reward for task \(t_j\) with utility \(u_j\) that requires \(n_j\) robots and is assigned to coalition \(C_j\) is:

If multiple service types are available, this function cannot determine which robots will perform each service type; thus, GRAPE is considered only for homogeneous collectives with one service type.

4.2 RACHNA

RACHNA incorporates a combinatorial ascending auction, meaning that buyers bid on bundles of goods, and bids increase as the auction progresses (Vig & Adams, 2006a). The sellers are service agents (i.e., one agent per service type), and the buyers are task agents. Service agents sell a service type and track robots’ current salaries (i.e., rewards for coalition membership) (Vig & Adams, 2006a). Task agents bid on service bundles, which they are awarded if the bid is at least the robots’ salaries, plus \(|C_j |\times \epsilon _{inc}\), where \(C_j\) is the task’s coalition and \(\epsilon _{inc}\) is a fixed wage increase. A tasks’ maximum bid is its utility.

At most \(n(u_{max}/\epsilon _{inc})\) bidding rounds occur, where \(u_{max}\) is the highest task utility, with \(O(m)\) bids per round. Task agents require \(O(n)\) computation to determine their bids, and the \(\vert S |\) service agents require \(O(n\log n)\) computation to process bids, resulting in \(O(mn^2\log n (u_{max}/\epsilon _{inc}))\) total computation. A service agent communicates with at most all task agents and robots per round (Vig & Adams, 2006a). Messages exchanged with task agents are size \(O(n)\), and messages exchanged with the robots have size \(O(1)\); thus, the communication complexity is \(O(nm|S |)\) per round and \(O(n^2\,m|S |(u_{max}/\epsilon _{inc}))\) in total.

Prior evaluation of RACHNA treated \(\epsilon _{inc}\) as a fixed parameter controlling the degree of competition (Vig & Adams, 2007, 2006a; Sen & Adams, 2013a). However, tasks for which \(|C_j |\times \epsilon _{inc} > u_j\) cannot be assigned coalitions, even when sufficient robots exist. This limitation is incompatible with collectives’ potentially large coalition sizes; thus, RACHNA must incorporate collective size into its \(\epsilon _{inc}i\) threshold dynamically. \({RACHNA}_{dt}\) denotes a new implementation of RACHNA where \(\epsilon _{inc} = 1 / n\). RACHNAdt’s computational complexity is \(O(mn^3\log n (u_{max}))\), and the communication complexity is \(O(n^3m|S |(u_{max}))\).

4.3 Simultaneous descending auction

Simultaneous descending auction, like RACHNA, incorporates a combinatorial auction with service and task agents. Each robots’ salary is set initially to the maximum task utility, plus \(\epsilon _{dec}\), where \(\epsilon _{dec}\) is a fixed wage decrement. Robot’s salaries are decremented by \(\epsilon _{dec}\) at the beginning of each bidding round, and task agents that still require additional services bid on service bundles. If the task’s utility is higher than the total salaries of all robots in the bundle, the task agent is able to afford a service bundle. The auction stops when all robots have been purchased or all salaries are zero.

Two implementations are considered. The first, simultaneous descending auction with a small coalition optimization (denoted SDASCO), has task agents determine their bids by enumerating all coalitions that meet their service requirements. The computational complexity is \(O(mn^k)\) per iteration, where \(k\) is the maximum coalition size (Service et al., 2014). There are at most \(u_{max}/\epsilon _{dec}\) iterations, resulting an an overall computational complexity of \(O(mn^ku_{max}/\epsilon _{dec})\). This implementation is faster than the alternative with small coalitions, \(k \le 3\), and was evaluated in prior work (Service et al., 2014).

The second implementation, denoted SDAM, determines task agents’ bids using weighted bipartite matching (Service et al., 2014). The computational complexity is \(O(mn^4u_{max}/\epsilon _{dec}\)), regardless of coalition size (Service et al., 2014). This complexity is identical to SASCO’s when \(k=4\) and faster when \(k>4\) (Service et al., 2014). Both implementations have communication complexities of \(O((m+n)|S |u_{max}/\epsilon _{dec})\), as service agents communicate with at most all robots and task agents during each iteration. All considered algorithms’ reward/cost functions are summarized in Table 2.

5 Experimental design

A simulation-based experiment assessed each algorithm’s viability, where a viable algorithm produces near-optimal solutions in near real-time for a range of collective coalition formation problems, as required for highly dynamic domains. The target thresholds are < 5 min runtimes and > 95% utility solutions. Relaxed thresholds of < 10 min runtimes and > 90% utility solutions are also considered to account for the fact viability is application-dependent, and some deployments may have more lenient requirements.

Recall that communication resources in the target domains are often very limited and easily overwhelmed (Klinsompus & Nupairoj, 2015). For example, cellular communication was down for several days during the 2023 Maui wildfires, leaving responders with only minimal, deployable communication networks (Kelly, 2023). There is no standard threshold for acceptable communication limits, as limits can depend on a number of factors (e.g., routing protocols, communication medium, network topology, developments in networking technology, the quantity of communication required for task performance); however, the objective in all instances is to use minimal communication, preserving resources for the transmission of data relevant to task performance. This manuscript assesses communication by comparing the algorithms. The total communication target is < 500 MB, corresponding to the lowest maximum communication empirically required for the majority of the considered coalition formation problems. An algorithm that satisfies this target requires minimal communication in that it requires no more communication than the overall lowest-communication algorithm. A relaxed < 600 MB total communication target was also considered.

The experiment considered achievable missions, meaning that the collectives’ robots offered sufficient services to perform all tasks simultaneously. Real-world collective deployments will ideally incorporate achievable missions but are unlikely to involve substantially more than the minimum required robots, due to the expense and logistical challenges of deploying large collectives. The experiment’s collectives possessed exactly the minimum number of robots required (i.e., coalition formation solutions must utilize all robots).

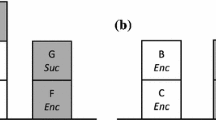

The independent variables were the algorithm, collective size, number of tasks, and collective composition (see Table 3). The algorithms considered were GRAPE, RACHNA (\(\epsilon _{inc}=1\)), RACHNAdt, SDASCO (\(\epsilon _{dec}=1\)), and SDAM (\(\epsilon _{dec}=1\)). The collective size varied from multiple robot systems (i.e., 25–50 robots) to collectives (i.e., 100–1000 robots). The numbers of tasks were 1%, 10%, and 50% the number of robots, corresponding to average coalition sizes 100, 10, and 2, respectively. 1% tasks corresponds to large-scale tasks (e.g., establishing network coverage or searching for survivors), while 10% tasks corresponds to medium-scale tasks (e.g., assessing a damaged building or creating a cordon). Examples of small-scale 50% tasks include guarding a building entrance or providing a medical evacuation. 1% tasks (i.e., coalition size 100) was used only for collectives with > 100 robots, as a mission is only achievable if the number of robots per task is smaller than the collective size. Collective composition encompassed the number of service types and services per robot, where more capable robots had more services, and the number of service types and services per robot combinations determined the level of heterogeneity. A collective was homogeneous if the numbers of service types and services per robot were equal. Otherwise, the collective was heterogeneous.

GRAPE was considered only for homogeneous collectives with one service type, as GRAPE does not incorporate a services model. Additionally, SDASCO was considered only for problems with multiple robot systems (i.e., 25 or 50 robots), due to the algorithm’s high computational complexity. The other algorithms were considered for all independent variable value

The experiment used a centralized C++ simulator on a HP Z640 Workstation (Intel Xeon processor, 62 GB RAM) (Sen & Adams, 2013a). The simulator performed each algorithm iteration for each robot sequentially and assumed a fully connected communication topology. The robots’ embodiment was partially represented by the services model; however, the robots’ positions were not considered, as incorporating positions in the utility function, when applicable, is highly dependent on the application environment and robot hardware. Twenty-five problem instances were randomly generated per independent variable combination, where a problem instance comprised sets of robots and tasks, each with associated services. Robots’ and tasks’ services were selected randomly, and each task was assigned a random integer utility in the range [1, 50]. Each trial, or problem, was allocated twelve hours to produce a solution. This time limit is too long for high tempo dynamic domains (e.g., disaster response) or even short term pre-mission planning (e.g., 2–4 h breaks between mission deployments (Defense Advanced Research Projects Agency, 2019)). All algorithms can produce partial solutions, but this capability was not evaluated.

The dependent variable success rate represents the ratio of problems for which algorithms provided non-zero utility solutions within the time limit. A trial was unsuccessful if the computer’s memory limit was exceeded, the algorithm’s runtime exceeded the 12-hour time limit, or the algorithm was otherwise unable to assign any robots to tasks. Only successful trials were considered when analyzing the other dependent variables, as unsuccessful trials can cause less suitable algorithms to appear to perform well under certain metrics. For example, an algorithm that quickly exceeds the memory limit, resulting in no viable solution, will have a very low runtime, but is less suitable than an algorithm that produces a solution given a longer runtime.

The other dependent variables are runtime, total communication, and percent utility. Runtime is the time in minutes (min) and seconds (s) required for an algorithm to produce a solution. Total communication is the sum of all message sizes in megabytes (MB). Percent utility measures the solution quality of successful trials (i.e., solution utility/optimal utility). Overall, higher success rates and percent utilities with lower runtimes and total communication are preferred. It was hypothesized that none of the algorithms will perform well for all metrics across the range of collective sizes and compositions.

6 Results

Results are presented for homogeneous and heterogeneous collectives. Box plots were used, as the data was not normally distributed. Non-parametric statistical methods assessed significance across each independent variable. Mann–Whitney–Wilcoxon tests compared the numbers of service types and the services per robot, while Kruskal-Wallis analysis with Mann–Whitney–Wilcoxon post-hoc tests compared across the collective sizes and percent tasks. All analysis included only independent variable combinations for which an algorithm had successful trials across all independent variable values (e.g., percent task analysis considered only 500 and 1000 robot collectives). An algorithm was deemed viable if it had consistently low runtimes and communication, as well as high percent utilities, for all independent variable values.

6.1 Homogeneous collective results

A total of 600 trials with homogeneous collectives were run for each of RACHNA, RACHNAdt, and SDAM. Recall that GRAPE’s analysis considered only the 300 trials with one service type, and SDASCO was considered for only the 200 multiple robot trials.

6.1.1 GRAPE

GRAPE produced optimal solutions for all trials with one service type, for a 50% overall success rate. Recall that GRAPE was unable to perform the 300 trials with five service types, or half the trials.

All successful trials had low runtimes, well within the < 5 min target (Fig. 1). Runtimes did increase substantially from multiple robot systems (< 1 s) to collectives (< 3 min 53 s), as well as with percent tasks. The increases were significant with collective size \((H (n=50) = 238.94, p < 0.01)\) and percent tasks \((H (n=50) = 74.50\, p < 0.01)\), with significant post-hoc analyses (p < 0.01). The longest runtimes were for 1000 robot collectives with 50% tasks (i.e., the largest number of robots and tasks), consistent with GRAPE’s \(O(n^2m)\) computational complexity. Nevertheless, all of GRAPE’s runtimes were sufficiently fast for high-tempo applications.

GRAPE’s total communication increased only with collective size (Table 4). This increase was significant \((H (n=50) = 249.00, p<0.01)\), with significant post-hoc analyses (p < 0.01). Recall that GRAPE’s per iteration communication complexity is \(O(n)\). Percent tasks and problem instance did not impact the communication requirement, because all problems required n iterations (i.e., no robots deviated from their initially selected coalitions).

6.1.2 RACHNA and RACHNAdt

RACHNAdt solved all problems, while RACHNA produced solutions for only 82.3% of trials. All RACHNA trials with 1% tasks were unsuccessful, as well as 12% with 25 robots, 10% tasks, and either collective composition. All unsuccessful trials considered problems in which every tasks’ required coalition size exceeded its utility, consistent with RACHNA’s known limitation.

RACHNA’s and RACHNAdt’s runtimes differed by < 1 min (see Fig. 2). Both algorithms had low runtimes with multiple robot systems (< 1 s), which increased substantially for collectives (< 125 min 48 s). Runtimes also increased with percent tasks, consistent with the \(O(mn^3\log n)\) per iteration computational complexity. Additionally, runtimes increased with the number of service types, which corresponds to an increased number of service agents performing computation. The longest runtimes were for 1000 robots, 50% tasks, and five service types. These runtimes were much too long for high-temp domains.

RACHNA’s runtime increase with respect to collective size was significant \((H (n_{25} = 44, n = 50) = 458.75, p < 0.01)\), with significant post-hoc tests (p < 0.01). Note that the lower number of samples for a collective size of 25 is due to unsuccessful trials. RACHNA’s runtime also increased significantly when the percent tasks increased from 10% to 50% (p < 0.01) and the service types increased from one to five \((p=0.01)\).

RACHNAdt produced similar results. RACHNAdt’s runtimes increased significantly with collective size \((H (n = 50) = 467.32, p<0.01))\) and percent tasks \((H (n=50) = 138.08, p<0.01)\). Post-hoc tests found that all differences were significant (p < 0.01). A significant difference also existed for increased numbers of service types (p < 0.01).

RACHNA’s and RACHNAdt’s communication results also had similar trends (Fig. 3), although RACHNAdt required up to an additional 600 MB. Both algorithms’ communication increased substantially from multiple robot systems (≤70.81 MB) to collectives (≤27.25 GB), as well as with percent tasks and service types. 1,000 robot collectives with 50% tasks and five service types required the most communication, consistent with the algorithms \(O(nm|S |)\) per iteration communication complexity.

RACHNA’s communication increase across collective sizes was significant \((H (n_{25} = 44, n = 50) = 384.03, p<0.01)\), with significant post-hoc analyses (p < 0.01). RACHNA’s communication also increased significantly from 10% to 50% tasks (p < 0.01) and one to five service types (p < 0.01). Note that a Mann–Whitney–Wilcoxon test was used to assess significance with respect to percent tasks, as RACHNA had successful trials for only two values.

Similarly, RACHNAdt’s communication increased significantly with collective size \((H (n=50) = 390.97, p<0.01))\) and percent tasks \((H (n=50) = 234.59, p<0.01)\). Post-hoc tests identified significant differences between the collective sizes (p < 0.01) and percent tasks (p < 0.01). A significant increase from one to five service types was also identified (p < 0.01).

RACHNA produced near-optimal solutions for most problems (Table 5), but high variability resulted in low worst case utilities. Unassigned tasks were generally those requiring more robots than supported by the task utility, given RACHNA’s fixed threshold limitation. Such tasks occur more often with lower percent tasks (i.e., larger coalitions) and more substantially impact mission performance with fewer robots (i.e., fewer tasks for a given value of % tasks). Thus, multiple robot systems with 10% tasks (i.e., the lowest percent utility with successful trials) had the most variable percent utilities.

Significant differences existed across the collective sizes \((H (n_{25}=44, n=50) = 53.16, p<0.01)\), specifically, between 25 and 100–1000 robots (p < 0.01), 50 and 100–1000 robots (p = 0.02 for collective size 100, p < 0.01, otherwise), and 100 and 1000 robots (p < 0.01). No significant difference was found between 25 and 50 robots or 100 and 500 robots. 10% and 50% tasks also differed significantly (p < 0.01), while one and five service types did not. RACHNAdt outperformed RACHNA, producing optimal solutions in all trials.

6.1.3 Simultaneous Descending Auction

The results for the SDAM and SDASCO simultaneous descending auction implementations are presented separately, due to vastly different performance. SDAM successfully produced optimal solutions in all trials.

SDAM’s runtimes were within the < 5 min target for most trials, but increased substantially for collectives with five service types (Fig. 4). Multiple robot trials had < 1 s runtimes, which increased for collectives with one service type to ≤ 6 min 29 s. Collectives with five service types had runtimes ≤ 26 min 24 s. The increase with the number of service types can be attributed to the fact that task agents must consider each robot for more roles when determining their bids. Runtimes also decreased with the percent tasks, despite SDAM’s \(O(mn^4)\) per iteration computational complexity. This trend can be explained by the fact that higher percent tasks correspond to smaller coalitions. The smaller coalitions are cheaper for the task agents, thus enabling tasks to bid in fewer iterations. The longest runtimes occurred with 1000 robots, five service types and 50% tasks.

The runtime increases across collective sizes \((H (n=50) = 465.37, p<0.01)\) and tasks \((H (n=50) = 10.61, p<0.01)\) were significant, as were the collective size post-hoc analyses (p < 0.01); however, only differences between 10% and 50% tasks (p < 0.01) and 1% and 50% tasks (p = 0.01) were significant. No difference between 1% and 10% tasks was found. A significant increase from one to five service types was also identified (p < 0.01).

SDAM’s total communication was low with multiple robot systems (< 1.2 MB), but increased substantially for robotic collectives (see Fig. 5). Collectives with one service type used up to 86.29 MB total, while collectives with five service types used up to 428.03 MB. The total communication also increased with service types and percent tasks, consistent with the \(O((m+n)|S |)\) per iteration communication complexity. 1000 robot collectives with five service types and 50% tasks required the most communication.

Each of these trends was significant. Communication increased significantly across collective sizes (H (\((H (n=50) = 435.46, p<0.01)\) and tasks (H (\((H (n=50) = 35.17, p<0.01)\), with significant post-hoc analyses (p < 0.01). A significant increase from one to five service types was also found (p < 0.01).

SDAM’s performance was generally better than SDASCO’s across all metrics. Recall that the SDASCO evaluation considered only the 200 problem instances with multiple robot systems (33.3% of all trials), due to SDASCO’s exponential computation requirement. SDASCO’s success rate with multiple robot systems was only 62.5%, for an overall success rate of 20.8%.

The unsuccessful trials occurred when SDASCO exceeded either the computer’s memory limit or the 12-hour runtime limit. The memory limit was exceeded for the 25 trials with 50 robots, 10% tasks, and one service type. The runtime limit was exceeded for the 50 trials with five service types and 10% tasks, regardless of the number of robots. SDASCO produced optimal solutions for the remaining 125 trials.

SDASCO’s runtimes increased with the number of robots, service types, and decreased percent tasks, consistent with SDASCO’s \(O(mn^k)\) per iteration computational complexity (Table 6). Note that decreased service types decreases m, but also increases k. Each of the differences was statistically significant (p < 0.01). Runtimes were also more variable.

The variation in runtimes was due to SDASCO’s sensitivity to differences in problem difficulty. SDASCO’s computational complexity is exponential in coalition size, and the longest runtimes for each independent variable combination were for the trials with the highest maximum coalition size. Variation in coalition size resulted in a worst case runtime (i.e., > 6 hours), much too long for high tempo applications or short term pre-mission planning.

The communication increased with the number of robots, tasks, and service types (Table 7), consistent with SDASCO’s \(O((m+n)|S |)\) communication complexity. Each of these increases was significant (p < 0.01).

6.2 Homogeneous Collective Discussion

The homogeneous collective experiment assessed whether GRAPE, RAC-HNA, RACHNAdt, SDAM, and SDASCO are suitable for very large homogeneous collectives in highly dynamic domains. The hypothesis that no algorithm fully satisfied the 100% success rate, < 5 min runtime, < 500 MB total communication, and > 95% utility criteria was supported. Algorithms also failed to meet the relaxed criteria (i.e., < 10 min runtime, < 600 MB communication, > 90% utility), denoted as the reasonably close criteria in Tables 8, 9 and 10.

SDASCO satisfied the evaluation criteria for the fewest independent variable combinations (i.e., three out of twenty-four), as shown in Table 8. All criteria were satisfied for single-service systems with 10% tasks and 25 robots, as well as 50% tasks and 25–50 robots. Relaxing the target runtime to < 10 min enables SDASCO to satisfy all criteria for one additional independent variable combination (i.e., five services, 50% tasks, 25 robots). This relaxation is relatively reasonable for near real-time coalition formation; however, there are no other independent variable combinations for which SDASCO can satisfy all criteria, short of ignoring both the runtime and communication requirements entirely, which cannot be done in near real-time domains.

means that the algorithm met the criterion for all trials with the independent variable combination, while a

means that the algorithm met the criterion for all trials with the independent variable combination, while a  means that all trials were reasonably close to meeting the criterion. An

means that all trials were reasonably close to meeting the criterion. An  means that the criterion was not met, or trials were not attempted due to known algorithm limitations. A - means that the independent variable combination is invalid

means that the criterion was not met, or trials were not attempted due to known algorithm limitations. A - means that the independent variable combination is invalidSDASCO’s evaluation considered only multiple robot systems (i.e., ≤50 robots) due to a high computational complexity; however, it is clear from SDASCO’s poor performance with 25 and 50 robots that the algorithm will not scale to collectives. SDASCO’s major limitation is the excessive memory and computation required to enumerate the possible coalitions for each task. The evaluation criteria were satisfied only when there were few possible coalitions (i.e., 25 robots, 50% tasks, 1 service type), while performance degraded substantially with higher numbers of possible coalitions (i.e., increased service types, increased numbers of robots, decreased percent tasks). Homogeneous collectives are expected to possess large numbers of robots, as well as be assigned tasks that require large coalitions (e.g., assessing damage to a city block), which will result in even worse performance than occurred with multiple robot systems. Thus, SDASCO is not a viable approach for near real-time coalition formation with homogeneous collectives.

The next least viable algorithm was RACHNA (Table 8). RACHNA fully satisfied the evaluation criteria for only six independent variable combinations (i.e., one or five services with 50% tasks and 25–100 robots). Relaxing the runtime requirement to < 10 min and the utility requirement to > 90% enables RACHNA to satisfy all requirements for three additional independent variable combinations (i.e., one service with 10% tasks and 500 robots, five services with 10% tasks and 100 robots, five services with 50% tasks and 500 robots). However, RACHNA cannot satisfy all evaluation criteria for the remaining independent variable combinations due to low percent utilities, as well as high worst-case runtimes and communication.

One of RACHNA’s limitations was its high runtimes and communication requirements with 500–1000 robots, which well exceeded the evaluation criteria. The other major limitation was RACHNA’s inability to assign coalitions to tasks with lower utilities than required coalition sizes. This limitation prevented RACHNA from solving any coalition formation problems with 1% tasks (i.e., 100 robot coalitions), as well as satisfying the utility criterion with 10% tasks (i.e., 10 robot coalitions). The limitation was masked somewhat for 10% tasks with increased collective size, due to the increased number of tasks. However, the percent utilities and success rates were lower than RACHNAdt’s, which, paired with comparable communication and runtimes, means that RACHNAdt is generally preferable to RACHNA.

means that the algorithm met the criterion for all trials with the independent variable combination, while a

means that the algorithm met the criterion for all trials with the independent variable combination, while a  means that all trials were reasonably close to meeting the criterion. An

means that all trials were reasonably close to meeting the criterion. An  means that the criterion was not met, or trials were not attempted due to known algorithm limitations. A - means that the independent variable combination is invalid

means that the criterion was not met, or trials were not attempted due to known algorithm limitations. A - means that the independent variable combination is invalidRACHNAdt satisfied all evaluation criteria for sixteen independent variable combinations, including all combinations with multiple robot systems, as well as 100 robots (see Table 9). All criteria were also satisfied for single-service collectives with 500 robots and 1–10% tasks, as well as 1000 robots and 1% tasks, and five service collectives with 500 robots and 1% tasks. Relaxing the communication requirement to < 600 MB enables RACHNAdt to satisfy the criteria for one additional independent variable combination (i.e., five services, 1% tasks, 100 robots). RACHNAdt’s > 30 min runtimes and > 1 GB communication with all other independent variable combinations far exceeded the acceptable limits for near real-time domains.

RACHNAdt’s primary strength was that the success rate and utility criteria were always met, given sufficient time and communication. This performance makes RACHNAdt well-suited for applications in which pre-mission planning is possible (i.e., the tasks are known at least a few hours prior to deployment). However, RACHNAdt’s runtime and communication with 500–1000 robots are not suitable for near real-time domains, as the runtime and communication requirements are only met for some percent tasks (i.e., coalition sizes) and number of services. The number of services will generally be known prior to deployment, but the percent tasks will not, as tasks arise dynamically; thus, it generally cannot be guaranteed that RACHNAdt will be able to produce solutions within the runtime and communication requirements during any deployment with very large homogeneous collectives.

GRAPE fully satisfied the evaluation criteria for fewer independent variable combinations than RACHNAdt (i.e., the six combinations with one service and 25–100 robots), as shown in Table 9. GRAPE’s primary limitation was its inability to solve any coalition formation problems with multiple service types, due to its lack of a services model. However, GRAPE with only one service type satisfied the success rate, runtime, and percent utility requirements for all independent variable combinations. The number of service types will generally be known prior to deployment, so GRAPE, unlike RACHNAdt, is suitable for deployment in near real-time domains, given single service collectives and sufficient communication infrastructure. Deployed networks will require reducing GRAPE’s communication complexity, while multiple service collectives will require integration with a services model.

means that SDAM met the criterion for all trials with the independent variable combination, while a

means that SDAM met the criterion for all trials with the independent variable combination, while a  means that all trials were reasonably close to meeting the criterion. An

means that all trials were reasonably close to meeting the criterion. An  means that the criterion was not met. A - means that the independent variable combination is invalid

means that the criterion was not met. A - means that the independent variable combination is invalidSDAM best satisfied the evaluation criteria, meeting all criteria for nineteen independent variable combinations (see Table 10). The success rate, communication, and utility criteria were satisfied for all independent variable combinations. The runtime requirement was also met with 25–500 robots, and relaxing the requirement to < 10 min enables SDAM to satisfy the criteria with 1000 robots and a single service type. However, SDAM’s runtimes with five service types and 1000 robots were too long for near real-time domains.

Overall, SDAM satisfied the domain criteria reasonably well for single service collectives, and GRAPE produced solutions even more quickly, given sufficient communication infrastructure. However, no algorithm fully satisfied the performance criteria with five service types and 1000 robots.

6.3 Heterogeneous Collective Results

A total of 900 heterogeneous collective trials were run for each of RACH-NA, RACHNAdt, and SDAM. SDASCO was run for only the 300 trials with multiple robot systems (i.e., 25–50 robots), due to its exponential computational complexity. Recall that GRAPE was not considered for heterogeneous collectives, as it does not incorporate the services model.

6.3.1 RACHNA and RACHNAdt

RACHNA produced solutions for only 82.9% of trials. The 150 trials with 1% tasks were unsuccessful, due to RACHNA’s known limitation. The other four unsuccessful trials occurred with 25 robots and 10% tasks. Specifically, there was one unsuccessful trial with five services and one service per robot, two trials with ten services and one service per robot, and one trial with ten services and five services per robot. RACHNAdt had a 100% success rate.

RACHNA and RACHNAdt’s runtimes differed by ≤6 min, as shown in Fig. 6. Both algorithms’ runtimes were low for multiple robot systems (< 1 s) and increased substantially for collectives with ten service types and five services per robot (≤27 min 5 s). The runtimes were lower with five service types and one service per robot (≤ 2 min 16 s), as well as ten service types and one service per robot (≤ 53 s). Runtimes also increased with percent tasks, consistent with the algorithms’ \(O(mn^2\log n)\) per iteration computational complexity. Additionally, the runtimes increased with services per robot and decreased service types, as the number of services per robot divided by the service types determines the number of robots that each service agent must consider. The longest runtimes occurred with 1000 robots, ten service types, five services per robot, and 50% tasks.

RACHNA’s runtime increase with respect to collective size was significant \((H (n_{25}=71, n=75) = 627.11, p<0.01)\), with significant post-hoc analyses (p < 0.01). RACHNA’s runtime also increased significantly with percent tasks (p < 0.01), decreased service types (p = 0.02), and services per robot (p < 0.01). Note that service type analysis compared five service types and one service per robot to ten service types and one service per robot. Similarly, services per robot analysis compared ten service types and one service per robot to ten service types and five services per robot.

RACHNAdt’s runtimes increased significantly across collective sizes \((H (n=75) = 637.45, p<0.01)\) and tasks \((H (n=150) = 150.44, p<0.01)\), with significant post-hoc analyses (p < 0.01). Significant differences between services (p < 0.01) and services per robot (p = 0.02) were also identified.

RACHNA’s and RACHNAdt’s communication had similar trends (see Fig. 7), although RACHNAdt required an additional < 500 MB. Communication increased substantially from multiple robot systems (≤78.62 MB) to collectives (≤27,601.14 MB or 27.6 GB), consistent with the \(O(n^3m|S |)\) per iteration communication complexity. Communication also increased with percent tasks and services per robot. 1000 robot collectives with ten services, five services per robot, and 50% tasks required the most communication.

These trends were significant. RACHNA’s communication increased significantly across collective sizes \((H (n_{25}=71, n=75) = 616.84, p<0.01)\), with significant post-hoc analyses (p < 0.01). RACHNA’s communication also grew significantly with percent tasks (p < 0.01) and services per robot (p < 0.01). No difference was identified between five and ten service types.

RACHNAdt produced similar results. Significant increases were identified across collective sizes \((H (n=75) = 626.14, p<0.01)\) and percent tasks \((H (n=150) = 356.24, p<0.01)\), with significant post-hoc analyses (p < 0.01). Five and ten services per robot also differed significantly (p < 0.01). No difference was identified between five and ten service types.

RACHNA produced near-optimal solutions for most problems (Table 11), but high variability caused low worst-case utilities. Utilities were most variable with small collectives and 10% tasks, as with homogeneous collectives.

RACHNA’s utilities differed significantly across collective sizes \((H (n_{25}=71, n=75) = 66.49, p<0.01)\). Specifically, post-hoc tests found significant differences between 25 and 50–1000 robots (p < 0.01), 50 and 500–1000 robots (p < 0.01), and 100 and 1000 robots (p = 0.03). No significant differences between other collective sizes were identified. 10% and 50% tasks differed significantly (p < 0.01), while no significant differences were found between five and ten services, or one and five services per robot. RACHNAdt outperformed RACHNA, producing optimal solutions for all trials.

6.3.2 Simultaneous Descending Auction

SDAM’s and SDASCO’s results are presented separately, as they differ substantially. SDAM had a 100% success rate.

SDAM’a runtimes (Fig. 8) were low for multiple robot systems (< 1 s), but increased substantially for collectives (≤29 min 29 s), consistent with its \(O(mn^4)\) per iteration computational complexity. Additionally, runtimes increased with services per robot, as robots were considered for more roles. 1% and 10% tasks performed similarly, but had higher runtimes than 50% tasks. The decrease in runtime can be attributed to smaller coalitions (i.e., higher percent tasks) being cheaper, thus requiring fewer bidding iterations. There was no detectable difference between five and ten service types.

The increases across collective sizes \((H (n=75) = 694.31, p<0.01)\) and percent tasks \((H (n=150) = 24.23, p<0.01)\) were significant. Post-hoc tests revealed significant differences between all collective sizes (p < 0.01), as well as 1–10% and 50% tasks (p < 0.01); however, 1% and 10% tasks did not differ significantly. A significant increase between one and five services per robot was also identified (p < 0.01). No difference between five and ten service types was found. The longest runtimes occurred with 1000 robots, ten service types, and five services per robot.

SDAM’s total required communication (Fig. 9) was < 2 MB with multiple robot systems and increased to as much as 661.39 MB with collectives. The total communication also increased with percent tasks, service types, and service types per robot, consistent with SDAM’s \(O((m+n)|S |)\) per iteration communication complexity. 1000 robot collectives with ten service types, five services per robot, and 50% tasks required the most communication.

Significant differences were identified across collective sizes \((H (n=75) = 693.91, p<0.01)\) and percent tasks \((H (n=150) = 153.99, p<0.01)\), with significant post-hoc analyses (p < 0.01). The increases with service types (p = 0.04) and services per robot (p < 0.01) were also significant.

SDAM derived optimal solutions with one service per robot, as well as five services per robot and 1% or 10% tasks. The percent utilities with five services per robot and 50% tasks (Table 12) were lower, but still near optimal.

The difference in utility with one and five service types per robot was significant (p < 0.01), as was the difference across collective sizes \((H (n=75) = 12.39, p=0.01)\). Post-hoc tests identified significant differences between collective sizes 25 and 500–1000 (p < 0.01), 50 and 500–1000 (p = 0.02 and p = 0.03, respectively), and 100 and 500–1000 (p = 0.03 and p = 0.04, respectively). No significant difference was found between other collective sizes, five and ten service types, or across percent tasks.

SDASCO performed worse than SAM, completing only 64.7% (i.e., 194) of the 300 multiple robot system trials. Specifically, SDASCO failed to solve any of the 75 trials with 50 robots and 10% tasks, due to exceeding the runtime limit. Additionally, SDASCO with ten service types and five services per robot exceeded the time limit for all 25 trials with 25 robots and a 10% tasks, as well as 6 of the 25 trials with 50 robots and a 50% tasks. The remaining multiple robot trials were completed successfully. SDASCO’s evaluation did not include the 600 trials with collectives, for an overall 32.3% success rate.

SDASCO’s runtimes increased with collective size, services per robot, and decreased percent tasks (Table 13). Significant differences were identified between 25 and 50 robot collectives (p < 0.01), 10% and 50% tasks (p < 0.01), and one and five services per robot (p < 0.01) No significant difference existed between five and ten service types. These trends resulted in a maximum runtime (i.e., 1 h 33 min) that is much too long for the near real-time allocation necessary to support high tempo applications, but short enough to support short term pre-mission planning for multiple robot systems.

The communication requirements increased significantly with collective size (p < 0.01), as shown in Table 14. As well, the amount of needed communication was significantly higher based on the percent tasks (p < 0.01), services types (p < 0.01), and services per robot (p = 0.04).

SDASCO derived optimal solutions for all successful trials with five services and one service per robot, as well as all ten services and one service per robot. The median percent utilities with ten services, five services per robot, and 50% tasks are 99.28 (minimum = 96.0, maximum = 100.0) with 25 robots, and 99.82 (minimum = 99.27, maximum = 100.0) with 50 robots. These utilities are lower than for other compositions, but still near optimal.

6.4 Heterogeneous collective discussion

The heterogeneous collective experiments assessed the algorithms’ ability to produce near-optimal solutions (i.e., > 95% utility) for very large collectives in near real-time (i.e., < 5 min) and using minimal communication (i.e., < 500 MB), where relaxed thresholds (i.e., < 10 min, < 600 MB, > 90% utility) were considered reasonably close to satisfying the criteria. The hypothesis that none of GRAPE, RACHNA, RACHNAdt, SDAM, and SDASCO fully satisfied all criteria was supported, even with the relaxed thresholds.

means that the algorithm met the criterion for all trials, while a

means that the algorithm met the criterion for all trials, while a  means that all trials were reasonably close to meeting the criterion. An

means that all trials were reasonably close to meeting the criterion. An  means that the criterion was not met, or trials were not attempted due to known algorithm limitations. A - means that the independent variable combination is invalid

means that the criterion was not met, or trials were not attempted due to known algorithm limitations. A - means that the independent variable combination is invalidGRAPE was unable to address coalition formation for heterogeneous collectives, as it lacks a services model. SDASCO had the next worst performance, meeting all criteria for only five of the thirty-six independent variable combinations (see Table 15). Specifically, all criteria were satisfied for three independent variable combinations with five service types and one service per robot (i.e., 10% tasks and 25 robots, 50% tasks and 25–50 robots), two with ten service types and one service per robot (i.e., 10%-50% tasks and 25 robots), and none with ten service types and one five services per robot. Relaxing the criteria to < 10 min, < 600 MB, and < 90% utility does not enable SDASCO to satisfy the criteria for any additional variable combinations.

SDASCO’s poor performance with multiple robot systems (i.e., 25–50 robots) demonstrated that the algorithm will not scale to collectives. SDASCO’s worst case runtimes are far too long for near real-time domains and are expected to increase significantly with collectives. Additionally, SDASCO’s \(O(nm^ku_{max}/\epsilon _{dec})\) computational complexity made SDASCO very sensitive to variations in coalition size k, resulting large variations in runtimes. High and variable runtimes, coupled with high communication complexities, prevent SDASCO from scaling to collectives.

RACHNA satisfied all criteria for nine independent variable combinations (i.e., 50% tasks and 25–100 robots, regardless of the collective composition). Relaxing the percent utility criteria to > 90% enables RACHNA to meet all criteria for two additional independent variable combination (i.e., 10% tasks, 500 robots, five or ten service types, and one service per robot). Relaxing the runtime and communication requirements to < 10 min and < 600 MB respectively, does not increase the number of independent variable combinations for which RACHNA satisfies all criteria. RACHNA’s primary limitations were the same as with homogeneous collectives, namely high runtimes and communication with 500–1000 robots, as well as an inability to assign coalitions to tasks requiring more robots than their utilities support.

means that the algorithm met the criterion for all trials, while a