Abstract

In this study, an overview is presented of the computational tools developed in the area of metal-based additive manufacturing (AM) to simulate performance metrics, along with their experimental validation. The performance metrics of AM-fabricated parts, such as inter- and intra-layer strengths, can be characterized in terms of melt pool dimensions, solidification times, cooling rates, granular microstructure, phase morphologies, and defect distributions. These are functions of the energy source, the scan pattern(s), and the material(s). The four major areas of AM simulation included in this study are thermo-mechanical constitutive relationships during fabrication and in service; the use of Euler angles for gauging static and dynamic strengths; algorithms that make intelligent use of matrix algebra and homogenization to extract the spatiotemporal nature of these processes, together with a fast GPU architecture; and specific challenges in attaining faster-than-real-time simulation efficiency with adequate accuracy.


1 Introduction

The numerical modeling of additive manufacturing (AM) processes, and the use of such models for process and performance characterization, is gaining interest in the user community because these tools could be used for process, material, geometry, and performance optimization. To support their use, this work discusses the fundamentals and a few functionalities of these numerical tools. First, the concept of a ‘finite’ volume enclosed by lower-dimensional surface, line, and point boundaries needs to be understood.[1–3] In traditional metallurgy, representative microstructural observations are generally made at local length scales using multi-scale optical, scanning electron, and transmission electron microscopy. These are followed by mechanical tests, such as tensile and high-cycle fatigue tests, to understand how the local microstructures are phenomenologically tied to their mechanical properties. In a traditional tensile test, for example, the dog-bone geometry[4] introduces a macroscopically uniform state of deformation, so it is easy to connect the local microstructure with its macroscopic property analogs, provided that the microstructure is more or less uniformly distributed in the ‘finite’ volume.[5–11]

In metal melting-based additive manufacturing technologies, the energy sources are very small (~100 µm),[12,13] which leads to microstructural variations in the fabricated structures but also provides the freedom to create complex geometries with high precision.[13] This resolution and geometric complexity, however, make it difficult to extend a phenomenological understanding based on local microstructure to overall part performance. To circumvent these issues, performance metrics should be simulated using distributed volume-based numerical techniques such as the finite element method, which inherently accounts for the volume enclosures. This compensation is built into the finite element methodology. The method first propagates forcing functions (point forces, pressures, and other boundary conditions or stimuli such as displacements and interfacial interactions) from the region of exposure to the enclosure boundaries. The effect of the boundaries is then taken into account, and the response is back-propagated throughout the volume. The forward and backward propagations occur seamlessly because the stiffness matrix is positive definite and can therefore be decomposed with the Cholesky algorithm into lower and upper triangular factors. Each factor is responsible for one kind of propagation (forward and backward substitution), and together they enforce force equilibrium and displacement compatibility throughout the fabricated structure.[14–16]
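The forward and backward propagation described above corresponds to the two triangular solves that follow a Cholesky factorization \( K = L L^{T} \). The following is a minimal sketch that assumes a hypothetical 1-D elastic bar of four unit-stiffness elements, fixed at one end and loaded at the tip. It is not an AM model and not the authors' solver.

```python
import numpy as np

def forward_substitution(L, b):
    """Solve L y = b for lower-triangular L (propagation from the loaded region outward)."""
    y = np.zeros_like(b, dtype=float)
    for i in range(len(b)):
        y[i] = (b[i] - L[i, :i] @ y[:i]) / L[i, i]
    return y

def back_substitution(U, y):
    """Solve U x = y for upper-triangular U (response propagated back through the volume)."""
    n = len(y)
    x = np.zeros_like(y, dtype=float)
    for i in range(n - 1, -1, -1):
        x[i] = (y[i] - U[i, i + 1:] @ x[i + 1:]) / U[i, i]
    return x

# Hypothetical bar: 4 elements of stiffness 1, node 0 fixed, so 4 free DOFs
n = 4
K = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
K[-1, -1] = 1.0                       # free end has only one attached element
f = np.array([0.0, 0.0, 0.0, 1.0])    # unit load at the tip

L = np.linalg.cholesky(K)             # K = L L^T (K is symmetric positive definite)
y = forward_substitution(L, f)
u = back_substitution(L.T, y)         # u is approximately [1, 2, 3, 4]
```

Each element carries the full tip load, so the displacement grows linearly along the bar. This gives an easy check on the two substitution passes.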

To perform meaningful analysis of the performance metrics of AM structures, a capable finite element formulation should include the following: efficient spatiotemporal homogenization methodologies that capture the multi-scale microstructure; an architecture for applying point-, line-, and area-based boundary conditions; an effective and efficient mesh generation strategy that maps the size and shape of the AM structure along with spatial anomalies such as precipitates; and appropriate surface integrals that account for the nature and intensity of the boundary conditions.[17]

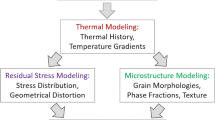

The objectives of process modeling in AM differ slightly from those of part performance modeling, but a ‘finite’ volume processing domain with a finite element strategy can be used here as well. The major objective is to correlate the energy source, raw material characteristics, and geometry with the thermal history and cooling rates. These in turn lead to predictions of melt pool dimensions, defects, residual stresses, and distortions. Simulation objectives can be set so that optimized process parameters, such as power, part orientation, and speed, are obtained for cost functions such as minimal residual stress or minimal defects for a given geometry. Together, process and performance modeling of AM structures could reduce the number of fabrication, metallurgical characterization, and ‘standardized shape’ mechanical testing experiments typically used to qualify AM for a particular application. Moreover, experimentally quantifying the effects of small, accurate, controlled, and fast-moving energy sources across a wide range of geometric variations requires a huge amount of effort.[17] These correlations cannot be captured using traditional approaches. Simulation is therefore required to gain the understanding needed to design better processes and optimize existing ones, enabling the ‘next industrial revolution’ sooner rather than later.

2 Methodology

The methodology for simulation of process and performance metrics includes three steps, namely the preprocessing, solution, and postprocessing steps.

2.1 Preprocessing Stage

For process simulations, the first step of the preprocessing stage is to represent the scan patterns computationally. However, such scan vector information is not readily available from most machine vendors, so an in-house mechanism for computationally representing scan patterns has been developed. A layer-by-layer video of a part being fabricated can be used to determine the start point, scan angles, scan directions, and order of the scan lines. Once the scan characteristics have been obtained, representative scan lines that closely match the machine vendor's scan strategy are fed to the simulation. Figure 1 shows the close match between a scan pattern predicted using the in-house computational tools and a real scan pattern extracted from an EOS M270 machine. In the future, the preferred method for obtaining accurate scan pattern information will be to read scan vectors directly from the machine build software. To develop and test new types of scan strategies, a flexible algorithm-based scan pattern generator is also available.
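The following is a minimal sketch of an algorithm-based scan pattern generator. It assumes a square patch hatched with a serpentine (bidirectional) raster that is rotated by a fixed angle from layer to layer. The function name `layer_scan_vectors`, the 67° default rotation, and the rotation about the patch centre are illustrative assumptions. They do not represent the in-house tool or the EOS strategy. For simplicity the whole hatched square is rotated, whereas a real generator would clip the rotated lines to the part contour.

```python
import math

def layer_scan_vectors(side, hatch, layer, rotation_deg=67.0):
    """Hypothetical serpentine hatch for a square patch centred at the origin.

    Returns a list of ((x0, y0), (x1, y1)) scan vectors in scan order; the whole
    pattern is rotated by layer * rotation_deg degrees (assumed strategy).
    """
    theta = math.radians(layer * rotation_deg % 360.0)
    c, s = math.cos(theta), math.sin(theta)
    half = side / 2.0
    n_lines = int(round(side / hatch)) + 1          # round() avoids float-division artifacts
    vectors = []
    for i in range(n_lines):
        y = -half + i * hatch                        # hatch line offset
        x0, x1 = (-half, half) if i % 2 == 0 else (half, -half)  # alternate scan direction
        start = (c * x0 - s * y, s * x0 + c * y)     # rotate about the patch centre
        end = (c * x1 - s * y, s * x1 + c * y)
        vectors.append((start, end))
    return vectors

# Hypothetical usage: first three layers of a 1 mm patch with 0.1 mm hatch spacing
for layer in range(3):
    start, end = layer_scan_vectors(side=1.0, hatch=0.1, layer=layer)[0]
    print(layer, start, end)
```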

The second important step of the preprocessing stage is material data collection. The important thermal variables to be measured include the following:

- the heat energy absorption coefficient (i.e., laser absorptivity)
- the density of the different states of matter (powder, bulk solid, liquid, and vapor) at different temperatures
- specific heat
- thermal conductivity

These variables, particularly for a powder bed, are typically unknown, and several experiments have been developed to determine them. In one experiment, the laser illuminates a square or circular patch using a set scan pattern and heats the powder to a temperature below its melting point. Monitoring that patch with an IR camera enables the spatiotemporal heat energy characteristics to be calculated and correlated with the absorptivity and thermal conductivity of the powder bed. To establish the density of the powder bed, a thin-walled cylindrical or prismatic container is built in the machine. The weight and volume of this geometry are measured accurately when it is filled with and emptied of powder, and the powder packing density is calculated accordingly. These experiments were developed in conjunction with one of our research partners, Mound Laser & Photonics Center in Dayton, Ohio.
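The packing-density calculation reduces to simple arithmetic. The following is a minimal sketch that assumes the internal (cavity) volume of the built container is known and that the bulk density of the fully dense alloy is taken from the literature. The function name `packing_density` and the readings below are hypothetical. The value 8.3 g/cm³ is used only as an illustrative CoCr-type bulk density.

```python
def packing_density(mass_filled_g, mass_empty_g, cavity_volume_cm3, bulk_density_g_cm3):
    """Powder packing density from a container weighed filled with and emptied of powder.

    Returns (apparent powder density in g/cm^3, fraction of the fully dense material).
    """
    powder_mass = mass_filled_g - mass_empty_g         # mass of powder trapped in the cavity
    apparent = powder_mass / cavity_volume_cm3         # apparent (bed) density
    return apparent, apparent / bulk_density_g_cm3     # relative packing density

# Hypothetical readings: 30.0 g filled, 10.0 g emptied, 4.0 cm^3 cavity, 8.3 g/cm^3 bulk alloy
apparent, relative = packing_density(30.0, 10.0, 4.0, 8.3)
```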

The specific heat as a function of temperature is generally obtained from the literature and/or using Thermocalc.[18] Other variables of interest are typically obtained from literature values.[19] These include the temperature- and phase-dependent coefficient of thermal expansion, elastic modulus, Poisson's ratio, yield strength, and strain rate sensitivity. They also include the solidus temperature, solid-state transition temperature(s) and phase(s) if present, latent heat of fusion, latent heat of vaporization, liquidus temperature, and vaporization temperature (including those of any low-elemental weight constituents).

Figures 2(a) through (c) show a few theoretical Scheil predictions of the solidus and liquidus temperatures as a function of carbon content in the CoCrMoC alloy powder used for fabrication by selective laser melting. The state change temperatures are investigated as a function of carbon content to determine the uncertainty in the solidus and liquidus temperatures. Powder manufacturers such as Carpenter Powder Products, Inc. provide a range of carbon content rather than a set value. Determining the carbon content experimentally would require WDS or other wet chemical analysis methods, which are expensive. Scheil calculations can therefore be used to predict the range of solidus and liquidus temperatures as a function of carbon content. With increasing carbon content, the evolving solid comprises more deleterious phases such as Laves, M23C6, and σ phases. The evolving solid volume fraction also changes with carbon content in these illustrations. Carbon has been assumed to be a fast diffuser at carbon contents of 0.15 wt pct or more.
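The Thermocalc calculations above are multicomponent. As a minimal sketch of the underlying idea only, the classic Scheil–Gulliver relation for a hypothetical binary alloy with a linear liquidus, \( C_{L} = C_{0}(1 - f_{s})^{k-1} \), gives temperature as a function of fraction solid. The melting point, liquidus slope, and partition coefficient below are illustrative assumptions, not CoCrMoC data.

```python
import numpy as np

def scheil_temperature(f_s, c0, t_melt=1500.0, m_liq=-50.0, k=0.4):
    """Temperature (deg C) at fraction solid f_s under Scheil assumptions.

    Assumes no diffusion in the solid, complete mixing in the liquid, and a linear
    liquidus T = t_melt + m_liq * C_L. All parameter defaults are hypothetical.
    """
    c_liquid = c0 * (1.0 - np.asarray(f_s, dtype=float)) ** (k - 1.0)  # solute-enriched liquid
    return t_melt + m_liq * c_liquid

f_s = np.array([0.0, 0.5, 0.9, 0.99])
for c0 in (0.08, 0.15, 0.25):        # the three carbon levels considered above (wt pct)
    print(f"C0 = {c0:.2f}:", np.round(scheil_temperature(f_s, c0), 1))
```

For k < 1 the Scheil liquid keeps enriching as \( f_{s} \to 1 \). In practice the calculation is therefore stopped at a eutectic or at a chosen residual liquid fraction, and that stopping temperature serves as an effective solidus. A higher \( C_{0} \) lowers both ends of the freezing range.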

Variation of solidus and liquidus temperatures with increasing carbon content: (a) 0.08 pct, (b) 0.15 pct, and (c) 0.25 pct. Deleterious phase precipitation clearly increases with increasing carbon content. All computations were performed using the Thermocalc TCFE7 database

Thermocalc databases can also be used to assess bulk thermal properties such as thermal conductivity, specific heat, and density as functions of temperature. Figure 3 shows examples of thermal properties for a representative CoCrMoC alloy with 62.85 pct cobalt, 30 pct chromium, 7 pct molybdenum, and 0.15 pct carbon.

The third step of the preprocessing stage is to generate 2.5-dimensional and full 3-dimensional meshes that fully capture the macroscopic domains and the moving energy sources. The 2.5-dimensional mesh is a z-directional extension of the mesh shown in Figure 4, in which the fine mesh region follows the laser as it moves. A full 3-dimensional mesh (Figure 5) has also been developed so that the number of degrees of freedom can be reduced significantly and the quality of the solution improved. Another advantage of the full 3-dimensional mesh is that it eases the use of an eigensolver.[15,17] The full 3-dimensional mesh transitions from fine to coarse in exactly the same fashion in the x-, y-, and z-directions. The 2.5-dimensional mesh, in contrast, is symmetric in the x- and y-directions but is extruded in the −z-direction without any change in length scale.

A coarse mesh grid with a fine mesh box that moves with the laser[17]

For performance metric simulations, the preprocessing stage comprises obtaining the dislocation density and other microstructural features such as precipitates, grain boundaries, Euler angles, and macroscopic texture, if any. Detailed preprocessing, including the mesh generation required for performance metric simulations, has been described in prior work by the authors.[17,20,21] Figures 4, 5, and 6 illustrate various types of preprocessing experiments and simulation conditions.

The governing equations and boundary conditions for carrying out process simulations are well addressed in the literature.[22–31] Similarly, the in-house thermo-mechanical constitutive relationships for performance metric modeling are addressed in the literature.[17,20,21,32–36]

Simulating a full-sized part that fills an SLM machine with currently available finite element algorithms has been shown to be computationally intractable,[37] even on the most powerful supercomputers. The present simulation environment attempts to solve the problem with intelligent solver algorithms and the inclusion of problem-specific asymptotics (Figures 7 and 8).
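A back-of-the-envelope estimate shows why. The estimate assumes, purely for illustration, a 250 mm cubic build volume, a uniform 10 µm element size (roughly a tenth of the ~100 µm energy source), and 30 µm layers. All of these are hypothetical round numbers, not values taken from [37].

```python
def uniform_mesh_size(build_edge_mm, element_edge_um, layer_um):
    """Element count of a uniform hexahedral mesh of a cubic build, and the layer count."""
    per_edge = build_edge_mm * 1000.0 / element_edge_um   # elements along one edge
    layers = build_edge_mm * 1000.0 / layer_um            # powder layers to be deposited
    return per_edge ** 3, layers

elements, layers = uniform_mesh_size(250.0, 10.0, 30.0)
print(f"{elements:.2e} elements, {layers:.0f} layers")
```

The estimate gives about \( 1.6 \times 10^{13} \) elements and more than 8000 layers, each scanned by a moving source, before any time stepping is counted. This scale motivates moving fine meshes and reduced-order solvers instead of a uniformly fine mesh.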

2.2 Solution Stage

For process simulations, some of the solver options and a brief review of the efficiency algorithms are provided as follows:

2.2.1 Intelligent assembly of stiffness matrices[17]

Figure 4 shows two meshes. The finer mesh resolves the steep thermal gradients around the laser beam, whereas the coarser mesh captures the gradual gradients where such a fine mesh is not required. As the laser moves in space and time, the fine mesh moves with it. In traditional finite element packages, elements must be reassembled and nodes renumbered each time a moving mesh changes position. The proposed framework[17] instead uses algorithms formulated and programmed for higher efficiency. With these algorithms, nodal connectivity and assembly calculations need not be redone for each fine-mesh movement tied to the laser. These movements are instead accounted for using a cut-and-paste stiffness matrix methodology.[17]
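The source does not detail the cut-and-paste methodology. The sketch below shows one plausible, hypothetical reading: node numbering and connectivity stay fixed, and when the laser-following region moves, only the element contributions that leave or enter it are updated. Here the "box" is represented simply by a different element property (`c_box`) on a 1-D conduction chain. The actual method, which moves a refined mesh, is not reproduced.

```python
import numpy as np

def element_matrix(conductance):
    """Two-node 1-D conduction element matrix (unit element length)."""
    return conductance * np.array([[1.0, -1.0], [-1.0, 1.0]])

def element_conductances(c_base, box, c_box):
    """Per-element conductance: c_box inside the laser-following box, c_base elsewhere."""
    c = np.array(c_base, dtype=float)
    c[list(box)] = c_box
    return c

def assemble(conductances):
    """Full assembly from scratch (what a conventional moving-mesh code would redo)."""
    n = len(conductances) + 1
    K = np.zeros((n, n))
    for e, c in enumerate(conductances):
        K[e:e + 2, e:e + 2] += element_matrix(c)
    return K

def move_box(K, c_base, old_box, new_box, c_box):
    """Cut contributions of elements leaving the box and paste those entering it.

    Node numbering and connectivity are untouched; only changed elements are visited.
    """
    K = K.copy()
    for e in set(old_box) - set(new_box):      # cut: element leaves the box
        K[e:e + 2, e:e + 2] += element_matrix(c_base[e] - c_box)
    for e in set(new_box) - set(old_box):      # paste: element enters the box
        K[e:e + 2, e:e + 2] += element_matrix(c_box - c_base[e])
    return K

c_base = np.ones(10)
K_old = assemble(element_conductances(c_base, range(2, 5), 5.0))
K_new = move_box(K_old, c_base, range(2, 5), range(3, 6), 5.0)   # box advances one element
```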

2.2.2 Intelligent Cholesky algorithm for small-number truncation and data compression[17]

The intelligent Cholesky algorithm neglects small numbers in the lower triangular Cholesky factor. It uses two criteria: the near-neighborhood connectivity of a physical node in the mesh, and whether the dot product of two rows of the Cholesky factor falls below a certain threshold. Only the addresses of the non-zero Cholesky components are registered, which reduces the number of FLOPs needed to compute the degrees of freedom. This strategy also reduces the memory required to store these matrices. The data reduction depends on the finite element mesh, i.e., on the sparsity pattern of the initial stiffness matrix being factorized. Consider intelligent stiffness matrix assembly that replicates point laser energy input during selective laser melting. In this case the reduction in FLOPs and storage is typically around 100×, depending on the nature of the problem. The reason is that the addresses of all significant Cholesky components in the fine mesh remain relatively fixed once a near-steady-state thermal profile is reached.
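The following is a minimal sketch of the threshold criterion only; the near-neighborhood criterion is not shown. It is a dense, left-looking Cholesky that computes each entry from the dot product of two rows, drops entries whose magnitude falls below `tol`, and registers the addresses of the retained entries. The threshold value, the function names, and the 2-D Laplacian test matrix are assumptions. Dropping entries makes the factorization approximate (an incomplete Cholesky). For M-matrices such as this Laplacian the incomplete factor is guaranteed to exist, whereas for general SPD matrices a dropped entry can produce a non-positive pivot.

```python
import numpy as np

def laplacian_2d(n):
    """5-point Laplacian on an n x n grid with Dirichlet boundaries: a small SPD stand-in for K."""
    t = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
    return np.kron(np.eye(n), t) + np.kron(t, np.eye(n))

def thresholded_cholesky(A, tol):
    """Left-looking Cholesky A ~ L L^T that drops off-diagonal entries with |L_ij| < tol.

    Returns L and the registered (row, col) addresses of the retained entries.
    """
    n = A.shape[0]
    L = np.zeros((n, n))
    addresses = []
    for j in range(n):
        pivot = A[j, j] - L[j, :j] @ L[j, :j]
        L[j, j] = np.sqrt(pivot)
        addresses.append((j, j))
        for i in range(j + 1, n):
            # dot product of rows i and j of the partially built factor
            value = (A[i, j] - L[i, :j] @ L[j, :j]) / L[j, j]
            if abs(value) >= tol:          # keep and register only significant entries
                L[i, j] = value
                addresses.append((i, j))
    return L, addresses

A = laplacian_2d(4)
L_full, kept_full = thresholded_cholesky(A, 0.0)    # tol = 0 keeps everything: exact factor
L_drop, kept_drop = thresholded_cholesky(A, 0.05)   # small entries truncated
print(len(kept_full), "entries stored without truncation,", len(kept_drop), "with truncation")
```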

2.2.3 Eigenmodal solver[15,17]

The eigenmodal solver is a dimensional-reduction technique for thermal problems. The reduction yields cross-sectional thermal modes that propagate in the −z-direction of the powder bed when its top (+z) surface is illuminated by a point laser or by a collection of multi-beam electron energy sources. This solution methodology is the first formal beam theory developed for thermal problems. It yields large savings in computing the degrees of freedom: solutions can be ~\( 10^{8} \) to \( 10^{14} \) times faster than FEA solutions with negligible or no loss of accuracy. Figure 9 shows a one-to-one match between the finite element and eigensolver solutions. This method also handles inhomogeneous material distributions. One example is the placement of solid and powder in arbitrary finite element cells at different locations in the powder bed to realize complex 3-dimensional geometries.
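The eigenmodal formulation itself is not given here. Its core idea, cross-sectional modes propagating in the −z-direction, can be sketched by separation of variables for the simplest case only. That case assumes steady conduction, homogeneous conductivity, a prismatic bed occupying \( z \le 0 \), and a prescribed top-surface temperature \( T_{0}(x, y) \) at \( z = 0 \). This is an illustrative assumption; the authors' solver, which also handles inhomogeneous solid/powder distributions, is not reproduced:

\[ \nabla^{2} T = 0, \qquad T(x, y, z) = \sum_{i} a_{i}\, \phi_{i}(x, y)\, e^{\sqrt{\lambda_{i}}\, z}, \qquad z \le 0 \]

\[ -\nabla_{xy}^{2} \phi_{i} = \lambda_{i}\, \phi_{i}, \qquad a_{i} = \int_{A} T_{0}(x, y)\, \phi_{i}(x, y)\, dA \]

Here \( \phi_{i} \) are orthonormal cross-sectional eigenfunctions with homogeneous lateral boundary conditions. Substituting the expansion gives \( \nabla^{2} T = \sum_{i} a_{i} (-\lambda_{i} + \lambda_{i})\, \phi_{i}\, e^{\sqrt{\lambda_{i}} z} = 0 \), and every mode with \( \lambda_{i} > 0 \) decays with depth. In this simplified setting, only a cross-sectional eigenproblem has to be solved, while the depth dependence is analytic. In discrete form this eigenproblem reads \( K_{xy} \Phi = M_{xy} \Phi \Lambda \). The analytic depth dependence is what removes most of the degrees of freedom.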

Apart from the efficiency algorithms mentioned above for determining the melt pool and the thermal/residual stress contours throughout the computational domain, a number of new algorithms have been developed to characterize defects such as keyholes, which result from vaporization. Vaporization first creates an open-jaw cavity in the regions of maximum laser intensity. Turbulent fluid flow in the melt pool then partially fills this cavity. This closes the vaporization cavity and leads to keyhole porosity.

2.3 Keyhole Porosity

2.3.1 Governing equations (Reynolds-averaged Navier–Stokes (RANS) method)

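The governing equations for fluid flow in the melt pool are given in Eq. [1]:

\[ \frac{\partial \bar{u}_{i}}{\partial x_{i}} = 0, \qquad \frac{\partial \bar{u}_{i}}{\partial t} + \bar{u}_{j} \frac{\partial \bar{u}_{i}}{\partial x_{j}} = -\frac{1}{\rho} \frac{\partial P_{l}}{\partial x_{i}} + \gamma \frac{\partial^{2} \bar{u}_{i}}{\partial x_{j} \partial x_{j}} - \frac{\partial \overline{u_{i}' u_{j}'}}{\partial x_{j}} + f_{i} \tag{1} \]

(summation over repeated indices, with \( \bar{u}_{1}, \bar{u}_{2}, \bar{u}_{3} \) denoting \( \bar{u}, \bar{v}, \bar{w} \) and \( u_{i}' \) the corresponding fluctuations)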

where \( \gamma = \frac{\mu }{\rho } \) is the ratio of viscosity μ to density ρ (the kinematic viscosity), \( P_{l} \) denotes the pressure, and \( f = \frac{f_{0}}{\rho} \) is the body force per unit mass. Here \( f_{0} \) is the sum of the internal body forces and external forces inside the melt pool. The variables u, v, and w denote the x, y, and z components of the velocity. The quantities \( \bar{u}, \bar{v} \), and \( \bar{w} \) denote the mean velocities along the mutually perpendicular axes, and the primed quantities denote the turbulent fluctuations. This formulation is similar to the time homogenization scheme for the Dislocation Density-based Crystal Plasticity Finite Element method.[38]
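The averaged equations rest on the Reynolds decomposition of each velocity component into a mean and a fluctuation, \( u = \bar{u} + u' \). The following is a minimal sketch at a single node that assumes time averaging over hypothetical velocity samples. The correlation \( \overline{u'v'} \) computed here is the kind of term that appears in the averaged momentum equation. That term must be closed by a turbulence model, which is not shown.

```python
import numpy as np

def reynolds_decompose(samples):
    """Split a velocity time series at one point into mean and fluctuation: u = u_bar + u'."""
    samples = np.asarray(samples, dtype=float)
    mean = samples.mean()
    return mean, samples - mean

def reynolds_stress(u_samples, v_samples):
    """Time-averaged product of fluctuations, overline{u'v'} (kinematic Reynolds stress)."""
    _, u_prime = reynolds_decompose(u_samples)
    _, v_prime = reynolds_decompose(v_samples)
    return float(np.mean(u_prime * v_prime))

u = [1.0, 2.0, 3.0, 4.0]   # hypothetical x-velocity samples at one node
v = [2.0, 2.0, 4.0, 4.0]   # hypothetical y-velocity samples at the same node
u_bar, u_prime = reynolds_decompose(u)
uv = reynolds_stress(u, v)
```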

2.3.2 Boundary conditions

The initial velocity of the liquid melt at the liquid–vapor (plasma) interface can be calculated from Bernoulli's principle using Eq. [2], since the depth of every node at the interface is known:

\[ P + \tfrac{1}{2} \rho v^{2} + \rho g\, \Delta h = \text{constant} \tag{2} \]

where P is the pressure, v the melt pool velocity, ρ the melt density, g the gravitational acceleration, and Δh the relative height, which is known at the surface of the melt pool. For any node (1) on the plasma/liquid boundary with known pressure \( P_{1} \), the velocity \( v_{1} \) can then be calculated, as shown in Figure 10.
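The following is a minimal sketch of the Bernoulli calculation. It assumes steady, inviscid flow along a streamline from a reference point 0 (pressure \( P_{0} \), velocity \( v_{0} \)) to node (1), which lies \( \Delta h \) below it: \( P_{0} + \frac{1}{2}\rho v_{0}^{2} + \rho g \Delta h = P_{1} + \frac{1}{2}\rho v_{1}^{2} \). The function name `bernoulli_velocity`, the sign convention for \( \Delta h \), and the numbers are hypothetical.

```python
import math

def bernoulli_velocity(p0, v0, p1, rho, delta_h, g=9.81):
    """Velocity at node (1) from P0 + rho*v0**2/2 + rho*g*delta_h = P1 + rho*v1**2/2.

    SI units; delta_h >= 0 is the depth of node (1) below reference point 0 (assumed).
    """
    v1_squared = v0 ** 2 + 2.0 * (p0 - p1) / rho + 2.0 * g * delta_h
    if v1_squared < 0.0:
        raise ValueError("pressures and heights are inconsistent with a real velocity")
    return math.sqrt(v1_squared)

# Hypothetical example: 1 kPa pressure drop into a melt of density 8000 kg/m^3
v1 = bernoulli_velocity(p0=1000.0, v0=0.0, p1=0.0, rho=8000.0, delta_h=0.0)
```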

The pressure at the interior nodes, such as \( P_{1} \) at node (1), comprises the recoil pressure in the plasma zone together with the surface tension, laser-induced thermocapillary, hydrostatic, and hydrodynamic components of pressure. All of these need to be considered.

Figure 11 shows a sample result from this investigation in a 2-dimensional scaled coordinate system representing the back of the melt pool. A detailed description and results will be presented in a future communication.

Figure 11 shows that the velocity profile in the plasma region becomes nearly horizontal and reaches its largest magnitude at a scaled depth of about 0.5. The scaled depth of the plasma wall, however, is always shallower than the maximum depth of the melt pool. The largest velocity magnitude therefore generally occurs at about 33 to 40 pct of the maximum melt pool depth.

2.3.3 Sherman–Morrison–Woodbury algorithm and preconditioners[14]

The Sherman–Morrison–Woodbury algorithm performs a multi-rank update of the inverse of a matrix, so that the stiffness matrix does not need to be recalculated and inverted each time the laser or electron beam energy source changes position. This reduces the computational cost of reconstructing matrix inverses and updating solutions. The savings are greatest in solution frameworks that involve iterative convergence and in which the stiffness matrix changes little between two iterations. The algorithm provides speedups of 100× to 1000×, depending on the reduced-dimension rank of the update:

\[ (K + \Delta K)^{-1} = (K + U D V^{T})^{-1} = K^{-1} - K^{-1} U \left( D^{-1} + V^{T} K^{-1} U \right)^{-1} V^{T} K^{-1} \tag{3} \]

In the above equation, U and V denote the eigenvectors and D denotes the eigenvalues of the change in the stiffness matrix, ΔK.
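The following is a minimal numerical check of the Woodbury update. It assumes a symmetric rank-2 change \( \Delta K = Q D Q^{T} \), so that U = V = Q, applied to a random SPD stand-in matrix. The function name `woodbury_inverse` is hypothetical, and the code is not the authors' GPU implementation.

```python
import numpy as np

def woodbury_inverse(K_inv, U, D, V):
    """(K + U D V^T)^{-1} from a known K^{-1}; only an r x r system is solved (r = update rank)."""
    capacitance = np.linalg.inv(D) + V.T @ K_inv @ U          # small r x r matrix
    return K_inv - K_inv @ U @ np.linalg.solve(capacitance, V.T @ K_inv)

rng = np.random.default_rng(0)
n, r = 8, 2
A = rng.standard_normal((n, n))
K = A @ A.T + n * np.eye(n)                                  # SPD stand-in stiffness matrix
Q, _ = np.linalg.qr(rng.standard_normal((n, r)))             # orthonormal eigenvectors of Delta K
D = np.diag([3.0, 5.0])                                      # eigenvalues of Delta K
K_inv = np.linalg.inv(K)
K_new_inv = woodbury_inverse(K_inv, Q, D, Q)                 # Delta K = Q D Q^T, so U = V = Q
```

Only an r × r system is solved, so given \( K^{-1} \) the update costs O(n²r) instead of O(n³) for a fresh inverse. In practice a stored factorization of K would usually replace the explicit inverse.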

These algorithms are being programmed to make use of graphics processing units (GPUs) through the CULASparse solver. Other GPU implementations of similar elastic FEM problems have also been reported.[39]

For performance metric simulations, the steps involved in the solution stage have been described in Reference 20. These steps are typically implemented for dislocation density-based crystal plasticity. New classical crystal plasticity-based user-material, element-assembly, and finite element subroutines have also been developed. They act as an intermediate homogenization step between dislocation density-based crystal plasticity and macroscopic continuum-scale anisotropic plasticity. This section describes how the performance metric degrees of freedom (displacements) are computed as functions of material deformation. It also covers other integration point variables, such as stresses, hardness evolution, and aggregate crystal rotations.

First, an implicit algorithm based on the unbalanced force is set up. This algorithm is based on Newton's third law, which states that for every action exerted by an external stimulus on a system there is an equal and opposite reaction by the system on the external stimulus.

To ascertain this equilibrium at each point inside the computational domain, the deviation from equilibrium is computed in terms of the residual force. We define the residual as follows:

\[ R_{f} = f_{\text{external}} - f_{\text{internal}} \tag{4} \]

where \( R_{f} \) denotes the residual force, \( f_{\text{external}} \) is the force applied externally by the stimulus, and \( f_{\text{internal}} \) is the force distribution that the material volume develops to counteract \( f_{\text{external}} \). The internal force is defined as follows:

\[ f_{\text{internal}} = \int_{V} B^{T} \sigma(u, \nabla u)\, dV \tag{5} \]

where B denotes the strain–displacement matrix, σ(u, ∇u) is the stress vector obtained by transforming the stress tensor to a vector, and V is the volume of an element.

The algorithm to solve the non-linear finite element problem is described in Table I.
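The following is a minimal sketch of a force-unbalance Newton–Raphson iteration. It uses the residual \( R_{f} = f_{\text{external}} - f_{\text{internal}} \) with \( f_{\text{internal}} = \int_{V} B^{T}\sigma\, dV \). It assumes a hypothetical 1-D bar of four two-node elements, fixed at one end, with a nonlinear elastic law \( \sigma = E\varepsilon + C\varepsilon^{3} \). The actual steps of Table I and the crystal plasticity constitutive update are not reproduced.

```python
import numpy as np

E, C, L_E = 100.0, 1.0e4, 1.0   # hypothetical modulus, cubic stiffening coefficient, element length

def element_stress(eps):
    """Hypothetical nonlinear elastic law sigma = E*eps + C*eps**3 (unit cross-section)."""
    return E * eps + C * eps ** 3

def internal_force(u):
    """f_internal = sum over elements of B^T sigma dV for a 1-D bar fixed at node 0.

    u holds free-node displacements; returns forces on the free nodes and element strains.
    """
    full = np.concatenate(([0.0], u))        # prepend the fixed node
    eps = np.diff(full) / L_E                 # B u for each two-node element
    sigma = element_stress(eps)
    f = np.zeros_like(full)
    f[:-1] -= sigma                           # B^T sigma * (A * L_E) = [-sigma, +sigma]
    f[1:] += sigma
    return f[1:], eps

def tangent_stiffness(eps):
    """Consistent tangent d(f_internal)/du using d(sigma)/d(eps) = E + 3*C*eps**2."""
    n = len(eps) + 1
    K = np.zeros((n, n))
    for e, et in enumerate(E + 3.0 * C * eps ** 2):
        K[e:e + 2, e:e + 2] += (et / L_E) * np.array([[1.0, -1.0], [-1.0, 1.0]])
    return K[1:, 1:]                          # remove the fixed degree of freedom

def newton_raphson(f_ext, tol=1e-10, max_iter=50):
    u = np.zeros_like(f_ext)
    for it in range(max_iter):
        f_int, eps = internal_force(u)
        residual = f_ext - f_int              # R_f = f_external - f_internal
        if np.linalg.norm(residual) <= tol * max(1.0, np.linalg.norm(f_ext)):
            return u, it
        u = u + np.linalg.solve(tangent_stiffness(eps), residual)
    raise RuntimeError("Newton-Raphson did not converge")

u, iterations = newton_raphson(np.array([0.0, 0.0, 0.0, 1.0]))   # unit tip load
```

The loop stops when the unbalanced force falls below a relative tolerance. The consistent tangent gives quadratic convergence near the solution.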

Various nonlinearities occur simultaneously, such as the shear strain rate \( \dot{\gamma}^{\alpha} \), the critical resolved shear stress (CRSS) on each slip system α, and the stress update (σ). Their respective evolutions are therefore nested together, as shown in Table II.
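A minimal single-slip sketch below shows how the slip rate, the CRSS, and the stress update feed into one another. It assumes the widely used phenomenological power-law flow rule \( \dot{\gamma}^{\alpha} = \dot{\gamma}_{0} |\tau^{\alpha}/g^{\alpha}|^{1/m} \operatorname{sign}(\tau^{\alpha}) \), linear hardening \( \dot{g}^{\alpha} = h_{0} |\dot{\gamma}^{\alpha}| \), a scalar rate form of the stress update, and hypothetical parameter values. It is not the authors' dislocation density-based model or the Table II algorithm.

```python
import numpy as np

def slip_rate(tau, g, gamma_dot0=1.0e-3, m=0.05):
    """Power-law flow rule: gamma_dot = gamma_dot0 * |tau/g|**(1/m) * sign(tau) (hypothetical values)."""
    return gamma_dot0 * abs(tau / g) ** (1.0 / m) * np.sign(tau)

def single_slip_response(strain_rate=1.0e-3, t_end=10.0, dt=0.01,
                         shear_modulus=40.0e3, g0=50.0, h0=200.0, schmid=0.5):
    """Explicitly integrate resolved shear stress tau, CRSS g, and slip gamma (MPa, s)."""
    tau, g, gamma = 0.0, g0, 0.0
    history = []
    for _ in range(int(round(t_end / dt))):
        gdot = slip_rate(tau, g)                                   # 1) slip rate from current state
        tau += shear_modulus * (schmid * strain_rate - gdot) * dt  # 2) stress from elastic part only
        g += h0 * abs(gdot) * dt                                   # 3) CRSS hardening
        gamma += gdot * dt
        history.append((tau, g, gamma))
    return history

tau_end, g_end, gamma_end = single_slip_response()[-1]
```

The large exponent 1/m = 20 makes the update stiff. With an explicit scheme the step size must stay small near yield, which is one reason adaptive time stepping matters.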

The importance of convergence is demonstrated with an example simulation replicating uniaxial tensile testing of a single-crystal copper bar. Figure 12 compares the material stress–applied strain curves obtained with and without correct adaptive time stepping (as shown in Table II), using the definition of unbalanced force in Eq. [4]. Without adaptive time stepping, the Jacobian becomes unstable near the yield point and leads to oscillatory stress–strain behavior.

Volume-averaged stress (material reaction)–strain (applied) behavior plotted using adaptive time stepping and compared against its fixed time stepping counterpart. A force-unbalance Newton–Raphson implicit algorithm is used in both cases; adaptive time stepping yields the appropriate mechanical behavior, whereas fixed time stepping leads to numerical instabilities near the yield point
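The following is a minimal sketch of adaptive step control. It assumes a simple cut-back rule: halve the increment when the implicit solve fails, and grow it by a factor after a success. The solver is a hypothetical stand-in, `mock_increment`, which "diverges" for increments larger than 0.05 that overlap a yield window from 0.4 to 0.6 in normalized load. Only the step-control logic is illustrated, not the scheme of Table II.

```python
def adaptive_stepping(solve_increment, total, dt0, grow=1.5, cut=0.5, dt_min=1e-8):
    """Advance a normalized load/time parameter from 0 to `total`.

    solve_increment(t, dt) -> True if the implicit (e.g. Newton-Raphson) solve converged.
    """
    t, dt = 0.0, dt0
    accepted = []
    while t < total - 1e-12:
        dt = min(dt, total - t)          # do not step past the end
        if solve_increment(t, dt):
            t += dt
            accepted.append(t)
            dt *= grow                   # converged: try a larger step next
        else:
            dt *= cut                    # diverged: retry from t with a smaller step
            if dt < dt_min:
                raise RuntimeError("increment fell below dt_min")
    return accepted

def mock_increment(t, dt):
    """Hypothetical solver stand-in: fails for dt > 0.05 if [t, t + dt] overlaps [0.4, 0.6]."""
    near_yield = t + dt >= 0.4 and t <= 0.6
    return not (near_yield and dt > 0.05)

steps = adaptive_stepping(mock_increment, total=1.0, dt0=0.2)
```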

2.4 Postprocessing Stage

Postprocessing of process simulation results involves visualizing variables of interest such as thermal contours, phase evolution, and residual stress distributions in 3-dimensional space. Similar variables of interest for performance metric simulations include stresses and equivalent plastic strains. Examples of results from process and performance simulations are illustrated in Figures 13 and 14, respectively.

The postprocessing of thermal contours is also used to determine in-plane bonding and the closure of consecutive scan lines into solidified bulk, so that lack-of-fusion defects can be identified. A recent numerical investigation predicted these defects by computing the melt pool width and subtracting it from the preset scan line spacing. For this numerical experiment, powders from three independent vendors were chosen. Their powder bed densities and thermal conductivities were measured, and other thermal parameters such as specific heat were taken from the literature. This yielded complete sets of material properties and parameters to be inserted into the process simulations. A detailed description of this numerical experiment, and of the associated experiments that provided data for the simulations and further validated the results, can be found in the literature.[41]

Some of the results from this study are summarized in Figure 15, where lack-of-fusion porosity was observed experimentally. Experimental porosity increases with decreasing power-to-scan speed ratio. This appears to validate the numerical hypothesis that lack-of-fusion porosity is a direct function of melt pool width. The melt pool width was also observed to decrease as the power-to-scan speed ratio decreases, causing powder to be entrapped at those locations. Rectification of this problem using new process parameters, together with microstructural characterization, has also been conducted.[41] This study provides a quantitative guideline for selecting powder-specific process parameters and is the first study in this area.

The microstructural performance metric simulations are generally followed by spatiotemporal homogenization. In this step, continuum anisotropic plasticity laws are deduced as functions of the microstructure so that full-part mechanical behavior can be simulated. Figures 16 and 17 show examples of the dependence of these parameters on microstructure. Temporal homogenization using in-house algorithms is discussed elsewhere.[38]
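The simplest ingredient of spatial homogenization is the volume average of element (or integration-point) stresses, of the kind plotted as volume-averaged stress in Figure 12. The following is a minimal sketch that assumes Voigt-vector stresses and known element volumes. The function name and values are hypothetical. Deducing anisotropic plasticity laws from such averages, as done by the authors, goes beyond this sketch.

```python
import numpy as np

def volume_average(stresses, volumes):
    """Volume-weighted mean stress: sigma_bar = sum(V_e * sigma_e) / sum(V_e).

    stresses: (n_elements, 6) Voigt vectors; volumes: (n_elements,) element volumes.
    """
    stresses = np.asarray(stresses, dtype=float)
    volumes = np.asarray(volumes, dtype=float)
    return volumes @ stresses / volumes.sum()

# Hypothetical two-element example (MPa, arbitrary volume units)
sigma_bar = volume_average([[100.0, 0, 0, 0, 0, 0], [200.0, 0, 0, 0, 0, 0]], [1.0, 3.0])
```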

3 Conclusions

The simulation strategy described in this paper has successfully been used to understand AM process and part performance metrics. These approaches are being validated experimentally for both process and performance simulations of metal melting-based additive manufacturing technology. Some of the salient features of this algorithmic approach are as follows:

1. Scan strategies and geometries are directly inserted into the simulations along with multi-scale meshes to account for the local effects of energy source(s), local and non-local microstructural morphologies, and geometrical features.
2. Automated mesh generation enables rapid generation of optimized meshes with convergence assurance for a given process.
3. The effect of alloying elements on cooling curves can be obtained directly using TC-Prisma software, which serves as a look-up table for the precipitation of phases such as secondary and tertiary precipitates in nickel-based superalloys.
4. Efficiency algorithms ensure that multi-scale simulations execute at or close to real-time speeds.
5. AM process costs can be reduced by predicting and rectifying common build failure modes such as residual stress-induced blade crashes.
6. A complete software solution is available to predict part manufacturing characteristics and part performance metrics for a prescribed application.

References

T.J.R. Hughes: The Finite Element Method: Linear Static and Dynamic Finite Element Analysis, Courier Dover Publications, Mineola, 2012.

J.N. Reddy: An Introduction to the Finite Element Method, McGraw-Hill, New York, 1993.

B.A. Szabo and I. Babuška: Finite Element Analysis, Wiley, New York, 1991.

ASTM Standard: Annual Book of ASTM Standards, 2004, vol. 3, pp. 57–72.

Y.C. Chen and K. Nakata: Materials & Design, 2009, vol. 30, pp. 469–474.

R. Filip, K. Kubiak, W. Ziaja, and J. Sieniawski: Journal of Materials Processing Technology, 2003, vol. 133, pp. 84–89.

H.K. Rafi, D. Pal, N. Patil, T.L. Starr, and B.E. Stucker: Journal of Materials Engineering and Performance, 2014, vol. 23, pp. 4421–4428.

N. Kumar, R.S. Mishra, C.S. Huskamp, and K.K. Sankaran: Materials Science and Engineering: A, 2011, vol. 528, pp. 5883–5887.

G. Lütjering: Materials Science and Engineering: A, 1998, vol. 243, pp. 32–45.

Z.Y. Ma, S.R. Sharma, and R.S. Mishra: Scripta Mater., 2006, vol. 54, pp. 1623–1626.

R.S. Mishra, S.X. McFadden, R.Z. Valiev, and A.K. Mukherjee: JOM, 1999, vol. 51, pp. 37–40.

K. Zeng, D. Pal, and B.E. Stucker: in Solid Freeform Fabrication Symposium, Austin, TX, 2012.

I. Gibson, D.W. Rosen, and B. Stucker: Additive Manufacturing Technologies, Springer, New York, 2010.

D. Pal, N. Patil, K. Zeng, C. Teng, S. Xu, T. Sublette, and B.E. Stucker: in Proceedings of the Solid Freeform Fabrication Symposium, Austin, TX, 2014.

N. Patil, D. Pal, and B.E. Stucker: in Proceedings of the Solid Freeform Fabrication Symposium, Austin, TX, 2013.

D. Pal, N. Patil, and B.E. Stucker: in Additive Manufacturing Consortium, Lawrence Livermore National Laboratory, 2014.

D. Pal, N. Patil, K. Zeng, and B.E. Stucker: J. Manuf. Sci. Eng., 2014, vol. 136, pp. 061022.1–061022.16.

Thermocalc: “Thermocalc for Windows,” Thermocalc, 2014, http://www.thermocalc.com/media/8139/tcw_examples.pdf, accessed 31 December 2014.

Thermocalc: “TC-Prisma User Guide and Examples,” Thermocalc, 2014, http://www.thermocalc.com/media/6045/tc-prisma_user-guide-and-examples.pdf, accessed 31 December 2014.

D. Pal and B. Stucker: Journal of Applied Physics, 2013, vol. 113, pp. 203517.1–203517.8.

D. Pal, S. Behera, and S. Ghosh: in United States National Congress on Computational Mechanics, 2009.

M. Matsumoto, M. Shiomi, K. Osakada, and F. Abe: International Journal of Machine Tools and Manufacture, 2002, vol. 42, pp. 61–67.

M. Shiomi, A. Yoshidome, F. Abe, and K. Osakada: International Journal of Machine Tools and Manufacture, 1999, vol. 39, pp. 237–252.

F. Verhaeghe, T. Craeghs, J. Heulens, and L. Pandelaers: Acta Materialia, 2009, vol. 57, pp. 6006–6012.

M.F. Zaeh and G. Branner: Production Engineering, 2010, vol. 4, pp. 35–45.

K. Dai and L. Shaw: Acta Materialia, 2004, vol. 52, pp. 69–80.

S. Kolossov, E. Boillat, R. Glardon, P. Fischer, and M. Locher: International Journal of Machine Tools and Manufacture, 2004, vol. 44, pp. 117–123.

I.A. Roberts, C.J. Wang, R. Esterlein, M. Stanford, and D.J. Mynors: International Journal of Machine Tools and Manufacture, 2009, vol. 49, pp. 916–923.

A. Hussein, L. Hao, C. Yan, and R. Everson: Materials & Design, 2013, vol. 52, pp. 638–647.

T.H.C. Childs, C. Hauser, and M. Badrossamay: Proceedings of the Institution of Mechanical Engineers, Part B: Journal of Engineering Manufacture, 2005, vol. 219, pp. 339–357.

D. Deng and H. Murakawa: Computational Materials Science, 2006, vol. 37, pp. 269–277.

H. Ding and Y.C. Shin: Journal of Manufacturing Science and Engineering, 2014, vol. 136, pp. 041003.1–041003.11.

L. Ding, X. Zhang, and C.R. Liu: Journal of Manufacturing Science and Engineering, 2014, vol. 136, p. 041020.

W. Hammami, G. Gilles, A.M. Habraken, and L. Duchêne: International Journal of Material Forming, 2011, vol. 4, pp. 205–215.

F. Bridier, D.L. McDowell, P. Villechaise, and J. Mendez: International Journal of Plasticity, 2009, vol. 25, pp. 1066–1082.

N.R. Barton, J.V. Bernier, R.A. Lebensohn, and A.D. Rollett: in Electron Backscatter Diffraction in Materials Science, Springer, New York, 2009, pp. 155–167.

B.E. Stucker, K. Zeng, S. Xu, N. Patil, C. Teng, T. Sublette, and D. Pal: in Proceedings of the Solid Freeform Fabrication Symposium, Austin, TX, 2014.

D. Pal and B.E. Stucker: in Proceedings of the Solid Freeform Fabrication Symposium, Austin, TX, 2012.

J. Zhang and L. Zhang: Mathematical Problems in Engineering, 2013, vol. 2013, pp. 1–12.

J.C. Simo and T.J.R. Hughes: Computational Inelasticity, Springer, New York, 2008.

H. Gong, H. Gu, J.J.S. Dilip, D. Pal, A. Hicks, H. Doak, and B.E. Stucker: in Solid Freeform Fabrication Symposium, Austin, TX, 2014.

Acknowledgments

The authors would like to thank Dr. Khalid Rafi at Nanyang Technological University, Mr. Hengfeng Gu at North Carolina State University, and Dr. Haijun Gong and Mr. Ashabul Anam at University of Louisville for their insightful discussions with the authors. Funding: The authors would like to gratefully acknowledge the funding support from the Office of Naval Research (N000141110689 & N000140710633), the Air Force Research Laboratory (as a subcontractor to Mound Laser & Photonics Center on three SBIR projects), the National Institute of Standards and Technology (70NANB12H262), and the National Science Foundation (CMMI-1234468).


Manuscript submitted December 31, 2014.


Cite this article

Pal, D., Patil, N., Zeng, K. et al. An Efficient Multi-Scale Simulation Architecture for the Prediction of Performance Metrics of Parts Fabricated Using Additive Manufacturing. Metall Mater Trans A 46, 3852–3863 (2015). https://doi.org/10.1007/s11661-015-2903-7


DOI: https://doi.org/10.1007/s11661-015-2903-7