Abstract

We propose a method for variable selection in discriminant analysis with mixed continuous and binary variables. This method is based on a criterion that reduces the variable selection problem to that of estimating a suitable permutation and a dimensionality. Estimators for these parameters are then proposed, and the resulting variable selection method is shown to be consistent. A simulation study, which examines several properties of the proposed approach and compares it with an existing method, is given, and an example on a real data set is provided.

1 Introduction

The problem of classifying an observation into one of several classes on the basis of data consisting of both continuous and categorical variables is an old one that has been tackled in different forms in the literature. The earliest works in this field go back to Chang and Afifi (1979) and Krzanowski (1975), who used the location model introduced by Olkin and Tate (1961) to form a classification rule in the context of discriminant analysis involving two groups. More recent work has focused on defining distance measures between populations or making inference on them (e.g., Krzanowski 1983, 1984; Bar-Hen and Daudin 1995; Bedrick et al. 2000; de Leon and Carriere 2005). One of the most important problems in this context is that of selecting the appropriate categorical and/or continuous variables to use for discrimination. Indeed, it is well recognized that using fewer variables can improve classification performance and helps avoid estimation problems (e.g. McLachlan 1992; Mahat et al. 2007). Several works deal with this problem, mainly in the context of a location model. Some of them use distances between populations to determine the most predictive variables (Krzanowski 1983; Daudin 1986; Bar-Hen and Daudin 1995; Daudin and Bar-Hen 1999). Krusinska (1989a, b, 1990) used methods based on the percentage of misclassification, Hotelling’s \(T^2\) and graphical models. More recently, Mahat et al. (2007) proposed a method based on the distance between groups as measured by the smoothed Kullback–Leibler divergence. All these works consider the case of two groups and, to the best of our knowledge, the case of more than two groups has not yet been considered for variable selection. It is therefore of great interest to introduce a method that can be used when the number of groups is greater than two. Such an approach has been proposed recently in Nkiet (2012) for the case of continuous variables only.
It is based on a criterion that characterizes the set of variables appropriate for discrimination by means of two parameters, so that the variable selection problem reduces to that of estimating these parameters.

In this paper, we extend the approach of Nkiet (2012) to the case of mixed variables. The resulting method has two advantages: first, it can be used when the number of groups is greater than two, and second, it only requires that the random vector of continuous variables has a finite fourth-order moment. No assumption on the distribution of this random vector is needed; in particular, we do not assume that the location model holds. In Sect. 2, we introduce a criterion through which the set of variables to be estimated is characterized by a suitable permutation and a dimensionality. Estimation of this criterion is tackled in Sect. 3. More precisely, both empirical estimators and a non-parametric smoothing procedure are used to define an estimator of the criterion. In the first case, we obtain properties of the resulting estimator from which its asymptotic distribution can be derived. Section 4 is devoted to our proposal for variable selection, and consistency of the method is proved when empirical estimators are used. Section 5 presents numerical experiments carried out to study several properties of the proposal and to compare it with an existing method. The first issue addressed is the impact of the choice of the penalty functions involved in our procedure and of the type of estimator used. The results show that these choices have little impact on the performance of the proposed method, and that smoothed estimators are preferable when cell incidences are very small. Since the method depends on two real parameters, it is of interest to study their influence on its performance and, consequently, to define a strategy for choosing optimal values for them; we propose a method based on leave-one-out cross validation for this purpose. With this approach, the results show that the proposal is competitive with that of Mahat et al. (2007).
A real data example is given in Sect. 6.

2 Statement of the problem

Letting \(\left( {\varOmega },{\mathcal {A}},P\right) \) be a probability space, we consider random vectors \(X=\left( X^{(1)},\ldots ,X^{(p)}\right) ^T\) and \(Y=\left( Y^{(1)},\ldots ,Y^{(d)}\right) ^T\) defined on this probability space, with values in \({\mathbb {R}}^p\) and \(\{0,1\}^d\) respectively. The r.v. X consists of continuous random variables, whereas Y consists of binary random variables. As usual, Y may be associated with a multinomial random variable by considering \(U=1+\sum _{j=1}^dY^{(j)}\,2^{j-1}\), which has values in \(\{1,\ldots ,M\}\), where \(M=2^d\). In fact, Y is essentially the binary representation of U, and U provides a labeling of the underlying d-dimensional contingency table. Suppose that the observations of (X, Y) come from q groups \(\pi _1,\ldots ,\pi _q\) (with \(q\ge 2\)) characterized by a random variable Z with values in \(\{1,\ldots ,q\}\); this means that (X, Y) belongs to \(\pi _\ell \) if, and only if, \(Z=\ell \). Such a framework has been considered in the literature for classification purposes. Indeed, for the case of two groups, that is when \(q=2\), Krzanowski (1975) proposed a classification rule based on a normal distribution location model where, conditionally on \(U=m\) and \(Z=\ell \), the vector X has the p-variate distribution \(N(\mu _{m\ell },{\varSigma })\). This rule allocates a future observation (x, y) of (X, Y) to \(\pi _1\) if

$$\left( \mu _{m1}-\mu _{m2}\right) ^T{\varSigma }^{-1}\left( x-\frac{1}{2}\left( \mu _{m1}+\mu _{m2}\right) \right) \ge \log \left( \frac{p_{m2}}{p_{m1}}\right) +\log (\gamma ), \qquad (1)$$
where \(m=1+\sum _{j=1}^dy^{(j)}\,2^{j-1}\), \(p_{m\ell }=P(U=m|Z=\ell )\) and \(\gamma \) is a constant that depends on the misclassification costs and the prior probabilities of the two groups. The case where \(q>2\) was considered by de Leon et al. (2011) in the context of general mixed-data models. In this case, the optimum rule classifies an observation (x, y) into the class \(\pi _{\ell ^*}\) if

$$g_{\ell ^*}(x,m)=\max _{1\le \ell \le q}g_\ell (x,m), \qquad (2)$$
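where

$$g_\ell (x,m)=\mu _{m\ell }^T{\varSigma }^{-1}x-\frac{1}{2}\,\mu _{m\ell }^T{\varSigma }^{-1}\mu _{m\ell }+\log \left( \tau _\ell \,p_{m\ell }\right) , \qquad (3)$$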
with \(\tau _\ell =P(Z=\ell )\). As can be seen, these rules involve observations of all the variables \(X^{(j)}\) in X. Nevertheless, as is well recognized (see, e.g., McLachlan 1992; Mahat et al. 2007), using fewer variables may improve classification performance. It is therefore of real interest to select among the \(X^{(j)}\)’s from a sample of (X, Y, Z). To this end, we extend an approach proposed by Nkiet (2012) for continuous variables to the case of mixed continuous-binary variables, where selection is made among the continuous variables only. This approach first introduces a criterion that characterizes the set of variables that are adequate for discrimination. For any \(m\in \{1,\ldots ,M\}\) we put

$$p_m=P(U=m),\quad p_{\ell |m}=P(Z=\ell \,|\,U=m),\quad \mu _m={\mathbb {E}}\left( X\,|\,U=m\right) ,\quad \mu _{\ell ,m}={\mathbb {E}}\left( X\,|\,U=m,Z=\ell \right) \quad (\ell =1,\ldots ,q),$$
and, assuming that \({\mathbb {E}}\left( \Vert X\Vert ^2\right) <+\infty \) where \(\Vert \cdot \Vert \) denotes the usual Euclidean norm of \({\mathbb {R}}^p\), we consider the covariance matrix of X conditionally on \(U=m\) given by

$$V_m={\mathbb {E}}\left( \left( X-\mu _m\right) \left( X-\mu _m\right) ^T\,|\,U=m\right) ,$$
where \(a^T\) denotes the transpose of a. Throughout this paper we assume that the matrix \(V_m\) is invertible. Let us represent the set of continuous variables by the set \(I=\{1,\ldots ,p\}\) and, for any subset \(K:=\{i_1,\ldots ,i_k\}\) of I, consider the \(k\times p\) matrix defined by:

where

This matrix selects from x in \({\mathbb {R}}^p\) the components contained in the set K; more precisely, \(A_K\) transforms any vector \(x=(x_1,\ldots ,x_p)^T\) in \({\mathbb {R}}^p\) to the vector \(A_Kx\) in \({\mathbb {R}}^k\) whose components are the components \(x_i\) of x such that \(i\in K\). Then putting

we introduce

where \({\mathbb {I}}_p\) is the \(p\times p\) identity matrix. In fact, \(\xi _{K|m}\) is the criterion introduced in Nkiet (2012), taken conditionally on \(U=m\). It quantifies the loss of information resulting from selecting the variables of X that belong to K, given that the event \(\{U=m\}\) is realized (see Theorem 2.1 in Nkiet 2012). We therefore look for a criterion that quantifies the loss of information resulting from this variable selection without depending on the value taken by U, since this value is unknown a priori. This leads us to consider the criterion

$$\xi _K=\sum _{m=1}^Mp_m\,\xi _{K|m}, \qquad (6)$$
by means of which we will characterize the subset \(I_0\) of variables that make no contribution to the discrimination between the q groups, that is, the variables whose corresponding components of the vector \({\varSigma }^{-1}\mu _{m\ell }\) appearing in the discriminant function (3) are constant as \(\ell \) varies in \(\{1,\ldots ,q\}\), for every \(m\in \{1,\ldots ,M\}\). Therefore,

$$I_0=\bigcap _{m=1}^MI_{0,m},$$
where \(I_{0,m}\) denotes the subset of continuous variables whose corresponding components of \({\varSigma }^{-1}\mu _{m\ell }\) are constant for \(\ell \in \{1,\ldots ,q\}\). Our problem then reduces to that of estimating the subset \(I_1\) given by

$$I_1=I-I_0=\bigcup _{m=1}^MI_{1,m},$$
where \(I_{1,m}=I-I_{0,m}\). An explicit expression of \(I_{1,m}\) can be obtained by using results from McKay (1977) (see also Fujikoshi 1982, 1985). Indeed, let \(\lambda _{1,m} \ge \lambda _{2,m} \ge \cdots \ge \lambda _{p,m}\) denote the eigenvalues of \(T_{m} = V_{m}^{-1}B_{m}\), where \(B_m\) is the between-groups covariance matrix conditionally on \(U=m\), given by

$$B_m=\sum _{\ell =1}^qp_{\ell |m}\left( \mu _{\ell ,m}-\mu _m\right) \left( \mu _{\ell ,m}-\mu _m\right) ^T,$$
and let \(\upsilon _{i}^{m}=(\upsilon _{i1}^{m},\ldots ,\upsilon _{ip}^{m})^{T}\) \((i=1,\ldots ,p)\) be an eigenvector of \(T_{m}\) associated with \(\lambda _{i,m}\). Then (see McKay 1977; Fujikoshi 1982),

$$I_{1,m}=\left\{ j\in I\;:\;\sum _{i=1}^{r_m}\left( \upsilon _{ij}^{m}\right) ^2>0\right\} ,$$
where \(r_m\) denotes the rank of \(T_m\).
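For two groups the characterization above can be made explicit. Within cell m, if \(q=2\) then \(B_m=p_{1|m}p_{2|m}\,\delta _m\delta _m^T\) with \(\delta _m=\mu _{1,m}-\mu _{2,m}\). Hence \(T_m\) has rank one when \(\delta _m\ne 0\), its eigenvector for the nonzero eigenvalue is proportional to \(V_m^{-1}\delta _m\), and \(I_{1,m}\) is the set of nonzero coordinates of \(V_m^{-1}\delta _m\). The pure-Python sketch below applies this special case to population quantities. The function names and the numerical tolerance are our own choices. The example shows that a variable whose mean differs between the groups can still be redundant because of its correlation with another variable.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def informative_variables_two_groups(v_m, mu_1m, mu_2m, tol=1e-10):
    """I_{1,m} when q = 2: 1-based indices j such that (V_m^{-1} delta_m)_j != 0."""
    delta = [a - b for a, b in zip(mu_1m, mu_2m)]
    coef = solve(v_m, delta)  # proportional to the eigenvector of T_m for its nonzero eigenvalue
    return {j + 1 for j, c in enumerate(coef) if abs(c) > tol}


# Variable 2 has different group means but is redundant given variable 1.
print(informative_variables_two_groups([[1.0, 0.5], [0.5, 1.0]], [1.0, 0.5], [0.0, 0.0]))  # {1}
```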

Now, we give a characterization of \(I_1\) by means of the criterion (6). For doing that, we consider the following assumption:

\( ({\mathcal {A}}_1){:}\;\text {For all }m\in \{1,\ldots ,M\},\,\,p_m>0. \)

Then, we have:

Proposition 1

We assume that \(({\mathcal {A}}_1)\) holds. Then, for \( K \subset I\) we have \(\xi _{K} = 0\) if and only if \(I_{1}\subset K\).

Proof

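For any fixed \(m\in \{1,\ldots ,M\}\), we denote by \(P^{\left\{ U=m\right\} }\) the conditional probability given the event \(\{U=m\}\). Applying Theorem 2.1 of Nkiet (2012) on the probability space \(\left( {\varOmega },{\mathcal {A}},P^{\{U=m\}}\right) \), we obtain the equivalence \(\xi _{K|m} = 0\Leftrightarrow I_{1,m} \subset K\). Since \(\xi _{K|m}\ge 0\) for every m and, by \(({\mathcal {A}}_1)\), \(p_m>0\), it follows that:

$$\xi _K=0\Leftrightarrow \forall m\in \{1,\ldots ,M\},\;\xi _{K|m}=0\Leftrightarrow \forall m\in \{1,\ldots ,M\},\;I_{1,m}\subset K\Leftrightarrow \bigcup _{m=1}^MI_{1,m}\subset K\Leftrightarrow I_1\subset K.$$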
\(\square \)

From this proposition, it is easily seen that, putting \(K_i=I-\{i\}\), one has the equivalence: \(\xi _{K_i}>0\Leftrightarrow i\in I_{1}\). Now, let \(\sigma =\left( \sigma (1),\ldots ,\sigma (p)\right) \) be the permutation of I such that:

- (a) \(\xi _{K_{\sigma \left( 1\right) } }\ge \xi _{K_{\sigma \left( 2\right) } }\ge \cdots \ge \xi _{K_{\sigma \left( p\right) } }\);

- (b) \(\xi _{K_{\sigma \left( i\right) } }=\xi _{K_{\sigma \left( j\right) } }\) and \(i<j\) imply \(\sigma \left( i\right) <\sigma \left( j\right) \).

Remark 1

This just means that the \(\xi _{K_i}\)’s are ranked in nonincreasing order, with ties ranked in increasing order of the corresponding indices. Then, \(\sigma (i)\) is the index corresponding to the i-th largest value.

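Since \(I_{1}\) is a non-empty set, there exists an integer \(s\in I\) that is equal to p when \(I_{1}=I\), and that satisfies

$$\xi _{K_{\sigma (s)}}>0\quad \text {and}\quad \xi _{K_{\sigma (s+1)}}=0$$

when \(I_{1}\ne I\). The integer s will be the number of selected variables, and:

$$I_1=\left\{ \sigma (i);\;1\le i\le s\right\} . \qquad (7)$$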
Therefore, estimating \(I_1\) reduces to estimating the two parameters \(\sigma \) and s. For doing that, we first need to consider an estimator of the criterion given in (6).
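Given the population values \(\xi _{K_i}\), \(i\in I\), the permutation \(\sigma \), the integer s and the set \(I_1=\{\sigma (1),\ldots ,\sigma (s)\}\) can be computed mechanically. Rank the values in nonincreasing order with ties broken by increasing index, then count the positive ones, since \(\xi _{K_i}>0\) exactly when \(i\in I_1\). The sketch below uses plain Python. The function names are hypothetical, and it assumes the \(\xi _{K_i}\) are supplied as a list whose entry i − 1 holds \(\xi _{K_i}\).

```python
def ranking_permutation(values):
    """sigma as 1-based indices: values in nonincreasing order, ties by increasing index."""
    return sorted(range(1, len(values) + 1), key=lambda i: (-values[i - 1], i))


def selected_set(xi_k):
    """Return (sigma, s, I_1) from xi_k[i - 1] = xi_{K_i}, where K_i = I - {i}."""
    sigma = ranking_permutation(xi_k)
    s = sum(1 for v in xi_k if v > 0)  # xi_{K_i} > 0 exactly when i belongs to I_1
    if s == 0:
        raise ValueError("I_1 is assumed to be non-empty")
    return sigma, s, set(sigma[:s])


print(selected_set([0.0, 0.3, 0.0, 0.3, 0.1]))  # ([2, 4, 5, 1, 3], 3, {2, 4, 5})
```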

3 Estimating the criterion

Let \(\{(X_i,Y_i,Z_i)\}_{1\le i\le n}\) be an i.i.d. sample of (X, Y, Z) with

we put \(U_i=1+\sum _{j=1}^dY^{(j)}_i\,2^{j-1}\). In this section, we define estimators for the criterion given in (6) by estimating the parameters involved in its definition. First, empirical estimators are introduced and properties of the resulting estimator of the criterion are given, and secondly we consider estimators obtained by using non-parametric smoothing procedures as in Mahat et al. (2007).
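The coding \(U_i=1+\sum _{j=1}^dY^{(j)}_i\,2^{j-1}\) is a binary-to-integer map, so each of the \(M=2^d\) cells corresponds to exactly one binary pattern. Below is a minimal Python sketch of the map and its inverse; the function names are ours.

```python
def cell_index(y):
    """U = 1 + sum_j y^(j) 2^(j-1) for a binary vector y = (y^(1), ..., y^(d))."""
    if any(bit not in (0, 1) for bit in y):
        raise ValueError("y must be a 0/1 vector")
    # enumerate starts at 0, so position j carries the weight 2**j = 2^((j+1)-1)
    return 1 + sum(int(bit) << j for j, bit in enumerate(y))


def cell_pattern(k, d):
    """Binary vector y_k of length d such that cell_index(y_k) == k, for k in 1..2**d."""
    if not 1 <= k <= 2 ** d:
        raise ValueError("k must lie in {1, ..., 2**d}")
    return [((k - 1) >> j) & 1 for j in range(d)]


print(cell_index([1, 0, 1]), cell_pattern(6, 3))  # 6 [1, 0, 1]
```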

3.1 Empirical estimators

Putting

we estimate \(p_{m}\), \(p_{\ell |m}\), \(\mu _{m}\), \(\mu _{\ell ,m}\) and \(V_{m}\) respectively by:

and

Then, considering

and

we take as estimator of \(\xi _K\) the random variable \({\widehat{\xi }}^{(n)}_K\) defined by:

Now, we will derive a result which establishes strong consistency for \({\widehat{\xi }}_{K}^{(n)}\) and will be useful for determining its asymptotic distribution and consistency of the proposed method for selecting variables for n approaching \(+\,\infty \). Let us consider the random matrices

with values in the space \({\mathcal {M}}_{qp,M}({\mathbb {R}})\) of \(pq\times M\) matrices. We also introduce the random vectors

and the random matrices

and

Further, we consider the random matrices

with values in

then, we put

Note that, for any (a, b, c, d, e) \(\in \)\({\mathcal {E}}\), we can write:

We introduce the projectors

the vector

and the maps \({\varLambda }_{K|m}\), \({\varPhi }_{\ell ,K|m}\) and \({\varPsi }_{\ell ,K|m}\) defined on \({\mathcal {E}}\) by

and

Then, we can formulate the following theorem that asserts the consistency of \({\widehat{\xi }}_{K}^{(n)}\) for n approaching \(+\,\infty \). Moreover, we provide an asymptotic approximation for \({\widehat{\xi }}_K^{(n)}\) that will be useful for deriving its asymptotic distribution. Let us introduce the following assumptions:

\( ({\mathcal {A}}_2){:}\; \text {For all }(\ell ,m)\in \{1,\ldots ,q\}\times \{1,\ldots ,M\},\,\,p_{\ell \vert m}>0; \)

\( ({\mathcal {A}}_3){:}\;{\mathbb {E}}\left( \Vert X\Vert ^2\right) <+\,\infty . \)

Then, we have:

Theorem 1

Assume that \( ({\mathcal {A}}_1)\), \( ({\mathcal {A}}_2)\) and \( ({\mathcal {A}}_3)\) hold. Then, for any subset K of I:

- (i) \({\widehat{\xi }}_{K}^{(n)}\) converges almost surely to \(\xi _{K}\) as \(n \rightarrow +\,\infty \);

- (ii)

$$\begin{aligned} n {\widehat{\xi }}_{K}^{(n)}= & {} \sum _{m=1}^{M}\sqrt{n}{\widehat{{\varLambda }}}_{K|m}^{(n)}({\widehat{W}}^{(n)}) + \sum _{m=1}^{M}\sum _{\ell =1}^{q}\left( p_{m}{\widehat{{\varPhi }}}_{\ell ,K|m}^{(n)}\left( {\widehat{W}}^{(n)}\right) \right. \\&+\, \left. p_{m}p_{\ell |m}\Vert {\widehat{{\varPsi }}}_{\ell ,K|m}^{(n)}\left( {\widehat{W}}^{(n)}\right) + \sqrt{n}{\varDelta }_{\ell ,K\vert m}\Vert \right) ^{2}\\ \end{aligned}$$

where \(\left( {\widehat{{\varLambda }}}_{K|m}^{(n)}\right) _{n \in {\mathbb {N}}^*}\), \(\left( {\widehat{{\varPhi }}}_{\ell ,K|m}^{(n)}\right) _{n \in {\mathbb {N}}^*}\) and \(\left( {\widehat{{\varPsi }}}_{\ell ,K|m}^{(n)}\right) _{n \in {\mathbb {N}}^*}\) are sequences of random operators that converge almost surely uniformly to \({\varLambda }_{K|m}\), \({\varPhi }_{\ell ,K|m}\) and \({\varPsi }_{\ell ,K|m}\) respectively.
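The estimators of Sect. 3.1 can be illustrated with a short sketch. As an assumption about their exact form, it uses the natural empirical counterparts:

- cell frequencies for \(p_m\) and \(p_{\ell |m}\);
- within-cell and within-cell-and-group sample means for \(\mu _m\) and \(\mu _{\ell ,m}\);
- the within-cell covariance with divisor \(N_m\) (the number of observations in cell m) for \(V_m\).

The normalization used in the paper may differ. Names such as `empirical_estimates` are hypothetical. Empty cells produce no entry, which mirrors the situation in which empirical estimates cannot be computed.

```python
from collections import defaultdict


def _mean(rows):
    p = len(rows[0])
    return [sum(r[j] for r in rows) / len(rows) for j in range(p)]


def empirical_estimates(xs, us, zs):
    """Empirical p_m, p_{l|m}, mu_m, mu_{l,m} and V_m (divisor N_m) for each non-empty cell m."""
    n = len(xs)
    by_cell = defaultdict(list)
    by_cell_group = defaultdict(list)
    for x, u, z in zip(xs, us, zs):
        by_cell[u].append(x)
        by_cell_group[(u, z)].append(x)
    estimates = {}
    for m, rows in by_cell.items():
        n_m = len(rows)
        mu_m = _mean(rows)
        p = len(mu_m)
        v_m = [[sum((r[a] - mu_m[a]) * (r[b] - mu_m[b]) for r in rows) / n_m
                for b in range(p)] for a in range(p)]
        groups = {grp: g for (cell, grp), g in by_cell_group.items() if cell == m}
        estimates[m] = {
            "p_m": n_m / n,
            "p_l_given_m": {grp: len(g) / n_m for grp, g in groups.items()},
            "mu_m": mu_m,
            "mu_l_m": {grp: _mean(g) for grp, g in groups.items()},
            "V_m": v_m,
        }
    return estimates


est = empirical_estimates([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]], [1, 1, 2, 2], [1, 2, 1, 1])
print(est[1]["p_m"], est[1]["mu_m"])  # 0.5 [2.0, 3.0]
```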

3.2 Non-parametric smoothing procedure

As is well known, empirical estimators may be unsuitable because many cell entries of the d-dimensional contingency table formed by the d binary variables can be very small, so that the corresponding estimates can be poor or even non-existent (see Aspakourov and Krzanowski 2000). To overcome these problems, the smoothed non-parametric estimators (of probabilities, means and covariance matrices) introduced in Aspakourov and Krzanowski (2000) and Mahat et al. (2007) can be used to estimate the criterion (6). Denote by D the dissimilarity defined on \(\{1,\ldots ,M\}^2\) by

$$D(m,k)=\sum _{j=1}^d\left( \mathbf{y }_m^{(j)}-\mathbf{y }_k^{(j)}\right) ^2,$$
where, for any \(k\in \{1,\ldots ,M\}\), \(\mathbf{y }_k=\left( \mathbf{y }_k^{(1)},\ldots ,\mathbf{y }_k^{(d)}\right) ^T\in \{0,1\}^d\) is the vector of binary variables satisfying \(1+\sum _{j=1}^d\mathbf{y }_k^{(j)}\,2^{j-1}=k\). Then, given a smoothing parameter \(\lambda \in ]0,1[\), we consider the weights \(w(m,k)=\lambda ^{D(m,k)}\) and estimate \(p_{m}\), \(p_{\ell |m}\), \(\mu _{m}\), \(\mu _{\ell ,m}\) and \(V_{m}\) respectively by:

and

Then, we obtain an estimator \({\widetilde{\xi }}_K^{(n)}\) of the criterion by replacing, in (4), (5) and (6), the parameters \(p_{m}\), \(p_{\ell |m}\), \(\mu _{m}\), \(\mu _{\ell ,m}\) and \(V_{m}\) by the estimators given above. Note that the smoothing parameter \(\lambda \) must be chosen before these parameters can be estimated. Mahat et al. (2007) suggested choosing a value of \(\lambda \) that gives good performance of a classification rule, and proposed obtaining it by minimizing the Brier score (e.g. Hand 1997, p. 101). No consistency result has been given for the aforementioned estimators.
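The smoothing weights can be computed directly from the cell labels. The sketch below takes D(m, k) to be the number of binary variables on which the patterns \(\mathbf{y }_m\) and \(\mathbf{y }_k\) differ; this is our reading of the dissimilarity. Since \(\mathbf{y }_k\) is the binary expansion of k − 1, this equals the number of set bits in (m − 1) XOR (k − 1). The function `smoothed_cell_mean` is a hypothetical illustration of how the weights \(w(m,k)=\lambda ^{D(m,k)}\) borrow strength from neighbouring cells through a kernel-weighted mean. It is not claimed to be the exact estimator of Aspakourov and Krzanowski (2000) or Mahat et al. (2007).

```python
def dissimilarity(m, k):
    """Number of binary variables on which cells m and k differ (assumed form of D)."""
    return bin((m - 1) ^ (k - 1)).count("1")


def weight(m, k, lam):
    """w(m, k) = lam ** D(m, k), with smoothing parameter lam in ]0, 1[."""
    if not 0.0 < lam < 1.0:
        raise ValueError("lam must lie in ]0, 1[")
    return lam ** dissimilarity(m, k)


def smoothed_cell_mean(xs, us, m, lam):
    """Hypothetical kernel-weighted mean of X for cell m: observation i gets weight w(m, U_i)."""
    ws = [weight(m, u, lam) for u in us]
    total = sum(ws)
    p = len(xs[0])
    return [sum(w * x[j] for w, x in zip(ws, xs)) / total for j in range(p)]


print(dissimilarity(2, 3), weight(1, 8, 0.5))  # 2 0.125
# Cell 3 contains no observation here, yet still receives a smoothed estimate.
print(smoothed_cell_mean([[0.0], [4.0]], [1, 2], 3, 0.5))
```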

4 Selection of variables

From Eq. (7) it is seen that estimation of \(I_{1}\) reduces to the determination of the permutation \(\sigma =\left( \sigma (1),\ldots ,\sigma (p)\right) \) and the number of selected variables s. In this section, estimators of \(\sigma \) and s are proposed and consistency properties of these estimators are obtained in Theorem 2 for the case where the criterion \(\xi _K\) is estimated by using empirical estimators as indicated in (8).

4.1 Estimation of \(\sigma \) and s

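Let us consider a sequence \(\left( f_{n}\right) _{n\in {\mathbb {N}}^{*}}\) of functions from I to \({\mathbb {R}}_{+}\) such that \(f_n\sim n^{-\alpha }f\), where \(\alpha \in \left] 0,1/2\right[ \) and f is a strictly decreasing function from I to \({\mathbb {R}}_{+}\). Then, recalling that \(K_{i}=I-\left\{ i\right\} \), we put

$${\widehat{\phi }}_{i}^{\left( n\right) }={\widehat{\xi }}^{(n)}_{K_{i}}+f_{n}(i),\qquad i\in I, \qquad (10)$$

and we take as estimator of \(\sigma \) the random permutation \(\widehat{\sigma }^{\left( n\right) }\) of I such that

$${\widehat{\phi }}_{{\widehat{\sigma }}^{\left( n\right) }\left( 1\right) }^{\left( n\right) }\ge {\widehat{\phi }}_{{\widehat{\sigma }}^{\left( n\right) }\left( 2\right) }^{\left( n\right) }\ge \cdots \ge {\widehat{\phi }}_{{\widehat{\sigma }}^{\left( n\right) }\left( p\right) }^{\left( n\right) },$$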
and if \({\widehat{\phi }}_{{\widehat{\sigma }}^{\left( n\right) }\left( i\right) }^{\left( n\right) }={\widehat{\phi }}_{{\widehat{\sigma }}^{\left( n\right) }\left( j\right) }^{\left( n\right) }\) with \(i<j\), then the order is defined by \({\widehat{\sigma }}^{\left( n\right) }\left( i\right) <{\widehat{\sigma }}^{\left( n\right) }\left( j\right) \).

Remark 2

Just like \(\sigma \), the permutation \(\widehat{\sigma }^{\left( n\right) }=\left( \widehat{\sigma }^{\left( n\right) }(1),\ldots ,\widehat{\sigma }^{\left( n\right) }(p)\right) \) is defined by ranking the \({\widehat{\phi }}_{i}^{\left( n\right) }\)’s in nonincreasing order, with ties ranked in increasing order of the corresponding indices. Then, \(\widehat{\sigma }^{\left( n\right) } (i)\) is the index corresponding to the i-th largest value.

Furthermore, we consider the random set \({\widehat{J}}_{i}^{\left( n\right) }=\left\{ {\widehat{\sigma }}^{\left( n\right) }\left( j\right) ;\;1\le j\le i\right\} \) and the random variable

where \(\left( g_{n}\right) _{n\in {\mathbb {N}}^{*}}\) is a sequence of functions from I to \({\mathbb {R}}_{+}\) such that \(g_n\sim n^{-\beta }g\) where \(\beta \in \left] 0,1\right[ \) and g is a strictly increasing function. Then, we take as estimator of s the random variable

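The variable selection is achieved by taking the random set

$${\widehat{I}}_1^{(n)}=\left\{ {\widehat{\sigma }}^{\left( n\right) }\left( i\right) ;\;1\le i\le {\widehat{s}}^{\left( n\right) }\right\} $$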
as estimator of \(I_{1}\).
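The selection steps can be sketched as follows. The sketch treats the estimated criterion as a black-box callable and uses \(f_n(i)=n^{-\alpha }f(i)\) and \(g_n=n^{-\beta }g\). The defaults \(f(i)=1/i\) and \(g(i)=i\) are hypothetical and correspond to \(h_1\) in Sect. 5.2.

Two further points are assumptions on our part rather than details taken from the text:

- we take \({\widehat{\psi }}_i={\widehat{\xi }}_{{\widehat{J}}_i}+g_n(i)\), indexing the penalty by the rank i (Remark 3 writes the penalty as \(g_n(\widehat{\sigma }^{(n)}(i))\));
- we take \({\widehat{s}}\) to be the smallest minimizer of \({\widehat{\psi }}_i\).

The toy criterion in the example is also invented for illustration. It sets \(\xi _K\) to the total weight of the informative variables missing from K.

```python
def select_variables(xi_hat, p, n, alpha=0.25, beta=0.25,
                     f=lambda i: 1.0 / i, g=lambda i: float(i)):
    """
    Sketch of the selection step; returns (sigma_hat, s_hat, I1_hat).

    xi_hat: callable mapping a frozenset K of 1-based indices to an estimate of xi_K.
    f, g: strictly decreasing / strictly increasing functions on {1, ..., p} (hypothetical defaults).
    """
    indices = range(1, p + 1)
    full = frozenset(indices)
    # phi_i = xi_hat(K_i) + f_n(i), with K_i = I - {i} and f_n(i) = n**(-alpha) * f(i)
    phi = {i: xi_hat(full - {i}) + n ** (-alpha) * f(i) for i in indices}
    # sigma_hat: phi in nonincreasing order, ties broken by increasing index
    sigma_hat = sorted(indices, key=lambda i: (-phi[i], i))
    # psi_i = xi_hat(J_i) + g_n(i), J_i = the i top-ranked variables (rank-indexed penalty: assumption)
    psi = [xi_hat(frozenset(sigma_hat[:i])) + n ** (-beta) * g(i) for i in indices]
    # s_hat: smallest i minimising psi_i (assumption)
    s_hat = min(indices, key=lambda i: (psi[i - 1], i))
    return sigma_hat, s_hat, set(sigma_hat[:s_hat])


# Toy criterion: variables 2 and 4 are informative; xi_K is the weight of those missing from K.
TOY_WEIGHTS = {2: 0.5, 4: 0.3}


def toy_xi(K):
    return sum(w for j, w in TOY_WEIGHTS.items() if j not in K)


print(select_variables(toy_xi, p=5, n=10_000))  # ([2, 4, 1, 3, 5], 2, {2, 4})
```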

Remark 3

Since \(\lim _{n\rightarrow +\,\infty }f_n(i)=0\) and \(\lim _{n\rightarrow +\,\infty }g_n(i)=0\) for any \(i\in I\), it is easily deduced from (10), (11) and Theorem 1 that \({\widehat{\phi }}^{(n)}_i\) and \({\widehat{\psi }}^{(n)}_i\) are consistent estimators of \(\xi _{K_i}\) and \(\xi _{J_i}\) (with \(J_i:=\left\{ \sigma \left( j\right) ;\;1\le j\le i\right\} \)) respectively, just like \({\widehat{\xi }}^{(n)}_{K_i}\) and \({\widehat{\xi }}^{(n)}_{{\widehat{J}}^{(n)}_i}\). The penalty terms \(f_n(i)\) and \(g_n(\widehat{\sigma }^{\left( n\right) } (i))\) introduced in (10) and (11) prevent ties in the values of \({\widehat{\phi }}^{(n)}_i\) and \({\widehat{\psi }}^{(n)}_i\). Indeed, even if \({\widehat{\xi }}^{(n)}_{K_i}= {\widehat{\xi }}^{(n)}_{K_j}\) (resp. \({\widehat{\xi }}^{(n)}_{J_i}={\widehat{\xi }}^{(n)}_{J_j}\)) for \(i\ne j\), we will have \({\widehat{\phi }}^{(n)}_i\ne {\widehat{\phi }}^{(n)}_j\) (resp. \({\widehat{\psi }}^{(n)}_i\ne {\widehat{\psi }}^{(n)}_j\)). This property is needed in the proof of Theorem 2 below to obtain consistency of the proposed estimators \({\widehat{\sigma }}^{(n)}\) and \({\widehat{s}}^{(n)}\). If \({\widehat{\xi }}^{(n)}_{K_i}\) and \({\widehat{\xi }}^{(n)}_{{\widehat{J}}^{(n)}_i}\) are used directly, consistency cannot be obtained because this property may fail. This motivates the introduction of the penalty functions \(f_n\) and \(g_n\) in (10) and (11).

4.2 Consistency

When the empirical estimators defined in Sect. 3.1 are considered, we establish consistency of the preceding estimators. We first give a proposition that is useful for proving the consistency theorem. There exist \(t\in I\) and \(\left( m_{1},\ldots ,m_{t}\right) \in I^{t}\) such that \(m_{1}+\cdots +m_{t}=p\), and \(\xi _{K_{\sigma ( 1) }} =\cdots =\xi _{K_{\sigma ( m_{1}) }}>\xi _{K_{\sigma ( m_{1}+1) }}=\cdots =\xi _{K_{\sigma ( m_{1}+m_{2}) }}>\cdots >\xi _{K_{\sigma ( m_{1}+\cdots +m_{t-1}+1) }}=\cdots =\xi _{K_{\sigma ( m_{1}+\cdots +m_{t}) }}\). We consider the set E of integers \(\ell \) satisfying \(1\le \ell \le t\) and \(m_{\ell }\ge 2 \), and we put \(m_{0}:=0\) and \(F_{\ell }:=\left\{ \sum _{k=0}^{\ell -1}m_k+1,\ldots ,\sum _{k=0}^{\ell }m_k-1\right\} \) (\(\ell \in \left\{ 1,\ldots ,t\right\} \)). Then, introducing the assumption

\( ({\mathcal {A}}_4){:}\;{\mathbb {E}}\left( \Vert X\Vert ^4\right) <+\,\infty , \)

we have:

Proposition 2

Assume that \( ({\mathcal {A}}_1)\), \( ({\mathcal {A}}_2)\) and \( ({\mathcal {A}}_4)\) hold, and that \(E \ne \varnothing \). Then for any \(\ell \in E\) and any \(i \in F_{\ell }\), \(n^{\alpha }\left( {\widehat{\xi }}^{(n)}_{K_{\sigma (i)}} - {\widehat{\xi }}^{(n)}_{K_{\sigma (i+1)}}\right) \) converges in probability to 0, as \(n \rightarrow +\,\infty \).

The following theorem gives consistency of the estimators \({\widehat{\sigma }}^{(n)}\) and \({\widehat{s}}^{(n)}\) defined in Sect. 4.1. The proof of this theorem is similar to that of Theorem 3.1 in Nkiet (2012).

Theorem 2

Assume that \( ({\mathcal {A}}_1)\), \( ({\mathcal {A}}_2)\) and \( ({\mathcal {A}}_4)\) hold. Then:

- (i) \(\lim _{n\rightarrow +\,\infty }P\left( {\widehat{\sigma }}^{\left( n\right) }=\sigma \right) =1;\)

- (ii) \({\widehat{s}}^{\left( n\right) }\) converges in probability to s, as \(n\rightarrow +\,\infty \).

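As a consequence of this theorem, we easily obtain:

$$\lim _{n\rightarrow +\,\infty }P\left( \left\{ {\widehat{\sigma }}^{\left( n\right) }\left( i\right) ;\;1\le i\le {\widehat{s}}^{\left( n\right) }\right\} =I_1\right) =1.$$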
This shows the consistency of our method for selecting variables in discriminant analysis with mixed variables.

Remark 4

Technical arguments in the proofs of Proposition 2 and Theorem 2 motivate the introduction of \(f_n\) and \(g_n\) in (10) and (11) (see Remark 3). They also explain the choice of f, g, \(\alpha \) and \(\beta \) with the related properties. Indeed:

- (i) In the proof of Proposition 2 we have, for instance, the inequality

$$\begin{aligned} |{\widehat{A}}^{(n)}_{i}| \le n^{\alpha -1/2} \left( \Vert {\widehat{{\varLambda }}}^{(n)}_{K_{\sigma (i)}\vert m}\Vert _{\infty } + \Vert {\widehat{{\varLambda }}}^{(n)}_{K_{\sigma (i+1)}\vert m}\Vert _{\infty }\right) \Vert {\widehat{W}}^{(n)}\Vert _{\mathcal {E}} \end{aligned}$$from which we want to prove that \({\widehat{A}}^{(n)}_{i}\) converges in probability to 0 as \(n\rightarrow +\,\infty \). Since, as \(n\rightarrow +\,\infty \), \(\Vert {\widehat{{\varLambda }}}^{(n)}_{K_{\sigma (i)}\vert m}\Vert _{\infty } + \Vert {\widehat{{\varLambda }}}^{(n)}_{K_{\sigma (i+1)}\vert m}\Vert _{\infty }\) converges almost surely to \(\Vert {\varLambda }_{K_{\sigma (i)}\vert m}\Vert _{\infty } + \Vert {\varLambda }_{K_{\sigma (i+1)}\vert m}\Vert _{\infty }\) and \(\Vert {\widehat{W}}^{(n)}\Vert _{\mathcal {E}}\) converges in distribution to \(\Vert W\Vert _{\mathcal {E}}\), where W has a normal distribution, we have to take \(\alpha <1/2\) in order to obtain the required convergence property. In addition, for having \(\lim _{n\rightarrow +\,\infty }f_n(i)=0\) we must take \(\alpha >0\).

- (ii) In the proof of Theorem 2, a similar argument leads to \(0<\beta <1\). Further, we want to obtain \(f(\sigma (i))-f(\sigma (i+1))>0\) and \(g(\sigma (i))-g(\sigma (s))>0\) for \((i,s)\in I^2\) satisfying \(\sigma (i)<\sigma (i+1)\) and \(\sigma (i)>\sigma (s)\). That is why f (resp. g) is taken as a strictly decreasing (resp. increasing) function.

Remark 5

The variable selection method described above can also be performed with the smoothed non-parametric estimators defined in Sect. 3.2: it suffices to replace \({\widehat{\xi }}_{K_i}^{(n)}\) and \({\widehat{\xi }}_{{\widehat{J}}_i}^{(n)}\) by \({\widetilde{\xi }}_{K_i}^{(n)}\) and \({\widetilde{\xi }}_{{\widehat{J}}_i}^{(n)}\) in (10) and (11). However, since no consistency results are known for these estimators, we cannot guarantee consistency of the resulting method.

5 Numerical experiments

In this section, we report the results of simulations carried out to study the properties of the proposed method. Several issues are addressed: the influence on the performance of the procedure of the penalty functions \(f_n\) and \(g_n\) introduced in (10) and (11), of the type of estimator and of the parameters \(\alpha \) and \(\beta \); the optimal choice of these parameters; and a comparison with the method proposed in Mahat et al. (2007).

5.1 The simulated data sets

Each data set was generated as follows. For a given value of p, \(X_i\) is generated from a multivariate normal distribution in \({\mathbb {R}}^p\) with mean \(\mu \) and covariance matrix \({\varGamma }=\frac{1}{2}({\mathbb {I}}_p+J_p)\), where \({\mathbb {I}}_p\) is the \(p\times p\) identity matrix and \(J_p\) is the \(p\times p\) matrix whose elements are all equal to 1. For a given d and \(M=2^d\), \(U_{i}\) is generated from a discrete distribution on \(\{1,\ldots ,M\}\) with probabilities \(q_1,\ldots ,q_M\) such that \(\sum _ {k=1}^Mq_k=1\), which is equivalent to generating \(Y_i\) as a random vector of d binary coordinates. Two models are used for this:

- (i) Model 1: \(q_1=q_2=\cdots =q_M=1/M\) (uniform distribution);

- (ii) Model 2: \(M=8\) and \(q_1=q_3=q_5=0.001\), \(q_2=q_4=q_6=q_7=q_8=0.1994\).
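Below is a sketch of how one observation could be generated, in plain Python. It relies on the fact that if \(W\sim N(0,1)\) and \(\varepsilon \sim N(0,{\mathbb {I}}_p)\) are independent, then \(\sqrt{1/2}\,(\varepsilon +W\mathbf {1}_p)\) has covariance \(\frac{1}{2}({\mathbb {I}}_p+J_p)={\varGamma }\). As in the description above, X and U are drawn independently. The mean vector \(\mu \) is left as an argument because the group means are specified separately. The function names and the seeding are ours.

```python
import math
import random

# q_1, ..., q_8 of Model 2, in cell order
MODEL_2_PROBS = [0.001, 0.1994, 0.001, 0.1994, 0.001, 0.1994, 0.1994, 0.1994]


def cell_probabilities(model, d):
    """q_1, ..., q_M for Model 1 (uniform, M = 2**d) or Model 2 (M = 8, i.e. d = 3)."""
    if model == 1:
        m = 2 ** d
        return [1.0 / m] * m
    if model == 2:
        if d != 3:
            raise ValueError("Model 2 is defined for M = 8, i.e. d = 3")
        return list(MODEL_2_PROBS)
    raise ValueError("model must be 1 or 2")


def draw_observation(rng, mu, probs):
    """One draw of (X, Y, U): X ~ N(mu, (I_p + J_p) / 2), independent of U ~ probs."""
    d = (len(probs) - 1).bit_length()
    u = rng.choices(range(1, len(probs) + 1), weights=probs)[0]
    y = [((u - 1) >> j) & 1 for j in range(d)]  # binary pattern of cell u
    shared = rng.gauss(0.0, 1.0)  # common factor W producing the J_p / 2 part of the covariance
    x = [m_j + math.sqrt(0.5) * (rng.gauss(0.0, 1.0) + shared) for m_j in mu]
    return x, y, u


rng = random.Random(2024)
x, y, u = draw_observation(rng, [0.0] * 5, cell_probabilities(2, 3))
```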

Model 2 corresponds to the case where many cell incidences are very small; it is used to compare empirical and non-parametric estimators. Two groups of data were generated as indicated above, with \(\mu =\mu _1=(0,\dots ,0)^T\) for the first group and \(\mu =\mu _2=(\mu ^{(2)}_1,\ldots ,\mu ^{(2)}_p)^T\) for the second group, where

for \(k=1,\ldots ,[(p+1)/2]\), the notation [x] denoting the integer part of x. Each simulated data set consists of two independent samples, a training sample and a test sample, each of size \(n=100,\, 300,\,500,\,1000\), with \(n_1=n_2=n/2\) observations in the two groups. The training sample is used for selecting variables and the test sample for computing the correct classification rate (CCR), that is, the proportion of correctly classified observations. In the two-group case (i.e. when \(q=2\)), classification after variable selection is performed with rule (1), assuming equal costs and equal prior probabilities for the two groups (hence \(\log (\gamma )=0\)); in the multiple-class case (i.e. when \(q>2\)), rule (2) is used with \(\tau _1=\cdots =\tau _q\). The average CCR over 1000 independent replications is used to measure the performance of the methods.
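Classification after selection can be sketched as follows. We assume the usual Gaussian location-model discriminant score

\(g_\ell (x,m)=\mu _{m\ell }^T{\varSigma }^{-1}x-\frac{1}{2}\mu _{m\ell }^T{\varSigma }^{-1}\mu _{m\ell }+\log p_{m\ell }\),

restricted to the selected coordinates. The \(\log \tau _\ell \) term is dropped because equal priors are used. For two groups with equal costs, picking the larger of the two scores amounts to taking \(\log (\gamma )=0\). The function and argument names are hypothetical. In practice, \(\mu _{m\ell }\), \({\varSigma }\) and \(p_{m\ell }\) would be replaced by estimates.

```python
import math


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(map(float, row)) + [float(b[i])] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - tail) / aug[r][r]
    return x


def classify(x, m, selected, means, sigma, p_cell):
    """
    Allocate an observation with continuous part x in cell m to the highest-scoring group.

    selected: 1-based indices of the retained continuous variables.
    means[(m, l)]: mean vector mu_{ml}; sigma: common p x p covariance matrix.
    p_cell[(m, l)]: P(U = m | Z = l), assumed > 0. Equal priors tau_l are assumed.
    """
    idx = [j - 1 for j in selected]
    sub_sigma = [[sigma[a][b] for b in idx] for a in idx]
    x_sel = [x[j] for j in idx]
    best_group, best_score = None, -math.inf
    for grp in sorted(g for (cell, g) in means if cell == m):
        mu = [means[(m, grp)][j] for j in idx]
        a = solve(sub_sigma, mu)  # Sigma_KK^{-1} mu_{ml} on the selected coordinates K
        score = (sum(ai * xi for ai, xi in zip(a, x_sel))
                 - 0.5 * sum(ai * mi for ai, mi in zip(a, mu))
                 + math.log(p_cell[(m, grp)]))
        if score > best_score:  # ties keep the smaller group label
            best_group, best_score = grp, score
    return best_group


MEANS = {(1, 1): [0.0, 0.0], (1, 2): [2.0, 0.0]}
P_CELL = {(1, 1): 0.5, (1, 2): 0.5}
print(classify([1.8, 5.0], 1, [1], MEANS, [[1.0, 0.0], [0.0, 1.0]], P_CELL))  # 2
```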

5.2 Influence of penalty functions and type of estimator

In order to evaluate the impact of the penalty functions on the performance of our method, we took \(f_n(i)=n^{-\alpha }/ h_k(i)\) and \(g_n(i)=n^{-\beta } h_k(i)\), \(k=1,\ldots ,13\), with \(\alpha =\beta =1/4\), \(h_1(x)=x\), \(h_2(x)=x^{0.1}\), \(h_3(x)=x^{0.5}\), \(h_4(x)=x^{0.9}\), \(h_5(x)=x^{10}\), \(h_6(x)=\ln (x)\), \(h_7(x)=\ln (x)^{0.1}\), \(h_8(x)=\ln (x)^{0.5}\), \(h_9(x)=\ln (x)^{0.9}\), \(h_{10}(x)=x\ln (x)\), \(h_{11}(x)=\left( x\ln (x)\right) ^{0.1}\), \(h_{12}(x)=\left( x\ln (x)\right) ^{0.5}\), \(h_{13}(x)=\left( x\ln (x)\right) ^{0.9}\). For each of these functions, we computed the CCR using both the empirical estimators of Sect. 3.1 and the non-parametric smoothing procedure of Sect. 3.2. For the latter, the smoothing parameter \(\lambda \) was chosen by cross validation on the training sample so as to maximize the correct classification rate. The results for Model 1 are given in Table 1. No marked difference is observed between the results obtained with the different functions, so the choice of penalty function appears to have little influence on the performance of our method. Likewise, Table 1 shows no marked difference between empirical and smoothed estimators when the cell entries of the d-dimensional contingency table of the d binary variables are not small. However, when Model 2 is used, i.e. when these cell entries are very small, Table 2 shows that estimates based on empirical estimators often cannot be computed (NA = not available), most likely because some cells contain no observations, whereas smoothed estimators still give results. This suggests that smoothed estimators should be preferred when performing the proposed method.

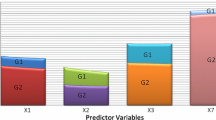

5.3 Influence of parameters \(\alpha \) and \(\beta \)

Since tuning parameters may affect the performance of a statistical procedure, it is important to study their influence. Numerical experiments were therefore carried out to investigate the influence of \(\alpha \) and \(\beta \) on the performance of our method. To this end, we ran simulations as indicated above, taking

$$f_n(i)=\frac{n^{-\alpha }}{h_7(i)}\quad \text {and}\quad g_n(i)=n^{-\beta }\,h_7(i), \qquad (12)$$
with \(\alpha =0.1\), 0.2, 0.3, 0.4, 0.45, and \(\beta \) varying in ]0, 1[. The results are reported in Fig. 1a–c. Although the curves vary with \(\alpha \) and \(\beta \), these parameters do not have a marked impact on the performance of our method: all differences in CCR values are negligible, as they do not exceed 0.003. This suggests that, in practice, our method can be run with arbitrary values of \(\alpha \) and \(\beta \) without significantly affecting its performance. Nevertheless, an interesting alternative is to determine optimal values of these parameters. An approach for doing so via cross validation is described in the following section.

5.4 Choosing optimal \((\alpha ,\beta )\)

We propose to choose \((\alpha ,\beta )\) by leave-one-out cross validation so as to maximize the correct classification rate. For each \(k\in \{1,\ldots ,n\}\), the k-th observation is removed from the training sample, and our variable selection method is applied to the remaining sample with a given value of \((\alpha ,\beta )\) and penalty functions taken as in (12). The removed observation is then allocated to a group \({\widetilde{g}}_{\alpha ,\beta }(k)\) in \(\{1,\ldots ,q\}\) by using rule (1) (two groups) or rule (2) (more than two groups), based on the variables selected in the previous step. Then, we consider

$$\mathrm{CCR}(\alpha ,\beta )=\frac{1}{n}\sum _{k=1}^n{\mathbf {1}}_{\left\{ {\widetilde{g}}_{\alpha ,\beta }(k)=Z_k\right\} },$$

where \({\mathbf {1}}_A\) denotes the indicator of the event A, and we take as optimal value for \((\alpha ,\beta )\) the pair \((\alpha _{opt},\beta _{opt})\) defined by:

$$(\alpha _{opt},\beta _{opt})=\arg \max _{(\alpha ,\beta )}\mathrm{CCR}(\alpha ,\beta ). \qquad (13)$$
5.5 Algorithm

Algorithm 1 describes how the optimal \((\alpha ,\beta )\) is chosen in practice from simulated data. It can easily be adapted to apply our method to real data sets.

Fig. 1 Average of CCR values over 1000 replications for Model 1 with \(q=2\) classes, \(d=3\) binary variables, \(M=2^3=8\) cells, \(p=5\) continuous variables, \(\alpha =0.1,\, 0.2,\, 0.3,\, 0.4,\, 0.45\) and \(\beta \) varying in ]0, 1[. Weightings obtained from penalty functions \(f_7=n^{-\alpha }/ h_7\) and \(g_7=n^{-\beta } h_7\). (a) \(n=100\); (b) \(n=300\); (c) \(n=500\).

5.6 Comparison with the method of Mahat et al. (2007)

In order to compare our method with that of Mahat et al. (2007), 1000 independent replications were made. For each replication, the procedure described in Algorithm 1 was applied:

(i) the training sample is used to select variables with our method and with that of Mahat et al. (2007); our method is used with the penalty functions given in (12) and the optimal \((\alpha ,\beta )\) obtained by leave-one-out cross validation as indicated in (13);

(ii) the test sample is then used to compute the CCR of the two methods.
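The replication scheme above, together with the CCR obtained without variable selection (CCRb), can be sketched as follows. The sketch relies on hypothetical stand-ins throughout: `toy_simulate` is a simple two-group Gaussian model rather than Model 1, the fixed selector `lambda X, y: [0]` replaces step (i) (which in the study uses our method with \((\alpha _{opt},\beta _{opt})\), or the method of Mahat et al. (2007)), and nearest-centroid allocation replaces rules (1)/(2).

```python
import numpy as np

def nearest_centroid(X_tr, y_tr, x):
    """Toy allocation rule (stand-in for rule (1)/(2)): closest group mean."""
    groups = np.unique(y_tr)
    d2 = [np.sum((X_tr[y_tr == g].mean(axis=0) - x) ** 2) for g in groups]
    return groups[int(np.argmin(d2))]

def ccr_on_sample(X_tr, y_tr, X_te, y_te, allocate):
    """Proportion of test observations allocated to their own group."""
    preds = np.array([allocate(X_tr, y_tr, x) for x in X_te])
    return float(np.mean(preds == y_te))

def replicate(simulate, select_vars, allocate, n_rep, seed=0):
    """Average CCR (after selection) and CCRb (all variables) over n_rep replications."""
    rng = np.random.default_rng(seed)
    ccr, ccrb = [], []
    for _ in range(n_rep):
        X_tr, y_tr, X_te, y_te = simulate(rng)      # fresh training and test samples
        cols = select_vars(X_tr, y_tr)              # step (i): select on the training sample
        ccr.append(ccr_on_sample(X_tr[:, cols], y_tr, X_te[:, cols], y_te, allocate))
        ccrb.append(ccr_on_sample(X_tr, y_tr, X_te, y_te, allocate))  # no selection
    return float(np.mean(ccr)), float(np.mean(ccrb))

def toy_simulate(rng, n=100, p=4):
    """Hypothetical two-group Gaussian model; only column 0 is informative."""
    y = rng.integers(0, 2, size=2 * n)
    X = rng.normal(size=(2 * n, p))
    X[:, 0] += 20.0 * y
    return X[:n], y[:n], X[n:], y[n:]

ccr, ccrb = replicate(toy_simulate, lambda X, y: [0], nearest_centroid, n_rep=50)
print(ccr, ccrb)
```

Only 50 replications are used here to keep the example fast; the study above uses 1000.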

Model 1 was used with a large number of variables: \(p=100\) continuous variables and \(d=6\) or 8 binary variables, so that the resulting multinomial variable has \(M=64\) or 256 cells, respectively. The average of CCR over the 1000 replications is then computed. Table 3 gives the results for three methods:

- our method with empirical estimators (Meth1);
- our method with smoothed non-parametric estimators (Meth2);
- the method of Mahat et al. (2007) (Meth3).

The average of CCR before variable selection (denoted by CCRb in Tables 3 and 4), that is, the CCR of the 'true' model involving all p continuous variables, is also computed for comparison. The results in Table 3 do not show that any one of the three methods is clearly superior to the others. However, they further illustrate the benefit of using our method with smoothed non-parametric estimators rather than empirical estimators, especially in a high-dimensional context. Indeed, when \(d=8\) and \(n=300\) or 500, there are more cells than observations in each dataset, so some cells necessarily contain no observation. When \(d=6\), or when \(d=8\) and \(n=1000\), some cells may still be empty. The empirical estimators cannot be computed for such empty cells, whereas this problem does not arise for the smoothed non-parametric estimators. Compared with the 'true' model, our method behaves well, since the difference between CCRb and CCR is small, especially for large values of n.
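The empty-cell problem can be made concrete with a small sketch. Two assumptions are made for illustration: cells are numbered by binary expansion, \(m=1+\sum _{j=1}^{d}y_j2^{j-1}\), and the smoothed estimator is an Aitchison–Aitken-type kernel weighting over cells with a hypothetical smoothing parameter `lam`. This kernel is a stand-in chosen for illustration, not necessarily the smoothed non-parametric estimator of Sect. 3.2. With \(d=8\) (\(M=256\) cells) and 150 observations, at least 106 cells are necessarily empty, so their empirical means are undefined, whereas every smoothed mean is finite because all kernel weights are positive.

```python
import numpy as np

def cell_index(Y):
    """Label each row of the 0/1 matrix Y (n x d) by a cell m in {1, ..., 2**d}.
    Binary-expansion numbering is an assumed convention."""
    Y = np.asarray(Y, dtype=int)
    return 1 + Y @ (2 ** np.arange(Y.shape[1]))

def cell_means_empirical(Y, X):
    """Empirical mean of X within each cell; NaN rows for empty cells."""
    M = 2 ** Y.shape[1]
    cells = cell_index(Y)
    means = np.full((M, X.shape[1]), np.nan)
    for m in np.unique(cells):
        means[m - 1] = X[cells == m].mean(axis=0)
    return means

def cell_means_smoothed(Y, X, lam):
    """Kernel-weighted cell means with Aitchison-Aitken-type weights
    lam**(d - dist) * (1 - lam)**dist, where dist is the Hamming distance
    between a cell and an observation's binary vector (0.5 <= lam < 1 assumed)."""
    Y = np.asarray(Y, dtype=int)
    d = Y.shape[1]
    # Row r of `patterns` is the binary pattern of cell r + 1 (same numbering as cell_index).
    patterns = (np.arange(2 ** d)[:, None] >> np.arange(d)) & 1
    dist = (patterns[:, None, :] != Y[None, :, :]).sum(axis=2)  # shape (M, n)
    W = lam ** (d - dist) * (1 - lam) ** dist                    # all weights > 0
    return (W @ X) / W.sum(axis=1, keepdims=True)

# Illustration: d = 8 binary variables (M = 256 cells) but only 150 observations.
rng = np.random.default_rng(1)
d, n, p = 8, 150, 3
Y = rng.integers(0, 2, size=(n, d))
X = rng.normal(size=(n, p))
emp = cell_means_empirical(Y, X)
smo = cell_means_smoothed(Y, X, lam=0.8)
n_empty = int(np.isnan(emp[:, 0]).sum())
print(n_empty, bool(np.isfinite(smo).all()))
```

As `lam` approaches 1, the weights concentrate on observations lying in the same cell, and the smoothed means of non-empty cells approach the empirical ones.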

One of the advantages of our method is that it can be used when there are more than two groups, which is not the case for classical methods, in particular that of Mahat et al. (2007). To illustrate this, we made simulations for the case of three groups. Model 1 was used again with the first two groups taken as above, and a third group corresponding to the mean \(\mu _3=(\mu ^{(3)}_{1},\ldots ,\mu ^{(3)}_{p})^T\) with:

for \(k=1,\ldots ,[(p+1)/2]\). Taking \(p=25\) and \(d=3\) or 8, we computed the average of CCR over 1000 replications. The results are reported in Table 4. Better results were obtained with the smoothed non-parametric estimators. When \(d=3\), the results are better than those obtained from the 'true' model, whereas when \(d=8\) the difference between CCRb and CCR is larger.

6 A data example

To further demonstrate its practical usefulness, we apply our method to the cover type dataset from the Repository of Machine Learning Databases maintained by the University of California at Irvine. This dataset consists of 1818 trees from areas of the Roosevelt National Forest in Colorado, for which several cartographic variables are observed. There are seven tree types, each coded by an integer: (i) Spruce/Fir; (ii) Lodgepole Pine; (iii) Ponderosa Pine; (iv) Cottonwood/Willow; (v) Aspen; (vi) Douglas-fir; (vii) Krummholz. The tree type is thus an observed variable that induces 7 classes of units. For each unit, 10 numerical variables and 44 binary variables are observed. The numerical variables are: (V1) elevation (in meters); (V2) aspect (in degrees azimuth); (V3) slope (in degrees); (V4) horizontal distance to nearest surface water features; (V5) vertical distance to nearest surface water features; (V6) horizontal distance to nearest roadway; (V7) hillshade index at 9am; (V8) hillshade index at noon; (V9) hillshade index at 3pm; (V10) horizontal distance to nearest wildfire ignition points. We considered 10 of the 44 binary variables: 4 binary variables giving wilderness area designation and 6 binary variables defining soil type.

This data set lends itself to classification, since the type of a tree can be predicted from the above binary and numerical variables by using an allocation rule like the one defined in (2). We are interested in determining which of the 10 numerical variables given above are relevant for this classification. To do so, we applied our method to this data set with \(n=1818\), \(p=10\), \(q=7\), \(d=10\) and \(M=2^{10}=1024\), using the smoothed non-parametric estimators defined in Sect. 3.2 and the penalty functions given in (12). The data set was divided into two parts of equal size \(n_1=n_2=n/2=909\). The first part was used as a training set, both for estimating the optimal \(\alpha \) and \(\beta \) via leave-one-out cross validation as indicated in Sect. 5.4 and for selecting variables, i.e. estimating the set of numerical variables relevant for discriminating between the seven groups. The second part was then used as a test sample for computing the CCR after variable selection, using rule (2) with \(\tau _1=\cdots =\tau _7\). We found that the relevant numerical predictors are V1, V6 and V10, with \(CCR=0.5830\). For comparison, the CCR computed with all 10 continuous variables (denoted by CCRb) is \(CCRb=0.5940\). These results suggest that:

- elevation (V1), horizontal distance to nearest roadway (V6) and horizontal distance to nearest wildfire ignition points (V10) are the most relevant numerical variables for predicting cover type in the presence of the binary variables giving wilderness area designation and defining soil type;
- our method behaves well, since the difference between CCRb and CCR is small (0.0110), even though only 3 of the 10 continuous variables are retained.

This example also shows that our method can be applied when there are more than two groups (here, \(q=7\)) and when both the continuous and the binary variables are high-dimensional.
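A simple count shows why smoothed estimators are needed in this example: the training half of the data contains fewer observations than there are cells, so by the pigeonhole principle many cells are empty.

\[
n_1=\frac{1818}{2}=909<M=2^{10}=1024
\quad\Longrightarrow\quad
\#\{\text{cells with no training observation}\}\ \ge\ 1024-909=115 .
\]

Assuming, as in the location model, that cell-specific quantities are estimated within each group, the shortage is more severe still: a group with \(n_{1j}\) training observations leaves at least \(1024-n_{1j}\) of its cells empty, so the corresponding empirical estimates are undefined.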

7 Conclusion

In this paper, we introduce a variable selection method for discrimination among several groups with both continuous and categorical predictors. The main advantages of the proposal are: (i) no assumption on the distribution of the predictors is needed; in particular, the location model, which is classically assumed in this context, is not required to hold; (ii) it can be used for more than two groups, whereas conventional methods are limited to the case of two groups. Both theoretical results and numerical studies show that our method behaves well, especially when smoothed non-parametric estimators are used for estimating the proposed criterion.

References

Aspakourov O, Krzanowski WJ (2000) Non-parametric smoothing of the location model in mixed variables discrimination. Stat Comput 10:289–297

Bar-Hen A, Daudin JJ (1995) Generalization of the Mahalanobis distance in the mixed case. J Multivar Anal 53:332–342

Bedrick EJ, Lapidus J, Powell JF (2000) Estimating the Mahalanobis distance from mixed continuous and discrete data. Biometrics 56:394–401

Chang PC, Afifi AA (1979) Classification based on dichotomous and continuous variables. J Am Stat Assoc 69:336–339

Daudin JJ (1986) Selection of variables in mixed-variable discriminant analysis. Biometrics 42:473–481

Daudin JJ, Bar-Hen A (1999) Selection in discriminant analysis with continuous and discrete variables. Comput Stat Data Anal 32:161–175

De Leon AR, Carriere KC (2005) A generalized Mahalanobis distance for mixed data. J Multivar Anal 92:174–185

De Leon AR, Soo A, Williamson T (2011) Classification with discrete and continuous variables via general mixed-data models. J Appl Stat 38:1021–1032

Fujikoshi Y (1982) A test for additional information in canonical correlation analysis. Ann Inst Stat Math 34:523–530

Fujikoshi Y (1985) Selection of variables in two-group discriminant analysis by error rate and Akaike’s information criteria. J Multivar Anal 17:27–37

Hand DJ (1997) Construction and assessment of classification rules. Wiley, Chichester

Krusinska E (1989a) New procedure for selection of variables in location model for mixed variable discrimination. Biom J 31:511–523

Krusinska E (1989b) Two step semi-optimal branch and bound algorithm for feature selection in mixed variable discrimination. Pattern Recognit 22:455–459

Krusinska E (1990) Suitable location model selection in the terminology of graphical models. Biom J 32:817–826

Krzanowski WJ (1975) Discrimination and classification using both binary and continuous variables. J Am Stat Assoc 70:782–790

Krzanowski WJ (1983) Stepwise location model choice in mixed variable discrimination. J R Stat Soc C 32:260–266

Krzanowski WJ (1984) On the null distribution of distance between two groups, using mixed continuous and categorical variables. J Classif 1:243–253

Mahat NI, Krzanowski WJ, Hernandez A (2007) Variable selection in discriminant analysis based on the location model for mixed variables. Adv Data Anal Classif 1:105–122

McKay RJ (1977) Simultaneous procedures for variable selection in multiple discriminant analysis. Biometrika 64:283–290

McLachlan GJ (1992) Discriminant analysis and statistical pattern recognition. Wiley, New York

Nkiet GM (2012) Direct variable selection for discrimination among several groups. J Multivar Anal 105:151–163

Olkin I, Tate RF (1961) Multivariate correlation models with mixed discrete and continuous variables. Ann Math Stat 32:448–465

Acknowledgements

We are very grateful to two anonymous referees for their helpful and constructive comments, which led to a much improved manuscript. Research by Alban Mbina Mbina was supported in part by the Agence Universitaire de la Francophonie (AUF).


Cite this article

Mbina Mbina, A., Nkiet, G.M. & Eyi Obiang, F. Variable selection in discriminant analysis for mixed continuous-binary variables and several groups. Adv Data Anal Classif 13, 773–795 (2019). https://doi.org/10.1007/s11634-018-0343-0
