Abstract

In this paper, we analyze some theoretical properties of the problem of minimizing a quadratic function with a cubic regularization term, arising in many methods for unconstrained and constrained optimization that have been proposed in the last years. First we show that, given any stationary point that is not a global solution, it is possible to compute, in closed form, a new point with a smaller objective function value. Then, we prove that a global minimizer can be obtained by computing a finite number of stationary points. Finally, we extend these results to the case where stationary conditions are approximately satisfied, discussing some possible algorithmic applications.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we address the solutions of the following (possibly non-convex) optimization problem:

where \(c \in \mathbb {R}^n\), Q is a symmetric \(n \times n\) matrix, \(\sigma \) is a positive real number and, here and in the rest of the article, \(||\cdot ||\) is the Euclidean norm.

In recent years, there has been a growing interest in studying the properties of problem (1), since functions of the form of m(s) are used as local models (to be minimized) in many algorithmic frameworks for unconstrained optimization [1,2,3,4,5,6,7, 11, 12, 14, 17,18,19], which have been even extended to the constrained case [2, 8, 16]. To be more specific, let us consider the unconstrained optimization problem

where \(f :\mathbb {R}^n \rightarrow \mathbb {R}\) is a twice continuously differentiable function. The class of methods proposed in the above cited papers is mostly characterized by the iteration \(x^{k+1} = x^k + s^k\), being \(s^k\) a (possibly approximate) minimizer of the cubic model

where \(\sigma ^k\) is a suitably chosen positive real number. Interestingly, it can be shown that, under suitable assumptions, this algorithmic scheme is able to achieve quadratic convergence rate and a worst-case iteration complexity better than the gradient method. In particular, if \(\nabla ^2 f(x)\) is Lipschitz continuous and \(s^k\) is a global minimizer of \(m^k(s)\), Nesterov and Polyak [18] proved a worst-case iteration count of order \(O(\varepsilon ^{-3/2})\) to obtain \(||\nabla f(x^k)|| \le \varepsilon \). Cartis et al. [6, 7] generalized this result, obtaining the same complexity bound, but allowing for a symmetric approximation of \(\nabla ^2 f(x^k)\) to be used in \(m^k(s)\) and relaxing the condition that \(s^k\) is a global minimizer of \(m^k(s)\). Moreover, superlinear and quadratic convergence rate were proved under appropriate assumptions, but without requiring \(\nabla ^2 f(x^k)\) to be globally Lipschitz continuous.

The intuition behind the algorithm proposed by Cartis, Gould and Toint is that the parameter \(\sigma ^k\) plays the same role as the (reciprocal of the) trust-region radius in trust-region methods. Moreover, some theoretical properties of trust-region models can be extended to (1), such as the existence of necessary and sufficient conditions for global minimizers even when m(s) is non-convex [6, 14, 18]. In this fashion, Cartis, Gould and Toint proposed the Adaptive Regularization algorithm using Cubics (ARC) that, besides having the theoretical convergence properties mentioned above, is in practice comparable with state-of-the-art trust-region methods.

In this respect, in the above cited papers different strategies were proposed to minimize \(m^k(s)\). In particular, in [6, 18] some iterative techniques were devised to compute global minimizers, that are based on solving a one-dimensional non-linear equation.

Starting from these considerations, here we focus on the solutions of problem (1), pointing out some theoretical properties that, besides their own interest, may be useful from an algorithmic point of view. In particular, we first extend the results obtained in [15] for trust-region models and we show that, given any stationary point of (1) that is not a global minimizer, we can compute, in closed form, a new point that reduces m(s). So, a global minimizer of (1) can be obtained by repeating this step a finite number of times, that is, computing at most \(2(k+1)\) stationary points, where k is the number of distinct negative eigenvalues of the matrix Q. Further, we show how this strategy can be generalized to the case where stationary conditions are approximately satisfied, opening to a possible practical usage of the proposed results.

The rest of the paper is organized as follows. Section 2 is the core of the paper, where we point out some theoretical properties of the stationary points of (1) and analyze how to compute global minima by escaping from stationary points that are not global minimizers. In Sect. 3 we generalize these properties, considering approximate stationary points, and we briefly discuss how these results can used in a more general framework. Finally, we draw some conclusions in Sect. 4.

2 Properties of stationary points

In this section, we present the main results of the paper. First, let us report the definition of stationary points of problem (1) and recall a known result on necessary and sufficient conditions for global optimality, whose proof can be found in [6]. From now on, we indicate with I the \(n \times n\) identity matrix.

Definition 1

We say that \(s^* \in \mathbb {R}^n\) is a stationary point of problem (1) if

or equivalently,

Theorem 1

A point \(s^* \in \mathbb {R}^n\) is a global minimizer of problem (1) if and only if it satisfies stationary conditions (2)–(3) and the matrix \((Q + \sigma ||s^*|| I)\) is positive semidefinite. Moreover, \(s^*\) is unique if \((Q + \sigma ||s^*|| I)\) is positive definite.

Now, exploiting the close relation between problem (1) and the trust-region model (see [9] for an overview on trust-region methods), we extend the results obtained in [15] to show that

-

(i)

given a stationary point \(\bar{s}\) of (1) that is not a global minimizer, we can compute, in closed form, a new point \(\hat{s}\) such that \(m(\hat{s}) < m(\bar{s})\);

-

(ii)

a global minimizer of (1) can be obtained by computing at most \(2(k+1)\) stationary points, where k is the number of distinct negative eigenvalues of the matrix Q.

We start by proving the first point, as stated in the following theorem.

Theorem 2

Let \(\bar{s}\) be a stationary point of problem (1). We define the point \(\hat{s}\) as follows:

-

(a)

if \(c^T \bar{s} > 0\), then

$$\begin{aligned} \hat{s} := -\bar{s}; \end{aligned}$$ -

(b)

if \(c^T \bar{s} \le 0\) and a vector \(d \in \mathbb {R}^n\) exists such that \(d^T(Q + \sigma ||\bar{s}|| I) d < 0\),

-

(i)

if \(\bar{s} = 0\), then

$$\begin{aligned} \hat{s} := \bar{s} + \alpha d, \end{aligned}$$with

$$\begin{aligned} 0< \alpha < -\frac{3 \, d^T Q d}{2 \, \sigma ||d||^3}; \end{aligned}$$ -

(ii)

if \(\bar{s} \ne 0\) and \(\bar{s}^T d \ne 0\), then

$$\begin{aligned} \hat{s} := \bar{s} - 2 \frac{\bar{s}^T d}{||d||^2} d; \end{aligned}$$ -

(iii)

if \(\bar{s} \ne 0\) and \(\bar{s}^T d = 0\), then

$$\begin{aligned} \hat{s} := \bar{s} - 2 \frac{\bar{s}^T z}{||z||^2} z, \end{aligned}$$where \(z := \bar{s} + \alpha d\) and

$$\begin{aligned} \alpha > \frac{c^T d - \sqrt{\left( c^T d\right) ^2 + \left( c^T \bar{s}\right) \bigl [d^T (Q + \sigma ||\bar{s}|| I) d\bigr ]}}{d^T (Q + \sigma ||\bar{s}|| I) d}. \end{aligned}$$

-

(i)

We have that

Proof

In case (a), we can write

Now, we consider case (b) and distinguish the three subcases.

-

(i)

From (2)–(3), we have that \(c=0\). Thus, we can write

$$\begin{aligned} m(\bar{s} + \alpha d) = m(\alpha d) = \frac{1}{2} \alpha ^2 d^T Q d + \frac{1}{3} \sigma \alpha ^3 ||d||^3, \quad \forall \alpha \in \mathbb {R}^n. \end{aligned}$$Consequently,

$$\begin{aligned} m(\bar{s} + \alpha d) = \frac{1}{6} \alpha ^2 \left( 3 d^T Q d + 2 \sigma \alpha ||d||^3 \right) < 0 = m(\bar{s}), \end{aligned}$$for all \(\displaystyle {0< \alpha < -\frac{3 \, d^T Q d}{2 \, \sigma ||d||^3}}\).

-

(ii)

First, we observe that

$$\begin{aligned} \biggl \Vert \bar{s} - 2 \frac{\bar{s}^T d}{||d||^2} d \biggr \Vert ^2 = ||\bar{s}||^2 + \biggl (2 \frac{\bar{s}^T d}{||d||^2} \biggr )^2 ||d||^2 - 4 \frac{\bar{s}^T d}{||d||^2} \left( \bar{s}^T d\right) = ||\bar{s}||^2. \end{aligned}$$(4)Moreover, the function m(s) can be written as

$$\begin{aligned} m(s) = c^T s + \frac{1}{2} s^T (Q + \sigma ||s|| I) s - \frac{1}{6} \sigma ||s||^3. \end{aligned}$$(5)Using (4) and (5), we can write \(\displaystyle {m\biggl (\bar{s} - 2 \frac{\bar{s}^T d}{||d||^2} d\biggr )}\) as

$$\begin{aligned} c^T \biggl (\bar{s} - 2 \frac{\bar{s}^T d}{||d||^2} d\biggr ) + \frac{1}{2} \biggl (\bar{s} - 2 \frac{\bar{s}^T d}{||d||^2} d\biggr )^T (Q + \sigma \Vert \bar{s} \Vert I) \biggl (\bar{s} - 2 \frac{\bar{s}^T d}{||d||^2} d\biggr ) - \frac{1}{6} \sigma ||\bar{s}||^3. \end{aligned}$$Rearranging and taking into account that \(\nabla m(\bar{s}) = Q \bar{s} + \sigma ||\bar{s}|| \bar{s} + c\), we obtain

$$\begin{aligned} m\biggl (\bar{s} - 2 \frac{\bar{s}^T d}{||d||^2} d\biggr ) = m(\bar{s}) + \frac{1}{2} \biggl ( 2 \frac{\bar{s}^T d}{||d||^2} \biggr )^2 d^T (Q + \sigma ||\bar{s}|| I) d - 2 \frac{\bar{s}^T d}{||d||^2} \nabla m(\bar{s})^T d. \end{aligned}$$(6)Stationary conditions (2)–(3) imply that \(\nabla m(\bar{s}) = 0\). Exploiting the fact that \(d^T(Q + \sigma ||\bar{s}|| I) d < 0\), we get \(\displaystyle {m\biggl (\bar{s} - 2 \frac{\bar{s}^T d}{||d||^2} d\biggr )} < m(\bar{s})\).

-

(iii)

Using the definition of z, we can write

$$\begin{aligned} \begin{aligned} z^T (Q + \sigma ||\bar{s}|| I) z&= (\bar{s} + \alpha d)^T (Q + \sigma ||\bar{s}|| I) (\bar{s} + \alpha d) \\&= \bar{s}^T (Q + \sigma ||\bar{s}|| I) \bar{s} + \alpha ^2 d^T (Q + \sigma ||\bar{s}|| I) d \\&\quad + 2 \alpha d^T (Q + \sigma ||\bar{s}|| I) \bar{s}. \end{aligned} \end{aligned}$$From stationary conditions (2)–(3), we have that \(Q \bar{s} + \sigma ||\bar{s}|| \bar{s} = -c\). So, we obtain

$$\begin{aligned} z^T (Q + \sigma ||\bar{s}|| I) z = \alpha ^2 d^T (Q + \sigma ||\bar{s}|| I) d - 2 \alpha c^T d - c^T \bar{s}. \end{aligned}$$It is straightforward to verify that the right-hand side of the above equality is negative for all \(\alpha > \tilde{\alpha }\), where

$$\begin{aligned} \tilde{\alpha } = \frac{c^T d - \sqrt{\left( c^T d\right) ^2 + \left( c^T \bar{s}\right) \bigl [d^T (Q + \sigma ||\bar{s}|| I) d\bigr ]}}{d^T (Q + \sigma ||\bar{s}|| I) d}. \end{aligned}$$Consequently, since \(z = \bar{s} + \alpha d\) with \(\alpha > \tilde{\alpha }\), it follows that \(z^T (Q + \sigma ||\bar{s}|| I) z < 0\). We can thus proceed as in case (ii) by defining the point \(\hat{s} = \bar{s} - 2 \dfrac{\bar{s}^T z}{||z||^2} z\) and we get the result. \(\square \)

Remark 1

Conditions of Theorem 2 are satisfied if and only if the stationary point \(\bar{s}\) is not a global minimizer. It follows from the fact that, if (a) or (b) hold at \(\bar{s}\), then \(\bar{s}\) is not a global minimizer; vice versa, if \(\bar{s}\) is not a global minimizer, then \((Q + \sigma ||\bar{s}|| I)\) is not positive semidefinite (see Theorem 1) and then (b) holds.

Now, we show how the above result can be exploited to obtain a global minimizer of (1) by computing a finite number of stationary points. We first need the following lemma, stating that two stationary points of problem (1) with the same norm produce the same objective value.

Lemma 1

Let \(\hat{s}\) and \(\bar{s}\) be two points satisfying stationary conditions (2)–(3) with the same \(\lambda \). Then,

Proof

For every pair \((s,\lambda )\) satisfying (2)–(3), we can write

Then,

\(\square \)

The following proposition establishes a bound on the maximum number of stationary points with different norm. The proof follows the same line of arguments used in [6] to characterize global minimizers of the cubic model. It is entirely reported here for the sake of completeness.

Proposition 1

At most \(2(k+1)\) points that satisfy (2)–(3) with distinct values of \(\lambda \) exist, where k is the number of distinct negative eigenvalues of Q.

Proof

First, we observe that if \(\lambda = 0\), then \(s = 0\) is the only point that satisfies (2)–(3). So, in the following we consider the case in which \(\lambda > 0\) (i.e., \(s \ne 0\)). Let \(V \in \mathbb {R}^{n \times n}\) be an orthonormal matrix such that

where \(M := \text {diag}_{i = 1,\dots ,n}\{\mu _i\}\) and \(\mu _1 \le \cdots \le \mu _n\) are the eigenvalues of Q. Now, we can introduce the vector \(a \in \mathbb {R}^n\) and consider the transformation

Pre-multiplying (2) by \(V^T\), we get

and then

where \(\beta = -V^T c\).

The above expression can be equivalently written as

Moreover, from (3) we get

Using (7) and (8), we can rewrite the stationary conditions as follows:

where

Now, we have two cases.

-

(i)

\(\beta _i = 0\) for all \(i = 1,\ldots ,n\) (i. e., \(c=0\)). It follows that \(g(\lambda ) = 0\) in all the domain and system (9) does not admit solutions. In this case, only \(s = 0\) satisfies stationary conditions (2)–(3).

-

(ii)

An index \(i \in \{1,\ldots ,n\}\) exists such that \(\beta _i \ne 0\) (i. e., \(c \ne 0\)). Without loss of generality, we assume that \(\mu _1,\ldots ,\mu _p \le 0\), with \(p \le n\). Then \(g(\lambda )\) is defined in the following \(n+2\) subintervals:

$$\begin{aligned} \begin{aligned}&\left( -\infty , -\mu _n\right) \; \cup \; \left( -\mu _n ,-\mu _{n-1}\right) \; \cup \; \cdots \; \cup \; \left( -\mu _{p+1}, 0\right) \\&\quad \cup \left( 0, -\mu _p\right) \; \cup \; \cdots \; \cup \; \left( -\mu _2 ,-\mu _1\right) \; \cup \; \left( -\mu _1, +\infty \right) . \end{aligned} \end{aligned}$$Computing the derivatives of \(g(\lambda )\), we obtain

$$\begin{aligned} \frac{d}{d \lambda } g(\lambda )= & {} - 2 \sum _{i=1}^n \beta _i^2 \left[ \lambda (\mu _i + \lambda )\right] ^{-3} (\mu _i + 2\lambda ), \\ \frac{d^2}{d \lambda ^2} g(\lambda )= & {} 2 \sum _{i=1}^n \beta _i^2 \left[ \lambda (\mu _i + \lambda )\right] ^{-4} \left[ 10 \lambda ^2 + 10 \mu _i \lambda + 3 \mu _i^2\right] . \end{aligned}$$It is straightforward to verify that \(\dfrac{d^2}{d \lambda ^2} g(\lambda ) > 0\) in all the points where \(g(\lambda )\) is defined, that is, \(g(\lambda )\) is strictly convex in all the non-empty subintervals that define its domain. Taking into account that \({\lim _{\lambda \rightarrow 0} g(\lambda ) = +\infty }\), \({\lim _{\lambda \rightarrow -\mu _i} g(\lambda ) = +\infty }\) for all \(\beta _i \ne 0\) and \({\lim _{\lambda \rightarrow \pm \infty } g(\lambda ) = 0}\), we get that \(g(\lambda )\) has at most \(2(n+1)\) roots: at most one in each extreme subinterval and at most two in all the other subintervals. Now, let \(k \le p\) be the number of distinct negative eigenvalues \(\mu _i\). It follows that system (9) has at most \(2k+1\) solutions: at most two in each subinterval \((0, -\mu _k)\), \((-\mu _k, -\mu _{k-1}),\dots ,(-\mu _2 ,-\mu _1)\), and at most one in the subinterval \((-\mu _1,+\infty )\). Taking into account the case \(\lambda = 0\), we conclude that there exist at most \(2(k+1)\) distinct values of \(\lambda \) satisfying stationary conditions (2)–(3). \(\square \)

From Lemma 1 and Proposition 1, we easily get the following corollary, establishing a bound on the maximum number of distinct values assumed by the objective function m(s) at stationary points.

Corollary 1

The maximum number of distinct values of the objective function m(s) at stationary points is \(2(k+1)\), where k is the number of distinct negative eigenvalues of Q.

At least from a theoretical point of view, Theorem 2 and Corollary 1 suggest a possible iterative strategy to obtain a global minimizer of problem (1). Namely, we can compute a stationary point \(\bar{s}\) by some local algorithm and check the conditions of Theorem 2: if none of them is satisfied, then \(\bar{s}\) is a global minimizer (see Remark 1); otherwise, we get a new point \(\hat{s}\) such that \(m(\hat{s}) < m(\bar{s})\) and, starting from \(\hat{s}\), we can compute a new stationary point and iterate. Corollary 1 ensures that this procedure is finite and returns a global minimizer of problem (1).

To be rigorous, the above strategy is well defined under the assumption that stationary points can be computed in a finite number of iterations by a local algorithm. Unfortunately, optimization methods only ensure asymptotic convergence and, in practice, a point \(\bar{s}\) is returned such that \(||\nabla m(\bar{s})|| \le \varepsilon \), being \(\varepsilon \) a desired tolerance. In the next section, we show how Theorem 2 can be generalized to cope with this case and discuss possible algorithmic applications.

3 Extension to approximate stationary points

In this section, first we extend Theorem 2 to the case where stationary conditions are approximately satisfied, and then we briefly discuss how these results may be used in an algorithmic framework, showing some numerical examples.

Assuming that \(\bar{s} \in \mathbb {R}^n\) is a non-stationary point of problem (1), of course we have \(||\nabla m(\bar{s})|| > 0\), or equivalently, \(|\nabla m(\bar{s})^T d| > 0\) for some \(d \in \mathbb {R}^n\). The next theorem states some conditions to compute a point \(\hat{s}\) such that \(m(\hat{s}) < m(\bar{s})\).

Theorem 3

Given \(\bar{s} \in \mathbb {R}^n\), let us define the point \(\hat{s}\) as follows:

-

(a)

if \(c^T \bar{s} > 0\), then

$$\begin{aligned} \hat{s} := -\bar{s}; \end{aligned}$$ -

(b)

if \(c^T \bar{s} \le 0\) and a vector \(d \in \mathbb {R}^n\) exists such that \(d^T(Q + \sigma ||\bar{s}|| I) d < -\varepsilon _2 ||d||^2\),

-

(i)

if \(\bar{s} = 0\) and \(\varepsilon _2 \ge 0\), then, assuming without loss of generality that \(c^T d \le 0\),

$$\begin{aligned} \hat{s} := \bar{s} + \alpha d, \end{aligned}$$with \(\displaystyle {0< \alpha < -\frac{3 \, d^T Q d}{2 \, \sigma ||d||^3}}\);

-

(ii)

if \(\bar{s} \ne 0\), \(\bar{s}^T d \ne 0\) and \(\varepsilon _2 \ge \biggl |\dfrac{\nabla m(\bar{s})^T d}{\bar{s}^T d}\biggr |\), then

$$\begin{aligned} \hat{s} := \bar{s} - 2 \frac{\bar{s}^T d}{||d||^2} d; \end{aligned}$$ -

(iii)

if \(\bar{s} \ne 0\), \(\bar{s}^T d = 0\) and \(\varepsilon _2 > \dfrac{|\nabla m(\bar{s})^T \bar{s}|}{||\bar{s}||^2}\), then, assuming without loss of generality that \(\nabla m(\bar{s})^T d \ge 0\),

$$\begin{aligned} \hat{s} := \bar{s} - 2 \frac{\bar{s}^T z}{||z||^2} z, \end{aligned}$$where \(z:= \bar{s} + \alpha d\) and \(\alpha > 0\) is sufficiently large to satisfy

$$\begin{aligned} z^T(Q + \sigma ||\bar{s}|| I)z < -\varepsilon _2 ||z||^2. \end{aligned}$$

-

(i)

We have that

Proof

The proof of case (a) is the same as for Theorem 2. Now, we consider case (b) and distinguish the three subcases.

-

(i)

Since we are assuming that \(c^T d \le 0\), we can write

$$\begin{aligned} \begin{aligned} m(\bar{s} + \alpha d)&= m(\alpha d) = \alpha c^T d + \frac{1}{2} \alpha ^2 d^T Q d + \frac{1}{3} \sigma \alpha ^3 ||d||^3 \\&\le \frac{1}{2} \alpha ^2 d^T Q d + \frac{1}{3} \sigma \alpha ^3 ||d||^3 \end{aligned} \end{aligned}$$and we obtain the result by the same arguments used in the proof of point (b)-(i) of Theorem 2.

-

(ii)

Using (6), and exploiting the fact that \(d^T(Q + \sigma ||\bar{s}|| I) d < -\varepsilon _2 ||d||^2\), we get

$$\begin{aligned} \begin{aligned}&m\biggl (\bar{s} - 2 \frac{\bar{s}^T d}{||d||^2} d\biggr ) < m(\bar{s}) - \frac{1}{2} \biggl ( 2 \frac{\bar{s}^T d}{||d||^2} \biggr )^2 \varepsilon _2 ||d||^2 - 2 \frac{\bar{s}^T d}{||d||^2} \nabla m(\bar{s})^T d \\&\quad \le m(\bar{s}) - \frac{1}{2} \biggl ( 2 \frac{\bar{s}^T d}{||d||^2} \biggr )^2 \varepsilon _2 ||d||^2 + 2 \frac{\left| {\bar{s}^T d}\right| }{||d||^2} \left| {\nabla m(\bar{s})^T d}\right| \\&\quad = m(\bar{s}) - 2 \frac{\left| {\bar{s}^T d}\right| }{||d||^2} \Bigl ( \left| {\bar{s}^T d}\right| \varepsilon _2 - \left| {\nabla m(\bar{s})^T d}\right| \Bigr ) \le m(\bar{s}), \end{aligned} \end{aligned}$$where the last inequality follows from the fact that \(\varepsilon _2 \ge \biggl |\dfrac{\nabla m(\bar{s})^T d}{\bar{s}^T d}\biggr |\).

-

(iii)

Since \(d \ne 0\), we can first assume that \(\alpha > 0\) is sufficiently large to satisfy \(z \ne 0\). Replacing d with z in (6), we obtain

$$\begin{aligned} m\biggl (\bar{s} - 2 \frac{\bar{s}^T z}{||z||^2} z\biggr ) = m(\bar{s}) + \frac{1}{2} \biggl ( 2 \frac{\bar{s}^T z}{||z||^2} \biggr )^2 z^T (Q + \sigma ||\bar{s}|| I) z - 2 \frac{\bar{s}^T z}{||z||^2} \nabla m(\bar{s})^T z. \end{aligned}$$Taking into account that \(z = \bar{s} + \alpha d\) and \(\bar{s}^T z = \bar{s}^T (\bar{s} + \alpha d) = ||\bar{s}||^2\), we can write

$$\begin{aligned} \begin{aligned} m\biggl (\bar{s} - 2 \frac{\bar{s}^T z}{||z||^2} z\biggr )&= m(\bar{s}) + \frac{1}{2} \biggl ( 2 \frac{||\bar{s}||^2}{||z||^2} \biggr )^2 z^T (Q + \sigma ||\bar{s}|| I) z - 2 \frac{||\bar{s}||^2}{||z||^2} \nabla m(\bar{s})^T (\bar{s} + \alpha d) \\&\le m(\bar{s}) + \frac{1}{2} \biggl ( 2 \frac{||\bar{s}||^2}{||z||^2} \biggr )^2 z^T (Q + \sigma ||\bar{s}|| I) z - 2 \frac{||\bar{s}||^2}{||z||^2} \nabla m(\bar{s})^T \bar{s} \\&\le m(\bar{s}) + 2 \frac{||\bar{s}||^2}{||z||^2} \biggl (||\bar{s}||^2 \, \frac{z^T (Q + \sigma ||\bar{s}|| I) z}{||z||^2} + \left| {\nabla m(\bar{s})^T \bar{s}}\right| \biggr ), \end{aligned} \end{aligned}$$(10)where the first inequality follows from the fact that \(\nabla m(\bar{s})^T d \ge 0\) and \(\alpha > 0\). Now, let us define \(\theta \in (0,1)\) such that \(\varepsilon _2 = \dfrac{1}{\theta } \dfrac{|\nabla m(\bar{s})^T \bar{s}|}{||\bar{s}||^2}\). Exploiting the fact that \(\theta \in (0,1)\) and \(d^T(Q + \sigma ||\bar{s}|| I) d < -\varepsilon _2 ||d||^2\), for sufficiently large \(\alpha > 0\) we have

$$\begin{aligned} \frac{\biggl (\dfrac{\bar{s}}{\alpha }+d\biggr )^T (Q + \sigma ||\bar{s}|| I) \biggl (\dfrac{\bar{s}}{\alpha }+d\biggr )}{\dfrac{||\bar{s}||^2}{\alpha ^2} + ||d||^2} = \frac{(\bar{s} + \alpha d)^T (Q {+} \sigma ||\bar{s}|| I) (\bar{s} {+} \alpha d)}{||\bar{s}||^2 {+} \alpha ^2 ||d||^2} {<} -\theta \varepsilon _2. \end{aligned}$$Taking into account that \(z = \bar{s} + \alpha d\) and \(||z||^2 = ||\bar{s}||^2 + \alpha ^2 ||d||^2\), it follows that, for sufficiently large \(\alpha >0\),

$$\begin{aligned} \begin{aligned} \frac{z^T (Q + \sigma ||\bar{s}|| I) z}{||z||^2} < -\theta \varepsilon _2. \end{aligned} \end{aligned}$$Combining this inequality with (10), for sufficiently large \(\alpha > 0\) we can write

$$\begin{aligned} m\biggl (\bar{s} - 2 \frac{\bar{s}^T z}{||z||^2} z\biggr ) < m(\bar{s}) + 2 \frac{||\bar{s}||^2}{||z||^2} \Bigl (-\theta \varepsilon _2 ||\bar{s}||^2 + \left| {\nabla m(\bar{s})^T \bar{s}}\right| \Bigr ) = m(\bar{s}), \end{aligned}$$where the equality follows from the fact that \(\varepsilon _2 = \dfrac{1}{\theta } \dfrac{|\nabla m(\bar{s})^T \bar{s}|}{||\bar{s}||^2}\). \(\square \)

Remark 2

It is straightforward to verify that, when \(\bar{s}\) is a stationary point, Theorem 3 coincides with Theorem 2.

Remark 3

Using (6), Theorem 3 can be strengthened by replacing the condition b-(ii) with the condition that a direction d exists such that \(\bar{s} \ne 0\), \(\bar{s}^T d \ne 0\) and

Remark 4

From a computational point of view, condition (a) of Theorem 3 can be easily checked with a negligible cost. To check condition (b), we have to verify if there exists a negative curvature direction with respect to the matrix \((Q + \sigma ||\bar{s}|| I)\). This can be done, for example, by calculating the smallest eigenvalue and the associate eigenvector of that matrix. If such a direction exists, we see that, for case (b)-(i), this is enough to ensure that \(m(\hat{s}) < m(\bar{s})\). For case (b)-(ii) and (b)-(iii), we have to check if \(\varepsilon _2\) is sufficiently large. It is easy to verify that, if \(||\nabla m(\bar{s})|| \le \varepsilon \), then condition (b)-(ii) is verified whenever \(\varepsilon _2 \ge \varepsilon ||d||/|\bar{s}^T d|\), and condition (b)-(iii) is verified whenever \(\varepsilon _2 > \varepsilon /||\bar{s}||\). Therefore, the threshold value of \(\varepsilon _2\) for satisfying conditions b-(ii) and b-(iii) is related to \(||\nabla m(\bar{s})||\), that is, the tolerance we have chosen to solve problem (1).

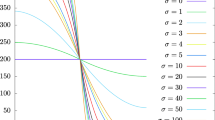

Let us concluding this section by discussing some possible algorithmic applications of our results, even if defining a proper optimization method is beyond the scope of the paper. A first naive strategy to exploit Theorem 3 is checking if one of its conditions holds after that an approximate stationary point \(\bar{s}\) of problem (1) is computed with the desired tolerance by a local algorithm. If this is the case, then we can compute the point \(\hat{s}\) and restart the local algorithm from \(\hat{s}\). To provide some numerical examples, we have inserted this strategy within the ARC algorithm described in [6, 7] to minimize the cubic model at each iteration, giving rise to an algorithm that we name ARC\(^+\). In particular, at every iteration of ARC\(^+\) and ARC, a truncated-Newton method has been used as local solver for the minimization of the cubic model, starting from a randomly chosen point. The codes have been written in Matlab, using built-in functions to compute eigenvalues and eigenvectors needed to check the conditions of Theorem 3. We have considered a set of 130 unconstrained test problems of the form \(\min _{x \in \mathbb {R}^n} \, f(x)\) from the CUTEst collection [13] and, among them, we have then selected the 39 for which the two algorithms performed differently and both converged to a point \(x^*\) such that \(||\nabla f(x^*)||_{\infty } \le 10^{-5}\) within a maximum number of iterations, set equal to \(10^5\). The results on this subset of problems are reported in Table 1, where obj and iter denote the final objective value and the number of iterations, respectively. We see that, in 28 out 39 cases, ARC\(^+\) converged in fewer iterations. Taking a look to the performance profile [10] reported in Fig. 1, we also observe that, on the considered subset of problems, ARC\(^+\) is more robust than ARC in terms of number of iterations. We have then repeated the same experiments by using the Cauchy point as starting point for the minimization of the cubic model, but no significative difference emerged between ARC\(^+\) and ARC. This opens a question about possible relations between the Cauchy point and the global minimizers, which can be subject of future research.

It is worth pointing out that the above described ARC\(^+\) method could be too expensive in terms of CPU time, since it requires the computation of eigenvalues and eigenvectors at the end of each local minimization. Nevertheless, a more refined way to exploit Theorem 3 for algorithmic purposes can be based on checking if one of its conditions is satisfied during the iterations of the local method, instead of at the end. This can be done efficiently when the local method is able to detect negative curvature directions. Assuming that a sequence of points \(\{s^k\}\) and a sequence of directions \(\{d^k\}\) are produced by the local algorithm, since \(\nabla ^2 m(s^k) = Q + \sigma ||s^k|| I + \sigma \dfrac{s^k (s^k)^T}{||s^k||}\), we have \(\displaystyle {(d^k)^T (Q + \sigma ||s^k|| I) d^k = (d^k)^T \nabla ^2 m(s^k) d^k - \sigma \frac{((s^k)^T d^k)^2}{||s^k||}}\). Therefore, if \(d^k\) is a negative curvature direction with respect to \(\nabla ^2 m(s^k)\), condition (b) of Theorem 3 is verified for some \(\varepsilon _2 \ge 0\), provided \(c^T \bar{s} \le 0\). Then, a new point that ensures a decrease in the objective function may be easily computed. In this case, condition (b) of Theorem 3 can therefore be checked without the need of computing eigenvalues and eigenvectors. Finally, other checks can be included in the scheme to ensure convergence of such modification of the local algorithm.

4 Conclusions

In this paper, we have highlighted some theoretical properties of the stationary points of problem (1), whose solutions are of interest for many optimization methods. We have shown that, given a stationary point of problem (1) that is not a global minimizer, it is possible to compute, in closed form, a new point that reduces the objective function value. Then, we have pointed out how a global minimum point of problem (1) can be obtained by computing at most \(2(k+1)\) stationary points, where k is the number of distinct negative eigenvalues of the matrix Q. Further, we have extended these results to the case where stationary conditions are approximately satisfied, sketching some possible algorithmic applications.

Performance profile for the number of iterations related to the numerical experiments reported in Table 1

We think that the most natural extension of the results presented in this paper is the definition of a proper algorithm for unconstrained optimization, based on the iterative computation of the solutions of problem (1), for which some preliminary ideas have been proposed at the end of Sect. 3. This can be a challenging task for future research.

References

Bellavia, S., Morini, B.: Strong local convergence properties of adaptive regularized methods for nonlinear least squares. IMA J. Numer. Anal. 35(2), 947–968 (2014)

Benson, H.Y., Shanno, D.F.: Interior-point methods for nonconvex nonlinear programming: cubic regularization. Comput. Optim. Appl. 58(2), 323–346 (2014)

Bianconcini, T., Sciandrone, M.: A cubic regularization algorithm for unconstrained optimization using line search and nonmonotone techniques. Optim. Methods Softw. 31(5), 1008–1035 (2016)

Bianconcini, T., Liuzzi, G., Morini, B., Sciandrone, M.: On the use of iterative methods in cubic regularization for unconstrained optimization. Comput. Optim. Appl. 60(1), 35–57 (2015)

Birgin, E.G., Gardenghi, J.L., Martínez, J.M., Santos, S.A., Toint, P.L.: Worst-case evaluation complexity for unconstrained nonlinear optimization using high-order regularized models. Math. Program. 163(1–2), 359–368 (2017)

Cartis, C., Gould, N.I.M., Toint, P.L.: Adaptive cubic regularisation methods for unconstrained optimization. Part I: motivation, convergence and numerical results. Math. Program. 127(2), 245–295 (2011)

Cartis, C., Gould, N.I.M., Toint, P.L.: Adaptive cubic regularisation methods for unconstrained optimization. Part II: worst-case function-and derivative-evaluation complexity. Math. Program. 130(2), 295–319 (2011)

Cartis, C., Gould, N.I.M., Toint, P.L.: An adaptive cubic regularization algorithm for nonconvex optimization with convex constraints and its function-evaluation complexity. IMA J. Numer. Anal. 32(4), 1662–1695 (2012)

Conn, A.R., Gould, N.I.M., Toint, P.L.: Trust Region Methods. SIAM, Philadelphia (2000)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2002)

Dussault, J.P.: Simple unified convergence proofs for the trust-region and a new ARC variant. Tech. rep., University of Sherbrooke, Sherbrooke, Canada (2015)

Gould, N.I.M., Porcelli, M., Toint, P.L.: Updating the regularization parameter in the adaptive cubic regularization algorithm. Comput. Optim. Appl. 53(1), 1–22 (2012)

Gould, N.I.M., Orban, D., Toint, P.L.: CUTEst: a constrained and unconstrained testing environment with safe threads for mathematical optimization. Comput. Optim. Appl. 60(3), 545–557 (2015)

Griewank, A.: The modification of Newtons method for unconstrained optimization by bounding cubic terms. Tech. Rep. NA/12 (1981)

Lucidi, S., Palagi, L., Roma, M.: On some properties of quadratic programs with a convex quadratic constraint. SIAM J. Optim. 8(1), 105–122 (1998)

Nesterov, Y.: Cubic regularization of Newton’s method for convex problems with constraints. Tech. Rep. 39, CORE (2006)

Nesterov, Y.: Accelerating the cubic regularization of Newtons method on convex problems. Math. Program. 112(1), 159–181 (2008)

Nesterov, Y., Polyak, B.T.: Cubic regularization of Newton method and its global performance. Math. Program. 108(1), 177–205 (2006)

Weiser, M., Deuflhard, P., Erdmann, B.: Affine conjugate adaptive Newton methods for nonlinear elastomechanics. Optim. Methods Softw. 22(3), 413–431 (2007)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Cristofari, A., Dehghan Niri, T. & Lucidi, S. On global minimizers of quadratic functions with cubic regularization. Optim Lett 13, 1269–1283 (2019). https://doi.org/10.1007/s11590-018-1316-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11590-018-1316-0