Abstract

This paper presents several models addressing optimal portfolio choice, optimal portfolio liquidation, and optimal portfolio transition issues in which the expected returns of risky assets are unknown. Our approach is based on a coupling between Bayesian learning and dynamic programming techniques that leads to partial differential equations. It enables us to recover the well-known results of Karatzas and Zhao in a framework à la Merton, but also to deal with cases where martingale methods are no longer available. In particular, we address optimal portfolio choice, portfolio liquidation, and portfolio transition problems in a framework à la Almgren–Chriss, and we therefore build a model in which the agent takes into account in his decision process both the liquidity of assets and the uncertainty with respect to their expected return.

1 Introduction

The modern theory of portfolio selection started in 1952 with the seminal paper [34] of Markowitz.Footnote 1 In this paper, Markowitz considered the problem of an agent who wishes to build a portfolio with the maximum possible level of expected return, given a limit on its variance. He coined the concept of efficient portfolio and described how to find such portfolios. Markowitz paved the way for the theoretical study of the optimal portfolio choice of risk-averse agents. Indeed, a few years after Markowitz’s paper, Tobin published his famous research work on the liquidity preferences of agents and the separation theorem (see [45]), which is based on the ideas developed by Markowitz. A few years later, in the sixties, Treynor, Sharpe, Lintner, and Mossin independently introduced the Capital Asset Pricing Model (CAPM), which is also built on top of the ideas of Markowitz. The ubiquitous notions of \(\alpha \) and \(\beta \) therefore owe a lot to Markowitz’s modern portfolio theory.

Although initially written within a mean-variance optimization framework, the so-called Markowitz problem can also be written within the Von Neumann–Morgenstern expected utility framework. This was done for instance by Samuelson and Merton (see [36, 37, 42]), who, in addition, generalized the Markowitz problem by extending the initial one-period framework to a multi-period one. Samuelson did it in discrete time, whereas Merton did it in continuous time. It is noteworthy that they both embedded the intertemporal portfolio choice problem into a more general optimal investment/consumption problem.Footnote 2

In [36], Merton used partial differential equation (PDE) techniques to characterize the optimal consumption process of an agent and his optimal portfolio choices. In particular, Merton managed to find closed-form solutions in the constant absolute risk aversion (CARA) case (i.e., for exponential utility functions), and in the constant relative risk aversion (CRRA) case (i.e., for power and log utility functions). Merton’s problem was then extended to incorporate several features such as transaction costs (proportional and fixed) or credit constraints. Major advances in solving Merton’s problem in full generality were made in the eighties by Karatzas et al. using (dual) martingale methods. In [26], Karatzas, Lehoczky, and Shreve used a martingale method to solve Merton’s problem for almost any smooth utility function and showed how to partially disentangle the consumption maximization problem and the terminal wealth maximization problem. Constrained problems and extensions to incomplete markets were then considered; see for instance the paper [11] by Cvitanić and Karatzas.

In the literature on portfolio selection, or in the slightly more general literature on Merton’s problem, input parameters (for instance the expected returns of risky assets) are considered to be known constants, or stochastic processes with known initial values and dynamics. In practice, however, one cannot state for sure that price returns will follow a given distribution. Uncertainty on model parameters is the raison d’être of the celebrated Black–Litterman model (see [7]), which is built on top of the Markowitz model and the CAPM. Nevertheless, like the Markowitz model, the Black–Litterman model is static. In particular, the agent of the Black–Litterman model does not use empirical returns to dynamically learn the distribution of asset returns.

Generalizations of optimal allocation models (or models dealing with Merton’s problem) involving filtering and learning techniques in a partial information framework have been proposed in the literature. The problems addressed are of three types, depending on the assumptions regarding the drift: unknown constant drift (e.g. [10, 13, 28]), unobserved drift with Ornstein–Uhlenbeck dynamics (e.g. [8, 17, 32, 41]), and unobserved drift modelled by a hidden Markov chain (e.g. [9, 25, 40, 43]). In these models, filtering (or learning) makes it possible to estimate the unknown parameters from the dynamics of the prices, and sometimes also from additional information such as analyst views or expert opinions (see [14, 18]) or inside information (see [13, 38]).

Most models (see [6, 10, 13, 28,29,30, 38, 39]) use martingale (dual) methods to solve optimal allocation problems under partial information. For instance, in a framework similar to ours, Karatzas and Zhao [28] considered a model where the asset returns are Gaussian with unknown mean and they used martingale methods under the filtration of observables to compute, for almost any utility function, the optimal portfolio allocation (there is no consumption in their model).

Some models, like ours, instead use Hamilton–Jacobi–Bellman (HJB) equations and therefore PDE techniques. Rishel [41] proposed a model with one risky asset where the drift follows Ornstein–Uhlenbeck dynamics, and solved the HJB equation associated with CRRA utility functions. Interestingly, it is one of the few references to tackle the question of explosion when Bayesian filtering and optimization are carried out simultaneously. Brendle [8] generalized the results of [41] to a multi-asset framework and also considered the case of CARA utility functions. Fouque et al. [17] solved a related problem with correlation between the noise process of the price and that of the drift, and used perturbation analysis to obtain approximations. Li et al. [32] also studied a similar problem with a mean-variance objective function. Rieder and Bäuerle [40] proposed a model with one risky asset where the drift is modelled by a hidden Markov chain, and solved it with PDEs in the case of a CRRA utility function.

Outside of the optimal portfolio choice literature, several authors have proposed financial models in which online learning and stochastic optimal control coexist. For instance, Laruelle et al. proposed in [31] a model in which an agent optimizes his execution strategy with limit orders and simultaneously learns the parameters of the Poisson process modelling the execution of limit orders. Interesting ideas in the same field of algorithmic trading can also be found in the work of Fernandez-Tapia (see [16]). Another interesting paper is that of Ekström and Vaicenavicius [15], who tackled the problem of the optimal time at which to sell an asset with unknown drift. Recently, Casgrain and Jaimungal [9] also used similar ideas for designing algorithmic trading strategies.

In this paper, we consider several problems of portfolio choice, portfolio liquidation, and portfolio transition in continuous time in which the (constant) expected returns of the risky assets are unknown but estimated online. In the first sections, we consider a multidimensional portfolio choice problem similar to the one tackled by Karatzas and Zhao in [28], with a rather general Bayesian prior for the drifts (our family of priors includes compactly supported and Gaussian distributions).Footnote 3 For this problem, we derive HJB equations and show that, in the CARA and CRRA cases, these equations can be transformed into linear parabolic PDEs. The interest of the paper lies here in the fact that our framework is multidimensional and general in terms of possible priors. Moreover, unlike other papers, we provide a verification result, which is important in view of the explosion occurring for some pairs of priors and utility functions. We then specialize our results to the case of a Gaussian prior for the drifts and recover formulas already present in the literature (see [28] or limit cases of [8]). The Gaussian prior case is discussed in depth, (i) because the associated PDEs can be reduced to simple ODEs (at least for CARA and CRRA utility functions) that can be solved in closed form by classical tricks, and (ii) because Gaussian priors provide examples of explosion: the problem may not be well posed in the CRRA case when the relative risk aversion parameter is too small.

The PDE approach is interesting in itself, and we believe that it avoids the laborious computations needed to simplify the general expressions of Karatzas and Zhao. However, our message is of course not limited to this. The PDE approach can indeed be used in situations where the (dual) martingale approach cannot. In the last section of this paper, we use our approach to solve the optimal allocation problem in a trading framework à la Almgren–Chriss. The Almgren–Chriss framework was initially built for solving optimal execution problems (see [1, 2]), but it is also very useful outside of the cash-equity world. For instance, Almgren and Li [3], and Guéant and Pu [22] used it for the pricing and hedging of vanilla options when liquidity matters.Footnote 4 The model we propose is one of the first to use the Almgren–Chriss framework for addressing an asset management problem, and definitely the first in this area in which the Almgren–Chriss framework is used in combination with Bayesian learning techniques.Footnote 5 We also show how our framework can be slightly modified to address optimal portfolio liquidation and transition issues.

This paper aims to show that online learning (in our case, on-the-fly Bayesian estimation) combined with stochastic optimal control can be very efficient for tackling many financial problems. It is essential to understand that online learning is a forward process, whereas dynamic programming classically relies on backward induction. By using these two classical tools simultaneously, we not only benefit from the power of online and Bayesian learning to continuously learn the value of unknown parameters, but we also develop a framework in which agents learn and make decisions knowing that they will go on learning in the future in the same manner as they have learnt in the past. The same ideas are at play, for instance, in the literature on Bayesian multi-armed bandits, where the unknown parameters are the parameters of the prior distributions of the different rewards.

In Sect. 2, we provide the main results related to our Bayesian framework. We first compute the Bayesian estimator of the drifts entering the dynamics of prices (more precisely, the conditional mean given the price trajectory and the prior). We then derive the dynamics of that Bayesian estimator. These results are classical and can be found in [5] or [33], but they are recalled for the sake of completeness. In Sect. 3, we consider the portfolio allocation problem of an agent in a context with one risk-free asset and d risky assets, and we show how the associated HJB equations can be transformed into linear parabolic PDEs in the case of a CARA utility function and in the case of a CRRA utility function. As opposed to most papers in the literature, we also provide verification theorems. This is of particular importance because the Bayesian framework leads to blowups for some of the optimal control problems. In Sect. 4, we solve the same portfolio allocation problem as in Sect. 3 in the specific case of a Gaussian prior. We show that a more natural set of state variables can then be used, we provide an example of blowup, and, thanks to closed-form solutions, we analyze the role of learning on the dynamics of the allocation process of the agent. In Sect. 5, we introduce liquidity costs through a modelling framework à la Almgren–Chriss and use our combination of Bayesian learning and stochastic optimal control techniques to solve various portfolio choice, portfolio liquidation, and portfolio transition problems.

2 Bayesian learning

2.1 Notations and first properties

We consider an agent facing a portfolio allocation problem with one risk-free asset and d risky assets.

Let \(\left( \Omega ,\left( {\mathcal {F}}_{t}^{W}\right) _{t\in {\mathbb {R}}_{+}},{\mathbb {P}}\right) \) be a filtered probability space, with \(\left( {\mathcal {F}}_{t}^{W}\right) _{t\in {\mathbb {R}}_{+}}\) satisfying the usual conditions. Let \(\left( W_{t}\right) _{t\in {\mathbb {R}}_{+}}\) be a d-dimensional Brownian motion adapted to \(\left( {\mathcal {F}}_{t}^{W}\right) _{t\in {\mathbb {R}}_{+}}\), with correlation structure given by \( d\left\langle W^{i},W^{j}\right\rangle _t =\rho ^{ij}dt\) for all i, j in \(\left\{ 1,\ldots ,d\right\} \).

The risk-free interest rate is denoted by r. We index the d risky assets by \(i \in \left\{ 1,\ldots ,d\right\} \). For \(i\in \left\{ 1,\ldots ,d\right\} \), the price of the ith asset \(S^i\) has the classical log-normal dynamics
\[ dS^i_t = \mu ^i S^i_t\, dt + \sigma ^i S^i_t\, dW^i_t, \]

where the volatility vector \(\sigma = (\sigma ^{1}, \ldots , \sigma ^d)'\) satisfies \(\forall i \in \left\{ 1,\ldots ,d\right\} , \sigma ^{i} > 0\), and where the drift vector \(\mu =(\mu ^{1}, \ldots , \mu ^d)'\) is unknown.

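We assume that the prior distribution of \(\mu \), denoted by \(m_\mu \), is sub-Gaussian.Footnote 6 In particular, it satisfies the following property:
\[ \exists \eta > 0, \quad {\mathbb {E}}\left[ \exp \left( \eta \left\| \mu \right\| ^2\right) \right] < +\infty . \qquad (2) \]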

Throughout, we shall respectively denote by \(\rho = (\rho ^{ij})_{1\le i,j \le d}\) and \(\Sigma = (\rho ^{ij}\sigma ^{i}\sigma ^{j})_{1\le i,j \le d}\) the correlation and covariance matrices associated with the dynamics of prices.
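Condition (2) can be checked by hand for a simple prior. The Python sketch below assumes, for illustration only, a one-dimensional Gaussian prior \(\mu \sim N(0,s^2)\), for which \({\mathbb {E}}[\exp (\eta \mu ^2)] = (1-2\eta s^2)^{-1/2}\) is finite exactly when \(\eta < 1/(2s^2)\). It compares a trapezoidal quadrature with this closed form; the function names are hypothetical.

```python
import math

def exp_moment_numeric(eta, s, n=20001, half_width=12.0):
    """Trapezoidal estimate of E[exp(eta * mu**2)] for mu ~ N(0, s**2).

    Meaningful only when eta < 1 / (2 s^2): the integrand then decays like a
    Gaussian, so truncating at +/- half_width * s is harmless."""
    a = half_width * s
    h = 2.0 * a / (n - 1)
    total = 0.0
    for k in range(n):
        z = -a + k * h
        density = math.exp(-z * z / (2.0 * s * s)) / (s * math.sqrt(2.0 * math.pi))
        weight = 0.5 if k in (0, n - 1) else 1.0  # trapezoid end-point weights
        total += weight * math.exp(eta * z * z) * density
    return total * h

def exp_moment_exact(eta, s):
    """Closed form 1 / sqrt(1 - 2 eta s^2); infinite when 2 eta s^2 >= 1."""
    if 2.0 * eta * s * s >= 1.0:
        return math.inf
    return 1.0 / math.sqrt(1.0 - 2.0 * eta * s * s)
```

Condition (2) only requires one \(\eta > 0\) with a finite moment, so every Gaussian prior qualifies, whereas a prior with heavier-than-Gaussian tails (a Laplace prior, for instance) does not.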

We also denote by \((Y_t)_{t\in {\mathbb {R}}_+}\) the process defined by

Remark 1

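Both \(\mu \) and \((W_{t})_{t\in {\mathbb {R}}_+}\) are unobserved by the agent, but for each index \(i\in \left\{ 1,\ldots ,d\right\} \), \(\mu ^i t+\sigma ^i W_{t}^i\) is observed at time \(t\in {\mathbb {R}}_+\) because
\[ \mu ^i t+\sigma ^i W^i_t = \log \left( \frac{S^i_t}{S^i_0}\right) + \frac{1}{2}\left( \sigma ^i\right) ^2 t. \]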

The evolution of the prices reveals information to the agent about the true value of the drift vector \(\mu \). In what follows, we denote by \({\mathcal {F}}^{S} = \left( {\mathcal {F}}_{t}^{S}\right) _{t\in {\mathbb {R}}_{+}}\) the filtration generated by \(\left( S_{t}\right) _{t\in {\mathbb {R}}_{+}}\) or, equivalently, by \(\left( Y_{t}\right) _{t\in {\mathbb {R}}_{+}}\).
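Remark 1 can be illustrated numerically. The sketch below simulates one price path with log-normal dynamics (exact simulation on a uniform grid, one asset, arbitrary parameter values) and checks that \(\log (S_t/S_0) + \frac{1}{2}\sigma ^2 t\), which the agent can compute from prices alone, coincides with \(\mu t + \sigma W_t\), although neither \(\mu \) nor \(W_t\) is separately observable. The function names are hypothetical.

```python
import math
import random

def simulate_gbm(s0, mu, sigma, T, n_steps, rng):
    """Exact simulation of dS = mu S dt + sigma S dW on a uniform grid.

    Returns the time grid, the price path and the underlying Brownian path."""
    dt = T / n_steps
    times, prices, brownian = [0.0], [s0], [0.0]
    w = 0.0
    for k in range(1, n_steps + 1):
        w += math.sqrt(dt) * rng.gauss(0.0, 1.0)
        t = k * dt
        times.append(t)
        brownian.append(w)
        prices.append(s0 * math.exp((mu - 0.5 * sigma ** 2) * t + sigma * w))
    return times, prices, brownian

def observed_signal(times, prices, sigma):
    """What the agent can compute from prices alone: log(S_t / S_0) + sigma^2 t / 2."""
    s0 = prices[0]
    return [math.log(p / s0) + 0.5 * sigma ** 2 * t for t, p in zip(times, prices)]
```

The drift and the noise enter the observed signal only through their sum, which is exactly why \(\mu \) has to be learnt.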

Remark 2

\((W_t)_{t\in {\mathbb {R}}_+}\) is not an \({\mathcal {F}}^{S}\)-Brownian motion, because it is not \({\mathcal {F}}^{S}\)-adapted.

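We introduce the process \((\beta _t)_{t\in {\mathbb {R}}_+}\) defined by
\[ \beta _t = {\mathbb {E}}\left[ \mu \,\middle |\, {\mathcal {F}}^S_t\right] , \quad t\in {\mathbb {R}}_+ . \]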

Remark 3

\((\beta _t)_{t\in {\mathbb {R}}_+}\) is well defined because assumption (2) on the prior \(m_\mu \) implies, in particular, that \(\mu \) is integrable.

From an investor’s point of view, \((\beta _t)_{t\in {\mathbb {R}}_+}\) is the key quantity: it encapsulates the information gathered so far about the returns one can expect from the assets.

Our first result, Theorem 1, provides a formula for \(\beta _t\).

Theorem 1

Let us define

where \(\odot \) denotes the element-wise multiplication of vectors.

F is a well-defined finite-valued \(C^\infty ({\mathbb {R}}_+\times {\mathbb {R}}^d)\) function.

We have

where

and where we denote by \(\vec {1}\) the vector \(\left( 1, \ldots , 1 \right) '\in {\mathbb {R}}^{d}\).
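To give a concrete feel for Theorem 1, the following sketch treats an assumed one-dimensional case with a discrete prior (finitely many atoms, hence compactly supported) and writes the observation as \(x = \mu t + \sigma W_t\). It weights each atom z by the Gaussian likelihood ratio \(\exp (zx/\sigma ^2 - z^2 t/(2\sigma ^2))\) of drift z against zero drift. This normalization is our own choice and need not coincide with the F of Theorem 1, but any factor that does not depend on the drift cancels in the posterior mean. The sketch checks that the posterior mean equals \(\sigma ^2 \partial _x \log F\), mirroring the definition \(G^i = \partial _{y^i} F/F\). All names are hypothetical, and log-sum-exp is used for numerical stability.

```python
import math

def log_weights(t, x, atoms, probs, sigma):
    """Unnormalized log posterior weight of each atom z_k given X_t = x."""
    return [math.log(p) + z * x / sigma ** 2 - z * z * t / (2 * sigma ** 2)
            for z, p in zip(atoms, probs)]

def log_F(t, x, atoms, probs, sigma):
    """log F(t, x) = log sum_k p_k exp(z_k x / sigma^2 - z_k^2 t / (2 sigma^2))."""
    lw = log_weights(t, x, atoms, probs, sigma)
    m = max(lw)  # log-sum-exp: subtract the max before exponentiating
    return m + math.log(sum(math.exp(v - m) for v in lw))

def posterior_mean(t, x, atoms, probs, sigma):
    """beta = E[mu | X_t = x] as a normalized weighted average of the atoms."""
    lw = log_weights(t, x, atoms, probs, sigma)
    m = max(lw)
    w = [math.exp(v - m) for v in lw]
    return sum(z * wi for z, wi in zip(atoms, w)) / sum(w)

def posterior_mean_via_F(t, x, atoms, probs, sigma, h=1e-5):
    """Same quantity as sigma^2 * d/dx log F(t, x), by central differences."""
    up = log_F(t, x + h, atoms, probs, sigma)
    down = log_F(t, x - h, atoms, probs, sigma)
    return sigma ** 2 * (up - down) / (2 * h)
```

At \(t = 0\) no information has been gathered, and the posterior mean reduces to the prior mean.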

Before we prove Theorem 1, let us introduce the probability measure \({\mathbb {Q}}\) defined by

where \(\alpha : z = (z^1, \ldots , z^d)' \in {\mathbb {R}}^d \mapsto \left( \frac{z^1-r}{\sigma ^1},\ldots ,\frac{z^d-r}{\sigma ^d} \right) '\) and T is an arbitrary constant in \({\mathbb {R}}^*_+\).

Girsanov’s theorem implies that the process \(\left( W_t^{\mathbb {Q}}\right) _{t\in [0,T]}\) defined by

is a d-dimensional Brownian motion with correlation structure given by \(\rho \) under \({\mathbb {Q}}\) and adapted to the filtration \(\left( {\mathcal {F}}^S_t\right) _{t\in [0,T]}\). Moreover

The following proposition will be used in the proof of Theorem 1.

Proposition 1

Under the probability measure \({\mathbb {Q}}\), \(\mu \) is independent of \(W_t^{\mathbb {Q}}\) for all \(t\in [0,T]\).

Proof

Since, for all \(t\in [0,T]\), \(\mu \) is independent of \(W_t\) under the probability measure \({\mathbb {P}}\), we have, for \((t,a,b)\!\in \![0,T]\times {\mathbb {R}}^d\times {\mathbb {R}}^d\),

Now, let us notice that

and \(\exp \left( -\frac{t}{2}b'\rho b\right) \) is the characteristic function (i.e., the Fourier transform of the law) of \(W_t^{\mathbb {Q}}\) under the probability measure \({\mathbb {Q}}\).

Therefore,

hence the result. \(\square \)

We are now ready to prove Theorem 1.

Proof of Theorem 1

Let us first show that F is a well-defined finite-valued \(C^\infty ({\mathbb {R}}_+\times {\mathbb {R}}^d)\) function.

We have

Therefore, to show that F takes finite values, we just need to prove that for \(a\in {\mathbb {R}}^d\),

Thanks to condition (2) on the prior, there exists \(\eta > 0\) such that \({\mathbb {E}} \left[ \exp \left( \eta \left||\mu \right||^2\right) \right] < +\infty \). Therefore,

Consequently, F is well defined and takes finite values.

To prove that F is in fact a \(C^\infty ({\mathbb {R}}_+\times {\mathbb {R}}^d)\) function, we see by formal differentiation that it is sufficient to prove that, for all \(n\in {\mathbb {N}}\),

is bounded over all compact sets of \({\mathbb {R}}^d\). We have

hence the result.

We are now ready to prove the formula for \(\beta _t\).

By Bayes’ theorem we have, for all t in [0, T],

Since

we have

Proposition 1 now yields

Consequently

Therefore, and because T is arbitrary, we have

\(\square \)

Throughout this article, we assume that the prior \(m_\mu \) is such that G has the following Lipschitz property with respect to y:

As we shall see below, this assumption is satisfied if \(m_\mu \) has compact support. It is also satisfied for Gaussian \(m_\mu \) (see for instance Proposition 12).Footnote 7 However, it does not hold in general for all sub-Gaussian priors.
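In the Gaussian case, the Lipschitz property can be seen directly in one dimension. Assuming a prior \(N(m_0, s_0^2)\) and the observation \(x = \mu t + \sigma W_t\), the posterior of \(\mu \) is Gaussian with variance \(v_t = (1/s_0^2 + t/\sigma ^2)^{-1}\) and mean \(v_t(m_0/s_0^2 + x/\sigma ^2)\) (the standard conjugate Gaussian update). In this parametrization the mean is affine in x with slope \(v_t/\sigma ^2 \le s_0^2/\sigma ^2\), hence Lipschitz uniformly in t. The sketch below, with hypothetical names, compares the closed form with a brute-force discretization of the prior.

```python
import math

def gaussian_posterior(t, x, m0, s0, sigma):
    """Posterior mean and variance of mu given X_t = x, prior N(m0, s0^2)."""
    precision = 1.0 / s0 ** 2 + t / sigma ** 2
    var = 1.0 / precision
    mean = var * (m0 / s0 ** 2 + x / sigma ** 2)
    return mean, var

def lipschitz_slope(t, s0, sigma):
    """d(mean)/dx = v_t / sigma^2, which never exceeds s0^2 / sigma^2."""
    return gaussian_posterior(t, 0.0, 0.0, s0, sigma)[1] / sigma ** 2

def grid_posterior_mean(t, x, m0, s0, sigma, n=4001, width=10.0):
    """Brute-force check: replace the Gaussian prior by a fine grid of atoms."""
    lo, hi = m0 - width * s0, m0 + width * s0
    atoms = [lo + (hi - lo) * k / (n - 1) for k in range(n)]
    logw = [-(z - m0) ** 2 / (2 * s0 ** 2) + z * x / sigma ** 2
            - z * z * t / (2 * sigma ** 2) for z in atoms]
    m = max(logw)
    w = [math.exp(v - m) for v in logw]
    return sum(z * wi for z, wi in zip(atoms, w)) / sum(w)
```

For \(m_0 = 0.05\), \(s_0 = 0.1\), \(\sigma = 0.2\), \(t = 1\), and \(x = 0.3\), the closed form gives \(v_t = 0.008\) and a posterior mean of 0.1.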

2.2 Dynamics of \((\beta _t)_{t\in {\mathbb {R}}_+}\)

Let us define the process \(\left( {\widehat{W}}_t\right) _{t\in {\mathbb {R}}_+}\) by

Remark 4

The process \(({\widehat{W}}_t)_{t \in {\mathbb {R}}_+}\) is called the innovation process in filtering theory. As shown below for the sake of completeness, it is classically known to be a Brownian motion (see for instance [5] on continuous Kalman filtering).

Proposition 2

\(\left( \widehat{W}_{t}\right) _{t\in {\mathbb {R}}_+}\) is a d-dimensional Brownian motion adapted to \(\left( {\mathcal {F}}_{t}^{S}\right) _{t\in {\mathbb {R}}_+}\), with the same correlation structure as \(\left( {W}_{t}\right) _{t\in {\mathbb {R}}_+}\), i.e.,

Proof

To prove this result, we use Lévy’s characterization of a Brownian motion.

Let \(t\in {\mathbb {R}}_+\). By definition, we have

hence the \({\mathcal {F}}_{t}^{S}\)-measurability of \(\widehat{W}_{t}\).

Let \(s,t\in {\mathbb {R}}_+\), with \(s<t\). For \(i\in \{ 1,\ldots ,d\}\),

For the first term, the increment \(W^i_{t}-W^i_{s}\) is independent of \({\mathcal {F}}_{s}^{W}\) and independent of \(\mu \). Therefore, it is independent of \({\mathcal {F}}_{s}^{S}\) and we have

Regarding the second term, we have

by definition of \(\beta ^i_{u}\).

We obtain that \(\left( \widehat{W}_{t}\right) _{t\in {\mathbb {R}}_+}\) is an \({\mathcal {F}}^{S}\)-martingale.

Since \((\widehat{W}_t)_{t\in {\mathbb {R}}_+}\) has continuous paths and \(d\langle \widehat{W}^i,\widehat{W}^j\rangle _{t} =\rho ^{ij}dt\), we conclude that \((\widehat{W}_t)_{t\in {\mathbb {R}}_+}\) is a d-dimensional \({\mathcal {F}}^{S}\)-Brownian motion with correlation structure given by \(\rho \). \(\square \)
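A necessary consequence of Proposition 2 can be checked by simulation. In an assumed one-dimensional setting with a Gaussian prior \(N(m_0, s_0^2)\) (so that \(\beta _t = (m_0/s_0^2 + X_t/\sigma ^2)/(1/s_0^2 + t/\sigma ^2)\) in closed form), the sketch draws \(\mu \) from the prior on each path, simulates \(X_t = \mu t + \sigma W_t\), and forms \({\widehat{W}}_T = (X_T - \int _0^T \beta _u\,du)/\sigma \) with a left-point Riemann sum. Its sample mean and variance should be close to 0 and T. Drawing \(\mu \) from the prior matters: the innovation is a Brownian motion under \({\mathbb {P}}\), which averages over the prior, not conditionally on a fixed drift. This only tests terminal moments, not the full Brownian property; names and parameters are hypothetical.

```python
import math
import random

def terminal_innovations(n_paths, n_steps, T, m0, s0, sigma, seed=0):
    """Simulated values of hat W_T = (X_T - int_0^T beta_u du) / sigma.

    Hypothetical 1-d setting: mu ~ N(m0, s0^2) is drawn once per path, then
    X_t = mu t + sigma W_t is simulated on a uniform grid (exact, since the
    drift is constant); beta_t is the Gaussian posterior mean given X_t."""
    rng = random.Random(seed)
    dt = T / n_steps
    values = []
    for _ in range(n_paths):
        mu = rng.gauss(m0, s0)  # the "true" drift, never seen by the agent
        x, integral_beta = 0.0, 0.0
        for k in range(n_steps):
            t = k * dt
            beta = (m0 / s0 ** 2 + x / sigma ** 2) / (1.0 / s0 ** 2 + t / sigma ** 2)
            integral_beta += beta * dt  # left-point Riemann sum
            x += mu * dt + sigma * math.sqrt(dt) * rng.gauss(0.0, 1.0)
        values.append((x - integral_beta) / sigma)
    return values
```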

We are now ready to state the dynamics of \(\left( \beta _t\right) _{t \in {\mathbb {R}}_+}\).

Theorem 2

\(\left( \beta _t\right) _{t \in {\mathbb {R}}_+}\) has the following dynamics:

Proof

By Itō’s formula and Theorem 1, we have

Because \(\left( \beta _t\right) _{t\in {\mathbb {R}}_+}\) is a martingale under \(({\mathbb {P}},{\mathcal {F}}^S)\), we have

\(\square \)
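In an assumed one-dimensional setting with a discrete prior and observation \(x = \mu t + \sigma W_t\) (a sufficient statistic for \(\mu \) here), Theorem 2 takes a transparent form. Writing \(\beta _t = b(t, X_t)\), we have \(dX_t = \beta _t\,dt + \sigma \,d{\widehat{W}}_t\), and since \(\beta \) is a martingale, only the \(d{\widehat{W}}\) term survives in Itō’s formula: \(d\beta _t = \sigma \,\partial _x b(t,X_t)\,d{\widehat{W}}_t\). Differentiating the posterior mean gives \(\partial _x b = \mathrm {Var}(\mu \mid X_t)/\sigma ^2\), so \(d\beta _t = \frac{\mathrm {Var}(\mu \mid {\mathcal {F}}^S_t)}{\sigma }\,d{\widehat{W}}_t\). The sketch checks the derivative identity by finite differences; names are hypothetical.

```python
import math

def posterior_moments(t, x, atoms, probs, sigma):
    """Posterior mean and variance of mu given X_t = x for a discrete prior."""
    logw = [math.log(p) + z * x / sigma ** 2 - z * z * t / (2 * sigma ** 2)
            for z, p in zip(atoms, probs)]
    m = max(logw)  # log-sum-exp stabilization
    w = [math.exp(v - m) for v in logw]
    total = sum(w)
    mean = sum(z * wi for z, wi in zip(atoms, w)) / total
    second = sum(z * z * wi for z, wi in zip(atoms, w)) / total
    return mean, second - mean * mean

def beta_volatility(t, x, atoms, probs, sigma):
    """Coefficient of d hat W_t in d beta_t = (Var_t(mu) / sigma) d hat W_t."""
    return posterior_moments(t, x, atoms, probs, sigma)[1] / sigma
```

The volatility of the estimate is proportional to the remaining posterior uncertainty, so learning slows down as the posterior concentrates.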

The results obtained above (Theorems 1, 2) will be useful in the next section on optimal portfolio choice. The process \((\beta _t)_{t \in {\mathbb {R}}_+}\) indeed represents the best estimate of the drift in the dynamics of the prices.

2.3 A few remarks on the compact support case

The results presented in the next sections of this paper are valid for sub-Gaussian prior distributions \(m_\mu \) satisfying (12). A special class of such prior distributions is that of distributions with compact support.

We have indeed the following proposition:

Proposition 3

If \(m_\mu \) has a compact support, then G and all its derivatives are bounded over \({\mathbb {R}}_+\times {\mathbb {R}}^d\).

Proof

Let us consider \(i \in \{1, \ldots , d\}\). By definition, the \(i^\text {th}\) coordinate of G is \(G^i = \frac{\partial _{y^i} F}{F}\). Therefore, by immediate induction,

is a sum of products of terms of the form

Now, for \((t,y) \in {\mathbb {R}}_+\times {\mathbb {R}}^d\), and for \(m,m' \in {\mathbb {N}}, k_1, \ldots , k_m \in \{1, \ldots , d\}\),

where

and where \((e_k)_{1\le k \le d}\) is the canonical basis of \({\mathbb {R}}^d\).

Therefore

hence the result. \(\square \)
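Proposition 3 has a simple numerical counterpart: with a compactly supported prior, the posterior mean is a weighted average of points of the support, so it stays in the convex hull of the support whatever \((t,x)\). The sketch below assumes a one-dimensional discrete prior and the observation \(x = \mu t + \sigma W_t\); it evaluates the posterior mean for extreme observations, which requires subtracting the largest log weight before exponentiating. Names are hypothetical.

```python
import math

def posterior_mean_stable(t, x, atoms, probs, sigma):
    """E[mu | X_t = x] for a discrete prior, robust to very large |x|."""
    logw = [math.log(p) + z * x / sigma ** 2 - z * z * t / (2 * sigma ** 2)
            for z, p in zip(atoms, probs)]
    m = max(logw)
    # After subtracting the max, every exponent is <= 0: no overflow, and
    # negligible weights simply underflow to 0.0.
    w = [math.exp(v - m) for v in logw]
    return sum(z * wi for z, wi in zip(atoms, w)) / sum(w)
```

Without the subtraction, `math.exp` raises `OverflowError` for observations of this size, even though the quantity being computed is bounded.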

In addition to showing that the Lipschitz hypothesis (12) is true when \(m_\mu \) has a compact support, Proposition 3 will be useful in Sect. 3 to provide a large class of priors for which there is no blowup phenomenon in the equations characterizing the optimal portfolio choice of an agent.

3 Optimal portfolio choice

In this section we proceed with the study of optimal portfolio choice. For that purpose, let us set an investment horizon \(T\in {\mathbb {R}}_+^*\).

Let us also introduce the notion of “linear growth” for a process in our d-dimensional context. This notion plays an important part in the verification theorems.

Definition 1

Let us consider \(t \in [0,T]\). An \({\mathbb {R}}^{d}\)-valued, measurable, and \({\mathcal {F}}^{S}\)-adapted process \(\left( {\zeta }_{s}\right) _{s\in [t,T]}\) is said to satisfy the linear growth condition with respect to \(\xi = (\xi _s)_{s \in [t,T]}\) if,

The first subsection is devoted to the CARA case, and the second one focuses on the CRRA case.

3.1 CARA case

We consider the portfolio choice of the agent in the CARA case. We denote by \(\gamma > 0\) his absolute risk aversion parameter.
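It helps to keep in mind the classical full-information benchmark. If \(\mu \) were known, an agent with CARA utility would invest the deterministic amounts \(M_t = \frac{1}{\gamma } e^{-r(T-t)} \Sigma ^{-1}(\mu - r\vec {1})\) in the risky assets. The Bayesian optimal control derived in this section also involves the drift estimate and the function \(\phi \) of the ansatz, and is not reproduced here. The sketch computes only the benchmark, with a small Gaussian elimination so that it runs with the standard library alone; names and parameter values are hypothetical.

```python
import math

def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda idx: abs(m[idx][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def covariance(sigma, rho):
    """Sigma^{ij} = rho^{ij} sigma^i sigma^j."""
    d = len(sigma)
    return [[rho[i][j] * sigma[i] * sigma[j] for j in range(d)] for i in range(d)]

def cara_benchmark_amounts(t, T, r, gamma, mu, sigma, rho):
    """Known-drift CARA amounts M_t = e^{-r(T-t)} Sigma^{-1} (mu - r 1) / gamma."""
    excess = [m_i - r for m_i in mu]
    z = solve_linear(covariance(sigma, rho), excess)
    return [math.exp(-r * (T - t)) * z_i / gamma for z_i in z]
```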

We define, for \(t \in [0,T]\), the set

We denote by \(\left( M_{t}\right) _{t\in [0,T]} \in {\mathcal {A}} = {\mathcal {A}}_0\) the \({\mathbb {R}}^d\)-valued process modelling the strategy of the agent. More precisely, \(\forall i \in \left\{ 1,\ldots ,d\right\} \), \(M^i_t\) represents the amount invested in the ith asset at time t. The resulting value of the agent’s portfolio is modelled by a process \((V_t)_{t\in [0,T]}\) with \(V_0 > 0\). The dynamics of \(\left( V_t\right) _{t \in [0,T]}\) is given by the following stochastic differential equation (SDE):

With the notations introduced in Sect. 2, we have

and

Given \(M\in {\mathcal {A}}_{t}\) and \(s\ge t\), we therefore define

For an arbitrary initial state \((V_{0},y_0)\), the agent maximizes, over M in the set of admissible strategies \({\mathcal {A}}\), the expected utility of his portfolio value at time T, i.e.,

The value function v associated with this problem is then defined by

The HJB equation associated with this problem is

with terminal condition

To solve the HJB equation, we use the following ansatz:

Proposition 4

Suppose there exists \(\phi \in C^{1,2}\left( \left[ 0,T\right] \times {\mathbb {R}}^d\right) \) satisfying

with terminal condition

Then u defined by (21) is a solution of the HJB equation (19) with terminal condition (20).

Moreover, the supremum in (19) is achieved at:

Proof

Let us consider \(\phi \in C^{1,2}([0,T]\times {\mathbb {R}}^d)\) solution of (22) with terminal condition (23). For u defined by (21) and by considering \({\widetilde{M}}=Me^{r(T-t)}\), we have

The supremum in the above expression is reached at

corresponding to

Plugging this expression into the partial differential equation, we get:

As it is straightforward to verify that u satisfies the terminal condition (20), the result is proved. \(\square \)

From the previous proposition, we see that solving the HJB equation (19) with terminal condition (20) boils down to solving (22) with terminal condition (23). Because (22) is a simple parabolic PDE, we can easily build a strong solution.

Proposition 5

Let us define

where \(\forall (t,y)\in [0,T]\times {\mathbb {R}}^d\), \(\forall s \in [t,T]\),

Then \(\phi \) is a \(C^{1,2}([0,T]\times {\mathbb {R}}^d)\) function, solution of (22) with terminal condition (23).

Furthermore,

Proof

Because of the assumption (12) on G, the first part of the proposition is a consequence of classical results for parabolic PDEs and of the classical Feynman–Kac representation (see for instance [19, 27]).

For the second part, we notice first that

Therefore, by (12), there exists a constant \(C \ge 0\) such that

By (12) again, there exists a constant \(C' \ge 0\) such that

Now, by Theorem 2, \(\forall s \in [t,T]\),

Therefore,

Now, for \(p \ge 1\), we have

Because \(m_\mu \) is sub-Gaussian, there exists \(p > 1\) such that \(\frac{d{\mathbb {Q}}}{d{\mathbb {P}}} \in L^p(\Omega ,{\mathbb {P}})\). Because of the Lipschitz assumption on G, we have for any \(q>1\), and in particular for q such that \(\frac{1}{p} + \frac{1}{q} = 1\), that

Therefore,

We can conclude that \({\mathbb {E}}^{{\mathbb {Q}}}[\left||G(s,Y^{t,y}_s) - G(t,y)\right||]\) is bounded uniformly, and therefore using Eqs. (28) and (29) that \(\left||\nabla _y \phi \right||\) is indeed at most linear in y uniformly in \(t \in [0,T]\). \(\square \)
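Proposition 5 relies on the Feynman–Kac representation. As a toy illustration that is not the PDE (22) itself, consider the heat equation \(\partial _t \phi + \frac{1}{2} \partial _{yy}\phi = 0\) with \(\phi (T,y) = y^2\), whose solution is \(\phi (t,y) = y^2 + T - t\). Feynman–Kac gives \(\phi (t,y) = {\mathbb {E}}[\phi (T, y + W_T - W_t)]\), which the sketch below estimates by Monte Carlo; names are hypothetical.

```python
import math
import random

def feynman_kac_mc(t, y, T, terminal, n_samples=20000, seed=0):
    """Monte Carlo estimate of phi(t, y) = E[terminal(y + W_T - W_t)], the
    solution of d_t phi + (1/2) d_yy phi = 0 with phi(T, .) = terminal."""
    rng = random.Random(seed)
    scale = math.sqrt(T - t)  # W_T - W_t ~ N(0, T - t)
    total = 0.0
    for _ in range(n_samples):
        total += terminal(y + scale * rng.gauss(0.0, 1.0))
    return total / n_samples
```

With \(t = 0.5\), \(y = 1\), and \(T = 1\), the exact value is 1.5; at \(t = T\) the estimate returns the terminal condition exactly.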

Using the above results, we know that there exists a \(C^{1,2,2}([0,T]\times {\mathbb {R}}\times {\mathbb {R}}^d)\) function u that solves the HJB equation (19) with terminal condition (20). By using a verification argument, we can show that u is in fact the value function v defined in Eq. (18), and thereby solve the problem faced by the agent. This is the purpose of the following theorem.

Theorem 3

Let us consider the \(C^{1,2}([0,T]\times {\mathbb {R}}^d)\) function \(\phi \) defined by (26). Let us then consider the associated function u defined by (21).

For all \(\left( t,V,y\right) \in \left[ 0,T\right] \times {\mathbb {R}}\times {\mathbb {R}}^{d}\) and \(M = (M_s)_{s \in [t,T]}\in {\mathcal {A}}_t\), we have

Moreover, equality in (30) is obtained by taking the optimal control \((M^{\star }_s)_{s \in [t,T]}\in {\mathcal {A}}_t\) given by (24), i.e.,

In particular \(u=v\).

Proof

From the Lipschitz property of G stated in Eq. (12) and the property of \(\phi \) stated in Eq. (27), we see that \((M_s^\star )_{s \in [t,T]}\) is indeed admissible (i.e., \((M_s^\star )_{s \in [t,T]} \in {\mathcal {A}}_t\)).

Let us then consider \(\left( t,V,y\right) \in [0,T]\times {\mathbb {R}}\times {\mathbb {R}}^{d}\) and \(M = (M_s)_{s \in [t,T]}\in {\mathcal {A}}_t\).

By Itō’s formula, we have for all \(s\in [t,T]\)

where

Note that we have

Let us subsequently define, for all \(s\in [t,T]\),

and

We have

and

Therefore

By definition of u, \({\mathcal {L}}^Mu\left( s,V^{t,V,y,M}_s,Y^{t,y}_s\right) \le 0\) and \({\mathcal {L}}^Mu\left( s,V^{t,V,y,M}_s,Y^{t,y}_s\right) =0\) if \(M_s=M^{\star }_s\). As a consequence, \(\left( u\left( s,V^{t,V,y,M}_s,Y^{t,y}_s\right) \left( \xi ^M_{t,s}\right) ^{-1} \right) _{s\in [t,T]}\) is nonincreasing, and therefore

with equality when \((M_s)_{s \in [t,T]} = (M_s^\star )_{s \in [t,T]}\).

Subsequently,

with equality when \((M_s^\star )_{s \in [t,T]} = (M_s)_{s \in [t,T]}\).

To conclude the proof let us show that \({\mathbb {E}}\left[ \xi ^M_{t,T}\right] = 1\). To do so, we will use the fact that \(\xi ^M_{t,t}=1\) and prove that \(\left( \xi ^M_{t,s}\right) _{s\in [t,T]}\) is a martingale under \(\left( {\mathbb {P}},\left( {\mathcal {F}}_s^{S}\right) _{s \in [t,T]}\right) \).

Because \(M \in {\mathcal {A}}_t\), and because of Eq. (27), we know that there exists a constant C such that

By definition of \((Y^{t,y}_s)_{s \in [t,T]}\), there exists therefore a constant \(C'\) such that

Now, by using the above inequality along with Eq. (2) and classical properties of the Brownian motion, we easily prove that

From Novikov’s condition (or, more precisely, one of its corollaries; see for instance Corollary 5.14 in [27]), we see that \(\left( \xi ^M_{t,s}\right) _{s\in [t,T]}\) is a martingale under \(\left( {\mathbb {P}},\left( {\mathcal {F}}_s^{S}\right) _{s \in [t,T]}\right) \), hence the result

with equality when \((M_s^\star )_{s \in [t,T]} = (M_s)_{s \in [t,T]}\). Therefore,

\(\square \)
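The last step of the proof uses Novikov’s condition to show that a stochastic exponential has expectation 1. As a toy check with a hypothetical bounded integrand \(h(s,w) = \frac{1}{2}\cos w\) (for which Novikov’s condition holds trivially, since \(\int _0^T h^2\,ds \le T/4\)), the sketch below simulates \(\xi _T = \exp (\int _0^T h\,dW - \frac{1}{2}\int _0^T h^2\,ds)\) with a left-point discretization and checks that its sample mean is close to 1. With a left-point scheme each step has conditional mean exactly 1, so the only error is Monte Carlo noise; names are hypothetical.

```python
import math
import random

def stochastic_exponential_terminal(h, T, n_steps, n_paths, seed=0):
    """Samples of xi_T = exp(int h dW - 1/2 int h^2 ds) for an adapted
    integrand h(s, w_s) evaluated at the left end point of each step."""
    rng = random.Random(seed)
    dt = T / n_steps
    values = []
    for _ in range(n_paths):
        w, log_xi = 0.0, 0.0
        for k in range(n_steps):
            hk = h(k * dt, w)  # adapted: depends only on the past
            dw = math.sqrt(dt) * rng.gauss(0.0, 1.0)
            log_xi += hk * dw - 0.5 * hk * hk * dt
            w += dw
        values.append(math.exp(log_xi))
    return values
```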

The optimal portfolio choice of an agent with a CARA utility function is therefore fully characterized. Let us now turn to the case of an agent with a CRRA utility function.

3.2 CRRA case

We consider the portfolio choice of the agent in the CRRA case. We denote by \(\gamma > 0\) the relative risk aversion parameter.
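A useful reference point is the classical Merton benchmark with known drift: an agent with relative risk aversion \(\gamma \) (including the logarithmic case \(\gamma = 1\)) then holds the constant fractions of wealth \(\theta = \frac{1}{\gamma }\Sigma ^{-1}(\mu - r\vec {1})\) in the risky assets. The sketch below computes this benchmark for assumed parameter values, with hypothetical function names. Under drift uncertainty, the optimal fractions characterized in this section depend in addition on the drift estimate and on the function \(\phi \) of the ansatz.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda idx: abs(m[idx][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def merton_fractions(r, gamma, mu, sigma, rho):
    """Known-drift CRRA fractions theta = Sigma^{-1} (mu - r 1) / gamma."""
    d = len(sigma)
    cov = [[rho[i][j] * sigma[i] * sigma[j] for j in range(d)] for i in range(d)]
    z = solve_linear(cov, [m_i - r for m_i in mu])
    return [z_i / gamma for z_i in z]
```

Unlike the CARA amounts, these fractions depend neither on time nor on wealth.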

We denote by \(U^{\gamma }\) the utility function of the agent, i.e.,

If \(\gamma < 1\), we define, for \(t \in [0,T]\), the set

If \(\gamma \ge 1\), we define, for \(t \in [0,T]\), the set

We denote by \(\left( \theta _{t}\right) _{t\in [0,T]} \in {\mathcal {A}}^\gamma = {\mathcal {A}}^\gamma _0\) the \({\mathbb {R}}^d\)-valued process modelling the strategy of the agent. More precisely, \(\forall i \in \left\{ 1,\ldots ,d\right\} \), \(\theta ^i_t\) represents the part of the wealth invested in the ith risky asset at time t. The resulting value of the agent’s portfolio is modelled by a process \((V_t)_{t\in [0,T]}\) with \(V_0 > 0\). The dynamics of \(\left( V_t\right) _{t \in [0,T]}\) is given by the following stochastic differential equation (SDE):

With the notations introduced in Sect. 2, we have

and

Given \(\theta \in {\mathcal {A}}^\gamma _{t}\) and \(s\ge t\), we define

For an arbitrary initial state \((V_{0},y_0)\), the agent maximizes, over \(\theta \) in the set of admissible strategies \({\mathcal {A}}^\gamma \), the expected utility of his portfolio value at time T, i.e.,

The value function v associated with this problem is then defined by

The HJB equation associated with this problem is given by

with terminal condition

To solve the HJB equation and then solve the optimal portfolio choice problem, we need to consider separately the cases \(\gamma =1\) and \(\gamma \ne 1\).

3.2.1 The \(\gamma \ne 1\) case

To solve the HJB equation when \(\gamma \ne 1\), we use the following ansatz:

Proposition 6

Suppose there exists a positive function \(\phi \in C^{1,2}\left( \left[ 0,T\right] \times {\mathbb {R}}^d\right) \) satisfying

with terminal condition

Then u defined by (38) is a solution of the HJB equation (36) with terminal condition (37).

Moreover, the supremum in (36) is achieved at

Proof

Let us consider \(\phi \in C^{1,2}\left( \left[ 0,T\right] \times {\mathbb {R}}^d\right) \), positive solution of (39) with terminal condition (40). For u defined by (38), we have:

The supremum in the above expression is reached at

Plugging this expression into the partial differential equation, we get:

As it is straightforward to verify that u satisfies the terminal condition (37), the result is proved. \(\square \)

To solve our problem, we would like to prove that there exists a positive \(C^{1,2}\left( \left[ 0,T\right] \times {\mathbb {R}}^d\right) \) function \(\phi \) solving (39) with terminal condition (40) such that \(\frac{\nabla _y\phi }{\phi }\) is at most linear in y. However, unlike in the CARA case, there is no guarantee, in general, that such a function exists. We will even show in Sect. 4 that blowup occurs for some Gaussian priors when \(\gamma < 1\).

Although there is no general result, we can, for instance, state one for prior distributions \(m_\mu \) with compact support.

Proposition 7

Let us suppose that the prior distribution \(m_\mu \) has compact support.

Let us define

where \(\forall (t,y)\in [0,T]\times {\mathbb {R}}^d\), we introduce for \(s \in [t,T]\),

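Then \(\phi \) is a \(C^{1,2}\left( \left[ 0,T\right] \times {\mathbb {R}}^d\right) \) function, solution of (39) with terminal condition (40). Furthermore, in that case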

Proof

By using Theorem 1 and Proposition 3, we easily see that the formal differentiations are justified. Therefore \(\phi \) is a \(C^{1,2}\left( \left[ 0,T\right] \times {\mathbb {R}}^d\right) \) function, solution of (39) with terminal condition (40).

For the second point, we write, for \((t,y) \in [0,T]\times {\mathbb {R}}^d\),

We have

Because of the Lipschitz property of G and Grönwall's inequality, \(\sup _{s \in [t,T]} \left\| D_y Z^{t,y}_s\right\| \) is uniformly bounded on \([0,T]\times {\mathbb {R}}^d\). By Proposition 3, we then deduce that there exists \(C \ge 0\) such that

Therefore,

Hence the result. \(\square \)

We now write a verification theorem and provide a result for solving the problem faced by the agent under additional hypotheses.

Theorem 4

Let us suppose that there exists a positive function \(\phi \in C^{1,2}([0,T]\times {\mathbb {R}}^d)\) solution of (39) with terminal condition (40). Let us also suppose that

Let us then consider the function u defined by (38).

For all \(\left( t,V,y\right) \in \left[ 0,T\right] \times {\mathbb {R}}_+^*\times {\mathbb {R}}^{d}\) and \(\theta = (\theta _s)_{s \in [t,T]}\in {\mathcal {A}}^\gamma _t\), we have

Moreover, equality in (45) is obtained by taking the optimal control \((\theta ^{\star }_s)_{s \in [t,T]}\in {\mathcal {A}}^\gamma _t\) given by (41), i.e.,

In particular \(u=v\).

Proof

The proof is similar to that of the CARA case; therefore, we do not detail all the computations.

From the Lipschitz property of G stated in Eq. (12) and assumption (44) on \(\phi \), we see that \((\theta _s^\star )_{s \in [t,T]}\) is indeed admissible (i.e., \((\theta _s^\star )_{s \in [t,T]} \in {\mathcal {A}}^\gamma _t\)).

Let us then consider \(\left( t,V,y\right) \in [0,T]\times {\mathbb {R}}_+^*\times {\mathbb {R}}^{d}\) and \(\theta = (\theta _s)_{s \in [t,T]}\in {\mathcal {A}}^\gamma _t\).

By Itō’s formula, we have for all \(s\in [t,T]\)

where

Note that we have

Let us subsequently define, for all \(s\in [t,T]\),

and

We have

By definition of u, \({\mathcal {L}}^{\theta }u\left( s,V_s^{t,V,y,\theta },Y_s^{t,y}\right) \le 0\) and \({\mathcal {L}}^\theta u\left( s,V_s^{t,V,y,\theta },Y_s^{t,y}\right) =0\) if \(\theta _s=\theta _s^\star \). As a consequence, \(\left( u\left( s,V_s^{t,V,y,\theta },Y_s^{t,y}\right) \left( \xi _{t,s}^{\theta }\right) ^{-1} \right) _{s\in [t,T]}\) is nonincreasing, and therefore

with equality when \(\left( \theta _s\right) _{s\in [t,T]}=\left( \theta _s^\star \right) _{s\in [t,T]}\).

Subsequently,

with equality when \((\theta _s^\star )_{s \in [t,T]} = (\theta _s)_{s \in [t,T]}\).

Using the same method as in the proof of Theorem 3, we see that \({\mathbb {E}}[\xi _{t,T}^{\theta ^\star }] = 1\). Therefore,

We have just shown the second part of the theorem. For the first part, we consider the cases \(\gamma \ge 1\) and \(\gamma <1\) separately because the set of admissible strategies is larger in the second case.

(a) If \(\gamma \ge 1\), any \(\left( \theta _s\right) _{s\in [t,T]}\in {\mathcal {A}}_{t}^{\gamma }\) satisfies the linear growth condition. Therefore, using the assumption on \(\dfrac{\nabla _y\phi }{\phi }\) and the same argument as in Theorem 3, we see that \(\left( \xi _{t,s}^{\theta }\right) _{s\in [t,T]}\) is a martingale with \({\mathbb {E}}\left[ \xi _{t,s}^{\theta }\right] =1\) for all \(s\in [t,T]\).

We obtain

$$\begin{aligned} {\mathbb {E}}\left[ U^{\gamma }\left( V_{T}^{t,V,y,\theta }\right) \right] \le u\left( t,V,y\right) . \end{aligned}$$

(b) If \(\gamma <1\), then we define the stopping time

$$\begin{aligned} \tau _{n} = T\wedge \inf \left\{ s\in \left[ t,T\right] ,\quad \left\| \kappa _{s}^{\theta }\right\| \ge n\right\} . \end{aligned}$$

We use this stopping time in order to localize Eq. (47):

$$\begin{aligned} u\left( \tau _{n},V_{\tau _{n}}^{t,V,y,\theta },Y_{\tau _{n}}^{t,y}\right) \le \xi _{t,\tau _{n}}^{\theta }u\left( t,V,y\right) . \end{aligned}$$

By taking the expectation, we have, for all \(n\in {\mathbb {N}}\),

$$\begin{aligned} {\mathbb {E}}\left[ u\left( \tau _{n},V_{\tau _{n}}^{t,V,y,\theta },Y_{\tau _{n}}^{t,y}\right) \right] \le u\left( t,V,y\right) . \end{aligned}$$

As u is nonnegative when \(\gamma <1\), we can apply Fatou's lemma:

$$\begin{aligned} {\mathbb {E}}\left[ \liminf _{n\rightarrow +\infty }\ u\left( \tau _{n},V_{\tau _{n}}^{t,V,y,\theta },Y_{\tau _{n}}^{t,y}\right) \right] &\le \liminf _{n\rightarrow +\infty }\ {\mathbb {E}}\left[ u\left( \tau _{n},V_{\tau _{n}}^{t,V,y,\theta },Y_{\tau _{n}}^{t,y}\right) \right] \\ &\le u\left( t,V,y\right) . \end{aligned}$$

Because \(\left( \theta _s\right) _{s\in [t,T]}\in {\mathcal {A}}_{t}^{\gamma }\), \(\tau _{n}\rightarrow _{n \rightarrow +\infty } T\) almost surely, so that, by continuity, the liminf on the left-hand side equals \(u\left( T,V_{T}^{t,V,y,\theta },Y_{T}^{t,y}\right) = U^{\gamma }\left( V_{T}^{t,V,y,\theta }\right) \) by the terminal condition (37). Therefore

$$\begin{aligned} {\mathbb {E}}\left[ U^{\gamma }\left( V_{T}^{t,V,y,\theta }\right) \right] \le u\left( t,V,y\right) . \end{aligned}$$

In both cases, we conclude that

\(\square \)

The above verification theorem can be used, for instance, when \(m_\mu \) has compact support, because of (43). In the next section, we address the case of Gaussian priors and we shall see that there is a blowup phenomenon associated with the solution of the partial differential equation (39) with terminal condition (40) when \(\gamma \) is too small.

Before we turn to the Gaussian case, let us consider the specific case \(\gamma =1\).

3.2.2 The \(\gamma =1\) case

To solve the HJB equation when \(\gamma = 1\), we use the following ansatz:

Proposition 8

Suppose there exists a function \(\phi \in C^{1,2}\left( \left[ 0,T\right] \times {\mathbb {R}}^d\right) \) satisfying

with terminal condition

Then u defined by (48) is a solution of the HJB equation (36) with terminal condition (37).

Moreover, the supremum in (36) is achieved at

Proof

Let us consider \(\phi \in C^{1,2}\left( \left[ 0,T\right] \times {\mathbb {R}}^d\right) \), a solution of (49) with terminal condition (50). For u defined by (48), we have:

The supremum in the above expression is reached at

Therefore

As it is straightforward to verify that u satisfies the terminal condition (37), the result is proved. \(\square \)

From the previous proposition, we see that solving the HJB equation (36) with terminal condition (37) boils down to solving (49) with terminal condition (50). Because (49) is a simple parabolic PDE, we can easily build a strong solution.

Proposition 9

Let us define

where \(\forall (t,y)\in [0,T]\times {\mathbb {R}}^d\), \(\forall s \in [t,T]\),

Then \(\phi \) is a \(C^{1,2}([0,T]\times {\mathbb {R}}^d)\) function, solution of (49) with terminal condition (50). Furthermore,

Proof

Because of the assumption (12) on G, the first part of the proposition is a consequence of classical results for parabolic PDEs and of the classical Feynman–Kac representation (see for instance [19, 27]).

For the second part, we notice first that

We have

Because of the Lipschitz property of G and Grönwall's inequality, \(\sup _{s \in [t,T]} \left\| D_y Y^{t,y}_s\right\| \) is uniformly bounded on \([0,T]\times {\mathbb {R}}^d\). Therefore, by (12), there exists a constant \(C \ge 0\) such that

By (12) there exists a constant \(C' \ge 0\) such that

But, by using Theorem 2

Therefore, using the Lipschitz property of G, we see that \({\mathbb {E}}\left[ \left\| G(s,Y^{t,y}_s) - G(t,y)\right\| \right] \) is bounded by a constant that depends on T only. Combining this result with Eqs. (54) and (55), we obtain the property (53). \(\square \)

We now write a verification theorem and provide a result for solving the problem faced by the agent.

Theorem 5

Let us consider the function \(\phi \in C^{1,2}([0,T]\times {\mathbb {R}}^d)\) defined by (52). Let us then consider the function u defined by (48).

For all \(\left( t,V,y\right) \in \left[ 0,T\right] \times {\mathbb {R}}_+^*\times {\mathbb {R}}^{d}\) and \(\theta = (\theta _s)_{s \in [t,T]}\in {\mathcal {A}}^1_t\), we have

Moreover, equality in the above inequality is obtained by taking the optimal control \((\theta ^{\star }_s)_{s \in [t,T]}\in {\mathcal {A}}^1_t\) given by (51), i.e.,

In particular \(u=v\).

Proof

The proof is similar to that of the \(\gamma > 1\) case; therefore, we do not detail all the computations.

From the Lipschitz property of G stated in Eq. (12), we see that \((\theta _s^\star )_{s \in [t,T]}\) is indeed admissible (i.e., \((\theta _s^\star )_{s \in [t,T]} \in {\mathcal {A}}^1_t\)).

Let us then consider \(\left( t,V,y\right) \in [0,T]\times {\mathbb {R}}_+^*\times {\mathbb {R}}^{d}\) and \(\theta = (\theta _s)_{s \in [t,T]}\in {\mathcal {A}}^1_t\).

By Itō’s formula, we have for all \(s\in [t,T]\)

where

Note that we have

Let us subsequently define, for all \(s\in [t,T]\),

and

We have

By definition of u, \({\mathcal {L}}^{\theta }u\left( s,V_s^{t,V,y,\theta },Y_s^{t,y}\right) \le 0\) and \({\mathcal {L}}^\theta u\left( s,V_s^{t,V,y,\theta },Y_s^{t,y}\right) =0\) if \(\theta _s=\theta _s^\star \). As a consequence, \(\left( u\left( s,V_s^{t,V,y,\theta },Y_s^{t,y}\right) \left( \xi _{t,s}^{\theta }\right) ^{-1} \right) _{s\in [t,T]}\) is nonincreasing, and therefore

with equality when \(\left( \theta _s\right) _{s\in [t,T]}=\left( \theta _s^\star \right) _{s\in [t,T]}\).

Subsequently,

with equality when \((\theta _s)_{s \in [t,T]} = (\theta _s^\star )_{s \in [t,T]}\).

Any \(\left( \theta _s\right) _{s\in [t,T]}\in {\mathcal {A}}_{t}^{1}\) satisfies the linear growth condition. Therefore, using Eq. (53) and the same argument as in Theorem 3, we see that \(\left( \xi _{t,s}^{\theta }\right) _{s\in [t,T]}\) is a martingale with \({\mathbb {E}}\left[ \xi _{t,s}^{\theta }\right] =1\) for all \(s\in [t,T]\).

We obtain

with equality when \(\left( \theta _s\right) _{s\in [t,T]}=\left( \theta _s^\star \right) _{s\in [t,T]}\).

We conclude that

\(\square \)

4 Optimal portfolio choice in the Gaussian case: a tale of two routes

We showed in Sect. 3 that solving the optimal portfolio choice problem boils down to solving linear parabolic PDEs in the CARA and CRRA cases. One important case in which these PDEs can be solved in closed form is that of a Gaussian prior. Moreover, in the Gaussian prior case, there are two routes to solve the problem with PDEs because, as we shall see below, \(\beta \) appears to be a far more natural state variable than y. In this section, we solve the optimal portfolio choice problem in the case of a Gaussian prior using these two different routes and we discuss two essential points: (i) the influence of online learning on the optimal investment strategy, and (ii) the occurrence of blowups in some CRRA cases.

4.1 Bayesian learning in the Gaussian case

Let us consider a non-degenerate multivariate Gaussian prior \(m_\mu \), i.e.,

where \(\beta _0\in {\mathbb {R}}^d\) and \(\Gamma _0\in S_d^{++}({\mathbb {R}})\).

Our first goal is to obtain closed-form expressions for F and G in the Gaussian case. In order to obtain these expressions we shall use the following lemma:

Lemma 1

Proof

Using the canonical form of a polynomial of degree 2, we get

Therefore,

\(\square \)

We are now ready to derive the expressions of F and G.

Proposition 10

For the multivariate Gaussian prior \(m_\mu \) given by (59), F and G are given by: \(\forall t\in {\mathbb {R}}_+,\forall y\in {\mathbb {R}}^d,\)

Proof

\(\forall t\in {\mathbb {R}}_+,\forall y\in {\mathbb {R}}^d\),

Therefore,

where

and

Thanks to the above lemma, we have

Differentiating \(\log F\) with respect to y yields

\(\square \)

Using Theorems 1 and 2, we now deduce straightforwardly the value of \(\beta _t\) and its dynamics.

Proposition 11

where \(\Gamma _{t} = \left( \Gamma _{0}^{-1}+t\Sigma ^{-1}\right) ^{-1}.\)

Remark 5

Classical Bayesian analysis or classical filtering tools allow one to prove that the posterior distribution of \(\mu \) given \({\mathcal {F}}^S_t\) is in fact the Gaussian distribution \({\mathcal {N}}(\beta _t,\Gamma _t)\). In particular, it is noteworthy that the covariance matrix process \((\Gamma _t)_{t \in {\mathbb {R}}_+}\) is deterministic.
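Because \(\Gamma_t\) is deterministic, it can be tabulated before any price is observed. The sketch below is written in Python with NumPy (our choice of tooling; the function name `posterior_cov` and the covariance values are hypothetical illustrations, not taken from the text). It evaluates the closed form \(\Gamma_t = (\Gamma_0^{-1} + t\Sigma^{-1})^{-1}\) of Proposition 11 and checks that \(\Gamma_t\) shrinks, in the order of symmetric matrices, as t grows.

```python
import numpy as np


def posterior_cov(Gamma0: np.ndarray, Sigma: np.ndarray, t: float) -> np.ndarray:
    """Gamma_t = (Gamma_0^{-1} + t Sigma^{-1})^{-1}, cf. Proposition 11."""
    precision = np.linalg.inv(Gamma0) + t * np.linalg.inv(Sigma)
    cov = np.linalg.inv(precision)
    return 0.5 * (cov + cov.T)  # symmetrize to remove round-off asymmetry


# Hypothetical two-asset example: Sigma is the covariance matrix of returns,
# Gamma0 the prior covariance matrix of the unknown drift mu.
Sigma = np.array([[0.04, 0.006],
                  [0.006, 0.09]])
Gamma0 = np.array([[0.01, 0.0],
                   [0.0, 0.02]])

previous = posterior_cov(Gamma0, Sigma, 0.0)
for t in [1.0, 5.0, 20.0]:
    current = posterior_cov(Gamma0, Sigma, t)
    # Gamma_s - Gamma_t is positive semidefinite for s < t: uncertainty only shrinks.
    assert np.linalg.eigvalsh(previous - current).min() >= -1e-12
    print(f"t = {t:5.1f}  diag(Gamma_t) = {np.diag(current)}")
    previous = current
```

In dimension one, the same formula reduces to \(\nu_t^2 = \frac{\sigma^2\nu_0^2}{\sigma^2 + \nu_0^2 t}\), where \(\nu_0^2\) is the prior variance and \(\sigma^2\) the variance rate of returns.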

The above analysis shows that, in the Gaussian prior case, the problem can be written with two different sets of state variables: (y, V) or \((\beta ,V)\). Indeed, we can consider that the problem is described, as in Sect. 3, by the stochastic differential equations

or alternatively by the following stochastic differential equations

In what follows, we solve the optimal portfolio choice problem in the Gaussian prior case by following, in turn, the two routes associated with these two ways of describing the dynamics of the system.

Remark 6

It is noteworthy that the dynamics of \((\beta _t)_{t \in {\mathbb {R}}_+}\) in the Gaussian case, as written in Eq. (65), does not involve any term in Y. From Theorem 2, we see that this is related to the fact that the matrix \(D_y G(t,\cdot )\) is independent of y in the Gaussian case. A natural question is whether or not this property is specific to Gaussian prior distributions. The answer is positive. Indeed, if \(D_y G(t,\cdot )\) is independent of y, then \(\log F(t,\cdot )\) is a polynomial of (maximum) degree 2, i.e.,

where \(A(t)\in {\mathbb {R}},B(t)\in {\mathbb {R}}^d,\) and \(C(t)\in S_d({\mathbb {R}})\). Since

the Laplace transform of \(m_\mu \) is the exponential of a polynomial of (maximum) degree 2, and \(m_\mu \) is therefore Gaussian (possibly degenerate, even in the form of a Dirac mass).

Before we solve the PDEs in the CARA and CRRA cases in the next subsections, let us state some additional properties that will be useful to simplify future computations.

Proposition 12

The dynamics of the conditional covariance matrix process \((\Gamma _t)_{t \in {\mathbb {R}}_+}\) is given by:

The first order partial derivatives of G are given by:

\(\forall t \in {\mathbb {R}}_+, \forall y \in {\mathbb {R}}^d,\)

Proof

Equation (66) is a simple consequence of the definition of \(\Gamma _{t}\).

Equation (67) derives from the differentiation of Eq. (61) with respect to y.

For Eq. (68), we use Eqs. (61) and (66) to obtain

\(\square \)
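For completeness, the differentiation behind Eq. (66) can be written out. It only uses the definition of \(\Gamma_t\) in Proposition 11 and the rule \(\frac{d}{dt}A(t)^{-1} = -A(t)^{-1}A'(t)A(t)^{-1}\), valid for any differentiable matrix-valued function A with invertible values:

```latex
\frac{d\Gamma_t}{dt}
  = \frac{d}{dt}\left(\Gamma_0^{-1} + t\,\Sigma^{-1}\right)^{-1}
  = -\left(\Gamma_0^{-1} + t\,\Sigma^{-1}\right)^{-1}\Sigma^{-1}\left(\Gamma_0^{-1} + t\,\Sigma^{-1}\right)^{-1}
  = -\,\Gamma_t\,\Sigma^{-1}\,\Gamma_t .
```

Since \(\Gamma_t\Sigma^{-1}\Gamma_t\) is positive definite, \(t\mapsto\Gamma_t\) is decreasing in the order of symmetric matrices.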

Remark 7

From Eq. (67), we see that

Therefore Gaussian priors satisfy (12) as announced in Sect. 2.

We are now ready to solve the PDEs and derive the optimal portfolios in the CARA and CRRA cases.

4.2 Portfolio choice in the CARA case

4.2.1 The general method with y

Following the results of Sect. 3, solving the optimal portfolio choice of the agent in the CARA case boils down to solving the linear parabolic PDE (22) with terminal condition (23).

Because \(G(t,\cdot )\) is affine in y for all \(t\in [0,T]\) in the Gaussian case, we easily see from the Feynman–Kac representation (26) that, for all \(t\in [0,T]\), \(\phi (t,\cdot )\) is a polynomial of degree 2 (in y). However, looking for that polynomial of degree 2 in y by using the PDE (22) or Eq. (26) is cumbersome. As we shall see, the main reason for this is that \(\beta \) is in fact a more natural variable than y to solve the problem. A better ansatz than a general polynomial of degree 2 in y is the following:

where \(a(t) \in {\mathbb {R}}\) and \(B(t) \in S_d({\mathbb {R}})\).

We indeed have the following proposition:

Proposition 13

Assume there exist \(a\in C^{1}\left( \left[ 0,T\right] \right) \) and \(B\in C^{1}\left( \left[ 0,T\right] ,S_{d}\left( {\mathbb {R}}\right) \right) \) satisfying the following system of linear ODEs (for \(t \in [0,T]\)):

with terminal condition

Then, the function \(\phi \) defined by (69) satisfies (22) with terminal condition (23).

Proof

Let us consider (a, B), a solution of (70) with terminal condition (71). For \(\phi \) defined by (69), we have, by using the formulas of Proposition 12,

Therefore \(\phi \) is a solution of the PDE (22) and it obviously satisfies the terminal condition (23). \(\square \)

The system of linear ODEs (70) with terminal condition (71) can be solved in closed form. This is the purpose of the following proposition.

Proposition 14

The functions a and B defined (for \(t \in [0,T]\)) by

satisfy the system (70) with terminal condition (71).

Wrapping up our results, we can state the optimal portfolio choice of an agent with a CARA utility function in the case of the Gaussian prior (59).

Proposition 15

In the case of the Gaussian prior (59), the optimal strategy \((M^{\star }_t)_{t \in [0,T]}\) of an agent with a CARA utility function is given by

Proof

Let us consider a and B as defined in Proposition 14. Then, let us define \(\phi \) by (69). We know from the verification theorem (Theorem 3) and especially from Eq. (24) that

\(\square \)

We see from the form (69) of the solution \(\phi \) and from Eq. (72) that \(G(t,Y_t)\) rather than \(Y_t\) itself is the driver of the optimal behavior of the agent at time t. Because of Eq. (7), this means that \(\beta \) rather than y is the natural variable for addressing the problem. In what follows, we solve the optimal portfolio choice problem in the case of a Gaussian prior by taking another route, on which the dynamics of the system is given by the stochastic differential equations (65) rather than (64).

4.2.2 Solving the problem using \(\beta \)

On our first route for solving the above optimal portfolio choice problem, the central equation was the HJB equation (19) associated with the stochastic differential equations (64). Instead of using the stochastic differential equations (64), we now reconsider the problem in the Gaussian prior case by using the stochastic differential equations (65).Footnote 8

The value function \({\tilde{v}}\) associated with the problem is now given by

where the set of admissible strategies is

and where, \(\forall t \in [0,T], \forall (V,\beta ,M) \in {\mathbb {R}}\times {\mathbb {R}}^d \times \tilde{{\mathcal {A}}}_t, \forall s \in [t,T],\)

It is noteworthy that for all \(t \in [0,T]\), \(\tilde{{\mathcal {A}}}_{t} = {\mathcal {A}}_t\). Indeed, there is no difference between the linear growth condition with respect to \(\beta \) and the linear growth condition with respect to Y in the Gaussian prior case. This is easy to see from Eq. (62), recalling that \(\beta _0\) and \(Y_0\) are known constants.

The HJB equation associated with this optimization problem is

with terminal condition

By using the ansatz

we obtain the following proposition:

Proposition 16

Suppose there exists \(\tilde{\phi }\in C^{1,2}\left( \left[ 0,T\right] \times {\mathbb {R}}^{d}\right) \) satisfying

\(\forall (t,\beta ) \in [0,T]\times {\mathbb {R}}^{d}\),

with terminal condition

Then \({\tilde{u}}\) defined by (77) is a solution of the HJB equation (75) with terminal condition (76).

Moreover, the supremum in (75) is achieved at

For solving Eq. (78) with terminal condition (79), it is naturalFootnote 9 to consider the following ansatz:

where \({\tilde{a}}(t) \in {\mathbb {R}}\) and \({\tilde{B}}(t) \in S_d({\mathbb {R}})\).

The next proposition states the ODEs that \({\tilde{a}}\) and \({\tilde{B}}\) must satisfy.

Proposition 17

Assume there exist \({\tilde{a}}\in {C}^{1}\left( \left[ 0,T\right] \right) \) and \({\tilde{B}}\in {C}^{1}\left( \left[ 0,T\right] ,S_{d}\left( {\mathbb {R}}\right) \right) \) satisfying the following system of linear ODEs (for \(t \in [0,T]\)):

with terminal condition

Then, the function \(\tilde{\phi }\) defined by (81) satisfies (78) with terminal condition (79).

The system of linear ODEs (82) with terminal condition (83) can be solved in closed form. This is the purpose of the following proposition.

Proposition 18

The functions \({\tilde{a}}\) and \({\tilde{B}}\) defined, for \(t \in [0,T]\), by

satisfy the system (82) with terminal condition (83).

We are now ready to state the main result of this subsection, whose proof is similar to that of Theorem 3.

Theorem 6

Let us consider \({\tilde{a}}\) and \({\tilde{B}}\) as defined in Proposition 18. Let us then define \(\tilde{\phi }\) by (81) and, subsequently, \({\tilde{u}}\) by (77).

For all \(\left( t,V,\beta \right) \in \left[ 0,T\right] \times {\mathbb {R}}\times {\mathbb {R}}^{d}\) and \(M = (M_s)_{s \in [t,T]}\in \tilde{{\mathcal {A}}}_t\), we have

Moreover, equality in (84) is obtained by taking the optimal control \((M^{\star }_s)_{s \in [t,T]}\in \tilde{{\mathcal {A}}}_t\) given by

In particular \({\tilde{u}}={\tilde{v}}\).

4.2.3 Comments on the results: understanding the learning-anticipation effect

In the case of an agent maximizing an expected CARA utility objective function, the optimal portfolio allocation is given by

Of course, if \(\mu \) were known, the optimal strategy would be

It is essential to notice that the optimal strategy does not boil down (except at time \(t=T\)) to the naive strategy

which consists in replacing, at time t, \(\mu _\text {known}\) by the current estimator \(\beta _t\) in Eq. (87).

The sub-optimality of the naive strategy comes from the fact that the agent not only learns but also knows that he will go on learning in the future, and uses that knowledge to design his investment strategy. We call this effect the learning-anticipation effect.

To better understand this learning-anticipation effect, it is interesting to study the case \(d=1\). In that case, let us denote by \(\sigma \) the volatility of the risky asset and let us assume that the prior distribution for \(\mu \) is \({\mathcal {N}}(\beta _0, \nu _0^2)\), where \(\nu _0 > 0\). The agent following the optimal strategy invests at time t the amount

in the risky asset, whereas the naive strategy would consist instead in investing the amount

The magnitude of the learning-anticipation effect can be measured by the multiplier \(\chi = \frac{\sigma ^2 + \nu _0^2 t}{\sigma ^2 + \nu _0^2 T}\). Since \(0 \le t \le T\), we have \(\chi \in (0,1]\), and the further the multiplier is from 1 (i.e., the smaller it is in this case), the larger the learning-anticipation effect.

\(\chi \) is an increasing function of t, with \(\chi < 1\) for \(t < T\) and \(\chi =1\) at time \(t=T\). This means that the agent invests less (in absolute value) in the risky asset than he would if he opted for the naive strategy, except at time T, when there is nothing more to learn. In other words, he is prudent and waits for more precise estimates of the drift.

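\(\chi \) is also an increasing function of \(\sigma \): the smaller \(\sigma \), the more important the learning-anticipation effect. When volatility is low, the drift is learned quickly (the posterior precision \(\nu _0^{-2} + t\sigma ^{-2}\) grows at rate \(\sigma ^{-2}\)), so it is really valuable to wait for a good estimate of \(\mu \) before investing.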

\(\chi \) is a decreasing function of \(\nu _0\) and T. The longer the investment horizon and the higher the uncertainty about the value of the drift, the stronger the incentive of the agent to start with a small exposure (in absolute value) in the risky asset and to observe the behavior of the risky asset before adjusting his exposure, ceteris paribus.
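The comparative statics above are easy to check numerically. The Python sketch below uses hypothetical function names (`chi_cara`, `posterior_var`) and illustrative parameter values. It also checks an algebraic identity that we note here ourselves (it is not stated in the text): with the one-dimensional posterior variance \(\nu_t^2 = (\nu_0^{-2} + t\sigma^{-2})^{-1}\), one has \(\chi = \nu_T^2/\nu_t^2\), i.e., \(\chi\) is the ratio of the terminal to the current posterior variance.

```python
def chi_cara(t: float, T: float, sigma: float, nu0: float) -> float:
    """Learning-anticipation multiplier of the CARA case (d = 1)."""
    if not 0.0 <= t <= T:
        raise ValueError("t must lie in [0, T]")
    return (sigma**2 + nu0**2 * t) / (sigma**2 + nu0**2 * T)


def posterior_var(t: float, sigma: float, nu0: float) -> float:
    """nu_t^2 = (nu_0^{-2} + t sigma^{-2})^{-1}: the d = 1 case of Proposition 11."""
    return 1.0 / (1.0 / nu0**2 + t / sigma**2)


T, sigma, nu0 = 1.0, 0.2, 0.1  # illustrative values only
for t in [0.0, 0.25, 0.5, 0.75, 1.0]:
    chi = chi_cara(t, T, sigma, nu0)
    ratio = posterior_var(T, sigma, nu0) / posterior_var(t, sigma, nu0)
    # The optimal amount is chi times the naive amount; they coincide at t = T.
    print(f"t = {t:4.2f}  chi = {chi:.4f}  nu_T^2 / nu_t^2 = {ratio:.4f}")
```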

4.3 Portfolio choice in the CRRA case

4.3.1 The general method with y

In Sect. 3, and more precisely in Theorem 5, we have seen that an agent with a \(\log \) utility function has an optimal investment strategy that depends on the prior only through G. There is therefore no need to solve PDEs.

In the CRRA case, when \(\gamma \ne 1\), following the results of Sect. 3, we see that solving the optimal portfolio choice of the agent boils down to solving the linear parabolic PDE (39) with terminal condition (40).

In order to solve this equation, we consider the following ansatz:

where \(a(t) \in {\mathbb {R}}\) and \(B(t) \in S_d({\mathbb {R}})\).

We have the following proposition:

Proposition 19

Assume there exist \(a\in C^{1}\left( \left[ 0,T\right] \right) \) and \(B\in C^{1}\left( \left[ 0,T\right] ,S_{d}\left( {\mathbb {R}}\right) \right) \) satisfying the following system of ODEs (for \(t \in [0,T]\)):

with terminal condition

Then, the function \(\phi \) defined by (89) satisfies (39) with terminal condition (40).

Proof

Let us consider (a, B) solution of (90) with terminal condition (91). For \(\phi \) defined by (89), we have, by using the formulas of Proposition 12,

Therefore \(\phi \) is a solution of the PDE (39) and it obviously satisfies the terminal condition (40). \(\square \)

The system of ODEs (90) is not linear: the equation for B is a Riccati equation. Luckily, \(t \mapsto - \frac{1}{\gamma }\Sigma \Gamma _t^{-1} \Sigma \) is an explicit particular solution of the second differential equation of the system (90). Therefore, using a classical trick for Riccati equations, we can look for a solution B of the form

where \(E \in C^1([0,T],S_d({\mathbb {R}}))\).

With this ansatz, looking for a solution B to the above Riccati equation boils down to solving the linear ODE

and verifying that for all \(t \in [0,T]\), E(t) is invertible.
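The classical trick can be recalled in generic form. Below, \(C_0, C_1, C_2\) are placeholder coefficients (not those of (90)), with \(C_0(t)\) and \(C_2(t)\) symmetric, and \(B_p\) is a known symmetric particular solution; the ansatz used above may differ from this one by a change of variables. Substituting \(B = B_p + E^{-1}\), using \(\frac{d}{dt}E^{-1} = -E^{-1}E'E^{-1}\) and the equation satisfied by \(B_p\), and then multiplying on both sides by E, gives:

```latex
\begin{aligned}
&B' = C_0 + C_1 B + B C_1^{\top} + B C_2 B, \qquad B = B_p + E^{-1},\\
&\Longrightarrow\; -E^{-1}E'E^{-1} = (C_1 + B_p C_2)E^{-1} + E^{-1}(C_1 + B_p C_2)^{\top} + E^{-1}C_2E^{-1},\\
&\Longrightarrow\; E' = -E\,(C_1 + B_p C_2) - (C_1 + B_p C_2)^{\top}E - C_2 .
\end{aligned}
```

The equation for E is linear, so it has a unique solution on the whole interval; a blowup of B can only come from E(t) becoming singular, which is why invertibility has to be checked separately.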

The unique solution to Eq. (92) is given in the following straightforward proposition:

Proposition 20

The function E defined by

is the unique solution of the Cauchy problem (92).

Regarding the invertibility of E(t) for all \(t \in [0,T]\), we have the following result:

Proposition 21

Let us consider E as defined by Eq. (93).

E(t) is invertible for all \(t \in [0,T]\) if and only if (i) \(\gamma > 1\) or (ii) \(\gamma < 1\) and \(T < \frac{\gamma }{1-\gamma } \lambda _{\min }\left( \Sigma ^{\frac{1}{2} }\Gamma _{0}^{-1}\Sigma ^{\frac{1}{2} }\right) \), where the function \(\lambda _{\min }(\cdot )\) maps a symmetric matrix to its smallest eigenvalue.

Proof

Let us consider \(t \in [0,T]\). E(t) is invertible if and only if \(\Gamma _{t}+ (\gamma - 1)\Gamma _{t}\Gamma _{T}^{-1}\Gamma _{t}\) is invertible.

If \(\gamma >1\), then \(\Gamma _{t}+ (\gamma - 1)\Gamma _{t}\Gamma _{T}^{-1}\Gamma _{t}\) is the sum of two positive definite symmetric matrices. It is therefore an invertible matrix.

If \(\gamma <1\), then, using the definition of \((\Gamma _t)_{t \in [0,T]}\), we have

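Therefore E(t) is invertible if and only if \(-\frac{t + (\gamma -1)T}{\gamma }\) is not an eigenvalue of \(\Sigma ^{\frac{1}{2} }\Gamma _{0}^{-1}\Sigma ^{\frac{1}{2}}\). As t runs over [0, T], this quantity decreases continuously from \(\frac{(1-\gamma )T}{\gamma }\) to \(-T\), and all the eigenvalues of \(\Sigma ^{\frac{1}{2} }\Gamma _{0}^{-1}\Sigma ^{\frac{1}{2}}\) are positive, hence the result. \(\square \)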
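The factorization underlying the proof can be written out from the definition \(\Gamma_t = (\Gamma_0^{-1} + t\Sigma^{-1})^{-1}\). This is our own expansion, valid for any \(\gamma > 0\), with \(\Sigma^{\frac12}\) the symmetric square root of \(\Sigma\):

```latex
\begin{aligned}
\Gamma_t + (\gamma-1)\Gamma_t\Gamma_T^{-1}\Gamma_t
 &= \Gamma_t\left(\Gamma_t^{-1} + (\gamma-1)\Gamma_T^{-1}\right)\Gamma_t
  = \Gamma_t\left(\gamma\,\Gamma_0^{-1} + \left(t + (\gamma-1)T\right)\Sigma^{-1}\right)\Gamma_t\\
 &= \Gamma_t\,\Sigma^{-\frac12}\left(\gamma\,\Sigma^{\frac12}\Gamma_0^{-1}\Sigma^{\frac12} + \left(t+(\gamma-1)T\right)I_d\right)\Sigma^{-\frac12}\,\Gamma_t .
\end{aligned}
```

Since \(\Gamma_t\) and \(\Sigma^{-\frac12}\) are invertible, the left-hand side is invertible exactly when the middle factor is, which is the eigenvalue criterion used in the proof.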

The above result is very important. It means that when \(\gamma < 1\) the solution of (90) with terminal condition (91) blows up in finite time, in the sense that the solution can only be defined on an interval of the form \((\tau ,T]\). If T is small enough so that \(\tau < 0\), then the solution exists on [0, T]. Otherwise, we are unable to solve (90) with terminal condition (91) on [0, T]. When \(d=1\), B(t) is a scalar and it goes to \(+\infty \) as \(t \rightarrow \tau \). In particular, this means that the value function ceases to be defined because it becomes infinite.
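The blowup bound of Proposition 21 can be evaluated numerically. In the Python sketch below, the function names and parameter values are hypothetical, and the formula for \(\tau\) is our own rearrangement of the proof of Proposition 21: the middle factor of the factorization is singular when \(t = (1-\gamma)T - \gamma\lambda\) for an eigenvalue \(\lambda\), and the latest such time comes from \(\lambda_{\min}\).

```python
import numpy as np


def sym_sqrt(A: np.ndarray) -> np.ndarray:
    """Symmetric square root of a symmetric positive definite matrix."""
    w, V = np.linalg.eigh(A)
    return V @ np.diag(np.sqrt(w)) @ V.T


def lambda_min(Sigma: np.ndarray, Gamma0: np.ndarray) -> float:
    """Smallest eigenvalue of Sigma^{1/2} Gamma_0^{-1} Sigma^{1/2}."""
    S = sym_sqrt(Sigma)
    return float(np.linalg.eigvalsh(S @ np.linalg.inv(Gamma0) @ S).min())


def max_horizon(gamma: float, Sigma: np.ndarray, Gamma0: np.ndarray) -> float:
    """Supremum of the horizons T for which E(t) is invertible on [0, T]."""
    if gamma == 1.0:
        raise ValueError("gamma = 1 (log utility) is treated separately")
    if gamma > 1.0:
        return float("inf")
    return gamma / (1.0 - gamma) * lambda_min(Sigma, Gamma0)


def blowup_time(gamma: float, T: float, Sigma: np.ndarray, Gamma0: np.ndarray) -> float:
    """For gamma < 1: tau = (1 - gamma) T - gamma * lambda_min; the solution lives on (tau, T]."""
    return (1.0 - gamma) * T - gamma * lambda_min(Sigma, Gamma0)


# One-asset illustration: sigma = 0.2, nu0 = 0.1, so lambda_min = sigma^2 / nu0^2.
Sigma, Gamma0, gamma = np.array([[0.04]]), np.array([[0.01]]), 0.5
print(max_horizon(gamma, Sigma, Gamma0))       # maximal admissible horizon
print(blowup_time(gamma, 5.0, Sigma, Gamma0))  # positive: blowup inside [0, 5]
print(blowup_time(gamma, 3.0, Sigma, Gamma0))  # negative: solution exists on [0, 3]
```

In dimension one, \(\Sigma^{\frac12}\Gamma_0^{-1}\Sigma^{\frac12} = \sigma^2/\nu_0^2\), so the bound reads \(T < \frac{\gamma}{1-\gamma}\frac{\sigma^2}{\nu_0^2}\).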

We are now ready to solve (90) with terminal condition (91).

Proposition 22

Let us assume that either (i) \(\gamma > 1\) or (ii) \(\gamma < 1\) and \(T < \frac{\gamma }{1-\gamma } \lambda _{\min }\left( \Sigma ^{\frac{1}{2} }\Gamma _{0}^{-1}\Sigma ^{\frac{1}{2} }\right) \).

Then, the functions a and B defined (for \(t \in [0,T]\)) by

satisfy the system (90) with terminal condition (91).

Wrapping up our results, we can state the optimal portfolio choice of an agent with a CRRA utility function with \(\gamma \ne 1\) in the case of the Gaussian prior (59).

Proposition 23

Let us consider the Gaussian prior (59). Let us assume that either (i) \(\gamma > 1\) or (ii) \(\gamma < 1\) and \(T < \frac{\gamma }{1-\gamma } \lambda _{\min }\left( \Sigma ^{\frac{1}{2} }\Gamma _{0}^{-1}\Sigma ^{\frac{1}{2} }\right) \).

Then, the optimal strategy \((\theta ^{\star }_t)_{t \in [0,T]}\) of an agent with a CRRA utility function with \(\gamma \ne 1\) is given by

Proof

Let us consider a and B as defined in Proposition 22. Then let us define \(\phi \) by (89). It is straightforward to see that \(\phi \) satisfies (44). Consequently, we know from the verification theorem (Theorem 4) and especially from Eq. (46) that

\(\square \)

Remark 8

It is noteworthy that when \(\gamma \rightarrow 1\), we recover the result of Theorem 5, i.e. \(\theta ^{\star }_t = G(t,Y_t)\).

As in the CARA case, we see from the form (89) of the solution \(\phi \) and from Eq. (94) that \(G(t,Y_t)\) rather than \(Y_t\) itself is the driver of the optimal behavior of the agent at time t. Because of Eq. (7), this means that \(\beta \) rather than y is the natural variable for addressing the problem. In what follows, we solve the optimal portfolio choice problem in the case of a Gaussian prior by taking another route, on which the dynamics of the system is given by the stochastic differential equations (65) rather than (64).

4.3.2 Solving the problem using \(\beta \)

On our first route for solving the above optimal portfolio choice problem, the central equation was the HJB equation (36) associated with the stochastic differential equations (64). Instead of using the stochastic differential equations (64), we now reconsider the problem in the Gaussian prior case by using the stochastic differential equations (65).Footnote 10

If \(\gamma < 1\), we define for \(t \in [0,T]\) the set

If \(\gamma > 1\), we define for \(t \in [0,T]\) the set

As in the CARA case, we have in fact \(\tilde{{\mathcal {A}}}^\gamma _{t} = {\mathcal {A}}^\gamma _{t}, \forall \gamma > 0\).

The value function \({\tilde{v}}\) associated with the problem is now given by

where, \(\forall t \in [0,T], \forall (V,\beta ,\theta ) \in {\mathbb {R}}_+^*\times {\mathbb {R}}^d \times \tilde{{\mathcal {A}}}_t, \forall s \in [t,T],\)

The HJB equation associated with this optimization problem is

with terminal condition

By using the ansatz

we obtain the following proposition:

Proposition 24

Suppose there exists \(\tilde{\phi }\in C^{1,2}\left( \left[ 0,T\right] \times {\mathbb {R}}^{d}\right) \) satisfying

\(\forall (t,\beta ) \in [0,T]\times {\mathbb {R}}^{d}\),

with terminal condition

Then \({\tilde{u}}\) defined by (99) is a solution of the HJB equation (97) with terminal condition (98).

Moreover, the supremum in (97) is achieved at

For solving Eq. (100) with terminal condition (101), we consider the following ansatz:

where \({\tilde{a}}(t) \in {\mathbb {R}}\) and \({\tilde{B}}(t) \in S_d({\mathbb {R}})\).

The next proposition states the ODEs that \({\tilde{a}}\) and \({\tilde{B}}\) must satisfy.

Proposition 25

Assume there exist \({\tilde{a}}\in {C}^{1}\left( \left[ 0,T\right] \right) \) and \({\tilde{B}}\in {C}^{1}\left( \left[ 0,T\right] ,S_{d}\left( {\mathbb {R}}\right) \right) \) satisfying the following system of ODEs (for \(t \in [0,T]\)):

with terminal condition

Then, the function \(\tilde{\phi }\) defined by (103) satisfies (100) with terminal condition (101).

The system of ODEs (104) with terminal condition (105) can be solved in closed form on [0, T] when there is no blowup. This is the purpose of the following proposition.

Proposition 26

Let us assume that either (i) \(\gamma > 1\) or (ii) \(\gamma < 1\) and \(T < \frac{\gamma }{1-\gamma } \lambda _{\min }\left( \Sigma ^{\frac{1}{2} }\Gamma _{0}^{-1}\Sigma ^{\frac{1}{2} }\right) \).

Then, the functions \({\tilde{a}}\) and \({\tilde{B}}\) defined, for \(t \in [0,T]\), by

satisfy the system (104) with terminal condition (105).

We are now ready to state the main result of this subsection, whose proof is similar to that of Theorem 4.

Theorem 7

Let us assume that either (i) \(\gamma > 1\) or (ii) \(\gamma < 1\) and \(T < \frac{\gamma }{1-\gamma } \lambda _{\min }\left( \Sigma ^{\frac{1}{2} }\Gamma _{0}^{-1}\Sigma ^{\frac{1}{2} }\right) \).

Let us consider \({\tilde{a}}\) and \({\tilde{B}}\) as defined in Proposition 26. Let us then define \(\tilde{\phi }\) by (103) and, subsequently, \({\tilde{u}}\) by (99).

For all \(\left( t,V,\beta \right) \in \left[ 0,T\right] \times {\mathbb {R}}_+^*\times {\mathbb {R}}^{d}\) and \(\theta = (\theta _s)_{s \in [t,T]}\in \tilde{{\mathcal {A}}}_t\), we have

Moreover, equality in (106) is obtained by taking the optimal control \((\theta ^{\star }_s)_{s \in [t,T]}\in \tilde{{\mathcal {A}}}_t\) given by

In particular \({\tilde{u}}={\tilde{v}}\).

4.3.3 Comments on the results: beyond the learning-anticipation effect

In the case of an agent maximizing an expected CRRA utility objective function, the optimal portfolio allocation is given by the formula

whenever either (i) \(\gamma \ge 1\) or (ii) \(\gamma < 1\) and \(T < \frac{\gamma }{1-\gamma } \lambda _{\min }\left( \Sigma ^{\frac{1}{2} }\Gamma _{0}^{-1}\Sigma ^{\frac{1}{2} }\right) \).

When \(\gamma \ne 1\), the optimal strategy does not boil down to the naive strategy

However, it does in the case of a logarithmic utility function (i.e., \(\gamma = 1\)). This means that there is no learning-anticipation effect in the case of an agent with a \(\log \) utility.

As in the CARA case, it is interesting to analyze the formula in the one-asset case \(d=1\). In that case, let us denote by \(\sigma \) the volatility of the risky asset and let us assume that the prior distribution for \(\mu \) is \({\mathcal {N}}(\beta _0, \nu _0^2)\), where \(\nu _0 > 0\). The agent following the optimal strategy invests at time t a proportion of his wealth

in the risky asset, whereas the naive strategy would consist instead in investing the proportion

When \(\gamma > 1\), we observe a learning-anticipation effect similar to that of the CARA case. It is measured by the multiplier \(\chi = \frac{\sigma ^2 + \nu _0^2 t}{\sigma ^2 + \nu _0^2 \frac{t+(\gamma -1)T}{\gamma }} \in (0,1]\). \(\chi \) is an increasing function of t. This means that the agent invests less (in absolute value) in the risky asset than he would if he opted for the naive strategy, except at time T, when \(\chi = 1\) because there is nothing left to learn. \(\chi \) is also an increasing function of \(\sigma \): the smaller \(\sigma \), the stronger the learning-anticipation effect. When volatility is low, it is really valuable to wait for a good estimate of \(\mu \) before investing. Finally, \(\chi \) is a decreasing function of \(\nu _0\) and T. The longer the investment horizon and the higher the uncertainty about the value of the drift, the stronger the incentive of the agent to start with a small exposure (in absolute value) to the risky asset and to observe its behavior before adjusting his exposure, ceteris paribus.
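The multiplier \(\chi\) can be evaluated directly from the formula above. The following sketch (parameter values are arbitrary illustrations, not taken from the paper; the function name is ours) tabulates \(\chi\) on a time grid for a prudent agent (\(\gamma > 1\)) and for an agent with \(\gamma < 1\), in a regime where the denominator stays positive.

```python
def chi(t, T, sigma, nu0, gamma):
    """Learning-anticipation multiplier chi(t) in the one-asset CRRA case."""
    num = sigma**2 + nu0**2 * t
    den = sigma**2 + nu0**2 * (t + (gamma - 1.0) * T) / gamma
    if den <= 0.0:
        raise ValueError("denominator is not positive: outside the no-blowup regime")
    return num / den


# chi on a time grid for gamma > 1 (prudent) and gamma < 1 (bold)
T, sigma, nu0 = 1.0, 0.2, 0.1
grid = [k * T / 4 for k in range(5)]
prudent = [chi(t, T, sigma, nu0, gamma=3.0) for t in grid]
bold = [chi(t, T, sigma, nu0, gamma=0.5) for t in grid]
```

With these values, `prudent` increases towards 1 and `bold` decreases towards 1, in line with the discussion of both regimes.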

All the above effects are in line with the CARA case: the agent is prudent and waits to know more. However, the effects are reversed when \(\gamma < 1\). In that case, the multiplier \(\chi \) is above 1 instead of below 1. In fact, the very possibility that expected returns could be very high (or very low, since short positions are allowed) creates an incentive for the agent to have a higher exposure to the risky asset. Then, as the uncertainty is reduced through learning, the agent adjusts his position towards a milder one and ends up with the same position as in the naive strategy when \(t=T\).

It is noteworthy that \(\chi \) at time 0 tends to \(+\infty \) when \(\gamma \) tends to \(\frac{\nu _0^2 T}{\sigma ^2 + \nu _0^2 T}\) from above, and this corresponds to the threshold found in Proposition 21 for a blowup occurring exactly at time \(t=0\). If \(\beta _0 > r\), this means that the agent wants to borrow an infinite amount of money at time 0 to invest in the risky asset.
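Setting \(t = 0\) in \(\chi\), the denominator \(\sigma^2 + \nu_0^2 \frac{(\gamma-1)T}{\gamma}\) vanishes when \(\gamma(\sigma^2 + \nu_0^2 T) = \nu_0^2 T\). The sketch below (illustrative values, helper names ours) computes this critical \(\gamma\) and checks that it coincides with the one-asset version of the horizon condition, in which \(\lambda_{\min}\left(\Sigma^{\frac{1}{2}}\Gamma_0^{-1}\Sigma^{\frac{1}{2}}\right)\) reduces to \(\sigma^2/\nu_0^2\).

```python
def critical_gamma(T, sigma, nu0):
    """gamma at which chi(0) blows up in the one-asset case."""
    return nu0**2 * T / (sigma**2 + nu0**2 * T)


def horizon_bound_1d(gamma, sigma, nu0):
    """gamma/(1-gamma) * lambda_min(...) with Sigma = sigma^2 and Gamma0 = nu0^2."""
    return gamma / (1.0 - gamma) * sigma**2 / nu0**2


T, sigma, nu0 = 1.0, 0.2, 0.1
g_star = critical_gamma(T, sigma, nu0)
# At gamma = g_star the admissible horizon equals T exactly,
# i.e. the blowup occurs precisely at t = 0.
bound_at_g_star = horizon_bound_1d(g_star, sigma, nu0)
denominator_at_0 = sigma**2 + nu0**2 * (g_star - 1.0) * T / g_star
```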

This reversed phenomenon is linked to the qualitative difference between the power utility functions when \(\gamma >1\), which are bounded from above, and the power utility functions when \(\gamma <1\), which have no upper bound. This difference explains why, for \(\gamma < 1\) and for a Gaussian prior distribution (which is unbounded), the multiplier \(\chi \) and the value function can blow up to \(+\infty \) and therefore cease to be defined if T is too large (or, equivalently, if \(\gamma \) is too small when T is fixed).

5 Optimal portfolio choice, portfolio liquidation, and portfolio transition with online learning and execution costs

The results presented above have been obtained by using PDE methods only. It is noteworthy that one could have derived the same formulas by using the martingale method of Karatzas and Zhao [28]. However, the martingale method requires a model in which suitable martingales exist, and there are many problems in which such martingales cannot be exhibited. The goal of this section is to show how PDEs can be used to address problems to which the martingale method cannot be applied.

The classical literature on portfolio choice and asset allocation mainly considers frictionless markets. In that case, both PDE methods and martingale methods can be used for solving the problem, because there exists an equivalent probability measure under which discounted prices, and therefore discounted portfolio values, are martingales. However, martingale methods cannot be used when one adds frictions to the model. In what follows, we consider frictions in the form of execution costs, as in optimal execution models à la Almgren–Chriss (see [1, 2]). We show that the PDE method presented in the previous sections makes it possible to address the optimal portfolio choice problem, but also optimal portfolio liquidation and optimal portfolio transition problems, when there are execution costs and when one learns the value of the drift over the course of the optimization problem.

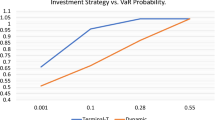

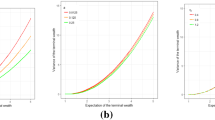

We first present the modelling framework and a generic optimization problem encompassing the three types of problems we consider. We then derive the associated HJB equation and reduce it to a simpler PDE using an ansatz. We then focus on the specific case in which (i) the prior distribution of the drift is Gaussian and (ii) the execution costs and penalty functions are quadratic, because in that case the PDE boils down to a system of ODEs that can be solved numerically. Finally, we show numerical examples for each of the problems.

5.1 Notations and setup of the model

5.1.1 Price dynamics and Bayesian learning of the drift

As above we consider a financial market with one risk-free asset and d risky assets. In order to simplify the equations, we assume that the risk-free asset yields no interest. It is noteworthy that the model can easily be generalized to the case of a non-zero risk-free interest rate r.

We index by \(i \in \left\{ 1,\ldots ,d\right\} \) the d risky assets. For \(i\in \left\{ 1,\ldots ,d\right\} \), the price of the ith risky asset \(S^i\) has the following drifted Bachelier dynamicsFootnote 11

where the volatility vector \(\sigma = (\sigma ^{1}, \ldots , \sigma ^d)'\) satisfies \(\forall i \in \left\{ 1,\ldots ,d\right\} , \sigma ^{i} > 0\), and where the drift vector \(\mu =(\mu ^{1}, \ldots , \mu ^d)'\) is unknown.

As above, we assume that the prior distribution of \(\mu \), denoted by \(m_\mu \), is sub-Gaussian.

Throughout, we shall respectively denote by \(\rho = (\rho ^{ij})_{1\le i,j \le d}\) and \(\Sigma = (\rho ^{ij}\sigma ^{i}\sigma ^{j})_{1\le i,j \le d}\) the correlation and covariance matrices associated with the dynamics of prices.
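To fix ideas, the following sketch simulates the price model. Since the display equation for the dynamics is not reproduced here, it assumes the usual form of drifted Bachelier dynamics, \(dS^i_t = \mu^i dt + \sigma^i dW^i_t\) with \(d\langle W^i, W^j\rangle_t = \rho^{ij} dt\), and draws \(\mu\) once from a Gaussian prior \({\mathcal N}(\beta_0, \Gamma_0)\), which is one particular sub-Gaussian prior. The function name and its arguments are hypothetical.

```python
import numpy as np


def simulate_bachelier(S0, beta0, Gamma0, sigma, rho, T, n_steps, rng):
    """Draw mu from N(beta0, Gamma0), then simulate drifted Bachelier prices on [0, T]."""
    S0 = np.asarray(S0, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    Sigma = np.asarray(rho, dtype=float) * np.outer(sigma, sigma)  # rho^ij sigma^i sigma^j
    mu = rng.multivariate_normal(beta0, Gamma0)  # the unknown drift, drawn once
    dt = T / n_steps
    chol = np.linalg.cholesky(Sigma)  # Sigma = chol @ chol.T
    dZ = rng.standard_normal((n_steps, sigma.size)) * np.sqrt(dt)
    increments = mu * dt + dZ @ chol.T  # each row ~ N(mu dt, Sigma dt)
    S = np.vstack([S0, S0 + np.cumsum(increments, axis=0)])
    return mu, S, Sigma


rng = np.random.default_rng(0)
mu, S, Sigma = simulate_bachelier(
    S0=[100.0, 50.0], beta0=[0.0, 0.0], Gamma0=np.diag([0.01, 0.01]),
    sigma=[0.2, 0.3], rho=[[1.0, 0.5], [0.5, 1.0]], T=1.0, n_steps=250, rng=rng)
```

The agent only observes `S`; the drawn `mu` is kept for comparison with any estimate built from the observed prices.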

We introduce the process \((\beta _t)_{t\in {\mathbb {R}}_+}\) defined by

We can state a result similar to that of Theorem 1.

Theorem 8

Let us define

F is a well-defined finite-valued \(C^\infty ({\mathbb {R}}_+\times {\mathbb {R}}^d)\) function.

We have

where

As in Sect. 2, we define the process \(\left( {\widehat{W}}_t\right) _{t\in {\mathbb {R}}_+}\) by

Using the same method as in Sect. 2, we can prove the following result on \(\left( {\widehat{W}}_t\right) _{t\in {\mathbb {R}}_+}\):

Proposition 27

\(\left( \widehat{W}_{t}\right) _{t\in {\mathbb {R}}_+}\) is a Brownian motion adapted to \(\left( {\mathcal {F}}_{t}^{S}\right) _{t\in {\mathbb {R}}_+}\), with the same correlation structure as \(\left( {W}_{t}\right) _{t\in {\mathbb {R}}_+}\).
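In the special case of a Gaussian prior \({\mathcal N}(\beta_0,\Gamma_0)\), and assuming that \(\beta_t\) denotes the conditional mean of \(\mu\) given \({\mathcal F}^S_t\), the filter takes the familiar conjugate form: under drifted Bachelier dynamics the likelihood of a price path depends on the path only through \(S_t - S_0\), so that \(\beta_t = \left(\Gamma_0^{-1} + t\Sigma^{-1}\right)^{-1}\left(\Gamma_0^{-1}\beta_0 + \Sigma^{-1}(S_t - S_0)\right)\), with posterior covariance \(\left(\Gamma_0^{-1} + t\Sigma^{-1}\right)^{-1}\). The sketch below evaluates these formulas; it does not implement the general function F of Theorem 8, and the function name is ours.

```python
import numpy as np


def gaussian_posterior(t, dS, beta0, Gamma0, Sigma):
    """Posterior mean and covariance of mu given S_t - S_0 = dS, for a Gaussian prior."""
    Gamma0_inv = np.linalg.inv(np.asarray(Gamma0, dtype=float))
    Sigma_inv = np.linalg.inv(np.asarray(Sigma, dtype=float))
    precision = Gamma0_inv + t * Sigma_inv  # posterior precision matrix
    rhs = Gamma0_inv @ np.asarray(beta0, dtype=float) + Sigma_inv @ np.asarray(dS, dtype=float)
    mean = np.linalg.solve(precision, rhs)
    cov = np.linalg.inv(precision)
    return mean, cov


# One asset: sigma = 0.2, prior N(0, 0.1^2), observed S_1 - S_0 = 0.3
m1, c1 = gaussian_posterior(1.0, [0.3], [0.0], [[0.01]], [[0.04]])
```

At \(t = 0\) the prior is returned unchanged, and for large t the estimate approaches the empirical drift \((S_t - S_0)/t\).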

The Brownian motion \(\left( \widehat{W}_{t}\right) _{t\in {\mathbb {R}}_+}\) is used to rewrite Eq. (108) as

5.2 Almgren–Chriss modelling framework and optimization problems

We consider the modelling framework introduced by Almgren and Chriss in [1, 2] (see also [21, 24]). In this framework, we do not consider the Mark-to-Market (MtM) value of the portfolio as a state variable. Instead, we consider separately the position \(q\in {\mathbb {R}}^d\) in the risky assets and the amount \(X\in {\mathbb {R}}\) on the cash account.

Let us set a time horizon \(T\in {\mathbb {R}}_+^*\). The strategy of the agent is described by the stochastic process \(\left( v_{t}\right) _{t\in [0,T]} \in {\mathcal {A}}^{\text {AC}} = {\mathcal {A}}^{\text {AC}}_0\), where, for \(t \in [0,T]\),

This process represents the velocity at which the agent buys and sells the risky assets. In other words,

Now, for \(v\in {\mathcal {A}}^{\text {AC}}\), the amount on the cash account evolves as

where \(\forall i\in \{1,\ldots ,d\},(V^i_{t})_{t\in [0,T]}\) is a deterministic process, continuousFootnote 12 and bounded, modelling the market volume for the ith risky asset,Footnote 13 and where \((L^i)_{1\le i \le d}\) model execution costs. For each \(i \in \{1, \ldots , d\}\), the execution cost function \(L^i\in C({\mathbb {R}},{\mathbb {R}}_{+})\) classically satisfies:

- \(L^i(0)=0\),
- \(L^i\) is increasing on \({\mathbb {R}}_{+}\) and decreasing on \({\mathbb {R}}_{-}\),
- \(L^i\) is strictly convex,
- \(L^i\) is asymptotically superlinear, i.e.,

  $$\lim _{|y|\rightarrow +\infty }\frac{L^i(y)}{|y|} = +\infty .$$

Remark 9

In applications, \(L^i\) is often a power function \(L^i(y)=\eta ^i\left| y\right| ^{1+\phi ^i}\) with \(\phi ^i>0\), or a function of the form \(L^i(y)=\eta ^i\left| y\right| ^{1+\phi ^i}+\psi ^i|y|\) with \(\phi ^i,\psi ^i>0\), where \(\psi ^i\) accounts for proportional costs such as the bid-ask spread or stamp duty. In the original Almgren–Chriss framework, the execution costs are quadratic. This corresponds to \(L^i(y)=\eta ^iy^{2}\) (\(\phi ^i=1,\psi ^i=0\)).
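The cost functions of Remark 9 are straightforward to code. The sketch below (function names ours) also spot-checks on a grid the properties listed above; such a check is only illustrative and does not replace the analytical argument.

```python
def execution_cost(y, eta, phi, psi=0.0):
    """L(y) = eta*|y|**(1+phi) + psi*|y|; psi accounts for proportional costs."""
    return eta * abs(y) ** (1.0 + phi) + psi * abs(y)


def quadratic_cost(y, eta):
    """Original Almgren-Chriss case (phi = 1, psi = 0): L(y) = eta*y**2."""
    return execution_cost(y, eta, phi=1.0)


def satisfies_properties(L, grid):
    """Grid check of L(0) = 0, monotonicity on each half-line and strict midpoint convexity."""
    pos = sorted(y for y in grid if y >= 0)
    neg = sorted(y for y in grid if y <= 0)
    increasing = all(L(a) < L(b) for a, b in zip(pos, pos[1:]))
    decreasing = all(L(a) > L(b) for a, b in zip(neg, neg[1:]))
    convex = all(L((a + b) / 2) < (L(a) + L(b)) / 2
                 for a in grid for b in grid if a != b)
    return L(0.0) == 0.0 and increasing and decreasing and convex
```

A purely proportional cost \(\psi|y|\) fails the strict convexity check, which is why \(\phi^i > 0\) is required.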

Given \(v\in {\mathcal {A}}^{\text {AC}}_t\), we define for \(s \ge t\),

We assume that the agent has a constant absolute risk aversion denoted by \(\gamma >0\). For an arbitrary initial state \((x_{0},q_0,S_0)\), the optimization problems we consider are of the following generic form:

where the penalty function \(\ell \) is assumed to be continuous and convex.
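Since the display equations for the state variables are not reproduced here, the following sketch assumes the standard Almgren–Chriss form \(dq_t = v_t\,dt\) and \(dX_t = -\left(v_t'S_t + \sum_{i=1}^d V^i_t L^i\left(\frac{v^i_t}{V^i_t}\right)\right)dt\), with quadratic costs \(L^i(y)=\eta^i y^2\), and discretizes them with an explicit Euler scheme along a given price path. The function and its arguments are hypothetical.

```python
import numpy as np


def almgren_chriss_states(q0, x0, S, v, V, eta, dt):
    """Euler scheme for the position q and the cash X along a given price path.

    S: prices, shape (n+1, d); v: trading rates, shape (n, d);
    V: market volumes, shape (n, d); eta: quadratic cost parameters, shape (d,).
    """
    S, v, V, eta = (np.asarray(a, dtype=float) for a in (S, v, V, eta))
    n, d = v.shape
    q = np.empty((n + 1, d))
    X = np.empty(n + 1)
    q[0], X[0] = q0, x0
    for k in range(n):
        q[k + 1] = q[k] + v[k] * dt
        cost = np.sum(V[k] * eta * (v[k] / V[k]) ** 2)  # sum_i V^i L^i(v^i / V^i)
        X[k + 1] = X[k] - (v[k] @ S[k] + cost) * dt
    return q, X


# Example: sell 10 shares at constant speed over [0, 1] at a flat price of 100
n, T = 10, 1.0
q, X = almgren_chriss_states(
    q0=[10.0], x0=0.0, S=np.full((n + 1, 1), 100.0), v=np.full((n, 1), -10.0),
    V=np.full((n, 1), 1000.0), eta=[0.1], dt=T / n)
```

In this example the final cash is the Mark-to-Market value of the initial position minus the cumulated execution costs \(\eta v^2 T / V\).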

The choice of the penalty function \(\ell \) depends on the problem faced by the agent:

- In the case of a portfolio choice problem, we can assume that \(\ell = 0\) or that \(\ell \) penalizes illiquid assets (see for instance [21, 24]).
- In the case of an optimal portfolio liquidation problem, we can assume that the penalty function is of the form \(\ell (q) = \frac{1}{2} q'Aq\) with \(A\in S_d^{++}({\mathbb {R}})\) such that the minimum eigenvalue of A is large enough to force (almost complete) liquidation.Footnote 14
- In the case of an optimal portfolio transition problem, we can assume that the penalty function is of the form \(\ell (q) = \frac{1}{2} \left( q-q_{\mathrm {target}}\right) 'A\left( q-q_{\mathrm {target}}\right) \) with \(A\in S_d^{++}({\mathbb {R}})\) such that the minimum eigenvalue of A is large enough to force \(q_T\) to be very close to the target \(q_{\mathrm {target}}\).Footnote 15

5.3 The PDEs in the general case

Let us introduce the value function \({\mathcal {V}}\) associated with the above generic problem.

The HJB equation associated with the problem is

with terminal condition

To reduce the dimensionality of the problem, we consider the following ansatz:

We have the following result:

Proposition 28

Suppose there exists \(\theta \in {C}^{1,2,2}\left( \left[ 0,T\right] \times {\mathbb {R}}^d\times {\mathbb {R}}^d\right) \) satisfying

with terminal condition

where, for all \(i\in \{1,\ldots , d\}\), \(H^i\) is the Legendre–Fenchel transform of \(L^i\), i.e.,

Then u defined by (124) is a solution of the HJB equation (122) with terminal condition (123).

Moreover, the supremum in (122) is achieved at \(v^{\star }(t,q,S) = \left( v^{i\star }(t,q,S)\right) _{1 \le i \le d}\), where
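For the cost functions of Remark 9, the Legendre–Fenchel transform can be computed in closed form: maximizing \(py - \eta|y|^{1+\phi} - \psi|y|\) over y gives \(H(p) = \frac{\phi}{(1+\phi)^{1+1/\phi}}\frac{\left((|p|-\psi)_+\right)^{1+1/\phi}}{\eta^{1/\phi}}\), which reduces to \(H(p) = \frac{p^2}{4\eta}\) in the quadratic case. The sketch below codes this formula and compares it with a brute-force maximization on a grid; the function names and grid parameters are ours.

```python
def execution_cost(y, eta, phi, psi=0.0):
    """L(y) = eta*|y|**(1+phi) + psi*|y| (Remark 9)."""
    return eta * abs(y) ** (1.0 + phi) + psi * abs(y)


def hamiltonian(p, eta, phi, psi=0.0):
    """Closed-form H(p) = sup_y (p*y - L(y)) for the cost function above."""
    a = max(abs(p) - psi, 0.0)  # not trading is optimal when |p| <= psi
    return phi / (1.0 + phi) ** (1.0 + 1.0 / phi) * a ** (1.0 + 1.0 / phi) / eta ** (1.0 / phi)


def hamiltonian_grid(p, eta, phi, psi=0.0, y_max=10.0, n=20001):
    """Brute-force sup over a uniform grid of [-y_max, y_max], for checking only."""
    best = float("-inf")
    for k in range(n):
        y = -y_max + 2.0 * y_max * k / (n - 1)
        best = max(best, p * y - execution_cost(y, eta, phi, psi))
    return best
```

The grid check is only meaningful when the maximizer \(\left(\frac{|p|-\psi}{\eta(1+\phi)}\right)^{1/\phi}\) lies inside \([-y_{\max}, y_{\max}]\).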

Proof

Let us consider \(\theta \in {C}^{1,2,2}\left( \left[ 0,T\right] \times {\mathbb {R}}^d\times {\mathbb {R}}^d\right) \) solution of the PDE (125) with terminal condition (126). For u defined by (124), we have

Since it is straightforward to verify that u satisfies the terminal condition (123), the result is proved. \(\square \)