Abstract

We consider an optimal stochastic impulse control problem over an infinite time horizon motivated by a model of irreversible investment choices with fixed adjustment costs. By employing techniques of viscosity solutions and relying on semiconvexity arguments, we prove that the value function is a classical solution to the associated quasi-variational inequality. This enables us to characterize the structure of the continuation and action regions and construct an optimal control. Finally, we focus on the linear case, discussing, by a numerical analysis, the sensitivity of the solution with respect to the relevant parameters of the problem.

1 Introduction

In this paper we consider a one dimensional stochastic impulse optimal control problem modeling the economic problem of irreversible investment with fixed adjustment cost.

Let \(X=\{X_t\}_{t\ge 0}\) be a real valued positive process representing an economic indicator (such as the GDP of a country, the production capacity of a firm and so on) on which a planner/manager can intervene. When no intervention is undertaken, it is assumed that the process X evolves autonomously according to a time-homogeneous Itô diffusion. On the other hand, the planner may act on this process, increasing its value, by choosing a sequence of intervention dates \(\{\tau _n\}_{n\ge 1}\) and of intervention amplitudes \(\{i_n\}_{n\ge 1}\), with \(i_n>0\).Footnote 1 Hence, the control is represented by a sequence of couples \(\left\{ (\tau _n,i_n)\right\} _{n\ge 1}\): the first component represents the intervention time, the second component the size of the intervention. The goal of the controller is to maximize, over the set of all admissible controls, the expected total discounted income

where f is a reward function, \(c_0>0\) and \(c_1>0\) represent, respectively, the proportional and the fixed cost of intervention, and \(\rho >0\) is a discount factor.
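For concreteness, the objective described above can be sketched as follows. The symbols \(J\) and \(X^{x,I}\) (the controlled process started at x under the control I) are assumptions borrowed from the notation of Sect. 2, and the sign convention treats intervention costs as discounted outlays subtracted from the discounted reward.

```latex
J(x,I) \;=\; \mathbb{E}\left[\int_0^\infty e^{-\rho t}\, f\big(X^{x,I}_t\big)\,dt
\;-\; \sum_{n\ge 1} e^{-\rho \tau_n}\big(c_0\, i_n + c_1\big)\right].
```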

From the modeling side, our problem is the “extension” to the case \(c_1>0\) of the same problem already treated in the literature in the case \(c_1=0\) (see, e.g., [63, Ch. 4, Sec. 5]). In this respect, it applies to economic problems of capacity expansion, notably irreversible investment problems.Footnote 2

From the theoretical side, the introduction of a fixed cost of control is relevant, as it leads from a problem well posed (in the sense of existence of optimal controls) as a singular control problem to a problem well posed as an impulse control problem.Footnote 3 Such a change does not come for free at the theoretical level. Indeed, the introduction of a fixed cost of control has two unpleasant effects. Firstly, it destroys the concavity of the objective functional even if the revenue function is concave. Secondly, when approaching the problem by dynamic programming techniques (as we do), the dynamic programming equation has a nonlocal term and takes the form of a quasi-variational inequality (QVI, hereafter), whereas it is a variational inequality in the singular control case.

1.1 Related literature

First of all, it is worth noticing that the stochastic impulse control setting has been widely employed in several applied fields: e.g., exchange and interest rates [20, 49, 56], portfolio optimization with transaction costs [34, 51, 57], inventory and cash management [12, 21, 27, 28, 29, 44, 45, 58, 62, 67, 68, 71], real options [47, 53], reliability theory [7]. More recently, games of stochastic impulse control have been investigated with application to pollution [38].

From a modeling point of view, the works closest to ours are [3, 6, 26, 35, 51]. On the theoretical side, starting from the classical book [17], several works investigated QVIs associated to stochastic impulse optimal control in \(\mathbb {R}^n\). Among them, we mention the recent [43] in a diffusion setting and [14, 31] in a jump-diffusion setting. In particular [17, Ch. 4] deals with Sobolev type solutions, whereas [43] deals with viscosity solutions. These two works prove a \(W^{2,p}\)-regularity, with \(p<\infty \), for the solution of QVI, which, by classical Sobolev embeddings, yields a \(C^1\)-regularity. However, it is typically not easy to extract from such regularity information on the structure of the so called continuation and action regions, hence on the candidate optimal control. If this structure is established, then one can try to prove a verification theorem showing that the candidate optimal control is actually optimal. In a stylized one dimensional example, [43, Sec. 5] successfully employs this method by exploiting the regularity result proved in [43, Sec. 4] to depict the structure of the continuation and action regions for the problem at hand. Concerning verification, we need to mention the recent paper [15], which provides a non-smooth verification theorem in a quite general setting based on the stochastic Perron method to construct a viscosity solution to QVI; this paper also provides, in its last section, an application of the results to a one dimensional problem with an implementable solution. In dimension one other approaches, based on excessive mappings and iterated optimal stopping schemes, have been successfully employed in the context of stochastic impulse control (see [3, 6, 35, 46]). More recently, these methods have been extended to Markov processes valued in metric spaces (see [25]); again a complete description of the solution is shown in one dimensional examples.

1.2 Contribution

From the methodological side our work is close to [43]. As in the latter, we follow a direct analytical method based on viscosity solutions and we do not employ a guess-and-verify approach.Footnote 4 Indeed, we directly provide necessary optimality conditions that, by uniqueness, fully characterize the solution. In particular, we do not postulate the smooth-fit principle, as it is usually done in the guess-and-verify approach, but we prove it directly.Footnote 5 To the best of our knowledge, a rigorous analytical treatment such as ours of the specific problem treated in this paper is still missing in the literature. It is important to notice that our analysis yields a complete and implementable characterization of the optimal control policy through the identification of the continuation and action regions. Since the aforementioned techniques based on excessive mappings seem to be perfectly applicable to our problem (even under weaker assumptions), it is worth pointing out that our contribution is methodological. As is well known, the (implementable) characterization of the optimal control in stochastic impulse control problems is a challenging task in dimension larger than one. Hence, it is important to have at hand an approach like ours that might be generalized to address impulse control problems in a multi-dimensional setting. In this regard, it is worth noting the following.

- To the best of our knowledge, the only study providing a complete picture of the solution in dimension two, through a two dimensional (S, s)-rule, is the recent paper [16] (see also [69] in a deterministic setting). The techniques used there are analytical and based on the study of QVIs. Unfortunately, in that paper, the authors are able to provide a complete solution only in a very specific case.

- In the presence of semiconvex data, our approach to proving \(C^1\) regularity of the value function, based on semiconvexity jointly with the viscosity property, might, unlike [43], succeed in proving a directional regularity result just along nondegenerate directions (see [37] in a singular control context).

- The directional regularity result mentioned above might be sufficient to derive the right optimality condition to solve the control problem (see again [37] in a singular control context).

1.3 Contents

In Sect. 2 we set up the problem. In Sect. 3 we state some preliminary results on the value function v; in particular, we show that it is semiconvex. In Sect. 4 we derive the QVI associated to v and show that v solves it in the viscosity sense. After that, we prove that v is of class \(C^2\) in the continuation region (the region where the differential part of QVI holds with equality, see below) and of class \(C^1\) on the whole state space (Theorem 4.6, our first main result), hence proving the smooth-fit principle. We prove the latter result relying just on the semiconvexity of v and exploiting the viscosity supersolution property; unlike [43], this allows us to avoid the use of a deep theoretical result such as the Calderon–Zygmund estimate. So, with respect to the aforementioned reference, our method of proof is cheaper from a theoretical point of view; on the other hand, it heavily relies on assumptions guaranteeing the semiconvexity of v. In Sect. 5 we use the latter regularity to establish the structure of the continuation and action regions (the real unknown of the problem), showing that they are both intervals. This allows us to express v explicitly up to the solution of a nonlinear algebraic system in three variables (Theorem 5.11, our second main result). In Sect. 6, relying on the results of the previous section, we are able to construct an optimal control policy (Theorem 6.1, our third main result). The latter turns out to be based on the so called (S, s)-rule:Footnote 6 the controller acts whenever the state process reaches a minimum level s (the “trigger” boundary) and immediately brings the system to the level \(S>s\) (the “target” boundary). Finally, in Sect. 7, we provide a numerical illustration of the solution when X follows a geometric Brownian motion dynamics between intervention times, analyzing the sensitivity of the solution with respect to the volatility coefficient \(\sigma \) and to the fixed cost \(c_1\).
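The (S, s)-rule described above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the uncontrolled state is taken to be a geometric Brownian motion sampled on a time grid (so interventions happen only at grid times), and the function name `simulate_s_S` and all parameter names and values are hypothetical, not taken from the paper.

```python
import math
import random


def simulate_s_S(x0, s, S, mu, sigma, rho, c0, c1,
                 horizon=20.0, dt=0.01, seed=0):
    """Simulate one GBM path controlled by an (S, s)-rule on a time grid.

    Whenever the state is at or below the trigger s, it is moved to the
    target S, at cost c0 * size + c1 discounted at rate rho.
    Returns (interventions, discounted_cost), where interventions is a
    list of (time, size) pairs.
    """
    rng = random.Random(seed)
    x = x0
    interventions = []
    discounted_cost = 0.0
    n_steps = int(round(horizon / dt))
    for k in range(n_steps + 1):
        t = k * dt
        if x <= s:
            size = S - x  # strictly positive because S > s >= x
            interventions.append((t, size))
            discounted_cost += math.exp(-rho * t) * (c0 * size + c1)
            x = S
        # Exact one-step transition of a geometric Brownian motion.
        z = rng.gauss(0.0, 1.0)
        x *= math.exp((mu - 0.5 * sigma ** 2) * dt + sigma * math.sqrt(dt) * z)
    return interventions, discounted_cost


if __name__ == "__main__":
    iv, cost = simulate_s_S(1.0, 0.8, 1.5, -0.1, 0.3, 0.05, 1.0, 0.1)
    print(len(iv), "interventions, discounted cost", round(cost, 4))
```

Every intervention in this sketch has size at least S - s, which reflects the fact that a fixed cost makes many small interventions unattractive.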

2 Problem formulation

We introduce some notation. We set

The set \(\mathbb {R}_{++}\) will be the state space of our control problem. Throughout the paper we adopt the conventions \(e^{-\infty }=0\) and \(\inf \emptyset =\infty \). Moreover, we simply use the symbol \(\infty \) in place of \(+\infty \) when positive quantities are involved and no confusion may arise. Finally, the symbol n will always denote a natural number.

Let \((\Omega ,\mathscr {F}, \{\mathscr {F}_t\}_{t\ge 0}, \mathbb {P})\) be a filtered probability space satisfying the usual conditions and supporting a one dimensional Brownian motion \(W=\{W_t\}_{t\ge 0}\). We denote \( \mathbb {F}{:}{=}\{\mathscr {F}_t\}_{t\in \overline{\mathbb {R}}_+}\), where we set \(\displaystyle {\mathscr {F}_\infty {:}{=}\bigvee _{t\in \mathbb {R}_+}\mathscr {F}_t}\). We take \(b,\sigma :\mathbb {R}\rightarrow \mathbb {R}\) satisfying the following assumption.

Assumption 2.1

\(b,\sigma :\mathbb {R}\rightarrow \mathbb {R}\) are Lipschitz continuous functions, with Lipschitz constants \(L_b,L_\sigma \), respectively, identically equal to 0 on \((-\infty ,0]\) and with \(\sigma >0\) on \(\mathbb {R}_{++}\). Moreover, \(b,\sigma \in C^1(\mathbb {R}_{+})\) and \(b^{\prime },\sigma ^{\prime }\) are Lipschitz continuous on \(\mathbb {R}_{++}\), with Lipschitz constants \(\tilde{L}_b, \tilde{L}_\sigma >0\), respectively.

Remark 2.2

The requirement that \(b^{\prime },\sigma ^{\prime }\) are Lipschitz continuous is typical when one wants to prove the semiconvexity/semiconcavity of the value function in stochastic optimal control problems (see, e.g., the classical reference [72, Ch. 4, Sec. 4.2] in the context of regular stochastic control; [14] in the context of impulse control). We use this assumption since, as outlined in the introduction, in our approach the proof of the semiconvexity of the value function will be a crucial step towards the proof of the \(C^1\) regularity.

Let \(\tau \) be a (possibly not finite) \(\mathbb {F}\)-stopping time and let \(\xi \) be an \(\mathscr {F}_\tau \)-measurable random variable. By standard SDE theory with Lipschitz coefficients, Assumption 2.1 guarantees that there exists a unique (up to indistinguishability) \(\mathbb {F}\)-adapted process \(Z^{\tau ,\xi }=\{Z^{\tau ,\xi }_t\}_{t\ge 0}\) with continuous trajectories on \([\tau ,\infty )\), such that

Moreover, by a straightforward adaptation of [50, Sec. 5.2, Prop. 2.18] to random initial data, we obtain

Now fix \(x\in \mathbb {R}_{++}\). By (2.2) and Assumption 2.1, it follows that \(Z^{0,x}\) takes values in \(\mathbb {R}_+\). Due to the nondegeneracy assumption on \(\sigma \) over \(\mathbb {R}_{++}\), as a consequence of the results of [50, Sec. 5.5.C], the process \(Z^{0,x}\) is a (time-homogeneous) regular diffusion on \(\mathbb {R}_{++}\); i.e., setting \( \tau _{x,y}{:}{=}\inf \left\{ t\ge 0:Z^{0,x}_t=y\right\} , \) one has

In “Appendix” we show that Assumption 2.1 guarantees that the boundaries 0 and \(+\infty \) are natural for \(Z^{0,x}\) in the sense of Feller’s classification.

We introduce now a set of admissible controls and their corresponding controlled process. As a set of admissible controls (i.e., feasible investment strategies) we consider the set \(\mathscr {I}\) of all sequences of couples \(I=\left\{ (\tau _n,i_n)\right\} _{n\ge 1}\) such that:

(i) \(\{\tau _n\}_{n\ge 1}\) is an increasing sequence of \({\overline{\mathbb {R}}}_+\)-valued \(\mathbb {F}\)-stopping times such that \(\tau _n<\tau _{n+1}\) \(\mathbb {P}\)-a.s. over the set \(\{\tau _n<\infty \}\) and

$$\begin{aligned} \lim _{n\rightarrow \infty }\tau _n =\infty \quad \mathbb {P}\text{-a.s. }; \end{aligned}$$(2.3)

(ii) \(\{i_n\}_{n\ge 1}\) is a sequence of \(\mathbb {R}_{++}\)-valued random variables such that \(i_n\) is \(\mathscr {F}_{\tau _n}\)-measurable for every \(n\ge 1\);

(iii) The following integrability condition holds:

$$\begin{aligned} \sum _{n\ge 1} \mathbb {E} \left[ e^{-\rho \tau _n}(i_n+1) \right] <\infty . \end{aligned}$$(2.4)

For \(n\ge 1\), \(\tau _n\) represents an intervention time, whereas \(i_n\) represents the intervention size at the corresponding intervention time \(\tau _n\). Condition (2.3) ensures that, within a finite time interval, only a finite number of actions are executed. We allow \(\tau _n=\infty \) for all sufficiently large n, meaning that only a finite number of actions are taken. Condition (2.4) ensures that the functional defined below is well defined. We call null control any sequence \(\{(\tau _n,i_n)\}_{n\ge 1}\) such that \(\tau _n=\infty \) for each \(n\ge 1\) and denote any of them by \(\emptyset \). Notice that using the same notation \(\emptyset \) for all null controls is not ambiguous with regard to the control problem we are going to define, as any null control gives rise to the same payoff.

Given a control \(I\in \mathscr {I}\), an initial stopping time \(\tau \ge 0\) and an \(\mathscr {F}_\tau \)-measurable random variable \(\xi >0\) \(\mathbb {P}\)-a.s., we denote by \(X^{\tau ,\xi ,I}=\{X^{\tau ,\xi ,I}_r\}_{r\in [0,\infty )}\) the unique (up to indistinguishability) càdlàg process on \([\tau ,\infty )\) solving the SDE (in integral form)

If \(\tau \equiv 0\) and \(\xi \equiv x\in \mathbb {R}_{++}\) then we denote \(X^{0,\xi ,I}\) by \(X^{x,I}\). It is easily seen that, if \(\tau ^{\prime }\) is another stopping time such that \(\tau ^{\prime }\ge \tau \), then the following flow property holds true

Note that, up to indistinguishability, we have \( X^{x,\emptyset }= Z^{0,x}\). Moreover, setting by convention \(\tau _0{:}{=}0\), \( i_0{:}{=}0\) and \(X_{0^-}{:}{=}x\), we have recursively on \(n\in \mathbb {N}\)

Then, by (2.2), we have the following monotonicity of the controlled process with respect to the initial data

Next, we introduce the optimization problem. Given \(\rho >0\), \(f:\mathbb {R}_{++}\rightarrow \mathbb {R}_{++}\) measurable, \(c_0>0\), \(c_1>0\), we define the payoff functional J by

We notice that (2.4) and the fact that f is bounded from below ensure that J(x, I) is well defined and takes values in \(\mathbb {R}\cup \{\infty \}\).

We will make use of the following assumption on f.

Assumption 2.3

\(f\in C^1(\mathbb {R}_{++};\mathbb {R}_+)\), \(f^{\prime }>0\), \(f^{\prime }\) is strictly decreasing and f satisfies the Inada condition at \(\infty \):

Finally, without loss of generality, we assume that \(f(0^+){:}{=}{\displaystyle \lim _{x\rightarrow 0^+} f(x)=0}\).

Note that

by Assumption 2.1. The following assumption will ensure finiteness for the problem (Proposition 3.2).

Assumption 2.4

\(\rho >M_b\).

Assumptions 2.1, 2.3 and 2.4 will be standing through the rest of the manuscript.

The optimal control problem that we address consists in maximizing the functional (2.8) over \(I\in \mathscr {I}\), i.e., for each \(x\in \mathbb {R}_+\), we consider the maximization problem

Remark 2.5

The fact that \(c_1>0\) means that there is a fixed cost whenever an investment occurs. This ensures that (P) is well posed as an impulse control problem, i.e. optimal controls can be found within the class of impulse controls. If \(c_1=0\) (only proportional intervention cost), the setting providing existence of optimal controls would be the more general singular control setting (see e.g. [63, Ch. 4]). For a comparison between impulse and singular control we refer to [18]; for the relevance of the introduction of the fixed cost we refer to [61], where the asymptotics for \(c_1\rightarrow 0\) is investigated. In Sect. 7.1.2, we comment on this issue through the numerical outputs.

We also notice that one might consider more general intervention costs \(C:\mathbb {R}_{++}\rightarrow \mathbb {R}_+\), increasing and convex (e.g. \(C(i)=\alpha i^2+\beta i+c_1\) with \(\alpha ,c_1>0\) and \(\beta \ge 0\)). We believe that, at least for a suitable subclass of such cost functions, the solution would display the same structure as the one we provide here in the affine case (i.e. \(C(i)=c_0i+c_1\)). On the other hand, we underline that at many points our proofs make use of the affine structure of the cost, and the generalization does not seem to be straightforward.

3 Preliminary results on the value function

In this section we introduce the value function associated with (P) and establish some basic properties of it. We define the value function v by

We notice that v is \(\overline{\mathbb {R}}_{+}\)-valued, as by Assumption 2.3

Note that \(\hat{v}\) is nondecreasing as \(f^{\prime }>0\) (Assumption 2.3) and by (2.7).

Proposition 3.1

v is nondecreasing.

Proof

Let \(0<x\le x^{\prime }\). Since \(f^{\prime }>0\) (see Assumption 2.3), from (2.7) we get \(J(x;I)\le J(x^{\prime };I)\) for every \(I\in \mathscr {I}\). The claim follows by taking the supremum over \(I\in \mathscr {I}\). \(\square \)

We denote by \(f^*\) the Fenchel–Legendre transform of f on \(\mathbb {R}_{++}\):

Nonnegativity and continuity of f (see Assumption 2.3) and the condition \(f^{\prime }(\infty )=0\) (again Assumption 2.3) guarantee that \(0\le f^*(\alpha )<\infty \) for all \(\alpha \in \mathbb {R}_{++}\).
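As an illustration of the finiteness claim, the transform can be computed for a power reward. The sketch below assumes the usual concave-conjugate form \(f^*(\alpha )=\sup _{x>0}\{f(x)-\alpha x\}\) and the hypothetical choice \(f(x)=x^\gamma \) with \(\gamma \in (0,1)\); the function names are illustrative only. The closed form follows from the first-order condition \(\gamma x^{\gamma -1}=\alpha \).

```python
import math


def fenchel_power(alpha, gamma):
    """Closed form of sup_{x>0} (x**gamma - alpha*x), 0 < gamma < 1, alpha > 0.

    Returns (value, maximizer); the maximizer solves gamma*x**(gamma-1) = alpha.
    """
    x_star = (gamma / alpha) ** (1.0 / (1.0 - gamma))
    return x_star ** gamma - alpha * x_star, x_star


def fenchel_grid(f, alpha, x_max=50.0, n=200_000):
    """Brute-force approximation of the supremum on a uniform grid of (0, x_max]."""
    step = x_max / n
    return max(f(k * step) - alpha * k * step for k in range(1, n + 1))


if __name__ == "__main__":
    gamma = 0.5
    for alpha in (0.5, 1.0, 2.0):
        exact, x_star = fenchel_power(alpha, gamma)
        approx = fenchel_grid(lambda x: x ** gamma, alpha)
        print(alpha, x_star, exact, approx)
```

In this example the transform is finite and nonnegative for every \(\alpha >0\) and decreases as \(\alpha \) grows, in line with the statement above.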

Proposition 3.2

For all \(\alpha \in \left( 0,c_0\rho \right] \) we have

and

Proof

The fact that \(0\le \hat{ v}\le v\) was already noticed in (3.2). We show the remaining inequality. Let \(x\in \mathbb {R}_{++}\) and \(I\in \mathscr {I}\). For \(R>0\), define the stopping time \( \hat{\tau }_R{:}{=}\inf \left\{ t\ge 0:X^{x,I}_t\ge R\right\} . \) Notice that, since \(b\in C^1(\mathbb {R}_{++};\mathbb {R})\) and \(b(0)=0\) by Assumption 2.1, the mean value theorem yields

where \(M_b\) is defined in (2.9). Set \(\tau _0{:}{=}0\) and let \(t\in \mathbb {R}_{++}\). Applying Itô’s formula to \(\varphi (s,X^{x,I}_s){:}{=}e^{-\rho s}X^{x,I}_s\), \(s\in [0,\hat{\tau }_R)\), taking expectations after considering that \(X_s^{x,I}\in (0,R)\) for \(s\in [0,\hat{\tau }_R)\), summing up over \(n\in \mathbb {N}\) and using (3.6) and 2.4, we get

By Fatou’s lemma, letting \(R\rightarrow \infty \) and observing that \(\hat{\tau }_{R} \rightarrow \infty \) \(\mathbb {P}\)-a.s., we get

By integrating the second term on the right-hand side of (3.7), we have using Fubini–Tonelli’s Theorem (as all the integrands involved are nonnegative)

Therefore, taking into account (3.7), (3.8) and (2.4), we have

Now let \(\alpha >0\). By definition of \(f^*\) and by (3.9), we can write

By arbitrariness of \(I\in \mathscr {I}\), if \(\alpha \in \left( 0,c_0\rho \right] \), the latter provides the last inequality in (3.4).

Take now \(\alpha \in (0,c_0\rho ]\). By (3.4) we have

By arbitrariness of \(\alpha \) we get (3.5). \(\square \)

Assumption 3.3

The following conditions hold true.

(i) \(\rho >\max \big \{B_0, C_0\big \}\), where \(B_0,C_0\) are the constants defined in Lemma A.3.

(ii) For each \(\beta >0\),

$$\begin{aligned} \ M(\beta ){:}{=}\mathbb {E} \left[ \int _0^\infty e^{-\rho t} \left( f^{\prime }\left( X_t^{\beta ,\emptyset }\right) \right) ^2 dt \right] <\infty . \end{aligned}$$(3.10)

(iii) For each \(\eta >0\), the function f is semiconvex on \([\eta , \infty )\). Precisely, there exists a nonincreasing function \(K_0:\mathbb {R}_{++}\rightarrow \mathbb {R}_{++}\) such that, for each \(\eta >0\),

$$\begin{aligned} f(\lambda x+(1-\lambda )y)- \lambda f(x)-(1-\lambda )f(y) \le K_0(\eta ) \lambda (1-\lambda ) (y-x)^2, \quad \forall \lambda \in [0,1], \ \forall x,y\in [\eta ,\infty ). \end{aligned}$$(3.11)

(iv) The function \(K_0\) in (iii) is such that, for each \(\beta >0\),

$$\begin{aligned} \hat{ M}(\beta ){:}{=}\mathbb {E} \left[ \int _0^\infty e^{-\rho t} \left( K_0\left( X_t^{\beta ,\emptyset }\right) \right) ^2 dt \right] <\infty . \end{aligned}$$(3.12)

Remark 3.4

Semiconvex functions are functions that can be written as the difference of a convex function and a quadratic one (see [26, Prop. 1.1.3] or [72, Ch. 4, Sec. 4.2]). Moreover, a function \(f\in C^2([\eta ,\infty );\mathbb {R})\) verifies (3.11) with \(K_0(\eta ):= -2\,\inf _{[\eta ,\infty )} f^{\prime \prime }\) (see again [26, Prop. 1.1.3]).
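The inequality (3.11) with the constant of this remark can be checked numerically on a concrete example. The sketch below assumes the hypothetical reward \(f(x)=x^\gamma \), \(\gamma \in (0,1)\), for which \(f^{\prime \prime }(x)=\gamma (\gamma -1)x^{\gamma -2}\) is negative and increasing, so the infimum over \([\eta ,\infty )\) is attained at \(\eta \). Function names, sampling ranges and parameter values are illustrative assumptions.

```python
import random


def k0_power(eta, gamma):
    """-2 * inf over [eta, inf) of f'' for f(x) = x**gamma, 0 < gamma < 1."""
    return 2.0 * gamma * (1.0 - gamma) * eta ** (gamma - 2.0)


def semiconvexity_gap(f, x, y, lam, K):
    """Right-hand side minus left-hand side of (3.11); nonnegative if it holds."""
    z = lam * x + (1.0 - lam) * y
    lhs = f(z) - lam * f(x) - (1.0 - lam) * f(y)
    rhs = K * lam * (1.0 - lam) * (y - x) ** 2
    return rhs - lhs


def worst_gap(gamma=0.5, eta=0.2, trials=10_000, seed=1):
    """Smallest gap found over random samples x, y in [eta, eta + 10]."""
    rng = random.Random(seed)
    K = k0_power(eta, gamma)

    def f(x):
        return x ** gamma

    worst = float("inf")
    for _ in range(trials):
        x = eta + 10.0 * rng.random()
        y = eta + 10.0 * rng.random()
        lam = rng.random()
        worst = min(worst, semiconvexity_gap(f, x, y, lam, K))
    return worst


if __name__ == "__main__":
    print("smallest sampled gap:", worst_gap())
```

A random search of this kind cannot prove the inequality; it only fails to find a counterexample, which is what a second-order Taylor bound predicts here.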

The following Proposition shows that power functions satisfy Assumption 3.3(ii)–(iv).

Proposition 3.5

Let \(f\in C^2(\mathbb {R}_{++};\mathbb {R})\) such that \(f^{\prime }>0\), \(f^{\prime \prime }<0\) and

for some \(C_0> 0\) and \(\gamma \in (0,1)\) and let \(\rho >L_b(1-\gamma )+\frac{1}{2}L_\sigma ^2(1-\gamma )(2-\gamma )\). Then f satisfies Assumption 3.3(ii)–(iv).

Proof

Let \(\beta \in \mathbb {R}_{++}\) and observe that, by Assumption 2.1, we have

With a localization procedure similar to the one in the proof of Proposition 3.2 (now keeping the process \(X^{\beta ,\emptyset }\) away from 0), we get from Itô’s formula

Then Assumption 3.3(ii) follows from (3.13) and Gronwall’s Lemma applied to the inequality above.

Moreover, note that, since \(\xi \mapsto -C_0(1+|\xi |^{\gamma -2})\) is negative and increasing, by Remark 3.4 and (3.13) we obtain that f verifies Assumption 3.3(iii) with

Finally, similarly as above, we have

Then Assumption 3.3(iv) follows from Gronwall’s Lemma applied to the inequality above and from (3.14). \(\square \)

Remark 3.6

Note that, if \(\rho \) satisfies Assumption 3.3(i), then it also satisfies the requirement of Proposition 3.5.

Proposition 3.7

Let Assumption 3.3 hold. Then v is semiconvex on \([\beta ,\infty )\) for each \(\beta >0\), i.e., for each \(\beta >0\) there exists \(K_1(\beta )>0\) such that

Proof

Fix \(\beta >0\). Let \(x,y\in [\beta , \infty )\) with \(x\le y\) and \(I\in \mathscr {I}\). For each \(\lambda \in [0,1]\) set \(z_\lambda {:}{=}\lambda x+(1-\lambda )y\) and \(\Sigma ^{\lambda ,x,y,I}{:}{=}\lambda X^{x,I}+(1-\lambda )X^{y,I}\). We write

Applying Hölder’s inequality, observing that \(X^{\beta ,\emptyset }\le X^{z_\lambda ,I}\wedge \Sigma ^{\lambda ,x,y,I}\), that \(f^{\prime }\) is decreasing, using Assumption 3.3(i) and using Lemma A.3(ii), we write

Moreover, by Assumption 3.3(i), (iii), (iv), again using Hölder’s inequality and applying Lemma A.3(i), we have

Now let \(\delta >0\) and let I be such that \(v(z_\lambda )\le J(z_\lambda ,I)+\delta \). The inequalities above provide

where \(K_1(\beta ){:}{=}\frac{ \hat{ M}(\beta )}{(\rho -C_0)^{1/2}}+\frac{ A_0^{1/2} \hat{ M}(\beta )}{(\rho -B_0)^{1/2}}\). We then obtain (3.15) by arbitrariness of \(\delta \). \(\square \)

Since the results which follow rely on the semiconvexity of v, Assumption 3.3 will be standing for the remainder of this section and in Sects. 4, 5 and 6.

Define the space

We recall that semiconvex functions on open sets are locally Lipschitz. So, by Propositions 3.2 and 3.7, we have \(v\in {\text {Lip}}_{{\small {\mathrm{loc}}},c_0}(\mathbb {R}_{++})\). The space \( {\text {Lip}}_{{\small {\mathrm{loc}}},c_0}(\mathbb {R}_{++})\) will be used in the next section.

4 Dynamic programming

The dynamic programming equation associated to our dynamic optimization problem is the quasi-variational inequality (see, e.g., [17])

where \(\mathscr {L}\) and \(\mathscr {M}\) are operators formally defined by

We note that \(\mathscr {L}\) is a differential operator, so it has a local nature, while \(\mathscr {M}\) is a functional operator having a nonlocal nature.
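The nonlocal operator can be approximated on a grid. The sketch below assumes the form \(\mathscr {M}u(x)=\sup _{i>0}\,[u(x+i)-c_0i-c_1]\), which is the one used in the proof of Proposition 4.2, and restricts the post-intervention point \(x+i\) to grid nodes. It exploits the affine cost: writing \(y=x+i\), the bracket equals \([u(y)-c_0y]+c_0x-c_1\), so a single backward sweep suffices. The function name and the test function are hypothetical.

```python
import math


def intervention_operator(u, grid, c0, c1):
    """Grid approximation of (M u)(x) = sup_{i>0} [u(x+i) - c0*i - c1].

    `grid` must be strictly increasing. Only targets x+i on the grid are
    considered, so the last node has no admissible target and gets -inf.
    """
    values = [u(x) for x in grid]
    n = len(grid)
    out = [-math.inf] * n
    best = -math.inf  # running max of u(y) - c0*y over nodes y to the right
    for j in range(n - 2, -1, -1):
        best = max(best, values[j + 1] - c0 * grid[j + 1])
        out[j] = best + c0 * grid[j] - c1
    return out


if __name__ == "__main__":
    grid = [0.01 + 0.001 * k for k in range(2000)]
    Mu = intervention_operator(math.sqrt, grid, c0=1.0, c1=0.1)
    # For u = sqrt and c0 = 1 the best target is y = 1/4 whenever x < 1/4.
    print(grid[30], Mu[30])
```

For \(u(x)=\sqrt{x}\) and \(c_0=1\), the supremum is attained at the target \(y=1/4\) when \(x<1/4\), and is approached as \(i\rightarrow 0^+\) when \(x\ge 1/4\); the grid values reproduce both regimes up to discretization error.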

4.1 Continuation and action region

Here we define and study the first properties of the continuation and action region in the state space \(\mathbb {R}_{++}\).

Lemma 4.1

\({\mathscr {M}}\) maps \({\text {Lip}}_{{\small {\mathrm{loc}}},c_0}(\mathbb {R}_{++})\) into itself.

Proof

Let \(u\in {\text {Lip}}_{{\small {\mathrm{loc}}},c_0}(\mathbb {R}_{++})\). Then there exist \(\overline{x} ,\varepsilon >0\) such that

By (4.3), for all \(i>0\), \(x\ge \overline{x}\), we have

Hence, by taking the supremum over \(i>0\),

which shows that \({\displaystyle \limsup _{x\rightarrow \infty }\frac{\mathscr {M}u(x)}{x}< c_0}\).

Now we show that \(\mathscr {M}u\) is Lipschitz continuous on \([M^{-1},M]\) for each \(M>0\). Using (4.3) one can show that

Set

The limit (4.4) provides that there exists \(R>0\) such that

Hence, we have

Now let \(\hat{L}\) be the Lipschitz constant of \(u|_{[M^{-1},M+R]}\). Then, if \(M^{-1}\le x<y\le M\), \(0<i\le R\), we can write

Now the claim follows by taking the supremum over \(i\in (0,R]\) on (4.6) and recalling (4.5). \(\square \)

By definition of v we have

hence

We define the continuation region \(\mathscr {C}\) and the action region \(\mathscr {A}\) by

They will represent, respectively, the region where it will be convenient to let the system evolve autonomously and the region where it will be convenient to undertake an action by exercising an impulse. By Proposition 3.2 and Lemma 4.1, both members of (4.8) are finite continuous functions. In particular, \(\mathscr {C}\) is open and \(\mathscr {A}\) is closed in \(\mathbb {R}_{++}\).

For \(x\in \mathscr {A}\), let us introduce the set

Clearly \(\Xi (x)\) is empty if \(x\in \mathscr {C}\). In principle \(\Xi (x)\) might be empty even if \(x\in \mathscr {A}\), but this is not the case as shown by the following.

Proposition 4.2

Let \(x\in \mathscr {A}\).

(i) \(\Xi (x)\) is not empty.

(ii) For all \(\xi \in \Xi (x)\), we have \(x+\xi \in \mathscr {C}.\)

Proof

(i) Let \(x\in {\mathscr {A}}\) and take a sequence \(\{i_n\}_{n\in \mathbb {N}{\setminus }\{0\}}\subset \mathbb {R}_{++}\) such that

Then, considering that \({\displaystyle {\limsup _{i\rightarrow \infty }\frac{v(x+i)}{x+i}=0}}\) by Proposition 3.2 and that \(\mathscr {M}v(x)\) is finite, we easily see, arguing by contradiction, that, in order to fulfill (4.11), the sequence \(\{i_n\}_{n\in \mathbb {N}}\) must be bounded. Hence, by considering a subsequence if necessary, we have \(i_n\rightarrow i^*\in \mathbb {R}_+\). Let us show that \(i^*>0\). Indeed, assume by contradiction that \(i^*=0\). By (4.11), taking into account that v is continuous and that \(v(x)={\mathscr {M}}v(x)\) as \(x\in \mathscr {A}\), we obtain \(v(x)={\mathscr {M}}v(x)\le v(x)-c_1\), a contradiction. Then we have shown that \(i^*>0\). From (4.11) we obtain, by continuity, \({\mathscr {M}}v(x) = v(x+i^*)-c_0i^*-c_1\) and the claim follows.

(ii) This part of the proof closely follows the proof of [43, Prop. 2]. We omit it for brevity.

\(\square \)

Note that, as a consequence of Proposition 4.2, we have \(\mathscr {C}\ne \emptyset \). Indeed, either \(\mathscr {A}=\emptyset \), thus \(\mathscr {C}=\mathbb {R}_{++}\); or \(\mathscr {A}\ne \emptyset \), thus \(\mathscr {C}\ne \emptyset \) by Proposition 4.2(ii). Formally, Proposition 4.2 says that, if the system is in a position \(x\in \mathscr {A}\): (i) an optimal control exists [part (i)]; (ii) this optimal control places the system in \(\mathscr {C}\) [part (ii)]. We will verify this fact rigorously afterwards.

4.2 Dynamic programming principle and viscosity solutions

The rigorous connection between v and (QVI) passes through the dynamic programming principle (DPP).

Proposition 4.3

For every \(x>0\) and every \(\mathbb {F}\)-stopping time \(\tau \in \overline{\mathbb {R}}_+\),

Proof

We refer to [22] (for the finite horizon case; our formulation is the usual one for time homogeneous infinite horizon problems). \(\square \)

Here we study (QVI) by means of viscosity solutions.

Definition 4.4

(Viscosity solution) Let \(u\in {\text {Lip}}_{{\small {\mathrm{loc}}},c_0}(\mathbb {R}_{++})\).

(i) u is a viscosity subsolution to (QVI) if for every \((x_0,\varphi )\in \mathbb {R}_{++}\times C^2(\mathbb {R}_{++})\) such that \(u-\varphi \) has a local maximum at \(x_0\) and \(u(x_0)=\varphi (x_0)\) we have

$$\begin{aligned} \min \big \{\mathscr {L}\varphi (x_0)-f(x_0),u(x_0)-\mathscr {M}u(x_0)\big \}\le 0; \end{aligned}$$

(ii) u is a viscosity supersolution to (QVI) if for every \((x_0,\varphi )\in \mathbb {R}_{++}\times C^2(\mathbb {R}_{++})\) such that \(u-\varphi \) has a local minimum at \(x_0\) and \(u(x_0)=\varphi (x_0)\) we have

$$\begin{aligned} \min \big \{\mathscr {L}\varphi (x_0)-f(x_0),u(x_0)-\mathscr {M}u(x_0)\big \}\ge 0; \end{aligned}$$

(iii) u is a viscosity solution to (QVI) if it is both a viscosity subsolution and a viscosity supersolution of (QVI).

Proposition 4.5

The value function v is a viscosity solution of (QVI).

Proof

Supersolution property Let \(x_0\in \mathbb {R}_{++}\) and \(\varphi \in C^2(\mathbb {R}_{++})\) be such that \(v-\varphi \) has a local minimum at \(x_0\) and \(v(x_0)=\varphi (x_0)\). In particular, \(v\ge \varphi \) on \((x_0-\delta ,x_0+\delta )\) for a suitable \(\delta \in (0,x_0)\). By (4.8) we only need to show that \(\mathscr {L}\varphi (x_0)-f(x_0)\ge 0\). To this aim, consider the stopping time \(\tau {:}{=}\inf \left\{ t\ge 0:|X^{x_0,\emptyset }_t-x_0|>\delta \right\} \) and note that \(\mathbb {P}\{\tau >0\}=1\) by continuity of trajectories. Then, from (DPP) we get

From this we derive

By applying Dynkin’s formula, dividing by \(\varepsilon \), letting \(\varepsilon \rightarrow 0^+\) and considering that \(X^{x_0,\emptyset }\) is right-continuous at 0 and \(\mathbb {P}\{\tau >\varepsilon \}\rightarrow 1\) as \(\varepsilon \rightarrow 0^+\), we obtain the desired inequality.

Subsolution property Let \(x_0\in \mathbb {R}_{++}\) and \(\varphi \in C^2(\mathbb {R}_{++})\) be such that \(v-\varphi \) has a local maximum at \(x_0\) and \(v(x_0)=\varphi (x_0)\). If \(v(x_0)={\mathscr {M}}v(x_0)\), then we are done. Otherwise, assume \(v(x_0)\ge \xi + {\mathscr {M}}v(x_0)\) for some \(\xi >0\). In this case, we need to show that \( \mathscr {L}\varphi (x_0)-f(x_0)\le 0\). Assume by contradiction that \( \mathscr {L}\varphi (x_0)-f(x_0)\ge \varepsilon >0\). By continuity of \({\mathscr {L}}\varphi -f\) and of \(v-{\mathscr {M}}v\) and in view of the fact that \(v-\varphi \) has a local maximum at \(x_0\) and \(\varphi (x_0)=v(x_0)\), there exists \(\delta \in (0,x_0/2)\) such that

Now define the stopping time \( \tau {:}{=}\inf \{t\ge 0:| X_t^{x_0,\emptyset }-x_0|>\delta \} \) and note that \(\mathbb {P}\{\tau >0\}=1\). In view of (4.14)(iii), undertaking an investment in the region \(B(x_0,2\delta ]\) is not optimal. Hence (DPP) can be rewritten by restricting the range of I to the set of controls such that \(\tau _1>\tau \), yielding the simple equality

Finally, we have, by (4.15), Dynkin’s formula and (4.14)(i)–(ii),

This provides a contradiction as \(\mathbb {P}\left\{ \tau >0\right\} =1\). \(\square \)

4.3 Regularity of the value function

Here we establish the regularity properties of the value function. Precisely, exploiting the semiconvexity provided by Proposition 3.7 and the viscosity property provided by Proposition 4.5, we show that it is of class \(C^1\) on \(\mathbb {R}_{++}\) and of class \(C^2\) on \(\mathscr {C}\).

Theorem 4.6

\(v\in C^1(\mathbb {R}_{++};\mathbb {R})\,\bigcap \, C^2(\mathscr {C};\mathbb {R})\).

Proof

Let \(x_0\in \mathbb {R}_{++}\). As v is semiconvex in a neighborhood of \(x_0\) (Proposition 3.7), in such a neighborhood it can be written as the difference of a convex function and a quadratic one (see Remark 3.4). Hence, the one-sided derivatives \(v^{\prime }_+(x_0), v^{\prime }_-(x_0)\) exist and \(v^{\prime }_-(x_0)\le v^{\prime }_+(x_0)\). To show that v is differentiable at \(x_0\), we need to show that the previous inequality is indeed an equality. Assume, by contradiction, that \(v^{\prime }_-(x_0)< v^{\prime }_+(x_0)\). Then we can construct a sequence of functions \(\{\varphi _n\}_{n\in \mathbb {N}} \subset C^2(\mathbb {R}_{++})\) such that, for every \(n\in \mathbb {N}\),

Then \({\mathscr {L}}\varphi _n(x_0)-f(x_0)\rightarrow -\infty \) as \(n\rightarrow \infty \), which is impossible as v is a viscosity supersolution to (QVI), by Proposition 4.5. Hence it must be \(v^{\prime }_-(x_0)=v^{\prime }_+(x_0)\). By arbitrariness of \(x_0\), this shows that v is differentiable on \(\mathbb {R}_{++}\). By semiconvexity we deduce that \(v\in C^1(\mathbb {R}_{++})\) (see [65, Theorem 25.5]).

The fact that \(v\in C^2({\mathscr {C}};\mathbb {R})\) follows from a standard localization argument: in each interval \((a,b)\subset {\mathscr {C}}\) the function v is a viscosity solution to the linear equation \({\mathscr {L}}u-f=0\) with boundary conditions \(u(a)=v(a)\) and \(u(b)=v(b)\). By uniform ellipticity of \({\mathscr {L}}\) over (a, b) (see, e.g., [36, Ch. 6]), this equation admits a unique solution in \(C^2((a,b);\mathbb {R})\), which must also be a viscosity solution. By uniqueness of viscosity solutions to the linear equation above with Dirichlet boundary conditions, we conclude that v coincides with the classical solution, hence \(v\in C^2((a,b);\mathbb {R})\). As \(\mathscr {C}\) is open, the claim follows by arbitrariness of (a, b). \(\square \)

Corollary 4.7

We have

(i) \(v^{\prime }(x+\zeta )=c_0\), for every \(x\in \mathscr {A}\) and every \(\zeta \in \Xi (x)\).

(ii) \(v^{\prime }(x) =c_0\), for every \(x\in \mathscr {A}\).

Proof

The proof is the same as in [43, Lemma 5.2] and we skip it for the sake of brevity. \(\square \)

Corollary 4.7(i) will be used in the next section to characterize the optimal target point, i.e. the point in the continuation region where it is optimal to place the system when it reaches the action region.

5 Explicit expression of the value function

In this section we characterize \(\mathscr {C},\mathscr {A}\) and v up to the decreasing solution of the homogeneous ODE \(\mathscr {L}u=0\) and to the solution of a nonlinear system of three algebraic equations.

Lemma 5.1

\(\mathscr {A}\) does not contain any interval of the form \([a,\infty )\), with \(a>0\). In particular \(\mathscr {C}\ne \emptyset \).

Proof

Assume, by contradiction, that there exists \(a>0\) such that \(\mathscr {A}\supset [a,\infty )\). Then, due to Corollary 4.7(ii), we have

which contradicts Proposition 3.2. On the other hand we should also have

So it must be

which is impossible as \(c_1>0\). \(\square \)

The following assumption ensures that the action region is an interval.

Assumption 5.2

\(b|_{\mathbb {R}_+}\) is concave.

Lemma 5.3

Let Assumption 5.2 hold. Then \(\mathscr {A}\) is an interval.

Proof

Since \(\mathscr {A}\) is closed, it is sufficient to show that there do not exist points \(x_0,x_1\in \mathbb {R}_{++}\), with \(x_0<x_1\), such that \(x_0,x_1 \in \mathscr {A}\) and \((x_0,x_1)\subset \mathscr {C}.\) Arguing by contradiction, we assume instead that such points exist. Given \(x\in (x_0,x_1)\), set \(j{:}{=}i-(x_1-x)\) for every \(i>0.\)

Then, recalling that \(x\in \mathscr {C}\), so \(v(x)>\mathscr {M}v(x)\), and that \(x_1\in \mathscr {A}\), hence \(v(x_1)=\mathscr {M}v(x_1)\), we can write

Therefore

Due to Proposition 4.2(i), we have for some \(y_1>x_1\), \(y_1\in \mathscr {C}\),

On the other hand, \(v\ge \mathscr {M}v\) implies

Combining (5.2) and (5.3) we get

Then (5.1) and (5.4) show that the function

is such that \(\varphi (x_1)=v(x_1)\) and \(v-\varphi \) has a local minimum at \(x_1\). Since v is a viscosity supersolution to (QVI), this implies

Now, by (5.1), there exists \(\xi \in (x_0,x_1)\) such that \(v^{\prime }(\xi )<c_0\). Let

The definition above is well posed as \(x_0\in \mathscr {A}\), so that by Corollary 4.7(ii) we have \(v^{\prime }(x_0)=c_0\). Moreover, by continuity of \(v^{\prime }\) and by definition of \(y_2\) we have

Therefore, considering that v is twice differentiable in \((x_0,\xi )\) as this interval is contained in \(\mathscr {C}\), from (5.6) and by continuity of \(v^{\prime }\) we see that

The equality \(\mathscr {L}v=f\) holds in classical sense at \(y_2\), hence (5.7) entails

Combining (5.5) with (5.8), we get

On the other hand, considering (5.1) with \(x=y_2\) and then combining it with (5.9), we get

Now, as \(x_1\in \mathscr {A}\), by (5.2) we have

The function v is twice differentiable at \(y_1\) since \(y_1\in \mathscr {C}\), so (5.11) yields

Therefore the equality \(\mathscr {L}v(y_1)=f(y_1)\) yields the inequality

Combining (5.12) with (5.5), we get

On the other hand, from (5.11) we get

So, from (5.13) and (5.14) we get

To conclude, note that (5.10) and (5.15) are not compatible with the strict concavity of

which follows from Assumptions 2.3 and 5.2. \(\square \)

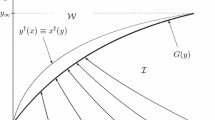

Under Assumption 5.2, Lemma 5.1 and Lemma 5.3 provide

Case (i) above corresponds to the case in which the continuation region covers the whole state space and it is never convenient to undertake an action. In case (ii) the action region is not empty and it is convenient to undertake an action when the system reaches this region.

Consider the homogeneous ODE

By [19, Th. 16.69] its general solution is of the form

where \(\psi , \varphi \) are, respectively, the unique (up to a multiplicative constant) strictly increasing and strictly decreasing solutions to (5.17) and, as 0 and \(\infty \) are not accessible boundaries for the reference diffusion Z, these fundamental solutions satisfy the following boundary conditions

Other properties of these functions can be found in [19, Sec. 16.11]. On the other hand, the function \(\hat{v}\) defined in (3.2) is the unique solution in \(\mathbb {R}_{++}\), within the class of functions having at most linear growth, to the nonhomogeneous ODE \(\mathscr {L}u=f\) (see [19, Th. 16.72]: actually in the quoted result the function f is required to be bounded, but the proof works as well in our context within the class of functions having at most linear growth). It follows that every classical solution to

where \(\mathscr {I}\) is an open interval, must have the form \(u=A\psi +B \varphi +\hat{v}\). Therefore, as by Proposition 4.5 and Theorem 4.6 the value function v solves in classical sense (5.19), according to the two possibilities of (5.16), in case (i) there must exist real numbers A, B such that

in case (ii) there must exist real numbers \(A_r,B_r,A_s,B_s\) such that

Proposition 5.4

Let Assumption 5.2 hold. According to the cases (i) and (ii) of (5.16) we have, respectively:

- If case (i) holds, then \(v\equiv \hat{v}\), hence \(A=B=0\) in (5.20);

- If case (ii) holds, then \({\displaystyle \lim _{x\rightarrow \infty }} (v(x)-\hat{v}(x))=0\) and \(A_s=B_r=0\), \(A_r,B_s\ge 0\) in (5.21).

Proof

Assume that case (i) holds. As \(\mathscr {L}v=f\) on \(\mathscr {C}=\mathbb {R}_{++}\), by a standard localization procedure we get (see, e.g., the proof of Proposition 3.2)

We pass to the limit \(t\rightarrow \infty \) on the first addend of the right hand side by using the monotone convergence theorem. As for the second addend, we use (3.4) and (3.7) with \(I=\emptyset \) to write

Then

By arbitrariness of \(\alpha \) we conclude that

Hence

By definition of \(\hat{v}\) and by the inequality \(v\ge \hat{ v}\), this proves the claim.

Now assume that case (ii) holds. For each \(x>s\) set \(\tau _{x}{:}{=}\inf \left\{ t\ge 0:X^{x,\emptyset }_t\le s\right\} \). As \(\infty \) is a natural boundary for \(Z^{0,x}=X^{x,\emptyset }\), by (A.2) we have

If \(0<x<x^{\prime }\), by (2.7) with \(I=\emptyset \) we get

so, we also have \(\tau _x\le \tau _{x^{\prime }}\) \(\mathbb {P}\)-a.s. If \(\{x_n\}_{n\in \mathbb {N}}\) is a sequence diverging to \(\infty \), we then have

As \(\mathscr {L}v=f\) on \((s,\infty )\), as for (5.22), we get

Therefore, splitting over \(\{\tau _{x_n}< t\}\) and \(\{\tau _{x_n}\ge t\}\) the second addend on the right hand side,

for all \(t\ge 0\). Now we pass to the limit \(t\rightarrow \infty \) by using the same arguments used to obtain (5.23), and we get

Then, the definition of \(\hat{v}\) provides

Using (5.25) and recalling that \(v \ge \hat{v}\), we conclude \( \displaystyle \lim _{n\rightarrow \infty } (v(x_n)-\hat{v}(x_n))=0\). Since the sequence \(\{x_n\}_{n\in \mathbb {N}}\) was arbitrary, we conclude

From (5.18) and (5.27) we have \(A_s=0\) and \(B_s\ge 0\). Finally, since \(v\ge \hat{v}\) and v is finite in (0, r), from (5.18) we have \(A_r\ge 0\) and \(B_r=0\). \(\square \)

Set

We are going to introduce an assumption, requiring that \(c_1\) is not too large, that guarantees, at once, that the action region is not empty and that the continuation and action regions have the structure

Under this structure, it turns out that it is convenient to undertake an action when the system lies below a given threshold and to let it evolve autonomously when the system lies above this threshold. Henceforth, we will call this threshold the trigger boundary.

Assumption 5.5

\(c_1<{ \hat{ v}^*}(c_0)\).

The following result provides a way to check explicitly the validity of Assumption 5.5.

Proposition 5.6

Let \(f(x)\ge K x^\gamma \) for some \(K>0\), \(\gamma \in (0,1)\) and set \(K^{\prime }:= \frac{\gamma K}{\rho +\gamma L_b+\frac{1}{2}\gamma (1-\gamma )L_\sigma ^2}\). Then

Proof

Let \(x\in \mathbb {R}_{++}\). With a localization procedure similar to the one of the proof of Proposition 3.2, we get from Itô’s formula

Then we get

From that and from the assumption on f, we obtain

Hence,

\(\square \)

Proposition 5.7

Let Assumptions 5.2 and 5.5 hold. Then there exists \(s>0\) such that \(\mathscr {C}=(s,\infty )\) and, consequently, \(\mathscr {A}=(0,s]\).

Proof

First, notice that, as \(\hat{ v}\) satisfies (3.4), it follows that \({ \hat{ v}^*}\) is finite on \(\mathbb {R}_{++}\). Considering that \(v\ge \hat{ v}\) and that \(\hat{ v}\) is nondecreasing, we have

Now assume by contradiction that \((0,r)\subset \mathscr {C}\), for some \(r>0\). By Proposition 5.4 we have

for some \(A_r\ge 0\). Then, as \(\psi (0^+)=0\), we must have \(v(0^+)=\hat{v}(0^+)=0\). The latter contradicts (5.28), hence we conclude. \(\square \)

Under Assumptions 5.2 and 5.5, the structure of \(\mathscr {C}\) and \(\mathscr {A}\) established by Proposition 5.7, together with Proposition 5.4, provides the following structure for v: for some \(B= B_s\ge 0\)

Lemma 5.8

Let Assumption 5.2 hold. Let \(a\ge 0\) and let \(u\in C^2((a,\infty );\mathbb {R})\) satisfy \(\mathscr {L}u=f\) on \((a,\infty )\). If \(x_0\in (a,\infty )\) is a local minimum point for \(u^{\prime }\), then \(u^{\prime }(x_0)> 0\) and there is no local maximum point for \(u^{\prime }\) in \((x_0,\infty )\).

Proof

As \(b,\sigma , {f}\in C^1(\mathbb {R}_{++};\mathbb {R})\), from

we obtain \(u^{\prime \prime }\in C^1((a,\infty );\mathbb {R})\), i.e. \(u\in C^3((a,\infty );\mathbb {R})\). We differentiate (5.30) getting

Let \(x_0\in (a,\infty )\) be a local minimum point for \(u^{\prime }\). Then \(u^{\prime \prime }(x_0)=0\) and \(u^{\prime \prime \prime }(x_0)\ge 0\) so, by (5.31), we have

Note that from (5.32), using Assumptions 2.3 and 2.4, we obtain \(u^{\prime }(x_0)>0\). Now, arguing by contradiction, assume that \(x_1\in (x_0,\infty )\) is a local maximum point for \(u^{\prime }\). Then \(u^{\prime \prime }(x_1)=0\) and \(u^{\prime \prime \prime }(x_1)\le 0\), so, by (5.31), we have

Without loss of generality, we can assume that

Combining (5.32) and (5.33) and taking account that \(f^{\prime }\) is strictly decreasing, we get

Now, by Assumption 5.2 we have \(b^{\prime }(x_0)\ge b^{\prime }(x_1)\). So, the fact that \(u^{\prime }(x_0)> 0\) and (5.35) yield

By Assumption 2.4, we have \(\rho -b^{\prime }(x_1)>0\). Hence, from (5.36) we obtain \(u^{\prime }(x_1)< u^{\prime }(x_0)\), contradicting (5.34). \(\square \)

Recall that a function \(\varphi :\mathscr {O}\rightarrow \mathbb {R}\), with \(\mathscr {O}\) an open interval, is said to be quasiconcave if

Strictly quasiconcave functions can be characterized as functions that are either strictly increasing, or strictly decreasing, or strictly increasing on the left of a point \(x^*\in \mathscr {O}\) and strictly decreasing on the right of \(x^*\).

Lemma 5.9

Let Assumption 5.2 hold. Let \(a\ge 0\), let \(u\in C^2((a,\infty );\mathbb {R})\) satisfy \(\mathscr {L}u=f\) on \((a,\infty )\) and assume that \(\displaystyle \liminf _{x\rightarrow \infty }u^{\prime }(x)\le 0\). Then \(u^{\prime }\) is strictly quasiconcave.

Proof

By virtue of [9, Proposition 3.24], it is sufficient to show that \(u^{\prime }\) does not admit any local minimum. Argue by contradiction and assume that \(x_0\in (a,\infty )\) is a local minimum point for \(u^{\prime }\). The proof of Lemma 5.8 shows then that \(u^{\prime }(x_0)>0\). Hence, since \(\displaystyle \liminf _{x\rightarrow \infty }u^{\prime }(x)\le 0\), there must exist a local maximum point \(x_1\in (x_0,\infty )\). This contradicts Lemma 5.8 and we conclude. \(\square \)

Proposition 5.10

Let Assumptions 5.2 and 5.5 hold.

(i) There exists a unique \(S\in \mathscr {C}=(s,\infty )\) such that \(v^{\prime }(S)=c_0\).

(ii) There exists (a unique) \(x^*\in (s,S)\) such that \(v^{\prime }\) is strictly increasing in \((s,x^*]\) and strictly decreasing in \([x^*,\infty )\).

(iii) \(\displaystyle \lim _{x\rightarrow \infty } v^{\prime }(x)= 0\).

Proof

(i) Corollary 4.7(i) and Proposition 4.2(i) yield the existence of \(S\in \mathscr {C}=(s,\infty )\) such that \(v^{\prime }(S)=c_0\). Regarding uniqueness, observe first that v satisfies the requirements of Lemma 5.9 (plugging v in place of u) with \(a=s\) and where

holds by (3.4). Then the fact that \(v^{\prime }(s)=c_0\) by Corollary 4.7(ii) yields the uniqueness.

(ii) By (5.29) we have \(v^{\prime }(s)=c_0\). By (i) above we have \(v^{\prime }(S)=c_0\) and \(v^{\prime }(x)\ne c_0\) for each \(x\in (s,S)\). Then the claim follows by Lemma 5.9.

(iii) This follows immediately by monotonicity of \(v^{\prime }\) on \([x^*,+\infty )\), (5.37) and Proposition 3.1, which provides \(v^{\prime }\ge 0\). \(\square \)

Theorem 5.11

Let Assumptions 5.2 and 5.5 hold. The value function has the form

and the triple (B, s, S) is the unique solution in \(\mathbb {R}_+\times \mathbb {R}_{++}^2\) to the system

Proof

Consider (5.29). The expression of v over \((s,\infty )\) in (5.38) and (5.29) is the same. As for the expression of v over (0, s], we note that, by definition of \(\Xi (s)\), Proposition 4.2, Corollary 4.7 and Proposition 5.10(i), we have

Since \(s\in \mathscr {A}\), we have \(v(s)=[\mathscr {M}v](s)\); so, from (5.40) we get

from which we get the expression of v over (0, s] in (5.38). Then the three equations of (5.39) follow, respectively, by imposing the continuity of v at s, the smooth-fit at s (as \(v\in C^1(\mathbb {R}_{++};\mathbb {R})\)) and the condition of Proposition 5.10(i) defining S.

To show that (5.39) has a unique solution in \(\mathbb {R}_+\times \mathbb {R}_{++}^2\), we consider the function

For each \(\hat{B}\ge 0\), \(\mathscr {L}h(\hat{B},\cdot )=0\) in \(\mathbb {R}_{++}\) and \(\displaystyle \liminf _{x\rightarrow \infty }\,h_x(\hat{B},x)\le 0\) by (3.4) and (5.18). By Lemma 5.9, \(h_x(\hat{B},\cdot )\) is strictly quasiconcave; hence, there exist at most two solutions \(\hat{s},\hat{S}\) to \(h_x(\hat{B},\cdot )=c_0\) in \(\mathbb {R}_{++}\). If such solutions exist, we have \(h_x(\hat{B},\cdot )-c_0> 0\) on \((\hat{s}\wedge \hat{S},\hat{s}\vee \hat{S})\). Therefore, if \((\hat{B},\hat{s},\hat{S})\in {\mathbb {R}_+\times \mathbb {R}_{++}^2}\) solves (5.39), then (5.39)(i) yields

This forces \(\hat{s}=\hat{s}\wedge \hat{S}\), \(\hat{S}=\hat{s}\vee \hat{S}\), \(\hat{s}\ne \hat{S}\). By the argument above we see that, if \((B_1,s_1,S_1)\) and \((B_2,s_2,S_2)\) are two different solutions to (5.39) in \(\mathbb {R}_+\times \mathbb {R}_{++}^2\), we need to have \(s_1<S_1\), \(s_2<S_2\) and \(B_1\ne B_2\).

Now assume, by contradiction, that \((B_1,s_1,S_1)\) and \((B_2,s_2,S_2)\) are two different solutions of (5.39) in \(\mathbb {R}_+\times \mathbb {R}_{++}^2\). Without loss of generality, we can assume \(B_1< B_2\). Recalling that \(\varphi \) is strictly decreasing, we have

The latter inequality, Lemma 5.9 and (5.39)(ii)–(iii) provide

We can then write, using (5.41)–(5.42) and (5.39)(i),

which is a contradiction. \(\square \)

6 Optimal control

In this section, through Theorem 6.1, we describe the structure of an optimal control for our problem through a recursive rule. In the economic literature (see the stream of papers on stochastic impulse control at the beginning of the paragraph on the related literature in the Introduction and [16]) this rule is known as the (S, s)-rule. Informally, this rule, rigorously stated in Theorem 6.1 below, can be described as follows.

- The point s works as an optimal trigger boundary: when the state variable is at level s or below such level (i.e., it is within the action region \(\mathscr {A}\)), the controller acts.

- The point S works as an optimal target boundary: when the controller acts, she/he does so in such a way as to place the state variable at the level \(S\in \mathscr {C}\).

- When the state variable lies in the region \(\mathscr {C}\), the controller lets it evolve autonomously without undertaking any action until it exits from this region.

This rule is made rigorous by the following construction. Let \(x\in \mathbb {R}_{++}\) and consider the control \(I^*=\{(\tau _n,i_n)\}_{n\ge 1}\) defined as follows:

and then, recursively for \(n\ge 1\),

Note that, for \(\mathbb {P}\)-a.e. \(\omega \in \{\tau _n<\infty \}\), by continuity of \(\mathbb {R}_+\rightarrow \mathbb {R},\ t\mapsto Z^{\tau _n,S}_{\tau _n+t}(\omega )\) and since \(S>s\), we have \(\tau _{n+1}(\omega )>\tau _n(\omega )\).
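The recursive rule above can be illustrated by a short simulation. The following Python sketch is only indicative: it assumes the geometric Brownian motion dynamics and the reward \(f(x)=x^\gamma /\gamma \) of Sect. 7, it works on a discrete time grid (so the trigger is detected only at grid points and the state may slightly undershoot s), and the function name `simulate_sS_rule` as well as the numerical values of s and S used in the usage note are hypothetical, not outputs of the paper.

```python
import math
import random


def simulate_sS_rule(x0, s, S, nu, sigma, rho, gamma, c0, c1,
                     horizon=50.0, dt=0.01, seed=0):
    """Simulate one discretized path controlled by an (S, s)-type rule.

    Returns the discounted payoff along the path and the list of
    interventions as (time, size) pairs. Assumes GBM dynamics
    dX = nu*X dt + sigma*X dW between interventions (illustrative only).
    """
    rng = random.Random(seed)
    x, t, payoff = x0, 0.0, 0.0
    interventions = []
    for _ in range(int(round(horizon / dt))):
        if x <= s:
            # Trigger reached: jump to the target S, paying the
            # proportional cost c0*size plus the fixed cost c1.
            size = S - x
            payoff -= math.exp(-rho * t) * (c0 * size + c1)
            interventions.append((t, size))
            x = S
        # Discounted running reward f(x) = x**gamma / gamma over [t, t+dt).
        payoff += math.exp(-rho * t) * (x ** gamma / gamma) * dt
        # Exact GBM transition over one time step.
        z = rng.gauss(0.0, 1.0)
        x *= math.exp((nu - 0.5 * sigma ** 2) * dt + sigma * math.sqrt(dt) * z)
        t += dt
    return payoff, interventions
```

For instance, `simulate_sS_rule(0.5, 1.0, 5.0, -0.07, 0.25, 0.08, 0.5, 1.0, 10.0)` starts inside the (hypothetical) action region \((0,1]\), so the first intervention occurs at time 0 with size \(S-x=4.5\); every later intervention has size at least \(S-s\), the excess being a discretization effect. Averaging the payoff over many seeds gives a rough Monte Carlo estimate of \(J(x,I^*)\) on the truncated horizon.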

Theorem 6.1

(Optimal control) Let Assumptions 5.2 and 5.5 hold.

Let \(x\in \mathbb {R}_{++}\) and consider the control \(I^*=\{(\tau _n,i_n)\}_{n\ge 1}\) defined above. Then \(I^*\in \mathscr {I}\) and it is optimal for the problem starting at x, i.e., \(J(x,I^*)=v(x)\).

Proof

Admissibility As noticed above, \(\tau _n< \tau _{n+1}\) \(\mathbb {P}\)-a.s. on \(\{\tau _n<\infty \}\). Moreover, for each \(n\ge 1\), \(i_n\) is constant; so, as a random variable, it is trivially \(\mathscr {F}_{\tau _n}\)-measurable.

Now, for fixed \(\varepsilon >0\) such that \(S-\varepsilon S^2>s\), define the auxiliary sequence \(\{\tau ^\varepsilon _n\}_{n\ge 1}\) of stopping times by

and

We notice that \(\tau ^\varepsilon _n\) is finite and \(\tau ^\varepsilon _{n+1}> \tau ^\varepsilon _n\) \(\mathbb {P}\)-a.s. Moreover, the random variables \(\{ \tau ^\varepsilon _{n+1}- \tau ^\varepsilon _n\}_{n\ge 1}\) are identically distributed and \( \tau ^\varepsilon _{n+1}- \tau ^\varepsilon _n\) is independent of \(\mathscr {F}_{ \tau ^\varepsilon _n}\). Finally, it can be verified by induction that

from which we obtain

Define \(Y^\varepsilon {:}{=}\inf \left\{ t\ge 0 :Z^{0,S}_t -\varepsilon \left( Z^{0,S}_t +t \right) ^2 \le s\right\} \). Then \(\tau ^\varepsilon _{n+1}-\tau ^\varepsilon _n\sim Y^\varepsilon \) for all \(n\ge 1\). Observe that \(Y^\varepsilon \) increases as \(\varepsilon \) tends to \(0^+\). Let \({\displaystyle {Y{:}{=}\lim _{\varepsilon \rightarrow 0^+}Y^\varepsilon }}\). Since \(S-\varepsilon S^2>s\) entails \(Y^\varepsilon >0\), we have in particular \(Y>0\). We can then write, using (6.1) and Fatou’s Lemma in the first inequality below,

Summing over \(n\ge 1\) and taking into account that \(\mathbb {E}[e^{-\rho Y}]<1\), from (6.2) we get

Both conditions (2.3) and (2.4) follow from (6.3), so the control \(I^*\) is admissible.

Optimality Set \(X^*{:}{=}X^{x,I^*}\). We observe that, by (3.4), (3.7) and (6.3), we have

Let \(T>0\) and set \(\tau _0{:}{=}0^-\). Observe that by definition \(X^*\in [s,+\infty )\) and recall that \(\mathscr {L} v=f\) on \(\mathscr {C}=(s,\infty )\). For all \(n\in \mathbb {N}\) we apply Itô’s formula to \(v(X^*)\) in the interval \([\tau _n\wedge T,\tau _{n+1}\wedge T)\). Note that \(v^{\prime }\) is bounded in \([s,\infty )\) by Proposition 5.10, so

Hence, taking the expectation in the Itô formula and taking into account that \(\mathscr {L}v(X^*)=f(X^*)\), we get

Now fix for the moment \(\omega \in \Omega \), \(n\ge 1\) and assume that \(\tau _{n}(\omega )\le T\). By definition of \(i_n(\omega )\) and considering that \(X^{*}_{\tau _n^-}(\omega )\in \mathscr {A}\) we have (cf. also Corollary 4.7, Proposition 4.2(i) and the definition of S in Proposition 5.10(i))

Hence, considering that \(\mathscr {M} v(X^{*}_{\tau _n^-}(\omega ))=v(X^{*}_{\tau _n^-}(\omega ))\), we have

It follows that, for all \(n\ge 1\),

Using (6.5) and (6.7), we can then write, for \(N\ge 1\),

By passing to the limit \(N\rightarrow \infty \) and using (2.3), we obtain

We take now the \({\displaystyle \liminf _{T\rightarrow \infty }}\), using (6.4) on the first addend of the left hand side, monotone convergence on the third addend of the left hand side and Fatou’s lemma on the right hand side. We obtain

which shows that \(I^*\) is optimal (Fig. 1). \(\square \)

7 Numerical illustration in the linear case

In the previous sections we have characterized the solution of the dynamic optimization problem through the unique solution of the nonlinear algebraic system (5.39) in the triple (B, s, S). In this section we specialize the study to the case in which the reference process Z follows a geometric Brownian motion, i.e. \( b(x){:}{=}\nu x\), \( \sigma (x){:}{=}\sigma x\), with \(\nu \in \mathbb {R}\), \(\sigma >0\), and \(f(x)=\frac{x^\gamma }{\gamma }\), with \(0<\gamma <1\), assuming

In this way, Assumptions 2.1, 2.3, 2.4, 3.3(ii)–(iv), 5.2 are satisfied.Footnote 7 In the present case we have

where m is the negative root of the characteristic equation

associated with \(\mathscr {L}u=0\), i.e.

and

The problem with no fixed cost, i.e. when \(c_1=0\), is investigated in the singular control setting (the right one to get existence of optimal controls, see Remark 2.5) in [63, Sec. 4.5]. In this case, the value function v and the optimal reflection boundary s are characterized in [63, Th. 4.5.7] through an algebraic system too. Such system can be solved providing, in our notation,

We make Assumption 5.5; the latter in the present case reads as

Moreover, Assumption 3.3(i) would read as

However, as we show below, in the linear-homogeneous case under consideration here, we do not need to make this assumption: we can exploit the linear dependence of the controlled process on the initial datum and the homogeneity of f to show the result of semiconvexity stated, for the general case, in Proposition 3.7. Consequently, the other results of the paper hold under no further assumption. Indeed, observing that the terms \(\{i_n\}_{n\ge 1}\) enter in the dynamics of \(X^{x,I}\) in additive form, we have

that we can use to prove the following result.

Proposition 7.1

In the above framework we have, for every \(\lambda \in [0,1]\) and every \(x,y\ge \varepsilon >0\)

Proof

Let \(0< \xi \le \xi ^{\prime }\). Then, for suitable \(\eta ,\eta ^{\prime }\in [\xi ,\xi ^{\prime }]\) we have, by Lagrange’s Theorem,

Let now \(0<\varepsilon \le x\le y\), \(\lambda \in [0,1]\) and set \(z{:}{=}\lambda x+(1-\lambda )y\). Let \(\delta >0\) and let \(I_\delta \in {\mathscr {I}}\) be a \(\delta \)-optimal control for v(z). Then, using (7.7), the fact that \(X^{x,I}\ge X^{x,\emptyset }\), and recalling (7.6), we get

the claim. \(\square \)

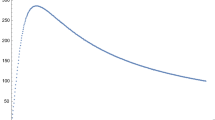

7.1 Numerical illustration

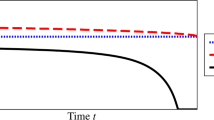

We perform a numerical analysis of the solution solving the nonlinear system (5.39). In Fig. 2, we provide the picture of the value function and its derivative when the parameters are set as follows: \(\rho = 0.08, \ \nu = - 0.07, \ \sigma = 0.25, \ c_0 = 1, \ c_1 = 10, \ \gamma = 0.5.\) Solving (5.39) with these entries and with \(\varphi (x)=x^m\), where m is given by (7.2), yields

In the rest of this section we discuss numerically the solution, illustrating how changes in parameters affect the value function and the trigger and target boundaries s, S, which describe the optimal control.Footnote 8
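As an indication of how such a computation can be organized, the following Python sketch solves the system by nested bisection using only the standard library. It rests on several assumptions that should be checked against the formulas above: that \(\mathscr {L}u=\rho u-\nu x u^{\prime }-\frac{1}{2}\sigma ^2x^2u^{\prime \prime }\), so that m is the negative root of \(\frac{1}{2}\sigma ^2m^2+(\nu -\frac{1}{2}\sigma ^2)m-\rho =0\); that \(\hat{v}(x)=x^\gamma /(\gamma \theta )\) with \(\theta =\rho -\gamma \nu +\frac{1}{2}\gamma (1-\gamma )\sigma ^2>0\); that \(\hat{v}^*(c_0)=\sup _{x>0}[\hat{v}(x)-c_0x]\); and that (5.39) consists of continuity at s, smooth fit at s and \(v^{\prime }(S)=c_0\), written for \(h(B,x)=B\varphi (x)+\hat{v}(x)\). All function names are hypothetical.

```python
import math


def char_root(rho, nu, sigma):
    """Negative root m of 0.5*sigma^2*m^2 + (nu - 0.5*sigma^2)*m - rho = 0."""
    half = 0.5 * sigma ** 2
    disc = (half - nu) ** 2 + 4.0 * half * rho
    return ((half - nu) - math.sqrt(disc)) / (2.0 * half)


def theta(rho, nu, sigma, gamma):
    """Constant in the assumed closed form v_hat(x) = x**gamma / (gamma*theta)."""
    return rho - gamma * nu + 0.5 * gamma * (1.0 - gamma) * sigma ** 2


def h(B, x, m, th, gamma):
    """Candidate value B*x**m + v_hat(x) on the continuation region."""
    return B * x ** m + x ** gamma / (gamma * th)


def hx(B, x, m, th, gamma):
    """Derivative of h with respect to x."""
    return B * m * x ** (m - 1.0) + x ** (gamma - 1.0) / th


def bisect(g, lo, hi, iters=200):
    """Plain bisection; g is assumed to change sign between lo and hi."""
    sign_lo = g(lo) > 0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if (g(mid) > 0) == sign_lo:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def solve_system(rho, nu, sigma, gamma, c0, c1):
    """Return (B, s, S) solving the assumed form of the three equations."""
    m, th = char_root(rho, nu, sigma), theta(rho, nu, sigma, gamma)
    if th <= 0:
        raise ValueError("v_hat is not finite for these parameters")
    x0 = (c0 * th) ** (1.0 / (gamma - 1.0))       # maximizer of v_hat(x) - c0*x
    if c1 >= c0 * x0 * (1.0 - gamma) / gamma:     # compare c1 with that supremum
        raise ValueError("c1 too large: Assumption 5.5 fails")

    def roots(B):
        # Location of the maximum of x -> hx(B, x); the two solutions of
        # hx = c0 (if any) lie on either side of it.
        x_peak = ((1.0 - gamma) / (th * B * m * (m - 1.0))) ** (1.0 / (m - gamma))
        if hx(B, x_peak, m, th, gamma) <= c0:
            return None
        lo = hi = x_peak
        while hx(B, lo, m, th, gamma) > c0:
            lo *= 0.5
        while hx(B, hi, m, th, gamma) > c0:
            hi *= 2.0
        g = lambda x: hx(B, x, m, th, gamma) - c0
        return bisect(g, lo, x_peak), bisect(g, x_peak, hi)

    def gap(B):
        # Residual of the continuity equation at s for the given B.
        r = roots(B)
        if r is None:
            return -c1
        s, S = r
        return h(B, S, m, th, gamma) - h(B, s, m, th, gamma) - c0 * (S - s) - c1

    B_lo = B_hi = 1.0
    while gap(B_lo) <= 0:
        B_lo *= 0.5
    while gap(B_hi) > 0:
        B_hi *= 2.0
    B = bisect(gap, B_lo, B_hi)
    s, S = roots(B)
    return B, s, S
```

A call such as `solve_system(0.08, -0.07, 0.25, 0.5, 1.0, 10.0)` uses the parameter values listed above. Bisection is chosen here over a Newton-type method only to keep the sketch dependency-free; it relies on the strict quasiconcavity of \(h_x(B,\cdot )\) (Lemma 5.9), which ensures that, for each B, the equation \(h_x(B,\cdot )=c_0\) has at most two roots.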

7.1.1 Impact of volatility

In Table 1 we report the relevant values of the solution for different values of the volatility \(\sigma \). The other parameters are set as follows: \(\rho = 0.08, \ \nu = -0.07,\ \gamma = 0.5,\ c_0 = 1,\ c_1 = 10\).

Figure 3, drawn imposing the same values of parameters, represents the trigger level s, the target level S, and their difference \(S-s\) as functions of the volatility \(\sigma \). The figure and the table show that, when uncertainty increases, the action region \(\mathscr {A}\) shrinks and the investment size \(S-s\) shrinks. The first effect is well known in the economic literature on irreversible investment without fixed costs as the value of waiting to invest: an increase of uncertainty leads to postponing the investment (see [54]). We can see that, in our fixed cost context, also the size of the optimal investment is negatively affected by an increase of uncertainty.

7.1.2 Impact of fixed cost

In Table 2 we report the relevant values of the solution for different values of the fixed cost \(c_1\), when the other parameters are set as follows: \(\sigma =0.1,\ \rho = 0.08,\ \nu = - 0.07,\ \gamma = 0.5,\ c_0 = 1.\) In the row corresponding to \(c_1=0\), we report the outputs of the corresponding singular control problem, computed according to the values of s and B expressed by (7.4).Footnote 9 It can be observed that the convergence as \(c_1\rightarrow 0^+\) is rather slow; this is consistent with the theoretical result of [61], which would state, in our case, \(\frac{\partial v (\cdot ;c_1)}{\partial c_1}(0^+)=-\infty \).

Figure 4, drawn imposing the same values of parameters, shows that, as \(c_1\) increases, the action region \(\mathscr {A}\) shrinks and the investment size \(S-s\) expands. Both these effects are expected: the first one is the counterpart of the value of waiting to invest, now with respect to the fixed cost of investment, rather than with respect to uncertainty; the second one expresses the fact that an increase of the fixed cost leads to investing less often, and hence to a larger investment size when the investment is undertaken.

Notes

The fact that only positive intervention, i.e. \(i_n>0\), is allowed is expressed in the economic literature of Real Options by saying that the investment is irreversible.

Other than in [63, Ch. 4, Sec. 5], irreversible and reversible investment problems with no fixed investment costs are largely treated in the mathematical economic literature, both over finite and infinite horizon. We mention, among others [1, 2, 4, 5, 10, 11, 23, 24, 30, 32, 33, 37, 39,40,41,42, 52, 55, 59, 64, 70].

The smooth-fit principle has also been established, when the diffusion is assumed to be transient, by techniques based on excessive functions (see [66]).

Actually, we should consider \(b(x)=\nu x\) if \(x>0\) and \(b(x)=0\) otherwise and similarly for \(\sigma \), in order to fit Assumption 2.1. But this does not matter because our controlled process lies in \(\mathbb {R}_{++}\).

The simulations are done for negative values of \(\nu \), thinking of it as a depreciation factor. We omit, for the sake of brevity, to report the simulations that we have performed for positive values of \(\nu \), as the outputs show the same qualitative behaviour as in the case of negative \(\nu \).

In this case the optimal control consists in a reflection policy at a boundary; in other terms the interval [s, S] degenerates into a singleton \(\{s\}=\{S\}\).

References

Abel, A.B., Eberly, J.C.: Optimal investment with costly reversibility. Rev. Econ. Stud. 63, 581–593 (1996)

Aïd, R., Federico, S., Pham, H., Villeneuve, B.: Explicit investment rules with time-to-build and uncertainty. J. Econ. Dyn. Control 51, 240–256 (2015)

Alvarez, L.H.: A class of solvable impulse control problems. Appl. Math. Optim. 49, 265–295 (2004)

Alvarez, L.H.: Irreversible capital accumulation under interest rate uncertainty. Math. Methods Oper. Res. 72(2), 249–271 (2009)

Alvarez, L.H.: Optimal capital accumulation under price uncertainty and costly reversibility. J. Econ. Dyn. Control 35(10), 1769–1788 (2011)

Alvarez, L.H., Lempa, J.: On the optimal stochastic impulse control of linear diffusions. SIAM J. Control Optim. 47(2), 703–732 (2008)

Anderson, R.F.: Discounted replacement, maintenance, and repair problems in reliability. Math. Oper. Res. 19(4), 909–945 (1994)

Arrow, K.J., Harris, T., Marschak, J.: Optimal inventory policy. Econometrica 19(3), 250–272 (1951)

Avriel, M., Diewert, W.E., Schaible, S., Zang, I.: Generalized Concavity. Classics in Applied Mathematics, vol. 63. SIAM, Philadelphia (2010)

Baldursson, F.M., Karatzas, I.: Irreversible investment and industry equilibrium. Finance Stoch. 1(1), 69–89 (1997)

Bank, P.: Optimal control under a dynamic fuel constraint. SIAM J. Control Optim. 44(4), 1529–1541 (2005)

Bar-Ilan, A., Sulem, A.: Explicit solution of inventory problems with delivery lags. Math. Oper. Res. 20(3), 709–720 (1995)

Bar-Ilan, A., Sulem, A., Zanello, A.: Time-to-build and capacity choice. J. Econ. Dyn. Control 26, 69–98 (2002)

Bayraktar, E., Emmerling, T., Menaldi, J.L.: On the impulse control of jump diffusions. SIAM J. Control Optim. 51(3), 2612–2637 (2013)

Belak, C., Christensen, S., Seifried, F.T.: A general verification result for stochastic impulse control problems. SIAM J. Control Optim. 55(2), 627–649 (2017)

Bensoussan, A., Chevalier-Roignant, B.: Sequential capacity expansion options. Oper. Res. 67(1), 33–57 (2019)

Bensoussan, A., Lions, J.L.: Impulse Control and Quasi-Variational Inequalities. Gauthier-Villars, Paris (1984)

Bensoussan, A., Liu, J., Yuan, J.: Singular control and impulse control: a common approach. Discrete Contin. Dyn. Syst. (Ser. B) 13(1), 27–57 (2010)

Breiman, L.: Probability. Classics in Applied Mathematics. SIAM, Philadelphia (1992)

Cadenillas, A., Zapatero, F.: Classical and impulse stochastic control of the exchange rate using interest rates and reserves. Math. Finance 10(2), 141–156 (2000)

Cadenillas, A., Lakner, P., Pinedo, M.: Optimal control of a mean-reverting inventory. Oper. Res. 58(6), 1697–1710 (2010)

Chen, Y.-S.A., Guo, X.: Impulse control of multidimensional jump diffusions in finite time horizon. SIAM J. Control Optim. 51(3), 2638–2663 (2013)

Chiarolla, M.B., Ferrari, G.: Identifying the free boundary of a stochastic, irreversible investment problem via the Bank–El Karoui representation theorem. SIAM J. Control Optim. 52(2), 1048–1070 (2014)

Chiarolla, M.B., Haussmann, U.G.: On a stochastic irreversible investment problem. SIAM J. Control Optim. 48(2), 438–462 (2009)

Christensen, S.: On the solution of general impulse control problems using superharmonic functions. Stoch. Process. Appl. 124(1), 709–729 (2014)

Christensen, S., Salminen, P.: Impulse control and expected suprema. Adv. Appl. Probab. 49(1), 238–257 (2017)

Constantinides, G.M., Richard, S.F.: Existence of optimal simple policies for discounted-cost inventory and cash management in continuous time. Oper. Res. 26(4), 620–636 (1978)

Dai, J.G., Yao, D.: Brownian inventory models with convex holding cost, part 1: average-optimal controls. Stoch. Syst. 3(2), 442–499 (2013)

Dai, J.G., Yao, D.: Brownian inventory models with convex holding cost, part 2: discount-optimal controls. Stoch. Syst. 3(2), 500–573 (2013)

Davis, M.H., Dempster, M.A.H., Sethi, S.P., Vermes, D.: Optimal capacity expansion under uncertainty. Adv. Appl. Probab. 19, 156–176 (1987)

Davis, M., Guo, X., Wu, G.: Impulse control of multidimensional jump diffusions. SIAM J. Control Optim. 48, 5276–5293 (2010)

De Angelis, T., Ferrari, G.: A stochastic partially reversible investment problem on a finite time-horizon: free-boundary analysis. Stoch. Process. Appl. 124(3), 4080–4119 (2014)

De Angelis, T., Federico, S., Ferrari, G.: Optimal boundary surface for irreversible investment with stochastic costs. Math. Oper. Res. 42(4), 1135–1161 (2017)

Eastham, J.F., Hastings, K.J.: Optimal impulse control of portfolios. Math. Oper. Res. 13(4), 588–605 (1988)

Egami, M.: A direct solution method for stochastic impulse control problems of one-dimensional diffusions. SIAM J. Control Optim. 47(3), 1191–1218 (2008)

Evans, L.: Partial Differential Equations. Graduate Studies in Mathematics, vol. 19, Second edn. AMS, Providence (2010)

Federico, S., Pham, H.: Characterization of optimal boundaries in reversible investment problems. SIAM J. Control Optim. 52(4), 2180–2223 (2014)

Ferrari, G., Koch, T.: On a strategic model of pollution control. Ann. Oper. Res. (2018). https://doi.org/10.1007/s10479-018-2935-7

Ferrari, G.: On an integral equation for the free-boundary of stochastic, irreversible investment problems. Ann. Appl. Probab. 25(1), 150–176 (2015)

Ferrari, G., Salminen, P.: Irreversible investment under Lévy uncertainty: an equation for the optimal boundary. Adv. Appl. Probab. 48(1), 298–314 (2016)

Gu, J.W., Steffensen, M., Zheng, H.: Optimal dividend strategies of two collaborating businesses in the diffusion approximation model. Math. Oper. Res. 43, 377–398 (2018)

Guo, X., Pham, H.: Optimal partially reversible investments with entry decision and general production function. Stoch. Process. Appl. 115(5), 705–736 (2005)

Guo, X., Wu, G.: Smooth fit principle for impulse control of multidimensional diffusion processes. SIAM J. Control Optim. 48(2), 594–617 (2009)

Harrison, J.M., Sellke, T.M., Taylor, A.J.: Impulse control of Brownian motion. Math. Oper. Res. 8(3), 454–466 (1983)

He, S., Yao, D., Zhang, H.: Optimal ordering policy for inventory systems with quantity-dependent setup costs. Math. Oper. Res. 42(4), 979–1006 (2017)

Helmes, K.L., Stockbridge, R.H., Zhu, C.: A measure approach for continuous inventory models: discounted cost criterion. SIAM J. Control Optim. 53(4), 2100–2140 (2015)

Hodder, J.E., Triantis, A.: Valuing flexibility as a complex option. J. Finance 45, 549–565 (1990)

Jack, A., Zervos, M.: Impulse control of one-dimensional Itô diffusions with an expected and a pathwise ergodic criterion. Appl. Math. Optim. 54, 71–93 (2006)

Jeanblanc-Picqué, M.: Impulse control method and exchange rate. Math. Finance 3(2), 161–177 (1993)

Karatzas, I., Shreve, S.E.: Brownian Motion and Stochastic Calculus, 2nd edn. Springer, New York (1991)

Korn, R.: Portfolio optimization with strictly positive transaction costs and impulse control. Finance Stoch. 2, 85–114 (1998)

Manne, A.S.: Capacity expansion and probabilistic growth. Econometrica 29(4), 632–649 (1961)

Mauer, D.C., Triantis, A.: Interactions of corporate financing and investment decisions: a dynamic framework. J. Finance 49, 1253–1277 (1994)

McDonald, R., Siegel, D.: The value of waiting to invest. Q. J. Econ. 101(4), 707–727 (1986)

Merhi, A., Zervos, M.: A model for reversible investment capacity expansion. SIAM J. Control Optim. 46(3), 839–876 (2007)

Mitchell, D., Feng, H., Muthuraman, K.: Impulse control of interest rates. Oper. Res. 62(3), 602–615 (2014)

Morton, J., Oksendal, B.: Optimal portfolio management with fixed costs of transactions. Math. Finance 5, 337–356 (1995)

Muthuraman, K., Seshadri, S., Wu, Q.: Inventory management with stochastic lead times. Math. Oper. Res. 40(2), 302–327 (2014)

Øksendal, A.: Irreversible investment problems. Finance Stoch. 4(2), 223–250 (2000)

Øksendal, B., Sulem, A.: Applied Stochastic Control of Jump-Diffusions. Springer, Berlin (2007)

Øksendal, B., Ubøe, J., Zhang, T.: Non-robustness of some impulse control problems with respect to intervention costs. Stoch. Anal. Appl. 20(5), 999–1026 (2002)

Ormeci, M., Dai, J.G., Vande Vate, J.: Impulse control of Brownian motion: the constrained average cost case. Oper. Res. 56(3), 618–629 (2008)

Pham, H.: Continuous-Time Stochastic Control and Optimization with Financial Applications. Stochastic Modelling and Applied Probability, vol. 61. Springer, Berlin (2009)

Riedel, F., Su, X.: On irreversible investment. Finance Stoch. 15(4), 607–633 (2011)

Rockafellar, R.T.: Convex Analysis. Princeton University Press, Princeton (1970)

Salminen, P., Ta, B.Q.: Differentiability of excessive functions of one-dimensional diffusions and the principle of smooth-fit. Adv. Math. Finance 104, 181–199 (2015)

Scarf, H.: The optimality of \((S,s)\) policies in the dynamic inventory problem. In: Karlin, S., Suppes, P. (eds.) Mathematical methods of social sciences 1959: proceedings of the first Stanford symposium, pp. 196–202. Stanford University Press (1960)

Sulem, A.: A solvable one-dimensional model of a diffusion inventory system. Math. Oper. Res. 11, 125–133 (1986)

Sulem, A.: Explicit solution of a two-dimensional deterministic inventory problem. Math. Oper. Res. 11(1), 134–146 (1986)

Wang, H.: Capacity expansion with exponential jump diffusion process. Stoch. Stoch. Rep. 75(4), 259–274 (2003)

Yamazaki, K.: Inventory control for spectrally positive Lévy demand processes. Math. Oper. Res. 42(1), 302–327 (2016)

Yong, J., Zhou, X.Y.: Stochastic Controls: Hamiltonian Systems and HJB equations. Springer, Berlin (1999)

Acknowledgements

The authors are sincerely grateful to the Associate Editor and to two anonymous Referees for their careful reading and very valuable comments, which improved the final version of the paper. They also thank Giorgio Ferrari for his very valuable comments and suggestions. Mauro Rosestolato thanks the Department of Political Economics and Statistics of the University of Siena for the kind hospitality in March 2017 and the grant Young Investigator Training Program financed by Associazione di Fondazioni e Casse di Risparmio Spa supporting this visit. He also thanks the ERC 321111 Rofirm for the financial support.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

A Appendix

Proposition A.1

Under Assumption 2.1 the boundaries 0 and \(+\infty \) are natural in the sense of Feller’s classification for the diffusion \(Z^{0,x}\).

Proof

Clearly \(+\infty \) is not accessible, in the sense that \(Z^{0,x}\) does not explode in finite time. It remains to show that 0 is not accessible, that is

and that both 0 and \(+\infty \) are not entrance, that is

To this end, we introduce the speed measure m of the diffusion \(Z^{0,x}\) transformed to natural scale (see [19, Prop. 16.81, Th. 16.83]). Up to a multiplicative constant, we have

Assumption 2.1 implies that for some \(C_0,C_1>0\) we have \(|b(\xi )|\le C_0 \xi \) and \(\sigma ^2(\xi )\le C_1\xi ^2\) for every \(\xi \in \mathbb {R}_+\). According to [19, Prop. 16.43] we compute \(\int _0^1 ym(dy) \). We have

Set \(F(y):=\int _1^y\frac{-2C_0\xi }{\sigma ^2(\xi )}d\xi \). We have

This shows, by [19, Prop. 16.43], that (A.1) holds. The fact that 0 is not entrance, i.e. that the first limit in (A.2) holds, is then a consequence of [19, Prop. 16.45(a)]. Finally, let us show that \(+\infty \) is not entrance either, i.e. that the second limit in (A.2) holds. In this case, according to [19, Prop. 16.45(b)], we consider \(\int _1^{+\infty } ym(dy)\) and see, with the same computations as above, that it is equal to \(+\infty \). By the aforementioned result we conclude that \(+\infty \) is not entrance. \(\square \)
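As a hedged illustration, which is not part of the argument above, consider the linear specification \(b(\xi )=\mu \xi \) and \(\sigma (\xi )=\sigma _0\xi \), where the constants \(\mu \in \mathbb {R}\), \(\sigma _0>0\) and the driving Brownian motion W are names assumed here only for the example. The growth bounds used in the proof then hold with \(C_0=|\mu |\) and \(C_1=\sigma _0^2\), and the uncontrolled diffusion is explicit:

$$\begin{aligned} Z^{0,x}_t = x\exp \left\{ \left( \mu -\tfrac{1}{2}\sigma _0^2\right) t+\sigma _0 W_t\right\} , \quad t\ge 0. \end{aligned}$$

Since the paths of W are almost surely finite on every bounded time interval, \(Z^{0,x}_t\in (0,+\infty )\) for every \(t\ge 0\) whenever \(x>0\). In this special case neither 0 nor \(+\infty \) is reached in finite time, which agrees with the non-accessibility part of Proposition A.1.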

Remark A.2

The property (A.1) can be generalized to the case of random initial data. Let \(\tau \) be a (possibly infinite) \(\mathbb {F}\)-stopping time and let \(\xi \) be an \(\mathscr {F}_\tau \)-measurable random variable. Clearly, we have the equality in law \( Z^{\tau ,\xi }_{t+\tau }= \left( Z^{0,x}_t \right) _{|_{x=\xi }}\). By (A.1), it then follows that

Lemma A.3

Let \(I\in \mathscr {I}\), \(x,y\in \mathbb {R}_{++}\).

(i) We have

$$\begin{aligned} \mathbb {E} \left[ |X^{x,I}_t-X^{y,I}_t|^4 \right] \le |x-y|^4 e^{C_0 t}\quad \forall t \ge 0, \end{aligned}$$ (A.4)

where \(C_0 := 4L_b+6L_\sigma ^2.\)

(ii) For each \(\lambda \in [0,1]\) and \(x,y\in \mathbb {R}_{++}\), define \(z_\lambda := \lambda x+(1-\lambda )y\). Then

$$\begin{aligned} \mathbb {E} \left[ \left| X^{z_\lambda ,I}_t - \lambda X^{x,I}_t - (1- \lambda ) X^{y,I}_t\right| ^2 \right] \le A_0\lambda ^2(1-\lambda )^2 |x-y|^{4} e^{B_0t} \quad \forall \lambda \in [0,1], \ \forall t\ge 0, \end{aligned}$$ (A.5)

where \(A_0>0\) and \(B_0 := 2L_b+2L_\sigma ^2+\tilde{L}_b\).

Proof

(i) We apply Itô’s formula to \(|X^{x,I}-X^{y,I}|^4\) and then take the expectation, after a standard localization procedure with stopping times that makes the stochastic integral term a martingale and all the other expectations well defined and finite (see e.g. the proof of Proposition 3.2). We get, also using Assumption 2.1,

The claim follows by Gronwall’s inequality.

(ii) Define \(\Sigma ^{\lambda ,x,y,I} := \lambda X^{x,I} + (1- \lambda ) X^{y,I}\). We apply Itô’s formula to the process \((X^{z_\lambda ,I}-\Sigma ^{\lambda ,x,y,I})^2\) and then take the expectation, after a standard localization procedure with stopping times that makes the stochastic integral term a martingale and all the other expectations well defined and finite (see e.g. the proof of Proposition 3.2). We obtain, also using Assumption 2.1,

By the same computations that lead to [72, p. 188, formulae (4.22) and (4.23)], we have

where \(\tilde{L}_b, \tilde{L}_\sigma \) are as in Assumption 2.1. Then, by using (A.7) and (A.8) in (A.6), we get

Using the inequality

and (A.4) in (A.9), we obtain

where \(C_0\) is the constant of (A.4). We conclude by Gronwall’s inequality. \(\square \)
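As a sanity check on (A.4), the linear case can be worked out by hand. The sketch below assumes \(b(\xi )=\mu \xi \) and \(\sigma (\xi )=\sigma _0\xi \), so that \(L_b=|\mu |\) and \(L_\sigma =\sigma _0\). The constants \(\mu \in \mathbb {R}\), \(\sigma _0>0\) and the Brownian motion W are names introduced here only for illustration. It also assumes that the interventions in I enter the state additively, so that they cancel in the difference of two trajectories driven by the same I. Under these assumptions \(D_t:=X^{x,I}_t-X^{y,I}_t\) solves the same linear equation with \(D_0=x-y\), hence

$$\begin{aligned} D_t&=(x-y)\exp \left\{ \left( \mu -\tfrac{1}{2}\sigma _0^2\right) t+\sigma _0W_t\right\} , \\ \mathbb {E}\left[ |D_t|^4\right]&=|x-y|^4e^{4\left( \mu -\frac{1}{2}\sigma _0^2\right) t}\,\mathbb {E}\left[ e^{4\sigma _0W_t}\right] =|x-y|^4e^{(4\mu +6\sigma _0^2)t}\le |x-y|^4e^{(4|\mu |+6\sigma _0^2)t}, \end{aligned}$$

where we used \(\mathbb {E}[e^{4\sigma _0W_t}]=e^{8\sigma _0^2t}\). The exponent on the right is exactly \(C_0t\) with \(C_0=4L_b+6L_\sigma ^2\), and the inequality is an equality when \(\mu \ge 0\), so in this example the constant in (A.4) cannot be lowered. Under the same assumptions \(X^{x,I}_t\) is affine in x for fixed I, so the left-hand side of (A.5) vanishes identically.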

Cite this article

Federico, S., Rosestolato, M. & Tacconi, E. Irreversible investment with fixed adjustment costs: a stochastic impulse control approach. Math Finan Econ 13, 579–616 (2019). https://doi.org/10.1007/s11579-019-00238-w

DOI: https://doi.org/10.1007/s11579-019-00238-w

Keywords

- Impulse stochastic optimal control

- Quasi-variational inequality

- Viscosity solution

- Irreversible investment

- Fixed cost

AMS Subject Classification

- 93E20 (Optimal stochastic control)

- 35Q93 (PDEs in connection with control and optimization)

- 35D40 (Viscosity solution)

- 35B65 (Smoothness and regularity of solutions)