Abstract

This paper addresses delay-interval-dependent stability criteria for switched Hopfield neural networks of neutral type with successive time-varying delay components. A novel Lyapunov–Krasovskii (L–K) functional with triple integral terms is constructed, which involves more information on the state vectors of the neural networks and on the upper bounds of the successive time-varying delays. By using Jensen’s inequality, the Wirtinger double integral inequality, some zero equations, the reciprocal convex combination technique and Finsler’s lemma, a novel delay-interval-dependent stability criterion is derived in terms of linear matrix inequalities, which can be solved efficiently with standard numerical software. Moreover, the lower bounds of the successive leakage and discrete time-varying delays are not restricted to be zero. In addition, the obtained condition shows potential advantages over existing ones, since no useful term is ignored when estimating the upper bound of the derivative of the L–K functional. Several numerical examples show that the considered networks are asymptotically stable under the proposed criterion. Finally, a four-tank benchmark real-world problem is presented to demonstrate the effectiveness and usefulness of the proposed approach.


Introduction

Over the past decades, switched neural networks (SNNs) have become a popular research topic, and various delayed neural networks (NNs) such as Hopfield NNs, Cohen–Grossberg NNs, cellular NNs and bidirectional associative memory NNs have been extensively investigated. Switched systems are an important class of hybrid dynamical systems composed of a family of continuous-time or discrete-time subsystems and a rule that orchestrates the switching among them. They provide a natural and convenient unified framework for the mathematical modeling of many physical phenomena and practical applications, such as autonomous transmission systems, computer disk drives, room temperature control, power electronics and chaos generators, to name but a few. In recent years, considerable effort has been devoted to the analysis and design of switched systems, and many valuable results on stability analysis and stabilization for linear or nonlinear hybrid and switched systems have been established (see Liberzon and Morse 1999; Song et al. 2008; Zong et al. 2008; Hetel et al. 2008 and references therein).

Within the last few decades, many researchers have focused on the dynamic analysis of Hopfield NNs, first introduced by Hopfield (1982, 1984), which have drawn considerable attention owing to their many applications in areas such as pattern recognition, associative memory and combinatorial optimization. Since stability is one of the most important properties of NNs, a great number of results concerning asymptotic or exponential stability have been proposed (see e.g., Xu 1995; Cao and Ho 2005; Cao et al. 2007, 2008, 2016; Manivannan et al. 2016; Aouiti et al. 2016; Yang et al. 2006; Zhou et al. 2009 and the references therein). It is well known that time delays are often encountered in NNs; they may degrade system performance and cause oscillation, leading to instability. Therefore, it is of great importance to study the asymptotic or exponential stability of NNs with time delay. Meanwhile, neutral time-delay systems are frequently encountered in many practical situations, such as chemical reactors, water pipes, population ecology, heat exchangers and robots in contact with rigid environments (Zhang and Yu 2010; Niculescu 2001). A neutral time-delay system contains delays both in its state and in the derivative of its state. Therefore, many dynamical NNs are described by neutral functional differential equations, which include neutral delay differential equations as a special case. These NNs are called neutral-type NNs or NNs of neutral type.

The successive time-varying delay model has a strong application background in remote control and networked control systems. For example, consider a state-feedback networked control system in which the physical plant, controller, sensor and actuator are located at different places and signals are transmitted from one device to another. Two network-induced delays then arise, one from sensor to controller and the other from controller to actuator, so the closed-loop system contains two additive time delays in the state. In network transmission settings, the two delays are usually time varying with dissimilar properties. Therefore, it is of substantial importance to study the stability of systems with two additive time-varying delay components. Motivated by this discussion, in this paper we are concerned with the stability analysis of switched Hopfield neural networks (SHNNs) of neutral type with successive time-varying delay components. In this connection, a new form of NNs with two additive time-varying delays has been considered in Zhao et al. (2008), Gao et al. (2008) and Shao and Han (2011). In Lam et al. (2007) and Rakkiyappan et al. (2015a, b), it was mentioned that in a networked control system (NCS), if the signal transmitted from one point to another passes through a few network segments, then successive delays with different properties are induced owing to variable transmission conditions. That is, if the physical plant and the state-feedback controller are given by \(\dot{z}(t)={\mathcal{A}}z(t) + {\mathcal{B}}u(t)\) and \(u_c(t)=Kx_c(t)\), then it is appropriate to consider time delays in the dynamical model as \(\dot{z}(t)={\mathcal{A}}z(t) + {\mathcal{B}} Kz(t-h_1(t)-h_2(t))\), where \(h_1(t)\) is the delay induced from sensor to controller and \(h_2(t)\) is the delay induced from controller to actuator.
The stability analysis of such systems was earlier carried out by lumping the successive delays into a single delay, that is, \(h_1(t) + h_2(t)=h(t)\), to develop a sufficient stability condition. The problem of stability analysis of NNs with successive time-varying delays in the state has therefore received more and more attention in recent years (see Rakkiyappan et al. 2015a, b; Senthilraj et al. 2016; Samidurai and Manivannan 2015; Dharani et al. 2015 and the references therein).
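The closed-loop model with two additive delays can be explored numerically. The following minimal sketch simulates a scalar instance \(\dot{z}(t)=a z(t)+bk\,z(t-h_1(t)-h_2(t))\) with forward Euler. The coefficients `a` and `bk`, the two delay functions, the constant pre-history and the step size are illustrative assumptions, not values taken from this paper.

```python
import math


def simulate_additive_delay(a, bk, h1, h2, z0=1.0, t_end=20.0, dt=0.001):
    """Forward-Euler simulation of dz/dt = a*z(t) + bk*z(t - h1(t) - h2(t)).

    h1 and h2 are callables returning the two (non-negative) delay components.
    The pre-history is assumed constant: z(s) = z0 for s <= 0.
    """
    steps = int(round(t_end / dt))
    z = [z0]
    for k in range(steps):
        t = k * dt
        # Total delay h1(t) + h2(t), expressed in whole samples.
        lag = int(round((h1(t) + h2(t)) / dt))
        z_delayed = z[k - lag] if k >= lag else z0
        z.append(z[k] + dt * (a * z[k] + bk * z_delayed))
    return z


# Hypothetical sensor-to-controller and controller-to-actuator delays.
sensor_delay = lambda t: 0.1 + 0.05 * math.sin(t)
actuator_delay = lambda t: 0.2 + 0.1 * math.cos(2.0 * t)

trajectory = simulate_additive_delay(-2.0, 1.0, sensor_delay, actuator_delay)
print("final |z| =", abs(trajectory[-1]))
```

In this assumed instance the delayed gain is smaller in magnitude than the instantaneous decay rate, so the state decays for any bounded delays. The criteria developed in this paper target the harder case in which the admissible delay interval matters.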

Recently, the stability of systems with leakage delays has become a hot topic and has been studied by many researchers. Research on the leakage delay (or forgetting delay), which appears in the negative feedback term of a system, can be traced back to 1992. In Kosko (1992), it was observed that the leakage delay has a great impact on the dynamical behavior of the system. Since then, many researchers have paid attention to systems with leakage delay, and some interesting results have been derived. For example, Gopalsamy (1992) considered a population model with leakage delay and found that the leakage delay can destabilize a system. In Gopalsamy (2007), bidirectional associative memory (BAM) neural networks with constant leakage delays were investigated based on L–K functionals and properties of M-matrices. Inspired by Gopalsamy (2007), the stability of delayed NNs with leakage effects has recently been studied in Samidurai and Manivannan (2015), Sakthivel et al. (2015), Li et al. (2011, 2015), Lakshmanan et al. (2013), Li and Yang (2015), and Balasubramaniam et al. (2012).

Rakkiyappan et al. (2015a, b) established the exponential synchronization of complex dynamical networks with control packet loss and additive time-varying delays. Senthilraj et al. (2016) studied the stability of uncertain neutral-type BAM neural networks with two additive time-varying delay components. Robust passivity analysis for delayed stochastic impulsive NNs with leakage and additive time-varying delays was carried out by Samidurai and Manivannan (2015). Rakkiyappan et al. (2015a, b) also analyzed synchronization for singular complex dynamical networks with Markovian jumping parameters and two additive time-varying delay components. New stability criteria for switched Hopfield NNs of neutral type with additive time-varying discrete delay components and finitely distributed delay were studied by Dharani et al. (2015). Lakshmanan et al. (2013) studied the stability of BAM neural networks with leakage time delay and probabilistic time-varying delays. Li and Yang (2015) showed that the leakage delay has a significant impact on the dynamical behavior of genetic regulatory networks (GRNs) and tends to destabilize such systems. Li et al. (2015) considered the stability problem for a class of impulsive NNs that simultaneously includes parameter uncertainties, stochastic disturbances and two additive time-varying delays in the leakage term. Balasubramaniam et al. (2012) dealt with the delay-dependent global asymptotic stability of uncertain switched Hopfield NNs with discrete interval and distributed time-varying delays and a time delay in the leakage term.

Sakthivel et al. (2015) considered state estimation for a class of BAM neural networks with leakage term. Fuzzy cellular NNs with time-varying delays in the leakage terms were studied by Yang (2014) without assuming boundedness of the activation functions. Zhang et al. (2010) studied a new class of NNs referred to as switched neutral-type NNs with time-varying delays, which combines switched systems with a class of neutral-type NNs. In Zong et al. (2010), an average dwell time method and a new L–K functional were used to ensure global exponential stability and to obtain a decay estimate for a class of switched Hopfield NNs of neutral type. Li and Cao (2013) proposed switched exponential state estimation and robust stability for interval neural networks with average dwell time. Li et al. (2014) were concerned with a class of nonlinear uncertain switched networks with discrete time-varying delays, based on the strictly complete property of the matrices system and the delay-decomposing approach. Ahn (2010) was the first to propose an \(H_\infty\) weight learning law that not only guarantees the asymptotic stability of switched Hopfield NNs but also reduces the effect of external disturbance to an \(H_\infty\) norm constraint.

Motivated by the above, a new delay-interval-dependent stability criterion for SHNNs of neutral type with successive time-varying delay components is proposed in this paper. By fully using the available information about the time delays and activation functions, a novel L–K functional is constructed. Our main goal is to establish delay-interval-dependent stability criteria under which the concerned NNs are asymptotically stable. Using the lower and upper bound information of the time-varying delays and an L–K functional with double and triple integral terms, we apply the Wirtinger double integral inequality (WDII), introduce some zero equations, and use the reciprocal convex combination (RCC) technique and Finsler’s lemma. New stability criteria for a class of SHNNs of neutral type are thus obtained in terms of linear matrix inequalities (LMIs), which ensure asymptotic stability. Finally, four numerical examples are given to demonstrate the effectiveness and applicability of our theoretical results.

The main contribution of this paper lies in the following aspects:

- A novel L–K functional is introduced which includes more information about the successive time-varying delays and the slope of the neuron activation functions. Such an L–K functional has not yet been considered in the previous literature on the stability of SHNNs of neutral type with successive time-varying delay components.

- Different from Dharani et al. (2015), Balasubramaniam et al. (2012), Zong et al. (2010), Li and Cao (2013), Li et al. (2014), Cao et al. (2013) and Ahn (2010), several numerical examples, including a real-world simulation, are presented to illustrate the validity of the main results. This implies that the results of the present paper are essentially new.

- Inspired by the works in Kwon et al. (2014a, b), some zero equations which include more quadratic and integral terms are introduced. These terms are merged with the time derivative of the L–K functional and combined with the RCC approach, which in turn can enlarge the feasibility region of the stability criterion.

- Moreover, the WDII lemma is used to bound the time derivative of the triple integral L–K functionals, which gives a tighter bound for such functionals. This technique has not previously been used in the literature on the stability of SHNNs of neutral type.

Notations Throughout this paper, the superscripts T and \(-1\) denote the transpose and the inverse of a matrix, respectively. \({\mathbb{R}}^n\) denotes the n-dimensional Euclidean space and \({\mathbb{R}}^{n\times m}\) is the set of all \(n \times m\) real matrices. For symmetric matrices P and Q, \(P > Q\) (respectively, \(P \ge Q\)) means that the matrix \(P - Q\) is positive definite (respectively, positive semi-definite). \(I_n\), \(0_n\) and \(0_{m,n}\) stand for the \(n \times n\) identity matrix and the \(n \times n\) and \(n \times m\) zero matrices, respectively. A symmetric term in a symmetric matrix is denoted by \(*\), and \(X^\bot\) denotes a basis for the null space of X. Matrices whose dimensions are not explicitly stated are assumed to have compatible dimensions.

Problem formulation and preliminaries

Consider the following delayed Hopfield neural network model of neutral type (Dharani et al. 2015) with successive time-varying delay components and distributed delay:

where \(y(t) = [y_1(t), y_2(t), \dots, y_n(t)]^T \in {\mathbb{R}}^n\) is the state vector of the network at time t, n is the number of neurons, and \(f(y(t)) = [f_1(y_1(t)), f_2(y_2(t)), \dots, f_n(y_n(t))]^T \in {\mathbb{R}}^n\) is the neuron activation function. The matrix \(D = \hbox {diag}(d_1, d_2, \ldots, d_n)\) is a diagonal matrix with positive entries \(d_i > 0\). The matrices A, B, C and E are the connection weight matrix, the discretely delayed connection weight matrix, the distributively delayed connection weight matrix and the coefficient matrix of the time derivative of the delayed states, respectively. \(J=[J_1,J_2,\dots,J_n]^T\) is the constant external input vector. \(\varphi _i(t) (i\in N)\) is a continuous vector-valued initial function on \([-\bar{r},0]\), where \(\bar{r}=\max \{\delta _{1U}, \delta _{2U}, h_{1U}, h_{2U}, \tau, \sigma \}\). \(\delta _1(t), \delta _2(t)\) and \(h_1(t), h_2(t)\) are continuous time-varying functions that represent the two leakage delay components and the two discrete interval delay components in the state, respectively, while \(\tau (t)\) and \(\sigma (t)\) denote the distributed and neutral time delays. These delays satisfy the following:

where \(\delta _{1U}\ge \delta _{1L}, \delta _{2U}\ge \delta _{2L}, \delta _{U}\ge \delta _{L}, h_{1U}\ge h_{1L}, h_{2U}\ge h_{2L}, h_{U}\ge h_{L}, \tau, \sigma, \eta _1, \eta _2, \mu _1, \mu _2, \tau _D\) and \(\sigma _D\) are known real constants. Note that \(\delta _{1L}, \delta _{2L}, \delta _L, h_{1L}, h_{2L}, h_L\) are not required to be 0. We denote

Remark 2.1

The first term on the right side of (1) is variously known as the forgetting or leakage term. It is known from the literature on population dynamics [see Gopalsamy (1992)] that time delays in the stabilizing negative feedback terms tend to destabilize a system. \(f_j(\cdot ),\, j=1,2, \dots,n\) are signal transmission functions. Furthermore, system (1) contains information about the derivative of the past state, which helps to analyze and model the dynamics of such complex neural responses. Hence system (1) is referred to as a neutral-type system, in which both the state and the state derivative are delayed; the latter is the so-called neutral delay.

Throughout this paper, it is assumed that each neuron activation function \(f_j(\cdot )\) in (1) satisfies:

Assumption (H)

(Liu et al. 2006) For any \(j\in \{1,2,\dots,n\},\, f_j(0)=0\) and there exist constants \(k^{-}_{j}\) and \(k^{+}_{j}\) such that

for all \(\alpha _1\ne \alpha _2\), where \(\alpha _1,\alpha _2\in {\mathbb{R}}.\) Then, by Brouwer’s fixed-point theorem (Cao 2000) and Assumption (H), it can be proved that there exists at least one equilibrium point for system (1). Let \(z^* = [z^*_1, z^*_2, \ldots, z^*_n]^T\) be one equilibrium point of system (1). For convenience, we shift \(z^*\) to the origin by the transformation \(z(\cdot ) = y(\cdot ) - z^*\), and then system (1) can be rewritten as

where \(z(t) = [z_1(t), z_2(t),\dots, z_n(t)]^T\) is the state vector of the transformed system, the initial condition \(\phi (t) = \varphi (t) - z^*, g(z(t)) = [g_1(z_1(t)), g_2(z_2(t)),\dots, g_n(z_n(t))]^T, g_j(z_j(t)) = f_j (z_j(t) + z^*_j) - f_j(z^*_j), \,\,j=1,2,\dots, n.\) According to Assumption H, function \(g_j(\cdot )\) satisfies the following condition:

The switched Hopfield neural network of neutral type with discrete and distributed delays is described as

where \(\varrho (t)\) is a switching signal which takes its values in the finite set \({\mathcal{K}} = \{1,2, \dots,m\}.\) Define the indicator function \(\gamma (t) = [\gamma _1(t), \gamma _2(t), \ldots, \gamma _m(t)]^T\), where

and \(k \in {\mathcal{K}}.\) Thus, the model (8) can also be described by

As (9) must be satisfied under any switching rules, it follows that \(\sum _{k=1}^m \gamma _k(t) = 1.\) Next, we present some preliminary lemmas, which are needed in the proof of our main results.
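The role of the indicator function can be illustrated with a small sketch. It assumes the usual convention that \(\gamma _k(t)=1\) when the k-th subsystem is active and 0 otherwise, so that a switched matrix is the \(\gamma\)-weighted sum of the subsystem matrices. The helper names `indicator` and `active_matrix` and the three example matrices are hypothetical.

```python
def indicator(mode, m):
    """Return [gamma_1, ..., gamma_m] for the active mode (1-based index)."""
    if not 1 <= mode <= m:
        raise ValueError("mode must lie in {1, ..., m}")
    return [1 if k == mode else 0 for k in range(1, m + 1)]


def active_matrix(gamma, matrices):
    """Compute sum_k gamma_k * M_k for a list of equally sized matrices."""
    rows, cols = len(matrices[0]), len(matrices[0][0])
    return [
        [sum(g * M[i][j] for g, M in zip(gamma, matrices)) for j in range(cols)]
        for i in range(rows)
    ]


# Three assumed subsystem matrices A_1, A_2, A_3 (illustrative values only).
A_modes = [
    [[1, 0], [0, 1]],
    [[2, -1], [0, 3]],
    [[0, 4], [5, 0]],
]

gamma = indicator(2, 3)
print(gamma, sum(gamma))
print(active_matrix(gamma, A_modes))
```

Because exactly one entry of \(\gamma (t)\) equals one at any time, the weighted sum simply selects the matrix of the active subsystem.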

Lemma 2.1

(Gu 2000) For any positive definite matrix \(M \in {\mathbb{R}}^{n\times n}\), scalars \(h_2>h_1>0\), vector function \(w:[h_1,h_2]\rightarrow {\mathbb{R}}^n\) such that the integrations concerned are well defined, the following inequality holds:

Lemma 2.2

(Park et al. 2011) Let \(f_1,f_2,\dots,f_N: {\mathbb{R}}^m \rightarrow {\mathbb{R}}\) have positive values in an open subset D of \({\mathbb{R}}^m\). Then, the reciprocally convex combination of \(f_i\) over D satisfies

subject to

Lemma 2.3

(Park et al. 2015) For a given matrix \(M>0\) and given scalars a and b satisfying \(a<b\), the following inequality holds for all continuously differentiable functions \(x: [a,b] \rightarrow {\mathbb{R}}^n:\)

where

Remark 2.2

The WDII was proposed recently by Park et al. (2015). Employing the WDII is expected to yield less conservative criteria than applying Jensen’s inequality, because it exploits three kinds of information: first, the state itself, such as x(t); second, the integral of the state over the delay period, such as \(\int _{t-\bar{\tau }}^tx(s) ds\) or \(\int _{t-\tau (t)}^tx(s) ds\); and third, the double integral of the state over the delay period, such as \(\int _{-\bar{\tau }}^0\int _{t+u}^tx(s) ds\,du\) or \(\int _{-\tau (t)}^0\int _{t+u}^tx(s) ds\,du.\) Hence, Lemma 2.3 may provide a tighter bound than Jensen’s inequality.
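For reference, the Jensen-type bound that serves as the baseline can be checked numerically. The sketch below assumes the form in which it is commonly stated, \((h_2-h_1)\int _{h_1}^{h_2} w^T(s) M w(s)\, ds \ge \left( \int _{h_1}^{h_2} w(s)\, ds\right) ^T M \left( \int _{h_1}^{h_2} w(s)\, ds\right)\), and approximates both sides with the trapezoid rule. The matrix `M`, the test function `w` and the interval are illustrative assumptions.

```python
import numpy as np


def jensen_gap(w, M, h1, h2, points=2001):
    """Left side minus right side of the Jensen-type integral inequality.

    Both integrals are approximated with the same trapezoid weights, so the
    returned gap is non-negative up to rounding whenever M is positive definite.
    """
    s = np.linspace(h1, h2, points)
    step = s[1] - s[0]
    c = np.full(points, step)          # trapezoid weights
    c[0] = c[-1] = 0.5 * step
    W = np.array([w(si) for si in s])  # samples of w, shape (points, n)
    quad = np.einsum("i,ij,jk,ik->", c, W, M, W)  # approx. of int w^T M w ds
    integral = c @ W                               # approx. of int w ds
    return (h2 - h1) * quad - integral @ M @ integral


M = np.array([[2.0, 0.5], [0.5, 1.0]])  # assumed positive definite weight
w = lambda s: np.array([np.sin(s), np.cos(2.0 * s)])
print("Jensen gap:", jensen_gap(w, M, 0.5, 2.0))
```

The gap vanishes only when w is constant on the interval. That slack is what refined inequalities such as the WDII aim to reduce.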

Lemma 2.4

(Boyd et al. 1994) Let \(\xi \in {\mathbb{R}}^n,\Phi =\Phi ^T \in {\mathbb{R}}^{n \times n}\) and \(B \in {\mathbb{R}}^{m \times n}\) such that rank \((B)<n\). The following statements are equivalent:

(i) \(\xi ^T \Phi \xi < 0, \quad \forall B \xi = 0, \quad \xi \ne 0,\)

(ii) \({B^\bot }^T \Phi B^{\bot } < 0,\) where \(B^{\bot }\) is a right orthogonal complement of B.
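The equivalence in Lemma 2.4 can be illustrated numerically. In the sketch below, a right orthogonal complement \(B^{\bot }\) is computed from the singular value decomposition and condition (ii) is tested through the eigenvalues of the projected matrix. The function names and the example matrices are assumptions chosen for illustration.

```python
import numpy as np


def null_space_basis(B, tol=1e-10):
    """Columns form an orthonormal basis of {xi : B xi = 0}."""
    _, s, vt = np.linalg.svd(B)
    rank = int(np.sum(s > tol))
    return vt[rank:].T


def finsler_projected_negative(Phi, B):
    """Check condition (ii): (B_perp)^T Phi B_perp is negative definite."""
    B_perp = null_space_basis(B)
    projected = B_perp.T @ Phi @ B_perp
    return bool(np.all(np.linalg.eigvalsh(projected) < 0))


# Phi is indefinite, yet negative on the subspace where the first entry is 0.
Phi = np.diag([1.0, -1.0, -2.0])
B = np.array([[1.0, 0.0, 0.0]])
print(finsler_projected_negative(Phi, B))

# Condition (i) on a sample vector from the null space of B.
xi = null_space_basis(B) @ np.array([0.3, -1.2])
print(float(xi @ Phi @ xi) < 0)
```

Note that the projected matrix has the dimension of the null space of B rather than that of \(\Phi\), so the constraint \(B\xi = 0\) is absorbed into a smaller matrix inequality.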

Lemma 2.5

(Boyd et al. 1994) For given matrices \(A_{11}=A_{11}^T, A_{12}, A_{22}=A_{22}^T\) with appropriate dimensions, \(\begin{bmatrix} A_{11}&A_{12} \\ A_{12}^T&A_{22} \\ \end{bmatrix} < 0\) holds if and only if \(A_{22}<0\) and \(A_{11}-A_{12}A_{22}^{-1}A_{12}^T < 0\).
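The Schur complement equivalence of Lemma 2.5 is easy to verify on small examples. The sketch below compares a direct negative-definiteness test of the block matrix with the two-step Schur test, for symmetric \(A_{11}\) and \(A_{22}\). The helper names and the numerical values are illustrative assumptions.

```python
import numpy as np


def is_negative_definite(X):
    """True if the symmetric matrix X has only negative eigenvalues."""
    return bool(np.all(np.linalg.eigvalsh(X) < 0))


def schur_test(A11, A12, A22):
    """Return (direct test of the block matrix, test via the Schur complement)."""
    block = np.block([[A11, A12], [A12.T, A22]])
    direct = is_negative_definite(block)
    via_schur = is_negative_definite(A22) and is_negative_definite(
        A11 - A12 @ np.linalg.solve(A22, A12.T)
    )
    return direct, via_schur


A11 = np.array([[-3.0, 0.0], [0.0, -2.0]])
A22 = np.array([[-1.0]])
print(schur_test(A11, np.array([[1.0], [0.5]]), A22))  # weak coupling
print(schur_test(A11, np.array([[2.0], [0.0]]), A22))  # strong coupling
```

Both tests agree in each case, as the lemma states. In LMI-based proofs the equivalence is typically used in the reverse direction, to turn a condition that is nonlinear in the unknowns into a larger but linear one.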

Main results

In this section, we propose stability criteria for system (9). For simplicity of matrix and vector representation, \(e_i \in {\mathbb{R}}^{56 n \times n}\ (i=1,2, \dots, 56)\) are defined as block entry matrices (for example, \(e_4^T = \left[ 0_n, \quad 0_n, \quad 0_n, \quad I_n, \quad \underbrace{0_n, \dots, 0_n}_{52 \ times} \right]\)). The other notations are defined as

Theorem 3.1

For given positive scalars \(\delta _{1L}\), \(\delta _{1U}\), \(\delta _{2L}\), \(\delta _{2U}\), \(h_{1L}\), \(h_{1U}\), \(h_{2L}\), \(h_{2U}\), \(\delta_1\), \(\delta_2\), \(h_1\), \(h_2\), \(\tau\), \(\sigma\), \(\eta_1\), \(\eta_2\), \(\mu_1\), \(\mu_2\), \(\tau_D\), \(\sigma_D\) and diagonal matrices \(K_p,K_m\), the neural network described by (9) is globally asymptotically stable for any time-varying delays \(\delta (t),h(t),\tau (t)\) and \(\sigma (t)\) satisfying (2), if there exist positive definite matrices \(P_i (i=1,2, \dots,18)\in {\mathbb{R}}^{n \times n}\), \(T_i (i=1,2,3)\in {\mathbb{R}}^{n \times n}\), \(Q_i (i=1,2,\dots,17)\in {\mathbb{R}}^{n \times n}\), \(U,V,W,X,Y,Z\in {\mathbb{R}}^{n \times n}\), \(\bar{U},\bar{V},\bar{W},\bar{X},\bar{Y},\bar{Z}\in {\mathbb{R}}^{2n \times 2n}\), \(R_i (i=1,2,\dots,6)\in {\mathbb{R}}^{n \times n}\), \(S_i (i=1,2)\in {\mathbb{R}}^{n \times n}\), positive diagonal matrices \(\Delta _l=\hbox {diag}\left\{ \lambda _{l1}, \lambda _{l2}, \dots, \lambda _{ln}\right\}\), \(\Lambda _l=\hbox {diag}\left\{ \mu _{l1}, \mu _{l2}, \dots, \mu _{ln}\right\}\), \(G_i (i=1,2,\dots,7)\in {\mathbb{R}}^{n \times n}\), any symmetric matrices \(F_i\in {\mathbb{R}}^{n \times n}(i=1,2, \dots,6)\), and any matrices \(H\in {\mathbb{R}}^{n \times n}\), \({\mathcal{L}}, {\mathcal{M}}, {\mathcal{N}}\in {\mathbb{R}}^{2n \times 2n}\) such that the following LMIs hold:

Proof

Let us consider the following Lyapunov–Krasovskii functional candidate:

where

Taking the time derivative of V(z(t), t) along the trajectories of system (9) yields

where

Applying Lemma 2.1, we have

Inspired by the ideas in the works of Kwon et al. (2014a, b), the following six zero equalities with any symmetric matrices \(F_i, \ i=1,2,\dots,6\) are introduced:

Summing the six zero equalities in Eqs. (22)–(27) gives

Using Lemma 2.1, the following inequalities hold

Considering the integral terms in (29) together with Eq. (28), if the inequalities (11), (12) and (13) hold, then by Lemmas 2.1 and 2.2 it follows that

and similarly, we have

From (30)–(32), it is concluded that

Applying Lemma 2.3, the integral terms in (34) can be rewritten as

Similarly, we have

where

Utilizing Lemma 2.1, we have

On the other hand, for any matrix H with appropriate dimension, it is true that

From (6), the following inequality holds for any positive diagonal matrices \(G_i, \ i=1,2, \dots,7\)

From Eqs. (16)–(46), by using the S-procedure in Boyd et al. (1994), if the inequalities (11)–(13) hold, then an upper bound of \(\dot{V}(z(t),t)\) can be written as

Based on Lemma 2.4, \(\zeta ^T(t) \ \Xi \ \zeta (t) < 0\) with \(\Gamma \ \zeta (t) = 0\) is equivalent to \((\Gamma ^\perp )^T \ \Xi \ \Gamma ^\perp <0.\) Therefore, if the inequality (10) holds, the equilibrium point of system (9) is asymptotically stable. This completes the proof.
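The block entry matrices \(e_i\) introduced at the start of this section act as selectors on a stacked vector. The sketch below builds them with NumPy and shows that \(e_i^T\) picks the i-th block. It assumes that \(\zeta (t)\) stacks 56 blocks of dimension n, which is what the dimension \(56n \times n\) of \(e_i\) suggests; the function name `block_entry` and the test vector are hypothetical.

```python
import numpy as np


def block_entry(i, blocks, n):
    """e_i in R^{(blocks*n) x n}: identity in the i-th block row (1-based)."""
    if not 1 <= i <= blocks:
        raise ValueError("block index out of range")
    e = np.zeros((blocks * n, n))
    e[(i - 1) * n : i * n, :] = np.eye(n)
    return e


n, blocks = 2, 56
e4 = block_entry(4, blocks, n)
zeta = np.arange(blocks * n, dtype=float)  # stand-in for the stacked vector
print(e4.shape)
print(e4.T @ zeta)  # the 4th block of zeta
```

With such selectors, a quadratic term in one block of the stacked vector, say \(\zeta _4^T P \zeta _4\), can be written as \(\zeta ^T (e_4 P e_4^T) \zeta\), which is how the large matrix \(\Xi\) is typically assembled term by term.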

Remark 3.1

For the case of SHNNs without neutral term, we let \(E_k = 0\) in (9) and the following corollary can be obtained with a proof similar to Theorem 3.1. In this case, network (9) can be rewritten as

Corollary 3.1

For given positive scalars \(\delta _{1L},\delta _{1U},\delta _{2L},\delta _{2U},h_{1L},h_{1U},h_{2L},h_{2U},\delta _1,\delta _2,h_1,h_2,\tau,\eta _1,\eta _2,\mu _1,\mu _2,\tau _D\), and diagonal matrices \(K_p,K_m\), the neural network described by (48) is asymptotically stable for any time-varying delays \(\delta (t),h(t)\) and \(\tau (t)\) satisfying (2), if there exist positive definite matrices \(P_i (i=1,2, \dots,18)\in {\mathbb{R}}^{n \times n}\), \(T_i (i=1,2,3)\in {\mathbb{R}}^{n \times n}\), \(Q_i (i=1,2,\dots,17)\in {\mathbb{R}}^{n \times n}\), \(U,V,W,X,Y,Z\in {\mathbb{R}}^{n \times n}\), \(\bar{U},\bar{V},\bar{W},\bar{X},\bar{Y},\bar{Z}\in {\mathbb{R}}^{2n \times 2n}\), \(R_i (i=1,2,\dots,6)\in {\mathbb{R}}^{n \times n}\), \(S_1 \in {\mathbb{R}}^{n \times n}\), positive diagonal matrices \(\Delta _l=\hbox {diag}\left\{ \lambda _{l1}, \lambda _{l2}, \dots, \lambda _{ln}\right\}\), \(\Lambda _l=\hbox {diag}\left\{ \mu _{l1}, \mu _{l2}, \dots, \mu _{ln}\right\}\), \(G_i (i=1,2,\dots,7)\in {\mathbb{R}}^{n \times n}\), any symmetric matrices \(F_i\in {\mathbb{R}}^{n \times n}(i=1,2, \dots,6)\), and any matrices \(H\in {\mathbb{R}}^{n \times n}\), \({\mathcal{L}}, {\mathcal{M}}, {\mathcal{N}}\in {\mathbb{R}}^{2n \times 2n}\) such that the following LMIs hold:

where \(\Xi\) is same as defined in Theorem 3.1 with \(E_k = 0.\)

Proof

For the proof, consider the same Lyapunov–Krasovskii functional (10) with \(S_2 = 0\) in \(V_9(z(t),t).\) Following the same procedure as in Theorem 3.1, we obtain \(\Xi\) with \(S_2 = 0.\) Then, by defining \(\overline{\Gamma } = \left[ \underbrace{0_n \dots \dots 0_n}_{28 \ times} A_k \underbrace{0_n \dots \dots 0_n}_{5 \ times} B_k \underbrace{0_n \dots \dots 0_n}_{4 \ times} C_k \underbrace{0_n \dots \dots 0_n}_{5 \ times} -D_k \underbrace{0_n \dots \dots 0_n}_{9 \ times} \right]\) and denoting its right orthogonal complement by \(\overline{\Gamma }^\perp\), we conclude the proof in the same way as for Theorem 3.1. \(\square\)

Remark 3.2

For the case of SHNNs without leakage and neutral terms, we let \(\delta (t)=E_k = 0\) in (9), and the following corollary can be obtained with a proof similar to that of Theorem 3.1. In this case, network (9) can be rewritten as

Corollary 3.2

For given positive scalars \(h_{1L},h_{1U},h_{2L},h_{2U},h_1,h_2,\tau,\mu _1,\mu _2,\tau _D\), and diagonal matrices \(K_p,K_m\), then the neural network described by (51) is asymptotically stable, for any time-varying delay h(t) and \(\tau (t)\) satisfying (2), if there exist positive definite matrices \(P_i (i=1,7, \dots,18)\in {\mathbb{R}}^{n \times n},T_i (i=1,2,3)\in {\mathbb{R}}^{n \times n},Q_i (i=6,7,\dots,17)\in {\mathbb{R}}^{n \times n},\bar{U}{\in {\mathbb{R}}^{2n \times 2n}},\bar{V}{\in {\mathbb{R}}^{2n \times 2n}},\bar{W}{\in {\mathbb{R}}^{2n \times 2n}},\bar{X}{\in {\mathbb{R}}^{2n \times 2n}},\bar{Y}{\in {\mathbb{R}}^{2n \times 2n}},\bar{Z}{\in {\mathbb{R}}^{2n \times 2n}}\),\(R_i (i=1,2,\dots,6)\in {\mathbb{R}}^{n \times n},S_1 \in {\mathbb{R}}^{n \times n}\), positive diagonal matrices \(\Delta _l=\hbox {diag}\left\{ \lambda _{l1}, \lambda _{l2}, \dots, \lambda _{ln}\right\},\Lambda _l=\hbox {diag}\left\{ \mu _{l1}, \mu _{l2}, \dots, \mu _{ln}\right\},H\in {\mathbb{R}}^{n \times n},G_i (i=1,2,\dots,7)\in {\mathbb{R}}^{n \times n}\), any symmetric matrices \(F_i\in {\mathbb{R}}^{n \times n}(i=1,2, \dots,6)\), any matrices \({\mathcal{L}}, {\mathcal{M}}, {\mathcal{N}}\in {\mathbb{R}}^{2n \times 2n}\) such that the following LMIs hold:

where \(\Xi\) is the same as defined in Theorem 3.1 with \(\delta (t)=E_k = 0.\)

Proof

For the proof, consider the same Lyapunov–Krasovskii functional (10) with \(P_i=0\ (i=2,3,\dots,6),\ Q_i=0\ (i=1,2,\dots,5),\ U=V=W=X=Y=Z=0,\ S_2 = 0\) in \(V_4(z(t),t),V_5(z(t),t),V_6(z(t),t)\) and \(V_9(z(t),t).\) Following the same procedure as in Theorem 3.1, we obtain \(\Xi\) with \(P_i=0\ (i=2,3,\dots,6),\ Q_i=0\ (i=1,2,\dots,5),\ U=V=W=X=Y=Z=0,\ S_2 = 0.\) Then, by defining \(\widehat{\overline{\Gamma }} = \left[ -D_k \underbrace{0_n \dots \dots 0_n}_{27 \ times} A_k \underbrace{0_n \dots \dots 0_n}_{5 \ times} B_k \underbrace{0_n \dots \dots 0_n}_{4 \ times} C_k \right]\) and denoting its right orthogonal complement by \(\widehat{\overline{\Gamma }}^\perp\), we conclude the proof in the same way as for Theorem 3.1. \(\square\)

Remark 3.3

We may also consider the case of SHNNs without leakage, distributed and neutral terms. Letting \(\delta (t)=C_k=E_k = 0\) in (9), the following corollary can be obtained with a proof similar to that of Theorem 3.1. In this case, network (9) can be rewritten as

Corollary 3.3

For given positive scalars \(h_{1L},h_{1U},h_{2L},h_{2U},h_1,h_2,\mu _1,\mu _2\), and diagonal matrices \(K_p,K_m\), then the neural network described by (54) is asymptotically stable, for any time-varying delay h(t) satisfying (2), if there exist positive definite matrices \(P_i (i=1,7, \dots,18)\in {\mathbb{R}}^{n \times n},T_i (i=1,2,3)\in {\mathbb{R}}^{n \times n},Q_i (i=6,7,\dots,17)\in {\mathbb{R}}^{n \times n},\bar{U}{\in {\mathbb{R}}^{2n \times 2n}},\bar{V}{\in {\mathbb{R}}^{2n \times 2n}},\bar{W}{\in {\mathbb{R}}^{2n \times 2n}},\bar{X}{\in {\mathbb{R}}^{2n \times 2n}},\bar{Y}{\in {\mathbb{R}}^{2n \times 2n}},\bar{Z}{\in {\mathbb{R}}^{2n \times 2n}},R_i (i=1,2,\dots,6)\in {\mathbb{R}}^{n \times n}\), positive diagonal matrices \(\Delta _l=\hbox {diag}\left\{ \lambda _{l1}, \lambda _{l2}, \dots, \lambda _{ln}\right\},\Lambda _l=\hbox {diag} \left\{ \mu _{l1}, \mu _{l2}, \dots, \mu _{ln}\right\},H\in {\mathbb{R}}^{n \times n},G_i (i=1,2,\dots,7)\in {\mathbb{R}}^{n \times n}\), any symmetric matrices \(F_i\in {\mathbb{R}}^{n \times n}(i=1,2, \dots,6)\), any matrices \({\mathcal{L}}, {\mathcal{M}}, {\mathcal{N}}\in {\mathbb{R}}^{2n \times 2n}\) such that the following LMIs hold:

where \(\Xi\) is same as defined in Theorem 3.1 with \(\delta (t)=C_k=E_k = 0.\)

Proof

For the proof, consider the same Lyapunov–Krasovskii functional (10) with \(P_i=0\ (i=2,3,\dots,6),\ Q_i=0\ (i=1,2,\dots,5),\ U=V=W=X=Y=Z=0,\ S_1=S_2 = 0\) in \(V_4(z(t),t),V_5(z(t),t),V_6(z(t),t)\) and \(V_9(z(t),t).\) Following the same procedure as in Theorem 3.1, we obtain \(\Xi\) with \(P_i=0\ (i=2,3,\dots,6),\ Q_i=0\ (i=1,2,\dots,5),\ U=V=W=X=Y=Z=0,\ S_1=S_2 = 0.\) Then, by defining \(\Psi = \left[ -D_k \underbrace{0_n \dots \dots 0_n}_{27 \ times} A_k \underbrace{0_n \dots \dots 0_n}_{5 \ times} B_k \underbrace{0_n \dots \dots 0_n}_{4 \ times} \right]\) and denoting its right orthogonal complement by \(\Psi ^\perp\), we conclude the proof in the same way as for Theorem 3.1. \(\square\)

Remark 3.4

In order to use more information about the neuron activation functions, terms on the slope of the neuron activation functions are introduced in the L–K functional to study the stability of the addressed NNs. Shao and Han (2011) used the term

in their L–K functional for the neuron activation function \(g(z(\cdot ))\). By utilizing the condition (4) about the slope of the neuron activation functions into the L–K functional, the term

has been introduced in Li et al. (2011). Only a few authors have so far incorporated delay bounds into the slope of the neuron activation functions in the L–K functional, see Kwon et al. (2014a, b). Inspired by these works, in this paper we consider a new \(V_2(z(t),t)\), which uses more information about the neuron activations and has not been considered in any of the previous works that deal with the stability of SHNNs with successive time-varying delay components.
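The slope information referred to here comes from Assumption (H), which bounds the difference quotient of each activation function between \(k^{-}_{j}\) and \(k^{+}_{j}\). As a minimal sketch, the check below evaluates that quotient on a grid. The choice of tanh with bounds 0 and 1, the grid and the equilibrium value 0.7 are illustrative assumptions.

```python
import math


def slope_bounds_hold(f, k_minus, k_plus, span=5.0, points=201, tol=1e-9):
    """Check k_minus <= (f(a1) - f(a2)) / (a1 - a2) <= k_plus on a grid."""
    grid = [-span + 2.0 * span * i / (points - 1) for i in range(points)]
    for a1 in grid:
        for a2 in grid:
            if a1 == a2:
                continue
            quotient = (f(a1) - f(a2)) / (a1 - a2)
            if quotient < k_minus - tol or quotient > k_plus + tol:
                return False
    return True


print(slope_bounds_hold(math.tanh, 0.0, 1.0))   # sector [0, 1] fits tanh
print(slope_bounds_hold(math.tanh, 0.0, 0.5))   # sector [0, 0.5] is too narrow

# Shifted activation g(z) = f(z + z*) - f(z*) for an assumed equilibrium z*.
z_star = 0.7
g = lambda z: math.tanh(z + z_star) - math.tanh(z_star)
print(slope_bounds_hold(g, 0.0, 1.0), g(0.0) == 0.0)
```

The shifted function g inherits the same slope bounds as f and vanishes at the origin, which is why the transformed system can reuse the constants \(k^{-}_{j}\) and \(k^{+}_{j}\).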

Remark 3.5

In order to reduce the conservatism of the stability conditions, inspired by the ideas in Kwon et al. (2014b), six zero integral equalities in (22)–(27) are introduced, and terms involving these equalities are merged with Eq. (29) during the calculation of \(V_7(z(t),t)\). The reciprocal convex combination technique is then utilized in the proof of Theorem 3.1, which can lead to a further improvement of the stability criterion. It is noted that introducing an augmented L–K functional and zero integral equalities and utilizing the reciprocal convex combination technique can lead to less conservative results.
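The benefit of the reciprocal convex combination technique can be seen in its widely used matrix form: for \(\alpha \in (0,1)\), \(R>0\) and any S with \(\begin{bmatrix} R&S \\ S^T&R \\ \end{bmatrix} \ge 0\), one has \(\frac{1}{\alpha }x^TRx+\frac{1}{1-\alpha }y^TRy \ge \begin{bmatrix} x \\ y \end{bmatrix}^T \begin{bmatrix} R&S \\ S^T&R \\ \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix}\). The sketch below checks this bound on random samples; the matrices R and S, the helper names and the sampling are assumptions made for illustration, and the form used in the proof of Theorem 3.1 may differ in detail.

```python
import numpy as np


def rcc_constraint_ok(R, S):
    """True if [[R, S], [S^T, R]] is positive semi-definite."""
    big = np.block([[R, S], [S.T, R]])
    return bool(np.linalg.eigvalsh(big).min() >= 0)


def rcc_gap(R, S, x, y, alpha):
    """Left side minus right side of the reciprocally convex bound."""
    lhs = x @ R @ x / alpha + y @ R @ y / (1.0 - alpha)
    v = np.concatenate([x, y])
    big = np.block([[R, S], [S.T, R]])
    return lhs - v @ big @ v


R = np.array([[2.0, 0.0], [0.0, 1.0]])  # assumed positive definite weight
S = 0.5 * np.eye(2)                      # assumed slack matrix
print("constraint holds:", rcc_constraint_ok(R, S))

rng = np.random.default_rng(0)
gaps = [
    rcc_gap(R, S, rng.standard_normal(2), rng.standard_normal(2),
            rng.uniform(0.01, 0.99))
    for _ in range(1000)
]
print("smallest gap:", min(gaps))
```

The right-hand side no longer depends on \(\alpha\), so terms weighted by the reciprocals of the time-varying delay fractions are bounded by a single quadratic form that is independent of the delay fraction.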

Remark 3.6

The number of decision variables used in Theorem 3.1 is larger than in the previous studies of Rakkiyappan et al. (2015a, b), Senthilraj et al. (2016), and Dharani et al. (2015). The reason is that the proposed model contains additive interval time-varying delay components in both the discrete delay and the leakage delay of the state, together with a newly augmented form of the L–K functional. To reduce the resulting computational burden, Finsler’s lemma was used in the proof of Theorem 3.1. As a result, the proposed stability criteria give better results while keeping the computational burden lower.
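As a small numerical illustration of how Finsler’s lemma handles a linear constraint, the following Python sketch uses a hypothetical \(2\times 2\) matrix `Phi` and a hypothetical constraint matrix `B`; neither is taken from Theorem 3.1. It checks two of the standard equivalent statements of the lemma: the quadratic form is negative on the null space of `B`, and `Phi - mu * B.T @ B` is negative definite for a suitable scalar `mu`.

```python
import numpy as np

def null_space_basis(B, tol=1e-12):
    """Orthonormal basis of {zeta : B zeta = 0}, computed from the SVD of B."""
    _, s, vt = np.linalg.svd(B)
    rank = int(np.sum(s > tol))
    return vt[rank:].T

# Hypothetical data: Phi is indefinite on the whole space,
# but the constraint B zeta = 0 forces the first component to zero.
Phi = np.array([[1.0, 0.0],
                [0.0, -1.0]])
B = np.array([[1.0, 0.0]])

# Statement 1: the form restricted to the null space of B is negative definite.
B_perp = null_space_basis(B)
reduced = B_perp.T @ Phi @ B_perp
reduced_max_eig = float(np.linalg.eigvalsh(reduced).max())

# Statement 2: Phi - mu * B^T B is negative definite for some scalar mu.
mu = 2.0  # assumed value, chosen by inspection for this toy example
relaxed = Phi - mu * (B.T @ B)
relaxed_max_eig = float(np.linalg.eigvalsh(relaxed).max())

print(reduced_max_eig, relaxed_max_eig)
```

Working with the reduced matrix avoids introducing extra multiplier variables, which is the sense in which the lemma can lighten an LMI problem.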

Remark 3.7

It is important to note that very little work has been done on the stability of switched Hopfield NNs of neutral type with time-varying delays. In particular, the stability analysis of switched Hopfield NNs of neutral type with successive interval time-varying delay components in both the discrete and the leakage delay of the state has not been fully studied in the previous literature (see e.g., Rakkiyappan et al. 2015a, b; Senthilraj et al. 2016; Dharani et al. 2015). To fill this gap, this paper proposes new stability criteria for switched Hopfield NNs of neutral type with successive interval time-varying delay components in both the discrete and the leakage delay of the state. The results of the present paper are therefore essentially new, and for this reason we could not provide comparison results against existing methods to show the improvements.

Remark 3.8

It is noted that Zeng et al. (2015) very recently proposed the free-matrix-based integral inequality, which is used to handle double integral terms in L–K functionals and offers tighter information on the upper bounds of the time-varying delay and its interval for time-delay systems. Utilizing this integral inequality to deal with such L–K functionals could therefore reduce the conservatism further. There is no limit to such improvements of the delay bounds of time-delay systems: they depend essentially on choosing good L–K functionals and on bounding their derivatives with newly improved integral inequalities or with other techniques such as delay-partitioning approaches. Thus, in future work, the inequality proposed in Zeng et al. (2015) can be used to achieve improved results for delayed NNs.

Remark 3.9

Most of the existing results concern the stability problem of delayed switched Hopfield NNs of neutral type. However, switched Hopfield NNs of neutral type with successive interval time-varying delay components in the discrete delay and the leakage delay of the state have not been considered in previous works. Comparing with the system models in Rakkiyappan et al. (2015a, b), Senthilraj et al. (2016), and Dharani et al. (2015), one finds that their results cannot be applied to system (1). This indicates that the proposed system model and the obtained results are essentially new. Studying the stability of the systems described in (9) with leakage and discrete interval time-varying delays not only enhances the dynamical theory of the system model proposed in (9), but also further enriches the foundation for realistic applications of delayed SHNNs, as shown in the following numerical section.

Numerical examples

In this section, we provide four numerical examples to demonstrate the effectiveness of our delay-dependent stability criteria.

Example 4.1

Consider system (9) with \(n = k = 2\) and

The activation functions are assumed to be

It is easy to check that the activation functions satisfy (6) with \(K_m=\hbox {diag}\left\{ 0,0\right\},K_p=\hbox {diag}\left\{ 1,1\right\}\). Also let \(\delta _{1L}=0.10,\delta _{1U}=0.20,\delta _1=0.30,\delta _{2L}=0.15,\delta _{2U}=0.25,\delta _2=0.40,h_{1L}=0.50,h_{1U}=1.0,h_1=1.50,h_{2L}=0.80,h_{2U}=1.0,h_2=1.80,\tau =0.30,\sigma =0.40,\eta _1=0.4,\eta _2=0.5,\mu _1=0.4,\mu _2=0.5,\tau _D=0.5,\sigma _D=0.5.\) Using Theorem 3.1 and the Matlab LMI toolbox, it is found that the equilibrium point of system (9) is asymptotically stable. It can also be verified that the LMIs (10)–(13) are feasible for larger upper delay bounds \(\delta _1, \delta _2, h_1, h_2, \tau\) and \(\sigma\). This shows that all the conditions stated in Theorem 3.1 are satisfied, and hence system (9) with the parameters given above is asymptotically stable.

Example 4.2

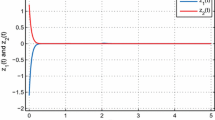

Consider the switched Hopfield neural network without neutral term as in (48) with the parameters \(D_k, A_k, B_k, C_k (k = 1, 2)\) as defined in Example 4.1. By choosing \(\delta _1(t)=0.1+0.1\cos (0.5t),\delta _2(t)=0.2+0.2\cos (0.5t),h_1(t)=0.6+0.6\sin (0.5t),h_2(t)=0.7+0.7\sin (0.5t),\tau (t)=0.25+0.25\cos (3t)\), we let \(\delta _{1L}=0.05,\delta _{1U}=0.15,\delta _1=0.20,\delta _{2L}=0.10,\delta _{2U}=0.30,\delta _2=0.40,h_{1L}=0.40,h_{1U}=0.80,h_1=1.20,h_{2L}=0.50,h_{2U}=1.0,h_2=1.50,\tau =0.50\) and \(\eta _1=0.2,\eta _2=0.3,\mu _1=0.4,\mu _2=0.5,\tau _D=0.5.\) Letting \(g_{i}(z_{i})=0.5\left( |z_i+1| - |z_i-1|\right), \ i=1,2,\) it can easily be verified that the condition on the activation functions holds with \(K_m=\hbox {diag}\left\{ 0,0\right\},K_p=\hbox {diag}\left\{ 1,1\right\}\). Using the Matlab LMI toolbox, it is found that LMIs (49) and (50) are feasible. Thus, it can be concluded that the switched NN (48) is asymptotically stable, and the state trajectories of the dynamical system converge to the zero equilibrium point from the initial state \([-0.2,0.2]^T\), as shown in Fig. 1. If instead the leakage time-varying delays are taken as \(\delta _1(t)=0.15+0.15\cos (0.5t) (\delta _1\ge 0.30),\delta _2(t)=0.25+0.25\cos (0.5t) (\delta _2\ge 0.50)\), the neural network (48) is found to be unstable, and the state trajectories do not converge to the zero equilibrium point, as shown in Fig. 2. This example shows that the leakage delay has a significant effect on the dynamical behaviour of the switched NNs.
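The delay functions chosen in this example can be compared against the quoted overall bounds with a few lines of Python. This is only a sanity-check sketch: it assumes that \(\delta _1, \delta _2, h_1, h_2\) and \(\tau\) are to be read as upper bounds on the corresponding delay functions, and the time grid and dictionary names are illustrative choices.

```python
import numpy as np

# Sample the delay functions of Example 4.2 on a fine time grid (grid is an assumption).
t = np.linspace(0.0, 200.0, 200001)

delays = {
    "delta_1": 0.1 + 0.1 * np.cos(0.5 * t),
    "delta_2": 0.2 + 0.2 * np.cos(0.5 * t),
    "h_1": 0.6 + 0.6 * np.sin(0.5 * t),
    "h_2": 0.7 + 0.7 * np.sin(0.5 * t),
    "tau": 0.25 + 0.25 * np.cos(3.0 * t),
}

# Overall bounds quoted in the example.
stated_bounds = {"delta_1": 0.20, "delta_2": 0.40, "h_1": 1.20, "h_2": 1.50, "tau": 0.50}

# Largest sampled value of each delay and whether it respects the quoted bound.
sampled_max = {name: float(values.max()) for name, values in delays.items()}
within_bounds = {
    name: sampled_max[name] <= stated_bounds[name] + 1e-9 for name in delays
}

for name in delays:
    print(name, round(sampled_max[name], 4), stated_bounds[name], within_bounds[name])
```

The same check can be repeated for the larger leakage delays \(0.15+0.15\cos (0.5t)\) and \(0.25+0.25\cos (0.5t)\) by editing the dictionary entries.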

Remark 4.1

It is well known that leakage time delays are unavoidable and that their occurrence can cause instability or oscillation. Simulations with different time delays, especially different leakage delays, show that the oscillation of the dynamics increases as the delays are chosen larger, which affects the stability. Thus, time delays in the leakage term have a great impact on the stability of the considered switched system.
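The destabilizing effect of a leakage delay can already be seen in the scalar test equation \(\dot{x}(t) = -a\,x(t-\delta )\), which is known to be asymptotically stable exactly when \(0< a\delta < \pi /2\). The Python sketch below simulates this equation with a forward-Euler scheme. It is a toy illustration only: the scalar model, the function name `simulate_leakage`, the step size and the constant initial history are assumptions introduced here, and it is not the switched system (48).

```python
import numpy as np

def simulate_leakage(a, delta, t_end=100.0, dt=0.01, x0=1.0):
    """Forward-Euler simulation of x'(t) = -a * x(t - delta) with constant history x0."""
    lag = int(round(delta / dt))       # delay expressed in time steps
    steps = int(round(t_end / dt))
    x = np.empty(lag + steps + 1)
    x[: lag + 1] = x0                  # history on [-delta, 0]
    for k in range(lag, lag + steps):
        x[k + 1] = x[k] - dt * a * x[k - lag]
    return x[lag:]                     # samples for t in [0, t_end]

# a * delta = 0.5 < pi/2: the trajectory decays.
small_delay = simulate_leakage(a=1.0, delta=0.5)
# a * delta = 2.0 > pi/2: the trajectory oscillates with growing amplitude.
large_delay = simulate_leakage(a=1.0, delta=2.0)

tail = 1000  # last 10 time units
print(np.abs(small_delay[-tail:]).max(), np.abs(large_delay[-tail:]).max())
```

Increasing `delta` from 0.5 towards \(\pi /2\) makes the decay slower and the oscillation more pronounced, in line with the remark above.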

Example 4.3

Consider the switched Hopfield neural network without leakage and neutral terms as in (51) with the parameters \(A_k, B_k, C_k, D_k (k = 1, 2)\) as defined in Example 4.1. By choosing \(h_1(t) = 0.8 + 0.8 \sin (0.5t),h_2(t) = 1.2 + 1.2 \sin (0.5t), \tau (t) = 0.5 + 0.5 \cos (3t),\) we let \(h_{1L}=0.50,h_{1U}=1.10,h_1 = 1.60,h_{2L}=0.70,h_{2U}=1.70,h_2 = 2.40,\tau = 1.0\) and \(\mu _1 = 0.3,\mu _2 = 0.35,\tau _D=0.5\). Letting \(g_1(z) = g_2(z) = 0.5 (|z + 1|- |z- 1|)\), it can easily be verified that the condition on the neuron activation functions holds with \(K_m=\hbox {diag}\left\{ 0,0\right\},K_p=\hbox {diag}\left\{ 1,1\right\}\). Using the Matlab LMI toolbox, it is found that the LMIs in Corollary 3.2 are feasible. Thus, we can conclude that the model (51) is asymptotically stable. The simulation results for the above delay values also confirm the asymptotic stability of the model (51). The convergence of the SHNNs (51) from the initial state \([-0.4,0.8]^T\) is shown in Fig. 3.
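The claim that \(g(z) = 0.5 (|z + 1|- |z- 1|)\) fits \(K_m=0\), \(K_p=1\) can be spot-checked numerically. The Python sketch below assumes the slope condition takes the usual difference-quotient form \(K_m \le \frac{g(a)-g(b)}{a-b} \le K_p\) for \(a \ne b\); the sampling range, the seed and the variable names are illustrative choices.

```python
import numpy as np

def g(z):
    """Piecewise-linear activation used in Examples 4.2 and 4.3."""
    return 0.5 * (np.abs(z + 1.0) - np.abs(z - 1.0))

# Draw random pairs (a, b) and evaluate the difference quotient of g.
rng = np.random.default_rng(0)
a = rng.uniform(-5.0, 5.0, 100000)
b = rng.uniform(-5.0, 5.0, 100000)
mask = a != b
slopes = (g(a[mask]) - g(b[mask])) / (a[mask] - b[mask])

slope_min = float(slopes.min())
slope_max = float(slopes.max())
print(slope_min, slope_max)  # expected to lie within [0, 1] up to rounding error
```

A random spot check of this kind does not prove the bound, but here it agrees with the direct observation that \(g\) has slope 1 on \([-1,1]\) and slope 0 outside.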

Example 4.4

NNs originally embody the characteristics of real biological neurons that are connected or functionally related in a nervous system. However, NNs can represent not only biological neurons but also other practical systems, such as the quadruple-tank process shown in Fig. 5. The setup consists of four interacting tanks, two water pumps and two valves. The two process inputs are the voltages \(\upsilon _1\) and \(\upsilon _2\) supplied to the two pumps. Tank 1 and Tank 2 are placed below Tank 3 and Tank 4 to receive water flow by the action of gravity. Hence, as shown in Fig. 4, the quadruple-tank process can be expressed clearly using the neural network model; see, for instance, Samidurai and Manivannan (2016), Lee et al. (2013), Huang et al. (2012), Haoussi et al. (2011) and Johansson (2000), where the state-space equation of the quadruple-tank process is proposed and the state feedback controller is designed as follows:

where

Generally speaking, the differential equations represent the mass balances, with the transport delay \(h(t)=h_1(t) + h_2(t)\) appearing in the delayed equations. To obtain a more interesting control problem, transport delays can easily be added by delaying the inlet of water to the tanks, which is the approach examined in this paper. Moreover, in the present study the transport delays between the valves and the tanks are taken to be additive interval time-varying delays, a case that has not been considered in the previous literature. For simplicity, it is assumed that \(\tau _1=0,\ \tau _2=0\) and \(\tau _3=h(t)=h_1(t) + h_2(t)\) (with \(h_{1L}\le h_1(t)\le h_{1U}\) and \(h_{2L}\le h_2(t)\le h_{2U}\)). Here, the control input \(\bar{u}(t)\) represents the amount of water supplied by the pumps. It therefore has a threshold value owing to the limited area of the hose and the capacity of the pumps, and it is natural to consider \(\bar{u}(t)\) as a nonlinear function as follows:

The quadruple-tank process (57) can be rewritten in the form of system (54) with \(k=1\) as follows:

where \(D_1 = -\bar{A_0} - \bar{A_1}, \quad A_1 = \bar{B_0}\bar{K}, \quad B_1 = \bar{B_1}\bar{K}, \quad g(\cdot ) = \bar{g}(\cdot )\). In addition, \(K_m=\hbox {diag}\left\{ 0,0,0,0\right\},K_p=\hbox {diag}\left\{ 0.1,0.1,0.1,0.1\right\}\) with \(h_{1L}=0.60,h_{1U}=1.20,h_1=1.80,h_{2L}=0.80,h_{2U}=1.50,h_2=2.30,\mu _1=\mu _2=0.5\). Using the MATLAB LMI Control Toolbox to solve the LMIs in Corollary 3.3, we find that the quadruple-tank process system (58) is asymptotically stable. By choosing \(h_1(t) = 0.9 + 0.9 \sin (0.5t),h_2(t) = 1.15 + 1.15 \sin (0.5t),\mu _1=\mu _2=0.5\) and \(g_i(z_i)=0.1\left( \mid z_i+1 \mid - \mid z_i-1 \mid \right), \quad i=1,2,\dots, 4\), it can easily be verified that Assumption (H) holds. Figure 5 shows that the state trajectories of the system converge to the zero equilibrium point from the initial state \([-0.3,0.2,0.5,-0.4]\); hence the quadruple-tank process system (58) is asymptotically stable.

Schematic representation of the quadruple-tank process. Source: From Johansson (2000)

Conclusions

In this paper, new delay-interval-dependent stability criteria for SHNNs of neutral type with time delays have been investigated. To obtain the stability results, suitable L–K functionals under a weaker assumption on the neuron activation functions divided by the states are utilized to enlarge the feasible region of the proposed stability criteria. By using Jensen’s inequality and the WDII lemma, introducing some zero equations, and combining these with the RCC technique, a novel delay-interval-dependent stability criterion is derived in terms of linear matrix inequalities (LMIs). The feasibility and effectiveness of the developed methods have been shown by numerical simulation examples. Finally, the proposed approach is demonstrated by a numerical simulation of a benchmark problem with additive time-varying delays, which shows its feasibility on a realistic problem. Our results are therefore significant for the theory and design, as well as for the applications, of neutral-type SHNNs with delays in the leakage terms.

References

Aouiti C, M’hamdi MS, Touati A (2016) Pseudo almost automorphic solutions of recurrent neural networks with time-varying coefficients and mixed delays. Neural Process Lett 43:1–20

Ahn CK (2010) An \(H_\infty\) approach to stability analysis of switched Hopfield neural networks with time-delay. Nonlinear Dyn 60:703–711

Boyd S, Ghaoui LE, Feron E, Balakrishnan V (1994) Linear matrix inequalities in system and control theory. Society for Industrial and Applied Mathematics, Philadelphia

Balasubramaniam P, Vembarasan V, Rakkiyappan R (2012) Global robust asymptotic stability analysis of uncertain switched Hopfield neural networks with time delay in the leakage term. Neural Comput Appl 21:1593–1616

Cao J, Ho DWC (2005) A general framework for global asymptotic stability analysis of delayed neural networks based on LMI approach. Chaos Solitons Fractals 24:1317–1329

Cao J, Ho DWC, Huang X (2007) LMI-based criteria for global robust stability of bidirectional associative memory networks with time delay. Nonlinear Anal 66:1558–1572

Cao J, Feng G, Wang Y (2008) Multistability and multiperiodicity of delayed Cohen–Grossberg neural networks with a general class of activation functions. Phys D 237:1734–1749

Cao J, Rakkiyappan R, Maheswari K, Chandrasekar A (2016) Exponential \(H_\infty\) filtering analysis for discrete-time switched neural networks with random delays using sojourn probabilities. Sci China Tech Sci 59:387–402

Cao J, Alofi A, Al-Mazrooei A, Elaiw A (2013) Synchronization of switched interval networks and applications to chaotic neural networks. Abstr Appl Anal 2013:1–11

Cao J (2000) Global asymptotic stability of neural networks with transmission delays. Int J Syst Sci 31:1313–1316

Dharani S, Rakkiyappan R, Cao J (2015) New delay-dependent stability criteria for switched Hopfield neural networks of neutral type with additive time-varying delay components. Neurocomputing 151:827–834

Gao H, Chen T, Lam J (2008) A new delay system approach to network-based control. Automatica 44:39–52

Gopalsamy K (1992) Stability and oscillations in delay differential equations of population dynamics. Kluwer, Dordrecht

Gopalsamy K (2007) Leakage delays in BAM. J Math Anal Appl 325:1117–1132

Gu K (2000) An integral inequality in the stability problem of time delay systems. In: Proceedings of the 39th IEEE conference on decision control, pp 2805–2810

Haoussi FE, Tissir EH, Tadeo F, Hmamed A (2011) Delay-dependent stabilisation of systems with time-delayed state and control: application to a quadruple-tank process. Int J Syst Sci 42:41–49

Huang T, Li C, Duan S, Starzyk JA (2012) Robust exponential stability of uncertain delayed neural networks with stochastic perturbation and impulse effects. IEEE Trans Neural Netw Learn Syst 23:866–875

Hopfield J (1984) Neurons with graded response have collective computational properties like those of two-state neurons. Proc Natl Acad Sci 81:3088–3092

Hopfield J (1982) Neural networks and physical systems with emergent collective computational abilities. Proc Natl Acad Sci 79:2554–2558

Hetel L, Daafouz J, Iung C (2008) Equivalence between the Lyapunov–Krasovskii functionals approach for discrete delay systems and that of the stability conditions for switched systems. Nonlinear Anal Hybrid Syst 2:697–705

Johansson KH (2000) The quadruple-tank process: a multivariable laboratory process with an adjustable zero. IEEE Trans Control Syst Technol 8:456–465

Kwon OM, Park MJ, Park JH, Lee SM, Cha EJ (2014a) On stability analysis for neural networks with interval time-varying delays via some new augmented Lyapunov–Krasovskii functional. Commun Nonlinear Sci Numer Simulat 19:3184–3201

Kwon OM, Park JH, Lee SM, Cha EJ (2014b) New augmented Lyapunov–Krasovskii functional approach to stability analysis of neural networks with time-varying delays. Nonlinear Dyn 76:221–236

Kosko B (1992) Neural networks and fuzzy systems. Prentice Hall, New Delhi

Liberzon D, Morse AS (1999) Basic problems in stability and design of switched systems. IEEE Control Syst Mag 19:59–70

Lam J, Gao H, Wang C (2007) Stability analysis for continuous systems with two additive time-varying delay components. Syst Control Lett 56:16–24

Li X, Rakkiyappan R, Balasubramaniam P (2011) Existence and global stability analysis of equilibrium of fuzzy cellular neural networks with time delay in the leakage term under impulsive perturbations. J Franklin Inst 348:135–155

Lakshmanan S, Park JH, Lee TH, Jung HY, Rakkiyappan R (2013) Stability criteria for BAM neural networks with leakage delays and probabilistic time-varying delays. Appl Math Comput 219:9408–9423

Li L, Yang Y (2015) On sampled-data control for stabilization of genetic regulatory networks with leakage delays. Neurocomputing 149:1225–1231

Li J, Hu M, Guo L, Yang Y, Jin Y (2015) Stability of uncertain impulsive stochastic fuzzy neural networks with two additive time delays in the leakage term. Neural Comput Appl 26:417–427

Li N, Cao J (2013) Switched exponential state estimation and robust stability for interval neural networks with the average dwell time. IMA J Math Control Inf 32:257–276

Li N, Cao J, Hayat T (2014) Delay-decomposing approach to robust stability for switched interval networks with state-dependent switching. Cogn Neurodyn 8:313–326

Liu Y, Wang Z, Liu X (2006) Global exponential stability of generalized recurrent neural networks with discrete and distributed delays. Neural Netw 19:667–675

Lee TH, Park JH, Kwon OM, Lee SM (2013) Stochastic sampled-data control for state estimation of time-varying delayed neural networks. Neural Netw 46:99–108

Manivannan R, Samidurai R, Sriraman R (2016) An improved delay-partitioning approach to stability criteria for generalized neural networks with interval time-varying delays. Neural Comput Appl 27:1–17

Niculescu SI (2001) Delay effects on stability: a robust control approach. Springer, Berlin

Park MJ, Kwon OM, Park JH, Lee SM, Cha EJ (2015) Stability of time-delay systems via Wirtinger-based double integral inequality. Automatica 55:204–208

Park PG, Ko JW, Jeong C (2011) Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47:235–238

Rakkiyappan R, Sakthivel N, Cao J (2015a) Stochastic sampled-data control for synchronization of complex dynamical networks with control packet loss and additive time-varying delays. Neural Netw 66:46–63

Rakkiyappan R, Kaviarasan B, Rihan FA, Lakshmanan S (2015b) Synchronization of singular Markovian jumping complex networks with additive time-varying delays via pinning control. J Franklin Inst 352:3178–3195

Samidurai R, Manivannan R (2016) Delay-range-dependent passivity analysis for uncertain stochastic neural networks with discrete and distributed time-varying delays. Neurocomputing 185:191–201

Senthilraj S, Raja R, Jiang F, Zhu Q, Samidurai R (2016) New delay-interval-dependent stability analysis of neutral type BAM neural networks with successive time delay components. Neurocomputing 171:1265–1280

Samidurai R, Manivannan R (2015) Robust passivity analysis for stochastic impulsive neural networks with leakage and additive time-varying delay components. Appl Math Comput 268:743–762

Sakthivel R, Vadivel P, Mathiyalagan K, Arunkumar A, Sivachitra M (2015) Design of state estimator for bidirectional associative memory neural networks with leakage delays. Inf Sci 296:263–274

Shao H, Han QL (2011) New delay-dependent stability criteria for neural networks with two additive time-varying delay components. IEEE Trans Neural Netw 22:812–818

Song Y, Fan J, Fei M, Yang T (2008) Robust \(H_\infty\) control of discrete switched systems with time delay. Appl Math Comput 205:159–169

Xu Z (1995) Global convergence and asymptotic stability of asymmetric Hopfield neural networks. J Math Anal Appl 191:405–427

Yang H, Chu T, Zhang C (2006) Exponential stability of neural networks with variable delays via LMI approach. Chaos Solitons Fractals 30:133–139

Yang G (2014) New results on the stability of fuzzy cellular neural networks with time-varying leakage delays. Neural Comput Appl 25:1709–1715

Zong G, Liu J, Zhang Y, Hou L (2010) Delay-range-dependent exponential stability criteria and decay estimation for switched Hopfield neural networks of neutral type. Nonlinear Anal Hybrid Syst 4:583–592

Zhang H, Liu Z, Huang GB (2010) Novel delay-dependent robust stability analysis for switched neutral-type neural networks with time-varying delays via SC technique. IEEE Trans Syst Man Cybern Syst Part B Cybern 40:1480–1491

Zhao Y, Gao H, Mou S (2008) Asymptotic stability analysis of neural networks with successive time delay components. Neurocomputing 71:2848–2856

Zhang D, Yu L (2010) \(H_\infty\) filtering for linear neutral systems with mixed time-varying delays and nonlinear perturbations. J Franklin Inst 347:1374–1390

Zhou J, Li S, Yang Z (2009) Global exponential stability of Hopfield neural networks with distributed delays. Appl Math Model 33:1513–1520

Zong G, Xu S, Wu Y (2008) Robust \(H_\infty\) stabilization for uncertain switched impulsive control systems with state delay: an LMI approach. Nonlinear Anal Hybrid Syst 2:1287–1300

Zeng HB, He Y, Wu M, She J (2015) Free-matrix-based integral inequality for stability analysis of systems with time-varying delay. IEEE Trans Autom Control 60:2768–2772

Acknowledgments

The work of the first two authors was supported by the Department of Science and Technology, Science and Engineering Research Board (DST-SERB), Government of India, New Delhi, through research Project Grant No. SR/FTP/MS-041/2011.

Cite this article

Manivannan, R., Samidurai, R., Cao, J. et al. New delay-interval-dependent stability criteria for switched Hopfield neural networks of neutral type with successive time-varying delay components. Cogn Neurodyn 10, 543–562 (2016). https://doi.org/10.1007/s11571-016-9396-y

DOI: https://doi.org/10.1007/s11571-016-9396-y