Abstract

This paper provides sufficient conditions for the stability, asymptotic stability, uniform stability, boundedness and uniform boundedness of solutions of a certain class of second-order nonlinear vector differential equations, using the second method of Lyapunov. The basic tool is a suitable complete Lyapunov function. By constructing this function, we establish the properties mentioned above and thereby improve and complement some known results in the literature. Finally, examples are given to illustrate the main results.


1 Introduction

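In this paper, we consider the following second order nonlinear vector differential equation:

$$\ddot{X} + F(X, \dot{X})\dot{X} + H(X) = P(t, X, \dot{X}), \tag{1.1}$$

or its equivalent system:

$$\dot{X} = Y, \quad \dot{Y} = -F(X, Y)Y - H(X) + P(t, X, Y), \tag{1.2}$$
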
where \( X, Y : \mathbb{R}^+ \rightarrow \mathbb{R}^n \), \( \mathbb{R}^+ = [0, \infty) \); \( H: \mathbb{R}^n \rightarrow \mathbb{R}^n \); \( P: \mathbb{R}^+ \times \mathbb{R}^n \times \mathbb{R}^n \rightarrow \mathbb{R}^n \); F is an \( n \times n \) continuous, symmetric, positive definite matrix function depending on the arguments displayed explicitly; and the dots indicate differentiation with respect to the variable t. Both H and P are assumed to be continuous in their arguments, and the existence and uniqueness of solutions of Eq. (1.1) are assumed. The Jacobian matrix \(J_H(X)\) of H(X) is given by

$$J_H(X) = \left( \frac{\partial h_i}{\partial x_j} \right),$$

where \(i, j = 1, 2, \ldots, n\), and \((x_1, x_2, \ldots, x_n)\) and \((h_1, h_2, \ldots, h_n)\) are the components of X and H, respectively. We also assume throughout this paper that the Jacobian matrix \(J_H(X)\) exists and is continuous. The symbol \( \langle X, Y \rangle = \sum_{i=1}^{n} x_i y_i \) denotes the usual scalar product of two vectors \(X, Y \in \mathbb{R}^n\).

The qualitative behaviour of solutions of second order scalar linear and nonlinear differential equations has been studied by many authors. This is because of their applications in fields of science and technology such as biology, physics, chemistry, control theory, economics, communication networks, financial mathematics, medicine and mechanics. Among the methods available for studying the qualitative behaviour of solutions of differential equations, the second method of Lyapunov has proven to be effective. This method involves the construction of a suitable function, known as a Lyapunov function. Unfortunately, constructing a good Lyapunov function, especially for nonlinear differential equations, remains a difficult task. For further studies on the qualitative behaviour of solutions of differential equations, the interested reader may consult the works of Abou-El-Ela and Sadek [1, 2], Adeyanju [5], Adeyanju and Adams [6], Ademola [4], Ademola et al. [3], Ahmad and Rama Mohana Rao [7], Alaba and Ogundare [8], Awrejcewicz [9], Baliki et al. [10], Cartwright and Littlewood [13], Chicone [14], Ezeilo [15,16,17], Grigoryan [18], Hale [19], Jordan and Smith [20], Loud [21], Ogundare et al. [22], Omeike et al. [23,24,25], Reissig et al. [27], Sadek [28], Smith [29], Tejumola [30,31,32], Tunc [35, 36], Tunc and Mohammed [33, 38, 39], Tunc and Tunc [40], Yoshizawa [41] and Zainab [42].

Ezeilo [16] used the direct method of Lyapunov to examine the convergence of solutions of a second order differential equation similar to (1.1), with \(F(X,\dot{X}) \equiv C\), where C is a real constant \( n \times n \) matrix, and with the nonlinear term written as G(X) in place of H(X). His result extended the convergence result obtained by Loud [21] for the second order scalar differential equation

$$\ddot{x} + c\dot{x} + g(x) = e(t),$$
where c is a positive constant.

Also worth mentioning is the work of Tejumola [31], who considered a certain second order matrix differential equation of the form:

Here A is a constant \( n \times n\) symmetric matrix, and X, H(X) and P are \( n \times n\) continuous matrices. He discussed three properties of this equation: the stability of the trivial solution when \(H(0) = 0\) and \(P \equiv 0\), the ultimate boundedness of all solutions, and the existence of periodic solutions. Adeyanju [5] proved the existence of a limiting regime in the sense of Demidovic for a certain class of second order vector differential equations similar to Eq. (1.1), with \(F(X,\dot{X})\) replaced by an \(n \times n\) symmetric, positive definite constant matrix A.

Earlier, Omeike et al. [23] established some conditions for the boundedness of solutions of Eq. (1.1) by using an incomplete Lyapunov function supplemented with a signum function.

The above results of Omeike et al. [23], together with the other papers mentioned above, motivate this work. Our goal is to use a complete Lyapunov function instead, under simpler conditions. With it, we study the asymptotic stability of the trivial solution, which Omeike et al. [23] did not consider, and the boundedness of all solutions of Eq. (1.1), or system (1.2).

2 Preliminary results

The following lemmas are used in the proofs of the three main theorems of this paper.

Lemma 2.1

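Let A be a real symmetric \(n \times n\) matrix and

$$\delta_a \le \lambda_i(A) \le \Delta_a \quad (i = 1, 2, \ldots, n),$$

where \(\delta_a\) and \( \Delta_a \) are, respectively, the least and greatest eigenvalues of A. Then, for any \(X \in \mathbb{R}^n\),

$$\delta_a \|X\|^2 \le \langle AX, X \rangle \le \Delta_a \|X\|^2.$$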
Proof
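The following is a numerical illustration of Lemma 2.1, not a proof. For a \(2 \times 2\) symmetric matrix the extreme eigenvalues have a closed form, so random vectors can be checked against both bounds. The matrix entries are hypothetical and are not taken from the paper.

```python
import math
import random

def sym_eigs_2x2(a, b, c):
    """Return (smallest, largest) eigenvalue of the symmetric matrix [[a, b], [b, c]]."""
    mean = 0.5 * (a + c)
    radius = math.hypot(0.5 * (a - c), b)
    return mean - radius, mean + radius

def quad_form(a, b, c, x):
    """Return <AX, X> for A = [[a, b], [b, c]] and X = (x1, x2)."""
    x1, x2 = x
    return a * x1 * x1 + 2.0 * b * x1 * x2 + c * x2 * x2

# Hypothetical symmetric matrix A = [[4, 1.5], [1.5, 2]] (not from the paper).
a, b, c = 4.0, 1.5, 2.0
delta_a, Delta_a = sym_eigs_2x2(a, b, c)

random.seed(0)
violations = 0
for _ in range(10_000):
    x = (random.uniform(-10.0, 10.0), random.uniform(-10.0, 10.0))
    norm_sq = x[0] ** 2 + x[1] ** 2
    q = quad_form(a, b, c, x)
    # Lemma 2.1: delta_a ||X||^2 <= <AX, X> <= Delta_a ||X||^2 (small slack for rounding)
    if not (delta_a * norm_sq - 1e-9 <= q <= Delta_a * norm_sq + 1e-9):
        violations += 1

print(f"delta_a = {delta_a:.4f}, Delta_a = {Delta_a:.4f}, violations = {violations}")
```

For this matrix the script prints `delta_a = 1.1972, Delta_a = 4.8028, violations = 0`.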

Lemma 2.2

Let H(X) be a continuously differentiable vector function with \(H(0) = 0\) and symmetric Jacobian matrix \(J_H(X)\). Then:

(i) $$\langle H(X), X \rangle = \int_0^1 X^T J_H(\sigma X) X \, d\sigma;$$

(ii) along any solution (X, Y) of system (1.2), $$\frac{d}{dt} \int_0^1 \langle H(\sigma X), X \rangle \, d\sigma = \langle H(X), Y \rangle.$$

Proof
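The identities in Lemma 2.2 can be checked numerically for a concrete field; this is an illustration, not a proof. The sketch assumes a hypothetical gradient field \(H = \nabla G\) with \(G(X) = x_1^2 - \cos x_1 + x_1x_2 + 1.5x_2^2\), so that \(H(0) = 0\) and \(J_H\) is symmetric. It evaluates the integrals with the composite Simpson rule. It then checks (ii) at a single instant by a central difference along a path with \(\dot{X} = Y\).

```python
import math

def H(x):
    """Hypothetical H = grad G, G(X) = x1^2 - cos(x1) + x1*x2 + 1.5*x2^2; H(0) = 0."""
    x1, x2 = x
    return (2.0 * x1 + math.sin(x1) + x2, x1 + 3.0 * x2)

def J_H(x):
    """Jacobian of H; symmetric because H is a gradient."""
    x1, _ = x
    return ((2.0 + math.cos(x1), 1.0), (1.0, 3.0))

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def simpson(f, n=200):
    """Composite Simpson rule for the integral of f over [0, 1] (n even)."""
    h = 1.0 / n
    total = f(0.0) + f(1.0)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(k * h)
    return total * h / 3.0

def Phi(x):
    """Phi(X) = int_0^1 <H(sigma X), X> d sigma."""
    return simpson(lambda s: dot(H((s * x[0], s * x[1])), x))

X = (0.8, -1.3)
Y = (0.4, 0.9)

# Part (i): <H(X), X> versus int_0^1 X^T J_H(sigma X) X d sigma.
def xJx(s):
    J = J_H((s * X[0], s * X[1]))
    JX = (J[0][0] * X[0] + J[0][1] * X[1], J[1][0] * X[0] + J[1][1] * X[1])
    return dot(X, JX)

lhs_i = dot(H(X), X)
rhs_i = simpson(xJx)

# Part (ii): along X(t) = X + t*Y (so dX/dt = Y), d/dt Phi(X(t)) at t = 0 versus <H(X), Y>.
step = 1e-5
X_plus = (X[0] + step * Y[0], X[1] + step * Y[1])
X_minus = (X[0] - step * Y[0], X[1] - step * Y[1])
lhs_ii = (Phi(X_plus) - Phi(X_minus)) / (2 * step)
rhs_ii = dot(H(X), Y)

print(lhs_i, rhs_i)
print(lhs_ii, rhs_ii)
```

Any other smooth gradient field with \(H(0) = 0\) can be substituted. Without a symmetric Jacobian, part (ii) generally fails.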

Lemma 2.3

[19] Suppose \(f(0) = 0\). Let V be a continuous functional defined on \(C_H = C\) with \(V(0) = 0\). Let u(s) be a non-negative continuous function for \(0 \le s < \infty\), with \(u(0) = 0\) and \(u(s) \rightarrow \infty\) as \(s \rightarrow \infty\). If, for all \(\varphi \in C\), \(u(|\varphi(0)|) \le V(\varphi)\), \(V(\varphi) \ge 0\) and \(\dot{V}(\varphi) \le 0\), then the zero solution of \(\dot{x} = f(x_t)\) is stable.

If we define \( Z = \{ \varphi \in C_H : \dot{V}(\varphi) = 0\}\), then the zero solution of \(\dot{x} = f(x_t)\) is asymptotically stable, provided that the largest invariant set in Z is \(Q = \{0\}\).

Definition 2.4

[12, 37] A continuous function \(W: \mathbb{R}^+ \rightarrow \mathbb{R}^+\) with \(W(0) = 0\), \(W(s) > 0\) if \(s > 0\), and W strictly increasing is a wedge.

Definition 2.5

[12, 37] Let D be an open set in \(\mathbb {R}^n\) with \(0 \in D.\) A function \( V: [0, \infty ) \times D \rightarrow [0, \infty )\) is called positive definite if \(V(t, 0) = 0\) and if there is a wedge \(W_1\) with \(V(t, x) \ge W_1(|x|),\) and is called a decrescent function if there is a wedge \(W_2\) with \(V(t,x) \le W_2(|x|).\)

Theorem 2.6

[12, 37] If there is a Lyapunov function V for Eq. (1.1) and wedges \(W_1\), \(W_2\) satisfying

(i) \(W_1(|\phi(0)|) \le V(t, \phi) \le W_2(\|\phi\|)\), where \(W_1(r)\) and \(W_2(r)\) are wedges, and

(ii) \(V'(t, \phi) \le 0\),

then the zero solution of (1.1) is uniformly stable.

Theorem 2.7

[37, 42] Suppose that there exists a continuous Lyapunov function \(V(t, \phi)\), defined for all \(t \in \mathbb{R}^+\) and \(\phi \in S^*\), which satisfies the following conditions:

(i) \(a(|\phi(0)|) \le V(t, \phi) \le b_1(|\phi(0)|) + b_2(\|\phi\|)\), where \(a(r), b_1(r), b_2(r) \in CI\) (CI denotes the set of continuous increasing functions) are positive for \(r > H\), and \(a(r) - b_2(r) \rightarrow \infty\) as \(r \rightarrow \infty\);

(ii) \(V'(t, \phi) \le 0\).

Then the solutions of (1.1) are uniformly bounded.

3 Basic assumptions

In this section, we give the basic assumptions for the main results.

Assumptions

Suppose the following assumptions hold:

(T1) \(J_H(X)\) and F(X, Y) are symmetric and positive definite, and their eigenvalues \(\lambda_i(J_H(X))\) and \(\lambda_i(F(X,Y))\) satisfy, respectively,

$$\delta_h \le \lambda_i(J_H(X)) \le \Delta_h \quad \text{for all } X \in \mathbb{R}^n, \tag{3.1}$$

$$\alpha - \epsilon \le \lambda_i(F(X, Y)) \le \alpha \quad \text{for all } X, Y \in \mathbb{R}^n, \tag{3.2}$$

where \(\delta_h\), \(\alpha\), \(\epsilon\) and \(\Delta_h\) are positive constants. Moreover, \(H(0) = 0\), \(H(X) \ne 0\) whenever \(X \ne 0\), and the constant \(\delta\) satisfies

$$\delta \ge \frac{\alpha + \epsilon}{\alpha - \epsilon} > 1.$$

(T2) There exist a finite positive constant \(K_2\) and a continuous function \(\theta(t)\) such that the vector P(t, X, Y) satisfies

$$\|P(t, X, Y)\| \le \theta(t)\{1 + (\|X\| + \|Y\|)\}, \tag{3.3}$$

where \(\int_0^t \theta(s)\, ds \le K_2 < \infty\) for all \(t \ge 0\).

4 Main results

Here are the main results of the paper. Let

then we have the following theorem.

Theorem 4.1

Suppose that assumption (T1) holds and \(P(t, X, Y) \equiv 0\). Then the zero solution of Eq. (1.1), or system (1.2), is uniformly stable and asymptotically stable.

Proof: We begin by defining a continuously differentiable function \(V(t) = V(X(t),Y(t))\) by

where \(\alpha\) and \(\delta\) are as defined above. Clearly, \(V(0,0) = 0\). By Lemmas 2.1 and 2.2, we have

where \(\delta _1 = \min \{\delta , \delta _h ( \delta + 1)\}\).
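The constants \(\delta_1\) and \(\delta_2\) are consistent with a Lyapunov function of the form below. This form is our assumption: it is inferred from the constants, not quoted from the definition of V.

$$V(X, Y) = 2(\delta + 1)\int_0^1 \langle H(\sigma X), X \rangle \, d\sigma + \delta \|Y\|^2 + \|Y + \alpha X\|^2.$$

Lemma 2.2(i) applied at \(\sigma X\), together with Lemma 2.1 and (3.1), gives \(\delta_h \sigma \|X\|^2 \le \langle H(\sigma X), X \rangle \le \Delta_h \sigma \|X\|^2\) for \(0 < \sigma \le 1\). Hence

$$\begin{aligned} \tfrac{1}{2}\delta_h \|X\|^2 \le \int_0^1 \langle H(\sigma X), X \rangle \, d\sigma \le \tfrac{1}{2}\Delta_h \|X\|^2. \end{aligned}$$

Combining this with \(0 \le \|Y + \alpha X\|^2 \le 2\|Y\|^2 + 2\alpha^2\|X\|^2\) gives

$$\begin{aligned} V &\ge \delta_h(\delta + 1)\|X\|^2 + \delta\|Y\|^2 \ge \delta_1\left(\|X\|^2 + \|Y\|^2\right), \\ V &\le \big(\Delta_h(\delta + 1) + 2\alpha^2\big)\|X\|^2 + (\delta + 2)\|Y\|^2 \le \delta_2\left(\|X\|^2 + \|Y\|^2\right), \end{aligned}$$

with \(\delta_1 = \min\{\delta, \delta_h(\delta + 1)\}\) and \(\delta_2 = \max\{\Delta_h(\delta + 1) + 2\alpha^2, \delta + 2\}\).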

Similarly, using Lemma 2.1 and Lemma 2.2, the following is evident

where \(\delta_2 = \max\{\Delta_h(\delta + 1) + 2\alpha^2, \ \delta + 2\}\). It follows that

The time derivative of the function V(t) along the solution path of the equation being studied is given by

where I is an \(n \times n\) identity matrix. The above derivative can be written as

where

and

But

Also from Lemma 2.2, we have

Hence,

where \(\delta _3 = \frac{1}{2}\min \{\alpha \delta _h; (\delta + 1)( \alpha - \epsilon ) - 2 \alpha \}.\)
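The second entry of \(\delta_3\) is where the condition on \(\delta\) in (T1) enters. Since \(\alpha - \epsilon > 0\), a one-line rearrangement gives

$$(\delta + 1)(\alpha - \epsilon) - 2\alpha = \delta(\alpha - \epsilon) - (\alpha + \epsilon) \ge 0 \iff \delta \ge \frac{\alpha + \epsilon}{\alpha - \epsilon}.$$

If equality holds in (T1), this entry, and hence \(\delta_3\), equals zero.

For orientation, assume (as an assumption, not a quotation of the text) that \(V = 2(\delta+1)\int_0^1 \langle H(\sigma X), X\rangle d\sigma + \delta\|Y\|^2 + \|Y + \alpha X\|^2\). A direct computation along system (1.2) with \(P \equiv 0\), using Lemma 2.2(ii), then gives

$$\dot{V} = -2\alpha\langle H(X), X\rangle - 2(\delta + 1)\langle F(X,Y)Y, Y\rangle + 2\alpha\|Y\|^2 - 2\alpha\langle (F(X,Y) - \alpha I)Y, X\rangle.$$

The terms in \(\langle H(X), Y\rangle\) cancel because \(2(\delta + 1) - 2\delta - 2 = 0\).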

Similarly,

let \(K_1^2 = \frac{1}{2} \min \Big ( \frac{1}{\delta _h}, \frac{(\alpha - \epsilon )(\delta + 1)}{\alpha \epsilon ^2} \Big ), \) then

Thus,

The above inequality shows that the derivative of the Lyapunov function V(t) with respect to t along solutions of Eq. (1.1) is negative semidefinite. Thus, by Theorem 2.6, the zero solution of Eq. (1.1) is stable and, moreover, uniformly stable.
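The constants in this proof are closed-form expressions in \(\alpha\), \(\epsilon\), \(\delta_h\), \(\Delta_h\) and \(\delta\). The helper below is a sketch; its name and parameter values are hypothetical. It transcribes the formulas for \(\delta_1\), \(\delta_2\), \(\delta_3\) and \(K_1\) as written in the text and checks the parameter restrictions of (T1).

```python
import math

def lyapunov_constants(alpha, eps, delta_h, Delta_h, delta):
    """Evaluate the constants defined in the proof of Theorem 4.1.

    Formulas transcribed from the text:
      delta_1 = min{delta, delta_h (delta + 1)}
      delta_2 = max{Delta_h (delta + 1) + 2 alpha^2, delta + 2}
      delta_3 = (1/2) min{alpha delta_h, (delta + 1)(alpha - eps) - 2 alpha}
      K_1^2   = (1/2) min{1 / delta_h, (alpha - eps)(delta + 1) / (alpha eps^2)}
    """
    if not (0 < eps < alpha):
        raise ValueError("(T1) requires 0 < eps < alpha")
    threshold = (alpha + eps) / (alpha - eps)
    if delta < threshold:
        raise ValueError(f"(T1) requires delta >= {threshold}")
    d1 = min(delta, delta_h * (delta + 1))
    d2 = max(Delta_h * (delta + 1) + 2 * alpha ** 2, delta + 2)
    d3 = 0.5 * min(alpha * delta_h, (delta + 1) * (alpha - eps) - 2 * alpha)
    k1 = math.sqrt(0.5 * min(1 / delta_h, (alpha - eps) * (delta + 1) / (alpha * eps ** 2)))
    return {"threshold": threshold, "delta_1": d1, "delta_2": d2, "delta_3": d3, "K_1": k1}

# delta_h = 1 and Delta_h = 5 match the Jacobian bounds reported in Example 5.1;
# alpha, eps and delta are illustrative choices, not values from the paper.
print(lyapunov_constants(alpha=3.0, eps=1.0, delta_h=1.0, Delta_h=5.0, delta=3.0))
```

With these values the threshold is 2, so \(\delta = 3\) gives \(\delta_3 = 1 > 0\). Taking \(\delta = 2\) instead gives \(\delta_3 = 0\).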

Next, we show that the zero solution is asymptotically stable. Define

Applying LaSalle's invariance principle, it follows that \((X,Y) \in W\) implies \(X = 0 = Y\). Hence the largest invariant set contained in W is \(\{(0,0)\}\), and we conclude by Lemma 2.3 that the zero solution of Eq. (1.1) is asymptotically stable. This completes the proof of Theorem 4.1.
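Theorem 4.1 can be illustrated numerically. The sketch below integrates system (1.2) with \(P \equiv 0\) for \(n = 2\) using the classical fourth-order Runge-Kutta scheme. F and H are hypothetical choices that satisfy (T1) with \(\alpha = 3\), \(\epsilon = 1\), \(\delta_h = 1\), \(\Delta_h = 5\); they are not the matrices of Example 5.1. The sketch also evaluates the candidate function \(V = 2(\delta+1)\int_0^1 \langle H(\sigma X), X\rangle d\sigma + \delta\|Y\|^2 + \|Y + \alpha X\|^2\) with \(\delta = 3\). This candidate is an assumption, consistent with \(\delta_1\) and \(\delta_2\). The script records whether V is non-increasing along the computed trajectory.

```python
import math

# Hypothetical constants: alpha = 3, eps = 1 in (3.2); delta = 3 > (alpha + eps)/(alpha - eps) = 2.
ALPHA, EPS, DELTA = 3.0, 1.0, 3.0

def H(x):
    """Hypothetical H with J_H(X) = diag(3 + 2 cos x1, 3 + 2 cos x2), eigenvalues in [1, 5]."""
    return [3.0 * xi + 2.0 * math.sin(xi) for xi in x]

def F(x, y):
    """Hypothetical symmetric F; eigenvalues ALPHA - EPS/2 +/- (EPS/2) sin(x1 y2) lie in [ALPHA - EPS, ALPHA]."""
    a = ALPHA - 0.5 * EPS
    b = 0.5 * EPS * math.sin(x[0] * y[1])
    return [[a, b], [b, a]]

def rhs(z):
    """System (1.2) with P = 0 for z = (x1, x2, y1, y2): X' = Y, Y' = -F(X, Y)Y - H(X)."""
    x, y = z[:2], z[2:]
    f, h = F(x, y), H(x)
    dy = [-(f[i][0] * y[0] + f[i][1] * y[1]) - h[i] for i in range(2)]
    return y + dy

def rk4_step(z, dt):
    """One classical fourth-order Runge-Kutta step."""
    k1 = rhs(z)
    k2 = rhs([zi + dt / 2 * ki for zi, ki in zip(z, k1)])
    k3 = rhs([zi + dt / 2 * ki for zi, ki in zip(z, k2)])
    k4 = rhs([zi + dt * ki for zi, ki in zip(z, k3)])
    return [zi + dt / 6 * (p + 2 * q + 2 * r + s) for zi, p, q, r, s in zip(z, k1, k2, k3, k4)]

def V(z):
    """Candidate V = 2(delta+1) int_0^1 <H(sX), X> ds + delta ||Y||^2 + ||Y + alpha X||^2."""
    x, y = z[:2], z[2:]
    # For this H the integral equals sum(1.5 xi^2 + 4 sin^2(xi/2)); the sin^2 form avoids cancellation near 0.
    pot = sum(1.5 * xi * xi + 4.0 * math.sin(0.5 * xi) ** 2 for xi in x)
    return (2.0 * (DELTA + 1.0) * pot
            + DELTA * sum(yi * yi for yi in y)
            + sum((yi + ALPHA * xi) ** 2 for xi, yi in zip(x, y)))

z, dt = [1.0, -0.5, 0.5, 1.0], 0.01
v_prev, monotone = V(z), True
for _ in range(2000):  # integrate up to t = 20
    z = rk4_step(z, dt)
    v_now = V(z)
    monotone = monotone and v_now <= v_prev + 1e-12
    v_prev = v_now
final_norm = math.sqrt(sum(zi * zi for zi in z))
print(monotone, final_norm)
```

A fixed-step integrator was chosen to keep the sketch dependency-free. Any standard ODE solver would serve equally well.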

Our next theorem concerns the boundedness of solutions of Eq. (1.1). Let

Theorem 4.2

If assumptions (T1) and (T2) hold, then there exists a positive constant D such that all solutions of Eq. (1.1) satisfy the inequalities

as \(t \rightarrow + \infty \).

Proof: Since now \(P(t,X,Y) \not\equiv 0\), the time derivative of the Lyapunov function V(t) used in the proof of Theorem 4.1 becomes

where \(\delta_4 = \max\{\alpha, \delta + 1\}.\)

Applying the inequalities,

and (4.2) to \(\dot{V}(t)\) above, we obtain

where \( \delta _5 = 2\delta _4\) and \( \delta _6 = 6\delta _1^{-1} \delta _4.\)

Integrating both sides of (4.4) from 0 to t \((t \ge 0)\) yields

Setting \(\delta_7 = V(0) + \delta_5 K_2\), we get

Applying the Gronwall-Bellman inequality [26] to the above inequality gives

From inequality (4.5) and the left-hand side of (4.2), we deduce that

It then follows from (4.6) that,

This completes the proof of Theorem 4.2 and the boundedness of solutions of Eq. (1.1) or system (1.2) is established.
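A similar numerical sketch illustrates Theorem 4.2 with forcing. It assumes the hypothetical forcing \(P(t,X,Y) = \theta(t)(\cos t, \sin y_1)\) with \(\theta(t) = 1/(1+t)^2\). Since \(|\sin y_1| \le |y_1|\), we have \(\|P\| \le \theta(t)(1 + \|Y\|)\), so (3.3) holds. Also \(\int_0^t \theta(s)\,ds < 1\), so (T2) holds with \(K_2 = 1\). F and H are the same hypothetical choices satisfying (T1) with \(\alpha = 3\), \(\epsilon = 1\). The script tracks \(\|X\| + \|Y\|\) over \([0, 40]\).

```python
import math

ALPHA, EPS = 3.0, 1.0  # hypothetical constants in (3.2)

def theta(t):
    """Hypothetical theta with int_0^t theta(s) ds = 1 - 1/(1 + t) < 1."""
    return 1.0 / (1.0 + t) ** 2

def P(t, x, y):
    """Hypothetical forcing: ||P|| <= theta(t)(1 + ||Y||) <= theta(t)(1 + ||X|| + ||Y||), as in (3.3)."""
    return [theta(t) * math.cos(t), theta(t) * math.sin(y[0])]

def H(x):
    """Hypothetical H with Jacobian eigenvalues in [1, 5]."""
    return [3.0 * xi + 2.0 * math.sin(xi) for xi in x]

def F(x, y):
    """Hypothetical symmetric F with eigenvalues in [ALPHA - EPS, ALPHA]."""
    a = ALPHA - 0.5 * EPS
    b = 0.5 * EPS * math.sin(x[0] * y[1])
    return [[a, b], [b, a]]

def rhs(t, z):
    """System (1.2) for z = (x1, x2, y1, y2): X' = Y, Y' = -F(X, Y)Y - H(X) + P(t, X, Y)."""
    x, y = z[:2], z[2:]
    f, h, p = F(x, y), H(x), P(t, x, y)
    dy = [-(f[i][0] * y[0] + f[i][1] * y[1]) - h[i] + p[i] for i in range(2)]
    return y + dy

def rk4_step(t, z, dt):
    """One classical fourth-order Runge-Kutta step for the non-autonomous system."""
    k1 = rhs(t, z)
    k2 = rhs(t + dt / 2, [zi + dt / 2 * ki for zi, ki in zip(z, k1)])
    k3 = rhs(t + dt / 2, [zi + dt / 2 * ki for zi, ki in zip(z, k2)])
    k4 = rhs(t + dt, [zi + dt * ki for zi, ki in zip(z, k3)])
    return [zi + dt / 6 * (p + 2 * q + 2 * r + s) for zi, p, q, r, s in zip(z, k1, k2, k3, k4)]

def size(z):
    """||X|| + ||Y|| for z = (X, Y)."""
    return math.hypot(z[0], z[1]) + math.hypot(z[2], z[3])

t, dt, z = 0.0, 0.01, [1.0, -0.5, 0.5, 1.0]
max_size = size(z)
for _ in range(4000):  # integrate up to t = 40
    z = rk4_step(t, z, dt)
    t += dt
    max_size = max(max_size, size(z))
final_size = size(z)
print(max_size, final_size)
```

A finite simulation can only be consistent with the theorem's bound; it cannot establish it.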

Corollary 4.3

Under the assumptions of Theorem 4.2, all the solutions of equation (1.1) are uniformly bounded.

Theorem 4.4

Suppose the assumptions of Theorem 4.2 hold, with the modification that \(\theta \in L^1[0, \infty)\), where \(L^1[0, \infty)\) is the space of Lebesgue integrable functions on \([0, \infty)\). Then there exists a positive constant \(D_*\) such that all solutions of Eq. (1.1) ultimately satisfy the inequalities

as \(t \rightarrow + \infty \).

Proof

From the proof of Theorem 4.2, we have

But,

On applying this inequality and the left-hand side of (4.2), we have

Integrating both sides of the above inequality from 0 to t \((t > 0)\) and letting \( \delta_8 = 2^{\frac{3}{2}} \delta_4 \delta_1^{-\frac{1}{2}}\) gives

where \(D_2\) is a positive constant. From inequality (4.8) and the left-hand side of (4.2), it is clear that

It follows from (4.9) that,

This ends the proof of the theorem. \(\square \)
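The constant \(\delta_8\) is consistent with the following elementary estimate. We record it as a plausible reading of the inequality used in the proof above, not as a quotation of it. The Cauchy-Schwarz inequality in \(\mathbb{R}^2\), combined with the lower bound \(V \ge \delta_1(\|X\|^2 + \|Y\|^2)\) presumably expressed by the left-hand side of (4.2), gives

$$\begin{aligned} \|X\| + \|Y\| \le \sqrt{2}\left(\|X\|^2 + \|Y\|^2\right)^{\frac{1}{2}} \le \sqrt{2}\,\delta_1^{-\frac{1}{2}}\, V^{\frac{1}{2}}. \end{aligned}$$

Moreover, \(\delta_5 \cdot \sqrt{2}\,\delta_1^{-\frac{1}{2}} = 2\delta_4 \cdot 2^{\frac{1}{2}}\delta_1^{-\frac{1}{2}} = 2^{\frac{3}{2}}\delta_4\delta_1^{-\frac{1}{2}} = \delta_8\).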

Remark 4.5

Theorem 4.4 was established without using the Gronwall-Bellman inequality.

Remark 4.6

We have established the boundedness results for Eq. (1.1), or system (1.2), without the signum function that Omeike et al. [23] used to obtain boundedness results for the same equation.

5 Examples

Example 5.1

We consider the following special cases of system (1.2) for \(n = 2\) when \(P(t, X, Y) \equiv 0\) and when \(P(t, X, Y) \ne 0.\)

Let

After some calculations, we obtain the following eigenvalues of the matrix F(X, Y):

and

so that,

The Jacobian matrix of vector H(X) is given by

Then, we obtain the bounds for the eigenvalues of this matrix as \(1 \le \lambda_i(J_H(X)) \le 5\) \((i = 1, 2)\).

In addition, let

Then,

where

Therefore, all the conditions of Theorem 4.1 and Theorem 4.2 are satisfied.
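Eigenvalue bounds of the kind used in Example 5.1 can be sanity-checked numerically. The example's F and H are not reproduced here, so the sketch uses two hypothetical choices:

- \(H(X) = (3x_1 + 2\sin x_1, 3x_2 + 2\sin x_2)\), whose Jacobian \(\mathrm{diag}(3 + 2\cos x_1, 3 + 2\cos x_2)\) has eigenvalues in \([1, 5]\);
- a symmetric F with off-diagonal entry \((\epsilon/2)\sin(x_1 y_2)\).

A grid scan of the closed-form \(2 \times 2\) eigenvalues gives empirical bounds to compare with (3.1) and (3.2). A grid samples only finitely many points, so it supports the bounds but does not prove them.

```python
import itertools
import math

ALPHA, EPS = 3.0, 1.0  # hypothetical constants for (3.2)

def sym_eigs_2x2(a, b, c):
    """Return (smallest, largest) eigenvalue of the symmetric matrix [[a, b], [b, c]]."""
    mean = 0.5 * (a + c)
    radius = math.hypot(0.5 * (a - c), b)
    return mean - radius, mean + radius

def jacobian_H(x1, x2):
    """Entries (a, b, c) of J_H for the hypothetical H(X) = (3 x1 + 2 sin x1, 3 x2 + 2 sin x2)."""
    return 3.0 + 2.0 * math.cos(x1), 0.0, 3.0 + 2.0 * math.cos(x2)

def F_entries(x1, y2):
    """Entries (a, b, c) of a hypothetical symmetric F(X, Y); it depends only on x1 and y2."""
    a = ALPHA - 0.5 * EPS
    return a, 0.5 * EPS * math.sin(x1 * y2), a

grid = [-6.0 + 0.25 * k for k in range(49)]  # sample points in [-6, 6]
pairs = list(itertools.product(grid, repeat=2))

lam_J = [e for x1, x2 in pairs for e in sym_eigs_2x2(*jacobian_H(x1, x2))]
lam_F = [e for x1, y2 in pairs for e in sym_eigs_2x2(*F_entries(x1, y2))]

print(f"J_H eigenvalues in [{min(lam_J):.4f}, {max(lam_J):.4f}]")
print(f"F eigenvalues in [{min(lam_F):.4f}, {max(lam_F):.4f}]")
```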

Example 5.2

Suppose that, in Example 5.1 above, we have

Then,

Again, all the conditions of Theorem 4.4 are satisfied.

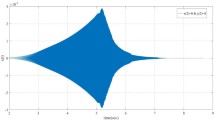

Figure 1 shows that the trivial solution of the constructed example is stable, asymptotically stable and uniformly stable when \(P(t, X, Y) \equiv 0.\)

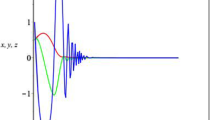

Figure 2 shows the boundedness of solutions of the example constructed when \(P(t, X, Y) \ne 0.\)

6 Conclusion

In this paper, a certain second order vector differential equation is considered. By constructing a new complete Lyapunov function, we established results on the stability, asymptotic stability, uniform stability, boundedness and uniform boundedness of solutions of this equation. The results complement and improve some established results in the literature. Examples are given to illustrate the main results.

References

Abou-El-Ela, A.M.A., Sadek, A.I.: On the stability of solutions for certain second-order stochastic delay differential equations. Ann. Differen. Equat. 6, 131–141 (1990)

Abou-El-Ela, A.M.A., Sadek, A.I., Mahmoud, A.M.: On the stability of solutions for certain second-order stochastic delay differential equations. Differ. Eqs. Control Process. 2, 1–13 (2015)

Ademola, A.T., Ogundare, B.S., Ogundiran, M.O., Adesina, O.A.: Periodicity, stability, and boundedness of solutions to certain second order delay differential equations. Int. J. Differ. Eqs. 2016, Article ID 2843709, 1–10 (2016)

Ademola, A.T.: Boundedness and stability of solutions to certain second order differential equations. Differ. Eqs. Control Process. 3, 38–50 (2015)

Adeyanju, A.A.: Existence of a limiting regime in the sense of Demidovic for a certain class of second order non-linear vector differential equation. Differ. Eqs. Control Process. 4, 63–79 (2018)

Adeyanju, A.A., Adams, D.O.: Some new results on the stability and boundedness of solutions of certain class of second order vector differential equations. Int. J. Math. Anal. Optim. Theory Appl. 7(1), 108–115 (2021)

Ahmad, S., Rama Mohana Rao, M.: Theory of ordinary differential equations. With applications in biology and engineering. Affiliated East-West Press Pvt. Ltd., New Delhi (1999)

Alaba, J.G., Ogundare, B.S.: On stability and boundedness properties of solutions of certain second order non-autonomous non-linear ordinary differential equation. Kragujevac J. Math. 39(2), 255–266 (2015)

Awrejcewicz, J.: Ordinary Differential Equations and Mechanical Systems. Springer, Cham (2014)

Baliki, A., Benchohra, M., Graef, J.R.: Global existence and stability for second order functional evolution equations with infinite delay. Electron. J. Qual. Theory Differ. Equ. 2016, Paper No. 23, 10 pp. (2016)

Bellman, R.: Introduction to Matrix Analysis, reprint of the second edition (1970), with a foreword by Gene Golub. Classics in Applied Mathematics, vol. 19. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA (1997)

Burton, T.A.: Stability and Periodicity of Solutions of Ordinary and Functional Differential Equations. Academic Press, Orlando (1985)

Cartwright, M.L., Littlewood, J.E.: On nonlinear differential equations of the second order. Ann. Math. 48, 472–494 (1947)

Chicone, C.: Ordinary differential equations with applications. Texts in Applied Mathematics, vol. 34. Springer, New York (1999)

Ezeilo, J.O.C.: On the existence of almost periodic solutions of some dissipative second order differential equations. Ann. Mat. Pura Appl. 65(4), 389–406 (1964)

Ezeilo, J.O.C.: On the convergence of solutions of certain system of second order differential equations. Ann. Mat. Pura Appl. 72(4), 239–252 (1966)

Ezeilo, J.O.C.: n-Dimensional extensions of boundedness and stability theorem for some third order differential equation. J. Math. Anal. Appl. 18, 395–416 (1967)

Grigoryan, G.A.: Boundedness and stability criteria for linear ordinary differential equations of the second order. Russ. Math. 57(12), 8–15 (2013)

Hale, J.: Sufficient conditions for stability and instability of autonomous functional-differential equations. J. Differ. Eqs. 1, 452–482 (1965)

Jordan, D.W., Smith, P.: Nonlinear Ordinary Differential Equations: Problems and Solutions. A Sourcebook for Scientists and Engineers. Oxford University Press, Oxford (2007)

Loud, W.S.: Boundedness and convergence of solutions of \( \ddot{x} + c \dot{x} + g(x) = e(t)\). Duke Math. J. 24, 63–72 (1957)

Ogundare, B.S., Ademola, A.T., Ogundiran, M.O., Adesina, O.A.: On the qualitative behaviour of solutions to certain second order nonlinear differential equation with delay. Annali dell’Universita’ di Ferrara Sez VII Sci. Mat. 63(2), 333–351 (2017)

Omeike, M.O., Oyetune, O.O., Olutimo, A.L.: Boundedness of solutions of a certain system of second-order ordinary differential equations. Acta Univ. Palacki. Olomuc. Fac. Rerum Natur. Math. 53, 107–115 (2014)

Omeike, M.O., Adeyanju, A.A., Adams, D.O.: Stability and boundedness of solutions of certain vector delay differential equations. J. Niger. Math. Soc. 37(2), 77–87 (2018)

Omeike, M.O., Adeyanju, A.A., Adams, D.O., Olutimo, A.L.: Boundedness of certain system of second order differential equations. Kragujevac J. Math. 45(5), 787–796 (2021)

Rao, M.R.M.: Ordinary Differential Equations. Affiliated East West Private Limited, London (1980)

Reissig, R., Sansone, G., Conti, R.: Non-linear Differential Equations of Higher Order. Noordhoff, Leyden (1974). Translated from the German

Sadek, A.I.: On the stability of a nonhomogeneous vector differential equations of the fourth-order. Appl. Math. Comp. 150, 279–289 (2004)

Smith, H.: An introduction to delay differential equations with applications to the life sciences. Texts in Applied Mathematics, vol. 57. Springer, New York (2011)

Tejumola, H.O.T.: On a Lienard type matrix differential equation. Atti Accad. Naz. Lincei Rend. Cl. Sci. Fis. Mat. Natur. (8) 60(2), 100–107 (1976)

Tejumola, H.O.T.: On the boundedness and periodicity of solutions of certain third-order nonlinear differential equation. Ann. Mat. Pura Appl. 83(4), 195–212 (1969)

Tejumola, H.O.T.: Boundedness Criteria for solutions of some second-order differential equations. Atti Accad. Naz. Lincei Rend. Cl. Sci. Fis. Mat. Natur 50(8), 432–437 (1971)

Tunc, C., Mohammed, S.A.: On the asymptotic analysis of bounded solutions to nonlinear differential equations of second order. Adv. Differ. Equ. 2019, Article No. 461 (2019)

Tunc, C., Sevli, H.: On the instability of solutions of certain fifth order nonlinear differential equations. Memoirs Differ. Eqs. Math. Phys. 5, 147–156 (2005)

Tunc, C.: On the stability and boundedness of solutions of nonlinear vector differential equations of third order. Nonlinear Anal. 70(2), 2232–2236 (2009)

Tunc, C.: A note on boundedness of solutions to a class of non-autonomous differential equations of second order. Appl. Anal. Discrete Math. 4(2), 361–372 (2010)

Tunc, C.: Stability to vector Lienard equation with constant deviating argument. Nonlinear Dyn. 73, 1245–1251 (2013)

Tunc, C., Tunc, O.: On the boundedness and integration of non-oscillatory solutions of certain linear differential equations of second order. J. Adv. Res. 7(1), 165–168 (2016). https://doi.org/10.1016/j.jare.2015.04.005

Tunc, C., Tunc, O.: A note on the stability and boundedness of solutions to non-linear differential systems of second order. J. Assoc. Arab Univ. Basic Appl. Sci. 24, 169–175 (2017). https://doi.org/10.1016/j.jaubas.2016.12.004

Tunç, O., Tunç, C.: On the asymptotic stability of solutions of stochastic differential delay equations of second order. J. Taibah Univ. Sci. 13(1), 875–882 (2019)

Yoshizawa, T.: Stability theory by Liapunov’s second method. Publications of the Mathematical Society of Japan, Japan (1966)

Zainab, M.J.: Bounded Solution of the Second Order Differential Equation \( \ddot{x} + f(x) \dot{x} + g(x) = u(t)\). Baghdad Sci. J. 12(4), 822–825 (2015)


Ethics declarations

Conflict of interest

There are no competing interests with regard to this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

Adeyanju, A.A. Stability and boundedness criteria of solutions of a certain system of second order differential equations. Ann Univ Ferrara 69, 81–93 (2023). https://doi.org/10.1007/s11565-022-00402-z


DOI: https://doi.org/10.1007/s11565-022-00402-z