Abstract

Purpose

Dental radiography represents 13% of all radiological diagnostic imaging. Eliminating the need for manual classification of digital intraoral radiographs could be especially impactful in terms of time savings and metadata quality. However, automating the task can be challenging due to the limited variation and possible overlap of the depicted anatomy. This study attempted to use neural networks to automate the classification of anatomical regions in intraoral radiographs among 22 unique anatomical classes.

Methods

Thirty-six literature-based neural network models were systematically developed and trained with full supervision and three different data augmentation strategies. Only libre software and limited computational resources were utilized. The training and validation datasets consisted of 15,254 intraoral periapical and bite-wing radiographs, previously obtained for diagnostic purposes. All models were then comparatively evaluated on a separate dataset with regard to their classification performance. Top-1 accuracy, area under the curve (AUC) and F1-score were used as performance metrics. Pairwise comparisons were performed among all models with McNemar’s test.

Results

Cochran's Q test indicated a statistically significant difference in classification performance across all models (p < 0.001). Post hoc analysis showed that while most models performed adequately on the task, advanced architectures used in deep learning such as VGG16, MobileNetV2 and InceptionResNetV2 were more robust to image distortions than those in the baseline group (MLPs, 3-block convolutional models). Advanced models exhibited classification accuracy ranging from 81 to 89%, F1-score between 0.71 and 0.86 and AUC of 0.86 to 0.94.

Conclusions

According to our findings, automated classification of anatomical classes in digital intraoral radiographs is feasible with an expected top-1 classification accuracy of almost 90%, even for images with significant distortions or overlapping anatomy. Model architecture, data augmentation strategies, the use of pooling and normalization layers as well as model capacity were identified as the factors most contributing to classification performance.


Introduction

Background

According to conservative estimates, half a billion dental diagnostic radiological examinations are performed annually, with a global average of 74 per 1000 inhabitants, representing 13% of all diagnostic radiology testing [1]. Three hundred million intraoral radiographs are produced annually in the US and the EU [2, 3]. An increasing share is digitally acquired due to clinical advantages, radiation protection considerations and the lowering of financial barriers. Being non-physical, digital radiographs tend to be stored indefinitely, leading to accumulation in vast archives, especially in large institutions.

Digital X-ray images are accompanied by standardized metadata, ideally generated during production. However, manual recording is often necessary, leading to inaccuracies attributed to lack of staff motivation or training [4]. Deficiencies in healthcare datasets are a well-documented problem associated with labor repetition and high repair cost.

Automated algorithms could become a third party responsible for generating standardized, high-quality metadata, allowing labor to be reallocated to more creative tasks and, if deployed at scale, raising the value of metadata above its maintenance cost.

An intraoral radiograph’s anatomical region is a piece of metadata consisting of predetermined anatomical classes that correspond to standard radiographic projections, currently recorded manually by the human operator. Reliable recording is vital for diagnosis and for realizing the benefits of DICOM, and is a prerequisite for the implementation of hanging protocols and the creation of standardized radiographic layouts [5, 6]. Additionally, it can be valuable for machine learning model development [7] and the searchability of radiographic archives. Automated classification exclusively from pixel data would enable its generation on the modality or database level and eliminate the need for manual preselection.

Since the extent of the presumably depicted anatomy is known, the task can be expressed as a problem of image classification among predefined classes, a fundamental problem in the field of computer vision. Recent advancements, especially after the introduction of the still-relevant AlexNet architecture in the ILSVRC competition [8,9,10], have led to the field being dominated by neural networks (especially convolutional ones), which achieve relatively good performance in classifying natural images into different classes [11].

The subsequent release of libre software that abstracts underlying development processes enabled the rapid development of relevant applications by independent researchers. In 2017, over 300 applications related to radiological imaging had been published [12], while in the US, major organizations in radiology recently issued a roadmap for future research in the field of machine learning in relation to radiology [7].

All the previously discussed benefits could possibly be provided by employing convolutional neural network architectures to achieve automated classification of intraoral radiograph anatomical regions.

In addition, exposing radiology workers to this recently introduced field through applications designed to eliminate trivial tasks can enhance familiarization with its concepts and shortcomings, leading to wider acceptance without the potential implications of diagnostic applications.

The purpose of this study is to systematically develop literature-based neural network architectures (models) capable of classifying the anatomical region of intraoral radiographs based exclusively on pixel data, as well as to deduce the most appropriate architectures and training strategies by cross-comparing model performance on a predefined dataset. By using libre software, a simple model development methodology, a small dataset and limited computational and financial resources, wide adoption and reproducibility are facilitated. To our knowledge, no similar studies currently exist in the literature.

Materials and methods

The STARD 2015 [13] and CLAIM [14] checklists for the standardization and enhancement of the quality of diagnostic accuracy and artificial intelligence studies were followed where applicable.

Dataset generation

The present study is a retrospective study utilizing archived intraoral radiographs obtained for diagnostic purposes. No subjects were exposed to X-rays for the purposes of this study. All subjects provided written consent.

Adult patients of any age and gender were included in chronological order without further inclusion or exclusion criteria. Non-adult patients were excluded. Included images were digital periapical or bite-wing radiographs obtained by the same modality (PSP plates, SOREDEX Scanora scanner).

The original uncompressed image data were fully anonymized and randomly shuffled by a hash-based algorithm. The dataset was evaluated for content relevance, technical and visual quality and proper classification by one evaluator with 8 years of experience, excluding images of inadequate content or poor quality.
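The hash-based algorithm is not specified further. As a minimal sketch under assumed details (SHA-256, a secret salt, and the hypothetical helper name `anonymize_and_shuffle`), one common approach replaces each original identifier with a salted digest and orders the images by that digest, which yields an order unrelated to patient or acquisition sequence:

```python
import hashlib


def anonymize_and_shuffle(image_ids, salt):
    """Map each identifier to a salted SHA-256 digest and sort by digest.

    Hypothetical helper: the study does not describe its algorithm.
    The digest replaces the original identifier, and sorting by digest
    gives a reproducible, effectively random ordering.
    """
    digests = [
        hashlib.sha256((salt + image_id).encode("utf-8")).hexdigest()
        for image_id in image_ids
    ]
    return sorted(digests)


# Illustrative identifiers only; not taken from the study's archive.
ids = ["patient01_img1", "patient01_img2", "patient02_img1"]
shuffled = anonymize_and_shuffle(ids, salt="example-secret")
```

Because the digests carry no patient information, images from the same subject can no longer be grouped once the originals are discarded.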

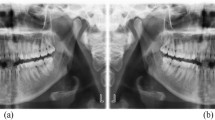

Twenty-two unique anatomical classes were identified. Class definitions assumed that each projection clearly depicts the region of three consecutive teeth, except for the anterior classes 12–22 and 32–42 which included four. Classes LBW1, LBW2 and RBW1, RBW2 were merged due to data scarcity.

The resulting dataset was then randomly split into an 80% training subset and a 20% validation subset for model training. Proper dataset and split sizes were determined by a pilot study.

Model definition

Model definitions and training methodology were based on the dominant initial choices derived from an extensive review of the literature [11] and are described in Table 1.

All models were developed using the Keras API v2.2.4, the Anaconda distribution of Python programming language v3.6.7 and TensorFlow v1.14 as a backend, by adding convolutional architectures as feature extractors on top of a multilayer perceptron classifier and trained using fully supervised learning with backpropagation of error.

Initially, a fully connected two-layer multilayer perceptron (1024 and 512 wide) with flattened input was trained as a baseline classifier. Then, a simple convolutional network of three convolutional blocks as described in TensorFlow’s documentation (convolutional layers of width 64, 128 and 128 respectively, a 3 × 3 filter size, and a max pooling layer) was added as a baseline feature extractor.

A group of more advanced and innovative convolutional networks was then consecutively added: the deep but simple convolutional architecture VGG16 [15], MobileNetV2 as an architecture that balances model size against performance [16], and Inception-ResNetV2 as a high-capacity, high-performing architecture especially suitable for small datasets [17, 18].

Additional models were generated by inserting batch normalization layers [19] and by using either a flattening layer or a global average pooling layer [20] as the bridge between the feature extractor and the MLP classifier.

Dropout layers with rates of 0.20–0.50 [21] and three different data augmentation strategies applied on-the-fly (described in Table 2) were used as regularization.

The final layer of every model was a softmax-activated dense layer with a width equal to the number of classes (22), so that model output could be expressed as per-class prediction confidences that add up to 100%. The class with the highest confidence was taken as the model's top choice for the evaluation of top-1 accuracy.
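The relationship between the softmax output and the top-1 choice can be sketched in plain Python. The logit values below are illustrative only, and the helper names `softmax` and `top1` are introduced here for the example rather than taken from the study's code:

```python
import math


def softmax(logits):
    """Convert raw scores to confidences that sum to 1."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top1(confidences):
    """Index of the class with the highest confidence."""
    return max(range(len(confidences)), key=confidences.__getitem__)


logits = [0.0] * 22  # one raw score per anatomical class (made-up values)
logits[5] = 3.0
conf = softmax(logits)
predicted = top1(conf)
```

A prediction counts toward top-1 accuracy only when `predicted` equals the ground-truth class index.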

All models were initialized with default Keras parameters and trained with categorical cross-entropy as a loss function, the Adam optimizer [22], a batch size of 64, and learning rate reduction by 50% on plateaus of validation top-1 accuracy. Training lasted up to 100 epochs, with early stopping if validation top-1 accuracy or loss stopped improving for a set number of epochs. ReLUs [23] were used as activation functions. All other hyperparameters were constant.
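The interaction between learning rate halving and early stopping can be illustrated with a small simulation. This is a sketch under stated assumptions: the patience values (3 and 6 epochs) and the function name `simulate_schedule` are hypothetical, since the exact number of epochs is not reported, and only validation accuracy is monitored here.

```python
def simulate_schedule(val_acc, lr=1e-3, lr_patience=3, stop_patience=6,
                      factor=0.5):
    """Return (epochs_run, final_lr) for per-epoch validation accuracies.

    lr_patience and stop_patience are assumed values, not the study's.
    """
    best = float("-inf")
    since_best = 0  # epochs since the last improvement
    since_cut = 0   # epochs without improvement since the last lr cut
    for epoch, acc in enumerate(val_acc, start=1):
        if acc > best:
            best = acc
            since_best = 0
            since_cut = 0
            continue
        since_best += 1
        since_cut += 1
        if since_cut >= lr_patience:
            lr *= factor  # halve the learning rate on a plateau
            since_cut = 0
        if since_best >= stop_patience:
            return epoch, lr  # early stopping
    return len(val_acc), lr


# Accuracy improves for three epochs, then plateaus.
history = [0.50, 0.60, 0.70] + [0.70] * 20
epochs_run, final_lr = simulate_schedule(history)
```

With these assumed settings the learning rate is halved twice during the plateau before training stops, well short of the epoch limit.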

Input resolution was 224 × 224 grayscale, or RGB in models requiring three-channel input. The corresponding Keras preprocessing function was used; otherwise, images were rescaled to the 0–1 range.

Reproducibility and fair comparisons among models were facilitated by a common seed value for all pseudo-random number generators.

Class imbalance was mitigated using a weighted version of the loss function based on class weights calculated on the validation subset.
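The weighting formula is not stated. A minimal sketch, assuming inverse-frequency weights normalized so that the most frequent class has weight 1 (an assumption that is at least consistent with the reported weights starting at 1), could look as follows. The function names are hypothetical:

```python
import math
from collections import Counter


def class_weights(labels):
    """Inverse-frequency weights; the most frequent class gets weight 1."""
    counts = Counter(labels)
    largest = max(counts.values())
    return {cls: largest / n for cls, n in counts.items()}


def weighted_cross_entropy(true_class, confidences, weights):
    """Categorical cross-entropy for one sample, scaled by its class weight."""
    return -weights[true_class] * math.log(confidences[true_class])


# Toy labels: class "A" is four times as frequent as class "B".
labels = ["A"] * 8 + ["B"] * 2
weights = class_weights(labels)
loss_b = weighted_cross_entropy("B", {"A": 0.5, "B": 0.5}, weights)
loss_a = weighted_cross_entropy("A", {"A": 0.5, "B": 0.5}, weights)
```

An error on the rare class then contributes proportionally more to the loss than the same error on the common class.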

Model evaluation

Evaluation was performed on a separate test dataset, not involved in model training, consisting of 261 intraoral radiographs of patients, balanced for both sexes and all five age groups described in the NHANES [24], to reduce dataset bias. Its size allows the detection of large discrepancies in accuracy between the test and validation subsets, without being large enough to impact the training dataset.

Total training time, per sample prediction time, loss function minimization, top-1 accuracy, precision, recall, F1-score and area under the curve were obtained by Keras and Scikit-Learn.

Metrics were macro-averaged from all classes, where applicable. Since some classes were considerably underrepresented, in-depth per class performance analysis was deemed out-of-scope.
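Macro-averaging gives every class the same weight regardless of its size, which is why a macro-averaged F1-score can fall below top-1 accuracy when rare classes are predicted poorly. The following plain-Python sketch (the helper name `macro_f1` and the toy labels are illustrative, not the study's Scikit-Learn code) shows the computation:

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy example: class 1 is rare and half of its samples are missed.
y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1]
f1 = macro_f1(y_true, y_pred)
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
```

In this toy case the macro F1-score is lower than the accuracy because the rare class counts as much as the frequent one.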

Top-1 class predictions for all test images were dichotomized to either true or false predictions in a one-vs-all fashion against the ground truth, then used for statistical analysis.

A significance level of 0.05 was set for all statistical tests. Comparison of the proportions of misclassifications across all models was performed with Cochran's Q test. Pairwise comparisons across models were performed with McNemar’s test. P values were adjusted with the Bonferroni correction [25,26,27].
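The pairwise procedure can be sketched as follows. The actual tests were run in SPSS and R; this plain-Python version assumes the continuity-corrected chi-square form of McNemar's test and a Bonferroni adjustment over all pairs of the 36 models, both of which are assumptions about the exact variant used. The counts in the example are invented.

```python
import math
from itertools import combinations


def mcnemar_p(correct_a, correct_b):
    """Continuity-corrected McNemar test on paired correct/incorrect flags."""
    b = sum(x and not y for x, y in zip(correct_a, correct_b))  # only A right
    c = sum(y and not x for x, y in zip(correct_a, correct_b))  # only B right
    if b + c == 0:
        return 1.0  # no discordant pairs, no evidence of a difference
    stat = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of the chi-square distribution with 1 d.o.f.
    return math.erfc(math.sqrt(stat / 2))


def bonferroni(p, n_comparisons):
    """Multiply the p value by the number of comparisons, capped at 1."""
    return min(1.0, p * n_comparisons)


n_pairs = len(list(combinations(range(36), 2)))  # all model pairs

# Invented outcome flags for two models on 261 test images:
# 200 both correct, 40 only A correct, 10 only B correct, 11 both wrong.
model_a = [True] * 200 + [True] * 40 + [False] * 10 + [False] * 11
model_b = [True] * 200 + [False] * 40 + [True] * 10 + [False] * 11
p_raw = mcnemar_p(model_a, model_b)
p_adj = bonferroni(p_raw, n_pairs)
```

Only the discordant pairs (images that exactly one of the two models classified correctly) influence the statistic, which is why the dichotomized predictions are sufficient input.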

Test calculations were performed with IBM SPSS statistical package version 25, p value adjustments with R statistical package version 3.6.1 and ROC curves were calculated with Scikit-Learn version 0.22.

Results

Dataset generation

Out of a total of 17,781 images, 15,254 were accepted for further processing, resulting in a training subset of 12,213 images and a validation subset of 3,041 images. Due to prior randomization and anonymization, the exact number of subjects included in the study is unknown. Class weights ranged from 1 to 26.65. A full dataset description is given in Table 3.

A total of 36 models were trained and evaluated. Summaries of model training history and model performance across the test dataset are presented in Table 4. Learning curves for each model are found in “Appendix of ESM”.

Comparison among all models

Cochran's Q test indicates a statistically significant difference across the 36 models in terms of the proportion of misclassifications on the test dataset, χ2(35) = 3034.949, p < 0.001.
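For reference, the Q statistic and its degrees of freedom (number of models minus one, hence 35 here) can be computed from a table of dichotomized outcomes as sketched below. The function name `cochrans_q` and the toy table are illustrative; the reported value was obtained with SPSS.

```python
def cochrans_q(outcomes):
    """Cochran's Q for rows of 0/1 outcomes (one row per image,
    one column per model). Returns (Q, degrees_of_freedom)."""
    k = len(outcomes[0])
    col_totals = [sum(row[j] for row in outcomes) for j in range(k)]
    row_totals = [sum(row) for row in outcomes]
    total = sum(row_totals)
    denominator = k * total - sum(r * r for r in row_totals)
    if denominator == 0:
        raise ValueError("Q is undefined when every row is all 0s or all 1s")
    numerator = (k - 1) * (k * sum(c * c for c in col_totals) - total ** 2)
    return numerator / denominator, k - 1


# Toy table: 6 images, 3 models, 1 = correct prediction.
table = [
    [1, 1, 0],
    [1, 1, 0],
    [1, 0, 0],
    [1, 1, 1],
    [0, 0, 0],
    [1, 1, 0],
]
q, dof = cochrans_q(table)
```

Under the null hypothesis that all models have the same error proportion, Q approximately follows a chi-square distribution with `dof` degrees of freedom.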

Post hoc analysis—pairwise comparisons

An overview of the levels of statistical significance of the Bonferroni-adjusted p values for pairwise model comparisons with McNemar’s test is shown in Fig. 1. A complete table of p values is found in the Appendix.

Failed models

MLPs without batch normalization (0, 1, 2) failed to train (top-1 accuracy 0.05–0.10, F1-score 0–0.01 and AUC equal to 0.50). Therefore, a statistically significant difference with any other model (p < 0.001 for all pairs) was to be expected, except for members of the same group.

Comparisons among advanced models

No statistically significant differences were observed among advanced group models (18–35), regardless of bridging layer or data augmentation strategy (p > 0.05 for all pairs). Advanced models had top-1 accuracy ranging from 0.81 to 0.89, F1-score of 0.71–0.86 and AUC of 0.86–0.94.

Comparison of baseline models against advanced models

Baseline models 3, 4, 6, 7, 12 and 13 showed no statistically significant differences against the advanced group, except for pairs 4–18 (p < 0.05), 4–22 (p < 0.05) and 4–31 (p < 0.05), indicative of comparable high performance (top-1 accuracy 0.77–0.87, F1-score 0.70–0.81 and AUC 0.84–0.90).

In contrast, models 5, 10, 11 and 17 showed statistically significant differences with all models in the advanced group (p < 0.001 for all pairs) as a result of poor performance (top-1 accuracy 0.46–0.61, F1-scores 0.33–0.48, AUC 0.67–0.75).

Models 14 and 16 showed statistically significant differences with the advanced group except model 25 (p < 0.001 against models 18, 21, 22, 26–35, p < 0.01 against models 19, 23, 24 and p < 0.05 against model 20). Their top-1 accuracy was 0.69 and 0.68, F1-scores 0.60 and 0.61, respectively, and AUC 0.81 for both.

Comparisons among baseline models

Models 11 and 17 (top-1 accuracy 0.46, F1-score 0.33, AUC 0.67 and top-1 accuracy 0.47, F1-score 0.36, AUC 0.68) had the lowest performance among all models (except for models 0, 1, 2) and showed a statistically significant difference with any other baseline model (p < 0.001 except pairs 11–10, 17–10 where p < 0.01 and 11–5, 17–5 where p < 0.05).

Models 5 and 10 also showed a statistically significant difference compared to all baseline models (p < 0.001 with models 3, 4, 6, 7, 12, 13 and p < 0.05 with models 8, 11, 15, 17) as well as low performance (top-1 accuracy 0.61, F1-score 0.47, AUC 0.74 and top-1 accuracy 0.61, F1-score 0.48, AUC 0.75), excluding pairs 5–9, 5–10, 5–14, 5–16, 10–5, 10–9, 10–14, 10–16 where no statistically significant difference was observed.

Models 8, 9, 14, 15, 16 (top-1 accuracy 0.68–0.75, F1-score 0.58–0.67, AUC 0.79–0.84) showed a statistically significant difference in the proportion of errors against models 11, 17 (p < 0.001) and the highest performing baseline model 7 (p < 0.001 with models 9, 14, 16 and p < 0.01 with models 8, 15). In addition, model 8 showed a statistically significant difference with models 5 and 10 (p < 0.05). Models 14, 15, 16 also showed significant differences in the pairs 14–12 (p < 0.01), 14–13 (p < 0.01), 15–5 (p < 0.05), 15–10 (p < 0.05), 16–12 (p < 0.001), 16–13 (p < 0.05). This implies that this subset of models lies in the middle of the baseline model group in terms of performance.

Baseline models 3, 4, 6, 12, 13 (top-1 accuracy 0.77–0.84, F1-score 0.70–0.79, AUC 0.84–0.90) showed a statistically significant difference with models 5, 10, 11, 17 (p < 0.001 except pair 4–10 where p < 0.01), as well as pairs 4–7 (p < 0.05), 12–14 (p < 0.01), 12–16 (p < 0.001), 13–14 (p < 0.01), 13–16 (p < 0.05), while no statistically significant difference was observed between them.

Baseline model 7 was the highest performing model of the baseline group (top-1 accuracy 0.87, F1-score 0.81, AUC 0.90). There was a statistically significant difference with every other model in the baseline group (p < 0.001 with models 5, 9, 10, 11, 14, 16, 17, p < 0.01 with models 8, 15 and p < 0.05 with model 4) except for the high-performing baseline models 3, 6, 12, 13, with which no statistically significant difference was observed.

Discussion

This study investigated whether neural networks could classify the anatomical region of intraoral radiographs based solely on image data, and how their architectural elements influence performance. According to our findings, this is feasible, with an expected top-1 accuracy of 80–90% when models are trained on small datasets.

Intraoral images usually depict very similar anatomical features, especially when they are part of a series from the same patient, where a significant amount of overlap is expected. Therefore, an understanding of the relative positioning of the depicted structures is essential for their classification. In contrast, deep learning classifiers have mostly been studied with images of discrete objects against different backgrounds. Bearing that in mind, testing a multitude of architectures was deemed appropriate, as some of them could fail to address this unique challenge.

Multilayer perceptrons (MLPs)

MLPs without normalization failed to train. However, the introduction of batch normalization [19] allowed training comparable to that of advanced models. This finding indicates that input and layer normalization may allow non-convolutional architectures to perform adequately in radiographic image classification tasks. However, these models performed poorly alongside data augmentation, indicating a dependency on input images with little variation.

Baseline convolutional models

Most baseline convolutional models achieved adequate performance. Batch normalization [19] improved training and performance in some architectures. A typical data augmentation strategy resulted in better training, but the introduction of an aggressive strategy led to deteriorating performance.

Advanced models

All advanced models had comparable performance and outperformed most baselines. However, due to the strict nature of the Bonferroni p value adjustment, subtle differences may not be elucidated, while different errors may occur on the test dataset for each model. Furthermore, some models trained irregularly, as is evident from their learning curves.

Data augmentation

Data augmentation is a common technique for generating more samples from small datasets, and it is considered vital to prevent overfitting, especially in models with large capacities. It can also function as a measure of a model's resilience to improper input, as represented by the aggressive strategy.

Baseline model performance was severely downgraded with aggressive data augmentation, although some were robust or even benefited from subtle transformations. Most advanced group models were resistant to aggressive data augmentation and capable of managing degraded images.

Global average pooling

Using a global average pooling layer [20] in baseline models significantly limited their performance, a finding directly associated with the resulting marked reduction in model capacity.

On the other hand, parameter reduction seemed to favor the advanced group, where it showed no detrimental effects even when applied concurrently with aggressive data augmentation. A regularization effect could also be observed in many models’ learning curves.
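The capacity reduction caused by global average pooling can be made concrete with a parameter count for the first dense layer of the classifier. The sketch below assumes a 7 × 7 × 512 feature map (the output shape of VGG16's convolutional base for 224 × 224 input) and a first dense layer 1024 units wide, borrowed from the baseline MLP description; the exact classifier widths used with each feature extractor are an assumption here.

```python
def dense_params(n_inputs, n_units):
    """Weights plus biases of a fully connected layer."""
    return n_inputs * n_units + n_units


height, width, channels = 7, 7, 512  # assumed final feature map shape
units = 1024                         # assumed width of the first dense layer

# Flattening keeps every spatial position: 7 * 7 * 512 = 25,088 inputs.
flatten_params = dense_params(height * width * channels, units)

# Global average pooling keeps one value per channel: 512 inputs.
gap_params = dense_params(channels, units)

reduction = flatten_params / gap_params
```

Under these assumptions the bridging choice alone changes the size of that layer by a factor of roughly 49, which illustrates how pooling can both limit capacity in small models and act as a regularizer in large ones.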

Clinical recommendations

Based on the above, the use of models from the advanced group trained with aggressive data augmentation and a Global Average Pooling layer [20] as a bridge between the feature extractor and the classifier parts is recommended.

Choosing an architecture should be a compromise that also accounts for other parameters, such as resources and time availability. In a clinical setting where long waiting times can be a major disadvantage, a smaller and faster model such as MobileNetV2 might be more useful, while the InceptionResNetV2-based architecture could be better suited for tasks such as database maintenance.

Limitations

Our models demonstrate all limitations inherent in most deep learning models. They were developed empirically on natural images, which prior studies have shown to differ feature-wise from X-ray images [28]; they lack a theoretical explanation for their performance and have high computational costs that do not favor experimentation. They also lack outcome justification and are vulnerable to specific images containing irrelevant features able to trigger a predictable output (adversarial samples), making trusted input a necessity.

Such models supposedly require large amounts of data to train. Building large data sets with medical data is a laborious undertaking with serious ethical, legal and financial considerations, while for smaller datasets containing well-structured images, solutions other than neural networks may be more efficient. However, in this study good performance was achieved with limited data.

In addition to the aforementioned, a significant limitation is the introduction of dataset bias in model outputs. Sources of dataset bias in this study were the use of only high-quality radiographs from a single modality, which were diagnostic and contained mostly tooth or prosthesis imaging (they rarely depicted exclusively bone structures or contained instruments). Equally important is the fact that these models are only able to replicate the classification criteria applied by the evaluator (evaluator bias). The above could significantly limit our models from performing consistently under different conditions. Training with a multitude of diverse datasets could partially resolve this issue. Currently, model generalizability to datasets with different specifications is not guaranteed.

Another limitation is class imbalance, a direct result of uneven clinical demand and the retrospective nature of the study. Creating a perfectly balanced dataset would require either excluding a large portion of our dataset, with a possible decline in performance, or a prospective study design, exposing subjects to X-rays for study purposes and breaching the ALARA principle without clearly determined benefits. In view of the above, using a weighted loss function seems to be an appropriate compromise. However, all models exhibited their worst performance in the underrepresented classes.

Recommendations for further research

Further gains in performance are anticipated by training with a larger and more balanced dataset, by using loss functions that account for the inherent order of anatomical regions, by applying transfer learning and fine-tuning, and by combining models into ensembles.

Availability of data and material

Not publicly available.

Code availability

Code will be published at the following link: https://github.com/nkyventidis/intraoral-radiograph-classifier.

References

[UNSCEAR] United Nations Scientific Committee on the Effects of Atomic Radiation, Sources and effects of ionizing radiation: United Nations Scientific Committee on the Effects of Atomic Radiation (2008) UNSCEAR report to the General Assembly, with scientific annexes. United Nations, New York, p 2010

[FDA] Food and Drug Administration, Dental Radiography: Doses and Film Speed (2017). https://www.fda.gov/radiation-emitting-products/nationwide-evaluation-x-ray-trendsnext/dental-radiography-doses-and-film-speed. Accessed 25 June 2020

Horner K, Rushton VV, Tsiklakis K, Hirschmann P, Stelt PF, Glenny A, Velders X, Pavitt S (2004) European guidelines on radiation protection in dental radiology: the safe use of radiographs in dental practice. Radiat Prot 136:11–17

Horner K (2012) Radiation protection in dental radiology. In: Proceedings of international conference 3–7 December 2012, International Atomic Energy Agency, Bonn, Germany, 2012

[NEMA] National Electrical Manufacturers Association (2005) Digital Imaging and Communications in Medicine, Supplement 60: Hanging Protocols, 2005

[NEMA] National Electrical Manufacturers Association (2019) Digital Imaging and Communications in Medicine, PS3.17 2019d - Explanatory Information, 2019

Langlotz C, Allen B, Erickson B, Kalpathy-Cramer J, Bigelow K, Cook T, Flanders A, Lungren M, Mendelson D, Rudie J, Wang G, Kandarpa K (2019) A roadmap for foundational research on artificial intelligence in medical imaging: from the 2018 NIH/RSNA/ACR/The Academy workshop. Radiology 291:781–791

Deng J, Dong W, Socher R, Li J, Li K, Fei-Fei L (2009) Imagenet: a large-scale hierarchical image database. In: IEEE conference on computer vision and pattern recognition. IEEE, pp. 248–255

Krizhevsky A, Sutskever I, Hinton G (2012) ImageNet classification with deep convolutional neural networks. In: Advances in neural information processing systems, vol 25. Curran Associates, Inc., pp 1097–1105

Russakovsky O, Deng J, Su H, Krause J, Satheesh S, Ma S, Huang Z, Karpathy A, Khosla A, Bernstein M, Berg A, Fei-Fei L (2015) ImageNet large scale visual recognition challenge. Int J Comput Vis IJCV 115:211–252

Rawat W, Wang Z (2017) Deep convolutional neural networks for image classification: a comprehensive review. Neural Comput 29:2352–2449

Litjens G, Kooi T, Bejnordi B, Setio A, Ciompi F, Ghafoorian M, Van Der Laak J, Van Ginneken B, Sánchez C (2017) A survey on deep learning in medical image analysis. Med Image Anal 42:60–88

Bossuyt P, Reitsma J, Bruns D, Gatsonis C, Glasziou P, Irwig L, Lijmer J, Moher D, Rennie D, de Vet H, Kressel H, Rifai N, Golub R, Altman D, Hooft L, Korevaar D, Cohen J (2015) STARD 2015: an updated list of essential items for reporting diagnostic accuracy studies. Radiology 277:826–832

Mongan J, Moy L, Kahn CE (2020) Checklist for artificial intelligence in medical imaging (CLAIM): a guide for authors and reviewers. Radiol Artif Intell 2:e200029. https://doi.org/10.1148/ryai.2020200029

Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition. In: 3rd Int. Conf. Learn. Represent. ICLR 2015 San Diego CA USA May 7–9 2015 Conf. Track Proc

Sandler M, Howard A, Zhu M, Zhmoginov A, Chen L (2018) MobileNetV2: inverted residuals and linear bottlenecks. In: 2018 IEEE/CVF conference on computer vision and pattern recognition, 2018, pp 4510–4520

Szegedy C, Ioffe S, Vanhoucke V, Alemi A (2017) Inception-v4, inception-ResNet and the impact of residual connections on learning. In: Proceedings of thirty-first AAAI conference on artificial intelligence. AAAI Press, pp 4278–4284.

Szegedy C, Vanhoucke V, Ioffe S, Shlens J, Wojna Z (2016) Rethinking the inception architecture for computer vision. In: 2016 IEEE conference on computer vision and pattern recognition CVPR, 2016, pp 2818–2826

Ioffe S, Szegedy C (2015) Batch normalization: accelerating deep network training by reducing internal covariate shift. In: International conference on machine learning, 2015, pp 448–456

Lin M, Chen Q, Yan S (2014) Network in network. In: 2nd Int. Conf. Learn. Represent. ICLR 2014 Banff AB Can. April 14–16 2014 Conf. Track Proc

Srivastava N, Hinton G, Krizhevsky A, Sutskever I, Salakhutdinov R (2014) Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 15:1929–1958

Kingma D, Ba J (2015) Adam: a method for stochastic optimization. In: 3rd Int. Conf. Learn. Represent. ICLR 2015 San Diego CA USA May 7–9 2015 Conf. Track Proc

Nair V, Hinton G (2010) Rectified linear units improve restricted Boltzmann machines. In: Proceedings of the 27th international conference on machine learning, Omnipress, Madison, WI, USA, 2010, pp 807–814

[NCHS] National Center for Health Statistics (1999) National Health and Nutrition Examination Survey Data. U.S. Department of Health and Human Services, Hyattsville, MD, 1999

Dietterich T (1998) Approximate statistical tests for comparing supervised classification learning algorithms. Neural Comput 10:1895–1923

Demšar J (2006) Statistical comparisons of classifiers over multiple data sets. J Mach Learn Res 7:1–30

García S, Herrera F, Shawe-Taylor J (2008) An extension on “statistical comparisons of classifiers over multiple data sets” for all pairwise comparisons. J Mach Learn Res 9:2677–2694

Chow L, Paramesran R (2016) Review of medical image quality assessment. Biomed Signal Process Control 27:145–154

Funding

This study has received no external funding.

Author information

Contributions

Kyventidis Nikolaos was involved in conceptualization, methodology, software, validation, formal analysis, investigation, resources, data curation, writing (original draft) and visualization. Angelopoulos Christos was involved in resources, data curation, writing (review and editing), supervision and project administration.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest, financial or otherwise.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Kyventidis, N., Angelopoulos, C. Intraoral radiograph anatomical region classification using neural networks. Int J CARS 16, 447–455 (2021). https://doi.org/10.1007/s11548-021-02321-4


DOI: https://doi.org/10.1007/s11548-021-02321-4