Abstract

We consider reaction networks that admit a singular perturbation reduction in a certain parameter range. The focus of this paper is on deriving “small parameters” (short for small perturbation parameters) to gauge the accuracy of the reduction, in a manner that is consistent, amenable to computation and permits an interpretation in chemical or biochemical terms. Our work is based on local timescale estimates via ratios of the real parts of eigenvalues of the Jacobian near critical manifolds. This approach modifies the one introduced by Segel and Slemrod and is familiar from computational singular perturbation theory. While parameters derived by this method cannot provide universal quantitative estimates for the accuracy of a reduction, they represent a critical first step toward this end. Working directly with eigenvalues is generally infeasible, and at best cumbersome. Therefore we focus on the coefficients of the characteristic polynomial to derive parameters, and relate them to timescales. Thus, we obtain distinguished parameters for systems of arbitrary dimension, with particular emphasis on reduction to dimension one. As a first application, we discuss the Michaelis–Menten reaction mechanism in various settings, with new and perhaps surprising results. We proceed to investigate more complex enzyme-catalyzed reaction mechanisms (uncompetitive inhibition, competitive inhibition and cooperativity) of dimension three, with reductions to dimension one and two. The distinguished parameters we derive for these three-dimensional systems are new. In fact, no rigorous derivation of small parameters seems to exist in the literature so far. Numerical simulations are included to illustrate the efficacy of the parameters obtained, but also to show that certain limitations must be observed.



1 Introduction

Reducing the dimension of chemical and biochemical reaction networks or mechanisms is of great relevance both for theoretical considerations and for laboratory practice. For instance, the fundamental structure of a reaction mechanism is frequently known, or assumed from educated guesswork, but reaction rate constants are a priori unknown. Moreover, due to possible wide discrepancies in timescales, as well as limitations on experimentally obtainable data, it is important to identify scenarios and parameter regions that guarantee accuracy of a suitably chosen reduction. Singular perturbations frequently appear here,Footnote 1 and the fundamental theorems by Tikhonov (1952) and Fenichel (1979) provide a procedure to determine a reduced equation, and reliable convergence results. These theorems require an a priori identification of a perturbation parameter (also called “small parameter”). From a qualitative perspective, one actually considers a critical manifold together with an associated small parameter, and a corresponding slow invariant manifold. Given a well-defined limiting process for the small parameter, theory guarantees convergence of solutions of the full system to corresponding solutions of the reduced system. From a practical (“laboratory”) perspective, however, convergence theorems are not sufficient, and quantitative results are needed to gauge the accuracy of fitting procedures. This implies the need for an appropriate small parameter, which we denote by \(\varepsilon _S\) for the moment, that also reflects quantitative features. In contrast to the critical manifold, from a qualitative perspective the perturbation parameter is far from unique.Footnote 2 From a quantitative perspective, ideally \(\varepsilon _S\) should provide an upper estimate for the discrepancy between the exact and approximate solutions over the whole course of the slow dynamics. From a biochemical perspective it should elucidate the influence of reaction parameters. 
In many application-oriented publications, the authors assume (explicitly or implicitly) that certain perturbation parameters provide a quantitative estimate for the approximation; see, e.g., Heineken et al. (1967), Segel (1988), Tzafriri (2003), Schnell (2014), Choi et al. (2017).Footnote 3 However, while heuristic arguments may support such assumptions, no mathematical proof is given [see the discussion of the Michaelis–Menten system in Eilertsen et al. (2022)]. From the applied perspective, in the absence of rigorous results on quantitative error estimates for reductions of biochemical reaction networks or mechanisms, there is no alternative to employing heuristics. Thus, there exists a sizable gap between available theoretical results and applications, and closing this gap requires further theoretical results. The present paper is intended as a contribution toward narrowing the gap, invoking mathematical theory.

From an overall perspective (based on a derivation of singular perturbation theorems), one could say that finding ideal small parameters for a given singular perturbation scenario requires a three-step procedure:

1. In a first step, estimate the approach of a particular solution to the slow manifold: A common method employs Lyapunov functions. Thus, one obtains a parameter that measures the discrepancy between the right-hand sides of the full system and the reduced equation, following a short initial transient.

2. In a second step, estimate a suitable critical time at which the slow dynamics sets in, and estimate the solution at this critical time. This is needed to guarantee that the transient phase is indeed short, and to obtain a suitable initial value for the reduced equation.

3. In a third step, estimate the approximation of the exact solution by the corresponding solution of the reduced equation.Footnote 4

At first glance, this procedure seems to pose no problems: the feasibility of the steps outlined above is guaranteed by standard results about ordinary differential equations. The hard part, however, lies in their practical implementation for a given parameter-dependent system. Generally, it is not easy to obtain meaningful and reasonably sharp estimates, and a case-by-case discussion seems unavoidable for each given system [see Schnell and Maini (2000), Eilertsen et al. (2018, 2021a), Eilertsen and Schnell (2018, 2020) for examples employing various alternative approaches].

With the three steps as a background, our goal is to make a significant contribution toward the first step, via linear timescale arguments. We will both expand and improve existing results, and moreover obtain perturbation parameters for higher-dimensional systems for which no rigorous results have previously been reported. In a biochemical context, it seems that timescale arguments were first introduced by Segel (1988), and Segel and Slemrod (1989). Conceptually, we build upon this approach, but we take a consistent local perspective. Thus, we consider (real parts of) eigenvalue ratios, based on the idea that underlies computational singular perturbation theory, going back to Lam and Goussis (1994). Our emphasis is on obtaining parameters that are workable for application-oriented readers in mathematical enzymology, and admit an interpretation in biochemical terms.

1.1 Background

A solid mathematical foundation for qualitative viability of most reduction procedures in chemistry and biochemistry is provided by singular perturbation theory (Tikhonov 1952; Fenichel 1979). This was first clearly stated and utilized in Heineken et al. (1967).

For illustrative purposes, and as further motivation, we consider a familiar system from biochemistry, viz. the (irreversible) Michaelis–Menten reaction mechanism or network (Michaelis and Menten 1913), which is modeled by the two-dimensional differential equation

$$\begin{aligned} \dot{s}&=-k_1e_0s+(k_1s+k_{-1})c,\\ \dot{c}&=k_1e_0s-(k_1s+k_{-1}+k_2)c, \end{aligned}\tag{1}$$

with substrate concentration s, complex concentration c, rate constants \(k_1,k_{-1},k_2\), initial enzyme concentration \(e_0\), and typical initial values \(s(0)=s_0\), \(c(0)=0\).

For small initial enzyme concentration with respect to the initial substrate concentration, Briggs and Haldane (1925) assumed quasi-steady state (QSS) for complex concentration, thus obtaining the QSS manifold given by

$$c=\frac{e_0s}{K_M+s},\qquad K_M:=\frac{k_{-1}+k_2}{k_1},\tag{2}$$

and reduction to the Michaelis–Menten equation

$$\dot{s}=-\frac{k_2e_0s}{K_M+s}.\tag{3}$$

To quantify the notion of smallness for enzyme concentration, they introduced the dimensionless parameter

$$\varepsilon _{BH}=\frac{e_0}{s_0}\tag{4}$$

[later utilized by Heineken et al. (1967) in the first application of singular perturbation theory to this reaction], and required \(\varepsilon _{BH}\ll 1\) as a necessary condition for accuracy of the reduction. Further parameters to ensure accuracy of approximation by the Michaelis–Menten equation were introduced later on. Reich and Selkov (1974) introduced

$$\varepsilon _{RS}=\frac{e_0}{K_M},\tag{5}$$

for which Palsson and Lightfoot (1984) later gave a justification based on linearization at the stationary point 0.Footnote 5 Moreover, Segel and Slemrod (1989) derived

$$\varepsilon _{SSl}=\frac{e_0}{s_0+K_M}.\tag{6}$$

The fundamental approach by Segel and Slemrod (1989), obtaining perturbation parameters by comparing suitable timescales, has been used widely in the literature ever since.Footnote 6
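A minimal Python sketch of the three classical parameters, assuming the standard forms \(\varepsilon _{BH}=e_0/s_0\), \(\varepsilon _{RS}=e_0/K_M\) and \(\varepsilon _{SSl}=e_0/(s_0+K_M)\) with Michaelis constant \(K_M=(k_{-1}+k_2)/k_1\); the function names and parameter values are hypothetical. It also checks the elementary inequality \(\varepsilon _{SSl}\le \min (\varepsilon _{BH},\varepsilon _{RS})\), which holds because \(K_M>0\) and \(s_0>0\).

```python
# Illustrative sketch (not from the paper): classical small parameters of the
# irreversible Michaelis-Menten mechanism, assuming
#   eps_BH = e0/s0, eps_RS = e0/K_M, eps_SSl = e0/(s0 + K_M),
# with Michaelis constant K_M = (k_{-1} + k_2)/k_1.


def michaelis_constant(k1: float, km1: float, k2: float) -> float:
    """Return K_M = (k_{-1} + k_2) / k_1."""
    return (km1 + k2) / k1


def classical_parameters(e0: float, s0: float, k1: float, km1: float, k2: float):
    """Return (eps_BH, eps_RS, eps_SSl) for given concentrations and rate constants."""
    K_M = michaelis_constant(k1, km1, k2)
    eps_bh = e0 / s0           # Briggs-Haldane
    eps_rs = e0 / K_M          # Reich-Selkov
    eps_ssl = e0 / (s0 + K_M)  # Segel-Slemrod
    return eps_bh, eps_rs, eps_ssl


if __name__ == "__main__":
    # Arbitrary illustrative values; units are left unspecified.
    bh, rs, ssl = classical_parameters(e0=1.0, s0=100.0, k1=1.0, km1=1.0, k2=9.0)
    print(f"eps_BH={bh:.4g}, eps_RS={rs:.4g}, eps_SSl={ssl:.4g}")
    # Since K_M > 0 and s0 > 0, eps_SSl never exceeds min(eps_BH, eps_RS).
    assert ssl <= min(bh, rs)
```

Small values of these numbers alone do not certify the accuracy of the reduction, which is the point taken up in the following paragraph.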

For the Michaelis–Menten reaction mechanism, singular perturbation theory shows convergence of solutions of (1) to corresponding solutions of the reduced equation as \(e_0\rightarrow 0\), in which case all of the parameters \(\varepsilon _{BH},\,\varepsilon _{RS},\,\varepsilon _{SSl}\) approach zero. On the other hand, it is not generally true that \(\varepsilon _{BH}\rightarrow 0\), or \(\varepsilon _{RS}\rightarrow 0\), or \(\varepsilon _{SSl}\rightarrow 0\), implies convergence to the solution of the reduced system. This, as well as related matters, was discussed in detail in Eilertsen et al. (2022), with a presentation of counterexamples. Further examples are given in Sect. 4.

These facts illustrate that considering a single parameter—without context and without a clearly defined notion of the limiting process—will generally not be sufficient to ensure the validity of some particular reduction. In a singular perturbation setting the critical manifold is the basic object, and one generally needs to specify the way in which corresponding small parameters approach zero.

With regard to the procedure outlined in Steps 1 to 3 above, a wish list for small parameters includes the following physically motivated conditions:

- \(\varepsilon _S\) is dimensionless;
- \(\varepsilon _S\) is composed of reaction rates and initial values (admitting an interpretation in physical terms);
- \(\varepsilon _S\) is controllable in experiments.

These requirements will be taken into account as well.

Our vantage point is work by Goeke et al. (2015, 2017), which provides an algorithmic approach to determine critical parameter values (Tikhonov–Fenichel parameter values, TFPV), and their critical manifolds: Choosing a curve in parameter space (with curve parameter \(\varepsilon \)) that starts at a TFPV gives rise to a singularly perturbed system, based on a clearly defined approach of the small parameter to zero.

Pursuing a less ambitious goal than the one outlined in Steps 1 to 3 above, we will utilize the separation of timescales on the slow manifold, adapting work by Lam and Goussis (1994) on computational singular perturbation theory. We focus attention on local considerations. Timescales are identified as inverse absolute real parts of eigenvalues of the linearization of a vector field, near stationary points. Restriction to the vicinity of stationary points is an essential condition here. Given a singular perturbation setting, Zagaris et al. (2004) proved that the approach via “small eigenvalue ratios” is consistent. Unless some eigenvalues of large modulus are purely imaginary, the eigenvalue approach provides a small parameter that satisfies the requirement in Step 1 above, up to a multiplicative constant that remains to be determined.Footnote 7 But dealing directly with eigenvalues (even in the rare case when they are explicitly known) is generally too cumbersome to allow productive work and concrete conclusions.

The emphasis of the present paper lies on local (linear) timescale estimates and comparisons, using a mix of algebraic and analytic tools. We will obtain parameters that are palatable to application-oriented readers and allow for interpretation in a biochemical context. Most of the parameters obtained have not appeared in the literature before, and some perhaps are unexpected.

1.2 Overview of Results

Given a chemical or biochemical reaction network or mechanism, we will present a method to obtain distinguished dimensionless parameters. These parameters are directly related to the local fast-slow dynamics of the singularly perturbed system. In contrast to many existing timescale estimates in the literature, the one employed here is conceptually consistent. Timescale considerations thus turn from an art into a relatively routine procedure, and we establish necessary conditions for timescale separation and singular perturbation reductions.

In the preparatory Sect. 2, we collect some notions and results related to singular perturbation theory. In particular, we recall Tikhonov–Fenichel parameter values (TFPV). We also note properties of the Jacobian and its characteristic polynomial on the critical manifold. It should be emphasized that our search always begins with identifying a TFPV and its associated critical manifold; all our small parameter estimates are rooted in this scenario. We establish a repository of dimensionless parameters from coefficients of the characteristic polynomial, and we recall the relation between these coefficients and the eigenvalues of the Jacobian. Finally, we fix some notation and establish some blanket nondegeneracy conditions that are assumed throughout the paper.

Section 3 is devoted to one-dimensional critical manifolds, which are of considerable relevance to experimentalists. Generally, the timecourse of a single product or substrate is measured in an experiment. Specific kinetic parameters (such as the Michaelis constant) are estimated via nonlinear regression, in which the recorded timecourse data are fitted to a one-dimensional autonomous QSS model that approximates substrate depletion (or product formation) on the slow timescale; see, for example, Stroberg and Schnell (2016) and Choi et al. (2017). In the one-dimensional setting, near the critical manifold there is exactly one eigenvalue of the Jacobian with small absolute real part. From the characteristic polynomial, we obtain distinguished small parameters, and we establish their correspondence to timescales. The parameters thus obtained admit an interpretation in terms of reaction parameters, so they satisfy a crucial practical requirement. They measure the ratio of the slow to the fastest timescale, and thus provide a necessary condition for timescale separation. But in dimension greater than two, this condition is not strong enough when there are large discrepancies within the fast timescales. According to Sect. 9.1 of the Appendix, the relevant quantity is the ratio of the slow to the “slowest of the fast” timescales. To estimate this ratio, we introduce another type of parameter that yields sharp estimates whenever all eigenvalues are “essentially real” [borrowing terminology of Lam and Goussis (1994)]. We then specialize our results to systems of dimensions two and three.

In Sect. 4, we apply the results from Sect. 3 to the (reversible and irreversible) Michaelis–Menten system in various circumstances. We obtain a distinguished parameter for the reversible system with small enzyme concentration; this seems to be new. Specializing to the irreversible case, we obtain a parameter \(\varepsilon _{MM}\) and conclude, via an argument different from that of Palsson and Lightfoot (1984), that the Reich–Selkov parameter \(\varepsilon _{RS}\) is the most suitable among the standard parameters for the irreversible system. Moreover, we obtain a rather surprising distinguished parameter for the partial equilibrium approximation with slow product formation. To support the claim that this is indeed an appropriate parameter for Step 1, as stated above, we determine relevant Lyapunov estimates, and we add some observations with regard to Step 3. To illustrate the necessity of some technical restrictions in our results, we close this section by discussing a degenerate scenario with a singular critical variety.

In Sect. 5, we turn to critical manifolds of dimension greater than one. Imitating the approach for one-dimensional critical manifolds and invoking results from local analytic geometry, we obtain distinguished parameters that measure the ratio of the fastest timescale to the “fastest of the slow” timescales. We provide a detailed analysis for three-dimensional systems with two-dimensional critical manifold.

In Sect. 6, we apply our theory to some familiar three-dimensional systems from biochemistry, viz. cooperative systems with two complexes, and competitive as well as uncompetitive inhibition, for low enzyme concentration. For these systems the only available perturbation parameters in common use seem to be \(\varepsilon _{BH}=e_0/s_0\), \(\varepsilon _{SSl}\) and ad hoc variants of these. There seems to exist no derivation of small parameters via timescale arguments (in the spirit of Segel and Slemrod) in the literature. We thus break new ground, and we obtain meaningful and useful distinguished parameters. We illustrate our results with several numerical examples, to verify the efficacy of the parameters. But, we also include simulations to show their limited applicability in certain regions of parameter space. Such limitations were to be expected, since Steps 2 and 3 are needed for a complete analysis. These examples also illustrate the necessity of additional hypotheses imposed in the derivation of the distinguished parameters.

In Sect. 7, we consider some reductions of three-dimensional systems obtained via projection onto two-dimensional critical manifolds. Specifically, we compute some two-dimensional reductions of the competitive and uncompetitive inhibitory reaction mechanisms, and we derive distinguished parameters that are relevant for the accuracy of these reductions. Again, we illustrate our results by numerical simulations. To finish, we discuss a three timescale scenario that leads to a hierarchical structure in which the two-dimensional slow manifold contains an embedded one-dimensional “very slow” manifold.

Section 9, the Appendix, recapitulates the Lyapunov function method for singularly perturbed systems, outlines the relevance of the eigenvalue ratios for Step 1, and makes some observations on Steps 2 and 3. Moreover, the Appendix contains a summary of some facts from the literature, and proofs of some technical results. Sections 2, 3 and 5 as well as the Appendix (Sect. 9) are mostly technical. Readers primarily interested in applications may want to skim these only, and focus on the applications in Sects. 4, 6 and 7.

2 Preliminaries

We will discuss parameter-dependent ordinary differential equations

$$\dot{x}=h(x,\pi ),\quad x\in \mathbb R^n,\ \pi \in \Pi \subseteq \mathbb R^m,\tag{7}$$

with the right-hand side a polynomial in x and \(\pi \). Our main motivation is the study of chemical mass action reaction mechanisms and their singular perturbation reductions.

2.1 Tikhonov–Fenichel Parameter Values (a Review)

We consider singular perturbation reductions that are based on the classical work by Tikhonov (1952) and Fenichel (1979). Frequently the pertinent theorems are stated for systems in slow-fast standard form

$$\begin{aligned} \dot{u}_1&=\varepsilon f(u_1,u_2,\varepsilon ),\\ \dot{u}_2&=g(u_1,u_2,\varepsilon ), \end{aligned}\tag{8}$$

with a small parameter \(\varepsilon \), subject to certain additional conditions. In slow time, \(\tau =\varepsilon t\), the reduced system takes the form

$$\begin{aligned} u_1'&=f(u_1,u_2,0),\\ 0&=g(u_1,u_2,0), \end{aligned}$$

and the above mentioned conditions ensure that the second equation admits a local resolution for \(u_2\) as a function of \(u_1\). For general parameter-dependent systems (7) one first needs to identify the parameter values from which such reductions emanate. We recall some notions and results (slightly modified from Goeke et al. 2015):

1. A parameter \(\widehat{\pi }\in \Pi \) is called a Tikhonov–Fenichel parameter value (TFPV) for dimension \(s\) (\(1\le s\le n-1\)) of system (7) whenever the following hold:

   (i) An irreducible component of the critical variety, i.e., of the zero set \( \mathcal {V}(h(\cdot , \widehat{\pi }))\) of \(x\mapsto h(x\,,\widehat{\pi })\), contains a (Zariski dense) local submanifold \(\widetilde{Y}\) of dimension \(s\), which is called the critical manifold.

   (ii) For all \(x\in \widetilde{Y}\) one has \(\textrm{rank}\,D_1h(x,\widehat{\pi })=n-s\) and

   $$\begin{aligned} \mathbb R^n = \textrm{Ker}\ D_1h(x,\widehat{\pi }) \oplus \textrm{Im}\ D_1h(x,\widehat{\pi }). \end{aligned}$$

   Here \(D_1\) denotes the partial derivative with respect to x.

   (iii) For all \(x\in \widetilde{Y}\) the nonzero eigenvalues of \(D_1h(x,\widehat{\pi })\) have real parts \(<0\).

2. Given a TFPV, for any smooth curve \(\varepsilon \mapsto \widehat{\pi }+\varepsilon \rho +\cdots \) in parameter space \(\Pi \), the system

   $$\begin{aligned} \dot{x}= h(x,\widehat{\pi }+\varepsilon \rho +\cdots )=h(x,\widehat{\pi })+\varepsilon D_2h(x,\widehat{\pi })\,\rho +\cdots =: h^{(0)}(x) +\varepsilon h^{(1)}(x)+\cdots , \end{aligned}$$

   with \(D_2\) denoting the partial derivative with respect to \(\pi \), admits a singular perturbation (Tikhonov–Fenichel) reduction. A standard method is to fix a parameter direction and a “ray” \(\varepsilon \mapsto \widehat{\pi }+\varepsilon \rho \) in parameter space. In a chemical interpretation this may correspond to a gradual increase of some parameters, such as initial concentrations. Our work will always be based on this procedure; by this specification we avoid ambiguities about the range of parameters.

-

3.

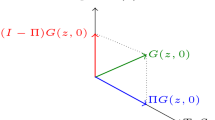

The computation of a reduction in the coordinate-free setting is described in Goeke and Walcher (2014): Assuming the TFPV conditions in item 1, there exist rational functions P, with values in \(\mathbb R^{n\times (n-s)}\), and \(\mu \), with values in \(\mathbb R^{n-s}\), such that

$$\begin{aligned} h^{(0)}(x)=P(x)\mu (x) \text { on } \widetilde{Y}, \end{aligned}$$and P(x) as well as \(D\mu (x)\) have full rank on \(\widetilde{Y}\). The reduced equation on \(\widetilde{Y}\) then has the representation

$$\begin{aligned} \dot{x}=\varepsilon \left( I-P(x)\left( D\mu (x)P(x)\right) ^{-1}D\mu (x)\right) h^{(1)}(x), \end{aligned}$$(9)which is correct up to \(O(\varepsilon ^2)\). By Tikhonov and Fenichel, solutions of (7) that start near \(\widetilde{Y}\) will converge to solutions of the reduced system as \(\varepsilon \rightarrow 0\). But some caveats are in order:

   - The reduction is guaranteed only locally, for neighborhoods of compact subsets of the critical manifold and for sufficiently small \(\varepsilon \). Explicitly determining a neighborhood in which the reduction is valid poses an individual problem for each system.Footnote 8

   - In particular, the distance of the initial value of (7) from the slow manifold (not only from the critical manifold) is relevant for the reduction. In general, an approximate initial value for the reduced equation on the slow manifold must be determined.

   - If the transversality condition in (ii) above breaks down, standard singular perturbation theory is no longer applicable. But even when it is satisfied, the range of validity for the reduction may be quite small. This reflects the effect of a local transformation to Tikhonov standard form.

   - Finally, the reduced equation may be trivial, in which case higher-order terms in \(\varepsilon \) are dominant and no conclusion can be drawn from the first-order reduction. By the same token, if the term following \(\varepsilon \) in (9) is small, then the quality of the reduction may be poor.

4. Turning to computational matters, consider the characteristic polynomial

   $$\begin{aligned} \chi (\tau ,x,\pi )=\tau ^n+\sigma _{1}(x,\pi )\tau ^{n-1}+\cdots + \sigma _{n-1}(x,\pi )\tau +\sigma _n(x,\pi ) \end{aligned}\tag{10}$$

   of the Jacobian \(D_1h(x,\pi )\). Then, given \(0<s<n\), if a parameter value \(\widehat{\pi }\) is a TFPV with locally exponentially attracting critical manifold \(\widetilde{Y}\) of dimension s, and \(x_0 \in \widetilde{Y}\), the following hold:

   - \(h(x_0,\widehat{\pi })=0\).
   - The characteristic polynomial \(\chi (\tau ,x,\pi )\) satisfies

     (i) \(\sigma _n(x_0,\widehat{\pi })=\cdots =\sigma _{n-s+1}(x_0,\widehat{\pi })=0\);

     (ii) all roots of \(\chi (\tau ,x_0,\widehat{\pi })/\tau ^s\) have negative real parts.

   This characterization shows that \(x_0\) satisfies an overdetermined system of equations (more than n equations in n variables), which in turn allows one to determine conditions on \(\widehat{\pi }\) algorithmically by way of elimination theory; see Goeke et al. (2015). By the Routh–Hurwitz theorem (see, e.g., Gantmacher 2005),

   $$\begin{aligned} \sigma _k(x_0,\widehat{\pi })>0 \text { for }x_0\in \widetilde{Y},\,1\le k\le n-s \end{aligned}$$

   is a necessary consequence of condition (ii). Necessary and sufficient conditions for TFPV are stated in Goeke et al. (2015), but we will not need them here.
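For the Michaelis–Menten system, items 3 and 4 can be checked by hand; the following Python sketch does so numerically. It assumes the model \(\dot s=-k_1e_0s+(k_1s+k_{-1})c\), \(\dot c=k_1e_0s-(k_1s+k_{-1}+k_2)c\) with TFPV \(e_0=0\), a perturbation direction that increases \(e_0\) (so \(\varepsilon =e_0\)), critical manifold \(c=0\), and the hand-chosen factorization \(P=(k_1s+k_{-1},\,-(k_1s+k_{-1}+k_2))^{\textrm{T}}\), \(\mu =c\); all names and values are hypothetical. On \(c=0\) the sketch confirms \(\sigma _2=0\) and \(\sigma _1>0\), and that formula (9) yields \(\dot s=-k_2e_0s/(K_M+s)\), \(\dot c=0\) with \(K_M=(k_{-1}+k_2)/k_1\).

```python
# Sketch under stated assumptions: irreversible Michaelis-Menten model
#   ds/dt = -k1*e0*s + (k1*s + km1)*c
#   dc/dt =  k1*e0*s - (k1*s + km1 + k2)*c
# with TFPV e0 = 0, epsilon = e0, and critical manifold c = 0.


def jacobian(s, c, e0, k1, km1, k2):
    """Jacobian D_1 h with respect to x = (s, c)."""
    return [[-k1 * e0 + k1 * c, k1 * s + km1],
            [k1 * e0 - k1 * c, -(k1 * s + km1 + k2)]]


def sigma_coefficients(J):
    """Coefficients of chi(tau) = tau^2 + sigma1*tau + sigma2 for a 2x2 matrix J."""
    sigma1 = -(J[0][0] + J[1][1])                   # minus the trace
    sigma2 = J[0][0] * J[1][1] - J[0][1] * J[1][0]  # determinant
    return sigma1, sigma2


def reduced_field(s, e0, k1, km1, k2):
    """Formula (9) at the point (s, 0) of the critical manifold, with epsilon = e0.

    h^(0)(s, c) = P(s) * mu(s, c) with P = (k1*s + km1, -(k1*s + km1 + k2))^T and
    mu = c, so D mu = (0, 1); h^(1) = (-k1*s, k1*s) is the coefficient of e0.
    """
    P = [k1 * s + km1, -(k1 * s + km1 + k2)]
    Dmu = [0.0, 1.0]
    h1 = [-k1 * s, k1 * s]
    Dmu_P = Dmu[0] * P[0] + Dmu[1] * P[1]     # 1x1 matrix, i.e. a scalar here
    Dmu_h1 = Dmu[0] * h1[0] + Dmu[1] * h1[1]
    # (I - P (D mu P)^{-1} D mu) h^(1), scaled by epsilon = e0
    return [e0 * (h1[i] - P[i] * Dmu_h1 / Dmu_P) for i in range(2)]


if __name__ == "__main__":
    k1, km1, k2 = 1.0, 1.0, 9.0
    K_M = (km1 + k2) / k1
    for s in (0.5, 5.0, 50.0):
        sigma1, sigma2 = sigma_coefficients(jacobian(s, 0.0, 0.0, k1, km1, k2))
        # Item 4 with n = 2 and s = 1: sigma_2 = 0 and sigma_1 > 0 on c = 0.
        assert sigma2 == 0.0 and sigma1 > 0.0
        ds, dc = reduced_field(s, 1e-3, k1, km1, k2)
        print(s, ds, -k2 * 1e-3 * s / (K_M + s), dc)
```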

2.2 Dimensionless Parameters

From Goeke et al. (2015), one finds critical parameter values and corresponding critical manifolds, but it remains to specify the notion of “small perturbation,” and to relate it to reaction parameters. Singular perturbation theory guarantees convergence in the limit \(\varepsilon \rightarrow 0\), but for a given system, estimates for the rate of convergence are desirable.

To be physically meaningful, relevant small parameters should be dimensionless. The only dimensions appearing in reaction parameters are time and concentration. Thus, by dimensional analysis (Buckingham Pi Theorem; see, e.g., Wan 2018), for m parameters there exist at least \(m-2\) independent dimensionless Laurent monomials in the parameters, such that every dimensionless analytic function of the reaction parameters can locally be expressed as a function of these.Footnote 9 This collection may be quite large; we impose the additional requirement that parameters should correspond to timescales. In a preliminary step, we therefore list an inventory of rational dimensionless quantities for the network or mechanism.
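To make the counting concrete, here is a small Python sketch for a hypothetical parameter set, the five parameters \(k_1,k_{-1},k_2,e_0,s_0\) of an irreversible Michaelis–Menten mechanism (\(m=5\)). The number of independent dimensionless Laurent monomials is m minus the rank of the dimension matrix; here the rank is 2, so the lower bound \(m-2=3\) is attained. Exact rank computation with fractions is one convenient choice, not a prescribed method.

```python
from fractions import Fraction

# Hypothetical example: exponents of (concentration, time) for the parameters
# k1, k_{-1}, k2, e0, s0 of an irreversible Michaelis-Menten mechanism.
DIMENSIONS = {
    "k1": (-1, -1),  # second-order rate constant: 1/(concentration * time)
    "km1": (0, -1),  # first-order rate constant: 1/time
    "k2": (0, -1),   # first-order rate constant: 1/time
    "e0": (1, 0),    # concentration
    "s0": (1, 0),    # concentration
}


def matrix_rank(rows):
    """Rank of a small integer/rational matrix by exact Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in rows]
    rank = 0
    ncols = len(m[0]) if m else 0
    for col in range(ncols):
        pivot = next((r for r in range(rank, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(len(m)):
            if r != rank and m[r][col] != 0:
                factor = m[r][col] / m[rank][col]
                m[r] = [a - factor * b for a, b in zip(m[r], m[rank])]
        rank += 1
    return rank


def count_dimensionless_groups(dims):
    """Buckingham Pi: number of parameters minus rank of the dimension matrix."""
    matrix = [list(row) for row in zip(*dims.values())]  # one row per base dimension
    return len(dims) - matrix_rank(matrix)


def is_dimensionless(exponents, dims=DIMENSIONS):
    """Check whether the Laurent monomial prod(p**exponents[p]) is dimensionless."""
    totals = [0, 0]
    for name, power in exponents.items():
        for i, d in enumerate(dims[name]):
            totals[i] += power * d
    return totals == [0, 0]


if __name__ == "__main__":
    print(count_dimensionless_groups(DIMENSIONS))          # 3 (= 5 - 2)
    print(is_dimensionless({"e0": 1, "s0": -1}))           # True: e0/s0
    print(is_dimensionless({"k1": 1, "e0": 1, "k2": -1}))  # True: k1*e0/k2
```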

Lemma 1

Let (7) correspond to a chemical reaction network (CRN) with mass action kinetics, and \(\chi \) as in (10). Then:

(a) The coefficient \(\sigma _k\) of \(\chi \) has dimension \((\textrm{Time})^{-k}\).

(b) Whenever \(i_1,\ldots ,i_p\ge 1\) and \(j_1,\ldots ,j_q\ge 1\) are integers such that \(i_1+\cdots +i_p=j_1+\cdots +j_q\), the expression

$$\begin{aligned} \dfrac{\sigma _{i_1}\cdots \sigma _{i_p}}{\sigma _{j_1}\cdots \sigma _{j_q}} \end{aligned}$$

(when defined) is dimensionless.

Proof

Every monomial on the right-hand side of (7) has dimension \(\textrm{Concentration}/\textrm{Time}\), since this holds for the left-hand side. The entries of the Jacobian \(D_1h\) are obtained via differentiation with respect to some \(x_i\), hence have dimension \((\textrm{Time})^{-1}\). Since \(\sigma _k\) is a homogeneous polynomial of degree k in the matrix entries, part (a) follows. Part (b) is an immediate consequence, since numerator and denominator both have dimension \((\textrm{Time})^{-(i_1+\cdots +i_p)}\).
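Lemma 1 can be checked numerically: a change of the time unit multiplies every entry of the Jacobian by a common factor c, so \(\sigma _k\) is multiplied by \(c^k\), while the quotients in part (b) are unchanged. The Python sketch below computes the \(\sigma _k\) with the Faddeev–LeVerrier recursion (a standard technique chosen here for illustration) for an arbitrary \(3\times 3\) test matrix; the matrix and function names are hypothetical.

```python
def matmul(A, B):
    """Product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def sigma_coefficients(A):
    """[sigma_1, ..., sigma_n] with det(tau*I - A) = tau^n + sigma_1*tau^(n-1) + ... + sigma_n.

    Faddeev-LeVerrier recursion: M_k = A M_{k-1} + sigma_{k-1} I and
    sigma_k = -trace(A M_k) / k, starting from M_0 = 0 and sigma_0 = 1.
    """
    n = len(A)
    M = [[0.0] * n for _ in range(n)]
    prev = 1.0  # sigma_0
    sigmas = []
    for k in range(1, n + 1):
        AM = matmul(A, M)
        M = [[AM[i][j] + (prev if i == j else 0.0) for j in range(n)] for i in range(n)]
        AM = matmul(A, M)
        prev = -sum(AM[i][i] for i in range(n)) / k
        sigmas.append(prev)
    return sigmas


def ratio(sigmas, num, den):
    """sigma_{i_1}...sigma_{i_p} / (sigma_{j_1}...sigma_{j_q}); index sums must agree."""
    if sum(num) != sum(den):
        raise ValueError("not dimensionless: index sums differ (Lemma 1(b))")
    value = 1.0
    for i in num:
        value *= sigmas[i - 1]
    for j in den:
        value /= sigmas[j - 1]
    return value


if __name__ == "__main__":
    # Arbitrary Jacobian with entries in 1/second.
    A = [[-3.0, 1.0, 0.5], [0.2, -2.0, 0.1], [0.0, 0.3, -1.0]]
    c = 60.0  # seconds -> minutes multiplies every rate by 60
    A_min = [[c * a for a in row] for row in A]
    s_sec, s_min = sigma_coefficients(A), sigma_coefficients(A_min)
    for k in (1, 2, 3):
        print(k, s_min[k - 1] / s_sec[k - 1], c ** k)  # Lemma 1(a): factor c^k
    for num, den in (((1, 2), (3,)), ((1, 1), (2,))):
        print(num, den, ratio(s_sec, num, den), ratio(s_min, num, den))  # Lemma 1(b)
```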

2.3 Timescales

There exist various notions of timescale in the literature, and in some cases this ambiguity influences the derivation of small parameters. As a case in point, Segel and Slemrod (1989) use different notions of timescale for the fast and slow dynamics. However, for systems that decay or grow exponentially, and by extension for linear and approximately linear systems, there exists a well-defined notion:

Definition 1

Let \(A:\,\mathbb R^n\rightarrow \mathbb R^n\) be a linear map, and consider the linear differential equation \(\dot{x}= A\,x\). For \(\lambda \) an eigenvalue of A, with nonzero real part, we call \(|\textrm{Re}\,\lambda |^{-1}\) the timescale corresponding to \(\lambda \).

The timescale of an invariant subspace \(V\subseteq \mathbb R^n\) (which is a subspace of a sum of generalized eigenspaces) is defined as the slowest timescale of the eigenvalues involved.

For a single eigenvalue, the timescale characterizes the speed of growth or decay of solutions along the generalized eigenspace of \(\lambda \). For an invariant subspace, it characterizes the speed for generic initial values.
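A minimal Python sketch of Definition 1 for \(2\times 2\) matrices, with eigenvalues from the quadratic formula; function names and test matrices are hypothetical. The stiff example has timescales 1 and 0.01, so the whole space has timescale 1; in the rotating example the imaginary parts \(\pm 5\) do not affect the timescale.

```python
import cmath


def eigenvalues_2x2(A):
    """Eigenvalues of a real 2x2 matrix via the quadratic formula (possibly complex)."""
    tr = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    root = cmath.sqrt(tr * tr - 4.0 * det)
    return (tr + root) / 2.0, (tr - root) / 2.0


def timescale(lam):
    """Definition 1: |Re(lambda)|^(-1), defined only for nonzero real part."""
    if lam.real == 0.0:
        raise ValueError("timescale undefined for eigenvalues with zero real part")
    return 1.0 / abs(lam.real)


def subspace_timescale(eigs):
    """Timescale of an invariant subspace: the slowest (largest) timescale involved."""
    return max(timescale(lam) for lam in eigs)


if __name__ == "__main__":
    stiff = [[-1.0, 0.0], [0.0, -100.0]]    # eigenvalues -1 and -100
    rotating = [[-1.0, 5.0], [-5.0, -1.0]]  # eigenvalues -1 +/- 5i
    for A in (stiff, rotating):
        eigs = eigenvalues_2x2(A)
        print([timescale(lam) for lam in eigs], subspace_timescale(eigs))
```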

We will work with this consistent notion of linear timescale, and its extension to linearizations of nonlinear systems near stationary points, throughout the paper. Thus, we adopt the perspective taken in Lam and Goussis (1994), which is justified by Fenichel’s local characterization of the dynamics near the critical manifold \(\widetilde{Y}\) (Fenichel 1979, Section V), as proven by Zagaris et al. (2004). Indeed, the time evolution near \(\widetilde{Y}\) is governed by the linearization \(D_1h(x,\widehat{\pi }+\varepsilon \rho )\), with \(\pi =\widehat{\pi }+\varepsilon \rho \) close to a TFPV \(\widehat{\pi }\), and \(x\in \widetilde{Y}\). For \(\pi =\widehat{\pi }\) the Jacobian has vanishing eigenvalues, hence for \(\pi \) near \(\widehat{\pi }\) one will have eigenvalues of small modulus, while all nonzero eigenvalues of \(D_1h(x,\widehat{\pi })\) have negative real parts.

From a practical perspective, eigenvalues are at best inconvenient to work with. Moreover, in our context, resorting to numerical approximations is not a viable option. To obtain more palatable parameters, we recall the correspondence between the eigenvalues \(\lambda _1,\ldots ,\lambda _n\) of \(D_1h(x,\widehat{\pi }+\varepsilon \rho )\) and the coefficients \(\sigma _k\) of the characteristic polynomial. One has

$$\sigma _k=(-1)^k\sum \lambda _{i_1}\cdots \lambda _{i_k},\quad 1\le k\le n,\tag{11}$$

with the summation extending over all tuples \(i_1,\ldots ,i_k\) such that \(1\le i_1<\cdots <i_k\le n\). In particular

$$\sigma _1=-(\lambda _1+\cdots +\lambda _n)=-\textrm{tr}\,D_1h,\qquad \sigma _n=(-1)^n\lambda _1\cdots \lambda _n=(-1)^n\det D_1h.$$
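The relation between the \(\sigma _k\) and the eigenvalues can be checked directly. The Python sketch below (hypothetical names; a block-diagonal \(3\times 3\) example with known eigenvalues \(-1\pm 5i\) and \(-3\)) evaluates the signed elementary symmetric sums and compares \(\sigma _1\) with minus the trace and \(\sigma _3\) with \((-1)^3\det \).

```python
from itertools import combinations
from math import prod


def sigmas_from_eigenvalues(eigs):
    """sigma_k = (-1)^k * sum over i_1 < ... < i_k of lambda_{i_1} ... lambda_{i_k}."""
    n = len(eigs)
    return [(-1) ** k * sum(prod(c) for c in combinations(eigs, k)) for k in range(1, n + 1)]


def trace(A):
    """Sum of the diagonal entries."""
    return sum(A[i][i] for i in range(len(A)))


def det3(A):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
            - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
            + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))


if __name__ == "__main__":
    # Block-diagonal matrix whose eigenvalues are -1 + 5i, -1 - 5i and -3.
    A = [[-1.0, 5.0, 0.0], [-5.0, -1.0, 0.0], [0.0, 0.0, -3.0]]
    s1, s2, s3 = sigmas_from_eigenvalues([complex(-1, 5), complex(-1, -5), -3.0])
    print(s1, -trace(A))  # sigma_1 = -trace
    print(s3, -det3(A))   # sigma_3 = (-1)^3 det
    print(s2)             # real, since complex eigenvalues come in conjugate pairs
```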

2.4 Blanket Assumptions

The principal goal of the present paper is to provide consistent and workable local timescale estimates in terms of the reaction parameters. Throughout the remainder of the paper, the following notions will be used and the following assumptions will be understood:

1. We consider a polynomial parameter-dependent system (7), and a TFPV \(\widehat{\pi }\) for dimension \(s\ge 1\), with critical manifold \(\widetilde{Y}\). The entries of \(\widehat{\pi }\) are not uniquely determined by the critical manifold. We allow these entries to range in a suitable compact subset of parameter space (to be restricted by requirements in the following items).

2. We fix \(\rho \) in the parameter space, and consider the singularly perturbed system for the ray in parameter space \(\widehat{\pi }+\varepsilon \rho \), with \(0\le \varepsilon \le \varepsilon _\textrm{max}\), and restrictions on \(\varepsilon _\textrm{max}>0\) to be specified.

3. Moreover, we let \(K\subset \mathbb R^n\) be a compact set with nonempty interior, such that \(\widetilde{Y}\cap K\) is also compact. K should contain the initial values for all relevant solutions of (7).Footnote 10

4. Since \(\widehat{\pi }\) is a TFPV, we have \(\sigma _k(x,\widehat{\pi })>0\) for all \(x\in \widetilde{Y}\cap K\), \(1\le k\le n-s\). We choose \(\varepsilon _\textrm{max}\) so that \(\sigma _k(x,\widehat{\pi }+\varepsilon \rho )\) is defined and bounded above and below by positive constants on

   $$\begin{aligned} K^*=K^*(\varepsilon _\textrm{max})=\left( \widetilde{Y}\cap K\right) \times [0,\varepsilon _\textrm{max}], \end{aligned}\tag{12}$$

   for \(1\le k\le n-s\). Such a choice is possible by compactness and continuity, given a suitable compact set in parameter space.

5. As a crucial basic condition, we require that Tikhonov–Fenichel reduction is accurate up to order \(\varepsilon ^2\) in a compact neighborhood \(\widetilde{K}\) of \(\widetilde{Y}\cap K\), with \(\varepsilon \le \varepsilon _\textrm{max}\). Consult Sect. 9.1 to verify that this requirement can be satisfied.

We emphasize that the present paper focuses on asymptotic timescale estimates near the critical manifold, which are based on Fenichel’s local theory. The determination of \(\varepsilon _\textrm{max}\) (and by extension, the range of applicability) will not be addressed in general. Moreover, in applications we may replace sharp estimates by weaker ones that permit an interpretation in biochemical terms.

3 Critical Manifolds of Dimension One

In this technical section, we consider system (7) in \(\mathbb R^n\), \(n\ge 2\), with a critical manifold of dimension \(s=1\). We will derive two types of distinguished parameters that characterize timescale discrepancies, and discuss systems of dimensions two and three in some detail.

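We have \(\sigma _n(x,\widehat{\pi })=0\) on \(\widetilde{Y}\), and \(\sigma _k(x,\widehat{\pi }+\varepsilon \rho )>0\) for \(1\le k\le n-1\), \(x\in \widetilde{Y}\cap K\) and \(0\le \varepsilon \le \varepsilon _\textrm{max}\). Moreover

$$\begin{aligned} \sigma _n(x,\widehat{\pi }+\varepsilon \rho )=\varepsilon \,\widehat{\sigma }_n(x,\widehat{\pi },\rho ,\varepsilon ),\quad x\in \widetilde{Y}, \end{aligned}$$

with a polynomial \(\widehat{\sigma }_n\). We require the nondegeneracy condition

$$\begin{aligned} \widehat{\sigma }_n(x,\widehat{\pi },\rho ,0)\not =0\quad \text {for all } x\in \widetilde{Y}\cap K. \end{aligned}$$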

Denote by \(\lambda _1,\ldots ,\lambda _n\) the eigenvalues of \(D_1h(x,\pi )\), choosing the labels so that \(\lambda _n(x,\widehat{\pi })=0\) for all \(x\in \widetilde{Y}\cap K\).

The following facts are known. For the reader’s convenience, we recall some proofs in the Appendix.

Lemma 2


(a)

One has

$$\begin{aligned} \lambda _n(x,\widehat{\pi }+\varepsilon \rho )=\varepsilon \widehat{\lambda }_n(x,\widehat{\pi },\rho ,\varepsilon ), \end{aligned}$$with \(\widehat{\lambda }_n\) analytic, and \(\widehat{\lambda }_n(x,\widehat{\pi },\rho ,0)\not =0\) on K.


(b)

Given \(\beta >1\), there exist \(\Theta >0\), \(\theta >0\) such that \(-\Theta /\beta \le \textrm{Re}\,\lambda _i(x,\widehat{\pi })\le -\beta \theta \) for all \(x\in \widetilde{Y}\cap K\), \(1\le i\le n-1\).


(c)

For suitably small \(\varepsilon _\textrm{max}\), one has

$$\begin{aligned} -\Theta \le \textrm{Re}\,\lambda _i(x,\widehat{\pi }+\varepsilon \rho )\le -\theta \end{aligned}$$for all \((x,\varepsilon )\in K^*\), \(1\le i\le n-1\).
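Lemma 2(a) can be observed on a toy example. The sketch below uses a hypothetical \(2\times 2\) Jacobian family \(J(\varepsilon )=\begin{pmatrix}-\varepsilon & 1\\ \varepsilon & -2\end{pmatrix}\), which is not taken from any network in this paper; \(J(0)\) has eigenvalues \(0\) and \(-2\), and \(\det J(\varepsilon )=\varepsilon \). The helper names are ours. If the lemma applies, the small eigenvalue divided by \(\varepsilon \) should approach \(\widehat{\lambda }_2=-1/2\), while the other eigenvalue stays near \(-2\).

```python
import math


def eigenvalues_2x2(a, b, c, d):
    """Real eigenvalues of the matrix [[a, b], [c, d]].

    Returns (lam_big, lam_small) with |lam_big| >= |lam_small|. The small root
    is computed as det / lam_big, which avoids cancellation when det is tiny.
    Assumes a nonnegative discriminant and a nonzero trace.
    """
    sigma1 = -(a + d)            # characteristic polynomial: t^2 + sigma1*t + sigma2
    sigma2 = a * d - b * c
    disc = sigma1 * sigma1 - 4.0 * sigma2
    if disc < 0.0:
        raise ValueError("complex eigenvalues")
    # root of larger modulus: add the square root with the sign of -sigma1
    lam_big = (-sigma1 - math.copysign(math.sqrt(disc), sigma1)) / 2.0
    lam_small = sigma2 / lam_big
    return lam_big, lam_small


def jacobian(eps):
    # hypothetical family J(eps) = [[-eps, 1], [eps, -2]]; det J(eps) = eps,
    # so sigma_2 = eps * sigma2_hat with sigma2_hat = 1
    return -eps, 1.0, eps, -2.0


for eps in (1e-2, 1e-4, 1e-6):
    lam_big, lam_small = eigenvalues_2x2(*jacobian(eps))
    # lam_small / eps approximates lambda_hat_2, which tends to -1/2 as eps -> 0
    print(f"eps={eps:.0e}  lam_big={lam_big:.6f}  lam_small/eps={lam_small / eps:.6f}")
```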

3.1 Distinguished Small Parameters

We turn to the construction of small parameters from the repository in Lemma 1. Consider the rational function

Definition 2


(i)

Let

$$\begin{aligned} \begin{array}{rcl} L(\widehat{\pi },\rho )&{}:=&{}\inf _{x\in \widetilde{Y}\cap K}\left| \dfrac{\widehat{\sigma }_n(x,\widehat{\pi },\rho ,0)}{\sigma _{1}(x,\widehat{\pi })\cdot \sigma _{n-1}(x,\widehat{\pi })}\right| ,\\ U(\widehat{\pi },\rho )&{}:=&{}\sup _{x\in \widetilde{Y}\cap K}\left| \dfrac{\widehat{\sigma }_n(x,\widehat{\pi },\rho ,0)}{\sigma _{1}(x,\widehat{\pi })\cdot \sigma _{n-1}(x,\widehat{\pi })}\right| .\\ \end{array} \end{aligned}\tag{16}$$

(ii)

We call

$$\begin{aligned} \varepsilon ^*(\widehat{\pi },\rho ,\varepsilon ):=\varepsilon \cdot U(\widehat{\pi },\rho ) \end{aligned}\tag{17}$$ the distinguished upper bound for the TFPV \(\widehat{\pi }\) with parameter direction \(\rho \) of system (7), and we call

$$\begin{aligned} \varepsilon _*(\widehat{\pi },\rho ,\varepsilon ):=\varepsilon \cdot L(\widehat{\pi },\rho ) \end{aligned}\tag{18}$$ the distinguished lower bound for the TFPV \(\widehat{\pi }\) with parameter direction \(\rho \).

By the nondegeneracy condition, one has \(U(\widehat{\pi },\rho )\ge L(\widehat{\pi },\rho )>0\). We obtain the following asymptotic inequalities:

Proposition 1

Given \(\alpha >0\), for sufficiently small \(\varepsilon _\textrm{max}\), the inequalities

hold on \(K^*\).

Proof

By analyticity in \(\varepsilon \) one has, for \(\varepsilon _\textrm{max}\) sufficiently small,

for all \((x,\varepsilon )\in K^*\). The assertion follows.

Remark 1

There are two points to make:

-

By definition, determining the distinguished upper and lower bounds amounts to determining the maximum and minimum of a rational function on a compact set. It may not be possible (or not advisable) to determine \(\varepsilon ^*\) or \(\varepsilon _*\) exactly, and one may have to be content with sufficiently tight upper or lower estimates, respectively.

-

The derivation of the small parameters involves the critical manifold and the TFPV \(\widehat{\pi }\), hence they depend on these choices. Moreover, there is some freedom of choice for the parameter direction \(\rho \), which also influences the bounds. For these reasons one should not assume universal efficacy of any small parameter without further context.
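As Remark 1 notes, \(L\) and \(U\) are the infimum and supremum of a rational function over \(\widetilde{Y}\cap K\). When \(\widetilde{Y}\cap K\) is parameterized by a single coordinate, a crude first look can be taken by sampling on a grid. The sketch below does this for a hypothetical ratio standing in for \(\widehat{\sigma }_n/(\sigma _1\sigma _{n-1})\); the function names are ours. Grid sampling approximates the supremum from below and the infimum from above, so it does not by itself yield certified bounds.

```python
def grid_bounds(ratio, lo, hi, n=10_001):
    """Min and max of |ratio(x)| over n equally spaced points of [lo, hi].

    Heuristic only: the sampled max can underestimate the true supremum and the
    sampled min can overestimate the true infimum.
    """
    values = [abs(ratio(lo + (hi - lo) * i / (n - 1))) for i in range(n)]
    return min(values), max(values)


def ratio(s):
    # hypothetical stand-in for sigma_hat_n(x, pi_hat, rho, 0) / (sigma_1 * sigma_{n-1}),
    # with the critical manifold parameterized by s in [0, 5]
    return (1.0 + s) / (2.0 + s) ** 2


L, U = grid_bounds(ratio, 0.0, 5.0)
eps = 1e-3
print("L =", L, "U =", U)
print("eps_lower =", eps * L, "eps_upper =", eps * U)   # analogues of (18) and (17)
```

Here the sample ratio is decreasing in \(s\), so its extrema sit at the endpoints and the grid recovers them exactly (\(1/4\) and \(6/49\)); for ratios that are not monotone one would refine the grid or locate critical points by calculus.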

3.2 The Correspondence to Timescales

We now discuss the correspondence between timescales and the parameters determined from (15). By direct verification, via (11) one finds for the eigenvalues \(\lambda _1,\ldots ,\lambda _n\) of \(D_1h(x,\pi )\):

Lemma 3


(a)

The identity

$$\begin{aligned} \sum _{i\not =j}\frac{\lambda _i}{\lambda _j}=\frac{\sigma _1\sigma _{n-1}}{\sigma _n}-n \end{aligned}\tag{20}$$ holds whenever all \(\lambda _i\not =0\).


(b)

With \((x,\varepsilon )\in K^*\), for \(\varepsilon \not =0\) one has

$$\begin{aligned} \frac{1}{\varepsilon }\sum _{i<n}\lambda _i/\widehat{\lambda }_n+\sum _{i\not =j;\,i,j<n}\lambda _i/\lambda _j+\varepsilon \sum _{i<n}\widehat{\lambda }_n/\lambda _i=\frac{1}{\varepsilon }\frac{\sigma _1\sigma _{n-1}}{\widehat{\sigma }_n}-n. \end{aligned}$$
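Identity (20) is easy to confirm numerically. The sketch below builds \(\sigma _1,\ldots ,\sigma _n\) from a prescribed, hypothetical spectrum using the convention \(\prod _i(\tau -\lambda _i)=\tau ^n+\sigma _1\tau ^{n-1}+\cdots +\sigma _n\). This convention gives \(\sigma _k>0\) when all eigenvalues have negative real parts. The sketch then compares both sides of (20). The helper names are ours.

```python
def char_poly_coeffs(eigs):
    """Return [sigma_1, ..., sigma_n] with prod(t - lam) = t^n + sigma_1 t^(n-1) + ... + sigma_n."""
    coeffs = [1 + 0j]                      # running product, highest degree first
    for lam in eigs:
        new = coeffs + [0j]                # multiply by t (shift)
        for i in range(1, len(new)):
            new[i] -= lam * coeffs[i - 1]  # ... and subtract lam times the old polynomial
        coeffs = new
    return coeffs[1:]


def pair_sum(eigs):
    """Left-hand side of (20): sum over ordered pairs i != j of lam_i / lam_j."""
    return sum(li / lj for i, li in enumerate(eigs)
               for j, lj in enumerate(eigs) if i != j)


def sigma_expression(eigs):
    """Right-hand side of (20): sigma_1 * sigma_{n-1} / sigma_n - n."""
    s = char_poly_coeffs(eigs)
    n = len(eigs)
    return s[0] * s[n - 2] / s[n - 1] - n


spectrum = [-3.0, -1 + 0.5j, -1 - 0.5j, -0.01]   # hypothetical eigenvalues
print(pair_sum(spectrum), sigma_expression(spectrum))
```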

This gives rise to further asymptotic inequalities:

Proposition 2

Let \(\beta \), \(\theta \) and \(\Theta \) be as in Lemma 2, and \(\alpha >0\). Then, for sufficiently small \(\varepsilon _\textrm{max}>0\), the following hold:


(a)

For all \((x,\varepsilon )\in K^*\),

$$\begin{aligned} \frac{1}{(1+\alpha )}\varepsilon _*(\widehat{\pi },\rho ,\varepsilon )\le \left| \dfrac{\lambda _n(x,\widehat{\pi }+\varepsilon \rho )}{\sum _{i<n}\lambda _i(x,\widehat{\pi }+\varepsilon \rho )}\right| \le (1+\alpha )\varepsilon ^*(\widehat{\pi },\rho ,\varepsilon ). \end{aligned}\tag{21}$$ In particular, there exist constants \(C_1,\,C_2\) such that

$$\begin{aligned} C_1 \varepsilon \le \left| \dfrac{\lambda _n(x,\widehat{\pi }+\varepsilon \rho )}{\sum _{i<n}\lambda _i(x,\widehat{\pi }+\varepsilon \rho )}\right| \le C_2 \varepsilon . \end{aligned}$$

(b)

The global estimates

$$\begin{aligned} \frac{1}{(1+\alpha )}\varepsilon _*\le \dfrac{\inf |\lambda _n|}{(n-1)\Theta }\le \dfrac{\sup |\lambda _n|}{(n-1)\theta } \le (1+\alpha )\varepsilon ^* \end{aligned}\tag{22}$$ hold, with infimum and supremum being taken over all \((x,\varepsilon )\in K^*\).

Proof

From Lemma 3 one obtains that

for all \((x,\varepsilon )\in K^*\), with bounded \(\eta \). Combining this with Proposition 1 yields the assertions of part (a), and also

for all \((x,\varepsilon )\in K^*\), provided \(\varepsilon _\textrm{max}\) is sufficiently small. Noting

the second statement follows by standard estimates.

Informally speaking, Proposition 2 provides estimates for the ratio of the slowest to the fastest timescale, with \(\sum _{i<n}\lambda _i\) being dominated by the real part with largest modulus. Thus, for dimension \(n>2\), the estimates may be unsatisfactory whenever \(\Theta \gg \theta \). For applications the second estimate in (22) is more relevant, since the fast dynamics will be governed by the smallest absolute real part of \(\lambda _1,\ldots ,\lambda _{n-1}\) (see Sect. 9.1). As should be expected, the parameter \(\varepsilon ^*\) by itself does not completely characterize the timescale discrepancies: when more than one eigenvalue ratio is relevant, a single quantity cannot capture all of them.
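The caveat about \(\Theta \gg \theta \) can be made concrete. Consider a hypothetical spectrum with fast eigenvalues \(-100\) and \(-1\) and slow eigenvalue \(-0.01\). The ratio controlled by (21) is about a hundred times smaller than the ratio of the slow eigenvalue to the slowest fast one, and it is the latter that is relevant for the approach to the slow manifold. The function name below is ours.

```python
def timescale_ratios(fast, slow):
    """Return (|slow / sum(fast)|, |slow / Re(weakest fast eigenvalue)|).

    The first quantity is the one estimated in (21); the second compares the slow
    eigenvalue with the fast eigenvalue of smallest |Re|.
    """
    to_sum = abs(slow / sum(fast))
    weakest = min(fast, key=lambda lam: abs(complex(lam).real))
    to_weakest = abs(slow / complex(weakest).real)
    return to_sum, to_weakest


to_sum, to_weakest = timescale_ratios([-100.0, -1.0], -0.01)
print(to_sum, to_weakest)   # about 9.9e-05 and 0.01
```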

However, in the following—specialized but relevant—setting a general estimate can be obtained from the coefficients of the characteristic polynomial.

Proposition 3

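Let \(\beta \), \(\theta \) and \(\Theta \) be as in Lemma 2, and \(\alpha >0\). Moreover assume that the eigenvalues \(\lambda _1,\ldots ,\lambda _{n-1}\) satisfy \(|\textrm{Re}\,\lambda _j|>|\textrm{Im}\,\lambda _j|\), and let \(|\textrm{Re}\,\lambda _1|\ge \cdots \ge |\textrm{Re}\,\lambda _{n-1}|\). Define

$$\begin{aligned} \mu ^*(\widehat{\pi },\rho ,\varepsilon ):=\varepsilon \cdot \sup _{x\in \widetilde{Y}\cap K}\left| \dfrac{\widehat{\sigma }_n(x,\widehat{\pi },\rho ,0)\cdot \sigma _{n-2}(x,\widehat{\pi })}{\sigma _{n-1}(x,\widehat{\pi })^2}\right| \end{aligned}$$

(with the convention \(\sigma _0=1\) when \(n=2\)). Then, for sufficiently small \(\varepsilon _\textrm{max}>0\), one has

$$\begin{aligned} \sup _{(x,\varepsilon )\in K^*}\left| \dfrac{\lambda _n}{\textrm{Re}\,\lambda _{n-1}}\right| \le \sqrt{2} (1+\alpha )\ \mu ^*. \end{aligned}$$

Whenever \(\lambda _{n-1}\in \mathbb R\), the estimate can be sharpened to

$$\begin{aligned} \sup _{(x,\varepsilon )\in K^*}\left| \dfrac{\lambda _n}{\lambda _{n-1}}\right| \le (1+\alpha )\ \mu ^*. \end{aligned}$$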

Proof


(i)

Preliminary observation: Let \(k\ge 2\) and \(\beta _1,\ldots ,\beta _k\in \mathbb C\) with negative real parts, and \(|\textrm{Re}\,\beta _1|\ge \cdots \ge |\textrm{Re}\,\beta _{k}|\). Moreover denote by \((-1)^\ell \tau _\ell \) the \(\ell ^\textrm{th}\) elementary symmetric polynomial in the \(\beta _j\). If \(|\textrm{Re}\,\beta _j|>|\textrm{Im}\,\beta _j|\) for \(j=1,\ldots ,k\), then

$$\begin{aligned} |\textrm{Re}\,\beta _k|\ge \dfrac{\tau _{k}}{\sqrt{2}\tau _{k-1}}, \text { and } |\beta _k|\ge \dfrac{\tau _{k}}{\tau _{k-1}} \text { when } \beta _k\in \mathbb R. \end{aligned}$$To verify this, recall

$$\begin{aligned} \sum _{i\not =j}\dfrac{\beta _i}{\beta _j}=\dfrac{\tau _1\tau _{k-1}}{\tau _k}-k\le \dfrac{\tau _1\tau _{k-1}}{\tau _k}-1. \end{aligned}$$ Now, for complex numbers \(z,\,w\) with negative real parts and \(|\textrm{Re}\,z|>|\textrm{Im}\,z|\), \(|\textrm{Re}\,w|>|\textrm{Im}\,w|\), one has \(\textrm{Re}\,\frac{z}{w}>0\). Therefore, all \(\textrm{Re}\,\beta _i/\beta _j>0\), \(1\le i,\,j\le k\), and since their sum is real we obtain the estimate

$$\begin{aligned} \dfrac{\tau _1}{|\beta _k|}=\sum _{i=1}^{k}\dfrac{\beta _i}{\beta _k}=1+\sum _{i=1}^{k-1}\dfrac{\beta _i}{\beta _k}\le \dfrac{\tau _1\tau _{k-1}}{\tau _k}. \end{aligned}$$With \(|\textrm{Re}\,\beta _k|\ge |\beta _k|/\sqrt{2}\) the assertion follows. For real \(\beta _k\) the factor \(\sqrt{2}\) may be discarded.


(ii)

We apply the above to the \(\lambda _i(x,\widehat{\pi })\) and \(\sigma _j(x,\widehat{\pi })\), \(1\le i \le n-1\), obtaining

$$\begin{aligned} \sigma _1\le \sqrt{2}|\textrm{Re}\,\lambda _{n-1}|\ \dfrac{\sigma _1\sigma _{n-2}}{\sigma _{n-1}}. \end{aligned}$$By Lemma 3, we have (with arguments \(x\in \widetilde{Y}\cap K\), \(\widehat{\pi }\) and \(\rho \) suppressed)

$$\begin{aligned} \left| \dfrac{\sigma _1\sigma _{n-1}}{\widehat{\sigma }_n}\right| =\left| \dfrac{\lambda _1+\cdots +\lambda _{n-1}}{\widehat{\lambda }_n}\right| =\left| \dfrac{\sigma _1}{\widehat{\lambda }_n}\right| \le \sqrt{2}\left| \dfrac{\textrm{Re}\,\lambda _{n-1}}{\widehat{\lambda }_n}\right| \cdot \left| \dfrac{\sigma _1\sigma _{n-2}}{\sigma _{n-1}}\right| , \end{aligned}$$and in turn

$$\begin{aligned} \left| \dfrac{\widehat{\lambda }_n(x,\widehat{\pi },\rho ,0)}{\textrm{Re}\,\lambda _{n-1}(x,\widehat{\pi })}\right| \le \sqrt{2} \,\dfrac{\widehat{\sigma }_n(x,\widehat{\pi },\rho ,0)\sigma _{n-2}(x,\widehat{\pi })}{\sigma _{n-1}(x,\widehat{\pi })^2}. \end{aligned}$$By continuity and compactness the assertion readily follows when \(\varepsilon _\textrm{max}\) is sufficiently small. As in (i) the factor \(\sqrt{2}\) may be discarded for real \(\lambda _{n-1}\).
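A quick plausibility check of Proposition 3, at a fixed small \(\varepsilon \) rather than in the limit, is sketched below. The hypothetical spectrum has a fast complex pair \(-2\pm i\) (so \(|\textrm{Re}\,\lambda |>|\textrm{Im}\,\lambda |\)) and a slow eigenvalue \(-0.01\). The sketch compares \(|\lambda _3/\textrm{Re}\,\lambda _2|\) with \(\sqrt{2}\,\sigma _3\sigma _1/\sigma _2^2\), computing all coefficients from the full spectrum. It illustrates the inequality; it does not prove it.

```python
import math


def char_poly_coeffs(eigs):
    """[sigma_1, ..., sigma_n] with prod(t - lam) = t^n + sigma_1 t^(n-1) + ... + sigma_n.

    Real parts are returned, which is appropriate for spectra closed under conjugation.
    """
    coeffs = [1 + 0j]
    for lam in eigs:
        new = coeffs + [0j]
        for i in range(1, len(new)):
            new[i] -= lam * coeffs[i - 1]
        coeffs = new
    return [c.real for c in coeffs[1:]]


def mu_ratio(eigs):
    """|sigma_n * sigma_{n-2} / sigma_{n-1}^2|, with the convention sigma_0 = 1."""
    s = [1.0] + char_poly_coeffs(eigs)    # s[k] = sigma_k
    n = len(eigs)
    return abs(s[n] * s[n - 2] / s[n - 1] ** 2)


fast, slow = [-2 + 1j, -2 - 1j], -0.01     # hypothetical spectrum
weakest = min(fast, key=lambda lam: abs(lam.real))
ratio = abs(slow / weakest.real)          # |lambda_3 / Re lambda_2|
bound = math.sqrt(2) * mu_ratio(fast + [slow])
print(ratio, bound, ratio <= bound)
```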

Remark 2

There are four observations to make:

-

As with the distinguished upper bound, determining \(\mu ^*\) amounts to finding the maximum of a rational function on a compact set.

-

The proofs of Propositions 2 and 3 implicitly impose further restrictions on \(\varepsilon _\textrm{max}\).

-

Proposition 3 holds in particular in settings when all eigenvalues are “essentially real,” meaning small \({|{\hbox {Im}} \lambda |}/{ |{\hbox {Re}} \lambda |}\). This is frequently the case for chemical networks and reaction mechanisms.

-

Analogous but weaker estimates can be derived whenever the ratios \({ |{\hbox {Im}} \lambda |}/{|{\hbox {Re}} \lambda |}\) are bounded above by some constant. Likewise, the estimates underlying part (i) of the proof could be sharpened.

3.3 Two-Dimensional Systems

We turn to systems of dimension two, where a TFPV necessarily refers to a critical manifold of dimension \(s=1\). We keep the notation and conventions from Sect. 2.4. Rather than specializing the asymptotic results from Propositions 2 and 3, we will retrace their derivation and obtain slightly sharper estimates.

First and foremost, the TFPV conditions imply that \(\sigma _1\) must be bounded above and below by positive constants. The accuracy of the reduction is reflected in the ratio of the eigenvalues \(\lambda _1,\,\lambda _2\) of \(D_1h(x,\widehat{\pi }+\varepsilon \rho )\) with x in the critical manifold, and \(\lambda _2=0\) at \(\widehat{\pi }\). Then

$$\begin{aligned} \sigma _1=-(\lambda _1+\lambda _2),\quad \sigma _2=\lambda _1\lambda _2, \end{aligned}$$

and moreover \( \lambda _2=\varepsilon \widehat{\lambda }_2\) and \( \sigma _2=\varepsilon \widehat{\sigma }_2\). For \(n=2\) the familiar identity

$$\begin{aligned} \dfrac{\sigma _1^2}{\sigma _2}=\dfrac{\lambda _1}{\lambda _2}+\dfrac{\lambda _2}{\lambda _1}+2, \end{aligned}$$

for \(\lambda _1\not =0,\,\lambda _2\not =0\) yields sharper estimates than Proposition 2. Similar estimates were also used in Eilertsen et al. (2022).

Lemma 4


(a)

For all \(M>1,\,\widetilde{M}>2,\, M^*>3\) the implications

$$\begin{aligned} \begin{array}{rcl} |\lambda _1/\lambda _2|&{}>&{}M \Rightarrow |\sigma _1^2/\sigma _2|>M+2;\\ |\sigma _1^2/\sigma _2|&{}\le &{}\widetilde{M} \Rightarrow |\lambda _1/\lambda _2|\le \widetilde{M}-2;\\ |\sigma _1^2/\sigma _2|&{}>&{}M^* \Rightarrow |\lambda _1/\lambda _2|>M^*-3, \end{array} \end{aligned}$$hold whenever \(|\lambda _2/\lambda _1|<1\).


(b)

In the TFPV case,

$$\begin{aligned} \frac{1}{\varepsilon }\cdot \frac{\sigma _1^2}{\widehat{\sigma }_2}=2+\varepsilon \frac{\widehat{\lambda }_2}{\lambda _1} + \frac{1}{\varepsilon }\frac{\lambda _1}{\widehat{\lambda }_2} \end{aligned}$$and with \(\varepsilon \rightarrow 0\)

$$\begin{aligned} \frac{\widehat{\sigma }_2(x,\widehat{\pi },\rho ,0)}{\sigma _1^2(x,\widehat{\pi })}=\frac{\widehat{\lambda }_2(x,\widehat{\pi },\rho ,0)}{\lambda _1(x,\widehat{\pi })}. \end{aligned}$$

(c)

For given \(\alpha >0\), suitable choice of \(\varepsilon _\textrm{max}\) yields

$$\begin{aligned} \frac{1}{(1+\alpha )}\varepsilon _*\le \inf \dfrac{|\lambda _2|}{|\lambda _1|}\le \sup \dfrac{|\lambda _2|}{|\lambda _1|} \le (1+\alpha )\varepsilon ^*, \end{aligned}\tag{27}$$ with infimum and supremum taken over all \((x,\varepsilon )\in K^*\).

Lemma 4 shows that \(\varepsilon ^*\) provides a tight global upper estimate for the eigenvalue ratio (and thus for the timescale ratio) as \(\varepsilon \rightarrow 0\), with x running through \(\widetilde{Y}\cap K\). Moreover, in the analysis of particular systems, one may retrace the arguments leading to the lemma, and determine estimates for \(\varepsilon _\textrm{max}\), e.g., from higher-order Taylor expansions.
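Lemma 4(c) says that \(\varepsilon ^*\) becomes a sharp estimate of \(|\lambda _2/\lambda _1|\) as \(\varepsilon \rightarrow 0\). Consider a hypothetical two-dimensional family with \(\sigma _1=2+\varepsilon \) and \(\sigma _2=\varepsilon \), so that \(\widehat{\sigma }_2=1\) and, since nothing depends on \(x\), \(\varepsilon ^*=\varepsilon /4\). The sketch evaluates the quotient of the eigenvalue ratio and \(\varepsilon ^*\). It also checks the identity \(\sigma _1^2/\sigma _2=2+\lambda _1/\lambda _2+\lambda _2/\lambda _1\) behind Lemma 4.

```python
import math


def eigenvalue_pair(sigma1, sigma2):
    """Roots of t^2 + sigma1 t + sigma2, assuming sigma1 > 0 and real roots.

    Returns (lam1, lam2) with |lam2| <= |lam1|; lam2 = sigma2 / lam1 avoids cancellation.
    """
    lam1 = (-sigma1 - math.sqrt(sigma1 * sigma1 - 4.0 * sigma2)) / 2.0
    return lam1, sigma2 / lam1


for eps in (1e-1, 1e-2, 1e-3, 1e-4):
    sigma1, sigma2 = 2.0 + eps, eps          # hypothetical family, sigma2_hat = 1
    lam1, lam2 = eigenvalue_pair(sigma1, sigma2)
    eps_star = eps * 1.0 / 2.0 ** 2          # eps * sigma2_hat / sigma_1(0)^2
    identity_gap = sigma1 ** 2 / sigma2 - (2.0 + lam1 / lam2 + lam2 / lam1)
    print(f"eps={eps:.0e}  ratio/eps*={abs(lam2 / lam1) / eps_star:.6f}  gap={identity_gap:.1e}")
```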

3.4 Three-Dimensional Systems

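We specialize the general results to dimension three. Given the blanket assumptions from Sect. 2.4, we denote by \(\lambda _1,\lambda _2\), and \(\lambda _3=\varepsilon \widehat{\lambda }_3\) the eigenvalues of the linearization. We have

$$\begin{aligned} \varepsilon ^*=\varepsilon \cdot \sup _{x\in \widetilde{Y}\cap K}\left| \dfrac{\widehat{\sigma }_3(x,\widehat{\pi },\rho ,0)}{\sigma _{1}(x,\widehat{\pi })\cdot \sigma _{2}(x,\widehat{\pi })}\right| , \end{aligned}$$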

and similar expressions for L and \(\varepsilon _*\).

Proposition 4

As for applicability of the parameter \(\mu ^*\), one has:


(a)

-

The eigenvalues \(\lambda _1\) and \(\lambda _2\) are real if and only if \(\sigma _1^2-4\sigma _2\ge 0\).

-

Given that \(\lambda _1\not \in \mathbb R\) and \(\lambda _2=\overline{\lambda }_1\), one has \(|\textrm{Re}\,\lambda _1|>|\textrm{Im}\,\lambda _1|\) if and only if \(\sigma _1^2-2\sigma _2> 0\).


(b)

Assume that one of the conditions in part (a) holds. Then, given \(\alpha >0\), for sufficiently small \(\varepsilon _\textrm{max}\) one has

$$\begin{aligned} \sup _{(x,\varepsilon )\in K^*}\left| \dfrac{\lambda _3}{\textrm{Re}\,\lambda _{2}}\right| \le \sqrt{2} (1+\alpha )\ \mu ^*, \end{aligned}$$resp.

$$\begin{aligned} \sup _{(x,\varepsilon )\in K^*}\left| \dfrac{\lambda _3}{\lambda _{2}}\right| \le (1+\alpha )\ \mu ^*\text { whenever } \lambda _{2}\in \mathbb R; \end{aligned}$$with

$$\begin{aligned} \mu ^*=\varepsilon \cdot \sup _{x\in \widetilde{Y}\cap K}\left| \dfrac{\widehat{\sigma }_3(x,\widehat{\pi },\rho ,0)\cdot \sigma _{1}(x,\widehat{\pi })}{\sigma _{2}(x,\widehat{\pi })^2}\right| . \end{aligned}$$

Proof

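To determine the nature of the eigenvalues on the critical manifold, we use the identity

$$\begin{aligned} (\lambda _1-\lambda _2)^2=(\lambda _1+\lambda _2)^2-4\lambda _1\lambda _2=\sigma _1^2-4\sigma _2. \end{aligned}$$

This implies the (well-known) first statement of part (a). The second statement follows from

$$\begin{aligned} \sigma _1^2-2\sigma _2=2\left( (\textrm{Re}\,\lambda _1)^2-(\textrm{Im}\,\lambda _1)^2\right) \quad \text {for } \lambda _2=\overline{\lambda }_1. \end{aligned}$$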

The rest is straightforward with Proposition 3.
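On the critical manifold the characteristic polynomial factors as \(\tau (\tau ^2+\sigma _1\tau +\sigma _2)\), so Proposition 4(a) reduces to sign tests on \(\sigma _1^2-4\sigma _2\) and \(\sigma _1^2-2\sigma _2\). The sketch below implements these tests and cross-checks them against roots computed with the standard `cmath` module. The sample coefficient pairs and function names are hypothetical.

```python
import cmath


def classify_fast_pair(sigma1, sigma2):
    """Classify the roots of t^2 + sigma1 t + sigma2 following Proposition 4(a)."""
    if sigma1 * sigma1 - 4.0 * sigma2 >= 0.0:
        return "real"
    if sigma1 * sigma1 - 2.0 * sigma2 > 0.0:
        return "complex, |Re| > |Im|"
    return "complex, |Re| <= |Im|"


def classify_by_roots(sigma1, sigma2):
    """Same classification, read off directly from the roots (for cross-checking)."""
    lam = (-sigma1 + cmath.sqrt(sigma1 * sigma1 - 4.0 * sigma2)) / 2.0
    if lam.imag == 0.0:
        return "real"
    return "complex, |Re| > |Im|" if abs(lam.real) > abs(lam.imag) else "complex, |Re| <= |Im|"


# hypothetical coefficient pairs: roots -1, -2; roots -2 +/- i; roots -1 +/- 2i
for sigma1, sigma2 in [(3.0, 2.0), (4.0, 5.0), (2.0, 5.0)]:
    print(sigma1, sigma2, classify_fast_pair(sigma1, sigma2), classify_by_roots(sigma1, sigma2))
```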

Remark 3

We make the following two points:

-

For \(\lambda _1\) and \(\lambda _2\) real and negative, one obtains a lower estimate from

$$\begin{aligned} \left| \dfrac{2\lambda _2}{\widehat{\lambda }_3}\right| \le \left| \dfrac{\lambda _1+\lambda _2}{\widehat{\lambda }_3}\right| =\left| \dfrac{\sigma _1\sigma _2}{\widehat{\sigma }_3}\right| \Longrightarrow 2\left| \dfrac{\widehat{\sigma }_3}{\sigma _1\sigma _2}\right| \le \left| \dfrac{\widehat{\lambda }_3}{\lambda _2}\right| \text { on } \widetilde{Y}\cap K. \end{aligned}$$

-

If \(\lambda _1\) is not real and \(\lambda _2=\overline{\lambda }_1\), with negative real parts, then the specialization of (20), viz.

$$\begin{aligned} \frac{\lambda _1+\lambda _2}{\lambda _3} +\left( \frac{\lambda _1}{\lambda _2}+\frac{\lambda _2}{\lambda _1}\right) +\left( \frac{\lambda _3}{\lambda _1}+\frac{\lambda _3}{\lambda _2}\right) =\frac{\sigma _1\sigma _2}{\sigma _3}-3, \end{aligned}$$for real \(\lambda _3\), \(|\lambda _3|<|\textrm{Re}\,\lambda _1|\), shows that both the second term and the third term on the left-hand side are bounded below by \(-2\) and above by 2, and we obtain

$$\begin{aligned} \dfrac{\sigma _1\sigma _2}{\sigma _3}-7\le \dfrac{2\textrm{Re}\,\lambda _1}{\lambda _3}\le \dfrac{\sigma _1\sigma _2}{\sigma _3}+1. \end{aligned}$$In particular this yields an asymptotic timescale estimate

$$\begin{aligned} \left| \dfrac{\widehat{\lambda }_3}{\textrm{Re}\,\lambda _1}\right| \rightarrow 2\left| \dfrac{\widehat{\sigma }_3}{\sigma _1\sigma _2}\right| \text { as }\varepsilon \rightarrow 0. \end{aligned}$$
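The second point of Remark 3 can be checked on a hypothetical spectrum: a complex pair \(-2\pm i\) together with \(\lambda _3=\varepsilon \widehat{\lambda }_3\), \(\widehat{\lambda }_3=-1/2\). The sketch below verifies the two-sided bound on \(2\,\textrm{Re}\,\lambda _1/\lambda _3\). It also shows that \(|\lambda _3/\textrm{Re}\,\lambda _1|\), divided by \(2|\sigma _3/(\sigma _1\sigma _2)|\), approaches 1 as \(\varepsilon \) decreases. The function names are ours.

```python
def char_poly_coeffs(eigs):
    """[sigma_1, ..., sigma_n] with prod(t - lam) = t^n + sigma_1 t^(n-1) + ... + sigma_n (real parts)."""
    coeffs = [1 + 0j]
    for lam in eigs:
        new = coeffs + [0j]
        for i in range(1, len(new)):
            new[i] -= lam * coeffs[i - 1]
        coeffs = new
    return [c.real for c in coeffs[1:]]


def remark3_check(eps, pair=(-2 + 1j, -2 - 1j), lam3_hat=-0.5):
    """Return (lower, middle, upper, quotient) for the bounds in Remark 3."""
    lam3 = eps * lam3_hat
    s1, s2, s3 = char_poly_coeffs(list(pair) + [lam3])
    middle = 2.0 * pair[0].real / lam3            # 2 Re(lambda_1) / lambda_3
    lower, upper = s1 * s2 / s3 - 7.0, s1 * s2 / s3 + 1.0
    quotient = abs(lam3 / pair[0].real) / (2.0 * abs(s3 / (s1 * s2)))
    return lower, middle, upper, quotient


for eps in (1e-2, 1e-3, 1e-4):
    lower, middle, upper, quotient = remark3_check(eps)
    print(f"eps={eps:.0e}  {lower:.3f} <= {middle:.3f} <= {upper:.3f}  quotient={quotient:.6f}")
```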

Remark 4

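When all eigenvalues are real, one obtains the ratio of \(\lambda _1\) and \(\lambda _2\), with \(|\lambda _2|\le |\lambda _1|\), from

$$\begin{aligned} \dfrac{\sigma _1^2}{\sigma _2}=2+\dfrac{\lambda _1}{\lambda _2}+\dfrac{\lambda _2}{\lambda _1}\quad \text {at } \widehat{\pi } \end{aligned}$$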

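and the arguments leading up to Lemma 4. With

$$\begin{aligned} \kappa _*:=\inf _{x\in \widetilde{Y}\cap K}\dfrac{\sigma _2(x,\widehat{\pi })}{\sigma _1(x,\widehat{\pi })^2},\quad \kappa ^*:=\sup _{x\in \widetilde{Y}\cap K}\dfrac{\sigma _2(x,\widehat{\pi })}{\sigma _1(x,\widehat{\pi })^2}, \end{aligned}$$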

the following hold for every \(\alpha >0\), with sufficiently small \(\varepsilon \):

-

On \(\widetilde{Y}\cap K\) one has

$$\begin{aligned} \left| \dfrac{\lambda _2}{\lambda _1}\right| \ge \dfrac{\kappa _*}{1+\alpha }. \end{aligned}$$

-

If \(|\lambda _2/\lambda _1|\le \delta \) for all \(x\in \widetilde{Y}\cap K\) then \(\kappa ^*\le \dfrac{\delta }{2\delta +1}\).

A large discrepancy between \(\lambda _1\) and \(\lambda _2\) (in addition to \(\mu ^*\ll 1\)) may indicate a scenario with three timescales (informally speaking): slow, fast and very fast. Cardin and Teixeira (2017) provided a rigorous extension of Fenichel theory to such settings, which gives solid ground for their analysis. Note that a large discrepancy between \(\varepsilon ^*\) and \(\mu ^*\) implies a large discrepancy between \(\lambda _1\) and \(\lambda _2\), in view of the definitions.

4 Michaelis–Menten Reaction Mechanism Revisited

The reader may wonder why we include a rather long section on the most familiar reaction in biochemistry. The basic motivation is that some widely held beliefs on its QSS variants are problematic [see Eilertsen et al. (2022) for a recent study]. Beyond this, the timescale ratio approach actually yields new results for the reversible Michaelis–Menten (MM) system, as well as for MM with slow product formation.

4.1 The Reversible Reaction with Low Enzyme Concentration

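The reversible MM reaction mechanism with low enzyme concentration corresponds to the system

$$\begin{aligned} \dot{s}&=-k_1(e_0-c)s+k_{-1}c,\\ \dot{c}&=k_1(e_0-c)s-(k_{-1}+k_2)c+k_{-2}(e_0-c)(s_0-s-c), \end{aligned}\tag{31}$$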

with standard initial conditions \(s(0)=s_0\), \(c(0)=0\). The earliest discussion of (31) dates back to Miller and Alberty (1958), but the reversible reaction has garnered relatively little attention compared to the irreversible one.

The parameter space \(\Pi =\mathbb R_{\ge 0}^6\) has elements \((e_0,s_0,k_1,k_{-1},k_2,k_{-2})^\textrm{tr}\), and we set \(x=(s,\,c)^\textrm{tr}\). As is well known, setting \(e_0=0\) and all other parameters \(>0\) defines a TFPV, with the critical manifold \(\widetilde{Y}\) given by \(c=0\). For the reduced equation, one finds (see, e.g., Noethen and Walcher 2011)

By the first blanket assumption in Sect. 2.4, we restrict \((s_0,k_1,k_{-1},k_2,k_{-2})^\textrm{tr}\) to a compact subset of the open positive orthant. With a fixed \(e_0^*>0\) (having the dimension of a concentration), we let \(\rho =(e_0^*,0,\ldots ,0)^\textrm{tr}\). We will work with both \(e_0\) and \(\varepsilon e_0^*\). Rather than obtaining \(\varepsilon _*\) and \(\varepsilon ^*\) directly from Lemma 4, we retrace their derivation and obtain error estimates in the process. The coefficients of the characteristic polynomial with \(x\in \widetilde{Y}\) are

$$\begin{aligned} \sigma _1&=k_{-1}+k_2+k_{-2}s_0+(k_1-k_{-2})s+(k_1+k_{-2})e_0,\\ \sigma _2&=e_0\left( k_1k_2+k_{-1}k_{-2}+k_1k_{-2}s_0+k_1k_{-2}e_0\right) . \end{aligned}$$

The set K (compatible with the standard initial conditions), defined by \(0\le s\le s_0\) and \(0\le c\le e_0^*\), is compact and positively invariant.

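We only discuss the case \(k_1\ge k_{-2}\). The other case amounts to reversing the roles of s and p. Note that \(\sigma _2\) is independent of s. The minimum of \(\sigma _1(x,\widehat{\pi }+\varepsilon \rho )\) on \(\widetilde{Y}\cap K\) equals

$$\begin{aligned} k_{-1}+k_2+k_{-2}s_0+(k_1+k_{-2})\varepsilon e_0^*\quad (\text {attained at } s=0), \end{aligned}$$

and the maximum is

$$\begin{aligned} k_{-1}+k_2+k_1s_0+(k_1+k_{-2})\varepsilon e_0^*\quad (\text {attained at } s=s_0). \end{aligned}$$

In particular, the minimum of \(\sigma _1(x,\widehat{\pi })\) on \(\widetilde{Y}\cap K\) equals

$$\begin{aligned} k_{-1}+k_2+k_{-2}s_0. \end{aligned}$$

Moreover, we have

$$\begin{aligned} \widehat{\sigma }_2(x,\widehat{\pi },\rho ,0)=e_0^*\left( k_1k_2+k_{-1}k_{-2}+k_1k_{-2}s_0\right) , \end{aligned}$$

a positive constant.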

By Lemma 4 and its derivation, we find

valid for all \(\varepsilon >0\). Neglecting higher-order terms in \(\varepsilon \) yields

Therefore, it seems appropriate to define the distinguished local parameter for the reversible MM system as

It appears that this particular parameter has not been introduced so far, nor has any close relative. Indeed, there seem to exist no parameters in the literature that were specifically derived for the reversible reaction. In their discussion of the reversible system, Seshadri and Fritzsch (1980) worked with the parameter \(\varepsilon _{RS}\) that Reich and Selkov had designed for the irreversible system; see Eq. (5).
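The coefficient computations in this subsection can be cross-checked numerically. The sketch assumes the standard mass-action form of the reversible MM equations, \(\dot{s}=-k_1(e_0-c)s+k_{-1}c\), \(\dot{c}=k_1(e_0-c)s-(k_{-1}+k_2)c+k_{-2}(e_0-c)(s_0-s-c)\); this is our transcription. It evaluates the Jacobian on \(c=0\) for hypothetical parameter values with \(k_1\ge k_{-2}\). It then confirms that \(\sigma _2\) does not depend on \(s\), and that for small \(e_0\) the supremum of \(\sigma _2/\sigma _1^2\) nearly coincides with that of the eigenvalue ratio. Function names and parameter values are ours.

```python
import math


def coefficients_on_c0(s, e0, s0, k1, km1, k2, km2):
    """sigma_1, sigma_2 of the Jacobian at (s, c = 0) for the assumed system
        s' = -k1 (e0 - c) s + km1 c
        c' =  k1 (e0 - c) s - (km1 + k2) c + km2 (e0 - c) (s0 - s - c)
    """
    a = -k1 * e0                                            # d s'/d s
    b = k1 * s + km1                                        # d s'/d c
    d = (k1 - km2) * e0                                     # d c'/d s
    f = -(k1 * s + km1 + k2 + km2 * (s0 - s) + km2 * e0)    # d c'/d c
    return -(a + f), a * f - b * d


def eigen_ratio(sigma1, sigma2):
    """|lambda_2 / lambda_1| for real negative roots of t^2 + sigma1 t + sigma2."""
    lam1 = (-sigma1 - math.sqrt(sigma1 * sigma1 - 4.0 * sigma2)) / 2.0
    return abs(sigma2 / lam1 / lam1)


params = dict(e0=1e-4, s0=1.0, k1=1.0, km1=1.0, k2=1.0, km2=0.5)   # hypothetical values
grid = [params["s0"] * i / 200 for i in range(201)]
coeffs = [coefficients_on_c0(s, **params) for s in grid]

sigma2_values = [s2 for _, s2 in coeffs]
sup_ratio = max(eigen_ratio(s1, s2) for s1, s2 in coeffs)
sup_quotient = max(s2 / s1 ** 2 for s1, s2 in coeffs)
print(min(sigma2_values), max(sigma2_values))     # equal up to rounding
print(sup_ratio, sup_quotient)                    # nearly equal for small e0
```

With these values \(\sigma _2=e_0(k_1k_2+k_{-1}k_{-2}+k_1k_{-2}s_0+k_1k_{-2}e_0)\approx 2.00005\cdot 10^{-4}\). Both suprema are attained at \(s=0\), where \(\sigma _1\) is smallest.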

4.2 The Irreversible Reaction with Low Enzyme Concentration

We specialize to the irreversible case, and thus we have the differential equation (1) with \(e_0=\varepsilon e_0^*\). The QSS manifold of this system is defined by \(c=g(s):=e_0s/(K_M+s)\).

4.2.1 Distinguished Small Parameters

The parameters from the reversible scenario simplify to

with

Note that the TFPV and nondegeneracy conditions, together with the compactness condition in parameter space, require that \(k_2\) is bounded below by some positive constant.

As in the previous section, we find that \(\varepsilon _{MM}\) is a sharp upper estimate for the eigenvalue ratio. In fact,

throughout.

As noted in the Introduction, various small parameters have been proposed for the irreversible MM system. Comparing these, we note

with the Reich-Selkov parameter. Whenever \(k_{-1}\) and \(k_2\) have the same order of magnitude (in any case \(k_2\) must be bounded away from 0 by nondegeneracy), the disparity between \(\varepsilon _{MM}\) and \(\varepsilon _{RS}\) may be seen as inessential.

The parameters \(\varepsilon _{MM}\) and \(\varepsilon _{RS}\) differ markedly from the most familiar small parameters, viz. \(\varepsilon _{BH}\) [see (4) as used by Heineken et al. (1967)], and \(\varepsilon _{SSl}\) [see (6) as introduced in Segel and Slemrod (1989)], which both involve the initial substrate concentration. As shown in Noethen and Walcher (2007), smallness of the Segel–Slemrod parameter is necessary and sufficient to ensure negligible loss of substrate in the initial phase. But, as noted in Patsatzis and Goussis (2019) and in Eilertsen et al. (2022), large initial substrate concentration—while ensuring a fast approach to the QSS manifold—is not sufficient to guarantee a good QSS approximation over the whole course of the reaction. A general argument in favor of \(\varepsilon _*\) and \(\varepsilon _{MM}\) is that they directly measure the local ratio of timescales.

4.2.2 Further Observations

We briefly discuss what can be inferred from

alone, with no further restriction on the limiting process.

In the simplest imaginable scenario, letting a parameter tend to zero might automatically imply validity of some QSS approximation, but this is not the case here. The TFPV conditions on \(\sigma _1\) imply that \(k_{-1}\) is bounded above, and we obtain three cases. In addition to the case \(e_0\rightarrow 0\), we have the case \(k_1\rightarrow 0\), yielding a singular perturbation reduction with the same critical manifold but a linear reduced equation. Furthermore, we have the case \(k_2\rightarrow 0\), which leads to a singular perturbation scenario with a different critical manifold and a different reduction (see the next subsection).

This observation supports a statement from the Introduction: a given small parameter by itself will in general not determine a unique singular perturbation scenario, and transferring the reduction procedure from one scenario to a different one without reflection may yield incorrect results. It is necessary to consider the complete setting, including TFPV, critical manifold and small parameter. Moreover, one needs to carefully stipulate how limits are taken. For instance, letting \(s_0\rightarrow \infty \), which forces \(\varepsilon _{SSl}\rightarrow 0\), does not ensure convergence. Likewise, letting, e.g., \(k_{-1}\rightarrow \infty \) in the Reich-Selkov parameter does not imply convergence.

For the irreversible reaction with substrate inflow at rate \(k_0\), one obtains the same expressions for \(\sigma _2/\sigma _1^2\) at the TFPV with \(k_0=0\) and \(e_0=0\) (all other parameters \(>0\)), the critical manifold being given by \(c=0\). Before obtaining \(\varepsilon _*,\,\varepsilon ^*\), one needs to choose appropriate initial conditions; we take \(s(0)=c(0)=0\) here. Solutions are not necessarily confined to compact sets, so one may not be able to choose the set K from Sect. 2.4 to be positively invariant. In the case \(s(0)=c(0)=0\) the computation of the distinguished upper bound \(\varepsilon ^*\) works as in the case with no influx; the supremum exists and is equal to \(\varepsilon _{MM}\). However, one gets \(\varepsilon _*\rightarrow 0\) with increasing s when there exists no positive stationary point (all solutions are unbounded in positive time); hence the lower estimate provides no information. If there exists a finite positive stationary point \(\widetilde{s}\) of the reduced equation, then one obtains \(\varepsilon _*>0\) by replacing \(s_0\) by \(\widetilde{s}\) in the lower estimate in Sect. 4.2.1. In this case, a compact positively invariant set exists with \(s\le \widetilde{s}\), as was shown in Eilertsen et al. (2021b).

4.3 The Irreversible Reaction with Slow Product Formation

We turn to the scenario with slow product formation, the other reactions being fast.Footnote 11 Here \(k_2=0\), with all other parameters \(>0\), defines a TFPV with critical manifold \(\widetilde{Y}\) given by

Although setting \(k_2=0\) appears counterintuitive for an enzyme-catalyzed reaction, there is a family of enzymes, known as pseudoenzymes, that have either zero catalytic activity (\(k_2=0\)), or vestigial catalytic activity (\(k_2\approx 0\)) due to the lack of catalytic amino acids or motifs (Eyers and Murphy 2016). These enzymes exist in all kingdoms of life and are also called “zombie” enzymes, dead enzymes, or prozymes. Pseudoenzymes serve various functions in signaling networks, such as acting as dynamic scaffolds, modulators of enzymes, or competitors in canonical signaling pathways (Murphy et al. 2017). Since one frequently finds incorrect reductions in the literature, it seems appropriate to recall correct ones. Heineken et al. (1967) provided a correct reduction (see (34) below). In Goeke and Walcher (2013), a version for substrate concentration is given:

With known \(e_0\), this equation (Footnote 12) in principle allows one to identify the limiting rate \(k_2e_0\) and the equilibrium constant \(K_S\). It should be noted that one also needs an appropriate initial time and initial value for the reduction. Since one cannot assume negligible substrate loss in the transient phase, an appropriate fitting would require completion of Step 2 of the program outlined in the Introduction.

4.3.1 Distinguished Small Parameters

Intersecting \(\widetilde{Y}\) with the positively invariant compact set K defined by \(0\le s\le s_0\) and \(0\le c\le e_0\) amounts to restricting \(0\le s\le s_0\). The elements of the parameter space \(\Pi =\mathbb R_{\ge 0}^5\) have the form \((e_0,s_0,k_1,k_{-1},k_2)^\textrm{tr}\), and a natural choice of ray direction is \(\rho =(0,0,0,0,k_2^*)^\textrm{tr}\), with \(k_2=\varepsilon k_2^*\).

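The coefficients of the characteristic polynomial on \(\widetilde{Y}\) are

$$\begin{aligned} \sigma _1=k_1s+k_{-1}+k_2+\dfrac{k_1k_{-1}e_0}{k_1s+k_{-1}},\qquad \sigma _2=\dfrac{k_1k_{-1}k_2e_0}{k_1s+k_{-1}}. \end{aligned}$$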

To distinguish small parameters, we need to consider the following steps:

-

We first evaluate the nondegeneracy conditions for the coefficients of the characteristic polynomial, from TFPV requirements and compactness. The minimum of \(\sigma _1(x,\widehat{\pi })\) on \(\widetilde{Y}\cap K\) is equal to \(k_{-1}+k_1e_0\) when \(k_1e_0\le k_{-1}\), and equal to \(2\sqrt{k_{-1}k_1e_0}\) otherwise. This minimum must be bounded below by some positive constant. Combining this observation with the boundedness of the maximum of

$$\begin{aligned} \widehat{\sigma }_2=\dfrac{k_2^*k_{-1}k_1e_0}{k_1s+k_{-1}} \quad \text {on} \quad [0,\,s_0], \end{aligned}$$which is equal to \(k_2^*k_1e_0\), one sees that \(k_1e_0\) and \(k_{-1}\) must be bounded above and below by positive constants.

-

Turning to small parameters, in the asymptotic limit one obtains

$$\begin{aligned} \varepsilon ^*=k_2\sup \dfrac{av}{(a+v^2)^2} \quad \text {with} \quad a=k_{-1}k_1e_0,\,v=k_1s+k_{-1}, \end{aligned}$$ where the supremum is taken over \(k_{-1}\le v\le k_{-1}+k_1s_0\). By elementary calculus, the function \(v\mapsto av/(a+v^2)^2\) attains its global maximum on the unbounded interval \(v\ge 0\) at \(v=\sqrt{k_{-1}k_1e_0/3}\); this is also the maximum on the interval above whenever \(\sqrt{k_{-1}k_1e_0/3}\) lies in it (e.g., for \(3k_{-1}\le k_1e_0\) and sufficiently large \(s_0\)). Since the global maximum bounds the supremum in any case, we find the estimate

$$\begin{aligned} \varepsilon ^{*}\le \dfrac{3\sqrt{3}}{16}\dfrac{k_2}{\sqrt{k_{-1}\cdot k_1e_0}}=\dfrac{3\sqrt{3}}{32}\cdot \varepsilon _{PE},\quad \text {with }\varepsilon _{PE}:=\dfrac{2k_2}{\sqrt{k_{-1}\cdot k_1e_0}}. \end{aligned}$$ Note that \(\varepsilon _{PE}\) always yields an upper estimate for the eigenvalue ratio near the critical manifold. One could thus discard the factor \(1+\alpha \) in Lemma 4.

-

Depending on the given parameters, in some cases one may obtain sharper estimates for \(\varepsilon ^*\) from the endpoints of the interval \([0,s_0]\). In any case, to determine \(\varepsilon _*\) one needs to consider the boundary points of this interval.

-

The expression for \(\varepsilon _{PE}\) may look strange, but \(\sqrt{k_2/k_{-1}}\cdot \sqrt{k_2/(k_1e_0)}\) is the geometric mean of two reaction rate ratios, and thus admits a biochemical interpretation. There is little work in the literature on small parameters for the case of slow product formation. Heineken et al. (1967) suggested \( {k_2}/{(k_1s_0)}\), while Patsatzis and Goussis introduced a parameter depending on s and c along a trajectory; taking the maximum over all \(s,\,c\) yields \({k_2}/{k_{-1}}\). The latter represents a commonly accepted “small parameter” for this scenario; see Keener and Sneyd (2009, Section 1.4.1). In the limiting case \(k_2\rightarrow 0\), one also has \(\varepsilon _{MM}\rightarrow 0\), but one should not conclude that the standard QSS approximation is valid here. Recall that, in the low enzyme setting, \(k_2\) needs to be bounded away from zero due to nondegeneracy requirements.
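The maximization behind the estimate for \(\varepsilon ^*\) can be checked numerically. The sketch samples \(v\mapsto av/(a+v^2)^2\) on \([k_{-1},k_{-1}+k_1s_0]\) for hypothetical parameter values (\(k_{-1}=k_1=1\), \(e_0=4\), \(s_0=10\), \(k_2=0.01\)), chosen so that \(\sqrt{a/3}\) lies inside this interval. It compares the sampled maximum with \((3\sqrt{3}/16)/\sqrt{a}\), and prints the ratio of the resulting bound to \(\varepsilon _{PE}=2k_2/\sqrt{k_{-1}k_1e_0}\).

```python
import math


def phi(v, a):
    # the function whose supremum (times k2) gives epsilon^*, with a = km1 * k1 * e0
    return a * v / (a + v * v) ** 2


km1, k1, e0, s0, k2 = 1.0, 1.0, 4.0, 10.0, 0.01    # hypothetical values
a = km1 * k1 * e0
v_lo, v_hi = km1, km1 + k1 * s0                    # v = k1 s + km1 with 0 <= s <= s0
n = 20_001
sampled_max = max(phi(v_lo + (v_hi - v_lo) * i / (n - 1), a) for i in range(n))

closed_form = 3.0 * math.sqrt(3.0) / 16.0 / math.sqrt(a)   # value at v = sqrt(a / 3)
eps_star_bound = k2 * closed_form
eps_pe = 2.0 * k2 / math.sqrt(a)
print(sampled_max, closed_form)
print(eps_star_bound / eps_pe)     # the constant relating the bound to eps_PE
```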

4.3.2 Approach to the Slow Manifold

For the MM reaction mechanism with slow product formation, we specialize the arguments in Appendix 9.1.1 to determine \(\varepsilon _L\), and show that \(\varepsilon _{PE}\) appears naturally in this estimate.Footnote 13 We use the results (and refer to the notation) of Sect. 9.1.

We rewrite the system in Tikhonov standard form. Since \(\frac{d}{dt}(s+c)=-k_2c\), \(s+c\) is a first integral of the fast system in the limit \(k_2=0\). With \(x=s+c\), \(y=s\) (so \(c=x-y\), \(x\ge y\ge 0\)) and \(k_2=\varepsilon k_2^*\), we obtain

$$\begin{aligned} \dot{x}&=-\varepsilon k_2^*(x-y),\\ \dot{y}&=-k_1(e_0-x+y)y+k_{-1}(x-y) \end{aligned}$$

with

We focus on the particular initial conditions with zero complex, thus

$$\begin{aligned} x(0)=y(0)=s_0. \end{aligned}$$

The QSS variety \(\widetilde{Y}\) is defined by \(y=h_+(x)\), and the reduced equation reads

$$\begin{aligned} \dot{x}=-k_2\left( x-h_+(x)\right) . \end{aligned}$$

We use the notation and apply the general procedure from Sect. 9.1, with

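and \(g(x)=h_+(x)\). We will use some properties of q in the following. The calculation of \(q'(x)\) leads to

$$\begin{aligned} q'(x)=\dfrac{x+K_S-e_0}{q(x)},\quad \text {where}\quad q(x)^2=(x-K_S-e_0)^2+4K_Sx=(x+K_S-e_0)^2+4K_Se_0, \end{aligned}$$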

hence \(|q'(x)|\le 1\) for all \(x\ge 0\). Moreover, the sign of \(q'\) changes from − to \(+\) at \(x=e_0-K_S\) when \(e_0-K_S\ge 0\), and is otherwise positive for all \(x\ge 0\). Thus, the minimum of q is attained at 0, with value \(K_S+e_0\), when \(e_0<K_S\), and is attained at \(e_0-K_S\), with value \(2\sqrt{K_Se_0}\), when \(e_0\ge K_S\). By the arithmetic–geometric mean inequality, we thus have

This shows

and we arrive at

According to Sect. 9.1, \(\gamma ^{-1}\) is an appropriate timescale for the approach to the slow manifold.
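For convenience, here is one way to verify the properties of q used above. It assumes (the defining display is not reproduced here) that \(q(x)=\sqrt{(x-K_S-e_0)^2+4K_Sx}\) with \(K_S=k_{-1}/k_1\). This is consistent with \(h_+(x)=\frac{1}{2}(x-K_S-e_0+q(x))\) being the nonnegative root of \(y^2-(x-K_S-e_0)y-K_Sx=0\), which is what \(\dot{y}=0\) gives in the variables x, y.

```latex
\begin{aligned}
q'(x) &= \frac{x+K_S-e_0}{q(x)},\\
q(x)^2-(x+K_S-e_0)^2 &= (x-K_S-e_0)^2+4K_Sx-(x+K_S-e_0)^2 = 4K_Se_0 \ge 0
  \quad\Longrightarrow\quad |q'(x)|\le 1,\\
q(0) &= K_S+e_0, \qquad q(e_0-K_S)=\sqrt{4K_S^2+4K_S(e_0-K_S)}=2\sqrt{K_Se_0},\\
K_S+e_0 &\ge 2\sqrt{K_Se_0} \quad\text{(arithmetic--geometric mean inequality)},\\
g'(x) &= \tfrac{1}{2}\bigl(1+q'(x)\bigr)\in[0,1].
\end{aligned}
```

The last line is the bound \(|g'(x)|\le 1\) used in determining \(\kappa \).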

To determine \(\kappa \), we have \(g(x)=h_+(x)=\frac{1}{2}(x-K_S-e_0+q(x))\), thus \(|g'(x)|\le 1\), and

hence we may set \(\kappa =k_2e_0\).
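The bounds behind \(\kappa \) can also be checked numerically. The sketch assumes the same form of q as in the derivation of \(|q'|\le 1\) (an assumption, since the defining display is not reproduced), samples x on a grid, and confirms \(0\le g'(x)\le 1\) and \(0\le x-h_+(x)\le e_0\), i.e. the complex concentration on the QSS variety never exceeds \(e_0\). Since \(\dot{x}=-k_2c\) and \(c\le e_0\), this gives \(|g'(x)\dot{x}|\le k_2e_0\), one plausible reading of the step leading to \(\kappa =k_2e_0\). The values of \(K_S\) and \(e_0\) are hypothetical.

```python
import math


def q(x, KS, e0):
    # Assumed form: q(x) = sqrt((x - KS - e0)^2 + 4 KS x)
    return math.sqrt((x - KS - e0) ** 2 + 4.0 * KS * x)


def h_plus(x, KS, e0):
    # QSS variety y = h_+(x) = (x - KS - e0 + q(x)) / 2
    return 0.5 * (x - KS - e0 + q(x, KS, e0))


def g_prime(x, KS, e0):
    # g'(x) = (1 + q'(x)) / 2 with q'(x) = (x + KS - e0) / q(x)
    return 0.5 * (1.0 + (x + KS - e0) / q(x, KS, e0))


def check_qss_bounds(KS, e0, x_max=50.0, n=5001):
    """Sample x in [0, x_max]; return (min g', max g', min c, max c, min q)."""
    xs = [x_max * i / (n - 1) for i in range(n)]
    gp = [g_prime(x, KS, e0) for x in xs]
    c = [x - h_plus(x, KS, e0) for x in xs]  # complex concentration on the QSS variety
    return min(gp), max(gp), min(c), max(c), min(q(x, KS, e0) for x in xs)


if __name__ == "__main__":
    for KS, e0 in [(2.0, 0.5), (0.5, 2.0)]:
        print(KS, e0, check_qss_bounds(KS, e0))
```

Sampling only checks the claims on a grid; the inequalities themselves follow from \(q(x)\ge |x+K_S-e_0|\).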

Altogether, we obtain from the Lyapunov function the (dimensional) parameter

To obtain a non-dimensional small parameter, normalization by \(e_0\) seems to be the natural choice here, which yields

In this particular setting, the local timescale parameter completely characterizes the approach of the solution to the slow manifold.

4.3.3 Estimates for Long Times

We will not attempt to estimate a critical time for the onset of the slow dynamics, and without this we cannot determine approximation errors for solutions of the reduced equation (as outlined in Sect. 9.1.3). In this respect, the discussion of the MM reaction mechanism with slow product formation remains incomplete. However, the following observation provides a relevant condition for the long-term behavior. Since \(|y-g(x)|\rightarrow e_0\cdot \varepsilon _{PE}\), the solution will enter the domain with \(|y-g(x)|\le 2 e_0\cdot \varepsilon _{PE}\) after a short transient phase.Footnote 14 In this domain, we obtain the reduced equation with error term:

By a differential inequality argument, the solution of \(\dot{x}=U(x)\) with positive initial value is an upper bound for the first entry of the solution of (33), given appropriate initial values near the QSS variety. Moreover, the solution of the reduced equation (34) with the same initial value remains positive. For \(t\rightarrow \infty \), the absolute value of the difference of these solutions converges to the stationary point of \(\dot{x}=U(x)\), which therefore indicates the discrepancy. We determine the stationary point, neglecting terms of order \(>1\) in \(k_2\):

Thus, we obtain the parameter

which provides an upper bound for the long-term discrepancy of the true solution and its approximation.

4.4 A Degenerate Scenario

To illustrate the limitations of the approach via Proposition 2, consider the irreversible system with TFPV \(k_{-1}=k_2=0\), the other parameters positive, and \(\rho =(0,0,0,k_{-1}^*,k_2^*)^\textrm{tr}\). Here the critical variety is reducible, being the union of the lines \(Y_1\), \(Y_2\) defined by \(e_0-c=0\) resp. \(s=0\), and the TFPV conditions fail at their intersection. We consider the case \(e_0<s_0\), and define \(\widetilde{Y}_1\) by \(c=e_0,\,s>0\). The fast system admits the first integral \(s+c\), so the initial value of the slow system on \(\widetilde{Y}_1\) is close to \((s_0-e_0,e_0)^\textrm{tr}\). Proceeding, one may choose

Then, \(\widetilde{Y}_1\cap K\) is compact, but not positively invariant, and on this set one has

Here, the nondegeneracy condition in (13) fails, and we obtain no timescale ratio by way of Lemma 4. A direct computation in a neighborhood of \(\widetilde{Y}_1\) yields

but this obscures the fact that both eigenvalues approach zero as \(s\rightarrow 0\). Standard singular perturbation methods are not sufficient to analyze the dynamics of this system for small \(\varepsilon \).
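The loss of a spectral gap can be seen from the Jacobian directly. The sketch assumes the standard irreversible MM mass action system \(\dot{s}=-k_1(e_0-c)s+k_{-1}c\), \(\dot{c}=k_1(e_0-c)s-(k_{-1}+k_2)c\) (written out here for illustration) and evaluates the eigenvalues of its Jacobian on \(Y_1\) (\(c=e_0\)). With \(k_{-1}=k_2=0\) they are 0 and \(-k_1s\), so both tend to zero as \(s\rightarrow 0\). The rate constant values are hypothetical.

```python
import cmath


def mm_jacobian(s, c, e0, k1, km1, k2):
    """Jacobian of s' = -k1 (e0 - c) s + km1 c,  c' = k1 (e0 - c) s - (km1 + k2) c."""
    return [[-k1 * (e0 - c), k1 * s + km1],
            [k1 * (e0 - c), -(k1 * s + km1 + k2)]]


def eigenvalues_2x2(J):
    """Eigenvalues of a 2x2 matrix from its trace and determinant."""
    tr = J[0][0] + J[1][1]
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    root = cmath.sqrt(tr * tr - 4.0 * det)
    return (tr + root) / 2.0, (tr - root) / 2.0


if __name__ == "__main__":
    e0, k1 = 1.0, 1.0
    for s in (1.0, 0.1, 0.01, 0.001):
        lam = eigenvalues_2x2(mm_jacobian(s, e0, e0, k1, km1=0.0, k2=0.0))
        print(s, [round(l.real, 6) for l in lam])
```

For \(\varepsilon >0\) the nonzero eigenvalue on \(Y_1\) becomes \(-(k_1s+k_{-1}+k_2)\), which stays of order \(\varepsilon \) as \(s\rightarrow 0\), so there is still no uniform separation from the zero eigenvalue.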

5 TFPV for Higher Dimensions

We keep the notation and conventions from Sects. 2.1 and 2.4, but now we will focus on a TFPV \(\widehat{\pi }\) for dimension \(s>1\). The goal of this technical section is to identify distinguished parameters and discuss their relation to timescales. There is a rather obvious direct extension of results from the \(s=1\) case, but the timescale correspondence will not be as pronounced. Moreover, we will need to impose a stronger nondegeneracy condition. We abbreviate

keeping in mind that \(\widetilde{\sigma }_i(x,0)>0\) for all \(x\in \widetilde{Y}\cap K\) and \(1\le i\le n-s\), due to \(\widehat{\pi }\) being a TFPV. Additionally, we set \(\widetilde{\sigma }_0:=1\).

5.1 Distinguished Small Parameters

Some notions and results from Sect. 3 can easily be modified for the case \(s>1\). For suitable \(\varepsilon _\textrm{max}>0\), we have

and due to \(\sigma _{n-s+1}(x,\widehat{\pi })=0\) for \(x\in \widetilde{Y}\cap K\), we obtain

with a polynomial \(\widehat{\sigma }_{n-s+1}\), for all \((x,\varepsilon )\in K^*\).

Definition 3

Let

Now, we define

the distinguished upper bound for the TFPV \(\widehat{\pi }\) for dimension s, with parameter direction \(\rho \), of system (7). Moreover, we call

the distinguished lower bound for the TFPV \(\widehat{\pi }\) for dimension s with parameter direction \(\rho \).

As in the case of reduction to dimension one, determining the distinguished parameters amounts to determining the extrema of a rational function on a compact set, or (when this is not possible, or not sensible) determining reasonably sharp estimates for these extrema. We note the following straightforward variant of Proposition 1.

Proposition 5

Given \(\alpha >0\), for sufficiently small \(\varepsilon _\textrm{max}\), the estimates

hold on \(K^*\).
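As remarked before Proposition 5, computing the distinguished parameters comes down to finding extrema of a rational function on a compact set. When no closed form is available, sampling can give a first impression. The sketch below uses a hypothetical rational function R on the unit square (not one from this paper). Note that sampling only yields inner estimates (the sampled maximum never exceeds the true supremum), so it is a plausibility check rather than a rigorous bound.

```python
from itertools import product


def grid_extrema(f, bounds, n=101):
    """Estimate min and max of f over a box by evaluating it on a uniform grid.

    bounds is a list of (lower, upper) pairs, one per variable.
    The result is an inner estimate: true sup >= sampled max, true inf <= sampled min.
    """
    axes = [[lo + (hi - lo) * i / (n - 1) for i in range(n)] for lo, hi in bounds]
    values = [f(*point) for point in product(*axes)]
    return min(values), max(values)


def R(x1, x2):
    # Hypothetical rational function with positive denominator on the box
    return x1 / (1.0 + x1 + x2)


if __name__ == "__main__":
    print(grid_extrema(R, [(0.0, 1.0), (0.0, 1.0)]))
```

For the rational functions arising from concrete reaction networks, the sampled values would be complemented by analytic estimates such as those used in the case studies.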

5.2 The Correspondence to Timescales

Proofs of the following statements are given in the Appendix (Lemmas 6 and 7).

Let \(\widehat{\pi }\) be a TFPV for dimension s, with critical manifold \(\widetilde{Y}\). Then for all \(x\in \widetilde{Y}\cap K\) one has

with polynomials \(\widehat{\sigma }_i\).

Assume that (44) holds, and furthermore assume the nondegeneracy condition

Then the zeros \(\lambda _i(x,\widehat{\pi }+\varepsilon \rho )\) of the characteristic polynomial can be labeled such that

and

with continuous functions in \(\varepsilon \).

Given the nondegeneracy assumptions, we turn to discussing the correspondence of \( \varepsilon _{*}\) and \( \varepsilon ^*\) to timescales. By (11), and by the definition of \(\widetilde{\sigma }_i\) in (39), one has

This directly provides a result on separation of timescales.

Proposition 6

Assume that the nondegeneracy condition (45) holds.

(a) The identity

$$\begin{aligned} \dfrac{\widetilde{\sigma }_{n-s+1}}{\widetilde{\sigma }_1\widetilde{\sigma }_{n-s}}=\dfrac{ \lambda _{{n-s+1}}+\cdots +\lambda _n}{\lambda _1+\cdots +\lambda _{n-s}}+\varepsilon ^2\,(\cdots )=\varepsilon \dfrac{ \widehat{\lambda }_{{n-s+1}}+\cdots +\widehat{\lambda }_n}{\lambda _1+\cdots +\lambda _{n-s}}+\varepsilon ^2\,(\cdots ) \end{aligned}$$

holds on \(K^*\), with \((\cdots )\) representing a continuous function.

(b) Given \(\alpha >0\) and \(\varepsilon _\textrm{max}\) sufficiently small, the estimates

$$\begin{aligned} \frac{1}{1+\alpha }\,\varepsilon _*(\widehat{\pi },\rho ,\varepsilon )\le \left| \dfrac{\sum _{j>n-s}\lambda _j(x,\widehat{\pi }+\varepsilon \rho )}{\sum _{i\le n-s}\lambda _i(x,\widehat{\pi }+\varepsilon \rho )}\right| \le (1+\alpha )\,\varepsilon ^*(\widehat{\pi },\rho ,\varepsilon ) \end{aligned}\tag{46}$$

hold for all \((x,\varepsilon )\in K^*\). In particular, there exist positive constants \(C_1,\,C_2\) such that

$$\begin{aligned} C_1 \varepsilon \le \left| \dfrac{\sum _{j>n-s}\lambda _j(x,\widehat{\pi }+\varepsilon \rho )}{\sum _{i\le n-s}\lambda _i(x,\widehat{\pi }+\varepsilon \rho )}\right| \le C_2 \varepsilon . \end{aligned}$$
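Part (a) can be illustrated with prescribed eigenvalues. The sketch assumes that \(\widetilde{\sigma }_i\) are the coefficients of the characteristic polynomial written as \(\tau ^n+\widetilde{\sigma }_1\tau ^{n-1}+\cdots +\widetilde{\sigma }_n\), so \(\widetilde{\sigma }_i=(-1)^ie_i(\lambda )\) with \(e_i\) the elementary symmetric polynomials. This sign convention matches the positivity \(\widetilde{\sigma }_i>0\) noted after (39), but the defining display is not reproduced here. The sketch places \(n-s\) eigenvalues of order one and s eigenvalues of order \(\varepsilon \) and compares both sides of (a); the discrepancy should shrink like \(\varepsilon ^2\).

```python
from itertools import combinations
from math import prod


def sigma_tilde(eigs):
    """Coefficients of prod_j (tau - lambda_j) = tau^n + st[1] tau^(n-1) + ... + st[n]."""
    n = len(eigs)
    st = [1.0]
    for i in range(1, n + 1):
        e_i = sum(prod(subset) for subset in combinations(eigs, i))  # elementary symmetric
        st.append((-1) ** i * e_i)
    return st


def compare_prop6a(fast, slow_hat, eps):
    """Return (coefficient ratio, eigenvalue ratio) from Proposition 6(a).

    fast: the n-s eigenvalues of order one; slow_hat: the s rescaled slow eigenvalues.
    """
    slow = [eps * lam for lam in slow_hat]
    st = sigma_tilde(fast + slow)
    d = len(fast)  # d = n - s
    coeff_ratio = st[d + 1] / (st[1] * st[d])
    eig_ratio = sum(slow) / sum(fast)
    return coeff_ratio, eig_ratio


if __name__ == "__main__":
    for eps in (1e-1, 1e-2, 1e-3):
        c, e = compare_prop6a([-1.0], [-2.0, -0.5], eps)
        print(f"eps={eps:g}: coefficient ratio={c:.6g}, eigenvalue ratio={e:.6g}, diff={abs(c - e):.2e}")
```

The eigenvalue values are arbitrary test inputs; the check only concerns the algebraic identity in (a), not any particular reaction network.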

Thus, for higher dimensions of the critical manifold, the coefficients of the characteristic polynomial still provide estimates for timescale ratios, albeit weaker ones. Informally speaking, \({\widetilde{\sigma }_{n-s+1}}/({\widetilde{\sigma }_1\widetilde{\sigma }_{n-s}})\) measures the ratio of the “fastest slow timescale” to the “fastest fast timescale.” As in the case \(s=1\), a more relevant ratio is that of the “fastest slow timescale” to the “slowest fast timescale”; compare Sect. 9.1 in the Appendix. We remark that for real or “essentially real” \(\lambda _1,\ldots ,\lambda _{n-s}\) one may obtain results similar to Proposition 3, but we will not pursue this further.

5.3 Further Dimensionless Parameters

Given the setting of (44), it is natural to ask about different types of dimensionless small parameters, in addition to the distinguished ones obtained from Proposition 5. We consider terms of the form

with \(k\ge 1\), \(\ell \ge 0\), \(m>0\), and the indices \(1\le j_1\le \cdots \le j_\ell \), \(1\le v_1\le \cdots \le v_m\) subject to the following conditions:

(1) “Dimensionless”: by Lemma 1, this means

$$\begin{aligned} j_1+\cdots +j_\ell +(n-s)+v_1+\cdots +(n-s)+v_m=(n-s)+k. \end{aligned}$$

(2) “Order one in \(\varepsilon \)”:

$$\begin{aligned} v_1+\cdots +v_m=k-1. \end{aligned}$$

Proposition 7

The only classes of dimensionless small parameters that satisfy (1) and (2) are the following:

(a) \(m=1\) with \(\ell =1\) and \(j_1=1\), with parameters

$$\begin{aligned} \dfrac{\widetilde{\sigma }_{n-s+k}}{\widetilde{\sigma }_1\,\widetilde{\sigma }_{n-s+k-1}}, \quad 2\le k\le s. \end{aligned}\tag{47}$$

(b) \(m=2\), \(n\ge 4\), \(s=n-1\) and \(\ell =0\), with parameters

$$\begin{aligned} \dfrac{\widetilde{\sigma }_{2+v_1+v_2}}{\widetilde{\sigma }_{1+v_1}\,\widetilde{\sigma }_{1+v_2}}, \quad 1\le v_1\le v_2,\quad v_1+v_2\le n-2. \end{aligned}\tag{48}$$

Proof

Combining (1) and (2), one finds

$$\begin{aligned} j_1+\cdots +j_\ell +(m-1)(n-s)=1; \end{aligned}$$

thus, necessarily \(m\le 2\) due to \(s<n\). In case \(m=1\), one has \(\ell =1\) and \(j_1=1\). In case \(m=2\), one necessarily has \(s=n-1\) and \(\ell =0\).

To obtain explicit parameter bounds in the first case, use

to determine

and small parameters

Remark 5

In the first case, there is a notable correspondence to eigenvalues (thus to timescales). A variant of the argument in Proposition 6 shows that

where \(\tau _\ell \) denotes the \(\ell ^\textrm{th}\) elementary symmetric polynomial in s variables.
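The case distinction in the proof of Proposition 7 can be cross-checked by brute force for small n and s. The sketch encodes conditions (1) and (2) literally, with \(k=v_1+\cdots +v_m+1\) from (2), and requires every coefficient index to lie in \(\{1,\ldots ,n\}\) (an assumption about admissible terms that is implicit in the statement). It then confirms that every solution belongs to class (a) or class (b). The search bound `max_len` is a hypothetical cut-off; the proof shows that longer tuples cannot occur.

```python
from itertools import combinations_with_replacement


def admissible_terms(n, s, max_len=4):
    """Enumerate (k, js, vs) satisfying conditions (1) and (2) of Sect. 5.3.

    js = (j_1, ..., j_l) and vs = (v_1, ..., v_m) are nondecreasing tuples.
    Indices are restricted so that every coefficient sigma~_i has 1 <= i <= n.
    """
    d = n - s
    found = []
    for m in range(1, max_len + 1):
        for vs in combinations_with_replacement(range(1, s + 1), m):
            k = sum(vs) + 1  # condition (2)
            if k > s:        # numerator index d + k must not exceed n
                continue
            for ell in range(max_len + 1):
                for js in combinations_with_replacement(range(1, n + 1), ell):
                    if sum(js) + sum(d + v for v in vs) == d + k:  # condition (1)
                        found.append((k, js, vs))
    return found


def classify(n, s, term):
    """Return 'a' or 'b' according to Proposition 7, or None if neither applies."""
    k, js, vs = term
    if len(vs) == 1 and js == (1,) and 2 <= k <= s:
        return "a"
    if len(vs) == 2 and js == () and s == n - 1 and n >= 4 and sum(vs) <= n - 2:
        return "b"
    return None


if __name__ == "__main__":
    for n in range(2, 7):
        for s in range(1, n):
            print(n, s, [classify(n, s, t) for t in admissible_terms(n, s)])
```

In particular, class (a) yields exactly \(s-1\) parameters, one for each \(2\le k\le s\).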

5.4 Dimension Three

We specialize the results to dimension three and \(s=2\), assuming nondegeneracy. By (44), \(\widetilde{\sigma }_2\) is of order \(\varepsilon \), and \(\widetilde{\sigma }_3\) is of order \(\varepsilon ^2\).

In view of Propositions 5 and 6, we consider

Informally speaking, this expression governs the ratio of the fastest slow timescale to the fast timescale, which is the pertinent ratio according to Sect. 9.1.2. We obtain

as well as

Similar to the observations in Remark 4, disparate slow eigenvalues may indicate a scenario with three timescales (informally speaking, fast, slow and very slow). To measure the disparity, we use Proposition 7 and consider

Combining parameters shows

Thus, the constants

measure the disparity of \(\widehat{\lambda }_2\) and \(\widehat{\lambda }_3\). In particular, given that \(|\lambda _3|\le |\lambda _2|\) one has
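The disparity measure can be illustrated with prescribed eigenvalues \(\lambda _1=-1\) (fast) and \(\lambda _2=-\varepsilon \), \(\lambda _3=-r\varepsilon \) (slow, \(0<r\le 1\)). Assuming \(\widetilde{\sigma }_i=(-1)^ie_i(\lambda )\) (the coefficients of the monic characteristic polynomial), a natural combination of the two parameters above is presumably the quotient of \(\widetilde{\sigma }_3/(\widetilde{\sigma }_1\widetilde{\sigma }_2)\) by \(\widetilde{\sigma }_2/\widetilde{\sigma }_1^2\), i.e. \(\widetilde{\sigma }_1\widetilde{\sigma }_3/\widetilde{\sigma }_2^2\). To leading order this equals \(\lambda _2\lambda _3/(\lambda _2+\lambda _3)^2=r/(1+r)^2\), which lies between \(r/4\) and r. Whether this matches the constants in the text exactly is not asserted; the sketch only checks the leading-order behavior.

```python
def sigma_tilde_3(l1, l2, l3):
    """Coefficients of (tau - l1)(tau - l2)(tau - l3) = tau^3 + s1 tau^2 + s2 tau + s3."""
    s1 = -(l1 + l2 + l3)
    s2 = l1 * l2 + l1 * l3 + l2 * l3
    s3 = -(l1 * l2 * l3)
    return s1, s2, s3


def disparity(eps, r, fast=-1.0):
    """Compare sigma1*sigma3/sigma2^2 with its leading-order value r/(1+r)^2."""
    l2, l3 = -eps, -r * eps  # slow eigenvalues, |l3| <= |l2| when r <= 1
    s1, s2, s3 = sigma_tilde_3(fast, l2, l3)
    combined = s1 * s3 / s2 ** 2
    leading = r / (1.0 + r) ** 2
    return combined, leading


if __name__ == "__main__":
    for r in (1.0, 0.1, 0.01):
        print(r, disparity(1e-3, r))
```

Small values of the combined quantity thus signal strongly disparate slow eigenvalues, in line with the three-timescale interpretation above.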

6 Case Studies: Reduction from Dimension Three to One

In this section, we discuss two biochemically relevant modifications of the MM reaction mechanism and a non-Michaelis–Menten reaction mechanism, with low enzyme concentration, and their familiar (quasi-steady state) reductions to dimension one. This seems to be the first instance in which small parameters in the spirit of Segel and Slemrod (although consistently based on linear timescales) are derived for these reaction mechanisms in a systematic manner. Note that, in the application-oriented literature, the perturbation parameter of choice mostly seems to be \(\varepsilon _{BH}=e_0/s_0\), borrowed from the MM reaction mechanism.

We will directly consider the asymptotic small parameters \(\varepsilon ^*, \,\varepsilon _*,\,\mu ^*\) by application of the results in Sect. 3, and obtain rather satisfactory estimates for these. Considering the steps outlined in the Introduction, we thus complete a substantial part of Step 1. Proceeding beyond this, along the lines of Sect. 9.1, would involve considerable and lengthy work for each system, so we will not go further. However, to test and illustrate the efficacy of the parameters, we include extensive numerical simulations. We also include examples that demonstrate the limitations of the local timescale approach, and in particular show that the nondegeneracy conditions imposed on the “non-small” parameters are necessary.
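As an illustration of the kind of simulation meant here, the sketch below integrates the cooperativity mechanism of Sect. 6.1 and compares the substrate concentration with the classical QSS reduction. It assumes the standard mass action model from Keener and Sneyd (2009, Section 1.4.4): \(S+E\rightleftharpoons C_1\) (\(k_1,k_{-1}\)), \(C_1\rightarrow E+P\) (\(k_2\)), \(S+C_1\rightleftharpoons C_2\) (\(k_3,k_{-3}\)), \(C_2\rightarrow C_1+P\) (\(k_4\)), with \(e=e_0-c_1-c_2\), and the reduced equation \(\dot{s}=-e_0s(k_2K_2+k_4s)/(K_1K_2+K_2s+s^2)\), where \(K_1=(k_{-1}+k_2)/k_1\) and \(K_2=(k_{-3}+k_4)/k_3\). Rate constants, \(e_0\), \(s_0\), the time horizon and the fixed-step RK4 integrator are illustrative choices, not the settings of the simulations reported in this paper.

```python
def full_rhs(y, p):
    """Mass action equations for (s, c1, c2) with e = e0 - c1 - c2 (assumed standard model)."""
    s, c1, c2 = y
    k1, km1, k2, k3, km3, k4, e0 = p
    e = e0 - c1 - c2
    ds = -k1 * e * s + km1 * c1 - k3 * s * c1 + km3 * c2
    dc1 = k1 * e * s - (km1 + k2) * c1 - k3 * s * c1 + (km3 + k4) * c2
    dc2 = k3 * s * c1 - (km3 + k4) * c2
    return [ds, dc1, dc2]


def reduced_rhs(y, p):
    """Classical QSS reduction for the substrate (Keener-Sneyd form)."""
    (s,) = y
    k1, km1, k2, k3, km3, k4, e0 = p
    K1 = (km1 + k2) / k1
    K2 = (km3 + k4) / k3
    return [-e0 * s * (k2 * K2 + k4 * s) / (K1 * K2 + K2 * s + s * s)]


def rk4_step(f, y, p, h):
    """One classical fourth-order Runge-Kutta step."""
    a = f(y, p)
    b = f([yi + 0.5 * h * ai for yi, ai in zip(y, a)], p)
    c = f([yi + 0.5 * h * bi for yi, bi in zip(y, b)], p)
    d = f([yi + h * ci for yi, ci in zip(y, c)], p)
    return [yi + h / 6.0 * (ai + 2 * bi + 2 * ci + di)
            for yi, ai, bi, ci, di in zip(y, a, b, c, d)]


def max_substrate_error(e0, s0=1.0, rates=(1.0, 1.0, 1.0, 1.0, 1.0, 1.0), t_end=100.0, h=0.01):
    """Largest |s_full - s_reduced| on [0, t_end], starting with zero complexes."""
    p = (*rates, e0)
    full, red = [s0, 0.0, 0.0], [s0]
    worst = 0.0
    for _ in range(int(round(t_end / h))):
        full = rk4_step(full_rhs, full, p, h)
        red = rk4_step(reduced_rhs, red, p, h)
        worst = max(worst, abs(full[0] - red[0]))
    return worst


if __name__ == "__main__":
    for e0 in (1e-2, 1e-3):
        print(f"e0={e0:g}: max |s - s_red| = {max_substrate_error(e0):.2e}")
```

Decreasing \(e_0\) should shrink the discrepancy roughly proportionally, consistent with \(e_0=0\) being a TFPV; how well a given small parameter predicts this discrepancy is exactly what the simulations in this section are meant to probe.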

6.1 Cooperativity Reaction Mechanism

The (irreversible) cooperative reaction mechanism

is a non-Michaelis–Menten reaction mechanism of enzyme action. It is modeled by the mass action equations

via stoichiometric conservation laws. Typical initial conditions are \(s(0)=s_0,\,e(0)=e_0,\,\) and \(c_1(0)=c_2(0)=p(0)=0\). The conservation laws yield the compact positively invariant set

with some reference value \(e_0^*>0\). The parameter space \(\Pi =\mathbb R_{\ge 0}^8\) has elements \( (e_0,s_0,k_1,k_{-1},k_2,k_3,k_{-3},k_4)^\textrm{tr},\) and setting \(e_0=0\) defines a TFPV,

for dimension one, subject to certain nondegeneracy conditions on the \(k_i\). The associated critical manifold is

We now set \(\rho =(e_0^*,0,\ldots ,0)^\textrm{tr}\), and consider the perturbed system with parameter \(\pi =\widehat{\pi }+\varepsilon \rho \). The singular perturbation reduction (according to formula (9) in Sect. 2.1) was carried out in Noethen and Walcher (2007, Section 4) and Goeke and Walcher (2013, Examples 8.2 and 8.7). This reduction agrees with the well-known classical quasi-steady state reduction for complexes of the cooperativity reaction mechanism (see Keener and Sneyd 2009, Section 1.4.4). We have

The quasi-steady state variety (see Keener and Sneyd 2009) is given parametrically by