Abstract

For successful learning, students need to evaluate their learning status relative to their learning goals and regulate their study in response to such monitoring. The present study investigated whether making metacognitive judgments on previously studied text would enhance the learning of that studied material (backward effect) and of newly studied text material (forward effect). By asking learners to make different types of metacognitive judgments, we also examined how different learning goals would orient learners to adopt different study strategies and, in turn, affect learning performance. In two experiments, participants studied two different passages across two sections (Sections A and B). They were asked to make either inference-based or memory-based metacognitive judgments on the passage studied in Section A before studying a new passage in Section B. The study-only control group did not make any metacognitive judgments between sections. On completion of Section B, all participants were given final retention and transfer tests on both sections. The meta-analytic results from the two experiments revealed that making inference-based metacognitive judgments was more beneficial than simply studying the material for both retention and transfer of the previously studied and newly studied text material. However, the benefit of memory-based metacognitive judgments was limited, in that it did not enhance retention performance on the previously studied material compared to the control condition. The current findings suggest that the effectiveness of metacognitive judgments varies depending on the learning goal. Highlighting a high-level learning goal seems to influence all levels of learners’ knowledge, showing a cascading effect.

For successful learning, students should be able to evaluate and manage their learning. Because of its critical role in learning, metacognition has attracted considerable attention in the fields of education and cognitive psychology. Metacognition is “the knowledge and control one has over one’s thinking and learning activities” (Swanson, 1990, p. 306). In the field of education, many researchers have tried to develop metacognitive instruction that helps students reflect upon their learning and apply metacognitive knowledge and skills (e.g., Bannert et al., 2009; Bannert & Reimann, 2012; Lin et al., 1999; Mevarech & Kramarski, 2003). They often provided students with metacognitive instruction about why and how to use metacognitive activities and investigated whether these metacognitive activities could enhance learning. For example, Daumiller and Dresel (2019) examined the effects of metacognitive prompts in self-regulated learning with digital media. The metacognitive instructions included prompts about the learning process (e.g., “Reflect on what you have already understood and what you could still engage with more intensively.”) and about the regulation of study strategies (e.g., “Think about how you could further optimize your current learning.”). The results showed that metacognitive prompts led to the use of cognitive strategies and better knowledge acquisition. Similarly, Lin and Lehman (1999) demonstrated that prompting college students to think about their learning decisions retrospectively enhanced transfer performance in biology. Bannert et al. (2009) also found positive effects of metacognitive support devices (i.e., providing students with metacognitive instruction and prompts to apply the metacognitive activities they had learned while studying) in learning psychological theories of multimedia learning.

On the other hand, many researchers in the field of cognitive psychology looked at metacognition as ongoing monitoring and control that accompany the cognitive processes (Nelson & Narens, 1990) and investigated how these two processes, monitoring and control, are related to each other. Researchers ask participants to assess their learning status using various measures such as a judgment of learning (JOL), comprehension rating (Thiede et al., 2003), and prediction of performance (POP, Ackerman & Goldsmith, 2011) in the middle of or at the end of the study to examine how such monitoring is related to study behaviors (e.g., study time allocation, restudy selection). For example, in a typical JOL task, participants are asked to rate the likelihood of remembering or answering questions about the studied material on a later test, often using a scale ranging from 0 to 100 points (Bjork et al., 2013; Dunlosky & Lipko, 2007). Recent research, however, suggests that the act of making a metacognitive judgment (e.g., JOL) can influence learning not only by affecting subsequent study behaviors but also by directly affecting learning outcomes, a phenomenon called JOL reactivity (Janes et al., 2018; Mitchum et al., 2016; Soderstrom et al., 2015; Witherby & Tauber, 2017). For example, Janes et al. (2018) examined the effect of making JOLs on the learning of related (e.g., pledge-promise) and unrelated word pairs (e.g., mercy-justice). Participants either made JOLs during learning or merely studied word pairs without making JOLs. In the final cued-recall test, the JOL group outperformed the study-only group for the related word pairs, implying that JOLs can directly enhance the recall performance of learned material. 
Positive effects of JOLs have been reported in the learning of various materials, including word pairs (Janes et al., 2018; Mitchum et al., 2016; Soderstrom et al., 2015), faces (Sommer et al., 1995), and text material (Dobson et al., 2019); they have also been reported when tests are delayed (Tekin & Roediger, 2021).

There are several accounts of JOL reactivity (for reviews, see Double et al., 2018; Double & Birney, 2019). One of the main accounts is the covert-retrieval hypothesis (Dunlosky & Nelson, 1992; Nelson & Dunlosky, 1991; Spellman & Bjork, 1992). Making metacognitive judgments can encourage learners to covertly retrieve the information (Son & Metcalfe, 2005), and such retrieval attempts can alter the memory. Indeed, Nelson and Dunlosky (1991) reported that many people attempted retrieval before making metacognitive judgments. Prior studies demonstrating the memory benefits of JOLs often used a list of paired associates and asked participants to predict their future performance when given incomplete information. For example, Soderstrom et al. (2015) had participants study a set of word pairs (e.g., doctor - nurse) and then provided only the cue words (e.g., doctor) when asking them to predict the likelihood of recalling the target words (e.g., nurse). Such a procedure can involve covert retrieval of the target words before making JOLs, and this retrieval process can enhance later memory performance (Spellman & Bjork, 1992), similar to the testing effect (Roediger & Karpicke, 2006). Consistent with the covert-retrieval account, Kubik et al. (2022) reported that making JOLs with incomplete information (but not JOLs with complete information) enhanced subsequent learning, probably because it elicits covert retrieval attempts. During covert retrieval, learners face the challenge of simultaneously monitoring and retrieving previously studied information, but they can gain mnemonic benefits from doing so.

JOL reactivity can occur even when judgments are unlikely to evoke covert retrieval (for a meta-analysis, see Double et al., 2018), because the act of judging itself can encourage learners to engage in more elaborative processing. While making metacognitive judgments, learners can process information more thoroughly (Dougherty et al., 2005) and encode study materials more effectively (Tekin & Roediger, 2020). Making JOLs may also encourage learners to change their learning goals and adjust their study strategies to focus on specific learning materials (changed-goal hypothesis; Mitchum et al., 2016).

Previous research suggests that making metacognitive judgments can influence learning in two ways. First, making metacognitive judgments of learned material can influence subsequent learning by affecting learners’ study behavior, demonstrating the forward effect (Lee & Ha, 2019; cf. a forward effect of testing; Pastötter & Bäuml, 2014). Second, making metacognitive judgments of learned material can also influence the learning of that judged material by inducing learners to covertly retrieve studied materials (Spellman & Bjork, 1992), as shown in the JOL reactivity, demonstrating the backward effect (Lee & Ha, 2019; cf. a backward effect of testing; Pastötter & Bäuml, 2014). Combining these two directional effects of metacognitive judgments on learning, we can expect that making metacognitive judgments on previous learning would enhance the learning of the materials learned before and after making metacognitive judgments. However, prior research has paid little attention to learning goals learners should consider when making metacognitive judgments. Learners monitor their learning status and adjust their behavior according to their learning goals (Winne & Nesbit, 2010), deciding which study strategies will best meet these objectives (Dunlosky et al., 2013). If students misperceive the goal, they may utilize inappropriate information to monitor their learning and adopt non-optimal tactics to achieve the goal (Butler, 1994; Butler & Winne, 1995). Therefore, it is important for students to consider appropriate learning goals while monitoring their learning status for successful learning.

Accordingly, we expect that students’ subsequent study behavior and learning outcomes will differ depending on whether they are informed of the learning goal they should achieve and on the type of learning goal given while they make metacognitive judgments. There are two reasons why we expect the type of learning goal to have different effects on the learning process and outcome. First, a large body of research has shown that the types of learning goals given to students lead them to select different learning strategies. Research on the test expectancy effect (Finley & Benjamin, 2012; Thiede et al., 2011) demonstrates that students decide on their study strategies with a specific learning goal in mind. When they are given different information about the anticipated test format, they choose the learning strategies they perceive to be effective in gaining a higher score on the test. For instance, Thiede et al. (2011) demonstrated the effect of test expectancy using science text materials. In the inference-test expectancy condition, participants were told that they would take a test assessing their ability to make inferences across different parts of the text. In the memory-test expectancy condition, they were told that they would take a test assessing their ability to remember details of the text. The results showed that the inference-test expectancy group outperformed the memory-test expectancy group on the final inference test, whereas the pattern was reversed on the final memory test.

Several other researchers have suggested that the type of questions students expect influences their effort and use of learning strategies (Joughin, 2010). Students tend to change their study strategies in free-recall versus cued-recall tests (Finley & Benjamin, 2012; Rivers & Dunlosky, 2021). Rouet et al. (2001) also demonstrated that the students who were given application-based questions were more likely to use a review-and-integrate strategy, whereas those who were given memory-based questions were more likely to use a locate-and-memorize strategy while studying a scientific text.

Second, previous studies have demonstrated that the effect of metacognitive judgments on learning varies depending on the type of judgment (e.g., Lee & Ha, 2019; Nguyen & McDaniel, 2016). Nguyen and McDaniel (2016) examined the effect of making different types of metacomprehension judgments accompanied by the Read-Recite-Review (3R) strategy on text comprehension. The participants provided either judgments of inferencing (JOIs) or JOLs on the studied passages. In the JOI condition, participants made judgments about how well they would be able to apply core information in the passages. In the JOL condition, participants judged the likelihood of remembering the information from the studied text. The results showed that inference and problem-solving performance were enhanced only when participants made JOIs following retrieval practice. This suggests that not all kinds of metacognitive judgments enhance learning; when making such judgments, it matters whether inference or memory is treated as the learning goal. However, this study focused only on whether making metacognitive judgments would enhance the learning of previously studied material when combined with retrieval practice (i.e., the 3R strategy) and did not consider the effect of metacognitive judgments on subsequent learning.

Lee and Ha (2019) also reported that making metacognitive judgments facilitated inductive learning, but the benefits appeared differently depending on the type of judgment. In this study, making JOLs at the category level (i.e., predicting the likelihood of correctly classifying new exemplars of the studied categories) or global level (i.e., predicting overall performance) enhanced subsequent learning of new material (Experiments 2–3). However, making item-level JOLs (e.g., predicting the likelihood of correctly indicating a category given a certain exemplar) did not show benefits compared to the restudy control condition (Experiment 1). The researchers argued that this might be because making a JOL at a category or global level makes the learning goal clear to participants. The goal of inductive learning is to abstract commonalities among multiple exemplars, generalizing what is learned to other new examples. Therefore, if the item-level JOLs had participants focus on processing individual examples rather than abstraction and generalization, their metacognitive activities were probably not relevant to the learning goal. Although this study suggested that learning goals should be considered while making metacognitive judgments, it did not directly examine the effect of different types of learning goals accompanied by metacognitive judgments. Hence, the present study investigated whether providing different learning goals while making metacognitive judgments would orient learners to use different learning strategies and, in turn, affect their learning.

The present study

The goals of the current study were twofold. The primary aim was to examine whether eliciting metacognitive judgments of learned material would facilitate the learning of previously studied and subsequently studied material in text comprehension. To accomplish this goal, the current study used the forward-testing-effect paradigm, in which participants complete different interim activities on previously studied material (e.g., interim testing or interim restudy) before studying new material. Specifically, we adopted the procedure that Lee and colleagues (Choi & Lee, 2020; Lee & Ahn, 2018; Lee & Ha, 2019) used to investigate the forward testing effect in inductive learning. We hypothesized that eliciting metacognitive judgments on previously studied text would enhance the learning of the previously learned material (the backward effect) and of newly studied material (the forward effect) in text comprehension.

However, we expected that the effect of metacognitive judgments would differ depending on what learning goals learners consider while making such judgments. Thus, our secondary goal was to investigate how the effect of metacognitive judgments changes based on the type of learning goal emphasized while making judgments. We hypothesized that participants would regulate the learning process differently depending on their learning goal and that learning performance would improve when the type of learning goal matched the level of learning required for the final test. Specifically, we tested the effects of inference-based and memory-based metacognitive judgments on retention and transfer performance. If making memory-based metacognitive judgments leads learners to focus on memorizing specific details, then these learners should perform better on the retention test. Similarly, if making inference-based metacognitive judgments encourages learners to focus on understanding and drawing inferences from the learning materials, then these learners should perform better on the transfer test.

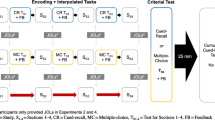

Figure 1 shows a schematic representation of the overall procedures used in this study. Across two experiments, we had participants study two different expository texts across two sections (Sections A and B), with one text in each section, making metacognitive judgments of the studied material between the two sections. The participants were randomly assigned to one of three conditions: inference-based metacognitive judgment, memory-based metacognitive judgment, and study-only control. In both metacognitive judgment conditions, participants were prompted to make metacognitive judgments on the material of Section A by answering a series of metacognitive questions framed around either inference-based or memory-based learning goals. In the control condition, the participants studied the passage in Section A without making any metacognitive judgments. After this manipulation, all the participants studied the passage on a new topic in Section B. No manipulation occurred in this section; thus, all participants studied the same passage for the same amount of time in an identical environment. Finally, all participants were given retention and transfer tests on the materials of both sections. Conducting tests for both sections allowed us to examine both the backward and forward effects of metacognitive judgments. If the metacognitive judgment groups outperformed the study-only control group on the final test of Section A, this result would demonstrate the backward effect of metacognitive judgments on text comprehension. If the metacognitive judgment groups showed higher performance than the study-only control group on the final test of Section B, these results would reveal the forward effect of metacognitive judgments in text learning.

Experiment 1

Experiment 1 aimed to investigate whether metacognitive judgments of previously learned material would facilitate the learning of the already judged and newly studied material in text learning. We examined the effects of inference-based and memory-based metacognitive judgments, compared to the study-only control condition on the retention and transfer performance.

Method

Participants

To determine the sample size, a power analysis was conducted using G*Power (Faul et al., 2007). Because no prior study had directly investigated the effect of metacognitive judgments with different learning goals, we took the effect size (d = 0.78) from Thiede et al. (2011), which examined the effect of inference-test versus memory-test expectancy. The analysis indicated that a sample size of 27 participants was required per condition, assuming α = 0.05, a power of 0.80, and a two-tailed test. Thus, we recruited approximately 30 participants per condition. The participants were 108 undergraduates from a large university in South Korea; 14 participants were excluded from the data analyses because they left the experiment idle for more than 10 min or lost their internet connection in the middle of the experiment. Therefore, data from 94 participants (61 females, 33 males; mean age = 22.69 years) were analyzed. The participants took part online and received monetary compensation for participation. Each participant was randomly assigned to one of three conditions: 32 in the inference-based metacognitive judgment condition, 31 in the memory-based metacognitive judgment condition, and 31 in the study-only condition.
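The sample-size figure can be reproduced approximately outside G*Power. The sketch below assumes the calculation was an a priori power analysis for a two-tailed independent-samples t test with equal group sizes (the text does not name the G*Power test family). It integrates the noncentral t distribution numerically with the Python standard library rather than using G*Power's own routines, and all function names are hypothetical.

```python
import math


def _chi2_grid(df: int, m: int = 800):
    """Simpson weights times the chi-square(df) density, with sqrt(v/df) at each node.

    Assumes df > 2 so the density is 0 at v = 0; m must be even for Simpson's rule.
    """
    hi = df + 12 * math.sqrt(2 * df)  # upper limit far into the right tail
    h = hi / m
    log_norm = (df / 2) * math.log(2) + math.lgamma(df / 2)
    grid = []
    for i in range(m + 1):
        v = i * h
        dens = 0.0 if v == 0 else math.exp((df / 2 - 1) * math.log(v) - v / 2 - log_norm)
        simpson = 1 if i in (0, m) else (4 if i % 2 else 2)
        grid.append((simpson * h / 3 * dens, math.sqrt(v / df)))
    return grid


def _upper_tail(t: float, nc: float, grid) -> float:
    """P(T > t) for T = (Z + nc) / sqrt(V/df): given V = v, T > t iff Z > t*sqrt(v/df) - nc."""
    return sum(w * 0.5 * math.erfc((t * s - nc) / math.sqrt(2)) for w, s in grid)


def power_two_sample(d: float, n: int, alpha: float = 0.05) -> float:
    """Power of a two-tailed independent-samples t test with n participants per group."""
    df = 2 * n - 2
    grid = _chi2_grid(df)
    lo, hi = 0.0, 50.0  # bisect for the critical t whose central upper tail is alpha/2
    for _ in range(40):
        mid = (lo + hi) / 2
        if _upper_tail(mid, 0.0, grid) > alpha / 2:
            lo = mid
        else:
            hi = mid
    t_crit = (lo + hi) / 2
    nc = d * math.sqrt(n / 2)  # noncentrality parameter for equal group sizes
    upper = _upper_tail(t_crit, nc, grid)
    # P(T < -t_crit): given V = v, Z < -t_crit*sqrt(v/df) - nc
    lower = sum(w * 0.5 * math.erfc((t_crit * s + nc) / math.sqrt(2)) for w, s in grid)
    return upper + lower


def required_n(d: float, alpha: float = 0.05, target: float = 0.80) -> int:
    """Smallest n per group whose power reaches the target (search starts at n = 3, df = 4)."""
    n = 3
    while power_two_sample(d, n, alpha) < target:
        n += 1
    return n


print(required_n(0.78))
```

With d = 0.78, α = .05, and a target power of .80, the search should land on 27 participants per group, in line with the figure reported above. Note that this calculation targets a single two-group contrast.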

Design

The present study used a 3 (type of activity: inference-based metacognitive judgment, memory-based metacognitive judgment, study-only) × 2 (type of test: retention, transfer) mixed design. The type of activity was a between-subjects factor, and the type of test was a within-subject factor. In the metacognitive judgment conditions, participants were asked to make either inference-based or memory-based metacognitive judgments on the materials of Section A, before moving on to studying Section B. In the study-only condition, the participants studied Section A without making metacognitive judgments.

Materials and procedure

The current research was approved by the Institutional Review Board of the university where the present study took place, and participants completed an online informed consent form before taking part in the experiment. The materials were presented using the Qualtrics software (accessed at Qualtrics.com). Prior to undertaking the study, each participant was informed of the purpose and overall procedures of the experiment. Participants were told that they would read text passages about two different topics (i.e., bats and bread), one in each section of the experiment, and would later be tested on the studied text materials. The text passages used in the experiment were obtained from Butler (2010). All passages, as well as the test questions, were translated into Korean. Each passage was nearly 800 words long and organized into eight paragraphs, each containing a single fact or concept.

Participants studied one topic (e.g., bats) in Section A and then another topic (e.g., bread) in Section B. The text materials were counterbalanced between the two sections to control for item-specific effects. In Section A, participants read the text for either 6 min (inference-based and memory-based metacognitive judgment conditions) or 8 min (study-only control condition). Because the metacognitive judgment conditions were given 2 min for making judgments, the control group received an additional 2 min of study time, so that all groups spent the same amount of time on Section A. Thereafter, the participants were given different instructions depending on the condition.

In both metacognitive judgment conditions, participants were asked to go through three phases of metacognitive judgment: monitoring, evaluating, and planning. These three metacognitive skills are considered critical for the regulation of learning (Schraw, 1998) and were included to prevent participants from prematurely finishing their metacognitive thinking (Lee & Ha, 2019). Monitoring refers to an individual’s online perception of their learning performance. Evaluating refers to assessing learning outcomes and checking learning efficiency. Planning refers to the selection of learning strategies and the subsequent regulation of learning behavior. The participants completed all three phases in order, with a different prompt in each phase, adapted from the metacognitive questions used by Lee and Ha (2019).

First, in the monitoring phase, participants were asked to think thoroughly about how well they had learned the Section A text, and were asked, “In a final test, you will be given memory/inference questions concerning the text you just studied, how likely is it that you will correctly answer the memory/inference question?” Participants typed a number between 0 and 100 (%). Second, in the evaluating phase, participants were instructed to think thoroughly about the strategies they had used to study the text, and were asked, “How effective do you think your study strategies were in memorizing/inferring from the text?” Participants typed a number between 0 (not effective at all) and 100 (very effective). Finally, in the planning phase, participants were asked to think carefully about what strategies would be effective in learning from a new text in the next section, and were asked, “How likely is it that you would change the strategies used in the previous section?” Participants typed a number between 0 (will not change at all) and 100 (will change completely). To prevent participants from ending their metacognitive activities too early, they could not enter their responses until 30 s had passed, after which a response instruction was displayed on the screen, leaving 10 s for input. Participants in the metacognitive judgment conditions therefore spent 40 s on each phase of metacognitive judgment, for a total of 2 min on metacognitive judgments.
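The timing constraint in the procedure (6 min of study plus three 40-s judgment phases in the judgment conditions, versus 8 min of study in the control condition) can be checked with a few lines of arithmetic. This is a minimal sketch assuming that each phase lasted exactly 30 s plus the full 10-s response window, as described above; the dictionary keys and function names are hypothetical labels, not part of the Qualtrics survey.

```python
# Durations in seconds, taken from the procedure described above.
STUDY_A_SECONDS = {"inference": 6 * 60, "memory": 6 * 60, "control": 8 * 60}
PHASES = ("monitoring", "evaluating", "planning")
LOCKOUT_SECONDS = 30   # response field disabled while participants keep reflecting
RESPONSE_SECONDS = 10  # window for typing the 0-100 rating once it appears


def judgment_seconds(condition: str) -> int:
    """Time spent on metacognitive judgments; the control group makes none."""
    if condition == "control":
        return 0
    return len(PHASES) * (LOCKOUT_SECONDS + RESPONSE_SECONDS)


def section_a_seconds(condition: str) -> int:
    """Total Section A time: study time plus any judgment phases."""
    return STUDY_A_SECONDS[condition] + judgment_seconds(condition)


for condition in STUDY_A_SECONDS:
    print(condition, section_a_seconds(condition) / 60, "min")
```

Each condition comes to 480 s (8 min), which is the equal Section A duration the design aimed for.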

After completing Section A, Section B followed, with all participants being instructed to study the other text passage. They read the passage for 6 min without any additional activities; thus, all three groups studied Section B under the same conditions.

On completion of Section B, all participants solved a series of simple math problems for 3 min and took a final test, with 12 questions for each of the two studied passages (Sections A and B). The test questions were selected from Butler (2010), consisting of retention and transfer problems. For each passage, there were eight retention and four transfer questions. Retention questions were selected to assess how well the participants could memorize facts and concepts from the studied texts. An example of the retention question is as follows: “Bats sleep hanging upside down in a high location to avoid predators. What is the main predator of bats?” (Answer: Birds of prey are the main predator of bats.). Transfer questions were used to evaluate how well participants could apply the facts or concepts within texts to a different domain. An example of the transfer question is as follows: “The U.S. Military is looking at bat wings for inspiration in developing a new type of aircraft. How would this new type of aircraft differ from traditional aircrafts like fighter jets?” (Answer: Traditional aircrafts are modeled after bird wings, which are rigid and good for providing lift. Bat wings are more flexible, and thus an aircraft modeled on bat wings would have greater maneuverability.) The participants took a final test at their own pace, and no feedback was provided. After the final test, demographic data were collected, and the participants were shown a debriefing page.

Scoring

We adopted the scoring scheme of Butler (2010). Each response was scored as correct or incorrect (no partial credit was given). If a participant’s response contained the core concept, it was scored as correct. Two coders scored responses to all the questions independently, and any differences were resolved by the first author. Cohen’s kappa (Cohen, 1960) was used to calculate interrater reliability. Reliability was high for both retention (κ = 0.98) and transfer questions (κ = 0.97).
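Because each response was coded as correct (1) or incorrect (0), interrater agreement reduces to Cohen's kappa on two binary sequences: observed agreement corrected for the agreement expected from each coder's marginal proportions, κ = (p_o - p_e) / (1 - p_e). The sketch below is a plain-Python illustration of that formula with made-up codes; it is not the software the authors used, and the function name is hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's (1960) kappa for two raters assigning categorical codes to the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must code the same non-empty set of items")
    n = len(rater_a)
    # Observed proportion of items on which the two coders agree
    p_obs = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement implied by each coder's marginal category proportions
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    categories = set(counts_a) | set(counts_b)
    p_exp = sum(counts_a[c] * counts_b[c] for c in categories) / n ** 2
    if p_exp == 1:
        return 1.0  # convention: both coders used one identical category throughout
    return (p_obs - p_exp) / (1 - p_exp)


# Hypothetical 0/1 codes for ten responses; the coders disagree on the fifth item.
coder_1 = [1, 1, 0, 1, 0, 1, 1, 0, 1, 1]
coder_2 = [1, 1, 0, 1, 1, 1, 1, 0, 1, 1]
print(round(cohens_kappa(coder_1, coder_2), 3))
```

Here observed agreement is .90 but chance agreement is already .62, so kappa is noticeably lower than raw percent agreement.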

Results

Metacognitive judgments

The participants in the inference-based and memory-based metacognitive judgment conditions provided three ratings on the materials of Section A. There were no reliable rating differences between the two interim metacognitive judgment groups for monitoring, t(61) = 0.95, p = .346, evaluating, t(61) = 0.32, p = .752, or planning, t(61) = 1.68, p = .099.

We also computed metacognitive accuracy to see how well participants’ metacognitive ratings corresponded to their actual test performance. Participants provided a single aggregate monitoring judgment for Section A, and we computed the difference between this predicted performance and actual performance. Because positive and negative differences can cancel each other out when averaged within a condition, we report the absolute difference between predicted and actual performance; higher values indicate greater inaccuracy. The mean absolute difference was 28.66 (SD = 20.94) in the inference-based judgment condition and 22.48 (SD = 13.53) in the memory-based judgment condition. These values did not differ significantly between the two judgment conditions, t(59) = 1.29, p = .202.
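The reason for reporting absolute rather than signed differences is easiest to see with two participants whose errors point in opposite directions. In this sketch, actual performance is assumed to be the percentage correct across all 12 Section A questions (the text does not say whether retention and transfer items were pooled), and the participants, their scores, and the helper names are hypothetical.

```python
from statistics import mean


def percent_correct(item_scores):
    """Percentage of items scored 1 (correct) out of all scored items."""
    return 100 * sum(item_scores) / len(item_scores)


def absolute_errors(predicted, actual):
    """Per-participant |predicted - actual|; larger values mean poorer calibration."""
    return [abs(p - a) for p, a in zip(predicted, actual)]


# Two hypothetical participants who each answered 6 of 12 items correctly (50%):
# one predicted 70% (overconfident), the other 30% (underconfident).
predicted = [70.0, 30.0]
actual = [percent_correct([1] * 6 + [0] * 6)] * 2
signed = [p - a for p, a in zip(predicted, actual)]

print(mean(signed))                              # 0.0: signed errors cancel out
print(mean(absolute_errors(predicted, actual)))  # 20.0: absolute errors do not
```

A signed average would describe these two poorly calibrated participants as perfectly accurate on average, which is why the absolute measure is the more informative summary here.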

Final test performance

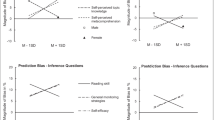

Figure 2 shows the mean percentage of correct responses in the final test of Sections A and B. To examine backward and forward effects separately, two separate 3 × 2 mixed analyses of variance (ANOVAs) were conducted on the number of correct answers for Sections A and B. The type of activity (inference-based judgment vs. memory-based judgment vs. study-only) was a between-subjects factor, and the type of test (retention vs. transfer) was a within-subject factor. For all pairwise comparisons, we applied a Bonferroni adjustment to maintain a family-wise Type I error rate of 0.05.
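A Bonferroni adjustment for the three pairwise group comparisons multiplies each unadjusted p value by 3 (capping the result at 1), which is equivalent to testing each unadjusted p against .05/3 ≈ .0167. The sketch assumes that the family consists of the three pairwise comparisons among activity conditions; the p values are invented for illustration, and the helper names are hypothetical.

```python
from itertools import combinations

GROUPS = ("inference-based", "memory-based", "study-only")
PAIRS = list(combinations(GROUPS, 2))  # the three pairwise group comparisons


def bonferroni_adjust(p_values, family_size=None):
    """Multiply each p by the number of comparisons in the family, capping at 1."""
    m = family_size if family_size is not None else len(p_values)
    return [min(1.0, p * m) for p in p_values]


def per_comparison_alpha(family_alpha=0.05, m=len(PAIRS)):
    """Equivalent view: compare each unadjusted p against alpha / m."""
    return family_alpha / m


raw_p = [0.010, 0.200, 0.500]  # hypothetical unadjusted p values, one per pair
for pair, p_adj in zip(PAIRS, bonferroni_adjust(raw_p)):
    print(" vs ".join(pair), round(p_adj, 3))
```

Adjusting the p values and lowering the per-comparison threshold lead to the same decisions; reporting adjusted p values simply lets readers keep using .05 as the cutoff.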

To examine whether making metacognitive judgments facilitated the learning of previously studied material (backward effect), we first looked at the patterns of performance for Section A. For Section A, there was a significant main effect for the type of activity, F(2, 91) = 7.52, p < .001, ηp² = 0.142. The participants who made inference-based judgments outperformed the study-only group regardless of the type of test, mean difference = 21.77, 95% CI [8.04, 35.51], p = .001, d = 0.93. No other group differences were observed. The performance of the memory-based judgment group was not significantly different from that of the inference-based judgment group, mean difference = -9.27, 95% CI [-23.01, 4.46], p = .309, or study-only group, mean difference = 12.50, 95% CI [-1.35, 26.35], p = .091. There was not a significant main effect for the type of test, F(1, 91) = 3.18, p = .078, ηp² = 0.034, nor was there an interaction between the type of activity and type of test, F(2, 91) = 0.22, p = .802, ηp² = 0.005, indicating that the effect of metacognitive judgments did not change depending on the test type.

To examine whether making metacognitive judgments facilitated the learning of newly studied material (forward effect), we examined the patterns of performance for Section B. For Section B, there was a significant main effect for the type of activity, F(2, 91) = 11.94, p < .001, ηp² = 0.208. Both the inference-based (mean difference = 28.59, 95% CI [14.24, 42.94], d = 0.93) and memory-based judgment (mean difference = 17.14, 95% CI [2.68, 31.60], d = 0.54) groups outperformed the study-only group, regardless of the type of test. There was no reliable performance difference between the inference-based and memory-based judgment groups, mean difference = 11.45, 95% CI [-2.89, 25.80], p = .164. In addition, there was a significant main effect for the type of test, F(1, 91) = 17.70, p < .001, ηp² = 0.163, such that the retention performance was significantly higher than the transfer performance regardless of the type of activity, mean difference = 11.37, 95% CI [6.00, 16.73]. The interaction between the type of activity and type of test was not significant, F(2, 91) = 1.22, p = .30, ηp² = 0.026.
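Partial eta squared can be recovered from each reported F ratio and its degrees of freedom. Because ηp² = SS_effect / (SS_effect + SS_error) and F = (SS_effect / df_effect) / (SS_error / df_error), it follows that ηp² = F·df_effect / (F·df_effect + df_error). The sketch below applies this identity to the F values reported for Sections A and B as a consistency check. It recomputes effect sizes from rounded F values, so agreement is only expected to the three decimals reported; the function and table names are hypothetical.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared implied by an F ratio and its degrees of freedom."""
    return f * df_effect / (f * df_effect + df_error)


REPORTED = [
    # (effect, F, df_effect, df_error, reported partial eta squared)
    ("A: activity", 7.52, 2, 91, 0.142),
    ("A: test", 3.18, 1, 91, 0.034),
    ("A: activity x test", 0.22, 2, 91, 0.005),
    ("B: activity", 11.94, 2, 91, 0.208),
    ("B: test", 17.70, 1, 91, 0.163),
    ("B: activity x test", 1.22, 2, 91, 0.026),
]

for label, f, df1, df2, reported in REPORTED:
    recomputed = partial_eta_squared(f, df1, df2)
    print(f"{label}: recomputed {recomputed:.3f}, reported {reported}")
```

All six recomputed values round to the reported ones, which suggests the effect sizes in both ANOVAs were computed from the same error terms as the F tests.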

Discussion

Experiment 1 demonstrated the positive effects of making metacognitive judgments on text learning. However, the benefits of metacognitive judgments differed depending on the type of judgment. Specifically, we obtained both backward and forward effects of inference-based metacognitive judgments. Making inference-based metacognitive judgments facilitated not only the learning of previously studied materials but also the learning of newly studied ones, compared to the study-only control. This is noteworthy because participants in all three conditions studied the passage in Section B under the same circumstances (i.e., reading the passage for 6 min). The only difference among the conditions was whether participants made metacognitive judgments on the material of Section A before studying Section B. Conversely, compared to the study-only control, making memory-based metacognitive judgments facilitated only the learning of newly studied materials, not the learning of previously studied ones.

Differential effects depending on the type of judgment may have occurred because people were engaged in different cognitive processes while making metacognitive judgments. Previous studies suggest that learners use learning strategies differently depending on the learning goals they expect (Middlebrooks et al., 2017). The participants in the inference-based metacognitive judgment condition might have focused on the conceptual understanding of the learning materials and thus used more holistic and associative learning strategies, whereas the participants in the memory-based metacognitive judgment condition might have focused on detail-based memorization. Accordingly, in the following experiment, we asked participants in the metacognitive judgment conditions to report the learning strategies they used for their prior learning and the strategies they would use for their subsequent learning of new material.

It should be noted that we did not obtain an interaction between the type of activity and the type of test. We originally hypothesized that the memory-based and inference-based metacognitive judgment groups would outperform the other groups on retention and transfer tests, respectively. However, the effect of making different types of metacognitive judgments did not depend on the test type in either Section A or Section B. These results are inconsistent with the test expectancy effect (Thiede et al., 2011). One explanation is that participants did not know what type of questions would appear on the final test and therefore lacked a clear learning goal. Although participants were explicitly told that they would be tested with memory questions (in the memory-based metacognitive judgment condition) or inference questions (in the inference-based metacognitive judgment condition) while making metacognitive judgments relative to this specific goal, it is unclear whether they were aware of their learning objective (i.e., what types of test questions would appear). To address this issue, Experiment 2 included a manipulation check to confirm that participants in the inference- and memory-based metacognitive judgment conditions identified their learning goals as inference and memory, respectively.

Experiment 2

Experiment 2 again investigated whether making metacognitive judgments could facilitate learning of previously studied and newly studied text, and how any positive effects would change depending on the type of learning goal highlighted while making the judgments. Additionally, Experiment 2 investigated whether participants would use different study strategies depending on the learning goal emphasized while making metacognitive judgments. Two modifications were made. First, a manipulation check was included to ensure that participants identified the learning goal as intended: either inference or memory. We also asked the study-only group to report their learning goal, to examine which goal (i.e., inference or memory) they spontaneously set when the experimenter did not provide one. Second, participants were asked to write detailed responses during each of the three phases of metacognitive judgment: monitoring, evaluating, and planning.

Method

Participants

To determine the sample size, we used the effect size (ηp2 = 0.208) observed in Experiment 1. A power analysis using G*Power (Faul et al., 2007) indicated that a sample size of 20 participants per condition was required, assuming α = 0.05, a power of 0.80, and F tests. Thus, we recruited approximately 30 participants per condition. One hundred and thirty-three undergraduates from a large university in South Korea participated online with monetary compensation; the data from 43 participants were removed from analyses because they left the experiment idle for more than 10 min or lost internet connection in the middle of the experiment. Therefore, the data of 90 participants (62 females, 28 males; mean age = 21.67 years) were analyzed. Each participant was randomly assigned to one of the three conditions, with a total of 30 each in the inference-based metacognitive judgment, memory-based metacognitive judgment, and study-only conditions.
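The power analysis above can be approximated with the noncentral F distribution. The sketch below is a minimal illustration that assumes a one-way between-subjects ANOVA with three groups, converts partial eta squared to Cohen's f via f² = ηp2 / (1 − ηp2), and uses the noncentrality parameter λ = f²·N (the convention G*Power uses for fixed-effects ANOVA). The text does not state which G*Power test family or design options were chosen (for example, a mixed design with correlated repeated measures), so this sketch need not reproduce the reported requirement of 20 participants per condition. The function names are hypothetical.

```python
from scipy import stats


def anova_power(eta_p2: float, n_per_group: int, k_groups: int = 3, alpha: float = 0.05) -> float:
    """Approximate power of a one-way between-subjects ANOVA (hypothetical helper)."""
    f2 = eta_p2 / (1.0 - eta_p2)               # Cohen's f^2 from partial eta squared
    n_total = n_per_group * k_groups
    df1, df2 = k_groups - 1, n_total - k_groups
    noncentrality = f2 * n_total               # lambda = f^2 * N
    f_crit = stats.f.ppf(1.0 - alpha, df1, df2)  # critical F under the null hypothesis
    # Power = P(F > f_crit) when F follows the noncentral F distribution
    return float(stats.ncf.sf(f_crit, df1, df2, noncentrality))


def min_n_per_group(eta_p2: float, target_power: float = 0.80, k_groups: int = 3,
                    alpha: float = 0.05) -> int:
    """Smallest per-group n that reaches the target power under the assumptions above."""
    n = 2
    while anova_power(eta_p2, n, k_groups, alpha) < target_power:
        n += 1
    return n


if __name__ == "__main__":
    n = min_n_per_group(0.208)
    print(n, round(anova_power(0.208, n), 3))
```

Changing the assumed design (e.g., treating the effect as a within-between interaction) changes λ and therefore the required n, which is why the assumed test family matters when reporting a power analysis.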

Design, materials, and procedure

The design and procedures were identical to those used in Experiment 1, except for two changes. First, we used a manipulation check to ensure that participants knew which learning goal they should achieve (i.e., what type of test they would be given). Before asking them to make metacognitive judgments, we explicitly informed participants of the type of test questions (memory or inference) they would be given later. Then, on the following page, we checked whether they correctly remembered the learning goal by asking: “In the test phase, what types of questions do you expect to be asked?” Participants chose one of three options (memory, inference, or “I do not know”). Participants in the study-only condition were asked the same question, to examine the type of learning goal they spontaneously set when none was provided at any phase of the experiment.

Second, in the inference-based and memory-based metacognitive judgment conditions, participants went through three phases of metacognitive judgment, as in Experiment 1. This time, however, they were asked to provide a written response to each question before giving a numerical rating. The written-response questions were constructed so that participants would think thoroughly before giving a rating; participants were given 30 s to write each response. The rating questions were identical to those used in Experiment 1, and participants were given 10 s for each rating. In the monitoring phase, participants were asked, “In a final test, you will be given memory/inference questions concerning the text you have just studied. Which part of the text do you think you will be able to correctly memorize/infer? Write down your answers.” In the evaluating phase, participants were asked, “What strategies did you use to memorize/infer from the text while studying? Write down your answers.” Finally, in the planning phase, participants were asked, “In the next section, you will study a new passage. When the learning goal is to memorize/infer from the text, what strategies do you think will be effective in achieving the goal? Write down your answers.” All other procedures were identical to those used in Experiment 1.

Scoring

The scoring scheme for the final test was the same as that used in Experiment 1. The interrater reliability was high for both retention (κ = 0.97) and transfer questions (κ = 0.95). Any differences were resolved by the first author.
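Interrater reliability here is reported as Cohen's kappa, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected by chance from each coder's marginal category proportions. The minimal sketch below computes the unweighted statistic; it assumes each answer is scored into discrete categories, and the example codes are hypothetical rather than the study's data.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two coders rating the same items."""
    if len(coder_a) != len(coder_b) or len(coder_a) == 0:
        raise ValueError("both coders must rate the same, non-empty set of items")
    n = len(coder_a)
    # Observed agreement: proportion of items given the same code by both coders
    p_obs = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement computed from each coder's marginal category frequencies
    counts_a, counts_b = Counter(coder_a), Counter(coder_b)
    categories = set(counts_a) | set(counts_b)
    p_exp = sum(counts_a[c] * counts_b[c] for c in categories) / (n * n)
    if p_exp == 1.0:  # both coders used one identical category for every item
        return 1.0
    return (p_obs - p_exp) / (1.0 - p_exp)


# Hypothetical scores for four test answers (1 = credit, 0 = no credit)
print(cohens_kappa([1, 1, 0, 0], [1, 0, 0, 0]))  # 0.5
```

If scikit-learn is available, `sklearn.metrics.cohen_kappa_score` computes the same unweighted statistic.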

Results

Manipulation check

A manipulation check revealed that all participants in the metacognitive judgment conditions identified the learning goal as intended. The inference-based metacognitive judgment group chose the inference goal (100%) and the memory-based metacognitive judgment group chose the memory goal (100%). Most participants in the study-only condition chose memory (80%), and only a few participants chose either inference (10%) or “I do not know” (10%).

Metacognitive judgments

Participants in the inference-based and memory-based metacognitive judgment conditions provided three ratings on the material of Section A. The two metacognitive judgment groups did not differ reliably in their ratings for monitoring, t(58) = -1.77, p = .082, evaluating, t(58) = -0.56, p = .578, or planning, t(58) = 1.12, p = .267.

We also computed the absolute difference between predicted and actual performance for Section A. The mean absolute difference was 23.67 (SD = 16.08) in the inference-based judgment condition and 22.67 (SD = 16.32) in the memory-based judgment condition; these values did not differ significantly between the two conditions, t(56) = 0.20, p = .842.
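The absolute difference is a common index of absolute metacognitive accuracy: for each participant, |predicted − actual| is computed and then averaged within a condition (0 means perfectly calibrated). The sketch below assumes both values are on a 0–100 percentage scale; the text does not specify which rating served as the prediction, and all numbers shown are hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence


def absolute_differences(predicted: Sequence[float], actual: Sequence[float]) -> list[float]:
    """Per-participant |predicted - actual| on a 0-100 scale (0 = perfectly calibrated)."""
    if len(predicted) != len(actual):
        raise ValueError("need exactly one prediction per participant")
    return [abs(p - a) for p, a in zip(predicted, actual)]


def summarize(values: Sequence[float]) -> tuple[float, float]:
    """Condition mean and sample SD of the absolute differences."""
    return mean(values), stdev(values)


# Hypothetical participants: predicted vs. actual percentage correct on Section A
diffs = absolute_differences([70, 50, 90], [50, 60, 90])
print(diffs)  # [20, 10, 0]
print(summarize(diffs))
```

The per-condition lists of differences could then be compared with an independent-samples t test, for example with `scipy.stats.ttest_ind`.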

Final test performance

Figure 3 presents the mean percentage of correct responses on the final tests for Sections A and B. To examine backward and forward effects separately, two 3 × 2 mixed ANOVAs were conducted on the number of correct answers for Sections A and B, with the type of activity (inference-based metacognitive judgment vs. memory-based metacognitive judgment vs. study-only) as a between-subjects factor and the type of test (retention vs. transfer) as a within-subjects factor. For all pairwise comparisons, we applied a Bonferroni adjustment to maintain a family-wise Type I error rate of .05.
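A Bonferroni adjustment controls the family-wise error rate by testing each of m comparisons at α/m or, equivalently, by multiplying each p value by m and capping the result at 1. With three groups there are m = 3 pairwise comparisons. The minimal sketch below illustrates the adjustment on hypothetical p values; the capping step is what produces adjusted values reported as p = 1.00.

```python
from typing import Sequence


def bonferroni_adjust(p_values: Sequence[float]) -> list[float]:
    """Bonferroni-adjusted p values: min(1, m * p) for m comparisons."""
    m = len(p_values)
    return [min(1.0, m * p) for p in p_values]


# Hypothetical unadjusted p values for the three pairwise group comparisons
raw = [0.004, 0.030, 0.500]
adjusted = bonferroni_adjust(raw)
significant = [p_adj < 0.05 for p_adj in adjusted]
print(adjusted, significant)
```

Note that the second comparison would be significant at the unadjusted .05 level but not after adjustment, which is the intended conservatism of the procedure.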

To examine whether the act of making metacognitive judgments facilitated the learning of previously studied material (backward effect), we first examined performance in Section A. For Section A, the main effect of the type of activity was not significant, F(2, 87) = 2.53, p = .086, ηp2 = 0.055, indicating no reliable difference among the three groups. However, there was a significant main effect of the type of test, F(1, 87) = 10.41, p = .002, ηp2 = 0.107: retention performance was significantly higher than transfer performance regardless of the type of activity, mean difference = 9.03, 95% CI [3.47, 14.59]. The interaction between the type of activity and type of test was not significant, F(2, 87) = 1.73, p = .183, ηp2 = 0.038.

To examine whether making metacognitive judgments affected the learning of newly studied material (forward effect), we examined the patterns of performance for Section B. For Section B, there was a significant main effect for the type of activity, F(2, 87) = 12.44, p < .001, ηp2 = 0.222. The inference-based judgment group outperformed both the memory-based judgment group, mean difference = 16.46, 95% CI [3.29, 29.63], p = .009, d = 0.62, and the study-only group, mean difference = 26.67, 95% CI [13.50, 39.84], p < .001, d = 1.01. There was not a reliable performance difference between the memory-based judgment and study-only groups, mean difference = 10.21, 95% CI [-2.96, 23.38], p = .185. There was also a significant main effect for the type of test, F(1, 87) = 8.72, p = .004, ηp2 = 0.091. The retention performance was significantly higher than the transfer performance, mean difference = 10.00, 95% CI [3.27, 16.73].

More interestingly, there was a significant interaction between the type of activity and type of test, F(2, 87) = 6.31, p = .003, ηp2 = 0.127, suggesting that the effect of the type of activity varied according to the type of test. The follow-up one-way ANOVAs revealed significant group differences in retention, F(2, 87) = 4.19, p = .018, ηp2 = 0.088, and transfer performance, F(2, 87) = 14.58, p < .001, ηp2 = 0.251. Specifically, for retention performance, the inference-based judgment group outperformed the study-only group, mean difference = 16.67, 95% CI [1.35, 31.98], p = .028, d = 0.69. The memory-based judgment group did not significantly differ from either the inference-based judgment group, mean difference = -2.08, 95% CI [-17.40, 13.23], p = 1.00, or the study-only group, mean difference = 14.58, 95% CI [-0.73, 29.90], p = .067. For transfer performance, the inference-based judgment group outperformed both the memory-based judgment group, mean difference = 30.83, 95% CI [13.02, 48.65], p < .001, d = 1.10, and the study-only group, mean difference = 36.67, 95% CI [18.85, 54.48], p < .001, d = 1.30. There was no reliable performance difference between the memory-based judgment and study-only groups, mean difference = 5.83, 95% CI [-11.98, 23.65], p = 1.00.
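The d values accompanying these pairwise comparisons are standardized mean differences. The text does not state which standardizer was used, so the sketch below assumes the common pooled-SD version of Cohen's d for two independent groups, d = (M1 − M2) / SD_pooled, and uses hypothetical scores.

```python
import math
from statistics import mean, variance
from typing import Sequence


def cohens_d(group_1: Sequence[float], group_2: Sequence[float]) -> float:
    """Cohen's d for two independent groups, standardized by the pooled sample SD."""
    n1, n2 = len(group_1), len(group_2)
    if n1 < 2 or n2 < 2:
        raise ValueError("each group needs at least two scores")
    # Pooled variance weights each group's sample variance by its degrees of freedom
    pooled_var = ((n1 - 1) * variance(group_1) + (n2 - 1) * variance(group_2)) / (n1 + n2 - 2)
    return (mean(group_1) - mean(group_2)) / math.sqrt(pooled_var)


# Hypothetical percentage-correct scores for two conditions
print(cohens_d([60, 70, 80], [50, 60, 70]))
```

A positive d means the first group scored higher; swapping the arguments flips the sign.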

Analyses of written responses in metacognitive judgment conditions

In both metacognitive judgment conditions, participants provided written responses during the three phases of judgment. To investigate whether learning strategies differed by the type of metacognitive judgment, we analyzed only the written responses from the evaluating and planning phases, because in the monitoring phase participants reported key content of the text (e.g., bat species) rather than the learning strategies they used. Depending on the strategies mentioned, each response was classified into one of four categories: inference-focused strategies, memory-focused strategies, both, and others. Specifically, responses that included words such as “inference,” “application,” and “understanding” were classified as inference-focused strategies; responses that included words such as “remembering,” “memorization,” and “memory” were classified as memory-focused strategies; responses that included keywords from both categories were classified as both; and responses containing information that belonged to neither category (e.g., “I read the text in order”) were classified as others. Two coders independently scored all responses. Interrater reliability was high for both the evaluating (κ = 0.90) and planning phases (κ = 0.95). Any disagreements were resolved by the first author.
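The classification rule above is a simple decision procedure. The sketch below automates it with case-insensitive substring matching, using only the example keywords listed in the text; in the study, two human coders applied the scheme, so this is an illustrative assumption rather than the authors' coding tool. The keyword tuples and function name are hypothetical.

```python
# Hypothetical keyword lists, limited to the example words given in the text
INFERENCE_TERMS = ("inference", "application", "understanding")
MEMORY_TERMS = ("remembering", "memorization", "memory")


def classify_strategy(response: str) -> str:
    """Assign a written response to one of the four strategy categories."""
    text = response.lower()
    has_inference = any(term in text for term in INFERENCE_TERMS)
    has_memory = any(term in text for term in MEMORY_TERMS)
    if has_inference and has_memory:
        return "both"
    if has_inference:
        return "inference-focused"
    if has_memory:
        return "memory-focused"
    return "others"


for r in ["I relied on memorization of key terms",
          "Understanding the links, then memory for details",
          "I read the text in order"]:
    print(classify_strategy(r))
```

Plain substring matching misses synonyms (for example, "infer" does not contain "inference"), which is one reason human coding with a reliability check is the more defensible procedure.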

Table 1 shows the distribution of the learning strategies participants reported in the evaluating and planning phases. Chi-square tests were conducted to compare the distribution of learning-strategy categories between the two conditions (inference-based vs. memory-based metacognitive judgment). In the evaluating phase, which concerned the previously studied text, the two groups did not differ in the type of learning strategy reported, χ2 = 4.02, p = .26. In contrast, in the planning phase, which concerned the new text, the two groups differed significantly, χ2 = 16.62, p < .001. The inference-based judgment group was more likely to report that they would use inference-focused rather than memory-focused learning strategies (56.7% vs. 16.7%), whereas the memory-based judgment group was more likely to report that they would use memory-focused rather than inference-focused strategies (63.3% vs. 16.7%).
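A chi-square test of independence on a 2 (condition) × 4 (strategy category) contingency table has (2 − 1)(4 − 1) = 3 degrees of freedom. The text does not report df, but the reported values are consistent with df = 3: the upper-tail probability of χ2 = 4.02 with 3 df is about .26, matching the reported p. The sketch below runs the test with `scipy.stats.chi2_contingency` on hypothetical counts (not the values in Table 1) and then checks the reported statistics against the χ2 distribution.

```python
from scipy.stats import chi2, chi2_contingency

# Hypothetical counts (NOT Table 1). Rows: condition; columns: inference-focused,
# memory-focused, both, others. Each row sums to 30 participants.
observed = [
    [15, 6, 5, 4],   # inference-based judgment group
    [4, 18, 4, 4],   # memory-based judgment group
]
stat, p_value, dof, expected = chi2_contingency(observed)
print(f"chi2({dof}) = {stat:.2f}, p = {p_value:.4f}")

# Consistency check on the reported statistics, assuming df = 3
print(round(chi2.sf(4.02, df=3), 3))   # evaluating phase
print(chi2.sf(16.62, df=3) < 0.001)    # planning phase
```

With only 30 participants per group, some expected cell counts can be small, so the χ2 approximation should be interpreted with that in mind.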

Discussion

In Experiment 2, participants performed better on the retention test than on the transfer test in both Sections A and B, probably because memory questions were easier than inference questions. More importantly, the two sections showed different patterns of results. In Section A, all groups showed similar levels of retention and transfer performance: neither the inference-based nor the memory-based metacognitive judgment group performed better than the study-only group on the previously studied passage, indicating no backward effect of metacognitive judgments on text comprehension. In contrast, in Section B, the inference-based metacognitive judgment group outperformed the study-only group on both the retention and transfer tests, indicating a forward effect of inference-based metacognitive judgments on text comprehension. However, memory-based metacognitive judgments did not yield a significant benefit over the study-only condition, suggesting that the benefits of metacognitive judgments may depend on the learning goal highlighted while making them. Experiment 2 therefore partially replicated the findings of Experiment 1. Nevertheless, the most important finding, observed in both experiments, was that making inference-based metacognitive judgments on prior learning enhanced the learning of new material.

Additionally, we hypothesized that the learning differences among the conditions would result from the use of different strategies. To test this, Experiment 2 classified participants’ written responses in the evaluating and planning phases into inference- or memory-focused strategies. In the evaluating phase, when participants were prompted to report which learning strategies they had used for the previously studied text in Section A, the distribution of memory- versus inference-focused strategies did not differ significantly between the two groups. Overall, participants in both groups reported using more memory-focused strategies (overall M = 48.3%) than inference-focused strategies (overall M = 26.7%), which probably explains why we did not observe a performance difference between the two metacognitive judgment conditions for Section A. Conversely, in the planning phase, when participants were asked which strategies they would use for the new passage in Section B, the inference-based metacognitive judgment group was more likely than the memory-based group to report that they would use inference-focused learning strategies. Because this group also showed a forward effect on both retention and transfer performance in Section B, the positive effect of making inference-based metacognitive judgments may be due to the learning strategies participants adopted.

Meta-analysis

Some results were inconsistent between the two experiments. For example, inference-based metacognitive judgment was more beneficial than study-only for Section A in Experiment 1, but not in Experiment 2. However, Figs. 2 and 3 indicate that the rank order of mean performance among the three conditions was the same for both retention and transfer tests in the two experiments: the inference-based metacognitive judgment group always showed the best performance, the study-only group always showed the worst, and the memory-based judgment group fell in between. These identical ordinal patterns suggest that the inconsistency across experiments might reflect low statistical power in the individual experiments. Although we determined our sample sizes based on previous findings, our samples were only large enough to detect medium to large effects. Therefore, we conducted a mini meta-analysis to assess the overall effect of inference- and memory-based metacognitive judgments relative to the study-only control group. A mini meta-analysis is recommended for a small number of similar studies when findings are inconsistent across them (Goh et al., 2016).

For each experiment, we computed effect sizes (Cohen’s d) for the inference-based and memory-based metacognitive judgment conditions, with the study-only condition as the reference group, and then estimated the overall effect using a fixed-effects model. Table 2 summarizes the results of the meta-analysis. Across the two experiments, the overall effect of metacognitive judgment was significant in every case but one. Specifically, the overall effect of inference-based metacognitive judgment was always significant, with effect sizes ranging from medium to large (d = 0.67 to 1.16): the inference-based group outperformed the study-only group on both retention and transfer tests for both Sections A and B, indicating both forward and backward effects of inference-based metacognitive judgment. In contrast, although the overall effect of memory-based metacognitive judgment was significant for most tests, it was not significant for the retention test of Section A (d = 0.28); all other significant effect sizes were medium (d = 0.41 to 0.62). Overall, the meta-analytic results showed that making a metacognitive judgment enhanced learning, but its facilitative effect varied with the type of judgment. Making an inference-based metacognitive judgment, but not necessarily a memory-based one, was more beneficial than simply studying the material.
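A fixed-effects mini meta-analysis pools the per-experiment effect sizes using inverse-variance weights. The sketch below is one common formulation, assuming the large-sample variance of d, v = (n1 + n2)/(n1·n2) + d²/(2(n1 + n2)), a normal-theory 95% CI, and a two-tailed z test. The exact weighting used by the authors or recommended by Goh et al. (2016) may differ, and the inputs shown are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Experiment:
    d: float   # Cohen's d for judgment condition vs. study-only control
    n1: int    # judgment-condition sample size
    n2: int    # study-only sample size


def d_variance(d: float, n1: int, n2: int) -> float:
    """Large-sample sampling variance of Cohen's d."""
    return (n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2))


def fixed_effect(experiments: Sequence[Experiment]) -> dict:
    """Inverse-variance fixed-effect pooled d with 95% CI and two-tailed p."""
    weights = [1.0 / d_variance(e.d, e.n1, e.n2) for e in experiments]
    total_w = sum(weights)
    pooled = sum(w * e.d for w, e in zip(weights, experiments)) / total_w
    se = math.sqrt(1.0 / total_w)
    z = pooled / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return {"d": pooled, "ci": (pooled - 1.96 * se, pooled + 1.96 * se), "z": z, "p": p}


# Hypothetical effect sizes from two experiments with 30 participants per group
result = fixed_effect([Experiment(0.9, 30, 30), Experiment(0.7, 30, 30)])
print({k: (round(v, 3) if isinstance(v, float) else v) for k, v in result.items()})
```

Because the weights shrink as d grows, the pooled estimate sits slightly closer to the smaller effect when sample sizes are equal; a random-effects model would additionally estimate between-experiment heterogeneity.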

General discussion

The present research was the first to test both the forward and backward effects of metacognitive judgments on text comprehension. Although emerging research suggests that making metacognitive judgments can influence the learning of both the judged material and subsequently studied material, most studies of the backward effect of metacognitive judgments (e.g., JOL reactivity) used simple learning materials such as word pairs or lists (Janes et al., 2018; Kubik et al., 2022; Myers et al., 2020; Soderstrom et al., 2015). To the best of our knowledge, the only study that examined the direct influence of metacognitive judgments on text comprehension was conducted by Ariel et al. (2021), and it did not investigate forward effects. Moreover, most research on the forward effect of metacognitive judgments has focused on how monitoring influences subsequent study of the judged material, rather than on learning performance itself, by examining study behaviors such as study time allocation and restudy selection (e.g., Metcalfe & Finn, 2008; Thiede et al., 2003).

Backward effect of metacognitive judgments

For the backward effect, we hypothesized that making metacognitive judgments on studied text would facilitate the learning of that text. This hypothesis was based largely on research on JOL reactivity, which has found that making JOLs during learning can directly improve learning (Double et al., 2018; Janes et al., 2018; Soderstrom et al., 2015; Witherby & Tauber, 2017). Before making metacognitive judgments, learners make a covert retrieval attempt (Putnam & Roediger, 2013), which can improve memory by bringing incomplete information to mind (Spellman & Bjork, 1992). In our research, however, making metacognitive judgments on prior learning did not always enhance performance. In Experiments 1 and 2, participants in the memory-based judgment condition did not outperform the study-only condition on any test for Section A. Although the meta-analysis revealed a significant effect of memory-based judgment on transfer (d = 0.43), it failed to show a significant benefit for retention (d = 0.28). In contrast, the meta-analysis demonstrated significant medium-sized effects of inference-based metacognitive judgment on both retention (d = 0.67) and transfer (d = 0.69), although these effects were not consistently significant in the individual experiments.

There are three possible reasons why the backward effect of metacognitive judgment was not consistently observed in our research. First, participants may not have engaged in active retrieval of the studied material while making metacognitive judgments. Previous research suggests that metacognitive judgments provide learning benefits when learners covertly retrieve studied material (Jönsson et al., 2012; Kimball & Metcalfe, 2003; Spellman & Bjork, 1992). However, making JOLs does not always involve retrieval, especially when participants terminate the retrieval process prematurely (the truncated search hypothesis; Tauber et al., 2015). Likewise, in the present study, participants might not have retrieved the text material while making metacognitive judgments, resulting in no benefit for the previously studied text. Indeed, Ariel et al. (2021) demonstrated that simply instructing students to make standard JOLs (which do not evoke a retrieval attempt) did not enhance text comprehension; only when participants were prompted to retrieve text content before making JOLs did they learn the text better than the no-JOL control group.

Second, participants did not have an opportunity to regulate their learning of the already studied material (Thiede et al., 2003). Previous studies that reported positive backward effects of metacognitive judgments often used multiple lists of learning items, such as word lists (e.g., Soderstrom et al., 2015). Because participants make a JOL after each item, such a procedure likely allows them to adjust their learning strategies as learning progresses. In the present study, however, participants made metacognitive judgments only after the learning phase was finished and thus could not adjust their learning.

Finally, the procedure for making metacognitive judgments was experimenter-paced: participants were given a fixed amount of time for every step (i.e., monitoring, evaluating, and planning). Although this procedure equated the time spent across conditions, participants may not have had enough time to make high-quality metacognitive judgments. For instance, to decide how well they had learned, participants may have wanted to retrieve the studied material; the limited time may have prevented active retrieval and encouraged them simply to give the required response (e.g., a number from 0–100%). In addition, the experimenter-paced procedure made it impossible to observe learners’ self-regulation. The forward and backward effects of metacognitive judgments might be larger in self-regulated learning environments, so future studies should investigate how learners allocate study time and adjust study strategies depending on the type of metacognitive judgment.

Forward effect of metacognitive judgments

For the forward effect of making metacognitive judgments, we hypothesized that eliciting metacognitive judgments on studied text materials would improve learning of new text material. Consistent with our hypothesis, the inference-based metacognitive judgment group always outperformed the study-only group in both the retention and transfer tests of Section B of Experiments 1 and 2. The meta-analysis also revealed a significant large-sized effect of the inference-based judgment for retention (d = 0.82) and transfer (d = 1.16). This finding is consistent with a great deal of research showing that metacognitive judgments influence students’ behavior, thus resulting in performance enhancement (Metcalfe & Finn, 2008; Thiede et al., 2003). For example, Lee and Ha (2019) revealed the forward effects of metacognitive judgments in inductive learning. When participants made category-level or global metacognitive judgments on previously learned categories, they showed better classification performance for the newly studied categories than those who did not make metacognitive judgments.

However, not all types of metacognitive judgment seem equally effective at enhancing learning. Memory-based metacognitive judgment did not show a significant benefit over the study-only condition in Experiment 2. Although the meta-analytic results revealed significant benefits of memory-based judgment for both retention (d = 0.62) and transfer (d = 0.41), these effect sizes were smaller than those of inference-based metacognitive judgment.

We conjecture that the greater benefit of inference-based metacognitive judgment arose because participants in this condition set a higher learning goal and thus used more effective learning strategies in their subsequent study. Learners adjust their learning strategies (Jensen et al., 2014) and vary their levels of processing (Thiede et al., 2011) depending on the type of test. Likewise, asking learners to make higher-level metacognitive judgments might encourage them to use study strategies that connect different pieces of information and develop deeper understanding. Indeed, in the current study, more than half of the inference-based metacognitive judgment group (56.7%) reported that they would use study strategies such as “organize the text materials” and “link the text content to the prior knowledge” for the new text material. These results suggest that making metacognitive judgments with a high-level learning goal leads learners to use study strategies that evoke deeper cognitive processing and greater effort, resulting in larger learning gains than in the control group.

Learning goal of metacognitive judgments

We examined whether learning would improve when the level of the learning goal (memory vs. inference) matched the kind of learning required by the final test (retention vs. transfer). Although previous studies on metacognitive judgments suggested that learning goals influence the use of learning strategies and outcomes (Winne & Nesbit, 2010), the effect of different types of learning goals had not been directly investigated. Studies of the test expectancy effect have shown that students adjust their study strategies to match the demands of the final test (Finley & Benjamin, 2012; Rouet et al., 2001). Accordingly, one might expect the memory-based and inference-based metacognitive judgment groups to perform better on retention and transfer tests, respectively. However, the memory-based metacognitive judgment group did not outperform the inference-based group on any retention test. Rather, the meta-analysis demonstrated that inference-based metacognitive judgment was always more beneficial than the study-only control for both retention and transfer, indicating a cascading effect, whereas memory-based metacognitive judgment was not always more beneficial than the study-only control. This finding is consistent with many previous studies reporting that higher-level quizzes not only enhance transfer performance but also facilitate memory performance (Jensen et al., 2014; McDaniel et al., 2013; Roelle et al., 2019). For example, Jensen et al. (2014) reported that students who took high-level quizzes performed better on both low-level and high-level final tests than those who took low-level quizzes.

One possible reason for this effect, found in both the current and previous studies, is that high-level learning requires mastery of low-level knowledge. The revised Bloom’s taxonomy (Anderson et al., 2001) suggests that cognitive processes are ordered in steps: higher-order learning (e.g., applying, analyzing, evaluating, and creating) requires mastering lower-order learning (e.g., remembering and understanding). To use and apply what they have learned, students probably must first memorize the basic terms and link this new knowledge to their prior knowledge. Consequently, participants who were given an inference goal may use more elaboration strategies, which help both retention and transfer.

Indeed, when we asked participants to report their learning strategies while making metacognitive judgments, the response patterns differed considerably between the memory-based and inference-based metacognitive judgment groups. In the evaluating phase, many participants in both metacognitive judgment conditions reported that they had mainly used memory-focused study strategies for Section A; for example, many reported that they had tried to memorize keywords in the text (e.g., features of megabat species, main ingredients of bread). In the planning phase, however, many participants in the inference-based condition said they would change their strategies from memorizing to elaborating, understanding, and inferring for the new passage, whereas participants in the memory-based condition said they would not change their strategies and would continue memorizing. This suggests that when participants are given a specific learning goal, they regulate their study according to that goal. Specifically, providing a high-level goal appears to orient learners toward strategies appropriate for achieving both high-level and low-level learning.

Although it is clear that the learning goal should be considered when eliciting metacognitive judgments, the present study admittedly cannot determine whether the learning benefit was due to making metacognitive judgments per se or simply to the learning goal provided in the metacognitive judgment conditions. Future studies could include new control conditions in which only an inference or memory learning goal is provided, without requiring metacognitive judgments. Comparing these two control conditions with the inference- and memory-based metacognitive judgment conditions could clarify the relationship between metacognitive judgments and learning.

Conclusion and implications

In summary, the present research suggests that metacognitive judgments on prior learning can potentiate learning and that the benefits of metacognitive judgments can differ depending on the type of learning goal. These findings carry two important messages for metacognitive researchers. First, metacognitive researchers need to be more cautious about asking learners to provide metacognitive ratings such as JOLs. Making metacognitive judgments may not be a neutral act of evaluating one’s learning; rather, it can directly affect subsequent learning outcomes, and possibly previous learning, especially when it involves active retrieval. Although various education researchers have demonstrated the benefits of metacognitive instruction on subsequent learning, their instruction often involved multiple training sessions and a set of elaborate retrospective questions or prompts (e.g., Safari & Meskini, 2016). The metacognitive prompts used in the present study, however, required no training. They were simple and generic enough to be used with any type of text material. These simple questions affected learners’ study behavior (i.e., study strategies) and, in turn, facilitated learning of new text material even though learners were not free to allocate their own study time. These findings suggest that researchers should be aware that the simple act of evaluating one’s learning may itself affect learning outcomes.

Second, metacognitive researchers also need to be careful about which learning goal is implied when asking participants to provide metacognitive evaluations. Our learning goal manipulation appeared to guide learners to use different learning strategies in their subsequent study of new materials, so that they could achieve different levels of learning. After making a metacognitive judgment, the inference-based metacognitive judgment group appeared to shift its learning strategy from memorization to understanding, whereas the memory-based metacognitive judgment group seemed to continue using a memorization strategy. Our findings suggest that the type of learning goal can drive differences in study strategies. Accordingly, the way in which participants are asked to evaluate their learning may encourage them to employ certain strategies in their subsequent study and thereby affect learning performance.

Although the current study offers cautionary messages for metacognitive researchers, from an educational perspective it also suggests that the simple act of making metacognitive judgments can enhance learning. Without any training, our simple questioning method facilitated retention and transfer performance, especially when students considered inference as their learning goal. Although we generally agree that students need questions at all knowledge levels (e.g., in Bloom’s taxonomy: knowledge, comprehension, and application) for learning (Halis, 2002), the present study suggests that the effectiveness of metacognitive judgments may vary depending on the level of the goal. Metacognitive judgments made relative to high-level learning goals may be enough to enhance learning at all knowledge levels, for both previously and newly studied material. That is, when people make judgments of learning relative to a higher-level learning goal, such as inference, rather than a lower-level learning goal, such as memory, they seem to remember more and achieve a better understanding of the subsequently learned texts. To facilitate the learning of new content, teachers may want to encourage their students to evaluate their learning status against higher-level learning goals.

Data availability

The data for all experiments have been made available at https://osf.io/abhvu/?view_only=b90eae66055b450cab7920c356708b26. None of the experiments were preregistered.

Notes

We thank Andrew C. Butler at Washington University in St. Louis for providing the original text material used in this study (https://doi.org/10.1037/a0019902.supp).

References

Ackerman, R., & Goldsmith, M. (2011). Metacognitive regulation of text learning: On screen versus on paper. Journal of Experimental Psychology: Applied, 17(1), 18–32. https://doi.org/10.1037/a0022086

Anderson, L. W., Krathwohl, D. R., Airasian, P. W., Cruikshank, K. A., Mayer, R. E., Pintrich, P. R., & Wittrock, M. C. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s taxonomy of educational objectives (abridged edition). Addison Wesley Longman.

Ariel, R., Karpicke, J. D., Witherby, A. E., & Tauber, S. K. (2021). Do judgments of learning directly enhance learning of educational materials? Educational Psychology Review, 33(2), 693–712. https://doi.org/10.1007/s10648-020-09556-8

Bannert, M., Hildebrand, M., & Mengelkamp, C. (2009). Effects of a metacognitive support device in learning environments. Computers in Human Behavior, 25, 829–835. https://doi.org/10.1016/j.chb.2008.07.002

Bannert, M., & Reimann, P. (2012). Supporting self-regulated hypermedia learning through prompts. Instructional Science, 40(1), 193–211. https://doi.org/10.1007/s11251-011-9167-4

Bjork, R. A., Dunlosky, J., & Kornell, N. (2013). Self-regulated learning: Beliefs, techniques, and illusions. Annual Review of Psychology, 64(1), 417–444. https://doi.org/10.1146/annurev-psych-113011-143823

Butler, A. C. (2010). Repeated testing produces superior transfer of learning relative to repeated studying. Journal of Experimental Psychology: Learning, Memory, and Cognition, 36(5), 1118–1133. https://doi.org/10.1037/a0019902

Butler, D. L. (1994). From learning strategies to strategic learning: Promoting self-regulated learning by postsecondary students with learning disabilities. Canadian Journal of Special Education, 9(3–4), 69–101.

Butler, D. L., & Winne, P. H. (1995). Feedback and self-regulated learning: A theoretical synthesis. Review of Educational Research, 65(3), 245–281. https://doi.org/10.3102/00346543065003245

Choi, H., & Lee, H. S. (2020). Knowing is not half the battle: The role of actual test experience in the forward testing effect. Educational Psychology Review, 32(3), 765–789. https://doi.org/10.1007/s10648-020-09518-0

Cohen, J. (1960). A coefficient of agreement for nominal scales. Educational and Psychological Measurement, 20(1), 37–46. https://doi.org/10.1177/001316446002000104

Daumiller, M., & Dresel, M. (2019). Supporting self-regulated learning with digital media using motivational regulation and metacognitive prompts. The Journal of Experimental Education, 87(1), 161–176. https://doi.org/10.1080/00220973.2018.1448744

Dobson, J. L., Linderholm, T., & Stroud, L. (2019). Retrieval practice and judgements of learning enhance transfer of physiology information. Advances in Health Sciences Education, 24(3), 525–537. https://doi.org/10.1007/s10459-019-09881-w

Double, K. S., Birney, D. P., & Walker, S. A. (2018). A meta-analysis and systematic review of reactivity to judgements of learning. Memory, 26(6), 741–750. https://doi.org/10.1080/09658211.2017.1404111

Double, K. S., & Birney, D. P. (2019). Reactivity to measures of metacognition. Frontiers in Psychology, 10, 1–12. https://doi.org/10.3389/fpsyg.2019.02755

Dougherty, M. R., Scheck, P., Nelson, T. O., & Narens, L. (2005). Using the past to predict the future. Memory & Cognition, 33, 1096–1115. https://doi.org/10.3758/BF03193216

Dunlosky, J., & Lipko, A. R. (2007). Metacomprehension: A brief history and how to improve its accuracy. Current Directions in Psychological Science, 16(4), 228–232. https://doi.org/10.1111/j.1467-8721.2007.00509.x

Dunlosky, J., & Nelson, T. O. (1992). Importance of the kind of cue for judgments of learning (JOL) and the delayed-JOL effect. Memory & Cognition, 20(4), 374–380. https://doi.org/10.3758/BF03210921

Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., & Willingham, D. T. (2013). Improving students’ learning with effective learning techniques: Promising directions from cognitive and educational psychology. Psychological Science in the Public Interest, 14(1), 4–58. https://doi.org/10.1177/1529100612453266

Faul, F., Erdfelder, E., Lang, A. G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–191. https://doi.org/10.3758/BF03193146

Finley, J. R., & Benjamin, A. S. (2012). Adaptive and qualitative changes in encoding strategy with experience: Evidence from the test-expectancy paradigm. Journal of Experimental Psychology: Learning, Memory, and Cognition, 38(3), 632–652. https://doi.org/10.1037/a0026215

Goh, J. X., Hall, J. A., & Rosenthal, R. (2016). Mini meta-analysis of your own studies: Some arguments on why and a primer on how. Social and Personality Psychology Compass, 10(10), 535–549. https://doi.org/10.1111/spc3.12267

Halis, İ. (2002). Instructional technologies and material development. Nobel Press.

Janes, J. L., Rivers, M. L., & Dunlosky, J. (2018). The influence of making judgments of learning on memory performance: Positive, negative, or both? Psychonomic Bulletin & Review, 25(6), 2356–2364. https://doi.org/10.3758/s13423-018-1463-4

Jensen, J. L., McDaniel, M. A., Woodard, S. M., & Kummer, T. A. (2014). Teaching to the test… or testing to teach: Exams requiring higher order thinking skills encourage greater conceptual understanding. Educational Psychology Review, 26(2), 307–329. https://doi.org/10.1007/s10648-013-9248-9

Jönsson, F. U., Hedner, M., & Olsson, M. J. (2012). The testing effect as a function of explicit testing instructions and judgments of learning. Experimental Psychology, 59, 251–257. https://doi.org/10.1027/1618-3169/a000150

Joughin, G. (2010). The hidden curriculum revisited: A critical review of research into the influence of summative assessment on learning. Assessment & Evaluation in Higher Education, 35(3), 335–345. https://doi.org/10.1080/02602930903221493

Kimball, D. R., & Metcalfe, J. (2003). Delaying judgments of learning affects memory, not metamemory. Memory & Cognition, 31(6), 918–929. https://doi.org/10.3758/BF03196445

Kubik, V., Koslowski, K., Schubert, T., & Aslan, A. (2022). Metacognitive judgments can potentiate new learning: The role of covert retrieval. Metacognition and Learning, 1–21. https://doi.org/10.1007/s11409-022-09307-w

Lee, H. S., & Ahn, D. (2018). Testing prepares students to learn better: The forward effect of testing in category learning. Journal of Educational Psychology, 110(2), 203–217. https://doi.org/10.1037/edu0000211

Lee, H. S., & Ha, H. (2019). Metacognitive judgments of prior material facilitate the learning of new material: The forward effect of metacognitive judgments in inductive learning. Journal of Educational Psychology, 111(7), 1189–1201. https://doi.org/10.1037/edu0000339

Lin, X., Hmelo, C., Kinzer, C. K., & Secules, T. J. (1999). Designing technology to support reflection. Educational Technology Research and Development, 47(3), 43–62. https://doi.org/10.1007/BF02299633

Lin, X., & Lehman, J. D. (1999). Supporting learning of variable control in a computer-based biology environment: Effects of prompting college students to reflect on their own thinking. Journal of Research in Science Teaching, 36(7), 837–858. https://doi.org/10.1002/(SICI)1098-2736(199909)36:7<837::AID-TEA6>3.0.CO;2-U

McDaniel, M. A., Thomas, R. C., Agarwal, P. K., McDermott, K. B., & Roediger, H. L. (2013). Quizzing in middle-school science: Successful transfer performance on classroom exams. Applied Cognitive Psychology, 27(3), 360–372. https://doi.org/10.1002/acp.2914

Metcalfe, J., & Finn, B. (2008). Evidence that judgments of learning are causally related to study choice. Psychonomic Bulletin & Review, 15(1), 174–179. https://doi.org/10.3758/PBR.15.1.174

Mevarech, Z. R., & Kramarski, B. (2003). The effects of metacognitive training versus worked-out examples on students’ mathematical reasoning. British Journal of Educational Psychology, 73(4), 449–471. https://doi.org/10.1348/000709903322591181

Middlebrooks, C. D., Murayama, K., & Castel, A. D. (2017). Test expectancy and memory for important information. Journal of Experimental Psychology: Learning, Memory, and Cognition, 43(6), 972–985. https://doi.org/10.1037/xlm0000360

Mitchum, A. L., Kelley, C. M., & Fox, M. C. (2016). When asking the question changes the ultimate answer: Metamemory judgments change memory. Journal of Experimental Psychology: General, 145(2), 200–219. https://doi.org/10.1037/a0039923

Myers, S. J., Rhodes, M. G., & Hausman, H. E. (2020). Judgments of learning (JOLs) selectively improve memory depending on the type of test. Memory & Cognition, 48(5), 745–758. https://doi.org/10.3758/s13421-020-01025-5

Nelson, T. O., & Dunlosky, J. (1991). When people’s judgments of learning (JOLs) are extremely accurate at predicting subsequent recall: The “delayed-JOL effect.” Psychological Science, 2(4), 267–271. https://doi.org/10.1111/j.1467-9280.1991.tb00147.x

Nelson, T. O., & Narens, L. (1990). Metamemory: A theoretical framework and new findings. In G. H. Bower (Ed.), The psychology of learning and motivation (Vol. 26, pp. 125–173). Academic Press.

Nguyen, K., & McDaniel, M. A. (2016). The JOIs of text comprehension: Supplementing retrieval practice to enhance inference performance. Journal of Experimental Psychology: Applied, 22, 59–71. https://doi.org/10.1037/xap0000066

Pastötter, B., & Bäuml, K. H. T. (2014). Retrieval practice enhances new learning: The forward effect of testing. Frontiers in Psychology, 5, 1–5. https://doi.org/10.3389/fpsyg.2014.00286

Putnam, A. L., & Roediger, H. L. (2013). Does response mode affect amount recalled or the magnitude of the testing effect? Memory & Cognition, 41(1), 36–48. https://doi.org/10.3758/s13421-012-0245-x

Rivers, M. L., & Dunlosky, J. (2021). Are test-expectancy effects better explained by changes in encoding strategies or differential test experience? Journal of Experimental Psychology: Learning, Memory, and Cognition, 47(2), 195–207. https://doi.org/10.1037/xlm0000949

Roediger, H. L., & Karpicke, J. D. (2006). The power of testing memory: Basic research and implications for educational practice. Perspectives on Psychological Science, 1(3), 181–210. https://doi.org/10.1111/j.1745-6916.2006.00012.x

Roelle, J., Roelle, D., & Berthold, K. (2019). Test-based learning: Inconsistent effects between higher- and lower-level test questions. The Journal of Experimental Education, 87(2), 299–313. https://doi.org/10.1080/00220973.2018.1434756

Rouet, J., Vidal-Abarca, E., Erboul, A. B., & Millogo, V. (2001). Effects of information search tasks on the comprehension of instructional text. Discourse Processes, 31(2), 163–186. https://doi.org/10.1207/S15326950DP3102_03

Safari, Y., & Meskini, H. (2016). The effect of metacognitive instruction on problem solving skills in Iranian students of health sciences. Global Journal of Health Science, 8(1), 150–156. https://doi.org/10.5539/gjhs.v8n1p150

Schraw, G. (1998). Promoting general metacognitive awareness. Instructional Science, 26(1/2), 113–125. https://doi.org/10.1023/A:1003044231033

Soderstrom, N. C., Clark, C. T., Halamish, V., & Bjork, E. L. (2015). Judgments of learning as memory modifiers. Journal of Experimental Psychology: Learning, Memory, and Cognition, 41(2), 553–558. https://doi.org/10.1037/a0038388