Abstract

Credit network configurations play a crucial role in determining the vulnerability of the economic system. Following the network-based financial accelerator approach, we constructed an agent-based model reproducing an artificial credit network that evolves endogenously according to the leverage choices of heterogeneous firms and banks. Our work aims at defining both early warning indicators for crises and precautionary policy measures based on the endogenous credit network dynamics. The model is calibrated on a sample of firms and banks listed on the Japanese stock-exchange markets from 1980 to 2012. Both empirical and simulated data suggest that credit and connectivity variations could be used as early warning measures for crises. Moreover, capital-related macro-prudential policies that target banks that are central in the credit network in terms of size and connectivity may reduce systemic vulnerability without affecting aggregate output.

1 Introduction

Since the 2007 crisis, systemic risk and macro-prudential policies have been at the center of the economic debate (Basel Committee 2010; Yellen 2011; Ghosh and Canuto 2013; MARS 2014). Several studies on interbank markets and credit networks show that network configurations play a crucial role in determining the vulnerability of the economic system (Allen and Gale 2000; Iori et al. 2006; Battiston et al. 2012; Caccioli et al. 2012; Allen et al. 2012; Palestrini 2013; Battiston et al. 2016).

By simulating an endogenously evolving credit network, we aim to gain insights into the relation between network configurations and systemic risk in order to select early warning indicators for crises and define precautionary policy measures.

Following the network-based financial accelerator approach (Delli Gatti et al. 2010; Riccetti et al. 2013; Catullo et al. 2015), we constructed a macroeconomic agent-based model (Delli Gatti et al. 2005; Delli Gatti et al. 2010; Dosi et al. 2010) reproducing an artificial credit network, which evolves endogenously through the individual demand and supply of loans of heterogeneous firms and banks. We modified both the learning and the credit matching mechanisms of the Catullo et al. (2015) model in order to increase the stability of individual leverage choices and to reduce the inertia of the network configuration dynamics. Moreover, adopting the methodology followed by Schularick and Taylor (2012), we isolated early warning indicators for crises (Alessi and Detken 2011; Babecky et al. 2011; Betz et al. 2014; Drehmann and Juselius 2014; Alessi et al. 2015) and we simulated different policy scenarios using capital-related macro-prudential policies (IMF 2011; Claessens 2014; MARS 2014; Angeloni 2014).

The model defines a simple interaction structure between firms and banks. Firms fund production through internal resources and by borrowing money from banks. By increasing their leverage, firms are able to raise their production level and thus boost expected revenues. However, firm revenues are influenced by idiosyncratic shocks. Consequently, if a firm increases its leverage, its expected profits will increase. At the same time, however, the firm’s exposure to negative shocks will rise along with its failure probability (Greenwald and Stiglitz 1993; Delli Gatti et al. 2005; Riccetti et al. 2013). Moreover, high levels of target leverage are associated with high interest rates on loans and a high probability of suffering credit rationing. Similarly, for banks, high levels of leverage increase expected profits but also raise their exposure to firm failures.

Therefore, firms and banks face a trade-off between increasing their leverage to raise expected profit and reducing their exposure to contain failure probability (Riccetti et al. 2013; Catullo et al. 2015). In an attempt to earn satisfactory profits without being exposed to excessive risks, firms and banks choose their target level of leverage through a simple reinforcement learning procedure (Tesfatsion 2005; Catullo et al. 2015). Consequently, by modifying individual credit demand and supply, agents’ target leverage choices determine the evolution of the credit network.

We calibrated the model on a sample of firms and banks quoted on the Japanese stock-exchange markets from 1980 to 2012 (Marotta et al. 2015), reproducing the levels of leverage, connectivity and output volatility observed in the empirical dataset.

Model simulations generate endogenous pro-cyclical fluctuations of credit and connectivity. During expansions, banks lend to relatively robust firms and therefore suffer few losses from firm failures, so they are able to increase their net-worth. Consequently, the banks’ supply of loans increases, which makes borrowing easier for firms. Thus, both firm leverage and the integration of the credit network tend to increase. However, the default risk increases with leverage, and high connectivity may foster the diffusion of the negative effects of firm and bank failures (Delli Gatti et al. 2010; Riccetti et al. 2013; Catullo et al. 2015). Indeed, aggregate credit, leverage and connectivity are positively correlated with the number of firm failures. Therefore, during expansionary phases the growth of credit, leverage and connectivity may create the conditions for future recessions and crises (Minsky 1986).

Indeed, following the methodology developed by Schularick and Taylor (2012), we found that both credit and connectivity growth rates are positively correlated with crisis probability and that they are effective early warning measures in both empirical and simulated data.

Moreover, the model is suitable for designing macro-prudential policies that exploit agent heterogeneity and the network’s interaction structure. Indeed, capital-related measures that force banks to avoid lending to more indebted firms may decrease output volatility without causing substantial credit reductions and, thus, output contractions.

We also tested permanent capital-related measures applied to larger and more connected banks only. When interventions target banks that are relatively central in the credit network in terms of size and connections, the vulnerability of the economic system may be substantially reduced without affecting aggregate credit supply and output. Thus, the analysis of credit network connectivity may be useful for assessing the emergence of systemic risk. Besides, agent-based models that endogenize credit network dynamics may be used for testing the effectiveness of early warning indicators and the effects of different macro-prudential policies.

The paper is structured as follows. The next section describes the agent-based model: the agents’ behavioral assumptions, the matching mechanism between banks and firms, and leverage decisions. The third section illustrates the simulation results. First, we focus on the patterns of calibrated simulations. Second, we test the effectiveness of connectivity measures as early warning indicators. Third, we implement simple macro-prudential capital-related measures. The last section contains our conclusions.

2 The model

Our artificial economy is populated by M banks and N heterogeneous firms. Firms produce a homogeneous good using capital as the only input; they fund production through their net-worth and bank loans. We assume that, in each period, both banks and firms try to reach a target leverage level (Adrian and Shin 2010; Riccetti et al. 2013; Aymanns et al. 2016) chosen according to a reinforcement learning mechanism based on Tesfatsion (2005).

2.1 Firms

Firms use capital (\(K_{it}\)) to produce output through a non-linear production function:

The firm’s balance sheet is:

Capital is given by net-worth (\(E_{it}\)), loans contracted at time t (\(L_{it}\)) and the share of the loans borrowed at time \(t-1\) that have not been repaid yet (\(\phi L_{it-1}\)). Firms can receive loans from more than one bank, thus the total amount borrowed by a firm is given by the sum of the loans received from its lending banks (z):

In each period the firms fix a target leverage level (\(\lambda _{it}\)) so that the loan demand (\({L_{it}}^d\ge 0\)) derives from the target leverage chosen:

with the target leverage equal to:

The higher the target leverage, the higher the level of indebtedness. If \(\lambda _{it}=1\) the capital is financed completely by internal sources. Each period, target leverage (\(\lambda _{it}\)) is chosen following the reinforcement learning algorithm described in Sect. 2.4. Firms can choose their leverage strategy (\(\lambda _{it}\)) among a given discrete set of strategies \(\varLambda _f\), with \(\lambda _{it}\ge 1\). We assume that \(\lambda _{it}\) can not be greater than a given maximum value, which represents the maximum level of risk a firm is allowed to take.
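As a minimal sketch of how a target leverage translates into a loan demand, the snippet below assumes that target leverage is the ratio of capital to net-worth, which is consistent with \(\lambda _{it}=1\) meaning fully internal financing. Desired capital is then \(\lambda _{it}E_{it}\), and the new loans requested are what remains after net-worth and the unpaid share of past loans, floored at zero. The function and argument names are illustrative assumptions.

```python
def loan_demand(net_worth, target_leverage, outstanding_loans, phi):
    """New loans a firm requests in order to reach its target leverage.

    Assumption: target leverage = capital / net worth, so the desired
    capital stock is target_leverage * net_worth.
    """
    if target_leverage < 1:
        raise ValueError("target leverage must be at least 1")
    desired_capital = target_leverage * net_worth
    # Share of last period's loans that has not been repaid yet.
    inherited_debt = phi * outstanding_loans
    # Loan demand cannot be negative.
    return max(0.0, desired_capital - net_worth - inherited_debt)
```

For instance, a firm with net-worth 100, target leverage 3, past loans of 40 and \(\phi =0.5\) would ask for 180, whereas with target leverage 1 it would ask for nothing.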

The interest rate (\(r_{it}\)) associated with each loan is a function of the firm’s target leverage and the interest rate (r) paid by banks on deposits. The \(\eta \) parameter is a measure of the sensitivity of banks to the borrower’s risk (\(\eta >0\)):

Profits derive from the difference between revenues (\(u_{it}Y_{it}\)) and costs, which are in turn given by the sum of interest on loans (\(r_{it}L_{it}+\phi r_{it-1}L_{it-1}\)) and a fixed cost (F).

Net revenues (\(u_{it}Y_{it}\)) depend on the stochastic value \(u_{it}\), which represents uncertain events that are not explicitly modeled (Greenwald and Stiglitz 1993; Riccetti et al. 2013):

Thus, net revenues are given by both a fixed component (m) and a stochastic one representing idiosyncratic shocks on firms’ revenues (\(\epsilon _{it}\)). Since the expected value of \(\epsilon _{it}\) is zero, the expected marginal net revenue is equal to m.

A part of the profits is not accumulated (\(\tau \pi _{it}\), \(0<\tau <1\)), thus net-worth becomes:

2.2 Banks

Banks supply loans (\(L_{zt}\)) through their net-worth (\(E_{zt}\)) and deposits (\(D_{zt}\)): the banks’ balance sheet is given by \(L_{zt}=D_{zt}+E_{zt}\). Banks establish the level of credit supply following the same reinforcement learning algorithm used by firms, choosing a level of target leverage \(\lambda _{zt}\) from a discrete set of values (\(\varLambda _b\)). Deposits (\(D_{zt}\)) are computed as the residual between loans (\(L_{zt}\)) and net-worth (\(E_{zt}\)). The potential supply of new credit is reduced by the sum of the loans to firms i (\(i \in I_{zt-1}\)) that have not yet matured.

Thus, as for firms, riskier leverage strategies correspond to higher levels of \(\lambda _{zt}\). Indeed, the higher \(\lambda _{zt}\), the higher the supply of loans that is not covered by net-worth (\(E_{zt}\)) but relies on deposits (\(D_{zt}\)). We assume that banks have a maximum level of target leverage because of prudential reasons and in conformity with international credit agreements (Basel’s agreements). Moreover, for prudential reasons, a bank can provide to a single firm only a fraction of its supplied loans according to the parameter \(\zeta \), hence \(\zeta {L_{zt}}^s\) is the maximum amount of loan that can be offered to a single firm.

Bank revenues are given by the interest paid on loans by borrowers at time \(t-1\) (\(i \in I_{zt-1}\)) and borrowers at time t (\(i \in I_{zt}\)). Bad debts (\(BD_{zt}\) and \(BD_{zt-1}\)) are costs for banks: they are given by loans that are not paid back because of the failure of the borrowing firms. Moreover, banks have to pay a given interest rate r on deposits along with a fixed cost (F).

A part of the profits is not accumulated (\(\tau \pi _{zt}\), \(0<\tau <1\)), thus the net-worth (\(E_{zt}\)) becomes:

2.3 Matching among banks and firms

Each period, banks and firms establish their supply of and demand for loans, respectively, by choosing their target leverage. Each bank offers loans to demanding firms until its supply is exhausted. Firms, in turn, may borrow from different banks until their loan demand is satisfied. Thus, a firm can be linked with more than one bank at a time.

First, firms ask for loans from the banks they are already linked with. Firm i asks each linked bank (z) for a fraction (\(L_{izt}^d\)) of its total demand for loans (\(L_{it}^d\)); this fraction is proportional to the credit provided by bank z to firm i in the previous period (\(L_{izt-1}\)) divided by the total credit received by firm i at time \(t-1\) (\(L_{it-1}\)).
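The allocation of a firm's demand across its linked banks can be sketched as follows. This is an illustrative reading of the rule above, in which each linked bank receives a request equal to the firm's total demand times that bank's share of last period's credit; the function name and the dictionary representation of the firm's credit links are assumptions.

```python
def split_demand(total_demand, previous_loans):
    """Split a firm's total loan demand across its linked banks.

    previous_loans maps a bank identifier to the credit the firm
    received from that bank in the previous period.
    """
    total_previous = sum(previous_loans.values())
    if total_previous <= 0:
        # No existing credit links: the firm has to approach new banks.
        return {}
    return {
        bank: total_demand * amount / total_previous
        for bank, amount in previous_loans.items()
    }
```

A firm that borrowed 20 from bank a and 10 from bank b last period, and now demands 90, would ask a for 60 and b for 30.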

If the loans received by firm i are lower than the amount requested from its linked banks, firm i will ask for loans from other, randomly chosen banks.

A bank can deny loans to riskier firms: the probability (\(p_R\)) that the demand for loans of firm i is not accepted increases with the firm’s target leverage (\(\lambda _{it}\)):

If the bank loan supply is lower than the sum of the accepted demands of the linked firms, the bank grants to each firm a loan proportional to its demand. Thus, the loan given to firm i, in the set of the j linked firms (\(I_a\)) is given by:
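The proportional rationing rule just described can be sketched as follows. The snippet is illustrative only: names are assumptions, and the single-borrower cap \(\zeta {L_{zt}}^s\) and the rejection probability \(p_R\) are deliberately left out.

```python
def allocate_loans(supply, accepted_demands):
    """Allocate a bank's loan supply among firms whose demand was accepted.

    accepted_demands maps a firm identifier to its accepted loan demand.
    """
    total_demand = sum(accepted_demands.values())
    if total_demand <= supply:
        # Supply covers everything: each accepted demand is met in full.
        return dict(accepted_demands)
    # Supply is short: each firm gets a share proportional to its demand.
    return {
        firm: supply * demand / total_demand
        for firm, demand in accepted_demands.items()
    }
```

With a supply of 60 and accepted demands of 80 and 40, the two firms would receive 40 and 20; any supply left over when demand is fully met remains available for other firms.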

On the contrary, when credit supply is higher than the accepted demand for loans, a bank may provide credit to other firms.

Therefore, the credit network evolves according to the individual demand and supply of loans. A new credit link is established when the loan demand of a firm is accepted by a bank with which the firm was not previously linked, while the credit link between a bank and a firm is cut when:

1. The firm or the bank fails;

2. The firm or the bank does not ask/offer loans at time t;

3. The bank refuses to provide loans because the firm is considered too risky.

2.4 Leverage choice

In each period, the agents (both banks and firms) choose a target leverage level (\(\lambda _{xt}\)). The target leverage (\(\lambda _{xt}\)) is chosen among a limited set of values (\(\varLambda \), namely \(\varLambda _f\) for firms and \(\varLambda _b\) for banks). The set of possible levels of leverage is limited for banks because they have to respect capital requirements (in line with Basel’s Agreements). It is limited for firms because we assume that banks do not provide credit to highly indebted firms in order to reduce bad debts.

The choice mechanism is based on the Tesfatsion (2005) reinforcement learning algorithm. In each period, firms and banks choose one of the possible levels of leverage. At the beginning of the following period, the agents observe the result of their choices, i.e., the profit (\(\pi _{xt-1}\)) received. In this section, we denote the past profit \(\pi _{xt-1}\) as \(\pi _{xst-1}\) to underline that it is the profit deriving from the choice of a particular leverage strategy, i.e. a particular value of \(\lambda _{s}\) at time \(t-1\) for agent x. The profit received in the previous period, when the target leverage \(\lambda _s\) was chosen, is used to update \(q(\lambda _s)_{xt}\):

The strength of the agent’s memory is given by the parameter \(\chi \). At the beginning of each period, the effectiveness of every leverage level \(q(\lambda _s)_{xt-1}\) is reduced by a small percentage (\(\xi \)): \(q(\lambda _s)^F_{xt-1}=(1-\xi )q(\lambda _s)_{xt-1}\), where \(\xi \) represents the extent of ‘forgetting processes’.

Agents may choose among a restricted set of possible levels of leverage (\(\varLambda _a \subseteq \varLambda \)). In fact, since loans have a two-period maturity, agents also have to consider their past debts, which lead to a certain level of leverage inherited from past loans. Moreover, firms would not choose levels of leverage that generate costs higher than the expected profits. Since the production function is concave, the higher the level of capital used, the lower the marginal production and thus the marginal value of expected profit, while financial costs increase linearly with leverage. According to Eqs. 1, 5 and 6, it is convenient to take a certain level of leverage if the associated loan cost (\(r_{it}L_{it}^D\)) is lower than gains, \(m \rho (K+L)^\beta -\rho (K)\). Therefore, firms will choose among a reduced set of target leverage levels, which may not include lower levels of \(\lambda _{xt}\) because of the debt inherited from the past. At the same time, this set excludes higher levels of \(\lambda _{xt}\) because at higher levels of leverage financial costs may exceed expected profits. Banks’ leverage choices are reduced only if constrained by previous-period loans (Fig. 1, panel a, shows the complete set of leverage levels, while panel b illustrates the reduced set).

Agents adjust their leverage only gradually, thus each period agents may choose among three strategies: the leverage chosen in the past period and the two immediately adjacent levels of leverage (for instance, in Fig. 1 panel c, if leverage was 5, the agent may choose among 4, 5 and 6). Thus, the agent’s choices are restricted to the \(\varLambda _c\) set, with (\(\varLambda _c \subseteq \varLambda _a \subseteq \varLambda \)). If the past level of leverage is not in the available set, the agent will choose the nearest level of leverage allowed (in Fig. 1 panel d, the level of leverage 6 is not allowed thus the agent will choose 4). Moreover, if no level of \(\lambda \) is allowed, the agents will choose the lowest level of leverage, that is, the lowest \(\lambda \). The lowest value of \(\lambda \) for firms is \(\lambda =1\), meaning that in this case a firm does not borrow money but uses only its internal resources and previous loans, if any. The lowest level of \(\lambda \) for banks is higher than one, since with \(\lambda =1\) a bank would not offer any credit and would thus cease its activity.
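One possible reading of this restriction is sketched below. The snippet returns the past leverage and its two neighbours that are still allowed, falls back to the nearest allowed level when the past choice is no longer available, and falls back to the lowest level when nothing is allowed. The function name, the representation of the sets as Python collections, and the tie-breaking rule toward lower leverage are assumptions made for illustration.

```python
def candidate_leverages(all_levels, allowed, previous):
    """Return the leverage levels an agent may choose from this period.

    all_levels: the full strategy set; allowed: the feasible subset;
    previous: the leverage chosen in the past period.
    """
    levels = sorted(all_levels)
    if not allowed:
        # Nothing is feasible: fall back to the lowest leverage level.
        return [levels[0]]
    if previous not in allowed:
        # Past choice is no longer feasible: take the nearest allowed level
        # (ties broken toward the lower leverage, an assumption).
        return [min(allowed, key=lambda lam: (abs(lam - previous), lam))]
    idx = levels.index(previous)
    neighbours = levels[max(idx - 1, 0): idx + 2]
    # Keep only the past level and adjacent levels that are still allowed.
    return [lam for lam in neighbours if lam in allowed]
```

With levels 1 to 10, an allowed set {3, ..., 7} and past leverage 5, the candidates are 4, 5 and 6.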

Once the effectiveness of each strategy has been evaluated, the agents assign to each strategy a probability of being chosen in the following period. The probability of choosing a particular level of leverage (strategy \(\lambda _s\)) among the allowed levels of leverage (\(\varLambda _c\)) is given by \(p(\lambda _s)_{xt}\). This probability is different for each agent according to its past profit results:

where \(X_{xst}\) is the strength associated by agent x to a strategy at time t, which depends on its effectiveness. The exponential values of the strength (\(X_{xst}\)) of each strategy are used to compute the probability of choosing it \(p(\lambda _s)_{xt}\). Taking the exponential, strategies that are more efficient have a more than proportional probability to be chosen. The probability of choosing a strategy s is computed as the exponential value of its strength divided by the sum of the exponential value of all the strategies among which the agent may choose.
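The exponential choice rule described here has the form of a softmax over strategy strengths. As a minimal sketch, the snippet below maps each allowed strategy's strength to a choice probability; the function name and the dictionary representation are illustrative assumptions, and subtracting the maximum strength is a standard numerical safeguard that leaves the probabilities unchanged.

```python
import math


def choice_probabilities(strengths):
    """Map strategy -> probability from strategy -> strength (softmax)."""
    # Subtracting the maximum avoids overflow and does not alter the result.
    peak = max(strengths.values())
    weights = {s: math.exp(x - peak) for s, x in strengths.items()}
    total = sum(weights.values())
    return {s: w / total for s, w in weights.items()}
```

If one strategy's strength exceeds another's by one unit, it is chosen e (about 2.72) times as often, which illustrates the more than proportional advantage of more effective strategies.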

In general, choosing higher levels of leverage may lead to higher profits. However, higher leverage implies higher risks for both firms and banks. Moreover, firms with higher target leverage levels pay higher interest rates and they have a higher probability of not being accepted as borrowers. Besides, banks with high target leverage have to pay high volumes of interest on deposits while, in case of scarcity of credit demand, they may not be able to lend all the credit they offer.

To allow a continuous exploration of the action space, there is a relatively small probability (\(\mu \)) that in each period the agents choose their leverage strategy randomly without considering the effectiveness of each strategy, always within the set of the allowed levels of leverage (\(\varLambda _c\)). The forgetting mechanism and the random choice probability allow agents to explore their strategy space, avoiding the possibility of being trapped in sub-optimal solutions or in strategies that are no longer effective in a continuously evolving economic environment.
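The random exploration step can be sketched as follows. With probability \(\mu \) the agent picks uniformly among the allowed strategies; otherwise it draws according to the learned choice probabilities, which are taken here as given. The function name and the use of Python's `random` module are illustrative assumptions.

```python
import random


def pick_leverage(probabilities, mu, rng=random):
    """Pick a leverage strategy from the allowed set.

    probabilities maps each allowed strategy to its learned probability;
    mu is the probability of ignoring them and choosing uniformly.
    """
    strategies = list(probabilities)
    if rng.random() < mu:
        # Exploration: every allowed strategy is equally likely.
        return rng.choice(strategies)
    # Exploitation: draw according to the learned probabilities.
    weights = [probabilities[s] for s in strategies]
    return rng.choices(strategies, weights=weights, k=1)[0]
```

Passing a seeded `random.Random` instance as `rng` makes a simulation run reproducible.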

3 Simulation results

3.1 Empirical data and simulation results

Simulated data are calibrated on a sample of firms and banks quoted on the Japanese stock exchange markets from 1980 to 2012. This dataset collects yearly balance sheet data and the value of loans among banks and firms. On average, the dataset includes 226.181 banks and 2218.152 firms, located in 35 prefectures.

We have run three groups of 10 different Monte Carlo simulations and we calibrated the model in order to replicate in each of these simulated groups the same level of leverage, connectivity and output volatility observed empirically. Indeed, high output volatility may imply high crisis probability. Moreover, high leverage may increase the vulnerability of the economic system, and high connectivity may foster the diffusion of shocks throughout the economy.

In our simulations, we fix a ratio of 10 firms to each bank, which is similar to the empirical one. We assume that each simulated period corresponds to a quarter of a year and we run simulations for 500 periods. We analyze simulated data from the 368th to the 500th period in order to have 132 quarters that correspond to 33 years, the same time span as the empirical dataset.

An extensive stream of empirical literature has focused on the size distributions of economic agents. Among others, Axtell (2001) analyzed the 1997 census data for U.S. firms and found that the firm size distribution follows Zipf’s law. Janicki and Prescott (2006) replicated this analysis for U.S. banks, employing a dataset of the U.S. federal bank regulators. They found that bank size follows a nested distribution which is lognormal in the lower-middle part with a Pareto-distributed tail. In credit markets, Masi and Gallegati (2011) and Lux (2016) observe a more heterogeneous degree distribution for banks than for firms, that is, a thicker tail of the banks’ degree distribution compared to that of the firms. In other words, a small pool of banks finances a large set of firms.

Following the methodology proposed by Clementi et al. (2006) and Clauset et al. (2009), we test the presence of “fat tails” in the distributions of size and degree in both empirical and simulated data (Figs. 2, 3), considering the last year of our empirical dataset and ten different Monte Carlo simulations (for a more detailed description of the methodology see the “Appendix”). The estimation procedure consists of three steps: (i) the estimation of the power-law distribution scale (\(\hat{x}_{min}\)) and shape (\(\hat{\alpha }_{\text {Hill}}\)) parameters; (ii) a goodness-of-fit test of the power law hypothesis through data bootstrap (Kolmogorov–Smirnov, where the null hypothesis is that the distribution has a power law tail); (iii) a test of the power law hypothesis against a “light-tailed” distribution (we used the Vuong (1989) test, testing the power law against the exponential hypothesis).
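To illustrate the first step, the snippet below sketches the Hill estimator of the Pareto shape parameter for a given tail threshold. It is a simplified illustration, not the full procedure: the threshold `x_min` is taken as given rather than estimated, and the shape is defined as the exponent of the survival function \((x/x_{min})^{-\alpha }\). The function name and the synthetic usage example are assumptions.

```python
import math
import random


def hill_tail_index(data, x_min):
    """Hill estimate of the Pareto shape from observations >= x_min."""
    tail = [x for x in data if x >= x_min]
    if not tail:
        raise ValueError("no observations at or above x_min")
    log_sum = sum(math.log(x / x_min) for x in tail)
    if log_sum == 0:
        raise ValueError("all tail observations equal x_min")
    # Maximum-likelihood estimate: tail size over the sum of log-ratios.
    return len(tail) / log_sum


# Usage: draw from a Pareto law with a known shape and recover it.
rng = random.Random(0)
true_alpha, x_min = 2.0, 1.0
# Inverse-transform sampling; 1 - rng.random() lies in (0, 1].
sample = [x_min * (1.0 - rng.random()) ** (-1.0 / true_alpha) for _ in range(20000)]
estimate = hill_tail_index(sample, x_min)
```

Lower estimated values of the shape parameter indicate a heavier tail, i.e. a more heterogeneous distribution.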

Tables 1 and 2 report the results of the three tests performed for empirical and simulated data, respectively. Considering the Pareto \({\hat{\alpha }_{Hill}}\) parameters and the Vuong tests, our estimates suggest that the distributions of firm and bank size (assets and equities for banks) show the presence of “fat tails”. This evidence seems to be stronger for the distribution of bank assets. Moreover, according to the Pareto \({\hat{\alpha }_{Hill}}\) parameters, the degree distribution of banks seems to be more heterogeneous than that of firms. Therefore, in both simulated and empirical data a few banks seem to occupy a central position in our credit network in terms of both size and connectivity.

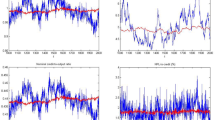

The empirical dataset includes only yearly data over just 33 years, while simulated data are collected from multiple simulations with a quarterly structure. Thus, simulated data can be used to explore the relation between macro-variables considering the cross correlation of their cyclical component detrended through the HP filter. As shown in Fig. 4, the output is positively correlated with leverage and credit, thus high levels of output are associated with high levels of credit and firm leverage. Also connectivity, defined as the average normalized degree, is positively correlated with the output.

In the simulated model, crises are triggered by the failure of firms: if indebted firms suffer a negative idiosyncratic demand shock, they may fail. Failing firms do not repay their debts to lending banks; thus, in turn, some banks may fail or may contract their loan supply. If the banks that are hit by failed firms are relatively central in the credit network (in terms of both credit supplied and number of loans provided to firms), the credit supply contraction may affect the whole economy and, consequently, aggregate output may fall. Figure 5 shows that failures are significantly correlated with the four macro variables we considered above: output, credit, leverage and connectivity. Indeed, when leverage is high, the probability of firm failure also increases; moreover, as mentioned above, leverage is positively correlated with output, credit and connectivity. In addition, these four macro variables seem to anticipate firm failures: when their values increase, the number of firm failures also tends to increase in the following periods.

Therefore, expansionary phases, characterized by high output, lead to growth in leverage and connectivity, which in turn may increase the vulnerability of the system by amplifying and diffusing the effects of local shocks such as firm or bank failures. In fact, expansions may create the conditions for a subsequent output decline. In the next section, we test the effectiveness of these macro variables as early warning indicators for crises, conceived as large output contractions.

3.2 Empirical and simulated relation between credit network dynamics and crises

In order to detect the early warning properties of credit, leverage and connectivity, we follow the methodology applied by Schularick and Taylor (2012). They showed that, after WWII, credit growth rates have a significant impact on crisis probability and, therefore, that credit variations are effective early warning measures for crises. The relation between credit dynamics and crisis probability is tested by estimating a panel Logit model on a dataset composed of annual data for 12 countries over 140 years:

where (\(p_{it}\)) is the crisis probability, \((L)\varDelta log CREDIT_{it}\) are lagged credit logarithmic variations and \(\mathbf X _{it}\) are control variables. The higher the predicted values of the regressions, the higher the probability of a crisis occurring.

ROC curve analysis is used to test the effectiveness of credit variations as early warning measures by evaluating the trade-off between the false alarm ratio and the hit ratio (Alessi and Detken 2011; Babecky et al. 2011; Betz et al. 2014; Drehmann and Juselius 2014; Alessi et al. 2015). Indeed, an early warning indicator may be conceived as a source of signals of different intensity. Above a certain threshold, the signal may alert policy makers, since the stronger the signal, the higher the probability of a crisis (Fig. 6). Thus, if the policy maker intervenes only when the signal is strong, the false alarm probability is reduced. However, an alert threshold that is too high may discourage policy intervention even when a crisis is approaching, which reduces the hit ratio of the indicator. Therefore, early warning indicators may be evaluated by their capacity to ease the trade-off between false alarms and hit ratio. A basic measure of this trade-off is the AUROC, the area below the ROC curve: the larger this area, the higher the effectiveness of the indicator as an early warning measure.
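As an illustration of the measure, the area under the ROC curve can be computed without tracing the curve, as the share of (crisis, non-crisis) pairs in which the crisis observation receives the higher predicted value, with ties counted as one half. The sketch below uses this pairwise formulation; the function name and the 0/1 coding of crisis labels are assumptions.

```python
def auroc(scores, labels):
    """Area under the ROC curve via pairwise comparisons.

    scores: predicted crisis probabilities (or any signal intensity);
    labels: 1 for crisis observations, 0 otherwise.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one crisis and one non-crisis observation")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0  # crisis ranked above non-crisis
            elif p == n:
                wins += 0.5  # ties count as half
    return wins / (len(positives) * len(negatives))
```

A value of 0.5 corresponds to an uninformative indicator, while a value of 1 means that every crisis observation is ranked above every non-crisis one.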

We estimate these Logit regressions on our empirical dataset. In order to apply this methodology, we build a panel, dividing firms according to the prefecture they belong to. In the dataset we have yearly data for 35 prefectures over a 33-year time span. However, to balance our panel we consider only prefectures that are present in all the periods of our dataset. Moreover, we drop those prefectures that have a small number of firms (we fixed the minimum number of firms at twenty). Hence, our dataset shrinks from 35 to 8 prefectures over 33 years. For each prefecture we compute the aggregate output as the sum of firm revenues, and we define a crisis as an annual variation of aggregate output below \(-5\)%.
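The crisis definition can be sketched as follows for a single prefecture's yearly output series. The snippet assumes that the annual variation is measured as a simple percentage change (a log difference would be an alternative); the function name and the default threshold argument are illustrative.

```python
def crisis_flags(output, threshold=-0.05):
    """Flag each year whose output growth falls below the threshold.

    output: yearly aggregate output levels, in chronological order.
    Returns one flag per year starting from the second observation.
    """
    flags = []
    for previous, current in zip(output, output[1:]):
        growth = (current - previous) / previous  # simple percentage change
        flags.append(growth < threshold)
    return flags
```

For the series 100, 102, 95, 94, only the drop from 102 to 95 (about \(-6.9\)%) is flagged as a crisis.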

Consequently, the effective size of our dataset is quite limited. Nevertheless, as suggested by Schularick and Taylor (2012), credit variation lags seem to be significant predictors of crisis probability (Table 3). Indeed, we test the relation between five yearly lags of credit variations (credit) and crisis probability (Table 3, column credit), and we take this specification as the baseline econometric model.

The third lag of credit variation is significant and positively correlated with crisis probability. The sum of the credit variation coefficients is statistically significant at 10%. Moreover, the Wald test on the five lags is significant at 15% (\(\chi ^2\) lags) and the whole regression \(\chi ^2\) is also significant at 1%. Besides, the AUROC is greater than 0.5. Thus, credit growth seems to contribute to generating the conditions for crisis occurrence and it may be an effective early warning indicator for crises.

We control for lagged output variations (y), for a leverage level measured as credit over output (credit / y) and for a connectivity measure (deg).

Output variations seem to be slightly positively correlated with crisis probability, even if they do not contribute to increasing the AUROC level. The leverage level does not seem to affect crisis probability in a significant way. Conversely, connectivity variations seem to be positively correlated with crisis probability. Three lags are positive (the first, the fifth and the fourth), but only the first two of these are significant, while the second and the third are negative but not statistically different from zero. Therefore, the sum of the lags is not significant, while connectivity variation lags are jointly significant at 10%. Adding connectivity among the regressors increases the AUROC: according to the De Long test, this increment is statistically significant at 20%. Indeed, connectivity variations are positively correlated with crisis probability, since a tightly interconnected network may foster the diffusion of shocks.

In the last column, we include all the control variables jointly (Table 3, column all). This regression seems to confirm the results emerging from the previous ones: credit variations are positively correlated with crisis probability, and output and connectivity variations anticipate crises.

Overall, bearing in mind the limits of our dataset (in particular the small number of observations), our analysis suggests that credit variations may be correlated with crisis probability and that connectivity may improve the effectiveness of early warning measures of crises.

We apply the same econometric analysis to simulated data and compare the results with the empirical ones. Simulations have the advantage of generating data from a stylized and controlled process. However, in simulations we do not have different prefectures. Thus, in order to build a panel dataset of a size comparable to the empirical one, instead of prefectures we use ten different Monte Carlo runs of the simulation model. Moreover, as for the calibration of the model, we repeat the analysis three times, using each time only ten different simulation runs (see “Appendix”).

Credit variations are positively correlated with crisis probability (see Tables 4, 9 and 10, column credit) in all three sets of simulations taken into consideration. In particular, credit variations are strongly significant in both the second and third sets (Tables 9 and 10), where both the sum of the lagged coefficients and the five lags jointly are statistically significant at 5%. Moreover, in all the simulated sets the associated AUROC level is significantly higher than 0.5. Thus, in simulated data, similarly to empirical results, credit variation lags are positively correlated with crisis probability.

When we include output or connectivity in the regression (\(credit+y\) and \(credit+deg\)), we observe that output and connectivity variations are correlated with crisis probability and, according to the De Long test, they produce an increase of the Auroc that is significant at the 5% level. The regression model with both credit and output variations (credit+y) displays a stronger positive correlation between credit variations and crisis occurrence than the baseline specification (credit). The output variation coefficients are also significant, even though they show a negative sign, which is at odds with the empirical results. This is not due to a simple negative correlation between output variations and crisis, since if we consider only the lagged \(\varDelta (y)\) as regressors we get positive coefficients. Rather, the (credit + y) model seems to capture two different effects of opposite sign. On the one hand, there is an “exposure” effect, i.e. an increase in credit raises the likelihood of a crisis. On the other, there is a “patrimonial effect”: during expansionary phases net worth also increases, which strengthens agents’ resilience to negative shocks and lowers the crisis probability accordingly.

As in the empirical dataset, in all three simulation sets the crisis probability is positively correlated with an increase in the average normalized degree. At the same time, the correlation between crisis and credit variations becomes not significant or negative. Indeed, credit plays a twofold role with respect to crisis probability. On the one hand, credit growth increases systemic risk by fostering bilateral exposures; on the other, it sustains output, thus reducing the crisis probability. When connectivity variation is added to the regressors, the bilateral exposure effect is captured by the normalized degree variable. Therefore, the variation of connectivity is able to capture the prevalence of systemic risk over risk sharing.

Furthermore, the (\(credit+deg\)) model leads to a higher Auroc level than the baseline model (Fig. 6) and, in two out of three sets, than the (\(credit+y\)) one. Moreover, when connectivity is included the R-squared increases and the AIC shrinks. Besides, connectivity variations are significant even in the regression with all the independent variables (All), thus controlling also for output variations (y) and leverage (credit / y). Therefore, simulated data suggest that connectivity variations may be effective early warning measures for crises.

Besides, in simulated data, following the same econometric approach, we control for other network measures that may be correlated with crisis probability: assortativity with respect to leverage (assortativity) and the maximum value of agents’ PageRank (pageRank).

Assortativity measures the degree of homogeneity in terms of leverage among connected firms and banks, and varies between minus one and one. If assortativity is close to one, high leverage banks are connected with high leverage firms and vice versa, while if assortativity is close to minus one, high leverage banks are connected with low leverage firms and vice versa. Thus, assortativity may provide a synthetic measure of bank credit diversification. ‘PageRank’, the algorithm used by Google to rank web pages, provides a measure of agents’ connectivity. For instance, Battiston et al. (2012) and Thurner and Poledna (2013) applied ‘DebtRank’, a measure inspired by ‘PageRank’, to evaluate agent centrality in credit networks.

Normalized degree variations (deg) show higher Auroc levels, which are associated with higher R-squared and lower AIC, in two out of three sets. Thus, normalized degree seems to be a more efficient early warning measure than assortativity and ‘PageRank’ (Table 5 and the “Appendix” tables).
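
The two simpler network measures can be illustrated with a short Python sketch operating on a list of (firm, bank) lending links. The average normalized degree follows the definition given in the Notes (links per firm divided by the number of banks). Computing leverage assortativity as the Pearson correlation of firm and bank leverage across links is an assumption of this example, as are all function and variable names.

```python
import math

def average_normalized_degree(links, n_firms, n_banks):
    """Average number of lending links per firm, divided by the number of banks."""
    return len(set(links)) / (n_firms * n_banks)

def leverage_assortativity(links, firm_leverage, bank_leverage):
    """Pearson correlation between firm and bank leverage across lending links.

    Returns NaN when either side has no variation, since the correlation
    is undefined in that case.
    """
    xs = [firm_leverage[f] for f, _ in links]
    ys = [bank_leverage[b] for _, b in links]
    n = len(links)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return float("nan")
    return cov / math.sqrt(vx * vy)

# Toy network: the low-leverage firm borrows from the low-leverage bank
links = [("f1", "b1"), ("f2", "b2")]
deg = average_normalized_degree(links, n_firms=2, n_banks=2)
assort = leverage_assortativity(links, {"f1": 2.0, "f2": 8.0},
                                {"b1": 10.0, "b2": 50.0})
```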

4 Precautionary macro policy experiments

The previous section suggested that credit and connectivity may be used as early warning measures of crisis. In this section we describe some simulation experiments on precautionary policies aimed at reducing crisis probability (Alessi and Detken 2011; Babecky et al. 2011; Betz et al. 2014; Drehmann and Juselius 2014; Alessi et al. 2015). The agent-based methodology allows us to implement macro-prudential policies that focus on agents’ heterogeneity and their interactions (Popoyan et al. 2017). We therefore tested a simple capital-related measure: when a bank is targeted by the policy, it cannot provide credit to riskier firms, that is, firms with high leverage.Footnote 5

First, we permanently apply this policy to all banks. After that, we restrict the policy to bigger banks and to more connected banks. We test these three scenarios by running 30 simulations lasting 33 years, as above. We apply the policy measures to the last 17 years of the simulation, and we use the same simulation seeds for the different policy scenarios in order to reproduce the same conditions when each policy begins.

In order to show the effects of this capital-related measure on output level and crisis probability, we apply it permanently to all the banks and we consider different levels of firm riskiness allowed.

Figure 7 shows the effects of moving from no intervention (‘no’) to gradually lower levels of the maximum firm leverage allowed: the lower the firm leverage permitted, the stricter the policy. Initially, output slightly increases and the crisis probability decreases. However, when policies become stricter, output declines because credit supply is excessively compressed.

The effective implementation of permanent credit restrictive measures applied to all agents may be problematic, while the previous paragraphs show that network configuration dynamics may be correlated with crisis probability. Thus, we apply capital-related measures to banks that are central in the credit network, namely the biggest and most connected ones. Indeed, the distributions of net-worth and normalized degree of banks in simulated data are characterized by the presence of a few larger banks with high levels of connectivity (Fig. 8; Tables 1, 2). In order to evaluate the effect of the policy we consider output level, crisis probability and probability of intervention (i.e., the probability p(int) that in each period a bank is targeted by the policy).

Bank size is computed according to its relative net-worth level (\(E_{it}\)): bigger banks are those that hold a larger share of total bank net-worth in a given period of the simulation. The credit restrictive policy is temporary: it is applied only when a bank exceeds a certain relative size threshold, and it consists in banning credit to firms with relatively high target leverage (target leverage \(\lambda _{it}>5.0\)). We tested different relative size thresholds, starting from a high level of concentration (0.2, i.e., when a bank controls more than 20% of total bank net-worth).

Indeed, when the credit restriction is applied to bigger banks only, the economy becomes more stable without reducing output levels (Fig. 9). For instance, if the policy is applied to banks that hold more than 10% of total bank net-worth, crisis probability decreases while the output level remains stable. Moreover, the number of banks affected by this measure is quite limited.

Finally, we apply the previous capital-related measure only to the more connected banks. Figure 10 shows the effects of this policy calibrated on different normalized degree thresholds, starting from a threshold of 0.5 (i.e., targeting only the banks with a normalized degree higher than 0.5, which are those connected with at least half of the firms). When the policy is focused on the more connected banks, crisis probability decreases without affecting output. For instance, targeting banks with a normalized degree higher than 0.3 consistently reduces crisis probability, while the output level remains stable and the number of banks affected by this policy is extremely low.
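
The selective policy rule can be summarized in a short Python sketch. It is only an illustration of the logic described in this section: the function names and signatures are hypothetical, while the leverage cap of 5.0 and the example thresholds are taken from the text.

```python
def is_targeted(net_worth_share, normalized_degree,
                size_threshold=None, degree_threshold=None):
    """True when the bank exceeds an active size or connectivity threshold.

    A threshold set to None is inactive, so the same function covers the
    size-based and the connectivity-based scenario.
    """
    if size_threshold is not None and net_worth_share > size_threshold:
        return True
    if degree_threshold is not None and normalized_degree > degree_threshold:
        return True
    return False

def may_lend(bank_targeted, firm_target_leverage, max_leverage=5.0):
    """A targeted bank cannot lend to firms whose target leverage exceeds the cap."""
    return not (bank_targeted and firm_target_leverage > max_leverage)

# A bank holding 25% of total bank net-worth under the 0.2 size threshold
big_bank = is_targeted(net_worth_share=0.25, normalized_degree=0.1,
                       size_threshold=0.2)
# A bank linked to 35% of firms under the 0.3 connectivity threshold
hub_bank = is_targeted(net_worth_share=0.02, normalized_degree=0.35,
                       degree_threshold=0.3)
```

Because targeting is re-evaluated every period, the restriction is temporary: a bank that falls back below the threshold can lend to high leverage firms again.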

Therefore, policy interventions that target only bigger or more connected banks seem to be effective macro-prudential instruments. In fact, these selective policies reduce the number of agents that must be monitored. Moreover, they could be calibrated according to early warning measures. Thus, the coordination between early warning indicators and agent-specific interventions may help control systemic vulnerability.

5 Conclusions

In this paper we described an agent-based model that reproduces the evolution of a credit network as the result of the individual leverage choices of banks and firms. We calibrated the model on an empirical credit network dataset and, following the methodology used by Schularick and Taylor (2012), we analyzed the effectiveness of connectivity as an early warning indicator for crises.

The model underlines the importance of credit network configurations for the analysis of systemic risk. Both the empirical data we used and, in particular, the simulated data suggest that credit and connectivity variations are effective early warning measures for crises. Indeed, in our agent-based model, expansionary phases are associated with increasing credit and connectivity which, in turn, may create the conditions for crises: high levels of credit may be associated with high leverage, which increases firm failure probability. Firm failures negatively affect bank balance sheets, leading to credit contractions which, in a strongly connected network, may affect the whole economy. Therefore, credit network configurations may be used to define the timing of macro-prudential policies.

Moreover, according to our simulated experiments, capital-related policies based on quantitative restriction may be effective in reducing crisis probability. Selective quantitative restriction measures focusing on larger or more connected banks may reduce systemic risk without negatively affecting credit supply and output level. In fact, capital-related policies that target agents that are central in the credit network, in terms of both credit and connections, may reduce systemic vulnerability.

The model can be extended in different ways. For instance, if other datasets become accessible, the analysis developed in this paper could be tested by calibrating simulations on other, possibly larger, samples of firms and banks. Moreover, the model could be extended to include different macro-prudential policies, trying to coordinate interventions with early warning measures.

Notes

According to Adrian et al. (2015), policy interventions which aim to limit the leverage ratio and the maximal exposure allowed, as well as to define countercyclical capital buffers, belong to the capital-related macroprudential tools class.

For the sake of clarity, we recall that we have defined the leverage \(\lambda _t=\frac{Total Assets}{Equity}\) as do many others in the literature (Adrian and Shin 2010; Aymanns et al. 2016), which corresponds exactly to the inverse of the Basel III leverage ratio, \(\frac{tier 1 capital}{Total Exposure}\).

We defined a dummy variable equal to one when the output variation is lower than \(-0.05\)%, and equal to zero in all other periods. This dummy variable is used as the dependent variable in the panel logit regressions.

The connectivity measure we have considered is the average normalized degree, measured as the average number of connections—in our case lending agreements—of each firm divided by the maximum number of possible links, which corresponds to the total number of banks.

To avoid confusion, \(\alpha _{\text {Hill}}\) returns the shape parameter of a Pareto distribution, whose probability distribution is \(p(x)=\frac{\alpha k^{\alpha }}{x^{\alpha +1}}\). Therefore, whenever in this paper we talk about power law distribution we refer to the general form \(p\left( x\right) \propto x^{-\left( \alpha +1\right) }\). Thus, in our notation, the power law scaling exponent is the Pareto distribution shape parameter.

References

Adrian T, Shin HS (2010) Liquidity and leverage. J Financ Intermed 19(3):418–437

Adrian T, de Fontnouvelle P, Yang E, Zlate A (2015) Macroprudential policy: case study from a tabletop exercise. Staff reports 742, Federal Reserve Bank of New York

Alessi L, Detken C (2011) Quasi real time early warning indicators for costly asset price boom/bust cycles: a role for global liquidity. Eur J Polit Econ 27(3):520–533

Alessi L, Antunes A, Babecky J, Baltussen S, Behn M, Bonfim D, Bush O, Detken C, Frost J, Guimaraes R, Havranek T, Joy MK (2015) Comparing different early warning systems: results from a horse race competition among members of the macro-prudential research network. MPRA paper 62194, University Library of Munich, Germany

Alfons A, Templ M (2013) Estimation of social exclusion indicators from complex surveys: the R package laeken. J Stat Softw 54(15):1–25

Allen F, Gale D (2000) Financial contagion. J Polit Econ 108(1):1–33

Allen F, Babus A, Carletti E (2012) Asset commonality, debt maturity and systemic risk. J Financ Econ 104(3):519–534

Angeloni I (2014) European macroprudential policy from gestation to infancy. Financ Stab Rev 18:71–84

Axtell RL (2001) Zipf distribution of US firm sizes. Science 293(5536):1818–1820

Aymanns C, Caccioli F, Farmer JD, Tan VWC (2016) Taming the Basel leverage cycle. J Financ Stab 27:263–277

Babecky J, Havranek T, Mateju J, Rusnak M, Smidkova K, Vasicek B (2011) Early warning indicators of crisis incidence: evidence from a panel of 40 developed countries. Working papers IES 2011/36, Charles University Prague, Faculty of Social Sciences, Institute of Economic Studies

Basel Committee (2010) Basel III: a global regulatory framework for more resilient banks and banking systems. Basel Committee on Banking Supervision, Basel

Battiston S, Delli Gatti D, Gallegati M, Greenwald B, Stiglitz JE (2012a) Liaisons dangereuses: increasing connectivity, risk sharing, and systemic risk. J Econ Dyn Control 36(8):1121–1141

Battiston S, Puliga M, Kaushik R, Tasca P, Caldarelli G (2012b) DebtRank: too central to fail? Financial Networks, the FED and Systemic Risk. Scientific Reports 2(541)

Battiston S, Farmer JD, Flache A, Garlaschelli D, Haldane AG, Heesterbeek H, Hommes C, Jaeger C, May R, Scheffer M (2016) Complexity theory and financial regulation. Science 351(6275):818–819

Betz F, Oprica S, Peltonen TA, Sarlin P (2014) Predicting distress in European banks. J Bank Finance 45(C):225–241

Bruno V, Shim I, Shin HS (2017) Comparative assessment of macroprudential policies. J Financ Stab 28:183–202

Caccioli F, Catanach TA, Farmer JD (2012) Heterogeneity, correlations and financial contagion. Adv Complex Syst 15:1250058-1-1

Catullo E, Gallegati M, Palestrini A (2015) Towards a credit network based early warning indicator for crises. J Econ Dyn Control 50:78–97

Claessens S (2014) An overview of macroprudential policy tools. IMF working papers 14/214, International Monetary Fund

Clauset A, Shalizi CR, Newman ME (2009) Power-law distributions in empirical data. SIAM Rev 51(4):661–703

Clementi F, Di Matteo T, Gallegati M (2006) The power-law tail exponent of income distributions. Phys A Stat Mech Appl 370(1):49–53

De Masi G, Gallegati M (2011) Bank-firms topology in Italy. Empir Econ 43(2):851–866

Dell’Ariccia G, Laeven L, Suarez GA (2017) Bank leverage and monetary policy’s risk-taking channel: evidence from the United States. J Finance 72:613–654

Delli Gatti D, Di Guilmi C, Gaffeo E, Giulioni G, Gallegati M, Palestrini A (2005) A new approach to business fluctuations: heterogeneous interacting agents, scaling laws and financial fragility. J Econ Behav Organ 56(4):489–512

Delli Gatti D, Gallegati M, Greenwald B, Russo A, Stiglitz JE (2010) The financial accelerator in an evolving credit network. J Econ Dyn Control 34:1627–1650

Dosi G, Fagiolo G, Roventini A (2010) Schumpeter meeting Keynes: a policy-friendly model of endogenous growth and business cycles. J Econ Dyn Control 34(9):1748–1767

Drehmann M, Juselius M (2014) Evaluating early warning indicators of banking crises: satisfying policy requirements. Int J Forecast 30(3):759–780

Ghosh S, Canuto O (eds) (2013) Dealing with the challenges of macro financial linkages in emerging markets. The World Bank, Washington

Gillespie CS (2014) Fitting heavy tailed distributions: the poweRlaw package. R package version 0.20.5

Greenwald BC, Stiglitz JE (1993) Financial market imperfections and business cycles. Q J Econ 108(1):77–114

Hill BM (1975) A simple general approach to inference about the tail of a distribution. Ann Stat 3(5):1163–1174

IMF (2011) Macroprudential policy; what instruments and how to use them? Lessons from country experiences. IMF working papers 11/238, International Monetary Fund

Iori G, Jafarey S, Padilla FG (2006) Systemic risk on the interbank market. J Econ Behav Organ 61(4):525–542

Janicki H, Prescott ES (2006) Changes in the size distribution of US banks: 1960–2005. FRB Richmond Econ Q 92(4):291–316

Lux T (2016) A model of the topology of the bank—firm credit network and its role as channel of contagion. J Econ Dyn Control 66:36–53

Marotta L, Micciche S, Fujiwara Y, Iyetomi H, Aoyama H, Gallegati M, Mantegna RN (2015) Bank-firm credit network in Japan. A bipartite analysis and the characterization and time evolution of clusters of credit. PLOS ONE 10(5):e0123079

MARS (2014) Report on the macro-prudential research network (MARS). Report, European Central Bank

Minsky HP (1986) Stabilizing an unstable economy. Yale University Press, New Haven

Palestrini A (2013) Deriving aggregate network effects in macroeconomic models. Available at SSRN 2329107

Popoyan L, Napoletano M, Roventini A (2017) Taming macroeconomic instability: monetary and macro-prudential policy interactions in an agent-based model. J Econ Behav Organ 134:117–140

Riccetti L, Russo A, Gallegati M (2013) Leveraged network-based financial accelerator. J Econ Dyn Control 37(8):1626–1640

Schularick M, Taylor AM (2012) Credit booms gone bust: monetary policy, leverage cycles, and financial crises, 1870–2008. Am Econ Rev 102(2):1029–1061

Tesfatsion L (2005) Agent-based computational economics: a constructive approach to economic theory. In: Tesfatsion L, Judd KL (eds) Handbook of computational economics, vol 2. North-Holland, Amsterdam, pp 831–880

Thurner S, Poledna S (2013) DebtRank-transparency: controlling systemic risk in financial networks. Scientific Reports 3(1888)

Vuong Q (1989) Likelihood ratio tests for model selection and non-nested hypotheses. Econometrica 57(2):307–333. doi:10.2307/1912557

Yellen JL (2011) Macroprudential supervision and monetary policy in the post-crisis world. Bus Econ 46(1):3–12

Additional information

The research leading to these results has received funding from the European Union, Seventh Framework Programme FP7, under grant agreement FinMaP no: 612955 and Symphony no: 611875.

Appendix

1.1 Simulation parameters

Simulations last 500 periods, with 500 firms and 50 banks (see simulation parameters in Table 6). The initial value of firms’ net-worth is \(E_{i}=1\), that of banks’ net-worth is \(E_{z}=5\). Firms and banks with non-positive net-worth exit the market and are replaced by new firms and banks whose net-worth is relatively lower than that of incumbents; entering firms have \(E_{i}=mf+ U(-0.1,0.1)*mf\) and entering banks have \(E_{z}=mb+ U(-0.1,0.1)*mb\), with mf the average size of firms and mb the average size of banks. This entry condition ensures that entrants have a size in line with that of their competitors.
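
The entry rule can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function names are hypothetical, and the average size m is computed over the surviving incumbents, which is one possible reading of "average size".

```python
import random

def entrant_net_worth(incumbent_net_worths, rng=random):
    """Net worth of a new entrant: m + U(-0.1, 0.1) * m, with m the
    average net worth of the incumbents (assumed: surviving agents only)."""
    m = sum(incumbent_net_worths) / len(incumbent_net_worths)
    return m + rng.uniform(-0.1, 0.1) * m

def replace_failed(net_worths, rng=random):
    """Replace every agent with non-positive net worth by a new entrant."""
    survivors = [e for e in net_worths if e > 0]
    if not survivors:
        raise ValueError("no surviving agent to compute the average size")
    return [e if e > 0 else entrant_net_worth(survivors, rng)
            for e in net_worths]

# The second firm has failed and is replaced by an entrant of about average size
firms = replace_failed([1.0, -0.2, 3.0], rng=random.Random(1))
```

The same function applies to banks, since the rule differs only in the population over which the average is taken.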

The set of leverage values for firms is

\(\varLambda _f=\{1.0,1.5,2.0,2.5,3.0,3.5,4.0,4.5,5.0,5.5,6.0,6.5,7.0,7.5,8.0,8.5,9.0,9.5,10.0\}\).

The set of leverage values for banks is

\(\varLambda _b=\{10.0,15.0,20.0,25.0,30.0,35.0,40.0,45.0,50.0,55.0,60.0,65.0,70.0,75.0,80.0,85.0,90.0,95.0,100.0\}\).

1.2 Calibration

In the empirical dataset we consider only banks and firms with strictly positive net-worth, asset and liability values, in order to clear the dataset of uncertain values. We calibrate the model parameters in order to generate similar aggregate values (significant at 2%) of empirical output volatility (output growth rate standard deviation), leverage (average firm leverage) and connectivity (average normalized degree). We test three groups of ten simulations each. Since the empirical dataset covers 33 years, the values are computed over the last 33 years of simulated data (Table 7).

In the subset of data that we used for correlating crisis probability with credit, connectivity and other macro-variables, we report the crisis probability, where a crisis by definition corresponds to an output slowdown of \(-5\)% (Table 8).

1.3 Estimation of power law parameters

The estimation procedure we followed to detect the presence of power law tails in the distributions of the economic agents is the one proposed by Clementi et al. (2006) and Clauset et al. (2009). Essentially, it consists of three stepsFootnote 6:

1. Estimating the power-law distribution parameters (scale \(x_{min}\) and shape \(\alpha \));

2. Testing the goodness-of-fit of the power law hypothesis;

3. Testing the power law hypothesis against a “light-tailed” distribution (the Vuong (1989) test).

In turn, the estimation of the power law parameters has been split into two different steps, according to Clementi et al. (2006):

(i) First, we estimated the scale parameter \(x_{min}\), according to Clauset et al. (2009);

(ii) Then, for a given \(x_{min}\), we estimated the shape parameter \(\alpha \) using the Hill estimator by Hill (1975).

Finding the adequate cut-off, after which a distribution clearly displays fat-tail behaviour, is always a sensitive issue. Clauset et al. (2009) propose a methodology that avoids arbitrariness in this choice. Their idea is to (optimally) choose the value of \(x_{min}\) that minimizes the distance between the probability distribution of the data and its best-fit power law model. The statistical distance between the two probability distributions is expressed by the Kolmogorov–Smirnov (KS) statistic

\(KS=\max _{x\ge x_{min}}\left| S(x)-P(x)\right| \),

where \(S(x)\) is the empirical cumulative distribution of the observations above \(x_{min}\) and \(P(x)\) is the cumulative distribution of the best-fit power law model.

Once we have an estimate of \(x_{min}\), we use all the \(x_i\) observations larger than \(x_{min}\) to calculate the Hill estimator, which extracts the shape parameter of the Pareto distributionFootnote 7:

\(\alpha _{\text {Hill}}=k\left[ \sum _{i=1}^{k}\ln \frac{x_i}{x_{min}}\right] ^{-1}\),

where k is the number of observations such that \(x_i>x_{min}\).
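
The two estimation steps can be sketched in Python as follows. This is a simplified illustration rather than the routine used for the paper: the function names, the candidate grid (every observed value) and the minimum tail size of ten observations are assumptions of the example, and the data are assumed to be strictly positive.

```python
import math
import random

def hill_alpha(data, x_min):
    """Hill estimate of the Pareto shape parameter from observations above x_min."""
    tail = [x for x in data if x > x_min]
    return len(tail) / sum(math.log(x / x_min) for x in tail)

def ks_distance(data, x_min, alpha):
    """Largest gap between the empirical and the fitted Pareto CDF on the tail."""
    tail = sorted(x for x in data if x > x_min)
    k = len(tail)
    d = 0.0
    for i, x in enumerate(tail):
        model = 1.0 - (x_min / x) ** alpha
        # compare the model with the empirical CDF just after and just before x
        d = max(d, abs((i + 1) / k - model), abs(i / k - model))
    return d

def fit_power_law(data, min_tail=10):
    """Scan candidate cut-offs and keep the one with the smallest KS distance.

    Returns (x_min, alpha, ks) or None when the sample is too small.
    """
    best = None
    for x_min in sorted(set(data)):
        if sum(1 for x in data if x > x_min) < min_tail:
            break  # larger cut-offs leave even fewer tail observations
        alpha = hill_alpha(data, x_min)
        d = ks_distance(data, x_min, alpha)
        if best is None or d < best[2]:
            best = (x_min, alpha, d)
    return best

# Synthetic Pareto sample with shape 2 and scale 1 (inverse-transform sampling)
rng = random.Random(0)
sample = [(1.0 - rng.random()) ** (-1.0 / 2.0) for _ in range(500)]
alpha_known_cutoff = hill_alpha(sample, 1.0)
fitted = fit_power_law(sample)
```

When the cut-off is known, the Hill estimate alone is sufficient; the scan over candidate values of the cut-off matters for empirical distributions in which only the upper tail follows a power law.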

After the estimation of the scale and shape parameters, we obtain a power law model for our data. The next step is to assess whether this hypothesis is plausible. Following Clauset et al. (2009), we performed a goodness-of-fit test via bootstrap, based on the KS statistic. The null hypothesis \(H_0\) is that (the tail of) our data is drawn from a power law distribution. The bootstrap procedure works as follows: for a given \(x_{min}\) and \(\alpha \), estimated from the original data, n distributions are simulated from a nested model composed of:

- A uniform random variable, \(U(1,x_{min})\), \(\forall x_i\le x_{min}\);

- A power law with the same \(\alpha \) as the original data, \(\forall x_i> x_{min}\).

The KS statistic of each simulated distribution (\(KS_{sim}\)) is compared with that of the original data (\(KS_d\)). The resulting p value of the bootstrap test is the fraction of times that the original data model outperforms the simulated models, i.e. \((KS_d\le KS_{sim})\) (see Gillespie 2014).
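
The bootstrap procedure can be sketched in Python as follows. The sketch makes several simplifying assumptions that are not taken from the paper: the cut-off is held fixed across simulations and only the shape parameter is re-estimated on each synthetic sample, each synthetic observation falls in the tail with the same frequency as in the original data, and all names are hypothetical.

```python
import math
import random

def hill_alpha(tail, x_min):
    """Hill estimate of the shape parameter from tail observations (x > x_min)."""
    return len(tail) / sum(math.log(x / x_min) for x in tail)

def ks_distance(tail, x_min, alpha):
    """Largest gap between the empirical and the fitted Pareto CDF."""
    tail = sorted(tail)
    k = len(tail)
    d = 0.0
    for i, x in enumerate(tail):
        model = 1.0 - (x_min / x) ** alpha
        d = max(d, abs((i + 1) / k - model), abs(i / k - model))
    return d

def bootstrap_p_value(data, x_min, n_sims=200, seed=0):
    """Share of synthetic samples whose KS distance is at least as large
    as the one measured on the original data."""
    rng = random.Random(seed)
    tail = [x for x in data if x > x_min]
    alpha = hill_alpha(tail, x_min)
    ks_data = ks_distance(tail, x_min, alpha)
    p_tail = len(tail) / len(data)
    hits, done = 0, 0
    while done < n_sims:
        sample = []
        for _ in range(len(data)):
            if rng.random() < p_tail:
                # power law draw above the cut-off (inverse transform)
                sample.append(x_min * (1.0 - rng.random()) ** (-1.0 / alpha))
            else:
                # uniform draw below the cut-off, as in the nested model
                sample.append(rng.uniform(1.0, x_min))
        sim_tail = [x for x in sample if x > x_min]
        if not sim_tail:
            continue  # degenerate draw without tail observations, redraw
        sim_alpha = hill_alpha(sim_tail, x_min)
        if ks_data <= ks_distance(sim_tail, x_min, sim_alpha):
            hits += 1
        done += 1
    return hits / n_sims

# Synthetic data: uniform body on (1, 2) and a Pareto tail above 2 (shape 1.5)
rng = random.Random(1)
body = [rng.uniform(1.0, 2.0) for _ in range(100)]
tail = [2.0 * (1.0 - rng.random()) ** (-1.0 / 1.5) for _ in range(200)]
p_value = bootstrap_p_value(body + tail, x_min=2.0, n_sims=100)
```

A small p value indicates that the observed KS distance is unusually large for data that truly follow the fitted power law, which speaks against the power law hypothesis.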

Even though a large p value may suggest that the power law is a good model for the tails of our distributions, we need to rule out the possibility that some other distribution might provide an equally good (or even better) fit. The Vuong test fulfills this task by calculating the statistical difference between two fitted models. According to the null hypothesis \(H_0\), both models are equally close to the true data generating process (DGP). The alternative hypothesis \(H_1\) is that one of them is closer to the DGP. Under \(H_0\), the Vuong test statistic, V, is asymptotically distributed as a standard normal (see Vuong 1989). Furthermore, in case of rejection of \(H_0\), the value of V gives information on which of the two models is closer to the true DGP. Namely, if \(V>z_{\alpha }\) model 1 is better than model 2, and the reverse is true if \(V<-z_{\alpha }\).

In our case we have chosen to compare a “fat tailed” distribution (i.e. the power law model) against a “light tailed” one (i.e. the exponential); in such a way that the Vuong test is not only a test on two different distributions but also a statistical test on the presence/absence of thickness in the tail of the considered distributions.
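
A minimal Python sketch of this comparison is given below. It assumes that the exponential alternative is shifted to start at the cut-off and fitted by maximum likelihood, and it uses no correction for the number of parameters, since both models have one free parameter. The function name and the synthetic data are hypothetical.

```python
import math

def vuong_power_law_vs_exponential(tail, x_min):
    """Vuong statistic for a Pareto tail (model 1) against a shifted
    exponential (model 2), with its two-sided normal p value."""
    k = len(tail)
    alpha = k / sum(math.log(x / x_min) for x in tail)  # Pareto shape (ML)
    lam = 1.0 / (sum(tail) / k - x_min)                 # exponential rate (ML)
    diffs = []
    for x in tail:
        ll_pl = (math.log(alpha) + alpha * math.log(x_min)
                 - (alpha + 1.0) * math.log(x))
        ll_exp = math.log(lam) - lam * (x - x_min)
        diffs.append(ll_pl - ll_exp)  # pointwise log-likelihood ratio
    mean = sum(diffs) / k
    sd = math.sqrt(sum((d - mean) ** 2 for d in diffs) / k)
    v = math.sqrt(k) * mean / sd
    p_two_sided = math.erfc(abs(v) / math.sqrt(2.0))
    return v, p_two_sided

# Deterministic Pareto-like tail: quantiles of a Pareto with shape 1.5, scale 1
n = 1000
tail = [(1.0 - (i + 0.5) / n) ** (-1.0 / 1.5) for i in range(n)]
v_stat, p_val = vuong_power_law_vs_exponential(tail, x_min=1.0)
```

A positive statistic favours the power law and a negative one favours the exponential; the p value indicates whether the difference between the two fits is statistically distinguishable from zero.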

1.4 Early warning measures

We report the results of the logit regressions for the other two groups of ten simulations each. We test the correlation of crisis probability with credit, connectivity, leverage and output variations (Tables 9, 10). Moreover, we show the correlation between crisis probability and different connectivity measures (Tables 11, 12).

Cite this article

Catullo, E., Palestrini, A., Grilli, R. et al. Early warning indicators and macro-prudential policies: a credit network agent based model. J Econ Interact Coord 13, 81–115 (2018). https://doi.org/10.1007/s11403-017-0199-y

DOI: https://doi.org/10.1007/s11403-017-0199-y