Abstract

The financial crisis led to a number of new systemic risk measures and a renewed concern over the risk of contagion. This paper surveys the systemic risk literature with a focus on the importance of contributions made by those emphasizing a network-based approach, and how that compares with more commonly used approaches. Research on systemic risk has generally found that the risk of contagion through domino effects is minimal, and thus emphasized focusing on the resiliency of the financial system to broad macroeconomic shocks. Theoretical, methodological, and empirical work is critically examined to provide insight on how and why regulators have emphasized deregulation, diversification, size-based regulations, and portfolio-based coherent systemic risk measures. Furthermore, in the context of network analysis, this paper reviews and critically assesses newly created systemic risk measures. Network analysis and agent-based modeling approaches to understanding network formation offer promise in helping understand contagion, and also detecting fragile systems before they collapse. Theory and evidence discussed here imply that regulators and researchers need to gain an improved understanding of how topology, capital requirements, and liquidity interact.

1 Introduction

The 2008 global financial crisis was the result of numerous failures across a highly interconnected dynamic network. This systemic event—which had no identifiable beginning and a number of potential causes—highlighted the importance of understanding financial network dynamics and characteristics. In the wake of the crisis, researchers have attempted to map financial networks and quantify the risk of a future systemic collapse (i.e., systemic risk). Network-based models offer one analytical framework to examine the issue of systemic risk in financial markets. This paper surveys the development of these network-based models, and discusses the ways in which the approach could be better integrated with other risk measures based on an equilibrium approach.

In 2013, Janet Yellen presented a narrative of the financial crisis that described how issues of counterparty risk and contagion were seen as minimal risks by regulators prior to the crisis.Footnote 1 Additionally, much of the pre-crisis theoretical literature discussing financial networks had shown minimal dangers of counterparty risk and contagion (Upper 2011; Drehmann 2009; Eisenberg and Noe 2001). Early network-based models finding that these risks were of little importance led to certain policy prescriptions, including diversification, deregulation, reliance on market discipline, and the use of portfolio-based risk measures (Allen and Gale 2000; Aragonés et al. 2008). Interbank lending market models like those found in Allen and Gale (2000) were the foundation of the literature supporting these policy prescriptions, and remain the focus of many studies since the financial crisis. Recent financial innovations like credit default swaps, which were being sold by numerous unregulated firms, unearthed glaring holes in regulation and oversight which appeared only after the crisis began (Markose et al. 2010).

This paper meets several ends through a survey of the literature on financial network modeling and systemic risk, with a focus on providing a summary of the successes and remaining gaps in the literature. Models of financial networks attempting to understand systemic risk have their roots in a traditional economic equilibrium (i.e., general equilibrium) approach. As described below, early theoretical models by Allen and Gale (2000) and Freixas et al. (2000) have overshadowed subsequent work on empirical networks, agent-based models, and other work which challenges the notion that financial markets gravitate towards a steady-state of stability or non-existence. The hallmark of the general equilibrium approach to networks often entails static agent behavior including rational expectations and representative agents. Historically network models of systemic risk are often overlooked for various reasons, including the types of networks considered, the algorithms used to solve models, and the scope of study.Footnote 2 In the context of network modeling, this paper reviews and critically assesses new systemic risk measures designed to detect or mitigate future financial crises. By linking research from different perspectives, we can provide a suitably wide foundation for studying systemic events. Examination of contrasting approaches, including general equilibrium, empirical, simulation, network, and agent-based modeling, reveals many areas where research is needed.Footnote 3 This survey also suggests changes to the current regulatory approach, including variable capital and liquidity requirements, greater transparency, and improved bankruptcy laws.

Recent research on financial networks offers important insights into systemic risk by studying contagious links and fragile network structures. Regulators should expand their focus beyond firms that exhibit certain traits (e.g., size) to include entities where new contagious links might develop. Recent network research also shows considerations might need to be taken regarding mergers, acquisitions, and break-ups as they could weaken the network structure. Regulators cannot reasonably expect to prepare for every contingency, nor can they track every type of asset. However, a number of potential crises are currently being ignored by widespread use of methods that focus on only the investments and relationships of a few large institutions. There are numerous theories and empirical studies indicating regulators and researchers should focus on the interaction of network topology and regulations like capital and liquidity requirements. Agent-based models have helped deepen behavioral foundations, as seen in models including network-based financial and leverage accelerators which produce risk endogenously. These accelerators help explain why networks can become more or less conducive to contagion. An improved understanding of these agents’ behavioral foundations promises to help us better comprehend systemic risk.

The remainder of this survey is organized as follows. Section 2 provides a taxonomy of systemic risk, a discussion of relevant externalities, and introduces the concept of endogenous risk. Section 3 reviews recent research on networks, complexity, and agent-based models as it relates to systemic risk. Section 4 reviews both traditional and network-based systemic risk measures. Section 5 discusses the reliability of systemic risk measures, and Sect. 6 concludes.

2 Defining, categorizing, and measuring systemic risk

This paper does not claim to be the first paper to survey the literature on systemic risk, and this fast progressing field of study implies that any attempt at an exhaustive review would be out of date before publication. There are several existing survey articles that can help interested researchers understand the scope of study on systemic risk (Table 1).

There are many different taxonomies used to categorize systemic risk, but one proposed by De Bandt et al. (2010) (“De Bandt” hereafter) provides the most straightforward description of systemic events that might occur (Fig. 1).Footnote 4 Narrow systemic events impact a single (or a few) financial institutions leading to adverse effects on other banks. Broad systemic events occur when simultaneous adverse or systematic shocks impact a large number of financial institutions. Broad systemic events are likely to occur when institutions have highly correlated exposures. Strong systemic events result in the failure of financial institutions that were fundamentally solvent prior to the event. Conversely, weak systemic events do not result in failures. Contagion occurs when a strong and narrow systemic event occurs, and systemic risk (in either a broad or narrow sense) is defined as the risk of experiencing a strong systemic event. Thus, under De Bandt’s taxonomy, systemic risk measurement is an attempt to quantify the occurrence of contagion or a widespread macroeconomic shock resulting in the failure of a number of financial institutions.
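The two dimensions of this taxonomy can be summarized as a small lookup. The sketch below is only an illustrative reading of the paragraph above; the function name `classify_event` and the label strings are hypothetical and do not come from De Bandt et al. (2010).

```python
# Illustrative encoding of the narrow/broad and weak/strong dimensions
# described above. All names and labels are hypothetical.

def classify_event(scope: str, strength: str) -> dict:
    """Classify a systemic event by scope ('narrow' or 'broad')
    and strength ('weak' or 'strong')."""
    if scope not in ("narrow", "broad"):
        raise ValueError("scope must be 'narrow' or 'broad'")
    if strength not in ("weak", "strong"):
        raise ValueError("strength must be 'weak' or 'strong'")
    strong = strength == "strong"
    return {
        # Strong events cause failures of previously solvent institutions.
        "causes_failures": strong,
        # Contagion is a strong event that starts from a narrow shock.
        "is_contagion": strong and scope == "narrow",
        # Systemic risk is the risk of a strong event, broad or narrow.
        "counts_toward_systemic_risk": strong,
    }

narrow_strong = classify_event("narrow", "strong")
broad_strong = classify_event("broad", "strong")
narrow_weak = classify_event("narrow", "weak")
```

Under this reading, only the narrow and strong combination is labeled contagion, while both strong combinations count toward systemic risk.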

De Bandt also extends this taxonomy to include horizontal systemic events as those that occur only in the financial sector without spreading to the general economy, and vertical events as those with spillovers to real activity. While a great deal of systemic risk research reviewed here focuses on the financial sector alone (and therefore on horizontal events), the more interesting events are arguably vertical events in which the financial sector has negative impacts on the broader economy. Hence, any model of vertical events must incorporate a financial system that serves a role in helping the real economy function. Recent work has shown that increases in credit and more lenient lending standards are associated with increases in output growth (Cappiello et al. 2010; Bernanke and Gertler 2010; Kashyap and Stein 2000; Bernanke et al. 1999). A weakness in De Bandt’s taxonomy is that systemic events generally must be caused by an exogenous (and often large) shock to an individual institution or group of institutions. Borio and Drehmann (2009) note that a taxonomy like De Bandt’s rules out the possibility that the financial system is actually the source of the shock and resulting contagion.

Borio and Drehmann (2009) provide three categories of analytical frameworks used to model financial distress: self-fulfilling v. fundamental; the result of endogenous financial cycles or exogenous negative shocks amplified by the system; and how broad versus narrow shocks can impact the system through spillovers. They also note that a commonality in these analytical frameworks is how “aggregate risk is endogenous to the collective behavior” of individuals, and that this endogenous risk is destabilizing to the overall system. This endogeneity is particularly relevant to network and agent-based models of the financial system. Endogenous risk can be described as the “conjunction of (i) individuals reacting to their environment and (ii) where the individual actions affect their environment” (Daníelsson and Shin 2003). Endogenous risk is present wherever actions of market participants influence the movements, correlations, and payoffs of assets, unlike systems where agents act independently and cannot affect the ultimate course of events.

Three episodes have been described as the result of endogenous risk: the 1987 stock market crash; the LTCM crisis; and the dollar/yen collapse in October 1998 (Daníelsson and Shin 2003). In each of these events the structure of the system contributed to vicious feedback loops of actions and outcomes that ultimately culminated in a systemic event. A portion of the systemic risk that was realized in each situation is inherently due to the rapid changes in prices that firms pay for assets (Zigrand 2010). Furthermore, the size and scope of the networks evolve in each event as some participants enter while others exit.

Evolution fits into modeling of financial markets in a couple of key ways. General equilibrium models with static networks and behavioral assumptions typically speak in terms of transition dynamics, moving from one steady state to another. In network modeling, static behavior is still often assumed for agents, but the modeler can account for evolution of network connections and how shocks are transmitted. Agent-based models might loosely be considered those that incorporate both evolving networks and behavior. There is a tradeoff in the shift towards more realistic modeling of agent and network behavior in the sense that calibration and validation of these models is exponentially more difficult.Footnote 5

The role of evolution in financial system modeling is treated differently when progressing from the general equilibrium approach to agent-based modeling. In general equilibrium models like Shleifer and Vishny (2011), evolution is often simplified as being either network or pecuniary externalities resulting from exogenous shocks. Network and pecuniary externalities are both important components of endogenous risk, and can be modeled directly. A network externality would exist if market participants failed to internalize the benefits or losses from changes in the size of the network, and a pecuniary externality would exist when price changes are not compensated by those entering (or leaving) a market (Liebowitz and Margolis 1994). From a modeling perspective, ignoring these externalities and focusing on equilibrium effects (as in Shleifer and Vishny 2010) may gloss over potentially useful regulatory solutions to crises. The risk of endogenous events manifests when panic spreads and key firms cease trading, followed by subsequent declines in both the size of the system and prices (Fig. 1c–f). Sections 3.2 and 3.3 address network research exemplifying the importance of understanding evolving topology and asset prices.Footnote 6

From a network-modeling perspective, endogenous risk is closely related to both the topology of the network and behavior of agents. Adaptations of network topology, agent behaviors, and regulations on capital or liquidity requirements can result in a fundamentally weaker structure depending on the importance of the individual firms (Madhavan 2012; Daníelsson and Zigrand 2012; Daníelsson et al. 2013). Therefore, an inherently weaker financial system might collapse from a small shock that has contagious effects on behavior. These behavioral changes can occur on either side of the balance sheet by broadly affecting asset values or through bank runs—wholesale or retail.

The risk of contagion is very specific to both the markets being considered and the timing of exogenous events that might stress a system (Degryse and Nguyen 2007; Roukny et al. 2013; Hasman 2013). At this time there is little research or understanding of the linkages and synergies of multiple layered (or multiplex) markets.Footnote 7 Thurner and Poledna (2013) stress that interbank markets are important, but that collateral and credit derivative markets also play an important role in the shock amplification facilitated by financial networks. The lack of timely detailed information about balance sheet exposures in the variety of networks considered here is a common critical theme in the literature.

Upper (2011) provides a survey of the simulation literature and the different channels through which contagion in financial networks might operate. He offers a mostly skeptical view on the usefulness of research on simulating contagion, which has generally focused on how shocks to one financial institution can, like a domino effect, either directly or indirectly impact other institutions. Generally, the risk of a simulated targeted shock causing contagion is found to be low, but if it does occur the losses can be severe and widespread.Footnote 8 However, the typical methods reviewed within the literature to determine this are very stylized, lack important theoretical foundations, and might be generally too narrow in scope to be useful for future indicators. The main positive contribution in the literature reviewed there is that network modeling can help detect the systemically important nodes in a network, and has kept some emphasis on the potential issue of contagion (Upper 2011).Footnote 9

Network-based research has identified the importance of the robust-yet-fragile nature of financial networks. This has been seen in many studies where more highly connected networks have a low probability of a contagious event, due to risk sharing. Yet, when a contagious event occurs the outcome will be more damaging and widespread due to the same connectivity that helps prevent some events (Chinazzi and Fagiolo 2013). A spontaneous unraveling due to financial imbalances might be more likely to occur in one state versus another, taking an otherwise harmless event and amplifying it into an “endogenous extreme event” (European Central Bank 2010b; Thurner 2011). Papademos (2009) highlights the importance of structural shifts stating, “systemic risk is partly endogenous as it depends on the collective behaviour of financial institutions and their inter-connectedness, as well as the interaction between financial markets and the macroeconomy.” While some regulators have taken a closer look at endogenous risk, little attention is actually paid to it via the most common systemic risk measures put in place since the crisis. Improved network maps are necessary in order to gain a better understanding of the resiliency of financial markets, but a general lack of data and transparency has made this difficult.

While data are often insufficient, there has been no shortage of attempts at quantifying systemic risk. Bisias et al. (2012) provide a list and code for 31 such measures, many of which assume that “systemic risk arises endogenously within the system.” They provide a taxonomy of risk measures by data requirements with a separate category for network measures with granular foundations.Footnote 10 While this would seem to place network modeling squarely in the center of risk measurement, the network modeling research cited by Bisias et al. (2012) is mostly limited in scope and often relies on aggregate data. One of the aims here is to highlight the foundation of network research to those examining systemic risk, and to look at the linkages with other approaches.

3 Networks and systemic risk

Recent empirical research has helped map various financial network structures with the limited data that are available. Unfortunately, the lack of data also implies the true nature of the process of financial network formation and evolution will remain a mystery for some time. Generally, there are insufficient data on the dynamic linkages across firm balance sheets, which has led to a continued reliance on theoretical and simulation methods.Footnote 11 Empirical and general equilibrium network models are also able to offer insights on relationships and laws governing networks, but generally understate the role of deeper fundamental issues including: complexity; bounded rationality; and herd behavior.Footnote 12 Even where data are more complete, as is the case in the interbank lending market, any individual asset being studied is only a minor contributor to overall systemic risk. Strategies taken by firms may begin as broadly heterogeneous in nature, but competitive pressures may lead to herding and a more homogeneous or fragile state. Each of the following facets of this literature is reviewed below in the context of understanding systemic risk: theoretical foundations; network solving algorithms; firm and asset heterogeneity; empirical research; and simulated networks and dynamics.Footnote 13

3.1 Theoretical foundations of systemic risk

Financial market regulations have a history of being developed from theoretical research which found increased diversification and interconnectedness lowered systemic risk. A number of network studies reviewed here demonstrate that these early models ignored certain potential downsides of a more complex system. Policy trends supporting increased diversification (e.g., the 1999 Financial Modernization Act, i.e., the Gramm-Leach-Bliley Act) and interconnection (e.g., the Commodity Futures Modernization Act of 2000) were based on the notion that markets operate most efficiently in the presence of minimal regulatory interference.

Seminal theoretical work by Allen and Gale (2000) showed that more complete markets with increased diversification and interconnection are always a positive thing.Footnote 14 Since the publication of Allen and Gale (2000), numerous examples of contrary evidence have been published—and are outlined below. However, political debates and public discourse have been slow to dislodge from this narrative.

Financial networks have become increasingly interlinked through asset derivatives like credit default swaps that are intended to diversify risk throughout a system. While increasing interconnectedness is not necessarily a problem, it is troubling how little is understood regarding systemic risk when network topology rapidly changes and asset liquidity disappears. In markets with completely isolated firms holding correlated risks, the only potential systemic risk would be a broad shock impacting all agents separately. As the network becomes connected, the previously created risk is still held, but is now diversified. It is the act of diversification itself along with the assumption of liquid markets which introduces the potential for contagion.

The general equilibrium approach to financial market contagion suggested policymakers deregulate the industry while encouraging widespread risk sharing. Allen and Gale (2000) provide the seminal starting point for studying a general equilibrium approach to financial market contagion and systemic risk. Based on needs for liquidity in a theoretical interbank network, Allen and Gale (2000) find that more complete markets are more stable. Bank relationships are an important factor in determining market fragility, but highly correlated liquidity shocks are associated with an increasingly fragile financial system. Freixas et al. (2000) similarly found that more tightly linked interbank markets help individual institutions avoid the problem of asset illiquidity. While these might be interesting theoretical solutions to general equilibrium network models, the implications of these findings were far reaching. The assumption of a complete network with full risk sharing is not valid in the real world, and subsequent research has shown that small shocks can in fact lead to large contagious events (Allen and Gale 2004).

Three theoretical models stand out in their unique extensions to the early work by Allen and Gale (2000) in that fragile but highly connected networks are considered ex-ante optimal. Leitner (2005) expands the Allen and Gale (2000) model by allowing linked banks to coordinate for a bailout after the threat of contagion has arisen. Leitner (2005) shows that “private sector bailouts may be a feature of an optimal-risk sharing structure.” The implication is that banks willingly accept the risk of contagion, in part because an existing coordination mechanism provides firms a form of insurance which cannot be contracted prior to the contagious event. However, if the shock to the system is large enough, the private sector bailout may be insufficient and the entire system may collapse as a result. Brusco and Castiglionesi (2007) take a different approach to extending the Allen and Gale (2000) model to include a gambling asset which allows a role for moral hazard. Depositors willingly accept increased risk since long-term investment and welfare rises as a result of the liquidity coinsurance of interbank deposits. The tradeoff is that the risk of contagion endogenously and rationally develops as bank linkages increase (Brusco and Castiglionesi 2007). Thus, there is a welfare tradeoff present in requiring banks to hold more capital since investment and growth would subsequently decline. Castiglionesi and Navarro (2008) use a model similar to Brusco and Castiglionesi (2007), and find ex-ante optimal networks like those produced in Leitner (2005). A key difference between Castiglionesi and Navarro (2008) and Leitner (2005) is that the coordination mechanism—which was key to the earlier work—does not need to exist to attain a similar optimally fragile financial network. The ultimate network structure of Castiglionesi and Navarro (2008) is tiered (i.e., core-periphery or money-center), but increasingly connected as the gambling asset becomes less risky (Table 2).

Subsequent work by Ana Babus extends the earlier work of Allen and Gale (2000) to incorporate endogenous network formation (Babus 2007) and bank choice of the degree of linkage (Babus 2005). Babus (2005) shows that banks exhibiting loss averse behavior under a given network structure yield suboptimal results when networks are incomplete. In complete networks, “banks choose a degree of interdependence such that contagion risk is at a minimum” (Babus 2005). Babus (2007) allows banks to endogenously create a network as banks form links to insure against contagion. Generally, Babus (2007) finds that the networks that are formed through strategic action to insure against liquidity risk exhibit endogenously determined probabilities of contagion. The efficient networks that form as a result of these interactions are found to be very resilient to contagion.

More expansive views of the Allen and Gale (2000) model find that a highly interconnected and diversified system may in fact be more fragile (Battiston et al. 2012b; Brusco and Castiglionesi 2007; Stiglitz 2010; Blume et al. 2011; Dasgupta 2004; Amini et al. 2010; Acemoglu 2012; Iori et al. 2006; Grilli et al. 2015; Tedeschi et al. 2012). If increased diversification and connectivity can result in instability, policies discouraging the spreading of risk may be required. Battiston et al. (2012b) use a financial accelerator model along with interdependence to show that increases in interconnectivity do not monotonically decrease risk.Footnote 15 Financial accelerators were first introduced into the agent-based network model of Delli Gatti et al. (2010), expanded in work by Riccetti et al. (2013), and empirically calibrated by Bargigli et al. (2014). Delli Gatti et al. (2010) raised the issue that accelerators could be at the root of contagion, suggesting topology was closely related. Expansions by Riccetti et al. (2013), Bargigli et al. (2014), and Tedeschi et al. (2012) incorporate a leverage accelerator alongside a network-based financial accelerator, yielding pro-cyclical credit. The leverage accelerator introduced by Riccetti et al. (2013) describes firms which raise their leverage ratios during good times, and reduce them during a downturn. The key issue raised by Battiston et al. (2012a, b) is that contagion remains a potentially important issue where the benefits of diversification can be overwhelmed. Battiston et al. (2012a) develop a similar model of default cascades, but show that diversification can be detrimental from within the contagion itself. In comparison to Battiston et al. (2012b), where the accelerator works outside the cascade, the contagion in Battiston et al. (2012a) occurs because the number of defaulting agents has an effect on the probability another party will default. Thus, default cascades can develop because counterparty risk combines with fire-sale losses, which amplify the direct loss of default (Battiston et al. 2012a; Cifuentes et al. 2005).Footnote 16 Once fire-sales take hold, systemic risk rises as credit risk interacts with market risk and counterparty risk (Cifuentes et al. 2005). The accelerator processes described here are one way of accounting for the augmentation of shocks that might otherwise have limited impact.

Network theory can help identify the thresholds of connectivity where contagious effects might develop and become meaningful (Amini et al. 2010, 2011). Regulators might only need to understand contagious links rather than entire network structures when using incomplete financial market network maps (Amini et al. 2011). Derivatives, such as credit default swaps (CDSs) and repo holdings are only a couple examples of contagious links that were not well understood before the crisis yet proved to be how many firms were interconnected. If theoretical thresholds existed to measure network safety, contagious links could be monitored by regulators.
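The idea of monitoring contagious links can be illustrated with a small sketch. It assumes, as a simplification in the spirit of Amini et al. (2011) rather than their exact formulation, that a link is contagious when a single exposure exceeds the creditor's capital buffer, and that a bank fails once its cumulative losses on failed counterparties exceed its capital. The function names, the zero recovery rate, and all numbers are hypothetical.

```python
# Illustrative sketch: flag "contagious" links and trace a default cascade.
# exposure[i][j] is the amount bank i is owed by bank j (hypothetical data).

def contagious_links(exposure, capital):
    """Return (creditor, debtor) pairs where one exposure alone
    exceeds the creditor's capital buffer."""
    return [
        (i, j)
        for i, row in enumerate(exposure)
        for j, amount in enumerate(row)
        if i != j and amount > capital[i]
    ]

def default_cascade(exposure, capital, initially_failed, recovery_rate=0.0):
    """Iterate until no further bank's losses exceed its capital."""
    failed = set(initially_failed)
    while True:
        newly_failed = set()
        for i in range(len(capital)):
            if i in failed:
                continue
            # Loss on claims against every counterparty that has failed so far.
            loss = sum(exposure[i][j] * (1.0 - recovery_rate) for j in failed)
            if loss > capital[i]:
                newly_failed.add(i)
        if not newly_failed:
            return failed
        failed |= newly_failed

# Four hypothetical banks: 1 lends to 0, 2 lends to 1, 3 lends to 2.
exposure = [
    [0.0, 0.0, 0.0, 0.0],
    [5.0, 0.0, 0.0, 0.0],
    [0.0, 4.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]
capital = [2.0, 3.0, 3.0, 4.0]

links = contagious_links(exposure, capital)
failed = default_cascade(exposure, capital, initially_failed={0})
```

In this example the cascade started by bank 0 travels along the two flagged links and stops at bank 3, whose exposure is small relative to its capital.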

Both theory and empirical evidence have provided conflicting guidance with regard to the proper level of capital or liquidity requirements (Allen and Carletti 2013). Increased capital requirements on banks might lead monitored financial institutions to transfer credit risk to unmonitored insurers, increasing systemic risk (Allen and Gale 2006). Thus, rather than increase regulation and capital requirements, Allen and Gale (2006) suggest the opposite in order to bring credit risk back from the shadows, lowering overall systemic risk. Cifuentes et al. (2005) also show that capital requirements in combination with mark-to-market accounting can exacerbate systemic risk, amplifying an otherwise minor shock. Cifuentes et al. (2005) suggest raising liquidity standards, but other studies have shown that regulatory policies like higher reserve requirements could lead to reductions in output growth (Tedeschi et al. 2012).

Policy exercises by Gai et al. (2011) show that tougher and time-varying liquidity requirements reduce the risk of contagion in a theoretical network model. Gai et al. (2011) test randomly constructed versus concentrated (i.e., hub) networks for resilience in markets with liquidity hoarding due to haircut or margin shocks. Certain network structures prove to be beyond tipping points where liquidity hoarding spreads across the entire network. Concentrated and more highly connected networks are more likely to face contagious effects, and shocks targeted at major participants are also likely to spread widely. Additionally, greater transparency is found to help prevent liquidity hoarding by reducing the size of haircuts in the system. However, the objections of Allen and Gale (2006) that credit risk will move to an unregulated sector or country are still relevant as long as there is someplace where firms can operate without being heavily regulated. It is therefore important to further examine where the suggested tipping points occur with respect to certain regulations like liquidity and capital requirements.

3.1.1 Algorithms and network contagion

Numerous studies searching for the causes of financial network crises have found contagion due to narrow shocks to be of second-order importance relative to broad correlated shocks (Upper 2011; Elsinger et al. 2006a, b; Pokutta et al. 2011; Giesecke and Weber 2004; Aikman et al. 2009; Drehmann 2009; Eisenberg and Noe 2001). However, it is still unresolved whether contagion and counterparty risk from narrow shocks can be dismissed as a potential problem by regulators. Network research on contagion and systemic risk has been simplified using algorithms to estimate the scope of knock-on effects from a single default. These algorithms help put dollar values on potential losses of contagion during systemic events, while testing for varying levels of capital and liquidity requirements. Unfortunately, the algorithms used to conclude contagion is a second-order risk often underestimate the magnitude of the problem.

The Eisenberg and Noe (2001) algorithm (EN algorithm) is a commonly used method of measuring the risk of contagion.Footnote 17 The EN algorithm can be employed to estimate stress in a financial system by using snapshots of interbank positions and available exposure information (Aikman et al. 2009; Boss et al. 2004; Battiston et al. 2012b; Gai and Kapadia 2010). Using a matrix of exposures to “clear the network following the default of one or more institutions,” the EN algorithm is intended to help determine how a contagious default spreads, as well as estimate resulting counterparty credit losses (Aikman et al. 2009).
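A minimal sketch of the clearing computation may help fix ideas. It assumes the standard textbook statement of the clearing problem: each bank pays the smaller of what it owes and what it has, where what it has is its outside assets plus payments received, and payments are split pro rata across creditors. The fixed-point iteration below starts from full payment and is one simple way to compute such a vector; it is not necessarily the procedure used in the studies cited above, and the function name and data are hypothetical.

```python
# Illustrative sketch of a clearing payment vector by fixed-point iteration.
# liabilities[i][j] is the nominal amount bank i owes bank j (hypothetical).

def clearing_vector(liabilities, external_assets, tol=1e-12, max_iter=10_000):
    n = len(liabilities)
    # Total nominal obligations of each bank.
    p_bar = [sum(row) for row in liabilities]
    # Share of bank i's payments that goes to bank j (pro rata rule).
    share = [
        [liabilities[i][j] / p_bar[i] if p_bar[i] > 0 else 0.0 for j in range(n)]
        for i in range(n)
    ]
    p = p_bar[:]  # start from the assumption that everyone pays in full
    for _ in range(max_iter):
        new_p = []
        for i in range(n):
            inflow = sum(share[j][i] * p[j] for j in range(n))
            # Limited liability: pay what is owed or what is available.
            new_p.append(min(p_bar[i], max(0.0, external_assets[i] + inflow)))
        if max(abs(a - b) for a, b in zip(p, new_p)) < tol:
            return new_p, p_bar
        p = new_p
    return p, p_bar

# Three hypothetical banks in a chain: 0 owes 1, and 1 owes 2.
liabilities = [
    [0.0, 10.0, 0.0],
    [0.0, 0.0, 8.0],
    [0.0, 0.0, 0.0],
]
external_assets = [4.0, 1.0, 5.0]

payments, obligations = clearing_vector(liabilities, external_assets)
defaulted = [i for i in range(3) if payments[i] < obligations[i] - 1e-9]
```

Here bank 0 can pay only 4 of the 10 it owes, which leaves bank 1 with 5 against obligations of 8. Bank 1 would have been solvent had bank 0 paid in full, so its default is purely a knock-on effect.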

While the EN algorithm explicitly accounts for the risk of contagion, the costs are potentially underestimated in three main ways. First, the algorithm is simply a method of determining a clearing vector in a network with a central counterparty. In markets without a central counterparty to clear transactions, systemic risk must be higher since firms are necessarily more illiquid (Table 3). Also, the EN algorithm assumes there is no delay between an initial bankruptcy and the final determination of payments. In fact, delays in bankruptcy proceedings can increase uncertainty and lead to further insolvency through feedback mechanisms without any further shocks to the system. Finally, it is unclear if the correlation of defaults is considered by those using the algorithm, since the original paper only considers stochastic and not strategic firm behavior.

Modified versions of the EN algorithm provide evidence that uncertainty in bankruptcy costs (Elsinger et al. 2006a), or accounting for fire-sales (Hałaj and Kok 2013, 2015) (i.e., the leverage accelerator), can lead to higher costs of contagion. Furthermore, Cont et al. (2013) show that the algorithms used by Elsinger et al. (2006a) still drastically understate the risk of contagion and systemic risk by averaging the impact of an individual failure across all firms (i.e., the maximum entropy approach). Evidence from the Brazilian banking system shows contagion risk is increased at larger and more interconnected institutions (Cont et al. 2013).Footnote 18 As market clearing algorithms have become more realistic, estimated costs of contagion have risen, which implies that previous studies largely understated those risks. Regulatory changes that could help clear a system following a bankruptcy would be those allowing for a faster resolution of insolvent financial institutions. Less uncertainty in bankruptcy would give more credence to commonly used network clearing algorithms and their estimates of systemic risk. In the absence of efficient bankruptcy laws, regulators and researchers should be cautious about ignoring the potentially extraordinary costs of contagion.

One of the more significant new methods of estimating contagion and systemic risk has been offered in a series of studies that could be categorized as the DebtRank approach pioneered by Battiston et al. (2012c). The DebtRank (DR) approach is a measure similar to feedback centrality, which recursively accounts for distress in one or more institutions. A key difference from the EN algorithm (Eisenberg and Noe 2001), Contagion Index (Cont et al. 2013), and default cascade (Battiston et al. 2012a) approaches is that the DR measure can simulate distress propagation without observing a failing institution. DebtRank is a “measure of the total economic value in the network that is potentially affected by a node” (Battiston et al. 2012c). Using panel data of the Federal Reserve’s discount lending program from 2007 to 2010, in combination with data on equity relationships of the same institutions, the DR measure can track the centrality ranking of a firm as it adapts over time. At the peak of the crisis in 2008, Battiston et al. (2012c) find that most firms were very fragile, and also had a high DR, implying they were more likely to fail and that those failures were more likely to spread. Importantly, Battiston et al. (2012c) find that size is not the sole dominant force driving systemic risk: firms which held less than 10% of assets were able to impact an estimated 70% of the network.

It should be noted that DebtRank suffers from the same shortcomings in data as other measures, and relies on proxies for firm relationships. Thurner and Poledna (2013) propose requiring transparency of individual institutions’ DR measures as a way of remedying the systemic risk endogenously created by firms. Thurner and Poledna (2013) note, “opacity in financial networks rules out the possibility of rational risk assessment, and consequently, transparency, i.e., access to system-wide information as a necessary condition for any systemic risk management.” By making a bank’s DR public, and punishing those who borrow from risky lenders, systemic risk becomes far less significant. Another shortcoming of the original DR measure is that banks can only propagate a shock the first time it is received, potentially underestimating the risk of contagion (Bardoscia et al. 2015). Bardoscia et al. (2015) alter the original DR algorithm, use it to test a network of European banks from 2008 to 2013, and estimate that the network can amplify exogenous shocks by a factor of between three (normal phase) and six (crisis phase). The network endogenously adjusts over time, and its amplification of shocks differs depending on network and individual firm fragility.
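
The recursive, propagate-once logic described above can be sketched as follows. This is a simplified illustration rather than the published algorithm: the impact matrix, the value weights, and the function name `debtrank` are assumptions introduced here, and each node passes on its distress only in the round after it first becomes distressed.

```python
import numpy as np

def debtrank(impact, value, shocked, psi=1.0, max_rounds=100):
    """Simplified DebtRank-style measure (illustrative sketch).

    impact[i, j]: assumed fraction of j's equity lost if i is fully distressed.
    value[j]: assumed share of total economic value held by node j.
    shocked: indices of the initially distressed nodes; psi is their distress.
    """
    W = np.asarray(impact, dtype=float)
    v = np.asarray(value, dtype=float)
    n = len(v)
    h = np.zeros(n)                    # distress level of each node in [0, 1]
    h[list(shocked)] = psi
    h_initial = h.copy()
    state = np.zeros(n, dtype=int)     # 0 undistressed, 1 distressed, 2 inactive
    state[list(shocked)] = 1
    for _ in range(max_rounds):
        active = state == 1
        if not active.any():
            break
        # Distress received by every node from currently distressed nodes.
        pressure = W[active].T @ h[active]
        h_new = np.minimum(1.0, h + pressure)
        new_state = state.copy()
        new_state[active] = 2          # a node propagates only once
        new_state[(state == 0) & (h_new > 0)] = 1
        h, state = h_new, new_state
    # Value affected by the shock, net of the initially shocked value.
    return float(v @ h - v @ h_initial)

# Hypothetical chain: node 0 impacts node 1, which impacts node 2.
impact = np.array([[0.0, 0.5, 0.0],
                   [0.0, 0.0, 0.4],
                   [0.0, 0.0, 0.0]])
value = [0.2, 0.3, 0.5]
dr_node0 = debtrank(impact, value, shocked=[0])
print(dr_node0)
```

Because a node becomes inactive after one round of propagation, any distress it receives later is recorded but never passed on, which is the feature Bardoscia et al. (2015) identify as a source of underestimation.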

3.1.2 Firm and asset heterogeneity

A theoretical understanding of systemic risk sources demands a deeper knowledge of the systems that have proven robust over time to large systemic shocks (May et al. 2008). Less diverse systems, especially when highly interconnected, have been found to face an increased risk of systemic events. Haldane (2009a) posits that the financial system had become too homogenized. Firms copied others’ behavior, reducing diversity while becoming more interconnected. Haldane and May (2011) suggest using three macroprudential tools to help reduce homogenization and shape the topology of financial networks: setting stronger regulatory capital and liquidity ratios; creating systemic regulatory requirements; and creating a centralized system for netting and clearing of derivatives.Footnote 19 Reversing the trend of homogenization through policies such as the Volcker rule could help separate financial risks within institutions, increase modularity, and reduce systemic risk (Haldane and May 2011).

The assessment that homogenization should be reversed is not universally agreed upon, however, since the wrong type of compartmentalization could limit the policy’s effectiveness, increase local risk, and lead to negative feedback loops raising overall risk (May et al. 2008). Furthermore, while there appears to be a declining trend in the diversity of firm activities, new loans were becoming increasingly heterogeneous and riskier. The level of compartmentalization may be critical to reducing systemic risk, but both diverse and homogeneous networks are susceptible to greater systemic risk as long as there is little to no transparency at the level of asset origination. Following the Gramm-Leach-Bliley Act of 1999, financial institutions were able to diversify and de-compartmentalize their activities. Hedge funds acted more like banks while banks took on more hedge-fund-like risk. Additionally, tax preferences for debt over equity contributed to the long-term trend of increased debt reliance by banks, and the financial system tilted towards increased leverage and risk-taking activity (Allen and Carletti 2013). At the same time, the overall level of underlying risk was increasing in such a way that strict compartmentalization might only have pushed more risk taking into a more lightly regulated sector (Allen and Gale 2006). Unless compartmentalization prevented the real estate bubble from inflating in the first place, it is hard to see how a broad systemic event could have been stopped by walling off risks. Risky sub-prime assets were held by most large financial institutions, and their collapse turned out to be highly correlated.

Johnson (2011) further criticizes the Haldane and May (2011) model for the fact that “tiny changes in the model’s assumptions...can inadvertently invert the emergent dynamics.” Since agents can change how they interact, simplistic assumptions about behavior are prone to produce erroneous conclusions and possibly bad policy. Johnson (2011) also argues that simulated models need to consistently use quantitative estimates for parameters rather than the ad hoc measures often employed. Upper (2011) provides a more detailed criticism of the toy model problem raised by Johnson (2011), explaining that failing to provide solid behavioral foundations and assuming rudimentary behavior leads to simulations with inaccurate or misleading results. In most simulations analyzed by Upper (2011), banks are caught off guard by the failure of a linked financial institution, creating losses that can cascade through a network.Footnote 20 In reality, however, agents act strategically and may herd, as some institutions are able to observe the deterioration of a failing institution beforehand and attempt to unwind or hedge their relationship before a failure occurs. Studies of herding behavior in financial networks will require an agent-based approach with microfoundations and strategic behavior at the firm level. Recent work in progress by Bluhm et al. (2013) provides a model with microfoundations for bank behavior and endogenous network formation.Footnote 21 Using the EN algorithm to simulate cascades, Bluhm et al. (2013) show prudential regulations offer tradeoffs between provision of credit and systemic safety. The individually optimal unwinding of relationships can ultimately contribute to the failure of an institution, as was the case with Lehman Brothers. Most network-based models would have failed to predict the collapse of Lehman Brothers, and also would have provided little help in understanding the potential knock-on effects of its failure (Upper 2011). 
Government intervention limited the immediate damage due to the failure of Lehman Brothers, and future models should focus on giving the government a role in potentially intervening in market failures.

3.2 Empirical network research

Where data are available, empirical studies have shown that networks like interbank markets and payment systems exhibit scale-free topology, such that the largest firms (i.e., hubs) not only capture a large share of all connections in financial markets, but also carry the vast majority of transactions.Footnote 22 Empirical network research can help determine which network fundamentals produce more robust financial frameworks (May et al. 2008). In scale-free distributed networks, hubs are generally found to be the most likely sources of systemic risk, where shocks have the potential to lead to contagious defaults. Randomly distributed networks, where traffic is not dominated by a few firms, do not suffer the same problems of contagion as scale-free networks. Random networks must experience shocks exceeding a certain threshold in order to bring down a large part of a network. The critical nature of network dynamics comes from collective effects where relatively simple behavioral components lead to emergent phenomena (Bouchaud 2009). In general it is likely true that robustness in real world networks is an emergent feature which cannot be engineered through top-down risk controls (Kambhu et al. 2007). Empirical studies have been able to uncover some real world network features which have proven relatively robust to certain external shocks.

Soramäki et al. (2007) employ Fedwire data to examine 62 daily networks of interbank payment flows in the US around September 11th, 2001, and find that the number of nodes (firms) and links in the network fell significantly around that date but quickly recovered. A unique feature of Fedwire is that the entire network can be mapped on a given day, such that all types of systemic risk addressed here can be understood. The US interbank market is stable, susceptible to targeted shocks on hubs, and exhibits significant topological shifts when confronted with broad non-financial shocks (Soramäki et al. 2007).Footnote 23

The varying topologies of financial markets suggest that different regulatory requirements are necessary for different markets. As previously mentioned, preventing systemic crises may require regulators to steer financial network topology towards stability by adjusting capital and liquidity requirements, limiting size (i.e., mergers and acquisitions), or walling off risks. The natural variation inherent in international financial markets implies regulators might want to avoid applying one-size-fits-all rules to different networks. International evidence reveals that interbank market topologies are significantly different, with each requiring unique regulations. Interbank markets in Austria, Germany, Sweden, Italy, Belgium, and Japan are similar in some ways to the US (Boss et al. 2004; Craig and Peter 2014; Blåvarg and Nimander 2002; Mistrulli 2011; Inaoka et al. 2004). The Austrian interbank topology is approximately scale-free and similar to the US (Boss et al. 2004). Simulations by Boss et al. (2004) show that a fully integrated Austrian interbank market would actually be less stable than the topology seen at the time. Boss et al. (2004) suggest that the reason they see a divergence from the theories of Allen and Gale (2000) is the scale-free nature of the networks. Craig and Peter (2014) and Blåvarg and Nimander (2002) both display a tiered structure in the German and Swedish interbank markets respectively, where money-center banks dominate an otherwise sparse network. Bank balance sheets correlate with purposeful behavior, and the topology is at least partially determined by these decisions (Craig and Peter 2014). The most widely studied market is Italy’s e-MID interbank network, which has a heterogeneous microstructure that is not scale-free in terms of the size of firms, but does exhibit a heavier tail than seen in a random network (Iori et al. 2008). 
The e-MID market also has a clustering coefficient lower than a random network, implying intermediaries in Italy are not necessary to create interbank loans and raising the possibility of widespread preferential lending between banks. Iazzetta and Manna (2009) and Delpini et al. (2013) also examine the e-MID market, and show that a consolidation trend has moved it from a more fully connected network towards a tiered structure with few hubs. The resulting system is more robust to random shocks, but targeted shocks would likely have much larger effects in a tiered network. Since tiered networks can be caused by mergers and changes in lending practices, it is highly likely that any simple reorganization of asset holdings is not neutral to the overall structure of risk. Degryse and Nguyen (2007) examine the Belgian interbank market, and find it has also shifted to a tiered structure over time through mergers and acquisitions. The Belgian banking system has also become far more reliant upon international linkages, making any management of the system more difficult. Degryse and Nguyen (2007) also find that increased concentration of the Belgian interbank market has reduced the potential risk and impact of contagion. The Japanese financial network is similar to the US in terms of topology, but a careful examination reveals the “structure is a result of the pursuance of ‘efficiency’ rather than ‘stability’” (Inaoka et al. 2004). Other markets in Portugal, the Netherlands, and Switzerland have topologies that are significantly different from the US (Cocco et al. 2009; Squartini et al. 2013; Müller 2006). In the case of the fragmented Portuguese interbank market, bilateral agreements are evidence of cooperation under repeated interaction between banks, which helps determine terms of loans such as interest rates and duration (Cocco et al. 2009). The Dutch interbank system is not characterized by a core-periphery structure (Squartini et al. 2013). 
By contrast, the Swiss banking system is highly centralized, with two very large banks playing key roles and several subnetworks exhibiting different, more homogeneous characteristics (Müller 2006).

Topology plays an important role in contagion through interbank markets, where more complete and homogeneous networks are typically found to be more robust to random shocks than fragmented networks. However, Craig and Peter (2014) note that the commonly observed tiered money-center banking system is not dense as would be predicted by Allen and Gale (2000), Babus (2005) and Leitner (2005) (Table 4). The observed core-periphery structure is also persistent, a fact which “clashes with the view that random shocks are the basis for understanding interbank activity” (Craig and Peter 2014). Mistrulli (2011) proposes another empirical challenge to the diversification-is-good argument of Allen and Gale (2000) and others. Mistrulli (2011) examines the widespread use of maximum entropy methods when data on interbank exposures are incomplete. Essentially, the maximum entropy approach assumes risk exposures are evenly spread across the entire market (i.e., complete markets).Footnote 24 In the e-MID market, Mistrulli (2011) finds that the maximum entropy approach under a narrow shock would provide a questionable measure of the severity of contagion, since the assumption is that all institutions are impacted, but by a very small amount. The alternative approach of Anand et al. (2015) is to look at the minimum density (MD) to determine how a network might be distributed in a cost-optimizing way. Degryse and Nguyen (2007) also examine an alternative to the maximum entropy approach by endogenizing the losses in the event of default. In a similar spirit to the financial and leverage accelerators, losses are amplified under certain weaker topologies (Degryse and Nguyen 2007). The maximum entropy approach likely overstates the spread of an individual shock in terms of the number of firms impacted, but understates the impact that the shock would have on a real-world counterparty. 
Generally, this research finds that complete markets are not universally more resilient to contagion, but may in fact be worse in some circumstances (Mistrulli 2011).
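
The way a maximum entropy estimate spreads exposures across all counterparties can be illustrated with a short sketch. It assumes only each bank's total interbank assets and liabilities are known and fills in the bilateral matrix by iterative proportional fitting with a zero diagonal, which is one common way of computing such estimates and not necessarily the procedure used in the studies cited above. The function name and the three-bank totals are hypothetical.

```python
import numpy as np

def max_entropy_exposures(assets, liabilities, n_iter=1000):
    """Spread interbank exposures as evenly as the totals allow (sketch).

    assets[i]: total interbank lending of bank i (row sums, assumed positive).
    liabilities[j]: total interbank borrowing of bank j (column sums).
    The two totals are assumed to add up to the same amount.
    """
    a = np.asarray(assets, dtype=float)
    l = np.asarray(liabilities, dtype=float)
    X = np.outer(a, l)          # independent, fully spread starting guess
    np.fill_diagonal(X, 0.0)    # banks do not lend to themselves
    for _ in range(n_iter):
        X *= (a / X.sum(axis=1))[:, None]   # rescale rows to match assets
        X *= (l / X.sum(axis=0))[None, :]   # rescale columns to match liabilities
    return X

# Hypothetical totals for three banks.
total_assets = [10.0, 20.0, 30.0]
total_liabilities = [30.0, 20.0, 10.0]
X = max_entropy_exposures(total_assets, total_liabilities)
print(np.round(X, 2))
```

Every off-diagonal entry of the estimated matrix is strictly positive, so the estimate treats the market as complete even if the true network is sparse or tiered. A default is therefore shared thinly among all counterparties instead of landing heavily on a few.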

3.3 Simulated networks and dynamics

While data exist for the payments and interbank systems, these are not the only relevant networks for understanding systemic risk and contagion (May et al. 2008). To improve the understanding of market dynamics where complete maps of markets are lacking, researchers can experiment on simulated topologies. Given that many systemic risk issues are related to balance sheets which are only periodically available, simulation is needed to assess asset-pricing bubbles and credit crises (May et al. 2008).

Nier et al. (2007) use simulated network models to show that increased connectivity does not monotonically decrease systemic risk. A negative relationship between contagion and capital exists, suggesting greater capital requirements might help regulators prevent contagion (Nier et al. 2007). Simulated variations in the structure of financial networks can lead to widely varying levels of systemic risk. An even broader implication is that the size of the shock that hits a system will have varying effects depending on the structure of the network. Other simulation work demonstrates financial networks exhibit a “robust-yet-fragile tendency: while the probability of contagion may be low, the effects can be extremely widespread when problems occur” (Gai and Kapadia 2010).Footnote 25 This also serves as a warning against assuming that previous resiliency is evidence of resiliency in the present, since structures may shift dramatically over time (Gai and Kapadia 2010).Footnote 26 Roukny et al. (2013) take an approach similar to Gai and Kapadia (2010) and Battiston et al. (2012a) and simulate a wide array of network topologies, firm capital ratios, market liquidity, and narrow versus broad shocks. Roukny et al. (2013) show that topology is one key to stability, but that market liquidity is a more important determinant of optimal market architecture. When markets are more illiquid, topology plays a more important role. Gaffeo and Molinari (2015) use a simulation approach to study proposed Basel III rules on liquidity and capital requirements, noting significant tradeoffs for regulators who wish to impose stricter policies. While these studies are unique in their approach, Nier et al. (2007), Gai and Kapadia (2010), Roukny et al. (2013), and Gaffeo and Molinari (2015) are relatively weak in terms of behavioral foundations. 
However, their approach is appealing for approximating systemic risk by generalizing beyond a single network to examine the broader role for regulation and interaction.
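
The negative relationship between capital and contagion reported in this simulation literature can be illustrated with a deliberately stylized cascade. The sketch below is not the model of Nier et al. (2007) or Gai and Kapadia (2010): it assumes zero recovery on loans to defaulted banks, no behavioral response, and a hypothetical four-bank lending chain.

```python
def default_cascade(exposures, capital, initial):
    """Return the set of banks that default after an initial failure (sketch).

    exposures[i][j]: amount bank i has lent to bank j (assumed input).
    capital[i]: loss-absorbing buffer of bank i.
    A bank defaults once its losses on defaulted borrowers exceed its capital;
    recovery on defaulted loans is assumed to be zero.
    """
    defaulted = set(initial)
    changed = True
    while changed:
        changed = False
        for i in range(len(capital)):
            if i in defaulted:
                continue
            loss = sum(exposures[i][j] for j in defaulted)
            if loss > capital[i]:
                defaulted.add(i)
                changed = True
    return defaulted

# Hypothetical chain of loans: bank 1 lends to 0, bank 2 to 1, bank 3 to 2.
loans = [[0, 0, 0, 0],
         [5, 0, 0, 0],
         [0, 5, 0, 0],
         [0, 0, 5, 0]]
thin_buffers = default_cascade(loans, capital=[4, 4, 4, 4], initial=[0])
thick_buffers = default_cascade(loans, capital=[6, 6, 6, 6], initial=[0])
print(sorted(thin_buffers), sorted(thick_buffers))
```

With buffers below the size of each loan the failure of bank 0 brings down the whole chain, while slightly larger buffers contain it at the first bank. The sharp switch between the two outcomes is a small-scale version of the threshold behavior these studies report.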

There is a significant amount of empirical research showing that certain financial markets, particularly interbank markets, are tiered (i.e., core-periphery, money-center). While some evidence points to more homogeneous behavior (Haldane and May 2011), other research has shown a significant shift away from complete markets (Degryse and Nguyen 2007; Iazzetta and Manna 2009). One potential implication of this trend is that firms might actually be becoming more heterogeneous in terms of size and behavior. Lenzu and Tedeschi (2012) and Tedeschi et al. (2012) both take a simulation approach to studying the role of firm heterogeneity in systemic risk, and produce similar results. The key contribution of Lenzu and Tedeschi (2012) and Tedeschi et al. (2012) was to incorporate the leverage accelerator into agent-based simulated network models. A decline in market liquidity here can be the main culprit in causing otherwise healthy firms to fail. Like some of the empirical research which stress-tested real-world markets, Lenzu and Tedeschi (2012) find that random networks are more stable than scale-free topologies in the face of random shocks. Caccioli et al. (2012) use the methods of Gai and Kapadia (2010) and find essentially the opposite of Lenzu and Tedeschi (2012). In Caccioli et al. (2012) and Georg (2013), scale-free systems are more resilient to random shocks, and contagion is more likely to occur when one of the larger or more connected institutions is shocked. Georg (2013) uses a dynamic banking system which allows firms to optimize balance sheets, and provides an additional theoretical model to show that network structure is significant in times of crisis. While not ruling out the importance of contagion, Georg (2013) emphasizes consideration of broad shocks as being more important. Since broad shocks impact multiple firms, the likelihood of systemic risk is higher when a greater number of critical agents are impacted. Delpini et al. (2013) examine the question of which firms are most critical to the system using the e-MID market and simulation methods. Delpini et al. (2013) find that the firms that are most connected or the largest lenders are not necessarily the firms that are most likely to lead to contagion. Rather, the key to contagion in the e-MID market is a reduction in liquidity and trust, the same accelerators described before. The key difference between these strands of the literature is that stronger behavioral foundations and the presence of an accelerator process can reverse the general findings. However, these somewhat conflicting studies do highlight the important role played by both liquidity and capital requirements in predicting contagion.

A proposed regulatory target is the mandated use of central counterparties for certain assets like CDS. Simulated networks have been used to explore the effects of concentration and tiering (reflecting the ratio of members and general clearing members) on network stability (Galbiati and Soramäki 2012).Footnote 27 Fewer general clearing members who trade directly with the central counterparty would require additional precautionary funds, raising the individual cost of participation (Galbiati and Soramäki 2012). On the other hand, more concentrated networks with more inequality across general clearing members decrease the risk for the central clearing system. In simulated random networks of banks, firms, and insurers linked directly by contracts and indirectly by CDS arrangements, instability increases if banks take the opportunity to raise risk when hedged via an external insurance sector (Heise and Kuhn 2012). As expected, members of the insurance sector face larger risks of insolvency when they are hedging the banking system. If the banks are trading CDS between themselves without an external insurance sector, the overall risk of maximum losses rises, and the system is even further destabilized. This research by Galbiati and Soramäki (2012) and Heise and Kuhn (2012) makes the point that increased connectivity is not necessarily a stabilizing force, and that regulating CDS will depend on how the derivatives are used. Puliga et al. (2014) examine the CDS market in conjunction with the housing market and report that CDS spreads, which are commonly presumed to be predictive of stress, are actually only coincident and not leading indicators of crises. When CDS are combined with housing data and the DebtRank approach to estimating contagion, the ability of CDS to perform as predictive measures increases (Puliga et al. 2014). Puliga et al. (2014) show that macroeconomic risk alone cannot capture the systemic risk created during a general downturn in home prices, but that incorporating network effects reveals a larger concern. Research by Kaushik and Battiston (2013) examines the role that interdependence and trend reinforcement play in determining the level of systemic risk, finding that high levels of individual risk tend to be associated with other firms facing stress. This is evidence that the financial accelerator does appear to play a role in CDS markets, as collateral would deteriorate across the system and interdependent (but not necessarily similarly exposed) firms would face more difficult borrowing constraints. Additional evidence demonstrates that the expansion of financial instruments for speculation and hedging may in fact destabilize markets rather than increase systemic safety (Brock et al. 2009). Margin requirements which prevent collapse depend on the topology of the network, and fragility depends on who is considered part of the system being monitored. Hedging risk outside of the narrowly defined financial sector does not make it disappear, but in fact raises overall risk.

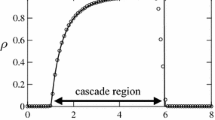

A lack of meaningful behavioral foundations and the use of unrealistic scenarios are common in over a dozen widely cited simulation studies (Upper 2011). Network parameters need to be better estimated and firm behavior needs to have realistic behavioral foundations, since rules are shown to significantly affect the emergent topology (Schweitzer et al. 2009). One way of improving on the simulated network models is to incorporate behavioral foundations through an agent-based approach (Fig. 1). The agent-based modeling approach also played an important role in developing the network-based financial accelerator and leverage accelerators described earlier (Battiston et al. 2012a; Tedeschi et al. 2012; Lenzu and Tedeschi 2012; Roukny et al. 2013; Bargigli et al. 2014; Riccetti et al. 2013; Grilli et al. 2015). The endogeneity of behavior, and therefore of topology, can only be modeled by allowing for heterogeneous behavior of agents (Table 5). Lenzu and Tedeschi (2012) also take an agent-based approach to simulate endogenous network formation, which can then be stressed by a series of shocks.Footnote 28 When trying to determine if a certain market topology is more stable than another, it is also important to determine if the network-based financial accelerator or leverage accelerator plays a role in any potential contagion. In addition to concerns about systemic risk in interbank markets, there are other markets that might interact and amplify risk. Montagna and Kok (2013) provide an agent-based model showing that the interaction across multiple markets results in non-linearities in systemic risk. Thus, these accelerators might play a meaningful and synergistic role across multiple markets where a single firm operates. There has yet to be much discussion regarding the layering (or multiplex structure) of financial markets, and this shortcoming presents a potential avenue for future research (Thurner and Poledna 2013).

4 Measuring systemic risk

Network researchers should be aware of the recent advances in measuring systemic risk, since these measures can be used to judge policy effectiveness. An array of new measures is aimed at locating potential systemic risk at large publicly-traded financial institutions. A survey by Bisias et al. (2012) noted 31 measures of systemic risk, a count that is certainly out of date considering the speed of research in this field. The intention here is not to update the list provided by Bisias et al. (2012) or others, but to relate what can be learned from these measures. Many new systemic risk measures share a fundamental shortcoming: they use a portfolio approach to measure risk. The portfolio approach understates potential risk because it is unable to match the scope of the problem. Measures like value-at-risk (VaR) and expected shortfall (ES) have been employed in an attempt to quantify the systemic risk of systemically important financial institutions (SIFIs). The primary intent of VaR and ES models is quantifying potential losses for portfolios of assets where the composition of the portfolio is known and one can estimate probabilities and correlations of potential returns to the individual assets. Measures such as VaR and ES are unable to capture risks that are diversified outside the scope of firms being monitored. Thus the portfolio approach questionably assumes that all firms that might be systemically important are actually being monitored by regulators before any crisis begins, that the necessary information on those firms is available, and that the tools to take effective action are already in place. Of additional concern, most newly constructed systemic risk measures overstate their ability to deal with black swans, those events that occur with extremely low probability and carry enormous financial losses (Taleb 2010). Network modeling can move beyond these standard measures to show where they might fail to detect otherwise unforeseen risks.

Debates over the scope of systemic risk analysis start at the point of defining SIFIs. Officially, SIFIs in the US are determined using a size threshold including only those firms with over $50 billion in consolidated assets. Firms are deemed systemically significant due to their “nature, scope, size, scale, concentration, interconnectedness, or mix of activities” (Labonte 2010). Firms meeting the $50 billion in consolidated assets threshold would need to surpass one additional threshold in order to be considered systemically important. The Financial Stability Oversight Council (FSOC) decides which firms should be regulated based on a six-factor framework.Footnote 29 “Three of the six categories—size, substitutability and interconnectedness—seek to assess the potential impact of the nonbank financial company’s financial distress on the broader economy” (Financial Stability Oversight Council 2011). The other three categories, “leverage, liquidity risk and maturity mismatch—seek to assess the vulnerability of a nonbank financial company to financial distress” (Financial Stability Oversight Council 2011). Therefore, SIFIs are examined for their potential susceptibility to both narrow and broad shocks.

4.1 Conventional measures of systemic risk

Measures like VaR and ES were not designed to measure systemic risk since they do not incorporate network effects beyond the direct linkages of a firm. These measures and others have recently been adapted to measure systemic risk by estimating the correlations of risk across multiple firms. Increased complexity through diversification and interconnectivity often reduces the risk exposure measured by VaR or ES at an individual firm, while increasing overall risk in the system. Microprudential regulations often focus on measures such as VaR which estimate the maximum likely loss at some pre-specified confidence level (Aragonés et al. 2008).Footnote 30 The VaR measure is notably blind to what might happen during tail events, and so ES measures were developed to estimate the losses conditional upon tail events occurring (Aragonés et al. 2008).Footnote 31 However, ES measures also fall short by assuming risk neutrality across tail events, thereby underestimating the black swan problem of convexity (i.e., low probability/high-loss events) (Taleb 2011).Footnote 32 Most new systemic risk measures are improving the ability to identify the most important firms when confronted with broad shocks, but still fail to capture much of the risk from narrow or endogenous system shocks.
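
The difference between the two measures can be seen in a small numerical sketch. It uses a simple historical-simulation estimate on made-up loss samples; the function name `var_es`, the 95% level, and the data are assumptions for illustration only, and practical implementations differ in how they estimate the quantile and the tail.

```python
import numpy as np

def var_es(losses, level=0.95):
    """Historical-simulation VaR and ES for a sample of losses (sketch).

    VaR is the loss quantile at the chosen confidence level.
    ES is the average of the losses at or beyond that quantile.
    """
    losses = np.asarray(losses, dtype=float)
    var = np.quantile(losses, level)
    es = losses[losses >= var].mean()
    return var, es

# Two hypothetical loss samples that differ only in the single worst outcome.
ordinary = np.arange(1, 101, dtype=float)   # losses of 1, 2, ..., 100
fat_tailed = ordinary.copy()
fat_tailed[-1] = 1000.0                     # one extreme tail event

var_a, es_a = var_es(ordinary)
var_b, es_b = var_es(fat_tailed)
print(var_a, es_a)
print(var_b, es_b)
```

The two samples have the same VaR because the quantile does not depend on how bad the worst outcome is, while ES rises sharply once the extreme loss is included. ES still relies on the extreme event appearing in the sample, which is one way to read the black swan criticism above.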

Measures like VaR and ES have been improved by taking greater account of contagion and network effects. An institution’s CoVaR relative to the system is the VaR of the system conditional on that institution being in distress (Adrian and Brunnermeier 2009). Adrian and Brunnermeier (2009) also describe how CoVaR can be used to measure the increased risk at one institution given that another institution has become distressed. CoVaR measures for the U.K. raised estimated median bank risk by 40%, evidence that the measures are able to account for some of the potential knock-on effects that result from stress in another part of the system (Haldane 2009b). While this is a notable improvement in measuring overall risk, VaR and CoVaR measures of systemic risk have been shown to ignore tail risk, and are not based on any existing explicit economic theory (Acharya et al. 2010). In response to criticisms of using CoVaR to measure risk, Adrian and Brunnermeier (2009) suggest using conditional expected shortfall (Co-ES) to examine the expected shortfall conditional on one firm falling into distress. CoVaR and Co-ES rely on estimating the distress of the overall economy using available market measures including liquidity spreads, changes to market interest rates, credit spreads, and market measures for the stock market implied volatility. For CoVaR and Co-ES to be employed the firms being monitored must be publicly traded, a potentially dubious assumption.
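
The verbal definition of CoVaR given above can be written compactly. The notation below is an assumption introduced here for illustration ($X^{i}$ for the return or loss variable of institution $i$, $X^{\mathrm{sys}}$ for that of the system, $q$ for the quantile level), and sign conventions vary across papers.

```latex
% VaR of institution i at level q
\Pr\left( X^{i} \le \mathrm{VaR}_q^{i} \right) = q

% CoVaR: the VaR of the system conditional on institution i being at its VaR
\Pr\left( X^{\mathrm{sys}} \le \mathrm{CoVaR}_q^{\mathrm{sys} \mid i}
          \;\middle|\; X^{i} = \mathrm{VaR}_q^{i} \right) = q

% Contribution of institution i: distress state relative to its median state
\Delta \mathrm{CoVaR}_q^{\mathrm{sys} \mid i}
  = \mathrm{CoVaR}_q^{\mathrm{sys} \mid X^{i} = \mathrm{VaR}_q^{i}}
  - \mathrm{CoVaR}_q^{\mathrm{sys} \mid X^{i} = \mathrm{Median}^{i}}
```

Swapping the roles of the system and a second institution $j$ gives the institution-to-institution version mentioned above. Because each expression is a conditional quantile, the measure inherits the VaR limitation of saying nothing about losses beyond that quantile.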

The ECB generally recommends following slightly different measures to estimate systemic risk: the systemic expected shortfall (SES) and marginal expected shortfall (MES) (European Central Bank 2010a).Footnote 33 The MES and SES methods incorporate power-law risks due to the largest firms and shocks when estimating systemic losses (Brownlees and Engle 2011; Acharya et al. 2010). Evidence shows that the MES would have been able to detect some of the firms that failed during the crisis had the measure been in use ex ante (Acharya et al. 2010). The NYU Volatility Laboratory, or V-Lab, is based on the work of Brownlees and Engle (2011), and has resulted in a running list of the top ten firms in terms of MES, systemic risk contributions, and leverage (http://vlab.stern.nyu.edu/welcome/risk). Again, however, it should be noted that reliance on available historical data understates the risk of future extreme events.
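
As a rough illustration of the MES, the sketch below averages a firm's losses over the days on which the market performed worst. It is a simplified historical estimator under assumed conventions (losses reported as positive numbers, a user-chosen tail fraction), not the dynamic estimator of Brownlees and Engle (2011); the return series are hypothetical.

```python
def marginal_expected_shortfall(firm_returns, market_returns, tail_frac=0.05):
    """Average firm loss on the market's worst tail_frac share of days.

    Sign convention (assumption): a positive value is a loss.
    """
    n_tail = max(1, round(tail_frac * len(market_returns)))
    # Indices of the days with the lowest market returns.
    worst_days = sorted(range(len(market_returns)),
                        key=lambda t: market_returns[t])[:n_tail]
    return -sum(firm_returns[t] for t in worst_days) / n_tail

# Hypothetical daily returns (assumption), ten days each.
market = [-0.05, 0.01, 0.02, -0.03, 0.00, 0.01, -0.01, 0.02, 0.01, -0.04]
firm_a = [-0.08, 0.02, 0.01, -0.02, 0.00, 0.01, -0.01, 0.03, 0.00, -0.06]
firm_b = [-0.01, 0.01, 0.02, -0.04, 0.01, 0.00, -0.02, 0.01, 0.01, -0.01]

# A 20% tail is used only because the sample is tiny.
mes_a = marginal_expected_shortfall(firm_a, market, tail_frac=0.2)
mes_b = marginal_expected_shortfall(firm_b, market, tail_frac=0.2)

print("MES firm A:", mes_a)
print("MES firm B:", mes_b)
```

Firm A loses heavily on the two worst market days and so receives the larger MES, even though firm B has its own bad day when the market decline is milder. Because the estimator uses only observed history, it inherits the limitation noted above.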

An array of other systemic risk measures have been developed based on higher frequency market data.Footnote 34 At the firm level, intraday stock prices and CDS spreads for insurance firms and banks have been employed to measure systemic risk (Chen et al. 2013). Linear and nonlinear Granger causality tests show banks create systemic risk for insurers by transferring risk to the insurance sector (Chen et al. 2013). These new high frequency risk measures face a couple of notable shortcomings and unusual features. Under certain conditions, Zhou (2013) finds increased capital requirements could result in more systemic risk by increasing the correlation of holdings across different institutions. If liabilities are significantly different than the asset side, increased capital requirements can lower systemic risk. One factor potentially undermining this research is that transitional dynamics are ignored, and only equilibrium conditions are focused on. Reliance on using correlations prevents many systemic risk measures from taking account of truly extreme events, and their limitation to analyzing publicly traded firms is also somewhat troubling (Segoviano and Goodhart 2009). Since correlations can rapidly change and privately held firms may be systemically important, it is unclear if these measures are sensitive enough to be practical and timely in the event of another crisis.

If the total potential government cost of a systemic event could be estimated well in advance, it might be optimal to have firms bear a proportionate responsibility for their individual contribution to the overall cost. Costs could be allocated to individual firms in the form of risk-based insurance fees capturing their isolated contribution to an overall measure of systemic risk. Contingent claims analysis (CCA) is intended to capture tail dependence between multiple entities, with the goal of estimating the market value of government liabilities in the event of a systemic crisis (Gray and Jobst 2010; Gray et al. 2007). Information from equity and CDS markets is used in estimating an implicit put option yielding the systemic CCA.Footnote 35 Gray and Jobst (2010) argue that their proposed CCA method is an improvement over the CoVaR, SES, and DIP measures of systemic risk in that the estimates are time-varying. It is further argued that the systemic CCA is also able to capture multivariate dependence across firms, rather than just a bivariate dependence in the case of CoVaR and SES measures. While the CCA is a very practical and timely measure, like other conventional measures it is limited to large publicly traded firms.
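
The implicit put option can be sketched for a single firm under a standard Merton-type setup, which is assumed here for illustration only. The sketch covers just the single-firm building block and none of the multivariate dependence modeling of the systemic CCA; the function name `implicit_put` and all parameter values are hypothetical.

```python
from math import erf, exp, log, sqrt

def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(x / sqrt(2.0)))

def implicit_put(assets, barrier, sigma, r, horizon):
    """Black-Scholes-Merton put on firm assets struck at the debt barrier.

    assets:  market value of firm assets
    barrier: default barrier (promised debt payment)
    sigma:   asset volatility, r: risk-free rate, horizon: years
    """
    d1 = (log(assets / barrier) + (r + 0.5 * sigma ** 2) * horizon) / (
        sigma * sqrt(horizon))
    d2 = d1 - sigma * sqrt(horizon)
    return barrier * exp(-r * horizon) * norm_cdf(-d2) - assets * norm_cdf(-d1)

# Hypothetical firm (assumption): the put value rises as assets fall
# toward and below the barrier.
put_healthy = implicit_put(assets=100.0, barrier=90.0, sigma=0.2, r=0.02, horizon=1.0)
put_stressed = implicit_put(assets=80.0, barrier=90.0, sigma=0.2, r=0.02, horizon=1.0)

print("put, healthy firm:", put_healthy)
print("put, stressed firm:", put_stressed)
```

The put value can be read as the expected loss borne by whoever guarantees the firm's debt, which is why it serves as a proxy for contingent government liabilities in this sketch.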

4.2 Stress tests

To make stress testing effective in measuring more forms of systemic risk, methods should consider counterparty risk, collective actions taken by other institutions, and endogenous systemic effects affecting both assets and liabilities. In practice, stress tests fail to capture the interconnected nature of the financial system and counterparty risk. Stress tests of individual banks have been the most widely publicized approach for measuring the risk of broad systemic events. Under this approach, when individual banks are deemed safe, the broader system is also considered to be safe. The 19 banks identified as SIFIs in April 2011 completed individually specified stress tests under the Comprehensive Capital Analysis and Review (CCAR), and commonly specified scenarios under the Supervisory Capital Assessment Program (SCAP) (Dudley 2011). Often, counterparty risk is downplayed in stress testing since it only arises after insolvencies take place: “[o]nce a bank is insolvent because of credit or market risk exposures counterparty credit risk in the interbank market crystallises” (Drehmann 2009). Moody’s Analytics reported that the CCAR stress tests would likely have been unable to predict the 2008 failure of Washington Mutual, one of the largest banks in the US in the midst of the crisis (Hughes et al. 2012).

The shortcoming of both the SCAP and CCAR stress tests is that they generally fail to take account of second-order effects, and ignore network externalities that would be present when a bank actually fails a stress test (Haldane 2009b). As noted earlier, simplifying network effects as only externalities may be dangerous. Certain network structures are more vulnerable than others, and the evolution towards those states must be studied further (Acemoglu 2012). Recent financial market reforms can be criticized on the grounds that neither the Dodd-Frank Wall Street Reform and Consumer Protection Act nor Basel III really attempts to reform the problem of the shadow banking system, or “mitigate the fire-sales and credit-crunch effects that can arise as a consequence of excessive short-term debt anywhere in the financial system” (Hanson et al. 2011).Footnote 36 More specifically, systemic risk measures should not only be able to identify individually systemic institutions, but also those that are systemic as part of a herd (Adrian and Brunnermeier 2009).

4.3 Network measures of systemic risk

Network analysis and simulation have added marginally to the debate on measuring systemic risk. While empirical network analysis suffers from problems due to the lack of data, these models benefit by being able to account for narrow and endogenous events as well as potential regulatory changes. Under recent Basel rulings, banks were allowed to reduce their capital held against assets which were insured using credit default swaps. Markose et al. (2010) conduct stress tests, using a Systemic Risk Ratio based on US CDS data, which are comparable to the US SCAP stress test program. Credit default swaps allowed the financial system to spread in terms of scope to hedge funds, insurers, and private equity funds, while at the same time being incredibly opaque instruments. The authors find a “hub like dominance of a few financial entities in the US CDS market,” concluding that the network is fundamentally unstable (Markose et al. 2010). Because they are based on an agent-based theoretical model, the measures would likely be more in tune during periods of stress, when market-based reduced-form models might become unhinged. Agent-based models have the capacity to model both varying firm size and behavior as well as incorporate varying regulatory strategies.Footnote 37 Only recently have agent-based models incorporating these features come into existence.Footnote 38

Kaushik and Battiston (2013) provide a different look at how CDS data can be used to estimate systemic risk. By examining CDS data, they are able to calculate two systemic risk measures, impact centrality and vulnerability centrality. Impact centrality rises for firms that impact many systemically important firms, and vulnerability centrality rises when a firm has “strong dependencies from many nodes which are in turn heavily vulnerable” (Kaushik and Battiston 2013). Together, these measures can help determine which firms are more important to the stability of the overall system. Due to interconnectedness and trend reinforcement, an institution that is highly levered would have wide-reaching impacts even if it suffered only a minor shock. While impact and vulnerability centrality help determine the identity of systemically important institutions, Battiston et al. (2012c) note that the DebtRank measure has an advantage in light of its ability to estimate monetary losses.

Network models have helped traditional risk measures take account of potential fire sales. Boss et al. (2006) and Martínez-Jaramillo et al. (2010) combine a CoVaR approach using Credit Risk+, to model default dependency across assets. Boss et al. (2006) crafted the Systemic Risk Monitor (SRM) for use in the Austrian central bank—Oesterreichische Nationalbank. Using data from the Major Loans Register, the SRM combines standard risk management with a network model. Without using a theoretical model for agent behavior, the SRM aggregates the banking system using a portfolio approach employed by many others. Martínez-Jaramillo et al. (2010) build on Boss et al. (2006) to analyze systemic risk in the Mexican interbank system adding a risk measure for the entire system which can be decomposed into baseline shocks and contagious effects. Additional accounting for correlated holdings of banks helps to simulate the possibility of a broad shock leading to a contagious event. In general it is found that the distribution of shocks might be more important than the topology of the system at a point in time. Simulations conducted using a variety of risk correlations provide evidence that even with highly correlated holdings banks face a low risk of a systemic event.

Empirical network research has provided a few notable estimates of systemic risk (Levy-Carciente et al. 2015; Bargigli et al. 2015; Huang et al. 2013). Huang et al. (2013) offer a new model of contagion that they apply to a snapshot of the US banking network in 2008. As the banking system was reeling from the real estate market crash, the authors are able to mostly replicate which banks failed under a simulation. Levy-Carciente et al. (2015) expand on this approach by making their model dynamic in an application to Venezuela. Their dynamical bank-asset bipartite network model (DBNM-BA) allows tracking of both the risk of assets and the stability of institutions.

Preventing contagion through bailouts may be costly to governments, but the benefits of prevention might outweigh the potential costs. The effective credit rating grade (ECRG) model developed by Sieczka et al. (2011) uses simulation methods to show that bailouts might help arrest contagion. The ECRGs are modeled to interact across institutions, and show that a single default can lead to a contagion of downgrades in creditworthiness across the system. Thus, while most measures show contagion is a second-order matter to broad shocks, there is still evidence showing that understanding narrow shocks might be worth the trouble.

5 Do these systemic risk measures work?

What method should be used to measure systemic risk? Individually, the conventional risk measures all offer the benefit of helping to identify which firms might be considered systemically important. All of the aforementioned measures fail to fully capture the convexity present in the very worst states of the world. A 1% CoVaR or SES would still heavily weight the outcomes closest to the 1% threshold, and put very little emphasis on the magnitude of losses in the one in a million events. While any measure of risk has its shortfalls, large increases in risk like the CoVaR estimates by the Bank of England should be alarming (Haldane 2009b).

One of the most troublesome features of the systemic risk measurement literature is the emphasis on coherent risk measures where the components of risk are both subadditive and linearly homogeneous (Aragonés et al. 2008).Footnote 39 Subadditivity implies that the risk of two combined portfolios is no greater than the sum of the risks of the individual portfolios (\(\rho (A+B)\le \rho (A)+\rho (B)\)). When estimating systemic risk it might be worth considering risk measures which allow for destructive synergistic effects, as in the case of two portfolios whose correlations might rapidly change or a network structure where contagious defaults are more likely to occur. Additionally, the activity of creating new risky long-lasting underlying assets only increases overall risk even if it is coherently measured. At the firm level, risk is likely coherent, but overall systemic risk may rise suddenly if a large amount of a particular type of asset is created. With an abundance of overpriced assets in the market, a widespread decline in asset prices may be much more severe than anticipated, leading to a serious miscalculation in potential system-wide losses.
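
The subadditivity inequality can be checked numerically. The sketch below uses a standard textbook-style counterexample, assumed here for illustration: two independent, identical loans, each losing 100 with probability 4%. VaR, which is not a coherent measure, violates the inequality in this case, showing what a measure that admits destructive synergies looks like. The function name `discrete_var` and the loan parameters are hypothetical.

```python
def discrete_var(distribution, alpha):
    """Smallest loss x with P(L <= x) >= alpha.

    distribution: list of (loss, probability) pairs.
    """
    cumulative = 0.0
    for loss, prob in sorted(distribution):
        cumulative += prob
        if cumulative >= alpha:
            return loss
    return max(loss for loss, _ in distribution)

p = 0.04  # hypothetical default probability of each loan (assumption)
single = [(0, 1 - p), (100, p)]
# Loss distribution of the sum of two independent, identical loans.
combined = [(0, (1 - p) ** 2), (100, 2 * p * (1 - p)), (200, p ** 2)]

var_single = discrete_var(single, 0.95)
var_combined = discrete_var(combined, 0.95)

print("VaR of each loan:", var_single)
print("VaR of the combined portfolio:", var_combined)
print("sum of stand-alone VaRs:", 2 * var_single)
```

Each loan has a 96% chance of no loss, so its 95% VaR is zero, while the combined portfolio has only about a 92% chance of no loss, so its 95% VaR is 100. A coherent measure rules out this outcome by construction, which is the property the paragraph above questions in a systemic setting.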

Cont et al. (2010) provide evidence that coherent risk measurement procedures such as expected shortfall are not robust to small changes in the data or misspecification errors used to calibrate estimators. “There is a conflict between coherence (more precisely, the subadditivity) of a risk measure and the robustness in the statistical sense...” (Cont et al. 2010). The use of historical data to estimate the risk of future losses in coherent risk measures is highly sensitive to outliers and overstates the case for diversification.Footnote 40 Cont et al. (2010) provide further reason to be concerned about using coherent risk measures to approximate systemic risk when estimation methods and robustness checks might be as important.

The assumption that portfolios are linear combinations of risk is weak (de Vries 2005). Systemic risk is a result of interconnection, such that “a very large shock may topple the entire system, since no bank is able to bear its share in the adverse movement” (de Vries 2005). Diversification can spread risk around, serving to increase systematic and systemic risk where multiple institutions would be impacted by shocks to correlated portfolios.