Abstract

Purpose

The Higg Materials Sustainability Index (MSI) consists of five life cycle indicators that help the apparel industry inform material selection at the design stage. Until 2020, the Higg MSI aggregated these indicators into a single score; after much debate, the indicators will no longer be aggregated from 2021 onward. The problem of tradeoffs remains, so this study evaluates aggregation approaches that could help decision makers deal with tradeoffs, resolve the issues of the previous approach, and allow for an integrated view.

Methods

Aggregation can be fully compensatory, as in the weighted sum of the previous Higg MSI, or partially compensatory; the distinction determines how tradeoffs are managed. This study compares the aggregation in the Higg MSI with four other aggregation methods through a comparative application to six textile materials (cotton, wool, PET, nylon 6, lyocell, and viscose) that, while not functionally equivalent on a mass basis, illustrate the effects of aggregation. The four alternatives comprise three compensatory methods (internal normalization by division by the maximum, global normalization, and monetization) and one partially compensatory method, stochastic multi-attribute analysis (SMAA). Methods were chosen to ensure broad coverage according to their applicability to the Higg MSI.

Results and discussion

The comparison of raw materials using the impact categories of the Higg MSI shows tradeoffs, particularly for two materials that are the best performing in two impact categories and the worst performing in the other two (out of four categories). For materials presenting tradeoffs, results show a distinct pattern between compensatory methods and SMAA. Compensatory single score methods place these materials in the lowest ranks, even lower than a material that is not the best performing in any category. In SMAA, these same two materials rank above the mediocre material. Compensatory and partially compensatory methods thus differ in how they handle tradeoffs between impacts and, consequently, in the resulting ranking of the materials.

Conclusions

Analysis shows that the current approach to aggregation in the Higg MSI is based on a weighted sum and, as with the other fully compensatory approaches, has three fundamental problems: linear compensation between poor and good performances, failure to account for mutual differences, and inverse proportionality. These problems can lead to material decisions that enable burden shifting and unintended environmental consequences as a result of using the Higg MSI.

Recommendations

The Higg MSI needs to support companies in understanding the environmental sustainability of their products to be able to identify improvement options in a way that can adapt to the industry’s environmental concerns and business strategy. Therefore, it is recommended that the Higg MSI apply aggregation that is methodologically defensible regardless of the material in question to incentivize a healthy competition for environmental stewardship among industry members.

1 Introduction

The Higg Materials Sustainability Index (Higg MSI) provides quantitative information on the environmental impact of materials used in textiles. The Higg MSI was developed by the Sustainable Apparel Coalition (SAC) as a tool for its users to reduce the life cycle impacts of their products (SAC 2020a). The SAC, which now has over 200 industry members in the apparel, footwear, and home textile sectors, offers a common platform for companies to compare different materials, inform material selection at the design stage, and support communication of information to the public in a consistent manner. The Higg MSI aggregates four cradle-to-fabric-gate life-cycle-based environmental indicators (global warming, water scarcity, eutrophication potential, and abiotic depletion potential) and a fifth semi-quantitative indicator on chemistry into single value scores (Table 1).

Part of the SAC’s effort is to continue providing scientifically robust information to its members; thus, approaches within the Higg MSI are regularly updated to reflect scientific advancements and the best available data (SAC 2017; Lollo and O’Rourke 2020). One of the areas identified for improvement is the aggregation approach (Watson and Wiedemann 2019), which is the subject of the latest update. In November 2020, the SAC announced that, due to stakeholder concerns, the Higg MSI will no longer apply aggregation in 2021 and will instead allow industry members to view the individual performance of each impact category and to apply their own criteria for decision making (SAC 2020b). The Higg MSI will therefore move from aggregating indicators with a weighted sum to avoiding aggregation and leaving industry members to deal with the criteria on their own. This change is understandable because the issues of the weighted sum in environmental sustainability applications (compensation, inverse proportionality, and bias) are well documented in the literature (reviewed in Sect. 1.2). However, the apparel sector is left with the same problem as before, and this solution may not necessarily mean progress. Industry members may apply other versions of the weighted sum without realizing the inherent methodological issues, or they may leave the decision to a team, a process that is subject to cognitive biases. Research shows that, when faced with information overload, it is common to focus on a single criterion (such as global warming) and systematically allow for burden shifting, or to decide based on preconceived notions and judgments (Buchanan and Kock 2001; van Knippenberg et al. 2015; Goette et al. 2019).

A critical issue with the normalization used in the weighted sum applied by the Higg MSI is that impact categories contribute to the final score in inverse proportion to their magnitude in the point of reference. Aspects with the highest environmental impact in the area of reference (the normalization reference) contribute the least to the score, and vice versa, because the contribution to the score is driven by the magnitude of the denominator (the area of reference) (White and Carty 2010). For example, the more we reduce our global ozone depletion emissions, the more strongly this impact will influence the results.

Another limitation of the aggregation method used in the Higg MSI is the way in which it deals with tradeoffs—poor and good performances of an alternative. This issue is separate from input data values and a matter of the algorithm itself. The weighted sum used in the Higg MSI allows for full compensation between poor and good performances, which allows for improvements in one environmental aspect to be compensated for by degradation in others, indefinitely. A further limitation is that the weighting (weighting referring to the action of applying weight factors to results) used in the Higg MSI reflects the biases of some, but not all stakeholders, which the ISO standards for LCA prohibit for public comparisons, such as those provided by the Higg MSI (ISO 14044 2006). It is important to recognize that although it applies “equal weighting,” it pertains to a particular point of view where all impacts have the same importance. This does not reflect the points of view that might consider climate change or water scarcity more important.

Due to the increasing use of the Higg MSI in guiding the textile, apparel, and footwear sector in environmental sustainability decisions, it is important to take a closer look at how data is being interpreted with the aim of improving decision support. We recognize this as a multicriteria decision analysis problem where different apparel and textile brands have to deal with multiple environmental indicators and that when making comparisons, they frequently face tradeoffs. Without a conscious decision-making approach, organizations are vulnerable to subpar decision-making processes regardless of the accuracy of the data being presented to them.

The goal of this study is to explore alternative ways of aggregating life cycle environmental impacts into points to form the Higg MSI and to assess whether the aggregation aspect of the Higg MSI can be improved with a partially compensatory approach. Alternative approaches to aggregation are assessed on their robustness, how they cope with tradeoffs, their ability to adopt developments in the field (such as incorporating uncertainty, dealing with multiple stakeholders, and providing decision support), and their conformance with the ISO standards for public comparisons.

This study starts by reviewing the methodological implications of the aggregation approach applied by the Higg MSI until 2020 and its problems prior to exploring future avenues.

1.1 Basics of the weighted sum and its implementation in the Higg MSI

In general terms, a weighted sum has two steps. First, a scaling (also known as normalization) step converts the different units of the indicators (kg CO2 eq and m3, for example) into a common unit by dividing by a point of reference (referred to in the LCA literature as the normalization reference). Second, importance weight coefficients (referred to in the LCA literature as weight factors) are applied. The scaled and weighted indicators are summed to form the overall score in points (Eq. 1).

\[{\mathrm{Score}}_{a}=\sum_{i}\frac{{CR}_{i}}{{NR}_{i}}\,{w}_{i}\tag{1}\]

where the characterized result (CR) of indicator i is divided by the normalization reference (NR) of the corresponding indicator i. The Higg MSI normalizes according to the industry’s annual impact. The quotient is then multiplied by the weight factor (w) of indicator i. The Higg MSI assigns equal weight factors (w) of 1 to all indicators. The resulting point value of the Higg MSI for alternative a, \({\mathrm{Score}}_{a}\), is the sum of this operation across indicators.
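The weighted sum described above can be sketched in a few lines of Python. This is a minimal illustration, not the SAC’s implementation: the function name `weighted_sum_score`, the indicator keys, and all numbers are hypothetical placeholders rather than Higg MSI data.

```python
def weighted_sum_score(characterized, norm_refs, weights):
    """Sum of (CR_i / NR_i) * w_i across indicators i."""
    return sum(
        characterized[i] / norm_refs[i] * weights[i]
        for i in characterized
    )


# Hypothetical values for illustration only (not Higg MSI data).
cr = {"global_warming": 4.0, "water_scarcity": 10.0}  # characterized results
nr = {"global_warming": 2.0, "water_scarcity": 5.0}   # normalization references
w = {"global_warming": 1.0, "water_scarcity": 1.0}    # equal weights of 1

score = weighted_sum_score(cr, nr, w)
print(score)  # 4.0
```

Changing only `nr` while holding `cr` and `w` fixed changes the score, which is why the choice of normalization reference matters for interpretation.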

This form of weighted sum is common in a broad range of applications and is also used in the LCA community, as described by the ISO standards in the optional interpretation steps, although it is not allowed in public comparative assertions (Guinée 2002; Bare and Gloria 2006; ISO 14044 2006; Ryberg et al. 2014). The weighted sum as shown in Eq. 1 can vary with regard to the normalization reference chosen (\({NR}_{i}\)).

The normalization step in a weighted sum can be done with respect to an external or internal reference, and each approach has different implications for the interpretation of results (Tolle 1997; Norris 2001; Prado et al. 2012) although in both cases, the aggregation remains compensatory. In external normalization, as used by the Higg MSI, results are normalized according to a reference outside of the scope of the study. Typically, in LCA, this external reference consists of the impacts of a given geographical region (global, regional, or national) over the course of a year (Bare and Gloria 2006; Sleeswijk et al. 2008; Lautier et al. 2010). Several impact assessment methods, such as CML-IA, ReCiPe, and TRACI, provide external normalization reference datasets that can be used to evaluate the results (Guinée 2002; Goedkoop et al. 2009; Bare 2011).

Internal normalization consists of scaling the study results relative to a reference value within the study boundary. The reference can be the largest result, the result of an alternative identified as the baseline, or the average result of the alternatives being evaluated. Before reference datasets for normalization were compiled for various geographical regions, internal normalization was more commonly used, but concerns such as rank reversal and insensitivity to magnitude (Norris 2001; Prado et al. 2012) led to the preferred use of external normalization in LCA. In both approaches, the normalization step has been found to have a large influence on the results (Stewart 2008; Myllyviita et al. 2014; Pollesch and Dale 2015; Prado et al. 2017a; Wulf et al. 2017); therefore, it is important to study the implications of each approach.
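To make the rank reversal concern concrete, the following sketch applies internal normalization by division by the maximum, with equal weights, to a hypothetical set of alternatives. The alternatives X, Y, and Z, the indicator keys, and the helper names are invented for illustration; none of the numbers come from the study.

```python
def max_normalized_scores(results):
    """Equal-weight sum of each indicator divided by its largest value in the set."""
    indicators = list(next(iter(results.values())))
    maxima = {i: max(r[i] for r in results.values()) for i in indicators}
    return {
        name: sum(r[i] / maxima[i] for i in indicators)
        for name, r in results.items()
    }


def ranking(scores):
    """Alternatives ordered from lowest (best) to highest score."""
    return sorted(scores, key=scores.get)


# Hypothetical impacts; lower is better.
two = {"X": {"gw": 1.0, "water": 10.0}, "Y": {"gw": 2.0, "water": 4.0}}
# Adding Z raises the maximum water impact and so rescales X and Y as well.
three = dict(two, Z={"gw": 1.5, "water": 40.0})

rank_two = ranking(max_normalized_scores(two))
rank_three = ranking(max_normalized_scores(three))
print(rank_two)    # ['Y', 'X']
print(rank_three)  # ['X', 'Y', 'Z']
```

In this example, the relative order of X and Y flips once Z enters the comparison, even though the data for X and Y are unchanged.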

1.2 Fundamental limitations of the weighted sum for the Higg MSI

While the Higg MSI is a great step forward for the apparel industry, as alluded to above, there are a number of issues that may hinder the overall goal of reducing life cycle impacts. A better understanding of these limitations will allow the industry to improve the Higg MSI with better outcomes for its goal.

The use of a weighted sum, with either external or internal normalization, has fundamental issues that remain regardless of the coverage of the normalization reference (national, global, or industry based) and regardless of whether data compilation and quality issues are addressed (Prado et al. 2012). These are labeled fundamental limitations because they stem from either methodological inconsistencies or unsuitability for the type of indicators at hand.

First, there is an issue of inverse proportionality, where the impacts that are most severe in the normalization reference area yield systematically lower normalized scores, and vice versa. Because of this reversal, the greater the improvements the industry achieves in an impact area, the more important that area becomes to the scoring, resulting in further emphasis on reducing those impacts while the others are left to increase or remain at the status quo. White and Carty (2010) identified this bias in 800 selected inventory processes in the TRACI and CML-IA 2000 impact assessment methods, where the normalization references of each method consistently highlight the same toxicity categories. These findings are also confirmed in several studies as reported by Prado et al. (2017b), where the ReCiPe, CML, and TRACI impact assessment methods show systematic biases across product systems. The Higg MSI normalizes with regard to the annual impacts of the sector within a weighted sum; therefore, it is also subject to the effect of inverse proportionality, even if the annual industry data for normalization is up to date and compiled with a high degree of completeness and accuracy. This effect contradicts one of the objectives of the Higg MSI, which aims to improve the environmental sustainability performance of the textile and apparel sector. If the goal of the index is to inform decisions and the normalization systematically masks key aspects, the guidance can be misleading.
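A small numeric sketch may help show the effect. It assumes a single indicator whose characterized result for a material stays fixed while the sector-wide normalization reference is halved; the function name and all values are hypothetical.

```python
def normalized_contribution(characterized, norm_ref, weight=1.0):
    """Contribution of one indicator to the weighted sum: (CR / NR) * w."""
    return characterized / norm_ref * weight


material_impact = 2.0  # hypothetical characterized result, unchanged throughout

# Hypothetical sector-wide annual impact before and after the sector halves it.
before = normalized_contribution(material_impact, norm_ref=100.0)
after = normalized_contribution(material_impact, norm_ref=50.0)

print(before, after)
```

The material itself has not changed, yet the indicator's contribution to its score doubles because the sector improved in that category.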

A complementary issue in how characterized performance is normalized is the accounting of uncertainty. Studies in life cycle impact assessment show that the issues highlighted by standard normalization references (regional or global) tend to be those with the largest uncertainties (Prado et al. 2017b). Therefore, the extent to which a certain impact category contributes to the single score is inversely proportional to the industry hotspots (inverse proportionality) and also independent of the robustness of the indicator. The Higg MSI copes with this issue by reducing the score to the four impact categories considered most robust and excludes from the index other concerns, although these are required in the data submission process (such as agricultural land occupation, and human toxicity cancer and noncancer).

The exclusion of many impact categories due to uncertainty is also problematic. Environmental sustainability is a multidimensional problem extending beyond the four life cycle impact categories and thus having an index with a limited coverage also means leaving behind other life cycle impact issues relevant to organizations, as well as emerging issues that have yet to be integrated in life cycle impact assessment, such as microplastics. To wait for a robust enough life cycle impact assessment method to include such indicators in the index is to produce an index with a limited capability to inform decision support.

The second fundamental problem of the weighted sum deals with its incompatibility with environmental applications due to compensation. A weighted sum with normalization by division (external or internal) forms a linear aggregation algorithm where there is no limit in scale to the normalized result. This means that the normalized magnitudes can differ by great amounts, minimizing the effect of weights. Thus, the normalized result, and thereby, the normalization reference, drives the aggregated result (White and Carty 2010; Myllyviita et al. 2014; Castellani et al. 2016; Prado et al. 2017b, 2019; Wulf et al. 2017). This linearity enables what is known in decision analysis and aggregation theory as “compensation” (Munda and Nardo 2009; Rowley et al. 2012). Compensation is the characteristic of a method that enables poor and good performance to make up for one another indefinitely. For environmental applications, this means that improvements in one impact category can make amends for increases in environmental impact in another impact category—thus leading to environmental burden shifting. Fully compensatory methods enable a single good performance to drive the results thus favoring extreme solutions, which deal with tradeoffs in ways that are incompatible with a strong sustainability perspective (Stewart 2008; Prado et al. 2019).
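Compensation in a linear aggregation can be illustrated with a short sketch. It assumes two already-normalized impact categories with equal weights and shifts an increasing burden from one to the other; the values and the name `linear_score` are hypothetical.

```python
def linear_score(normalized_impacts):
    """Equal-weight linear aggregation of already-normalized impacts."""
    return sum(normalized_impacts)


baseline = (4.0, 4.0)  # hypothetical normalized impacts in two categories

# Shift an increasing amount of burden from the first category to the second.
shifted_profiles = [(baseline[0] - s, baseline[1] + s) for s in (1.0, 2.0, 3.0)]
scores = [linear_score(p) for p in shifted_profiles]

print(linear_score(baseline), scores)  # 8.0 [8.0, 8.0, 8.0]
```

Every shifted profile receives the same score as the balanced baseline, so the single score gives no signal that the burden has moved between categories.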

The property of compensation is not flawed per se, as it is applicable in cases where poor and good performances should offset each other, such as with economic criteria (profit and loss). However, in an environmental and/or sustainability context, compensatory aggregation methods are deemed unsuitable for a composite index, and partially compensatory/non-linear methods are recommended instead (Munda and Nardo 2009; Pollesch and Dale 2015, 2016; Munda 2016). Therefore, the issue of compensation is of concern for the Higg MSI, which aims to promote improved material sustainability decision making via scientifically derived quantitative information.

The third fundamental limitation of the current approach to aggregation is known in decision analysis as the “typical weighting error” (Edwards and Barron 1994). The aggregation algorithm should take into account changes in preference: the degree of importance of a criterion can change with the spread (how different the alternatives are). For example, when purchasing a car, price is an important criterion (measure of importance), but the degree of importance changes if one car costs $15,000 and the other $15,100 (measure of spread). A $100 difference could be deemed negligible in this purchase. The effect of spread on the degree of importance is recognized in the decision analysis literature as the range-sensitivity principle (Fischer 1995). In the weighted sum, as currently applied in the Higg MSI, the measure of spread is not taken into account by either the normalization or the weighting step, which constitutes a “typical weighting error” and a methodological inconsistency. The measure of spread in life cycle midpoint indicators can be difficult to assess because, unlike a $100 difference, it is difficult to determine when a certain amount of kg of CO2 per functional unit represents a negligible or a significant amount. To solve this issue, the normalization approach would have to consider mutual differences with regard to uncertainty, which implies an entirely different approach from normalizing by division (represented in Eq. 1), as shown in outranking.

The other solution to the typical weighting error in the current Higg MSI is to apply tradeoff weights as opposed to importance weights. Tradeoff weights require information on what can constitute a swap between criteria (Hammond et al. 1999; Keeney 2002). This task is difficult when the units of life cycle impact categories are hard to relate to each other in terms of magnitude, such as the swap equivalency between kg of CO2 eq. and kg of SO2 eq. This is a broader problem in the life cycle community, where estimating importance weights for life cycle impact categories is an active area of research (Finnveden 1999; Bengtsson and Steen 2000; Ahlroth 2014; Itsubo et al. 2015); however, these authors do not mention the potential for theoretical inconsistency in the intended application.

This critique is not intended to invalidate the Higg MSI as a whole because this platform has been able to unite key stakeholders in the apparel and textile industry around scientifically-based life cycle impact assessment data and represents an initiative with the potential to improve decision making in the sector. Rather, this critique delves deeper into remaining methodological issues, even unrecognized in the mainstream by general LCA practitioners, to illustrate gaps and seek improvement options.

These three fundamental issues, as described initially, apply to all forms of a weighted sum, and therefore, the critique applies to alternative aggregation methods tested in this study, such as global normalization (a weighted sum with normalization according to annual global impact), and internal normalization by maximum. Only the second fundamental problem applies to monetization. Each aggregation method, including the partially compensatory method, stochastic multi-attribute analysis (SMAA), is explained in the methodology section.

2 Methodology

To analyze the aggregation approach in the Higg MSI along with alternative approaches, this study uses a set of midpoint environmental impact results for a range of textile raw materials (fossil and biobased) and simulates a single score. The raw materials are compared on the basis of 1 kg each, and, even though these materials may not be equivalent on a mass basis, the profile of results serves as a typical illustration of tradeoffs often faced in comparative analyses. The aggregation methods (independent variable) are compared based on the ranking of alternatives derived from the single scores (dependent variable).

2.1 Selected textile materials

The study uses life cycle impact data covering cradle-to-gate results for key raw materials in textiles: cotton, lyocell, nylon 6, polyethylene terephthalate (PET), viscose, and wool, a mix of biobased and fossil-based materials. Given that the comparison on a kg basis is not functionally equivalent and that the comparative data has not undergone an ISO review procedure, the raw material options are anonymized and labelled A, B, C, D, E, and F to focus on how the aggregation method handles the information and to avoid inconsistencies in the comparison of raw materials. For the purposes of this study, the impact categories to be aggregated consist of the four life cycle based indicators (global warming, eutrophication, abiotic depletion (fossil fuels), and water scarcity) used in the Higg MSI and exclude the fifth semi-quantitative criterion on chemistry (Fig. 2). Midpoint impact data on a per kg basis for various textile materials was derived from the Higg Material Sustainability Index database (version 2.0).

Note that the Higg MSI undergoes semiannual data updates, and in 2020, the water metric changed from cumulative water use measured in m3 to water scarcity considering water availability, also measured in m3. Still, the aggregation method remains the same. Prior to 2019, the chemistry points were added after the weighted sum, and therefore, these were excluded originally. In 2020, the chemistry points became part of the weighted sum, and while they derive from a life cycle impact indicator, freshwater ecotoxicity, there is a conversion procedure based on an arbitrary scale and type of product. Therefore, for illustrative purposes, we exclude the chemistry points from the evaluation of aggregation approaches, as these approaches do not depend on the number of criteria.

Therefore, the methodological illustration in this study remains valid even though it does not reflect the data updates. The midpoint results provided follow the life cycle inventory modeling assumptions and impact assessment of the Higg MSI (SAC 2017). The data for each material consists of a midpoint result for each indicator and a set of data quality scores for the material in general (not specific to each indicator). Therefore, this study takes precalculated midpoint values for textile materials as a point of departure.

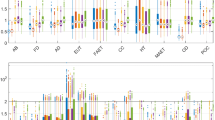

The midpoint results for the six selected materials (Fig. 1) show tradeoffs in all four categories, where the materials with the highest impacts in some categories can also be the ones with the lowest impacts in others. Two raw materials stand out in terms of tradeoffs. Raw material A has the highest water scarcity, by about one order of magnitude compared with the other materials, but it also has the lowest global warming impact and abiotic depletion. Raw material F shows a similar pattern: it has the highest global warming impact, by approximately a factor of 5 compared with the other raw materials, as well as the highest eutrophication impact, but it has some of the lowest impacts in water scarcity and abiotic depletion. Overall, no single raw material option performs best in all categories, so any material choice results in a compromise. It is unclear how organizations, brands, and designers will select materials based on this information. The existence of tradeoffs like these provides a suitable basis for our case study to evaluate the alternative aggregation approaches.

2.2 Selected aggregation approaches

The Higg MSI single score is meant to provide easy to understand information about a material’s environmental impact in a way that facilitates decision-making around material selection. For these purposes, this study evaluates five methods of aggregation for use in the Higg MSI, including the current approach of the Higg MSI, and four potential alternatives (Table 2).

2.3 Weighted sum-based approaches: Higg MSI, weighted sum with global normalization and weighted sum of internal normalization of division by maximum

These three aggregation approaches follow the same procedure with the only difference being the normalization value. Equation 2 shows the weighted sum in generic terms.

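\[{\mathrm{Score}}_{a}=\sum_{i}{CR}_{i}\cdot {NF}_{i}\cdot {w}_{i}\tag{2}\]

where

\({\mathrm{Score}}_{a}\) is the single score of alternative a;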

\({CR}_{i}\) is the characterized result of indicator, i;

\({NF}_{i}\) is the normalization factor (the inverse of a normalization reference value). Table 3 shows the normalization values used in each case.

\({w}_{i}\) is the weight factor of indicator i.

To align with the weighting procedure of the Higg MSI, all weights were equal to 1.

All of the weighted sum methods are prohibited by the ISO 14044 standard for public disclosure because they include weight factors that represent a particular value system (ISO 14044 2006).

2.4 SMAA

The application of SMAA requires an additional preparation step, which is not needed in the weighted sum methods. The preparation consists of the inclusion of uncertainty factors to transform the results of impact categories, given as single values (as shown in Fig. 1), to probability distributions.

This uncertainty is estimated from the data quality scores of each material, which are applied to the pedigree matrix (Eq. 3) to generate a geometric standard deviation, a parameter of dispersion (Frischknecht et al. 2004). Note that, while the pedigree matrix consists of six data quality indicators, the data provided by the Higg MSI reports on four quality indicators. To fill the gaps, a default value of 3, representing a mid-case, was applied to the remaining indicators (reliability and sample size), as shown in Table 4.

The rating in each data quality indicator category (as shown in Table 4) corresponds to a pre-defined uncertainty factor. For example, material A has a rating of 2 in the temporal data quality indicator (Table 4), which corresponds to an uncertainty factor of 1.03 as shown in Table 5. Each rating in each data quality indicator corresponds to a specific uncertainty factor that is predetermined and shown in Table 5. The calculation procedure of these uncertainty factors is explained in dedicated publications (Weidema and Wesnæs 1996; Ciroth et al. 2016; Muller et al. 2016).

The uncertainty factors are then used in Eq. 3, to calculate a geometric standard deviation (GSD), per material, that allows for simulation of the uncertainty around single-value midpoint scores.

where.

A with subscripts 1 to 6, is a function of the uncertainty factor (\({U}_{x})\), provided in Table 5, of each data quality indicator score as follows, \({A}_{x }={[\mathrm{ln}\left({U}_{x}\right)]}^{2}\).

\({A}_{b}\) corresponds to the basic uncertainty indicator calculated by \({A}_{b }={[\mathrm{ln}\left({U}_{b}\right)]}^{2}\), with a default value chosen for this study of \({U}_{b}=1.05\) for all textile materials based on a representative value of uncertainty factor given a rating of 3. The basic uncertainty corresponds to the epistemic error, the intrinsic error in measurement. When propagating uncertainty in inventory, the uncertainty factor is provided by ecoinvent depending on the type of the exchange (combustion type, or agricultural for example) (Lewandowska et al. 2004; Muller et al. 2016). The basic uncertainty factor serves as an estimation of uncertainty when information is scarce.

GSD is the geometric standard deviation used in conjunction with the given mean value to generate a probability distribution for each impact category result. Unlike an arithmetic standard deviation, a geometric standard deviation does not have units and acts as a measure of dispersion (an error factor) that applies to each of the four midpoint scores per alternative (GSD per material in Table 6).
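The pedigree calculation and the subsequent sampling can be sketched as follows, under several stated assumptions. Eq. 3 itself is not legible in this text, so the sketch follows the convention in the ecoinvent documentation, where exp(sqrt(sum of [ln U]^2)) is the squared geometric standard deviation; if the authors' Eq. 3 omits the square, the outer square root should be dropped. The sketch also treats the reported midpoint value as the median of a lognormal distribution, which is one possible reading of "mean value". The function names and all inputs other than 1.03 and 1.05 are hypothetical.

```python
import math
import random


def pedigree_gsd(uncertainty_factors, basic_factor=1.05):
    """Geometric standard deviation from pedigree uncertainty factors.

    Assumption: exp(sqrt(sum of [ln U]^2)) is the squared GSD, following the
    ecoinvent convention, so the square root of that quantity is returned.
    """
    total = sum(math.log(u) ** 2 for u in uncertainty_factors)
    total += math.log(basic_factor) ** 2
    return math.sqrt(math.exp(math.sqrt(total)))


def sample_lognormal(median, gsd, n, seed=0):
    """Draw n lognormal samples with the given median and GSD (assumed shape)."""
    rng = random.Random(seed)
    mu, sigma = math.log(median), math.log(gsd)
    return [rng.lognormvariate(mu, sigma) for _ in range(n)]


# 1.03 matches the temporal example in the text; the other five factors
# and the midpoint value of 10.0 are placeholders.
factors = [1.03, 1.0, 1.0, 1.0, 1.0, 1.0]
gsd = pedigree_gsd(factors)
samples = sample_lognormal(median=10.0, gsd=gsd, n=2001)
print(round(gsd, 4), len(samples))
```

Each material would get one GSD from its data quality ratings, and that same GSD would then be used to generate the distribution for each of its four midpoint results.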

Once the parameter of dispersion based on data quality is quantified, it is possible to generate results showing probability distributions to be used in SMAA calculations (Fig. 2). SMAA is explained in a step-wise manner in Prado and Heijungs (2018). Here, we include a description of the general approach with definition of parameters.

The normalization component of SMAA consists of outranking, namely the PROMETHEE II method, used for ranking purposes (Brans et al. 1986; Brans and Mareschal 2005). Outranking scales results internally, in a pair-wise manner, using a non-linear function. Within each criterion, i (or impact category in the case of LCA), and each Monte Carlo run, r, outranking evaluates whether the difference, \({d}_{ijkr}\) (on the x-axis), between an alternative material, j, and another alternative material, k, falls under complete preference (for j), partial preference (for j), or indifference as to whether j or k is better (Fig. 3). A preference threshold and an indifference threshold per criterion, i (\({P}_{i}\) and \({Q}_{i}\), respectively, on the x-axis), provide the boundaries for each determination. These thresholds can be elicited from experts or, as in the case of LCA, derived from the uncertainty in the data (Rogers and Bruen 1998; Prado-Lopez et al. 2014). The preference threshold, \({P}_{i}\), is the average standard deviation of all alternative materials in impact category i. The indifference threshold, \({Q}_{i}\), is half of \({P}_{i}\).

The outranking function in Fig. 3 is set up so that a lower environmental impact is preferred. Depending on how alternative materials perform with respect to each other, they obtain an outranking score (\({\theta }_{ijkr}\) on the y-axis) of 0, 1, or between 0 and 1, which is what makes SMAA a partially compensatory method. When an alternative material is superior to another material by a magnitude greater than the preference threshold, \({P}_{i}\), the alternative obtains an outranking score of 1, not more. Likewise, if the alternative material is inferior or indifferent, the alternative obtains a score of 0, not less. If the difference, \({d}_{ijkr}\), lies between the indifference and preference thresholds, the alternative material obtains a score, \({\theta }_{ijkr}\), between 0 and 1.
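The threshold definitions and the bounded outranking score can be sketched for a single criterion and a single Monte Carlo run. The shape of the function between the two thresholds is not stated in the text; the sketch assumes a linear ramp, as in the PROMETHEE criterion with linear preference and an indifference area. The function names and sample values are hypothetical.

```python
from statistics import mean, stdev


def thresholds(samples_by_material):
    """P = mean of the per-material sample standard deviations; Q = P / 2."""
    per_material_sd = [stdev(s) for s in samples_by_material.values()]
    p = mean(per_material_sd)
    return p, p / 2.0


def outranking_score(impact_j, impact_k, p, q):
    """Preference of j over k for one criterion and one run (lower is better)."""
    d = impact_k - impact_j  # positive when j has the lower impact
    if d <= q:
        return 0.0  # j is inferior to k or the difference is negligible
    if d >= p:
        return 1.0  # complete preference for j, capped at 1
    return (d - q) / (p - q)  # assumed linear ramp for partial preference


# Hypothetical Monte Carlo samples of one impact category for two materials.
samples = {"A": [1.0, 2.0, 3.0], "B": [2.0, 4.0, 6.0]}
p, q = thresholds(samples)
print(p, q)                                # 1.5 0.75
print(outranking_score(1.0, 3.0, p, q))    # 1.0
print(outranking_score(1.0, 2.125, p, q))  # 0.5
print(outranking_score(3.0, 1.0, p, q))    # 0.0
```

Because the score is capped at 1, a very large advantage in one category earns no more than a moderately large one, which is what limits compensation.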

The main differences between SMAA and the other methods evaluated in this study are that, in outranking, (1) there is a limit to the normalized value and (2) the outranking score is tied to the mutual differences with respect to uncertainty. An alternative may be superior by a wider margin in one aspect (impact area) than in another, but the better (alternative) material would not obtain more than the maximum number of points. In addition, by including uncertainty, SMAA takes into account the whole range of performance, that is, the stochastic value of the LCA midpoint result via Monte Carlo runs (the values obtained randomly, in successive Monte Carlo simulations, from the probability distribution determined for each impact category). Therefore, an outranking score is assigned per run, as indicated by the subscript r in Fig. 3. It may be that alternative A is superior to B in some runs and indifferent or inferior in other runs. Thus, outranking scores reflect this uncertainty. Moreover, when evaluating the mutual difference with respect to uncertainty, alternative material, k, needs to have a larger difference from material j in the aspects (impact areas) with greatest uncertainty to fall within the complete preference range. This requirement means that multiple aspects (impact categories) with different levels of uncertainty can be considered and included in the analysis, instead of filtering out aspects with high uncertainty from the start. By including the uncertainty in the aggregation procedure, SMAA is sensitive to changes in uncertainty information. In the absence of a measure of maximum impact allowed (such as a planetary boundary to the scale of kg of raw materials for textiles), a useful metric to inform the decision is the mutual difference (Prado-Lopez et al. 2016). The impact category that has the largest difference is the impact category for which the decision has the highest impact.

Weights in SMAA are also assigned as exogenous coefficients of importance or independent variables, each of which may be important. Instead of applying a single weight to each impact category, SMAA applies a distribution of weights that reflects all the possible preferences. Stochastic weights provide an alternative to the “equal weights” that were chosen for the Higg MSI, which was partially designed to reflect a neutral stance as to the importance of any single impact category. With stochastic weights, it is possible to cover the whole spectrum of choices that a user may make about the importance of an impact category and to avoid imposing a particular value system, which is a concern in the ISO interpretation guidelines for sharing comparative assertions in LCA. Since SMAA does not use the weighting method prohibited in ISO 14044 and does not violate the underlying reason behind the prohibition, it may be used for public disclosure.
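One way to realize a distribution of weights that covers all possible preferences is to sample weight vectors uniformly from the set of non-negative weights that sum to one. The sketch below does this by normalizing independent exponential draws. The uniform sampling scheme, the function name, and the seed are assumptions made for illustration; the text does not specify how the weights are drawn.

```python
import random


def sample_weights(n_categories, rng):
    """Draw one weight vector uniformly from all non-negative vectors summing to 1.

    Normalizing independent exponential draws yields a flat distribution
    over the weight space (an assumption; other distributions are possible).
    """
    draws = [rng.expovariate(1.0) for _ in range(n_categories)]
    total = sum(draws)
    return [x / total for x in draws]


rng = random.Random(42)  # fixed seed so the illustration is reproducible
# One weight vector per Monte Carlo run, for four impact categories
weight_sets = [sample_weights(4, rng) for _ in range(1000)]
print(weight_sets[0])
```

Each run then uses a different weight vector, so no single value system is imposed on the comparison.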

More importantly, the use of stochastic weight coefficients with an outranking scaling does not represent a “typical weighting error” as described for the application of the weighted sum. Thus, aggregation based on outranking and importance coefficients represents a methodologically sound approach.

In the end, aggregation in SMAA is a function of the outranking scores referred to as positive flows (the alternative versus the others) and negative flows (the others in relation to the alternative in question) that are weighted with the stochastic weights and summed up to generate a set of stochastic scores which are later turned into a probabilistic ranking (Tervonen and Lahdelma 2007). Probabilistic rankings give the likelihood that an alternative occupies a certain rank. For instance, alternative A may be 70% likely to rank first.
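The aggregation step can be sketched end to end as follows. This is a simplified illustration, not the implementation used in the study: it assumes a linear outranking function between the thresholds, uniformly sampled weights, and a ranking by net flow (positive minus negative flow) in each run. All names and data are hypothetical.

```python
import random


def outranking_score(d, p, q):
    """Assumed linear preference function; d > 0 favors the first alternative."""
    if d <= q:
        return 0.0
    if d >= p:
        return 1.0
    return (d - q) / (p - q)


def sample_weights(n, rng):
    """Uniform random weights that sum to 1 (an assumption)."""
    draws = [rng.expovariate(1.0) for _ in range(n)]
    total = sum(draws)
    return [x / total for x in draws]


def rank_acceptability(runs, p, q, seed=0):
    """Return acc[j][rank]: share of runs in which alternative j takes that rank.

    runs[r][j][i]: sampled impact of alternative j in category i for run r.
    p[i], q[i]: preference and indifference thresholds per category.
    Rank index 0 is the most preferred position.
    """
    rng = random.Random(seed)
    n_alt, n_cat = len(runs[0]), len(p)
    counts = [[0] * n_alt for _ in range(n_alt)]
    for run in runs:
        w = sample_weights(n_cat, rng)
        net = []
        for j in range(n_alt):
            pos = neg = 0.0
            for k in range(n_alt):
                if k == j:
                    continue
                for i in range(n_cat):
                    # Positive flow: j versus k. Negative flow: k versus j.
                    pos += w[i] * outranking_score(run[k][i] - run[j][i], p[i], q[i])
                    neg += w[i] * outranking_score(run[j][i] - run[k][i], p[i], q[i])
            net.append(pos - neg)
        order = sorted(range(n_alt), key=lambda j: net[j], reverse=True)
        for rank, j in enumerate(order):
            counts[j][rank] += 1
    return [[c / len(runs) for c in row] for row in counts]


# Hypothetical example: 3 alternatives, 2 categories, 200 runs with noise
data_rng = random.Random(1)
means = [[1.0, 4.0], [2.0, 2.0], [4.0, 1.0]]
runs = [[[data_rng.gauss(m, 0.5) for m in alt] for alt in means] for _ in range(200)]
for row in rank_acceptability(runs, p=[0.5, 0.5], q=[0.25, 0.25]):
    print([round(x, 2) for x in row])
```

Each printed row is the probabilistic ranking of one alternative, that is, the likelihood that it occupies each rank.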

2.5 Monetization

In the monetization approach, no normalization is applied. Instead, each indicator is weighted by the monetary cost of the impact. This study applies a range of monetary values (Table 7) for each impact category to generate a lower and upper score for each alternative (Eq. 4).

\[{Score}_{a,\mathit{low\ or\ high}}=\sum_{i}{CR}_{i,a}\times {MF}_{i,\mathit{low\ or\ high}}\]  (4)

where a refers to an alternative, a textile material in this case; \({CR}_{i,a}\) refers to the characterized result over impact category, i, for alternative, a; and \({MF}_{i,\mathit{low\ or\ high}}\) refers to the monetization factor that converts each unit of impact to monetary units. Monetary values are converted to EUR2018 equivalents as a function of the currency inflation (details found in supplementary Excel file).
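The calculation can be sketched in Python as a sum over impact categories of the characterized result multiplied by the low or high monetization factor. The function name and all numbers below are hypothetical; they are not the factors of Table 7.

```python
def monetized_range(characterized, factors):
    """Return (low, high) monetized scores for one alternative.

    characterized: {category: characterized result for this alternative}
    factors: {category: (low factor, high factor)} in monetary units
             per unit of impact
    """
    low = sum(characterized[c] * factors[c][0] for c in characterized)
    high = sum(characterized[c] * factors[c][1] for c in characterized)
    return low, high


# Hypothetical characterized results and monetization factors
results = {"GW": 2.0, "EUT": 0.5}
money = {"GW": (1.0, 3.0), "EUT": (2.0, 4.0)}
print(monetized_range(results, money))
```

No normalization step appears because the monetization factors already bring all categories to a common monetary unit.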

Values for global warming derive from a meta-analysis that takes into account the damage caused by each additional kg of CO2 eq (Bruyn et al. 2010). This range is also consistent with other methods that take into account abatement and damage costs (Table 3 in Trucost 2015). Eutrophication costs are based on damage (for the low estimate) and abatement (for the high estimate) costs (Bruyn et al. 2010). The costs for abiotic depletion range from zero, under an abatement and damage cost perspective in which depleted resources have no costs associated with damage or abatement (Bruyn et al. 2010), to 2.9 USD2008 kJ−1 for crude oil according to a cost surplus approach (Ponsioen et al. 2014). For the case of abiotic depletion, this study therefore includes a cost estimate other than damage or abatement, with the aim of representing a range of values. This monetization approach serves as a screening assessment based on damage and abatement costs. If monetization is instead based on market prices, such as the price of carbon (or others that emerge in efforts to combat environmental impact), it will be necessary to consider the variability of markets in the impact assessment. It is recommended that a further literature review be performed in this case, given the variety of approaches and estimations specific to particular situations and geographical locations.

3 Results

Prior to assessing the results of the different aggregation approaches, it is important to recap the midpoint performances per raw material (Fig. 1). At midpoint, raw material B is best in two out of four impact categories, and it does not perform worst in the remaining two. Raw materials C and D are neither the best nor the worst in any impact category, which means both materials are somewhere in the middle across the four impact categories. Raw material A is the best in one category and the worst in one. Similarly, raw material F is not the best in any category but is nearly the best in one category and worst in another. Finally, raw material E is worst in one category and is not the best in any category. The extent to which a raw material is “best” or “worst” is different in each case, which has to do with the measure of spread.

Results of the different aggregation methods show the contribution of impact categories to the single score: global warming (GW), eutrophication (EUT), water scarcity (WS), and abiotic depletion (ABD). Based on the total Higg MSI score, the materials can be ranked to assess how the different aggregation approaches perform in judging the relative favorability of the materials.

Starting with the current Higg MSI aggregation method, results show that raw material B has the lowest total score, meaning the Higg MSI ranks it as the most environmentally preferred material of the six options (Fig. 4). The score also shows that raw material A has the highest total score followed by raw material F. Global warming, abiotic depletion, and eutrophication make up most of the score for most of the materials, and water scarcity dominates the score of raw material A. Raw materials C and D rank in the middle of the materials (second and third place), and raw material E ranks fourth, despite not having a preferable performance in any impact category.

When applying internal normalization of division by the maximum, results show the same rank ordering as in the Higg MSI, but the total scores are closer in magnitude (Fig. 5). Here, raw material B still has the lowest impact score. Raw materials D and E still rank in the middle, and raw materials A and F are the two materials with the highest impact scores, ranking in fifth and sixth place (Fig. 5). The composition of single scores in the method of internal normalization is dominated by eutrophication and abiotic depletion. Global warming does not have as large an influence as with the current Higg MSI (Fig. 4).

Results using the corresponding global normalization references generate a single score where raw material B has the lowest impact score and raw material A the largest score (Fig. 6). A difference in the ranking of materials occurs with raw materials D and E, in which raw material E has a better single score than D. The profile of scores in this aggregation approach has visible contributions from half of the impact categories. The other two impact categories are orders of magnitude smaller and have no influence on the results once normalized, which is due to the magnitude of the normalization reference. These results show a case in which the normalization reference is most dominant.
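The effect of the normalization reference on the score profile can be illustrated with a short sketch. Each category contributes its weighted characterized result divided by the normalization reference, so a category with a very large reference nearly vanishes from the single score. The function name, the equal weights, and all values are hypothetical and chosen only to make the effect visible.

```python
def normalized_contributions(characterized, references, weights=None):
    """Share of each category in a weighted-sum score after external normalization.

    characterized: {category: characterized result of one alternative}
    references: {category: normalization reference in the same unit}
    weights: {category: weight}; equal weights are assumed if omitted
    """
    cats = list(characterized)
    if weights is None:
        weights = {c: 1.0 / len(cats) for c in cats}
    parts = {c: weights[c] * characterized[c] / references[c] for c in cats}
    total = sum(parts.values())
    return {c: parts[c] / total for c in cats}


# Hypothetical values: identical results, references differing by orders of magnitude
results = {"GW": 1.0, "EUT": 1.0, "WS": 1.0, "ABD": 1.0}
refs = {"GW": 1e2, "EUT": 1e2, "WS": 1e6, "ABD": 1e6}
print(normalized_contributions(results, refs))
```

In this illustration, the two categories with the larger references contribute a negligible share even though the weights are equal.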

The Higg MSI utilizes characterized impact categories of three different life cycle impact assessment methods: IPCC, CML, and Pfister et al. (2009), which each have an annual world normalization reference for a different year and a different compilation approach. Therefore, aggregation using a global normalization, in this case, can suffer from additional inconsistencies beyond those previously discussed for this approach. A similar pattern where the normalization reference dominates has been identified in various impact assessment methods (Prado et al. 2017a).

Monetization of life cycle impacts allows for the aggregation of results into a single monetary metric. Figure 7 shows the results of the monetization approach and the low and high estimates per impact category for each raw material. Results show that, on average, the environmental impacts of raw material A have the highest cost, followed by raw material F. Raw material B has the lowest cost, according to this approach, meaning that it is the preferred material from an environmental impact standpoint. The contributions to the total cost (Fig. 7) show that, for raw material A, the costs due to water scarcity dominate, which is in alignment with the contributions in the other aggregation approaches. For the other materials, most of the cost comes from the global warming impacts.

Given that SMAA takes into account the uncertainty of performance in each impact category, results when using SMAA show a probabilistic ranking, which is illustrated by the likelihood of each alternative occupying a certain rank. Rank 1 represents the most environmentally preferred material, and rank 6 is the least preferable material, given the aggregated performance of the four environmental indicators and stochastic weights.

Results show that raw material B is the most likely preferable material, followed by raw material C (Fig. 8). Because these outcomes are not deterministic ranks, there is no exact ordering of alternatives; rather, the graph informs how competitive each material is with respect to the others. Here, raw material B is a strong best alternative, as it has an 80% likelihood of occupying the first rank. Raw material C has a 50% likelihood of occupying the second rank. Raw material D is somewhat equally distributed between the third, fourth, and fifth ranks, with a 27%, 27%, and 32% likelihood of occupying these ranks, respectively. Therefore, raw material D does not have a dominant position in a single rank, as raw material B or raw material C do; rather, it is a competitive midrange alternative. Raw material A has a likelihood that is not particularly strong in any single rank, and it can occupy any of the six ranks with a similar probability: 8%, 20%, 13%, 21%, 14%, and 25%, respectively. Although the highest likelihood for raw material A is in the last rank, raw material A is not the most likely last material; rather, raw material E is. This change for raw material E is a key difference in comparison with all the other aggregation methods. The other methods place raw material E more favorably in the middle, despite raw material E not excelling in any impact category and having the highest impact in one impact category.

Probabilistic ranking of materials generated with SMAA. Rank 1 on the x-axis represents the most preferable position, which is most likely occupied by raw material B. The last place, rank 6, is most likely occupied by raw material E, making it the least preferable material, followed by raw materials A and F. Raw materials C and D rank in the middle

Both raw material A and raw material F have a group of probabilities that permit occupying the whole range of ranks, without one rank being particularly likely, because their environmental performances have high tradeoffs with respect to the other materials. They are among the two best performing materials for half the impact categories (GW and ABD for raw material A, and ABD and WS for raw material F) and among the two worst materials in the other two categories (EUT and WS for raw material A, and GW and EUT for raw material F) (Fig. 1). Lastly, raw material E appears to be the least environmentally preferred material, with the highest likelihood of occupying the sixth rank.

SMAA results are a product of 1000 Monte Carlo runs in which the individual impact category contributions to the aggregated score vary each time. To plot the contributions of the impact categories for the SMAA method and to understand the composition of the scores in the Monte Carlo runs, the average contribution of an impact category to the score across the runs can be visualized (Fig. 9). Unlike the other contribution plots, this graph indicates the categories and the extent to which each category positively contributed to the score, not the impact magnitude per se. A larger contribution from an impact category in Fig. 9 points to a favorable performance in that category as compared to the others. These represent positive contributions which “help” a material rank more favorably. For example, raw material A has a favorable performance in GW and ABD and therefore gets most of its “good” points from these categories.
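One possible way to compute such average positive contributions is sketched below. The exact definition behind Fig. 9 is not given in the text, so this is an assumption: for a chosen alternative, the weighted outranking scores it earns against all other alternatives are summed per category and averaged over the runs. The linear outranking function, names, and data are hypothetical.

```python
def outranking_score(d, p, q):
    """Assumed linear preference function; d > 0 favors the first alternative."""
    if d <= q:
        return 0.0
    if d >= p:
        return 1.0
    return (d - q) / (p - q)


def average_positive_contributions(runs, weights, p, q, j):
    """Average weighted positive outranking of alternative j, per category.

    runs[r][k][i]: sampled impact of alternative k in category i for run r.
    weights[r][i]: weight of category i in run r.
    p[i], q[i]: preference and indifference thresholds per category.
    """
    n_cat = len(p)
    totals = [0.0] * n_cat
    for run, w in zip(runs, weights):
        for i in range(n_cat):
            for k, other in enumerate(run):
                if k != j:
                    d = other[i] - run[j][i]  # positive when j has lower impact
                    totals[i] += w[i] * outranking_score(d, p[i], q[i])
    return [t / len(runs) for t in totals]


# Hypothetical data: 2 alternatives, 2 categories, 2 identical runs
runs = [[[1.0, 10.0], [5.0, 5.0]]] * 2
weights = [[0.5, 0.5]] * 2
print(average_positive_contributions(runs, weights, p=[1.0, 1.0], q=[0.5, 0.5], j=0))
```

In this illustration, alternative 0 earns all of its points in the first category, where it has the lower impact, and none in the second.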

4 Discussion

After a thorough evaluation of the current aggregation approach in the Higg MSI, the limitations of the weighted sum approach were found to affect comparative results in a negative way. The ranking of various materials in the Higg MSI does not represent the tradeoffs at midpoint, which can be seen in the relative aggregated scores for raw materials A, E, and F. All aggregation methods favored raw material B, which can be justified on the basis of its midpoint performance, but fully compensatory methods also favored raw material E over A and F, even though E does not excel in any impact category, while A and F do (Fig. 1).

Figure 10 shows a summary of the resulting ranks of the raw materials' aggregated scores, and a pattern can be identified between compensatory and partially compensatory methods. The rankings of raw materials are more similar among the compensatory methods than between these and SMAA (partially compensatory), where raw materials A and F go up in rank and raw material E goes down in rank. Raw materials A and F have favorable performances in half the impact categories, but in compensatory methods these still have lower ranks than raw material E, which does not excel in any impact category and is worst in one. In SMAA, the only partially compensatory method, raw material E takes the last rank (going from rank 4 in compensatory approaches to rank 6) and raw materials A and F go up in rank.

Summary of aggregation results. The color indicates the rank, ranging from blue for the best rank (1) to red for the last rank (6), for each material according to the aggregation methods tested. This figure shows that raw materials B and C rank first and second, respectively, across aggregation methods, whereas raw material E ranges between rank 3 and 4 in the compensatory methods but ranks last (6) in SMAA

The differences in rank orderings between methods are due to the level of compensation. A weighted sum, for instance, which is the basis of the compensatory methods, allows for burden shifting between impact categories, limiting the ability of a method to provide a balanced assessment of pros and cons and thus reducing the ability of the Higg MSI to guide environmental sustainability decisions. This is evidenced by the fact that a raw material with a less favorable performance across midpoints (such as raw material E) scores better than materials with a more favorable performance across midpoints, such as raw material A.

There are various aspects affecting the robustness of the current Higg MSI, starting with the fact that the impact categories contribute to the final score in a manner inversely proportional to the industry’s benchmark (normalization reference) and not according to the measure of spread between alternatives, making this a case of a typical weighting error.

Robustness is also affected by basing the index on average performances (midpoints) when these performances are not equally representative, i.e., have different measures of spread, for the type of materials evaluated. Datasets of biobased materials typically have much higher uncertainties (and variability) than datasets of industrially produced materials, such as fossil-based polymers. Exclusion of uncertainty can be misleading in the case of comparisons involving industrial and agricultural systems if averages are not comparable given the spread. This lack of clarity is a concern for the users of the Higg MSI (Watson and Wiedemann 2019).

Uncertainty of life cycle assessment results is not accounted for in the current aggregation method in the Higg MSI. The closest information to uncertainty is the set of additional data quality descriptors, and it is up to the decision maker to interpret these on their own. Even when two materials are indistinguishable once uncertainty is included, these methods include the average difference in the assessment. Including the range of most likely performances and knowing where results are statistically meaningful can contribute to better decisions.

Additionally, the ability to take into account information with different levels of uncertainty would enable the Higg MSI to include a more complete set of environmental indicators and provide better information for decision-making. This can also improve comparisons of agricultural and industrial based systems, a common comparison, as data would be interpreted with the spread. This would provide a more holistic representation of environmental sustainability while attending to the concerns of data robustness.

As an alternative to aggregation based on a weighted sum, there is SMAA which can account for uncertainty in the aggregation of results and can incorporate information with different levels of uncertainty.

Furthermore, outranking normalization as performed in SMAA facilitates inclusion of input criteria in different scales (ordinal or cardinal), which allows for consideration of qualitative criteria. In the current Higg MSI, by contrast, input criteria are normalized by the industry’s performance, leaving the analysis subject to inverse proportionality. In SMAA, normalization is relative to the alternatives being considered, which allows for inclusion of input criteria without comparing to the industry’s performance. It must be noted that the impacts of the entire industry, which are currently used as a normalization reference, still serve a purpose in benchmarking for industry-wide assessments.

SMAA opens an opportunity to consider emerging concerns of the industry for which there are no operational impact assessment models yet. Examples of these concerns are microplastic release and biodegradability, which are currently not considered in life cycle impact assessments. These topics are important to the industry and consumers due to the unknown impacts associated with microplastics. Criteria of biodegradability could be included in SMAA through several possible paths, such as a qualitative scale (1 to 5) corresponding to the time a material takes to biodegrade, or a yes/no criterion describing whether the material is biodegradable or not, depending on the information available. The current form of aggregation of the Higg MSI is limited in its ability to address the industry’s current environmental sustainability concerns and, as a result, this method limits the ability of the Higg MSI to inform decision making or adapt to emerging environmental concerns of significance.

The illustration of SMAA in this study uses midpoints, as these are the indicators used in the Higg MSI, and although previous SMAA studies in LCA also use midpoints (Rogers and Seager 2009; Prado-Lopez et al. 2014), SMAA can also technically be applied to endpoints. However, midpoints would be a recommended starting point to avoid the uncertainties in the pathways between midpoint and endpoint in LCIA. Also, one of the aims of endpoints is decision support by means of reducing the number of indicators, but this is provided by SMAA with foundations in the decision analysis field. The discussions in the LCA field between midpoints and endpoints have spanned many years (Bare et al. 2000; Kägi et al. 2016), and the overall message is that midpoints provide more robust information but can be difficult to manage. Endpoints make LCA results easier to manage, but at a cost due to the uncertainties in the pathways, so it remains important to show results at midpoint. Similarly, SMAA would act as a complement to midpoint results, as it is important to understand the underlying tradeoffs.

Although it is expected that SMAA can represent an improvement over current aggregation practice in the Higg MSI (or even the practice of no aggregation), there are challenges to its implementation and communication. First, SMAA requires quantitative uncertainty data, which are currently not available for each midpoint category. A procedure similar to the one performed here, converting the data quality indicators to an estimation of dispersion, can apply to cases for which uncertainty results are not available or as a transition while the database gathers the required information (instead of requiring midpoint values, the Higg MSI can request the mean and standard deviation of results). This will require efforts to adapt the submission process and platform to calculate the single scores accordingly.
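If a mean and a standard deviation were submitted per midpoint category, the stochastic inputs for the Monte Carlo runs could be generated along the following lines. The lognormal shape is an assumption made here because it keeps impacts positive; the text does not prescribe a distribution at this point, and the function name and values are hypothetical.

```python
import math
import random


def lognormal_draws(mean, std, n_runs, seed=0):
    """Draw n_runs positive values from a lognormal with the given mean and std.

    Assumption: impacts are lognormally distributed. The parameters of the
    underlying normal distribution are obtained by moment matching.
    """
    sigma2 = math.log(1.0 + (std / mean) ** 2)
    mu = math.log(mean) - sigma2 / 2.0
    rng = random.Random(seed)
    return [rng.lognormvariate(mu, math.sqrt(sigma2)) for _ in range(n_runs)]


# Hypothetical midpoint result: mean 10.0, standard deviation 2.0, 1000 runs
draws = lognormal_draws(10.0, 2.0, 1000)
print(sum(draws) / len(draws))
```

The resulting draws, one per run and per alternative, are the values that enter the pair-wise outranking comparison.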

Besides implementation, another challenge with SMAA results may be interpretation. Explaining the results can be more difficult than the current bar charts because the SMAA graphs are portraying more information and because the audience is less familiar with distributions and uncertainty in general. To address these concerns, it would be necessary to draft different viewing modes, so that SMAA results can be simplified according to the audience. Also, it could be useful to provide tutorials on how to interpret results as part of the help section on the Higg Web site.

It must be noted that SMAA is only applicable for comparative assessments as it produces a number that is relative to the alternatives being compared. This result is unlike the current method of aggregation (and the other compensatory methods included here), which generates a single score that is independent of the comparison being made. This means that results of a comparison are only valid for that comparison and the alternatives included and cannot be taken out of context.

5 Conclusion

The current Higg MSI allows for full compensation between indicators and does not provide a balanced view of tradeoffs in textile materials. This also means that the analysis does not consider the significance of mutual differences and creates an index vulnerable to the effects of inverse proportionality (masking the aspects most impactful to the industry and vice versa) while neglecting uncertainty of life cycle impacts. The current approach to aggregation in the Higg MSI has room for improvement, which could enhance the ability of the Higg MSI to support industry decisions. This does not necessarily mean that aggregation has to be avoided, as planned for 2021, but rather that it should be improved.

To enable a treatment of tradeoffs that is in line with a strong sustainability perspective, it is recommended that the Higg MSI examine implementation of a partially compensatory aggregation approach instead of leaving decision makers unaided. Such an approach can consider uncertainty in the data, provide more meaningful comparisons between bio and industrial systems, and enable the inclusion of environmental criteria with higher uncertainty levels, as well as qualitative indicators.

As the industry makes advances in sustainability efforts, the Higg MSI should strive to provide an environmental sustainability decision tool that (1) enhances understanding of the environmental sustainability of material operations and enables identification of improvement options; (2) covers impacts broadly in a way that can adapt to the industry’s environmental concerns and each business’s strategy; and (3) applies methodologies that are defensible regardless of the material in question, incentivizing a healthy competition for environmental stewardship among industry members.

References

Ahlroth S (2014) The use of valuation and weighting sets in environmental impact assessment. Resour Conserv Recycl 85:34–41. https://doi.org/10.1016/j.resconrec.2013.11.012

Bare J (2011) TRACI 2.0: The tool for the reduction and assessment of chemical and other environmental impacts 2.0. Clean Technol Environ Policy 13:687–696. https://doi.org/10.1007/s10098-010-0338-9

Bare J, Gloria T (2006) Critical analysis of the mathematical relationships and comprehensiveness of life cycle impact assessment approaches. Environ Sci Technol 40:1104–1035. https://doi.org/10.1021/es051639b

Bare JC, Hofstetter P, Pennington DW, de Haes HAU (2000) Midpoints versus endpoints: the sacrifices and benefits. Int J Life Cycle Assess 5:319–326. https://doi.org/10.1007/BF02978665

Bengtsson M, Steen B (2000) Weighting in LCA – approaches and applications. Environ Prog 19:101–109. https://doi.org/10.1002/ep.670190208

Brans JP, Mareschal B (2005) PROMETHEE methods. In: Figueira JR, Greco S, Ehrgott M (eds) Multiple Criteria Decision Analysis. State of the Art Surveys. Springer New York, pp 163–195

Brans JP, Vincke P, Mareschal B (1986) How to select and how to rank projects: the Promethee method. Eur J Oper Res 24:228–238. https://doi.org/10.1016/0377-2217(86)90044-5

Bruyn S De, Korteland M, Markowska A, et al (2010) Shadow Prices Handbook Valuation and weighting of emissions and environmental impacts. Delft

Buchanan J, Kock N (2001) Multiple criteria decision making in the new millennium. Lecture notes in economics and mathematical systems. In: Köksalan M, Zionts S (eds) Multiple Criteria decision making in the new millennium. Springer, pp 49–58

Castellani V, Sala S, Benini L (2016) Hotspots analysis and critical interpretation of food life cycle assessment studies for selecting eco-innovation options and for policy support. J Clean Prod 1–13. https://doi.org/10.1016/j.jclepro.2016.05.078

Ciroth A, Muller S, Weidema B, Lesage P (2016) Empirically based uncertainty factors for the pedigree matrix in ecoinvent. Int J Life Cycle Assess 21:1338–1348. https://doi.org/10.1007/s11367-013-0670-5

Edwards W, Barron FH (1994) Smarts and smarter: Improved simple methods for multiattribute utility measurement. Organ Behav Hum Decis Process 60:306–325

Finnveden G (1999) A critical review of operational valuation/weighting methods for life cycle assessment. Swedish Environ Prot Agency

Fischer G (1995) Range sensitivity of attribute weights in multiattribute value models. Organ Behav Hum Decis Process 62:252–266

Frischknecht R, Jungbluth N, Althaus H-J et al (2004) The ecoinvent database: overview and methodological framework (7 pp). Int J Life Cycle Assess 10:3–9. https://doi.org/10.1065/lca2004.10.181.1

Goedkoop M, Heijungs R, Huijbregts M et al (2009) ReCiPe 2008. Report 1: Characterisation

Goette L, Han H-J, Leung B (2019) Information overload and confirmation bias. Cambridge Work Pap Econ

Guinée JB (ed) (2002) Handbook on life cycle assessment operational guide to the ISO standards

Hammond J, Keeney RL, Raiffa H (1999) Smart choices. Harvard Business School Press, Boston, MA

ISO 14044 (2006) ISO 14044: Environmental Management — Life Cycle Assessment — Requirements and Guidelines. Environ Manage 3–54

Itsubo N, Murakami K, Kuriyama K et al (2015) Development of weighting factors for G20 countries—explore the difference in environmental awareness between developed and emerging countries. Int J Life Cycle Assess 1–16. https://doi.org/10.1007/s11367-015-0881-z

Kägi T, Dinkel F, Frischknecht R et al (2016) Session “Midpoint, endpoint or single score for decision-making?”—SETAC Europe 25th Annual Meeting, May 5th, 2015. Int J Life Cycle Assess 21:129–132. https://doi.org/10.1007/s11367-015-0998-0

Keeney RL (2002) Common Mistakes in Making Value Trade-Offs. Oper Res 50:935–945. https://doi.org/10.1287/opre.50.6.935.357

Lautier A, Rosenbaum RK, Margni M et al (2010) Development of normalization factors for Canada and the United States and comparison with European factors. Sci Total Environ 409:33–42. https://doi.org/10.1016/j.scitotenv.2010.09.016

Lewandowska A, Foltynowicz Z, Podlesny A (2004) Comparative LCA of industrial objects part 1: LCA data quality assurance — sensitivity analysis and pedigree matrix. Int J Life Cycle Assess 9:86–89. https://doi.org/10.1007/BF02978567

Lollo N, O’Rourke D (2020) Measurement without clear incentives to improve: the impacts of the higg facility environmental module (FEM) on apparel factory practices and performance. SocArXiv. https://doi.org/10.31235/osf.io/g67d8

Muller S, Lesage P, Ciroth A et al (2016) The application of the pedigree approach to the distributions foreseen in ecoinvent v3. Int J Life Cycle Assess 21:1327–1337. https://doi.org/10.1007/s11367-014-0759-5

Munda G (2016) Multiple Criteria decision analysis and sustainable development. In: Greco S, Ehrgott M, Figueira JR (eds) Multiple criteria decision analysis: state of the art surveys. New York, pp 1235–1267

Munda G, Nardo M (2009) Noncompensatory/nonlinear composite indicators for ranking countries: a defensible setting. Appl Econ 14:1513–1523. https://doi.org/10.1080/00036840601019364

Myllyviita T, Leskinen P, Seppälä J (2014) Impact of normalisation, elicitation technique and background information on panel weighting results in life cycle assessment. Int J Life Cycle Assess 19:377–386. https://doi.org/10.1007/s11367-013-0645-6

Norris GA (2001) The requirement for congruence in normalization. Int J Life Cycle Assess 6:85–88. https://doi.org/10.1007/BF02977843

Pfister S, Koehler A, Hellweg S (2009) Assessing the environmental impact of freshwater consumption in life cycle assessment. Environ Sci Technol 43:4098–4104. https://doi.org/10.1021/es802423e

Pollesch N, Dale VH (2015) Applications of aggregation theory to sustainability assessment. Ecol Econ 114:117–127. https://doi.org/10.1016/j.ecolecon.2015.03.011

Pollesch NL, Dale VH (2016) Normalization in sustainability assessment: Methods and implications. Ecol Econ 130:195–208. https://doi.org/10.1016/j.ecolecon.2016.06.018

Ponsioen TC, Vieira MDM, Goedkoop MJ (2014) Surplus cost as a life cycle impact indicator for fossil resource scarcity. Int J Life Cycle Assess 19:872–881. https://doi.org/10.1007/s11367-013-0676-z

Prado-Lopez V, Seager TP, Chester M et al (2014) Stochastic multi-attribute analysis (SMAA) as an interpretation method for comparative life-cycle assessment (LCA). Int J Life Cycle Assess 19:405–416. https://doi.org/10.1007/s11367-013-0641-x

Prado-Lopez V, Wender BA, Seager TP et al (2016) Tradeoff evaluation improves comparative life cycle assessment: a photovoltaic case study. J Ind Ecol 20. https://doi.org/10.1111/jiec.12292

Prado V, Cinelli M, Haar SF Ter et al (2019) Sensitivity to weighting in life cycle impact assessment (LCIA). Int J Life Cycle Assess

Prado V, Heijungs R (2018) Implementation of stochastic multi attribute analysis (SMAA) in comparative environmental assessments. Environ Model Softw 109:223–231. https://doi.org/10.1016/j.envsoft.2018.08.021

Prado V, Rogers K, Seager TP (2012) Integration of MCDA tools in valuation of comparative life cycle assessment

Prado V, Wender BA, Seager TP (2017a) Interpretation of comparative LCAs: external normalization and a method of mutual differences. Int J Life Cycle Assess 22:2018–2029. https://doi.org/10.1007/s11367-017-1281-3

Prado V, Wender BA, Seager TP (2017b) Interpretation of comparative LCAs: external normalization and a method of mutual differences. Int J Life Cycle Assess 22:2018–2029. https://doi.org/10.1007/s11367-017-1281-3

Rogers K, Seager TP (2009) Environmental decision-making using life cycle impact assessment and stochastic multiattribute decision analysis: a case study on alternative transportation fuels. Environ Sci Technol 43:1718–1723. https://doi.org/10.1021/es801123h

Rogers M, Bruen M (1998) Choosing realistic values of indifference, preference and veto thresholds for use with environmental criteria within ELECTRE. Eur J Oper Res 107:542–551. https://doi.org/10.1016/S0377-2217(97)00175-6

Rowley HV, Peters GM, Lundie S, Moore SJ (2012) Aggregating sustainability indicators: beyond the weighted sum. J Environ Manage 111:24–33. https://doi.org/10.1016/j.jenvman.2012.05.004

Ryberg M, Vieira MDM, Zgola M et al (2014) Updated US and Canadian normalization factors for TRACI 2.1. Clean Technol Environ Policy 16:329–339. https://doi.org/10.1007/s10098-013-0629-z

SAC (2017) Higg Material Sustainability Index (MSI) Methodology

SAC (2020a) Sustainable Apparel Coalition. https://apparelcoalition.org/higg-product-tools/

SAC (2020b) Evolution of Higg MSI will deepen and expand focus on product-level impacts in 2021. Press Release

Sleeswijk AW, van Oers LFCM, Guinée JB et al (2008) Normalisation in product life cycle assessment: An LCA of the global and European economic systems in the year 2000. Sci Total Environ 390:227–240. https://doi.org/10.1016/j.scitotenv.2007.09.040

Stewart TJ (2008) Robustness Analysis and MCDA. In: European Working Group Multiple Criteria Decision Aiding. Newsletter of the European Working Group “Multicriteria Aid for Decisions”

Tervonen T, Lahdelma R (2007) Implementing stochastic multicriteria acceptability analysis. Eur J Oper Res 178:500–513. https://doi.org/10.1016/j.ejor.2005.12.037

Tolle D (1997) Regional Scaling and Normalization in LCIA. Int J Life Cycle Assess 2:197–208

Trucost (2015) Trucost's Valuation Methodology

van Knippenberg D, Dahlander L, George G (2015) From the Editors: Information, Attention, and Decision Making. Acad Manag J 58:649–657

Watson KJ, Wiedemann SG (2019) Review of methodological choices in LCA-based textile and apparel rating tools: key issues and recommendations relating to assessment of fabrics made from natural fibre types. Sustainability 11:

Weidema BP, Wesnæs MS (1996) Data quality management for life cycle inventories—an example of using data quality indicators. J Clean Prod 4:167–174. https://doi.org/10.1016/S0959-6526(96)00043-1

White P, Carty M (2010) Reducing bias through process inventory dataset normalization. Int J Life Cycle Assess 15:994–1013. https://doi.org/10.1007/s11367-010-0215-0

Wulf C, Zapp P, Schreiber A et al (2017) Lessons learned from a life cycle sustainability assessment of rare earth permanent magnets. J Ind Ecol 00:1–13. https://doi.org/10.1111/jiec.12575

Additional information

Communicated by Adriana Del Borghi.

Cite this article

Prado, V., Daystar, J., Wallace, M. et al. Evaluating alternative environmental decision support matrices for future Higg MSI scenarios. Int J Life Cycle Assess 26, 1357–1373 (2021). https://doi.org/10.1007/s11367-021-01928-8

DOI: https://doi.org/10.1007/s11367-021-01928-8