Abstract

Purpose

The use of life cycle assessment (LCA) as a decision support tool can be hampered by the numerous uncertainties embedded in the calculation. The treatment of uncertainty is necessary to increase the reliability and credibility of LCA results. The objective is to provide an overview of the methods to identify, characterize, propagate (uncertainty analysis), understand the effects (sensitivity analysis), and communicate uncertainty in order to propose recommendations to a broad public of LCA practitioners.

Methods

This work was carried out via a literature review and an analysis of LCA tool functionalities. In order to facilitate the identification of uncertainty, its location within an LCA model was distinguished between quantity (any numerical data), model structure (relationships structure), and context (criteria chosen within the goal and scope of the study). The methods for uncertainty characterization, uncertainty analysis, and sensitivity analysis were classified according to the information provided, their implementation in LCA software, the time and effort required to apply them, and their reliability and validity. This review led to the definition of recommendations on three levels: basic (low efforts with LCA software), intermediate (significant efforts with LCA software), and advanced (significant efforts with non-LCA software).

Results and discussion

For the basic recommendations, minimum and maximum values (quantity uncertainty) and alternative scenarios (model structure/context uncertainty) are defined for critical elements in order to estimate the range of results. Result sensitivity is analyzed via one-at-a-time variations (with realistic ranges of quantities) and scenario analyses. Uncertainty should be discussed at least qualitatively in a dedicated paragraph. For the intermediate level, the characterization can be refined with probability distributions and an expert review for scenario definition. Uncertainty analysis can then be performed with the Monte Carlo method for the different scenarios. Quantitative information should appear in inventory tables and result figures. Finally, advanced practitioners can screen uncertainty sources more exhaustively, include correlations, estimate model error with validation data, and perform Latin hypercube sampling and global sensitivity analysis.

Conclusions

Through this pedagogic review of the methods and practical recommendations, the authors aim to increase the knowledge of LCA practitioners related to uncertainty and facilitate the application of treatment techniques. To continue in this direction, further research questions should be investigated (e.g., on the implementation of fuzzy logic and model uncertainty characterization) and the developers of databases, LCIA methods, and software tools should invest efforts in better implementing and treating uncertainty in LCA.

1 Introduction

Life cycle assessment (LCA) methodology is now a recognized and widespread approach for evaluating the environmental impacts of products, technologies, and policies. The use of LCA as a decision support tool can, however, be hampered by the numerous uncertainties embedded in the calculation, as well as the fact that the results cannot be verified, validated, or confirmed due to many constraints (technical, conceptual, legal, etc.) (Oreskes et al. 1994). Suspicion of model choice manipulation and contradictory conclusions can thus emerge from LCA studies. The reliability of LCA should therefore be improved to gain credibility and avoid wrong decisions. It is necessary to address uncertainties within the model and in the results to achieve these objectives and force the practitioner to question the quality of the assessment. The word “model” refers here to the conversion of inputs into outputs (or results). A conventional LCA study uses goal and scope criteria and foreground data as inputs. These are processed by LCA software containing background inventory databases and impact assessment methods (model structure and parameters) to obtain the potential environmental impacts of the studied system (outputs). The development of the background database or impact assessment methods also relies on a specific set of inputs, model formulations, and parameters. The treatment of uncertainty follows the same steps for different types of LCA models: (i) characterization, i.e., the qualitative and quantitative description of uncertainties from the model and inputs; (ii) uncertainty analysis, i.e., the propagation of uncertainty to the outputs; (iii) sensitivity analysis, i.e., the analysis of the influence of input uncertainty on output uncertainty; and (iv) communication, i.e., the ability to inform the audience about uncertainty. 
Although the ISO standards 14040/44 (2006a, b) recommend performing sensitivity and uncertainty analyses, especially for comparative LCA, and although some Product Category Rules (PCR) require a sensitivity analysis, these practices are still not widespread. LCA practitioners tend to restrict themselves to scenario analysis for a few assumptions (e.g., comparing results for different allocation rules or electricity mixes). In the LCA literature, uncertainty is an increasingly prominent topic, as highlighted in Fig. 1. Several scholars have published reviews on the characterization (Heijungs and Frischknecht 2005), propagation (Heijungs and Huijbregts 2004; Groen et al. 2014), sensitivity analysis (Wei et al. 2015; Groen et al. 2016), and communication (Gavankar et al. 2014) of uncertainties. Lloyd and Ries (2007) integrated all these aspects in a survey of 24 LCA studies. However, there have been many developments in the last 10 years (as observed in Fig. 1). In addition, previous papers remain quite theoretical, without giving insights into the practical implementation of methods, and are not always accessible to the majority of LCA practitioners, for example because of the mathematical knowledge they require. There is therefore a need to fill this gap with an updated review of the literature and of LCA software functionalities for the different phases of uncertainty treatment in LCA, in order to support analysts in implementing it regardless of their level of expertise.

Based on a preliminary study carried out for the SCORELCA association (Igos and Benetto 2015), this paper goes one step further than previous literature by explaining and analyzing the feasibility and value of applied methods to characterize (Section 2), propagate (Section 3), analyze the influence of (Sections 4 and 5), and communicate uncertainties in LCA studies. This critical analysis is then translated into practical recommendations for a broad public of LCA practitioners (Section 7). To support the pedagogical approach of this paper, the outcomes of the analysis are summarized at the end of each section and in illustrative tables and figures. In addition, all the reviewed papers (165 documents) are listed and classified in the Electronic Supplementary Material (ESM) table; readers seeking more detail on a specific method can easily find the corresponding references by applying a filter. By facilitating the dissemination of knowledge on uncertainty analysis and its application, this work should help further increase the reliability of LCA studies. The literature search was conducted using Google Scholar and the findit.lu portal (a Luxembourg national library platform grouping several databases such as Scopus, Web of Science, or Wiley library), with the keywords “uncertainty,” “error,” “sensitivity,” and “variability” combined with “life cycle assessment,” “environmental assessment,” or “environmental impact.” Peer-reviewed articles and PhD theses were selected, as well as conference papers if no peer-reviewed article with similar content was available. Since the aim was to obtain an overview of methods applied in LCA, articles using the same methods as previous studies were not included in the list (this was the case for scenario analysis and Monte Carlo sampling). In addition, LCA guidelines, such as the ILCD handbook (European Commission–EC 2010), ISO standards (2006a, b), CALCAS project deliverables (Zamagni et al. 2008), and ecoinvent reports (Weidema et al. 2013), were included in the analysis.

2 Uncertainty identification and characterization

2.1 Sources of uncertainty

Uncertainty was defined by Walker et al. (2003) as “any deviation from the unachievable ideal of completely deterministic knowledge of the relevant system.” The authors identified three dimensions of uncertainty: (i) the location, i.e., the position of uncertainty within the modeling framework; (ii) the level, i.e., the degree of uncertainty; and (iii) the nature, i.e., the relation of uncertainty with reality.

For the location, LCA scholars (Lloyd and Ries 2007; Zamagni et al. 2008) usually distinguish three main aspects: parameters (quantifiable data), the model (structure of relationships), and scenario(s) (choices related to the context of the study). This classification differs slightly from that of Walker et al. (2003), where context replaces scenario (which can be ambiguous), and a distinction is made between inputs (reference data and driving forces) and parameters (used to calibrate the model). In order to avoid confusion, we propose renaming the common LCA categories as quantity (inputs and parameters), model structure, and context. Quantity uncertainty is present for any data used for the inventory and the impact assessment. For the latter, LCA practitioners usually apply characterization factors (CFs) from life cycle impact assessment (LCIA) methods, which are currently provided without uncertainty ranges. Nevertheless, CFs do contain uncertainty, e.g., from the quantities used in cause-effect chain modeling (e.g., reference environment characteristics). Model structure uncertainty corresponds to the mismatch between the modeled (mathematical) relationships and the real causal structure of a system. Indeed, several assumptions are made to build a process inventory or an LCIA model, such as linearity (e.g., consumption per reference flow, toxicity dose-response), absence of correlations (e.g., emissions of a vehicle independent of fuel use), or common behavior within archetypes (e.g., generic CFs for effects specific to local conditions). Model structure uncertainty due to the calculation tool (e.g., sequential or matrix approach used in LCA software) can be neglected. Generally, with a higher level of complexity, model structure uncertainty decreases (better representativeness of reality) while the higher number of required inputs increases quantity uncertainty (van Zelm and Huijbregts 2013).
Context uncertainty is induced by the fact that the LCA practitioner makes methodological choices related to the goal and scope of the study, such as the definition of the functional unit, cut-off rule, allocation rule, choice of marginal suppliers, indirect consequential effects, or impact weighting perspective, to cite a few. These normative choices reflect the beliefs or objectives of the modeler and should be listed in the LCA report (according to ISO 14044 2006b).

The level of uncertainty is related to the available information to describe the quantity, model structure, or context. The lowest level corresponds to a situation where there are representative statistical data to accurately determine the quantity or validate the model structure, while the highest level corresponds to total ignorance, where we do not even know that we do not know, e.g., ignorance of our state of ignorance about things or relations (Walker et al. 2003). The qualitative evaluation of the uncertainty level is useful for prioritizing further efforts to treat uncertainties.

Regarding the nature of uncertainty, epistemic uncertainty is due to a lack of knowledge or representativeness and can be reduced with more research and efforts (e.g., more collected data, higher model complexity). Aleatory or ontic uncertainty is due to the inherent variability and the lack of determinability of the system (inherent randomness of nature, observer effect) and cannot be reduced. Both natures of uncertainty can be present for quantity uncertainty, model structure uncertainty, and context uncertainty (see Table 1).

As proposed by the ILCD handbook (EC 2010), efforts for uncertainty treatment should focus on low-quality (high uncertainty) elements with a high significance for LCA results. To do this, the uncertainties in the different LCA stages should be listed, as exhaustively as possible, and their levels should be qualitatively defined. Table 1, inspired by the uncertainty matrix from Walker et al. (2003), could support this process to understand where and how uncertainty can be present in an LCA model. For a conventional LCA study, the definition of criteria for the goal and scope of the study is part of the context uncertainty, while elements from the LCI and LCIA can encompass uncertainty from the model structure and quantity. Uncertainty due to a lack of consistency or representativeness is classified into epistemic uncertainty, while the variability of data or relationships pertains to ontic uncertainty. Practitioners could list the uncertainties based on examples from Table 1 and assign a qualitative score for each of them, e.g., from “very low” to “very high” or from “complete knowledge” to “conscious ignorance.” Uncertainties of CFs could be qualitatively assessed based on the method manual, related peer-review publications, and the ILCD handbook (EC 2011).

2.2 Characterization

Uncertainty characterization has a great influence on further steps and should be done carefully. Based on previous definitions, context and model structure uncertainties are linked to linguistic formulations, while quantity uncertainty can be more easily determined and is therefore addressed more often.

2.2.1 Quantity uncertainty

When characterizing quantity uncertainty, researchers aim to define the variability range of the data according to the determination method (accuracy and representativeness, i.e., epistemic uncertainty) and to the system stochasticity (variations according to location, time, and objects). Several indicators are commonly used: intervals (lower and upper bound), variance (dispersion), probability distribution (probability of occurrence of a random variable), and possibility distribution or fuzzy intervals (imprecise set of possible values). The information given by a probability distribution is rich and allows statistical treatment (e.g., determination of a confidence interval or correlation). The other types of indicators require less data and can even be determined from a subjective expert evaluation. For example, several authors (e.g., Weckenmann and Schwan 2001; Tan 2008) used fuzzy membership functions to translate vague information into numerical reasoning; the range of all possible values is represented by a non-zero membership degree (called the support) and the range of the most likely values by a membership degree of 1 (the core). To date, LCA software tools only include functions to implement uncertainty via probability distributions, except CMLCA, which also accepts variance (see Table 2). While CMLCA and OpenLCA allow uncertainties to be implemented for both LCI and LCIA data, users of SimaPro or GaBi can only specify them for LCI parameters (and only for foreground processes in GaBi). This limitation restricts the propagation of uncertainties from the different phases of LCA. The open source framework Brightway2 proposes 11 types of distribution, and other types of characterization could be implemented using Python programming language functions, while other LCA tools are restricted to four types (and even two types in GaBi).
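The support/core description of a trapezoidal fuzzy number can be illustrated with a short Python sketch. The function name and the example values (a hypothetical electricity consumption judged possible between 8 and 12 kWh and most likely between 9.5 and 10.5 kWh) are assumptions for illustration, not taken from the cited studies.

```python
def trapezoidal_membership(x: float, a: float, b: float, c: float, d: float) -> float:
    """Membership degree of x in the trapezoidal fuzzy number (a, b, c, d).

    Support: values with a non-zero membership degree, here a < x < d.
    Core: values with membership degree 1, here b <= x <= c.
    """
    if not (a <= b <= c <= d):
        raise ValueError("expected a <= b <= c <= d")
    if b <= x <= c:
        return 1.0                    # inside the core: most likely values
    if a < x < b:
        return (x - a) / (b - a)      # rising edge of the trapezoid
    if c < x < d:
        return (d - x) / (d - c)      # falling edge of the trapezoid
    return 0.0                        # outside the support


# Hypothetical electricity consumption (kWh per functional unit).
degree = trapezoidal_membership(9.0, 8.0, 9.5, 10.5, 12.0)  # about 0.67
```

Any value inside the core receives a degree of 1, while values at or beyond the support bounds receive 0.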
When the amount of available data is large enough to derive probability distributions, this type of characterization is preferred. For advanced practitioners, several techniques (not detailed here) exist to properly define probability distributions, such as bootstrap simulation (Frey and Li 2002), Bayesian inference (Lo et al. 2005), statistical tests (Sonnemann et al. 2003; Wang and Shen 2013), or maximum likelihood estimation (Sugiyama et al. 2005; Guo and Murphy 2012). The analyst can gather specific measurements for the case study (specific primary data) or data from other sources (non-specific primary data or secondary data from literature or databases). In the first case, the epistemic uncertainty can be represented by the accuracy of measurements and the ontic uncertainty by the spread of points. In the second case, the lack of representativeness of the collected data needs to be considered for the epistemic uncertainty. This is usually done via quality indicators from a pedigree matrix, which has been adapted for LCA (Weidema and Wesnaes 1996; Maurice et al. 2000) and implemented in the ecoinvent database (Frischknecht et al. 2007; Weidema et al. 2013; Muller et al. 2016a). For each collected data point, the practitioner can rate the reliability, completeness, and temporal, geographical, and technological correlation on a scale from 1 to 5; these scores can be translated into uncertainty factors (used to derive the lognormal standard deviation in ecoinvent). In OpenLCA and CMLCA software, uncertainty ranges can be automatically derived from pedigree matrix indicators entered by the user. Despite criticism regarding its reliability and subjectivity (Lloyd and Ries 2007; Frischknecht et al. 2007), the pedigree matrix remains a useful tool for evaluating epistemic uncertainty, and was recently updated with empirical data (Ciroth et al. 2015; Muller et al. 2016b).
Its consistency can also be improved by the involvement of several experts to confront opinions. Henriksson et al. (2014) developed an Excel tool to calculate the uncertainty of a quantity derived from data from different sources, based on the value, inherent uncertainty, and quality of collected data. The authors also proposed a threshold of eight data points to be able to define the type of probability distribution. If not enough information exists to properly define distribution, the practitioner could test different types of distributions, as shown by Lacirignola et al. (2017) for emerging technologies.
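The translation of pedigree scores into a lognormal spread can be sketched as follows. This assumes the ecoinvent v2-style combination rule, as we understand it, in which the basic uncertainty factor and the pedigree uncertainty factors U_i are combined as SDg95 = exp(sqrt(Σ (ln U_i)²)), SDg95 being the square of the geometric standard deviation. The function names are hypothetical, and the factor values in the example are placeholders, not the published ecoinvent factors.

```python
import math
from typing import Sequence, Tuple


def combined_sdg95(uncertainty_factors: Sequence[float]) -> float:
    """Squared geometric SD: SDg95 = exp(sqrt(sum(ln(U_i)^2))).

    uncertainty_factors: basic factor plus one factor per pedigree indicator
    (each >= 1; a factor of 1 adds no uncertainty).
    """
    if any(u < 1.0 for u in uncertainty_factors):
        raise ValueError("uncertainty factors must be >= 1")
    return math.exp(math.sqrt(sum(math.log(u) ** 2 for u in uncertainty_factors)))


def lognormal_from_pedigree(
    median: float, uncertainty_factors: Sequence[float]
) -> Tuple[float, Tuple[float, float]]:
    """Return sigma of ln(x) and the approximate 95% interval around the median."""
    sdg95 = combined_sdg95(uncertainty_factors)
    sigma = math.log(sdg95) / 2.0  # SDg95 = exp(2 * sigma)
    return sigma, (median / sdg95, median * sdg95)


# Hypothetical placeholders: basic factor 1.05, then five pedigree factors
# (reliability, completeness, temporal, geographical, technological).
factors = [1.05, 1.0, 1.02, 1.10, 1.0, 1.20]
sigma, (low, high) = lognormal_from_pedigree(3.2, factors)
```

Because the factors are combined in log space, a single poor indicator (here the hypothetical 1.20) dominates the resulting spread.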

2.2.2 Model structure and context uncertainty

Model structure and context uncertainties are usually characterized by the definition of alternative scenarios in order to compare LCA results for different combinations of assumptions (e.g., choice of supplier, of spatial resolution, of allocation procedure). The “true” error of the model structure can only be estimated by comparing modeled results with validation data, which can exist for parts of an LCA model (e.g., statistical data on product performances or on vehicle emissions for driving cycles). Due to a lack of measurements, LCA practitioners often compare their results with similar LCA studies. This process can, however, only evaluate the consistency of the model with previous work, rather than its uncertainty. Recently, several papers have developed statistical methods to characterize model or scenario uncertainties. Jung et al. (2014) and Mendoza Beltran et al. (2016) implemented uncertainty distributions or indicators for the choice of the multi-functionality solving approach. Defining a probability distribution for the allocation method, which does not reflect a real phenomenon, can nevertheless be questioned (as underlined by Morgan et al. 1990). Kätelhön et al. (2016) defined additional parameters to reflect suboptimal decisions in the choice of technologies, which are characterized with an uncertainty distribution centered on zero. Van Zelm and Huijbregts (2013) explored different model formulations for the calculation of freshwater ecotoxicity CFs and quantified the model uncertainty as the ratio of the median values to those of the most complex model (assumed to have lower model uncertainty). Outside the LCA field, recommendations were made to characterize model structure and context uncertainties for integrated assessment and environmental modeling (van Asselt and Rotmans 2001; Refsgaard et al. 2006).
Validation should be first prioritized, and if this is not possible, multiple conceptual models can be defined to explore different formulations and assumptions (such as scenario analysis). This process requires the involvement of relevant experts, who review each alternative modeling approach (e.g., via qualitative indicators from a pedigree matrix), to obtain a tenable and adequate range of plausible model structures.

2.2.3 Consideration of correlations

Despite the presence of correlations in LCA models (e.g., emissions depending on fuel consumption, shares between different end-of-life pathways, and interdependencies between LCIA indicators), their quantitative characterization is rarely performed due to a lack of data and of functions within LCA software tools and databases. The indicators of correlation are theoretically determined from samples of the studied variables, which are rarely available. Wei et al. (2015) proposed a method to determine the correlation between parameters empirically. If stochastic external phenomena (e.g., soil quality) affect several uncertain parameters (e.g., agricultural yield and leaching rate of nitrate), the latter are correlated. The correlation coefficient is estimated based on the expertise of the practitioner. In Jung et al. (2014), the covariance is determined for the allocation factors of two co-products whose sum should be equal to one. Percentages are common in LCA (e.g., electricity mix, product composition, allocation), and specifying uncertainty ranges without constraints or correlations can lead to absurd values (e.g., negative shares or shares summing to more than one). The best approach for defining uncertainty for percentages is to apply the multivariate Dirichlet distribution, because its values are defined on the interval [0; 1] and their sum is equal to one. However, this type of distribution is not yet available in LCA software. Due to the complexity of determining correlations, Groen and Heijungs (2016) investigated the effects of ignoring them in LCA. Based on common possible correlation types (for LCI data), the authors evaluated whether the output variance would increase or decrease. This technique could be further explored by including different distribution types for LCIA data and by testing it on a detailed LCA study.
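Since LCA software does not offer the Dirichlet distribution, a practitioner could sample shares outside the tool, for instance with NumPy's `Generator.dirichlet`. The sketch below is a minimal illustration; the `concentration` parameter (which scales the mean shares into Dirichlet parameters, so that larger values give less spread) and the electricity mix are hypothetical choices.

```python
import numpy as np


def sample_shares(mean_shares, concentration, n, seed=None):
    """Draw n vectors of shares from a Dirichlet distribution.

    mean_shares: expected shares (positive, summing to 1).
    concentration: hypothetical tuning value; larger means less spread.
    Each returned row lies in [0, 1] and sums to 1.
    """
    mean_shares = np.asarray(mean_shares, dtype=float)
    if np.any(mean_shares <= 0) or not np.isclose(mean_shares.sum(), 1.0):
        raise ValueError("mean shares must be positive and sum to 1")
    alpha = concentration * mean_shares  # Dirichlet mean = alpha / alpha.sum()
    rng = np.random.default_rng(seed)
    return rng.dirichlet(alpha, size=n)


# Hypothetical electricity mix: 40% coal, 35% gas, 25% renewables.
mix = sample_shares([0.40, 0.35, 0.25], concentration=50.0, n=2000, seed=1)
```

Each sampled row can then weight the emission factors of the mix, and no iteration can produce negative shares or shares that fail to sum to one.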
Finally, in a comparative LCA study, the compared systems generally use the same background processes (same database), which induces a correlation between them. This issue can be addressed by the common propagation of uncertainty from the background (see Section 3).

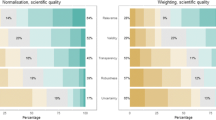

To conclude, there are four usual ways to characterize uncertainty: (i) probability distribution, which requires efforts and data but which provides rich information, can include correlations and is available in LCA software; (ii) variance, which is more easily estimated but only available in CMLCA; (iii) fuzzy sets, which can translate expert opinions but are less reliable and not available in LCA software; and (iv) multiple scenarios, which are useful for model structure/context uncertainty and available in LCA software, but depend on expert judgment (Fig. 2).

Fig. 2 Classification of methods for uncertainty characterization, uncertainty analysis, and sensitivity analysis, according to the amount of information they provide, their availability in LCA software, the time and effort they require, and their reliability and validity. The number of icons in the box reflects the level for each criterion, from low with one icon to high with three icons. The compatibility of uncertainty characterization types, i.e., none (no characterization required), P (probability distribution), V (variance), F (fuzzy set), and M (multiple scenarios), is displayed next to the method’s name for uncertainty or sensitivity analysis.

3 Uncertainty propagation

3.1 Sampling methods

Sampling methods simulate many possible results: sampling the input probability distributions provides a statistical estimate of the result distribution. The most common approach in LCA is the Monte Carlo method (e.g., Maurice et al. 2000; Huijbregts et al. 2003; Sonnemann et al. 2003). This random sampling method, implemented in all commonly used LCA software (except Umberto LCA+), requires a large number of simulations to obtain representative results (1000 or 10,000 runs are often used in the literature), which can lead to calculation times of several hours. Unfortunately, it is difficult to predict the number of required simulations. Convergence is not always reached, and a rule of thumb of 10,000 iterations is commonly applied to ensure a stable variance. The Latin hypercube approach, based on stratified sampling (i.e., each input distribution is divided into intervals of equal probability, from each of which only one value is taken for calculation) (e.g., Geisler et al. 2005; Thabrew et al. 2008; de Koning et al. 2010), makes it possible to limit the computational intensity. Quasi-Monte Carlo sampling was also applied by Groen et al. (2014); in this approach, previously sampled points are taken into account so that new points fill the gaps. Both stratified and quasi-random samples are spread more evenly over the input space, promising faster convergence than the Monte Carlo method and therefore reducing the required number of simulations. These advanced sampling techniques are, however, less often applied because practitioners need to use non-LCA tools such as Oracle Crystal Ball, MATLAB, or SimLab. Correlations between parameters for Monte Carlo sampling were considered by Bojaca and Schrevens (2010), who experimentally determined the covariance of inventory data. Instead of sampling the univariate probability distribution of each parameter, the authors generated a multivariate normal distribution (with R software) for the vector of correlated parameters.
This method allows the uncertainty of the results to be reduced by limiting the sampling possibilities. In addition, for a comparative Monte Carlo analysis, due to the correlated background system (as discussed in Section 2.2.3), a discernibility analysis must be run, i.e., sampling the difference between two systems instead of sampling the two systems separately. The statistical treatment of the results determines whether the difference is significant or not, e.g., via the 95% confidence interval. If more than two systems are studied, multiple comparisons should be performed in pairs to rank the compared systems. Finally, as we saw in Section 2.2.2, some authors characterized model structure or context uncertainty with probability distributions, which were then propagated with Monte Carlo simulations. Sampling methods are practical to propagate uncertainties described with probability distributions (Monte Carlo sampling available in LCA software and probability distribution within the ecoinvent database) and are interesting to derive indicators for the reliability of the results (e.g., probability of occurrence, confidence intervals).
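A minimal sketch of a comparative Monte Carlo (discernibility) analysis is given below in Python with NumPy. Everything in it is hypothetical: two systems A and B share one background grid emission factor, each has its own lognormally distributed electricity demand, and all numbers are placeholders. In each iteration both impacts are computed from the same draw of the shared factor, so the difference is sampled directly. The `method` argument switches the underlying standard normal draws from plain random sampling to a simple hand-written Latin hypercube stratification (our own illustration, not a specific tool's implementation).

```python
import numpy as np
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # standard normal, used for its inverse CDF


def standard_normal_draws(n, k, rng, method="mc"):
    """Return an (n, k) array of standard normal draws.

    "mc":  plain pseudo-random Monte Carlo sampling.
    "lhs": Latin hypercube sampling; [0, 1) is split into n equal-probability
           strata per column, one uniform point is drawn in each stratum, the
           strata are shuffled independently per column, then mapped through
           the inverse normal CDF.
    """
    if method == "mc":
        return rng.standard_normal((n, k))
    if method == "lhs":
        u = (np.arange(n)[:, None] + rng.random((n, k))) / n
        for j in range(k):
            u[:, j] = rng.permutation(u[:, j])
        u = np.clip(u, 1e-12, 1 - 1e-12)  # guard against inv_cdf(0)
        return np.vectorize(_STD_NORMAL.inv_cdf)(u)
    raise ValueError(f"unknown sampling method: {method!r}")


def lognormal(median, gsd, z):
    """Lognormal values from standard normal draws z (gsd = geometric SD)."""
    return median * gsd ** z


def discernibility(n=5000, method="mc", seed=42):
    rng = np.random.default_rng(seed)
    z = standard_normal_draws(n, 3, rng, method)
    # Hypothetical data. Column 0 is the background factor shared by A and B.
    ef_grid = lognormal(0.5, 1.2, z[:, 0])   # kg CO2-eq per kWh (shared)
    elec_a = lognormal(10.0, 1.1, z[:, 1])   # kWh per functional unit, A
    elec_b = lognormal(12.0, 1.1, z[:, 2])   # kWh per functional unit, B
    impact_a = elec_a * ef_grid + 2.0        # plus a fixed direct emission
    impact_b = elec_b * ef_grid + 0.5
    diff = impact_a - impact_b               # same iteration for both systems
    lo, hi = np.percentile(diff, [2.5, 97.5])
    return {
        "p_a_lower": float(np.mean(diff < 0)),
        "diff_ci95": (float(lo), float(hi)),
        # difference significant at 95% if the interval excludes zero
        "discernible": bool(not (lo <= 0.0 <= hi)),
    }
```

Drawing `ef_grid` separately for each system would treat the shared background as independent and would overstate the spread of the difference.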

3.2 Analytical approach

Introduced by Heijungs (1994), this approach is based on the analytical resolution of the LCA matrix function. The first-order approximation of the Taylor series expansion expresses the result variance as a function of the variances of the inputs and their covariances. Initially, for simplification purposes, only the variances of the technological and environmental matrices, i.e., LCI data, are considered, and the covariance terms are neglected. This approach, implemented in CMLCA software, was also adapted for input-output tables (Heijungs and Lenzen 2014). Hong et al. (2010) and Imbeault-Tétrault et al. (2013) applied the analytical approach by considering lognormal distributions of LCI data and of CFs for climate change impact. This adaptation is useful since the majority of probability distributions used in LCA are lognormal (e.g., in the ecoinvent database). As with sampling methods, the analytical approach was also used to propagate uncertainties from allocation factors, for which an assumed variance was implemented (Jung et al. 2014). The inclusion of correlations was tested only for a simple case study by Groen and Heijungs (2016) and should be investigated further. For a comparative study, Hong et al. (2010) calculated the geometric standard deviation of the ratio between the two systems’ results to test the degree of confidence in the comparison outcome (discernibility analysis). Several authors (Hong et al. 2010; Heijungs and Lenzen 2014; Imbeault-Tétrault et al. 2013; Bisinella et al. 2016; Groen and Heijungs 2016) compared the results of the analytical approach and Monte Carlo sampling. They observed small differences (in general, lower than 10%), while the data collection effort and the calculation time were significantly lower for analytical resolution. However, the variance contains much less information than a probability distribution, and therefore allows less statistical treatment.
In addition, the first-order approximation is only valid for small variations along a non-linear function. The previous conclusions should therefore be compared to results from complex models with larger uncertainties. Nevertheless, analytical resolution remains an efficient method (especially in terms of computational cost) for estimating the uncertainty ranges of LCA results and should therefore continue to be implemented in LCA software tools to facilitate its application.
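To make the first-order approach concrete, the sketch below (Python with NumPy) propagates independent variances of the technology matrix A and the intervention matrix B to a single impact score h = c·B·A⁻¹·f, ignoring covariances as in the simplified approach described above. The derivatives dh/dA_ij = -λ_i·s_j and dh/dB_kj = c_k·s_j, with s = A⁻¹f and λ = c·B·A⁻¹, follow from standard matrix calculus. The two-process system and the 5% standard deviations are hypothetical, and this is not the CMLCA implementation. A brute-force Monte Carlo run on the same system is included as a check.

```python
import numpy as np


def first_order_variance(A, B, c, f, var_A, var_B):
    """First-order (Taylor) variance of the scalar impact h = c @ B @ inv(A) @ f.

    Only independent variances of the entries of A (technology matrix) and
    B (intervention matrix) are propagated; covariances are ignored.
    """
    s = np.linalg.solve(A, f)            # scaling vector s = A^-1 f
    lam = np.linalg.solve(A.T, B.T @ c)  # lam = (c B A^-1)^T
    h = float(c @ B @ s)
    dh_dA = -np.outer(lam, s)            # dh/dA_ij = -lam_i * s_j
    dh_dB = np.outer(c, s)               # dh/dB_kj = c_k * s_j
    var_h = float(np.sum(dh_dA**2 * var_A) + np.sum(dh_dB**2 * var_B))
    return h, var_h


# Hypothetical two-process system: process 1 makes the product and uses
# 0.5 kWh from process 2 (electricity); one emission, characterization factor 1.
A = np.array([[1.0, 0.0],
              [-0.5, 1.0]])
B = np.array([[2.0, 0.4]])
c = np.array([1.0])
f = np.array([1.0, 0.0])
var_A = np.zeros_like(A)
var_A[1, 0] = (0.05 * 0.5) ** 2          # 5% SD on the electricity input only
var_B = (0.05 * B) ** 2                  # 5% SD on both emission coefficients

h, var_h = first_order_variance(A, B, c, f, var_A, var_B)

# Brute-force Monte Carlo check with normal perturbations of the same size.
rng = np.random.default_rng(0)
samples = np.array([
    c @ (B + rng.standard_normal(B.shape) * np.sqrt(var_B))
    @ np.linalg.solve(A + rng.standard_normal(A.shape) * np.sqrt(var_A), f)
    for _ in range(20_000)
])
```

For this nearly linear system, the analytical and sampled variances agree closely. With larger uncertainties or stronger non-linearity, the first-order estimate can drift from the sampled one, which is the limitation noted above.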

3.3 Fuzzy logic

Weckenmann and Schwan (2001) first propagated the fuzzy membership functions (see Section 2.2.1) of inventory data to LCA results via fuzzy arithmetic, comparable to interval calculation. The determination of fuzzy sets is based on a pessimistic interval (support) and an optimistic interval (core), defining a trapezoidal function. This approach was formalized by Tan (2008) for the LCA matrix resolution. For each membership degree α, the corresponding intervals (α-cuts) of the technology and environmental matrices are used to calculate the inventory results. The higher the LCI values, the higher the resulting emissions and the lower the resulting resources (negative flows). The proposed resolution was mathematically demonstrated by Heijungs and Tan (2010), and holds provided that the LCI matrix is square, without avoided processes (allocation required), and with positive waste flows in the treatment processes. Cruze et al. (2013), however, showed that additional conditions must also be satisfied, i.e., the inventory matrices should be M-matrices, which calls into question the applicability of this method. Fuzzy logic was also applied to LCA via the fuzzy inference system (Ardente et al. 2004). This method incorporates qualitative judgment into the definition of fuzzy sets, in a similar way to the pedigree matrix, and the sets are then aggregated based on rules defined by experts. The subjectivity of this approach can hamper its use for decision support. Since fuzzy membership functions can be easily determined, Clavreul et al. (2013) applied a hybrid approach combining statistical sampling and fuzzy sets, inspired by methods developed for risk assessment (Guyonnet et al. 2003; Baudrit et al. 2005). Inventory quantities are described either with probability distributions or with trapezoidal fuzzy sets, depending on the quality and quantity of available data.
Sampling is applied to both types: at each iteration, one point is sampled for each probability distribution and one interval for each fuzzy set. The smallest and largest resulting values are computed for each iteration, yielding two probability distributions for the LCA results, which can be weighted into a single distribution. Compared with Monte Carlo sampling, the resulting family of distributions was more difficult to interpret, and the weighted curve was close to the Monte Carlo results, but assigning the weights can be challenging (Clavreul et al. 2013). Finally, fuzzy logic was combined with multi-criteria analysis tools to incorporate uncertainty from expert judgment (e.g., to determine weights) and thereby define the ranking order of LCA scenarios (Geldermann et al. 2000; Güereca et al. 2007; Benetto et al. 2008).
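The α-cut principle behind fuzzy propagation can be sketched in Python for the simplest case: a product of two trapezoidal fuzzy numbers (e.g., fuel use times emission factor) with non-negative values, for which interval multiplication reduces to multiplying the lower bounds together and the upper bounds together. This is a simplified illustration under that assumption, not Tan's (2008) matrix formulation, and the input values are hypothetical.

```python
from typing import Dict, Iterable, Tuple

Trapezoid = Tuple[float, float, float, float]  # (a, b, c, d): support [a, d], core [b, c]


def alpha_cut(tfn: Trapezoid, alpha: float) -> Tuple[float, float]:
    """Interval of values whose membership degree is at least alpha."""
    a, b, c, d = tfn
    return a + alpha * (b - a), d - alpha * (d - c)


def propagate_product(x: Trapezoid, y: Trapezoid,
                      alphas: Iterable[float] = (0.0, 0.25, 0.5, 0.75, 1.0)
                      ) -> Dict[float, Tuple[float, float]]:
    """Fuzzy product x * y computed alpha-cut by alpha-cut (interval arithmetic).

    Limited to non-negative quantities, for which the product of two
    intervals is simply [x_lo * y_lo, x_hi * y_hi].
    """
    result = {}
    for alpha in alphas:
        x_lo, x_hi = alpha_cut(x, alpha)
        y_lo, y_hi = alpha_cut(y, alpha)
        if x_lo < 0 or y_lo < 0:
            raise ValueError("this sketch assumes non-negative quantities")
        result[alpha] = (x_lo * y_lo, x_hi * y_hi)
    return result


# Hypothetical inputs: fuel use (L per functional unit), emission factor (kg CO2/L).
fuel = (8.0, 9.5, 10.5, 12.0)
emission_factor = (2.5, 2.6, 2.7, 2.8)
co2 = propagate_product(fuel, emission_factor)
```

At α = 0.5 the result is [22.3125, 30.9375], which differs from a linear interpolation between the support and core bounds ([22.35, 30.975]). The product is therefore evaluated per α-cut rather than assumed to be trapezoidal.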

To summarize the outcomes of the uncertainty analysis review (see Fig. 2), probability distributions can be propagated reliably with sampling methods (Monte Carlo is available in the LCA software tools reviewed, except Umberto LCA+), but at the cost of a significant calculation time (although advanced approaches can reduce it). Analytical resolution, available in CMLCA, can rapidly propagate the variance of inputs, but provides less information and is less reliable, in particular for large uncertainties. Fuzzy logic applied to fuzzy sets is not yet fully mature in LCA and is not implemented in LCA software. Its reliability and the information it provides can increase if it is combined with the sampling of probability distributions (hybrid approach).
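The loss of reliability of analytical (first-order) propagation for large uncertainties can be illustrated with a product of two independent inputs, y = x1 * x2. The sketch below uses hypothetical normally distributed inputs, chosen so that the exact variance is known in closed form (mu1^2 sigma2^2 + mu2^2 sigma1^2 + sigma1^2 sigma2^2), and compares the first-order Taylor approximation, which drops the last term, with a plain Monte Carlo estimate. It is an illustration of the principle, not the CMLCA implementation.

```python
import random
import statistics


def first_order_variance(mu, sd):
    """First-order (Taylor) variance of y = x1 * x2 for independent inputs."""
    (m1, m2), (s1, s2) = mu, sd
    # dy/dx1 = x2 and dy/dx2 = x1, both evaluated at the means
    return (m2 * s1) ** 2 + (m1 * s2) ** 2


def monte_carlo_variance(mu, sd, n=100_000, seed=7):
    """Sample variance of y = x1 * x2 with independent normal inputs."""
    rng = random.Random(seed)
    ys = [rng.gauss(mu[0], sd[0]) * rng.gauss(mu[1], sd[1]) for _ in range(n)]
    return statistics.variance(ys)


for cv in (0.05, 0.5):
    mu, sd = (1.0, 1.0), (cv, cv)
    exact = first_order_variance(mu, sd) + (sd[0] * sd[1]) ** 2
    print(cv, first_order_variance(mu, sd), exact, monte_carlo_variance(mu, sd))
```

With a coefficient of variation of 5%, the missing term is negligible (about 0.1% of the variance); with 50%, the first-order result (0.5) underestimates the exact variance (0.5625) by about 11%, while the Monte Carlo estimate converges to the exact value.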

4 Scenario analysis

Scenario analysis can easily be used in any LCA software tool for both uncertainty and sensitivity analyses to treat model structure and context uncertainty (via multiple scenarios). For uncertainty analysis, the range of possible LCA results (e.g., interval) is calculated from different model formulations. This has been done for LCIA methods (Hung and Ma 2009), allocation procedures (Malça and Freire 2012), and LCI assumptions (Röder et al. 2015). As highlighted in Section 2.2.2, the reliability of this analysis increases with the definition of multiple conceptual models reviewed by relevant experts. A broader range of possibilities can be mapped through the application of the Exploratory Modelling and Analysis (EMA) method. This method, applied by Noori et al. (2014) for a predictive life cycle costing (LCC) study, can explore plausible combinations, given the available information, without being restricted to specific sets of pre-defined models (e.g., for emerging technologies).

Regarding sensitivity analysis, scenario analysis is widely used to test uncertain choices within the LCA model (e.g., background processes, LCIA methods, functional unit). The magnitude of the difference between each scenario's results and the baseline indicates the sensitivity of the results to the choice tested. The LCA practitioner can also check whether the ranking of the systems studied changes across scenarios.
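A scenario analysis amounts to re-running the model under each alternative formulation and comparing the results with the baseline. The sketch below uses made-up climate change scores for two hypothetical systems, A and B, under a baseline and two alternative choices; it reports the range of results per system (uncertainty analysis), the relative deviation from the baseline (sensitivity analysis), and whether the preferred, lowest-impact system changes.

```python
# Hypothetical results (kg CO2-eq per functional unit) for two systems under three scenarios
results = {
    "baseline (economic allocation)": {"A": 12.0, "B": 10.5},
    "mass allocation": {"A": 9.8, "B": 10.9},
    "alternative LCIA method": {"A": 12.6, "B": 11.2},
}


def result_range(results, system):
    """Interval of results for one system across all scenarios."""
    values = [res[system] for res in results.values()]
    return min(values), max(values)


def scenario_summary(results, baseline):
    """Relative deviation from the baseline and whether the best system changes."""
    base = results[baseline]
    base_best = min(base, key=base.get)
    summary = {}
    for name, res in results.items():
        deviation = {s: (v - base[s]) / base[s] for s, v in res.items()}
        best = min(res, key=res.get)
        summary[name] = (deviation, best != base_best)
    return summary


print(result_range(results, "A"), result_range(results, "B"))
for name, (deviation, ranking_changed) in scenario_summary(
        results, "baseline (economic allocation)").items():
    print(name, {s: f"{d:+.1%}" for s, d in deviation.items()}, ranking_changed)
```

In this made-up case, only the allocation choice reverses the preference between A and B, which would flag it as a critical choice to discuss.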

5 Sensitivity analysis

Sensitivity analyses aim to understand the main sources of result uncertainty. The outcomes can be used to prioritize future efforts to decrease the uncertainty of the LCA model and its inputs by refining the most critical elements in an iterative approach.

5.1 Local sensitivity analysis

Local sensitivity analysis (LSA) refers to the variation of one input around its reference point while keeping the others at their nominal values. The most basic approach is called one-at-a-time (OAT), also known as perturbation analysis (Heijungs and Kleijn 2001), where the sensitivity measure for an input quantity is the ratio between the variation of the model results and the variation of the studied quantity. OAT is easy to apply in any LCA software tool. Several strategies can be applied to vary the inputs. In some cases, the same arbitrary variation (e.g., ± 20%) is applied to all uncertain data; this avoids the need to collect more data but biases the results, because the inputs have different uncertainty ranges (the sensitivity to quantities with low uncertainty is overestimated and vice versa). For a more accurate OAT analysis, the variation ranges should be based on the characterization of quantity uncertainty (see Section 2.2.1), e.g., taking the mean plus and minus one standard deviation as the upper and lower bounds.
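The bias introduced by a uniform arbitrary variation can be seen on a toy additive model in which each of four hypothetical parameters contributes equally to the nominal result. In the sketch below, a ±20% variation produces the same output swing for every parameter, whereas ranges based on (assumed) standard deviations clearly separate the influential parameters. Parameter names, values, and standard deviations are illustrative assumptions.

```python
def model(p):
    """Hypothetical impact (kg CO2-eq): electricity x grid factor + transport x truck factor."""
    return p["electricity_kwh"] * p["grid_factor"] + p["transport_tkm"] * p["truck_factor"]


nominal = {"electricity_kwh": 2.0, "grid_factor": 0.5, "transport_tkm": 10.0, "truck_factor": 0.1}
std_dev = {"electricity_kwh": 0.1, "grid_factor": 0.15, "transport_tkm": 4.0, "truck_factor": 0.01}


def oat(model, nominal, lower, upper):
    """Vary one input at a time; return (output swing, sensitivity ratio) per input."""
    y0 = model(nominal)
    results = {}
    for name, x0 in nominal.items():
        y_lo = model({**nominal, name: lower[name]})
        y_hi = model({**nominal, name: upper[name]})
        swing = y_hi - y_lo
        # ratio of relative output variation to relative input variation
        ratio = (swing / y0) / ((upper[name] - lower[name]) / x0)
        results[name] = (swing, ratio)
    return results


# Same arbitrary +/-20% for every input
arbitrary = oat(model, nominal,
                {k: 0.8 * v for k, v in nominal.items()},
                {k: 1.2 * v for k, v in nominal.items()})
# Realistic ranges: mean minus/plus one standard deviation
realistic = oat(model, nominal,
                {k: v - std_dev[k] for k, v in nominal.items()},
                {k: v + std_dev[k] for k, v in nominal.items()})
print(arbitrary)
print(realistic)
```

Because the model is linear, the sensitivity ratio is 0.5 for every parameter in both runs; it is the realistic ranges that make the output swings differ (transport distance and grid factor dominate here).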

Another mathematical method for LSA is the calculation of partial derivatives from the matrix formulation (Heijungs 1994; Sakai and Yokoyama 2002), also called marginal analysis. The partial derivatives of the model output (LCI or LCA result) with respect to each input parameter (LCI quantity) represent the sensitivity coefficients. This method has a very short calculation time and is available in CMLCA. However, it does not take into account parameter uncertainties, i.e., the results can be highly sensitive to a parameter even if that parameter is precise and accurate (low uncertainty). To correct this bias, Heijungs (2010) developed a method called Key Issue Analysis (KIA), based on the first-order approximation of the Taylor series expansion. The sensitivity coefficient is equal to the square of the partial derivative multiplied by the ratio between the input variance and the output variance. Although Groen et al. (2016) classified it as a global approach, it is by definition an LSA, since the Taylor series approximates a function in the neighborhood of a specific point and the sensitivity coefficient is derived from the first-order derivative (other quantities remain fixed). A comparison of KIA with global approaches showed that KIA gave reliable results only when the input variation was small (Groen et al. 2016).
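For matrix-based LCA, with scaling vector s = A⁻¹f and impact score g = Bs, the partial derivatives have closed forms: ∂g/∂b_j = s_j and ∂g/∂a_ij = −λ_i s_j, where λᵀ = BA⁻¹. The sketch below applies them to a hypothetical two-process system with a single impact row and then computes KIA shares, (∂g/∂x)² σx² / σg², using the first-order output variance. It uses a small hand-written linear solver to stay dependency-free and is not the CMLCA implementation; the process data and standard deviations are assumptions.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    m = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


# Hypothetical system: process 1 makes 1 kWh; process 2 makes 1 product using 2 kWh
A = [[1.0, -2.0], [0.0, 1.0]]   # technology matrix
B = [0.5, 0.1]                  # kg CO2-eq emitted per unit of each process
f = [0.0, 1.0]                  # final demand: one unit of product

s = solve(A, f)                              # scaling vector
lam = solve([list(c) for c in zip(*A)], B)   # solves A^T lam = B^T
g = sum(bj * sj for bj, sj in zip(B, s))     # impact score

# Marginal analysis: dg/da_12 = -lam_1 * s_2, dg/db_j = s_j
derivs = {"a12": -lam[0] * s[1], "b1": s[0], "b2": s[1]}

# Key issue analysis: share of the first-order output variance
sd = {"a12": 0.2, "b1": 0.05, "b2": 0.02}    # hypothetical standard deviations
var_g = sum((derivs[k] * sd[k]) ** 2 for k in derivs)
kia = {k: (derivs[k] * sd[k]) ** 2 / var_g for k in derivs}
print(g, derivs, kia)
```

Marginal analysis ranks b2 above a12 (derivatives of 1 and 0.5 in absolute value), whereas KIA ranks it last because its assumed standard deviation is small, which is the bias KIA is meant to correct.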

All LSA approaches assume model linearity, explore only a limited part of the input domain, and ignore correlations and interactions between parameters (Saltelli and Annoni 2010). Their interpretation is thus limited. The ease of use of the OAT method makes it attractive to LCA practitioners, even though the computation time can be long with a large number of parameters. This analysis should be based on representative variations to increase its reliability. With CMLCA software, the KIA method is preferred to marginal analysis because it accounts for the variance of inputs. KIA is fast and can therefore be considered a preliminary step for sensitivity analysis.

5.2 Screening method

The method of elementary effects (MoEE) has been used as an intermediate tool to prepare a global sensitivity analysis, with satisfactory results (Mery et al. 2014; Mutel et al. 2013; de Koning et al. 2010). MoEE examines the output sensitivity to variations of the inputs (within their minimum and maximum values) in iterations using random starting points. The quantities are varied in series: at each iteration, a normalized variation is applied to one of the quantities while the other quantities keep their values from the previous iteration (instead of being reset to their mean values). This method is therefore based on OAT variations but can explore a representative set of the input space by optimizing the trajectories of the variations (Mutel et al. 2013); it is thus classified in between local and global sensitivity analysis. The elementary effect (EE) is the output variation divided by the input variation observed at each point of the chosen trajectories. The average of the EEs obtained for an input measures the model sensitivity to this quantity, while their standard deviation reflects the nonlinear effects induced by this quantity, including its interactions with other quantities. MoEE gives more reliable and informative results than LSA, but it considers neither correlations nor the distribution of uncertainties. Thanks to its faster convergence, MoEE can be used as a preliminary step to global sensitivity analysis (using non-LCA software) to identify the key parameters that need to be investigated further (Wei et al. 2015).
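The sketch below implements a basic version of the method of elementary effects (Morris screening): inputs are rescaled from their minimum and maximum values to [0, 1], each trajectory starts from a random grid point, and the inputs are stepped one at a time in random order while the others keep their values from the previous step. As simplifications, trajectories are drawn at random rather than optimized, EEs are expressed per normalized step, and the mean of the absolute EEs (μ*, a common variant) is reported alongside their standard deviation σ. The test model and bounds are hypothetical.

```python
import random
import statistics


def morris_screening(model, bounds, r=20, levels=4, seed=1):
    """Return (mu_star, sigma) of the elementary effects of each input."""
    rng = random.Random(seed)
    k = len(bounds)
    delta = levels / (2 * (levels - 1))
    # grid levels from which a step of +delta stays inside [0, 1]
    starts = [i / (levels - 1) for i in range(levels)
              if i / (levels - 1) <= 1 - delta + 1e-12]
    effects = [[] for _ in range(k)]

    def evaluate(u):
        # map unit-scaled values back to [min, max] of each input
        return model([lo + ui * (hi - lo) for ui, (lo, hi) in zip(u, bounds)])

    for _ in range(r):
        u = [rng.choice(starts) for _ in range(k)]
        y_prev = evaluate(u)
        order = list(range(k))
        rng.shuffle(order)
        for i in order:
            u[i] += delta                  # other inputs keep their current values
            y_new = evaluate(u)
            effects[i].append((y_new - y_prev) / delta)
            y_prev = y_new
    mu_star = [statistics.mean(abs(e) for e in ee) for ee in effects]
    sigma = [statistics.stdev(ee) for ee in effects]
    return mu_star, sigma


mu_star, sigma = morris_screening(lambda x: 3.0 * x[0] + x[1] * x[2],
                                  bounds=[(0.0, 1.0), (0.0, 2.0), (0.0, 2.0)])
print(mu_star, sigma)
```

Here the linear input x0 gets μ* = 3 and σ = 0, while x1 and x2, which interact, get σ > 0.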

5.3 Global sensitivity analysis

Global sensitivity analysis (GSA) aims to evaluate the sensitivity of the outputs over the entire input space. A simple GSA approach consists of a correlation analysis based on the sampled results of uncertainty propagation (e.g., Monte Carlo sampling). Regression coefficients are calculated from the slope of the output in response to the input samples. The contribution of one quantity to the output variance is estimated from its squared (standardized) coefficient divided by the sum of the squared coefficients. A variant calculates correlations from the ranks of the values, i.e., Spearman's rank correlation coefficients. This method, applied several times in LCA (e.g., Geisler et al. 2005; Mutel et al. 2013), can detect non-linear relationships as long as they are monotonic. In addition, the significance of the coefficients obtained can be checked through the calculation of the p value (with a commonly used significance level of 0.05). Correlation analysis could easily be implemented in LCA software by recording the sampled inputs and calculating the coefficients after the Monte Carlo analysis. With similar computational time and required information (probability distributions), variance decomposition techniques can calculate the first-order sensitivity index S1 (direct contribution of each input to the output variance) and the total-order sensitivity index ST (direct and indirect contributions). The difference between ST and S1 represents the interaction effects, i.e., the non-additivity of operations within the model. It is important to distinguish correlations, defined by the relationships between random variables, from interactions, produced by the model because of causal relationships. To calculate these indices, the (extended) Fourier Amplitude Sensitivity Test (FAST and eFAST) uses sinusoidal functions, while the Sobol method relies on quasi-Monte Carlo sampling. These approaches have been used in LCA (de Koning et al. 2010; Padey et al. 2012; Cucurachi and Heijungs 2014; Wei et al. 2015) with non-LCA software tools such as Simlab or R. In Wei et al. (2015), the Sobol method is adapted to account for correlations between inputs: dependent parameters are grouped into clusters for which multidimensional sensitivity indices are calculated. GSA methods can thus offer a robust analysis of output sensitivity by exploring the entire input space and providing other useful information (significance and interaction effects). Because they rely on the probability distributions of inputs, however, they require a large amount of data and a long calculation time, and they are not implemented in LCA software tools.
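Correlation analysis only needs the inputs recorded during the Monte Carlo runs. The sketch below computes Spearman's rank correlation coefficients (the Pearson correlation of ranks) and normalizes their squares into contributions to variance. The model y = exp(x1) + 0.3·x2 and its sampled inputs are hypothetical, chosen to be non-linear but monotonic; ties are not handled (acceptable for continuous samples) and no p value is computed.

```python
import math
import random


def ranks(values):
    """Rank values from 1 to n (ties are not handled; fine for continuous samples)."""
    order = sorted(range(len(values)), key=values.__getitem__)
    result = [0.0] * len(values)
    for rank, idx in enumerate(order, start=1):
        result[idx] = float(rank)
    return result


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def rank_contributions(inputs, outputs):
    """Spearman coefficients and their normalized squares (contribution to variance)."""
    out_ranks = ranks(outputs)
    rho = {name: pearson(ranks(values), out_ranks) for name, values in inputs.items()}
    total = sum(r ** 2 for r in rho.values())
    return rho, {name: r ** 2 / total for name, r in rho.items()}


# Hypothetical Monte Carlo run: inputs recorded at each iteration, then the output
rng = random.Random(3)
n = 2000
inputs = {"x1": [rng.gauss(0.0, 1.0) for _ in range(n)],
          "x2": [rng.gauss(0.0, 1.0) for _ in range(n)]}
outputs = [math.exp(a) + 0.3 * b for a, b in zip(inputs["x1"], inputs["x2"])]
rho, contribution = rank_contributions(inputs, outputs)
print(rho, contribution)
```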

To conclude this section on sensitivity analysis (see Fig. 2), OAT variations can be applied in LCA software to any type of uncertainty characterization (even when no data exist), but they can be time-consuming and provide little information. Marginal analysis does not require any uncertainty data and is fast and readily available in CMLCA, but its interpretation is very limited. KIA can provide more information by including the actual variances. MoEE can be performed with any type of characterization, provides useful information, and is quite reliable. Finally, correlation analysis and the (e)FAST and Sobol methods treat probability distributions and provide various outcomes on result sensitivity (e.g., significance, interaction effects), but they are time-consuming (correlation analysis somewhat less so, but it is also less reliable than the (e)FAST and Sobol methods).
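Variance decomposition can be sketched with a standard pick-freeze scheme: two independent sample matrices A and B and, for each input i, a matrix in which column i of A is replaced by that of B. The sketch below estimates S1 and ST from these model evaluations; it uses plain pseudo-random sampling instead of the quasi-Monte Carlo sequences of the Sobol method and assumes independent inputs (no correlations). Sample size and test models are assumptions.

```python
import random
import statistics


def sobol_indices(model, samplers, n=20_000, seed=0):
    """Estimate first-order (S1) and total-order (ST) indices for independent inputs."""
    rng = random.Random(seed)
    k = len(samplers)
    A = [[draw(rng) for draw in samplers] for _ in range(n)]
    B = [[draw(rng) for draw in samplers] for _ in range(n)]
    yA = [model(a) for a in A]
    yB = [model(b) for b in B]
    var_y = statistics.pvariance(yA + yB)
    first, total = [], []
    for i in range(k):
        # rows of A with input i taken from B
        yABi = [model(a[:i] + [b[i]] + a[i + 1:]) for a, b in zip(A, B)]
        v_i = sum(yb * (yab - ya) for ya, yb, yab in zip(yA, yB, yABi)) / n
        v_ti = sum((ya - yab) ** 2 for ya, yab in zip(yA, yABi)) / (2 * n)
        first.append(v_i / var_y)
        total.append(v_ti / var_y)
    return first, total


def uniform(low, high):
    """Sampler for a uniformly distributed input."""
    return lambda rng: rng.uniform(low, high)


s1, st = sobol_indices(lambda x: x[0] + 2 * x[1], [uniform(0, 1), uniform(0, 1)])
s1_int, st_int = sobol_indices(lambda x: x[0] * x[1], [uniform(-1, 1), uniform(-1, 1)])
print(s1, st)
print(s1_int, st_int)
```

For the additive model x0 + 2·x1 with uniform inputs, the exact indices are S1 = ST = 0.2 and 0.8; for the pure interaction x0·x1 on [−1, 1], S1 is close to 0 and ST close to 1, so the gap ST − S1 captures the interaction.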

6 Uncertainty communication

Communicating the uncertainty of an LCA study is imperative to ensure the transparency and credibility of the assessment (Gavankar et al. 2014). As LCA results are increasingly disseminated (e.g., to policy-makers, marketing departments, and the general public), the uncertainty component becomes more critical to avoid biased interpretation by non-experts.

The uncertainty of an LCA can be described qualitatively and quantitatively. A qualitative assessment is in fact required by the ISO standards (2006a, b) and the ILCD Handbook (EC 2010). The LCA practitioner should first report, in the goal and scope definition, all the elements that are part of the context uncertainty, e.g., methodological choices, assumptions, and the definition of the functional unit. For the LCI, a data quality assessment should be performed, e.g., covering technological, geographical, and time-related representativeness, completeness, precision, methodological appropriateness, and consistency (EC 2010). These criteria reflect the epistemic (lack of knowledge) and ontic (variability) uncertainty of the quantities used. For the LCIA, the practitioner should justify the selection of indicators and models, thereby evaluating their consistency with the goal and scope of the LCA and their scientific and technical validity (i.e., qualitatively assessing the model structure uncertainty). Finally, the interpretation phase should consider the appropriateness of the LCA model definition and its limitations, via completeness, sensitivity, and consistency checks. These steps raise questions about the influence of the uncertain inputs, which are assessed quantitatively via sensitivity analysis (OAT and scenario analyses are commonly used). For a comparative study, quantitative uncertainty analysis is required and is usually performed via a discernibility analysis based on Monte Carlo sampling of the inputs' probability distributions. A proper LCA study should therefore communicate all the different components of uncertainty treatment: identification, qualitative characterization, and sensitivity analysis for a non-comparative evaluation, as well as quantitative characterization and uncertainty analysis for a comparative study. How to present them is still left to the practitioner. The identification of uncertainty sources could be done in a single table (e.g., as in Table 1) or described in each step of the LCA. For the characterization, qualitative judgment can be described in the text or in an uncertainty matrix, while quantitative characterization can be presented together with the tables of data used (e.g., adding columns for variance, probability distribution parameters, or intervals). LCA results can be shown with their uncertainty (standard deviation, variance, fuzzy sets, or box plots) instead of fixed values if an uncertainty analysis was performed, and this should be mentioned in the text. Finally, sensitivity analysis results can be communicated via a table of coefficients (possibly with a color code), histograms for scenario analysis, or other types of figures for MoEE or Sobol indices (such as in Mery et al. 2014 and Cucurachi and Heijungs 2014).
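A discernibility analysis can be sketched as a Monte Carlo loop that samples the parameters shared by the compared systems (e.g., a common background process) once per run and counts how often one system has the higher impact. The systems, distributions, and values below are hypothetical; sharing the background sample within each run is what makes the comparison bear on the difference between systems rather than on two independent result distributions.

```python
import math
import random


def discernibility(impact_a, impact_b, sample_shared, n=10_000, seed=42):
    """Fraction of Monte Carlo runs in which system A has a higher impact than system B.

    Parameters shared by both systems are sampled once per run and passed to
    both impact functions, so each run compares the systems consistently.
    """
    rng = random.Random(seed)
    a_higher = 0
    for _ in range(n):
        shared = sample_shared(rng)
        if impact_a(shared, rng) > impact_b(shared, rng):
            a_higher += 1
    return a_higher / n


# Hypothetical example: both systems draw electricity from the same uncertain grid mix
def sample_grid(rng):
    return rng.lognormvariate(math.log(0.5), 0.4)       # kg CO2-eq per kWh


def system_a(grid_factor, rng):
    kwh = rng.lognormvariate(math.log(2.0), 0.1)
    return kwh * grid_factor


def system_b(grid_factor, rng):
    kwh = rng.lognormvariate(math.log(1.7), 0.1)
    direct = rng.uniform(0.0, 0.1)                      # kg CO2-eq, direct emissions
    return kwh * grid_factor + direct


share = discernibility(system_a, system_b, sample_grid)
print(f"A > B in {share:.1%} of runs")
```

Because the grid factor multiplies both systems, much of its uncertainty cancels out in the comparison; sampling it independently for each system would blur the difference between them.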

In order to effectively communicate the uncertainty of LCA studies to non-experts, Gavankar et al. (2014) defined five criteria: (i) report uncertainty, (ii) provide context, (iii) develop scenarios when quantitative methods cannot be used, (iv) use common language for the subjective definition of probabilities, and (v) facilitate access to uncertainty information. If statistical tools cannot be used, the authors propose an “uncertainty diamond” figure to evaluate the overall uncertainty of the study (differentiating uncertainty due to lack of information, process variation, scenarios, and external issues) and a matrix to assess data quality (based on the level of completeness and reliability). These graphic tools are interesting but can also lead to subjective judgment and contain confusing criteria. For example, the authors distinguish uncertainty due to lack of information (depending on information gaps) from uncertainty due to scenarios (depending on the information available to develop and rank scenarios). These criteria seem to overlap.

Although LCA studies should in theory report most of the uncertainty components (uncertainty analysis being required only for comparative studies), there is still no harmonized approach for communicating them. The major requirement is to be transparent about uncertainty in the different LCA stages and about its effects on the results.

7 Recommendations and discussion

Following the literature review, this final part provides recommendations for LCA practitioners at the different steps of uncertainty treatment, i.e., identification, characterization, uncertainty analysis, sensitivity analysis, and communication. This chain of operations should be performed for any LCA study or LCI/LCIA model development since, as mentioned before, uncertainty is always present and should not be ignored. The treatment of uncertainty is essential to understand the location and level of uncertainty, as well as its effects on the results, thus avoiding flawed decision-making. Its generalization also improves the refinement and credibility of LCA, because practitioners better understand the limitations of the results and present them more transparently. In addition, for comparative studies, this work can determine whether the preference for one system is questionable because of result uncertainties. In that case, a sensitivity analysis can identify key elements to be studied further. The technique applied depends on the competences, software used, and time and budget available for the analysis. We classified the recommended approaches into three levels: basic, intermediate, and advanced (Table 3). While the first two can be followed using common LCA software (SimaPro, GaBi, and OpenLCA), the advanced approach requires the use of non-LCA software such as Matlab, R, or Simlab (which can be combined with open-source software tools such as OpenLCA and Brightway2). Nevertheless, as noted in Section 2.2.1 and in Table 2, GaBi software can only propagate uncertainties from foreground processes, even when the ecoinvent database is used, and is thus not recommended for an overview of LCI uncertainties. The basic and intermediate approaches differ in the effort invested in treating uncertainty.

For uncertainty identification, we distinguished two options. The first is to list only the critical elements (with significant uncertainty) for all LCA stages, while the second, more complete option consists of developing an uncertainty matrix, i.e., exhaustively identifying uncertainty sources and their level (see Section 2.1). The important uncertainty elements, i.e., those with significant uncertainty and contribution to the results, should then be characterized (as recommended by EC 2010). For quantities, the basic method is to define minimum and maximum values, while probability distributions can be defined at the intermediate level (following the procedure of Henriksson et al. 2014, which considers the spread and quality of the collected data points). Integrating correlations and statistical tests for the choice of distribution type is advised for advanced practitioners. This should preferably be done with specific primary data, complemented with literature data or expert judgment if necessary. If quantity uncertainty is characterized with very few data, different types of distributions could be tested, as in Lacirignola et al. (2017). Characterization with a variance or a fuzzy set is excluded from the table: variance-based methods are only included in CMLCA software, which is currently not broadly used by LCA practitioners, and their reliability is restricted to small variation ranges (see Sections 3.2 and 5.1). Even if they are not specified here, these techniques could be applied by CMLCA users as a preliminary step when the amount of uncertain data is high. Approaches based on fuzzy logic are not yet mature enough to be implemented by LCA practitioners but should be investigated further. To characterize the uncertainty of model structures and contexts, LCA practitioners need to develop alternative formulations, if possible with the support of relevant experts.
In addition, for advanced users, the model error can be estimated when validation data exist. Concerning uncertainty analysis, the basic approach is to define the range of results based on optimistic and pessimistic scenarios (defined from the ranges of quantities and the alternative model formulations). Then, Monte Carlo analysis (at least 1000 runs) can easily be performed with LCA software tools to propagate quantity uncertainty and estimate the distribution of results. The use of the ecoinvent database is recommended in order to include background uncertainties (not available in the GaBi or ELCD databases). For a comparative study, the sampling should be applied to the difference between systems (discernibility analysis) and, if time and budget allow, to the alternative scenarios defined to characterize model structure and context uncertainty. With advanced tools, sampling can be improved using Latin hypercube sampling, and multivariate sampling if correlations were defined. To identify the main contributors to the uncertainty of the results, i.e., sensitivity analysis, the only option with LCA software tools (except CMLCA) is one-at-a-time (OAT) variation, which should be based on realistic (not arbitrary) ranges. Advanced techniques (MoEE and GSA) allow a better understanding of the uncertainty effects (non-linear effects and interactions). Their application depends on the amount of uncertain data and the presence of correlations, following the procedure of Wei et al. (2015). For model structure/context uncertainty, only scenario analysis can be carried out. Finally, the minimum level of communication is to include a paragraph discussing the critical uncertainties in the LCA model and their effects on the results (qualitative approach).
The intermediate approach consists of presenting LCI data and LCA results with their uncertainty (e.g., in inventory table, error bars in histogram) and commenting on them in the abstract (to increase their visibility). Finally, results from advanced approaches can be presented depending on the technique applied.
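Latin hypercube sampling divides the probability range of each input into n equally likely strata and draws exactly one value per stratum, pairing strata across inputs at random. The sketch below maps such points to hypothetical lognormal inputs (given by geometric mean and geometric standard deviation) through the inverse CDF of Python's statistics.NormalDist and compares the spread of 100-run mean estimates obtained with Latin hypercube and simple random sampling. It does not impose correlations; multivariate sampling would require an additional step.

```python
import math
import random
import statistics


def latin_hypercube(n, k, rng):
    """n points in [0, 1)^k with exactly one point per stratum [j/n, (j+1)/n) in each dimension."""
    columns = []
    for _ in range(k):
        strata = list(range(n))
        rng.shuffle(strata)                      # random pairing of strata across inputs
        columns.append([(s + rng.random()) / n for s in strata])
    return [list(point) for point in zip(*columns)]


def simple_random(n, k, rng):
    return [[rng.random() for _ in range(k)] for _ in range(n)]


def lognormal_ppf(u, geo_mean, geo_sd):
    """Inverse CDF of a lognormal given its geometric mean and geometric standard deviation."""
    u = min(max(u, 1e-12), 1.0 - 1e-12)          # inv_cdf needs 0 < u < 1
    return math.exp(statistics.NormalDist(math.log(geo_mean), math.log(geo_sd)).inv_cdf(u))


def estimate_mean(sampler, n, rng):
    # hypothetical model: electricity use (kWh) x grid emission factor (kg CO2-eq/kWh)
    return statistics.mean(lognormal_ppf(u1, 2.0, 1.1) * lognormal_ppf(u2, 0.5, 1.3)
                           for u1, u2 in sampler(n, 2, rng))


rng = random.Random(11)
lhs_means = [estimate_mean(latin_hypercube, 100, rng) for _ in range(200)]
mc_means = [estimate_mean(simple_random, 100, rng) for _ in range(200)]
print(statistics.stdev(lhs_means), statistics.stdev(mc_means))
```

With the same number of runs, the Latin hypercube estimates of the mean are typically much less scattered than those from simple random sampling, which is why it can reduce the number of iterations needed.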

In order to facilitate and increase the reliability of the treatment of uncertainty, several developments should be investigated by LCA scholars and tool developers.

First, regarding research activities, fuzzy logic seems promising to translate expert judgment when data are lacking. The techniques to characterize, propagate, and analyze fuzzy sets should be further formalized and tested to improve their reliability and feasibility. The characterization of model structure and context uncertainty also raises research questions, e.g., about the relevance of defining probability distributions for choices or the method for gathering expert opinion to evaluate the quality of alternative scenarios. Regarding LCI databases, ecoinvent flows already contain probability distributions, for which the pedigree matrix criteria are being refined by the developers. These efforts should be continued (e.g., to express correlations) and replicated for other databases. Concerning LCIA methods, characterization factors (CFs) are currently provided without uncertainty (except in specific studies such as Mutel et al. 2013 and van Zelm and Huijbregts 2013). It is essential to change this practice in order to propagate uncertainties from both the LCI and the LCIA. Regarding LCA software tools, the developers should extend their functions. Some extensions should be feasible in the short term, e.g., the possibility to calculate correlation coefficients and contributions to variance for uncertain foreground data after running the Monte Carlo analysis. Some functions are already present in some tools and could be made available in others, e.g., the variance-based methods (implemented in CMLCA), the possibility to define more types of probability distributions (as in Brightway2) for both LCI and LCIA data (as in CMLCA and OpenLCA), and the automatic calculation of uncertainty based on pedigree scores (available in CMLCA and OpenLCA). Finally, additional developments should be carried out in the medium/long term, such as the implementation of eFAST or Sobol techniques, Latin hypercube sampling, or the reduction of calculation time.

8 Conclusions

This paper presents the main techniques applied to treat uncertainty in LCA, i.e., to characterize uncertainty sources, propagate them to the results (uncertainty analysis), analyze their effects (sensitivity analysis), and communicate about uncertainty. By briefly explaining the concepts, giving examples and references, and summarizing the applicability and reliability of the methods in a common scheme, the authors hope that this study can increase LCA practitioners' knowledge of uncertainty. In addition, the recommendations, which take into account the current functions of LCA software tools, should facilitate the application of uncertainty treatment techniques and further increase the reliability and transparency of LCA studies. This review also highlights that even with very little data and common LCA software, it is possible to propagate and analyze uncertainties, e.g., via the definition of alternative scenarios or via OAT variations, and that a qualitative assessment can be performed in any case (e.g., assigning the level of uncertainty in the different LCA stages). Practitioners should thus not be afraid to treat uncertainty; on the contrary, this work should help them better understand their models and the related results. The generalization of uncertainty and sensitivity analysis and its transparent communication will make the LCA field more robust and credible for supporting decisions. To move forward in this direction, further research questions should be investigated (e.g., the application of fuzzy logic, the characterization of model uncertainty), and the developers of databases, LCIA methods, and software tools should invest effort in better implementing and treating uncertainty in LCA.

References

Ardente F, Beccali M, Cellura M (2004) F.A.L.C.A.D.E.: a fuzzy software for the energy and environmental balances of products. Ecol Model 176:359–379

Baudrit C, Guyonnet D, Dubois D (2005) Post-processing the hybrid method for addressing uncertainty in risk assessments. Environ Eng 131:1750–1754

Benetto E, Dujet C, Rousseaux P (2008) Integrating fuzzy multicriteria analysis and uncertainty evaluation in life cycle assessment. Environ Model Softw 23:1461–1467

Bisinella V, Conradsen K, Christensen TH, Astrup TF (2016) A global approach for sparse representation of uncertainty in life cycle assessments of waste management systems. Int J Life Cycle Assess 21:378–394

Bojaca CR, Schrevens E (2010) Parameter uncertainty in LCA: stochastic sampling under correlation. Int J Life Cycle Assess 15:238–246

Ciroth A, Muller S, Weidema B, Lesage P (2015) Empirically based uncertainty factors for the pedigree matrix in ecoinvent. Int J Life Cycle Assess 21(9):1338–1348

Clavreul J, Guyonnet D, Tonini D, Christensen TH (2013) Stochastic and epistemic uncertainty propagation. Int J Life Cycle Assess 18:1393–1403

Cruze N, Goel PK, Bakshi BR (2013) On the “rigorous proof of fuzzy error propagation with matrix-based LCI”. Int J Life Cycle Assess 18:516–519

Cucurachi S, Heijungs R (2014) Characterisation factors for life cycle impact assessment of sound emissions. Sci Total Environ 468:280–291

De Koning A, Schowanek D, Dewaele J, Weisbrod A, Guinée J (2010) Uncertainties in a carbon footprint model for detergents; quantifying the confidence in a comparative result. Int J Life Cycle Assess 15:79–89

European Commission (2010) International Reference Life Cycle Data System (ILCD) Handbook—general guide for life cycle assessment—detailed guidance. Joint Research Centre—Institute for Environment and Sustainability. First edition March 2010. EUR 24708 EN. Publications Office of the European Union, Luxembourg

European Commission (2011) International Reference Life Cycle Data System (ILCD) Handbook—recommendations for life cycle impact assessment in the European context—based on existing environmental impact assessment models and factors. Joint Research Centre—Institute for Environment and Sustainability. First edition November 2011. EUR 24571 EN. Publications Office of the European Union, Luxembourg

Frey HC, Li S (2002) Methods and example for development of a probabilistic per-capita emission factor for VOC emissions from consumer/commercial product use. In Proceedings of the 95th Annual Conference & Exhibition of Air & Waste Management, Baltimore, MD, June 2002; Paper 42162

Frischknecht R, Jungbluth N, Althaus HJ, Doka G, Heck T, Hellweg S, Hischier R, Nemecek T, Rebitzer G, Spielmann M, Wernet G (2007) Overview and methodology. Ecoinvent report no. 1. Swiss Centre for Life Cycle Inventories, Dübendorf

Gavankar S, Anderson S, Keller AA (2014) Critical components of uncertainty communication in life cycle assessments of emerging technologies–nanotechnology as a case study. J Ind Ecol 19(3):468–479

Geisler G, Hellweg S, Hungerbühler K (2005) Uncertainty analysis in life cycle assessment (LCA): case study on plant-protection products and implications for decision making. Int J Life Cycle Assess 10(3):184–192

Geldermann J, Spengler T, Rentz O (2000) Fuzzy outranking for environmental assessment—case study: iron and steel making industry. Fuzzy Sets Syst 115:45–65

Groen EA, Heijungs R (2016) Ignoring correlation in uncertainty and sensitivity analysis in life cycle assessment: what is the risk? Environ Impact Assess Rev 62:98–109

Groen EA, Heijungs R, Bokkers EAM, de Boer IJM (2014) Methods for uncertainty propagation in life cycle assessment. Environ Model Softw 62:316–325

Groen EA, Bokkers EAM, Heijungs R, de Boer IJM (2016) Methods for global sensitivity analysis in life cycle assessment. Int J Life Cycle Assess 22(7):1125–1137

Güereca LP, Agel N, Baldasano JM (2007) Fuzzy approach to life cycle impact assessment—an application for biowaste management systems. Int J Life Cycle Assess 12(7):486–496

Guo M, Murphy RJ (2012) LCA data quality: sensitivity and uncertainty analysis. Sci Total Environ 435-436:230–243

Guyonnet D, Bourgine B, Dubois D, Fargier H, Côme B, Chilès JP (2003) Hybrid approach for addressing uncertainty in risk assessments. Environ Eng 129:68–78

Heijungs R (1994) A generic method for the identification of options for cleaner products. Ecol Econ 10:69–81

Heijungs R (2010) Sensitivity coefficients for matrix-based LCA. Int J Life Cycle Assess 15:511–520

Heijungs R, Frischknecht R (2005) Representing statistical distributions for uncertain parameters in LCA—relationships between mathematical forms, their representation in EcoSpold, and their representation in CMLCA. Int J Life Cycle Assess 10(4):248–254

Heijungs R, Huijbregts MAJ (2004) A review of approaches to treat uncertainty in LCA. In: Pahl-Wostl C, Schmidt S, Rizzoli AE, Jakeman AJ (eds) Complexity and integrated resources management, University of Osnabrück, Germany, 14–17 June 2004. International Environmental Modelling and Software Society, Manno, pp 332–339

Heijungs R, Kleijn R (2001) Numerical approaches towards life cycle interpretation—five examples. Int J Life Cycle Assess 6(3):141–148

Heijungs R, Lenzen M (2014) Error propagation methods for LCA—a comparison. Int J Life Cycle Assess 19:1445–1461

Heijungs R, Tan RR (2010) Rigorous proof of fuzzy error propagation with matrix-based LCI. Int J Life Cycle Assess 15:1014–1019

Henriksson PJG, Guinée JB, Heijungs R, de Koning A, Green DM (2014) A protocol for horizontal averaging of unit process data—including estimates for uncertainty. Int J Life Cycle Assess 19:429–436

Hong J, Shaked S, Rosenbaum RK, Jolliet O (2010) Analytical uncertainty propagation in life cycle inventory and impact assessment: application to an automobile front panel. Int J Life Cycle Assess 15:499–510

Huijbregts MAJ, Gilijamse W, Ragas ADMJ, Reijnders L (2003) Evaluating uncertainty in environmental life-cycle assessment. A case study comparing two insulation options for a Dutch one-family dwelling. Environ Sci Technol 37:2600–2608

Hung ML, Ma HW (2009) Quantifying system uncertainty of life cycle assessment based on Monte Carlo simulation. Int J Life Cycle Assess 14:19–27

Igos E, Benetto E (2015) Uncertainty sources in LCA, calculation methods and impacts on interpretation. Study no. 2014-03, SCORELCA association, available at https://www.scorelca.org/en/studies-lca.php Accessed 29 August 2017

Imbeault-Tétrault H, Jolliet O, Deschênes L, Rosenbaum RK (2013) Analytical propagation of uncertainty in life cycle assessment using matrix formulation. J Ind Ecol 17(4):485–492

ISO (International Organization for Standardization) (2006a) Environmental management—Life cycle assessment—Principles and framework. ISO 14040:2006; Second Edition 2006-06. ISO, Geneva

ISO (International Organization for Standardization) (2006b) Environmental management — Life cycle assessment — Requirements and guidelines. ISO 14044:2006; First edition 2006-07-01. ISO, Geneva

Jung J, von der Assen N, Bardow A (2014) Sensitivity coefficient-based uncertainty analysis for multi-functionality in LCA. Int J Life Cycle Assess 19:661–676

Kätelhön A, Bardow A, Suh S (2016) Stochastic technology choice model for consequential life cycle assessment. Environ Sci Technol 50:12575–12583

Lacirignola M, Blanc P, Girard R, Pérez-López P, Blanc I (2017) LCA of emerging technologies: addressing high uncertainty on inputs' variability when performing global sensitivity analysis. Sci Total Environ 578:268–280

Lloyd SM, Ries R (2007) Characterizing, propagating, and analyzing uncertainty in life-cycle assessment. J Ind Ecol 11(1):161–179

Lo SC, Ma H, Lo SL (2005) Quantifying and reducing uncertainty in life cycle assessment using the Bayesian Monte Carlo method. Sci Total Environ 340:23–33

Malça J, Freire F (2012) Addressing land use change and uncertainty in the life-cycle assessment of wheat-based bioethanol. Energy 45:519–527

Maurice B, Frischknecht R, Coelho-Schwirtz V, Hungerbühler K (2000) Uncertainty analysis in life cycle inventory. Application to the production of electricity with French coal power plants. J Clean Prod 8:95–108

Mendoza Beltran A, Heijungs R, Guinée J, Tukker A (2016) A pseudo-statistical approach to treat choice uncertainty: the example of partitioning allocation methods. Int J Life Cycle Assess 21:252–264

Mery Y, Tiruta-Barna L, Baudin I, Benetto E, Igos E (2014) Formalization of a technical procedure for process ecodesign dedicated to drinking water treatment plants. J Clean Prod 68:16–24

Morgan MG, Henrion M, Small M (1990) Uncertainty: a guide to dealing with uncertainty in quantitative risk and policy analysis. Cambridge University Press, New York

Muller S, Lesage P, Ciroth A, Mutel C, Weidema BP, Samson R (2016a) The application of the pedigree approach to the distributions foreseen in ecoinvent v3. Int J Life Cycle Assess 21(9):1327–1337

Muller S, Lesage P, Samson R (2016b) Giving a scientific basis for uncertainty factors used in global life cycle inventory databases: an algorithm to update factors using new information. Int J Life Cycle Assess 21:1185–1196

Mutel CL, de Baan L, Hellweg S (2013) Two-step sensitivity testing of parametrized and regionalized life cycle assessments: methodology and case study. Environ Sci Technol 47:5660–5667

Noori M, Tatari O, Nam B, Golestani B, Greene J (2014) A stochastic optimization approach for the selection of reflective cracking mitigation techniques. Transp Res 69:367–378

Oreskes N, Shrader-Frechette K, Belitz K (1994) Verification, validation, and confirmation of numerical models in the earth sciences. Science 263(5147):641–646

Padey P, Beloin-Saint-Pierre D, Girard R, Le-Boulch D, Blanc I (2012) Understanding LCA results variability: developing global sensitivity analysis with Sobol indices. Int Symp Life Cycle Assess Constr Civ Eng Build, July 2012. RILEM Publications, Nantes, pp 19–27

Refsgaard JC, van der Sluijs JP, Brown J, van der Keur P (2006) A framework for dealing with uncertainty due to model structure error. Adv Water Resour 29:1586–1597

Röder M, Whittaker C, Thornley P (2015) How certain are greenhouse gas reductions from bioenergy? Life cycle assessment and uncertainty analysis of wood pellet-to-electricity supply chains from forest residues. Biomass Bioenergy 79:50–63

Sakai S, Yokoyama K (2002) Formulation of sensitivity analysis in life cycle assessment using a perturbation method. Clean Techn Environ Policy 4:72–78

Saltelli A, Annoni P (2010) How to avoid a perfunctory sensitivity analysis. Environ Model Softw 25:1508–1517

Sonnemann GW, Schuhmacher M, Castells F (2003) Uncertainty assessment by a Monte Carlo simulation in a life cycle inventory of electricity produced by a waste incinerator. J Clean Prod 11:279–292

Sugiyama H, Fukushima Y, Hirao M, Hellweg S, Hungerbühler K (2005) Using standard statistics to consider uncertainty in industry-based life cycle inventory databases. Int J Life Cycle Assess 10(6):399–405

Tan RR (2008) Using fuzzy numbers to propagate uncertainty in matrix-based LCI. Int J Life Cycle Assess 13(7):585–592

Thabrew L, Lloyd S, Cypcar C, Hamilton JD, Ries R (2008) Life cycle assessment of water-based acrylic floor finish maintenance programs. Int J Life Cycle Assess 13(1):65–74

Van Asselt MBA, Rotmans J (2001) Uncertainty in integrated assessment modelling–from positivism to pluralism. Clim Chang 54:75–105

Van Zelm R, Huijbregts MAJ (2013) Quantifying the trade-off between parameter and model structure uncertainty in life cycle impact assessment. Environ Sci Technol 47:9274–9280

Walker WE, Harremoës P, Rotmans J, van der Sluijs JP, van Asselt MBA, Janssen P, Krayer von Krauss MP (2003) Defining uncertainty: a conceptual basis for uncertainty management in model-based decision support. Integr Assess 4(1):5–17

Wang E, Shen Z (2013) A hybrid data quality indicator and statistical method for improving uncertainty analysis in LCA of complex system – application to the whole-building embodied energy analysis. J Clean Prod 43:166–173

Weckenmann A, Schwan A (2001) Environmental life cycle assessment with support of fuzzy-sets. Int J Life Cycle Assess 6(1):13–18

Wei W, Larrey-Lassalle P, Faure T, Dumoulin N, Roux P, Mathias JD (2015) How to conduct a proper sensitivity analysis in life cycle assessment: taking into account correlations within LCI data and interactions within the LCA calculation model. Environ Sci Technol 49:377–385

Weidema BP, Wesnaes MS (1996) Data quality management for life cycle inventories—an example of using data quality indicators. J Clean Prod 4(3–4):167–174

Weidema BP, Bauer C, Hischier R, Mutel C et al (2013) Overview and methodology. Data quality guideline for the ecoinvent database version 3. The ecoinvent Centre, St. Gallen

Zamagni A, Buttol P, Porta PL, Buonamici R et al (2008) Critical review of the current research needs and limitations related to ISO-LCA practice - Deliverable D7 of work package 5 of the CALCAS project. http://www.estis.net/builder/includes/page.asp?site=calcas&page_id=8215FF89-5114-4748-BE6C-1F0F1E69DAF5. Accessed 29 August 2017

Acknowledgements

The authors gratefully acknowledge the members of the SCORELCA association for their feedback on the review of and recommendations for uncertainty treatment methods.

Funding

This work was funded by the SCORELCA association (study 2014-03).

Additional information

Responsible editor: Adisa Azapagic

Electronic supplementary material

ESM 1 (XLSX 30 kb)

About this article

Cite this article

Igos, E., Benetto, E., Meyer, R. et al. How to treat uncertainties in life cycle assessment studies? Int J Life Cycle Assess 24, 794–807 (2019). https://doi.org/10.1007/s11367-018-1477-1

DOI: https://doi.org/10.1007/s11367-018-1477-1