Abstract

With the advent of the ‘age of conspiracism’, the harmful effects of conspiratorial narratives and mindsets on individuals’ mentalities, on social relations, and on democracy have been widely researched by political scientists and psychologists. One known negative effect of conspiracy theories is escalation toward political radicalism. This study goes beyond exploring the mechanisms underpinning the relationship between conspiracy theory and radicalization to focus on possible approaches to mitigating that relationship. Through an experiment (N = 300), it sheds light on the role of counter-conspiracy approaches in the process of deradicalization, adopting a case study of anti-China sentiment and racial prejudice amid the Covid-19 pandemic. The results suggest that, during critical events such as the Covid-19 pandemic, exposure to countermeasures to conspiracist information can reduce individual acceptance of radicalism. We investigated two methods of countering conspiracy theory and found that: (1) a content-targeted ‘inoculation’ approach can prevent the intensification of radicalization but does not produce a significant deradicalization effect; and (2) an audience-focused ‘disenchantment’ method can enable cognitive deradicalization, effectively reducing the perception of competitive victimhood and of realistic and symbolic threats. This study is one of the first attempts to address causality between countermeasures to conspiracy theories and deradicalization in the context of US-China relations.

First reported as a local epidemic in Wuhan city in early December 2019, coronavirus (Covid-19) infections had, at the time of writing, exceeded 556 million worldwide, with more than 6.3 million deaths. Governments around the world have launched various draconian and often uneven restrictions to combat this indeterminate, generalized threat, and social discontent has been strong and persistent. Conspiracy theories regarding the origin of the virus, its transmission routes, and a possible vaccine have spread almost as quickly as the virus itself. Popular, novel, and eye-catching examples include the claims that the virus was (deliberately or not) leaked from China’s Wuhan Institute of Virology; that 5G networks can spread the virus; and that Bill Gates and the ID2020 coalition aim to build a global surveillance state by using a Covid-19 vaccine to ‘microchip’ the world population [37, 100]. Such conspiracist rhetoric is not only accumulated and disseminated by anonymous social media users; some of it has even been integrated into official discourse and directly or indirectly encouraged by government leaders and senior officials. For example, Brazil’s education minister has suggested that the pandemic was part of the Chinese government’s ‘plan for world domination’.

Citizens around the world have suffered the brutal force of these ‘infodemic’ politics [116]. With the advent of an ‘age of conspiracism’, the harmful effects of conspiratorial narratives and mindsets on individuals’ mentalities, on social relations, and on democracy have been widely researched by social psychologists and political scientists [e.g., 33, 53, 54, 104, 106]. The conspiracist narratives about Covid-19 have been dynamic, adapting to social contexts and increasingly emboldening political radicalism across the globe. Several far-right political parties and groups, including those in the United States, United Kingdom, Italy, Spain and Germany, have latched onto the Covid-19 crisis to advance anti-immigrant, white supremacist and xenophobic conspiracy theories that demonize foreigners and prominent individuals. Conspiracist narratives reinforce ideas of who is ‘clean’, and advocate varied forms of ‘epidemiological placism’ [96] and ‘racist biopolitics’ [61]. Within conspiracy theories, the unequivocal condemnation of political opposition and the call for the urgent eradication of dissent undermine pluralist democratic discourse, thus fueling political polarization and radicalization [4, 45, 99]. The proliferation of conspiracy theories has also coincided with an escalation of radical, racist attacks in the US, the UK, Australia and Africa (e.g., NBC [78]; The [102]).

Five decades ago, Hofstadter drew a clear association between extremist views, antagonistic attitudes and conspiracy beliefs in his discussion of the ‘Paranoid Style’ [46]. Recent studies have further theorized belief in conspiracy theories as a potential amplifier of radicalization [64]. However, in the face of ongoing and unprecedented racial and international tensions, few studies have gone beyond exploring the conspiracy theory–radicalization mechanism to focus on possible approaches to mitigating the negative effects of infodemic politics. This study sheds light on the role of counter-conspiracy strategies in the process of deradicalization, adopting a case study of anti-China sentiment and racial discrimination amid the Covid-19 pandemic.

In this study, we argue that, during a critical event such as the Covid-19 pandemic, exposure to conspiracy-theory countermeasures can lead to diminished acceptance of radicalism. We examine the efficacy of two methods of countering conspiracy theories. Our results show that the relationship between (counter-)conspiracy theories and (de)radicalization is more complicated than we predicted. We found that the content-focused approach to countering conspiracy theories (the ‘inoculation strategy’ in our study) can prevent the intensification of radicalization but does not produce a significant deradicalization effect. However, we also found that an audience-focused method (a ‘disenchantment’ approach) can enable cognitive deradicalization. The effects of the disenchantment treatment on individuals’ adoption of radicalized sentiments were shown to be negatively mediated by competitive victimhood, realistic threat (which relates to threats an outgroup is perceived to pose to an ingroup’s existence), and symbolic threat. This finding bridges the previously separate discussions on the psychological factors leading to (de)radicalization and the cognitive influences of conspiracy theory.

Revisiting Conspiracy Theory Research

It is important to start our discussion with a definition of conspiracy theories, differentiating the concept from other closely related (and sometimes overlapping) types of misleading information such as fake news, disinformation, and misinformation. Fake news is usually viewed as “…fabricated information that mimics news media content in form but not in organizational process or intent. Fake news outlets, in turn, thus lack the news media’s editorial norms and processes for ensuring the accuracy and credibility of information” [65, p.1094]. Bennett and Livingston [9] define disinformation as “intentional falsehoods spread as news stories or simulated documentary formats to advance political goals” (p.124). The concept of disinformation thus refers to problematic information deliberately aimed at deceiving others, while misinformation refers to false or inaccurate information circulating as a result of honest mistakes, negligence, or unconscious biases.

Unlike fake news, disinformation, and misinformation, the concept of conspiracy theory is concerned less with the information per se than with people’s beliefs about and perceptions of that information. ‘Conspiracy theories’ are commonly viewed as ‘explanatory beliefs’ (either speculative or evidence-based) or ‘worldviews’ that are used to make sense of historical causality and political events [13, 34]. A conspiracy theory can be defined as “an explanation of historical, ongoing, or future events that cites as a main causal factor a small group of powerful persons, the conspirators, acting in secret for their own benefit against the common good” [104, p.32]. Existing studies highlight some characteristics or ‘rules’ of conspiracy theories, including unrealistic assumptions about the potency of causation (‘pervasive potency’), a binary of pure good and evil (‘Manichean binary’), and an elusive style of warranting and validating knowledge (‘elusive epistemology’) [4].

A culture of suspicion and conspiracy is not an uncommon response to today’s complex and secretive post-modern society, and can be seen as a rational attempt to understand social and political contexts [40, 56, 104]. This paper views belief in conspiracy theories as a process of motivated, rational reasoning. At the individual level, in line with theories of cognitive dissonance, individuals’ conspiratorial beliefs are likely to depend on the extent to which conspiracy theories correspond with their pre-existing stances, attitudes and beliefs [67]. From a group-based perspective, conspiracy theories are viewed as a form of ‘motivated collective cognition’, as they can offer important advantages for the ingroup, such as raising collective self-esteem, putting the blame on external actors, and strengthening ingroup identity via victimization. In the context of the Covid-19 pandemic, individual and situational factors interact to amplify conspiracy beliefs to a greater extent than any single factor does on its own [74].

With regard to the consequences of conspiracy theories and beliefs, empirical research shows that exposure to conspiracy theories can: directly increase negative feelings of powerlessness, disillusionment, uncertainty, mistrust, and anomie [32, 53, 54]; decrease individuals’ trust in government and discourage political engagement, such as voting [59]; interfere with intergroup relations by stirring up prejudice and discrimination [101]; fuel violence towards others [7]; and lead people to disengage from social norms, making them more likely to engage in counter-normative behavior [58]. In addition, in the special cases of environmental issues and public health emergencies, conspiracy mindsets can lead to science denialism, affect individuals’ medical choices, and exacerbate public health crises [16, 23].

Radicalization and Deradicalization

The term ‘radical’ is usually treated as the opposite of ‘moderate’, indicating a relative position on a continuum of organized opinion [93, p.481]. Individuals can move toward radicalism through ascending stages of radicalization [77, p.241]; radicalization thus indicates movement along that continuum [93, p.481]. Radicalism is a point of transition from a stage of activism, characterized by psychological and behavioral readiness to engage in “legal and non-violent political action”, toward one characterized by “readiness to engage in illegal and violent political action” [77, p.240]. It involves turning toward a stage of extremism, whose psychological characteristics include being inflexible, closed-minded, and non-compromising, having a ‘black and white’ view of the world, being intolerant of difference, and having a propensity for mass political violence when the opportunity offers itself [77, 91].

Radicalization is a process of relative change, a socialization during which the internalization and adoption of radical views leads to the legitimation of political violence [e.g., 48, 95]. It consists of movement toward supporting or enacting radical behavior, which has been theorized through various types of developmental models (e.g., [60, 76, 88, 114]). Individual radicalization consists of three major ingredients: the motivational element, which is the goal of one’s activity [8, 70, 90, 107]; the ideology that identifies the means to that goal [17, 86, 87, 121]; and the social process (group dynamics serving as the vehicle whereby the individual comes into contact with the ideology) [86, 87, 111]. Notably, ideology, regardless of its specific content, whether ethno-nationalist, socialist, or religious, has the central function of suggesting means. This study treats the ideological component of radicalization essentially as a violence-justifying means rather than as a specific content of belief. It is concerned with the motivational element, the perceived means to attain the goal, and the individual’s psychological perception of social and group conditions and dynamics.

A person undergoing the transformative process toward radicalism can be motivated by multiple, interrelated psychological forces. These can be individual experiences characterized by intense trouble, difficulty or danger leading to personal instability, or the perception that one’s community or group faces an external, imminent existential threat [47, 52, 63, 114]. Social identity perspectives show how biased dynamics convince individuals that their personal disadvantages are largely the result of their membership in a community that has been collectively victimized or threatened, and that community grievances will be alleviated, and threats to community survival ameliorated, only by resorting to violence [52, p.1081]. Moreover, these real or perceived threats may turn into heightened forces that drive radicalization through a quest for significance or the avoidance of an anticipated (or threatened) loss of significance [63; 112, p.853]. One motivational condition that activates this quest for significance is ‘relative deprivation’, a comparison process whereby one perceives the self (egoistic deprivation) or one’s ingroup (fraternal deprivation) as not having what they deserve [112, p.854].

These psychological forces of radicalization may compel individuals to justify the use of violence in attaining their goals. Three components of violence-justifying ideology are involved in this process: a grievance (injustice, harm) believed to have been suffered by one’s group [2, 29]; a culprit presumed responsible for that grievance; and a morally warranted and effective method of removing the dishonor created by the supposed injustice [63, 76]. Political and psychological research has pointed to grievances, victimization, and perceived and fraternal deprivation as some of the primary push factors leading people to adopt more violent ideologies [11, 76].

Deradicalization constitutes a reversal of radicalization, indicated by a reduced commitment to the focal, ideological goal, or a reduced commitment to violence as a means to achieve that goal [63, p.84; 90]. These violent means might be relinquished on either moral grounds or grounds of ineffectiveness [63, p.85]. Further, the de-legitimization of the use of violence may depend on a revision of the individual’s worldview involving five components: de-naturalization, clarification, differentiation, complication and restructuration [92, p.46; 103]. These deradicalizing processes can reduce the individual’s commitment to embracing an all-encompassing exclusionary ideology and lower the degree of entitativity and cognitive closure that differentiates between ingroup and outgroup, thus weakening the individual’s identification with rigidly structured, intolerant, and ethnocentric social groups.

Conspiracy Theory–Radicalization Dynamics

Investigating the interrelations between conspiracy theories and radicalization is a recent academic effort. A few studies have pointed out that conspiracy theories have great potential to fuel prejudice, discrimination, and violence towards others, and thus to accelerate political extremism and the process of radicalization. For instance, Bartlett and Miller [7] have contended that conspiracy theories act as ‘radicalization multipliers’ within groups; similarly, Lee [64] has suggested that conspiracy theorizing can be a by-product of engaging in extremist spaces, especially during times of political and social uncertainty. Recent studies have also suggested that political extremists, especially conservative ones, are more likely to believe conspiracy theories, and that extremity predicts such alternative beliefs only when the beliefs in question are partisan in nature and ideology is measured in identity-based terms [31, 35, 49, 105].

Moreover, both conspiracy theories and political extremity derive from, and gain their profound appeal during, times of political uncertainty and the ‘agency panic’ that follows [73]. Indeed, political psychologists have contended that people are motivated to view powerful others as conspiring against them when they experience fear, anxiety, and loss of control and significance [50, 80, 106, 112], which leads to a need to engage with groups and ideas that promise a radical alternative [e.g., 76, 86, 87].

One potential mechanism through which conspiracy theories can facilitate radical sentiments and behaviors is that conspiratorial narratives and thinking enhance ingroup bonding and exacerbate outgroup antagonism. Previous studies have demonstrated that stereotypes, prejudice and outgroup hostility are both antecedents and consequences of conspiracy belief. People’s pre-existing attitudes and beliefs (e.g., racism, prejudice, collective narcissism) predict endorsement of outgroup conspiracy theories [19]. This means that those who hold such mentalities deem conspiracy-driven explanations of political events more acceptable, viewing other nations as hostile toward their own [97]. Conspiracy theories provide clear and unambiguous narratives, structuring the world into ingroups and outgroups, reinforcing the sense of specialness that comes from having access to insider knowledge, and enhancing the appeal of extremist narratives. Furthermore, conspiracy theories project prejudice more strongly in times of political uncertainty, and provide a “moral justification for immoral actions” [25, p.115] such as intergroup discrimination. Some studies have substantiated that both conspiracy narratives and radical sentiments can lead groups towards violence by exaggerating or creating an outgroup ‘threat’, shutting down dissenting voices, and legitimizing the use of violence in order to awaken others [7, 76, 77].

The advent of the digital epoch has further aggravated the situation, strengthening the reciprocal causation between ‘conspiracy beliefs’ and ‘outgroup hostility and radicalization’. Due to selective media exposure and confirmation bias, people tend to accept information that adheres to their pre-existing attitudes and beliefs while avoiding information that contradicts them. Del Vicario et al. [27] have shown that this also holds for the consumption and diffusion of conspiracy theories: online environments reinforce people’s pre-existing conspiracy worldviews. Hence, there exists a ‘spiral of conspiracy belief’: those who hold racist views or see China as a geostrategic threat to the United States are more likely to subscribe to content regarding China-related conspiracy theories; then, after conspiratorial media exposure, their suspicion of and hostility towards China and the Chinese people are strengthened, making future efforts to debunk these beliefs increasingly difficult.

Existing studies exploring ‘conspiracy theory–radicalization’ dynamics have suggested that conspiracy beliefs might heighten a set of factors, such as perceived threat and relative victimhood, that can be positively correlated with an individual’s susceptibility to radicalized views against certain social groups in times of political uncertainty. Perhaps in part due to this theoretical position, the existing literature has placed a lopsided emphasis on conspiracy information that accelerates individuals’ adoption of more radicalized views. As such, countermeasures that might produce an antidote to radicalization have not received an equal degree of academic attention. Building on the intellectual landscapes of radicalization and conspiracy theory research that have evolved over the last few years, this paper attempts to take a step further by analyzing the intersection of deradicalization and strategies for debunking conspiracy theory belief, asking: can debunking conspiracy theories play a positive role in deradicalization? We hypothesize that if conspiracy theory information contributes to radicalization, then countermeasures against conspiracy theory should moderate such radical sentiments.

H1: Debunking conspiratorial information can mitigate the effects of radicalization or even result in deradicalization.

Content-Targeted and Audience-Focused Intervention

This study is part of the academic effort to theorize and develop multiple approaches to combating conspiracy theories (e.g., [36, 41, 66]). Source-targeting interventions focus on the supply side of conspiracy theory, relying on governmental policies, legislation and social media companies’ censorship to reduce the chances of audiences encountering conspiratorial information [84]. Despite some successes in combating conspiracy discourses, such interventions are sometimes viewed as morally problematic (the growth of social media censorship, as part of extended social control, may pose an existential threat to freedom of speech [62]), technically ineffective (conspiracy narratives can spread through the insidious approach of ‘just asking questions’, making regulations and algorithm-based filters useless), and economically unviable (sensationalist and eye-catching conspiratorial discourses are profitable in the “clickbait” media economy, incentivizing many online platforms to facilitate the dissemination of such content). Given these limitations of supply-side interventions, developing effective methods on the demand (or acceptance) side is more important than ever [23].

The present investigation sheds light on the acceptance side of countering conspiracy theories. This line of academic enquiry attempts to discover whether it is possible to mitigate the negative influence of conspiratorial media exposure on individuals [e.g., 57, 113, 118]. These endeavors can be generally classified into two subtypes: content-targeted and audience-focused. The former aims at debunking conspiracy theories’ arguments, in the hope that this will discredit them in the eyes of the observer [5]. The conventional approach is to uncover the logical and factual inconsistencies of a conspiracy narrative and thus undermine its credibility [18, 108]. One common approach is fact-checking. Recent research offers compelling evidence that fact-checking messages can be effective in promoting accurate beliefs following exposure to false information, at least in research settings [118]. However, this method is limited in its external applicability, since most people do not actively seek out fact-checking messages and rarely encounter them when using social media. Another widely adopted content-targeted method is the ‘inoculation’ strategy. This begins by presenting weak persuasive arguments and misinformation (i.e., containing obvious logical fallacies), which are expected to ‘inoculate’ the subject’s attitudinal immune system against similar threats in the future [5, 22]. This approach draws on psychological inoculation theory to help cultivate ‘mental antibodies’ against fake news and misinformation [5]. Lewandowsky’s [66] recent research finds that climate misinformation is best disarmed through a process of inoculation. Furthermore, in one preregistered study, the inoculation effect against disinformation was shown to remain stable over a week-long delay [69]. Another relevant example is Roozenbeek and van der Linden’s [85] ‘Bad News’, a browser game in which players take on the role of fake news creators and learn about several common misinformation techniques. This game has shown consistent and significant inoculation effects.

Yet a key issue remains when conducting inoculation treatment: the inoculation approach was originally developed, and is often used, with the aim of protecting individuals’ (positive) pre-existing viewpoints from the influence of future malicious information [5, 6]. The mechanism behind the approach also depends partly on people’s engagement in “identity-protective motivated reasoning”. However, people’s pre-existing convictions can vary widely, and many people already see the outside world through certain ideologies. Miller et al. [75] argued that the tendency to endorse a conspiracy theory is highest among people who have, inter alia, a particular ideological worldview to which the conspiracy theory can be linked, and who are motivated to protect that worldview. Inoculation may be less effective for this subpopulation, as people with such pre-existing beliefs may find a conspiracy discourse (even if it contains obvious logical errors) consistent with their convictions, making it prone to integration into their conspiratorial worldviews. Therefore, this content-focused approach may have only a very limited effect in reducing the radicalized views of certain subgroups. Based on the above discussion, we make the following prediction regarding the role of the inoculation strategy in deradicalization:

H1a: The content-targeted inoculation strategy can mitigate the effects of radicalization, but does not produce deradicalization.

On the other hand, ‘audience-focused’ (or ‘human-focused’) debunking approaches decode the mechanisms of conspiracy theories in order to improve individuals’ logical thinking, analytic thinking and psychological condition. For instance, Swami [101] found that verbal fluency and cognitive fluency tasks eliciting analytic thinking reduced belief in conspiracist ideation. Moreover, building feelings of certainty, trust, control and self-efficacy through methods such as recalling experiences of successfully controlled events, has been suggested as a possible way to reduce conspiracy theorizing [30]. This paper theorizes a disenchantment approach that focuses on educating people on the nature and features of conspiracy theories, attempting to cast light on the mechanisms that allow them to infiltrate people’s belief systems (e.g., what is a conspiracy theory? Why do people seek conspiracy messages during political uncertainty?). This approach aims to help individuals better understand the information-processing of conspiracy theories, their micro-systems, and their psychological antecedents. This is expected to strengthen feelings of self-efficacy, self-understanding, self-control, and to reduce conspiratorial ideations.

Notably, the disenchantment treatment attempts to subvert the cultural familiarity of conspiracy texts’ intended meaning. By focusing on deconstructing the cultural mechanism of conspiracy discourses, the disenchantment approach encourages varied degrees of cognitive intervention into the interpretations of conspiracy theories. As such, if the belief worked upon were racist in nature, the disenchantment treatment should be more effective than an approach focused only on the media content. The content-targeted approach does not account for the possibility that false propositions sometimes make sense because of factors such as pre-existing conspiracy or racist convictions. The disenchantment approach, however, counters such false but seemingly sensible claims by exposing the highly biased process of production underpinning conspiracy theories, helping to establish that these claims do not make sense a priori. In other words, the disenchantment approach focuses on exposing the problems of conspiracy theory’s epistemological base (regarded as “elusive epistemology” [4]), which are not made explicit in the process of truth verification and validation.

As discussed earlier, the content-focused inoculation strategy may not be able to reduce the radicalizing effects of conspiracy theories among those with conducive pre-existing beliefs. The disenchantment approach helps address the culturally biased context that constitutes the falsehood of claims, even though they may appear to make sense to groups with certain racist convictions. Compared with the content-focused approach, we can therefore expect the audience-focused disenchantment approach to be more effective in reducing radicalized views fueled by conspiracy theories. We thus make the following prediction regarding the role of the disenchantment strategy in deradicalization:

H1b: The audience-focused, disenchantment approach can mitigate the effects of radicalization or even result in deradicalization.

The Mediating Role of Counter-Narratives

The mediating role of counter-conspiracist narratives on radical sentiments has not yet been adequately addressed in the existing literature. Existing arguments that hold a ‘coupling’ view of ‘conspiracy theory–radicalization’ treat conspiracy theory and radicalization as implicated in, co-constituted by, and co-evolving with each other. From interactionist or relationalist perspectives, it is unsurprising that exposure to conspiracy theories, which heighten a sense of victimhood, perception of threat, group exceptionalism and outsider status, and which produce hypersensitive agency detection, can contribute to cognitive radicalization. However, the mediating role of these factors, and whether they are predictors or outcomes of deradicalization, is unclear. Despite this ambiguity, we consider these factors to be potential mediators of deradicalization, reasoning that the active intervention of counter-conspiratorial narratives may weaken the tendency to see one’s ingroup as especially deprived and an outgroup as a threat and the cause of the perceived grievance. This should decrease negativity towards the outgroup and the propensity for violence. Applied to the anti-Asian and anti-Chinese context in the US, this reasoning allows us to propose that counter-conspiracist intervention should, by acting on psychological factors such as perceived threat and relative victimhood, weaken individuals’ negative evaluations of East Asian and especially Chinese people, contributing to a decline in radicalized perspectives against these groups. Building on these insights, we specify the following research questions as an extension of our central hypothesis (H1).

RQ: Can the effect of the debunking treatment on individual adoption of radicalized sentiments be mediated by conspiratorial beliefs, competitive victimhood, realistic threat, and symbolic threat?

RQ1: Can the debunking treatment lead to reduced conspiracy belief, and does this lead to cognitive deradicalization?

RQ2: Can the debunking treatment lead to reduced competitive victimhood, and does this lead to cognitive deradicalization?

RQ3: Can the debunking treatment lead to a reduced sense of symbolic and realistic threat, and does this lead to cognitive deradicalization?
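One common way to formalize the mediation questions in RQ1 to RQ3 is the product-of-coefficients model for linear regressions with parallel mediators. The sketch below assumes that model; this section does not state which estimator the study used, and the symbols are illustrative: X is a hypothetical 0/1 indicator for one debunking treatment versus the control, M_1 to M_4 are the candidate mediators (conspiracy belief, competitive victimhood, realistic threat, symbolic threat), and Y is the radicalization score.

```latex
\begin{aligned}
M_j &= i_j + a_j X + e_{M_j}, \qquad j = 1, \dots, 4,\\
Y   &= i_Y + c' X + \sum_{j=1}^{4} b_j M_j + e_Y,\\
\text{indirect effect via } M_j &= a_j b_j, \qquad
c = c' + \sum_{j=1}^{4} a_j b_j .
\end{aligned}
```

Under this sketch, a treatment that lowers a mediator (a_j < 0) that is itself positively associated with radical attitudes (b_j > 0) yields a negative indirect effect a_j b_j, which is one way to read the claim that an effect is ‘negatively mediated’. With OLS estimation and no treatment-by-mediator interactions, the total effect c equals the direct effect c' plus the sum of the indirect effects; the significance of each a_j b_j is commonly assessed with bootstrap confidence intervals.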

Method

Sample

To explore the influence of debunking narratives on conspiracy belief among people with radical anti-Chinese sentiments, we designed a survey experiment. Our participants (N = 300) were recruited from Amazon Mechanical Turk (MTurk) in September 2020. Participants were American adults and were paid $3 for their participation. Our sample was 39% female, 77% white and 3.7% non-Chinese Asian. The median age of the sample was 34, and the median level of education was a 4-year college degree. Previous studies have substantiated the usefulness of MTurk for gauging public opinion about China in the US during Covid-19 [68].

Design and Procedure

This study adopted a between-subjects experimental design. The 300 participants were randomly assigned to one of three conditions (about 100 participants per condition, an adequate sample size that has been adopted in many previous media studies experiments, e.g., [55, 117]). In each condition, participants were exposed to a video that lasted approximately five minutes. The first and second conditions consisted of differing countermeasures to conspiracy theories that target China as a ‘hidden hand’ behind Covid-19. The final condition was a control condition in which no information was given. It should be noted that our selection of conspiracy theory intervention methods is representative but not exhaustive. Following the countermeasures, participants rated their belief in the conspiracy theory and their radical attitudes, and then answered a battery of questions measuring their feelings of threat (symbolic and realistic) and competitive victimhood.
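The random assignment of participants to conditions can be illustrated with a short sketch. It assumes a balanced scheme (shuffle the participant IDs, then deal them out round-robin so that each arm receives exactly 100 of the 300 participants); the text only says ‘about 100’ per condition, so the study may well have used simple randomization instead. The condition labels, the function name `assign_conditions`, and the seed are hypothetical.

```python
import random
from collections import Counter

# Hypothetical labels for the two treatment arms and the control arm.
CONDITIONS = ("inoculation", "disenchantment", "control")


def assign_conditions(participant_ids, seed=2020):
    """Balanced random assignment: shuffle IDs, then deal them round-robin.

    Returns a dict mapping each participant ID to one condition label.
    The fixed seed makes the assignment reproducible.
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: CONDITIONS[i % len(CONDITIONS)] for i, pid in enumerate(ids)}


if __name__ == "__main__":
    assignment = assign_conditions(range(1, 301))  # 300 participants
    # With round-robin dealing, each arm receives 300 / 3 = 100 participants.
    print(Counter(assignment.values()))
```

Replacing the round-robin step with an independent `rng.choice(CONDITIONS)` for each participant would give simple randomization, in which arm sizes vary around 100 rather than being exactly equal.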

The first condition was a content-based countermeasure belonging to the family of ‘inoculation strategies’. The corresponding stimulus contained a conspiracy theory about the coronavirus and China from the Alex Jones Show, a right-wing conspiracist program. In the show, Jones displays a number of printed news reports as proof of his ‘Wuhan lab’ conspiracy theory. However, the reports are either hypothetical or self-contradictory, and the final footage shot inside the Wuhan lab shows only normal laboratory activities. The rationale for selecting Alex Jones’s original show as the inoculation treatment (without dispelling its factual or logical errors) is two-fold. First, some previous studies have found that inoculation messages can induce resistance on both sides of the same issue [5]. For example, Pfau et al.’s [83] research showed that inoculation treatments successfully prevented persuasion both among participants who were against the legalization of marijuana and among those who favored it. This risk is particularly apparent with conspiracy theories, as overt correction efforts may be incorporated into a ‘meta-conspiracy’, i.e., the belief that the people and institutions who try to debunk conspiracy theories may themselves be part of the conspiracy. Accordingly, to avoid a ‘backfiring’ of the inoculation strategy, we adopted Alex Jones’s original show as the inoculation stimulus without providing additional debunking content.

Second, Alex Jones is a notorious conspiracy theorist in the United States whose social media accounts were permanently banned in 2018 by most major tech companies, including Twitter, Facebook, YouTube and Apple, for disseminating false and misleading content. Several defamation lawsuits have also been filed against him over his claim that the mass shooting at Sandy Hook Elementary School in Newtown was staged [21]. We therefore proposed that Jones’s discredited, shock-based storytelling might undermine the credibility of the information in his show, and that the combination of obviously flawed information and his aggressive, exaggerated and over-the-top hosting style might provoke participants’ intuitive aversion, skepticism and resistance, helping to prevent them from accepting similar conspiracy theories and radical views thereafter.

The second condition involved an audience-focused disenchantment approach. The corresponding stimulus was a “mini-lecture on conspiracy theories” in two parts. In the first half of the video, social psychologist Roland Imhoff discusses the nature and features of conspiracy theories and the mechanisms by which they act on people’s mindsets (e.g., What is a conspiracy theory? Why do people seek conspiracy messages during times of social and political uncertainty, such as a pandemic? Which groups are most vulnerable to conspiracy narratives? What are the negative consequences of conspiracy beliefs, especially during public health crises?). In the second half of the video, several widely circulated conspiracy theories about Covid-19 are debunked. This stimulus was expected to help people better understand the essence of conspiracy theories, breaking the ‘myth’ of conspiracy mindsets and giving individuals a sense of ‘knowing-ness’ and ‘control-ness’. The second part is supplementary to the first: it provides everyday examples of debunking, linking the knowledge in the first part to real-world cases and helping the audience follow the reasoning involved. While both parts of the video concern debunking, the first half is more knowledge-based, contextual and insightful; it plays the central role in deconstructing the rationale of conspiratorial narratives and thus drives the debunking process.

Three months prior to the main experiment, in August 2020, a pilot test was conducted to check the debunking effects of Alex Jones’s video and of an alternative approach (a mini-lecture on conspiracy theories). Participants (N = 90) were recruited from MTurk and randomly assigned to one of three conditions: the inoculation strategy, the mini-lecture on conspiracy theories, or a control condition. Following the manipulation, participants rated their beliefs about the conspiracy theory. The pilot test confirmed the debunking effects of both methods, as both stimuli mitigated conspiratorial belief (control group: M = 3.14, SD = 1.17; inoculation strategy using Alex Jones’s video: M = 2.78, SD = 1.14; mini-lecture strategy: M = 2.48, SD = 1.13). The mean differences between each treatment group and the control group were statistically significant. From this, we concluded that the treatments were adequate for use in the main experiment.
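The pilot results are reported as means and standard deviations with a significance verdict, but without effect sizes. As a hedged illustration, the sketch below converts those reported summary statistics into Cohen's d (the mean difference divided by the pooled standard deviation). The per-group size of 30 is an assumption (N = 90 split evenly across three conditions); the actual group sizes are not reported, although with equal groups the pooled SD, and hence d, does not depend on n.

```python
import math

def cohens_d(m1, s1, n1, m2, s2, n2):
    """Standardized mean difference (group 1 minus group 2) using the pooled SD."""
    pooled_var = ((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2)
    return (m1 - m2) / math.sqrt(pooled_var)

# Reported pilot means and SDs; n = 30 per group is an ASSUMPTION.
control = (3.14, 1.17, 30)
inoculation = (2.78, 1.14, 30)
lecture = (2.48, 1.13, 30)

d_inoc = cohens_d(*control, *inoculation)   # control minus inoculation
d_lect = cohens_d(*control, *lecture)       # control minus mini-lecture
print(round(d_inoc, 2), round(d_lect, 2))   # 0.31 0.57
```

Positive values mean lower conspiracy belief in the treatment group; under these assumptions the mini-lecture's pilot effect is roughly twice as large as the inoculation video's.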

Measurement

Dependent Variable

The Radicalism Intention Scale in the present study is an 8-item measure revised from the Activism-Radicalism Intention Scale (ARIS; [77]). The Radicalism Intention Scale examines radicalized sentiment (e.g., “I would participate in a public protest against oppression of my group even if I thought the protest might turn violent”). Each item is rated on a 5-point Likert scale ranging from 1 (strongly disagree) to 5 (strongly agree), with 3 as “neutral.” The original 10-item ARIS includes two subscales: one measuring attitudes towards activism (5 items) and the other measuring radical attitudes (5 items). Our Radicalism Intention Scale adopts only the “radicalism” component of the ARIS, which we restructured into two groups: one containing items tailored to a China-specific context (e.g., “I feel that some Chinese just deserve to be beaten if that can protect the rights of my group”), and the other containing items pertaining to a general, non-ethnic-specific context (Cronbach’s α = 0.94, M = 2.80, SD = 1.14). Footnote 1
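Each scale in this section is summarized by Cronbach's α and a mean score. A minimal sketch of how both can be computed from raw Likert responses follows. It assumes the composite score is the item mean, which the reported M = 2.80 on the 1 to 5 metric suggests but the text does not state outright; the four-respondent toy data set is invented purely for illustration.

```python
from statistics import mean, variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    item_columns = list(zip(*responses))                  # one tuple of scores per item
    sum_item_var = sum(variance(col) for col in item_columns)
    total_var = variance([sum(r) for r in responses])     # variance of the summed score
    return k / (k - 1) * (1 - sum_item_var / total_var)

def scale_score(response):
    """Composite score as the item mean, so it stays on the 1-5 Likert metric."""
    return mean(response)

# Hypothetical responses from four people to a 3-item scale (not study data).
toy = [[1, 2, 1], [2, 2, 3], [4, 5, 4], [5, 4, 5]]
alpha = cronbach_alpha(toy)
print(round(alpha, 2))                            # 0.95
print([round(scale_score(r), 2) for r in toy])    # [1.33, 2.33, 4.33, 4.67]
```

Sample and population variances give the same α, because the (n - 1) or n divisor cancels in the ratio, as long as the same one is used for items and total.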

Mediators

The measure of symbolic threat was a 3-item self-report questionnaire [98], adapted to the context of Chinese people in America. A typical item was: “American identity is threatened because there are too many Chinese people today” (Cronbach’s α = 0.92, M = 2.80, SD = 1.27). The measure of realistic threat, modified from the scale by Stephan et al. [98], included five statements assessing the perceived effects of the Chinese on the economic situation in the U.S., e.g., “To be able to compete with the Chinese, we will lose our social security” (Cronbach’s α = 0.94, M = 2.84, SD = 1.21). For both symbolic and realistic threat, responses ranged from 1 (strongly disagree) to 5 (strongly agree). The symbolic threat scale [98] has shown good reliability in previous studies (0.80 [42]; 0.82 to 0.86 [24]). Similarly, the realistic threat scale has shown high reliability in previous research (0.89 [42]; 0.85 to 0.90 [24, 25]).

The competitive victimhood scale was adapted from the work of Noor et al. [79] to the US Covid-19 context. The scale was a 3-item self-report questionnaire measuring an individual’s tendency to see their group as having suffered more or less than an outgroup (e.g., “On average, in this Covid-19 pandemic, those who think the same as we do about China have been harmed more than those who do not agree with us about China”). Responses ranged from 1 (strongly disagree) to 5 (strongly agree) (Cronbach’s α = 0.86, M = 3.09, SD = 1.00).

The conspiracy beliefs scale measured an individual’s conspiratorial belief relating to China and Chinese people with four items on a scale of 1 (strongly disagree) to 5 (strongly agree); two items were coronavirus-focused, e.g., “The Chinese government and state-owned research institutions have falsified or covered up the real origins of COVID-19 to serve their own selfish ends”; the other two were more general, e.g., “China and Chinese Americans are often involved in secret plots and schemes intended to destabilize this country” (Cronbach’s α = 0.89, M = 3.10, SD = 1.10).

Demographics

Participants provided their demographic details, consisting of sex, age, party identification, political orientation, and highest educational qualification.

Results

Before presenting our main results, we note that the mean value of the Radicalism Intention Scale for the control group was 3.00 (SD = 1.11), higher than those of the two treatment groups (M = 2.73, SD = 1.12 for the content-based countermeasure group; M = 2.65, SD = 1.16 for the audience-focused countermeasure group). In other words, participants who received no debunking stimulus averaged at the scale’s neutral midpoint, which we interpret as an already partially radicalized psychological condition.

Next, the effects of the two countermeasures on each item of the Radicalism Intention Scale were tested with ordinary least squares (OLS) regressions, with the two countermeasure groups coded as two dummy variables (the control group serving as the reference) and each item’s score as the dependent variable. As shown in Table 1, the content-focused intervention did not significantly change the values of most items relative to the control group, whereas the human-focused method significantly lowered the values of 6 of the 8 items, with Item 4 marginally missing the threshold (p = 0.12, slightly above 0.10). Following the conventions of similar studies [77], the aggregate of Items 1–8 was used as the value of the Radicalism Intention Scale in the subsequent analysis.
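With three groups, an intercept plus two dummy variables identifies every group mean, with the control group as the reference category. The sketch below fits OLS through the normal equations in plain Python on invented item scores and shows that each dummy coefficient equals that treatment group's mean minus the control mean. It omits covariates (the text does not say whether the item-level models included any), and the `ols` and `dummy_row` helpers and group labels are hypothetical.

```python
def ols(X, y):
    """OLS coefficients via the normal equations (X'X) b = X'y, solved by Gauss-Jordan."""
    p = len(X[0])
    A = [[sum(r[i] * r[j] for r in X) for j in range(p)]
         + [sum(r[i] * v for r, v in zip(X, y))] for i in range(p)]
    for col in range(p):
        piv = max(range(col, p), key=lambda r: abs(A[r][col]))  # partial pivoting
        A[col], A[piv] = A[piv], A[col]
        pivot = A[col][col]
        A[col] = [v / pivot for v in A[col]]
        for r in range(p):
            if r != col:
                f = A[r][col]
                A[r] = [a - f * c for a, c in zip(A[r], A[col])]
    return [row[p] for row in A]

def dummy_row(group):
    # Intercept, then one indicator per treatment; "control" is the reference.
    return [1.0, 1.0 if group == "content" else 0.0, 1.0 if group == "human" else 0.0]

# Hypothetical 1-5 item scores, not data from the study.
data = [("control", 3), ("control", 4), ("control", 2),
        ("content", 3), ("content", 2), ("content", 3),
        ("human", 2), ("human", 1), ("human", 3)]
X = [dummy_row(g) for g, _ in data]
y = [float(s) for _, s in data]
b0, b_content, b_human = ols(X, y)
print(round(b0, 3), round(b_content, 3), round(b_human, 3))  # 3.0 -0.333 -1.0
```

Here the control mean is 3, the content mean 8/3 and the human-focused mean 2, so the dummy coefficients are -1/3 and -1: negative coefficients mean lower item scores than in the control group.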

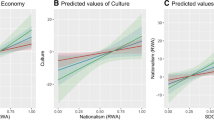

To test H1 and answer RQ1–RQ3, we used PROCESS [43], an SPSS macro that facilitates mediation analysis based on OLS regressions, and ran a multiple mediation analysis using Model 4. In total, six regression models were estimated. Model 1 was the total effect model, with the Radicalism Intention Scale as the dependent variable and the experimental treatment variables as the independent variables (coded as two dummy variables to distinguish among the three groups: the content-focused countermeasure group, the human-focused countermeasure group, and the control group). Model 2 was the direct effect model, with the Radicalism Intention Scale as the dependent variable and the four mediators (M1: conspiracy belief scale; M2: competitive victimhood scale; M3: measure of symbolic threat; M4: measure of realistic threat) added as independent variables alongside the experimental treatment variables. Models 3, 4, 5, and 6 were used to analyze the indirect effects via M1, M2, M3, and M4, respectively: each took the corresponding mediator as the dependent variable and the experimental treatment variables as the independent variables. All six models controlled for a common set of covariates: gender, age, ethnic background, education level, income level, and party affiliation. Table 2 summarizes the estimation results for the six models.
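PROCESS Model 4 estimates a path from the treatment to each mediator (a), paths from each mediator to the outcome controlling for treatment (b) and the direct effect (c'); each specific indirect effect is the product a × b, and PROCESS reports bootstrap confidence intervals for these products. The plain-Python sketch below reproduces that logic for a simplified case (one binary treatment, two parallel mediators, no covariates) on simulated data. It is not PROCESS itself, and the helper names, simulated effect sizes, sample size and number of bootstrap resamples are all assumptions.

```python
import random

def ols(X, y):
    """OLS coefficients via the normal equations, solved by Gauss-Jordan elimination."""
    p = len(X[0])
    A = [[sum(r[i] * r[j] for r in X) for j in range(p)]
         + [sum(r[i] * v for r, v in zip(X, y))] for i in range(p)]
    for col in range(p):
        piv = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        pivot = A[col][col]
        A[col] = [v / pivot for v in A[col]]
        for r in range(p):
            if r != col:
                f = A[r][col]
                A[r] = [a - f * c for a, c in zip(A[r], A[col])]
    return [row[p] for row in A]

def parallel_mediation(x, mediators, y):
    """Total (c), direct (c') and specific indirect (a_j * b_j) effects of X."""
    with_x = [[1.0, xi] for xi in x]
    total = ols(with_x, y)[1]                               # Y ~ X
    a = [ols(with_x, m)[1] for m in mediators]              # M_j ~ X
    full = [[1.0, xi] + [m[i] for m in mediators] for i, xi in enumerate(x)]
    coef = ols(full, y)                                     # Y ~ X + M_1..M_k
    direct, b = coef[1], coef[2:]
    return total, direct, [aj * bj for aj, bj in zip(a, b)]

def bootstrap_ci(x, mediators, y, reps=500, seed=1):
    """Percentile bootstrap 95% CI for each indirect effect (resampling participants)."""
    rng = random.Random(seed)
    n = len(x)
    draws = [[] for _ in mediators]
    for _ in range(reps):
        idx = [rng.randrange(n) for _ in range(n)]
        _, _, ind = parallel_mediation([x[i] for i in idx],
                                       [[m[i] for i in idx] for m in mediators],
                                       [y[i] for i in idx])
        for j, v in enumerate(ind):
            draws[j].append(v)
    cis = []
    for d in draws:
        d.sort()
        cis.append((d[int(0.025 * reps)], d[int(0.975 * reps) - 1]))
    return cis

# Simulated (hypothetical) data: treatment lowers both mediators, which raise Y.
rng = random.Random(2020)
n = 200
x = [i % 2 for i in range(n)]                           # 1 = treatment, 0 = control
m1 = [-0.5 * xi + rng.gauss(0, 1) for xi in x]          # e.g. conspiracy belief
m2 = [-0.4 * xi + rng.gauss(0, 1) for xi in x]          # e.g. perceived threat
y = [0.3 * u + 0.4 * v + rng.gauss(0, 1) for u, v in zip(m1, m2)]

total, direct, indirect = parallel_mediation(x, [m1, m2], y)
cis = bootstrap_ci(x, [m1, m2], y)
print(round(total, 3), round(direct, 3), [round(v, 3) for v in indirect], cis)
```

Because the mediator models and the outcome models share the same predictors apart from the mediators, total = direct + sum of indirect effects holds exactly in OLS, which is a useful sanity check on any mediation output.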

Our first hypothesis concerned whether debunking conspiratorial information can mitigate radicalization or cause deradicalization. H1a predicted that the content-focused method of debunking conspiracy theory would mitigate radicalization and potentially result in deradicalization. This effect was not significant in Model 1 (for content-focused, B = -0.22, SE = 0.15, p = 0.134), so H1a was not supported. H1b predicted that the human-focused method of debunking conspiracy theory would mitigate radicalization and result in deradicalization. Consistent with this hypothesis, the effect was significant (in Model 1, for human-focused, B = -0.32, SE = 0.15, p = 0.028): after controlling for the covariates, participants who watched the video explaining how conspiracy theories work showed a significantly lower level of radicalization than the control group. Thus, H1b was supported.

Our related research questions asked whether the effect of debunking conspiracy theory on individual adoption of radicalized sentiments is mediated by conspiracist belief, competitive victimhood, or realistic and symbolic threats. RQ1 asked whether debunking approaches can lead to reduced conspiracist belief, and whether this leads to cognitive deradicalization. The mediation effect was significant for the human-focused method of debunking conspiracy theory but not for the content-focused method (in Model 3, for content-focused, B = -0.07, SE = 0.14, p = 0.649; for human-focused, B = -0.42, SE = 0.14, p = 0.004; and in Model 2, for M1 [conspiracy belief scale], B = 0.13, SE = 0.05, p = 0.010). RQ2 asked whether debunking approaches can lead to reduced competitive victimhood, and whether this leads to cognitive deradicalization. Again, the mediation effect was significant for the human-focused method but not for the content-focused method (in Model 4, for content-focused, B = -0.22, SE = 0.14, p = 0.101; for human-focused, B = -0.37, SE = 0.13, p = 0.006; and in Model 2, for M2 [competitive victimhood scale], B = 0.22, SE = 0.05, p < 0.001). RQ3 asked whether debunking methods can lead to a reduced sense of symbolic and realistic threat, and whether this leads to cognitive deradicalization. The mediation effects were significant for the human-focused method but not for the content-focused method (in Model 5, for content-focused, B = -0.20, SE = 0.17, p = 0.227; for human-focused, B = -0.43, SE = 0.16, p = 0.009; in Model 6, for content-focused, B = -0.25, SE = 0.16, p = 0.117; for human-focused, B = -0.43, SE = 0.15, p = 0.006; and in Model 2, for M3 [measure of symbolic threat], B = 0.23, SE = 0.07, p = 0.002; for M4 [measure of realistic threat], B = 0.32, SE = 0.08, p < 0.001).

Table 3 summarizes the total, direct and indirect effects of the independent variables (i.e., the two experimental treatment variables: content-focused and human-focused) on the dependent variable (i.e., the Radicalism Intention Scale), while Figs. 1 and 2 present conceptual diagrams of the effects of the two types of debunking measures on the radicalization level via the four mediators. For both content-focused and human-focused countermeasures, there was no statistically significant direct effect on the radicalization level (in Model 2, for content-focused, B = -0.04, SE = 0.08, p = 0.618; for human-focused, B = 0.05, SE = 0.08, p = 0.550). The indirect effects via all four mediators were significant for the human-focused countermeasure; none of these indirect effects were significant for the content-focused countermeasure.
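The reported coefficients can be checked for internal consistency: when all models share the same covariates, as stated for Models 1 to 6, the OLS total effect equals the direct effect plus the sum of the a × b products. The short calculation below uses only the rounded coefficients reported in the text; the dictionary keys are labels chosen here for readability.

```python
# Rounded coefficients as reported in the text; keys are illustrative labels.
b_paths = {"conspiracy": 0.13, "victimhood": 0.22,        # Model 2: mediator -> radicalism
           "symbolic": 0.23, "realistic": 0.32}
a_paths = {                                               # Models 3-6: treatment -> mediator
    "content": {"conspiracy": -0.07, "victimhood": -0.22, "symbolic": -0.20, "realistic": -0.25},
    "human":   {"conspiracy": -0.42, "victimhood": -0.37, "symbolic": -0.43, "realistic": -0.43},
}
direct = {"content": -0.04, "human": 0.05}                # Model 2: treatment -> radicalism
reported_total = {"content": -0.22, "human": -0.32}       # Model 1

implied = {}
for arm in ("content", "human"):
    indirect = sum(a_paths[arm][m] * b_paths[m] for m in b_paths)  # sum of a*b products
    implied[arm] = direct[arm] + indirect                          # c = c' + sum(a*b)
    print(f"{arm}: indirect {indirect:.3f}, implied total {implied[arm]:.3f}, "
          f"reported total {reported_total[arm]:.2f}")
```

The implied totals come out at roughly -0.22 (content-focused) and -0.32 (human-focused), matching Model 1 to within rounding, so the reported direct and indirect effects are consistent with the reported total effects.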

Discussion

Owing to the dramatic impact of the Covid-19 pandemic and the difficulty of explaining the outbreak’s cause, people who suffered from agency panic and felt a lack of control became more vulnerable to conspiratorial information and thinking that could lead to radicalized views. The present study suggests that debunking approaches can moderate or even reverse the tendency to adopt radicalized views toward Chinese people during the Covid-19 pandemic in the US. The human-focused disenchantment treatment produced a significant deradicalization effect, whereas the inoculation approach did little more than prevent further intensification of attitudes. The study tested a mediation model in which the effect of debunking methods on people’s tendency to adopt more radicalized attitudes was mediated by conspiracy beliefs, perceived threat, and competitive victimhood. The results generally support our predictions, with some unexpected yet crucial findings.

First, the results support the hypothesis that debunking conspiracist information can mitigate radicalization or even result in deradicalization. The Radicalism Intention Scale, used as the dependent variable in our experiment, was abridged from the Activism-Radicalism Intention Scale [77]. Adopting only the more extreme (radicalism) part of the ARIS, this study found that participants in the control condition, who were not given any (counter-)conspiracy information stimulus, already showed a partially radicalized psychological condition. Because participants were randomly assigned to conditions, we can assume that the groups receiving the inoculation and disenchantment treatments shared a similar baseline readiness to adopt radicalized sentiments. Given that subjects were already receptive to some radicalized sentiments, this study finds that media treatments debunking conspiracy theories can moderate or even reverse the attitudinal shift towards radicalism, reducing commitment to violent means.

Second, the results suggest that the content-focused approach to debunking conspiracy theory (i.e., the inoculation strategy) can prevent the intensification of radicalization. Our pilot study included a component testing participants’ attitudes towards the credibility of Alex Jones, measuring the extent to which they believed his stories and theories to be fact-based and truthful. The pilot test recorded low credibility (M = 1.65, SD = 1.02; 1 = very incredible, 5 = very credible). Low credibility of the speaker is a crucial condition of the inoculation approach, because an audience is assumed to be inherently resistant to any theories offered by a speaker seen as untrustworthy and unreliable. More importantly, the video stimulus, featuring obvious fabrication of evidence presented in a high-flown and provocative manner by a notorious host, constitutes a kind of over-stimulation of the audience. This over-stimulation, over-reaction and hyped-up performance, aimed at instilling conspiracist beliefs, drive the semantic frame of the conspiracy theory to the point of reversal, where the audience becomes reserved towards, inattentive to, and resistant to the conspiracist information, inhibiting a possible move toward more radicalized views. However, our results also showed that preventing increased radicalization by cultivating inattention and lack of interest in the conspiracy theory did not produce cognitive deradicalization.

Notably, the baseline in our survey, before intervention, was one of relatively high acceptance of radicalized sentiments. In this context, the inoculation treatment only slightly reduced commitment to radical views. The unusually high level of radicalism that we found may be due to domestic and international events at the time of the study. Domestically, the survey was conducted just days before the 2020 presidential election, which was perhaps the most polarized in US history. Internationally, US-China relations had sunk to their lowest level since normalization, a decline accelerated by the Covid-19 pandemic and years of anti-China propaganda during the Trump administration. This pattern suggests a ‘hit-the-floor’ theory: once a society has been politically mobilized to a tipping point of violence, the ability of a conspiracy theory to fan radicalized attitudes may be diminished, which may in turn prevent further intensification.

Third, the results demonstrate that the audience-focused method (i.e., disenchantment with conspiracy theory) can provoke a cognitive change that reverses radicalization. A conspiracy theory invents a fantasy object as a source of threat, presumably in order to restore the sense of security people seek in times of political uncertainty. The disenchantment treatment decodes the mechanisms of conspiracist narratives, through which illusions are cognitively projected onto the real world. By deconstructing this mechanism of retrospective determination, the treatment can mitigate conspiracist beliefs, helping to dissuade individuals from seeing conspiracy theories as naturally occurring. This interpretation is supported by our results, which show that audience-focused disenchantment with conspiracy theories reduces conspiracist belief, which in turn leads to cognitive deradicalization.

The results also suggest that the effects of counter-conspiracy measures on the adoption of radicalized sentiment are mediated by competitive victimhood, realistic threat and symbolic threat. Audience-focused disenchantment with conspiracy theories weakened feelings of competitive victimhood, and this weakened victimhood contributed to individuals’ reduced commitment to radicalized views. In a similar fashion, the audience-focused disenchantment treatment led to a reduced sense of symbolic and realistic threat, which in turn led to cognitive deradicalization. Decoding the myth or mechanism of conspiracy theories helps restore the audience’s feelings of self-efficacy and self-control, while discouraging the need to make an abject other a source of threat or a justification for self-perceived victimhood. This reduces the need to embrace all-encompassing exclusionary ideologies and lowers the entitativity and cognitive closure that differentiate ingroup from outgroup.

While this study finds the disenchantment method to be effective in deradicalization, it finds no significant effect of the inoculation treatment on deradicalization or on the associated mediating factors. This is consistent with the existing idea that the inoculation approach’s mechanism lies in people’s engagement in ‘identity-protective motivated reasoning’. Accordingly, inoculation is likely to be less effective for the sub-population of ‘firm conspiracy believers’, who might absorb any conspiracy discourse (even one containing obvious logical fallacies) and integrate it into their pre-existing conspiratorial worldviews.

In conclusion, while existing literature predicts that conspiracy theory can lead to radicalization [64], our results advance the theory of conspiracy-radicalization dynamics, suggesting that, during critical events such as the Covid-19 pandemic, exposure to countermeasures to conspiracist information can reduce radicalism. The results reveal more complicated relationships between (counter-)conspiracy theory and (de)radicalization than predicted. Specifically, the content-focused approach to debunking conspiracy theory (i.e., the inoculation strategy) can prevent the intensification of radicalization without producing a significant deradicalization effect, whereas the audience-focused method (i.e., disenchantment with conspiracy theory) can facilitate a cognitive change and reverse radicalization. Further, the study finds that the disenchantment strategy’s influence on an individual’s adoption of radicalized sentiment is mediated by competitive victimhood, realistic threat and symbolic threat, a finding that bridges previously separate discussions of the psychological factors leading to (de)radicalization and the cognitive influences of conspiracy theory.

This study is an attempt to demonstrate causality between countermeasures to conspiracy theories and deradicalization. It not only contributes to our knowledge of conspiracy theories, but also addresses what we can do about the problem, i.e., developing possible solutions to the kind of misinformation-facilitated radicalization that has caused significant rifts in society and driven international relations into a downward spiral. However, several possible limitations of the measurements used in this study should be noted.

First, this study used a conventional five-point scale to measure conspiracy beliefs, featuring two options to disagree, two to agree, and a neutral option. The potential limitation of this measurement practice is that it may inflate estimates of specific conspiracy beliefs, especially for people who are low in political knowledge or cognitive reflection [20, 100]. To achieve a more accurate assessment of people’s conspiratorial endorsement, Clifford et al. [20] have proposed an improved question format, which presents respondents with an explicit choice between a conspiratorial and a conventional explanation for an event, and provides a no-opinion response option. Further work is needed to improve the validity of conspiracy belief measurement when examining the ‘conspiracy theory-radicalism’ dynamics.

Second, some of the specific radicalization measures risk seeming hypothetical to respondents who do not hold radical anti-China views in the first place. Further work on this topic may consider broadening the response options to ensure that non-radical participants are well represented. Third, the survey measured the key dependent variable only once. As such, it could not examine whether radicalized views changed over time; it can only compare individual attitudes across conditions. Since radicalization is a product of complex, changing psychological conditions, further work may seek clearer evidence of change, which might further complicate the relationship between conspiracy beliefs and radicalization. Fourth, regarding the media content used in the audience-focused disenchantment approach, we acknowledge the risk that the videos introduced too much “noise”, which might have disturbed the debunking effect. Moreover, future research could investigate the effects of other factors contributing to radicalization, such as uncertainty and a quest for significance, which were not explored in the present study.

In addition, we should emphasize that threat perception and prejudice are not the same; rather, threat perception is frequently, but not solely, generated or exacerbated by prejudice. For example, some people may perceive an existential threat from China because they hold biased or unbalanced views of China. In other words, some people are biased and subscribe to these threat perceptions because they tally with a pre-existing conviction that the Chinese are devious, while others may view China as a geostrategic threat or competitor. Thus, prejudice can inflate threat perception [14]. Prejudice also involves a number of other psychological factors, such as, in the present context, competitive victimhood and conspiracy beliefs. Competitive victimhood is produced by perceived social, political or racial injustice towards one’s group and by perceptions of one’s group status relative to other groups. People’s pre-existing attitudes and beliefs (e.g., racism, prejudice, collective narcissism) predict endorsement of outgroup conspiracy theories [19]. The present study explored these specific operationalized factors (threat, victimhood and conspiracy beliefs), which are intimately related to anti-China prejudice in a context in which radicalization is enabled.

Meanwhile, the current project has implications beyond the COVID-19 pandemic and anti-Chinese sentiment. The COVID-19 pandemic has intensified tensions in China’s relations with the major Western countries [51, 81], amplified nationalist ideologies and strategies at home and abroad [38, 122], and accelerated the transformation of the global economic order [3, 12, 110]. Republican hawkishness toward China during the Trump era has triggered deep anxieties and fear of a potentially complete collapse in US–China relations (for hawkish tendencies in US public opinion on COVID-19, see [68]; for a survey of Chinese opinion on COVID-19, see [119]). In the post-COVID era, Biden’s fixation on reversing polarization at home may lead him to consolidate the image of a shared enemy abroad, particularly when Democrats and Republicans agree on virtually nothing but the “China threat”. Although problematizing and confronting China may serve as a soothing balm for partisan and social polarization in the US after COVID-19, it could trap the Biden administration in a downward spiral of worsening relations with China and risk heightened radicalization of anti-China sentiment at home. The domestic need for political reconciliation will unfortunately provide both an emotional base and a political incentive for the growth of anti-China conspiracy theories, which are likely to be embedded in a state project of internal unity. The entangling of conspiracy theories with foreign hostility produces ideological rigidity in foreign-policy views at best; at worst, it can turn conspiratorial views into actual international aggression.

A critical assessment of these possible consequences will help build a dialogue with studies on U.S.-China competition beyond COVID-19 and provide a more sophisticated and nuanced account of the logic of the US’s China strategy [94, 120]. While this paper focuses on American opinion of China, comparisons with other regions [see, for example, 89, 109] or between different social groups [44] would be particularly fruitful, given the variation in how citizens of different countries perceive China’s role in the pandemic [82]. Our findings also suggest a potentially useful approach to developing counter-conspiracy tools for non-state-centric security governance in COVID-19 and beyond [15].

Moreover, it should be noted that the pilot test and the main experiment yielded disparate results for the inoculation treatment. This was unexpected, but not unreasonable given the extraordinary socio-political context in which the pilot test and the experiment took place. The inconsistency may be explained by the fast-changing situation and the partisan-driven social conflict that intensified as the presidential election approached, such that the polarization of political positions and ideological orientations compromised the effects of the inoculation strategy.

Finally, we acknowledge that psychological interventions such as the one tested here may not make a lasting contribution to societal improvement. Rather, acknowledging the limits of our understanding and fostering good-faith debates about facts is the goal we should all embrace. Multiple actors with multiple agendas push for or against certain narratives, but honest scientific admission of our current inability to resolve this question is almost absent. Against this backdrop, we should stand united in the pursuit of truth.

Notes

It should be acknowledged that several of the specific radicalization measures were worded such that they might read as hypothetical questions to some respondents. Further work is needed to improve the validity of radicalism measurement by revising the question format.

References

Abalakina-Paap, Marina, Walter G. Stephan, Traci Craig, and W. Larry Gregory. 1999. Beliefs in conspiracies. Political Psychology 20 (3): 637–647.

Ajil, Ahmed. 2020. Politico-ideological violence: Zooming in on grievances. European Journal of Criminology. https://doi.org/10.1177/1477370819896223.

Albertoni, Nicolas, and Carol Wise. 2020. International trade norms in the age of Covid-19 nationalism on the rise? Fudan Journal of the Humanities and Social Sciences 14: 41–66.

Baden, Christian, and Tzlil Sharon. 2020. Blinded by the lies? Toward an integrated definition of conspiracy theories. Communication Theory. https://doi.org/10.1093/ct/qtaa023.

Banas, John A., and Gregory Miller. 2013. Inducing resistance to conspiracy theory propaganda: Testing inoculation and metainoculation strategies. Human Communication Research 39: 184–207.

Banas, John A., and Stephen A. Rains. 2010. A meta-analysis of research on inoculation theory. Communication Monographs 77 (3): 281–311.

Barrett, Robert S. 2011. Interviews with killers: Six types of combatants and their motivations for joining deadly groups. Studies in Conflict & Terrorism 34 (10): 749–764.

Bartlett, Jamie, and Carl Miller. 2010. The Power of Unreason: Conspiracy Theories, Extremism and Counter-Terrorism. London: Demos.

Bennett, W. Lance, and Steven Livingston. 2018. The disinformation order: Disruptive communication and the decline of democratic institutions. European Journal of Communication 33 (2): 122–139.

Bloom, Mia M. 2004. Palestinian suicide bombing: Public support, market share and outbidding. Political Science Quarterly 119: 61–88.

Botha, Anneli. 2014. Political socialization and terrorist radicalization among individuals who joined al-Shabaab in Kenya. Studies in Conflict & Terrorism 37 (11): 895–919.

Boylan, Brandon M., Jerry McBeath, and Bo Wang. 2021. US–China relations: Nationalism, the trade war, and COVID-19. Fudan Journal of the Humanities and Social Sciences 14: 23–40.

Brotherton, Rob. 2015. Suspicious Minds: Why We Believe Conspiracy Theories. New York: Bloomsbury.

Búzás, Zoltán I. 2013. The color of threat: Race, threat perception, and the demise of the Anglo-Japanese Alliance (1902–1923). Security Studies 22 (4): 573–606.

Caballero-Anthony, Mely, and Lina Gong. 2021. Security governance in East Asia and China’s response to COVID-19. Fudan Journal of the Humanities and Social Sciences 14: 153–172.

Carey, John M., Victoria Chi, D.J. Flynn, Brendan Nyhan, and Thomas Zeitzoff. 2020. The effects of corrective information about disease epidemics and outbreaks: Evidence from Zika and yellow fever in Brazil. Science Advances 6: eaaw7449.

Castano, Emanuele, and Roger Giner-Sorolla. 2006. Not quite human: Infrahumanization in response to collective responsibility for intergroup killing. Journal of Personality and Social Psychology 90: 804–818.

Chan, Man-pui Sally, Christopher R. Jones, Kathleen Hall Jamieson, and Dolores Albarracín. 2017. Debunking: A meta-analysis of the psychological efficacy of messages countering misinformation. Psychological Science 28: 1531–1546.

Cichocka, Aleksandra, Marta Marchlewska, and Agnieszka Golec de Zavala. 2016. Does self-love or self-hate predict conspiracy beliefs? Narcissism, self-esteem, and the endorsement of conspiracy theories. Social Psychological and Personality Science 7 (2): 157–166.

Clifford, Scott, Yongkwang Kim, and Brian W. Sullivan. 2019. An improved question format for measuring conspiracy beliefs. Public Opinion Quarterly 83 (4): 690–722.

CNN. 2018. “Six More Sandy Hook Families Sue Broadcaster Alex Jones”. Retrieved from https://edition.cnn.com/2018/05/23/us/alex-jones-sandy-hook-suit/index.html.

Compton, Josh, and Michael Pfau. 2008. Inoculating against pro-plagiarism justifications: Rational and affective strategies. Journal of Applied Communication Research 36: 98–119.

Craft, Stephanie, Seth Ashley, and Adam Maksl. 2017. News media literacy and conspiracy theory endorsement. Communication and the Public 2 (4): 388–401.

Croucher, Stephen M. 2013. Integrated threat theory and acceptance of immigrant assimilation: An analysis of Muslim immigration in Western Europe. Communication Monographs 80: 46–62.

Croucher, Stephen M., Johannes Aalto, Sarianne Hirvonen, and Melodine Sommier. 2013. Integrated threat and intergroup contact: An analysis of Muslim immigration to Finland. Human Communication 16: 109–120.

De Goede, Marieke, and Stephanie Simon. 2013. Governing future radicals in Europe. Antipode 45 (2): 315–335.

Del Vicario, Michela, Gianna Vivaldo, Alessandro Bessi, Fabiana Zollo, Antonio Scala, Guido Caldarelli, and Walter Quattrociocchi. 2016. Echo chambers: Emotional contagion and group polarization on Facebook. Scientific Reports 6: 37825.

Doosje, Bertjan, Annemarie Loseman, and Kees van den Bos. 2013. Determinants of radicalization of Islamic youth in the Netherlands: Personal uncertainty, perceived injustice, and perceived group threat. Journal of Social Issues 69 (3): 586–604.

Doosje, Bertjan, Fathali M. Moghaddam, Arie W. Kruglanski, Arjan de Wolf, Liesbeth Mann, and Allard Rienk Feddes. 2016. Terrorism, radicalization and de-radicalization. Current Opinion in Psychology 11: 79–84.

Douglas, Karen M., and Ana C. Leite. 2017. Suspicion in the workplace: Organizational conspiracy theories and work-related outcomes. British Journal of Psychology 108: 486–506.

Douglas, Karen M., Joseph Uscinski, Robbie M. Sutton, Aleksandra Cichocka, Turkay Nefes, Chee Siang Ang, and Farzin Deravi. 2019. Understanding conspiracy theories. Advances in Political Psychology 40 (1): 3–35.

Douglas, Karen M., Robbie M. Sutton, Daniel Jolley, and Michael J. Wood. 2015. The social, political, environmental and health-related consequences of conspiracy theories: Problems and potential solutions. In The Psychology of Conspiracy, ed. M. Bilewicz, A. Cichocka, and W. Soral. Abingdon: Taylor & Francis.

Einstein, Katherine L., and David M. Glick. 2015. Do I think BLS data are BS? The consequences of conspiracy theories. Political Behavior 37 (3): 679–701.

Enders, Adam M., and Steven M. Smallpage. 2019. Informational cues, partisan-motivated reasoning, and the manipulation of conspiracy beliefs. Political Communication 36 (1): 83–102.

Enders, Adam M., and Joseph E. Uscinski. 2021. Are misinformation, antiscientific claims, and conspiracy theories for political extremists? Group Processes & Intergroup Relations 24 (4): 583–605.

Farrell, J., K. McConnell, and R. Brulle. 2019. Evidence-based strategies to combat scientific misinformation. Nature Climate Change 9: 191–195.

Georgiou, Neophytos, Paul Delfabbro, and Ryan Balzan. 2020. Covid-19-related conspiracy beliefs and their relationship with perceived stress and pre-existing conspiracy beliefs. Personality and Individual Differences 166: 110201.

Givens, John Wagner, and Evan Mistur. 2021. The sincerest form of flattery: Nationalist emulation during the COVID-19 pandemic. Journal of Chinese Political Science 26: 213–234.

Goertzel, Ted. 1994. Belief in conspiracy theories. Political Psychology 15 (4): 731–742.

Goldberg, Robert A. 2004. Who profited from the crime? Intelligence failure, conspiracy theories, and the case of September 11. Intelligence and National Security 19 (2): 249–261.

Golob, T., M. Makarovič, and M. Rek. 2021. Meta-reflexivity for resilience against disinformation. [Meta-reflexividad para la resiliencia contra la desinformación]. Comunicar 66: 107–118.

Gonzalez, Karina Velasco, Maykel Verkuyten, Jeroen Weesie, and Edwin Poppe. 2008. Prejudice towards Muslims in the Netherlands: Testing integrated threat theory. British Journal of Social Psychology 47: 667–685.

Hayes, Andrew F. 2013. Introduction to mediation, moderation, and conditional process analysis. New York: Guilford Press.

He, Zhining, and Zhe Chen. 2021. The social group distinction of nationalists and globalists amid COVID-19 pandemic. Fudan Journal of the Humanities and Social Sciences 14: 67–85.

Hellinger, Daniel C. 2019. Conspiracies and Conspiracy Theories in the Age of Trump. Cham: Springer.

Hofstadter, Richard. 1964. The paranoid style in American politics. Harper's Magazine: 77–86.

Horgan, John. 2005. The Psychology of Terrorism. New York: Routledge.

Horgan, John, and Kurt Braddock. 2010. Rehabilitating the terrorists? Challenges in assessing the effectiveness of de-radicalisation programs. Terrorism and Political Violence 22 (2): 267–291.

Imhoff, R., L. Dieterle, and P. Lamberty. 2020. Resolving the puzzle of conspiracy worldview and political activism: Belief in secret plots decreases normative but increases nonnormative political engagement. Social Psychological and Personality Science. https://doi.org/10.1177/1948550619896491.

Jasko, Katarzyna, Gary LaFree, and Arie Kruglanski. 2017. Quest for significance and violent extremism: The case of domestic radicalization. Political Psychology 38 (5): 815–831.

Jaworsky, Bernadette Nadya, and Runya Qiaoan. 2021. The politics of blaming: The narrative battle between China and the US over COVID-19. Journal of Chinese Political Science 26: 295–315.

Jensen, Michael A., Anita Atwell Seate, and Patrick A. James. 2020. Radicalization to violence: A pathway approach to studying extremism. Terrorism and Political Violence 32 (5): 1067–1090.

Jolley, Daniel, and Karen M. Douglas. 2014a. The social consequences of conspiracism: Exposure to conspiracy theories decreases intentions to engage in politics and to reduce one’s carbon footprint. British Journal of Psychology 105 (1): 35–56.

Jolley, Daniel, and Karen M. Douglas. 2014b. The effects of anti-vaccine conspiracy theories on vaccination intentions. PLoS ONE 9 (2): e89177.

Jolley, Daniel, Rose Meleady, and Karen M. Douglas. 2020. Exposure to intergroup conspiracy theories promotes prejudice which spreads across groups. British Journal of Psychology 111: 17–35.

Jones, Laura. 2008. A geopolitical mapping of the post-9/11 world: Exploring conspiratorial knowledge through Fahrenheit 9/11 and The Manchurian Candidate. Journal of Media Geography 111: 44–50.

Jones-Jang, S. Mo, Tara Mortensen, and Jingjing Liu. 2019. Does media literacy help identification of fake news? Information literacy helps, but other literacies don’t. American Behavioral Scientist 65 (2): 371–388.

Karstedt, Susanne, and Stephen Farrall. 2006. The moral economy of everyday crime: Markets, consumers and citizens. British Journal of Criminology 46 (6): 1011–1036.

Kim, Minchul, and Xiaoxia Cao. 2016. The impact of exposure to media messages promoting government conspiracy theories on distrust in the government: Evidence from a two-stage randomized experiment. International Journal of Communication 10: 3808–3827.

King, Michael, and Donald M. Taylor. 2011. The radicalization of homegrown jihadists: A review of theoretical models and social psychological evidence. Terrorism and Political Violence 23 (4): 602–622.

Kotsila, Panagiota, and Giorgos Kallis. 2019. Biopolitics of public health and immigration in times of crisis: The malaria epidemic in Greece (2009–2014). Geoforum 106: 223–233.

Krekó, Péter. 2020. Countering conspiracy theories and misinformation. In Routledge Handbook of Conspiracy Theories, ed. M. Butter and P. Knight, 242–256. New York: Routledge.

Kruglanski, Arie W., Michele J. Gelfand, Jocelyn J. Bélanger, Anna Sheveland, Malkanthi Hetiarachchi, and Rohan Gunaratna. 2014. The psychology of radicalization and deradicalization: How significance quest impacts violent extremism. Political Psychology 35 (S1): 69–93.

Lazer, David M.J., Matthew A. Baum, Yochai Benkler, Adam J. Berinsky, Kelly M. Greenhill, Filippo Menczer, Miriam J. Metzger, et al. 2018. The science of fake news. Science 359: 1094–1096.

Lee, Benjamin. 2020. Radicalization and conspiracy theories. In Routledge Handbook of Conspiracy Theories, ed. M. Butter and P. Knight. New York: Routledge.

Lewandowsky, Stephan. 2021. Climate change disinformation and how to combat it. Annual Review of Public Health 42: 1–21.

Lewandowsky, Stephan, Klaus Oberauer, and Gilles E. Gignac. 2013. NASA faked the moon landing—therefore, (climate) science is a hoax: An anatomy of the motivated rejection of science. Psychological Science 24 (5): 622–633.

Lin, Hsuan-Yu. 2021. COVID-19 and American attitudes toward U.S.-China disputes. Journal of Chinese Political Science 26: 139–168.

Maertens, Rakoen, Frederik Anseel, and Sander van der Linden. 2020. Combatting climate change misinformation: Evidence for longevity of inoculation and consensus messaging effects. Journal of Environmental Psychology 70: 101445.

McBride, Megan K. 2011. The logic of terrorism: Existential anxiety, the search for meaning, and terrorist ideologies. Terrorism and Political Violence 23 (4): 560–581.

McGuire, W.J. 1962. Persistence of the resistance to persuasion induced by various types of prior belief defenses. Journal of Abnormal and Social Psychology 64: 241–248.

McGuire, W.J., and D. Papageorgis. 1961. The relative efficacy of various types of prior belief defense in producing immunity against persuasion. Journal of Abnormal and Social Psychology 62: 327–337.

Melley, Timothy. 2000. Empire of Conspiracy: The Culture of Paranoia in Postwar America. London: Cornell University Press.

Miller, Joanne. 2020. Psychological, political, and situational factors combine to boost COVID-19 conspiracy theory beliefs. Canadian Journal of Political Science 53 (2): 327–334.

Miller, Joanne M., Kyle L. Saunders, and Christina E. Farhart. 2016. Conspiracy endorsement as motivated reasoning: The moderating roles of political knowledge and trust. American Journal of Political Science 60: 824–844.

Moghaddam, Fathali M. 2005. The staircase to terrorism: A psychological exploration. American Psychologist 60 (2): 161–169.

Moskalenko, Sophia, and Clark McCauley. 2009. Measuring political mobilization: The distinction between activism and radicalism. Terrorism and Political Violence 21 (2): 239–260.

NBC News. 2020. “Asian Americans Report Over 659 Racist Acts Over Last Week.” 26 March. https://www.nbcnews.com/news/asian-america/asian-americans-report-nearly-500-racist-acts-over-last-week-n1169821.

Noor, Masi, Rupert Brown, and Garry Prentice. 2008. Precursors and mediators of inter-group reconciliation in Northern Ireland: A new model. British Journal of Social Psychology 47: 481–495.

Orehek, Edward, et al. 2013. Terrorism—a (self) love story: Redirecting the significance quest can end violence. American Psychologist 68 (7): 559–575.

Pan, Guangyi, and Alexander Korolev. 2021. The struggle for certainty: Ontological security, the rise of nationalism, and Australia-China tensions after COVID-19. Journal of Chinese Political Science 26: 115–138.

Papageorgiou, Maria, and Daniella da Silva Nogueira de Melo. 2022. China as a responsible power amid the COVID-19 crisis: Perceptions of partners and adversaries on Twitter. Fudan Journal of the Humanities and Social Sciences 15: 159–188.

Pfau, M., S.M. Semmler, L. Deatrick, L. Lane, A. Mason, G. Nisbett, et al. 2009. Nuances about the role and impact of affect and enhanced threat in inoculation. Communication Monographs 76: 73–98.

Radu, Roxana. 2020. Fighting the ‘Infodemic’: Legal responses to COVID-19 disinformation. Social Media + Society.

Roozenbeek, Jon, and Sander van der Linden. 2019. The fake news game: Actively inoculating against the risk of misinformation. Journal of Risk Research 22 (5): 570–580.