Abstract

Three artificial intelligence (AI) data-driven techniques, namely artificial neural network (ANN), support vector regression (SVR), and adaptive neuro-fuzzy inference system (ANFIS), were applied to model and predict turbidity removal from water using graphene oxide (GO). Based on the partial mutual information (PMI) algorithm, pH, GO dosage, and initial turbidity were selected as the input variables for developing the models. The prediction performance of the AI-based models was compared with one another and with the response surface methodology (RSM) model previously reported by the authors. The models’ estimation accuracy was assessed through statistical measures, including mean-squared error (MSE), root-mean-square error (RMSE), mean absolute error (MAE), and coefficient of determination (R2). Among the evaluated models, ANN had the highest estimation accuracy, showing the highest R2 for the validation data (0.949) and the lowest MSE, RMSE, and MAE values. Furthermore, ANN predicted 76.1% of data points with relative errors (RE) of less than 10%. In contrast, the weakest prediction performance belonged to the SVR model, which had the lowest R2 for both calibration (0.712) and validation (0.864) data. Moreover, only 57.1% of the SVR predictions had RE < 10%. The ANFIS and RSM models performed similarly in terms of R2 for the validation data (0.877 and 0.871, respectively) and the other statistical parameters. According to the results, the ANN technique is proposed as the best option for modeling the process. Nevertheless, because the RSM technique provides valuable information about the contribution of the independent operational parameters and their complex interaction effects using the fewest experiments, simulating the process with RSM before modeling it with ANN remains necessary.


Introduction

Although water treatment plants (WTPs) are mostly operated by experts and experienced operators, intelligent data-driven models are essential for enhancing the operation and control of the treatment processes. Using such models to predict the treatment units’ responses to various technical, physical, chemical, and biological features can enhance the performance of WTPs. Intelligent data-driven models are now well-established techniques for predicting the dynamic state of environmental systems (May et al. 2008; Wu et al. 2014; Hawari et al. 2017; Saadatpour et al. 2020). They can be instrumental in operating WTPs where the application of physically based numerical models and/or the availability of human resources is restricted. However, developing data-driven models requires a thorough understanding of the theoretical foundations of the processes and of the physical, chemical, biological, and technical concepts that generate the observed system dynamics in a WTP (Li et al. 2014; Wu et al. 2014; Li et al. 2015a, b; Saadatpour et al. 2020).

Various techniques have been suggested in the literature for developing data-driven models, based on their application to a range of environmental systems (Pascual-Pañach et al. 2021). The artificial neural network (ANN) is an advanced data-driven method inspired by the nervous system of biological organisms and is widely used in various environmental disciplines (Onukwuli et al. 2021). Typically, an ANN structure consists of an input layer, one or more hidden layers, and an output layer. These layers are made up of processing units, named neurons, which are interconnected through the weights of the neural network (Samadi et al. 2021a). ANN can approximate all types of nonlinear relationships between inputs and outputs, provided that appropriate data-preprocessing techniques are used (Rajendra et al. 2009). Najah et al. (2009) proposed a model for predicting total dissolved solids (TDS), electrical conductivity (EC), and turbidity in the Johor river basin using a three-layered feed-forward backpropagation ANN. According to their results, the water quality parameters were simulated correctly and predicted with a mean absolute error of about 10%. Nasr et al. (2012) applied an ANN technique to simulate the performance of the El-Agamy wastewater treatment plant in Egypt. As they reported, chemical oxygen demand (COD), biochemical oxygen demand (BOD), and total suspended solids (TSS) were estimated by the ANN model with a high coefficient of determination (R2 > 0.9). In another study, Giwa et al. (2016) investigated the effect of mixed liquor suspended solids (MLSS), dissolved oxygen (DO), EC, and pH on the removal efficiency of COD, phosphate (\({\text{PO}}_{4}^{3-}\text{-P}\)), and ammonium (\({\text{NH}}_{4}^{+}\text{-N}\)) from wastewater in an integrated electrically enhanced membrane bioreactor. The ANN model based on the Levenberg–Marquardt backpropagation algorithm predicted the effluent concentration of the contaminants with a high coefficient of determination (R2 = 0.99) (Giwa et al. 2016).

Despite the fact that ANN models are capable of predicting water quality parameters, in some cases where the input parameters are unclear, these techniques encounter problems in determining nonlinear relationships. Some studies revealed that an adaptive neuro-fuzzy inference system (ANFIS) may be a better alternative for such problems (Fu et al. 2020). Indeed, it has the advantages of both ANN and fuzzy inference system (FIS) to handle the uncertainty and noisy data (Zaghloul et al. 2020). Kim and Parnichkun (2017) proposed a hybrid of k-means-ANFIS to predict the settled water turbidity and determine the optimum coagulant dosage using full-scale historical data. Based on the results, sub-models constructed by the k-means-ANFIS were superior to single ANFIS and ANN. In another research conducted by Hawari et al. (2017), fuzzy logic-based and multiple linear regression (MLR) models were used to predict the treated wastewater volume from a multimedia filter under different influent flow rates and turbidities. As they explained, although the regression model had higher accuracy in predicting the treated wastewater, the fuzzy-based model, due to considering the uncertainties in input parameters, was more reliable (Hawari et al. 2017).

Support vector regression (SVR) is another supervised machine learning technique that can alleviate the limitations of ANNs. Unlike ANNs, SVR has a simple geometric interpretation, and only a few model parameters need to be adjusted (Parveen et al. 2017). Some previous studies have shown that SVR-based models can be superior to MLR and ANN in predicting the adsorption process (Parveen et al. 2017, 2019). Li et al. (2021) used machine learning methods including SVR and Gaussian process regression (GPR) to determine the relationship between the hydraulic conditions and the efficiency of the flocculation process. They reported that the SVR model predicted the turbidity removal efficiencies, based on various hydraulic conditions, better than the GPR model. However, the memory requirements of working with large datasets and the choice of the best kernel function are significant challenges for SVR (Zaghloul et al. 2020).

Unlike the physically based numerical models, which are applied to depict and quantify the relationships between different input–output variables, the artificial intelligence (AI) techniques are capable of considering the uncertainties and provide a fast and accurate way to determine the system responses without depicting the structures of the processes (Saadatpour et al. 2020).

Coagulation–flocculation is one of the most crucial water treatment processes known as an economic and robust method for destabilizing suspended and colloidal particles and removing turbidity from water (Metcalf and Eddy 2003; Onukwuli et al. 2021). The process is severely affected by operational and environmental factors such as pH, initial turbidity, coagulant dosage, mixing speed, process time, and temperature (Gupta et al. 2016; Aboubaraka et al. 2017). The variety of effective factors makes the process highly complicated. This raises the need for an efficient predictive model for the process (Zhu et al. 2021).

Owing to their cost-effectiveness and generally satisfactory performance, conventional coagulants such as metal salts have been widely used in water treatment works. Nonetheless, these traditional coagulants have drawbacks such as generating large sludge volumes, inefficiency at low temperatures, and a reported link to Alzheimer’s disease, which make them a threat to human health and the environment (Crini and Lichtfouse 2019; Nnaji et al. 2020; Ezemagu et al. 2021).

Graphene oxide (GO) is a two-dimensional carbon-based nanomaterial that, owing to its special surface properties and functional groups, has recently been examined as a coagulant in water and wastewater treatment studies (Yang et al. 2013; Aboubaraka et al. 2017; Rezania et al. 2021). Moreover, GO is superior to conventional coagulants in terms of biodegradability (Sanchez et al. 2012). However, because the subject is novel and few studies exist in this field, there are very few reports on modeling the GO-based coagulation–flocculation process. Rezania et al. (2021) investigated the performance of GO as a coagulant in turbidity removal from water and simulated the process through response surface methodology (RSM). Although RSM is appropriate for modeling quadratic processes and provides comprehensive information on sensitivity analysis and the interaction of independent operating parameters (Igwegbe et al. 2021), not all nonlinear systems are well described by second-order polynomials (Bhatti et al. 2011). In addition, RSM modeling requires a predefined acceptable fitting function (Karthic et al. 2013) and the determination of a suitable range for each input parameter (Maran and Priya 2015).

To the best of the authors’ knowledge, no reports have been published so far on modeling the GO-based coagulation–flocculation process using the aforesaid AI techniques, on comparing the models’ performance, or on determining the most appropriate technique for predicting the process efficiency. As discussed above, successful applications of the ANN, ANFIS, and SVR techniques have been reported for many environmental engineering problems, especially for predicting water and wastewater treatment processes. These data mining techniques offer advantages over conventional modeling, such as the ability to handle large amounts of noisy data even in dynamic and nonlinear frameworks, especially when the underlying physical, chemical, or biological process is not completely understood. These factors, along with features such as generality, user-friendliness, and the availability of ready-made applications, provided a strong incentive to evaluate and compare the performance of the aforementioned techniques in predicting turbidity removal from water using graphene oxide (GO).

In addition, it is important to note that the coagulation performance and mechanisms of GO nanoparticles differ from those of conventional coagulants: the coagulation properties of GO stem from its surface characteristics, whereas those of conventional coagulants arise from their hydrolysis in water. Therefore, determining an appropriate AI technique for modeling the GO-based coagulation–flocculation process will be valuable.

Given the reasons discussed above, the main objective of the present work was to develop the aforementioned AI-based data mining models, i.e., ANN, SVR, and ANFIS, and to compare their capability in predicting the performance of GO as a coagulant in the removal of turbidity from drinking water. The prediction performance of the AI-based models was compared with one another and with the response surface methodology (RSM) model previously reported by the authors (Rezania et al. 2021). The experiments were performed using a jar test apparatus, and the partial mutual information (PMI) algorithm was used to determine the appropriate input variables. The models’ prediction performance was compared using statistical indicators.

Methodology

Data collection

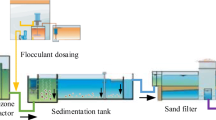

The data used for the development of the models were generated by the authors in a recent study (Rezania et al. 2021), which evaluated the performance of GO as a novel coagulant for turbidity removal from water. The study was performed using single-layer GO with a layer thickness of 0.7–1.4 nm. To prepare turbid samples, garden soil particles that passed through a No. 200 sieve were dispersed in 2 L of tap water. To obtain a uniform dispersion, the stock suspension was first stirred at 100 rpm for 1 h and then left for 24 h for complete hydration of the particles. In the next step, the suspension was stirred again and allowed to settle for 60 min. The supernatant was used to prepare samples with different levels of turbidity (Rezania et al. 2021). A six-paddle jar test apparatus was used for the coagulation–flocculation process. The turbid samples were first agitated at a rapid mixing rate of 200 rpm for 2 min and then slowly stirred at 50 rpm for 15 min at room temperature. The effects of pH (3–11), GO dosage (2.5–30 mg/L), initial turbidity (25–300 NTU), rapid mixing time (1–5 min), and slow mixing time (10–40 min) on the turbidity removal efficiency were evaluated through this jar test procedure. After 79 one-factor-at-a-time (OFAT) tests had been performed, the process was simulated through RSM (Rezania et al. 2021). A central composite design (CCD) containing 20 experimental runs, with 8 factorial points, 6 axial (star) points, and 6 center points, was selected for building quadratic models. To reduce experimental errors, all experiments were carried out in randomized order. The second-order polynomial model in coded form obtained by Rezania et al. (2021) is as follows:

Input variable selection

Selecting variables relevant to the target is one of the most important issues regarding the development of data-driven models. Furthermore, the performance of such models can be adversely affected if either too few or too many inputs are selected (Wu et al. 2014; Li et al. 2015a,b). Generally, input data selection in an environmental modeling context is a complicated issue due to a lack of understanding of the underlying physical–chemical and biological processes. For this reason, the partial mutual information (PMI) algorithm introduced by Sharma (2000) is commonly used to determine the appropriate input data.

The PMI value for the output variable y and the input variable x, given a set of already selected inputs {z}, is calculated as follows:

where \(x^{\prime}=x-E[x|z]\), \(y^{\prime}=y-E[y|z]\), the operator E[.] denotes expectation, \(f\left(y^{\prime}\right)\) and \(f\left(x^{\prime}\right)\) are the marginal probability density functions (pdfs), and \(f\left(x^{\prime},y^{\prime}\right)\) is the joint probability density function. The greater the PMI score, the stronger the effect of the input variable on the response.
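For reference, PMI is commonly written in the following form, using the symbols defined above. This is the standard definition attributed to Sharma (2000) and is assumed here to match the form used in the study:

$$\mathrm{PMI}=\iint f\left(x^{\prime},y^{\prime}\right)\,\ln\left[\frac{f\left(x^{\prime},y^{\prime}\right)}{f\left(x^{\prime}\right)\,f\left(y^{\prime}\right)}\right]\mathrm{d}x^{\prime}\,\mathrm{d}y^{\prime}$$

When \(x^{\prime}\) and \(y^{\prime}\) are independent, the joint density equals the product of the marginals, the logarithm vanishes, and the PMI is zero. A candidate input therefore scores highly only if it carries information about the output beyond what the already selected inputs {z} explain.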

Based on the PMI analysis results (described in the third to the fifth section), among the evaluated parameters, GO dosage, pH, and initial turbidity were determined as the most effective independent variables for developing the models. This result is consistent with the explanations of Rezania et al. (2021) that changing the rapid and slow mixing times had a negligible effect on turbidity removal efficiency using GO. Similarly, Naeem et al. (2018) showed that because of the abundant active sites on GO-based nanocomposite, a significant adsorptive removal of contaminant particles occurred in the early minutes of the process, and increase in the contact time did not have much effect on process efficiency.

Data splitting

In this step, the available data are split into calibration (including training and test datasets if cross-validation is used) and validation data sets. Data splitting methods can be categorized as unsupervised or supervised (Maier et al. 2010). In the present study, random data splitting, the most commonly used unsupervised method, was applied (Mirri et al. 2020). Accordingly, the 79 experimental data points obtained from the OFAT tests were divided into calibration (73%) and validation (27%) sets, which were used to calibrate and validate the selected artificial intelligence models, i.e., ANN, ANFIS, and SVR. The validation data were also applied to Eq. 1 to calculate the turbidity removal efficiency predicted by the RSM model. Finally, all models were evaluated and compared with one another in terms of their performance in predicting turbidity removal from water using GO.
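The random split described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' MATLAB procedure: the function name `random_split`, the fixed seed, and the rounding rule for the calibration size are assumptions, and the integer indices merely stand in for the 79 OFAT experiments.

```python
import random


def random_split(samples, calibration_fraction=0.73, seed=0):
    """Shuffle the samples and split them into calibration and validation sets."""
    rng = random.Random(seed)  # fixed seed (an assumption) so the split is reproducible
    shuffled = list(samples)
    rng.shuffle(shuffled)
    # Rounding rule is an assumption; the text only states the 73%/27% proportions.
    n_cal = round(calibration_fraction * len(shuffled))
    return shuffled[:n_cal], shuffled[n_cal:]


# Hypothetical stand-in for the 79 OFAT experiments: indices 0..78.
experiments = list(range(79))
calibration, validation = random_split(experiments)
print(len(calibration), len(validation))
```

Under this rounding rule, 79 points give 58 calibration and 21 validation samples, which corresponds to roughly 73% and 27%. The exact counts used in the study are not stated in the text.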

Artificial intelligence models

Artificial neural network (ANN)

Owing to their capability of learning from large data sets, ANNs are well suited to approximating nonlinear functions (Samadi et al. 2021b). The original database should be large enough to be divided into calibration and validation sets, using either a supervised or an unsupervised method (Maier et al. 2010). Training data are used during the learning process to find the pattern between the variables and the response(s).

Validation data are used to evaluate network performance. In the present work, a feed-forward backpropagation neural network, one of the most popular ANN architectures (Rumelhart et al. 1986), was created in MATLAB 2020b. In the backpropagation algorithm, the output is calculated first and the difference between the obtained and the desired response is determined; the weights of the network are then updated with the aim of minimizing the loss function. The number of hidden layers is a significant aspect of neural network design since it can affect the accuracy of the response. As there is still no specific method for determining an appropriate network architecture before the learning step, it is often chosen by trial and error. Nevertheless, for the vast majority of problems, using one hidden layer with sufficient neurons is more reasonable than increasing the number of hidden layers, because it reduces the risk of over-fitting (Wu et al. 2015). For this reason, an ANN with one hidden layer was used in this study. The network structure used in this paper (see Fig. 1) comprises three layers, which are described in detail in the Results and discussion section.

Adaptive neuro-fuzzy inference systems (ANFIS)

The adaptive neuro-fuzzy inference system (ANFIS), proposed by Jang (1993), is a composite of ANN and fuzzy inference system (FIS). Adaptive networks reduce the time required for processing large datasets by finding the optimal network structure automatically. In this approach, parameters such as weights and biases are adapted during the training process, which reduces the error rate. FIS, in turn, uses “IF…THEN” rules together with the connectors “OR” and “AND” to map inputs to output(s). Every FIS consists of three main parts: fuzzy rules, a database, and a reasoning mechanism (Onukwuli et al. 2021). The rule base of an FIS with two inputs (x1 and x2) and one output (f), based on the Takagi–Sugeno type (Takagi and Sugeno 1983; Anadebe et al. 2020), can be shown as follows:

where A1 and A2 and B1 and B2 are the fuzzy sets for input parameters x1 and x2, respectively, and p1 and q1 and p2 and q2 are the consequent parameters obtained by the least square method. The detailed descriptions of the general structure of the ANFIS (see Fig. 2) are expressed as:

- Layer 1: every node in this layer is an adaptive node that computes the membership value of an input variable. Generalized bell-shaped, Gaussian, trapezoidal, and triangular functions are some popular types of membership functions. If the Gaussian membership function (μ) is adopted, the output of the node is calculated as follows:

$${\mu}_{{A}_{i}}=\exp\left[\frac{-0.5\,{\left(x-{c}_{i}\right)}^{2}}{{\sigma}_{i}^{2}}\right]$$(5)

where \(x\) is the input to node i, \(A_i\) is the linguistic variable, and (\(\sigma_i\), \(c_i\)) are the premise parameters. Fuzzification occurs in this layer.

- Layer 2: in this layer, the circle (fixed) nodes, labeled Π, multiply the incoming signals from the previous layer; the product represents the firing strength of the rule:

$${\omega}_{i}={\mu}_{{A}_{i}}\times{\mu}_{{B}_{i}},\quad i=1,2$$(6)

- Layer 3: each node in this layer calculates the normalized firing strength as

$${\overline{\omega}}_{i}=\frac{{\omega}_{i}}{{\omega}_{1}+{\omega}_{2}},\quad i=1,2$$(7)

- Layer 4: the output of each node is obtained by multiplying the normalized firing strength by the first-order Sugeno model as follows:

$${\overline{\omega}}_{i}\,{f}_{i}={\overline{\omega}}_{i}\left[{p}_{i}\,{x}_{1}+{q}_{i}\,{x}_{2}\right]$$(8)

- Layer 5: the single node in this layer computes the output of the model by Eq. 9:

$$\sum_{i}{\overline{\omega}}_{i}\,{f}_{i}=\frac{\sum_{i}{\omega}_{i}\,{f}_{i}}{\sum_{i}{\omega}_{i}}$$(9)
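The five layers can be traced in a short Python sketch of a two-input, two-rule first-order Sugeno system. It is an illustration only, not the model trained in the study: the function names, the parameter layout, and all numeric values are hypothetical. The membership function is the standard Gaussian form with a squared distance, and the rule consequents follow \(f_i = p_i x_1 + q_i x_2\) as in Eq. 8.

```python
import math


def gaussian_mf(x, c, sigma):
    """Layer 1: Gaussian membership value with center c and width sigma."""
    return math.exp(-0.5 * (x - c) ** 2 / sigma ** 2)


def anfis_forward(x1, x2, premise, consequent):
    """Forward pass of a two-input first-order Sugeno ANFIS.

    premise:    one ((c_A, sigma_A), (c_B, sigma_B)) pair per rule
    consequent: one (p, q) pair per rule
    """
    # Layers 1 and 2: membership values, then firing strengths as their product.
    w = [gaussian_mf(x1, c_a, s_a) * gaussian_mf(x2, c_b, s_b)
         for (c_a, s_a), (c_b, s_b) in premise]
    # Layer 3: normalized firing strengths.
    total = sum(w)
    w_bar = [w_i / total for w_i in w]
    # Layer 4: normalized firing strength times the rule consequent.
    rule_outputs = [wb * (p * x1 + q * x2)
                    for wb, (p, q) in zip(w_bar, consequent)]
    # Layer 5: sum of all rule outputs.
    return sum(rule_outputs)


# Hypothetical premise and consequent parameters for two rules.
premise = [((0.0, 1.0), (0.0, 1.0)), ((1.0, 1.0), (1.0, 1.0))]
consequent = [(1.0, 1.0), (2.0, 0.0)]
output = anfis_forward(0.5, 0.5, premise, consequent)
print(output)
```

At the chosen input both rules fire equally, so the output is the plain average of the two rule consequents. In training, the premise parameters (c, σ) and the consequent parameters (p, q) would be the quantities being adapted.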

Support vector regression (SVR)

Support vector regression (SVR) is a machine learning algorithm that applies some basic concepts of the support vector machine (SVM) to complicated regression problems. In this study, the ɛ-SVR technique, the most widely used LibSVM model, was implemented on the MATLAB 2020b platform. For a dataset {(xi, yi), i = 1, 2, ⋅ ⋅ ⋅, N}, where \({x}_{i}\in {\mathbb{R}}^{N}\) is the input and \({y}_{i}\in \mathbb{R}\) is the target, the SVR function can be written as

where ω is the parameter of the linear SVR, \({\text{b}}\) is the bias term, and ϕ(x) is a nonlinear mapping function. ω and \({\text{b}}\) can be estimated by minimizing the regression risk as follows:

where \({\text{c}}\) represents the penalty variable and \({\mathrm\xi}_{\mathrm i},{\mathrm\xi}_{\mathrm i}^\ast\) are slack variables. Using the “Lagrangian” function, the approximate function can be expressed by Eq. 12:

where \({\alpha}_{i}\) and \({\alpha}_{i}^{*}\) are Lagrangian multipliers and \(k\left(x,{x}_{i}\right)\) is the kernel function. In this work, the radial basis function (RBF) kernel was applied for constructing the SVR. Owing to its high computational efficiency and capability of separating linear data, the RBF kernel is the most useful function (Nie et al. 2020); it is defined as follows:

where \(\gamma\) is the kernel parameter. As the accuracy of the SVR model is affected by the values of \(\gamma\), \(c\), and \(\varepsilon\), their best values were determined by trial and error.
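For orientation, the standard textbook formulation of ɛ-SVR with an RBF kernel is summarized below in the notation of this section. It is assumed, not confirmed, that these expressions correspond to the regression function, the regression risk, the Lagrangian form (Eq. 12), and the kernel referred to above:

$$\begin{aligned}
f(x) &= {\omega}^{\mathsf{T}}\phi(x)+b\\
\min_{\omega,\,b,\,\xi,\,{\xi}^{*}}\quad &\frac{1}{2}{\Vert\omega\Vert}^{2}+c\sum_{i=1}^{N}\left({\xi}_{i}+{\xi}_{i}^{*}\right)\\
\text{subject to}\quad &{y}_{i}-f({x}_{i})\le\varepsilon+{\xi}_{i},\quad f({x}_{i})-{y}_{i}\le\varepsilon+{\xi}_{i}^{*},\quad {\xi}_{i},{\xi}_{i}^{*}\ge 0\\
f(x) &= \sum_{i=1}^{N}\left({\alpha}_{i}-{\alpha}_{i}^{*}\right)k\left(x,{x}_{i}\right)+b\\
k\left(x,{x}_{i}\right) &= \exp\left(-\gamma{\Vert x-{x}_{i}\Vert}^{2}\right)
\end{aligned}$$

In this formulation, errors smaller than \(\varepsilon\) are not penalized, \(c\) sets the trade-off between model flatness and the penalty on larger errors, and \(\gamma\) controls how quickly the kernel decays with distance. These are the three values tuned by trial and error.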

Model evaluation

The goodness-of-fit of the developed models was assessed via statistical indices, including the mean-squared error (MSE), root mean square error (RMSE), mean absolute error (MAE), and coefficient of determination (R2). MSE can be obtained using Eq. 14:

where \({\text{y}}_{\text{act,i}}\) and \({\text{y}}_{\text{est,i}}\) represent the ith observed and estimated values of the efficiency of the turbidity removal, respectively, and n is the total number of input data. MSE varies from positive infinity to zero, such that the closer it is to zero, the better the fit of the model.

RMSE is the square root of the average of squared errors. This non-negative index equals zero for a perfect fit to the data, which never happens in practice. RMSE is formulated as follows:

MAE, shown in Eq. 16, is another error index that represents the average absolute difference between the predicted and the observed values. As with the other indices, MAE values near zero indicate a good fit of the model:

The coefficient of determination (R2), which varies between zero and one, indicates the ability of the model to approximate the actual data points. The higher the coefficient of determination, the better the fit of the model to the data. R2 is calculated by the following relation:

where \({\overline{y}}_{\text{act}}\) and \({\overline{y}}_{\text{est}}\) denote the means of the measured and predicted values of the turbidity removal efficiency, respectively.

The relative error is another indicator for assessing the models’ accuracy in predicting responses. Based on the formula represented in Eq. 18, the lower the value of the relative error, the higher the accuracy of the proposed model:
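The indices described in this section can be computed with a few short Python functions. The sketch below uses the standard definitions of MSE, RMSE, and MAE. The R2 form (squared correlation between observed and estimated values) and the percentage form of the relative error are assumptions inferred from the symbol definitions in the text, and the sample values are hypothetical, not data from the study.

```python
import math


def mse(y_act, y_est):
    """Mean-squared error."""
    return sum((a - e) ** 2 for a, e in zip(y_act, y_est)) / len(y_act)


def rmse(y_act, y_est):
    """Root-mean-square error."""
    return math.sqrt(mse(y_act, y_est))


def mae(y_act, y_est):
    """Mean absolute error."""
    return sum(abs(a - e) for a, e in zip(y_act, y_est)) / len(y_act)


def r2(y_act, y_est):
    """Squared correlation between observed and estimated values (assumed form)."""
    mean_act = sum(y_act) / len(y_act)
    mean_est = sum(y_est) / len(y_est)
    cov = sum((a - mean_act) * (e - mean_est) for a, e in zip(y_act, y_est))
    var_act = sum((a - mean_act) ** 2 for a in y_act)
    var_est = sum((e - mean_est) ** 2 for e in y_est)
    return cov ** 2 / (var_act * var_est)


def relative_errors(y_act, y_est):
    """Relative error of each prediction in percent (assumed form)."""
    return [abs(a - e) / abs(a) * 100.0 for a, e in zip(y_act, y_est)]


def share_below(y_act, y_est, threshold=10.0):
    """Percentage of predictions whose relative error is below the threshold."""
    errors = relative_errors(y_act, y_est)
    return 100.0 * sum(err < threshold for err in errors) / len(errors)


# Hypothetical removal efficiencies (%), not data from the study.
observed = [80.0, 90.0, 70.0, 60.0]
predicted = [82.0, 85.0, 71.0, 75.0]
print(mse(observed, predicted), mae(observed, predicted))
print(share_below(observed, predicted))
```

The last function mirrors the way the abstract reports the share of predictions with RE < 10% for each model.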

Results and discussion

Assessment of the ANN model

The proposed optimal ANN structure consists of an input layer (representing the most appropriate variables, i.e., GO dosage, pH, and initial turbidity), one hidden layer, and one output layer as the network’s response (turbidity removal efficiency). Additionally, the “Tansig” and “Purelin” transfer functions were employed at the hidden and the output layers, respectively. To avoid over-fitting of the model, a program was developed in MATLAB 2020b to find the optimum number of neurons and to select the best network training and learning functions automatically. As a result, a network with three neurons in the hidden layer, “Trainbr” as the training function, and “learnlv1” as the learning function gave the most accurate response compared with the other architectures.
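The forward pass of such a 3-3-1 network can be sketched in plain Python. This is an illustration of the architecture only: the weights and biases below are hypothetical (the trained values are not given in the text), the inputs are assumed to be already normalized, and training itself is not shown.

```python
import math


def tansig(n):
    """Hyperbolic tangent sigmoid transfer function; mathematically equal to tanh(n)."""
    return 2.0 / (1.0 + math.exp(-2.0 * n)) - 1.0


def purelin(n):
    """Linear transfer function: returns its input unchanged."""
    return n


def ann_forward(inputs, w_hidden, b_hidden, w_out, b_out):
    """Forward pass: inputs -> tansig hidden layer -> purelin output neuron."""
    hidden = [tansig(sum(w * x for w, x in zip(row, inputs)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    return purelin(sum(w * h for w, h in zip(w_out, hidden)) + b_out)


# Hypothetical parameters for three inputs (GO dosage, pH, initial turbidity,
# assumed normalized) and three hidden neurons.
w_hidden = [[0.5, -0.2, 0.1],
            [0.3, 0.8, -0.5],
            [-0.6, 0.1, 0.4]]
b_hidden = [0.0, 0.1, -0.1]
w_out = [1.0, -1.0, 0.5]
b_out = 0.2

prediction = ann_forward([0.4, -0.1, 0.7], w_hidden, b_hidden, w_out, b_out)
print(prediction)
```

With only three hidden neurons the network has 16 adjustable parameters (12 weights and 4 biases), which keeps the model small relative to the calibration set.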

Figure 3a and b show the modeling results of the efficiency of the turbidity removal from water utilizing GO in the lab-scale water treatment process. The scatter plot of observed and predicted turbidity removal values for calibration data is displayed in Fig. 3a. The high value of R2 (0.9129) shows an excellent performance of the model. Additionally, the coefficient of determination (R2) between the actual values (results obtained in the laboratory) and the predicted values of the validation data estimated through ANN is equal to 0.9492, which indicates that the model reasonably offers a good fit (see Fig. 3b). The high coefficients of R2 in Fig. 3 prove that the calibration and validation processes of the developed ANN model have been accomplished well. Similar results have been reported by Onukwuli et al. (2021) who simulated the dye-polluted wastewater decontamination using bio-coagulants via ANN model. They fed the model with 100 experimental data, 70% of which was used for training and the rest for validation and testing processes. According to their results, the developed ANN model predicted the process with very high accuracy (Regression coefficient R2 = 0.9999) due to its ability to approximate all types of structures (Onukwuli et al. 2021). Zangooei et al. (2016) simulated the coagulation–flocculation process with poly aluminum chloride (PAC) as coagulant, to predict the water turbidity after the process. They considered three independent variables including pH, PAC dosage, and influent turbidity for modeling the process using multi-layer neural network. As they described, their ANN model had the ability to predict the effluent turbidity with a high coefficient of determination (R2 = 0.96) during testing the model (Zangooei et al. 2016). It is noteworthy that Zangooei et al. (2016) used 236 experimental data, of which 85 percent was used for training, and the rest was used for the testing of the network. 
It is therefore notable that, with far fewer data points in the present study (79) and only 73% of them used for training the model, the ANN technique still achieved outstanding results in terms of the coefficient of determination for both the calibration (R2 = 0.9129) and the validation (R2 = 0.9492) processes. This observation may be explained by the fact that ANN, as a black box model, focuses mainly on the analysis of the available data and can simulate any nonlinear equation (Golbaz et al. 2020).

Assessment of the ANFIS model

ANFIS calibration and validation performances are presented in Fig. 4a and b, respectively. With regard to the coefficient of determination equal to 0.936 for calibration of ANFIS model (Fig. 4a), it can be concluded that the developed model has a suitable performance in approximating the turbidity removal efficiency using GO as a coagulant. Moreover, the coefficient of R2 (0.877) for validation data denotes the effectiveness and the reliability of the proposed model for extracting features from input data (Fig. 4b).

Similarly, Taheri et al. (2013) pointed out that ANFIS model successfully predicted the electrocoagulation–coagulation process with R2 value of 0.923 for a total of 78 test and train data. Also, some previous investigations recommended ANFIS as a powerful tool for modeling of adsorption process (Khomeyrani et al. 2021; Hanumanthu et al. 2021). Heddam et al. (2012) used ANFIS for modeling of coagulant dosage in a water treatment plant. As they described, the developed subtractive clustering-based ANFIS model provided accurate and reliable coagulant dosage prediction. The qualitative human judgment and expert knowledge, dependency of input variables, absence of mathematical models, and nonlinearity of relationships are the conditions making ANFIS a favorable modeling method for the processes such as coagulation–flocculation which involve many complex physical and chemical phenomena (Heddam et al. 2012; Hawari et al. 2017).

Assessment of the SVR model

The coefficient of determination between the predicted values and the calibration data is shown in Fig. 5a. Regarding the correlation coefficient of more than 0.7, SVR had an acceptable performance in the calibration process. Similarly, R2 of 0.864 between the observed and the predicted values in the validation process pointed out the applicability of SVR for predicting the turbidity removal from water using GO (see Fig. 5b). This may be attributed to the fact that although other traditional regression models use the empirical risk minimization principle (ERM) to minimize the training error, SVR, using the structural risk minimization (SRM) principle, considers the capacity of the learning machines, which leads to optimizing the generalization accuracy (Parveen et al. 2017).

Parveen et al. (2017) reported high correlation coefficient of R = 0.9986 for predicting the adsorptive removal of Cr(VI) ions from wastewater via SVR-based model they developed using a whole data set of 124 samples (80% as training and 20% as test datasets). According to another study conducted by Parveen et al. (2019), the SVR-based model accurately predicted the adsorptive removal of Ni(II) ions from wastewater, with high correlation coefficient (R) of 0.993. They used a whole dataset of 382 samples partitioned into two parts as the training (80%) and the test (20%) datasets. Additionally, Zaghloul et al. (2020) proved that SVR technique, thanks to the penalty placed on the prediction errors, predicted the aerobic granular process with high accuracy (R2 of 0.99 for validation data). They fed their SVR model with 2920 experimental data, 89% of which was used for training and the rest for validation processes. Comparison of the results of the present study with previous studies shows that the prediction performance of the SVR technique depends not only on the type of problem, but also on availability of a sufficiently large data set.

Comparison of the data-driven models

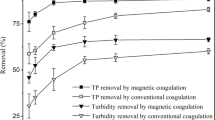

Table 1 shows satisfactory relationship between the experimental results and the predicted data proposed by RSM, ANN, ANFIS, and SVR models through validation process. Statistical measures including MSE, RMSE, MAE, and R2 were used to precisely compare the capability of the models in predicting the turbidity removal using GO. The results are given in Table 2. As seen in the table, the predicted values of all the models correlated very well with the observed results (R2 > 0.86). Generally, correlation of R2 greater than 0.8 and low relative errors between measured and predicted values prove strong model performance (Kennedy et al. 2015).

However, the highest validation R2 and the lowest values of the MSE, RMSE, and MAE indicators were obtained for the ANN model. While the validation R2 values for the ANFIS, SVR, and RSM models were similar, in the range of 0.864–0.877, this parameter was remarkably higher, at about 0.95, for the ANN model. This means that the ANN model was the most accurate in approximating the effect of GO on turbidity removal from water, thanks to its capability of approximating multiple complex and nonlinear functions. Besides, the ANN technique is flexible in that new experimental data can be added to build a reliable and accurate model without requiring a standard experimental design (Geyikçi et al. 2012; Maran and Priya 2015).

The results are consistent with some previous reports. According to Zangooei et al. (2016), the ANN model outperformed fuzzy regression analysis in simulating the coagulation–flocculation process and predicting effluent turbidity under different experimental conditions (pH, influent turbidity, and PAC concentration). As they described, the validation R2 was 0.96 for the ANN model and 0.93 for the fuzzy regression analysis with a quadratic function. In addition, Maran and Priya (2015), Golbaz et al. (2020), Onu et al. (2021), and Onukwuli et al. (2021) reported the superiority of ANN over RSM, because the RSM predictions deviated more from the observations whereas the ANN residuals were insignificant. However, Igwegbe et al. (2019), who simulated the adsorptive removal of methylene blue dye by ANN and RSM techniques using the same experiments planned through the CCD (21 runs), explained that, owing to the very limited number of experimental runs, the prediction performance of the ANN model was less acceptable than that of the RSM model. Similar results were reported by Uzoh and Onukwuli (2017), who compared the prediction performance of ANN and RSM models developed using the same 30 experiments designed by RSM. They noted that ANN generally performs better when a very large number of data points is used for training the network. Therefore, when very little data is available, the RSM technique can be expected to predict more accurately than the ANN method.

As shown in Fig. 5 and Table 2, the SVR model had the lowest R2 for both the calibration (0.7119) and validation (0.8643) datasets, as well as the highest MSE, RMSE, and MAE values. Accordingly, among the evaluated techniques, the SVR model exhibited the weakest performance in predicting the GO-based coagulation process. This result is inconsistent with that reported by Parveen et al. (2017), who explained that their SVR-based model predicted the adsorptive removal of Cr(VI) ions from wastewater with a higher correlation coefficient than the ANN model (R = 0.9986 and 0.9331, respectively). These contradictory results indicate that the performance of a modeling technique depends substantially on the type of problem and confirm the importance of selecting an appropriate method for modeling each process.

The ANFIS model showed the highest R2 for the calibration process, indicating that it had the best performance in the training step. In addition, for both the ANN and SVR models, the R2 for the validation data was larger than that for the calibration data, whereas the opposite held for the ANFIS model, which showed a smaller R2 for the validation data than for the calibration data. This is because the ANFIS model considers the uncertainties of the input/output data and experimental conditions, which helps the model provide a more appropriate representation of the actual process. Zangooei et al. (2016), who used the ANFIS technique for modeling turbidity removal with PAC as the coagulant, similarly reported a larger R2 for the training dataset than for the testing dataset.

Figure 6 shows the observed data alongside the estimated values of the turbidity removal efficiency for the proposed models during the validation process. To better interpret the figure in terms of the error characteristics of each model, the relative errors of the model predictions were calculated according to Eq. 17 and plotted against the observed data in Fig. 7a. It should be noted that the relative error is undefined when the actual value is zero, as it appears in the denominator (Chen et al. 2017). For this reason, the second point of Fig. 6, for which the observed removal efficiency was zero, is not shown in Fig. 7a. As seen in Fig. 7a, for all the models, the largest relative errors were obtained for the smaller observed values (i.e., the lower efficiencies), and the relative errors approach zero as the observed value increases. The smaller relative errors for the larger observed values (i.e., the higher efficiencies) and the larger relative errors for the smaller observed values can be attributed, respectively, to the abundance of the former and the scarcity of the latter. In fact, the models were better trained and calibrated for the larger observed values. It is noteworthy that the very good performance of GO under most of the tested conditions led to the abundance of the larger observed values.
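The handling of zero-valued observations described above can be sketched in Python as follows. The sign convention, RE = (observed − predicted) / observed × 100, is an assumption chosen to match the statement that a positive relative error corresponds to underestimation; Eq. 17 is not reproduced in this section. The function name and sample values are hypothetical.

```python
def relative_errors(observed, predicted):
    """Percent relative error per point; None where the observed value is zero."""
    errors = []
    for obs, pred in zip(observed, predicted):
        if obs == 0:
            # Relative error is undefined: the observed value is the denominator.
            errors.append(None)
        else:
            # Assumed convention: positive means the model underestimated.
            errors.append((obs - pred) / obs * 100.0)
    return errors

# Hypothetical removal efficiencies (%), for illustration only.
observed = [50.0, 0.0, 80.0, 95.0]
predicted = [40.0, 3.0, 84.0, 95.0]
re_values = relative_errors(observed, predicted)

# Keep only the points that can be plotted against the observed data.
plottable = [(o, e) for o, e in zip(observed, re_values) if e is not None]
print(plottable)
```

Returning `None` rather than dropping the point keeps the error list aligned with the observations, so the excluded point remains identifiable.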

Figure 7b shows the frequency distribution of the relative errors for each model based on the validation dataset. According to the results, the SVR model led to larger errors than the other models. As depicted in Fig. 7b, only 57.1% of the predictions generated by the SVR model had a relative error of less than 10%. In contrast, 76.1%, 71.4%, and 66.6% of the predictions generated by the ANN, RSM, and ANFIS models, respectively, had a relative error below this level. Moreover, about 62% of the predictions of the SVR model had a positive relative error, indicating an obvious tendency of the model to underestimate the observed data. The opposite was true for the ANN model, which overestimated 62% of the experimental results. The performance of the ANFIS and RSM models was better in this regard, as their errors were more evenly balanced between positive and negative values than those of the ANN and SVR models: 47.7% and 52.3% of the predictions generated by the ANFIS and RSM models, respectively, had a negative relative error.
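The kind of summary reported above (share of predictions within a 10% relative error, and the split between positive and negative errors) can be sketched as below. This is an illustrative helper under stated assumptions, not the authors' procedure: "less than 10%" is taken to mean the absolute value of the relative error, undefined errors are passed in as `None` and skipped, and the sample values are hypothetical.

```python
def summarize_relative_errors(re_values, threshold=10.0):
    """Share (%) of errors with |RE| < threshold, and share positive / negative."""
    valid = [e for e in re_values if e is not None]  # skip undefined errors
    if not valid:
        raise ValueError("no defined relative errors to summarize")
    n = len(valid)
    within = sum(1 for e in valid if abs(e) < threshold)
    positive = sum(1 for e in valid if e > 0)
    negative = sum(1 for e in valid if e < 0)
    return {
        "within_threshold_pct": 100.0 * within / n,
        "positive_pct": 100.0 * positive / n,
        "negative_pct": 100.0 * negative / n,
    }

# Hypothetical percent relative errors for one model, for illustration only.
re_values = [12.0, -3.0, 4.5, None, -15.0, 2.0]
summary = summarize_relative_errors(re_values)
print(summary)
```

A positive share and a negative share close to 50% each indicate no systematic tendency to under- or overestimate.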

Generally, all the models provided accurate and reliable turbidity removal predictions and could reduce the dependency on knowledge of the physicochemical properties of the process. However, the characteristics of the ANN technique, such as its ability to learn nonlinear functions with complex relationships, its fault tolerance (the output approximation continues even if one or more cells of the network are corrupted), and its ability to generalize and infer relationships in unseen data, made this technique superior to the other models in predicting the process efficiency. Nevertheless, the ability of the ANFIS model to take uncertainties into account in the approximation process cannot be ignored; it is therefore recommended to use this AI technique for modeling processes with considerable uncertainties in the input/output data and the experimental conditions. Additionally, although the predictions of the RSM model were less accurate than those of the ANN and ANFIS models, use of this modeling technique, even before developing the artificial intelligence models, is essential for understanding the nature of the process and obtaining useful information about the contribution of the independent variables and their complex interactions.

Identification of the input parameters using PMI

The importance of each input variable of the data-driven models for the desired output was investigated using the PMI algorithm. The higher the PMI score of a variable, the greater the effect of that parameter on the response. To address the physical and chemical factors affecting turbidity removal efficiency, the candidate input parameters were pH, GO dosage, initial turbidity, and the rates of the slow and rapid mixing steps. The results indicated that pH, GO dosage, and initial turbidity were the most important parameters affecting turbidity removal from water using GO as a coagulant. The PMI scores obtained for pH, GO dosage, and initial turbidity (the input parameters used for developing the data-driven models) were 0.72, 0.608, and 0.415, respectively, showing that pH had the greatest effect on the process efficiency. It is noteworthy that the results obtained by the PMI algorithm were in line with those reported by Rezania et al. (2021), who simulated the process through RSM and found pH and GO dosage, in that order, to be the most effective parameters on the process.
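For orientation, the PMI criterion as it is commonly written in the input-selection literature cited here (e.g., Sharma 2000; May et al. 2008) is sketched below. The symbols are notation assumed for illustration: x is a candidate input, y the output, z the set of inputs already selected, and f denotes a probability density. The exact estimator and bandwidth choice used in this study are not restated in this section.

```latex
\mathrm{PMI} = \iint f_{X',Y'}(x',y') \,
\ln\!\left[ \frac{f_{X',Y'}(x',y')}{f_{X'}(x')\, f_{Y'}(y')} \right] dx'\, dy',
\qquad
x' = x - E[x \mid z], \quad y' = y - E[y \mid z]
```

The residuals x' and y' remove the information already explained by the selected inputs z, so a high PMI score reflects the additional dependence between a candidate input and the output.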

Conclusions

In the present study, three artificial intelligence models, namely ANN, ANFIS, and SVR, were developed for predicting the turbidity removal efficiency using GO as a coagulant and were compared with each other and with the RSM model previously reported by the authors. The ability of the models to predict the process efficiency was compared using statistical indices. All the models successfully approximated the behavior of the process, based on their high coefficients of determination (R2 > 0.86) for the validation process. However, the highest validation R2 and the lowest values of the MSE, RMSE, and MAE indicators were obtained for the ANN model (0.949, 32.61, 5.71, and 4.22, respectively), indicating the superior performance of this AI technique over the other techniques in predicting the process efficiency. In contrast, the SVR model showed the weakest prediction performance, with the lowest validation R2 of 0.864. It was also found that the ANN model predicted the observed data with low error margins, as 76.1% of its predictions had relative errors (RE) of less than 10%, whereas only 57.1% of the predictions generated by the SVR model had RE < 10%.

According to the results, ANN was identified as the most appropriate technique for modeling the process. However, simulating the process using the RSM technique is also recommended, as it helps to understand the nature of the process and the interaction effects of the independent variables using the fewest experiments.

For future research, it is recommended to train the algorithms developed in the present work using more experimental data in order to expand the applicability of the models. Moreover, using other machine learning algorithms for modeling the process and comparing the results with those of the present study would be of great value.

Data availability

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Aboubaraka AE, Aboelfetoh EF, Ebeid EZM (2017) Coagulation effectiveness of graphene oxide for the removal of turbidity from raw surface water. Chemosphere 181:738–746

Anadebe VC, Onukwuli OD, Abeng FE, Okafor NA, Ezeugo JO, Okoye CC (2020) Electrochemical-kinetics, MD-simulation and multi-input single-output (MISO) modeling using adaptive neuro-fuzzy inference system (ANFIS) prediction for dexamethasone drug as eco-friendly corrosion inhibitor for mild steel in 2 M HCl electrolyte. J Taiwan Inst Chem Eng 115:251–265

Bhatti MS, Kapoor D, Kalia RK, Reddy AS, Thukral AK (2011) RSM and ANN modeling for electrocoagulation of copper from simulated wastewater: multi objective optimization using genetic algorithm approach. Desalination 274(1–3):74–80

Chen C, Twycross J, Garibaldi JM (2017) A new accuracy measure based on bounded relative error for time series forecasting. PLoS One 12(3):e0174202

Crini G, Lichtfouse E (2019) Advantages and disadvantages of techniques used for wastewater treatment. Environ Chem Lett 17(1):145–155

Ezemagu IG, Ejimofor MI, Menkiti MC, Nwobi-Okoye CC (2021) Modeling and optimization of turbidity removal from produced water using response surface methodology and artificial neural network. S Afr J Chem Eng 35:78–88

Fu Z, Cheng J, Yang M, Batista J, Jiang Y (2020) Wastewater discharge quality prediction using stratified sampling and wavelet de-noising ANFIS model. Comput Electr Eng 85:106701

Geyikçi F, Kılıç E, Çoruh S, Elevli S (2012) Modelling of lead adsorption from industrial sludge leachate on red mud by using RSM and ANN. Chem Eng J 183:53–59

Giwa A, Daer S, Ahmed I, Marpu PR, Hasan SW (2016) Experimental investigation and artificial neural networks ANNs modeling of electrically-enhanced membrane bioreactor for wastewater treatment. J Water Process Eng 11:88–97

Golbaz S, Nabizadeh R, Rafiee M, Yousefi M (2020) Comparative study of RSM and ANN for multiple target optimisation in coagulation/precipitation process of contaminated waters: mechanism and theory. Int J Environ Anal Chem. https://doi.org/10.1080/03067319.2020.1849663

Gupta VK, Carrott PJM, Singh R, Chaudhary M, Kushwaha S (2016) Cellulose: a review as natural, modified and activated carbon adsorbent. Bioresour Technol 216:1066–1076

Hanumanthu JR, Ravindiran G, Subramanian R, Saravanan P (2021) Optimization of process conditions using RSM and ANFIS for the removal of remazol brilliant orange 3R in a packed bed column. J Indian Chem Soc 98(6):100086

Hawari AH, Elamin M, Benamor A, Hasan SW, Ayari MA, Electorowicz M (2017) Fuzzy logic-based model to predict the impact of flow rate and turbidity on the performance of multimedia filters. J Environ Eng (New York) 143(9):04017065

Heddam S, Bermad A, Dechemi N (2012) ANFIS-based modelling for coagulant dosage in drinking water treatment plant: a case study. Environ Monit Assess 184(4):1953–1971

Igwegbe CA, Mohmmadi L, Ahmadi S, Rahdar A, Khadkhodaiy D, Dehghani R, Rahdar S (2019) Modeling of adsorption of methylene blue dye on Ho-CaWO4 nanoparticles using response surface methodology (RSM) and artificial neural network (ANN) techniques. MethodsX 6:1779–1797

Igwegbe CA, Onukwuli OD, Ighalo JO, Menkiti MC (2021) Bio-coagulation-flocculation (BCF) of municipal solid waste leachate using Picralima nitida extract: RSM and ANN modelling. Curr Opin Green Sustain Chem 4:100078

Jang JS (1993) ANFIS: adaptive-network-based fuzzy inference system. IEEE Trans Syst Man Cybern 23(3):665–685

Karthic P, Joseph S, Arun N, Kumaravel S (2013) Optimization of biohydrogen production by Enterobacter species using artificial neural network and response surface methodology. J Renew Sustain Energy 5(3):033104

Kennedy MJ, Gandomi AH, Miller CM (2015) Coagulation modeling using artificial neural networks to predict both turbidity and DOM-PARAFAC component removal. J Environ Chem Eng 3(4):2829–2838

Khomeyrani SFN, Azqhandi MHA, Ghalami-Choobar B (2021) Rapid and efficient ultrasonic assisted adsorption of PNP onto LDH-GO-CNTs: ANFIS, GRNN and RSM modeling, optimization, isotherm, kinetic, and thermodynamic study. J Mol Liq 333:115917

Kim CM, Parnichkun M (2017) Prediction of settled water turbidity and optimal coagulant dosage in drinking water treatment plant using a hybrid model of k-means clustering and adaptive neuro-fuzzy inference system. Appl Water Sci 7(7):3885–3902

Li X, Zecchin AC, Maier HR (2014) Selection of smoothing parameter estimators for general regression neural networks–applications to hydrological and water resources modelling. Environ Model Softw 59:162–186

Li X, Maier HR, Zecchin AC (2015a) Improved PMI-based input variable selection approach for artificial neural network and other data driven environmental and water resource models. Environ Model Softw 65:15–29

Li X, Zecchin AC, Maier HR (2015b) Improving partial mutual information-based input variable selection by consideration of boundary issues associated with bandwidth estimation. Environ Model Softw 71:78–96

Li M, Hu K, Wang J (2021) Study on optimal conditions of flocculation in deinking wastewater treatment. J Eng Appl Sci 68(1):1–14

Maier HR, Jain A, Dandy GC, Sudheer KP (2010) Methods used for the development of neural networks for the prediction of water resource variables in river systems: current status and future directions. Environ Model Softw 25(8):891–909

Maran JP, Priya B (2015) Comparison of response surface methodology and artificial neural network approach towards efficient ultrasound-assisted biodiesel production from muskmelon oil. Ultrason Sonochem 23:192–200

May RJ, Dandy GC, Maier HR, Nixon JB (2008) Application of partial mutual information variable selection to ANN forecasting of water quality in water distribution systems. Environ Model Softw 23(10–11):1289–1299

Metcalf L, Eddy HP (2003) Wastewater engineering–treatment and reuse, 4th edn. McGraw-Hill, New York

Mirri S, Delnevo G, Roccetti M (2020) Is a COVID-19 second wave possible in Emilia-Romagna (Italy)? Forecasting a future outbreak with particulate pollution and machine learning. Computation 8(3):74

Naeem H, Ajmal M, Muntha S, Ambreen J, Siddiq M (2018) Synthesis and characterization of graphene oxide sheets integrated with gold nanoparticles and their applications to adsorptive removal and catalytic reduction of water contaminants. RSC Adv 8(7):3599–3610

Najah A, Elshafie A, Karim OA, Jaffar O (2009) Prediction of Johor River water quality parameters using artificial neural networks. Eur J Res 28(3):422–435

Nasr MS, Moustafa MA, Seif HA, El Kobrosy G (2012) Application of artificial neural network (ANN) for the prediction of EL-AGAMY wastewater treatment plant performance-EGYPT. Alex Eng J 51(1):37–43

Nie Z, Shen F, Xu D, Li Q (2020) An EMD-SVR model for short-term prediction of ship motion using mirror symmetry and SVR algorithms to eliminate EMD boundary effect. Ocean Eng 217:107927

Nnaji P, Anadebe C, Onukwuli OD (2020) Application of experimental design methodology to optimize dye removal by Mucuna sloanei induced coagulation of dye-based wastewater. Desalin Water Treat 198:396–406

Onu CE, Nwabanne JT, Ohale PE, Asadu CO (2021) Comparative analysis of RSM, ANN and ANFIS and the mechanistic modeling in eriochrome black-T dye adsorption using modified clay. S Afr J Chem Eng 36:24–42

Onukwuli OD, Nnaji PC, Menkiti MC, Anadebe VC, Oke EO, Ude CN, Ude CJ, Okafor NA (2021) Dual-purpose optimization of dye-polluted wastewater decontamination using bio-coagulants from multiple processing techniques via neural intelligence algorithm and response surface methodology. J Taiwan Inst Chem Eng 125:372–386

Parveen N, Zaidi S, Danish M (2017) Development of SVR-based model and comparative analysis with MLR and ANN models for predicting the sorption capacity of Cr (VI). Process Saf Environ Prot 107:428–437

Parveen N, Zaidi S, Danish M (2019) Support vector regression (SVR)-based adsorption model for Ni (II) ions removal. Groundw Sustain Dev 9:100232

Pascual-Pañach J, Cugueró-Escofet MÀ, Sànchez-Marrè M (2021) Interoperating data-driven and model-driven techniques for the automated development of intelligent environmental decision support systems. Environ Model Softw 140:105021

Rajendra M, Jena PC, Raheman H (2009) Prediction of optimized pretreatment process parameters for biodiesel production using ANN and GA. Fuel 88(5):868–875

Rezania N, Zonoozi MH, Saadatpour M (2021) Coagulation-flocculation of turbid water using graphene oxide: simulation through response surface methodology and process characterization. Environ Sci Pollut Res 28(12):14812–14827

Rumelhart DE, Hinton GE, Williams RJ (1986) Learning representations by back-propagating errors. Nature 323(6088):533–536

Saadatpour M, Afshar A, Solis SS (2020) Surrogate-based multiperiod, multiobjective reservoir operation optimization for quality and quantity management. J Water Resour Plan Manag 146(8):04020053

Samadi M, Afshar MH, Jabbari E, Sarkardeh H (2021a) Prediction of current-induced scour depth around pile groups using MARS, CART, and ANN approaches. Mar Georesources Geotechnol 39(5):577–588

Samadi M, Sarkardeh H, Jabbari E (2021b) Prediction of the dynamic pressure distribution in hydraulic structures using soft computing methods. Soft Comput 25(5):3873–3888

Sanchez VC, Jachak A, Hurt RH, Kane AB (2012) Biological interactions of graphene-family nanomaterials: an interdisciplinary review. Chem Res Toxicol 25(1):15–34

Sharma A (2000) Seasonal to interannual rainfall probabilistic forecasts for improved water supply management: part 1-a strategy for system predictor identification. J Hydrol 239(1–4):232–239

Taheri M, Alavi Moghaddam MR, Arami M (2013) Techno-economical optimization of Reactive Blue 19 removal by combined electrocoagulation/coagulation process through MOPSO using RSM and ANFIS models. J Environ Manage 128:798–806

Takagi T, Sugeno M (1983) Derivation of fuzzy control rules from human operator’s control actions. IFAC Proceedings Volumes 16(13):55–60

Uzoh CF, Onukwuli OD (2017) Optimal prediction of PKS: RSO modified alkyd resin polycondensation process using discrete-delayed observations, ANN and RSM-GA techniques. J Coat Technol Res 14(3):607–620

Wu W, Dandy GC, Maier HR (2014) Protocol for developing ANN models and its application to the assessment of the quality of the ANN model development process in drinking water quality modelling. Environ Model Softw 54:108–127

Wu ML, Wang YS, Gu JD (2015) Assessment for water quality by artificial neural network in Daya Bay, South China Sea. Ecotoxicology 24(7):1632–1642

Yang Z, Yan H, Yang H, Li H, Li A, Cheng R (2013) Flocculation performance and mechanism of graphene oxide for removal of various contaminants from water. Water Res 47:3037–3046

Zaghloul MS, Hamza RA, Iorhemen OT, Tay JH (2020) Comparison of adaptive neuro-fuzzy inference systems (ANFIS) and support vector regression (SVR) for data-driven modelling of aerobic granular sludge reactors. J Environ Chem Eng 8(3):103742

Zangooei H, Delnavaz M, Asadollahfardi G (2016) Prediction of coagulation and flocculation processes using ANN models and fuzzy regression. Water Sci Technol 74(6):1296–1311

Zhu G, Xiong N, Wang C, Li Z, Hursthouse AS (2021) Application of a new HMW framework derived ANN model for optimization of aquatic dissolved organic matter removal by coagulation. Chemosphere 262:127723

Acknowledgements

The authors gratefully acknowledge the Iran University of Science and Technology (IUST) for its financial support and for providing the research materials and equipment.

Funding

The research was financially supported by Iran University of Science and Technology (IUST) through the research budgets of the university.

Author information

Contributions

Hasani Zonoozi and Saadatpour supervised the study and planned and designed the research; Rezania carried out the tests and chemical analyses; Ghasemi and Hasani Zonoozi prepared the original draft of the manuscript; all authors participated in interpreting the results and in writing, reading, and approving the final version of the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable

Consent for publication

Not applicable

Competing interests

The authors declare no competing interests.

Additional information

Responsible Editor: Marcus Schulz

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Ghasemi, M., Hasani Zonoozi, M., Rezania, N. et al. Predicting coagulation–flocculation process for turbidity removal from water using graphene oxide: a comparative study on ANN, SVR, ANFIS, and RSM models. Environ Sci Pollut Res 29, 72839–72852 (2022). https://doi.org/10.1007/s11356-022-20989-2
