Abstract

Water quality prediction is the basis for the prevention and control of water pollution. In this paper, to address the problem of low prediction accuracy of existing empirical models due to the non-smoothness and nonlinearity of water quality series, a novel water quality forecasting model integrating synchrosqueezed wavelet transform and deep extreme learning machine optimized with the sparrow search algorithm (SWT-SSA-DELM) was proposed. First, the water quality series was denoised by SWT to reduce the non-stationarity and randomness of water quality series. Then, construct DELM by combining ELM and an autoencoder, and an innovative metaheuristic algorithm, SSA, was used to optimize the hyperparameters of the DELM. Finally, the constructed feature vector was used as the input of the DELM, and the proposed water quality prediction model SWT-SSA-DELM was trained and tested with the data sets of Xinchengqiao and Xiaolangdi in the Yellow River Basin, China. Models such as ELM and DELM alone, as well as their improved form based on ensemble learning, long short-term memory network (LSTM), autoregressive integrated moving average (ARIMA) were adopted as comparison models. The results make it evident that the model presented, linking the ability to ensure convergence to the global optima of the SSA with the nonlinear mapping of the DELM, outperforms similar models in terms of predictive performance, with average MAE, MAPE, and RMSE of 0.15, 2.02%, and 0.21 in the test stage, which is 72.82%, 72.88%, and 74.32% lower than the baseline ELM model, respectively.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

The issue of water resources is one of the most important concerns in the world today. Surface water, also called land water, is a water resource including rivers, lakes, and swamps. Most of the water utilized by humans comes from surface water. For example, the tap water for the daily use of every household is essentially surface water processed by water plants. Although China has a top-ranking amount of water resources, the per capita share is small due to its large area and population (Sun et al. 2017). Also, human-induced pollutions caused severe water shortages. Water pollution adds to the insufficient surface water resources and seriously hinders the coordinated development of regional ecological civilization and economical construction (Yang and Chen 2020). Therefore, it is of great significance to carry out high-precision advance prediction of water quality, so as to provide scientific basis for relevant management measures.

At present, water quality prediction methods mainly include two types: one is the traditional prediction methods, that is, the classical mathematics as the theoretical basis, including time series prediction method, regression analysis, Markov model (Li and Song 2015), water quality simulation prediction method, etc.; the second is based on artificial intelligence prediction methods, including gray model, artificial neural network prediction method (Kim and Seo 2015; Chatterjee et al. 2017), support vector machine regression prediction method, etc. In recent years, with the rapid development in the field of machine learning, artificial neural networks with the advantage of nonlinear mapping have become one of the most widely used methods for water quality prediction (Azad et al. 2019; Kadkhodazadeh and Farzin 2021). However, the traditional artificial neural network has some shortcomings, such as poor generalization ability, over fitting, easy to fall into local minimum, and so on (Ding et al. 2011), which limits its application. ELM is a single hidden layer feedforward neural network proposed by Huang et al. (2006). ELM overcomes a host of shortcomings of traditional feedforward neural network algorithms, and gradually becomes a powerful tool in the field of water quality prediction with its advantages of fast learning speed and strong generalization ability (Heddam and Kisi 2017; Fijani et al. 2019).

With the change of water quality data acquisition method from manual to automated collection, the amount of water quality data accumulated over time has become very large and contains more information. Traditional neural networks, including ELM, are no longer prominent in the learning of large data volumes, and deep learning, which originates from artificial neural networks, is more advantageous in processing large data volumes (Jin et al. 2020). In addition, water quality information exists in the form of multivariate time series data sets, and the time series information in water quality information should be considered in water quality prediction. To achieve efficient processing of time series, Huang et al. (2013) pioneered the DELM, also called multilayer extreme learning machine (ML-ELM). DELM is a deep learning-based pattern recognition method formed by expanding on ELM, which first employs multiple extreme learning machine-autoencoders (ELM-AE) for unsupervised pre-training, and then uses the output weights of each ELM-AE to initialize the entire DELM (Zhang et al. 2016).

Compared with other depth methods, DELM has the advantage of fast training, but the input layer weights and biases are randomly generated orthogonal random matrices in the pre-training process of ELM-AE; meanwhile, ELM-AE uses least squares to update parameters during unsupervised pre-training, but only the output layer weights are updated, while the input layer weights and biases are not updated, which leads to the effect of DELM affected by the random input weights and biases of each ELM-AE (Pacheco et al. 2018). To overcome this drawback, domestic and foreign scholars mostly use metaheuristic algorithms to optimize the target parameters (Azad et al. 2018; Farzin et al. 2020), including cat swarm optimization (Chu et al. 2006), fruit fly optimization algorithm (Pan 2012), chicken swarm optimization (Meng et al. 2014), and dragonfly algorithm (Meraihi et al. 2020). SSA, first proposed by Xue and Shen (2020), is a new type of swarm intelligence optimization algorithm. Compared with other algorithms, it has the characteristics of high solution rate and high accuracy; however, so far, there is no research on the optimal selection of DELM hyperparameters based on SSA and its application in water quality prediction.

The presence of abnormal events in the natural environment can cause sudden changes in water quality, and the resulting noisy data can affect the data distribution of the water quality series, which greatly affects the accuracy of the model. In order to reduce the impact of noisy data, the original time series data need to be processed for noise reduction to remove the noise in the data and reduce the interference with the prediction model. The commonly used noise reduction methods include Savitzky-Golay (SG) filtering (Bi et al. 2021), wavelet noise reduction (He et al. 2008), Kalman filtering (Rajakumar et al. 2019), and singular value decomposition (Sun et al. 2021). Among them, the denoising effect of wavelet noise reduction method is influenced by the type of base wavelet and threshold selection method; Kalman filter cannot play a good noise reduction effect on nonlinear data, and it only considers Gaussian white noise, and the noise reduction effect will become worse when the interference environment noise is unknown. The singular value decomposition reconstructs the signal by selecting a suitable singular value threshold to achieve noise suppression, but inappropriate selection of singular value threshold is easy to cause signal distortion. The SWT solves the frequency mixing problem by compressing the wavelet transformed time–frequency map in the frequency direction, while filtering out some wavelet coefficients of small amplitude in the process of squeezing according to the set threshold, which has strong robustness to noise. At present, there are few studies on the application of SWT in water quality data denoising, and its denoising effect on water quality series is worthy of further study (Xue et al. 2018).

To sum up, the goal of this paper is: (1) using SWT to denoise the original data and compare the performance with peer denoising algorithms; (2) constructing the SSA-DELM model and determining the optimal hyperparameters; (3) integrating the SWT-SSA-DELM model and verifying its effectiveness with examples.

According to literature, there are few studies that apply DELM to water quality parameter prediction; therefore, it is worth exploring and analyzing the prediction performance for the improved DELM. Comparing to existing models, our novel method makes the following major contributions to the literature: (1) a new data cleansing technique is established for water quality time series; (2) a novel optimal-hybrid model combining SSA and DELM with high accuracy is proposed. The rest of the paper is organized as follows. In the section ‘Materials and methods,’ the SWT, DELM, and SSA are introduced and the SWT-SSA-DELM hybrid model is proposed. The ‘Results’ and ‘Discussion’ sections contain the results and discussion of SWT-SSA-DELM in practical application. Section ‘Conclusion’ concludes the study and proposes future research plans.

Materials and methods

Data description

The Yellow River Basin is located between 32°N–42°N and 96°E–119°E, with a length of about 1900 km from east to west and a width of about 1100 km from north to south (Fig. 1). The Yellow River is the mother river of the Chinese nation, with a total length of 5464 km, which is the second largest river in China. It originates from the Bayan Har Mountain on the Qinghai Tibet Plateau and flows through nine provinces (regions) including Qinghai, Sichuan, Gansu, Ningxia, Inner Mongolia, Shaanxi, Shanxi, Henan, and Shandong, covering a total area of 7.95 × 105 km2.

The data sets used were collected at the Xinchengqiao and Xiaolangdi gauging stations, from 2010 to 2016 with a week’s interval, which spans a period of 365 weeks. DO was selected as a predictor in the monitoring index.

The monitoring data of DO at Xinchengqiao and Xiaolangdi are depicted in Fig. 2. The statistical analysis results (Table 1) of the original time series of DO concentration show that the data have a wide range of variation, and the standard deviation (SD) implies a high deviation of data from the mean value.

SWT

Daubechies et al. (2011) proposed the SWT method by combining the synchrosqueezed technology with the continuous wavelet transform (CWT), which improves the time–frequency resolution by compressing and rearranging the CWT transform coefficients in the frequency direction.

For any sequence \(s\left(t\right)\in {L}^{2}\left(R\right)\), its CWT is expressed as:

where Ws(a, b) is the wavelet transform coefficient spectrum, a and b are the scale and displacement variables, respectively, and ψ(·) is the wavelet basis function.

According to Plancherel theorem, Ws(a, b) is rewritten as CWT of signal s in frequency domain:

where ξ is the angular frequency and \(\widehat{s}\left(\xi \right)\) is the Fourier transform of the signal s. When the wavelet transform coefficient of any point (a, b) is not equal to 0, the instantaneous frequency ωs(a, b) of signal s is:

Then, the mapping from starting point (b, a) to (b, ωs(a, b)) is established by synchrosqueezed transformation, and Ws(a, b) is transformed from the time-scale plane to the time–frequency scale plane to obtain the new time–frequency spectral map.

In practical application, the frequency variable ω, the scale variable a, and the displacement variable b need to be discretized first. When the frequency ω and scale a are discrete variables, Ws(a, b) can be obtained only when ak-ak-1 = (Δa)k is satisfied at the point ak, and its corresponding synchrosqueezed transformation Ts(a, b) can also be calculated accurately only when the continuous interval [ω-1/2Δω, ω + 1/2Δω] is satisfied. Finally, the expressions of the synchrosqueezed wavelet transform are obtained by combining different conditions:

After synchrosqueezing, the signal can still be reconstructed:

The specific steps can be summarized as follows (Wang 2017):

-

(1)

Obtain the water quality sequence data, perform CWT on the sequence s, and obtain its wavelet transform coefficients Ws in the frequency domain;

-

(2)

When the wavelet transform coefficient is not 0, the corresponding instantaneous frequency ωs can be obtained;

-

(3)

The instantaneous frequency ωs is transformed by synchrosqueezing to obtain its new time–frequency spectrogram.

ELM

Given P different data samples \(\left({x}_{j},{t}_{j}\right)\in {R}^{n}\times {R}^{m}\), for a single hidden layer feedforward neural network with N hidden layer nodes and an activation function of g(x), the output expression is as follows:

where bi represents the randomly generated hidden layer biases; αi denotes the weights connecting the input layer and the hidden layer; βi denotes the weights connecting the hidden layer and the output layer; and j = 1,2, …, N. If g(x) is infinitely differentiable, then the true output value of the input sample can be approximated with zero error, which can be expressed as:

and abbreviated as:

where H is the hidden layer output matrix:

The ELM algorithm steps are (Zounemat-Kermani et al. 2021):

-

(1)

The algorithm is given the training sample \(S=\left\{\left(x_j,t_j\right)\vert x_j\in R^n,t_j\in R^m,j=1,2,...,N\right\}\) and the hidden layer activation function g(x).

-

(2)

The number of hidden layer nodes N is set, and the algorithm randomly generates the input weights αi and biases bi.

-

(3)

The hidden layer output matrix H is calculated.

-

(4)

The output weight β is calculated, β = H*T, H* represents the Moore–Penrose generalized inverse matrix of H.

In order to improve the generalization ability and stability of the algorithm, Huang et al. (2006) added the parameter I/C on the basis of β. The formula is as follows:

Hierarchical autoencoder

AE is a feedforward unsupervised neural network that uses a back-propagation algorithm to make the network output value equal to the network input value. The AE with a single hidden layer contains an encoder network and a decoder network. The structure of AE can also be seen as a simple three-layer neural network structure: an input layer, a hidden layer, and an output layer, where the output layer has the same network nodes as the input layer. Its purpose is to reconstruct the input signal to get the output signal after the coding and decoding process of the neural network, and the ideal situation is that the input signal is the same as the output signal. The specific network structure is shown in Fig. 3.

The encoding process of the AE can be expressed as:

The decoding process of the AE can be expressed as:

where w1, b1 refer to the weights and biases of the encoder, and w2, b2 refer to the weights and biases of the decoder.

The activation function sf of encoder belongs to nonlinear transformation, and sigmoid, tanh, and rule functions are usually used. The activation function sg of the decoder generally adopts sigmoid function or constant function. So the network parameters of the autoencoder are θ = [w1, w2, b1, b2]. When the error between the input signal x and the reconstructed signal y is very small, it means that the neural network of the AE has been trained. The reconstruction error function L(x, y) is used to represent the error of x and y.

If the activation function sg of the decoder is a constant function, then the reconstruction error function can be expressed as:

If the activation function sg of the decoder is a sigmoid function, then the reconstruction error function can be expressed as:

Let the training sample data set be \(S={\left\{{X}^{\left(i\right)}\right\}}_{i=1}^{N}\), then the loss function of AE can be expressed as:

For ELM, the input sample data equals the output sample data, which ensures that it can learn without supervision; the randomly generated weights α and biases b of the hidden layer nodes are orthogonal to each other, which is the basis of ELM-AE. In addition, for ELM-AE, the input data is mapped to the high-dimensional space via the generated orthogonal hidden layer parameters, which can be introduced according to the Johnson–Lindenstrauss lemma:

where α = [α1, α2,…, αN] is the orthogonal weight to connect the input layer and the hidden layer nodes, b = [b1, b2,…, bN] represents the orthogonal hidden layer node biases. The output weight β of ELM-AE performs a learning conversion from the feature space to the input data, and it can be obtained from Eq. (12).

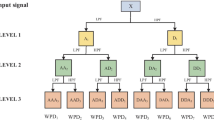

DELM

DELM is a hierarchical neural network superimposed by ELM-AE, and its architecture is presented in Fig. 4. DELM does not need fine-tuning, and its hidden layer parameters are initialized by ELM-AE so that the parameters are orthogonal to each other. The output matrixes of the last hidden layer and output layer are calculated with the least square method. Each layer’s output is then taken as the input of the next layer in turn, and the stopping criterion is the set precision or operation time (Tissera and McDonnell 2016).

Network structure of DELM (a) The first layer weight matrix (b) The i + 1 layer weight matrix (c) The last layer weight matrix (Kasun et al. 2013)

The main execution steps of DELM can be described as follows:

-

(1)

The algorithm is given the training sample \(S=\left\{\left(x_j,t_j\right)\vert x_j\in R^n,t_j\in R^m,j=1,2,...,N\right\}\) and the activation function of the hidden layer g(x).

-

(2)

The network structure of DELM is set to make x = y; the hidden layer is stratified by the ELM-AE so that the parameters are orthogonal to each other.

-

(3)

The hidden layer output matrix H is calculated.

-

(4)

The output weight β is calculated with Formula (17).

-

(5)

Steps (3) and (4) are repeated if 1 ≤ i ≤ y-1, the output matrix of the hidden layer of the ith layer is calculated.

-

(6)

If i = y, the output matrix of the last hidden layer is determined by the least square method.

SSA

In ELM, the selection of input weights α and biases b of each ELM-AE pre-trained by DELM significantly influences the stability and accuracy of DELM. Therefore, SSA is adopted to assist in optimizing the input weights and biases of DELM.

The sparrow search algorithm is inspired by the predatory and anti-predatory behavior of sparrows in biology (Xue and Shen 2020). The sparrow set matrix is as follows.

where n is the number of sparrows, i = (1, 2, … n), d is the dimension of variables.

The matrix of fitness values for sparrows is expressed as follows.

where each value in Fx indicates the fitness value of the individual. Sparrows with better fitness values are given priority to obtain food and act as producers to lead the whole population towards the food source. The position of the producer is updated in the following way (Mao et al. 2021).

where t denotes the number of current iterations, j = (1, 2, … d), \({X}_{i,j}^{t}\) denotes the position of the ith sparrow in the jth dimension. itermax denotes the maximum number of iterations, α belongs to a random number in the range (0, 1), and \({R}_{2}\left({R}_{2}\in \left[0,1\right]\right)\) and \(ST\left(ST\in \left[0.5,1\right]\right)\) represent the warning value and the safety value, respectively. Q is a random number obeying a normal distribution of [0,1]. L denotes a matrix of 1 × d and each element of the matrix is 1. When R2 < ST, this indicates that there are no natural predators around and the finder is able to conduct a wide search for food. If R2 ≥ ST, it means that some sparrows have found natural enemies and the whole population needs to fly quickly to other safe areas.

Except for the producers, the remaining are all scroungers, and the position update formula of the scroungers is as follows.

where Xworst denotes the global worst position, A denotes a 1 × d matrix, each element in the matrix is assigned a random value of 1 or -1, and A+ = AT(AAT)−1. When i > n/2, it means that the ith scrounger with a poor fitness value does not get food and has a very low energy value, so it needs to fly to other places to forage for more energy.

When the population is foraging, a small number of sparrows will be selected for vigilance, and when a natural enemy appears, either the spotter or the scrounger will abandon the current food and fly to a new location. SD (generally taken as 10%–20%) sparrows are randomly selected from the population for early warning behavior each generation. The location updating formula is as follows:

where Xbest denotes the global best position, β is the step control parameter, a normally distributed random number with mean 0 and variance 1, and k is a uniform random number in the range of [-1,1]. Here, fi is the current fitness value of the sparrow. fg and fw are the current global best and worst fitness values, respectively. ε is the minimum constant to avoid 0 in the denominator. When fi > fg, it means that the sparrow is at the edge of the population and is highly vulnerable to natural predators; fi = fg indicates that the sparrow in the middle of the population is aware of the danger of being attacked by natural predators and needs to move closer to other sparrows. k refers to the moving direction of the sparrow, i.e., the step control parameter.

Proposed model descriptions

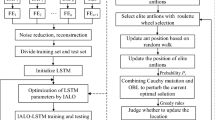

The architecture of the SWT-SSA-DELM model is shown in Fig. 5 and is implemented through the following three steps.

-

(1)

Based on SWT technology for noise reduction of the original time series, the core technology can be outlined as continuous wavelet transform, instantaneous frequency acquisition and compression and recombination.

-

(2)

SSA was introduced into the DELM model to integrate the SSA-DELM hybrid model.

-

(3)

Build the interface between noise reduction data and hybrid prediction model, analyze and verify the effectiveness of SWT-SSA-DELM model through case studies.

Benchmark models

In this paper, models such as ELM and DELM alone, as well as their improved form based on ensemble learning, LSTM, back propagation neural network (BPNN), support vector regression machine (SVR), ARIMA were adopted as comparison models.

LSTM

Recurrent neural network (RNN) refers to the introduction of the concept of time series in the design of network structure. The concept of time series in the network structure design makes it more suitable for the analysis of time series data. However, traditional RNNs suffer from the problem of gradient disappearance with increase in time interval. To solve this long dependence problem, Hochreiter and Schmidhuber (1997) proposed LSTM based on RNN. The LSTM network is a RNN that modifies the structure of the memory unit by transforming the tanh layer in the traditional RNN to a structure that includes a memory unit and a gate mechanism (Song et al. 2021a, b). This mechanism determines how the information in the memory unit should be used and updated, thus alleviating the problem of gradient spreading and explosion.

BPNN

The BP neural network model consists of an input layer, an output layer, and several hidden layers, and the output of the previous layer is the input of the next layer. The network training process is divided into forward propagation and backward propagation (Jia and Zhou 2020). Given an input, it is propagated from the input layer to the hidden layer and then to the output layer for processing by forward propagation, while backward propagation is used to adjust the weights and biases of the model based on error feedback. This calculation ends when the error of the network output is reduced to a set criterion or the training count is reached.

SVR

Support vector machine (SVM) is a machine learning algorithm based on the theory of statistical VC dimension and the theory of structural risk minimization. The core idea is to find the partitioned hyperplane with the maximum interval of heterogeneous support vectors in the data space to serve as the optimal decision boundary. When SVM is applied to the regression problem, it is known as SVR, which uses the hyperplane decision boundary in support vector classification as the regression model.

ARIMA

ARIMA model is one of the methods of time domain analysis of time series. It mainly includes AR model, MA model, ARIMA model, autoregressive conditional heteroskedasticity model (ARCH), and its derivative models. ARIMA model is a method developed on the basis of AR model and MA model, which can smooth the non-stationary time series by difference method, and can overcome the shortcomings of AR model and MA model which can only deal with stationary time series. At the same time, this model has the characteristics of simple structure and few input variables compared with ARCH model and its derivatives, which is one of the most widely used methods for univariate time series data forecasting.

Parameter specification

The selected monitoring data can be abnormal and missing due to instrument failure, signal interference, etc. The original data were first detected for outliers, and the criterion for determining this was the laida criterion (Zhou 2020), the outliers were considered as missing values, and then the missing values were filled using the Lagrange interpolation method.

In this paper, the original data is divided into training and test sets in the ratio of 7:3. The sliding window length is 10, that is, the data of the first 9 weeks is used to predict the next one. Based on experience, the data needs to be normalized for better and faster model training:

where the ith variable is represented by xi; ximin and ximax represent the minimum and maximum values of xi in the training set, respectively.

After the training process of the model is completed, the output of the normalized model is denormalized to the actual values.

After extensive literature search (Farzin and Valikhan Anaraki 2021) and trial-and-error calculations, the parameters of the applied model in this paper are determined. Among them, the DELM model parameters are set as follows: the activation function is sigmoid, 30 hidden layers are set, and the number of nodes in each hidden layer is 20. The SSA parameters for optimizing DELM are set as follows: set the number of sparrows to 10, up to 100 iterations, and the proportion of producers to 20% initially. In this paper, the prediction results of SWT-SSA-DELM are compared with those of some basic algorithms and their improved versions. The base ELM model sets 10 hidden layer nodes, the kernel function of both DELM and SVR is set to radial basis kernel function, SVR generates hyperparameters by fivefold cross-validation, where the kernel parameter g and penalty parameter C both take values in the interval [-10, 10]. The BPNN is a single implied layer structure, with 10 hidden layer nodes, learning rate set to 0.1, maximum 1000 iterations, and target accuracy set to 1e-3.

The initial parameters of the standalone LSTM algorithm are L1 = 20, L2 = 20, Lr = 0.005, and K = 20, where L1 and L2 refer to the number of LSTM units in the first layer and the number of LSTM units in the second layer, Lr refers to the learning rate, and K refers to the number of training iterations. For the three indicators in the ARIMA (p, d, q) structure, based on the Akaike information criterion (AIC) and Bayesian information criterion (BIC), the p and q that minimize the sum of AIC and BIC are used as the parameters of the ARIMA model. In this paper, the range of p and q values is 1 ~ 5, and the range of d values is 0 ~ 2, where p is the autoregressive order, q is the moving average order, and d is the number of differences. For the combined models with ELM, DELM as the substrate, the parameter settings are kept consistent with those of the subcomponents. In addition, the best root mean square error (RMSE) is selected as the fitness function of the applied models.

Performance metrics

The selection of reasonable performance criteria is of utmost importance for assessing model performance. However, there is no universal standard index for evaluating the effectiveness of prediction models. In this paper, the Nash–Sutcliffe efficiency coefficient (NSE), mean absolute error (MAE), RMSE, mean relative error (MAPE), index of agreement (IA), correlation coefficient (CC), determination coefficient (R2), and the width of uncertainty band for the 95% confidence level (± 1.96Se) (Alizadeh et al. 2018) are adopted to statistically evaluate the models applied in this paper. The mathematical representations are cited as follows:

where n is the prediction data size, \({DO}_{i}\) and \({\widehat{DO}}_{i}\) represent the measured and predicted values of DO concentration at time i, and \(\overline{DO }\) denotes the mean value of the actual DO concentration.

In general, the smaller the value of MAE, MAPE, and RMSE, the smaller the error with the actual value. The MAPE-based model performance can be classified into four classes: ‘excellent,’ ‘good,’ ‘reasonable,’ ‘inaccurate,’ with the corresponding MAPE values ranging from < 10%, 10%–20%, 20%–50%, and > 50%, respectively (Lu and Ma 2020). The range of NSE is (-∞,1], NSE = 1 indicates the excellent correspondence between the predicted data and the measured data, and the closer the NSE is to 1, the better the quality of the prediction results. The IA can range from 0 to 1; larger values of both IA and Se represent better prediction performance of the model.

Results

Although the data sets of both Xinchengqiao and Xiaolangdi monitoring points are calculated in this paper, in order to avoid repetition, the main body only focuses on the Xinchengqiao. The detailed introduction of the prediction results of Xiaolangdi is shown in Table 3.

Sensitivity analysis

The values of the parameters of the models applied in this paper have been given in the subsection ‘Parameter specification,’ and this subsection mainly focuses on the details of the parameter settings of the proposed model SWT-SSA-DELM. The parameters of SWT-SSA-DELM mainly include two parts, SSA and DELM, where the parameters of SSA are referred to the literature (Song et al. 2021a, b), and the number of iterations is increased to 100. The important parameters of DELM include hidden layer size or number of hidden layers, and number of nodes in each hidden layer, which are determined by trial and error. In order to find the best values of the number of hidden layers and nodes in each hidden layer, the number of hidden layers is varied from 10 to 60 at intervals of 10, and the number of nodes in each hidden layer is varied from 5 to 30 at intervals of 5. The variation surface of RMSE was obtained by interpolation, as shown in Fig. 6. It can be seen that, for the sample data in Xinchengqiao, the best value of RMSE (0.171) is related to the number of hidden layers and nodes in each hidden layer with values of 30 and 20, respectively, and the RMSE increases by the distance from these values.

Evaluation of noise reduction effect

The time–frequency plots of the raw time series before and after noise reduction are given in Fig. 7. Through local amplification, the denoised data is greatly reduced in the high-frequency range, which is the performance of high-frequency noise component being kicked out.

Figure 8(a) is a comparison diagram of the fast Fourier transform (FFT) spectrum with or without SWT noise reduction. It can be found that as far as the morphology is concerned, the spectral curves of the data with and without SWT processing do not differ much. However, based on the amplified observation of the high-frequency part of the curve, it can be seen that after noise reduction, the distribution of the data on the high-frequency component is significantly weakened, and the noise above 13 Hz basically disappears, and the noise reduction performance is good. Figure 8(b) presents the time series of water quality with and without SWT treatment. Echoing Fig. 8(a), the water quality data after the noise reduction process is smoother and more rounded, and to a certain extent, the outliers in the data are removed.

To test the noise reduction effect of SWT on water quality signals, the results are compared with those obtained by combining EMD, EEMD, CEEMD, CEEMDAN, and VMD with FE, respectively. The basic mode of the denoising algorithm for comparison is to remove the first two terms with higher FE in the decomposed components, and then reconstruct the remaining components. This paper adopts three commonly used noise reduction effect evaluation criteria, namely RMSE, signal-to-noise ratio Rsn (Song et al. 2021a, b), CC, residual energy percentage Esn, and sequence smoothness (Davies 1994). A good noise reduction model usually corresponds to smaller RMSE, larger Rsn, CC, Esn, and smoothness.

Table 2 shows the noise reduction effect of different methods. According to Table 2, the performance of SWT is the best among similar noise reduction models in four evaluation metrics: RMSE, Rsn, CC, and smoothness, and the performance of SWT is second only to CEEMD-FE in Esn.

Figure 9 shows the reconstructed sequence errors of various noise reduction methods, including EMD-FE, EEMD-FE, CEEMD-FE, CEEMDAN-FE, and VMD-FE. The amplitude of sequence error after SWT noise reduction fluctuates in [-0.73,0.60], and its noise reduction performance clearly outperforms the remaining five models. The results are basically consistent with Table 2.

Evaluation of predictive performance

The evolution curve of the fitness value of the applied algorithm is depicted in Fig. 10, where the X-axis represents the number of iterations and the Y-axis represents the fitness value. As the algorithm iterated, the error gradually converged, and the model with SWT preprocessing converged more rapidly. The error convergence curve of the SWT-SSA-DELM model achieves the target accuracy within 10 iterations, and is able to remain in the optimal state after that, which also indicates the superior learning and training ability of the proposed model. It is worth noting that the initial fitness value of the model pre-treated by SWT is lower, which also indirectly verifies the effectiveness of SWT.

Figure 11(a) shows the frequency distribution histogram of applied algorithms. The prediction errors of the hybrid SWT-SSA-DELM proposed in this paper are distributed around the zero point, i.e., the probability density of the interval with smaller errors is larger and has higher prediction accuracy. The ELM, DELM, SSA-ELM, and SSA-DELM models have a wider range of error distribution and higher probability density at larger errors, and the proposed models have better prediction accuracy than the SWT-ELM, SWT-DELM, and SWT-SSA-ELM models.

Figure 11(b) shows the MAPE statistics of applied algorithms. The results show that the mean values of MAPE of the eight methods are less than 10%, which indicates that these models can meet the needs of general water quality prediction accuracy. Among similar models, SWT-SSA-DELM has the smallest MAPE value and even the outliers do not exceed the 10% threshold, and compared with standalone ELM, the MAPE values of SWT-SSA-DELM have decreased by 78.54%, indicating its excellent predictive performance.

To further test and evaluate the feasibility and superiority of the SWT-SSA-DELM, LSTM, ARIMA, SVR, and BPNN are used as the benchmark algorithms in this paper.

The Taylor plot corresponding to the predicted results of each model is presented in Fig. 12. The root mean square deviation (RMSD) values are sorted as follows: SWT-SSA-DELM < SWT-DELM < SSA-DELM < DELM < BPNN < SVR < LSTM < ARIMA; the CC are sorted as follows: SWT-SSA-DELM > SWT-DELM > SSA-DELM > DELM > BPNN > SVR > LSTM > ARIMA. Considering the multiple error evaluation indicators, the SWT-SSA-DELM model has the most superior predictive capability, which can meet the requirements of water quality prediction in the Yellow River Basin.

The correlation between the measured and predicted DO concentrations of applied algorithms is presented in Fig. 13. The black line and red line represent the y = x reference line and regression line, respectively. It can be found that the SWT-SSA-DELM model has the highest fitting degree between the predicted value and the measured value, and the CC value and R2 can reach 0.983 and 0.966, respectively, which are significantly higher than other models. Compared with DELM, which is only stacked by layered autoencoders, the error statistics results of SSA-DELM verify the importance of parameter optimization based on SSA, and through further comparison with SWT-DELM, the necessity of SWT preprocessing is confirmed.

Violin plot is used to display the distribution status and probability density of multiple sets of data. This kind of graph combines the characteristics of the box plot and the density graph, and is mainly used to show the distribution shape of the data. Figure 14(a) shows the violin plot of applied algorithms for model performance. The violin plot patterns of the prediction results of SWT-SSA-DELM are most similar to those of the original measurement data, and the median and frequency distributions are closest to the true values, indicating the best prediction performance. And according to the prediction results of DO, the water quality of two monitoring stations is good according to the Chinese water quality classification standard.

The prediction curves of various methods are presented in Fig. 14(b). The predicted result curves of the algorithms applied in this paper are generally consistent in terms of trend, but there are some differences in details. The prediction results of SWT-SSA-DELM are the closest to the measured values, and the predicted results are largely distributed within the 95% confidence interval of the actual values, indicating a relatively accurate prediction.

Table 3 summarizes the prediction results of different models. The performance of SWT-SSA-DELM has been dramatically improved. Compared with the basic ELM, the SWT-SSA-DELM model reduced by 79.05%, 78.54%, 80.23%, and 77.64% in terms of MAE, MAPE, RMSE, and width of uncertainty band, and increased by 210.33%, 76.16%, 792.56%, and 49.02% in terms of R2, CC, NSE, and IA, respectively. In addition, its improvement for the standalone ELM model was higher than that of the other models, and its error performance was also better than some new improved ELM algorithms (Yu et al. 2018; Liu et al. 2020). Moreover, the predicted water quality of Xiaolangdi hydrological station has the same pattern as that of Xinchengqiao, indicating the stability of the proposed algorithm.

Discussion

Summarizing the above research results, the proposed SWT-SSA-DELM model outperforms other models in terms of prediction performance. It is worth mentioning that the SWT-ELM and SSA-ELM correspond to the ELM processed by data cleaning and meta-inspired optimization algorithm, respectively, and the former has better prediction performance than the latter. Based on the monitoring data of Xinchengqiao, SWT-ELM is 49.38%, 51.22%, 42.01%, and 42.37% lower in MAE, MAPE, RMSE, 1.96Se, respectively, and 32.59%, 15.12%, 34.70%, and 8.75% higher in R2, CC, NSE, and IA, respectively, compared with SSA-ELM. Furthermore, the forecasting results applied at Xinchengqiao for both models are consistent with those at Xiaolangdi station, which also indirectly indicates the importance of data cleaning. Currently, a lot of research work has been invested in the integration of meta-inspired optimization algorithms with basis functions, while the attention to data cleaning, i.e., data preparation, has yet to be increased.

SWT is mainly applied to noise reduction processing of chaotic signals in the fields of microseismic signals, wind power, lightning electric field signals, etc. The chaotic signals in these fields usually have huge data volume and can give full play to the noise reduction performance of SWT. In this paper, we choose the weekly water quality monitoring data of the Yellow River basin; although the monitoring time span reaches 7 years, the data volume of monitoring indicators is only 365, which is much smaller than the data magnitude of the data of other fields mentioned above. Therefore, in future research, a data set with greater monitoring frequency or longer monitoring period will be considered to ensure the quality of the training set. Furthermore, limited by the completeness of the data, only one monitoring indicator, DO, was selected as a predictor in this paper. In order to better verify the robustness and adaptability of the proposed model, multiple monitoring indicators from multiple regions should be selected to participate in the prediction in future studies. In addition, two-phase denoising techniques, i.e., singular spectrum decomposition, CEEMDAN, VMD, and other decomposition techniques combined with SWT, are worth exploring for their noise reduction effectiveness.

In this paper, the sliding window algorithm is employed to implement single-step forward scrolling prediction. Both the sliding window size and the specific forward prediction step have an impact on the model prediction performance; therefore, the determination of these two parameters and the detailed impact on the model error need to be further explored.

The hyperparameters of DELM are optimally selected using SSA in this paper, and although SSA is of great use in this process, it is not without its drawbacks. SSA alone is prone to fall into local extremes in later iterations due to the reduction of population diversity. However, few attempts have been made to optimize and improve the SSA, and the performance of the improved SSA deserves to be evaluated and explored in future studies.

Conclusion

Highly accurate water quality prediction model has a pivotal role in water resources allocation, scheduling, and other decision making. In this paper, an improved hybrid DELM model (SWT-SSA-DELM) for water quality prediction was proposed and tested with hydrological monitoring data, from 2010 to 2016, measured at Xinchengqiao and Xiaolangdi, Yellow River Basin, China. The statistical parameters MAE, MAPE, RMSE, R2, CC, NSE, IA, and the width of uncertainty band for the 95% confidence level were used to assess the performance of the model. The standalone ELM and DELM, as well as their improved form after ensemble learning, LSTM, ARIMA, SVR, and BPNN were used as comparative prediction algorithms.

The statistical results indicated that the water quality prediction model based on SWT-SSA-DELM outperforms peer models. The DELM can extract the in-depth information in the water quality time series, which eliminates the defect that the ordinary ELM model is difficult to capture the high correlation characteristics due to the single hidden layer structure, dramatically improving the accuracy of the prediction model.

Although the performance of the model put forward in this paper is acceptable, there is still room for improvement in its performance: (1) choose a more complete data set, and two-phase denoising techniques can be used to study the noise reduction performance; (2) study the effects of the sliding window size and the forward prediction step on prediction accuracy; (3) develop and improve the existing SSA algorithm, one idea is to use hybrid sine cosine algorithm and Lévy flight algorithm to avoid SSA falling into local optimum.

Data Availability

All data generated or analyzed during this study are included in this published article.

References

Alizadeh MJ, Kavianpour MR, Danesh M, Adolf J, Shamshirband S, Chau KW (2018) Effect of river flow on the quality of estuarine and coastal waters using machine learning models. Eng Appl Comput Fluid Mech 12(1):810–823. https://doi.org/10.1080/19942060.2018.1528480

Azad A, Karami H, Farzin S, Saeedian A, Kashi H, Sayyahi F (2018) Prediction of water quality parameters using ANFIS optimized by intelligence algorithms (case study: Gorganrood River). KSCE J Civ Eng 22(7):2206–2213. https://doi.org/10.1007/s12205-017-1703-6

Azad A, Karami H, Farzin S, Mousavi SF, Kisi O (2019) Modeling river water quality parameters using modified adaptive neuro fuzzy inference system. Water Sci Eng 12(1):45–54. https://doi.org/10.1016/j.wse.2018.11.001

Bi J, Lin Y, Dong Q, Yuan H, Zhou M (2021) Large-scale water quality prediction with integrated deep neural network. Inf Sci 571:191–205. https://doi.org/10.1016/j.ins.2021.04.057

Chatterjee S, Sarkar S, Dey N, Ashour AS, Sen S, Hassanien AE (2017) Application of cuckoo search in water quality prediction using artificial neural network. Int J Comput Intellig Stud 6(2–3):229–244. https://doi.org/10.1504/IJCISTUDIES.2017.089054

Chu SC, Tsai PW, Pan JS (2006) Cat swarm optimization. Pacific Rim international conference on artificial intelligence. Springer, Berlin, pp 854–858. https://doi.org/10.1007/978-3-540-36668-3_94

Daubechies I, Lu J, Wu HT (2011) Synchrosqueezed wavelet transforms: An empirical mode decomposition-like tool. Appl Comput Harmon Anal 30(2):243–261. https://doi.org/10.1016/j.acha.2010.08.002

Davies M (1994) Noise reduction schemes for chaotic time series. Physica D 79(2–4):174–192. https://doi.org/10.1016/S0167-2789(05)80005-3

Ding S, Su C, Yu J (2011) An optimizing BP neural network algorithm based on genetic algorithm. Artif Intell Rev 36(2):153–162. https://doi.org/10.1007/s10462-011-9208-z

Farzin S, Valikhan Anaraki M (2021) Modeling and predicting suspended sediment load under climate change conditions: a new hybridization strategy. J Water Clim Change. https://doi.org/10.2166/wcc.2021.317

Farzin S, Chianeh FN, Anaraki MV, Mahmoudian F (2020) Introducing a framework for modeling of drug electrochemical removal from wastewater based on data mining algorithms, scatter interpolation method, and multi criteria decision analysis (DID). J Clean Prod 266:122075. https://doi.org/10.1016/j.jclepro.2020.122075

Fijani E, Barzegar R, Deo R, Tziritis E, Skordas K (2019) Design and implementation of a hybrid model based on two-layer decomposition method coupled with extreme learning machines to support real-time environmental monitoring of water quality parameters. Sci Total Environ 648:839–853. https://doi.org/10.1016/j.scitotenv.2018.08.221

He L, Huang GH, Zeng GM, Lu HW (2008) Wavelet-based multiresolution analysis for data cleaning and its application to water quality management systems. Expert Syst Appl 35(3):1301–1310. https://doi.org/10.1016/j.eswa.2007.08.009

Heddam S, Kisi O (2017) Extreme learning machines: a new approach for modeling dissolved oxygen (DO) concentration with and without water quality variables as predictors. Environ Sci Pollut Res 24(20):16702–16724. https://doi.org/10.1007/s11356-017-9283-z

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780. https://doi.org/10.1162/neco.1997.9.8.1735

Huang G, Zhu Q, Siew CK (2006) Extreme learning machine: theory and applications. Neurocomputing 70(1–3):489–501. https://doi.org/10.1016/j.neucom.2005.12.126

Huang GB, Kasun LLC, Zhou H, Vong CM (2013) Representational learning with extreme learning machine for big data. IEEE Intell Syst 28(6):31–34

Jia H, Zhou X (2020) Water Quality Prediction Method Based on LSTM-BP. 2020 12th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), vol 1. IEEE, New Jersey, pp 27–30. https://doi.org/10.1109/IHMSC49165.2020.00014

Jin J, Jiang P, Li L, Xu H, Lin G (2020) Water quality monitoring at a virtual watershed monitoring station using a modified deep extreme learning machine. Hydrol Sci J 65(3):415–426. https://doi.org/10.1080/02626667.2019.1699245

Kadkhodazadeh M, Farzin S (2021) A Novel LSSVM Model Integrated with GBO Algorithm to Assessment of Water Quality Parameters. Water Resour Manage 35:3939–3968. https://doi.org/10.1007/s11269-021-02913-4

Kasun LLC, Zhou H, Huang G, Vong CM (2013) Representational learning with ELMs for big data. IEEE Intell Syst 28(6):31–34

Kim SE, Seo IW (2015) Artificial Neural Network ensemble modeling with conjunctive data clustering for water quality prediction in rivers. J Hydro-Environ Res 9(3):325–339. https://doi.org/10.1016/j.jher.2014.09.006

Li X, Song J (2015) A New ANN-Markov chain methodology for water quality prediction. 2015 International Joint Conference on Neural Networks (IJCNN). IEEE, New Jersey, pp 1–6. https://doi.org/10.1109/IJCNN.2015.7280320

Liu H, Zhang Y, Zhang H (2020) Prediction of effluent quality in papermaking wastewater treatment processes using dynamic kernel-based extreme learning machine. Process Biochem 97:72–79. https://doi.org/10.1016/j.procbio.2020.06.020

Lu H, Ma X (2020) Hybrid decision tree-based machine learning models for short-term water quality prediction. Chemosphere 249:126169. https://doi.org/10.1016/j.chemosphere.2020.126169

Mao Q, Zhang Q, Mao C, Bai J (2021) Mixing Sine and Cosine Algorithm with Lévy Flying Chaotic Sparrow Algorithm Journal of Shanxi University (Natural Science Edition) (in Chinese) https://doi.org/10.13451/j.sxu.ns.2020135

Meng X, Liu Y, Gao X, Zhang H (2014) A new bio-inspired algorithm: chicken swarm optimization. International conference in swarm intelligence. Springer, Cham, pp 86–94. https://doi.org/10.1007/978-3-319-11857-4_10

Meraihi Y, Ramdane-Cherif A, Acheli D, Mahseur M (2020) Dragonfly algorithm: a comprehensive review and applications. Neural Comput Appl 32:16625–16646. https://doi.org/10.1007/s00521-020-04866-y

Pacheco AG, Krohling RA, da Silva CA (2018) Restricted Boltzmann machine to determine the input weights for extreme learning machines. Expert Syst Appl 96:77–85. https://doi.org/10.1016/j.eswa.2017.11.054

Pan WT (2012) A new fruit fly optimization algorithm: taking the financial distress model as an example. Knowl-Based Syst 26:69–74. https://doi.org/10.1016/j.knosys.2011.07.001

Rajakumar AG, Mohan Kumar MS, Amrutur B, Kapelan Z (2019) Real-time water quality modeling with ensemble Kalman filter for state and parameter estimation in water distribution networks. J Water Resour Plan Manag 145(11):04019049. https://doi.org/10.1061/(ASCE)WR.1943-5452.0001118

Song C, Yao L, Hua C, Ni Q (2021a) A water quality prediction model based on variational mode decomposition and the least squares support vector machine optimized by the sparrow search algorithm (VMD-SSA-LSSVM) of the Yangtze River, China. Environ Monit Assess 193(6):1–17. https://doi.org/10.1007/s10661-021-09127-6

Song C, Yao L, Hua C, Ni Q (2021b) A novel hybrid model for water quality prediction based on synchrosqueezed wavelet transform technique and improved long short-term memory. J Hydrol 603:126879. https://doi.org/10.1016/j.jhydrol.2021.126879

Sun Y, Liu N, Shang J, Zhang J (2017) Sustainable utilization of water resources in China: A system dynamics model. J Clean Prod 142:613–625. https://doi.org/10.1016/j.jclepro.2016.07.110

Sun Z, Chang NB, Chen CF, Mostafiz C, Gao W (2021) Ensemble Learning via Higher Order Singular Value Decomposition for Integrating Data and Classifier Fusion in Water Quality Monitoring. IEEE J Select Top Appl Earth Observ Remote Sens 14:3345–3360. https://doi.org/10.1109/JSTARS.2021.3055798

Tissera MD, McDonnell MD (2016) Deep extreme learning machines: supervised autoencoding architecture for classification. Neurocomputing 174:42–49. https://doi.org/10.1016/j.neucom.2015.03.110

Wang C (2017) Research on fault diagnosis method for rotating machinery based on synchrosqueezing wavelet transform. Zhejiang Normal University, Jinhua

Xue J, Shen B (2020) A novel swarm intelligence optimization approach: sparrow search algorithm. Syst Sci Cont Eng 8(1):22–34. https://doi.org/10.1080/21642583.2019.1708830

Xue YJ, Cao JX, Zhang GL, Cheng GH, Chen H (2018) Application of synchrosqueezed wavelet transforms to estimate the reservoir fluid mobility. Geophys Prospect 66(7):1358–1371. https://doi.org/10.1111/1365-2478.12622

Yang X, Chen X (2020) Using a combined evaluation method to assess water resources sustainable utilization in Fujian Province, China. Environ Dev Sustain 23:8047–8061. https://doi.org/10.1007/s10668-020-00939-z

Yu T, Yang S, Bai Y, Gao X, Li C (2018) Inlet water quality forecasting of wastewater treatment based on kernel principal component analysis and an extreme learning machine. Water 10(7):873. https://doi.org/10.3390/w10070873

Zhang N, Ding S, Shi Z (2016) Denoising Laplacian multi-layer extreme learning machine. Neurocomputing 171:1066–1074. https://doi.org/10.1016/j.neucom.2015.07.058

Zhou Y (2020) Real-time probabilistic forecasting of river water quality under data missing situation: Deep learning plus post-processing techniques. J Hydrol 589:125164. https://doi.org/10.1016/j.jhydrol.2020.125164

Zounemat-Kermani M, Alizamir M, Fadaee M, Sankaran Namboothiri A, Shiri J (2021) Online sequential extreme learning machine in river water quality (turbidity) prediction: a comparative study on different data mining approaches. Water Environ J 35(1):335–348. https://doi.org/10.1111/wej.12630

Acknowledgements

We sincerely thank the anonymous reviewers for their time and effort devoted to improving the manuscript.

Author information

Authors and Affiliations

Contributions

SC was involved in methodology, writing—original draft, writing—review & editing, software, data curation. YL helped in writing—review & editing, supervision. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Responsible Editor: Marcus Schulz

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Highlights

• A novel optimal-hybrid model is developed for water quality forecasting.

• Deep extreme learning machine (DELM) is employed to forecast water quality parameters.

• A new idea of synchrosqueezed wavelet transform method is proposed to denoise water quality series.

Rights and permissions

About this article

Cite this article

Song, C., Yao, L. Application of artificial intelligence based on synchrosqueezed wavelet transform and improved deep extreme learning machine in water quality prediction. Environ Sci Pollut Res 29, 38066–38082 (2022). https://doi.org/10.1007/s11356-022-18757-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11356-022-18757-3