Abstract

Cognitive diagnosis models (CDMs) are useful statistical tools in cognitive diagnosis assessment. However, like many other latent variable models, CDMs often suffer from the non-identifiability issue. This work gives the sufficient and necessary condition for identifiability of the basic DINA model, which not only addresses the open problem in Xu and Zhang (Psychometrika 81:625–649, 2016) on the minimal requirement for identifiability, but also sheds light on the study of more general CDMs, which often cover DINA as a submodel. Moreover, we show that the identifiability condition ensures the consistent estimation of the model parameters. From a practical perspective, the identifiability condition depends only on the Q-matrix structure and is easy to verify, which provides a guideline for designing statistically valid and estimable cognitive diagnosis tests.

1 Introduction

Cognitive diagnosis models (CDMs), also called diagnostic classification models (DCMs), are useful statistical tools in cognitive diagnosis assessment, which aims to achieve a fine-grained decision on an individual’s latent attributes, such as skills, knowledge, personality traits, or psychological disorders, based on his or her observed responses to some designed diagnostic items. CDMs belong to the more general family of restricted latent class models in the statistics literature; they model the complex relationships among the items, the latent attributes, and the item responses for a set of items and a sample of respondents. Various CDMs have been developed with different cognitive diagnosis assumptions, among which the Deterministic Input Noisy output “And” gate model (DINA; Junker and Sijtsma, 2001) is a popular one and serves as a basic submodel for more general CDMs such as the general diagnostic model (von Davier, 2005), the log linear CDM (LCDM; Henson et al., 2009), and the generalized DINA model (GDINA; de la Torre, 2011).

To achieve reliable and valid diagnostic assessment, a fundamental issue is to ensure that the CDMs applied in the cognitive diagnosis are statistically identifiable, which is a necessity for consistent estimation of the model parameters of interest and valid statistical inferences. The study of identifiability in statistics and psychometrics has a long history (Koopmans and Reiersøl, 1950; McHugh, 1956; Rothenberg, 1971; Goodman, 1974; Gabrielsen, 1978). The identifiability issue of the CDMs has also long been a concern, as noted in the literature (DiBello et al., 1995; Maris and Bechger, 2009; Tatsuoka, 2009; DeCarlo, 2011; von Davier, 2014). In practice, however, there is often a tendency to overlook the issue due to the lack of easily verifiable identifiability conditions. Recently, there have been several studies on the identifiability of the CDMs, including the DINA model (e.g., Xu and Zhang, 2016) and general models (e.g., Xu, 2017; Xu and Shang, 2017; Fang et al., 2017).

However, the existing works mostly focus on developing sufficient conditions for model identifiability, which may impose stronger-than-needed, or sometimes even impractical, constraints on designing identifiable cognitive diagnostic tests. What the minimal requirement is, i.e., the sufficient and necessary conditions for the models to be identifiable, remains an open problem in the literature. In particular, for the DINA model, Xu and Zhang (2016) proposed a set of sufficient conditions and a set of necessary conditions for the identifiability of the slipping, guessing and population proportion parameters. However, as pointed out by the authors, there is a gap between the two sets of conditions; see Xu and Zhang (2016) for examples and discussions.

This paper addresses this open problem by developing the sufficient and necessary condition for the identifiability of the DINA model. Furthermore, we show that the identifiability condition ensures the statistical consistency of the maximum likelihood estimators of the model parameters. The proposed condition not only guarantees identifiability, but also gives the minimal requirement that the DINA model needs to meet in order to be identifiable. The identifiability result can be directly applied to the DINO model (Templin and Henson, 2006) through the duality of the DINA and DINO models. For general CDMs such as the LCDM and GDINA models, since the DINA model can be considered a submodel of them, the proposed condition also serves as a necessary requirement. From a practical perspective, the sufficient and necessary condition depends only on the Q-matrix structure, and such an easily checkable condition provides a practical guideline for designing statistically valid and estimable cognitive tests.

The rest of the paper is organized as follows. Section 2 introduces the basic model setup and the definition of identifiability. Section 3 states the identifiability results and includes several illustrating examples. Section 4 gives a further discussion, and the Appendix provides the proof of the main results.

2 Model Setup and Identifiability

We consider the setting of a cognitive diagnosis test with binary responses. The test contains J items to measure K unobserved latent attributes. The latent attributes are assumed to be binary for diagnostic purposes, and a complete configuration of the K latent attributes is called an attribute profile, which is denoted by a K-dimensional binary vector \({\varvec{\alpha }}=(\alpha _1,\ldots ,\alpha _K)^\top \), where \(\alpha _k\in \{0,1\}\) represents deficiency or mastery of the kth attribute. The underlying cognitive structure, i.e., the relationship between the items and the attributes, is described by the so-called Q-matrix, originally proposed by Tatsuoka (1983). A Q-matrix Q is a \(J\times K\) binary matrix with entries \(q_{j,k}\in \{0,1\}\) indicating the absence or presence of the dependence of the jth item on the kth attribute. The jth row vector \({\varvec{q}}_j\) of the Q-matrix, also called the \({\varvec{q}}\)-vector, corresponds to the attribute requirements of item j.

A subject’s attribute profile is assumed to follow a categorical distribution with population proportion parameters \({\varvec{p}}:= (p_{{\varvec{\alpha }}}:{\varvec{\alpha }}\in \{0,1\}^K)^\top \), where \(p_{{\varvec{\alpha }}}\) is the proportion of attribute profile \({\varvec{\alpha }}\) in the population and \({\varvec{p}}\) satisfies \(\sum _{{\varvec{\alpha }}\in \{0,1\}^K}p_{{\varvec{\alpha }}}=1\) and \(p_{{\varvec{\alpha }}}>0\) for any \({\varvec{\alpha }}\in \{0,1\}^K\). For an attribute profile \({\varvec{\alpha }}\) and a \({\varvec{q}}\)-vector \({\varvec{q}}_j\), we write \({\varvec{\alpha }}\succeq {\varvec{q}}_j\) if \({\varvec{\alpha }}\) masters all the required attributes of item j, i.e., \(\alpha _k \ge q_{j,k}\) for any \(k \in \{1, \ldots , K\}\), and write \({\varvec{\alpha }}\nsucceq {\varvec{q}}_j\) if there exists some k such that \(\alpha _k < q_{j,k}.\) Similarly, we define the operations \(\preceq \) and \(\npreceq \).

A subject provides a J-dimensional binary response vector \({\varvec{R}}=(R_1,\ldots ,R_J)^\top \in \{0,1\}^J\) to these J items. The DINA model assumes a conjunctive relationship among attributes, which means it is necessary to master all the attributes required by an item to be capable of providing a positive response to it. Moreover, mastering additional unnecessary attributes does not compensate for the lack of the necessary attributes. Specifically, for any item j and attribute profile \({\varvec{\alpha }}\), we define the binary ideal response \(\xi _{j,{\varvec{\alpha }}} = I({\varvec{\alpha }}\succeq {\varvec{q}}_j)\). If there is no uncertainty in the response, then a subject with attribute profile \({\varvec{\alpha }}\) will have response \(R_j=\xi _{j,{\varvec{\alpha }}} \) to item j. The uncertainty of the responses is incorporated at the item level, using slipping and guessing parameters. For each item j, the slipping parameter \(s_j := P(R_j=0\mid \xi _{j,{\varvec{\alpha }}} =1)\) denotes the probability of a subject giving a negative response despite mastering all the necessary skills, while the guessing parameter \(g_j := P(R_j=1\mid \xi _{j,{\varvec{\alpha }}} =0)\) denotes the probability of giving a positive response despite deficiency of some necessary skills.
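As a minimal sketch (our illustration, not code from the paper), the ideal responses \(\xi _{j,{\varvec{\alpha }}}\) for all items and profiles can be computed at once by broadcasting the componentwise comparison \({\varvec{\alpha }}\succeq {\varvec{q}}_j\). The helper names `attribute_profiles` and `ideal_responses` are hypothetical, and profiles are enumerated in lexicographic order, which differs from the ordering used in the Appendix.

```python
import itertools

import numpy as np


def attribute_profiles(K):
    """All 2^K binary attribute profiles, one per row, in lexicographic order."""
    return np.array(list(itertools.product([0, 1], repeat=K)), dtype=int)


def ideal_responses(Q, profiles):
    """Return xi with xi[j, a] = I(alpha_a >= q_j componentwise).

    An entry is 1 only if profile a masters every attribute item j requires
    (the conjunctive DINA rule); extra attributes do not change the value.
    """
    Q = np.asarray(Q, dtype=int)
    # Compare (J, 1, K) with (1, 2^K, K), then require all K attributes.
    return np.all(profiles[None, :, :] >= Q[:, None, :], axis=2).astype(int)


# Hypothetical example with J = 3 items and K = 2 attributes.
Q_demo = np.array([[1, 0], [0, 1], [1, 1]])
A_demo = attribute_profiles(2)      # rows: (0,0), (0,1), (1,0), (1,1)
xi_demo = ideal_responses(Q_demo, A_demo)
print(xi_demo)
```

An item with a zero \({\varvec{q}}\)-vector gets a row of ones, matching the remark that follows.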

Note that if some item j does not require any of the attributes, namely \({\varvec{q}}_j\) equals the zero vector \(\mathbf {0}\), then \(\xi _{j,{\varvec{\alpha }}}=1\) for all attribute profiles \({\varvec{\alpha }}\in \{0,1\}^K\). Therefore, in this special case, the guessing parameter is not needed in the specification of the DINA model. The DINA model item parameters then include slipping parameters \({\varvec{s}}= (s_1,\ldots ,s_J)^\top \) and guessing parameters \({\varvec{g}}^{-} = (g_j: \forall j \text{ such } \text{ that } {\varvec{q}}_j\ne \mathbf {0} )^\top \). We assume \(1-s_j>g_j\) for any item j with \({\varvec{q}}_j\ne \mathbf {0}\). For notational simplicity, in the following discussion we define the guessing parameter of any item with \({\varvec{q}}_j=\mathbf {0}\) to be a known value \(g_j\equiv 0\), and write \({\varvec{g}}=(g_1,\ldots , g_J)^\top \).

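Conditional on the attribute profile \({\varvec{\alpha }}\), the DINA model further assumes a subject’s responses are independent. Therefore, the probability mass function of a subject’s response vector \({\varvec{R}}= (R_1,\ldots ,R_J)^\top \) is

\[ P({\varvec{R}}={\varvec{r}}\mid Q,{\varvec{s}},{\varvec{g}},{\varvec{p}}) = \sum _{{\varvec{\alpha }}\in \{0,1\}^K} p_{{\varvec{\alpha }}} \prod _{j=1}^J \Big [(1-s_j)^{\xi _{j,{\varvec{\alpha }}}}\, g_j^{1-\xi _{j,{\varvec{\alpha }}}}\Big ]^{r_j} \Big [s_j^{\xi _{j,{\varvec{\alpha }}}}\,(1-g_j)^{1-\xi _{j,{\varvec{\alpha }}}}\Big ]^{1-r_j}, \tag{1} \]
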
where \({\varvec{r}}= (r_1,\ldots ,r_J)^\top \in \{0,1\}^J\).
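A direct transcription of (1) may help make the mixture structure concrete. The sketch below uses a hypothetical helper, `dina_pmf`, and illustrative parameter values that are not taken from the paper; it sums the latent-class mixture over profiles and, as a sanity check, the probabilities over all \(2^J\) response patterns sum to one.

```python
import itertools

import numpy as np


def dina_pmf(r, Q, s, g, p_by_profile):
    """P(R = r | Q, s, g, p) as in equation (1).

    p_by_profile maps each attribute profile (tuple of 0/1) to p_alpha.
    """
    Q = np.asarray(Q, dtype=int)
    r = np.asarray(r)
    total = 0.0
    for alpha, p_alpha in p_by_profile.items():
        xi = np.all(np.asarray(alpha) >= Q, axis=1)          # ideal responses, length J
        prob_one = np.where(xi, 1.0 - s, g)                  # P(R_j = 1 | alpha)
        # Conditional independence: multiply the J item-level probabilities.
        total += p_alpha * np.prod(np.where(r == 1, prob_one, 1.0 - prob_one))
    return total


# Illustrative values only (not from the paper).
Q_demo = np.array([[1, 0], [0, 1], [1, 1]])
s_demo = np.array([0.1, 0.2, 0.15])
g_demo = np.array([0.2, 0.1, 0.25])
p_demo = {(0, 0): 0.1, (0, 1): 0.2, (1, 0): 0.3, (1, 1): 0.4}

total = sum(dina_pmf(r, Q_demo, s_demo, g_demo, p_demo)
            for r in itertools.product([0, 1], repeat=3))
print(total)
```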

Suppose we have N independent subjects, indexed by \(i=1,\ldots , N\), in a cognitive diagnostic assessment. We denote their response vectors by \(\{{\varvec{R}}_i: i=1,\ldots ,N\}\), which are our observed data. The DINA model parameters that we aim to estimate from the response data are \(({\varvec{s}},{\varvec{g}},{\varvec{p}})\), based on which we can further evaluate the subjects’ attribute profiles from their “posterior distributions.” To consistently estimate \(({\varvec{s}},{\varvec{g}},{\varvec{p}})\), we need them to be identifiable. Following the definition in the statistics literature (e.g., Casella and Berger, 2002), we say a set of parameters in the parameter space B for a family of probability density (mass) functions \(\{f(~\cdot \mid \beta ):\beta \in B\}\) is identifiable if distinct values of \(\beta \) correspond to distinct \(f(~\cdot \mid \beta )\) functions, i.e., for any \(\beta \) there is no \(\tilde{\beta }\in B\backslash \{\beta \}\) such that \(f(~\cdot \mid \beta ) \equiv f(~\cdot \mid \tilde{\beta })\). In the context of the DINA model, we have the following definition.

Definition 1

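We say the DINA model parameters are identifiable if there is no \((\bar{{\varvec{s}}},\bar{{\varvec{g}}},\bar{{\varvec{p}}})\ne ({\varvec{s}},{\varvec{g}},{\varvec{p}})\) such that

\[ P({\varvec{R}}={\varvec{r}}\mid Q,\bar{{\varvec{s}}},\bar{{\varvec{g}}},\bar{{\varvec{p}}}) = P({\varvec{R}}={\varvec{r}}\mid Q,{\varvec{s}},{\varvec{g}},{\varvec{p}}) \quad \text {for all } {\varvec{r}}\in \{0,1\}^J. \tag{2} \]
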
Remark 1

Identifiability of latent class models is a well-established concept in the literature (e.g., McHugh, 1956; Goodman, 1974). Recent studies on the identifiability of the CDMs and the restricted latent class models include Liu et al. (2013), Chen et al. (2015), Xu and Zhang (2016), Xu (2017), and Xu and Shang (2017). However, as discussed in the introduction, most of them focus on developing sufficient conditions, while the sufficient and necessary conditions are still unknown.

3 Main Result

We begin with the important concept of completeness of a Q-matrix, introduced by Chiu et al. (2009). A Q-matrix is said to be complete if it can differentiate all latent attribute profiles, in the sense that under the Q-matrix, different attribute profiles have different response distributions. For the DINA model, completeness of the Q-matrix means that \(\{{\varvec{e}}_k^\top :k=1,\ldots ,K\}\subseteq \{{\varvec{q}}_j:j=1,\ldots ,J\}\); equivalently, for each attribute there is some item that requires that attribute and only that attribute. Up to row permutations, a complete Q-matrix under the DINA model contains a \(K\times K\) identity matrix. Under the DINA model, completeness of the Q-matrix is necessary for identifiability of the population proportion parameters \({\varvec{p}}\) (Xu and Zhang, 2016).
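Completeness can be checked either directly (every \({\varvec{e}}_k^\top \) appears as a row) or through its defining property that distinct profiles are told apart. Under DINA the two agree at the level of ideal responses: if \({\varvec{e}}_k^\top \) is missing, the profiles \(\mathbf {0}\) and \({\varvec{e}}_k\) have the same ideal response to every item, while if all \({\varvec{e}}_k^\top \) are present, those K items reproduce \({\varvec{\alpha }}\) itself. The sketch below, with hypothetical function names, checks both.

```python
import itertools

import numpy as np


def is_complete(Q):
    """DINA completeness: every standard basis row e_k^T is a q-vector of Q."""
    Q = np.asarray(Q, dtype=int)
    K = Q.shape[1]
    rows = {tuple(int(v) for v in row) for row in Q}
    return all(tuple(int(v) for v in np.eye(K, dtype=int)[k]) in rows for k in range(K))


def profiles_separated(Q):
    """True if the 2^K attribute profiles have pairwise distinct ideal responses."""
    Q = np.asarray(Q, dtype=int)
    A = np.array(list(itertools.product([0, 1], repeat=Q.shape[1])))
    xi = np.all(A[:, None, :] >= Q[None, :, :], axis=2)     # (2^K, J)
    return len({tuple(bool(v) for v in row) for row in xi}) == len(A)


# Hypothetical Q-matrices with K = 2.
Q_complete = [[1, 0], [0, 1], [1, 1]]
Q_incomplete = [[1, 0], [1, 1], [1, 1]]      # no item measures attribute 2 alone
for Q in (Q_complete, Q_incomplete):
    print(is_complete(Q), profiles_separated(Q))
```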

Besides completeness, Xu and Zhang (2016) also specified an additional necessary condition for identifiability: each attribute needs to be required by at least three items. For ease of discussion, we summarize the set of necessary conditions in Xu and Zhang (2016) as follows.

Condition 1

(i) The Q-matrix is complete under the DINA model and, without loss of generality, we assume the Q-matrix takes the following form:

\[ Q = \begin{pmatrix} \mathcal {I}_K \\ Q^* \end{pmatrix}, \tag{3} \]

where \(\mathcal{I}_K\) denotes the \(K\times K\) identity matrix and \(Q^*\) is a \((J-K)\times K\) submatrix of Q.

(ii) Each of the K attributes is required by at least three items.

Xu and Zhang (2016) recognized that Condition 1, though necessary, is not sufficient. To establish identifiability, the authors also proposed a set of sufficient conditions, which, however, are not necessary. For instance, the Q-matrix in (4), given on page 633 of Xu and Zhang (2016), does not satisfy their sufficient condition but still gives an identifiable model.

In particular, their sufficient condition C4 requires that for each \(k\in \{1,\ldots , K\}\), there exist two subsets \(S_k^+\) and \(S_k^-\) of the items in \(Q^*\) (not necessarily nonempty or disjoint) such that \(S_k^+\) and \(S_k^-\) have identical attribute requirements except in the kth attribute, which is required by an item in \(S_k^+\) but not by any item in \(S_k^-\). However, the first attribute in (4) does not satisfy this condition. Q-matrices of this kind, which violate C4 but still give identifiable models, are not rare and can be easily constructed, as in (5).

What the minimal requirement on the Q-matrix is for the model to be identifiable has been an open problem in the literature. This paper solves this problem and shows that Condition 1 together with the following Condition 2 is sufficient and necessary for the identifiability of the DINA model parameters.

Condition 2

Any two different columns of the submatrix \(Q^*\) in (3) are distinct.

We have the following identifiability result.

Theorem 1

(Sufficient and Necessary Condition) Conditions 1 and 2 are sufficient and necessary for the identifiability of all the DINA model parameters.

Remark 2

From the model construction, when some items require none of the attributes, the DINA model parameters are \(({\varvec{s}},{\varvec{p}})\) and \({\varvec{g}}^{-} = (g_j: \forall j \text{ such } \text{ that } {\varvec{q}}_j\ne \mathbf {0} )^\top \). Theorem 1 also applies to this special case: under a Q-matrix containing some all-zero \({\varvec{q}}\)-vectors, the proposed conditions remain sufficient and necessary for the identifiability of \(({\varvec{s}},{\varvec{g}}^{-},{\varvec{p}})\). See Proposition 2 in the Appendix for more details.
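Because Conditions 1 and 2 depend only on Q, they can be checked mechanically. The following is a minimal sketch, assuming Q is given as a 0/1 array; the function name `check_dina_identifiability` and its messages are illustrative, not part of the paper. Rows with all-zero \({\varvec{q}}\)-vectors are left in place: they contribute the same zero entry to every column of \(Q^*\) and therefore do not affect Condition 2.

```python
import numpy as np


def check_dina_identifiability(Q):
    """Check Conditions 1 and 2 of Theorem 1 for a binary J x K Q-matrix.

    Returns (holds, reason). Illustrative helper, not from the paper.
    """
    Q = np.asarray(Q, dtype=int)
    K = Q.shape[1]
    eye = np.eye(K, dtype=int)
    # Condition 1(i): completeness; pick one item equal to e_k for each k.
    identity_rows = []
    for k in range(K):
        hits = np.flatnonzero((Q == eye[k]).all(axis=1))
        if hits.size == 0:
            return False, f"Condition 1(i) fails: no item requires only attribute {k + 1}"
        identity_rows.append(int(hits[0]))
    # Condition 1(ii): each attribute is required by at least three items.
    counts = Q.sum(axis=0)
    for k in range(K):
        if counts[k] < 3:
            return False, f"Condition 1(ii) fails: attribute {k + 1} is required by {int(counts[k])} item(s)"
    # Condition 2: the K columns of Q* (the remaining rows) are pairwise distinct.
    Q_star = np.delete(Q, identity_rows, axis=0)
    columns = {tuple(int(v) for v in Q_star[:, k]) for k in range(K)}
    if len(columns) < K:
        return False, "Condition 2 fails: two columns of Q* coincide"
    return True, "Conditions 1 and 2 hold"


# Hypothetical examples.
Q_ok = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0], [1, 0, 1], [0, 1, 1]]
Q_bad = [[1, 0], [0, 1], [0, 0], [1, 1], [1, 1]]   # the K = 2 pattern of Example 2 with J0 = 1
print(check_dina_identifiability(Q_ok))
print(check_dina_identifiability(Q_bad))
```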

Conditions 1 and 2 are easy to verify. Based on Theorem 1, it is recommended in practice to design the Q-matrix such that it is complete, has each attribute required by at least three items, and has K distinct columns in the submatrix \(Q^*\). Otherwise, by the necessity part of Theorem 1, the model parameters are not identifiable. We use the following examples to illustrate the theoretical result.

Example 1

From Theorem 1, the Q-matrices in (4) and (5) satisfy both Conditions 1 and 2 and therefore give identifiable models, while the results in Xu and Zhang (2016) cannot be applied since their condition C4 does not hold. On the other hand, the Q-matrices below in (6) satisfy the necessary conditions in Xu and Zhang (2016), but they do not satisfy our Condition 2, so the corresponding models are not identifiable.

Example 2

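To illustrate the necessity of Condition 2, we consider the simple case \(K=2\). If Condition 1 is satisfied but Condition 2 does not hold, then the two columns of \(Q^*\) coincide, so every row of \(Q^*\) is either \((0,0)\) or \((1,1)\). Hence, up to row permutations, the Q-matrix can only take the form

\[ Q = \begin{pmatrix} 1 & 0\\ 0 & 1\\ 0 & 0\\ \vdots & \vdots \\ 0 & 0\\ 1 & 1\\ \vdots & \vdots \\ 1 & 1 \end{pmatrix}, \tag{7} \]
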
where the first two items give an identity matrix, while the next \(J_0\) items require none of the attributes and the last \(J-2-J_0\) items require both attributes. Under the Q-matrix in (7), we next show that the model parameters \(({\varvec{s}},{\varvec{g}},{\varvec{p}})\) are not identifiable by constructing a set of parameters \((\bar{{\varvec{s}}},\bar{{\varvec{g}}},\bar{{\varvec{p}}})\ne ({\varvec{s}},{\varvec{g}},{\varvec{p}})\) that satisfies (2). Recall from the model setup in Sect. 2 that for any item \(j\in \{3,\ldots ,J_0+2\}\) that has \({\varvec{q}}_j=\mathbf {0}\), the guessing parameter is not needed by the DINA model, and for notational convenience we set \(g_j \equiv \bar{g}_j \equiv 0\). We take \(\bar{{\varvec{s}}} ={\varvec{s}}\), \(\bar{g}_j=g_j\) for \(j=J_0+3,\ldots , J\), and \(\bar{p}_{(11)}=p_{(11)}\). Next we show that the remaining parameters \((g_1,g_2,p_{(00)}, p_{(10)},p_{(01)})\) are not identifiable. From Definition 1, the non-identifiability occurs if the following equations hold (see the Supplementary Material for the computational details): \(P\big ((R_1,R_2)=(r_1,r_2)\mid Q, \bar{{\varvec{s}}},\bar{{\varvec{g}}},\bar{{\varvec{p}}}\big ) = P\big ((R_1,R_2)=(r_1,r_2)\mid Q, {\varvec{s}},{\varvec{g}},{\varvec{p}}\big )\) for all \((r_1,r_2)\in \{0,1\}^2,\) where \((R_1,R_2)\) are the first two entries of the random response vector \({\varvec{R}}\). These equations can be further expressed as the following equations in (8):

\[ \begin{aligned} \bar{p}_{(00)}+\bar{p}_{(10)}+\bar{p}_{(01)} &= p_{(00)}+p_{(10)}+p_{(01)},\\ \bar{g}_1\big (\bar{p}_{(00)}+\bar{p}_{(01)}\big ) + (1-s_1)\,\bar{p}_{(10)} &= g_1\big (p_{(00)}+p_{(01)}\big ) + (1-s_1)\,p_{(10)},\\ \bar{g}_2\big (\bar{p}_{(00)}+\bar{p}_{(10)}\big ) + (1-s_2)\,\bar{p}_{(01)} &= g_2\big (p_{(00)}+p_{(10)}\big ) + (1-s_2)\,p_{(01)},\\ \bar{g}_1\bar{g}_2\,\bar{p}_{(00)} + (1-s_1)\bar{g}_2\,\bar{p}_{(10)} + \bar{g}_1(1-s_2)\,\bar{p}_{(01)} &= g_1g_2\,p_{(00)} + (1-s_1)g_2\,p_{(10)} + g_1(1-s_2)\,p_{(01)}. \end{aligned} \tag{8} \]
For any \(({\varvec{s}},{\varvec{g}},{\varvec{p}})\), there are four constraints in (8) but five parameters \((\bar{g}_1,\bar{g}_2,\bar{p}_{(00)}, \bar{p}_{(10)},\bar{p}_{(01)})\) to solve. Therefore, there are infinitely many solutions and \(({\varvec{s}},{\varvec{g}},{\varvec{p}})\) are non-identifiable.
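The construction in Example 2 can also be carried out numerically. The sketch below is our own closed-form route to one solution of (8), not the computation in the Supplementary Material. Write \(\pi \) for proportions normalized over the three profiles other than (11) (hypothetical notation). Since \(\bar{{\varvec{s}}}={\varvec{s}}\) and \(\bar{p}_{(11)}=p_{(11)}\), (8) amounts to preserving the total weight of those three profiles together with the two item means and the covariance of \((R_1,R_2)\) within them; working this out shows that \(\bar\pi _{(10)}\bar\pi _{(01)}/\{(1-\bar\pi _{(10)})(1-\bar\pi _{(01)})\}\) must stay fixed, so once \(\bar{p}_{(00)}\) is chosen, \(\bar\pi _{(10)}\) and \(\bar\pi _{(01)}\) are the roots of a quadratic. The parameter values mirror the true values of Example 3, and the script compares the two full response distributions over all \(2^{10}\) patterns.

```python
import itertools

import numpy as np


def response_pmf(Q, s, g, p):
    """Full response distribution over {0,1}^J from equation (1).

    p maps profile tuples (alpha_1, alpha_2) to proportions.
    """
    Q = np.asarray(Q, dtype=int)
    pmf = {}
    for r in itertools.product([0, 1], repeat=Q.shape[0]):
        r_arr = np.array(r)
        total = 0.0
        for alpha, p_alpha in p.items():
            xi = np.all(np.array(alpha) >= Q, axis=1)
            prob_one = np.where(xi, 1.0 - s, g)
            total += p_alpha * np.prod(np.where(r_arr == 1, prob_one, 1.0 - prob_one))
        pmf[r] = total
    return pmf


def alternative_parameters(s, g, p, shrink=0.998):
    """Solve (8) with bar p_(00) = shrink * p_(00), keeping s and p_(11) fixed."""
    u1, u2 = 1.0 - s[0], 1.0 - s[1]
    W = p[(0, 0)] + p[(1, 0)] + p[(0, 1)]          # weight of profiles other than (1,1)
    pi10, pi01 = p[(1, 0)] / W, p[(0, 1)] / W
    m1 = g[0] + (u1 - g[0]) * pi10                  # P(R_1 = 1) within those profiles
    m2 = g[1] + (u2 - g[1]) * pi01                  # P(R_2 = 1) within those profiles
    kappa = pi10 * pi01 / ((1.0 - pi10) * (1.0 - pi01))   # invariant fixed by the covariance
    c = shrink * p[(0, 0)] / W                      # new normalized weight of (0,0)
    total = 1.0 - c                                 # bar pi10 + bar pi01
    product = kappa * c / (1.0 - kappa)             # bar pi10 * bar pi01
    disc = np.sqrt(total ** 2 - 4.0 * product)
    # Of the two roots, give profile (1,0) the one closer to the original pi10.
    a = min(((total - disc) / 2.0, (total + disc) / 2.0), key=lambda z: abs(z - pi10))
    b = total - a
    g_bar = g.copy()
    g_bar[0] = (m1 - u1 * a) / (1.0 - a)            # preserves the item-1 mean
    g_bar[1] = (m2 - u2 * b) / (1.0 - b)            # preserves the item-2 mean
    p_bar = {(0, 0): c * W, (1, 0): a * W, (0, 1): b * W, (1, 1): p[(1, 1)]}
    return g_bar, p_bar


# Setting of Example 3 (true values, J0 = 0): items 1-2 form I_2, items 3-10 require both.
J = 10
Q = np.array([[1, 0], [0, 1]] + [[1, 1]] * (J - 2))
s = np.full(J, 0.2)
g = np.full(J, 0.2)
p = {(0, 0): 0.1, (1, 0): 0.3, (0, 1): 0.4, (1, 1): 0.2}

g_bar, p_bar = alternative_parameters(s, g, p)
f_true, f_bar = response_pmf(Q, s, g, p), response_pmf(Q, s, g_bar, p_bar)
max_gap = max(abs(f_true[r] - f_bar[r]) for r in f_true)
print(g_bar[:2], p_bar, max_gap)
```

The largest gap between the two distributions is at the level of floating-point rounding, even though \(\bar{g}_1\), \(\bar{g}_2\) and \(\bar{{\varvec{p}}}\) differ from the original values.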

Example 3

We provide a numerical illustration of Example 2. Without loss of generality, we take \(J_0=0\), since, as (8) illustrates, the presence of items with zero \({\varvec{q}}\)-vectors has no impact on the non-identifiability phenomenon. We take \(J=10\) and set the true parameters to be \((p_{(00)},~p_{(10)},~p_{(01)},~p_{(11)})=(0.1,~ 0.3,~ 0.4,~ 0.2)\) and \(s_j=g_j=0.2\) for \(j\in \{1,\ldots , 10\}\). We first generate a random sample of size \(N=200\). From the data, we obtain one set of maximum likelihood estimators as follows:

Based on (8), we can construct infinitely many sets of \((\bar{{\varvec{s}}},\bar{{\varvec{g}}},\bar{{\varvec{p}}})\) that are also maximum likelihood estimators. For instance, we take \(\bar{{\varvec{s}}}=\hat{\varvec{s}}\), \(\bar{g}_j = \hat{g}_j\) for \(j=3,\ldots ,10\), \(\bar{p}_{(11)}=\hat{p}_{(11)}\), and \(\bar{p}_{(00)} = 0.998\cdot \hat{p}_{(00)}\), and then solve (8) for the remaining parameters \(\bar{p}_{(10)}\), \(\bar{p}_{(01)}\), \(\bar{g}_1\) and \(\bar{g}_2\) to get

The two different sets of values \((\hat{\varvec{s}},\hat{\varvec{g}},\hat{\varvec{p}})\) and \((\bar{{\varvec{s}}},\bar{{\varvec{g}}},\bar{{\varvec{p}}})\) both give the identical log-likelihood value \(-\,1132.1264\), which confirms the non-identifiability.

To further illustrate that the above argument does not depend on the sample size, we generate a random sample of size \(N=10^5\) and obtain the following estimators:

Similarly, we set \(\bar{{\varvec{s}}}=\hat{\varvec{s}}\), \(\bar{g}_j = \hat{g}_j\) for \(j=3,\ldots ,10\), \(\bar{p}_{(11)}=\hat{p}_{(11)}\), and \(\bar{p}_{(00)} = 0.998\cdot \hat{p}_{(00)}\). Solving (8) gives

where the two different sets of values \((\hat{\varvec{s}},\hat{\varvec{g}},\hat{\varvec{p}})\) and \((\bar{{\varvec{s}}},\bar{{\varvec{g}}},\bar{{\varvec{p}}})\) both lead to the identical log-likelihood value \(-\,571659.1708\). This illustrates that the non-identifiability issue depends on the model setting instead of the sample size. In practice, as long as Conditions 1 and 2 do not hold, we may suffer from similar non-identifiability issues no matter how large the sample size is.

Identifiability is a prerequisite, i.e., a necessary condition, for consistent estimation. Here, we say a parameter is consistently estimable if we can construct a consistent estimator for the parameter. That is, for parameter \(\beta \), there exists \(\hat{\beta }_N\) such that \(\hat{\beta }_N-\beta \rightarrow 0\) in probability as the sample size \(N\rightarrow \infty \). When the identifiability conditions are satisfied, we show that the maximum likelihood estimators (MLEs) of the DINA model parameters \(({\varvec{s}},{\varvec{g}},{\varvec{p}})\) are statistically consistent as \(N\rightarrow \infty \). For the observed responses \(\{{\varvec{R}}_i: i=1,\ldots , N\}\), we can write their likelihood function as

\[ L({\varvec{s}},{\varvec{g}},{\varvec{p}}) = \prod _{i=1}^N P({\varvec{R}}={\varvec{R}}_i\mid Q,{\varvec{s}},{\varvec{g}},{\varvec{p}}), \tag{9} \]
where \(P({\varvec{R}}={\varvec{R}}_i\mid Q,{\varvec{s}},{\varvec{g}},{\varvec{p}})\) is as defined in (1). Let \((\hat{\varvec{s}}, \hat{\varvec{g}},\hat{\varvec{p}})\) be the corresponding MLEs based on (9). We have the following corollary.

Corollary 1

When Conditions 1 and 2 are satisfied, the MLEs \((\hat{\varvec{s}}, \hat{\varvec{g}},\hat{\varvec{p}})\) are consistent as \(N\rightarrow \infty \).
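For computation, the logarithm of (9) is usually evaluated in vectorized form, with a log-sum-exp over profiles to avoid numerical underflow when J is large. A minimal NumPy sketch (hypothetical helper `dina_loglik`; profiles ordered as in `itertools.product`, values illustrative only):

```python
import itertools

import numpy as np


def dina_loglik(R, Q, s, g, p):
    """log of (9): sum over subjects i of log P(R = R_i | Q, s, g, p).

    R: (N, J) array of 0/1 responses; s, g: length-J arrays;
    p: length-2^K array ordered like itertools.product([0, 1], repeat=K).
    """
    R = np.asarray(R, dtype=float)
    Q = np.asarray(Q, dtype=int)
    A = np.array(list(itertools.product([0, 1], repeat=Q.shape[1])))
    xi = np.all(A[:, None, :] >= Q[None, :, :], axis=2)          # (2^K, J)
    prob_one = np.where(xi, 1.0 - s, g)                          # P(R_j = 1 | alpha)
    # log P(R_i | alpha) for every subject and profile, shape (N, 2^K).
    log_cond = R @ np.log(prob_one).T + (1.0 - R) @ np.log(1.0 - prob_one).T
    # Mix over profiles on the log scale (log-sum-exp) to avoid underflow.
    return float(np.logaddexp.reduce(log_cond + np.log(p), axis=1).sum())


# Illustrative values only (not from the paper).
Q_demo = np.array([[1, 0], [0, 1], [1, 1]])
s_demo = np.array([0.1, 0.2, 0.15])
g_demo = np.array([0.2, 0.1, 0.25])
p_demo = np.array([0.1, 0.2, 0.3, 0.4])      # profiles (0,0), (0,1), (1,0), (1,1)
R_demo = np.array([[1, 1, 1], [0, 1, 0], [1, 0, 0]])
print(dina_loglik(R_demo, Q_demo, s_demo, g_demo, p_demo))
```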

The results in Theorem 1 and Corollary 1 can be directly applied to the DINO model through the duality of the DINA and DINO models (see Proposition 1 in Chen et al., 2015). Specifically, when Conditions 1 and 2 are satisfied, the guessing, slipping, and population proportion parameters in the DINO model are identifiable and can also be consistently estimated as \(N\rightarrow \infty \).

Moreover, the proof of Corollary 1 generalizes directly to other CDMs: the MLEs of the model parameters, including the item parameters and population proportion parameters, are consistent as \(N\rightarrow \infty \) whenever the parameters are identifiable. Therefore, under the sufficient conditions for identifiability of general CDMs developed in the literature, such as those in Xu (2017), the model parameters are also consistently estimable. Although the minimal requirement for identifiability and estimability of general CDMs is still unknown, the proposed Conditions 1 and 2 are necessary for them, since the DINA model is a submodel. For instance, Xu (2017) requires two identity matrices in the Q-matrix to obtain identifiability, which automatically satisfies Conditions 1 and 2 in this paper.

We next present an example to illustrate that when the proposed conditions are satisfied, the MLEs of the DINA model parameters are consistent.

Example 4

We perform a simulation study with the following Q-matrix that satisfies the proposed sufficient and necessary conditions. The true parameters are set to be \(p_{{\varvec{\alpha }}}=0.125\) for all \({\varvec{\alpha }}\in \{0,1\}^3\), and \(s_j=g_j=0.2\) for \(j=1,\ldots ,6\).

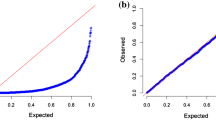

For each sample size \(N=200\cdot i\) where \(i=1,\ldots ,10\), we generate 1000 independent datasets and use the EM algorithm with random initializations to obtain the MLEs of model parameters for each dataset. The mean-squared errors (MSEs) of the parameters \({\varvec{s}}\), \({\varvec{g}}\), \({\varvec{p}}\) computed from the 1000 runs are shown in Table 1 and Fig. 1. One can see that the MSEs keep decreasing as the sample size N increases, matching the theoretical result in Corollary 1.
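The Q-matrix of Example 4 is only available as an image, so the sketch below uses a hypothetical \(6\times 3\) Q-matrix, \(\mathcal {I}_3\) stacked on the rows (1,1,0), (1,0,1) and (0,1,1), which satisfies Conditions 1 and 2. It simulates a single dataset with the true values of Example 4 and runs a basic EM algorithm for \(({\varvec{s}},{\varvec{g}},{\varvec{p}})\): the E-step computes each subject's posterior over the \(2^K\) profiles, and the M-step updates \(s_j\) and \(g_j\) from posterior-weighted response proportions and \({\varvec{p}}\) from the average posterior. It uses one fixed starting value instead of the random initializations described above, and it is a sketch rather than the authors' implementation.

```python
import itertools

import numpy as np


def simulate_dina(N, Q, s, g, p, rng):
    """Draw N response vectors; p is ordered like itertools.product over {0,1}^K."""
    Q = np.asarray(Q, dtype=int)
    A = np.array(list(itertools.product([0, 1], repeat=Q.shape[1])))
    xi = np.all(A[:, None, :] >= Q[None, :, :], axis=2)        # (2^K, J) ideal responses
    profiles = rng.choice(len(A), size=N, p=p)                  # latent class of each subject
    prob_one = np.where(xi[profiles], 1.0 - s, g)               # (N, J)
    return (rng.random(prob_one.shape) < prob_one).astype(float), xi


def dina_em(R, xi, n_iter=1000, tol=1e-7):
    """Basic EM for (s, g, p) given the boolean ideal-response matrix xi.

    Assumes every item has a nonzero q-vector, so each g_j is a free parameter.
    """
    J = R.shape[1]
    L = xi.shape[0]
    xi_f = xi.astype(float)
    s, g, p = np.full(J, 0.3), np.full(J, 0.3), np.full(L, 1.0 / L)   # fixed start
    for _ in range(n_iter):
        prob_one = np.where(xi, 1.0 - s, g)                      # (2^K, J)
        # E-step: posterior probability of each profile for each subject, (N, 2^K).
        log_post = R @ np.log(prob_one).T + (1.0 - R) @ np.log(1.0 - prob_one).T + np.log(p)
        post = np.exp(log_post - log_post.max(axis=1, keepdims=True))
        post /= post.sum(axis=1, keepdims=True)
        # M-step: posterior weight on xi_j = 1 (w1) and xi_j = 0 (w0) for each subject.
        w1 = post @ xi_f                                         # (N, J)
        w0 = 1.0 - w1
        s_new = 1.0 - (w1 * R).sum(axis=0) / w1.sum(axis=0)
        g_new = (w0 * R).sum(axis=0) / w0.sum(axis=0)
        p_new = post.mean(axis=0)
        change = max(np.abs(s_new - s).max(), np.abs(g_new - g).max(), np.abs(p_new - p).max())
        s, g, p = s_new, g_new, p_new
        if change < tol:
            break
    return s, g, p


# Hypothetical Q-matrix satisfying Conditions 1 and 2 (not the one in Example 4).
Q_hyp = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 0], [1, 0, 1], [0, 1, 1]])
s_true, g_true = np.full(6, 0.2), np.full(6, 0.2)
p_true = np.full(8, 0.125)
rng = np.random.default_rng(0)
R, xi = simulate_dina(30_000, Q_hyp, s_true, g_true, p_true, rng)
s_hat, g_hat, p_hat = dina_em(R, xi)
print(np.round(s_hat, 3), np.round(g_hat, 3), np.round(p_hat, 3))
```

Repeating the fit over many simulated datasets and several values of N would give Monte Carlo MSEs of the kind summarized in Table 1 and Fig. 1.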

4 Discussion

This paper presents the sufficient and necessary condition for identifiability of the DINA and DINO model parameters and establishes the consistency of the maximum likelihood estimators. As discussed in Sect. 3, the results would also shed light on the study of the sufficient and necessary conditions for general CDMs.

This paper treats the attribute profiles as random effects from a population distribution. Under this setting, the identifiability conditions ensure the consistent estimation of the model parameters. In general, however, identifiability alone does not guarantee consistent estimation in statistics and psychometrics. An example of models that are identifiable but not consistently estimable is the fixed-effects CDMs, in which the subjects’ attribute profiles are treated as model parameters. Consider a simple example of the DINA model with nonzero but known slipping and guessing parameters. Under the fixed-effects setting, the model parameters include \(\{{\varvec{\alpha }}_i, i=1,\ldots ,N\}\), which are identifiable if the Q-matrix is complete (e.g., Chiu et al., 2009). But with a fixed number of items, even as the sample size N goes to infinity, the parameters \(\{{\varvec{\alpha }}_i, i=1,\ldots ,N\}\) cannot be consistently estimated, since each \({\varvec{\alpha }}_i\) is informed only by the J responses of subject i. In this case, consistent estimation of each \({\varvec{\alpha }}_i\) requires the number of items to go to infinity and the number of identity sub-Q-matrices to go to infinity as well (Wang and Douglas, 2015); equivalently, there need to be infinitely many sub-Q-matrices satisfying Conditions 1 and 2.

When the identifiability conditions are not satisfied, we may expect partial identification results, in which certain parameters are identifiable while others are identifiable only up to some transformations. For instance, when Condition 1 is satisfied, the slipping parameters are all identifiable, and the guessing parameters of items \((K+1,\ldots , J)\) are also identifiable. In practice, there may also exist certain hierarchical structures among the latent attributes; for instance, an attribute may be a prerequisite for some other attributes. In this case, some entries of \({\varvec{p}}\) are restricted to be 0. It would also be interesting to consider the identifiability conditions under these restricted models, for which weaker conditions are expected to suffice. In particular, completeness of the Q-matrix may not be needed. We believe the techniques used in the proof of the main result can be extended to study such restricted models and plan to pursue this in future work.

References

Casella, G., & Berger, R. L. (2002). Statistical inference (2nd ed.). Pacific Grove, CA: Duxbury.

Chen, Y., Liu, J., Xu, G., & Ying, Z. (2015). Statistical analysis of \(Q\)-matrix based diagnostic classification models. Journal of the American Statistical Association, 110, 850–866.

Chiu, C.-Y., Douglas, J. A., & Li, X. (2009). Cluster analysis for cognitive diagnosis: Theory and applications. Psychometrika, 74, 633–665.

de la Torre, J. (2011). The generalized DINA model framework. Psychometrika, 76, 179–199.

DeCarlo, L. T. (2011). On the analysis of fraction subtraction data: The DINA model, classification, class sizes, and the Q-matrix. Applied Psychological Measurement, 35, 8–26.

DiBello, L. V., Stout, W. F., & Roussos, L. A. (1995). Unified cognitive psychometric diagnostic assessment likelihood-based classification techniques. In P. D. Nichols, S. F. Chipman, & R. L. Brennan (Eds.), Cognitively diagnostic assessment (pp. 361–390). Hillsdale, NJ: Erlbaum Associates.

Fang, G., Liu, J., & Ying, Z. (2017). On the identifiability of diagnostic classification models. arXiv preprint.

Gabrielsen, A. (1978). Consistency and identifiability. Journal of Econometrics, 8(2), 261–263.

Goodman, L. A. (1974). Exploratory latent structure analysis using both identifiable and unidentifiable models. Biometrika, 61, 215–231.

Henson, R. A., Templin, J. L., & Willse, J. T. (2009). Defining a family of cognitive diagnosis models using log-linear models with latent variables. Psychometrika, 74, 191–210.

Junker, B. W., & Sijtsma, K. (2001). Cognitive assessment models with few assumptions, and connections with nonparametric item response theory. Applied Psychological Measurement, 25, 258–272.

Koopmans, T. C., & Reiersøl, O. (1950). The identification of structural characteristics. Annals of Mathematical Statistics, 21, 165–181.

Liu, J., Xu, G., & Ying, Z. (2013). Theory of self-learning Q-matrix. Bernoulli, 19(5A), 1790–1817.

Maris, G., & Bechger, T. M. (2009). Equivalent diagnostic classification models. Measurement, 7, 41–46.

McHugh, R. B. (1956). Efficient estimation and local identification in latent class analysis. Psychometrika, 21, 331–347.

Rothenberg, T. J. (1971). Identification in parametric models. Econometrica, 39, 577–591.

Tatsuoka, C. (2009). Diagnostic models as partially ordered sets. Measurement, 7, 49–53.

Tatsuoka, K. K. (1983). Rule space: An approach for dealing with misconceptions based on item response theory. Journal of Educational Measurement, 20, 345–354.

Templin, J. L., & Henson, R. A. (2006). Measurement of psychological disorders using cognitive diagnosis models. Psychological Methods, 11, 287–305.

von Davier, M. (2005). A general diagnostic model applied to language testing data. Princeton, NJ: Educational Testing Service. (Research report).

von Davier, M. (2014). The DINA model as a constrained general diagnostic model: Two variants of a model equivalency. British Journal of Mathematical and Statistical Psychology, 67(1), 49–71.

Wang, S., & Douglas, J. (2015). Consistency of nonparametric classification in cognitive diagnosis. Psychometrika, 80(1), 85–100.

Xu, G. (2017). Identifiability of restricted latent class models with binary responses. The Annals of Statistics, 45, 675–707.

Xu, G., & Shang, Z. (2017). Identifying latent structures in restricted latent class models. Journal of the American Statistical Association, (accepted).

Xu, G., & Zhang, S. (2016). Identifiability of diagnostic classification models. Psychometrika, 81, 625–649.

Acknowledgements

The authors thank the editor, the associate editor, and two reviewers for many helpful and constructive comments. This work is partially supported by National Science Foundation (Grant No. SES-1659328).


Appendix: Proof of Theorem 1

Working directly with (2) to study model identifiability is technically challenging. To facilitate the proof of the theorem, we introduce a key technical quantity following Xu (2017): the marginal probability matrix, called the T-matrix. The T-matrix \(T({\varvec{s}},{\varvec{g}})\) is defined as a \(2^J\times 2^K\) matrix, where the entries are indexed by row index \({\varvec{r}}\in \{0,1\}^J\) and column index \({\varvec{\alpha }}\in \{0,1\}^K\). Suppose that the columns of \(T({\varvec{s}},{\varvec{g}})\) indexed by \(({\varvec{\alpha }}^1,\ldots ,{\varvec{\alpha }}^{2^K})\) are arranged in the following order of \(\{0,1\}^K\):

\[ \mathbf {0},\ {\varvec{e}}_1,\ \ldots ,\ {\varvec{e}}_K,\ {\varvec{e}}_1+{\varvec{e}}_2,\ \ldots ,\ {\varvec{e}}_{K-1}+{\varvec{e}}_K,\ \ldots ,\ \mathbf {1}, \]
where \(\mathbf {0}\) denotes the column vector of zeros, \(\mathbf {1}\) denotes the column vector of ones, and \({\varvec{e}}_k\) denotes a standard basis vector, whose kth element is one and the rest are zero; to simplify notation, we omit the dimension indices of \(\mathbf {0}, \mathbf {1}\) and \({\varvec{e}}_k\)’s. Similarly, suppose that the rows of \(T({\varvec{s}},{\varvec{g}})\) indexed by \(({\varvec{r}}^1,\ldots ,{\varvec{r}}^{2^J})\) are arranged in the following order:

\[ \mathbf {0},\ {\varvec{e}}_1,\ \ldots ,\ {\varvec{e}}_J,\ {\varvec{e}}_1+{\varvec{e}}_2,\ \ldots ,\ {\varvec{e}}_{J-1}+{\varvec{e}}_J,\ \ldots ,\ \mathbf {1}. \]
The \({\varvec{r}}=(r_1,\ldots , r_J)\)th row and \({\varvec{\alpha }}\)th column element of \(T({\varvec{s}},{\varvec{g}})\), denoted by \(t_{{\varvec{r}},{\varvec{\alpha }}}({\varvec{s}},{\varvec{g}})\), is the probability that a subject with attribute profile \({\varvec{\alpha }}\) answers all items in the subset \(\{j: r_j=1\}\) positively, that is, \(t_{{\varvec{r}},{\varvec{\alpha }}}({\varvec{s}},{\varvec{g}}) = P({\varvec{R}}\succeq {\varvec{r}}\mid Q,{\varvec{s}},{\varvec{g}},{\varvec{\alpha }}). \) When \({\varvec{r}}=\mathbf {0}\), \(t_{\mathbf {0},{\varvec{\alpha }}}({\varvec{s}},{\varvec{g}}) = P({\varvec{R}}\succeq \mathbf {0}) = 1\) for any \({\varvec{\alpha }}\). When \({\varvec{r}}={\varvec{e}}_j\), for \(1\le j\le J\), \(t_{{\varvec{e}}_j,{\varvec{\alpha }}}({\varvec{s}},{\varvec{g}}) =P(R_j=1 \mid Q,{\varvec{s}},{\varvec{g}},{\varvec{\alpha }}).\) Let \(T_{{\varvec{r}},\bullet }({\varvec{s}},{\varvec{g}})\) be the row vector in the T-matrix corresponding to \({\varvec{r}}\). Since the item responses are conditionally independent given \({\varvec{\alpha }}\), for any \({\varvec{r}}\ne \mathbf {0}\) we can write

$$T_{{\varvec{r}},\bullet }({\varvec{s}},{\varvec{g}}) = \bigodot _{j:\, r_j=1} T_{{\varvec{e}}_j,\bullet }({\varvec{s}},{\varvec{g}}),$$

where \(\odot \) is the element-wise product of the row vectors.
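As a numerical sanity check of these definitions, the following Python sketch computes \(t_{{\varvec{r}},{\varvec{\alpha }}}({\varvec{s}},{\varvec{g}})\) directly from its definition, by summing the probabilities of all full response patterns that dominate \({\varvec{r}}\), and then checks that each row equals the element-wise product of the rows \({\varvec{e}}_j\) with \(r_j=1\), and that the row \({\varvec{r}}=\mathbf {0}\) is identically one. The Q-matrix and the values of \({\varvec{s}}\) and \({\varvec{g}}\) are hypothetical, the helper names are ours, and rows and columns are enumerated in `itertools.product` order rather than in the order given above; the identities do not depend on the ordering.

```python
import itertools

import numpy as np


def dina_theta(Q, s, g, alphas):
    """theta[j, a] = P(R_j = 1 | alphas[a]) under the DINA model."""
    # Ideal response xi = 1 iff the profile masters every attribute item j requires.
    xi = np.all(alphas[None, :, :] >= Q[:, None, :], axis=2)
    return np.where(xi, (1 - s)[:, None], g[:, None])


def t_matrix_by_definition(Q, s, g):
    """T[i, a] = P(R >= rs[i] | alphas[a]), obtained by summing full patterns."""
    J, K = Q.shape
    alphas = np.array(list(itertools.product([0, 1], repeat=K)))
    rs = np.array(list(itertools.product([0, 1], repeat=J)))
    theta = dina_theta(Q, s, g, alphas)
    # P(R = R' | alpha) for every full response pattern R' (local independence).
    pattern = np.array([np.prod(np.where(R[:, None] == 1, theta, 1 - theta), axis=0)
                        for R in rs])
    # t_{r, alpha}: add up the patterns R' that dominate r element-wise.
    T = np.array([pattern[np.all(rs >= r, axis=1)].sum(axis=0) for r in rs])
    return T, rs


Q = np.array([[1, 0], [0, 1], [1, 1]])   # hypothetical Q-matrix: J = 3, K = 2
s = np.array([0.10, 0.20, 0.15])
g = np.array([0.20, 0.25, 0.10])
T, rs = t_matrix_by_definition(Q, s, g)
J = Q.shape[0]
row = {tuple(r.tolist()): i for i, r in enumerate(rs)}
unit = np.eye(J, dtype=int)

assert np.allclose(T[row[(0,) * J]], 1.0)          # t_{0, alpha} = 1 for every alpha
for r in rs:
    product = np.ones(T.shape[1])
    for j in np.flatnonzero(r):
        product = product * T[row[tuple(unit[j].tolist())]]
    assert np.allclose(T[row[tuple(r.tolist())]], product)   # row-product identity
print(T.shape)   # (8, 4)
```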

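By definition, multiplying the T-matrix by the distribution of attribute profiles \({\varvec{p}}\) yields a vector, \(T({\varvec{s}},{\varvec{g}}){\varvec{p}}\), containing the marginal probabilities of responding positively to all items in each subset of items. The \({\varvec{r}}\)th entry of this vector is

$$\sum _{{\varvec{\alpha }}\in \{0,1\}^K} t_{{\varvec{r}},{\varvec{\alpha }}}({\varvec{s}},{\varvec{g}})\, p_{{\varvec{\alpha }}} = P({\varvec{R}}\succeq {\varvec{r}}\mid Q,{\varvec{s}},{\varvec{g}},{\varvec{p}}).$$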
We can see that there is a one-to-one mapping between the two \(2^J\)-dimensional vectors \(T({\varvec{s}},{\varvec{g}}){\varvec{p}}\) and \(\left( P({\varvec{R}}= {\varvec{r}}\mid Q,{\varvec{s}},{\varvec{g}},{\varvec{p}}):~ {\varvec{r}}\in \{0,1\}^J\right) \). Therefore, Definition 1 directly implies the following proposition.
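The one-to-one mapping can be made explicit by inclusion-exclusion. Writing \(|{\varvec{r}}|\) for the number of ones in \({\varvec{r}}\) and suppressing the conditioning on \((Q,{\varvec{s}},{\varvec{g}},{\varvec{p}})\), we have \(P({\varvec{R}}={\varvec{r}})=\sum _{{\varvec{r}}'\succeq {\varvec{r}}}(-1)^{|{\varvec{r}}'|-|{\varvec{r}}|}P({\varvec{R}}\succeq {\varvec{r}}')\) and, conversely, \(P({\varvec{R}}\succeq {\varvec{r}})=\sum _{{\varvec{r}}'\succeq {\varvec{r}}}P({\varvec{R}}={\varvec{r}}')\). The sketch below, with a hypothetical Q, \({\varvec{s}}\), \({\varvec{g}}\) and \({\varvec{p}}\), computes \(T({\varvec{s}},{\varvec{g}}){\varvec{p}}\) from the row-product form, inverts it in this way, and compares the result with the response-pattern probabilities computed directly; the variable names `tail` and `point` are ours.

```python
import itertools

import numpy as np

Q = np.array([[1, 0], [0, 1], [1, 1]])   # hypothetical Q-matrix: J = 3, K = 2
s = np.array([0.10, 0.20, 0.15])
g = np.array([0.20, 0.25, 0.10])
p = np.array([0.3, 0.2, 0.2, 0.3])       # p_alpha for alpha = (0,0), (0,1), (1,0), (1,1)

J, K = Q.shape
alphas = np.array(list(itertools.product([0, 1], repeat=K)))
rs = np.array(list(itertools.product([0, 1], repeat=J)))
xi = np.all(alphas[None, :, :] >= Q[:, None, :], axis=2)
theta = np.where(xi, (1 - s)[:, None], g[:, None])    # P(R_j = 1 | alpha)

# T p via the row-product form; entry r is P(R >= r | Q, s, g, p).
T = np.array([np.prod(theta[r.astype(bool)], axis=0) for r in rs])
tail = T @ p

# Inclusion-exclusion over the patterns r2 that dominate r recovers P(R = r).
point = np.array([
    sum((-1) ** int(r2.sum() - r.sum()) * tail[i2]
        for i2, r2 in enumerate(rs) if np.all(r2 >= r))
    for r in rs
])

# Direct computation of P(R = r | Q, s, g, p) for comparison.
direct = np.array([
    np.prod(np.where(r[:, None] == 1, theta, 1 - theta), axis=0) @ p for r in rs
])
print(np.allclose(point, direct), np.isclose(point.sum(), 1.0))   # True True
```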

Proposition 1

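The parameters \(({\varvec{s}},{\varvec{g}},{\varvec{p}})\) are identifiable if and only if for any \((\bar{{\varvec{s}}},\bar{{\varvec{g}}},\bar{{\varvec{p}}})\ne ({\varvec{s}},{\varvec{g}},{\varvec{p}})\), there exists \({\varvec{r}}\in \{ 0, 1\}^J\) such that

$$\sum _{{\varvec{\alpha }}} t_{{\varvec{r}},{\varvec{\alpha }}}({\varvec{s}},{\varvec{g}})\, p_{{\varvec{\alpha }}} \ne \sum _{{\varvec{\alpha }}} t_{{\varvec{r}},{\varvec{\alpha }}}(\bar{{\varvec{s}}},\bar{{\varvec{g}}})\, \bar{p}_{{\varvec{\alpha }}}.$$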
Proposition 1 shows that to establish the identifiability of \(({\varvec{s}},{\varvec{g}},{\varvec{p}})\), we only need to focus on the T-matrix structure.

The following proposition establishes the equivalence between the identifiability of the DINA model associated with a Q-matrix that has some zero \({\varvec{q}}\)-vectors and that of the DINA model associated with the submatrix of Q containing all of its nonzero \({\varvec{q}}\)-vectors. The proof of Proposition 2 is given in the Supplementary Material.

Proposition 2

Suppose the Q-matrix of size \(J\times K\) takes the form

$$Q=\begin{pmatrix} Q' \\ \mathbf {0} \end{pmatrix},$$
where \(Q'\) denotes a \(J'\times K\) submatrix containing the \(J'\) nonzero \({\varvec{q}}\)-vectors of Q, and \(\mathbf {0}\) denotes a \((J-J')\times K\) submatrix containing those zero \({\varvec{q}}\)-vectors of Q. Then, the DINA model associated with Q is identifiable if and only if the DINA model associated with \(Q'\) is identifiable.

By Proposition 2, without loss of generality, in the following we assume the Q-matrix does not contain any zero \({\varvec{q}}\)-vectors and prove the necessity and sufficiency of the proposed Conditions 1 and 2.

Proof of Necessity

The necessity of Condition 1 follows from Theorem 3 in Xu and Zhang (2016). Now suppose Condition 1 holds but Condition 2 is not satisfied. Without loss of generality, suppose the first two columns of \(Q^*\) are the same, so that Q takes the following form

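where \({\varvec{v}}\) is any binary vector of length \(J-K\). To show the necessity of Condition 2, by Proposition 1, we only need to find two different sets of parameters \(({\varvec{s}},{\varvec{g}},{\varvec{p}})\ne ( \bar{{\varvec{s}}},\bar{{\varvec{g}}},\bar{{\varvec{p}}})\) such that for any \({\varvec{r}}\in \{0,1\}^J\), the following equation holds

$$\sum _{{\varvec{\alpha }}} t_{{\varvec{r}},{\varvec{\alpha }}}({\varvec{s}},{\varvec{g}})\, p_{{\varvec{\alpha }}} = \sum _{{\varvec{\alpha }}} t_{{\varvec{r}},{\varvec{\alpha }}}(\bar{{\varvec{s}}},\bar{{\varvec{g}}})\, \bar{p}_{{\varvec{\alpha }}}.\tag{12}$$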
We next construct such \(({\varvec{s}},{\varvec{g}},{\varvec{p}})\) and \((\bar{{\varvec{s}}}, \bar{{\varvec{g}}},\bar{{\varvec{p}}})\). We assume in the following that \(\bar{{\varvec{s}}}={\varvec{s}}\) and \(\bar{g}_j =g_j\) for any \(j> 2\), and focus on the construction of \((\bar{g}_1,\bar{g}_2,\bar{{\varvec{p}}})\ne ( g_1, g_2, {\varvec{p}}) \) satisfying (12) for any \({\varvec{r}}\in \{0,1\}^J\). For notational convenience, we write the positive response probability for item j and attribute profile \({\varvec{\alpha }}\) in the general form \( \theta _{j,{\varvec{\alpha }}} := (1-s_j)^{\xi _{j,{\varvec{\alpha }}} }g_j^{1-\xi _{j,{\varvec{\alpha }}}}. \) Hence, by construction, \(\theta _{j,{\varvec{\alpha }}} = \bar{\theta }_{j,{\varvec{\alpha }}}\) for any \(j>2\).

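We define two subsets of items \(S_0\) and \(S_1\) to be

$$S_0=\{j>2:\ q_{j,1}=q_{j,2}=0\},\qquad S_1=\{j>2:\ q_{j,1}=q_{j,2}=1\},$$

where \(S_0\) includes those items not requiring any of the first two attributes, and \(S_1\) includes those items requiring both of the first two attributes. Then, since Condition 2 is not satisfied (the first two columns of \(Q^*\) coincide, and items \(3,\ldots ,K\) require neither of the first two attributes), we must have \(S_0\cup S_1 = \{3,4,\ldots ,J\}\), i.e., all but the first two items fall in either \(S_0\) or \(S_1\). Now consider any \({\varvec{\alpha }}^*\in \{0,1\}^{K-2}\). For any item \(j\in S_0\), the four attribute profiles \((0,0,{\varvec{\alpha }}^*)\), \((0,1,{\varvec{\alpha }}^*)\), \((1,0,{\varvec{\alpha }}^*)\) and \((1,1,{\varvec{\alpha }}^*)\) always have the same positive response probability to item j, and for any \(j\in S_1\), the three attribute profiles \((0,0,{\varvec{\alpha }}^*)\), \((1,0,{\varvec{\alpha }}^*)\) and \((0,1,{\varvec{\alpha }}^*)\) always have the same positive response probability to item j. In summary, for any \({\varvec{\alpha }}^*\in \{0,1\}^{K-2}\),

$$\begin{cases} \theta _{j,(0,0,{\varvec{\alpha }}^*)}=\theta _{j,(0,1,{\varvec{\alpha }}^*)}=\theta _{j,(1,0,{\varvec{\alpha }}^*)}=\theta _{j,(1,1,{\varvec{\alpha }}^*)}, & j\in S_0;\\ \theta _{j,(0,0,{\varvec{\alpha }}^*)}=\theta _{j,(0,1,{\varvec{\alpha }}^*)}=\theta _{j,(1,0,{\varvec{\alpha }}^*)}, & j\in S_1. \end{cases}\tag{13}$$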
For any response vector \({\varvec{r}}\in \{0,1\}^J\) such that \({\varvec{r}}_{S_1}:=(r_j: j\in S_1)\ne \mathbf {0}\), namely \(r_j=1\) for some item j requiring both of the first two attributes, we discuss the following four cases.


(a)

For any \({\varvec{r}}\) such that \((r_1,r_2) = (0,0)\) and \({\varvec{r}}_{S_1}\ne \mathbf {0}\), from (13) and the definition of the T-matrix, (12) is equivalent to

$$\begin{aligned}&\sum _{{\varvec{\alpha }}^*}\left\{ \left[ \prod _{j>2:\,r_j=1} \theta _{j,\,(0,0,{\varvec{\alpha }}^*)}\right] \big [p_{(0,0,{\varvec{\alpha }}^*)}+p_{(0,1,{\varvec{\alpha }}^*)}+p_{(1,0,{\varvec{\alpha }}^*)}\big ]\right. \\&\qquad \left. +\, \left[ \prod _{j>2:\,r_j=1} \theta _{j,\,(1,1,{\varvec{\alpha }}^*)}\right] p_{(1,1,{\varvec{\alpha }}^*)} \right\} \\&\quad = \sum _{{\varvec{\alpha }}^*}\left\{ \left[ \prod _{j>2:\,r_j=1}\bar{\theta }_{j,\,(0,0,{\varvec{\alpha }}^*)}\right] \big [\bar{p}_{(0,0,{\varvec{\alpha }}^*)}+\bar{p}_{(0,1,{\varvec{\alpha }}^*)}+\bar{p}_{(1,0,{\varvec{\alpha }}^*)}\big ]\right. \\&\qquad \left. +\, \left[ \prod _{j>2:\,r_j=1}\bar{\theta }_{j,\,(1,1,{\varvec{\alpha }}^*)}\right] \bar{p}_{(1,1,{\varvec{\alpha }}^*)} \right\} \\&\quad = \sum _{{\varvec{\alpha }}^*}\left\{ \left[ \prod _{j>2:\,r_j=1} \theta _{j,\,(0,0,{\varvec{\alpha }}^*)}\right] \big [\bar{p}_{(0,0,{\varvec{\alpha }}^*)}+\bar{p}_{(0,1,{\varvec{\alpha }}^*)}+\bar{p}_{(1,0,{\varvec{\alpha }}^*)}\big ]\right. \\&\qquad \left. +\, \left[ \prod _{j>2:\,r_j=1} \theta _{j,\,(1,1,{\varvec{\alpha }}^*)}\right] \bar{p}_{(1,1,{\varvec{\alpha }}^*)} \right\} , \end{aligned}$$where the last equality above follows from \(\theta _{j,{\varvec{\alpha }}} = \bar{\theta }_{j,{\varvec{\alpha }}}\) for any \(j>2\). To ensure the above equations hold, it suffices to have the following equations satisfied for any \({\varvec{\alpha }}^*\in \{0,1\}^{K-2}\)

$$\begin{aligned} {\left\{ \begin{array}{ll} p_{(1,1,{\varvec{\alpha }}^*)} = \bar{p}_{(1,1,{\varvec{\alpha }}^*)}; \\ p_{(0,0,{\varvec{\alpha }}^*)} + p_{(1,0,{\varvec{\alpha }}^*)} + p_{(0,1,{\varvec{\alpha }}^*)} = \bar{p}_{(0,0,{\varvec{\alpha }}^*)} +\bar{p}_{(1,0,{\varvec{\alpha }}^*)} +\bar{p}_{(0,1,{\varvec{\alpha }}^*)}. \end{array}\right. } \end{aligned}\tag{14}$$

(b)

For any \({\varvec{r}}\) such that \((r_1,r_2) = (1,0)\) and \({\varvec{r}}_{S_1}\ne \mathbf {0}\), from (13) and the definition of the T-matrix, (12) can be equivalently written as

$$\begin{aligned}&\sum _{{\varvec{\alpha }}^*}\left\{ \left[ \prod _{j>2:\,r_j=1} \theta _{j,\,(0,0,{\varvec{\alpha }}^*)}\right] \big [ g_1 (p_{(0,0,{\varvec{\alpha }}^*)} + p_{(0,1,{\varvec{\alpha }}^*)}) + (1-s_1) p_{(1,0,{\varvec{\alpha }}^*)} \big ]\right. \\&\qquad \left. + \left[ \prod _{j>2:\,r_j=1} \theta _{j,\,(1,1,{\varvec{\alpha }}^*)}\right] (1-s_1) p_{(1,1,{\varvec{\alpha }}^*)} \right\} \\&\quad = \sum _{{\varvec{\alpha }}^*}\left\{ \left[ \prod _{j>2:\,r_j=1} \theta _{j,\,(0,0,{\varvec{\alpha }}^*)}\right] \big [ \bar{g}_1 (\bar{p}_{(0,0,{\varvec{\alpha }}^*)} + \bar{p}_{(0,1,{\varvec{\alpha }}^*)}) + (1-s_1) \bar{p}_{(1,0,{\varvec{\alpha }}^*)} \big ] \right. \\&\qquad \left. + \left[ \prod _{j>2:\,r_j=1} \theta _{j,\,(1,1,{\varvec{\alpha }}^*)}\right] (1-s_1) \bar{p}_{(1,1,{\varvec{\alpha }}^*)} \right\} . \end{aligned}$$To ensure the above equation holds, it suffices to have the following equations satisfied for any \({\varvec{\alpha }}^*\in \{0,1\}^{K-2}\)

$$\begin{aligned} {\left\{ \begin{array}{ll} p_{(1,1,{\varvec{\alpha }}^*)}= \bar{p}_{(1,1,{\varvec{\alpha }}^*)} ;\\ g_1 [p_{(0,0,{\varvec{\alpha }}^*)} + p_{(0,1,{\varvec{\alpha }}^*)}] + (1-s_1) p_{(1,0,{\varvec{\alpha }}^*)}= \bar{g}_1 [\bar{p}_{(0,0,{\varvec{\alpha }}^*)} + \bar{p}_{(0,1,{\varvec{\alpha }}^*)}] + (1-s_1) \bar{p}_{(1,0,{\varvec{\alpha }}^*)}. \end{array}\right. } \end{aligned}\tag{15}$$

(c)

For any \({\varvec{r}}\) such that \((r_1,r_2) = (0,1)\) and \({\varvec{r}}_{S_1}\ne \mathbf {0}\), by symmetry to the previous case of \((r_1,r_2)=(1,0)\), when the following equations hold for any \({\varvec{\alpha }}^*\in \{0,1\}^{K-2}\), Eq. (12) is guaranteed to hold

$$\begin{aligned} {\left\{ \begin{array}{ll} p_{(1,1,{\varvec{\alpha }}^*)} = \bar{p}_{(1,1,{\varvec{\alpha }}^*)} ;\\ g_2 [p_{(0,0,{\varvec{\alpha }}^*)} + p_{(1,0,{\varvec{\alpha }}^*)}] + (1-s_2) p_{(0,1,{\varvec{\alpha }}^*)} = \bar{g}_2 [\bar{p}_{(0,0,{\varvec{\alpha }}^*)} + \bar{p}_{(1,0,{\varvec{\alpha }}^*)}] + (1-s_2) \bar{p}_{(0,1,{\varvec{\alpha }}^*)}. \end{array}\right. } \end{aligned}\tag{16}$$

(d)

For any \({\varvec{r}}\) such that \((r_1,r_2) = (1,1)\) and \({\varvec{r}}_{S_1}\ne \mathbf {0}\), similarly to the previous cases, Eq. (12) can be equivalently written as

$$\begin{aligned}&\sum _{{\varvec{\alpha }}^*}\left\{ \left[ \prod _{j>2:\,r_j=1} \theta _{j,\,(0,0,{\varvec{\alpha }}^*)}\right] \big [ g_1 g_2 p_{(0,0,{\varvec{\alpha }}^*)} + (1-s_1) g_2 p_{(1,0,{\varvec{\alpha }}^*)} + g_1 (1-s_2) p_{(0,1,{\varvec{\alpha }}^*)} \big ] \right. \\&\qquad \left. + \left[ \prod _{j>2:\,r_j=1} \theta _{j,\,(1,1,{\varvec{\alpha }}^*)}\right] (1-s_1) (1-s_2) p_{(1,1,{\varvec{\alpha }}^*)} \right\} \\&\quad = \sum _{{\varvec{\alpha }}^*}\left\{ \left[ \prod _{j>2:\,r_j=1} \theta _{j,\,(0,0,{\varvec{\alpha }}^*)}\right] \big [ \bar{g}_1\bar{g}_2 \bar{p}_{(0,0,{\varvec{\alpha }}^*)} + (1-s_1) \bar{g}_2 \bar{p}_{(1,0,{\varvec{\alpha }}^*)} +\bar{g}_1 (1-s_2) \bar{p}_{(0,1,{\varvec{\alpha }}^*)} \big ]\right. \\&\qquad \left. + \left[ \prod _{j>2:\,r_j=1} \theta _{j,\,(1,1,{\varvec{\alpha }}^*)}\right] (1-s_1) (1-s_2) \bar{p}_{(1,1,{\varvec{\alpha }}^*)} \right\} . \end{aligned}$$To ensure the above equation holds, it suffices to have the following equations satisfied for any \({\varvec{\alpha }}^*\in \{0,1\}^{K-2}\)

$${\left\{ \begin{array}{l} p_{(1,1,{\varvec{\alpha }}^*)} = \bar{p}_{(1,1,{\varvec{\alpha }}^*)}; \\ g_1 g_2 p_{(0,0,{\varvec{\alpha }}^*)} + (1-s_1) g_2 p_{(1,0,{\varvec{\alpha }}^*)} + g_1 (1-s_2) p_{(0,1,{\varvec{\alpha }}^*)} \\ \qquad =\bar{g}_1 \bar{g}_2 \bar{p}_{(0,0,{\varvec{\alpha }}^*)} + (1-s_1) \bar{g}_2 \bar{p}_{(1,0,{\varvec{\alpha }}^*)} +\bar{g}_1 (1-s_2 ) \bar{p}_{(0,1,{\varvec{\alpha }}^*)}. \end{array}\right. }\tag{17}$$
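All four cases rest on the partition \(S_0\cup S_1=\{3,\ldots ,J\}\) and on the equalities in (13). The sketch below checks both numerically for a hypothetical Q that stacks a \(3\times 3\) identity block on top of a \(Q^*\) whose first two columns coincide, so that Condition 2 fails. Items are 0-indexed in the code, so the text's items 1 and 2 are items 0 and 1 there; the values of \({\varvec{s}}\) and \({\varvec{g}}\) are arbitrary assumptions.

```python
import itertools

import numpy as np

# Hypothetical Q = (identity; Q*) whose Q* has identical first two columns,
# so Condition 2 fails.
Q_star = np.array([[1, 1, 0],
                   [0, 0, 1],
                   [1, 1, 1],
                   [0, 0, 1]])
Q = np.vstack([np.eye(3, dtype=int), Q_star])
J, K = Q.shape
s = np.full(J, 0.1)
g = np.linspace(0.1, 0.3, J)   # arbitrary guessing parameters

alphas = np.array(list(itertools.product([0, 1], repeat=K)))
xi = np.all(alphas[None, :, :] >= Q[:, None, :], axis=2)
theta = np.where(xi, (1 - s)[:, None], g[:, None])   # theta[j, a] = P(R_j = 1 | alpha)
col = {tuple(a.tolist()): i for i, a in enumerate(alphas)}

# Items are 0-indexed here, so "j > 2" in the text becomes j >= 2.
S0 = [j for j in range(2, J) if Q[j, 0] == Q[j, 1] == 0]
S1 = [j for j in range(2, J) if Q[j, 0] == Q[j, 1] == 1]
assert sorted(S0 + S1) == list(range(2, J))   # every remaining item is in S0 or S1

for a_star in itertools.product([0, 1], repeat=K - 2):
    t = {ab: theta[:, col[ab + a_star]] for ab in [(0, 0), (0, 1), (1, 0), (1, 1)]}
    for j in S0:   # all four profiles share theta_j
        assert t[(0, 0)][j] == t[(0, 1)][j] == t[(1, 0)][j] == t[(1, 1)][j]
    for j in S1:   # the three profiles other than (1, 1) share theta_j
        assert t[(0, 0)][j] == t[(0, 1)][j] == t[(1, 0)][j]
print("S0 =", S0, "S1 =", S1)   # S0 = [2, 4, 6] S1 = [3, 5]
```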

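We further consider those response vectors with \({\varvec{r}}_{S_1}=\mathbf {0}\). A similar argument shows that, to ensure (12) holds for any \({\varvec{r}}\) with \({\varvec{r}}_{S_1}=\mathbf {0}\), it suffices to have Eqs. (14)–(17) hold. Together with the results in cases (a)–(d) discussed above, we know that Eqs. (14)–(17) are a set of sufficient conditions for (12) to hold for any \({\varvec{r}}\in \{0,1\}^J\). Therefore, to show the necessity of Condition 2, we only need to construct \((\bar{g}_1,\bar{g}_2,\bar{{\varvec{p}}})\ne ( g_1, g_2, {\varvec{p}}) \) satisfying (14)–(17), which can be equivalently written as, for any \({\varvec{\alpha }}^*\in \{0,1\}^{K-2}\), \(p_{(1,1,{\varvec{\alpha }}^*)} = \bar{p}_{(1,1,{\varvec{\alpha }}^*)}\) and

$${\left\{ \begin{array}{l} p_{(0,0,{\varvec{\alpha }}^*)} + p_{(1,0,{\varvec{\alpha }}^*)} + p_{(0,1,{\varvec{\alpha }}^*)} = \bar{p}_{(0,0,{\varvec{\alpha }}^*)} +\bar{p}_{(1,0,{\varvec{\alpha }}^*)} +\bar{p}_{(0,1,{\varvec{\alpha }}^*)};\\ g_1 [p_{(0,0,{\varvec{\alpha }}^*)} + p_{(0,1,{\varvec{\alpha }}^*)}] + (1-s_1) p_{(1,0,{\varvec{\alpha }}^*)}= \bar{g}_1 [\bar{p}_{(0,0,{\varvec{\alpha }}^*)} + \bar{p}_{(0,1,{\varvec{\alpha }}^*)}] + (1-s_1) \bar{p}_{(1,0,{\varvec{\alpha }}^*)};\\ g_2 [p_{(0,0,{\varvec{\alpha }}^*)} + p_{(1,0,{\varvec{\alpha }}^*)}] + (1-s_2) p_{(0,1,{\varvec{\alpha }}^*)} = \bar{g}_2 [\bar{p}_{(0,0,{\varvec{\alpha }}^*)} + \bar{p}_{(1,0,{\varvec{\alpha }}^*)}] + (1-s_2) \bar{p}_{(0,1,{\varvec{\alpha }}^*)};\\ g_1 g_2 p_{(0,0,{\varvec{\alpha }}^*)} + (1-s_1) g_2 p_{(1,0,{\varvec{\alpha }}^*)} + g_1 (1-s_2) p_{(0,1,{\varvec{\alpha }}^*)} \\ \qquad =\bar{g}_1 \bar{g}_2 \bar{p}_{(0,0,{\varvec{\alpha }}^*)} + (1-s_1) \bar{g}_2 \bar{p}_{(1,0,{\varvec{\alpha }}^*)} +\bar{g}_1 (1-s_2 ) \bar{p}_{(0,1,{\varvec{\alpha }}^*)}. \end{array}\right. }\tag{18}$$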
To construct \((\bar{g}_1,\bar{g}_2,\bar{{\varvec{p}}})\ne ( g_1, g_2, {\varvec{p}}) \), we focus on the family of parameters \(({\varvec{s}},{\varvec{g}},{\varvec{p}})\) such that for any \({\varvec{\alpha }}^*\in \{0,1\}^{K-2}\),

where u and v are some positive constants. Next we choose \( \bar{{\varvec{p}}}\) such that for any \({\varvec{\alpha }}^*\in \{0,1\}^{K-2}\)

for some positive constants \(\bar{\rho }\), \(\bar{u}\) and \(\bar{v}\) to be determined. In particular, we choose \(\bar{\rho }\) close enough to 1 and then (18) is equivalent to

For any \(g_1,g_2, s_1, s_2, u\) and v, the above system of equations contains five free parameters, \(\bar{\rho }\), \(\bar{u}\), \(\bar{v}\), \(\bar{g}_1\) and \(\bar{g}_2\), but only four constraints, so there are infinitely many solutions \((\bar{\rho }, \bar{u}, \bar{v}, \bar{g}_1, \bar{g}_2)\) to (19). This gives the non-identifiability of \((g_1, g_2,{\varvec{p}})\) and hence justifies the necessity of Condition 2. \(\square \)
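The necessity argument can be checked numerically in the smallest case, K = 2, where \({\varvec{\alpha }}^*\) is empty and (18) reduces to four equations. Rather than the \((\bar{\rho },\bar{u},\bar{v})\) parameterization used above, the sketch below solves (18) in closed form, which is our own device: writing \(M_0,M_1,M_2,M_{12}\) for the four left-hand sides of (18), one can check that \((\bar{g}_1,\bar{g}_2,\bar{{\varvec{p}}})\) reproduces all four whenever \((M_0\bar{g}_1-M_1)(M_0\bar{g}_2-M_2)=M_1M_2-M_0M_{12}\), provided the denominators below are nonzero and the resulting \(\bar{{\varvec{p}}}\) is positive. Perturbing \(g_1\) and solving for the rest then yields a distinct parameter set with the same vector \(T{\varvec{p}}\). The Q-matrix (each attribute required by three items, identical columns in \(Q^*\)), the parameter values, and the function names are hypothetical.

```python
import itertools

import numpy as np


def t_matrix(Q, s, g):
    """T[i, a] = P(R >= r_i | alpha_a); rows and columns in itertools.product order."""
    J, K = Q.shape
    alphas = np.array(list(itertools.product([0, 1], repeat=K)))
    xi = np.all(alphas[None, :, :] >= Q[:, None, :], axis=2)
    theta = np.where(xi, (1 - s)[:, None], g[:, None])
    return np.array([np.prod(theta[np.array(r, dtype=bool)], axis=0)
                     for r in itertools.product([0, 1], repeat=J)])


def alternative_parameters(s, g, p, delta):
    """Closed-form (g_bar, p_bar) solving (18) for K = 2 (a single, empty alpha*).

    p is ordered (p_00, p_01, p_10, p_11), where p_01 means alpha = (0, 1).
    """
    p00, p01, p10, p11 = p
    a, b = g[0], g[1]              # guessing parameters of items 1 and 2
    c, d = 1 - s[0], 1 - s[1]      # 1 - slipping parameters of items 1 and 2
    # Left-hand sides of the four equations in (18).
    M0 = p00 + p10 + p01
    M1 = a * (p00 + p01) + c * p10
    M2 = b * (p00 + p10) + d * p01
    M12 = a * b * p00 + c * b * p10 + a * d * p01
    # Any (a_bar, b_bar) on this hyperbola reproduces all four left-hand sides.
    a_bar = a + delta
    b_bar = M2 / M0 + (M1 * M2 - M0 * M12) / (M0 * (M0 * a_bar - M1))
    p10_bar = (M1 - a_bar * M0) / (c - a_bar)
    p01_bar = (M2 - b_bar * M0) / (d - b_bar)
    p00_bar = M0 - p10_bar - p01_bar
    g_bar = g.copy()
    g_bar[:2] = a_bar, b_bar
    return g_bar, np.array([p00_bar, p01_bar, p10_bar, p11])


# Hypothetical Q whose Q* = [[1, 1], [1, 1]] has two identical columns.
Q = np.array([[1, 0], [0, 1], [1, 1], [1, 1]])
s = np.array([0.10, 0.20, 0.15, 0.25])
g = np.array([0.20, 0.30, 0.10, 0.20])
p = np.array([0.25, 0.20, 0.30, 0.25])   # alpha = (0,0), (0,1), (1,0), (1,1)

g_bar, p_bar = alternative_parameters(s, g, p, delta=0.05)
T = t_matrix(Q, s, g)
T_bar = t_matrix(Q, s, g_bar)            # s_bar = s, as in the construction
print(g_bar[:2], p_bar)
print(np.max(np.abs(T @ p - T_bar @ p_bar)))   # at floating-point round-off level
```

Varying `delta` over a small interval traces out a one-parameter family of such solutions, which matches the count of five unknowns against four constraints.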

Proof of Sufficiency

It suffices to show that if \(T({\varvec{s}},{\varvec{g}}){\varvec{p}}= T(\bar{{\varvec{s}}},\bar{{\varvec{g}}})\bar{{\varvec{p}}}\), then \(({\varvec{s}},{\varvec{g}},{\varvec{p}})= ( \bar{{\varvec{s}}},\bar{{\varvec{g}}},\bar{{\varvec{p}}})\). Under Condition 1, Theorem 4 in Xu and Zhang (2016) gives that \({\varvec{s}}=\bar{{\varvec{s}}}\) and \(g_j = \bar{g}_j\) for \(j\in \{K+1,\ldots ,J\}.\) It remains to show \(g_j = \bar{g}_j\) for \(j\in \{1,\ldots ,K\}\). To facilitate the proof, we introduce the following lemma, whose proof is given in the Supplementary Material.

Lemma 1

Suppose Condition 1 is satisfied. For an item set S, define \(\vee _{h\in S\,}{\varvec{q}}_h \) to be the element-wise maximum of the \({\varvec{q}}\)-vectors of the items in S. For any \(k\in \{1,\ldots ,K\}\), if there exist two item sets, denoted by \(S_k^-\) and \(S_k^+\), which need not be nonempty or disjoint, such that

then \(g_k = \bar{g}_k\).

Suppose the Q-matrix takes the form of (3). Then, under Condition 2, the K columns of the \((J-K)\times K\) submatrix \(Q^* \) in (3) are mutually distinct. Before proceeding with the proof, we first introduce the concept of the “lexicographic order.” We denote the lexicographic order on \(\{0,1\}^{J-K}\), the space of all \((J-K)\)-dimensional binary vectors, by “\(\prec _{\text {lex}}\).” Specifically, for any \({\varvec{a}}=(a_1,\ldots ,a_{J-K})^\top \), \({\varvec{b}}=(b_1,\ldots ,b_{J-K})^\top \in \{0,1\}^{J-K}\), we write \({\varvec{a}}\prec _{\text {lex}}{\varvec{b}}\) if either \(a_1<b_1\), or there exists some \(i\in \{2,\ldots ,J-K\}\) such that \(a_i<b_i\) and \(a_j=b_j\) for all \(j<i\). For instance, the following four vectors \({\varvec{a}}_1,{\varvec{a}}_2,{\varvec{a}}_3,{\varvec{a}}_4\) in \(\{0,1\}^2\) are sorted in increasing lexicographic order:

$${\varvec{a}}_1=(0,0)^\top \prec _{\text {lex}}{\varvec{a}}_2=(0,1)^\top \prec _{\text {lex}}{\varvec{a}}_3=(1,0)^\top \prec _{\text {lex}}{\varvec{a}}_4=(1,1)^\top .$$
It is not hard to see that if the K column vectors of the submatrix \(Q^*\) are mutually distinct, then there is a unique way to sort them in increasing lexicographic order. Thus under Condition 2, there exists a unique permutation \((k_1,k_2,\ldots ,k_K)\) of \((1,2,\ldots ,K)\) such that column \(k_1\) has the smallest lexicographic order among the K columns of \(Q^*\), column \(k_2\) has the second smallest, and so on, i.e., \(Q^*_{\bullet ,k_1}\prec _{\text {lex}}Q^*_{\bullet ,k_2}\prec _{\text {lex}}\cdots \prec _{\text {lex}}Q^*_{\bullet ,k_K}\), where \(Q^*_{\bullet ,k}\) denotes the kth column of \(Q^*\). As an illustration, for the leftmost Q-matrix presented in Example 1, Eq. (6), the permutation is \((k_1,k_2,k_3)=(3,2,1)\), since the third column of \(Q^*\) has the smallest lexicographic order, while the first column has the largest. Recall that we write \({\varvec{a}}\succeq {\varvec{b}}\) if \(a_i\ge b_i\) for all i, and \({\varvec{a}}\nsucceq {\varvec{b}}\) otherwise. Then by definition, if \({\varvec{a}}\prec _{\text {lex}}{\varvec{b}}\), then \({\varvec{a}}\nsucceq {\varvec{b}}\) must hold, because \(a_i<b_i\) at the first index i where the two vectors differ. Therefore, for any \(1\le i<j\le K\), since \(Q^*_{\bullet ,k_i}\prec _{\text {lex}}Q^*_{\bullet ,k_j}\), we must have \(Q^*_{\bullet ,k_i}\nsucceq Q^*_{\bullet ,k_j}\). This fact will be useful in the following proof.
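Python compares tuples lexicographically, which matches \(\prec _{\text {lex}}\) for binary vectors of equal length, so the permutation \((k_1,\ldots ,k_K)\) can be computed by sorting the column indices of \(Q^*\) by their columns. The sketch below does this for a hypothetical \(3\times 3\) matrix \(Q^*\) (not the one in Example 1) and checks the fact just established: a column earlier in the order never dominates a later one. The function names are ours.

```python
import numpy as np


def lex_permutation(Q_star):
    """1-based (k_1, ..., k_K) sorting the columns of Q* in increasing lex order."""
    cols = [tuple(Q_star[:, k].tolist()) for k in range(Q_star.shape[1])]
    if len(set(cols)) < len(cols):
        raise ValueError("two columns of Q* coincide, so Condition 2 fails")
    return [k + 1 for k in sorted(range(len(cols)), key=lambda k: cols[k])]


def dominates(a, b):
    """True iff a_i >= b_i for every i, i.e. a succeq b."""
    return all(x >= y for x, y in zip(a, b))


Q_star = np.array([[1, 0, 1],
                   [0, 1, 1],
                   [1, 1, 0]])        # hypothetical Q* with distinct columns
perm = lex_permutation(Q_star)
print(perm)   # [2, 1, 3]

# A column earlier in the lexicographic order never dominates a later one.
ordered = [Q_star[:, k - 1] for k in perm]
for i in range(len(ordered)):
    for j in range(i + 1, len(ordered)):
        assert not dominates(ordered[i], ordered[j])
```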

Equipped with the permutation \((k_1,\ldots ,k_K)\), we first prove \(g_{k_1} = \bar{g}_{k_1}\). Define a subset of items

$$S_{k_1}^- = \{j\in \{K+1,\ldots ,J\}:\ q_{j,k_1}=0\},$$

which includes those items from \(\{K+1,\ldots ,J\}\) that do not require attribute \(k_1\). Since the \(k_1\)th column of \(Q^*\) has the smallest lexicographic order among the column vectors of \(Q^*\), for any \(k\in \{1,\ldots ,K\}\backslash \{k_1\}\), the \(k_1\)th column of \(Q^*\) is of smaller lexicographic order than the kth column, so that, by the fact established above, the \(k_1\)th column is not \(\succeq \) the kth column. Thus, for any \(k\in \{1,\ldots ,K\}\backslash \{k_1\}\) there must exist some item \(j_k\in \{K+1,\ldots ,J\}\) such that \(q_{j_k,k} = 1 > 0 = q_{j_k,k_1},\) which indicates that the union of the attributes required by items in \(S_{k_1}^-\) includes all the attributes other than \(k_1\). Since no item in \(S_{k_1}^-\) requires attribute \(k_1\), this gives

$$\vee _{h\in S_{k_1}^-}{\varvec{q}}_h = (\mathbf {1}-{\varvec{e}}_{k_1})^\top .$$
We further define \(S_{k_1}^+ = \{K+1,\ldots ,J\}\). Since \(S_{k_1}^-\) and \(S_{k_1}^+\) satisfy conditions (20) in Lemma 1 for attribute \(k_1\), we have \(g_{k_1} = \bar{g}_{k_1}.\)

Next we use induction to prove that for \(l=2,\ldots ,K\), we also have \(g_{k_l}=\bar{g}_{k_l}\). In particular, suppose that for any \(1\le m\le l-1\), we already have \(g_{k_m} = \bar{g}_{k_m}\). Note that each \(k_l\) is an integer in \(\{1,\ldots ,K\}\) that can be viewed either as the index of the \(k_l\)th attribute or as the index of the \(k_l\)th item. Define a set of items

$$S_{k_l}^- = \{j>K:\ q_{j,k_l}=0\}\cup \{k_m:\ 1\le m\le l-1\},$$

where the set \(\{j > K : q_{j,k_l}=0\}\) contains those items, among the last \(J-K\) items, which do not require attribute \(k_l\), while the set \(\{k_m :1\le m\le l-1\}\) contains those items for which we have already established the identifiability of the guessing parameter in steps \(m=1,2,\ldots ,l-1\) of the induction, i.e., \(g_{k_m}=\bar{g}_{k_m}\) for \(m=1,\ldots ,l-1\). Thus for any item \(j\in S_{k_l}^-\), we have \(g_{j} = \bar{g}_{j}\). Namely, \(S_{k_l}^-\) includes the items whose guessing parameters have already been identified prior to step l of the induction. Moreover, we claim

$$\vee _{h\in S_{k_l}^-}{\varvec{q}}_h = (\mathbf {1}-{\varvec{e}}_{k_l})^\top .$$
This is because for any \(1\le m\le l-1\), the item \(k_m\), whose \({\varvec{q}}\)-vector is \({\varvec{e}}_{k_m}^\top \), is included in the set \(S_{k_l}^-\), and hence attribute \(k_m\) is required by the set \(S_{k_l}^-\); on the other hand, for any \(h\in \{l+1,\ldots , K\}\), the \(k_h\)th column of \(Q^*\) is of greater lexicographic order than the \(k_l\)th column, and hence, by the fact established above, there must exist some item in \(S_{k_l}^-\) that does not require attribute \(k_l\) but requires attribute \(k_h\). Finally, no item in \(S_{k_l}^-\) requires attribute \(k_l\). We further define \(S_{k_l}^+ = \{K+1,\ldots ,J\}\). The chosen \(S_{k_l}^-\) and \(S_{k_l}^+\) satisfy conditions (20) in Lemma 1, and therefore \(g_{k_l} = \bar{g}_{k_l}.\)
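To see the induction at work, the sketch below builds \(S_{k_l}^-\) at each step for a hypothetical Q that stacks a \(3\times 3\) identity block on top of a \(Q^*\) with distinct columns, and checks the covering claim, namely that the attributes required by the items in \(S_{k_l}^-\) are exactly all attributes except \(k_l\). Indices are 0-based in the code and reported 1-based in the printout; the matrix is an illustrative assumption, and the check does not cover the remaining hypotheses of Lemma 1.

```python
import numpy as np

# Hypothetical Q = (identity; Q*) with distinct columns in Q* (Condition 2).
Q_star = np.array([[1, 0, 1],
                   [0, 1, 1],
                   [1, 1, 0]])
K = Q_star.shape[1]
Q = np.vstack([np.eye(K, dtype=int), Q_star])
J = Q.shape[0]

# Permutation (k_1, ..., k_K), 0-based here: columns of Q* in increasing lex order.
perm = sorted(range(K), key=lambda k: tuple(Q_star[:, k].tolist()))

for l, k_l in enumerate(perm):
    # S^-_{k_l}: items beyond the first K that skip attribute k_l, plus the
    # identity items k_1, ..., k_{l-1} handled at earlier induction steps.
    S_minus = [j for j in range(K, J) if Q[j, k_l] == 0] + perm[:l]
    covered = Q[S_minus].max(axis=0) if S_minus else np.zeros(K, dtype=int)
    target = np.ones(K, dtype=int) - np.eye(K, dtype=int)[k_l]   # 1 - e_{k_l}
    assert np.array_equal(covered, target), (k_l, S_minus)
    print(f"step {l + 1}: k_l = {k_l + 1}, S^- = {[j + 1 for j in S_minus]}")
```

For this Q the printed sets are \(\{4\}\), \(\{5,2\}\) and \(\{6,2,1\}\) (1-based), showing how the identity items identified earlier fill in the attributes that the remaining items do not cover.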

Now that all the slipping and guessing parameters have been identified, \(T({\varvec{s}},{\varvec{g}}) {\varvec{p}}= T(\bar{{\varvec{s}}},\bar{{\varvec{g}}}) \bar{{\varvec{p}}} = T({\varvec{s}},{\varvec{g}}) \bar{{\varvec{p}}}\). Then, the fact that \(T({\varvec{s}},{\varvec{g}})\) has full column rank, which is shown in the proof of Theorem 1 in Xu and Zhang (2016), implies \({\varvec{p}}= \bar{{\varvec{p}}}.\) This completes the proof. \(\square \)
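The last step uses the fact that \(T({\varvec{s}},{\varvec{g}})\) has full column rank. As a numerical illustration only, not a proof, the sketch below builds T for a hypothetical Q that stacks a \(3\times 3\) identity block on top of \(Q^*\), with randomly drawn \({\varvec{s}}\) and \({\varvec{g}}\), and checks its rank with NumPy's SVD-based `np.linalg.matrix_rank`. For this Q, the rows \({\varvec{r}}\) supported on the first K items already form, up to the ordering of rows and columns, the Kronecker product of the \(2\times 2\) blocks \(\begin{pmatrix}1&1\\ g_k&1-s_k\end{pmatrix}\), which is invertible whenever \(g_k\ne 1-s_k\); hence the rank is \(2^K=8\).

```python
import itertools

import numpy as np

Q_star = np.array([[1, 0, 1],
                   [0, 1, 1],
                   [1, 1, 0]])
Q = np.vstack([np.eye(3, dtype=int), Q_star])   # hypothetical Q = (identity; Q*)
J, K = Q.shape
rng = np.random.default_rng(0)
s = rng.uniform(0.05, 0.3, size=J)              # hypothetical slipping parameters
g = rng.uniform(0.05, 0.3, size=J)              # hypothetical guessing parameters

alphas = np.array(list(itertools.product([0, 1], repeat=K)))
xi = np.all(alphas[None, :, :] >= Q[:, None, :], axis=2)
theta = np.where(xi, (1 - s)[:, None], g[:, None])
# Row r of T is the element-wise product of the rows e_j with r_j = 1.
T = np.array([np.prod(theta[np.array(r, dtype=bool)], axis=0)
              for r in itertools.product([0, 1], repeat=J)])

rank = np.linalg.matrix_rank(T)
print(T.shape, rank)   # (64, 8) 8, i.e. full column rank
```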

Cite this article

Gu, Y., Xu, G. The Sufficient and Necessary Condition for the Identifiability and Estimability of the DINA Model. Psychometrika 84, 468–483 (2019). https://doi.org/10.1007/s11336-018-9619-8
