Abstract

Covariance structure analysis and its structural equation modeling extensions have become one of the most widely used methodologies in social sciences such as psychology, education, and economics. An important issue in such analysis is to assess the goodness of fit of a model under analysis. One of the most popular test statistics used in covariance structure analysis is the asymptotically distribution-free (ADF) test statistic introduced by Browne (Br J Math Stat Psychol 37:62–83, 1984). The ADF statistic can be used to test models without any specific distribution assumption (e.g., multivariate normal distribution) of the observed data. Despite its advantage, it has been shown in various empirical studies that unless sample sizes are extremely large, this ADF statistic could perform very poorly in practice. In this paper, we provide a theoretical explanation for this phenomenon and further propose a modified test statistic that improves the performance in samples of realistic size. The proposed statistic deals with the possible ill-conditioning of the involved large-scale covariance matrices.

1 Introduction

Structural equation modeling or covariance structure analysis has become a popular tool of quantitative research in the social sciences (e.g., Bollen, 1989; Lee, 1990; Kano, 2002; Tomarken & Waller, 2005; Xu & Mackenzie, 2012; Wu & Browne, 2015). In the analysis of covariance structures, it is hypothesized that the \(p\times p\) population covariance matrix \(\varvec{\Sigma }\) can be represented as a matrix function \(\varvec{\Sigma }(\varvec{\theta })\) of q unknown parameters. Assuming that the population has a multivariate normal distribution, the maximum likelihood (ML) estimate \(\hat{\varvec{\theta }}\) is obtained by minimizing

$$\begin{aligned} F_\mathrm{ML}(\varvec{\theta })=\log |\varvec{\Sigma }(\varvec{\theta })|+\mathrm{tr}\big [\varvec{S}\varvec{\Sigma }(\varvec{\theta })^{-1}\big ]-\log |\varvec{S}|-p, \end{aligned}$$

where \(\varvec{S}\) is the sample covariance matrix based on a sample of size n. The associated ML test statistic for evaluating the hypothetical model is \(T_\mathrm{ML}=nF_\mathrm{ML}(\hat{\varvec{\theta }})\) (Browne, 1982).

It is known that under the assumption of multivariate normality and the null hypothesis (and some mild regularity conditions), \(T_\mathrm{ML}\) is asymptotically distributed as Chi-square with \(p^*-q\) degrees of freedom, where \(p^*=p(p+1)/2\). The ML method has been implemented in virtually all structural equation modeling software packages and widely used in practice. Although it could be guaranteed that \(T_\mathrm{ML}\) has an asymptotic Chi-square distribution even without the assumption of multivariate normality of the data in some situations (Amemiya & Anderson, 1990; Browne & Shapiro, 1987), it has been demonstrated that the test statistic \(T_\mathrm{ML}\) can break down completely for nonnormally distributed data in many empirical studies (e.g., Hu et al., 1992).

Since the assumption of multivariate normality is often unrealistic, Browne (1984) proposed the asymptotically distribution-free (ADF) test statistic, which does not require any specific distributional assumption about the observed data. Unfortunately, it has been shown in various empirical studies that unless sample sizes are extremely large (e.g., more than 4000 observations, depending on the model and data analyzed), the ADF statistic can perform very poorly (e.g., Hoogland & Boomsma, 1998; Boomsma & Hoogland, 2001).

The goal of this paper is twofold. First, we aim to give a theoretical explanation for some of the observed behaviors of this statistic. Second, we suggest a modification that could lead to improved behavior in samples of realistic size. Unlike existing modifications of Browne’s test statistic suggested in the literature (e.g., Yuan & Bentler, 1998, 1999), our approach is mainly concerned with the potential ill-conditioning of the large-scale covariance matrices involved in the calculation of the test statistic. We provide an asymptotic analysis of the proposed test statistic and further explain why and how the suggested adjustments could improve the behavior of the ADF statistic. In addition, we present an illustrative numerical example that uses the Monte Carlo method to study and compare the finite sample behavior of the ADF statistic and the proposed statistic.

2 Preliminary Discussion

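Let \(\varvec{X}=(X_1,\ldots ,X_p)'\) be a \(p\times 1\) random vector with mean \(\varvec{\mu }={\mathbb {E}}[\varvec{X}]\) and covariance matrix

$$\begin{aligned} \varvec{\Sigma }={\mathbb {E}}\big [(\varvec{X}-\varvec{\mu })(\varvec{X}-\varvec{\mu })'\big ]. \end{aligned}$$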

For a \(p\times 1\) vector \(\varvec{a}\) we have that \(\mathrm{Var}(\varvec{a}'\varvec{X})=\varvec{a}'\varvec{\Sigma }\varvec{a}\), and hence \(\varvec{a}'(\varvec{X}-\varvec{\mu })\) is identically zero iff \(\varvec{a}'\varvec{\Sigma }\varvec{a}=0\). It follows that the matrix \(\varvec{\Sigma }\) is singular iff the elements of random vector \(\varvec{X}-\varvec{\mu }\) are linearly dependent. Moreover, \(\varvec{\Sigma }\) has rank \(r<p\), iff there exist \(p-r\) linearly independent vectors \(\varvec{a}_1,\ldots ,\varvec{a}_{p-r}\) such that \(\varvec{a}_i'\varvec{\Sigma }\varvec{a}_i=0\), \(i=1,\ldots ,p-r\). This leads to the following result.

Proposition 1

Rank of the covariance matrix \(\varvec{\Sigma }\) is equal to the maximal number of linearly independent elements of \(\varvec{X}-\varvec{\mu }\).

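Let \(\varvec{X}^1,\ldots ,\varvec{X}^n\) be an iid sample of vector \(\varvec{X}\) with sample mean \(\bar{\varvec{X}}=n^{-1}\sum _{v=1}^n \varvec{X}^v\), and let

$$\begin{aligned} \varvec{S}=(n-1)^{-1}\sum _{v=1}^n (\varvec{X}^v-\bar{\varvec{X}})(\varvec{X}^v-\bar{\varvec{X}})' \end{aligned}$$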

be the corresponding sample covariance matrix. We have that if \(\varvec{a}'(\varvec{X}-\varvec{\mu })\equiv 0\), then \(\varvec{a}'(\varvec{X}^v-\bar{\varvec{X}})\equiv 0\), \(v=1,\ldots ,n\), and hence, \(\mathrm{rank}(\varvec{S})\le \mathrm{rank}(\varvec{\Sigma })\). In fact, if \(n>p\) and the random vector \(\varvec{X}\) has a continuous distribution, then \(\mathrm{rank}(\varvec{S})=\mathrm{rank}(\varvec{\Sigma })\) w.p.1. Denote by \(\varvec{\sigma }=\mathrm{vecs}(\varvec{\Sigma })\) and \(\varvec{s}=\mathrm{vecs}(\varvec{S})\) the corresponding \(p^*\times 1\) vectors formed from the nonduplicated elements of the respective matrices, i.e., the elements on and above the diagonal. Recall that \(\varvec{s}\) is an unbiased estimator of \(\varvec{\sigma }\), i.e., the expected value \({\mathbb {E}}[\varvec{s}]=\varvec{\sigma }\).

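Consider the \(p^*\times 1\) vector \(\varvec{Y}\) with elements \((X_i-\mu _i)(X_j-\mu _j)\), \(i\le j\), and let \(\varvec{\Gamma }\) be the covariance matrix of random vector \(\varvec{Y}\). That is, \({\mathbb {E}}[\varvec{Y}]=\varvec{\sigma }\) and

$$\begin{aligned} \varvec{\Gamma }={\mathbb {E}}\big [(\varvec{Y}-\varvec{\sigma })(\varvec{Y}-\varvec{\sigma })'\big ], \end{aligned}$$

with the typical element

$$\begin{aligned} [\varvec{\Gamma }]_{ij,kl}={\mathbb {E}}\big [(X_i-\mu _i)(X_j-\mu _j)(X_k-\mu _k)(X_l-\mu _l)\big ]-\sigma _{ij}\sigma _{kl}. \end{aligned}$$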

Assuming existence (finiteness) of the fourth-order moments, we have by the central limit theorem that \(\sqrt{n}(\varvec{s}-\varvec{\sigma })\) converges in distribution to normal \(N({\varvec{0}},\varvec{\Gamma })\). Now, let us discuss properties of the \(p^*\times p^*\) covariance matrix \(\varvec{\Gamma }\).

Proposition 2

The following properties hold:

- (i) Rank of \(\varvec{\Gamma }\) is equal to the maximal number of linearly independent elements of \(\varvec{Y}-\varvec{\sigma }\).

- (ii) Let r be the rank of the covariance matrix \(\varvec{\Sigma }\). Then

$$\begin{aligned} \mathrm{rank}(\varvec{\Gamma })\le r(r+1)/2. \end{aligned}$$(2.3)

Proof

Property (i) follows immediately from Proposition 1.

Now suppose that \(\mathrm{rank}(\varvec{\Sigma })=r\). It follows that there are r linearly independent elements of vector \(\varvec{X}-\varvec{\mu }\) such that the other elements of \(\varvec{X}-\varvec{\mu }\) are linear functions of these r elements. By permuting elements of \(\varvec{X}-\varvec{\mu }\) if necessary, we can assume that the first r elements of \(\varvec{X}-\varvec{\mu }\) are linearly independent. Then for \(i>r\) and \(j>r\) we have that \(X_i-\mu _i=\sum _{k=1}^r a_k(X_k-\mu _k)\) and \(X_j-\mu _j=\sum _{l=1}^r b_l(X_l-\mu _l)\) for some coefficients \(a_k\) and \(b_l\). It follows that

$$\begin{aligned} (X_i-\mu _i)(X_j-\mu _j)=\sum _{k=1}^r\sum _{l=1}^r a_kb_l(X_k-\mu _k)(X_l-\mu _l). \end{aligned}$$

That is, the component \(Y_{ij}\) of \(\varvec{Y}\) is a linear combination of elements \(Y_{kl}\), \(k,l=1,\ldots ,r\). Since there are \(r(r+1)/2\) elements \(Y_{kl}\), \(k\le l=1,\ldots ,r\), this completes the proof. \(\square \)

It follows that the covariance matrix \(\varvec{\Gamma }\) is singular iff random variables \((X_i-\mu _i)(X_j-\mu _j)-\sigma _{ij}\), \(i\le j\), are linearly dependent. In particular, this implies that if \(\varvec{\Gamma }\) is singular, then the distribution of \(\varvec{X}\) is degenerate (cf., Jennrich & Satorra, 2013). It also follows that if the matrix \(\varvec{\Sigma }\) is singular, then the matrix \(\varvec{\Gamma }\) is singular. However, the converse is not true in general. That is, it can happen that \(\varvec{\Sigma }\) is nonsingular, while the corresponding matrix \(\varvec{\Gamma }\) is singular. For example, let \(p=1\) and consider the random variable X that takes two values, \(X=1\) with probability 1/2 and \(X=-1\) with probability 1/2. This random variable has zero mean and positive variance, while \(Y=X^2\) is constantly 1. As another example, for \(p=2\), let \(X_1\sim N(0,1)\), and \(X_2=X_1\) for \(|X_1|\le 1\) and \(X_2=-X_1\) for \(|X_1|> 1\). The random variables \(X_1\) and \(X_2\) have zero mean and are linearly independent, while \(X_1^2=X_2^2\). This also shows that the inequality (2.3) can be strict.
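The \(p=2\) example can be checked numerically. The following is a minimal sketch, assuming NumPy and a simulated sample; the variable names are illustrative. Because \(X_1^2\) and \(X_2^2\) coincide for every draw, the sample covariance matrix of the product vector has two identical rows and is therefore rank deficient, while the sample covariance matrix of \((X_1,X_2)'\) has full rank.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 10_000
x1 = rng.standard_normal(n)
# X2 equals X1 inside [-1, 1] and -X1 outside, as in the p = 2 example.
x2 = np.where(np.abs(x1) <= 1.0, x1, -x1)
X = np.column_stack([x1, x2])

# Sample covariance matrix of X (2 x 2).
S = np.cov(X, rowvar=False)

# Products X_i X_j, i <= j; the population means are zero, so no centering of X is applied.
Y = np.column_stack([x1 * x1, x1 * x2, x2 * x2])
gamma_hat = np.cov(Y, rowvar=False)  # 3 x 3 sample covariance matrix of the products

rank_S = np.linalg.matrix_rank(S)
rank_gamma = np.linalg.matrix_rank(gamma_hat)
print("rank(S) =", rank_S, " rank(Gamma_hat) =", rank_gamma)
```

Here the rank bound \(r(r+1)/2=3\) of (2.3) is not attained, which is the strict-inequality case mentioned in the text.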

Now let \(\varvec{Y}^v\), \(v=1,\ldots ,n\), be \(p^*\times 1\) vector composed from elements \((X^v_i-\bar{X}_i)(X^v_j-\bar{X}_j)\), \(i\le j\), and \(\bar{\varvec{Y}}=n^{-1} \sum _{v=1}^n \varvec{Y}^v\). A sample estimate of \(\varvec{\Gamma }\) is

with typical elements

Note that \(\varvec{s}=(n-1)^{-1} \sum _{v=1}^n \varvec{Y}^v\), so the estimate

is slightly different from the estimate \(\tilde{\varvec{\Gamma }}\). Note also that \({\mathbb {E}}[(X^v_i-\mu _i)(X^v_j-\mu _j)]= \sigma _{ij},\) while \( {\mathbb {E}}[(X^v_i-\bar{X}_i)(X^v_j-\bar{X}_j)]\ne \sigma _{ij}, \) i.e., \({\mathbb {E}}[\varvec{Y}^v]\ne \varvec{\sigma }\), and that both estimators \(\tilde{\varvec{\Gamma }}\) and \(\breve{\varvec{\Gamma }}\) are biased. By the law of large numbers, both estimators are consistent, i.e., converge to \(\varvec{\Gamma }\) w.p.1 as \(n\rightarrow \infty \).
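To make the construction of \(\varvec{s}\) and of a sample estimate of \(\varvec{\Gamma }\) concrete, here is a minimal sketch assuming NumPy. The function names are hypothetical, the ordering of the \(p^*\) elements (row-major over \(i\le j\)) is one possible convention, and the divisor \(n\) in the estimate of \(\varvec{\Gamma }\) is an assumption made for illustration; other divisors differ only by a factor that tends to one.

```python
import numpy as np

def centered_products(X):
    """Return s = vecs(S) and the n x p* matrix whose rows are the vectors Y^v."""
    n, p = X.shape
    Xc = X - X.mean(axis=0)
    iu, ju = np.triu_indices(p)       # index pairs (i, j) with i <= j
    Y = Xc[:, iu] * Xc[:, ju]         # row v: (X_i^v - Xbar_i)(X_j^v - Xbar_j)
    s = Y.sum(axis=0) / (n - 1)       # matches s = (n-1)^{-1} sum_v Y^v
    return s, Y

def gamma_estimate(X):
    """Sample covariance matrix of the rows Y^v (divisor n is an assumption)."""
    s, Y = centered_products(X)
    Yc = Y - Y.mean(axis=0)
    return s, Yc.T @ Yc / X.shape[0]

rng = np.random.default_rng(1)
X = rng.standard_normal((200, 3))
s, G = gamma_estimate(X)
print(s.shape, G.shape)
```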

3 Asymptotically Distribution-Free Test Statistic

Let \(\varvec{\Sigma }=\varvec{\Sigma }(\varvec{\theta })\) be a covariance structural model, and \(\varvec{\Delta }(\varvec{\theta })=\partial \varvec{\sigma }(\varvec{\theta })/\partial \varvec{\theta }\) be the corresponding \(p^*\times q\) Jacobian matrix. Here, \(\varvec{\theta }\) is \(q\times 1\) parameter vector varying in a specified parameter space \(\Theta \subset {\mathbb {R}}^q\). For the sake of simplicity we assume first that the model is correctly specified, i.e., there exists \(\varvec{\theta }_0\in \Theta \) such that \(\varvec{\Sigma }_0=\varvec{\Sigma }(\varvec{\theta }_0)\). In order to evaluate power of the considered tests, we also consider misspecified models later assuming Pitman population drift, with resulting noncentral Chi-square asymptotic distribution. Let \(\hat{\varvec{\theta }}\) be a consistent estimate of \(\varvec{\theta }_0\), and consider \(\hat{\varvec{\sigma }}= \varvec{\sigma }(\hat{\varvec{\theta }})\) and the estimate \(\hat{\varvec{\Delta }}=\varvec{\Delta }(\hat{\varvec{\theta }})\) of the population Jacobian matrix \(\varvec{\Delta }_0=\varvec{\Delta }(\varvec{\theta }_0)\). We assume throughout the paper that the mapping \(\varvec{\theta }\mapsto \varvec{\sigma }(\varvec{\theta })\) is continuously differentiable. For an \(m\times k\) matrix \(\varvec{A}\), we denote by \(\varvec{A}_c\) its orthogonal complement, i.e., \(\varvec{A}_c\) is an \(m\times (m-\nu )\) matrix of full column rank \(m-\nu \) such that \(\varvec{A}'_c \varvec{A}={\varvec{0}}\) with \(\nu =\mathrm{rank}(\varvec{A})\). All respective orthogonal complements can be obtained from a particular one by the transformation \(\varvec{A}_c \mapsto \varvec{A}_c \varvec{M}\), where \(\varvec{M}\) is an arbitrary \((m-\nu )\times (m-\nu )\) nonsingular matrix.

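Consider the following distribution-free test statistic, introduced in Browne (1984),

$$\begin{aligned} T_\mathrm{B}=n(\varvec{s}-\hat{\varvec{\sigma }})'\hat{\varvec{\Delta }}_c\big (\hat{\varvec{\Delta }}'_c\widehat{\varvec{\Gamma }}\hat{\varvec{\Delta }}_c\big )^{-1}\hat{\varvec{\Delta }}'_c(\varvec{s}-\hat{\varvec{\sigma }}), \end{aligned}$$(3.1)

where \(\widehat{\varvec{\Gamma }}\) is a consistent estimator of \(\varvec{\Gamma }\), and \(\hat{\varvec{\Delta }}_c\) is an orthogonal complement of matrix \(\hat{\varvec{\Delta }}\). The right-hand side of (3.1) does not depend on a particular choice of the orthogonal complement \(\hat{\varvec{\Delta }}_c\). By using a matrix identity, the statistic \(T_\mathrm{B}\) can be written in the following equivalent form [Browne, 1984; expression (2.20b)],

$$\begin{aligned} T_\mathrm{B}=n(\varvec{s}-\hat{\varvec{\sigma }})'\Big [\widehat{\varvec{\Gamma }}^{-1}-\widehat{\varvec{\Gamma }}^{-1}\hat{\varvec{\Delta }}\big (\hat{\varvec{\Delta }}'\widehat{\varvec{\Gamma }}^{-1}\hat{\varvec{\Delta }}\big )^{-1}\hat{\varvec{\Delta }}'\widehat{\varvec{\Gamma }}^{-1}\Big ](\varvec{s}-\hat{\varvec{\sigma }}), \end{aligned}$$(3.2)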

provided that matrix \(\widehat{\varvec{\Gamma }}\) is nonsingular. Let us consider the following assumptions.

- (A1) The population vector \(\varvec{X}\) has finite fourth-order moments.

- (A2) The population Jacobian matrix \(\varvec{\Delta }_0\) has full column rank q.

- (A3) The estimator \(\hat{\varvec{\theta }}\) is an \(O_p(n^{-1/2})\)-consistent estimator of \(\varvec{\theta }_0\), i.e., \(n^{1/2}(\hat{\varvec{\theta }}- \varvec{\theta }_0)\) is bounded in probability.

- (A4) The covariance matrix \(\varvec{\Gamma }\) is nonsingular.

Under the above regularity conditions (A1)–(A4), the test statistic \(T_\mathrm{B}\) converges in distribution to a Chi-square distribution with \(p^*-q\) degrees of freedom (cf., Browne & Shapiro, 2015, see also Theorem 1 below). The required regularity conditions are rather mild, and no assumptions about population distribution are made (apart from existence of fourth-order moments). The asymptotic distribution of \(n^{1/2}(\hat{\varvec{\theta }}- \varvec{\theta }_0)\) does not need to be normal, in particular the true value \(\varvec{\theta }_0\) of the parameter vector can be a boundary point of the parameter space \(\Theta \). Even the assumption (A2) can be relaxed by considering overparameterized models and assuming instead that \(\varvec{\theta }_0\) is a regular point of the mapping \(\varvec{\theta }\mapsto \varvec{\sigma }(\varvec{\theta })\). In that case, the number of degrees of freedom should be corrected to \(p^*-{\mathrm{rank}}(\varvec{\Delta }_0)\) (cf., Shapiro, 1986). While it was previously argued that the requirement for the matrix \(\varvec{\Gamma }\) to be nonsingular is essential (e.g., Jennrich & Satorra, 2013), this assumption can be relaxed to the following.

- (A5) The \((p^*-q)\times (p^*-q)\) matrix \(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\) is nonsingular (nonsingularity of this matrix does not depend on a particular choice of the orthogonal complement \(\varvec{\Delta }_c\)).
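The equivalence of the two forms of \(T_\mathrm{B}\), the orthogonal-complement form (3.1) and the inverse-based form (3.2), can be checked numerically. The sketch below assumes NumPy and uses randomly generated stand-ins for \(\widehat{\varvec{\Gamma }}\), \(\hat{\varvec{\Delta }}\) and \(\varvec{s}-\hat{\varvec{\sigma }}\); the function names are hypothetical. The orthogonal complement is taken from the full singular value decomposition, which is one convenient choice among many.

```python
import numpy as np

def orth_complement(A):
    """Orthonormal basis of the orthogonal complement of col(A); A must have full column rank."""
    U, _, _ = np.linalg.svd(A, full_matrices=True)
    return U[:, A.shape[1]:]

def t_b_complement(n, resid, gamma_hat, delta_hat):
    """Statistic in the orthogonal-complement form."""
    dc = orth_complement(delta_hat)
    v = dc.T @ resid
    return n * v @ np.linalg.solve(dc.T @ gamma_hat @ dc, v)

def t_b_inverse(n, resid, gamma_hat, delta_hat):
    """Statistic in the form that inverts gamma_hat directly."""
    gi = np.linalg.inv(gamma_hat)
    gid = gi @ delta_hat
    W = gi - gid @ np.linalg.solve(delta_hat.T @ gid, gid.T)
    return n * resid @ W @ resid

# Synthetic inputs (illustrative only, not the paper's data).
rng = np.random.default_rng(2)
p_star, q, n = 6, 2, 500
B = rng.standard_normal((p_star, p_star))
gamma_hat = B @ B.T + np.eye(p_star)          # symmetric positive definite
delta_hat = rng.standard_normal((p_star, q))  # full column rank with probability one
resid = rng.standard_normal(p_star) / np.sqrt(n)

t1 = t_b_complement(n, resid, gamma_hat, delta_hat)
t2 = t_b_inverse(n, resid, gamma_hat, delta_hat)
print(t1, t2)
```

The complement form only requires the \((p^*-q)\times (p^*-q)\) matrix to be invertible, which is the numerical counterpart of replacing (A4) by (A5).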

It is important to note that, even if \(\varvec{\Gamma }\) is nonsingular, it could still be ill-conditioned. Conditioning of a symmetric positive definite matrix is often measured by its condition number, defined as \(\mathrm{cond}(\varvec{\Gamma })=\lambda _{\max }(\varvec{\Gamma })/\lambda _{\min }(\varvec{\Gamma })\), where \(\lambda _{\max }(\varvec{\Gamma })\) and \(\lambda _{\min }(\varvec{\Gamma })\) are the respective largest and smallest eigenvalues of \(\varvec{\Gamma }\). The largest and smallest eigenvalues have the following variational representation

$$\begin{aligned} \lambda _{\max }(\varvec{\Gamma })=\max _{\Vert \varvec{a}\Vert =1}\varvec{a}'\varvec{\Gamma }\varvec{a},\quad \lambda _{\min }(\varvec{\Gamma })=\min _{\Vert \varvec{a}\Vert =1}\varvec{a}'\varvec{\Gamma }\varvec{a}. \end{aligned}$$(3.3)

It follows that adding a row and column to a symmetric positive definite matrix (and hence increasing its dimension by one) cannot decrease its largest eigenvalue and cannot increase its smallest eigenvalue (Sturmian separation theorem, e.g., Bellman, 1960, p. 117). Therefore, matrices of large dimensions tend to be ill-conditioned. It also follows from the variational representation (3.3) that \(\lambda _{\max }(\cdot )\) is a convex function, and hence by Jensen’s inequality \({\mathbb {E}}\big [\lambda _{\max }(\widehat{\varvec{\Gamma }})\big ]\ge \lambda _{\max }\big ({\mathbb {E}}[\widehat{\varvec{\Gamma }}]\big )\). Similarly, \(\lambda _{\min }(\cdot )\) is a concave function, so that \({\mathbb {E}}\big [\lambda _{\min }(\widehat{\varvec{\Gamma }})\big ]\le \lambda _{\min }\big ({\mathbb {E}}[\widehat{\varvec{\Gamma }}]\big )\). It follows that \(\lambda _{\max }(\widehat{\varvec{\Gamma }})\) tends to be bigger than \(\lambda _{\max }(\varvec{\Gamma })\), and \(\lambda _{\min }(\widehat{\varvec{\Gamma }})\) tends to be smaller than \(\lambda _{\min }(\varvec{\Gamma })\). Consequently, \(\mathrm{cond}(\widehat{\varvec{\Gamma }})\) tends to be even larger than \(\mathrm{cond}(\varvec{\Gamma })\).
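The effect of dimension on conditioning can be illustrated with nested leading principal submatrices of a single matrix. The sketch below assumes NumPy and an arbitrary randomly generated positive definite matrix; it is an illustration of the separation property, not a computation from the paper.

```python
import numpy as np

rng = np.random.default_rng(3)
m = 8
B = rng.standard_normal((m, m))
A = B @ B.T + 0.1 * np.eye(m)   # symmetric positive definite test matrix

lam_min, lam_max, cond = [], [], []
for k in range(1, m + 1):
    eig = np.linalg.eigvalsh(A[:k, :k])  # eigenvalues of the k x k leading block, ascending
    lam_min.append(eig[0])
    lam_max.append(eig[-1])
    cond.append(eig[-1] / eig[0])

for k in range(m):
    print(k + 1, round(lam_min[k], 4), round(lam_max[k], 4), round(cond[k], 2))
```

Each added row and column leaves the largest eigenvalue no smaller and the smallest eigenvalue no larger, so the printed condition numbers are nondecreasing in the dimension.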

If \(\mathrm{cond}(\varvec{\Gamma })\) is large, then even very small changes in elements of \(\varvec{\Gamma }\) can result in large changes of its inverse. In that case, the right-hand side of (3.2) could become very sensitive to small perturbations of the estimate \(\widehat{\varvec{\Gamma }}\). As a result, one would need a very large sample for a reasonable convergence of the distribution of \(T_\mathrm{B}\) to the Chi-square distribution. This was observed empirically (e.g., Huang & Bentler, 2015) and is considered to be a serious drawback of the distribution-free test statistic \(T_\mathrm{B}\). We can cite, for example: “One of major limitations associated with this approach [ADF] in addressing nonnormality has been its excessively demanding sample-size requirement. It is now well known that unless sample sizes are extremely large the ADF estimator performs very poorly and can yield severely distorted estimated values and standard errors” (Byrne, 2012, p. 315).

It is numerically demonstrated in Yuan and Bentler (1998) that the test statistic \(T_\mathrm{B}\) is “sensitive to model degrees of freedom \(p^*-q\), rather than to the model complexity, defined as number of parameters.” Indeed, we observe that in the representation (3.1), one needs to invert matrix \(\hat{\varvec{\Delta }}'_c\widehat{\varvec{\Gamma }} \hat{\varvec{\Delta }}_c\) of order \((p^*-q)\times (p^*-q)\), rather than the \(p^*\times p^*\) matrix \(\widehat{\varvec{\Gamma }}\). Considering the corresponding population counterparts, observe that the eigenvalues, and hence the condition number, of matrix \(\varvec{\Delta }'_c \varvec{\Gamma }\varvec{\Delta }_c\) depend on the choice of the orthogonal complement \(\varvec{\Delta }_c\). Suppose that matrix \(\varvec{\Delta }'_c \varvec{\Gamma }\varvec{\Delta }_c\) is nonsingular (assumption (A5)) and consider matrix

$$\begin{aligned} \varvec{\Xi }=\varvec{\Delta }_c\big (\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\big )^{-1}\varvec{\Delta }'_c, \end{aligned}$$(3.4)

which is independent of a particular choice of \(\varvec{\Delta }_c\). Suppose further that the orthogonal complement matrix \(\varvec{\Delta }_c\) has orthonormal column vectors, i.e., \(\varvec{\Delta }'_c \varvec{\Delta }_c=\varvec{I}_{p^*-q}\). Existence of such orthonormal complement matrix is ensured by applying the transformation \(\varvec{\Delta }_c \mapsto \varvec{\Delta }_c \varvec{M}\) if necessary. If matrix \(\varvec{\Delta }_c\) is orthonormal, then the nonzero eigenvalues of matrix \(\varvec{\Xi }\) coincide with the eigenvalues of matrix \((\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c)^{-1}\), which are inverse of the eigenvalues of matrix \(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\), and do not depend on a particular choice of the orthonormal complement matrix \(\varvec{\Delta }_c\).

Unless stated otherwise, we assume that the matrix \(\varvec{\Delta }_c\) is orthonormal. By the above discussion, it makes sense to measure sensitivity of the statistic \(T_\mathrm{B}\) in terms of the condition number of \(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\) rather than the condition number of \(\varvec{\Gamma }\).
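The two properties of \(\varvec{\Xi }\) used above can be verified numerically. The sketch assumes NumPy and takes \(\varvec{\Xi }=\varvec{\Delta }_c(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c)^{-1}\varvec{\Delta }'_c\) as the form of (3.4), which is the form consistent with the invariance and eigenvalue statements in the text. All inputs are synthetic and the function names are hypothetical.

```python
import numpy as np

def orth_complement(A):
    """Orthonormal basis of the orthogonal complement of col(A); A must have full column rank."""
    U, _, _ = np.linalg.svd(A, full_matrices=True)
    return U[:, A.shape[1]:]

def xi_matrix(gamma, dc):
    """Xi = dc (dc' gamma dc)^{-1} dc' (assumed form of (3.4)), symmetrized for numerical safety."""
    xi = dc @ np.linalg.solve(dc.T @ gamma @ dc, dc.T)
    return 0.5 * (xi + xi.T)

rng = np.random.default_rng(4)
p_star, q = 6, 2
B = rng.standard_normal((p_star, p_star))
gamma = B @ B.T + np.eye(p_star)
delta = rng.standard_normal((p_star, q))

dc = orth_complement(delta)                      # orthonormal complement
k = p_star - q
# Upper triangular M with diagonal 1..k is nonsingular by construction.
M = np.triu(rng.standard_normal((k, k)), 1) + np.diag(np.arange(1.0, k + 1))

xi_1 = xi_matrix(gamma, dc)
xi_2 = xi_matrix(gamma, dc @ M)                  # a different, non-orthonormal complement

eig_xi = np.sort(np.linalg.eigvalsh(xi_1))[q:]   # the p* - q nonzero eigenvalues of Xi
eig_inner = np.linalg.eigvalsh(dc.T @ gamma @ dc)
print(np.max(np.abs(xi_1 - xi_2)))
print(eig_xi, np.sort(1.0 / eig_inner))
```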

If we replace sample estimates in (3.2) with their true counterparts, then the corresponding statistic \(T_\mathrm{true}\) is calculated as

We will show that \(T_\mathrm{true}\) could have a distribution well approximated by the corresponding Chi-square distribution even when the matrices \(\varvec{\Gamma }\) and \(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\) are ill-conditioned. Note that \(T_\mathrm{true}\) cannot be computed in practice since true values of the required parameters are not known.

It is pointed out in Yuan and Bentler (1998) that since for a random symmetric positive definite matrix \(\varvec{A}\), it holds that \({\mathbb {E}}[\varvec{A}^{-1}]\succeq ({\mathbb {E}}\varvec{A})^{-1}\) (in the sense that matrix \({\mathbb {E}}[\varvec{A}^{-1}]- ({\mathbb {E}}\varvec{A})^{-1}\) is positive semidefinite), “in the typical situation with large models and small to moderate sample sizes, \(T_\mathrm{B}\) rejects correct models far too frequently.” By using a second-order approximation of the difference \((\hat{\varvec{\Delta }}'_c\widehat{\varvec{\Gamma }} \hat{\varvec{\Delta }}_c)^{-1}-(\hat{\varvec{\Delta }}'_c\varvec{\Gamma }\hat{\varvec{\Delta }}_c)^{-1}\), we can write the following approximation of the difference \(T_\mathrm{B}-T_\mathrm{true}\)

where \(\varvec{Z}_n=n^{1/2}(\varvec{s}-\hat{\varvec{\sigma }})\) and matrix \(\varvec{\Xi }\) is defined in (3.4). When \(\varvec{\Xi }\) is “large,” which happens when matrix \(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\) is ill-conditioned, even small differences between the respective elements of \(\widehat{\varvec{\Gamma }}\) and \(\varvec{\Gamma }\) are amplified by the matrix \(\varvec{\Xi }\). This gives another explanation for the sensitivity of the distribution of \(T_\mathrm{B}\) to the number of degrees of freedom \(p^*-q\), which is the dimension of matrix \(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\). Since matrix \(\varvec{\Xi }\) is positive semidefinite, the second-order term in the expansion (3.6) of \(T_\mathrm{B}-T_\mathrm{true}\) is always nonnegative. If \(\widehat{\varvec{\Gamma }}\) is an unbiased estimator of \(\varvec{\Gamma }\), then \({\mathbb {E}}[\varvec{\Xi }(\widehat{\varvec{\Gamma }}-\varvec{\Gamma })\varvec{\Xi }]={\varvec{0}}\). Even if \(\widehat{\varvec{\Gamma }}\) is slightly biased, for “large” \(\varvec{\Xi }\), the second-order term typically dominates. This indicates that \(T_\mathrm{B}\) tends to be stochastically bigger than \(T_\mathrm{true}\), and hence \(T_\mathrm{B}\) rejects correct models too often.

So far, we have provided theoretical explanations for why the ADF statistic could perform poorly and discussed some empirical evidence documented in the literature. In the next section, we propose a modified test statistic, which could improve the behavior of the ADF statistic.

4 Modified Test Statistic

As discussed earlier, under assumptions (A1)–(A3) and (A5), the test statistic \(T_\mathrm{B}\) has asymptotically Chi-square distribution with \(p^*-q\) degrees of freedom. Let us now consider the case where matrix \(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\) is singular. Let \(\xi _1\ge \cdots \ge \xi _{p^*-q}\) be the eigenvalues of matrix \(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\). Since it is assumed that matrix \(\varvec{\Delta }_c\) is orthonormal, the eigenvalues \(\xi _1,\ldots ,\xi _{p^*-q}\) coincide with the first (largest) \(p^*-q\) eigenvalues of matrix \(\varvec{\Xi }\) defined in (3.4). By making transformation \(\varvec{\Delta }_c \mapsto \varvec{\Delta }_c \varvec{M}\) with an appropriate orthogonal matrix \(\varvec{M}\), we can make matrix \(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\) diagonal with diagonal elements \(\xi _1,\ldots ,\xi _{p^*-q}\). We assume that matrix \(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\) is not null so that \(\xi _1>0\).

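Let

$$\begin{aligned} r=q+\mathrm{rank}\big (\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\big ). \end{aligned}$$(4.1)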

It follows that \(r-q>0\), and since \(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\) is singular, we have that \(r-q<p^*-q\), and \(\xi _{r-q}>0\) while \(\xi _{r-q+1}=\ldots = \xi _{p^*-q}=0\). Consider partitioning \(\varvec{\Delta }_c=\big [\varvec{\Delta }_{1c},\varvec{\Delta }_{2c}\big ]\), where \(\varvec{\Delta }_{1c}\) is formed from the first \(r-q\) columns of \(\varvec{\Delta }_c\), and \(\varvec{\Delta }_{2c}\) is formed from the columns corresponding to the zero eigenvalues \(\xi _{r-q+1},\ldots ,\xi _{p^*-q}\). It makes sense then to reduce the dimension of matrix \(\varvec{\Delta }_c\) by removing the columns corresponding to the zero eigenvalues, and hence to replace \(\varvec{\Delta }_c\) with its \(p^*\times (r-q)\) submatrix \(\varvec{\Delta }_{1c}\). Similarly, even if the population matrix \(\varvec{\Delta }_c'\varvec{\Gamma }\varvec{\Delta }_c\) is nonsingular but ill-conditioned, it would be worthwhile to remove unstable components associated with its small eigenvalues. This is similar to a common practice in principal component analysis (PCA).

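We can approach this in the following way. Let \(\varvec{\Upsilon }\) be a \(p^*\times r\) matrix such that the \(r\times r\) matrix \(\varvec{\Upsilon }'\varvec{\Gamma }\varvec{\Upsilon }\) is nonsingular. Let us make the following replacements in the right-hand side of (3.2): \(\widehat{\varvec{\Gamma }}\) by \(\varvec{\Upsilon }'\widehat{\varvec{\Gamma }}\varvec{\Upsilon }\), \(\hat{\varvec{\Delta }}\) by \(\varvec{\Upsilon }'\hat{\varvec{\Delta }}\) and \(\varvec{s}-\hat{\varvec{\sigma }}\) by \(\varvec{\Upsilon }'(\varvec{s}-\hat{\varvec{\sigma }})\). That is, consider the following modified statistic

$$\begin{aligned} T_\Upsilon =n(\varvec{s}-\hat{\varvec{\sigma }})'\varvec{\Upsilon }\Big [\widehat{\varvec{\Gamma }}_\Upsilon ^{-1}-\widehat{\varvec{\Gamma }}_\Upsilon ^{-1}\varvec{\Upsilon }'\hat{\varvec{\Delta }}\big (\hat{\varvec{\Delta }}'\varvec{\Upsilon }\widehat{\varvec{\Gamma }}_\Upsilon ^{-1}\varvec{\Upsilon }'\hat{\varvec{\Delta }}\big )^{-1}\hat{\varvec{\Delta }}'\varvec{\Upsilon }\widehat{\varvec{\Gamma }}_\Upsilon ^{-1}\Big ]\varvec{\Upsilon }'(\varvec{s}-\hat{\varvec{\sigma }}),\quad \widehat{\varvec{\Gamma }}_\Upsilon =\varvec{\Upsilon }'\widehat{\varvec{\Gamma }}\varvec{\Upsilon }. \end{aligned}$$(4.2)

Alternatively, we can write this statistic as

$$\begin{aligned} T_\Upsilon =n(\varvec{s}-\hat{\varvec{\sigma }})'\varvec{\Upsilon }\hat{\varvec{\Delta }}_\Upsilon \big (\hat{\varvec{\Delta }}'_\Upsilon \varvec{\Upsilon }'\widehat{\varvec{\Gamma }}\varvec{\Upsilon }\hat{\varvec{\Delta }}_\Upsilon \big )^{-1}\hat{\varvec{\Delta }}'_\Upsilon \varvec{\Upsilon }'(\varvec{s}-\hat{\varvec{\sigma }}), \end{aligned}$$(4.3)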

where \(\hat{\varvec{\Delta }}_\Upsilon \) is an orthogonal complement of matrix \(\varvec{\Upsilon }'\hat{\varvec{\Delta }}\). That is, \(\hat{\varvec{\Delta }}_\Upsilon \) is an \(r\times (r-q)\) matrix of full column rank such that \(\hat{\varvec{\Delta }}'\varvec{\Upsilon }\hat{\varvec{\Delta }}_\Upsilon ={\varvec{0}}\). Note that the right-hand side of (4.3) does not depend on a particular choice of the orthogonal complement \(\hat{\varvec{\Delta }}_\Upsilon \). The \(p^*\times (r-q)\) matrix \(\tilde{\varvec{\Delta }}_c=\varvec{\Upsilon }\hat{\varvec{\Delta }}_\Upsilon \) is orthogonal to matrix \(\hat{\varvec{\Delta }}\), i.e., \(\tilde{\varvec{\Delta }}'_c\hat{\varvec{\Delta }}={\varvec{0}}\), and can be viewed as a sample counterpart of the matrix \(\varvec{\Delta }_{1c}\).

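Using \(\tilde{\varvec{\Delta }}_c\) we can write

$$\begin{aligned} T_\Upsilon =n(\varvec{s}-\hat{\varvec{\sigma }})'\tilde{\varvec{\Delta }}_c\big (\tilde{\varvec{\Delta }}'_c\widehat{\varvec{\Gamma }}\tilde{\varvec{\Delta }}_c\big )^{-1}\tilde{\varvec{\Delta }}'_c(\varvec{s}-\hat{\varvec{\sigma }}). \end{aligned}$$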

Note that because of the equivalence of (4.2) and (4.3), the expression in the right-hand side of (4.3) does not depend on a particular choice of the orthogonal complement \(\hat{\varvec{\Delta }}_\Upsilon \).

Theorem 1

Suppose that the assumptions (A1)–(A3) hold and the matrix \(\varvec{\Upsilon }'\varvec{\Gamma }\varvec{\Upsilon }\) is nonsingular. Then the test statistic \(T_\Upsilon \) converges in distribution to a Chi-square distribution with \(r-q\) degrees of freedom.

Proof

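Because of the assumption (A1), we have by the central limit theorem that \(n^{1/2}(\varvec{s}-\varvec{\sigma }_0)\) converges in distribution to normal \(N({\varvec{0}},\varvec{\Gamma })\). Here \(\varvec{\Sigma }_0=\varvec{\Sigma }(\varvec{\theta }_0)\) is the population covariance matrix and \(\varvec{\sigma }_0=\mathrm{vecs}(\varvec{\Sigma }_0)\). Since \(\varvec{\sigma }(\cdot )\) is differentiable, we can write

$$\begin{aligned} \varvec{\sigma }(\hat{\varvec{\theta }})-\varvec{\sigma }(\varvec{\theta }_0)=\varvec{\Delta }(\hat{\varvec{\theta }}-\varvec{\theta }_0)+o\big (\Vert \hat{\varvec{\theta }}-\varvec{\theta }_0\Vert \big ), \end{aligned}$$

where \(\varvec{\Delta }=\varvec{\Delta }(\varvec{\theta }_0)\) (for simplicity of notation, we drop here the subscript in \(\varvec{\Delta }_0\)). Moreover, because of (A3), we have that \(\hat{\varvec{\theta }}-\varvec{\theta }_0=O_p(n^{-1/2})\), and hence

$$\begin{aligned} \hat{\varvec{\sigma }}-\varvec{\sigma }_0=\varvec{\Delta }(\hat{\varvec{\theta }}-\varvec{\theta }_0)+o_p(n^{-1/2}). \end{aligned}$$

Let \(\varvec{\Delta }_\sharp \) be the population counterpart of matrix \(\hat{\varvec{\Delta }}_\sharp = \hat{\varvec{\Upsilon }}\hat{\varvec{\Delta }}_\Upsilon \). Since \(\varvec{\Delta }'_\sharp \varvec{\Delta }={\varvec{0}}\), it follows that

$$\begin{aligned} \varvec{\Delta }'_\sharp (\hat{\varvec{\sigma }}-\varvec{\sigma }_0)=o_p(n^{-1/2}). \end{aligned}$$

Now since \(n^{1/2}(\varvec{s}-\varvec{\sigma }_0)\) converges in distribution, it follows that \(\varvec{s}-\varvec{\sigma }_0=O_p(n^{-1/2})\), and hence we obtain that

$$\begin{aligned} n^{1/2}\hat{\varvec{\Delta }}'_\sharp (\varvec{s}-\hat{\varvec{\sigma }})=n^{1/2}\varvec{\Delta }'_\sharp (\varvec{s}-\varvec{\sigma }_0)+o_p(1). \end{aligned}$$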

Since \(n^{1/2}\varvec{\Delta }'_\sharp (\varvec{s}-\varvec{\sigma }_0)\) converges in distribution to normal \(N({\varvec{0}},\varvec{\Delta }'_\sharp \varvec{\Gamma }\varvec{\Delta }_\sharp )\), it follows by Slutsky’s theorem that \(T_\Upsilon \) converges in distribution to \(\varvec{Z}' \varvec{U}^{-1}\varvec{Z}\), where \(\varvec{U}=\varvec{\Delta }'_\sharp \varvec{\Gamma }\varvec{\Delta }_\sharp \) and \(\varvec{Z}\sim N({\varvec{0}},\varvec{U})\). It follows that \(T_\Upsilon \) converges in distribution to Chi-square with \(r-q\) degrees of freedom. \(\square \)

It is important to note that the true rank of \(\varvec{\Gamma }\) (or rather of \(\varvec{\Delta }'_c\varvec{\Gamma }\varvec{\Delta }_c\)) is not known in practice. Therefore, we could take \(\varvec{\Upsilon }\) to be composed of eigenvectors of \(\hat{\varvec{\Gamma }}\) corresponding to its largest eigenvalues. The number of retained eigenvalues could be chosen by a heuristic, similar to those used in PCA. It is also possible to approach this by choosing the largest eigenvalues of \(\hat{\varvec{\Delta }}'_c\hat{\varvec{\Gamma }}\hat{\varvec{\Delta }}_c\).
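The construction of \(T_\Upsilon \) from the leading eigenvectors of \(\widehat{\varvec{\Gamma }}\) can be sketched as follows, assuming NumPy and synthetic inputs. The function names, the relative eigenvalue threshold `rel_tol` and the guard that keeps \(r>q\) are illustrative assumptions, not part of the method as stated. The statistic is computed in the orthogonal-complement form (4.3).

```python
import numpy as np

def orth_complement(A):
    """Orthonormal basis of the orthogonal complement of col(A); A must have full column rank."""
    U, _, _ = np.linalg.svd(A, full_matrices=True)
    return U[:, A.shape[1]:]

def choose_r(gamma_hat, rel_tol, q):
    """Keep eigenvalues at least rel_tol times the largest one (hypothetical heuristic), with r > q."""
    w = np.linalg.eigvalsh(gamma_hat)
    return max(int(np.sum(w >= rel_tol * w[-1])), q + 1)

def t_upsilon(n, resid, gamma_hat, delta_hat, r):
    """Modified statistic with Upsilon = r leading eigenvectors of gamma_hat; returns (statistic, df)."""
    q = delta_hat.shape[1]
    _, V = np.linalg.eigh(gamma_hat)              # eigenvalues ascending
    ups = V[:, ::-1][:, :r]                       # p* x r, eigenvectors of the r largest eigenvalues
    d_ups = orth_complement(ups.T @ delta_hat)    # r x (r - q)
    dc = ups @ d_ups                              # p* x (r - q), orthogonal to delta_hat
    v = dc.T @ resid
    stat = n * v @ np.linalg.solve(dc.T @ gamma_hat @ dc, v)
    return stat, r - q

# Synthetic inputs (illustrative only).
rng = np.random.default_rng(6)
p_star, q, n = 10, 3, 500
B = rng.standard_normal((p_star, p_star))
gamma_hat = B @ B.T
delta_hat = rng.standard_normal((p_star, q))
resid = rng.standard_normal(p_star) / np.sqrt(n)

r = choose_r(gamma_hat, rel_tol=0.01, q=q)
stat, df = t_upsilon(n, resid, gamma_hat, delta_hat, r)
print(r, df, stat)
```

When all \(p^*\) eigenvectors are retained, the columns of \(\varvec{\Upsilon }\hat{\varvec{\Delta }}_\Upsilon \) span the full orthogonal complement of \(\hat{\varvec{\Delta }}\), so the value reduces to that of \(T_\mathrm{B}\) in form (3.1).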

Next, in order to evaluate power of the modified statistic \(T_\Upsilon \), we can use the following approach. Assume that the population value \(\varvec{\sigma }_{0,n}\) depends on the sample size n and converges to \(\varvec{\sigma }^*=\varvec{\sigma }(\varvec{\theta }^*)\), \(\varvec{\theta }^*\in \Theta \), in such a way that \(n^{1/2}(\varvec{\sigma }_{0,n}-\varvec{\sigma }^*)\) tends to a vector \(\varvec{\mu }\). This is the so-called population drift assumption, also referred to as a sequence of local alternatives [see, e.g., McManus, (1991) for a historical overview of this assumption]. Under this additional assumption of local alternatives, the statistic \(T_\Upsilon \) converges in distribution to a noncentral Chi-square distribution with \(r-q\) degrees of freedom and the noncentrality parameter

Consequently, power of test statistic \(T_\Upsilon \) is measured in terms of the noncentrality parameter \(\delta \) and the number of degrees of freedom \(df=r-q\). This leads to a certain loss of power compared with the test statistic \(T_\mathrm{B}\). In particular, if \(\varvec{\Delta }_{1c}'\varvec{\mu }={\varvec{0}}\) for some \(\varvec{\mu }\), then \(\delta =0\). This is the price one would be willing to pay for making the ADF approach work better.

5 Illustrative Numerical Example

In our Monte Carlo experiments, we consider the following factor analysis model \(\varvec{\Sigma }=\varvec{\Lambda }\varvec{\Lambda }'+\varvec{\Psi }\), where \(\varvec{\Lambda }=[\lambda _{k\ell }]\) is \(p\times m\) matrix of factor loadings and \(\varvec{\Psi }=\mathrm{diag}(\psi _{11},\ldots ,\psi _{pp}) \) is \(p\times p\) diagonal matrix of residual variances. In order to make this model identifiable for \(m>1\), for example, we can set the upper triangular part of \(\varvec{\Lambda }\) to zero, i.e., set \(\lambda _{k\ell }=0\) for \(\ell >k\). Here, the parameter vector \(\varvec{\theta }\) consists of \(mp-m(m-1)/2\) elements of \(\varvec{\Lambda }\) (with \(\lambda _{k\ell }\), \(\ell >k\), removed) and p diagonal elements of \(\varvec{\Psi }\). So the dimension of \(\varvec{\theta }\) is \(q=mp-m(m-1)/2 +p\). With this structure, we can further write this model as

Therefore, the elements \(\delta _{ij,k\ell }\) of the Jacobian matrix \(\varvec{\Delta }(\varvec{\theta })\) can be computed by using partial derivatives \(\delta _{ij,k\ell }=\frac{\partial \sigma _{ij}}{\partial \lambda _{k\ell }}\), \(1\le i\le j\le p\), \(k=1,\ldots ,p\), \(\ell =1,\ldots ,m\), \(\ell \le k\), with

With the factor analysis model described above, we first demonstrate that if \({\varvec{\Gamma }}\) is ill-conditioned, then the corresponding Chi-square distribution could be a poor approximation of the distribution of statistic \(T_\mathrm{B}\) defined in (3.2). Then, we illustrate that the correction proposed in Sect. 4 can make significant improvements.
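The Jacobian of the factor analysis model can be assembled directly from the element-wise form \(\sigma _{ij}=\sum _{\ell =1}^m\lambda _{i\ell }\lambda _{j\ell }+\psi _{ij}\), with \(\psi _{ij}=0\) for \(i\ne j\). The sketch below assumes NumPy, a row-major ordering of the elements with \(i\le j\), and parameters ordered as free loadings followed by residual variances; these orderings and the function names are illustrative choices. It uses \(\partial \sigma _{ij}/\partial \lambda _{k\ell }=[i=k]\lambda _{j\ell }+[j=k]\lambda _{i\ell }\) and \(\partial \sigma _{ij}/\partial \psi _{kk}=[i=j=k]\), which follow from differentiating the element-wise form.

```python
import numpy as np

def sigma_vecs(Lam, psi):
    """vecs of Sigma = Lam Lam' + diag(psi): elements with i <= j in row-major order."""
    Sigma = Lam @ Lam.T + np.diag(psi)
    return Sigma[np.triu_indices(Lam.shape[0])]

def jacobian(Lam, psi):
    """Analytic Jacobian of vecs(Sigma) w.r.t. the free loadings (l <= k) and the residual variances."""
    p, m = Lam.shape
    iu, ju = np.triu_indices(p)
    cols = []
    for k in range(p):
        for l in range(min(k + 1, m)):           # loadings with l > k are fixed at zero
            cols.append((iu == k) * Lam[ju, l] + (ju == k) * Lam[iu, l])
    for k in range(p):
        cols.append(((iu == k) & (ju == k)).astype(float))
    return np.column_stack(cols)

# Small synthetic model (values are arbitrary, not those of Tables 1 and 2).
rng = np.random.default_rng(5)
p, m = 5, 2
Lam = np.tril(rng.standard_normal((p, m)))       # upper triangular part set to zero
psi = rng.uniform(0.5, 1.5, p)
J = jacobian(Lam, psi)
print(J.shape)   # (p*, q) with p* = p(p+1)/2 and q = mp - m(m-1)/2 + p
```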

Detailed simulation procedures and simulation parameters are as follows. In our experiment, we set \(p= 9, m = 3, q = 18\) for Model 1 (confirmatory factor analysis model), \(p=9\), \(m=2\), \(q=26\) for Model 2 (exploratory factor analysis model) and construct the population covariance matrix \(\varvec{\Sigma }^*\) with specific values of elements of \(\varvec{\Lambda }^*\) and diagonal elements of \(\varvec{\Psi }^*\) generated as shown in Tables 1 and 2. Note that these models are identifiable since the upper triangular part of \(\varvec{\Lambda }^*\) is zero. For both models, the factor correlations are fixed to zero.

Next, we generate the ill-conditioned sample estimate \(\widehat{\varvec{\Gamma }}\) with the data from an elliptical distribution (\(M=3000\) simulation samples with the sample size \(n=500\) for each model) as follows. Let \(\varvec{X}\sim N({\varvec{0}},\varvec{\Sigma })\) be a random vector having (multivariate) normal distribution and W be a random variable independent of \(\varvec{X}\). Then the random vector \(\varvec{Y}=W \varvec{X}\) has an elliptical distribution with zero mean vector, covariance matrix \(\alpha \varvec{\Sigma }\), where \(\alpha = {\mathbb {E}}[W^2]\), and the kurtosis parameter \(\kappa =\frac{{\mathbb {E}}[W^4]}{({\mathbb {E}}[W^2])^2}-1\) (see Chun & Shapiro, 2009 for more details). We involve a random variable W taking two values, 2 with probability 0.2 and 0.5 with probability 0.8. The kurtosis parameter of the elliptical distributions is then \(\kappa =2.25\). Note that in this case, \({\mathbb {E}}[W^2]=1\), so that the covariance matrices of \(\varvec{X}\) and \(\varvec{Y}\) are equal to each other. For the Monte Carlo samples, condition numbers of \(\widehat{\varvec{\Gamma }}\) range from 198.83 to 1589.7 (Model 1), and 241.097 to 2721.653 (Model 2), with a mean of 529.89 and 728.492, respectively (while the condition number of \(\varvec{\Gamma }\) is 113.39 and 259.115 for respective Models 1 and 2). This is in accordance with an observation in Sect. 3 that the condition number of sample covariance matrix \(\widehat{\varvec{\Gamma }}\) tends to be larger than the condition number of \(\varvec{\Gamma }\).
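The moments of the two-point variable W and the resulting kurtosis parameter can be verified with a few lines of arithmetic, and the scale-mixture construction \(\varvec{Y}=W\varvec{X}\) is straightforward to simulate. The sketch below assumes NumPy; the function name `sample_elliptical` and the identity covariance used in the demonstration are illustrative and do not reproduce the paper's population matrices.

```python
import numpy as np

w_values = np.array([2.0, 0.5])
w_probs = np.array([0.2, 0.8])

ew2 = np.sum(w_probs * w_values**2)   # E[W^2] = 0.2*4 + 0.8*0.25
ew4 = np.sum(w_probs * w_values**4)   # E[W^4] = 0.2*16 + 0.8*0.0625
kappa = ew4 / ew2**2 - 1.0            # kurtosis parameter as defined in the text

def sample_elliptical(Sigma, n, rng):
    """Draw n rows of Y = W X with X ~ N(0, Sigma) and W independent of X."""
    p = Sigma.shape[0]
    X = rng.multivariate_normal(np.zeros(p), Sigma, size=n)
    W = rng.choice(w_values, size=n, p=w_probs)
    return W[:, None] * X

rng = np.random.default_rng(7)
Y = sample_elliptical(np.eye(3), 500, rng)
print(ew2, ew4, kappa, Y.shape)
```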

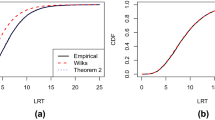

For each simulation sample, we obtain consistent estimates \(\hat{\varvec{\sigma }}\) (through the generalized least squares method) and calculate three different test statistics \(T_\mathrm{B}\), \(T_\mathrm{true}\), \(T_{\Upsilon }\) as follows. Using the sample estimates \(\hat{\varvec{\sigma }}\), \(\hat{\varvec{\Gamma }}\) and \(\hat{\varvec{\Delta }}\), the statistic \(T_\mathrm{B}\) is calculated as defined in Eq. (3.2). The statistic \(T_\mathrm{true}\) is computed as in (3.5) by replacing the sample estimates in (3.2) with their true counterparts. We will show that the distribution of \(T_\mathrm{true}\) can be well approximated by the corresponding Chi-square distribution, while the same Chi-square distribution can give a poor approximation for the statistic \(T_\mathrm{B}\). We make corrections by taking \(\varvec{\Upsilon }=[\hat{\varvec{e}}_1,\ldots ,\hat{\varvec{e}}_r]\), where \(\hat{\varvec{e}}_1,\ldots ,\hat{\varvec{e}}_r\) are eigenvectors of \(\widehat{\varvec{\Gamma }}\) corresponding to its r largest eigenvalues, and calculate \(T_\Upsilon \) as defined in Eq. (4.2). In our experiments, for each simulation sample we remove the eigenvalues that are smaller than a threshold of between 0.05% and 1.2% of the largest eigenvalue. With this correction, we will show that the Chi-square asymptotics provided in Theorem 1 give a reasonably good fit for the statistic \(T_\Upsilon \). As discussed earlier, it is also possible to make corrections based on the largest eigenvalues of \(\hat{\varvec{\Delta }}'_c\hat{\varvec{\Gamma }}\hat{\varvec{\Delta }}_c\).
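The eigenvalue screening step can be illustrated as follows. This is a sketch under stated assumptions: the helper `leading_eigenvectors`, the parameter name `rel_tol`, and the diagonal toy matrix are hypothetical and chosen only to make the effect visible. The statistic \(T_\Upsilon \) itself is defined in Eq. (4.2) and is not reproduced here.

```python
import numpy as np

def leading_eigenvectors(gamma_hat, rel_tol):
    """Return (Upsilon, kept_eigenvalues) for a symmetric matrix gamma_hat.

    Keeps the eigenvectors whose eigenvalues are at least rel_tol times
    the largest eigenvalue; the remaining (small) ones are dropped.
    """
    eigvals, eigvecs = np.linalg.eigh(gamma_hat)        # ascending order
    eigvals, eigvecs = eigvals[::-1], eigvecs[:, ::-1]  # largest first
    keep = eigvals >= rel_tol * eigvals[0]
    return eigvecs[:, keep], eigvals[keep]

# Toy ill-conditioned matrix (assumption, not an estimate from the paper).
gamma_hat = np.diag([100.0, 10.0, 1.0, 0.01])
cond_full = np.linalg.cond(gamma_hat)

# Drop eigenvalues below 0.5% of the largest, within the 0.05-1.2% range used.
upsilon, kept = leading_eigenvectors(gamma_hat, rel_tol=0.005)
cond_reduced = kept[0] / kept[-1]
```

In this toy case one eigenvalue is removed, so \(r=3\), and the condition number of the retained spectrum falls from 10,000 to 100. The number of retained eigenvectors varies from sample to sample, which is why the degrees of freedom of \(T_\Upsilon \) vary as well.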

We use several discrepancy measures to compare the fit of each statistic. One is the Kolmogorov–Smirnov (KS) distance defined as

\[ KS=\sup _{t}\left| \hat{F}_{M}(t)-F(t)\right| , \]

where \(\hat{F}_{M}(t)=\frac{\# \left\{ T_{i} \le t \right\} }{M}\) is the empirical cumulative distribution function (cdf) based on the \(M=3000\) Monte Carlo samples of each test statistic \(T_\mathrm{B}\), \(T_\mathrm{true}\), \(T_{\Upsilon }\), and F(t) is the theoretical cdf of the respective approximation of the test statistic. As the KS distance depends only on the extreme cases, we also consider the average Kolmogorov–Smirnov distance (AK) (cf., Yuan et al., 2007) as

\[ AK=\frac{1}{M}\sum _{i=1}^{M}K_{i}, \]

where

\[ K_{i}=\max \left\{ \left| \frac{i}{M}-F(T_{(i)})\right| ,\left| \frac{i-1}{M}-F(T_{(i)})\right| \right\} , \]

with \(T_{(1)} \le \cdots \le T_{(M)}\) being the respective order statistics.
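A minimal sketch of how the two distances might be computed is given below. It assumes the usual definitions: KS is the largest gap between the empirical and theoretical cdfs, and AK is the mean over i of \(K_i=\max \{|i/M-F(T_{(i)})|,|(i-1)/M-F(T_{(i)})|\}\). The function names and the reference distribution are illustrative assumptions; a Chi-square distribution with 2 degrees of freedom is used only because its cdf, \(1-e^{-t/2}\), has a closed form.

```python
import numpy as np

def ks_and_ak(stats, cdf):
    """Return (KS, AK) distances between a sample and a theoretical cdf."""
    t = np.sort(np.asarray(stats, dtype=float))   # order statistics T_(i)
    m = t.size
    f = cdf(t)                                    # F(T_(i))
    i = np.arange(1, m + 1)
    # Gap just after and just before each jump of the empirical cdf.
    k = np.maximum(np.abs(i / m - f), np.abs((i - 1) / m - f))
    return float(k.max()), float(k.mean())

def chi2_cdf_df2(t):
    """Closed-form cdf of the Chi-square distribution with 2 df."""
    return 1.0 - np.exp(-np.asarray(t, dtype=float) / 2.0)

rng = np.random.default_rng(1)                    # arbitrary seed (assumption)
sample = rng.chisquare(df=2, size=3000)           # stand-in for M statistics
ks, ak = ks_and_ak(sample, chi2_cdf_df2)
```

For a continuous F, the supremum in the KS distance is attained at one of the order statistics, so taking the maximum of the \(K_i\) gives the KS distance exactly. For other degrees of freedom, any callable cdf can be passed in place of `chi2_cdf_df2`.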

In addition to the KS distances, we compare quantiles through the empirical rejection rates to investigate the quality of each approximation with respect to the validity of confidence intervals. That is, for each statistic we count the number of rejections at the levels \(\alpha =1, 5, 10\%\). If the statistic is well approximated by the corresponding theoretical Chi-square distribution, the empirical rejection rates should be close to 1, 5, and 10%, respectively. Finally, quantile–quantile (Q–Q) plots for each statistic against its theoretical Chi-square distribution are provided.
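The rejection-rate calculation amounts to counting how often a statistic exceeds the upper-\(\alpha \) critical value of its reference distribution. The sketch below is illustrative only: `rejection_rates` is a hypothetical helper, and the reference distribution is again assumed to be Chi-square with 2 degrees of freedom, for which the critical value has the closed form \(-2\ln \alpha \).

```python
import numpy as np

def rejection_rates(stats, critical_values):
    """Map each alpha to the fraction of statistics above its critical value."""
    stats = np.asarray(stats, dtype=float)
    return {alpha: float(np.mean(stats > c))
            for alpha, c in critical_values.items()}

# Upper-alpha critical values of the Chi-square distribution with 2 df:
# P(T > c) = exp(-c / 2) = alpha, hence c = -2 * ln(alpha).
alphas = (0.01, 0.05, 0.10)
crit = {a: -2.0 * np.log(a) for a in alphas}

rng = np.random.default_rng(2)                    # arbitrary seed (assumption)
stats = rng.chisquare(df=2, size=3000)            # stand-in for M statistics
rates = rejection_rates(stats, crit)
```

When the reference distribution is correct, as it is by construction here, each rate should be close to its nominal level. A statistic that is poorly approximated shows rates far from the nominal levels.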

Tables 3 and 4 present KS distances and empirical rejection rates at each \(\alpha \) level for the three statistics. The distribution of \(T_\mathrm{true}\) is well approximated by the corresponding Chi-square distribution, with empirical rejection rates close to the nominal \(\alpha \) levels. As expected, the ADF statistic \(T_\mathrm{B}\) approximates \(T_\mathrm{true}\) extremely poorly. It rejects far too often (e.g., the empirical rejection rate is about 75% at \(\alpha =5\%\) for Model 1 in Table 3), and its KS distances are very large. On the other hand, when we make corrections by removing some of the smallest eigenvalues, the fit improves markedly: empirical rejection rates are much closer to the \(\alpha \) levels, and KS distances are much smaller. For example, when \(df=20\) for Model 1 (Table 3), the empirical rejection rates of \(T_{\Upsilon }\) are 1.7, 4.1, and 5.3%, whereas the rates of \(T_\mathrm{true}\) are 1.4, 5.7, and 11%, and those of \(T_\mathrm{B}\) are 63, 75, and 80%. For Model 2, the performance of \(T_{\Upsilon }\), and even that of \(T_\mathrm{true}\), is slightly worse than for Model 1, but the rejection rates of \(T_{\Upsilon }\) are still far better than those of \(T_\mathrm{B}\). For example, when \(df=16\) (Table 4), the empirical rejection rates of \(T_{\Upsilon }\) are 3.9, 9.3, and 13.9%, whereas the rates of \(T_\mathrm{true}\) are 2, 7, and 13%, and the rates of \(T_\mathrm{B}\) are 31, 43, and 50%. That is, the proposed test statistic \(T_{\Upsilon }\) has considerably improved empirical rejection rates compared to \(T_\mathrm{B}\) for both models, and its KS distances are much smaller than those of \(T_\mathrm{B}\). Note that for \(T_{\Upsilon }\), since we remove a certain percentage of the eigenvalues, we end up with several different degrees of freedom depending on how many eigenvalues are removed.

The Q–Q plots confirm these results. We first generate plots with all the data points, and then with only the 1st–99th percentiles to see the relevant deviations. Figures 1 and 2 compare the Q–Q plots of \(T_\mathrm{true}\) and \(T_\mathrm{B}\) for Models 1 and 2, respectively. While we observe a reasonably straight line for \(T_\mathrm{true}\), a clearly curved pattern is shown for \(T_\mathrm{B}\). Again, as shown in Figs. 3 (Model 1) and 4 (Model 2), \(T_{\Upsilon }\) significantly improves the fit even though a few outliers remain. In summary, \(T_{\Upsilon }\) performs significantly better than \(T_\mathrm{B}\) but not as well as \(T_\mathrm{true}\). However, it is important to note that, in real applications, \(T_\mathrm{true}\) is not available.

Next, we extend our simulation study by comparing the test statistics at different sample sizes and by studying a model with larger p. Regarding different sample sizes, we generate the ill-conditioned sample estimate \(\widehat{\varvec{\Gamma }}\) from data with an elliptical distribution using \(M=1000\) simulation samples of sizes \(n=250, 500, 1000\) for Model 1 (other simulation parameters remain the same). Table 5 provides empirical rejection rates (for \(\alpha =1, 5, 10\%\)) and KS distances for the three statistics at each sample size. As expected, the performance of the ADF statistic \(T_\mathrm{B}\) is very sensitive to the sample size and improves as the sample size increases. However, it is still much worse than the performance of \(T_\mathrm{true}\) and \(T_{\Upsilon }\) even when \(n=1000\). Both \(T_\mathrm{true}\) and \(T_{\Upsilon }\) display slightly better fits with larger sample sizes, and the proposed statistic \(T_{\Upsilon }\) notably improves the performance compared to \(T_\mathrm{B}\) for all the sample sizes considered.

Lastly, we compare the statistics for a larger model with \(p=21, m=3, q=42\) (Model 3), constructing the population covariance matrix \(\varvec{\Sigma }^*\) from the elements of \(\varvec{\Lambda }^*\) and the diagonal elements of \(\varvec{\Psi }^*\) given in Table 6. The factor correlations are fixed to zero. We generate the ill-conditioned matrix \(\widehat{\varvec{\Gamma }}\) from data with an elliptical distribution (with \(\kappa =2.25\), \(M=1000\), and \(n=1000\)). For the Monte Carlo samples, condition numbers of \(\widehat{\varvec{\Gamma }}\) range from 22,441 to 66,500, with a mean of 40,580 (while the condition number of \(\varvec{\Gamma }\) is 1607.3). Note that the condition number of the sample covariance matrix \(\widehat{\varvec{\Gamma }}\) is significantly larger than the condition number of \(\varvec{\Gamma }\).

Table 7 presents KS distances and empirical rejection rates at each \(\alpha \) level for the three statistics. As for Models 1 and 2, \(T_\mathrm{true}\) is well approximated by the corresponding Chi-square distribution and has relatively good empirical rejection rates (close to the nominal \(\alpha \) levels), even though p (and the corresponding degrees of freedom and condition numbers) is significantly larger than in Models 1 and 2. On the other hand, the performance of the ADF statistic \(T_\mathrm{B}\) is extremely poor and much worse than for Models 1 and 2. In particular, its empirical rejection rates are 100% at all three levels of \(\alpha \) and its KS distances are extremely large. That is, \(T_\mathrm{B}\) breaks down completely. The proposed test statistic \(T_{\Upsilon }\), however, again demonstrates a considerably improved fit, with much better empirical rejection rates and much smaller KS distances than \(T_\mathrm{B}\). The Q–Q plots shown in Figs. 5 and 6 lead to similar conclusions. We therefore confirm that \(T_{\Upsilon }\) works reasonably well and performs significantly better than \(T_\mathrm{B}\) even for a larger model.

6 Conclusions

Browne’s ADF test statistic has become very popular in applied research using structural equation modeling. However, it was found empirically that, unless the sample size is very large, it could perform poorly in practice. In this paper, we first provide a theoretical explanation of this phenomenon by demonstrating the tendency of large covariance matrices to be ill-conditioned. When the \(p^*\times p^*\) covariance matrix of sample covariances is ill-conditioned, small variations of the involved estimates produce large variations of the computed ADF statistic. As a result, the corresponding rejection rate is significantly larger than it should be. This theoretical observation is also confirmed by our numerical experiments.

Next, we suggest a modified test statistic that can improve the performance of the ADF test statistic, and we provide an asymptotic analysis. We make approximations by removing small eigenvalues of the corresponding \(p^*\times p^*\) covariance matrix, which is similar in spirit to principal component analysis. Using Monte Carlo numerical experiments, we compare the behavior of the proposed test statistic against that of the ADF statistic. We demonstrate that the modified statistic considerably improves on the performance of the ADF statistic in terms of empirical rejection rates and KS distances.

References

Amemiya, Y., & Anderson, T. W. (1990). Asymptotic chi-square tests for a large class of factor analysis models. Annals of Statistics, 18, 1453–1463.

Bellman, R. E. (1960). Introduction to matrix analysis. New York: McGraw-Hill Book Company.

Bollen, K. A. (1989). Structural equations with latent variables. New York: Wiley.

Boomsma, A., & Hoogland, J. J. (2001). The robustness of LISREL modeling revisited. In Structural equation modeling: Present and future: A Festschrift in Honor of Karl Jöreskog (pp. 139–168). Chicago: Scientific Software International.

Browne, M. W. (1982). Covariance structures. In D. M. Hawkins (Ed.), Topics in applied multivariate analysis (pp. 72–141). Cambridge: Cambridge University Press.

Browne, M. W. (1984). Asymptotically distribution-free methods for the analysis of covariance structures. British Journal of Mathematical and Statistical Psychology, 37, 62–83.

Browne, M. W., & Shapiro, A. (1987). Robustness of normal theory methods in the analysis of linear latent variate models. British Journal of Mathematical and Statistical Psychology, 41, 193–208.

Browne, M. W., & Shapiro, A. (2015). Comments on the asymptotics of a distribution-free goodness of fit test statistic. Psychometrika, 80, 196–199.

Byrne, B. M. (2012). Choosing structural equation modeling computer software: Snapshots of LISREL, EQS, AMOS, and Mplus. In R. H. Hoyle (Ed.), Handbook of structural equation modeling, chap. 19 (pp. 307–324). New York: Guilford Press.

Chun, S. Y., & Shapiro, A. (2009). Normal versus noncentral chi-square asymptotics of misspecified models. Multivariate Behavioral Research, 44(6), 803–827.

Hoogland, J. J., & Boomsma, A. (1998). Robustness studies in covariance structure modeling: An overview and a meta-analysis. Sociological Methods and Research, 26(3), 329–367.

Hu, L., Bentler, P. M., & Kano, Y. (1992). Can test statistics in covariance structure analysis be trusted? Psychological Bulletin, 112, 351–362.

Huang, Y., & Bentler, P. M. (2015). Behavior of asymptotically distribution free test statistics in covariance versus correlation structure analysis. Structural Equation Modeling, 22, 489–503.

Jennrich, R., & Satorra, A. (2013). The nonsingularity of \(\Gamma \) in covariance structure analysis of nonnormal data. Psychometrika, 79, 51–59.

Kano, Y. (2002). Variable selection for structural models. Journal of Statistical Planning and Inference, 108(1–2), 173–187.

Lee, S.-Y. (1990). Multilevel analysis of structural equation models. Biometrika, 77(4), 763–772.

McManus, D. A. (1991). Who invented local power analysis? Econometric Theory, 7, 265–268.

Shapiro, A. (1986). Asymptotic theory of overparametrized structural models. Journal of the American Statistical Association, 81, 142–149.

Tomarken, A., & Waller, N. G. (2005). Structural equation modeling as a data-analytic framework for clinical science: Strengths, limitations, and misconceptions. Annual Review of Clinical Psychology, 1, 31–65.

Wu, H., & Browne, M. W. (2015). Quantifying adventitious error in a covariance structure as a random effect. Psychometrika, 80(3), 571–600.

Xu, J., & Mackenzie, G. (2012). Modelling covariance structure in bivariate marginal models for longitudinal data. Biometrika, 99(3), 649–662.

Yuan, K. H., & Bentler, P. M. (1998). Normal theory based test statistics in structural equation modelling. British Journal of Mathematical and Statistical Psychology, 51, 289–309.

Yuan, K. H., & Bentler, P. M. (1999). F tests for mean and covariance structure analysis. Journal of Educational and Behavioral Statistics, 24(3), 225–243.

Yuan, K. H., Hayashi, K., & Bentler, P. M. (2007). Normal theory likelihood ratio statistic for mean and covariance structure analysis under alternative hypotheses. Journal of Multivariate Analysis, 98(6), 1262–1282.

Acknowledgements

Funding was provided for the third author by the National Science Foundation (Grant No. CMMI1232623).

Cite this article

Chun, S.Y., Browne, M.W. & Shapiro, A. Modified Distribution-Free Goodness-of-Fit Test Statistic. Psychometrika 83, 48–66 (2018). https://doi.org/10.1007/s11336-017-9574-9


DOI: https://doi.org/10.1007/s11336-017-9574-9