Abstract

Purpose

The effect of snoring on the bed partner can be studied through the evaluation of in situ sound records by the bed partner or unspecialized raters as a proxy of real-life snoring perception. The aim was to characterize perceptual snore events through acoustical features in patients with obstructive sleep apnea (OSA) with an advanced mandibular position.

Methods

Thirty-minute sound samples of 29 patients with OSA were retrieved from overnight, in-home recordings of a study to validate the MATRx plus® dynamic mandibular advancement system. Three unspecialized raters identified sound events and classified them as noise, snore, or breathing. The raters provided ratings for classification certainty and annoyance. Data were analyzed with respect to respiratory phases, and annoyance.

Results

When subdividing perceptual events based on respiratory phase, the logarithm-transformed Mean Power, Spectral Centroid, and Snore Factor differed significantly between event types, although not substantially for the spectral centroid. The variability within event type was high and distributions suggested the presence of subpopulations. The general linear model (GLM) showed a significant patient effect. Inspiration segments occurred in 65% of snore events, expiration segments in 54%. The annoyance correlated with the logarithm of mean power (r = 0.48) and the Snore Factor (0.46).

Conclusion

Perceptual sound events identified by non-experts contain a non-negligible mixture of expiration and inspiration phases making the characterization through acoustical features complex. The present study reveals that subpopulations may exist, and patient-specific features need to be introduced.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Obstructive sleep apnea (OSA) as part of the sleep disordered breathing (SDB) spectrum [1], is a highly prevalent disorder in adults with progressive upper airway dysfunction during sleep [2, 3]. Most existing therapies for OSA are directed toward reversing the anatomical problem by increasing the patency of the upper airway (UA). The aim of these treatments is to restore and maintain a normal respiration during inspiration and expiration [4] and consequently a lowering of the apnea–hypopnea index (AHI). In the literature, several terms are used for classification such as: normal breathing, non-snoring, snoring, and non-breathing sounds [5,6,7]. An attempt to characterize and classify the different snoring and breathing events can be approached from their acoustical features such as, e.g., Snore Index (SI = snores per hour of sleep), loudness, frequency and duration, formant analysis [8], and psycho-acoustical features (annoyance factor) [9, 10].

The assessment of (especially residual) snoring after treatment has rarely been explored in-depth with a mandibular advancement device (MAD). Stouder et al. in 2007 performed one of the rare studies that examined forty patients with OSA and the effect of an oral appliance with mandibular advancement on several acoustical snoring parameters including frequency of snoring, average and peak loudness, and anatomic site of snoring (percentage of palatal or tongue base flutter). This study suggests that oral appliances with mandibular advancement may be an effective treatment for both palatal and tongue base snoring [11]. In 2014, Rohrmeier et al. subjected 25 raters to 55 sleep breathing sound samples of patients with OSA with the mandible in the rest position and asked them to rate psychoacoustic factors including certainty of rating in order to generate a distinction between snore sounds and normal breathing sounds [12].

The aim of the present study was to explore the effect of the automated dynamic mandibular titration system (MATRx plus®) oriented towards the increase of oxygenation (i.e., achieving a reduction of ODI) on snoring and its characterization. The underlying hypothesis was that, when a remedy is provided with respect to the airflow based on a feedback-loop aiming at optimization of ODI, automatically AHI will decrease and the sounds during sleep will be normal breathing sounds.

Material and methods

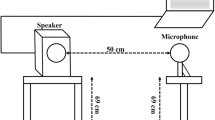

A convenience sample of 33 patients were selected from 53 patients who previously participated in a clinical trial designed to validate the efficacy of the MATRx plus® in-home feedback-controlled mandibular positioner (FCMP). The mandible was dynamically and progressively repositioned (anterior–posterior), under polysomnographic observation, without disturbing the patient based on the instantaneous measurement of the oxygen desaturation index (ODI) [13]. The audio signal was recorded by an add-on microphone (Panasonic omnidirectional electret condenser microphone, WM-61A, with the signal conditioned and digitalized at 22,050 Hz) mounted on the mandibular positioner. A 30-min episode was chosen from the 1st night one hour after the start of the study. During the episodes, the FCMPs were actively positioning the patient’s mandible in the posterior–anterior direction [13]. Four patients were excluded due to technical issues with the audio recording files.

Patients (see Table 1) showed a mean OSA severity expressed by the ODI of 31.7 (SD 18.0) at baseline — see Table 1.

Three dental internship-students, ages 24–25 years from the University of Concepcion, Chile, 1 woman (BCA) and 2 men (ACG and FMC), manually indicated the beginning and ending of sounds occurring in the 30-min episodes. They classified the sound signal recorded during the obtained time lapses into three categories: snore, breath, noise — a category for non-breathing or unidentifiable sounds. A classification certainty was attributed through a visual analogue scale (VAS) consistent with previous studies with a numbered color scale ranging from zero (red-uncertain) up to ten (green-totally certain), and an annoyance scale ranging from zero, red-extremely annoying up to ten, (blue-not annoying) [12]. At the moment of scoring, the raters had normal hearing (inquired statement, a hearing test was not performed) and were not familiar with a snoring bed partner.

The sound samples were played back sequentially on an identical laptop (Dell, Precision Mobile 5530, Xeon E-2176 M 6 Core 45 W 15.6 inch Ultra HD Touch IGZO4 3840 × 2160) and headphone (BOSE Quiet Comfort 25) by means of the software Audacity for Windows (Audacity® 2.3.1 2019). The sound pressure level was calibrated and identical for all raters. The evaluators scored separately in an identical quiet room.

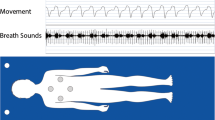

In what follows, we refer to the time lapses indicated and the corresponding signal as sound events. An event started when at least one rater started the event and stopped when all raters ended the event. Each event was sub-divided based on the respiratory cycle, measured by nasal airflow, into inspiratory and expiratory sub-events called segments.

Acoustic features for each sub-event were calculated according to the following procedures: the sound signal was divided into 40-ms-long windows with 75% overlap and a time–frequency decomposition was performed to extract power and the spectral centroid of each window. A Snore Factor was defined to reflect the rough, percussive sound of soft tissues colliding that was associated with a snore. The Snore Factor was calculated by using a Hilbert transform to extract the sound envelope which was then zero-phase filtered using a 3rd-order bandpass Butterworth filter in the range from 20 to 80 Hz and computing the root-mean square value of the resulting signal across the whole event.

A descriptive study of acoustical frequency, sound power, spectral centroid, and Snore Factor (SF) of snore events and segments and exploratory general linear model (GLM) were followed by a classification effort based on data aggregated on patient level. For technical reasons, only segments with a duration above 0.04 s were considered in the analysis and events containing at least such a segment.

When considering only the events indicated by all raters, a Fleiss' Kappa of 0.84 was observed (CI 95% [0.838; 0.851]) for the classification in noise, snore, and breath. Moreover, the absolute classification certainty (certainty score 10) ranged from 64 up to 70% for snore events depending on the rater with an average of 8.8 (sd 1.4). For breathing sounds, the absolute certainty ranged from 24% up to 29% with an average of 8.5 (sd 1.5). The analysis is based on qualification agreement of at least two raters; e.g., if at least two raters indicated that an event is snoring, the event is considered to be a snoring event.

The statistical analysis and graph plotting were performed using Excel Office 365 (Microsoft Corporation), IBM SPSS Statistics 26.0.0.0 64-bit (IBM Corp.) and MatLab R2019b (MathWorks).

Results

A total of 12,526 sound events were identified by at least one rater. Out of these events, 89% (11,122) of events were determined by at least two raters in agreement with the qualification and contained an expiration or inspiration phase of at least 0.04 s. These events consisted mostly of snore 6398 (58%) and breathing events 3652 (33%) with a low number of general noise events 1072 (10%) — see Table 2.

When refining sound events based on inspiration and expiration, the number of time lapses to consider increased from 11,122 events up to 17,090 segments (54% increase). Snoring dominated with 59% of segments, followed by breathing sounds 33%, and a small percentage of noise 8%. There was a significant relation between inspiration and expiration on the sound type (Chi2 p < 10−6). The fraction of inspiration snore sound segments is about 10% higher than the fraction of expiration sound segments, respectively, 65% and 54%. The segments belonging to the aforementioned 11,122 events were the basis for further characterization.

The skewness of Mean Power, Spectral Centroid, and Snore Factor ranged, respectively, 2.4–29.3, 1.2–1.6, and 2.2–10.9 depending on segment type; snore or breath in combination with inspiration or expiration. In order to mitigate skewness and the presence of outliers, a natural logarithmic transform (Ln) was applied. Table 3 represents the descriptive statistics for the transformed characteristics.

A full factorial repeated measures GLM with patient as a random effect and event type as a fixed effect was applied. All interaction effects (p < 0.0001) as well as the direct effects (p < 0.0001) were significant, except for the direct patient effect for the Ln Spectral Centroid (p = 0.54). For all acoustical features, the combination of classification and phase (inspiration-expiration) depended on the patient. When applying the non-parametric Kruskal–Wallis test (K-W) all segment types and breathing phases differed significantly with respect to ln Mean Power, Ln Spectral Centroid, and Ln Snore Factor (p < 0.0001).

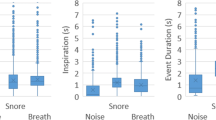

The boxplots representing the Ln Mean power and Ln Snore Factor for different segment types showed that there was a relatively high variability in combination with the presence of potential outliers, especially at the high end, despite the logarithmic transformation —– see Figs. 1a, 2 and 3a.

In the Ln Mean Power, there was a significant difference between respiratory phases. For noise and breathing sounds, the inspiration Ln Mean Power was lower than for expiration; on the contrary, for the snoring sounds the inspiration Ln Mean Power was higher than for the expiration — Table 3, Fig. 1a.

While for inspiration snore sounds, the distribution was bimodal with peaks rather close to each other, for expiration one peak may be observed that corresponds to the first peak for expiration, followed by a plateau towards the higher values — see Fig. 1b. For breathing sounds, a peak may be observed that corresponds to the snore peak, but the plateau was absent when breathing out — see Fig. 1c. For inspiration, the bimodality was not explicitly present — see Fig. 1c.

For Ln Spectral Centroid, the distributions for all sound types and breathing phases were rather similar with outliers to the left for all distributions — see Fig. 2a, b, and c. Although the overall K-W differences were significant (p < 0.0001), the difference between medians was rather small — see Table 3.

For snore sounds, the median Ln Snore Factor for breathing in was somewhat higher than breathing out, but this difference was accompanied by substantial variability — see Table 3 and Fig. 3a. Breathing sounds had a lower median Ln Snore Factor than snore sounds, with a lower variability for breathing in and a substantial variability for expiration — see Table 3 and Fig. 3a. For breathing and snore sounds during expiration, the population could be subdivided into two subpopulations while for snore sounds during inspiration, a very pronounced bimodal distribution was observed for breathing sounds, one modus remained of a rather prominent peak — see Fig. 3b, and c.

When exploring the scatterplot of Ln Mean Power, Ln Spectral Centroid, and Ln Snore Factor for snore and breath sounds during inspiration and expiration, no clearly distinct groupings was found — see Fig. 4.

The average (over raters) annoyance and certainty of classification correlated significantly (p < 0. 10−10) with all three acoustical measures introduced. The Pearson and Kendall correlation between annoyance and Ln Snore Factor were highest (r = 0.48; t = 0.35) followed by the correlations with Ln Mean Power (r = 0.46; t = 0.33), and rather weak correlations with Ln Spectral Centroid (r = 0.22; t = 0.13). All correlations with the certainty score were weak: Ln Mean Power r = 0.19, t = 0.13, Ln Spectral Centroid r = 0.13, t = 0.08, and Ln Snore Factor r = 0.20, t = 0.15.

Discussion

To our knowledge, most studies start from sound events defined by one or more OSA or sound specialists as a basis for further analysis, e.g., [6, 10, 12, 14, 15]. The use of raters without snoring experience or technical sound background has been used to mimic non-specialist bed partners. This is particularly useful if one wants to study partner — bed-partner interactions and relate to the real in situ situation. Research is required to study the relation between the score of raters without snoring experience or technical sound background and scoring of bed partners.

In a previous paper, we found a high concordance with respect to the determination of sound events and their classification among raters, making the existence of a notion of “perceptual snoring” plausible [16]. Remarkably, the raters did not make a distinction between breathing in and breathing out. All raters included both breathing phases regularly in one sound event. Perceptual snoring events do not correspond to what, in general, has been considered as a sound event by OSAS or sound specialists. This might be important when studying sound from a dyadic perspective [17], but when considering perceptual snoring events as basis for automatic characterization, the fact that expiration phases as well as inspiration phases were included in the events is a challenge. Acoustical features are expected to be different for breathing in and breathing out phases because of airflow direction [18].

The differences in Ln Mean Power between inspiration and expiration for observed snore and breath sounds are rather surprising in comparison with other studies such as Rohrmeier in 2014 and Levartovsky in 2016 [7, 12, 19]. Both claim that the expiration would be of lesser importance, even negligible. These differences, observed in our study, might be partially explained by the presence of the MAD and the degree of protrusion applied in combination with interaction between Ln Mean Power, microphone positioning with respect to the sound source and the room acoustics. Expiration snoring is present in up to 76% of snore segments, further investigations are needed to explore the influence of dynamic and partial protrusion on snoring in expiration as well as inspiration.

A possible approach to acoustical characterization is to subdivide the events according to expiration and inspiration. Although the Ln Mean Power and the Snore Factor (SF) differ significantly between noise, snore, and breath for inspiration segments as well as expiration segments, the differences are not extreme, certainly when taking into account the variability. When looking more in-depth at the distributions, the occurrence of bimodality depending on the inspiration of expiration phase suggests the presence of patient subpopulations. This does not come as a surprise: differences in patients’ anatomy are bound to influence the airflow and sounds produced depending on the airflow direction, also the differences in recording conditions might contribute. Looking at the significant patient effect in the GLM, the modeling of patient-specific features and sleep circumstances might provide a step forward.

The subdivision based on breathing phases only, might be inadequate when attempting to develop discriminating acoustical features, due not only to uncertainty on transitions between inspiration and expiration, but also the presence of periods on intermittent absence of noise neglected by the raters. A way forward in the characterization-segmentation might be to determine representative selections of periods within the perceptual events that allow for stable acoustical features. Immediately, dB, often used as a criterion in snore definitions, comes to mind, although this criterion must be properly defined when considering in situ evaluations. Calibration-standardization has to be performed to counter differences in recording conditions.

A significant positive correlation exists between the power-related measure, the Snore Factor, and the annoyance attributed to the events. Further research is required to confirm the relation with an annoyance score attributed to the segments rather than the events. The detection and subsequent elimination of snoring are expected to reduce annoyance of bed partners. Whether or not the elimination of snoring influences the clinical outcome for the snorer remains to be seen. In De Meyer et al. 2021, the inverse could not be established. When using the dynamic MAD (MATRx plus®) oriented towards the reduction of the ODI, snoring remained substantially present [16]. Future research is needed to establish whether or not treatment aimed at the reduction of snoring has importance for the clinical outcome of the snorer.

Conclusion

Perceptual sound events determined by non-experts contain a mixture of non-negligible expiration and inspiration phases making the characterization through acoustical features complex. This present study reveals that subpopulations may exist, and patient-specific features need to be introduced.

Data availability

Yes.

Abbreviations

- MATRx plus ®:

-

MATRx plus (r) Dynamic mandibular titration device (FCMP)

- GLM:

-

General linear model

- SDB:

-

Sleep disordered breathing

- OSA:

-

Obstructive sleep apnea

- AHI:

-

Apnea–hypopnea index

- ODI:

-

Oxygen desaturation index

- UA:

-

Upper airway

- SI:

-

Snore Index

- MAD:

-

Mandibular advancement device

- FCMP:

-

Feedback-controlled mandibular positioner

- VAS:

-

Visual analogue scale

- SF:

-

Snore Factor

- CI:

-

Confidence interval

- Sd:

-

Standard deviation

- Chi²:

-

Chi-square

- Ln:

-

Natural logarithmic transform

- K-W:

-

Kruskal–Wallis test

- dB:

-

Decibel

References

American Academy of Sleep Medicine International classification of sleep disorders (2014) 3rd ed. Darien: American Academy of Sleep Medicine

Zancanella E, Haddad FM, Oliveira LAMP, Nakasato A, Duarte BB, Soares CFP, Cahali MB, Eckeli A, Caramelli B, Drager L, Ramos BD, Nóbrega M, Fagondes SC, Andrada NC (2014) Obstructive sleep apnea and primary snoring: diagnosis. Braz J Otorhinolaryngol 80((1 Supl. 1)):S1–S16

Heinzer R, Vat S, Marques-Vidal P et al (2015) Prevalence of sleep-disordered breathing in the general population: the HypnoLaus study. Lancet Respir Med 3:310–318

An Y, Li Y, Liu Z, Wang J, Li T, Xiong H, Yin H, Zhang X, Xian J, Huang Y (2015) Effects of fluid shift on upper airway patency and neck circumference in normal-weight subjects. Sleep Med 16:1419–1426

Ng AK, Koh TS, Abeyratne UR, Puvanendran K (2009) Investigation of obstructive sleep apnea using nonlinear mode interactions in nonstationary snore signals. Ann Biomed Eng 37(9):1796–806

Dafna E, Tarasiuk A, Zigel Y (2013) Automatic detection of whole night snoring events using non-contact microphone. PLoS ONE 8(12):e84139

Levartovsky A, Dafna E, Zigel Y, Tarasiuk A (2016) Breathing and snoring sound characteristics during sleep in adults. J Clin Sleep Med 12(3):375–384

Ng AK, Koh TS, Baey E, Puvanendran K (2007) Diagnosis of obstructive sleep apnea using formant features of snore signals. IFMBE Proceedings 14(2):967–970

Ng AK, Koh TS (2008) Using psychoacoustics of snoring sounds to screen for obstructive sleep apnea. Conf Proc IEEE Eng Med Biol Soc 1-8:1647–1650

Rohmeirer C, Herzog M, Haubner F, Kuehnel TS (2012) The annoyance of snoring and psychoacoustic parameters: a step towards an objective measurement. Eur Arch Otorhinolaryngol 269:1537–1543

Stouder S, Jones L, Brietzke S, Mair EA (2007) Does an oral appliance reduce palatal flutter and tongue base snoring? Otolaryngology-Head and Neck Surgery 136:827–831

Rohrmeier C, Herzog M, Ettl T, Kuehnel TS (2014) Distinguishing snoring sounds from breath sounds: a straightforward matter? Sleep Breath 18:169–176

Remmers JE, Topor Z, Grosse J, Vranjes N, Mosca EV, Brant R, Bruehlmann S, Charkhandeh S, Jahromi SA (2017) A feedback-controlled mandibular positioner identifies individuals with sleep apnea who will respond to oral appliance therapy. J Clin Sleep Med 13(7):871–880

Dreher A, Rader T, Patscheider M, Klemens C, Schmidt M, Baker F, de la Chaux R (2009) The annoyance of snoring. Eur Arch Otorhinolaryngol 266:293–296

Rohrmeier C, Fischer R, Merz AK, Ettl T, Herzog M, Kuehnel TS (2015) Are subjective assessments of snoring sounds reliable? Eur Arch Otorhinolaryngol 272(1):233–240

De Meyer MMD, Jahromi SA, Dillon A Hambrook DA, Remmers JE, Marks LAM, Jacquet W (2021) Perceptual snoring as a basis for a psychoacoustical modeling and clinical patient profiling. Sleep Breath https://doi.org/10.1007/s11325-021-02348-6.

Fischer R, Kuehnel TS, Merz AK, Ettl T, Herzog M (2016) Rohrmeier C (2016) Calculating annoyance: an option to proof efficacy in ENT treatment of snoring? Eur Arch Otorhinolaryngol 273(12):4607–4613

Oliveira A, Marques A (2014) Respiratory sounds in healthy people: a systematic review. Respir Med 108(4):550–570

Pevernagie D, Aarts RM, De Meyer M (2010) The acoustics of snoring. Sleep Med Rev 14(2):131–144

Acknowledgements

Bernardita A. Cárcamo, Andrés M. Chavez, Felipe A. Matamoros, Internship Students University de Conception, Chilé (2019); Pascale Van Damme, VP EMEA VMware business, Dell Technologies.

The authors would like to show all gratitude to all the volunteering patients-snorers. The authors like to thank Zephyr Sleep Technologies (Canada) for the provision of the sound and sleep test data.

Funding

Funding for the trial was provided by Alberta Innovates Technology Futures, Zephyr Sleep Technologies and the NRC-IRAP Industrial Assistance Fund.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Ethics approval and consent to participate

The clinical trial was approved by the Conjoint Health Research Ethics Board of the University of Calgary, Canada and informed consent was obtained from all participants.

(Ethics ID: E-24903).

Conflict of interest

The co-authors Seyed Abdolali Zareian Jahromi, Dillon A Hambrook, John E. Remmers are employees affiliated with the Zephyr Sleep technologies, Calgary, Canada.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Jacquet, W., De Meyer, M.M.D., Remmers, J.E. et al. The acoustical and perceptual features of snore-related sounds in patients with obstructive sleep apnea sleeping with the dynamic mandibular advancement system MATRx plus®. Sleep Breath 26, 215–224 (2022). https://doi.org/10.1007/s11325-021-02392-2

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11325-021-02392-2