Abstract

Edge Computing is a novel paradigm that extends Cloud Computing by moving computation closer to end users and/or data sources. Edge Computing makes it possible to design a three-tier architecture (comprising tiers for cloud devices, edge devices, and end devices) that suits emerging IoT applications demanding low latency, geo-localization, and energy efficiency. Like the Cloud, the Edge Computing paradigm relies on virtualization. An Edge Computing virtualization model provides a set of virtual nodes (VNs) built on top of the physical devices that make up the three-tier architecture. It also provides the processes of provisioning and allocating VNs to IoT applications at the edge of the network. Performing these processes efficiently and cost-effectively raises a resource management challenge. Traditional cloud virtualization models (typically centralized) are unsuitable for virtualizing the edge tier in emerging IoT application scenarios, owing to the specific features of edge nodes, such as geographical distribution, heterogeneity, and resource constraints. Therefore, we propose a novel distributed and lightweight virtualization model that targets the edge tier and encompasses the specific requirements of IoT applications. We designed heuristic algorithms along with a P2P collaboration process to operate in our virtualization model. The algorithms perform (i) a distributed resource management process and (ii) data sharing among neighboring VNs. The distributed resource management process provides each edge node with decision-making capability, engaging neighboring edge nodes to allocate or provision VNs on demand. Thus, distributed resource management improves system performance, serving more requests and handling the geographical distribution of edge nodes. Meanwhile, data sharing reduces data transmissions between end devices and edge nodes, saving energy and reducing data traffic in IoT-edge infrastructures.

1 Introduction

The Internet of Things (IoT) is considered one of the main components of the Internet of the future [69]. The IoT is a novel paradigm in which smart objects (things) communicate with each other and with physical and/or virtual resources through an existing network infrastructure, including the Internet [6, 33]. The IoT is attractive to several application domains (e.g., healthcare [16], smart cities [76], smart homes [74], and industry [57]). However, the wide dissemination of IoT applications and the growth in the number of devices connected to the Internet have given rise to many challenges regarding the infrastructure that supports these applications. In this context, paradigms grounded on virtualized infrastructures, such as Cloud Computing [5], Cloud of Things (CoT) [17], Cloud of Sensors [60], Fog/Edge Computing [9, 10], and, most recently, the Edge Mesh [59] approach, have emerged as solutions for storing, processing, and distributing the data generated by IoT devices.

In recent years, the Cloud Computing paradigm [5] has been advocated as a promising solution to tackle most IoT issues regarding reliability, performance, and scalability by acting as an intermediary between smart objects and the applications that use the data and resources provided by these objects. Indeed, with its vast computational capacity, cloud computing goes hand-in-hand with IoT, acting as the backend infrastructure for processing and long-term storage of the massive amount of data produced by IoT devices. The integration between IoT and Cloud has been addressed by several works and gave birth to the paradigm called Cloud of Things (CoT) [2, 4, 11, 17, 21, 60]. CoT extends the traditional Cloud models (IaaS, PaaS, and SaaS) with novel models such as Sensing as a Service [63] and Sensing and Actuation as a Service [23], to name a few.

Despite its advantages, the Cloud provides centralized services that are unsuitable for meeting the requirements of some types of IoT applications. Several problems remain unsolved on the application side, mainly concerning the latency of data transmissions between devices and the Cloud, mobility, geo-distribution, energy efficiency, and location-awareness, among others [9, 10, 13, 53, 56, 79]. To overcome these open issues, the Edge Computing paradigm has recently emerged as a solution for delivering data and real-time data processing closer to the data source [77].

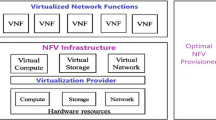

Fog/Edge Computing [1, 9, 10, 43, 46, 50, 65] is a computing paradigm strongly based on the virtualization [40] of physical edge devices, which decouples applications from both the edge infrastructure and the end devices. To achieve this goal, the physical infrastructure is abstracted through a set of virtual entities. A virtual entity represents, for users, the resources available at the Fog infrastructure (e.g., data, computation, and communication capabilities). End devices are devices capable of performing sensing and actuation tasks (e.g., a temperature sensor or a relay actuator). According to Yi et al. [77], the physical edge devices (also called Fog or Edge nodes) encompass both resource-poor devices (e.g., access points, smart routers, switches, base stations, and smart sensors) and more powerful computing nodes, such as Micro Datacenters and server clusters (e.g., Cloudlet [62] and IOx [20]). These devices are heterogeneous regarding (i) their physical capabilities/resources (vertical heterogeneity) and (ii) the services they provide (horizontal heterogeneity) [32]. Moreover, they can provide resources for services at the edge of the network and execute tasks that were previously assigned to the cloud (e.g., data collection from end devices, data storage, data preprocessing, and data filtering), thereby decreasing the data traffic to cloud servers. Therefore, Fog Computing already meets some requirements of emerging IoT applications, such as low latency, energy efficiency, and location-awareness. It is worth noting that the terms “Fog” [8] and “Edge” [31] come from different communities and sometimes carry slightly different meanings while expressing the same type of computation. Thus, for the remainder of this paper we adopt the term “Edge” to denote the tier (providing computation, storage, and communication capabilities) between the physical objects (things/sensors) that act as data sources and the remote cloud data centers.

Although Edge Computing already meets some requirements of emerging IoT applications (e.g., low latency, energy efficiency, and location-awareness), several other challenges must be addressed to fully benefit from the synergy between IoT and Edge Computing. Among such challenges are the high heterogeneity (of devices and applications), the resource constraints of edge devices (in comparison to the cloud), the mobility and geo-distribution of edge devices, and the promotion of fair and cost-effective consumption of the available resources. In our work, we focus on the challenges of designing a suitable virtualization model, managing the collaboration process among edge devices, and solving resource management issues. Our goal is to leverage the state of the art in the Edge Computing and CoT paradigms to meet requirements such as heterogeneity, mobility, and geo-distribution of devices, scalability of services, and low response time [3, 9, 32, 50]. The addressed challenges and the respective requirements are described in the next Section.

1.1 Current challenges in edge computing

In this Section, we describe the research challenges found in the current literature regarding the virtualization model, collaboration process, and resource management for Edge Computing, which are within the scope of the contributions of this paper.

Virtualization is widely used and well addressed in Cloud Computing. However, in the context of Edge Computing, it is necessary to consider the specific features of edge devices, such as heterogeneity, resource constraints, and geographical distribution (geo-location) [50, 65, 77], and to propose novel models tailored to this new scenario. Placing edge nodes at the edge of the network is advantageous compared to cloud nodes because it brings computing closer to data sources. However, this geo-distribution also brings challenges regarding the management and deployment of applications. The heterogeneity of edge nodes hinders both the development and maintenance of the services they provide, increasing the complexity and amount of generated code as well as the coding time [15, 30]. Since edge devices are more resource-constrained than cloud nodes, Edge Computing requires lighter virtualization models. Moreover, the design of a new virtualization model for the edge must also consider the requirements of emerging IoT applications, such as low latency and fast response to events.

Another challenge in proposing a virtualization model for edge computing is how to perform data sharing between virtual entities [59]. Data sharing can benefit the system by increasing energy savings (thus extending the lifespan of sensors) and reducing bandwidth consumption. Santos et al. [61] proposed a virtualization model in which Virtual Nodes (VNs) are provided to store and share (with other VNs) raw sensing data generated by one or many sensor devices, or data processed using a data fusion technique. In this model, VNs can only interact with each other within the same workflow, where a workflow represents the set of application tasks. Thus, whenever there is a dependency between the data inputs and outputs of application requests, the VNs need to share their data. This model represents a typical dataflow approach, in which the processing result (data output) of one node is the processing input (data input) of another node [38]. Such an approach is passive, and data sharing occurs only when requested; i.e., a VN does not automatically send its data to other VNs that handle the same datatype. Therefore, the challenge is to make data sharing an active process by means of a collaboration process in Edge Computing, allowing a VN to share its data with neighboring VNs efficiently and transparently to improve sensor lifespan (energy saving).
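To make the contrast between passive and active sharing concrete, the sketch below shows a VN that, after reading its sensor, pushes the fresh sample to neighboring VNs serving the same datatype, and that answers later requests from this shared copy while it is within the freshness threshold fr (defined for simple datatypes in Section 3.2.1). It is a minimal illustration under our own assumptions: the names Sample, VirtualNode, read_sensor, share, and get are hypothetical, the neighbor list is static, and freshness is a plain timestamp comparison. It is not the LW-CoEdge implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Sample:
    value: float
    timestamp_ms: int  # time at which the end device produced the value


@dataclass
class VirtualNode:
    # Hypothetical VN model: one datatype per VN, a static list of neighbor VNs.
    vn_id: str
    datatype: str
    neighbors: List["VirtualNode"] = field(default_factory=list)
    latest: Optional[Sample] = None
    sensor_reads: int = 0  # counts physical reads (a proxy for sensor energy use)

    def read_sensor(self, value: float, now_ms: int) -> None:
        """Acquire a new value from the end device, then actively share it."""
        self.sensor_reads += 1
        self.latest = Sample(value, now_ms)
        self.share(self.latest)

    def share(self, sample: Sample) -> None:
        # Active sharing: push the sample only to neighbors with the same datatype.
        for peer in self.neighbors:
            if peer.datatype == self.datatype:
                peer.receive(sample)

    def receive(self, sample: Sample) -> None:
        # Keep whichever sample is newer; received data is not re-forwarded.
        if self.latest is None or sample.timestamp_ms > self.latest.timestamp_ms:
            self.latest = sample

    def get(self, fr_ms: int, now_ms: int, read: Callable[[], float]) -> float:
        """Serve a request, reusing cached data if it is at most fr_ms old."""
        if self.latest is not None and now_ms - self.latest.timestamp_ms <= fr_ms:
            return self.latest.value
        self.read_sensor(read(), now_ms)
        return self.latest.value
```

If a VN with datatype "temperature" reads its sensor and shares the value, a neighboring "temperature" VN can answer a request arriving within fr without reading its own sensor, which is the energy saving that active sharing targets; a "humidity" neighbor is left untouched.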

Regarding the collaboration process, several works in Edge Computing use the hierarchical model to share resources, since such a model makes it easy to set up collaboration between edge nodes [10, 13, 46, 66, 72]. According to Mahmud et al. [46], this model is typically based on the master-slave concept, in which the master edge node controls the functionalities, processing load, data flow, etc. of its subordinate slave nodes. Although this approach facilitates collaboration, it has at least two significant drawbacks. The first drawback is the strong dependency on the master node: if the master node fails, communication with the slave nodes is interrupted, leaving the underlying network inaccessible. This problem leads to the second drawback: procedures are required to re-establish the hierarchy, which can consume considerable processing resources, bandwidth, and energy, thus affecting the capability of the system to meet application requests. According to Mahmud et al. [46], the master-slave approach, when assembled in a cluster or Peer-to-Peer (P2P) model, is inadequate for real-time data processing environments, since master-slave communication produces high bandwidth consumption. A feasible solution to these drawbacks is to organize edge nodes in groups, i.e., in a neighborhood, and promote node-to-node communication within the neighborhood, without the need for a node acting as a communication controller. Therefore, since the hierarchical approach has drawbacks in a dynamic scenario such as the Edge Computing environment, we argue that the flat P2P approach [46] is a feasible solution for adequately tackling the collaboration process between edge nodes. In such an approach, proximity defines the connections between nodes, thereby reducing latency and saving bandwidth during communication, and in turn improving the response time for requests from latency-sensitive applications, for instance.
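The flat P2P idea above can be illustrated with a short sketch: neighborhoods are formed by proximity, and a node queries its neighbors directly, closest first, with no master node involved. It assumes planar coordinates, a fixed radius as the neighborhood criterion, and a set of datatypes per node; EdgeNode, build_neighborhood, and find_peer are hypothetical names, and the radius rule is only a stand-in for whatever neighborhood definition the SPM actually configures.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class EdgeNode:
    name: str
    x: float
    y: float
    datatypes: Set[str] = field(default_factory=set)  # datatypes its VNs serve
    alive: bool = True
    neighbors: List["EdgeNode"] = field(default_factory=list)

    def distance(self, other: "EdgeNode") -> float:
        return math.hypot(self.x - other.x, self.y - other.y)


def build_neighborhood(nodes: List[EdgeNode], radius: float) -> None:
    """Flat P2P: link every pair of nodes within `radius`, closest first."""
    for n in nodes:
        n.neighbors = sorted(
            (m for m in nodes if m is not n and n.distance(m) <= radius),
            key=n.distance,
        )


def find_peer(node: EdgeNode, datatype: str) -> Optional[EdgeNode]:
    """Serve locally if possible, else ask neighbors directly, skipping failed ones."""
    if datatype in node.datatypes:
        return node
    for peer in node.neighbors:
        if peer.alive and datatype in peer.datatypes:
            return peer
    return None  # no neighbor can help; provisioning would be the next step
```

Because no node coordinates the others, the failure of one peer only removes that peer from the candidate list, which matches the fault-isolation argument made against the master-slave model.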

Resource management encompasses two primary activities, namely resource allocation and resource provisioning. Resource provisioning is responsible for preparing the infrastructure to host and instantiate the virtual entities that will further meet the requests issued by applications [66, 72, 78]. In turn, resource allocation assigns the resources required by the virtual entities to adequately meet the workload of the multiple applications using the edge infrastructure at a given moment [22, 26]. Solutions for resource management are well established in the Cloud Computing field. However, in a scenario involving a mix of IoT devices and edge nodes, the centralized model of the Cloud is unsuitable, since the edge nodes are distributed along the edge of the network. Several issues remain open in this regard [22, 59, 72, 75]. Recent works [61, 66, 72] have proposed models to deal with resource management in Edge Computing. However, these works adopt centralized or hierarchical models to support distributed decision-making at the edge of the network. In these models, the master node is responsible for identifying the edge slave node capable of receiving the workload, and the slave node is in charge of provisioning the resources required to run the workload. Therefore, existing solutions are not fully distributed and present the aforementioned drawbacks. We argue that, to properly tackle this issue, resource management must be fully distributed at the edge of the network.

1.2 Goals of our work

To contribute solutions to the challenges mentioned in Section 1.1 and advance the state of the art in Edge Computing, in this paper we propose and evaluate LW-CoEdge, a new virtualization model enhanced with a collaboration process. The main goal of this work is to design a lightweight virtualization model for Edge Computing that is based on the virtual node concept proposed by Olympus [60, 61] and enhanced with a flat P2P collaboration process to enable both data sharing and distributed resource management at the edge of the network.

The remainder of this paper is organized as follows. Section 2 discusses related work. Section 3 describes our lightweight virtualization model and the collaboration process. Section 4 describes the heuristic algorithms for our distributed resource management and P2P collaboration. Section 5 discusses the evaluation, and Section 6 presents final remarks.

2 Related work

In this Section, we describe works related to ours in light of the following main aspects: (i) computing paradigm and virtualization model, (ii) P2P collaboration, (iii) resource management, and (iv) architecture (two- or three-tier). Finally, we present a classification of the described papers.

2.1 Computing paradigm and virtualization model

Two recent works brought significant advances to the Cloud of Sensors (CoS) field [45, 61]. Madria et al. [45] proposed a centralized virtualization model that encompasses Virtual Sensors and provides sensing as a service to users (SaaS). Our proposal differs from [45] in that we implement a decentralized virtualization model tailored to the requirements of emerging IoT applications, such as low latency and location-awareness. Santos et al. [61] extended their original design of Olympus [60] into a three-tier CoS infrastructure by including the Edge tier to provision Virtual Nodes (VNs) at the edge of the network. Our proposal differs from Olympus in two essential aspects. First, we provide a collaboration process between VNs to actively share fresh data with neighboring VNs. Thus, we avoid re-reading the sensors to obtain the same data, thereby improving response time, latency, bandwidth usage, and sensor lifespan. Second, Olympus defines the VN as a program capable of performing a set of information fusion techniques based on application requirements. Unlike Olympus, our model provides predefined types of VNs, each representing a data type provided to serve application requests. This is important to favor the collaboration process among virtual nodes. Sahni et al. [59] present a novel computing approach named Edge Mesh, which integrates the best characteristics of Cloud Computing, Edge Computing, and Cooperative Computing into a mesh network of edge devices and routers to decentralize decision-making tasks instead of sending them to a centralized server for processing. It enables collaboration between edge devices for data sharing and computation tasks, as well as interaction between different end devices. However, the authors point out several open issues in implementing communication between different types of devices, such as how and which data are shared between edge devices, and the appropriate location to execute the application intelligence at the edge of the network. Our proposal leverages the advances of the Edge Mesh approach and addresses these open issues. We implemented a P2P collaboration process that enables data sharing between VNs and a resource management process distributed among edge nodes. Our data sharing process is smart because it considers the data requirements of other VNs before replicating data. Moreover, the distributed resource management gives each edge node the ability to make decisions and engage neighboring edge nodes to allocate or provision VNs whenever necessary.

2.2 Collaboration

According to Mahmud et al. [46], P2P collaboration can be implemented in either a hierarchical or a non-hierarchical (flat) manner. Mobile Fog [35] is a PaaS that provides a high-level, event-based programming model. Applications use Mobile Fog to send messages between nodes or to obtain sensing data from an active sensor, among other functions. Mobile Fog uses a hierarchical network topology of edge devices. It distributes processes across the cloud (the root node of the hierarchy), the fog (intermediate nodes), and the edge (leaf nodes). Our proposal differs from Mobile Fog in two fundamental aspects. First, we adopt flat P2P collaboration between edge nodes to avoid the drawbacks of the hierarchical model. In a flat P2P model, if a physical node fails, only the VNs provisioned on that node are lost, and the other VNs continue working. Second, our proposal provides VNs that are pre-programmed to perform the essential operations for delivering sensing data or performing actuation. Thus, end-users access the VNs by including in their applications a simple call that submits requests to our CoT system, without the need to implement the VN behavior from scratch. Shi et al. [64] provide a non-hierarchical P2P view of Fog Computing that connects the cloud of sensors and smart devices via mobile devices. The infrastructure offers and consumes resources and services of mobile devices through the REST pattern using the CoAP protocol, thereby disseminating data among users in a decentralized way. Unlike Shi et al. [64], we designed a new virtualization model capable of running VNs that provide sensing data or perform actuation in response to application requests directly at the edge of the network. Moreover, we implemented a collaboration process that allows VNs to share data with their neighboring nodes without user mediation, thereby improving request response time and saving bandwidth.

Concerning collaboration between edge nodes, Taleb et al. [68] introduced “Follow Me Edge”, a concept based on Mobile Edge Computing (MEC) that provides a two-tier architecture to enable container migration across edge nodes according to the location of their mobile users. Although container migration emerges as a feasible solution for the mobility requirement, the authors state that selecting the appropriate migration technique is vital to avoid both communication latency and data synchronization problems. Our proposal differs from [68] by providing flat P2P collaboration that shares only sensing data between edge nodes. Thus, we avoid transferring large container images through the network, since each edge node already provides its services (VN containers), thereby saving bandwidth and decreasing latency.

2.3 Resource management

Recent works on resource allocation and provisioning take advantage of Edge Computing to enable workload offloading from Cloud servers to edge devices, i.e., closer to users. Zenith [75] is a novel resource allocation model for Edge Computing. It uses Weighted Voronoi Diagrams (WVD) [36] to divide a geographic area composed of several Micro Datacenters (MDCs). It then allocates the infrastructure resources bought by service providers (from the MDC with the lowest communication latency) to run their services. Our model applies the ideas behind Zenith by using the WVD algorithm to divide an Edge network into regions and build the neighborhoods of edge nodes. Unlike Zenith, our model assigns virtual nodes running on the edge node to provide either raw or processed data in response to application requests. Wang et al. [72] present Edge Node Resource Management (ENORM), a framework for handling application requests and offloading workloads from the Cloud to run at the Edge network. ENORM addresses the resource management problem through a provisioning and deployment mechanism that integrates an edge node with a cloud server, and an auto-scaling tool that dynamically manages edge resources. Although our resource management approach is inspired by ENORM, our proposal is fully decentralized at the edge network. This feature enables edge nodes to find or provision the best VNs for providing either raw or aggregated sensing data, or for performing actuation, in response to user application requests arriving from the cloud or the edge of the network. Skarlat et al. [66] present a hierarchical architecture of fog colonies for resource provisioning and orchestration in both the cloud and the fog that takes into account the available resources in Fog/IoT scenarios. This architecture encompasses a cloud-fog control middleware that controls fog cells at the IoT tier through a fog orchestration control node. Fog cells are software components serving as access points to IoT devices. They can receive and execute tasks (e.g., data analysis, data storage, monitoring, data transfer), allocate resources, and communicate with the fog orchestration control node to propagate tasks. The ideas behind this framework converge with our proposal regarding data sharing. However, our approach implements data sharing using flat P2P collaboration, i.e., a fully decentralized approach.
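As an illustration of how a Weighted Voronoi Diagram partitions an area, the sketch below assigns each location to the edge node that minimizes Euclidean distance divided by a per-node weight, i.e., the multiplicatively weighted variant. This is an assumption: the exact weighting used by Zenith or by our model is not specified here, so the weights (for example, a capacity score) and the names weighted_voronoi_regions and sites are hypothetical. The code does not construct cell geometry; it only labels sample points, which is enough to show how a heavier weight enlarges a node's region.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def weighted_voronoi_regions(
    sites: Dict[str, Tuple[float, float, float]], points: List[Point]
) -> Dict[str, List[Point]]:
    """Multiplicatively weighted Voronoi labeling.

    sites maps an edge node name to (x, y, weight); a point belongs to the
    site minimizing distance(point, site) / weight, so larger weights claim
    larger regions.
    """
    regions: Dict[str, List[Point]] = defaultdict(list)
    for px, py in points:
        best = min(
            sites,
            key=lambda s: math.hypot(px - sites[s][0], py - sites[s][1]) / sites[s][2],
        )
        regions[best].append((px, py))
    return dict(regions)
```

With equal weights this reduces to an ordinary Voronoi partition; doubling one node's weight pulls the boundary toward its competitor, so nearby points switch regions.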

2.4 Architecture

Munir et al. [50] present the Integrated Fog Cloud IoT Architecture (IFCIoT), a fog-centric architectural paradigm that integrates IoT, Fog, and Cloud into the three-tier architecture to support five service layers, namely the Application, Analytics, Virtualization, Reconfigurable, and Hardware. It is designed to enhance system properties including performance, energy efficiency, latency, scalability, and to provide better-localized accuracy for IoT and cyber-physical systems (CPS) applications. Despite all the innovation, IFCIoT does not show how the fog nodes can collaborate with each other for data sharing, for instance. Our proposal differs from the IFCIoT by providing a lightweight virtualization model through virtual nodes and a collaboration process between the fog nodes that is fully distributed.

2.5 Classification

Table 1 summarizes the main characteristics, contributions, and open issues of the related works and includes the features of our work. In this table, the  symbol indicates a feature supported by the respective work, and the  symbol means an open issue.

3 LW-CoEdge model

This Section presents LW-CoEdge, our proposal for a lightweight virtualization model enhanced with a collaboration process for Edge Computing. Initially, we detail the three-tier architecture considered in this paper (Section 3.1) and then we describe the virtualization model (Section 3.2). Next, we present the collaboration process (Section 3.3) that encompasses the data sharing and the distributed resource management.

3.1 The three-tier architecture

Figure 1 depicts the architecture adopted in this work to support the virtualization model. We assume an ecosystem composed of three tiers: (i) Cloud tier (CT), (ii) Edge tier (ET), and (iii) End device tier (EdT).

The Cloud tier (CT) is the top level of the architecture. It includes a set of cloud nodes $CN = \{cn_1, cn_2, \ldots, cn_n\}$. Each $cn_i$ represents a physical data center that provides elastic resources on demand (e.g., processing, storage, energy, and bandwidth). Hence, the CT can provide virtually unlimited resources for high-performance computing tasks such as time-based data analysis and long-term storage of large amounts of data.

The Edge tier (ET) is the middle level and encompasses a set of edge nodes $EN = \{en_1, en_2, \ldots, en_n\}$ placed geographically closer to the data sources; i.e., they are closer to the End device tier than the cloud nodes are. Moreover, each $en_i$ provides the same types of resources as a cloud node $cn_i$, but on a smaller scale; i.e., $en_i$ is a less powerful device than $cn_i$.

Finally, the End device tier (EdT) is the bottom level and encompasses a set of end devices $Ed = \{ed_1, ed_2, \ldots, ed_n\}$ deployed over a geographical area. The end devices $ed_i$ are heterogeneous regarding processing speed, total memory, and energy capacity, and each $ed_i$ is able to provide sensing data and/or perform actuation tasks. Thus, the EdT may comprise smart devices such as smartwatches or smartphones, or smart sensors connected to and composing one or more Wireless Sensor and Actuator Networks (WSANs).

In our architecture, we assume that the cloud nodes (CNs) and edge nodes (ENs) host the majority of computational entities in our work, namely: APIs, Virtual Nodes, Resource Management components, and sensing & actuation components responsible for managing the interactions between the VNs and the End devices tier. The CN and EN host the APIs that expose the application entry point and the management services of the framework. The entry point is the service accessed by the users to submit the application requests. Concerning the components hosted at the EN, they are responsible for handling the application requests by performing tasks to provide sensing data (acquired from end devices) or to perform actions (on the end devices). All computational units are detailed in the following sections.

Furthermore, we identified the main actors in typical CoT infrastructures. These actors can be either humans or software systems. A software system can be a web site (a Web Portal), a web service, or a mobile app that interacts with our CoT system by calling the APIs to request information about the monitored environment or to perform some actuation. Seismic monitoring, traffic monitoring, and temperature control are examples of typical IoT applications (accessed via a web site or deployed as a mobile app). A human actor is a user of the system playing roles such as Software Developer, End-user, or Service Provider Manager. The Software Developer is in charge of programming the above-mentioned software and is also responsible for programming the end devices to send sensing data to the CoT system. The End-user operates the software to perform some action (sending a request to our system), either via a Web Portal or via mobile devices (e.g., smartphone, tablet, smartwatch) on which the software is installed. The last human actor is the Service Provider Manager (SPM), who is responsible for configuring the CoT system through a set of management APIs, describing the datatype(s) used by the Virtual Node to meet the requests and configuring the neighborhood of edge nodes. The SPM can specify a simple datatype or combine datatypes to provide more accurate or more complex data for the application. Moreover, the SPM can engage one or many end devices to compose a datatype. In the next Section, we describe the virtualization model, the relationships between its elements, and the formal description of the Virtual Node, the application request, and the Datatype descriptor used in the virtualization.

3.2 The virtualization model

LW-CoEdge provides the services of the physical infrastructure (things, edge, and cloud) to users and their applications by means of a virtualization model. Our proposed model leverages the Edge tier to provision Virtual Nodes (VNs) closer to the data sources (end devices) and provides a collaboration process to support distributed intelligence and decision-making at the edge tier. In addition, it decouples users’ applications from the physical details of both edge nodes and end devices. Thus, we leverage the VN as the core computational unit of the virtualization model to provide sensing data or perform actuation in response to application requests. LW-CoEdge comprises the VN instantiation process along with three main sub-processes, namely (i) a process that performs resource management (encompassing allocation and provisioning), (ii) a process for managing sensing and actuation tasks, and (iii) a process for managing the collaboration among nodes. Figure 2 summarizes the components implementing these processes and the relationships among them.

3.2.1 The definition of the application request and the system operation

Regarding the system operation, End-users operate software (web site or application) to perform actions that generate requests to the system using the system API. This API also provides the functionalities to list the available datatype descriptors for the Software Developers in their geolocation or for the entire system (when requested via cloud). From this list, the Software Developers choose the datatype to be used in their requests.

A request represents an abstract command to get sensing data or perform actuation in order to fulfill the functional requirements of the application. We describe the request (Figure 3a) as a tuple Request = (datatype, param, callback). The datatype denotes the type of requested data (a simple type, a complex type, or an actuation command); its specification is presented further below. In this property, the Software Developer sets only the id of the datatype descriptor previously chosen from the repository. The param represents a set of properties required by the Virtual Node (VN) to process a datatype, and the callback is a notification procedure. Figure 3b presents an example of code representing a request for a simple datatype. The VN uses the callback address to deliver the processing response, with either a data stream or the status of the actuation command, to the request issuer. The properties of param depend on the datatype. A simple datatype encompasses the properties fr (data freshness threshold, in milliseconds), rtth (expected response time threshold, in milliseconds), and sr (data sampling rate). Finally, complex and actuation-command datatypes encompass only the property rtth, since they do not obtain data from the sensor.
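The request tuple and its parameter rules can be captured in a few lines. The sketch below keeps Request = (datatype, param, callback) exactly as defined above and adds a separate validation step that checks param against the datatype kind: simple requires fr, rtth, and sr; complex and actuation command require only rtth. Passing the kind explicitly is our assumption (in the model it would be resolved from the datatype descriptor), and the names DatatypeKind, REQUIRED_PARAMS, and validate, as well as the example ids and callback URL, are hypothetical. The code does not reproduce Figure 3b.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Any, Dict


class DatatypeKind(Enum):
    SIMPLE = "simple"
    COMPLEX = "complex"
    ACTUATION = "actuation"


# Properties each kind of datatype expects in `param` (Section 3.2.1).
REQUIRED_PARAMS = {
    DatatypeKind.SIMPLE: {"fr", "rtth", "sr"},  # freshness (ms), response time (ms), sampling rate
    DatatypeKind.COMPLEX: {"rtth"},
    DatatypeKind.ACTUATION: {"rtth"},
}


@dataclass(frozen=True)
class Request:
    datatype: str          # id of a datatype descriptor chosen from the repository
    param: Dict[str, Any]  # properties the VN needs to process the datatype
    callback: str          # address the VN uses to deliver the response


def validate(request: Request, kind: DatatypeKind) -> None:
    """Raise ValueError unless `param` has exactly the properties for `kind`."""
    expected = REQUIRED_PARAMS[kind]
    given = set(request.param)
    if given != expected:
        raise ValueError(
            f"{kind.value} request needs {sorted(expected)}, got {sorted(given)}"
        )
```

For example, Request("dt-temperature", {"fr": 500, "rtth": 200, "sr": 1.0}, "http://app.example/cb") passes as a simple request but is rejected as an actuation command, because an actuation command carries only rtth.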

Submitted requests arrive at the system through the entry point at the Cloud or via an edge node. When requests arrive via the Cloud, the system must forward each request to the edge node nearest to the location of the application request. This requires an algorithm to find the closest edge node; however, designing such an algorithm is out of the scope of the current work, so we suggest using existing approaches from the literature, such as Nishant et al. [54] and Xu et al. [75]. Regardless of the entry point in our CoT system, requests are served by the virtualization system at the selected nearest edge node.
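Since nearest-edge-node selection is left to existing work, the following is only a naive placeholder, not the approach of [54] or [75]: it picks the edge node with the smallest great-circle (haversine) distance to the request location and ignores network latency and node load. The node names and coordinates are hypothetical.

```python
import math
from typing import Dict, Tuple

EARTH_RADIUS_KM = 6371.0
LatLon = Tuple[float, float]  # (latitude, longitude) in degrees


def haversine_km(a: LatLon, b: LatLon) -> float:
    """Great-circle distance in kilometers between two points."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_edge_node(request_location: LatLon, edge_nodes: Dict[str, LatLon]) -> str:
    """Naive choice: the edge node geographically closest to the request."""
    return min(edge_nodes, key=lambda name: haversine_km(request_location, edge_nodes[name]))


# Hypothetical edge node locations (Rio de Janeiro and Sao Paulo).
edge_nodes = {"EN1": (-22.86, -43.23), "EN2": (-23.55, -46.63)}
target = nearest_edge_node((-22.90, -43.20), edge_nodes)  # "EN1"
```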

Inside the virtualization system, the Resource Allocator (RA) is the component in charge of the resource allocation process, which allocates a Virtual Node (VN) in response to an application request. The RA receives a submitted request via the API and searches the cache of instances for a VN whose datatype matches the requested datatype. When such a VN is found, the RA forwards the request to it so that it executes the tasks and provides the requested datatype. Whenever the RA cannot allocate a VN instance, or the selected one is busy fulfilling other requests, it invokes the Resource Provisioner (RP). The RP is the component in charge of the resource provisioning process. It provisions a new VN instance (whenever the RA did not find one available) or scales up an existing VN instance (when the current one is busy but resources are still available). The RP also registers new VN instances in the cache of instances and returns the new instance to the RA. The new (or scaled-up) VN instance is created or configured to serve the datatype stated in the received request. The instantiation (deployment) and scale-up tasks are the responsibility of the Edge Node Manager, an important component that abstracts the physical details of the edge nodes by providing services that support both the collaboration process and resource provisioning. Besides these tasks, the Edge Node Manager also un-deploys VNs and scales down their resources, checking the availability of edge node resources and of other services (for instance, the loading of edge node settings). Furthermore, before performing its tasks, the Edge Node Manager checks the availability of the datatype descriptors in the repository and loads them.
Accordingly, when the edge node does not have enough resources to instantiate the VN, the RP invokes the Collaboration Manager to identify a neighboring node that can provision a new VN. If no neighbor can be selected, the request is refused and the RP raises a warning to the RA. In parallel, the RP uses the Collaboration Manager, which interacts with existing VNs, to register the new VN instance for data sharing. The Collaboration Manager is the component in charge of enabling the collaboration process (Section 3.3) at the Edge tier.

When a VN receives a request to process, it interacts with the Sensing Manager and the Actuation Manager components, which are responsible for managing all interactions between the VN and the End devices tier. Once its tasks are finished, the VN sends the result of the processing to the request issuer using the callback URL. For instance, when an actuation command arrives, the Actuation Manager sends it to the end device for execution in the physical environment, and the result is sent back to the request issuer.

The role of the Sensing Manager and the Actuation Manager is to abstract the complexity of dealing with highly heterogeneous devices (in both the Edge tier and the End devices tier), regarding both their physical capabilities/resources (vertical heterogeneity) and their services (horizontal heterogeneity) [32], as well as all communication processes. Depending on the requested datatype, they are used to retrieve data (raw or processed) or to perform actuation on the physical environment. Moreover, the Sensing Manager implements the Data processing sub-process, which abstracts the complexity of operations for getting sensing data from physical devices and of operations on data (persistence, update, deletion, and retrieval) in the historical databases maintained at the Edge tier.

A key concern in VN operation is optimizing the use of resources, mainly the energy of the end devices. When a request is for sensing data, the VN must always check whether data in the cache (memory) can be reused, thus avoiding unnecessary access to the End devices tier. Therefore, data freshness [12] is an essential requirement that a VN must verify when processing a request. Following Bouzeghoub [12], data freshness can be described as “The time elapsed from the last update to the source.” To validate data freshness, before accessing the Sensing Manager the VN compares the request time with the acquisition datetime of the cached data (freshness = request time − acquisition datetime). When the cached data are within the freshness threshold (request.param.fr ≥ freshness), the VN sends the data from the cache to the application. Otherwise, the VN interacts with the Sensing Manager to get fresh data before forwarding them to the application. Finally, the VN invokes the Collaboration Manager to share the new data with its neighboring VNs, thereby preventing other VNs from unnecessarily accessing the End devices tier to get the same data. The criteria for defining the neighborhood of VNs are given in Section 3.3.
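The freshness test and the cache-or-sensor decision above can be sketched as follows. This assumes timestamps in epoch milliseconds (matching fr, which the text gives in milliseconds); `read_sensor` and `share_with_neighbors` are hypothetical callables standing in for the Sensing Manager and the Collaboration Manager.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Reading:
    value: float
    acquired_ms: int  # acquisition datetime as epoch milliseconds (assumption)


def is_fresh(request_time_ms: int, reading: Reading, fr_ms: int) -> bool:
    """freshness = request time - acquisition datetime; valid when fr >= freshness."""
    freshness = request_time_ms - reading.acquired_ms
    return fr_ms >= freshness


def serve_sensing_request(
    request_time_ms: int,
    fr_ms: int,
    cached: Optional[Reading],
    read_sensor: Callable[[], Reading],               # stands in for the Sensing Manager
    share_with_neighbors: Callable[[Reading], None],  # stands in for the Collaboration Manager
) -> Tuple[Reading, bool]:
    """Return (reading, served_from_cache)."""
    if cached is not None and is_fresh(request_time_ms, cached, fr_ms):
        return cached, True  # reuse cached data: no access to the End devices tier
    fresh = read_sensor()
    share_with_neighbors(fresh)  # push the new data to neighboring VNs
    return fresh, False
```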

3.2.2 The datatype descriptor

The datatype is a fundamental element of Virtual Node (VN) operations. Datatypes are specified by the Service Provider Manager (SPM) and stored in the Datatype descriptors repository. A datatype descriptor, or simply Descriptor, describes the elements used by the VN to get simple data, complex data, or the outcome of an actuation task. A Descriptor may be related to several VN instances, whereas each VN instance refers to exactly one Descriptor.

Per definition (1), each Descriptor (Figure 4a) is a tuple composed of the properties id, description, type, and element. The id is the unique identification. The description is a high-level description that helps Software Developers understand the type of data being provided; in this property, SPMs can include any technical information they deem relevant to Software Developers, such as sensor accuracy and measurement periodicity. All this extra information is obtained from the infrastructure components used to interact with the physical environment. The type denotes the type of information provided, and element is an array whose items are described further below. Figure 4b represents a datatype descriptor for a simple datatype.

The datatype descriptor identification (id) is a fundamental property that helps users identify the descriptor. Therefore, an efficient and user-friendly naming schema for datatypes is needed. However, no such naming mechanism has yet been built and standardized for the Edge Computing paradigm. For this reason, we envision creating the identification using the naming technique described by Shi et al. [65], which encompasses location (where), role (who), and data description (what). For instance, the id “UFRJ.UbicompLab.temperature” identifies the descriptor used by the VN to provide raw temperature data from the Ubicomp laboratory at our university, UFRJ. This friendly naming style allows users to easily find the datatype descriptors registered in the catalog using a search mechanism.

The type takes one of three predefined values: simple, complex, and actuation. The simple type means that the information provided is raw data (e.g., temperature), actuation denotes an actuation operation (e.g., turning a lamp on or off), and complex represents a workflow. For both the simple and actuation types, each element_i (i ≥ 1) represents a physical device used either to get sensing data (a sensor device) or to perform actuation (an actuator device), respectively. For the complex type, the array holds a single element (i = 1), which represents an instance of the workflow. The workflow specifies a data flow containing other datatypes (either simple or complex) that VN instances use to get data (either raw or processed) as input, perform some processing, and generate information as output. These tasks are similar to the processing performed by a Complex Event Processing (CEP) engine [19]; therefore, we envision using a CEP engine to execute the workflow. Nevertheless, the creation of the workflow is out of the scope of the current work.
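A minimal sketch of definition (1) with the per-type constraints on element, under our own assumptions: elements are plain string identifiers (device ids for simple and actuation, a workflow id for complex), and the class and enum names are hypothetical. The check encodes only what the text states: at least one device for simple and actuation, exactly one workflow instance for complex.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class DatatypeKind(Enum):
    SIMPLE = "simple"
    COMPLEX = "complex"
    ACTUATION = "actuation"


@dataclass
class Descriptor:
    """Descriptor = (id, description, type, element)."""
    id: str                      # e.g. "UFRJ.UbicompLab.temperature"
    description: str
    type: DatatypeKind
    element: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.type is DatatypeKind.COMPLEX:
            # element_1 is the single workflow instance
            if len(self.element) != 1:
                raise ValueError("complex descriptor needs exactly one workflow element")
        elif not self.element:
            # simple/actuation: element_i (i >= 1) are sensor or actuator devices
            raise ValueError(f"{self.type.value} descriptor needs at least one device")


temperature = Descriptor(
    id="UFRJ.UbicompLab.temperature",
    description="Raw temperature readings (hypothetical description)",
    type=DatatypeKind.SIMPLE,
    element=["ed1", "ed3"],  # hypothetical device identifiers
)
```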

3.2.3 The virtual nodes

In our virtualization model, the Virtual Node (VN) is the core computational unit. The model was designed to circumvent two drawbacks identified in the model adopted in Olympus [61]: the first concerns the design of the VN, and the second is the lack of collaboration between VNs. Furthermore, our model is simplified by combining the lightweight virtualization approach [47, 48] with the microservice pattern [42, 70], thereby defining VNs as “lightweight-nodes”. Each VN is provided as a microservice, since it is small, highly decoupled, and has a single responsibility. Moreover, the VN is packaged in lightweight images, which facilitates its distribution and management at the edge nodes.

The first drawback is tackled by simplifying the VN concept proposed by Olympus. Our VN is defined as an abstract class (Figure 5) providing common attributes and functionalities for designing predefined types of VNs for each provided datatype. A datatype can represent raw data, processed data (a complex event or the result of a data fusion procedure), or an actuation result. Accordingly, there is a VN to represent simple datatypes (SensingVN), a VN to represent actuation datatypes (ActuationVN), and a VN to represent complex datatypes (DatahandlingVN). They are described further below.
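The VN hierarchy can be sketched in Python with an abstract base class. This mirrors only the structure described in the text (a common base with SensingVN, ActuationVN, and DatahandlingVN subclasses); the attribute names, the `process`/`handle` methods, and the injected callables (`read_sensor`, `actuate`, `run_query`) are hypothetical and do not reproduce Figure 5.

```python
from abc import ABC, abstractmethod
from typing import Any, Callable, Dict, List


class VirtualNode(ABC):
    """Common attributes and behavior shared by all VN types."""

    def __init__(self, vn_id: str, datatype_id: str) -> None:
        self.vn_id = vn_id
        self.datatype_id = datatype_id
        self.data: Any = None
        self.neighbors: List[str] = []  # ids of neighboring VNs for data sharing

    @abstractmethod
    def process(self, param: Dict[str, Any]) -> Any:
        """Type-specific processing of a request."""

    def handle(self, param: Dict[str, Any], callback: Callable[[Any], None]) -> None:
        """Process the request and deliver the result through the callback."""
        callback(self.process(param))


class SensingVN(VirtualNode):
    def __init__(self, vn_id: str, datatype_id: str, read_sensor: Callable[[], float]) -> None:
        super().__init__(vn_id, datatype_id)
        self._read_sensor = read_sensor

    def process(self, param: Dict[str, Any]) -> float:
        self.data = self._read_sensor()  # raw data from the End devices tier
        return self.data


class ActuationVN(VirtualNode):
    def __init__(self, vn_id: str, datatype_id: str, actuate: Callable[[str], bool]) -> None:
        super().__init__(vn_id, datatype_id)
        self._actuate = actuate

    def process(self, param: Dict[str, Any]) -> Dict[str, str]:
        ok = self._actuate(param["command"])
        return {"command": param["command"], "status": "processed" if ok else "not processed"}


class DatahandlingVN(VirtualNode):
    def __init__(self, vn_id: str, datatype_id: str, run_query: Callable[[str], Any]) -> None:
        super().__init__(vn_id, datatype_id)
        self._run_query = run_query  # e.g. a call into a CEP engine

    def process(self, param: Dict[str, Any]) -> Any:
        self.data = self._run_query(param["complex"])
        return self.data
```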

The second drawback is addressed by extending the VN concept to allow collaboration among VNs for data sharing through the collaboration process.

Per definition (2), we describe each VN (Figure 6a) as a tuple composed of the properties ID, Datatype, Data, and Neighbors. ID is the virtual node identification; Datatype is a link to the datatype descriptor, defined as is_reference = R_a, where R_a = id is the unique Descriptor identifier; Data represents the resources provided by the VN; and Neighbors = {vn_1, …, vn_n} is the set of neighboring VNs connected to the VN that share the same data (values). Figure 6b represents a VN providing a simple temperature datatype.

The ID is a relevant property used to identify VN instances in the repository of each edge node. As with datatype identification, we need an efficient and user-friendly naming schema for VN identification that facilitates collaboration. Therefore, we describe the identification of the VN as a tuple VNID = (hostname, Descriptor(id)), where hostname is the unique label (assigned by the network administrator) identifying the edge node on the network, and Descriptor(id) denotes the identification of the datatype provided by the VN. Since the same datatype can be provided on different edge nodes, this decision makes it easier, during collaboration, to identify the edge node on which a VN is executing. Taking the earlier datatype identification “UFRJ.UbicompLab.temperature” as an example, if our system provides this datatype on edge nodes EN1 and EN2, the corresponding IDs are “EN1.UFRJ.UbicompLab.temperature” and “EN2.UFRJ.UbicompLab.temperature”.
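A sketch of composing and splitting VN identifiers as described above. It assumes hostnames are single labels without dots (as in EN1 and EN2), so that the first dot separates the hostname from the descriptor id; the function names are hypothetical.

```python
from typing import Iterable, List, Tuple


def make_vn_id(hostname: str, descriptor_id: str) -> str:
    """Compose VNID = (hostname, Descriptor(id)) as 'hostname.descriptor_id'."""
    if not hostname or "." in hostname:
        raise ValueError("hostname must be a single non-empty label")
    return f"{hostname}.{descriptor_id}"


def split_vn_id(vn_id: str) -> Tuple[str, str]:
    """Recover (hostname, descriptor_id) from a VNID."""
    hostname, _, descriptor_id = vn_id.partition(".")
    return hostname, descriptor_id


def hosts_providing(vn_ids: Iterable[str], descriptor_id: str) -> List[str]:
    """Edge nodes running a VN for the given datatype, useful during collaboration."""
    return sorted(h for h, d in map(split_vn_id, vn_ids) if d == descriptor_id)
```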

The resource provided by the VN (Data property) can be raw data (e.g., temperature, humidity, luminosity, presence) or data processed by a CEP engine, such as the description of an event of interest (for instance, fire detection). The resource can also be information about the result of an actuation command (actuation type). Moreover, the acquisition datetime records the date and time at which the data were acquired. Hereafter, we describe the predefined types of VNs.

The VN of the type Sensing represents the simple datatype and provides a stream of raw data sensed by one or many physical nodes. This VN has a set of properties p : p = (simple, fr, sr, callback). The simple property denotes the information to process, fr is the data freshness threshold in milliseconds, sr is the data sampling rate, and callback is an operation invoked by the VN to deliver data to the request issuer whenever it finishes processing. The stream of raw data is retrieved either from historical databases maintained at the Edge tier or through a direct connection with the physical nodes at the sensor tier; whether the latter is used depends on the value of the data freshness property.

The VN of the type Actuation provides actuation capabilities over the physical environment and has a set of properties p : p = (command, callback). Command denotes the type of actuation function provided by the VN, and callback denotes a URL used by the VN to notify the request issuer when the operation finishes, along with its status (e.g., processed or not).

The VN of the type Data handling is used to provide value-added information to meet user requests by employing information fusion techniques [60]. This is done by executing pre-defined queries on sensing data using a Complex Event Processing (CEP) engine. The VN represents the complex datatype and has a set of properties p : p = (complex, callback). Complex represents the query to be executed, and callback is used by the VN to notify the request issuer when the CEP has completed its operation along with the query response (data stream).

In this Section, we presented the virtualization model, its main components, and the relationship between them. Moreover, we described the Virtual Node, the application request and the Datatype descriptor. In the next Section, we describe the collaboration process.

3.3 Collaboration process

We designed a novel flat P2P collaboration process that is entirely distributed at the Edge tier. This process is used in two activities of the CoT infrastructure operation. First, it supports data sharing, which allows a VN to actively share its fresh data with neighboring VNs. Second, it supports distributed resource management, which gives each edge node the decision-making capability to engage neighboring edge nodes to allocate or provision VNs whenever necessary.

Our approach uses the flat P2P model rather than the hierarchical model [35] to overcome a drawback of hierarchical models, namely their heavy dependence on a master node (or controller node), which is a single point of failure. We also avoid the communication overhead of creating and maintaining the hierarchy. Therefore, our VNs and resource management components are operationally independent of each other, i.e., each edge node has its own resource management to allocate or provision one or many VNs. Under our approach, edge nodes are homogeneous in their functionalities, even though they are heterogeneous in their capabilities.

A challenge of our approach is organizing the edge nodes into neighborhoods to enable the collaboration process. We therefore define the neighborhood concept using (i) the closeness between edge nodes and (ii) the semantics of the data they provide. Geographic closeness takes advantage of the location-aware capability of edge nodes and helps decrease the latency and bandwidth consumed between them. Data semantics determine which data VNs can share with each other, reducing accesses and data transmissions between end devices and edge nodes, thereby saving energy and bandwidth. Thus, we need techniques to identify which edge nodes can be neighbors and which data can be shared.

For closeness, we adopted Weighted Voronoi Diagrams (WVD) [36] to divide a geographic area (the Edge network) into regions that promote collaboration among edge nodes. We chose WVD to build the neighborhood because it is widely used in GIS (Geographic Information Systems), sensor networks, and wireless networks for making placement decisions. To use the WVD algorithm, we need to provide the geographic locations of the edge nodes on the map; in WVD, each such location is called a “site.” Moreover, the algorithm allows a weight to be set for each site, a value that determines the (weighted) distance between sites, for instance, between site A and site B. Since in our approach each edge node belongs to the Edge network and is connected to the underlying network of end devices, our problem P is how to divide the Edge network into regions of edge nodes to establish the neighborhood. Figure 7 illustrates our collaboration process.

To better understand its operation, we detail how the essential elements are distributed at both the End devices tier and the Edge tier, and how the neighborhood is defined. The specifications of the Edge and End devices tiers regarding their elements are the same as those presented in Section 3.1. In our example, the End devices tier encompasses the end devices ed1, ed2, ed3, ed4, and ed5, and the Edge tier encompasses four edge nodes en1, en2, en3, and en4. Each edge node en_i is connected to one or many end devices: en1 is connected to end devices ed1, ed2, and ed3; en2 to ed2 and ed3; en3 to ed3 and ed4; and en4 to ed5. Moreover, each edge node en_i can host and run one or many virtual nodes VN = {vn_1, vn_2, …, vn_n}. Each vn_i can provide sensing data obtained from, or perform actuation on, one or many end devices. For instance, in en1, VN1 is connected to end devices ed1 and ed3, whereas VN2 is connected to end devices ed1 and ed2.

In this work, we provide a process to support the Service Provider Manager (SPM) activities regarding the setup of the collaboration among edge nodes. The setup encompasses two main steps: (i) dividing the End device tier into areas and positioning the edge nodes inside these areas, (ii) building the neighborhoods. The activity diagram in Figure 8 illustrates the process.

The SPM executes the first step when new end devices and/or edge nodes are to be integrated into the CoT infrastructure. In this step, the SPM determines the best position for each edge node (at the Edge tier) with respect to the end devices. Initially, we assume that the best location for each edge node is the one closest to the end devices it will be connected to, thereby minimizing the communication latency between them. To achieve this goal, the SPM obtains the position of each end device at the End devices tier (EdT). Next, the WVD algorithm is invoked to divide the EdT into areas A = {a_1, a_2, …, a_n}, using the end device locations as sites. Each area a_i ∈ A can contain one or many edge nodes. Lastly, the SPM places each edge node in the areas and generates a list of edge node positions. Figure 9a presents the WVD with the edge nodes positioned. In our example, we placed en1 on the boundary of areas a1, a2, and a3 to enable its connection to end devices ed1, ed2, and ed3; en2 on the boundary of areas a2 and a3 to enable its connection to ed2 and ed3; en3 on the boundary of areas a3 and a4 to enable its connection to ed3 and ed4; and en4 in area a5 to enable its connection to ed5. Therefore, the position of each edge node accounts not only for distance but also for the type of data provided by the end device(s).

The second step of the setup process can be executed independently of the first. The SPM uses this step to determine the neighborhoods. Upon getting the location of each edge node at the Edge tier (ET), the SPM invokes the WVD algorithm to divide the ET into regions R = {r_1, r_2, …, r_n}. The edge node locations are used as sites, and each site has weight(s) determined by a distance D, where D = d_lm is the distance between en_l and en_m. Each region r_i ∈ R contains exactly one edge node. Next, for each edge node en_i, the border of its region is checked against the other edge nodes, and the edge nodes that border en_i are included in its list of neighboring nodes. For instance, Figure 9b illustrates the ET divided into four regions by the WVD algorithm, where the borders between regions define the neighborhoods. Thus, en1 has en2 and en3 as neighbors; en2 has en1 and en3; en3 has en1, en2, and en4; and en4 has only en3. After the neighborhoods are defined, a configuration file listing the neighboring nodes of each edge node is generated, and the setup process finishes.
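The neighborhood step can be approximated on a raster, which is enough to illustrate the idea. This is not the WVD algorithm of [36]: it assumes a multiplicatively weighted Voronoi diagram (each point belongs to the site minimizing distance divided by weight), samples grid cell centers instead of computing exact region boundaries, and treats two sites as neighbors when cells they own share an edge. The site coordinates below are hypothetical, chosen so that the result matches the neighborhoods stated for Figure 9b; borders thinner than one cell can be missed.

```python
import math
from itertools import product
from typing import Dict, List, Set, Tuple

Point = Tuple[float, float]


def weighted_voronoi_neighbors(
    sites: Dict[str, Point],
    weights: Dict[str, float],
    width: float,
    height: float,
    cells: int = 100,
) -> Dict[str, Set[str]]:
    """Approximate edge node neighborhoods from a multiplicatively weighted Voronoi diagram.

    Each grid cell center is owned by the site minimizing distance / weight; two sites
    are neighbors when cells they own share an edge.
    """
    dx, dy = width / cells, height / cells
    owner: List[List[str]] = [[""] * cells for _ in range(cells)]
    for i, j in product(range(cells), range(cells)):
        center = ((i + 0.5) * dx, (j + 0.5) * dy)
        owner[i][j] = min(sites, key=lambda s: math.dist(center, sites[s]) / weights[s])

    neighbors: Dict[str, Set[str]] = {s: set() for s in sites}
    for i, j in product(range(cells), range(cells)):
        for ni, nj in ((i + 1, j), (i, j + 1)):  # right and upper adjacent cells
            if ni < cells and nj < cells and owner[ni][nj] != owner[i][j]:
                neighbors[owner[i][j]].add(owner[ni][nj])
                neighbors[owner[ni][nj]].add(owner[i][j])
    return neighbors


# Hypothetical site coordinates in a 10 x 10 area, equal weights.
sites = {"en1": (2.0, 8.0), "en2": (8.0, 8.0), "en3": (5.0, 5.0), "en4": (5.0, 0.0)}
neighborhoods = weighted_voronoi_neighbors(sites, {s: 1.0 for s in sites}, 10.0, 10.0)
```

With these coordinates, en1 borders en2 and en3, en2 borders en1 and en3, en3 borders all three others, and en4 borders only en3. Each set could then be written to the per-node configuration file.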

From then on, virtual nodes (VNs) can collaborate with each other for data sharing through a dataflow approach. In the dataflow programming model, the application is represented as a graph [32] whose nodes can be (i) data producers, (ii) data consumers, or (iii) processing units (intermediaries). A producer node only produces outputs and represents the start of the dataflow. A consumer node only consumes inputs provided by other nodes and represents the end of the flow, whereas processing units process inputs and produce outputs. Hence, in the context of the dataflow model, our VN can be classified as a processing unit, since it can receive sensed data from, and provide sensed data to, its neighboring VNs.

In addition to the approaches and techniques mentioned above, we also need to establish which data a VN can share with its neighbors, thereby defining the semantic collaboration. Data sharing may be either total or partial. In total sharing, the VN sends all its data to the neighboring VNs. In partial sharing, the VN sends only the sensing data related to the end device(s) (e.g., a sensor) that are also connected to the neighboring VNs. For instance, in the model of Figure 7, VN2 of en1 is connected to end devices ed1 and ed2, whereas VN2 of en2 is connected to ed2. Thus, VN2 of en1 sends only the sensing data (S2) related to ed2 to the neighboring VN2 of en2, and vice versa. In this way, our model avoids communication overhead by sending only the necessary data between VNs.
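Partial sharing reduces to filtering readings by the end devices a neighboring VN is also connected to. The sketch below assumes readings are (end device id, value) pairs and that each VN knows which devices its neighbors are connected to; the function name and the "en2/VN2" keys are hypothetical, and the data reproduce the Figure 7 example of VN2 on en1 and en2.

```python
from typing import Dict, List, Set, Tuple

Reading = Tuple[str, float]  # (end device id, sensed value)


def build_payloads(
    readings: List[Reading],
    neighbor_devices: Dict[str, Set[str]],
    partial: bool = True,
) -> Dict[str, List[Reading]]:
    """Data each neighboring VN receives under total or partial sharing."""
    payloads: Dict[str, List[Reading]] = {}
    for neighbor, devices in neighbor_devices.items():
        if partial:
            # only readings from end devices the neighbor is also connected to
            selected = [r for r in readings if r[0] in devices]
        else:
            selected = list(readings)
        if selected:  # skip neighbors with nothing to receive
            payloads[neighbor] = selected
    return payloads


# VN2 of en1 reads ed1 and ed2; VN2 of en2 is connected only to ed2.
readings = [("ed1", 21.0), ("ed2", 22.5)]
payloads = build_payloads(readings, {"en2/VN2": {"ed2"}})  # {"en2/VN2": [("ed2", 22.5)]}
```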

Furthermore, our collaboration process enabled the design of a novel model of distributed resource management (RM). This model takes advantage of flat P2P collaboration so that each edge node has the decision-making capability to allocate the best resource to meet an application request. Whenever the edge node does not have enough resources to instantiate the VN, the RM identifies a neighboring node with available resources to provision a new VN. If no neighboring edge node can be selected, the application request is refused.

In this Section, we presented our collaboration process. It focuses on meeting the requests of emerging IoT applications besides providing a model where both the virtual nodes and the resource management components are independent of each other from an operational point of view. To achieve the collaboration goal, initially, a process was presented to create the neighborhood of edge nodes. Next, we described the main features of the data sharing between virtual nodes and the decentralized resource management between edge nodes. In the next Section, we present heuristic algorithms implementing our distributed resource management and P2P collaboration with data sharing.

4 Heuristic algorithms for the distributed resource management and P2P collaboration

In this section, we present four heuristic algorithms implementing our distributed resource management and flat P2P collaboration processes. We use a heuristic method because our problem involves placement decisions about which edge node will meet each application request, in order to maximize utility with respect to response time (latency) and bandwidth consumption. According to Xu et al. [75], this class of problem is NP-hard, and heuristic algorithms are often used to solve it [41, 44]. The following premises drive the development of the algorithms: (i) each edge node has its own datatype repository and all components of the architecture deployed; (ii) the information about the neighbors of each node is already available, having been generated by the second step of the setup process (Figure 8); (iii) during collaboration, the actuation perimeter should be limited to the neighbors of the node at which the request arrived; (iv) each application request asks for a single datatype; (v) requests arriving in the system are served immediately and in order of arrival; (vi) the resource availability of the edge node should always be checked before provisioning a new virtual node; (vii) the resource availability of the VN should always be checked before meeting a request; (viii) the infrastructure should try to scale up the resources of the VN to meet more requests before provisioning another VN; and (ix) the VN identification (VNid) is unique and should be composed of the datatype id and the edge node hostname to facilitate the collaboration process.

Our resource management is based on the algorithms proposed by Wang et al. [72] and Hong et al. [35], with the following modifications. First, we changed the model from hierarchical to flat to support the collaboration described in Section 3.3. Second, we enhanced the resource management with P2P collaboration (Section 4.3). The third modification concerns how edge nodes handle application requests. Wang et al. [72] handle requests by offloading workloads from cloud servers to run on the edge nodes, whereas in our approach the infrastructure meets application requests by allocating virtual nodes running at the Edge tier. This saves bandwidth and avoids network overhead, thereby favoring the execution of emerging IoT applications that are, for instance, latency-sensitive.

A notable feature of our algorithms is that they work on demand. As application requests arrive at any edge node, the Resource Allocator (RA) component (Section 4.1) receives them and allocates virtual nodes (VNs) to meet them. The Resource Provisioner (Section 4.2) provisions a new VN instance only when requested by the RA. Since edge nodes are resource-constrained devices, this strategy avoids unnecessary VN provisioning, thereby saving resources and avoiding interference with the original (primary) functions of the edge nodes, for instance, routing when the edge node is a smart router. Furthermore, we limit the actuation perimeter of our algorithm to the neighbors of the node at which the request arrived; therefore, in this P2P collaboration, requests are forwarded at most one hop from the entry edge node. To aid understanding of our algorithms, we present two UML activity diagrams describing their primary activities and the relationships between them: Figure 10 represents the distributed resource management logic (Sections 4.1 and 4.2), and Figure 11 the P2P collaboration (Sections 4.3 and 4.4). They are explained in detail in the next sections.

4.1 Resource allocation

Resource allocation is the process of executing the tasks that allocate the resources necessary to meet the workloads of one or several applications [22]. In our virtualization model, the Resource Allocator (RA) component performs this process. Following the virtualization approach, its main tasks are finding and allocating virtual nodes (VNs) to meet application requests. Moreover, the RA can invoke the Resource Provisioner component to provision new VNs whenever necessary. Algorithm 1 implements all the logical steps of our RA. The RA listens for incoming requests from applications (lines 21–30). Upon receiving a message, the RA verifies its type, and if it is APP_REQUEST, it executes the handleRequest procedure to meet it (line 1).

The handleRequest procedure searches the instances repository for a Virtual Node (VN) matching the received request (line 3). If no matching VN exists, the RA invokes the Resource Provisioner (RP) to provision a new VN instance (lines 4–5). If the selected VN's resources are busy handling other requests, the RP is also invoked (lines 7–10), either to increase the VN resources (e.g., memory) or to provide another VN. Once a VN instance is chosen (or provisioned), the RA forwards the request to that VN for processing (lines 12–16). Note that the catch command (line 17) captures any processing error, whereas the throw exception (line 18) raises the error messages; these commands serve the same purpose in all algorithms.
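The control flow of handleRequest can be sketched as follows. This is our simplified reading of the description, not Algorithm 1 itself: `SimpleVN` is a hypothetical stand-in whose busy state is just a request counter (request completion is not modeled), and the provisioner is injected as a callable that, like the RP, caches new instances and returns None when no VN can be obtained.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class SimpleVN:
    """Hypothetical VN stand-in: busy once it holds `capacity` active requests."""
    datatype_id: str
    capacity: int = 1
    active: int = 0

    def is_busy(self) -> bool:
        return self.active >= self.capacity

    def process(self, request: dict) -> str:
        self.active += 1  # request completion is not modeled
        return f"{self.datatype_id} -> {request['callback']}"


# provision(current_vn_or_None, request) -> VN or None, mirroring the RP contract
Provisioner = Callable[[Optional[SimpleVN], dict], Optional[SimpleVN]]


class ResourceAllocator:
    def __init__(self, provision: Provisioner) -> None:
        self.instances: Dict[str, SimpleVN] = {}  # cache of instances, filled by the RP
        self.provision = provision

    def handle_request(self, request: dict) -> str:
        vn = self.instances.get(request["datatype"])  # search for a matching VN
        if vn is None or vn.is_busy():
            vn = self.provision(vn, request)  # provision a new VN or scale up this one
        if vn is None:
            raise RuntimeError("request refused: no virtual node could be allocated")
        return vn.process(request)  # forward the request to the VN
```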

4.2 Resource provisioning

In the context of this paper, resource provisioning is the process responsible for provisioning new Virtual Nodes (VNs) or granting existing VNs extra resources to meet newly received requests. The Resource Provisioner (RP) is the component in charge of this process. Algorithm 2 implements our RP logic. The algorithm starts when the Resource Allocator invokes the RP through the provisioning function, passing two parameters, CurrentVirtualNode and Request (line 1).

Initially, the RP invokes the EdgeNodeManager component to verify whether the edge node has the resources to host a new VN instance (line 2). EdgeNodeManager is the component that provides a set of methods abstracting access to physical information about the edge nodes, in addition to controlling the VN life cycle. When the edge node has enough resources available (line 3), the next step is either deploying a new VN container or reconfiguring (scaling up) an existing one. If the CurrentVirtualNode parameter is null (line 4), the RP instantiates a new VN by invoking the containerDeploy method of the EdgeNodeManager component (line 5). Next, the new VN is cached and registered for data sharing collaboration (lines 6 and 8), and finally the VN instance is returned (line 10). When the CurrentVirtualNode parameter is not null, the scale-up method of the EdgeNodeManager component is invoked (line 12) to grant the VN container extra resources, improving its performance so that it can meet more requests. If the scale-up succeeds, the current virtual node instance is returned (lines 13–14). Otherwise, if the scale-up fails or the container does not have enough resources, the RP forwards the request to the P2P collaboration to find a neighboring edge node capable of meeting it (line 20). The RP always returns null when a VN instance cannot be created (line 22).
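A sketch of the provisioning branches, assuming memory is the only resource checked and using fixed, hypothetical sizes for a new container and for a scale-up step. `EdgeNodeState`, `VNContainer`, and the injected `register_for_sharing`/`send_to_neighbor` callables are illustrative stand-ins for the EdgeNodeManager and the P2P collaboration, not their actual interfaces.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class EdgeNodeState:
    free_memory_mb: int  # stands in for EdgeNodeManager resource queries


@dataclass
class VNContainer:
    datatype_id: str
    memory_mb: int


class ResourceProvisioner:
    def __init__(self, node: EdgeNodeState, vn_memory_mb: int, scale_step_mb: int,
                 cache: Dict[str, VNContainer],
                 register_for_sharing: Callable[[VNContainer], None],
                 send_to_neighbor: Callable[[dict], None]) -> None:
        self.node = node
        self.vn_memory_mb = vn_memory_mb
        self.scale_step_mb = scale_step_mb
        self.cache = cache
        self.register_for_sharing = register_for_sharing
        self.send_to_neighbor = send_to_neighbor

    def provisioning(self, current: Optional[VNContainer], request: dict) -> Optional[VNContainer]:
        if current is None:
            if self.node.free_memory_mb >= self.vn_memory_mb:
                # deploy a new VN container, cache it, and register it for data sharing
                self.node.free_memory_mb -= self.vn_memory_mb
                vn = VNContainer(request["datatype"], self.vn_memory_mb)
                self.cache[vn.datatype_id] = vn
                self.register_for_sharing(vn)
                return vn
        elif self.node.free_memory_mb >= self.scale_step_mb:
            # scale up the existing container with extra memory
            self.node.free_memory_mb -= self.scale_step_mb
            current.memory_mb += self.scale_step_mb
            return current
        # not enough local resources: hand the request to P2P collaboration
        self.send_to_neighbor(request)
        return None
```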

4.3 P2P collaboration

The P2P collaboration component implements the essential services that enable the collaboration process at the Edge tier. These services include (i) forwarding requests to be served by a neighboring edge node, (ii) determining the best neighboring edge node to meet a request, and (iii) registering a VN to enable data sharing collaboration. Algorithm 3 implements the logic of these services. During initialization, the EdgeNodeManager component is called to get the edge node's neighborhood and the resources needed to deploy a VN. Next, the run method (line 56) listens for incoming events sent by the Resource Provisioner (lines 60 and 63) and the EdgeNodeManager (line 66).

Upon receiving an event, the algorithm checks its type. When the event is SEND_TO_NEIGHBOR_NODE, the sendToNeighborNode method (line 1) is executed. This method selects a neighboring node through the private method getNeighborNode (line 3) and then forwards the Request to the neighboring Resource Allocator (line 4). The getNeighborNode method (lines 9–23) returns the neighboring node with the best resources for hosting and running the virtual node. First, it looks up the datatype descriptor in the repository by invoking the Catalog component with the id property of the Request object (line 10). Next, it selects the candidate neighboring nodes, i.e., the nodes that provide the requested data (lines 12–17).
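The candidate selection in getNeighborNode can be sketched as a catalog lookup followed by a filter. Here the catalog is modeled as a plain dictionary from request id to datatype name, and `best_resource` is passed in as a function; these representations, along with `NoNeighborError`, are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Request:
    id: str  # id used to look up the datatype descriptor in the catalog


@dataclass
class Neighbor:
    name: str
    datatypes: set  # datatypes this neighboring edge node provides


class NoNeighborError(Exception):
    """Hypothetical exception raised when no neighbor can serve a request."""


def get_neighbor_node(request, catalog, neighborhood, best_resource):
    # Look up the datatype descriptor (hypothetically: a datatype name)
    descriptor = catalog.get(request.id)
    if descriptor is None:
        raise NoNeighborError(f"unknown datatype for request {request.id}")
    # Candidates are the neighbors that provide the requested data
    candidates = [n for n in neighborhood if descriptor in n.datatypes]
    best = best_resource(candidates)
    if best is None:
        raise NoNeighborError("no neighbor can serve the request")
    return best
```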

Next, the private method bestResource is invoked to find the best neighbor among the candidate edge nodes in the neighborhood (line 18). If no such neighbor exists, an exception is thrown (lines 19–21). When bestResource runs (line 24), it first obtains the amount of memory required to process the request (line 28). Next, for every candidate node, its available physical resources (e.g., memory, CPU) are obtained through the monitor component (line 31), and the network latency to the node is measured (line 33). The memory information is used to verify whether the candidate node has enough available memory to process the request (line 34). If it does, the node is stored in the list of best candidate nodes (lines 34–36); otherwise, it is discarded. The list of candidate edge nodes is then sorted by number of available CPUs, free memory, and latency (lower is better). Finally, the node at the top of the list, i.e., the one with the best resources, is selected (line 42).
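The filtering and ranking steps of bestResource can be sketched as below. The text lists the sorting criteria but not how they are combined, so the lexicographic order used here (more CPUs first, then more free memory, then lower latency) is an assumption, as are the `NodeStatus` type and the `monitor` callback.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class NodeStatus:
    name: str
    free_memory_mb: int
    free_cpus: int
    latency_ms: float


def best_resource(candidates: List[str], required_memory_mb: int,
                  monitor: Callable[[str], NodeStatus]) -> Optional[NodeStatus]:
    eligible = []
    for node in candidates:
        status = monitor(node)  # hypothetical monitor: resources plus measured latency
        if status.free_memory_mb >= required_memory_mb:
            eligible.append(status)  # enough memory: keep as a candidate
    # Assumed lexicographic ranking: more CPUs, then more memory, then lower latency
    eligible.sort(key=lambda s: (-s.free_cpus, -s.free_memory_mb, s.latency_ms))
    return eligible[0] if eligible else None
```

A weighted score would be an equally plausible reading of the text; the lexicographic key simply makes the priority among the criteria explicit.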

Whenever the received event is REGISTER_VN_TO_DATASHARING, the registerVNtoDataSharing method is executed (line 44). This method registers a new virtual node instance to participate in the data sharing process. First, the VN is registered within its own edge node (lines 45–48). Next, it is registered on the neighboring nodes that can collaborate (lines 49–54), i.e., the neighboring edge nodes running Virtual Nodes that provide the same datatype elements. To check whether a VN can collaborate, the private method VerifyIntersection checks whether the elements of NewVirtualNode and of an existing VirtualNode intersect.
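The registration step can be sketched as a set intersection over the elements each VN provides. In this illustration, registration is modeled as a symmetric `peers` link, and the intersection check is applied to local VNs as well as neighboring ones; both choices are assumptions, not details stated in the text.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class VirtualNode:
    vn_id: str
    edge_node: str
    elements: Set[str]  # datatype elements the VN provides
    peers: Set[str] = field(default_factory=set)  # hypothetical data sharing links


def verify_intersection(new_vn: VirtualNode, existing_vn: VirtualNode) -> bool:
    # The VNs can collaborate if they share at least one datatype element
    return bool(new_vn.elements & existing_vn.elements)


def register_vn_to_data_sharing(new_vn, local_vns, neighbor_vns) -> None:
    # First the VNs on the same edge node, then those on neighboring edge nodes
    for vn in list(local_vns) + list(neighbor_vns):
        if vn.vn_id != new_vn.vn_id and verify_intersection(new_vn, vn):
            new_vn.peers.add(vn.vn_id)
            vn.peers.add(new_vn.vn_id)
```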

Finally, when the received event is RELOAD_CONFIG, the settings related to the neighborhood and the required resources are reloaded (lines 67 and 68). This event is essential when new information about the neighborhood becomes available (insertion or removal of neighbor nodes), whether through a manual or a dynamic process.

4.4 P2P data sharing

The P2P Data Sharing component enables a Virtual Node (VN) to share data with its neighboring VNs. Algorithm 4 implements the logic of this service.

The VN calls the shareData method to share fresh data obtained directly from (physical) sensor devices or received from other VNs (lines 2–15). Data sharing occurs only among elements of the same type (line 4), in accordance with the data sharing constraint presented in Section 3.3. When this constraint holds and the neighboring VN belongs to the same edge node, the data are shared locally (lines 6–7); otherwise, the data are sent to the VN on the neighboring edge node (line 10).
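The shareData logic can be sketched as a type filter followed by a local-or-remote delivery decision. The `VN` type, the in-memory `inbox` used for local delivery, and the `send_remote` callback standing in for the network transport are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class VN:
    vn_id: str
    edge_node: str
    datatype: str
    inbox: list = field(default_factory=list)  # hypothetical local delivery buffer


def share_data(source: VN, neighbors, value, send_remote) -> None:
    for vn in neighbors:
        if vn.datatype != source.datatype:
            continue  # data sharing constraint: same-type elements only
        if vn.edge_node == source.edge_node:
            vn.inbox.append(value)  # same edge node: share locally
        else:
            send_remote(vn, value)  # hypothetical transport to the neighboring edge node
```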

In this section, we presented a set of algorithms that implement our distributed resource management and the flat P2P collaboration. First, we detailed a set of premises used to drive the implementation. Next, we presented two algorithms from the literature that served as the basis for our solution, along with the adaptations they required to support our model. Finally, we presented the four main algorithms and explained their operation in detail.

5 Evaluation

We used the Goal Question Metric (GQM) methodology to design our evaluation. GQM [7] is a three-level hierarchical approach based on the premise that software metrics should be derived from goals and questions. First, the goals are defined. A goal represents the conceptual level and states the measurement purpose (what object and why), the perspective (what aspect and who), and the environmental characteristics (where). Second, each goal is refined into several questions. A question represents the operational level and defines the object of measurement (product, process, or resource). Finally, the metrics represent the quantitative level: the information that must be collected to answer one or more questions. According to Basili et al. [7], metrics can be either objective or subjective. Objective metrics depend only on the object being measured, not on the point of view of whoever measures it. Subjective metrics depend both on the object being measured and on the point of view of whoever measures it.

In Section 5.1, we describe the goals, questions, and metrics defined for our evaluation. In Section 5.2, we describe the overall parameters of the scenarios considered in our experiments. In Section 5.3, we describe the three experiments performed to answer the questions defined with the GQM methodology and report the answer to each question.

5.1 Goals, questions and metrics

The goals, questions, and derived metrics used in our work are as follows.

Goal G1 is to analyze LW-CoEdge, for the purpose of evaluating its resource management algorithms, with respect to the performance in meeting application requests, from the viewpoint of the end-user.

Goal G2 is to analyze LW-CoEdge, for the purpose of evaluating its collaboration mechanisms, with respect to the impact of meeting a request through a neighboring edge node on bandwidth, communication latency, and energy savings, from the viewpoints of both the end-user and the underlying computational infrastructure.

From these two goals, we derive four research questions (Q1, Q2, Q3, and Q4), described in Table 2. In all evaluations, the benchmark for comparison is a version of the resource management process without collaboration among edge nodes.

Table 3 shows the set of metrics collected from our CoT system, which we used to answer the research questions. To answer question Q1, we classified application requests into two groups: (a) requests met and (b) requests not met. Our system considers a request met whenever the CoT system provides the required datatype and satisfies the Response Time Threshold (RTTh) desired by the application. Note that the system delivers the results to the request issuer even if the RTTh is not respected. The RTTh is an important QoS parameter and depends on the type of application. PubNub Staff [58] discuss real-time applications and their desirable response times. Yi et al. [77] argue that augmented reality and real-time video analytics are two examples of applications in which a response time above tens of milliseconds can degrade the user experience. Network latency also affects the response time of applications and therefore needs to be measured.

To assess whether the received requests are met, we used the metrics REQ_MET (M1) and REQ_NOT_MET (M2), which measure how effectively the resource management (RM) algorithms meet the application requests arriving in the system. REQ_MET counts the requests that were properly met, while REQ_NOT_MET counts the requests that the RM algorithm was unable to meet during a monitoring period. Below, we present expressions (3) and (4), which are used to compute REQ_MET and REQ_NOT_MET.

Per expression (3), the metric TTS represents the total time spent to meet a given request. It is the sum of the time periods collected through the parameters TIME_REQ, TIME_SPENT_FW, and COMM_TIME. TIME_REQ denotes the time our algorithm requires to handle a request at the edge node where the application issued it. TIME_SPENT_FW measures the time elapsed from receiving the request to finding a neighbor node and forwarding the request to it. Lastly, COMM_TIME measures the communication time between the node that received the request and the neighboring edge node that serves it. The parameters TIME_SPENT_FW and COMM_TIME are collected only when the collaboration process is executed.
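Written out from the definitions above, expression (3) takes the following form. This is a reconstruction from the prose rather than a copy of the original typeset expression; treating the two forwarding terms as zero for locally served requests follows from the statement that they are collected only when collaboration runs.

```latex
\[
  \mathit{TTS} = \mathit{TIME\_REQ} + \mathit{TIME\_SPENT\_FW} + \mathit{COMM\_TIME},
  \qquad
  \mathit{TIME\_SPENT\_FW} = \mathit{COMM\_TIME} = 0 \text{ if the request is served locally.}
\]
```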

Expression (4) defines a function that determines whether a request has been met adequately (i.e., it is used to calculate REQ_MET and REQ_NOT_MET). The function receives as input the datatype (DType) and the response time threshold (RTTh) of the request, along with the measured TTS value. It returns 1 when the request has been met, i.e., if the required DType is provided in the system and TTS is less than or equal to the RTTh desired by the application. It returns 0 if the DType is not provided or TTS is greater than RTTh. Our CoT system considers a datatype provided when the virtual node responsible for processing it has enough memory and CPU resources and has the datatype descriptor configured. Otherwise, the datatype is classified as not provided, and the application request is forwarded to the collaboration mechanism, which, if active, checks whether a neighboring virtual node is available to process it.
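Expressions (3) and (4), and the resulting REQ_MET and REQ_NOT_MET counts, can be sketched as follows. The function names, the tuple layout of a request, the use of milliseconds, and the set of provided datatypes are assumptions for illustration; the numbers in the usage example are made up and are not experimental results.

```python
def tts(time_req, time_spent_fw=0.0, comm_time=0.0):
    """Expression (3) as described in the text; forwarding terms default to 0 for local service."""
    return time_req + time_spent_fw + comm_time


def met(dtype, rtth, tts_value, provided):
    """Expression (4): 1 if the datatype is provided and TTS <= RTTh, otherwise 0."""
    return 1 if dtype in provided and tts_value <= rtth else 0


def count_requests(requests, provided):
    """Return (REQ_MET, REQ_NOT_MET) for (dtype, rtth, tts) tuples from one monitoring period."""
    outcomes = [met(d, r, t, provided) for d, r, t in requests]
    return sum(outcomes), len(outcomes) - sum(outcomes)


# Illustrative usage (times in milliseconds, hypothetical values)
provided = {"temperature", "humidity"}
reqs = [
    ("temperature", 50.0, tts(20.0)),           # met locally
    ("humidity", 50.0, tts(20.0, 15.0, 25.0)),  # 60 ms > 50 ms: not met
    ("noise", 100.0, tts(10.0)),                # datatype not provided: not met
    ("humidity", 80.0, tts(20.0, 15.0, 25.0)),  # 60 ms <= 80 ms: met via a neighbor
]
print(count_requests(reqs, provided))
```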

Therefore, the aforementioned metrics help us assess how effectively our CoT system meets more requests using the proposed collaborative RM, compared with a system without collaboration among edge nodes.

To answer question Q2, we must assess whether the collaboration process helps decrease the data traffic between edge nodes and end devices (thus reducing bandwidth consumption in the CoT system). To do so, we used a set of metrics that evaluate the data traffic consumed and saved during the execution of the collaboration mechanisms. Network usage is a concern in this work because high consumption degrades overall system performance. Thus, we selected the metrics AVG_DT_EN (M3) and AVG_DT_ENED (M4) to assess data traffic. The metric AVG_DT_EN (M3), given by expression (5), monitors the entire data flow of the collaboration process, i.e., the message exchanges (request/response operations) between edge nodes. For example, a message exchange may represent an edge node sending an application request to a neighboring edge node for processing. The size of each message (including body and headers) is calculated and recorded in the system.

Per expression (5), the metric AVG_DT_EN is the sum of the data traffic generated to meet the application request and is collected through the parameters DT_REQREC_APP, DT_REQSENT_NB, DT_DTSHARING, and DT_CALLBACK. DT_REQREC_APP records the data traffic to receive an application request arriving in the system. DT_REQSENT_NB records the data traffic generated when an edge node selects a neighboring edge node and sends it the application request for processing. DT_DTSHARING records the data traffic of data sharing among virtual nodes. Lastly, DT_CALLBACK records the data traffic to send the answer to the request issuer.
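The per-request sum described for expression (5) can be sketched as below. The text defines the sum of the four parameters; averaging that sum over the recorded requests is an assumption suggested only by the AVG prefix, as is the use of kilobytes as the unit and the `TrafficRecord` type.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TrafficRecord:
    dt_reqrec_app: float  # KB to receive the application request
    dt_reqsent_nb: float  # KB to select a neighbor and forward the request
    dt_dtsharing: float   # KB exchanged for data sharing among VNs
    dt_callback: float    # KB to return the answer to the request issuer

    def total(self) -> float:
        # Sum of the four parameters, as described for expression (5)
        return self.dt_reqrec_app + self.dt_reqsent_nb + self.dt_dtsharing + self.dt_callback


def avg_dt_en(records) -> float:
    # Assumption: average of the per-request totals over the monitoring period
    return mean(r.total() for r in records)
```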

In addition to the traffic between edge nodes, we also assessed the data traffic between edge nodes and end devices, since this is another part of the CoT system that strongly influences its overall bandwidth consumption. As mentioned in Section 3.2, virtual nodes (VNs) can obtain data from historical databases maintained at the edge tier (local databases deployed on edge nodes), from a direct connection with the physical nodes at the end device tier, or from the in-memory cache of the VN. Each data source has a substantial impact on network traffic between the edge tier and the end device tier; for instance, the fewer accesses to physical sensors, the less traffic is generated. Although these data sources are also used when collaboration is disabled, it is essential to evaluate whether our collaboration, together with the proposed mechanism for data sharing among VNs, helps decrease the number of accesses to the end device tier and thus reduces data traffic. The metric used for this evaluation is described below.

The metric AVG_DT_ENED (M4), given by expression (6), assesses the data traffic generated by interactions between edge nodes and end devices to obtain sensing data or perform actuation tasks. As input to expression (6), we used the parameter R_ENDEV, which records the number of requests served with sensing data read directly from the end devices, and the parameter SDsize, which represents the size of the sensing data, in kilobytes (KB), handled in our CoT system (Table 4). Per expression (6), AVG_DT_ENED is obtained by multiplying R_ENDEV by SDsize.
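As a worked illustration of expression (6), the sketch below multiplies R_ENDEV by SDsize and compares two runs. The helper `traffic_saving_kb` and all numbers are hypothetical, chosen only to show the arithmetic; they are not measurements from the evaluation.

```python
def avg_dt_ened(r_endev: int, sd_size_kb: float) -> float:
    """Expression (6): requests served from end devices times the sensing-data size (KB)."""
    return r_endev * sd_size_kb


def traffic_saving_kb(r_endev_without: int, r_endev_with: int, sd_size_kb: float) -> float:
    """Hypothetical helper: end-device traffic avoided when collaboration is enabled."""
    return avg_dt_ened(r_endev_without, sd_size_kb) - avg_dt_ened(r_endev_with, sd_size_kb)


# Illustrative values: 1000 vs. 600 end-device reads of 2 KB each
print(avg_dt_ened(1000, 2.0), traffic_saving_kb(1000, 600, 2.0))
```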

Therefore, at the end of the collaboration process, the metrics AVG_DT_EN (M3) and AVG_DT_ENED (M4) help identify the traffic generated during that process. This allows us to quantify the overhead added to the network and to determine how effectively our CoT system reduces data traffic between edge nodes and end devices.

Furthermore, we selected the AVG_DT_TRAFFIC_KB (M7) metric to assess whether our system is cost-effective in terms of the network traffic generated to meet a request, with the collaboration process enabled and disabled. The metric is calculated using expression (7), which takes as input the previously mentioned AVG_DT_EN and REQ_MET metrics.