Abstract

Accurate prediction of river discharge is essential for the planning and management of water resources. This study proposes a novel hybrid method, HD-SKA, that integrates a hybrid of two decomposition techniques (termed HD) with support vector regression (SVR), K-nearest neighbor (KNN) and ARIMA models (together termed SKA). First, the proposed method uses local mean decomposition (LMD) to decompose the original river discharge series into sub-series. Next, ensemble empirical mode decomposition (EEMD) is employed to further decompose the LMD-based sub-series into intrinsic mode functions. The EEMD components are then used as inputs to the three data-driven models to predict river discharge. The predictions of all components are aggregated to obtain the results of the HD-SVR, HD-KNN and HD-ARIMA models, and the final prediction is the average of the predictions of these three models. The proposed method is illustrated using five rivers in the Indus Basin System. In the five case studies, six benchmark models were built for comparison with the proposed HD-SKA model. The results show that the HD-SKA model performs better than all other considered models. The Diebold-Mariano test confirms the superiority of the proposed HD-SKA model over the ARIMA, SVR, KNN, EEMD-ARIMA, EEMD-KNN, and EEMD-SVR models.

1 Introduction

The prediction of river discharge is essential for the planning and management of water resources. Hydrological prediction provides critical information on impending droughts, heatwaves and floods, whose delayed or inaccurate estimation can lead to devastation (Sehgal et al. 2014). River discharge prediction has gained attention as a means of handling extreme events resulting from climate change. River discharge has been studied in China (Wei et al. 2013), the USA (Meshram et al. 2019), Iran (Dehghani et al. 2021) and North America (Alizadeh et al. 2021). Precise river discharge estimation is therefore necessary to construct warning systems for water management that can deal with extreme events (Wang et al. 2018; Adnan et al. 2021).

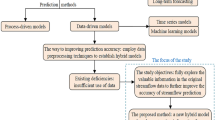

Data-driven models are used to predict hydrological variables such as inflow, outflow and discharge. These include both statistical and artificial intelligence (AI) models. Statistical models for time series include Moving Average (MA), Autoregressive (AR), ARMA and Autoregressive Integrated Moving Average (ARIMA) models, among others (Bayazit 2015; Fashae et al. 2019; Bonakdari et al. 2020; Musarat et al. 2021; Aghelpour et al. 2021). However, because these models are linear, they cannot capture temporal changes in the data with sufficient accuracy. Nowadays, AI models are widely used to model complex and non-linear time series data. For example, K-nearest Neighbor (KNN), Support Vector Regression (SVR) and Artificial Neural Network (ANN) are popular models in hydrological studies (Wu et al. 2008; Nikolic and Simonovic 2015; Poul et al. 2019; Sharghi et al. 2019; Riahi-Madvar et al. 2021). The drawback of AI models is that they do not account for noise and ignore the complex multi-scale structure of hydrological variables (Rezaie-Balf et al. 2019). Using a single model to predict river discharge is therefore challenging, and researchers in hydrology have introduced hybrid methods to improve prediction accuracy. This study aims to overcome the limitations of individual models and proposes a new hybrid method for the precise estimation of river discharge.

Many pre-processing techniques are integrated with data-driven models to create hybrid models for predicting hydrological variables. Some pre-processing methods such as Empirical Mode Decomposition (EMD), Local Mean Decomposition (LMD) and Ensemble EMD (EEMD) are combined with data-driven models to predict river discharge (Rezaie-Balf et al. 2019; Silva et al. 2021; Vidya and Janani 2021). In this study, the focus is on improving the prediction of river discharge by using hybrid pre-processing techniques.

In this study, a novel hybrid method is proposed by combining two pre-processing techniques with data-driven models. The two pre-processing techniques are LMD and EEMD (together abbreviated as HD), and the three models applied are SVR, KNN and ARIMA (together termed SKA). The proposed hybrid method uses LMD to decompose the original river discharge series into sub-series. Then, EEMD is applied to decompose the obtained sub-series into different components. The HD components are used as the inputs to the SVR, KNN and ARIMA models. The predictions of these components are aggregated to give the HD-SVR, HD-KNN and HD-ARIMA predictions, respectively. The final forecast of the HD-SKA model is obtained by taking the average of these three predictions. The effectiveness of the proposed hybrid method is illustrated on the discharge data of five rivers of the Indus Basin System, Pakistan: Kabul River, Kanshi River, Kunhar River, Jhelum River (Domel station) and Jhelum River (Chattar Kallas station). The performance of the HD-SKA method is assessed on these five data sets against six benchmark models. The novelty of the HD-SKA method lies in combining hybrid decomposition with data-driven models, which decomposes the discharge series efficiently and captures its complex features with higher prediction accuracy.

2 Methodologies

2.1 Local Mean Decomposition (LMD)

Smith (2005) introduced LMD as a tool for the time–frequency analysis of electroencephalogram signals. LMD is a self-adaptive time–frequency approach that is useful in capturing non-linear features of data (Liu and Han 2014; Huynh et al. 2021). LMD decomposes a signal into several product functions (\(PFs\)) that have a physical meaning. The time–frequency distribution of the signal is obtained by assembling the instantaneous frequency and instantaneous amplitude of all the obtained \(PFs\). Given the original signal \(y(t)\), it is decomposed using the following steps:

(i) Obtain the local extrema (\({n}_{i}\)) of \(y(t)\) and compute the mean (\({m}_{i}\)) of each pair of successive extrema as follows:

$${m}_{i}=\frac{1}{2}\left({n}_{i}+{n}_{i+1}\right)$$ (1)

All the local mean values \({m}_{i}\) are connected by straight lines, and the result is smoothed by moving averaging to form the local mean function \({m}_{11}(t)\).

(ii) The envelope estimate (\({a}_{i}\)) is defined as half the absolute difference of two successive extrema:

$${a}_{i}=\frac{1}{2}\left|{n}_{i}-{n}_{i+1}\right|.$$ (2)

The local envelope estimates are smoothed in the same way as the local means to derive the envelope function \({a}_{11}(t)\).

(iii) The local mean function \({m}_{11}(t)\) is subtracted from \(y\left(t\right)\), which gives the resulting signal \({h}_{11}(t)\):

$${h}_{11}\left(t\right)=y\left(t\right)-{m}_{11}\left(t\right).$$ (3)

(iv) \({h}_{11}\left(t\right)\) is amplitude demodulated by dividing it by the envelope function \({a}_{11}\left(t\right)\), which gives \({s}_{11}\left(t\right)\):

$${s}_{11}\left(t\right)=\frac{{h}_{11}\left(t\right)}{{a}_{11}\left(t\right)}.$$ (4)

If \({s}_{11}\left(t\right)\) is a purely frequency modulated signal, its envelope function \({a}_{12}\left(t\right)\) satisfies \({a}_{12}\left(t\right)=1\). If this condition is not satisfied, \({s}_{11}(t)\) is treated as the original signal and the above process is repeated until a purely frequency modulated signal \({s}_{1n}\left(t\right)\) is derived that satisfies \(-1\le {s}_{1n}\left(t\right)\le 1\). Therefore,

$$\left\{\begin{array}{l}h_{11}\left(t\right)=y\left(t\right)-m_{11}\left(t\right)\\h_{12}\left(t\right)=s_{11}\left(t\right)-m_{12}\left(t\right)\\ \vdots\\h_{1n}\left(t\right)=s_{1\left(n-1\right)}\left(t\right)-m_{1n}\left(t\right)\end{array}\right.,$$ (5)

where

$$\left\{\begin{array}{l}{s}_{11}\left(t\right)={h}_{11}(t)/{a}_{11}(t)\\ {s}_{12}\left(t\right)={h}_{12}(t)/{a}_{12}(t)\\ \vdots \\ {s}_{1n}\left(t\right)={h}_{1n}(t)/{a}_{1n}(t)\end{array}\right..$$

(v) The envelope signal \({a}_{1}\left(t\right)\), known as the instantaneous amplitude function, is obtained as the product of the successive envelope estimates produced during the iterative procedure described above:

$${a}_{1}\left(t\right)={a}_{11}\left(t\right){a}_{12}\left(t\right)\dots {a}_{1n}\left(t\right)=\textstyle\prod_{l=1}^{n}{a}_{1l}\left(t\right),$$ (6)

where \(l\) indexes the iterations of the procedure.

(vi) The first product function \((P{F}_{1})\) is obtained as the product of \({a}_{1}\left(t\right)\) and the purely frequency modulated signal \({s}_{1n}\left(t\right)\), i.e., \(P{F}_{1}={a}_{1}\left(t\right){s}_{1n}\left(t\right).\) The instantaneous amplitude of \(P{F}_{1}\) is \({a}_{1}\left(t\right)\) and the instantaneous frequency of \(P{F}_{1}\) can be obtained from \({s}_{1n}\left(t\right)\) as:

$${f}_{1}\left(t\right)=\frac{1}{2\pi }\cdot \frac{d\left[\mathrm{arccos}\left({s}_{1n}\left(t\right)\right)\right]}{dt}.$$ (7)

(vii) \(P{F}_{1}\) is subtracted from \(y(t)\) to give a new signal \({u}_{1}\left(t\right)\). The whole process is repeated \(k\) times until \({u}_{k}\left(t\right)\) is monotonic or constant:

$$\left\{\begin{array}{l}u_1\left(t\right)=y\left(t\right)-PF_1\left(t\right)\\u_2\left(t\right)=u_1\left(t\right)-PF_2\left(t\right)\\ \vdots\\u_k\left(t\right)=u_{(k-1)}\left(t\right)-{PF}_k(t)\end{array}\right..$$ (8)

The original signal can then be reconstructed as

$$y\left(t\right)=\textstyle\sum_{q=1}^{k}P{F}_{q}\left(t\right)+{u}_{k}\left(t\right),$$ (9)

where \(k\) is the number of \(PFs\), \(q\) indexes them and \({u}_{k}\left(t\right)\) represents the residual term. In this study, LMD is applied in Python through Jupyter Notebook in Anaconda Navigator using the “pyLMD” package.
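Steps (i) and (ii) above can be illustrated with a minimal Python sketch. It is not the “pyLMD” implementation: the function names `local_extrema` and `local_means_and_envelopes` are hypothetical, the smoothing by moving averaging is omitted, and the envelope estimate is taken as half the absolute difference of successive extrema, as in Smith (2005).

```python
def local_extrema(y):
    """Return the values of the interior local extrema (maxima and minima) of y."""
    extrema = []
    for i in range(1, len(y) - 1):
        is_max = y[i] > y[i - 1] and y[i] >= y[i + 1]
        is_min = y[i] < y[i - 1] and y[i] <= y[i + 1]
        if is_max or is_min:
            extrema.append(y[i])
    return extrema


def local_means_and_envelopes(extrema):
    """Compute m_i and a_i for each pair of successive extrema n_i, n_{i+1}."""
    pairs = list(zip(extrema, extrema[1:]))
    means = [(n_i + n_next) / 2 for n_i, n_next in pairs]          # Eq. (1)
    envelopes = [abs(n_i - n_next) / 2 for n_i, n_next in pairs]   # half the absolute difference
    return means, envelopes


# Toy signal (illustrative values only, not river discharge data)
signal = [0.0, 2.0, -1.0, 3.0, 0.0, 1.0]
n = local_extrema(signal)
m, a = local_means_and_envelopes(n)
print(n, m, a)
```

In a full LMD implementation, `m` and `a` would next be extended to piecewise-constant functions of time and smoothed to give \({m}_{11}(t)\) and \({a}_{11}(t)\).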

2.2 Ensemble Empirical Mode Decomposition

Huang et al. (1998) introduced empirical mode decomposition (EMD) to analyze non-linear signals. In EMD, a signal is decomposed into intrinsic mode functions (\(IMFs\)). To be an \(IMF\), a signal needs to satisfy two conditions: (a) the average of the lower and upper envelopes is zero everywhere and (b) the number of extrema and the number of zero-crossings are equal or differ at most by 1. However, EMD has a major issue of mode-mixing (Huang et al. 1998). To overcome this drawback of EMD, Wu and Huang (2009) introduced ensemble empirical mode decomposition (EEMD), which is a noise-assisted method. The EEMD algorithm is given as follows:

(i) Initialize the ensemble number \(N\) and the amplitude of the added white noise, and add a white noise series \(w{n}_{j}(t)\) to the observed series \(y(t)\). The \({j}^{th}\) noise-added signal is \({y}_{j}\left(t\right)=y\left(t\right)+w{n}_{j}\left(t\right)\).

(ii) Identify all the local minima and maxima of \({y}_{j}\left(t\right)\) and connect them by cubic spline functions to obtain the lower and upper envelopes.

(iii) Compute the average \({m}_{1}\left(t\right)\) of the lower and upper envelopes.

(iv) Compute the difference between \({y}_{j}(t)\) and the average \({m}_{1}(t)\), i.e., \({h}_{1}\left(t\right)={y}_{j}\left(t\right)-{m}_{1}\left(t\right).\)

(v) Check whether \({h}_{1}\left(t\right)\) satisfies the two \(IMF\) conditions. If it does, \({h}_{1}\left(t\right)\) is the first \(IMF\) component of the signal, i.e., \({c}_{1}\left(t\right)={h}_{1}\left(t\right)\), and the residue is defined from the remaining data by \({r}_{1}\left(t\right)={y}_{j}\left(t\right)-{c}_{1}\left(t\right).\) Otherwise, steps (ii)-(v) are repeated on \({h}_{1}\left(t\right)\).

The residue \({r}_{1}\left(t\right)\) is then treated as a new signal, and steps (ii)-(v) are repeated \(n\) times to sift out all the \(IMFs\) until the stopping criterion is met. The stopping criterion is met when the \(IMF\) component or the residue becomes smaller than a predetermined value. After the sifting process, the signal \({y}_{j}(t)\) can be expressed as the sum of all the \(IMFs\) and the residual error as follows:

$${y}_{j}\left(t\right)=\textstyle\sum_{k=1}^{n}{c}_{k}\left(t\right)+{r}_{n}\left(t\right),$$ (10)

where \(n\) is the number of \(IMFs\), \({c}_{k}\left(t\right)\) denotes the \({k}^{th}\) \(IMF\) when adding the \({j}^{th}\) noise and \({r}_{n}\left(t\right)\) denotes the final residual error.
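Two ingredients of the procedure above are easy to show in code: building the noise-added copies of step (i), and checking IMF condition (b) on the counts of extrema and zero-crossings. The sketch below is a minimal illustration under stated assumptions: the function names, the Gaussian noise model and the `noise_sd` and `seed` parameters are hypothetical, and the envelope-based condition (a) and the sifting loop itself are not implemented.

```python
import random


def noise_added_signals(y, n_ensemble, noise_sd, seed=0):
    """Return n_ensemble copies of y, each with independent Gaussian white noise added."""
    rng = random.Random(seed)
    return [[v + rng.gauss(0.0, noise_sd) for v in y] for _ in range(n_ensemble)]


def count_extrema(x):
    """Count interior points where the slope changes sign."""
    return sum(
        1
        for i in range(1, len(x) - 1)
        if (x[i] - x[i - 1]) * (x[i + 1] - x[i]) < 0
    )


def count_zero_crossings(x):
    """Count sign changes between consecutive samples."""
    return sum(1 for a, b in zip(x, x[1:]) if a * b < 0)


def satisfies_count_condition(x):
    """IMF condition (b): extrema and zero-crossing counts differ by at most 1."""
    return abs(count_extrema(x) - count_zero_crossings(x)) <= 1


oscillating = [1, -1, 1, -1, 1]   # oscillates around zero
riding = [1, 3, 2, 4, 3, 5]       # oscillates on top of a trend, never crosses zero
print(satisfies_count_condition(oscillating), satisfies_count_condition(riding))
```

The second toy series fails the check because its oscillations ride on a trend, which is the kind of component that sifting removes before an \(IMF\) is accepted.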

2.3 Support Vector Regression (SVR)

SVR is the regression form of the support vector machine, a widely applied supervised learning algorithm for classification and regression problems. The SVR algorithm estimates the relationship between the output and input of a system using an existing sample (Vapnik 1995). Therefore, SVR is applied to model the non-linear and complex features of the river discharge series.

Suppose we have a training set with \(n\) observations \(\left\{{x}_{j},{y}_{j}\right\}\), where \({y}_{j}\) represents the output value of the data and \({x}_{j}\) is the corresponding lagged input vector. Then, the SVR function is developed as follows:

$$g\left(x\right)={w}^{T}\Phi \left(x\right)+b,$$ (11)

where \(w\) is the weight vector, \(b\) is a constant which represents the bias and \(\Phi \left(x\right)\) is the non-linear transfer function applied to project the input data into a high-dimensional space. Using the structural risk minimization approach, Eq. (11) is solved as follows:

$$\begin{array}{ll}\mathrm{min} & \frac{1}{2}{\Vert w\Vert }^{2}+C\sum_{j=1}^{n}\left({\zeta }_{j}+{\zeta }_{j}^{*}\right)\\ \mathrm{s.t.} & {y}_{j}-g\left({x}_{j}\right)\le \varepsilon +{\zeta }_{j},\\ & g\left({x}_{j}\right)-{y}_{j}\le \varepsilon +{\zeta }_{j}^{*},\\ & {\zeta }_{j},{\zeta }_{j}^{*}\ge 0,\quad j=1,\dots ,n,\end{array}$$ (12)

where \(C>0\) denotes the penalty parameter, \({\zeta }^{*}\) and \(\zeta\) represent the slack variables which denote the lower and upper constraints of \(g(x)\), and \(\varepsilon\) is the parameter of the insensitive loss function. The Lagrangian function is then used to replace \(\Phi \left(x\right)\) and the weight vector in Eq. (11), which gives the regression function:

$$g\left(x\right)=\sum_{j=1}^{n}\left({\alpha }_{j}-{\alpha }_{j}^{*}\right)k\left(x,{x}_{j}\right)+b,$$ (13)

where \({\alpha }_{j}\) and \({\alpha }_{j}^{*}\) are the Lagrange coefficients and \(k\left(x,{x}_{j}\right)=\langle \Phi \left(x\right), \Phi \left({x}_{j}\right)\rangle\) denotes the kernel function. There are different forms of kernel functions, such as linear and radial basis functions, and the choice of kernel depends upon the nature of the data. In this study, the SVR algorithm is implemented in the R programming language using the “e1071” package.
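To make the roles of \(\varepsilon\), the slack variables and the kernel concrete, the following minimal Python sketch evaluates them for single points. It is an illustration only, not the “e1071” implementation: the function names and the `gamma` parameter of the radial basis kernel are hypothetical, and assigning \(\zeta\) to observations above the tube and \({\zeta }^{*}\) to observations below it is an assumed convention.

```python
import math


def epsilon_insensitive_loss(y_true, y_pred, epsilon):
    """Zero inside the epsilon tube, linear in the excess deviation outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def slack_variables(y_true, y_pred, epsilon):
    """Return (zeta, zeta_star) for one observation (assumed convention, see lead-in)."""
    zeta = max(0.0, (y_true - y_pred) - epsilon)        # observation above the tube
    zeta_star = max(0.0, (y_pred - y_true) - epsilon)   # observation below the tube
    return zeta, zeta_star


def rbf_kernel(x, z, gamma):
    """Radial basis kernel k(x, z) = exp(-gamma * ||x - z||^2)."""
    squared_distance = sum((xi - zi) ** 2 for xi, zi in zip(x, z))
    return math.exp(-gamma * squared_distance)


print(epsilon_insensitive_loss(10.0, 10.3, 0.5))  # inside the tube
print(epsilon_insensitive_loss(10.0, 12.0, 0.5))  # outside the tube
print(slack_variables(10.0, 12.0, 0.5))
print(rbf_kernel([1.0, 2.0], [1.0, 2.0], gamma=0.5))
```

Errors smaller than \(\varepsilon\) cost nothing, so only observations outside the tube contribute to the penalty term weighted by \(C\).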

2.4 K-Nearest Neighbor (KNN)

Cover and Hart (1967) introduced the KNN algorithm as a non-parametric method for pattern recognition work. The KNN algorithm also has a good approximation ability for non-linear dynamics and is used for time series prediction (Martinez et al. 2018; Al-Juboor 2021). Thus, it is utilized as an efficient tool to capture non-linear dynamics of river discharge in this study.

The KNN algorithm calculates the similarity (neighborhood) of the input variables \({X}_{o}=\left\{{x}_{1o},{x}_{2o},\dots ,{x}_{no}\right\}\) with historical observations of the input variables \({X}_{t}=\left\{{x}_{1t},{x}_{2t},\dots ,{x}_{nt}\right\}\) using the Euclidean distance function (\({D}_{ot}\)), which is given by (Araghinejad 2013):

$${D}_{ot}=\sqrt{\sum_{i=1}^{n}{\left({x}_{io}-{x}_{it}\right)}^{2}}.$$ (14)

The predicted variable (\({Y}_{o}\)) is computed by using the probabilistic function of the observed values of the discharge series (\({T}_{p}\)):

$${Y}_{o}=\sum_{p=1}^{K}f\left({D}_{op}\right)\times {T}_{p},$$ (15)

where \(f\left({D}_{op}\right)\) denotes the kernel function of the KNN computed by using the distance (\({D}_{op}\)):

$$f\left({D}_{op}\right)=\frac{1/{D}_{op}}{\sum_{p=1}^{K}1/{D}_{op}}.$$ (16)

The main parameter of the KNN algorithm is \(K\), and finding its optimal value is a practical problem. In this study, the optimal value of \(K\) is selected using performance metrics through the “caret” package in R.
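As a rough illustration of how a KNN prediction is formed from lagged inputs, the sketch below finds the \(K\) nearest historical input vectors by Euclidean distance and combines their observed outputs. The inverse-distance weighting is an assumption made for this example, and the function names are hypothetical; it does not reproduce the “caret” workflow used in the study.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two input vectors of equal length."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def knn_predict(query, history_x, history_y, k):
    """Predict the output for `query` as a distance-weighted mean of its k nearest neighbours."""
    neighbours = sorted(
        (euclidean(query, x), y) for x, y in zip(history_x, history_y)
    )[:k]
    # An exact match has zero distance; return its observed value directly.
    for distance, y in neighbours:
        if distance == 0.0:
            return y
    weights = [1.0 / distance for distance, _ in neighbours]  # assumed inverse-distance kernel
    total = sum(weights)
    return sum(w * y for w, (_, y) in zip(weights, neighbours)) / total


# Toy lagged inputs (two lags) and next-step values, illustrative only
history_x = [[1.0, 2.0], [2.0, 3.0], [3.0, 4.0], [10.0, 11.0]]
history_y = [3.0, 4.0, 5.0, 12.0]
print(knn_predict([2.0, 4.0], history_x, history_y, k=2))
```

With `k=2`, the two nearest neighbours of the query are equally distant, so the prediction is the plain average of their outputs.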

2.5 Autoregressive Integrated Moving Average (ARIMA) Model

ARIMA model is a simple method for predicting a time series. ARIMA model is applicable for both non-stationary and stationary time series, which makes it an efficient model for predicting the uncertainty of river discharge (Wang et al. 2018). Thus, ARIMA model is used to predict river discharge which has uncertainty with time changes. Let \({y}_{t}\) be the time series and \({\varepsilon }_{t}\) represent the random error at time \(t\). Then \({y}_{t}\) is considered to be the linear function of past \(p\) observations \({(y}_{t-1},{y}_{t-2},\dots , {y}_{t-p})\) and \(q\) random errors \({(\varepsilon }_{t}, {\varepsilon }_{t-1},\)…, \({\varepsilon }_{t-q}\)). The corresponding ARIMA model is given as:

where \({\theta }_{j}\left(j=0,1,2,\dots ,p\right)\) are the autoregressive coefficients, \({\beta }_{j}\left(j=0,1,2,\dots ,q\right)\) are the moving average coefficients and \({\varepsilon }_{t}\) is identically distributed with zero mean and constant variance. Together with the differencing order \(d\), \(p\) and \(q\) are referred to as the orders of the ARIMA model. The main issue with the ARIMA model is determining the appropriate order (\(p,d,q\)), which is done using correlation tools, i.e., the autocorrelation function (ACF) and partial ACF (Box et al. 2008).
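The roles of \(p\), \(d\) and \(q\) can be illustrated with a small Python sketch that differences a series \(d\) times and forms a one-step prediction from past observations and past errors. This is a sketch under stated assumptions, not a fitted ARIMA model: the coefficients are supplied by hand rather than estimated, the moving average terms are added with a plus sign (sign conventions differ between texts), and the function names are hypothetical.

```python
def difference(series, d=1):
    """Apply first-order differencing d times."""
    for _ in range(d):
        series = [b - a for a, b in zip(series, series[1:])]
    return series


def arma_one_step(history, errors, theta0, ar, ma):
    """One-step prediction: theta0 + sum_j ar[j] * y_{t-j} + sum_j ma[j] * eps_{t-j}.

    `ar` holds p autoregressive coefficients and `ma` holds q moving average
    coefficients; `history` and `errors` are ordered oldest to newest.
    """
    ar_part = sum(c * history[-j] for j, c in enumerate(ar, start=1))
    ma_part = sum(c * errors[-j] for j, c in enumerate(ma, start=1))
    return theta0 + ar_part + ma_part


print(difference([1, 4, 9, 16], d=1))
print(difference([1, 4, 9, 16], d=2))
print(arma_one_step([1.0, 2.0], [0.5], theta0=1.0, ar=[0.5, 0.25], ma=[2.0]))
```

Differencing a quadratic trend twice leaves a constant series, which is why \(d\) is chosen to make the series stationary before \(p\) and \(q\) are identified from the ACF and partial ACF.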

3 Proposed Hybrid Modelling Method

In this study, a novel hybrid method is proposed to enhance the forecasting accuracy of hydrological data. The hybrid method proposed in this study is shown in Fig. 1.

The steps of the proposed hybrid method are described as follows:

(i) The LMD algorithm is applied to decompose the original river discharge series into several product functions (\(PFs\)) and a residual.

(ii) EEMD is employed to decompose the sub-series obtained in step (i). In this step, each component obtained by LMD is further decomposed into \(IMFs\) and a residual.

(iii) The SVR, KNN and ARIMA models are applied to predict each \(IMF\) and residual component obtained in step (ii).

(iv) The predictions of all components are aggregated separately for each model to obtain the HD-SVR, HD-KNN and HD-ARIMA predictions.

(v) The average of the predictions obtained from the HD-SVR, HD-KNN and HD-ARIMA models is the final prediction of the river discharge series.
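The five steps above can be sketched as a small Python function that takes the two decomposition stages and the component-level predictors as arguments. Everything named here is hypothetical: `toy_decompose` merely splits a series into two additive parts and stands in for LMD and EEMD, and the three toy predictors stand in for SVR, KNN and ARIMA. The sketch only shows the flow of decomposition, per-component prediction, aggregation by summation and final averaging.

```python
def hd_ska_forecast(series, first_stage, second_stage, models):
    """One-step forecast following steps (i)-(v) with pluggable components."""
    sub_series = first_stage(series)                              # step (i)
    components = [c for s in sub_series for c in second_stage(s)]  # step (ii)
    model_forecasts = [
        sum(predict(c) for c in components)                        # steps (iii) and (iv)
        for predict in models
    ]
    return sum(model_forecasts) / len(model_forecasts)            # step (v)


def toy_decompose(x):
    """Stand-in decomposition: two sub-series that sum back to x."""
    return [[0.75 * v for v in x], [0.25 * v for v in x]]


def naive(c):
    return c[-1]


def mean_last_two(c):
    return (c[-1] + c[-2]) / 2


def drift(c):
    return c[-1] + (c[-1] - c[-2])


series = [4.0, 8.0, 16.0]
forecast = hd_ska_forecast(series, toy_decompose, toy_decompose, [naive, mean_last_two, drift])
print(forecast)
```

Because the toy components sum back to the original series and the toy predictors are linear, each aggregated forecast equals the forecast that predictor would give on the original series; real decompositions matter precisely because SVR and KNN are not linear in this sense.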

4 Case Studies

In this section, the description of data and the index measures used for evaluation are provided. Program codes were written in R and Python programming language version 4.0.3 and 3.7, respectively. All the analyses were performed on a personal computer with Intel Core i9-9900 CPU and 32.0 GB of RAM.

4.1 Data Description

In this study, discharge data of different rivers in the Indus Basin System were collected for a thorough evaluation of the performance of the proposed hybrid method. The data cover five rivers, i.e., Jhelum River (Chattar Kallas station), Jhelum River (Domel station), Kabul River (Nowshera station), Kunhar River (Talhata station) and Kanshi River (Palotte station). The daily river discharge data were obtained from the Surface Water Hydrology Project agency of the Water and Power Development Authority (WAPDA), Pakistan, for the hydrological periods given in Table 1.

The river discharge series (m³/s) is denoted by Si for i = 1, 2, 3, 4 and 5 for Jhelum River (Chattar Kallas station), Jhelum River (Domel station), Kabul River, Kunhar River and Kanshi River, respectively. Initially, a data cleaning procedure was performed on the five data series and the missing values were replaced by the average discharge of the month. The descriptive summary of the river discharge is given in Table 1. It shows that, on average, the discharge was highest in S1. The standard deviation (SD) of discharge is 564.13 m³/s, 210.66 m³/s, 750.68 m³/s, 85.18 m³/s and 9.31 m³/s in S1, S2, S3, S4 and S5, respectively. The discharge distributions of all rivers are positively skewed.

Figure 2b shows the box plots of the discharge of the five rivers. There is an evident difference between the discharge of the rivers. The discharge of S5 is relatively low compared to the other rivers (S1-S4). In Fig. 2b, unusually high discharge records appear in all rivers. These indicate water overflow, which is a hydrological hazard and needs proper exploration to avoid floods.

Figure 3 represents the time series plot of river discharge. It shows that the river discharge in all cases is non-linear, volatile and has huge variability during the given years. The discharge series (S1-S5) is divided into the training and testing sets. The first 80% observations are used as the training set and the last 20% observations are used as the testing set.
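The data preparation described in this section, filling missing values with a monthly average and splitting each series chronologically into 80% training and 20% testing data, can be sketched in Python as follows. The function names are hypothetical, missing values are assumed to be encoded as `None`, and the month labels are supplied by the caller, since the text does not state whether the average is taken over the same calendar month or over a single month of a single year.

```python
def fill_missing_with_monthly_mean(values, months):
    """Replace None entries by the mean of the observed values sharing the same month label."""
    sums, counts = {}, {}
    for v, m in zip(values, months):
        if v is not None:
            sums[m] = sums.get(m, 0.0) + v
            counts[m] = counts.get(m, 0) + 1
    filled = []
    for v, m in zip(values, months):
        if v is None and m in counts:
            filled.append(sums[m] / counts[m])
        else:
            filled.append(v)  # observed value, or None if the whole month is missing
    return filled


def chronological_split(series, train_fraction=0.8):
    """Keep time order: the first part is for training, the rest for testing."""
    cut = int(len(series) * train_fraction)
    return series[:cut], series[cut:]


values = [1.0, None, 3.0, 10.0, None]
months = [1, 1, 1, 2, 2]
clean = fill_missing_with_monthly_mean(values, months)
train, test = chronological_split(list(range(10)))
print(clean, train, test)
```

The split is not shuffled, so the testing set always lies after the training set in time, as required for a forecasting evaluation.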

4.2 Evaluation Indexes

The root mean square error (RMSE), mean absolute error (MAE) and root-relative square error (RRSE) are adopted to evaluate the prediction performance of models. Several researchers have used these indicators e.g., Rezaie-Balf et al. (2019). These measures are defined as follows:

where \({y}_{j}\) represents the actual value, \({\hat{y}}_{j}\) represents the predicted value, \(\bar{\hat{y} }\) is the mean of the predicted values and \(m\) is the total number of observations in the considered case. To further evaluate the performance of the models, the improved percentage indicators of RMSE, MAE and RRSE are used in this study. These are defined as follows:
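A minimal Python sketch of these indicators is given here under stated assumptions. RMSE and MAE follow their usual definitions. RRSE is written with the mean of the actual values as the reference, which is the common definition and may differ from the variant used in this study. The improvement percentage is assumed to be the relative reduction of an error measure from a benchmark model to the proposed model, so that positive values favour the proposed model. All function names are hypothetical.

```python
import math


def rmse(actual, predicted):
    """Root mean square error."""
    m = len(actual)
    return math.sqrt(sum((y - p) ** 2 for y, p in zip(actual, predicted)) / m)


def mae(actual, predicted):
    """Mean absolute error."""
    m = len(actual)
    return sum(abs(y - p) for y, p in zip(actual, predicted)) / m


def rrse(actual, predicted):
    """Root relative squared error, relative to predicting the mean of the actual values."""
    mean_actual = sum(actual) / len(actual)
    numerator = sum((y - p) ** 2 for y, p in zip(actual, predicted))
    denominator = sum((y - mean_actual) ** 2 for y in actual)
    return math.sqrt(numerator / denominator)


def improvement_percent(benchmark_error, proposed_error):
    """Percentage reduction of an error measure relative to the benchmark (assumed form)."""
    return (benchmark_error - proposed_error) / benchmark_error * 100.0


actual = [1.0, 2.0, 3.0, 4.0]
predicted = [1.0, 2.0, 3.0, 6.0]
print(rmse(actual, predicted), mae(actual, predicted), rrse(actual, predicted))
print(improvement_percent(2.0, 1.5))
```

Under this assumed form, a negative improvement percentage means that the proposed model has a larger error than the benchmark on that measure.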

5 Results and Discussion

In the proposed hybrid method, the LMD and EEMD algorithms are used to decompose the original river discharge series. The training set of the original discharge series of S1 is decomposed by LMD and the resulting \(PFs\) are given in Fig. 4. Then, using EEMD, each \(PF\) and the residual are further decomposed into \(IMFs\). The EEMD decomposition results of \(P{F}_{1}\) to \(P{F}_{4}\) are presented in Fig. 5. All the other series are decomposed in the same way, and the remaining decomposition results of S1-S5 are provided in the supplementary materials. Further, the EEMD decomposed components were fed to the SVR, ARIMA and KNN models individually. The predictions for all components are obtained and aggregated to give the forecasts of the HD-SVR, HD-KNN and HD-ARIMA models, respectively. Lastly, the average prediction of these three models is computed as the final prediction of the HD-SKA model.

Discharge data of five rivers is used to verify the prediction performance of the proposed HD-SKA model. The six benchmark models compared to the HD-SKA model are ARIMA, SVR, KNN, EEMD-ARIMA, EEMD-SVR and EEMD-KNN models. Lastly, the proposed hybrid model (HD-SKA) is applied to predict the daily river discharge of S1-S5.

5.1 Prediction Results

In this section, the prediction results of seven models on the S1-S5 series are compared and discussed. The prediction results of the training and testing phase are represented in Table 2. The detailed results are summarized as follows:

i. The SVR, KNN and ARIMA models are close competitors in predicting the daily river discharge of S1-S5.

ii. The EEMD-based models perform better than the single SVR, ARIMA and KNN models except in a few instances. For example, in the S4 training phase, the MAE of the EEMD-KNN model is higher than that of the KNN model. The use of a decomposition technique improves the prediction accuracy of models for hydrological time series (Huynh et al. 2021). Overall, EEMD improves the prediction accuracy of the SVR, ARIMA and KNN models across the five cases.

iii. For S1-S5, the RMSE, MAE and RRSE of the proposed HD-SKA model are smaller than those of the other considered models. There are certain situations where the MAE of the proposed HD-SKA model is slightly higher than that of the EEMD-based models, but this difference is negligible. The proposed HD-SKA model has better predictive accuracy than the EEMD-based ARIMA, KNN and SVR models.

iv. Overall, the proposed HD-SKA model performs better than all considered models in the study. The implementation of hybrid decomposition in the proposed method has enhanced the prediction capability of the models. The HD-SKA model produces reliable river discharge predictions.

Figure 6a shows the prediction performance of the HD-SKA model compared to the EEMD-based models in the testing phase of the S1 and S2 series. It can be observed that the predictions of the proposed HD-SKA model for the S1 and S2 series are close to the original daily discharge. The remaining prediction plots for the S3-S5 series are provided in the supplementary material. For further verification of the HD-SKA model performance, the coefficient of determination (R²) of all seven models is shown in Fig. 6b. The proposed HD-SKA model yields the highest R² value and produces better prediction results than all other considered models in both the training and testing phases.

5.2 Improvements by the Proposed Hybrid Model

The effectiveness of hybrid decomposition in the proposed hybrid model is illustrated in Table 3 through the improved percentage indexes of RMSE, MAE and RRSE. Comparing the HD-SKA model to the ARIMA, SVR and KNN models, the percentage improvements \({P}_{RMSE}\), \({P}_{MAE}\) and \({P}_{RRSE}\) are all positive. The performance of the proposed HD-SKA model is superior to that of the ARIMA, SVR and KNN models in all five cases.

Further, the comparison of the percentage improvements of the proposed HD-SKA model with the EEMD-based models indicates that the HD-SKA model generally performs better than EEMD-ARIMA, EEMD-SVR and EEMD-KNN in the case studies. \({P}_{RMSE}\), \({P}_{MAE}\) and \({P}_{RRSE}\) are mostly positive, with the exception of the \({P}_{MAE}\) index in some situations. For example, the MAE of the HD-SKA model is 1.38% higher than that of the EEMD-KNN model in the training phase of the S5 series. In such cases the two models have similar performance and the difference is small.

Overall, the proposed HD-SKA model has better performance than all other considered models. Hybrid decomposition-based models have also been reported in the literature to have better predictive performance than single decomposition-based models; see Vidya and Janani (2021). It may be deduced that the implementation of LMD with EEMD in the proposed hybrid model efficiently reduces the complexity and randomness of river discharge.

The proposed HD-SKA model in this study can serve as a helpful tool for the accurate prediction of river discharge.

5.3 Diebold-Mariano Test

The Diebold-Mariano (DM) test is a well-known statistical hypothesis test that helps identify the degree of discrepancy between the proposed model and the compared models (Silva et al. 2021). The null hypothesis of the DM test is that the two models have the same prediction accuracy, against the alternative that model 2 has lower prediction accuracy than model 1. Symbolically,

$$\left\{\begin{array}{l}{H}_{0}:E\left[L\left({e}_{t}^{1}\right)\right]=E\left[L\left({e}_{t}^{2}\right)\right]\\ {H}_{1}:E\left[L\left({e}_{t}^{1}\right)\right]<E\left[L\left({e}_{t}^{2}\right)\right]\end{array}\right.,$$

where \({e}_{t}^{1}\) and \({e}_{t}^{2}\) are the prediction errors of the two compared models and \(L\) denotes the loss function of the prediction errors. The DM statistic is defined as follows:

$$DM=\frac{\bar{d}}{\sqrt{2\pi {\hat{f}}_{d}(0)/m}},$$

where \({\hat{f}}_{d}(0)\) represents the spectral density of the loss differential at frequency zero, \(m\) is the length of the prediction results, \(2\pi {\hat{f}}_{d}(0)\) denotes the consistent estimator of the asymptotic variance and \(\bar{d }=\frac{1}{m}\sum_{t=1}^{m}\left(L\left({e}_{t}^{1}\right)-L\left({e}_{t}^{2}\right)\right)\). In this study, the loss function used is the mean squared error (MSE). The null hypothesis is rejected if \(DM<-{Z}_{\alpha /2}\), where \(\alpha\) is the level of significance.

In this study, the DM test is applied to verify the prediction results of models at 1%, 5%, and 10% levels of significance. The null hypothesis will be rejected for S1-S5 if the DM statistic value is smaller than –2.58, –1.96, and –1.64 for 1%, 5% and 10% levels of significance respectively. The DM test is applied using the proposed HD-SKA model as “model 1” and benchmark models as “model 2” respectively.
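The decision rule can be illustrated with a short Python sketch of the DM statistic under squared-error loss. It assumes one-step-ahead predictions, so that the asymptotic variance term \(2\pi {\hat{f}}_{d}(0)\) is estimated by the lag-0 sample autocovariance of the loss differential alone; longer horizons would add further autocovariance terms. The function names and the toy error values are hypothetical.

```python
import math


def dm_statistic(errors_1, errors_2):
    """DM statistic with squared-error loss (one-step-ahead assumption, see lead-in)."""
    d = [e1 ** 2 - e2 ** 2 for e1, e2 in zip(errors_1, errors_2)]  # loss differential
    m = len(d)
    d_bar = sum(d) / m
    gamma_0 = sum((x - d_bar) ** 2 for x in d) / m  # lag-0 autocovariance of d
    return d_bar / math.sqrt(gamma_0 / m)


def reject_null(dm, critical_value=1.96):
    """Reject equal accuracy in favour of model 1 when DM is below the negative critical value."""
    return dm < -critical_value


# Toy prediction errors: model 1 (proposed) versus model 2 (benchmark)
errors_model_1 = [1.0, 0.0, 1.0, 0.0]
errors_model_2 = [2.0, 1.0, 2.0, 2.0]
dm = dm_statistic(errors_model_1, errors_model_2)
print(dm, reject_null(dm))
```

A strongly negative statistic means that the squared errors of model 1 are systematically smaller than those of model 2, which is the situation in which the null hypothesis is rejected.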

Table 4 gives the DM test statistic values for the predictions on the testing data sets of the S1-S5 series. The null hypothesis is rejected in all cases except for the EEMD-SVR model in S5. The DM test results reveal that the performance of the proposed HD-SKA model for the S1-S5 series is significantly different from, and superior to, that of the considered benchmark models, with the exception of S5, where the EEMD-SVR model and the HD-SKA model have similar prediction accuracy. Thus, the proposed HD-SKA model has the highest prediction accuracy among the considered models for river discharge prediction.

6 Conclusion

In this study, a novel hybrid method is proposed to predict daily river discharge. The application of the proposed method is illustrated using discharge data of five rivers in the Indus Basin System, Pakistan. The performance of the proposed HD-SKA model is compared with six benchmark models. The models’ performance is evaluated using different performance indicators and the Diebold-Mariano test. The data analysis results show that the proposed HD-SKA model outperforms all the models considered in the study. The results of the Diebold-Mariano test revealed that the HD-SKA model possesses a higher predictive ability than benchmark models. Overall, the proposed hybrid method can be a successful tool to predict daily river discharge. The proposed method has greater accuracy when the number of components identified at the second decomposition stage is not too large. Moreover, the application of the proposed method may be checked on large data sets in future research work.

Availability of Data and Material

Will be provided on request.

Code Availability

Will be provided on request.

References

Adnan RM, Petroselli A, Heddam S, Santos C, Kisi O (2021) Short term rainfall-runoff modelling using several machine learning methods and a conceptual event-based model. Stoch Environ Res Risk Assess 35:597–616. https://doi.org/10.1007/s00477-020-01910-0

Aghelpour P, Bahrami-Pichaghchi H, Varshavian V (2021) Hydrological drought forecasting using multi-scalar streamflow drought index, stochastic models and machine learning approaches, in northern Iran. Stoch Environ Res Risk Assess 35:1615–1635. https://doi.org/10.1007/s00477-020-01949-z

Alizadeh F, Gharamaleki AF, Jalilzadeh R (2021) A two-stage multiple-point conceptual model to predict river stage-discharge process using machine learning approaches. J Water Clim Change 12:278–295. https://doi.org/10.2166/wcc.2020.006

Al-Juboor AM (2021) A hybrid model to predict monthly streamflow using neighboring rivers annual flows. Water Resour Manage 35:729–743. https://doi.org/10.1007/s11269-020-02757-4

Araghinejad S (2013) Data-driven modeling: Using MATLAB in water resources and environmental engineering. Springer Science & Business Media, Berlin

Bayazit M (2015) Nonstationarity of hydrological records and recent trends in trend analysis: a state-of-the-art review. Environ Process 2:527–542. https://doi.org/10.1007/s40710-015-0081-7

Bonakdari H, Binns AD, Gharabaghi B (2020) A comparative study of linear stochastic with nonlinear daily river discharge forecast models. Water Resour Manage 34:3689–3708. https://doi.org/10.1007/s11269-020-02644-y

Box GE, Jenkins GM, Reinsel GC (2008) Operational Research Quarterly. Time Series Analysis: Forecasting and Control, 4th edn. John Wiley & Sons Inc, New York, pp 137–191

Cover T, Hart P (1967) Nearest neighbor pattern classification. IEEE Trans Inf Theory 13:21–27. https://doi.org/10.1109/TIT.1967.1053964

Dehghani R, Torabi H, Younesi H, Shahinejad B (2021) Application of wavelet support vector machine (WSVM) model in predicting river flow (Case study: Dez basin). Watershed Eng Manage 13:98–110. https://doi.org/10.22092/IJWMSE.2020.128735.1748

Fashae O, Olusola A, Ndubuisi I, Udomboso C (2019) Comparing ANN and ARIMA model in predicting the discharge of River Opeki from 2010 to 2020. River Res Appl 35:169–177. https://doi.org/10.1002/rra.3391

Huang N, Shen Z, Long S, Wu M, Shih H, Zheng Q, Yen N, Tung CC, Liu H (1998) The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc R Soc Lond A: Math Phys Eng Sci 454:903–995. https://doi.org/10.1098/rspa.1998.0193

Huynh AN, Deo RC, Ali M, Abdulla S, Raj N (2021) Novel short-term solar radiation hybrid model: Long short-term memory network integrated with robust local mean decomposition. Appl Energy 298:117193. https://doi.org/10.1016/j.apenergy.2021.117193

Liu H, Han M (2014) A fault diagnosis method based on local mean decomposition and multi-scale entropy for roller bearings. Mech Mach Theory 75:67–78. https://doi.org/10.1016/j.mechmachtheory.2014.01.011

Martinez F, Frias MP, Perez-Godoy MD, Rivera AJ (2018) Dealing with seasonality by narrowing the training set in time series forecasting with kNN. Expert Syst Appl 103:38–48. https://doi.org/10.1016/j.eswa.2018.03.005

Meshram SG, Ghorbani MA, Shamshirband S, Karimi V, Meshram C (2019) River flow prediction using hybrid PSOGSA algorithm based on feed-forward neural network. Soft Comput 23:10429–10438. https://doi.org/10.1007/s00500-018-3598-7

Musarat MA, Alaloul WS, Rabbani MB, Ali M, Altaf M, Fediuk R, Vatin N, Klyuev S, Bukhari H, Sadiq A, Rafiq W, Farooq W (2021) Kabul river flow prediction using automated ARIMA forecasting: a machine learning approach. Sustainability 13:10720–10746. https://doi.org/10.3390/su131910720

Nikolic VV, Simonovic SP (2015) Multi-method modeling framework for support of integrated water resources management. Environ Process 2:461–483. https://doi.org/10.1007/s40710-015-0082-6

Poul A, Shourian M, Ebrahimi H (2019) A comparative study of MLR, KNN, ANN and ANFIS models with wavelet transform in monthly stream flow prediction. Water Resour Manage 33:2907–2923. https://doi.org/10.1007/s11269-019-02273-0

Rezaie-Balf M, Fani Nowbandegani S, Samadi S, Fallah H, Alaghmand S (2019) An ensemble decomposition-based artificial intelligence approach for daily streamflow prediction. Water 11:709–738. https://doi.org/10.3390/w11040709

Riahi-Madvar H, Dehghani M, Memarzadeh R, Gharabaghi B (2021) Short to long-term forecasting of river flows by Heuristic optimization algorithms hybridized with ANFIS. Water Resour Manage 35:1149–1166. https://doi.org/10.1007/s11269-020-02756-5

Sehgal V, Tiwari MK, Chatterjee C (2014) Wavelet bootstrap multiple linear regression based hybrid modeling for daily river discharge forecasting. Water Resour Manage 28:2793–2811. https://doi.org/10.1007/s11269-014-0638-7

Sharghi E, Nourani V, Najafi H, Soleimani S (2019) Wavelet-exponential smoothing: a new hybrid method for suspended sediment load modeling. Environ Process 6:191–218. https://doi.org/10.1007/s40710-019-00363-0

Silva RG, Ribeiro MH, Moreno SR, Mariani VC, Coelho LDS (2021) A novel decomposition-ensemble learning framework for multi-step ahead wind energy forecasting. Energy 216:119174. https://doi.org/10.1016/j.energy.2020.119174

Smith JS (2005) The local mean decomposition and its application to EEG perception data. J R Soc Interface 2:443–454. https://doi.org/10.1098/rsif.2005.0058

Vapnik V (1995) The nature of statistical learning theory. Springer, New York

Vidya S, Janani SV (2021) Wind speed multistep forecasting model using a hybrid decomposition technique and a selfish herd optimizer-based deep neural network. Soft Comput 25:6237–6270. https://doi.org/10.1007/s00500-021-05608-5

Wang ZY, Qiu J, Li FF (2018) Hybrid models combining EMD/EEMD and ARIMA for long-term streamflow forecasting. Water 10:853–866. https://doi.org/10.3390/w10070853

Wei S, Yang H, Song J, Abbaspour K, Xu Z (2013) A wavelet-neural network hybrid modelling approach for estimating and predicting river monthly flows. Hydrol Sci J 58:374–389. https://doi.org/10.1080/02626667.2012.754102

Wu C, Chau K, Li Y (2008) River stage prediction based on a distributed support vector regression. J Hydrol 358:96–111. https://doi.org/10.1016/j.jhydrol.2008.05.028

Wu Z, Huang NE (2009) Ensemble empirical mode decomposition: a noise-assisted data analysis method. Adv Adapt Data Anal (AADA) 1:1–41. https://doi.org/10.1142/S1793536909000047

Acknowledgements

We would like to acknowledge the Surface Water Hydrology Project (SWHP) Agency of the Water and Power Development Authority (WAPDA), Pakistan, for providing the river discharge data for this research work. We are thankful to the Editor and anonymous reviewers for their comments on earlier versions of the manuscript, which improved the paper.

Funding

No funding was received for this research work.

Author information

Contributions

All the authors jointly worked on the idea. MS collected the data. SC and FI worked on the methodology. MS performed the analysis and prepared the initial draft. SC and FI reviewed and revised the draft.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Shabbir, M., Chand, S. & Iqbal, F. A Novel Hybrid Method for River Discharge Prediction. Water Resour Manage 36, 253–272 (2022). https://doi.org/10.1007/s11269-021-03026-8
