Abstract

In popular applications such as e-commerce sites and social media, users provide online reviews giving personal opinions about a wide array of items, such as products, services and people. These reviews are usually in the form of free text, and represent a rich source of information about the users’ preferences. Among the information elements that can be extracted from reviews, opinions about particular item aspects (i.e., characteristics, attributes or components) have been shown to be effective for user modeling and personalized recommendation. In this paper, we investigate the aspect-based top-N recommendation problem by separately addressing three tasks, namely identifying references to item aspects in user reviews, classifying the sentiment orientation of the opinions about such aspects in the reviews, and exploiting the extracted aspect opinion information to provide enhanced recommendations. Differently to previous work, we integrate and empirically evaluate several state-of-the-art and novel methods for each of the above tasks. We conduct extensive experiments on standard datasets and several domains, analyzing distinct recommendation quality metrics and characteristics of the datasets, domains and extracted aspects. As a result of our investigation, we not only derive conclusions about which combination of methods is most appropriate according to the above issues, but also provide a number of valuable resources for opinion mining and recommendation purposes, such as domain aspect vocabularies and domain-dependent, aspect-level lexicons.


1 Introduction

1.1 Motivation

In the predominant view, addressing situations of information overload and helping in decision making tasks, recommender systems aim to identify and suggest information items (e.g., products, services and people) of “relevance” for a target user (Jannach and Adomavicius 2016). Broadly, the relevance of an item can be estimated according to items the user liked in the past—content-based (CB) recommendations—or considering items preferred by like-minded people—collaborative filtering (CF) recommendations—(Adomavicius and Tuzhilin 2005).

In addition to contextual data (Adomavicius and Tuzhilin 2015), recommender systems mainly generate item relevance predictions based on both user/item attributes and user preferences, i.e., interests, tastes or needs. Such preferences are explicitly stated by the users or are inferred from past user-item interactions, commonly numeric evaluations (a.k.a. ratings) (Herlocker et al. 1999) and consumption records (Hu et al. 2008), respectively. There are, however, many popular applications—such as e-commerce sites and social media—where users not only evaluate items through ratings, but also provide personal reviews supporting their preferences.

Reviews are usually in the form of textual comments that express the reasons for which the users like or dislike the evaluated items. They thus represent a rich source of information about the users’ preferences, and can be exploited to build fine-grained user profiles and enhance personalized recommendations. In this sense, Chen et al. (2015) identify various elements of valuable information that can be extracted from user reviews and can be utilized by recommender systems, namely frequently used terms, discussed topics, overall opinions about reviewed items, specific opinions about item features, comparative opinions, reviewers’ emotions, and review helpfulness.

Frequently used terms can be used to characterize the reviewers with term-based profiles, which could be leveraged, e.g., by a CB recommender (García Esparza et al. 2011). Their relevance may be determined with a weighting measure such as TF-IDF. Discussed topics can be utilized to enhance ratings in CF, as done in Seroussi et al. (2011). They may be obtained by grouping frequently occurring nouns or via a topic modeling technique such as Latent Dirichlet Allocation, LDA (Blei et al. 2003). The users’ overall opinions (i.e., positive or negative sentiment orientations) about the reviewed items could be converted into virtual ratings, which may be valuable for improving CF approaches (Poirier et al. 2010; Pero and Horváth 2013; Zhang et al. 2013). They could be inferred by aggregating the sentiments of all opinion words in the reviews or via machine learning techniques. The users’ opinions about item features can be used to enhance item profiles and increase recommendation ranking quality (Aciar et al. 2007; Yates et al. 2008; Dong et al. 2013), as latent preference factors in model-based CF (Jakob et al. 2009; Wang et al. 2012; Chen et al. 2016), and to weight user preferences in augmented recommendations (Liu et al. 2013; Chen and Wang 2013, 2014). In general, they correspond to nouns and noun phrases frequently occurring together with nearby adjectives. Comparative opinions, which indicate whether an item is superior or inferior to another with respect to a certain feature, can be extracted via linguistic rules. They may be used to build a graph of comparative relationships between items. Such a graph could be exploited to improve the quality of item rankings (Li et al. 2011; Jamroonsilp and Prompoon 2013; Kumar et al. 2015). The reviewers’ emotions and mood (e.g., happiness, sadness) when writing the reviews can be used to determine the probability that the users will like the items, as presented by Moshfeghi et al. (2011) and Zhang et al. (2013). Finally, review helpfulness, established in terms of the number of votes given by users to reviews, can be used to identify quality ratings that allow making better item relevance predictions (Raghavan et al. 2012).

Among the previous elements, opinions and sentiments expressed by users in personal reviews about specific features or aspects (i.e., characteristics, attributes or components) of the reviewed items have been shown to be effective for user modeling (Wang et al. 2010; Ganu et al. 2013; Wu et al. 2015). For instance, let us consider a user who rated a particular mobile phone with an overall rating of 4 stars on a 1–5 star scale. With no more information, it is not possible to know why she gave that score instead of the highest 5-star rating. In contrast, analyzing a review she wrote about the phone, we may find out that the user thought the phone camera was the best she had ever used and its battery life was relatively long. Moreover, we could also discover that the user perceived the phone as a bit heavy and quite expensive, referring to the phone weight and price respectively. These opinions about aspects of the phone are the reasons for the 4-star rating, and provide a fine-grained representation of the user’s preferences.

Aspect-based recommender systems, a.k.a. recommender systems based on feature preferences (Chen et al. 2015), aim to exploit such particularities, and provide personalized recommendations taking into account the users’ opinions about aspects of the rated items. Following the previous example, let us now consider a reviewer who is usually concerned about the audio characteristics of electronic devices; a fact that has been somehow inferred and incorporated into the user’s profile. For this user, an aspect-based recommender system may find as more relevant and may suggest those phones that have been evaluated as having a good voice call quality in others’ reviews. In this way, even when items are evaluated with the same rating value, these systems are able to capture particular strengths and weaknesses of the items and, based on this information, better estimate the relevance of such items for the target user, as recently shown by Bauman et al. (2017) and Musto et al. (2017).

Despite these benefits, aspect-based recommender systems have received limited attention in the research literature, even though the extraction of opinions about item aspects from user reviews is a major research topic in the area of Sentiment Analysis and Opinion Mining (Liu and Zhang 2012; Rana and Cheah 2016). Chen et al. (2015) presented an exhaustive survey on review-based recommender systems in general, and aspect-based recommender systems in particular. As shown in that survey, the majority of published papers propose recommendation approaches that follow a specific aspect extraction method, and do not evaluate existing alternatives. In most cases, the proposed recommendation approaches are empirically compared with standard user/item-based CF and matrix factorization (MF), but not with other aspect-based recommenders. Moreover, in general, reported evaluations were conducted on single domains and datasets, and used rating prediction metrics, which are progressively falling into disuse and being replaced by ranking-based and non-accuracy metrics. In this context, to the best of our knowledge, there is no study that clarifies which aspect extraction methods and subsequent recommendation approaches could represent the best solution for a given domain, in terms of heterogeneous recommendation quality measures.

Aiming to shed light on this situation, in this paper we separately address three tasks, namely aspect extraction, i.e., identifying references to item aspects in user reviews, aspect opinion polarity identification, i.e., classifying the sentiment orientation/polarity (e.g., as positive, neutral or negative) of the opinions about the aspects identified in the reviews, and aspect-based recommendation, i.e., exploiting the extracted aspect opinion information to provide enhanced personalized top-N recommendations. In both the aspect extraction and aspect-based recommendation tasks, we empirically compare several state-of-the-art and novel approaches on various domains and standard datasets, analyzing distinct metrics. Moreover, in the aspect opinion extraction task, we use popular natural language processing and opinion mining resources to enhance techniques on sentiment orientation identification. In particular, we consider domain-dependent aspect-level polarities of adjectives (e.g., low price vs. low battery life), adverbs modifying or intensifying such polarities (e.g., quite/too/absolutely cheap battery), and negation of adjectives (e.g., non cheap battery) and sentences (e.g., I do not think the battery is cheap).

As a result of our investigation, we not only report and analyze extensive results on which combination of aspect extraction and recommendation methods may be the most appropriate for a certain domain, but also provide a number of resources valuable for researchers and practitioners, namely domain aspect vocabularies, domain-dependent, aspect-level lexicons (specifically, lists of positive and negative adjectives), and aspect opinion annotations of the datasets.

1.2 Research questions

In this paper, we aim to give well-argued answers to the following three research questions:

- RQ1 Is there an aspect extraction method that generates data consistently effective for both content-based and collaborative filtering strategies?

To address this question, we experiment with several state-of-the-art methods for aspect (opinion) extraction, evaluating the different types of existing approaches, namely those exploiting aspect vocabularies, word frequency distributions (Caputo et al. 2017), syntactic relations (Qiu et al. 2011), and topic models (McAuley and Leskovec 2013). We integrate each of these techniques with a number of content-based and collaborative filtering methods for aspect-based recommendation. In this way, we aim to show whether combining aspect opinions and ratings as user preferences entails better recommendations, and to identify aspect extraction approaches that generate valuable data for all/most of the evaluated recommenders.

- RQ2 To what extent are opinions about item aspects valuable to improve the quality of personalized recommendations?

To address this question, we empirically compare the developed aspect-based recommendation methods against state-of-the-art recommenders that do not exploit aspect opinion information, and against HFT (McAuley and Leskovec 2013), a matrix factorization model that considers hidden topics as a proxy for item aspects. Unlike previous work, in this paper we analyze not only the recommendation accuracy (by means of precision, recall and nDCG ranking-based metrics), but also the achieved trade-off between accuracy and other recommendation quality metrics, such as coverage, diversity and novelty.

- RQ3 How do the coverage and type of extracted aspects affect the performance of aspect-based recommendation methods?

To address this question, we investigate scenarios with different levels of aspect opinion annotation coverage, measured in terms of the percentage of the rated/reviewed items that contain aspect opinions (identified by the developed extraction methods). We thus aim to show whether the achieved recommendation performance on the original datasets is comparable to that achieved in situations where there are aspect opinion annotations for all items. Moreover, we compare the types of aspects extracted with each method with respect to their effectiveness for improving recommendation performance and to their adequacy for explaining generated recommendations.

For the three research questions, we conduct our evaluations on popular Yelp (footnote 1) and Amazon (footnote 2) (McAuley and Yang 2016) datasets, considering user reviews about items in eight domains: hotels, beauty and spas, and restaurants from Yelp, and movies, digital music, CDs and vinyls, mobile phones, and video games from Amazon.

1.3 Contributions

In contrast to previous work, in this paper we extensively evaluate combinations of distinct methods to extract item aspect opinions from user reviews, and methods that exploit such opinions to provide personalized item recommendations. As a result of our investigation, in addition to the answers provided to the stated research questions, we claim the following contributions:

- To the best of our knowledge, we present the first empirical comparison of aspect opinion extraction methods covering the existing types of approaches, namely vocabulary-, word frequency-, syntactic relation-, and topic model-based approaches.

- We present a novel technique to estimate the sentiment orientation of opinions, which adapts the polarity of adjectives by considering adverbs that modify the intensity of the opinions, and by identifying negations of adjectives and/or sentences.

- We evaluate content-based and collaborative filtering state-of-the-art and novel aspect-based recommendation methods on several domains and well-known datasets, using heterogeneous metrics of recommendation quality, such as ranking accuracy, catalog coverage, and item novelty and diversity.

Besides these contributions, we provide new categorizations and up-to-date surveys on aspect opinion extraction and aspect-based recommender systems. Moreover, we make the following resources publicly available (footnote 3):

- Aspect-level lexicons with the polarity of adjectives associated with item aspects in reviews for the addressed domains.

- Vocabularies composed of nouns appearing in user reviews that refer to aspects for the above domains.

- Lists of weighted adverbs that strengthen, soften or invert the polarity of adjectives.

- Aspect opinion annotations of the used datasets, which are popular in the Sentiment Analysis and Opinion Mining research area.

1.4 Structure of the paper

The remainder of the paper is structured as follows. In Sect. 2, we review related work on aspect opinion extraction and aspect-based recommendation, following formal categorizations of existing approaches for both tasks. In Sects. 3 and 4, we describe the developed and integrated aspect extraction techniques and aspect-based recommenders, which were either selected as state-of-the-art examples from each of the identified categories or proposed as novel methods. Next, in Sects. 5 and 6, we present the experiments conducted to address the stated research questions, describing the experimental setting and analyzing the achieved empirical results, respectively. Finally, in Sect. 7 we end with some conclusions and future research lines.

2 Related work

In this section, we survey the research literature on the two main tasks involved in the aspect-based recommendation problem, namely extracting opinions about item aspects from user reviews (Sect. 2.1), and exploiting the extracted opinion information for personalized item ranking (Sect. 2.2).

2.1 Aspect opinion extraction approaches

In the subsequent subsections, we discuss state-of-the-art aspect (opinion) extraction methods, following our own categorization based on those presented by Liu (2012) and Rana and Cheah (2016). We focus on unsupervised methods, where no manually labeled aspect annotations are needed, and specifically we distinguish between the following approaches: vocabulary-based methods that make use of lists of aspect words (Sect. 2.1.1), word frequency-based methods in which words that have a high appearance frequency are selected as aspects (Sect. 2.1.2), syntactic relation-based methods where syntactic relations between words of a sentence are the basis for identifying aspect opinions (Sect. 2.1.3), and topic model-based methods where topic models are used to extract the main aspects from user reviews (Sect. 2.1.4). Next, in Sect. 2.1.5, we compare the surveyed methods and analyze their strengths and weaknesses. Unlike Liu (2012), we exclude aspect extraction methods based on supervised learning (Jakob and Gurevych 2010) since they rely on large amounts of labeled data, an uncommon scenario in real applications. Moreover, in contrast to Rana and Cheah (2016), we do consider topic modeling techniques as they have been proven to be very effective in representing item aspects from reviews (Titov and McDonald 2008b; Zhao et al. 2010a; McAuley et al. 2012; Diao et al. 2014).

In addition to the way in which references to aspects are identified in user reviews, it is important to describe how the sentiment orientation or polarity of the opinions about aspects is established. In this context, at some point, existing solutions make use of lexicons. In the simplest form, a sentiment/opinion lexicon (or simply lexicon) is composed of lists of adjectives that are used to reflect positive or negative subjectivity characteristics or qualities of any type of entity. There are lexicons that contain other types of words (e.g., nouns, adverbs and verbs), lexicons that provide numeric polarity scores (e.g., in a \([-\,5, 5]\) range), and lexicons that include misspellings, morphological variants, slang expressions, and social media mark-up. In general, available lexicons are limited to words that express generic, domain-independent subjectivity. We will cite which lexicons are used in the papers surveyed.

2.1.1 Vocabulary-based extraction

The most direct approach to identify aspect opinions in reviews is by means of a vocabulary with the terms that refer to aspects. Aciar et al. (2007) presented a semi-automatic method that identifies references to aspects in user reviews through an ontological structure. When processing user reviews, each sentence that contains words mapped to an aspect ontology is annotated with the corresponding ontology concepts. Afterwards, a text mining technique is used to select and classify a review sentence as good or bad if it contains information about features that the user has evaluated as item strengths and weaknesses, respectively. The method thus needs an initial, domain-dependent ontology manually built in advance, whereas its annotation algorithm is fully automatic.

2.1.2 Word frequency-based extraction

One of the simplest, yet effective, approaches to extract references to aspects from textual reviews consists of identifying words frequently used in a specific domain. In this context, Hu and Liu (2004a) presented a method aimed at summarizing textual reviews, highlighting the fragments most valuable for readers according to their information needs. Specifically, the authors used association rule mining and the Apriori algorithm (Agrawal et al. 1994) over nouns and noun phrases to find frequent itemsets, and performed a pruning stage to keep only the most informative ones, which are assumed to refer to evaluated item aspects. In their method, the sentiment orientation of each aspect opinion is assigned based on the nearest adjectives to the selected nouns. In particular, an aspect opinion is annotated with the polarity (or inverse polarity) that the corresponding adjective, or any of its synonyms (or antonyms) obtained from WordNet (Miller 1995), has in the well-known lexicon presented in Hu and Liu (2004b).

This method was improved in Popescu and Etzioni (2005) and Bafna and Toshniwal (2013) by removing those frequent nouns that are not likely to represent aspects. Specifically, Popescu and Etzioni (2005) considered that an aspect is a part or feature of a product, and can be identified by means of high Point-Wise Mutual Information (PMI) values,

\[ \mathrm{PMI}(f, d) = \frac{\mathrm{hits}(f \wedge d)}{\mathrm{hits}(f) \cdot \mathrm{hits}(d)} \]

between potential aspect words f and meronymy discriminators d associated with the type of the product, e.g., “of phone”, “phone has” and “phone comes with” for the phone type. For this computation, the authors utilized the hits statistics provided by the KnowItAll Assessor system (Etzioni et al. 2005), which obtains relationships such as isPartOf(screen, phone) by querying the Web. Bafna and Toshniwal (2013), in contrast, investigated a probabilistic approach to select all those nouns that are likely to represent aspects.
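To make the PMI-based filtering concrete, the following is a minimal sketch, assuming that PMI is computed from hit counts as hits(f, d) / (hits(f) · hits(d)). The hit counts, the function names, and the averaging over discriminators are hypothetical simplifications for illustration; they are not the actual KnowItAll statistics or the original scoring procedure.

```python
def pmi(hits_joint: int, hits_f: int, hits_d: int) -> float:
    """Hit-count PMI: hits(f, d) / (hits(f) * hits(d)); 0.0 if undefined."""
    if hits_f == 0 or hits_d == 0:
        return 0.0
    return hits_joint / (hits_f * hits_d)


# Hypothetical hit counts, invented for illustration (not real Web statistics).
HITS_D = {"of phone": 50_000, "phone has": 20_000}
HITS_F = {"screen": 900_000, "weather": 1_200_000}
HITS_JOINT = {
    ("screen", "of phone"): 4_500,
    ("screen", "phone has"): 1_800,
    ("weather", "of phone"): 60,
    ("weather", "phone has"): 12,
}


def aspect_score(word: str) -> float:
    """Average PMI of a candidate word over all meronymy discriminators.

    Averaging is an assumption of this sketch, chosen only for simplicity.
    """
    scores = [
        pmi(HITS_JOINT.get((word, d), 0), HITS_F[word], HITS_D[d])
        for d in HITS_D
    ]
    return sum(scores) / len(scores)


# Candidates ranked by decreasing score; frequent non-aspect nouns sink.
ranked = sorted(HITS_F, key=aspect_score, reverse=True)
```

With these invented counts, a genuine part such as "screen" scores far above an unrelated frequent noun such as "weather", which is the behavior the pruning step relies on.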

Scaffidi et al. (2007) built the Red Opal system, which makes use of a Language Model to identify references to aspects in reviews, and detect those that the target user is more interested in. The authors assumed that item aspects are mentioned more often in a review than in a multi-domain corpus. For instance, in a collection of reviews about restaurants, words such as ‘ambiance’, ‘service’, ‘food’, ‘dessert’ or ‘price’ tend to appear much more often than in document repositories of other domains. Their method computes the probability that a word t is observed \(n_t\) times in a review of length N, and compares it to the ratio of appearance in standard English, \(p_t\). If the ratio is high, then the word t is considered to be an aspect word. The opinion sentiment orientation is assigned based on the assumption that the global rating of a review correlates with the polarity of each word. Red Opal thus only considers the review ratings to estimate the user’s interest in the items, and avoids analyzing opinion words.
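The comparison between observed and background word frequencies can be sketched as follows. This is an illustrative approximation rather than the Red Opal implementation: it assumes a binomial model for the probability of observing \(n_t\) occurrences in N words, and the `min_ratio` threshold and function names are hypothetical.

```python
from math import comb


def binomial_prob(n_t: int, N: int, p_t: float) -> float:
    """Probability of exactly n_t occurrences in N words at background rate p_t."""
    return comb(N, n_t) * p_t**n_t * (1 - p_t) ** (N - n_t)


def frequency_ratio(n_t: int, N: int, p_t: float) -> float:
    """Observed relative frequency in the review divided by the background rate."""
    if p_t <= 0:
        raise ValueError("background probability p_t must be positive")
    return (n_t / N) / p_t


def is_aspect_candidate(n_t: int, N: int, p_t: float, min_ratio: float = 10.0) -> bool:
    """Flag a word whose usage is much higher than in general English.

    min_ratio is a hypothetical threshold chosen for this sketch.
    """
    return frequency_ratio(n_t, N, p_t) >= min_ratio
```

For example, a word seen 4 times in a 200-word review with a background rate of 0.0005 has a ratio of 40 and would be flagged, whereas a word seen 10 times with a background rate of 0.05 has a ratio of 1 and would not.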

Recently, Caputo et al. (2017) have presented the SABRE search engine, which, similarly to the Red Opal system, compares the word frequency distributions in a target, single-domain document collection with distributions in a general, multi-domain corpus. SABRE produces as output a set of tuples describing an input review. Such tuples contain extracted aspects together with their relevance and sentiment, along with sub-aspects related to the aspects, if any. The key point of this method is how word relevance is measured. The authors use the point-wise Kullback–Leibler divergence (KL divergence, referred to as \(\delta \)) with respect to a general corpus. Formally, given two corpora \(c_a\) and \(c_b\), and a word t, the KL divergence is calculated as:

\[ \delta _t(c_a \,\Vert \, c_b) = p(t \mid c_a) \log \frac{p(t \mid c_a)}{p(t \mid c_b)} \]

The proposed method computes the KL divergence for each of the extracted nouns on the domain and general corpus, and considers those nouns with a KL score higher than a certain threshold \(\varepsilon \) to be item aspects.
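The thresholding step can be sketched as below, assuming the point-wise KL divergence takes the usual form p(t|c_a) · log(p(t|c_a) / p(t|c_b)). The add-one smoothing, the toy noun lists, and the value of the threshold are assumptions of this sketch and are not taken from SABRE.

```python
from collections import Counter
from math import log


def pointwise_kl(term, domain_counts, general_counts, smoothing=1.0):
    """Point-wise KL divergence of `term` between a domain and a general corpus.

    Additive smoothing (an assumption of this sketch) avoids zero probabilities.
    """
    vocab = set(domain_counts) | set(general_counts)

    def prob(counts):
        total = sum(counts.values()) + smoothing * len(vocab)
        return (counts.get(term, 0) + smoothing) / total

    p_a, p_b = prob(domain_counts), prob(general_counts)
    return p_a * log(p_a / p_b)


def extract_aspects(domain_nouns, general_nouns, epsilon):
    """Keep the domain nouns whose divergence exceeds the threshold epsilon."""
    domain_counts = Counter(domain_nouns)
    general_counts = Counter(general_nouns)
    return {
        t for t in domain_counts
        if pointwise_kl(t, domain_counts, general_counts) > epsilon
    }


# Toy noun lists, invented for illustration.
domain = ["service"] * 6 + ["food"] * 5 + ["day"] * 2 + ["time"] * 2
general = ["day"] * 6 + ["time"] * 6 + ["service"] + ["food"] + ["car"] * 4
aspects = extract_aspects(domain, general, epsilon=0.1)
```

In this toy setting, "service" and "food" are over-represented in the domain corpus and pass the threshold, while generic nouns such as "day" and "time" obtain negative scores and are discarded.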

2.1.3 Syntactic relation-based extraction

Another type of approach to aspect opinion extraction focuses on analyzing the syntactic sentence structure and word relations. Qiu et al. (2011) presented the Double Propagation (DP) algorithm, which exploits syntactic relations between the words in a review to identify those that correspond to aspects. More specifically, the algorithm makes use of the relations between nouns or noun phrases, and adjectives. It utilizes dependency grammar to describe such syntactic relations (Schuster and Manning 2016), and follows a set of extraction rules. Using a lexicon, the basic idea of DP is to extract opinion words or aspects by iteratively using known and previously extracted opinion words and aspects. To illustrate the algorithm, let us consider the sentences “Canon G3 takes great pictures”, “The picture is amazing”, “You may have to get more storage to store high quality pictures and recorded movies” and “The software is amazing,” and the input positive opinion word great. DP first extracts picture as an item aspect based on its relation with great. Analyzing other relations of these aspect and opinion words, DP determines that amazing is also an opinion word, and movies is another aspect. In a second iteration, as amazing is recognized as an opinion word, software is extracted as an aspect. This propagation stops when no more aspect or opinion words are identified. The polarity associated with each aspect is assigned at the same stage as the extraction. It is based on the polarity of the known word that it is related to, considering negation and contrary words in the sentence. This method may propagate noise when it extracts terms that are not real aspects. Qiu et al. (2011) addressed this problem by means of a final pruning stage.
The DP algorithm has become the basis of several state-of-the-art methods for extracting opinions about item aspects from textual reviews, and some works have presented improvements over the originally proposed set of propagation rules. For instance, Zhang et al. (2010) introduced “part-whole” and “no” pattern rules to identify aspects. The “part-whole” pattern extracts aspects mentioned in a review as part of another product, as in “the engine of the car,” where engine is part of car. The “no” pattern handles phrases like “no noise”. Poria et al. (2014) also proposed a variation of DP by extending the set of rules and accounting for verb words as aspects.
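The propagation idea can be illustrated with a heavily simplified sketch. It assumes that the dependency relations have already been extracted and lemmatized by hand (no parser is called), and it implements only two hypothetical rules: adjective-noun modification and noun-noun conjunction. The full DP rule set, polarity assignment and pruning stage are omitted.

```python
def double_propagation(modifier_pairs, conjoined_nouns, seed_opinions):
    """Iteratively grow aspect and opinion sets from seed opinion words.

    modifier_pairs: (adjective, noun) pairs linked by a syntactic relation.
    conjoined_nouns: (noun, noun) pairs joined by a conjunction.
    Both inputs are assumed to be precomputed by a dependency parser.
    """
    opinions, aspects = set(seed_opinions), set()
    changed = True
    while changed:  # stop when an iteration adds nothing new
        changed = False
        for adj, noun in modifier_pairs:
            # A known opinion word reveals the noun it modifies as an aspect.
            if adj in opinions and noun not in aspects:
                aspects.add(noun)
                changed = True
            # A known aspect reveals the adjective describing it as an opinion word.
            if noun in aspects and adj not in opinions:
                opinions.add(adj)
                changed = True
        for n1, n2 in conjoined_nouns:
            # A noun conjoined with a known aspect is also taken as an aspect.
            for known, new in ((n1, n2), (n2, n1)):
                if known in aspects and new not in aspects:
                    aspects.add(new)
                    changed = True
    return aspects, opinions


# Hand-labeled relations for the example sentences about the camera.
modifier_pairs = [("great", "picture"), ("amazing", "picture"), ("amazing", "software")]
conjoined_nouns = [("picture", "movie")]
aspects, opinions = double_propagation(modifier_pairs, conjoined_nouns, {"great"})
```

Starting from the single seed great, the sketch recovers picture, movie and software as aspects and amazing as an additional opinion word, mirroring the walk-through in the text.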

2.1.4 Topic model-based extraction

Most of the approaches analyzed in previous subsections extract a list of words referring to aspects in reviews. In this context, several words may refer to the same aspect. For example, users may talk about the service in a restaurant by using distinct words like ‘service’, ‘staff’ and ‘attention’, which should not be considered as different aspects. Aciar et al. (2007) manually handled this issue defining an ontology that groups related words. There are, in contrast, methods that rely on Topic Models, such as LDA (Blei et al. 2003) and pLSA (Hofmann 2001), for both extracting and clustering aspect-related words automatically in a single phase.

If LDA or pLSA are applied in a straightforward way, they might not be able to capture the appropriate item aspects. In particular, they tend to build general topics that map terms into concepts the reviews talk about. For example, in the restaurants domain, topics are usually related to types of cuisine, such as Italian, Asiatic, vegetarian and vegan; in movies and books reviews, topics in general correspond to genres; and in electronics reviews, topics tend to represent different types of devices. Hence, several works have investigated particular topic models to find more fine-grained concepts in the reviews. Titov and McDonald (2008a) proposed Multi-Grain Topic Models (MG-LDA), a probabilistic approach that focuses on both global and local topics. Global topics are described by words related to the domain or general properties of the reviewed items, whereas local topics capture item aspects or features. This approach improves the quality of LDA by considering as aspects only those topics that can be explicitly rated. The same authors, in Titov and McDonald (2008b), enhanced the probabilistic model to associate the topics obtained with MG-LDA with particular item aspects. The followed method is based on the assumption that aspect ratings should be correlated with item ratings. Hence, the global rating of the review may be helpful to identify topics that correspond to aspects.

McAuley et al. (2012) presented a probabilistic model that exploits the ratings associated with the reviews to simultaneously learn words that refer to aspects, and words that are associated with particular ratings. For instance, the word ‘flavor’ may be used to discuss the taste aspect, whereas the word ‘amazing’ may indicate a 5-star rating (on a 1–5 star scale). In the paper, the authors present three (unsupervised, semi-supervised and supervised) learning methods to build the model; in all cases, requiring a ground-truth set of ratings on aspects.

More recently, in the context of recommender systems, some works have related the representation of an item in the latent factor model (Koren et al. 2009) to the latent topics in reviews. In low-rank Matrix Factorization (MF), a user \(\mathbf{u }\) and an item \(\mathbf{i }\) can be respectively associated with k-dimensional latent factors \(p_u, q_i \in \mathbb {R}^k\). The rating is then estimated as \(\hat{r}_{u,i} = p_u^T \cdot q_i\). The factors \(q_i\) and \(p_u\) can be considered as item properties and the preference of the user for these properties, respectively. Based on this representation, Wang and Blei (2011) presented the Collaborative Topic Regression (CTR) model, where MF and LDA are run in the same stage. The latent item factor \(q_i\) is set to be the topic proportions in LDA \(\theta _i\) plus an offset \(\varepsilon _i\) as \(q_i = \theta _i + \varepsilon _i\). Thereafter, McAuley and Leskovec (2013) presented HFT, a slightly modified version of CTR in which latent topics in the reviews and latent factors for the item are related by a monotonic function (order is preserved):

\[ \theta _{i,k} = \frac{\exp (\kappa \, q_{i,k})}{\sum _{k'} \exp (\kappa \, q_{i,k'})} \]

where \(\kappa \) controls the peakiness of the transformation.
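A minimal sketch of these two computations follows, assuming that the monotonic transformation is the exponential normalization \(\theta _{i,k} = \exp (\kappa q_{i,k}) / \sum _{k'} \exp (\kappa q_{i,k'})\). The function names and the example vectors are hypothetical, and no model fitting is performed.

```python
from math import exp


def predict_rating(p_u, q_i):
    """Estimated rating as the dot product of user and item latent factors."""
    return sum(p * q for p, q in zip(p_u, q_i))


def factors_to_topics(q_i, kappa):
    """Map item factors to topic proportions with a softmax scaled by kappa.

    Subtracting the maximum before exponentiating is only for numerical
    stability and does not change the result.
    """
    m = max(kappa * q for q in q_i)
    weights = [exp(kappa * q - m) for q in q_i]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical 3-dimensional item factors.
q_item = [0.2, 1.0, -0.5]
theta_soft = factors_to_topics(q_item, kappa=1.0)
theta_peaky = factors_to_topics(q_item, kappa=5.0)
```

The output always sums to one and preserves the ordering of the factors; increasing kappa concentrates more of the mass on the largest factor, which is what "peakiness" refers to.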

2.1.5 Discussion

In the previous subsections, we have surveyed several works proposed in the last decade to extract aspects and associated opinions from textual reviews. We have categorized them according to the approaches they use to extract the aspects, and the required input data. Specifically, we have analyzed vocabulary-, word frequency-, syntactic relation- and topic model-based approaches.

Except for those based on topic models, the majority of the surveyed methods do not consider that different words may refer to the same aspect. This represents the main limitation we identify in the heuristic approaches. Topic model-based techniques intrinsically solve this limitation. Instead of extracting specific words, they are able to capture and group the main topics the reviews are about. To identify which of the extracted topics represent aspects, standard LDA models are modified so that different generation distributions can focus on specific parts of the reviews. Hence, the extraction procedures lead to K topics, each of them represented by a collection of aspect terms. The main weakness of this type of approach is that the output topics might not precisely represent aspects, but a mixture of aspects and global characteristics of the items. However, as we shall show in Sect. 2.2, such topics have been shown to be very effective when exploited by recommender systems.

In the surveyed works, most of the reported experiments have been conducted on small product datasets of fewer than a hundred reviews (Hu and Liu 2004a; Popescu and Etzioni 2005; Bafna and Toshniwal 2013; Qiu et al. 2011; Poria et al. 2014), and only a few of them have focused on larger datasets (Scaffidi et al. 2007; Liu et al. 2012). Moreover, in general, methods of different types are not empirically compared. As we shall present in Sect. 3, in this paper, we evaluate the surveyed types of aspect opinion extraction approaches for the aspect-based recommendation problem, by combining representative extraction methods with several recommendation algorithms. Moreover, we evaluate the considered combinations on large datasets, ranging from a few thousand to more than a hundred thousand reviews.

2.2 Aspect-based recommender systems

In this section, we provide an exhaustive survey of the research literature on aspect-based recommender systems. In some of the analyzed papers, item aspects are referred to as features and topics. In fact, some of the discussed recommendation approaches (such as those based on topic models) consider aspects that may correspond to content-based attributes and context values. For simplicity, we always use the term aspect, regardless of the terminology and aspect type used in the cited papers. Moreover, although they are related work of interest, we omit papers presenting information filtering (Scaffidi et al. 2007), question answering (McAuley and Yang 2016), and information retrieval (Caputo et al. 2017) systems that exploit aspect opinion data.

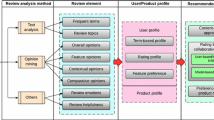

We present the surveyed articles following our own categorization, which builds upon the one proposed by Chen et al. (2015). Specifically, we distinguish between the following types of approaches: enhancing item profiles with aspect opinion information (Sect. 2.2.1), modeling latent user preferences on item aspects (Sect. 2.2.2), deriving user preference weights from aspect opinions (Sect. 2.2.3), and incorporating aspect-level user preferences into recommendation methods (Sect. 2.2.4). Next, in Sect. 2.2.5, we discuss limitations identified in the literature that have motivated our work.

2.2.1 Enhancing item profiles with aspect opinion information

A first type of approach to exploit item aspect opinion information for recommendation purposes focuses on building enhanced representations of items.

Aciar et al. (2007) presented a seminal work in this line. They proposed an ontological item representation with two components: an item quality component containing the user’s evaluation of item aspects, and an opinion quality component including several variables that measure the opinion provider’s expertise with the item. The authors use text mining tools to first classify the sentences of each item review as good, bad and quality; the latter referring to the opinion quality component. Afterwards, the aspects mentioned in each of the classified sentences are extracted. Item profiles are then built by applying a number of computations to the extracted data. In the paper, the authors propose a simple content-based recommendation model that ranks items according to both the item profiles and the user’s current interest in the aspects, explicitly stated or estimated from the aspect frequencies in the user’s reviews.

Yates et al. (2008) proposed an item profile that combines aspect opinions extracted from reviews and item technical specifications (e.g., a camera lens and resolution). This profile is called the item value model V(i), and indicates the intrinsic value of the item i for an average user. The item price, considered as an indicator of extrinsic value, is treated as the dependent variable in a training phase where an SVM model is built on new items to predict their intrinsic values. Assuming the existence of a personalized value model V(u) for user u in the same aspect space as V(i), the difference \(\frac{V(u) - V(i)}{V(i)}\), change-in-value, reflects i’s suitability for u. The user is then recommended the items having the highest change-in-value scores.

Ko et al. (2011) proposed to represent an item as a vector composed of key aspects—relevant terms derived from user reviews and item descriptions—with importance and sentiment scores. The item vectors are built for each user separately from the ratings and reviews of similar users. Then, for each user, a binary (recommendable and non-recommendable items) classification model is learned from the derived vectors, and used for item recommendation.

Finally, Dong et al. (2013) presented an item profile composed of aspects, each of them with sentiment and popularity scores. They applied a shallow natural language processing technique to extract single nouns and bigram phrases as item aspects, and an opinion pattern mining method to identify the opinions given to the aspects. The authors proposed a case-based recommendation method that matches the user’s profile—given as an input example item—with items whose profiles are highly similar and produce greater sentiment improvements.

2.2.2 Modeling latent user preferences on item aspects

A major approach to aspect-based recommendation consists of analyzing a user’s reviews to infer latent preferences (ratings) on item aspects, and exploiting such aspect-level user preferences through collaborative filtering techniques.

The work done by Jakob et al. (2009) represents one of the first attempts to extract opinions about aspects from user reviews, and incorporate them into the Matrix Factorization (MF) model (Koren et al. 2009). The authors presented a model that captures several types of relations between users, items and item aspects, namely user ratings, item aspects, user opinions on aspects, and rating- and aspect-based user similarities. These relations are treated as feature vectors for running the Multi-Relational Matrix Factorization (MRMF) algorithm proposed by Lippert et al. (2008). The aspects were extracted using LDA and the Subjective Lexicon (Wilson et al. 2005). Wang et al. (2010) proposed LRR, a probabilistic regression model to infer latent ratings on aspects. The model assumes that a rating on an item is generated through a weighted combination of latent ratings over all the item aspects; where the weights represent the relative emphasis the user has placed on the aspects, and an aspect latent rating depends on the review fragment that discusses such aspect. Using their model, the authors proposed a CF method that personalizes a ranking of items by using only the reviews written by the k reviewers whose aspect-level rating behavior is most similar to the target user’s. A two-component approach is also presented by Wang et al. (2012) and Nie et al. (2014). In this case, the extraction of aspect opinions is performed through the Double Propagation (Qiu et al. 2011) and LDA algorithms, whereas recommendations are generated via a tensor factorization method that assembles the overall rating matrix \(\mathbf {R}\) and K aspect rating matrices \(\mathbf {R}^1, \mathbf {R}^2, \ldots , \mathbf {R}^K\) into a 3rd-order tensor \(\mathcal {R}\), with which CF is performed. Ganu et al. (2013) proposed a clustering-oriented CF method based on aspect-level user preferences. 
The method first builds an SVM classifier to categorize review sentences into a fixed number of aspects (called topics in the paper) and sentiment categories. Based on the classification of the sentences of a user’s reviews, the method builds the user’s profile, composed of weighted (aspect, sentiment) tuples. Using the generated user profiles, a soft clustering algorithm is applied to group users with similar aspect-level preferences. The obtained user clusters are finally incorporated into the CF heuristic.

Instead of addressing the aspect-based user preference extraction and recommendation tasks separately, McAuley and Leskovec (2013) presented HFT, a matrix factorization model that incorporates hidden topics as a proxy for item aspects. The model aligns latent factors in rating data with latent factors in review texts. In this context, an identified topic may not correspond to a particular aspect or may be associated with several aspects, and thus a user may express different opinions for various aspects in the same topic. Nonetheless, the authors show that HFT predicts ratings more accurately than other models that consider either of such data sources in isolation, especially for cold-start items, whose factors cannot be fit from only a few ratings, but can be fit from a few reviews. Wu et al. (2014) presented JMARS, a probabilistic approach based on CF and topic modeling. Similarly to Wang et al. (2010), the JMARS model assumes that review ratings arise from the process of combining ratings associated with aspects of the evaluated items. In contrast, JMARS jointly models user and item aspect rating distributions. Along the same line of work, Wu et al. (2015) presented FLAME, an extension of Probabilistic Matrix Factorization (PMF) (Salakhutdinov and Mnih 2007) to model the user-specific aspect ratings. Finally, Chen et al. (2016) presented LRPPM, a tensor-matrix factorization algorithm that models interactions among users, items and features simultaneously, to learn user preferences from ratings along with textual reviews. Differently to previous work, the proposed method introduces a ranking-based (i.e., learning to rank), instead of a rating-based, optimization objective, for better understanding user preferences at aspect level.

2.2.3 Deriving user preference weights from aspect opinions

Another type of approach to aspect-based recommendation uses aspect opinion information to establish the weights of preferences in user profiles, rather than using it to infer such preferences. In these approaches, a user \(u_m\)’s profile is represented as a vector \(\mathbf {u}_m = \{w_{m,1}, w_{m,2}, \ldots , w_{m,K}\}\), where \(w_{m,k}\) denotes the relative relevance (weight) of aspect \(a_{k}\) for \(u_m\), and K is the total number of aspects.

In particular, Liu et al. (2013) proposed to determine the weight \(w_{m,k}\) by means of two factors, namely how much the user cares about the aspect, and how much quality the user requires for such aspect; formally, \(w_{m,k} = concern(u_m, a_k) \times requirement(u_m, a_k)\). The value of \(concern(u_m, a_k)\) increases when \(u_m\) comments on \(a_k\) very frequently in his/her reviews, and other users comment on it less often. The value of \(requirement(u_m, a_k)\), on the other hand, increases when \(u_m\) frequently rates \(a_k\) lower than other users across different items. In the paper, the authors extract aspect opinions through a technique that is adapted to the characteristics of the Chinese language. They also propose a recommendation method that estimates the relevance score \( relevance(\mathbf {u}_m, \mathbf {i}_n) = {\sum _{k=1}^{K} w_{m,k} \times v_{n,k}} / {\sum _{k=1}^{K} w_{m,k}} \), where \(v_{n,k}\) is the average of reviewers’ opinions about aspect \(a_k\) of item \(i_n\). The method recommends to \(u_m\) the top-N items with the highest relevance scores.
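The relevance score above is a weighted average of the item's aspect opinions, which the following minimal Python sketch illustrates. The function names, the zero-weight fallback and the hotel data are hypothetical and chosen only for illustration; they are not taken from Liu et al. (2013).

```python
from typing import Dict, List, Sequence


def relevance(user_weights: Sequence[float], item_opinions: Sequence[float]) -> float:
    """Weighted average of the item aspect opinions v_{n,k}, weighted by w_{m,k}."""
    if len(user_weights) != len(item_opinions):
        raise ValueError("both vectors must cover the same K aspects")
    total_weight = sum(user_weights)
    if total_weight == 0:
        return 0.0  # assumption: a user without aspect weights yields zero relevance
    return sum(w * v for w, v in zip(user_weights, item_opinions)) / total_weight


def top_n(user_weights: Sequence[float], items: Dict[str, Sequence[float]], n: int) -> List[str]:
    """Return the n item identifiers with the highest relevance scores."""
    ranked = sorted(items, key=lambda name: relevance(user_weights, items[name]), reverse=True)
    return ranked[:n]


# Hypothetical data over three aspects (price, location, cleanliness).
w_user = [0.6, 0.1, 0.3]
hotels = {
    "hotel_a": [0.9, 0.2, 0.5],
    "hotel_b": [0.1, 0.9, 0.9],
}
print(top_n(w_user, hotels, 1))
```

Because the user weights are normalized by their sum, only their relative magnitudes affect the ranking.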

Differently to Liu et al. (2013), Chen and Wang (2013) focused on the cold-start situation where a user has not written enough reviews from which to determine his/her aspect preference weights. The authors proposed a method that first derives cluster-level preferences, which are common to groups of users. Then, these cluster-level preferences are used to refine the users’ personal preferences. The refined preferences can in turn be used to adjust the cluster-level preferences, continuing the process until both types of preferences do not change significantly. This method is executed on an initial set of (aspect, opinion) tuples extracted from the user reviews. In the aspect extraction stage, the authors utilized WordNet (Miller 1995) and SentiWordNet (Esuli et al. 2007) to group aspect synonyms and determine aspect opinion polarities, respectively. In the recommendation stage, all the users are first clustered according to their cluster-level preferences, and then heuristic user-based CF is applied within the cluster to which the target user belongs.

To derive the weights of a user’s preferences, it could be valuable to consider his/her current contextual conditions (Adomavicius and Tuzhilin 2015). For instance, when searching for hotels, the aspects atmosphere and location may be of interest if the user wants to spend a weekend with his/her partner, whereas cleanliness and price may be the most important aspects if the user is planning one-week holidays with his/her family. In this example, period of time and companion would be the context variables that determine the current relevance (weight) of the above aspects for the user. Some researchers have investigated this issue. Levi et al. (2012) proposed to compute the preference of user \(u_m\) for aspect \(a_k\) within contexts \(C = \{c_1, c_2, \ldots , c_L\}\) as \(w_{m,k} = importance(u_m, a_k) \cdot {\prod _{l=1}^L freq_{k,l}}\), where \(importance(u_m, a_k)\) is the current importance of aspect \(a_k\) explicitly stated by \(u_m\), and \(freq_{k,l}\) is the frequency with which aspect \(a_k\) occurs in reviews with context \(c_l\). With this definition of aspect-level preference, the authors estimate the relevance of each review d for user \(u_m\) as \( relevance(u_m, d) = {\sum _{s \in S(d)}} {\sum _{a_k \in A(s)} w_{m,k} \cdot so(a_k, s)} \), where S(d) represents the sentences of review d, A(s) is the set of aspects commented in the sentence s, and so(a, s) returns the sentiment orientation (polarity) of the opinion on aspect a given in sentence s. Then, the authors present a content-based recommendation method that suggests the items i with highest review relevance scores. Differently to Levi et al. (2012), Chen and Chen (2014) aimed to directly extract the relation between preference weights and context in user reviews, by considering the co-occurrences of aspect opinions and context values. They distinguished between context-independent and context-dependent user preferences. 
The former are identified by building a regression model for overall ratings and aspect opinions of reviews, and applying a statistical t-test to select the model weights passing a significance level; the latter are extracted through a contextual review analysis based on keyword matching, and a rule-based reasoning on contextual aspect opinion tuples. The authors finally incorporated the derived preference weights into the recommendation approach proposed by Levi et al. (2012).
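As a minimal sketch of the context-weighted preference and review relevance attributed above to Levi et al. (2012), the following Python code evaluates both formulas on toy data. The data structures (a review as a list of sentence dictionaries mapping aspects to sentiment orientations) and all numeric values are assumptions made for illustration.

```python
from math import prod
from typing import Dict, List, Sequence


def context_weight(importance: float, context_freqs: Sequence[float]) -> float:
    """w_{m,k} = importance(u_m, a_k) * product over contexts of freq_{k,l}."""
    return importance * prod(context_freqs)


def review_relevance(weights: Dict[str, float], review: List[Dict[str, float]]) -> float:
    """Sum w_{m,k} * so(a_k, s) over all sentences s and the aspects they mention."""
    return sum(
        weights.get(aspect, 0.0) * orientation
        for sentence in review
        for aspect, orientation in sentence.items()
    )


# Hypothetical user: stated importances and aspect frequencies in two contexts.
weights = {
    "location": context_weight(0.8, [0.5, 0.4]),
    "price": context_weight(0.5, [0.2, 0.5]),
}
# Hypothetical review with two sentences; values are sentiment orientations.
review = [{"location": 1.0, "price": -1.0}, {"location": 1.0}]
print(round(review_relevance(weights, review), 4))
```

Aspects for which the user has no weight are ignored here (weight 0), which is one possible design choice among several.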

2.2.4 Incorporating aspect-level user preferences into recommendation methods

A last type of approach is represented by recommendation methods that explicitly incorporate aspect-level user preferences into their heuristic functions or predictive models for item relevance estimation.

Using the Stanford CoreNLP toolkit (Socher et al. 2013), Wang et al. (2013) presented an approach that first analyzes reviews to derive user preferences for aspect values in the form of (aspect, sentiment orientation, aspect value) tuples, such as (weight, positive, 200 g) to denote that the user expressed a positive opinion about a camera weight whose value is 200 g. These tuples are then linked to item specifications \(\mathbf {i}\) using the algorithm presented in Chen and Wang (2013), and compared among users in a CF fashion to derive unknown aspect-level user preferences. After estimating the target user u’s preferences \(\mathbf {u}(k)\) and the candidate item i’s weighted attributes \(\mathbf {i}(k)\) on aspects \(a_k\), the method estimates the relevance of i as \(\frac{1}{K} {\sum _{k=1}^K \mathbf {u}(k) \cdot \mathbf {i}(k)}\). The top-N items with highest relevance scores are finally recommended to u.

Recently, Bauman et al. (2017) presented SULM, a sentiment utility logistic model that simultaneously fits the opinions extracted from reviews and the ratings provided by the users. SULM assumes that a user u’s overall level of satisfaction with consuming item i is measured by a utility value \(V_{u,i} \in \mathbb {R}\). This overall utility is estimated as a linear combination of the individual (inferred) sentiment utility values \(\hat{V}_{u,i}^k(\theta _s)\) for all the aspects in a review, \(\hat{V}_{u,i} = {\sum _k \hat{V}_{u,i}^k(\theta _s) \cdot (w^k + w_u^k + w_i^k) } \), where \(w^k\) is a general coefficient expressing the relative importance of aspect \(a_k\), \(w_u^k\) is a coefficient that represents u’s individual importance of aspect \(a_k\), and \(w_i^k\) is a coefficient that determines the importance of aspect \(a_k\) for item i. Denoting these coefficients by \(\theta _r = (W_A, W_U, W_I)\), and the set of all parameters by \(\theta = (\theta _r, \theta _s)\), the model estimates \(\theta \) such that a logistic transformation g of the overall utility \(\hat{V}_{u,i}(\theta )\) fits binary ratings \(r_{u,i} \in \{0,1\}\) as \(\hat{r}_{u,i}(\theta ) = g(\hat{V}_{u,i}(\theta )) \). The model is built by searching for the \(\theta \) values that maximize the log-likelihood function \( l_r(R|\theta ) = {\sum _{u,i} r_{u,i} \cdot \log (\hat{r}_{u,i}(\theta )) + (1 - r_{u,i}) \cdot \log (1 - \hat{r}_{u,i}(\theta ))} \). In the paper, the authors make use of the Double Propagation algorithm (Qiu et al. 2011) to extract item aspect opinions from the user reviews.
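To make the rating part of the model concrete, the sketch below evaluates the overall utility and the log-likelihood for fixed parameter values. It assumes that g is the standard sigmoid function and takes the aspect sentiment utilities as given inputs; it does not implement the parameter estimation of SULM, and all names and numbers are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(x: float) -> float:
    """Standard logistic function, assumed here for the transformation g."""
    return 1.0 / (1.0 + math.exp(-x))


def overall_utility(
    aspect_utils: Sequence[float],
    w_general: Sequence[float],
    w_user: Sequence[float],
    w_item: Sequence[float],
) -> float:
    """V_hat_{u,i} = sum_k V_hat^k_{u,i} * (w^k + w_u^k + w_i^k)."""
    return sum(
        v * (wg + wu + wi)
        for v, wg, wu, wi in zip(aspect_utils, w_general, w_user, w_item)
    )


def log_likelihood(observations: List[Tuple[int, float]]) -> float:
    """Sum of r*log(r_hat) + (1-r)*log(1-r_hat) over (binary rating, utility) pairs."""
    total = 0.0
    for rating, utility in observations:
        r_hat = sigmoid(utility)
        total += rating * math.log(r_hat) + (1 - rating) * math.log(1.0 - r_hat)
    return total


# Hypothetical review with two aspects whose sentiment utilities cancel out.
v = overall_utility([1.0, -1.0], [0.5, 0.5], [0.2, 0.2], [0.3, 0.3])
print(v, log_likelihood([(1, v), (0, v)]))
```

A fitting procedure would search for the coefficients that maximize `log_likelihood` over all observed (user, item) pairs.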

Finally, Musto et al. (2017) presented multi-criteria user- and item-based collaborative filtering heuristics that incorporate aspect opinion information. For the user-based case (the item-based case is analogous), the authors propose an aspect-based user distance calculated as \( dist(u,v) = \frac{1}{|I(u,v)|} {\sum _{i \in I(u,v)}} \sqrt{{\sum _{a \in A(u,i) \cap A(v,i)} |r_a(u,i) - r_a(v,i)|^2 }} \),

where I(u, v) is the set of items rated by both users u and v, A(u, i) is the set of aspects commented in user u’s review about item i, and \(r_a(u,i)\) is the sentiment rating inferred for aspect a in that review. The similarity between users is then calculated as the complement of the distance dist(u, v), and ratings are computed through the traditional CF heuristic, \( \hat{r}(u,i) = {\sum _{v \in N(u)} sim(u,v) \cdot r(v,i)} = {\sum _{v \in N(u)} (1 - dist(u,v)) \cdot r(v,i)} \),

where N(u) is u’s neighborhood with his/her most similar users. In the paper, the aspect extraction is performed with the SABRE engine (Caputo et al. 2017).
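The distance and prediction heuristic can be sketched in Python as follows. The sketch assumes that the factor 1/|I(u,v)| averages the per-item Euclidean distances over the co-rated items, and that aspect ratings are scaled so that 1 - dist(u,v) is a meaningful similarity. Data structures and values are hypothetical.

```python
import math
from typing import Dict, Optional

AspectRatings = Dict[str, Dict[str, float]]  # item -> {aspect -> sentiment rating}


def aspect_distance(ratings_u: AspectRatings, ratings_v: AspectRatings) -> Optional[float]:
    """Average, over co-rated items, of the Euclidean distance on shared aspects."""
    common_items = ratings_u.keys() & ratings_v.keys()
    if not common_items:
        return None  # the distance is undefined without co-rated items
    total = 0.0
    for item in common_items:
        shared = ratings_u[item].keys() & ratings_v[item].keys()
        total += math.sqrt(
            sum((ratings_u[item][a] - ratings_v[item][a]) ** 2 for a in shared)
        )
    return total / len(common_items)


def predict(distances: Dict[str, float], neighbour_ratings: Dict[str, float]) -> float:
    """Unnormalized CF score: sum over neighbours v of (1 - dist(u, v)) * r(v, i)."""
    return sum((1.0 - distances[v]) * r for v, r in neighbour_ratings.items())


# Hypothetical aspect-level sentiment ratings in [0, 1] for one co-rated item.
u = {"i1": {"food": 1.0, "staff": 0.5}}
v = {"i1": {"food": 0.4, "staff": 0.5}, "i2": {"food": 0.9}}
d = aspect_distance(u, v)
print(round(d, 4), round(predict({"v": d}, {"v": 5.0}), 4))
```

The prediction follows the formula as written in the text; practical implementations often divide by the sum of the similarities to keep scores on the rating scale.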

2.2.5 Discussion

In the previous subsections, we have surveyed more than 20 research papers on aspect-based recommender systems published in the last decade, categorizing them according to how they model and weight user preferences at aspect level, and how they incorporate such preferences into the recommendation generation process. For most cases, we have seen that the aspect extraction and aspect-based recommendation tasks are addressed separately. In general, however, in each paper, only one aspect extraction method is used, without assessing existing alternatives. To the best of our knowledge, only Musto et al. (2017) tested the SABRE engine (Caputo et al. 2017) with two sentiment analysis strategies: a deep learning technique provided by the Stanford CoreNLP toolkit and a lexicon-based algorithm evaluated in Musto et al. (2014), finding no significant performance differences between them. As explained in Sect. 3, in this paper, we shall evaluate several aspect extraction methods, each of them belonging to one of the approach types presented in Sect. 2.1.

Moreover, in most cases, the proposed recommendation approaches were empirically compared with standard baselines that do not exploit aspect opinions, but overall item ratings; in this context, a few exceptions exist, such as Wu et al. (2014) and Bauman et al. (2017), where JMARS and SULM were evaluated against HFT (McAuley and Leskovec 2013). As explained in Sect. 4, in this paper, in addition to standard rating-based baselines and HFT, we shall evaluate a number of content-based and collaborative filtering methods that exploit aspect opinion data.

We finally note that in many studies, the reported experiments were conducted on small datasets, for one or a few domains, and using rating prediction metrics (MAE, MSE, RMSE), which are in relative disuse within the recommender systems community (Bellogín et al. 2011). In this paper, as presented in Sects. 5 and 6, we shall run our experiments on two review-oriented datasets from Yelp and Amazon, as in Levi et al. (2012), McAuley and Leskovec (2013), Socher et al. (2013), Wang et al. (2013), Chen et al. (2016), Musto et al. (2017) and Bauman et al. (2017), covering 8 domains: hotels, beauty and spas, restaurants, movies, digital music, CDs and vinyls, mobile phones, and video games. Instead of rating prediction metrics, as done e.g. by Levi et al. (2012), Liu et al. (2013), Chen et al. (2016) and Bauman et al. (2017), we will compute ranking-based metrics, focusing on the top-N recommendation task. Differently to previous work, we will also analyze other metrics measuring recommendation coverage, diversity and novelty.

3 Developed aspect opinion extraction methods

In this section, we present the evaluated methods for aspect opinion extraction. We have selected a representative method for each type of approach described in Sect. 2.1, namely vocabulary-, word frequency-, syntactic relation-, and topic model-based approaches. As we shall show in our experimental study (Sects. 5 and 6), when applicable, we will integrate each of the developed aspect opinion extraction methods with several content-based and collaborative filtering techniques, described in Sect. 4.

3.1 Vocabulary-based method

Our first aspect opinion extraction method makes use of a vocabulary for item aspects on a specific domain, and analyzes syntactic relations between the words of each sentence to extract opinions about aspects. The vocabulary contains a predefined list of item aspects, and a fixed set of nouns referring to each aspect, e.g., ‘staff’, ‘employees’, ‘waiters’ and ‘waitresses’ for the staff aspect of the restaurants domain. The method searches for the vocabulary nouns cited in the text of an input review, and for each of the found nouns, it generates an aspect annotation. Next, it builds the annotation in the form of a \((u, i, a, so_{u,i,a})\) tuple, where \(so_{u,i,a}\) is the sentiment orientation of the opinion given by user u to aspect a of item i—usually represented by a numeric value that is lower than, equal to, or greater than 0 when the opinion is negative, neutral or positive, respectively. In this context, for a given dictionary, the followed method differs from others in the way the sentiment orientation so is determined. From now on, we will refer to our method as voc. All the resources created for and generated by this method, and presented next, are publicly available.\(^{3}\)

3.1.1 Aspect vocabulary building

A vocabulary used by the voc method is composed of lists of nouns that refer to item aspects on a particular domain. We manually selected the aspects, including those that have been considered in research papers (Sect. 2), and those that correspond to item features, attributes and characteristics reported or analyzed in specialized forums (e.g., e-commerce sites, product review web portals), such as AllMusic\(^{4}\) for music and GameSpot\(^{5}\) for video games, among others. The selection of some of these aspects has to be done carefully in certain domains. For instance, in the restaurant domain, we observed that there were reviews with opinions about dishes focused on particular principal ingredients, such as ‘rice’ and ‘potatoes’. We assumed that people may find valuable reviews about dishes and restaurants that received positive opinions on those ingredients. We also decided to include them since topic model-based methods identify such aspects in user reviews.

Next, for each aspect, we created an initial list of ‘seed’ words, corresponding to the WordNet\(^{6}\) (Miller 1995) synonyms of the aspect names, e.g., ‘atmosphere’, ‘ambiance’ and ‘ambience’ for the ‘atmosphere’ aspect. We then extended each list with synonyms of the obtained seeds, since the name chosen for an aspect in the vocabulary may not have all the valid synonyms in WordNet. For a particular word, we only considered the synonyms of the WordNet synsets (i.e., word meanings) whose definitions contained a certain reference word of the target domain, e.g., ‘music’ and ‘movies’. Thus, we limit the number of obtained synonyms, but avoid ambiguities. In the list, we also included plural forms of the seed nouns, and morphological variations of seed compound nouns, e.g., ‘checkin’, ‘check-in’ and ‘check in’ in the hotels domain.

Finally, we automatically searched for all the obtained aspect nouns in a large collection of text reviews (about items in the target domain), scoring each noun with the number of reviews in which it occurred. Merging singular, plural and compound forms of the found nouns, we sorted them by decreasing scores. We then filtered out those nouns with a score lower than a certain threshold, established for each aspect and domain by manual inspection.
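The scoring and filtering step can be sketched as follows. This is an illustrative Python approximation under simple assumptions (lowercased whole-word matching with regular expressions, one global threshold); the function names and the toy reviews are hypothetical and do not reproduce the actual resources.

```python
import re
from collections import Counter
from typing import Dict, Iterable, List, Set


def score_nouns(reviews: Iterable[str], noun_forms: Dict[str, Set[str]]) -> Counter:
    """Count, per canonical noun, the reviews containing any of its surface forms."""
    scores: Counter = Counter()
    for text in reviews:
        lowered = text.lower()
        for noun, forms in noun_forms.items():
            # Singular, plural and compound variants are merged into one score.
            if any(re.search(r"\b" + re.escape(f) + r"\b", lowered) for f in forms):
                scores[noun] += 1
    return scores


def filter_nouns(scores: Counter, threshold: int) -> List[str]:
    """Keep nouns whose review count reaches the threshold, by decreasing score."""
    return [noun for noun, score in scores.most_common() if score >= threshold]


# Hypothetical hotel reviews and candidate aspect nouns with their variants.
reviews = [
    "The check-in was slow.",
    "Quick checkin, friendly staff.",
    "Staff were rude at check in.",
]
noun_forms = {
    "check-in": {"checkin", "check-in", "check in"},
    "staff": {"staff"},
    "pool": {"pool", "pools"},
}
print(filter_nouns(score_nouns(reviews, noun_forms), threshold=2))
```

In the procedure described above the threshold is set per aspect and domain by manual inspection, so a single global value is a simplification.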

Table 1 shows the 8 generated aspect lists to be exploited by the voc method in our experiments. The table shows the aspects considered for each domain, and the number of aspect nouns compiled in each vocabulary. On average, a vocabulary has 29.7 aspects and 296.4 nouns, i.e., 10 nouns per aspect approximately. After a careful inspection of the used reviews, we claim that no or only few additional relevant aspects or aspect words can be found in our datasets. Thus, we believe that experiments and results reported in this paper are correct in that respect.

3.1.2 Aspect opinion extraction

To extract opinions about item aspects from user reviews, the voc method first identifies in a review occurrences of any noun stored in the aspect vocabulary of the target domain. If an occurrence is found, the method analyzes the sentence in which the noun appears, in order to obtain a potential opinion about the corresponding aspect.

For such purpose, similarly to previous work (e.g., Wang et al. 2013; Caputo et al. 2017), our method makes use of the Stanford CoreNLP toolkit (Socher et al. 2013) for natural language processing; specifically, its Part-of-Speech (POS) tagger (Toutanova et al. 2003) and syntactic dependency parser (Chen et al. 2014). On a given sentence, the POS tagger returns the Penn Treebank\(^{7}\) POS tag of each word, e.g., NN, NNS, NNP and NNPS for singular/plural common/proper nouns, and JJ, JJR and JJS for positive/comparative/superlative adjectives. The syntactic dependency parser, on the other hand, returns binary grammatical dependencies in the sentence as a list of (gov, rel, dep) triples, representing the relations rel that hold between governors gov and dependents dep. The parser’s current representation contains approximately 50 grammatical relations. Figure 1 shows the POS tags and syntactic relations returned for the sentence “The hotel staff and owner were not very friendly”.

The syntactic dependencies shown in the figure are given below as a list of triples. For instance, (friendly-9, nsubj, staff-3) means that the noun staff is the subject (nsubj) of the noun clause with the adjective friendly.

- (ROOT-0, root, friendly-9)
- (staff-3, det, The-1)
- (staff-3, compound, hotel-2)
- (friendly-9, nsubj, staff-3)
- (staff-3, cc, and-4)
- (staff-3, conj:and, owner-5)
- (friendly-9, nsubj, owner-5)
- (friendly-9, cop, were-6)
- (friendly-9, neg, not-7)
- (friendly-9, advmod, very-8)

For a given sentence, our method analyzes the list of syntactic dependencies to generate preliminary annotations in the form of (noun, adjective, modifier, isAffirmative) tuples, where noun and adjective are linked by a certain syntactic relation (nsubj in general); modifier, if it exists, is an adverb (e.g., ‘little’, ‘enough’, ‘quite’, ‘very’, ‘absolutely’) that may alter the polarity intensity of the adjective, to which it is linked through the advmod relation; and isAffirmative is a Boolean variable that is ‘true’ if the polarity of the adjective does not have to be inverted, i.e., there is no neg relation or ‘but’ preposition complementing the adjective, and the sentence is not negative.\(^{8}\) In the previous example, the voc method would generate the following two tuples:

- (staff, friendly, very, false)
- (owner, friendly, very, false)

where ‘staff’ and ‘owner’ are noun siblings linked by the conj:and relation, and are described as ‘very friendly’, an adjective that, in this case, is not in an affirmative form since it is negated by the ‘not’ adverb. In Table 2 we show some examples of recognized sentence structures and generated opinion annotations, including affirmative versus negative sentences, single versus multiple nouns, single versus multiple adjectives, and adjective modifiers.
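The following Python sketch shows how such tuples could be derived from the dependency triples of the example sentence. It is a deliberately simplified illustration that handles only the nsubj, advmod and neg relations and takes the parser output as a hard-coded list; it performs no POS checks and is not the full algorithm used by the voc method.

```python
from typing import List, Optional, Tuple

Triple = Tuple[str, str, str]  # (governor, relation, dependent), tokens as "word-index"
Annotation = Tuple[str, str, Optional[str], bool]


def strip_index(token: str) -> str:
    """Remove the positional suffix, e.g. 'friendly-9' -> 'friendly'."""
    return token.rsplit("-", 1)[0]


def extract_annotations(triples: List[Triple]) -> List[Annotation]:
    """Build (noun, adjective, modifier, isAffirmative) tuples from nsubj relations."""
    modifiers = {gov: strip_index(dep) for gov, rel, dep in triples if rel == "advmod"}
    negated = {gov for gov, rel, _ in triples if rel == "neg"}
    return [
        (strip_index(dep), strip_index(gov), modifiers.get(gov), gov not in negated)
        for gov, rel, dep in triples
        if rel == "nsubj"  # assumption: the governor of nsubj is the adjective
    ]


# Dependencies of "The hotel staff and owner were not very friendly".
triples = [
    ("ROOT-0", "root", "friendly-9"),
    ("staff-3", "det", "The-1"),
    ("staff-3", "compound", "hotel-2"),
    ("friendly-9", "nsubj", "staff-3"),
    ("staff-3", "cc", "and-4"),
    ("staff-3", "conj:and", "owner-5"),
    ("friendly-9", "nsubj", "owner-5"),
    ("friendly-9", "cop", "were-6"),
    ("friendly-9", "neg", "not-7"),
    ("friendly-9", "advmod", "very-8"),
]
print(extract_annotations(triples))
# [('staff', 'friendly', 'very', False), ('owner', 'friendly', 'very', False)]
```

In this example the parser already propagates the nsubj relation to both conjoined nouns, so no explicit sibling handling is needed.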

To generate the above annotations, A, we propose Algorithm 1, which processes certain syntactic patterns identified in a sentence S that relate nouns\(^{9}\) and adjectives. Specifically, it analyzes the graph of dependencies D extracted by the CoreNLP tool (line 3), considering the following relations: nsubj and nsubjpass, which correspond to active/passive subjects in noun phrases, e.g., (friendly, nsubj, staff) in “The staff is friendly” (lines 6–25); amod and advmod, which are adjectival and adverbial phrases complementing a noun phrase, e.g., (staff, amod, friendly) in “The hotel has friendly staff” (lines 26–35); and xcomp, which represents predicative or clausal complements of a verb or adjective without its own subject, e.g., (consider, xcomp, friendly) in “I consider the staff friendly” (lines 36–45). The algorithm analyzes other relations, such as conj and xcomp between pairs of nouns and pairs of adjectives/adverbs to extract noun siblings (function getNounSiblings called in lines 7, 28 and 38) and adjective siblings (function getAdjectiveSiblings called in lines 9, 17, 29 and 39) respectively, acomp and advmod to extract adjective modifiers (function getAdjectiveModifiers called in lines 10, 18, 30 and 40), and neg to extract negations of adjectives (function isAffirmative called in lines 11, 19, 31 and 41). Finally, the algorithm addresses the negation of the sentence by jointly considering the root and neg relations (lines 48–50), and removes those annotations whose nouns do not belong to the input, domain-dependent aspect vocabulary V (line 51).

As explained before, the proposed algorithm analyzes a sentence if it contains a noun that corresponds to an item aspect, i.e., a noun in the input vocabulary V. This does not allow extracting opinions about an aspect cited in a sentence through a personal pronoun (it, they), which refers to the aspect noun appearing in a previous sentence. To address this issue, we may use a coreference resolution technique. We tested the CoreNLP tool for such purpose, and decided to discard it in our experiments because the number of coreferences associated to aspects was very small, and the execution time increased significantly.

3.1.3 Opinion polarity identification

For each (noun, adjective, modifier, isAffirmative) annotation extracted by Algorithm 1, the voc method establishes the sentiment orientation of the opinion associated with the annotation, generating a final (aspect, sentiment_orientation) tuple, where aspect is the label of the aspect (in V) referred to by noun, and sentiment_orientation is a real number that is greater than, equal to, or lower than 0 if the opinion is positive, neutral or negative, respectively. In the following, we explain how such score is computed.

First, we set the adjective polarity \(p_{adj} = polarity(\texttt {adjective}) \in \{-\,1, 0, +\,1\}\). We attempt to get such value from the well-known generic, domain-independent lexicon created by Hu and Liu (2004b). If the adjective is not found there, we attempt to obtain it from our own domain-dependent, aspect-level lexicons, which we make publicly available\(^{3}\). We built the lexicon of a target domain by extending the generic lexicon, computing \(PMI(a_g, a_d)\) values (see Sect. 2.1.2) between adjectives \(a_g\) and \(a_d\) that co-occur in aspect opinions of a review collection on the domain, where \(a_g\) is an adjective of the generic lexicon, and \(a_d\) is an adjective whose polarity is unknown. For those pairs that have PMI values greater than a certain threshold (chosen by manual inspection), the polarity of \(a_g\) determines the polarity of \(a_d\). Thus, for example, if “expensive” and “small” appear frequently together when describing the size of rooms in hotel reviews, they would have a high PMI value, and, since the polarity of “expensive” is negative, we set the polarity of “small” to negative (for the hotel room size aspect).
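The lexicon extension step can be illustrated with the Python sketch below. It assumes the standard definition of pointwise mutual information, \(PMI(x, y) = \log _2 \frac{P(x, y)}{P(x) P(y)}\), estimated from co-occurrence counts, and a simple rule that assigns to each unknown adjective the polarity of its highest-PMI known partner. The function names, the threshold and the toy data are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations
from typing import Dict, List, Set, Tuple


def pmi_scores(opinion_sets: List[Set[str]]) -> Dict[Tuple[str, str], float]:
    """PMI of adjective pairs co-occurring in the opinions about one aspect."""
    n = len(opinion_sets)
    single: Counter = Counter()
    pair: Counter = Counter()
    for adjectives in opinion_sets:
        single.update(adjectives)
        pair.update(combinations(sorted(adjectives), 2))
    return {
        (a, b): math.log2((count / n) / ((single[a] / n) * (single[b] / n)))
        for (a, b), count in pair.items()
    }


def extend_lexicon(
    generic: Dict[str, int], scores: Dict[Tuple[str, str], float], threshold: float
) -> Dict[str, int]:
    """Propagate polarities from known to unknown adjectives above the PMI threshold."""
    learned: Dict[str, int] = {}
    for (a, b), score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
        if score <= threshold:
            break  # remaining pairs have lower PMI values
        for known, unknown in ((a, b), (b, a)):
            if known in generic and unknown not in generic and unknown not in learned:
                learned[unknown] = generic[known]
    return learned


# Hypothetical adjective sets, one per review, for the hotel room size aspect.
opinion_sets = [{"expensive", "small"}, {"expensive", "small"}, {"spacious", "clean"}, {"small"}]
generic = {"expensive": -1, "clean": +1}
print(extend_lexicon(generic, pmi_scores(opinion_sets), threshold=0.3))
```

With real data the counts would be collected per aspect and domain, so that the same adjective can receive different polarities for different aspects.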

Next, if any exist, we consider adverbs that strengthen or soften the adjective polarity. This is a particular case of the intensifiers discussed by Taboada et al. (2011), and is envisioned as an open research issue in Chen et al. (2015). Our method makes use of a list of 300 adverbs, each of them with a weight \(w_{mod} \in \{-\,1, +\,0.5, +\,2\}\) expressing respectively whether the adverb inverts, softens or strengthens the polarity of the adjective. If the modifier of the annotation belongs to that list, we set the corresponding weight \(w_{mod}\). The list, which we also make publicly available, is composed of the Thesaurus.com\(^{10}\) synonyms of representative adverbs, namely very, entirely, amazingly, quite, somehow, little, too, excessively and insufficiently, and the synonyms of the latter, discarding duplicates. More specifically, the list contains 83, 82 and 135 adverbs with weights \(w_{mod} = -\,1, +\,0.5, +\,2\), respectively.

Finally, we take the isAffirmative value into account to set a weight \(w_{aff} \in \{-\,1, +\,1\}\) depending on whether isAffirmative is false or true, respectively. The value of \(\texttt {sentiment\_orientation}\) is then computed as follows:

$$\begin{aligned} \texttt {sentiment\_orientation} = w_{aff} \cdot w_{mod} \cdot p_{adj} \end{aligned}$$

As illustrative examples, “amazingly tasty” and “slightly expensive” are assigned sentiment orientation values of \(+\,2\) and \(-\,0.5\), respectively.
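The two examples are consistent with multiplying the adjective polarity by the adverb weight and the affirmation sign. The following Python sketch illustrates the computation under that assumption. The tiny dictionaries are hypothetical stand-ins for the actual lexicons and adverb list, and the defaults for unknown adjectives (neutral) and missing modifiers (weight 1) are assumptions as well.

```python
# Hypothetical toy resources; the real lexicons and the 300-adverb list are far larger.
ADJ_POLARITY = {"tasty": 1, "expensive": -1}
ADVERB_WEIGHT = {"amazingly": 2.0, "slightly": 0.5}


def sentiment_orientation(adjective, modifier=None, is_affirmative=True):
    """Combine adjective polarity, adverb weight and affirmation sign."""
    p_adj = ADJ_POLARITY.get(adjective, 0)     # assumption: unknown adjective is neutral
    w_mod = ADVERB_WEIGHT.get(modifier, 1.0)   # assumption: no listed modifier leaves polarity as is
    w_aff = 1.0 if is_affirmative else -1.0
    return w_aff * w_mod * p_adj


print(sentiment_orientation("tasty", "amazingly"))     # amazingly tasty
print(sentiment_orientation("expensive", "slightly"))  # slightly expensive
```

Under these assumptions, a negated sentence such as “not tasty” would be passed with `is_affirmative=False` and receive the opposite sign.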

3.2 SABRE method

As a representative method of word frequency-based aspect opinion extraction approaches, we have implemented the SABRE algorithm (Caputo et al. 2017). Making use of Language Models, this algorithm works on the assumption that the vocabulary used differs when talking about distinct topics. Hence, it aims at selecting as aspects the nouns whose distributions in a domain-specific document collection differ significantly from their distributions in a general, multi-domain corpus. Caputo et al. (2017) conducted experiments over a set of TripAdvisor\(^{11}\) reviews, showing that using their KL divergence metric allowed extracting better aspects than considering only frequencies of appearance. We will refer to this method as sab in the remainder of the document.

3.2.1 Aspect extraction

Assuming that aspects are mostly nouns (Liu 2012), we compute the frequency of appearance of each noun in the specific item domain. Similarly to Caputo et al. (2017), we utilize the Stanford CoreNLP lemmatizer to consider two nouns to be the same if they have a common lemma. Formally, we compute the frequency and subsequent probability of lemma t appearing \(n_{t,D}\) times in domain D as

$$\begin{aligned} p_{t,D} = \frac{n_{t,D}}{N_D} \end{aligned}$$

where \(N_D\) is the sum of the frequencies of all the noun lemmas in the domain.

Next, \(p_{t,D}\) is compared to the probability of appearance of t in a general, multi-domain corpus. As done in Caputo et al. (2017), we use the British National Corpus (BNC)\(^{12}\) for this comparison. The method assigns a score to every noun in the domain that also appears in the generic corpus as the pointwise Kullback–Leibler divergence \(\delta \) between both probabilities. Letting D be the target domain and BNC be the multi-domain corpus, the score is calculated as

$$\begin{aligned} \delta _t(D \,\Vert \, BNC) = p_{t,D} \log \frac{p_{t,D}}{p_{t,BNC}} \end{aligned}$$

Finally, every noun with a score higher than a threshold \(\varepsilon \) is considered to be an aspect, since it is overrepresented in the target domain. The authors set \(\varepsilon =0.3\) in their reported experiments.
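The scoring and thresholding steps can be sketched in Python as follows. The sketch assumes the usual pointwise Kullback–Leibler form, \(p_{t,D} \log (p_{t,D} / p_{t,BNC})\), with a natural logarithm and no further normalization. The function name `extract_aspects` and the toy lemma lists are hypothetical, and the input is assumed to be already lemmatized and restricted to nouns.

```python
import math
from collections import Counter


def extract_aspects(domain_lemmas, general_lemmas, epsilon=0.3):
    """Return {lemma: score} for noun lemmas overrepresented in the domain."""
    n_d, n_g = Counter(domain_lemmas), Counter(general_lemmas)
    total_d, total_g = sum(n_d.values()), sum(n_g.values())
    scores = {}
    for lemma, count in n_d.items():
        if lemma not in n_g:
            continue  # only nouns that also appear in the general corpus are scored
        p_d = count / total_d
        p_g = n_g[lemma] / total_g
        scores[lemma] = p_d * math.log(p_d / p_g)  # pointwise KL divergence
    return {lemma: s for lemma, s in scores.items() if s > epsilon}


# Toy corpora: "room" dominates the domain but is rare in the general corpus.
domain = ["room"] * 6 + ["day"] * 2 + ["pool"] * 2
general = ["room"] * 1 + ["day"] * 5 + ["thing"] * 4
aspects = extract_aspects(domain, general)
```

In this toy example only “room” passes the threshold: “day” is more frequent in the general corpus and gets a negative score, and “pool” is skipped because it does not occur in the general corpus.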

3.2.2 Opinion polarity identification

Unlike the method proposed in Caputo et al. (2017), we follow the algorithm explained in Sect. 3.1.3 to identify the sentiment orientation of the existing opinions about the extracted aspects. We refer the reader to that section for the details, and only recall here that our algorithm considers both adjective and sentence negation, adjective modifiers, and multiple aspects and opinions in a sentence.

3.3 Double propagation method

In our experiments, we also consider a syntactic relation-based method for aspect opinion extraction. In particular, we evaluate the Double Propagation (DP) method presented in Qiu et al. (2011). The DP algorithm has become the basis of several state-of-the-art methods for identifying opinions about item aspects from textual reviews. This method is based on the observation that aspects are mostly nouns, and opinion words are mostly adjectives complementing such nouns. Hence, analyzing (noun, adjective) syntactic relations, the dp method aims at finding aspect opinions and their sentiment orientations simultaneously.

3.3.1 Aspect extraction

The basic idea of the dp method is to identify aspect and opinion words iteratively using known and extracted (in previous iterations) aspect and opinion words, and certain syntactic relations, propagating information back and forth between iterations.

The identification of the relations is the key to the extraction. Two words are directly dependent if one word depends on the other without any additional words in their grammar dependency path, or if both have a direct dependency on a third word. In particular, dp uses direct dependencies between nouns and adjectives, identified by the POS tags: NN (nouns) and NNS (plural nouns) for aspects, and JJ (adjectives), JJR (comparative adjectives) and JJS (superlative adjectives) for opinions. As done by Qiu et al. (2011), we obtained these tags with the Stanford POS tagger (Socher et al. 2013).

The mod, pnmod, subj, s, obj, obj2 and desc syntactic relations were considered between an aspect word and an opinion word, whereas the conj relation was used between aspect (or opinion) words. The procedure followed to find such syntactic dependencies in the reviews is similar to that described in Sect. 3.1.2. We run the POS tagger and the syntactic dependency parser to obtain triples of the form (gov, rel, dep) that represent the relation rel holding between a governor gov and a dependent dep; see Sect. 3.1.2 for details. In the following, for simplicity, we assume (gov, rel, dep) and (rel, gov, dep) are equivalent, and use (word1, word2, dep) to refer to both of them. The developed method handles both alternatives.

After the nouns, adjectives and dependency relations are identified, the propagation algorithm starts. The four rules proposed in Qiu et al. (2011) are presented in Table 3. They are used to extract new words from previously extracted words. The dp method begins with a list of well-known opinion words from the lexicon of Liu et al. (2012). In the first iteration, considering the initial words, the method extracts related aspect words through Rule 1, and other opinion words through Rule 4. Then, it searches for nouns or opinion words related to these newly extracted words through Rules 2 and 3, parsing every sentence in the dataset. The procedure is repeated until the propagation extracts no more aspect or opinion words.
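The propagation loop can be sketched in Python as a fixed-point iteration. This is a deliberately simplified illustration, not the rule set of Table 3: it assumes the dependency parse has already been reduced to (noun, adjective) pairs linked by one of the aspect-opinion relations and to conj pairs between words of the same type, and it covers the four propagation directions (opinion to aspect, aspect to opinion, aspect to aspect, opinion to opinion) without reproducing the exact rule conditions. All names and the toy data are hypothetical.

```python
def double_propagation(noun_adj_pairs, conj_pairs, seed_opinions):
    """Iteratively grow the aspect and opinion word sets until nothing changes.

    noun_adj_pairs: (noun, adjective) pairs linked by an aspect-opinion relation.
    conj_pairs: (word, word) pairs linked by conj (both nouns or both adjectives).
    """
    opinions, aspects = set(seed_opinions), set()
    changed = True
    while changed:
        changed = False
        for noun, adj in noun_adj_pairs:
            if adj in opinions and noun not in aspects:   # opinion word -> aspect
                aspects.add(noun)
                changed = True
            if noun in aspects and adj not in opinions:   # aspect -> opinion word
                opinions.add(adj)
                changed = True
        for w1, w2 in conj_pairs:
            for known in (aspects, opinions):             # aspect -> aspect, opinion -> opinion
                for a, b in ((w1, w2), (w2, w1)):
                    if a in known and b not in known:
                        known.add(b)
                        changed = True
    return aspects, opinions


pairs = [("screen", "great"), ("screen", "sharp"), ("battery", "sharp")]
conj = [("battery", "charger"), ("sharp", "bright")]
found_aspects, found_opinions = double_propagation(pairs, conj, {"great"})
```

Starting from the single seed “great”, the toy run reaches “screen”, then “sharp”, then “battery”, and finally “charger” and “bright” through the conj pairs.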

When the propagation has finished, we run a pruning stage to remove noise terms that have been selected as potential aspects. We perform a modified version of the Clause Pruning suggested in Qiu et al. (2011), which consists in keeping only the most frequent target noun in a clause with several nouns. In terms of Precision, Recall and F-score in aspect extraction, we compared the results obtained with Clause Pruning against Sentence Pruning—i.e., keeping only the most popular word in a sentence as target aspect—on the dataset used by Liu (2012). We did not observe significant differences in performance, but Sentence Pruning avoids parsing the sentence to obtain the clauses, and is more scalable. Therefore, in this work we apply Sentence Pruning instead of Clause Pruning. We also perform a global pruning stage, removing target words that appear only once in the whole opinion set. Finally, we perform Compound Pruning, which combines multiple words (two nouns or a noun and an adjective) to create multi-term aspects. We will refer to the DP + pruning method as dpp.
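A minimal Python sketch of Sentence Pruning followed by the global pruning stage is given below. It assumes that the popularity of a candidate is its frequency over all candidate occurrences in the collection, and that global pruning counts occurrences after Sentence Pruning. Compound Pruning is omitted, and the function name and data are hypothetical.

```python
from collections import Counter


def prune(sentence_candidates):
    """sentence_candidates: one list of candidate aspect nouns per sentence."""
    freq = Counter(noun for cands in sentence_candidates for noun in cands)
    kept = Counter()
    for cands in sentence_candidates:
        if cands:
            # Sentence Pruning: keep only the most popular candidate of the sentence.
            kept[max(cands, key=lambda noun: freq[noun])] += 1
    # Global pruning: drop targets that remain only once in the whole set.
    return {noun for noun, count in kept.items() if count > 1}


sentences = [["screen", "thing"], ["screen"], ["battery", "screen"], ["battery"], ["cable"]]
pruned_aspects = prune(sentences)
```

Because no clause segmentation is needed, the cost of this stage is linear in the number of candidate occurrences, which reflects the scalability argument made above.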

3.3.2 Opinion polarity identification

In the dp method, polarity is assigned to adjectives during the propagation process itself. The polarity of a newly extracted word depends on the polarity of the word from which it has been propagated. The underlying idea is that the syntactic relations used in the extraction rules correspond to dependencies that refer to the same concept, so the related words have to share the polarity.

The initial words are annotated with the polarity scores (+1 for positive, -1 for negative) existing in a lexicon, and these scores are then used in the propagation. Moreover, the assigned polarity scores are inverted if negation words affect the extracted words, within a surrounding word window. In our experiments, we set a 5-word window as in the original work. We refer to Qiu et al. (2011) for more details on this sentiment orientation assignment.
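The negation handling can be illustrated with a short Python sketch. It assumes a symmetric window of 5 tokens on each side of the extracted word and a small hypothetical negation list; the exact window semantics and negation words of Qiu et al. (2011) may differ.

```python
NEGATIONS = {"not", "no", "never", "n't"}  # hypothetical negation list


def polarity_with_negation(tokens, index, polarity, window=5):
    """Invert `polarity` if a negation word occurs within `window` tokens of tokens[index]."""
    lo = max(0, index - window)
    hi = min(len(tokens), index + window + 1)
    context = tokens[lo:index] + tokens[index + 1:hi]
    negated = any(token.lower() in NEGATIONS for token in context)
    return -polarity if negated else polarity


negated_case = polarity_with_negation("the screen is not very good".split(), 5, +1)
plain_case = polarity_with_negation("the screen is good".split(), 3, +1)
```

In the first sentence the seed polarity of “good” is inverted by “not”, whereas in the second it is kept.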

3.4 LDA method

Topic model-based aspect opinion extraction methods provide latent representations of items (and users) in terms of the topics discussed in the reviews. These representations allow extracting intrinsic characteristics of the items from their reviews, which capture, among other things, the item aspects commented on by the users. In particular, we evaluate the standard form of LDA, an effective algorithm that is the basis of the state-of-the-art methods based on topic models. We will refer to this method as lda.

3.4.1 Aspect-topic representation

As discussed in Sect. 2.1.4, LDA may extract generic topics, instead of specific topics related to aspects. In this context, we have empirically observed that as the number of topics increases, the obtained latent topics are more aspect-specific. In fact, we will report results of experiments run on up to 100 topics.

We use the LDA implementation of the MALLET framework (McCallum 2002), optimizing the hyperparameters every 20 iterations. We run the algorithm for at least 500 iterations, until convergence (\(\varepsilon =0.001\)) in the logarithm of the perplexity metric. As done by McAuley and Leskovec (2013), we consider the set of all reviews of a particular item as a document, which leads to the latent representation of the item.

3.4.2 Opinion polarity identification

LDA allows representing an item as a K-dimensional vector \((\varphi _{i,1}, \varphi _{i,2}, \dots , \varphi _{i,K})\), where \(\varphi _{i,k}\) is the proportion of item i about topic (aspect) \(a_k\). The polarity \(w_{i,k}\) assigned to aspect \(a_k\) for item i is computed as the weighted sentiment orientation \(so_{i,k}\) of topic k in item i:

$$\begin{aligned} w_{i,k} = \varphi _{i,k} \cdot so_{i,k} \end{aligned}$$

where \(so_{i,k}\) is computed by selecting the 10 most representative words for topic k, and computing the average polarity of those words in the document (i.e., the set of reviews about item i). The polarity of these words is computed following the algorithm explained in Sect. 3.1.3.
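The weighting can be sketched in Python as follows, taking \(w_{i,k}\) as the product of the topic proportion and the topic sentiment orientation. The sketch assumes that the topic proportions, the representative words of each topic and the polarity of each word in the item's reviews are already available as plain Python structures. The function name, the neutral default for words without a polarity and the toy values are assumptions.

```python
def lda_item_weights(phi_i, top_words, word_polarity):
    """Compute w_{i,k} = phi_{i,k} * so_{i,k} for every topic k of one item.

    phi_i: topic proportions of item i.
    top_words: top_words[k] holds the representative words of topic k
               (10 words in the described setting).
    word_polarity: polarity of each word in the item's reviews.
    """
    weights = []
    for k, phi in enumerate(phi_i):
        polarities = [word_polarity.get(word, 0.0) for word in top_words[k]]  # assumption: missing word is neutral
        so = sum(polarities) / len(polarities) if polarities else 0.0
        weights.append(phi * so)
    return weights


item_weights = lda_item_weights(
    [0.75, 0.25],
    [["clean", "quiet"], ["tasty", "service"]],
    {"clean": 1.0, "quiet": 1.0, "tasty": 1.0, "service": 0.0},
)
```

A topic that covers a large share of the item's reviews but whose representative words are neutral on average thus contributes a weight close to zero.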

4 Developed aspect-based recommendation methods

To analyze the effect of exploiting opinions about item aspects in recommender systems, we experiment with two families of recommendation approaches: content-based and collaborative filtering.

The opinion information to be used by the evaluated recommenders will be generated by the aspect extraction methods presented in Sect. 3, namely the vocabulary-based voc method, the word frequency-based sab method, the syntactic relation-based dp and dpp methods, and the latent topic-based lda method.

Depending on the particular combinations of aspect extraction and recommendation approaches, the recommenders will belong to one of the types of aspect-based recommendation presented in Sect. 2.2: building enhanced aspect-based item profiles (Sect. 2.2.1), modeling latent user preferences on aspects (Sect. 2.2.2), setting the weights of aspect-level user preferences (Sect. 2.2.3), and incorporating aspect-based user/item similarities into recommendation heuristics (Sect. 2.2.4).

Before presenting in Sect. 4.2 the particular evaluated recommenders, in Sect. 4.1 we first explain how user and item profiles are built with aspect-based information extracted from reviews.

4.1 Modeling users and items

Following the standard procedure proposed in the literature (Chen et al. 2015), we split the aspect-based modeling process into item profiling (Sect. 4.1.1) and user profiling (Sect. 4.1.2).

From now on, a user \(u_m\)’s profile is represented as a vector \(\mathbf {u}_m = \{w_{m,1}, w_{m,2}, \ldots , w_{m,K}\}\), where \(w_{m,k}\) denotes the relative relevance (weight) of aspect \(a_{k}\) for \(u_m\), and K is the total number of aspects. Analogously, an item \(i_n\)’s profile is represented as a vector \(\mathbf {i}_n = \{w_{n,1}, w_{n,2}, \ldots , w_{n,K}\}\), where \(w_{n,k}\) denotes the relative relevance of \(a_{k}\) for \(i_n\).

4.1.1 Item profiling

Next, we describe how we compute the weight \(w_{i,k}\) for each item i and aspect \(a_k\). We consider both profiles associated with actual aspects commented on in the user reviews, and profiles composed of latent (aspect) topics inferred from the texts of the review collection.

- Aspect annotation-based item profiles: This type of item profiling assumes that the aspect extraction technique can generate tuples \((u,i,a_k,so_{u,i,k})\) for each user u and item i, associated with aspect \(a_k\) and sentiment orientation \(so_{u,i,k}\). In this case, the weight of an aspect for a particular item is computed as the average of the estimated sentiment orientations over all occurrences of the aspect in the reviews associated with that item. Formally:

$$\begin{aligned} w_{i,k} = \frac{1}{|(\cdot ,i,a_k,\cdot )|} \sum _{(\cdot ,i,a_k,so)}{so_{u,i,k}} \end{aligned}$$ (1)

- Latent factor-based item profiles: This profiling technique is used together with latent topic-based aspect extraction methods, which represent the items by their topic distribution. As described in Sect. 3.4.2, a sentiment orientation \(so_{i,k}\) can be assigned to each aspect (latent topic) for every item. Furthermore, an item i can be represented in terms of the proportion \(\varphi _{i,k}\) of each aspect k, leading to the following weight for each (item, aspect) pair:

$$\begin{aligned} w_{i,k}=\varphi _{i,k} \cdot so_{i,k} \end{aligned}$$ (2)
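For the aspect annotation-based profiles, the averaging of Eq. (1) can be sketched in Python as follows. The function name `item_profiles` and the toy annotations are hypothetical; the input is assumed to be the list of (user, item, aspect, sentiment orientation) tuples produced by any of the extraction methods of Sect. 3.

```python
from collections import defaultdict


def item_profiles(annotations):
    """Average the sentiment orientation per (item, aspect) pair, as in Eq. (1).

    annotations: iterable of (user, item, aspect, sentiment_orientation) tuples.
    Returns {item: {aspect: weight}}.
    """
    sums, counts = defaultdict(float), defaultdict(int)
    for _user, item, aspect, so in annotations:
        sums[(item, aspect)] += so
        counts[(item, aspect)] += 1
    profiles = defaultdict(dict)
    for (item, aspect), total in sums.items():
        profiles[item][aspect] = total / counts[(item, aspect)]
    return dict(profiles)


toy_annotations = [
    ("u1", "h1", "room", 2.0),
    ("u2", "h1", "room", -1.0),
    ("u1", "h1", "price", -0.5),
]
toy_profiles = item_profiles(toy_annotations)
```

Aspects that are never mentioned for an item are simply absent from its dictionary; how such missing weights are represented in the K-dimensional profile vector is left open in this sketch.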

4.1.2 User profiling