Abstract

Orthogonal moments and their invariants to geometric transformations for gray-scale images are widely used in many pattern recognition and image processing applications. In this paper, we propose a new set of orthogonal polynomials called adapted Gegenbauer–Chebyshev polynomials (AGC). This new set is used as a basis function to define the orthogonal adapted Gegenbauer–Chebyshev moments (AGCMs). The rotation, scaling, and translation invariance of the AGCMs is derived and analyzed. We provide a novel series of image feature vectors based on the adapted Gegenbauer–Chebyshev orthogonal moment invariants (AGCMIs). We build a novel image classification system using the proposed feature vectors and the fuzzy k-means classifier. A series of experiments is performed to validate this new set of orthogonal moments and to compare its performance with existing orthogonal moments, such as the Legendre, Gegenbauer and Tchebichef invariant moments, using three different image databases: the MPEG7-CE Shape database, the Columbia Object Image Library (COIL-20) database and the ORL-faces database. The obtained results indicate that the proposed AGCMs outperform the existing moments tested in the representation and recognition of images.


1 Introduction

Descriptions of images that are invariant to geometric transformations such as rotation, scaling and translation are useful in image analysis, object recognition and classification [1, 2]. Moments, as a popular class of global invariant features, have been widely used in pattern recognition, image analysis and computer vision applications, such as object recognition [3], optical character recognition [2], pattern classification [4], image watermarking [5], content-based image retrieval [6], image reconstruction [7], image compression [8, 9], edge detection [10] and template matching [11].

Moments can be divided into two groups: orthogonal moments and non-orthogonal moments. Non-orthogonal moments such as geometric moments, complex moments and rotational moments have a certain degree of information redundancy and high sensitivity to noise, and the reconstruction of an image from these moments is also quite difficult [12, 13]. In contrast, orthogonal moments, defined in terms of a set of orthogonal polynomials, have received more attention recently owing to their ability to represent images with minimal information redundancy and high noise robustness. In addition, image intensity functions can be reconstructed from a finite number of orthogonal moments [14].

Depending on whether the kernel polynomials are orthogonal on a rectangle or on a disk, orthogonal moments can be further divided into moments defined in Cartesian coordinates and moments defined in polar coordinates. The orthogonal moments defined in polar coordinates mainly include Zernike moments [15, 16], pseudo-Zernike moments [17], Bessel–Fourier moments [18] and orthogonal Fourier–Mellin moments [19]; their main advantage is that rotation invariance is easy to achieve. The orthogonal moments defined in Cartesian coordinates mainly include Legendre [15], Tchebichef [20], Krawtchouk [21] and Hahn moments [22].

Gegenbauer–Chebyshev moments [23,24,25], as classical orthogonal moments defined in Cartesian coordinates, have been widely used in the field of image analysis. It is known that the rotation, scaling and translation invariance of image moments is highly significant in image recognition. Unfortunately, to the best of our knowledge, the rotation, scaling and translation invariants of the Gegenbauer–Chebyshev moments have not been studied until now. Indeed, in Sect. 6 of [25], Zhu was able to construct moment invariants from the Tchebichef–Krawtchouk, Tchebichef–Hahn and Krawtchouk–Hahn moments, but could not extract invariants from the Gegenbauer–Chebyshev moments.

Extracting invariant features of an image from orthogonal moments defined in Cartesian coordinates is a very difficult task. In this context, many authors have expressed the orthogonal invariant moments as a linear combination of geometric invariant moments, the latter being invariant under translation, scaling and rotation of the image they describe. This technique is used by Hosny [23, 24] to extract the invariants of orthogonal Gegenbauer moments, by Papakostas et al. [26] to derive the invariants of the Krawtchouk orthogonal moments, by Zhu [25] to obtain the invariants of Tchebichef moments (TMI), Krawtchouk moments (KMI), Hahn moments (HMI), Tchebichef–Krawtchouk moments (TKMI), Tchebichef–Hahn moments (THMIs) and Krawtchouk–Hahn moments (KHMI), and by Hmimid et al. [27] to build the invariants of Meixner–Tchebichef moments (MTMIs), Meixner–Krawtchouk moments (MKMIs) and Meixner–Hahn moments (MHMIs).

In this paper, we use this technique to construct a new set of invariants of adapted Gegenbauer–Chebyshev moments (AGCMIs). This technique is based on the explicit formulation \(\sum\nolimits_{i = 0}^{n} {\alpha_{i} x^{i} }\) of the orthogonal polynomials used. However, the explicit formulations of the Gegenbauer and the Chebyshev polynomials, which are defined in Eqs. (10) and (12), are written in the form \(\sum\nolimits_{i = 0}^{n} {a_{i} \left( {\frac{x - 1}{2}} \right)^{i} }\). This obstructs the extraction of invariants from the corresponding orthogonal moments. For this reason, we introduce in this paper a new series of orthogonal polynomials, based on Gegenbauer and Chebyshev polynomials. We call them “the orthogonal adapted Gegenbauer–Chebyshev polynomials (OAGCP)”. This set of orthogonal polynomials is used to define a new type of orthogonal moments called adapted orthogonal Gegenbauer–Chebyshev moments (AGCMs). This helps to create a set of orthogonal moment invariants (AGCMIs) that are invariant to translation, rotation and scale. These moment invariants (AGCMIs) are written in terms of the geometric moment invariants presented by Hu [2]. We also apply a new 2D image classification technique using the invariant features extracted from the proposed invariant moments (AGCMIs) and fuzzy K-means (FKM) [28, 29]. The performance of these new orthogonal moment invariants is compared with the existing orthogonal moments in terms of quantitative and qualitative measures. The comparison shows that the proposed AGCMIs outperform the existing orthogonal moment invariants tested.

The rest of the paper is organized as follows. The basic equations of the Gegenbauer–Chebyshev polynomials are briefly described in Sect. 2. All aspects of the proposed orthogonal moments for images are presented in Sect. 3. The computation of orthogonal adapted Gegenbauer–Chebyshev moment invariants is presented in Sect. 4. Experiments and the discussion of the obtained results are presented in Sect. 5. The conclusion is presented in Sect. 6.

2 The adapted Gegenbauer–Chebyshev polynomials

In this work, the image features are extracted using Gegenbauer–Chebyshev orthogonal moments for image representation and recognition. These orthogonal moments are based on the composition of two sets of orthogonal polynomials: Gegenbauer and Chebyshev polynomials. The theoretical aspects of these polynomials are presented in the following subsections. In the first subsection, the equations governing orthogonal Jacobi polynomials are presented. The Gegenbauer polynomials are defined in the second subsection. In the third subsection, the Chebyshev polynomials are presented. Finally, based on the last two sets of polynomials, a new series of separable polynomials \(\psi_{n,m} \left( {x,y} \right)\) in two variables \(x\) and \(y\) is proposed in the fourth subsection. These polynomials are defined and orthogonal over the rectangle \(\left[ {0,N} \right] \times \left[ {0,M} \right],\) where \(N \times M\) is the size of the described image.

2.1 Orthogonal Jacobi polynomials

The \(n^{th}\) order Jacobi polynomial \(P_{n}^{\alpha ,\beta } \left( x \right)\) with parameters \(\alpha ,\beta\) is defined by the hypergeometric function as follows [25]:

where \(\left( {\alpha + 1} \right)_{n}\) is a Pochhammer symbol defined as:

The hypergeometric function \({}_{2}^{{}} F_{1} \left( {a,b; c; x} \right)\) is defined for \(\left| x \right| < 1\) by the power series

More explicitly, Jacobi polynomial of the nth order is defined as follows:

where \(\varGamma \left( z \right)\) is the Gamma function.

For \(\alpha > - 1\) and \(\beta > - 1,\) the set of Jacobi polynomials satisfies the orthogonality condition:

where \(\delta_{nm}\) is the Kronecker delta and \(w_{\alpha ,\beta } \left( x \right)\) is the weight function defined by

And

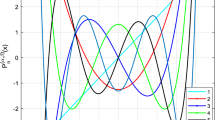

Figures 1 and 2 show the graphs of the first six Jacobi polynomials with \(\alpha = 3, \beta = 3\) and with \(\alpha = 1, \beta = 2\), respectively.
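The hypergeometric definition of this subsection can be evaluated directly, because the series terminates after \(n + 1\) terms. The following is a minimal sketch, assuming the standard form \(P_{n}^{\alpha ,\beta }(x) = \frac{(\alpha + 1)_{n}}{n!}\,{}_{2}F_{1}\left( -n, n + \alpha + \beta + 1; \alpha + 1; \frac{1 - x}{2} \right)\); the function names `pochhammer` and `jacobi` are illustrative and are not taken from the paper.

```python
from math import factorial


def pochhammer(a, k):
    """Rising factorial (a)_k = a (a + 1) ... (a + k - 1), with (a)_0 = 1."""
    result = 1.0
    for i in range(k):
        result *= a + i
    return result


def jacobi(n, alpha, beta, x):
    """Evaluate P_n^(alpha, beta)(x) from the terminating 2F1 series.

    Assumes the standard normalization P_n(1) = (alpha + 1)_n / n!.
    """
    t = (1.0 - x) / 2.0
    series = 0.0
    for k in range(n + 1):
        coeff = (pochhammer(-n, k) * pochhammer(n + alpha + beta + 1, k)
                 / (pochhammer(alpha + 1, k) * factorial(k)))
        series += coeff * t ** k
    return pochhammer(alpha + 1, n) / factorial(n) * series


# With alpha = beta = 0 the Jacobi polynomials reduce to the Legendre
# polynomials, for example P_2(x) = (3 x^2 - 1) / 2.
legendre_2_at_half = jacobi(2, 0, 0, 0.5)
```

The same routine can be used to sample curves such as those of Figs. 1 and 2 by calling `jacobi(n, 3, 3, x)` or `jacobi(n, 1, 2, x)` on a grid of `x` values in \([-1, 1]\).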

2.2 Orthogonal Gegenbauer polynomials

The Gegenbauer polynomials [30] \(G_{n}^{\left( \lambda \right)} \left( x \right)\) are given in terms of the Jacobi polynomial \(P_{n}^{\alpha ,\beta } \left( x \right)\) with \(\alpha = \beta = \lambda - \frac{1}{2}(\lambda > - \frac{1}{2},\lambda \ne 0)\) by

We define \(c_{k} \left( \lambda \right)\) as follows

The polynomial \(G_{n}^{\left( \lambda \right)} \left( x \right)\) can be written as

2.3 Orthogonal Chebyshev polynomials

The Chebyshev polynomials of the first kind are a special case of the Jacobi polynomials \(P_{n}^{{\left( {\alpha ,\beta } \right)}} \left( x \right)\) with \(\alpha = \beta = - 1/2\)

Therefore, the Chebyshev polynomial \(T_{n} \left( x \right)\) can be written as follows

Where \(d_{k}\) is defined as

2.4 Orthogonal adapted Gegenbauer–Chebyshev polynomials

For \(N,M \ge 1.\) According to Sects. 2.2 and 2.3, we can define the adapted Gegenbauer polynomials \(\bar{G}_{n}^{\left( \lambda \right)} \left( t \right)\) of size \(N\) as

And the adapted Chebyshev polynomials \(\bar{T}_{n} \left( t \right)\) of size \(M\) as

According to Eq. (10), the adapted Gegenbauer polynomial \(\bar{G}_{n}^{\left( \lambda \right)} \left( t \right)\) of size \(N\) can be written as:

Where \(c'_{k} \left( \lambda \right) = c_{k} \left( \lambda \right)\left( {\frac{ - 1}{N}} \right)^{k}\).

And the adapted Chebyshev polynomial \(\bar{T}_{n} \left( t \right)\) of size \(M\) can be written as

where \(d'_{k} = d_{k} \left( {\frac{ - 1}{M}} \right)^{k}\).

Definition 1

The adapted Gegenbauer–Chebyshev polynomials of size \(\left( {N,M} \right)\) are defined as follows

We use Eqs. (16) and (17); the polynomial \(\psi_{n,m} \left( {x,y} \right)\) is written as

where \(c'_{k} \left( \lambda \right) = c_{k} \left( \lambda \right)\left( {\frac{ - 1}{N}} \right)^{k}\) and \(d'_{k} = d_{k} \left( {\frac{ - 1}{M}} \right)^{k}\).
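The adaptation step can be illustrated numerically. The sketch below assumes that the adapted polynomials are obtained by the change of variable \(t \mapsto 1 - 2t/N\) (respectively \(1 - 2t/M\)), which is what the factors \(\left( -1/N \right)^{k}\) and \(\left( -1/M \right)^{k}\) suggest, and that the Gegenbauer and Chebyshev polynomials use their standard normalizations; the paper's constants may differ. It also checks the orthogonality of the Chebyshev factor on \(\left[ 0, M \right]\) with the assumed weight \(y^{-1/2}(M - y)^{-1/2}\), using Gauss–Chebyshev quadrature. All function names are hypothetical.

```python
from math import cos, pi


def gegenbauer(n, lam, x):
    """Gegenbauer polynomial G_n^(lam)(x) by the standard three-term recurrence."""
    if n == 0:
        return 1.0
    prev, curr = 1.0, 2.0 * lam * x
    for k in range(2, n + 1):
        prev, curr = curr, (2.0 * (k + lam - 1.0) * x * curr
                            - (k + 2.0 * lam - 2.0) * prev) / k
    return curr


def chebyshev(n, x):
    """Chebyshev polynomial of the first kind T_n(x) by recurrence."""
    if n == 0:
        return 1.0
    prev, curr = 1.0, x
    for _ in range(2, n + 1):
        prev, curr = curr, 2.0 * x * curr - prev
    return curr


def adapted_gegenbauer(n, lam, t, size_n):
    """Assumed adaptation: map t in [0, N] to 1 - 2t/N in [-1, 1]."""
    return gegenbauer(n, lam, 1.0 - 2.0 * t / size_n)


def adapted_chebyshev(n, t, size_m):
    """Assumed adaptation: map t in [0, M] to 1 - 2t/M in [-1, 1]."""
    return chebyshev(n, 1.0 - 2.0 * t / size_m)


def psi(n, m, x, y, lam, size_n, size_m):
    """Separable two-variable polynomial: product of the two adapted factors."""
    return adapted_gegenbauer(n, lam, x, size_n) * adapted_chebyshev(m, y, size_m)


def chebyshev_inner_product(n, m, size_m, nodes=64):
    """Integral over [0, M] of the product of two adapted Chebyshev polynomials
    against the weight y^(-1/2) (M - y)^(-1/2), by Gauss-Chebyshev quadrature."""
    total = 0.0
    for k in range(1, nodes + 1):
        u = cos((2 * k - 1) * pi / (2 * nodes))   # quadrature node in [-1, 1]
        y = size_m * (1.0 - u) / 2.0              # corresponding point in [0, M]
        total += adapted_chebyshev(n, y, size_m) * adapted_chebyshev(m, y, size_m)
    return total * pi / nodes
```

Under these assumptions, `chebyshev_inner_product(2, 3, 128)` vanishes up to rounding, while `chebyshev_inner_product(2, 2, 128)` equals \(\pi / 2\) independently of the size \(M\).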

Theorem 1

The adapted Gegenbauer–Chebyshev polynomials of size \(\left( {N,M} \right)\) are orthogonal on the rectangle \(\left[ {0,N} \right] \times \left[ {0,M} \right]\) with the weighting function

and

Where \(C\left( { n,m,\lambda } \right)\) is the normalization constant defined as:

Proof of Theorem 1

By substituting

And

And with the help of Eq. (5) and with the change of variables \(x' = \frac{N - 2x}{N}\) and \(y' = \frac{M - 2y}{M},\) we have

Using Eqs. (8), (11) and (5), we get

3 Orthogonal adapted Gegenbauer–Chebyshev moments

The orthogonal adapted Gegenbauer–Chebyshev moments (AGCMs) of a gray-level image \(f\left( {x,y} \right)\) are defined as follows:

Equation (24) can be approximated by:

The relation (25) makes it possible to construct a descriptor vector of an image \(f\left( {x, y} \right)\) of size \(N \times M\) in the form of a matrix \(V\left( f \right) = \left( {AGC_{ij} } \right)\) for a given size \(\left( {p + 1} \right) \times \left( {q + 1} \right)\) as follows:

where \(h_{ij} = f\left( {j,i} \right)i^{{\lambda - \frac{1}{2}}} \left( {N - i} \right)^{{\lambda - \frac{1}{2}}} j^{{ - \frac{1}{2}}} \left( {M - j} \right)^{{ - \frac{1}{2} }} .\)

Based on the orthogonality property of the adapted Gegenbauer–Chebyshev polynomials, the image function \(f \left( {x, y} \right)\) defined on the rectangle \(\left[ {0,N} \right] \times \left[ {0,M} \right]\) can be written as:

where the orthogonal adapted Gegenbauer–Chebyshev moments, \(AGC_{nm}\), are calculated over the rectangle \(\left[ {0,N} \right] \times \left[ {0,M} \right]\). If moments are limited to an order \(Max\), we can approximate \(f\) with \(\tilde{f}\):

where the number of adapted Gegenbauer–Chebyshev moment \(AGC_{ij}\) used in Eq. (28) is computed as follows:

4 Computation of adapted Gegenbauer–Chebyshev moment invariants

To use the proposed adapted Gegenbauer–Chebyshev moments (AGCMs) in 2D image classification, we need to construct features that are invariant under three geometric transformations of the image: translation, rotation and scaling. To obtain the translation, scale and rotation invariants of the AGCMs, we adopt the strategy used by Papakostas et al. for Krawtchouk moments [26]: we derive the adapted Gegenbauer–Chebyshev moment invariants (AGCMIs) from the geometric invariant moments.

4.1 Geometric invariant moments

Let \(h \left( {x, y} \right)\) be an image function defined on the rectangle \(\left[ {0,N} \right] \times \left[ {0,M} \right].\) The geometric moment of order \(\left( {n + m} \right)\) is defined as:

The set of geometric moments, which are invariant under rotation, scaling and translation are defined as [25,26,27]:

With

And

The central geometric moment of order \(\left( {n + m} \right)\) is defined as

By using the binomial formula with Eq. (31), the set of geometric moments, which are invariant to the three geometric transformations, are defined as follows:

4.2 Adapted Gegenbauer–Chebyshev moment invariants

Using Eqs. (23) and (19), we get

We consider the new image function \(h\left( {x, y} \right)\) defined as:

Substituting Eqs. (37) and (30) into (36) yields:

The adapted Gegenbauer–Chebyshev moment invariants (AGCMIs) can be expanded in terms of GMIs as follows:

Where \(V_{ij} \left( h \right)\) are the parameters defined as

Based on the theoretical framework above, we have constructed orthogonal features of images that are invariant to the three geometric transformations, defined as follows:

To evaluate the performance of these feature vectors, we present an experimental study in the next section.
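The geometric moment invariants of Sect. 4.1, on which the AGCMIs are built, can be sketched as follows. This is a minimal illustration under stated assumptions: it uses the normalization commonly found in the literature cited in [25,26,27] (centroid shift for translation, division by \(M_{00}^{\gamma}\) with \(\gamma = (n + m)/2 + 1\) for scale, and rotation by the principal-axis angle \(\theta\)); the name `geometric_moment_invariant` and the use of `atan2` instead of a plain arctangent are choices made for this sketch, not details taken from the paper.

```python
from math import atan2, cos, sin


def geometric_moment_invariant(image, n, m):
    """Translation-, scale- and rotation-normalized geometric moment of order (n + m).

    `image` is a list of rows of non-negative gray levels, indexed as image[y][x].
    """
    rows, cols = len(image), len(image[0])
    pixels = [(x, y, image[y][x]) for y in range(rows) for x in range(cols)]

    # Zeroth-order moment and centroid (translation normalization).
    m00 = sum(v for _, _, v in pixels)
    x_bar = sum(x * v for x, _, v in pixels) / m00
    y_bar = sum(y * v for _, y, v in pixels) / m00

    # Second-order central moments give the principal-axis angle.
    mu11 = sum((x - x_bar) * (y - y_bar) * v for x, y, v in pixels)
    mu20 = sum((x - x_bar) ** 2 * v for x, _, v in pixels)
    mu02 = sum((y - y_bar) ** 2 * v for _, y, v in pixels)
    theta = 0.5 * atan2(2.0 * mu11, mu20 - mu02)
    c, s = cos(theta), sin(theta)

    # Scale normalization exponent.
    gamma = (n + m) / 2.0 + 1.0

    total = sum(((x - x_bar) * c + (y - y_bar) * s) ** n
                * ((y - y_bar) * c - (x - x_bar) * s) ** m * v
                for x, y, v in pixels)
    return total / m00 ** gamma
```

Shifting the content of an image inside its frame leaves these values unchanged up to rounding, which is the property inherited by any linear combination of them.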

5 Experimental results

Experiments are performed to evaluate the ability of the proposed orthogonal moments to represent and recognize gray-level images and objects. The performance of the proposed AGCMs is compared with that of existing orthogonal moments such as the orthogonal Chebyshev rational moments (CRMs) [31], the weighted radial shifted Legendre moments (WRSLMs) [32], the fractional-order orthogonal Chebyshev moments (FrCbMs) [33], the orthogonal fractional discrete Tchebyshev moments (FrDTMs) [34], the fractional-order polar harmonic transform moments (FrPHTMs) [35], the Legendre moments (LMs) [36], the Tchebichef moments (TMs) [25] and the Gegenbauer moments (GegMs) [23, 24].

This section is divided into five subsections. Experiments testing the invariance to geometric transformations are presented in the first subsection. Tests in the second subsection evaluate the ability of the proposed AGCMs to reconstruct gray-level images; the accuracy of the reconstructed images is an indicator of the accuracy of the proposed method. An image retrieval system based on the proposed feature vectors is presented in the third subsection. In the fourth subsection, we evaluate the accuracy of the proposed descriptor vector for object recognition and the classification of image databases. Experiments to estimate the CPU times are presented in the fifth subsection.

5.1 Invariance to geometric transformations

Geometric transformations of digital images include rotation, scaling and translation (RST). These spatial transformations are sometimes called similarity transformations. Invariance with respect to RST is required in most pattern recognition applications, because the object should be correctly recognized regardless of its position, its orientation in the scene and the object-to-camera distance. In other words, the computed moment invariants must remain unchanged if the original images are rotated by any angle, scaled by any scaling factor and translated by any translation vector.

However, the accuracy of the orthogonal moment invariants is negatively affected by the accumulated errors. In this section, the accuracy of the proposed moment invariants is evaluated and compared with the moment invariants of the existing methods [31,32,33,34,35]. Three experiments are performed using the gray-scale image of Lena of size \(128 \times 128\) pixels as displayed in Fig. 3.

To measure how well the adapted Gegenbauer–Chebyshev invariant moments (AGCMIs) remain unchanged under different image transformations, we use the relative error between the two sets of invariant moments corresponding to the original image \({\text{f}}\left( {{\text{x}},{\text{y}}} \right)\) and the transformed image \({\text{f}}^{\text{t}} \left( {{\text{x}},{\text{y}}} \right)\) as

where \({\text{p}}\) is the number of transformed images, \(||. ||\) is the Euclidean norm, \({\text{AGCI}}\left( {\text{f}} \right)\) is the adapted Gegenbauer–Chebyshev orthogonal invariant moments for the original image and \({\text{AGCI}}\left( {{\text{f}}^{\text{t}} } \right)\) is the adapted Gegenbauer–Chebyshev orthogonal invariant moment for the transformed image.
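A relative error of this kind can be computed as in the sketch below. It assumes that the error is the Euclidean distance between the two invariant vectors, normalized by the norm of the original vector and averaged over the \({\text{p}}\) transformed images; the exact normalization in the paper's formula may differ, and the name `mean_relative_error` is illustrative.

```python
from math import sqrt


def euclidean_norm(vector):
    """Euclidean norm of a flat list of numbers."""
    return sqrt(sum(a * a for a in vector))


def mean_relative_error(original, transformed_list):
    """Average of ||orig - trans|| / ||orig|| over all transformed images.

    `original` is the invariant vector of the original image and
    `transformed_list` holds one invariant vector per transformed image.
    """
    reference = euclidean_norm(original)
    errors = []
    for transformed in transformed_list:
        difference = [a - b for a, b in zip(original, transformed)]
        errors.append(euclidean_norm(difference) / reference)
    return sum(errors) / len(errors)
```

A value close to zero indicates that the invariants barely change under the tested transformation.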

The first experiment is performed to test the rotation invariance of the proposed orthogonal moments. The image Lena (Fig. 3) is rotated by angles ranging from 0° to 180° with a fixed increment of 30°; the original and rotated images are displayed in Fig. 4.

The proposed AGCMIs and the existing orthogonal moments [31,32,33,34,35] are computed for the original and rotated images with moment order 20. The MSE values of these moments are presented in Table 1. The MSE values for the proposed method are much smaller than the corresponding values for the existing orthogonal methods [31,32,33,34,35], which supports the accuracy of the proposed method.

The second experiment is performed to test the scaling invariance of the proposed orthogonal moments (AGCMIs). The image “Baboon” presented in Fig. 5 is uniformly scaled with 7 different scaling factors \(\lambda = 0.5, 0.75, 1, 1.25, 1.5, 1.75, 2\).

The original image and the scaled ones are displayed in Fig. 6. The proposed AGCMIs and the existing orthogonal moments [31,32,33,34,35] are computed for the original image, \({\text{M}}_{\text{pq}} \left( {\text{f}} \right)\), and the scaled images, \({\text{M}}_{\text{pq}} \left( {{\text{f}}^{\text{scaled}} } \right),\) with moment order. The MSE values of these moments are presented in Table 2. The proposed method outperforms all the other methods tested.

The third experiment is performed to evaluate the translation invariance of the proposed moments. The image Barbara, illustrated in Fig. 7, is translated by six different vectors: (− 3, − 3), (− 1, − 1), (1, 1), (3, 3), (5, 5) and (8, 8). The original image and the translated images of “Barbara” are displayed in Fig. 8.

The proposed AGCMIs and the existing orthogonal moments are computed for the original image, \({\text{M}}_{{{\text{p}},{\text{q}}}} \left( {\text{f}} \right),\) and the translated images, \({\text{M}}_{{{\text{p}},{\text{q}}}} \left( {{\text{f}}^{\text{trans}} } \right),\) with moment order 20. The MSE values of the translation invariants are presented in Table 3. The three experiments show the same trend, with the proposed method outperforming all the other existing methods tested.

5.2 Image reconstruction

In this section, we discuss the ability of the adapted Gegenbauer–Chebyshev moments to reconstruct 2D images using Eq. (28). The accuracy of the reconstructed images is usually evaluated using quantitative and qualitative measures. The quantitative measure is the normalized image reconstruction error (NIRE) [37]:

The value of \({\text{NIRE}}\) is zero when the reconstructed image, \({\hat{\text{f}}}\left( {{\text{x}},{\text{y}}} \right)\), is identical to the original image, \({\text{f}}\left( {{\text{x}},{\text{y}}} \right)\), which is impossible in practice. The value of \({\text{NIRE}}\) decreases as the accuracy of the computational method increases, so a method is highly accurate when its \({\text{NIRE}}\) approaches zero. The quality of the reconstructed image is evaluated qualitatively by visual inspection, since the human eye can easily judge the degree of similarity between the original and the reconstructed images.
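The quantitative measure can be sketched in a few lines. The code below assumes the usual definition of the normalized image reconstruction error, namely the sum of squared pixel differences divided by the sum of squared pixel values of the original image; the function name `nire` and the list-of-rows image layout are choices made for this illustration.

```python
def nire(original, reconstructed):
    """Normalized image reconstruction error between two equally sized images.

    Both images are lists of rows of gray levels. The result is 0 when the
    images are identical and grows with the squared reconstruction error.
    """
    squared_error = 0.0
    squared_energy = 0.0
    for row_f, row_r in zip(original, reconstructed):
        for f, r in zip(row_f, row_r):
            squared_error += (f - r) ** 2
            squared_energy += f ** 2
    return squared_error / squared_energy
```

For example, with `original = [[1, 2], [2, 0]]` and `reconstructed = [[1, 1], [2, 1]]`, two pixels differ by one gray level and the error is 2/9.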

The six images displayed in Fig. 9 are used as the test images in this study. This section presents three experiments. In the first experiment, we perform tests on the two gray-scale images “Dog” and “House” presented in Fig. 9a, b, of size 200 × 200 pixels. The maximum moment order takes the values 40, 60, 80, 100, 140, 160 and 200. The MSE values of the proposed AGCMs are compared with the corresponding values of the Legendre moments (LMs) [36], the Tchebichef moments (TMs) [25] and the Gegenbauer moments (GegMs) [23, 24]; these values are presented in Tables 4 and 5.

It is clear that the MSE values decrease and approach zero as the order of the moment increases. The proposed adapted Gegenbauer–Chebyshev orthogonal moment is highly accurate and stable for all moment orders compared with the other tested orthogonal moments.

In the second experiment, we perform visual tests for the reconstruction of the two images “Girl” and “Barbara” presented in Fig. 9c, d using four types of orthogonal moments. The reconstructions based on the proposed orthogonal moments (AGCMs), Legendre orthogonal moments (LMs), Tchebichef orthogonal moments (TMs) and Gegenbauer orthogonal moments (GegMs) of orders 50, 100 and 150 are illustrated in Figs. 10 and 11. These figures show the reconstruction quality of the AGCMs and indicate that the reconstructed image becomes closer to the original once the maximum moment order reaches a certain value. We observe that the reconstruction results based on the proposed AGCMs are better than those of the other orthogonal moments tested.

In the third experiment, we test the reconstruction of noisy images using the proposed orthogonal moments (AGCMs). In this context, we use the two images “Baboon” and “Cameramen” (Fig. 9e, f). We add two types of noise: Gaussian noise (mean: 0, variance: 0.01) and salt-and-pepper noise (3%). The images are reconstructed with three types of orthogonal moments, AGCMs, LMs and TMs, with maximal order 200. The results of this experiment are shown in Fig. 12. Once again, the results show that the proposed AGCMs perform better than the other moments in the reconstruction of noisy images.

5.3 Proposed image retrieval system

This section presents a new image retrieval system based on the features extracted by the proposed AGCMIs and the similarity measure. In the proposed retrieval scheme, the distances between the query image and the images present in the database are calculated using the Euclidean distance

where \(X = \left( {{\text{AGCI}}_{ij} \left( Q \right)} \right), i = 0, \ldots ,p\; {\text{and}} \;j = 0, \ldots ,q\) is a descriptor vector of query image Q and \(Y = \left( {{\text{AGCI}}_{ij} \left( I \right)} \right), i = 0, \ldots ,p\;{\text{and}}\;j = 0, \ldots ,q\) the descriptor vector of image \(I\) in the database. To measure the performance of our image retrieval system, we use the recall and precision criteria defined by

To show the effectiveness of our image retrieval approach, we have performed tests on the COIL-100 image database. Our system proceeds in two phases. The first is the indexing phase: the descriptor vectors of the images are automatically extracted using the orthogonal adapted Gegenbauer–Chebyshev moment invariants (AGCMIs) and stored in a database of vectors, from which they can be retrieved quickly and efficiently. The second is the search phase: the feature vector of the query image is extracted using our AGCMIs and compared with the feature vectors of the database images using the Euclidean distance. The system returns the search result as a list of images ordered by the distance between their descriptor vectors and the descriptor vector of the query image. The distances are sorted in ascending order and the first images are retrieved. The retrieval results for the query image “object13_0” (Fig. 13) from the COIL-100 database are compared with those obtained using very recent invariant moments such as CRMs [31], WRSLMs [32], FrCbMs [33], FrDTMs [34] and FrPHTMs [35].
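The two-phase retrieval scheme can be sketched as follows. The descriptor vectors are assumed to have been computed already (here they are toy two-dimensional vectors, not real AGCMIs), and precision and recall follow their usual definitions: relevant retrieved images divided by retrieved images, and relevant retrieved images divided by all relevant images in the database. The names `retrieve` and `precision_recall` are hypothetical.

```python
from math import sqrt


def euclidean_distance(x, y):
    """Euclidean distance between two descriptor vectors of equal length."""
    return sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def retrieve(query_vector, database, top_k):
    """Search phase: rank indexed images by distance to the query.

    `database` is a list of (label, descriptor_vector) pairs built during
    the indexing phase. Returns the labels of the `top_k` nearest images.
    """
    ranked = sorted(database,
                    key=lambda item: euclidean_distance(query_vector, item[1]))
    return [label for label, _ in ranked[:top_k]]


def precision_recall(retrieved_labels, query_label, total_relevant):
    """Precision and recall of one retrieval result."""
    hits = sum(1 for label in retrieved_labels if label == query_label)
    return hits / len(retrieved_labels), hits / total_relevant


# Toy index with three "cup" images and two "car" images.
index = [("cup", [0.0, 0.1]), ("cup", [0.1, 0.0]), ("car", [1.0, 1.0]),
         ("cup", [0.9, 1.1]), ("car", [1.1, 0.9])]
top = retrieve([0.0, 0.0], index, top_k=3)
precision, recall = precision_recall(top, "cup", total_relevant=3)
```

Sweeping `top_k` from 1 to the database size and recording the pair at each step yields the points of a recall–precision curve.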

The performance is measured with precision and recall, which are defined in Eqs. (45) and (46). Under the experimental conditions described above, precision and recall are calculated and the results are presented as recall–precision graphs in Fig. 14. The comparison shows that our orthogonal invariant moments perform better than the other tested moments. Finally, the proposed AGCMIs are robust to image transformations.

5.4 Image classification

2D image classification is a computer vision task that assigns a 2D image to a class according to its content. A classification system can be divided into two main phases. The first is the feature extraction step, which consists of calculating the descriptor vectors of the images and storing them in a database. The second phase consists of applying a classification algorithm.

The extraction of descriptor vectors of the image is an operation that makes it possible to convert an image into a vector of real or complex values that can serve as a signature for an image. That is to say, for an accurate system of classification, the used descriptor vector must be invariant to the three image transformations (translation, rotation and scale), which means that, the descriptor vectors of the image and the transformed image by translation, rotation or scale must be equal. In the proposed classification system, we use the proposed orthogonal adapted Gegenbauer–Chebyshev invariant moments (AGCMIs) presented in Eq. (39) to extract the descriptor vectors of the images as follows:

For the second phase, we use the fuzzy K-means (FKM) algorithm [29, 38] to classify image databases for which the number of classes is known in advance. This technique is based on the notion of a similarity measure or distance between two descriptor vectors. In this approach, we use the Euclidean distance. Let \(X = \left\{ {X_{j} \left( {f_{j} } \right),j = 1, \ldots ,N} \right\}\) be the set of descriptor vectors of the considered images, where \(X_{j} \left( {f_{j} } \right) = \left( {x_{1j} ,x_{2j} , \ldots ,x_{dj} } \right) ^{t}\) is the descriptor vector of an image \(f_{j}\) of the database, and let \(C = \left\{ {C_{i} ;i = 1, \ldots ,c} \right\}\) be a set of unknown prototype vectors, where \(C_{i} = \left( {c_{i1} ,c_{i2} , \ldots ,c_{id} } \right) ^{t}\) characterizes class \(i\). In this method, each element of \(X\) is assigned to one and only one of the classes in \(C\). In this case, the functional to be minimized is:

where \(m\) is any real number greater than 1, \(X_{j}\) is the \(j^{th}\) \(d\)-dimensional descriptor vector, \(C_{i}\) is the \(d\)-dimensional center of cluster \(i\), and the fuzzy partition matrix \(U = \left( {u_{ij} } \right)_{c \times N}\) satisfies the following conditions.

The optimal points of the objective function (48) can be found by adjoining the constraint (50) to J by means of Lagrange multipliers:

where \(\lambda = \left( {\lambda_{j} } \right), j = 1, \ldots , N\) are the Lagrange multipliers for the N constraints in Eq. (50). By differentiating \(J\left( {C,U,X,\lambda } \right)\) with respect to all its arguments, the necessary conditions for J to reach its minimum are

This iteration will stop when

where \(\varepsilon\) is a termination threshold between 0 and 1 and \(k\) is the iteration step. This procedure converges to a local minimum or a saddle point of \(J\left( {C, U, X} \right)\).

Our objective is to focus on using the invariant moments to classify an image into one of many classes based on shape.

According to Algorithm 1, the degrees of membership of each image to the clusters are initialized randomly (iteration \(l = 0\)) such that \(U^{\left( 0 \right)} = \left( {u_{ij}^{\left( 0 \right)} } \right)\) satisfies conditions (49), (50) and (51). In each iteration \(l \left( {l = 1,2 \ldots} \right),\) we compute in turn the cluster centers \(C_{i}^{\left( l \right)}\) and the degrees of membership \(u_{ij}^{\left( l \right)}\) as follows:

1. Update the cluster centers:

$$C_{i}^{\left( l \right)} = \frac{{\mathop \sum \nolimits_{j = 1}^{N} \left( {u_{ij}^{{\left( {l - 1} \right)}} } \right)^{m} X_{j} }}{{\mathop \sum \nolimits_{j = 1}^{N} \left( {u_{ij}^{{\left( {l - 1} \right)}} } \right)^{m} }} , \quad 1 \le i \le c.$$

2. Compute the squared Euclidean distances:

$$d_{ij}^{2} = d^{2} \left( {X_{j} , C_{i}^{\left( l \right)} } \right) = \left( {X_{j} - C_{i}^{\left( l \right)} } \right)^{t} \left( {X_{j} - C_{i}^{\left( l \right)} } \right) ;\quad 1 \le i \le c , 1 \le j \le N.$$

3. Update the degrees of membership:

$$u_{ij}^{\left( l \right)} = \frac{1}{{\mathop \sum \nolimits_{k = 1}^{c} \left( {\frac{{d_{ij} }}{{d_{kj} }}} \right)^{{\frac{2}{{\left( {m - 1} \right)}}}} }}$$

The algorithm stops when the condition \(\left\| {{\text{U}}^{{\left( {\text{l}} \right)}} - {\text{U}}^{{\left( {{\text{l}} - 1} \right)}} } \right\| = max_{i,j} \left| {u_{ij}^{\left( l \right)} - u_{ij}^{{\left( {l - 1} \right)}} } \right| < \varepsilon\) is satisfied.
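The three update steps and the stopping rule can be put together in a short routine. The following is a minimal sketch, not the authors' MATLAB implementation: it assumes random initial memberships normalized so that the memberships of each image sum to one, squared Euclidean distances, and a guard for the case where a data point coincides with a center. The name `fuzzy_k_means` and its default parameters are chosen for this illustration.

```python
import random


def fuzzy_k_means(data, c, m=2.0, eps=1e-5, max_iter=100, seed=0):
    """Fuzzy K-means on a list of d-dimensional vectors.

    Returns (centers, u), where u[i][j] is the membership of vector j in cluster i.
    """
    rng = random.Random(seed)
    n, dim = len(data), len(data[0])

    # Iteration 0: random memberships, each column normalized to sum to 1.
    u = [[rng.random() + 1e-12 for _ in range(n)] for _ in range(c)]
    for j in range(n):
        column_sum = sum(u[i][j] for i in range(c))
        for i in range(c):
            u[i][j] /= column_sum

    centers = []
    for _ in range(max_iter):
        # Step 1: cluster centers as membership-weighted means.
        centers = []
        for i in range(c):
            weights = [u[i][j] ** m for j in range(n)]
            total = sum(weights)
            centers.append([sum(weights[j] * data[j][k] for j in range(n)) / total
                            for k in range(dim)])

        # Step 2: squared Euclidean distances d_ij^2.
        d2 = [[sum((data[j][k] - centers[i][k]) ** 2 for k in range(dim))
               for j in range(n)] for i in range(c)]

        # Step 3: membership update; (d_ij / d_kj)^(2/(m-1)) = (d2_ij / d2_kj)^(1/(m-1)).
        new_u = [[0.0] * n for _ in range(c)]
        for j in range(n):
            at_center = [i for i in range(c) if d2[i][j] == 0.0]
            if at_center:
                # The point sits exactly on one or more centers: share full membership.
                for i in at_center:
                    new_u[i][j] = 1.0 / len(at_center)
            else:
                for i in range(c):
                    new_u[i][j] = 1.0 / sum((d2[i][j] / d2[k][j]) ** (1.0 / (m - 1.0))
                                            for k in range(c))

        # Stop when the largest membership change falls below eps.
        change = max(abs(new_u[i][j] - u[i][j]) for i in range(c) for j in range(n))
        u = new_u
        if change < eps:
            break
    return centers, u
```

A hard class label for image `j` can then be taken as the index `i` with the largest membership `u[i][j]`.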

If the value of \(k\) equals the number of classes in the database, the FKM algorithm returns \(k\) homogeneous classes (each class contains the same object). In this case, the FKM algorithm works well as a classification method.

To test our image classification system presented in Fig. 15, which is based on the proposed orthogonal invariant moments and the fuzzy K-means algorithm (FKM), we use three image databases. The first database is “MPEG7-CE Shape” [39]. This database contains images of geometrically deformed objects. In this experiment, we consider ten classes of objects, each containing 20 images. The second is the Columbia “Object Image Library (COIL-20) database” [40], which consists of 1440 images of size \(128 \times 128\), with 72 images for each object. The third image database is the “ORL-faces database” [41]. This database contains ten different images of the face of each person, for a total of 400 images. All images of this database have the size \(92 \times 112\).

We tested the performance of the adapted Gegenbauer–Chebyshev orthogonal moment invariants (AGCMIs) and compared it with the performance of existing orthogonal moments such as the Chebyshev rational moments (CRMs) [31], the weighted radial shifted Legendre moments (WRSLMs) [32], the fractional-order Chebyshev moments (FrCbMs) [33], the fractional discrete Tchebyshev moments (FrDTMs) [34] and the fractional-order polar harmonic transform moments (FrPHTMs) [35]. This study was carried out on the three databases above with salt-and-pepper noise added at densities of 1%, 2%, 3% and 4%. We use the precision defined in Eq. (56) to measure the performance of each image classification system.

Tables 6, 7 and 8 present the image classification results for the three databases. These results show that our classification system based on the orthogonal invariant moments (AGCMIs) and the fuzzy k-means algorithm (FKM) performs better than the systems based on the other invariant moments. The recognition accuracy decreases as the noise density increases.

In addition, the proposed orthogonal invariant moments (AGCMIs) remain robust to the three geometric transformations under noisy conditions, and their recognition accuracy compares favorably with that of the other tested descriptors.

5.5 CPU time

CPU time is a quantitative measure used to evaluate the speed of an algorithm. In this subsection, we perform two experiments, each on a different image dataset. All numerical experiments are performed in MATLAB 2018 on an HP PC with an Intel(R) Core(TM) i5-5200U CPU @ 2.20 GHz, 4 GB of RAM and the Windows 7 operating system. The first experiment is performed with the COIL-20 database. In this experiment, the proposed orthogonal moment invariants AGCMIs, the orthogonal Chebyshev rational moments (CRMs) [31], the weighted radial shifted Legendre moments (WRSLMs) [32], the fractional-order orthogonal Chebyshev moments (FrCbMs) [33], the orthogonal fractional discrete Tchebyshev moments (FrDTMs) [34] and the fractional-order polar harmonic transform moments (FrPHTMs) [35] are used to compute the moments of the images of the COIL-20 dataset for moment orders ranging from 0 to 40 in steps of 10. We report the average time needed for a single image, obtained by dividing the overall time by the number of images in the dataset. The average CPU times for these methods are presented in Table 9. In the second experiment, we use the test set of the MPEG7-CE Shape database. We follow the same steps as in the first experiment to estimate the processing time of each method on this set. The average CPU times for this experiment are presented in Table 10. It is clear that the proposed moment invariants AGCMIs are faster than the other tested methods.
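The timing protocol described above (overall time divided by the number of images, repeated for orders 0, 10, 20, 30 and 40) can be sketched in Python as follows. This is a hedged illustration rather than the authors' MATLAB code: `compute_descriptor` is a hypothetical placeholder for any of the tested moment computations, and `time.process_time` is used on the assumption that process CPU time is the quantity of interest.

```python
import time


def average_cpu_time(compute_descriptor, images):
    """Average CPU time per image: overall CPU time divided by the image count."""
    start = time.process_time()
    for img in images:
        compute_descriptor(img)
    elapsed = time.process_time() - start
    return elapsed / len(images)


def timing_by_order(compute_descriptor, images, orders=range(0, 41, 10)):
    """Average CPU time per image for each moment order.

    compute_descriptor(image, order) is a hypothetical callable standing in
    for the moment computation being timed.
    """
    return {
        order: average_cpu_time(lambda im: compute_descriptor(im, order), images)
        for order in orders
    }
```

Calling `timing_by_order` once per method and per dataset would give one row of a table such as Table 9 or Table 10.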

6 Conclusion

A new set of orthogonal adapted Gegenbauer–Chebyshev moments for image representation and recognition is presented. The experiments show that the proposed image descriptors have many useful characteristics. First, the new adapted Gegenbauer–Chebyshev polynomials are invariant under the three geometric transformations and orthogonal over the rectangular domain \(\left[ {0, N} \right] \times \left[ {0,M} \right]\) of the image, which significantly increases the recognition capabilities of the proposed image descriptors. Second, these descriptors are computed using a highly accurate and numerically stable method. Third, the proposed image descriptors are robust against different kinds of noise. Fourth, the fast computation of the proposed image descriptors makes them suitable for real-time applications. Based on these characteristics, the recognition performance of the proposed image descriptors exceeds that of the existing moment-based image descriptors. Also, the proposed descriptors remain almost unchanged under rotation, scaling and translation, which ensures their usefulness in pattern recognition applications.

The proposed orthogonal Gegenbauer–Chebyshev moments show a significant improvement in image reconstruction capability and stability for both lower and higher moment orders. This is a very attractive property for image processing applications. Based on their characteristics, the proposed orthogonal moments AGCMs and their image descriptors constitute promising tools for many pattern recognition and image processing applications.

References

Wang X, Xiao B, Ma JF, Bi XL (2007) Scaling and rotation invariant analysis approach to object recognition based on Radon and Fourier–Mellin transforms. Pattern Recogn 40(12):3503–3508

Hu MK (1962) Visual pattern recognition by moment invariants. IRE Trans Inform Theory 8(2):179–187

Wang L, Healey G (1998) Using Zernike moments for the illumination and geometry invariant classification of multispectral texture. IEEE Trans Image Process 7(2):196–203

Papakostas GA, Boutalis YS, Karras DA, Mertzios BG (2007) A new class of Zernike moments for computer vision applications. Inf Sci 177(13):2802–2819

Lin H, Si J, Abousleman GP (2008) Orthogonal rotation invariant moments for digital image processing. IEEE Trans Image Process 17(3):272–282

Chen Z, Sun SK (2010) A Zernike moment phase descriptor for local image representation and matching. IEEE Trans Image Process 19(1):205–219

Singh C (2006) Improved quality of reconstructed images using floating point arithmetic for moment calculation. Pattern Recogn 39(11):2047–2064

Honarvar B, Paramesran R, Lim C-L (2014) Image reconstruction from a complete set of geometric and complex moments. Signal Process 98:224–232

Rahmalan H, Abu NA, Wong SL (2010) Using Tchebichef moment for fast and efficient image compression. Pattern Recognit Image Anal 20(4):505–512

Luo L-M, Xie X-H, Bao X-D (1994) A modified moment-based edge operator for rectangular pixel image. IEEE Trans Circuits Syst Video Technol 4(6):552–554

Choi M-S, Kim W-Y (2002) A novel two stage template matching method for rotation and illumination invariance. Pattern Recogn 35(1):119–129

Shu HZ, Luo LM, Coatrieux JL (2007) Moment-based approaches in imaging. Part 1. Basic features [A look At …]. IEEE Eng Med Biol Mag 26(5):70–74

Flusser J, Suk T, Zitová B (2009) Moments and moment invariants in pattern recognition. Wiley, New York

Rizzini DL (2018) Angular Radon spectrum for rotation estimation. Pattern Recogn 84:182–196

Teague MR (1980) Image analysis via the general theory of moments. J Opt Soc Am 70(8):920–930

Belkasim S, Hassan E, Obeidi T (2007) Explicit invariance of Cartesian Zernike moments. Pattern Recogn Lett 28(15):1969–1980

Chong C-W, Raveendran P, Mukundan R (2003) The scale invariants of pseudo-Zernike moments. Pattern Anal Appl 6:176–184

Xiao B, Ma JF, Wang X (2010) Image analysis by Bessel–Fourier moments. Pattern Recogn 43(8):2620–2629

Sheng Y, Shen L (1994) Orthogonal Fourier–Mellin moments for invariant pattern recognition. J Opt Soc Am A 11(6):1748–1757

Mukundan R, Ong SH, Lee PA (2001) Image analysis by Tchebichef moments. IEEE Trans Image Process 10(9):1357–1364

Yap PT, Paramesran R, Ong SH (2003) Image analysis by Krawtchouk moments. IEEE Trans Image Process 12(11):1367–1377

Yap PT, Paramesran R, Ong SH (2007) Image analysis using Hahn moments. IEEE Trans Pattern Anal Mach Intell 29(11):2057–2062

Hosny KM (2011) Image representation using accurate orthogonal Gegenbauer moments. Pattern Recogn Lett 32(6):795–804

Hosny KM (2014) New set of Gegenbauer moment invariants for pattern recognition applications. Arab J Sci Eng 39:7097–7107

Zhu H (2012) Image representation using separable two-dimensional continuous and discrete orthogonal moments. Pattern Recogn 45(4):1540–1558

Papakostas GA, Karakasis EG, Koulouriotis DE (2010) Novel moment invariants for improved classification performance in computer vision applications. Pattern Recogn 43(1):58–68

Hmimid A, Sayyouri M, Qjidaa H (2015) Fast computation of separable two-dimensional discrete invariant moments for image classification. Pattern Recogn 48(2):509–521

Dunn JC (1973) A fuzzy relative of the ISODATA process and its use in detecting compact well-separated clusters. J Cybern 3(3):32–57

Ben-Dor A, Shamir R, Yakhini Z (1999) Clustering gene expression patterns. J Comput Biol 6:281–297

Erdelyi A, Magnus W, Oberhettinger F, Tricomi FG (1953) Higher transcendental functions, 2. McGraw-Hill, New York

Hosny KM, Darwish MM (2017) Comments on “Robust circularly orthogonal moment based on Chebyshev rational function” [Digit. Signal Process. 62 (2017) 249–258]. Digit Signal Process 68:152–153. https://doi.org/10.1016/j.dsp.2017.06.002

Xiao B, Wang G, Li W (2014) Radial shifted Legendre moments for image analysis and invariant image recognition. Image Vis Comput 32(12):994–1006

Benouini R, Batioua I, Zenkouar K, Zahi A, Najah S, Qjidaa H (2019) Fractional-order orthogonal Chebyshev moments and moment invariants for image representation and pattern recognition. Pattern Recogn 86:332–343

Xiao B, Luo J, Bi X, Li W, Chen B (2020) Fractional discrete Tchebyshev moments and their applications in image encryption and watermarking. Inf Sci 516:545–559

Hosny KM, Darwish MM, Aboelenen T (2020) Novel fractional-order polar harmonic transforms for gray-scale and color image analysis. J Frankl Inst 357(4):2533–2560

El Mallahi M, El-Mekkaoui J, Zouhri A, Amakdouf H, Qjidaa H (2018) Rotation scaling and translation invariants of 3D radial shifted Legendre moments. Int J Autom Comput 15(2):169–180

Guo L, Zhu M (2011) Quaternion Fourier–Mellin moments for color images. Pattern Recogn 44(2):187–195

Hjouji A, Jourhmane M, EL-Mekkaoui J, Qjidaa H, ELKHalfi A (2018) Image clustering based on Hermetian positive Definite matrix and radial Jacobi moments. In: International conference on intelligent systems and computer vision (ISCV), 17751499

http://www.cis.temple.edu/~latecki/TestData/mpeg7shapeB.tar.gz

http://www.cs.columbia.edu/CAVE/software/softlib/coil-20.php

http://www.cl.cam.ac.uk/research/dtg/attarchive/facedatabase.html

Hjouji, A., Bouikhalene, B., EL-Mekkaoui, J. et al. New set of adapted Gegenbauer–Chebyshev invariant moments for image recognition and classification. J Supercomput 77, 5637–5667 (2021). https://doi.org/10.1007/s11227-020-03450-4