Abstract

The power of the Hellmann–Feynman theorem is primarily conceptual. It provides insight into, and understanding of, molecular properties and behavior. In this overview, we discuss several examples of concepts coming out of the theorem. (1) It shows that the forces exerted upon the nuclei in a molecule, which hold the molecule together, are purely Coulombic in nature. (2) It indicates whether the role of the electronic charge in different portions of a molecule’s space is bond-strengthening or bond-weakening. (3) It demonstrates the importance of the electrostatic potentials at the nuclei of a molecule, and that the total energies of atoms and molecules can be expressed rigorously in terms of just these potentials, with no explicit reference to electron–electron interactions. (4) It shows that dispersion forces arise from the interactions of nuclei with their own polarized electronic densities. Our discussion focuses particularly upon the contributions of Richard Bader in these areas.

An underappreciated theorem

In the early days of quantum theory, five researchers (three of whom became Nobel Laureates) independently derived a rather straightforward expression [1,2,3,4,5]. If H is the Hamiltonian operator of a system with a normalized eigenfunction Ψ and energy eigenvalue E, and λ is any parameter that appears explicitly in the Hamiltonian, then

$$\frac{\partial E}{\partial \lambda} = \left\langle \Psi \left| \frac{\partial H}{\partial \lambda} \right| \Psi \right\rangle \tag{1}$$

The best-known formulation of Eq. (1) was reported separately by Hellmann [6] and by Feynman [5], making use of the fact that the negative gradient of an energy is a force. Thus, if the parameter λ is set equal to a coordinate of a nucleus A in a molecule, then Eq. (1) gives the corresponding component of the force exerted upon A by the electrons and the other nuclei. Doing this for each coordinate yields the total force upon that nucleus due to the electrons and other nuclei (in atomic units),

$$\mathbf{F}_A = -\nabla_{\mathbf{R}_A} E = Z_A \int \rho(\mathbf{r})\, \frac{\mathbf{r} - \mathbf{R}_A}{|\mathbf{r} - \mathbf{R}_A|^{3}}\, d\mathbf{r} \;-\; Z_A \sum_{B \neq A} Z_B\, \frac{\mathbf{R}_B - \mathbf{R}_A}{|\mathbf{R}_B - \mathbf{R}_A|^{3}} \tag{2}$$

where $Z_A$ and $\mathbf{R}_A$ are the nuclear charge and position of nucleus A, and ρ(r) is the electronic density (Eq. (3)). This special case of Eq. (1) is known as the Hellmann–Feynman theorem (or sometimes the “electrostatic theorem”). Detailed derivations of Eqs. (1) and (2) are available in several sources besides the original papers [7,8,9].
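Eq. (1) can be checked numerically for any finite-dimensional Hamiltonian. The sketch below is only an illustration under assumed inputs: a hypothetical family H(λ) = H₀ + λV built from random real symmetric 6 × 6 matrices (not a molecular Hamiltonian), for which ∂H/∂λ = V. It compares a central finite-difference derivative of the lowest eigenvalue with the expectation value on the right-hand side of Eq. (1).

```python
import numpy as np

def ground_state(H):
    """Lowest eigenvalue and its normalized eigenvector for a real symmetric matrix."""
    energies, vectors = np.linalg.eigh(H)   # eigenvalues are returned in ascending order
    return energies[0], vectors[:, 0]

def hellmann_feynman_check(H0, V, lam, h=1e-5):
    """Compare dE/dλ with <Ψ|∂H/∂λ|Ψ> for the hypothetical family H(λ) = H0 + λ V."""
    E_plus, _ = ground_state(H0 + (lam + h) * V)
    E_minus, _ = ground_state(H0 + (lam - h) * V)
    dE_numeric = (E_plus - E_minus) / (2.0 * h)   # central finite difference
    _, psi = ground_state(H0 + lam * V)
    dE_hf = psi @ V @ psi                         # right-hand side of Eq. (1), since ∂H/∂λ = V
    return dE_numeric, dE_hf

rng = np.random.default_rng(0)
A = rng.normal(size=(6, 6))
B = rng.normal(size=(6, 6))
H0 = 0.5 * (A + A.T)   # symmetrize to obtain real symmetric (Hermitian) matrices
V = 0.5 * (B + B.T)
numeric, hf = hellmann_feynman_check(H0, V, lam=0.3)
print(f"finite difference: {numeric:.8f}   Eq. (1): {hf:.8f}")
```

The two numbers agree to roughly the accuracy of the finite difference, as Eq. (1) requires for an exact, nondegenerate eigenstate; with an approximate Ψ such agreement is not guaranteed in general.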

The force described by Eq. (2) is purely Coulombic. Thus the Hellmann–Feynman theorem shows that the forces felt by any nucleus in a system of nuclei and electrons are classically Coulombic: attractive interactions with the electrons and repulsive ones with the other nuclei. All that is needed to determine these forces is the electronic density, together with the charges and positions of the nuclei.

The bonding in a molecule can accordingly be explained in terms of Coulomb’s Law. Since the only attractive force in chemistry is between the nuclei and the electrons [10, 11], it seems difficult to avoid the conclusion that this is what holds a molecule together. In fact, as early as 1928 London explained the bonding in H₂ in terms of a “bridge” of electronic density attracting the nuclei [12]. (For a translation and more complete discussion, see Bader [13].) On the other hand, one could instead focus upon the lowering of the total energy as what drives chemical bonding, or upon the virial theorem (see below). Of course, the total energy and the forces are linked by Eq. (2). The point is that there are different ways of addressing chemical bonding, and to claim that any one of them resolves “the nature of the chemical bond” is misleading.
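A toy calculation shows how Eq. (2) turns a density into a force. In this sketch (our simplification, not a method from the text) the continuous ρ(r) is replaced by a few point “packets” of electronic charge, so the integral becomes a sum; positions are in bohr and forces in hartree/bohr. Placing q electrons at the midpoint of two protons 1.4 bohr apart (roughly the H₂ separation) mimics London’s density “bridge”.

```python
import numpy as np

def force_on_nucleus(a, nuclei, charges, e_points, e_counts):
    """Eq. (2) in atomic units, with rho(r) replaced by point packets of electronic charge.

    nuclei: (M, 3) nuclear positions; charges: (M,) nuclear charges Z;
    e_points: (K, 3) packet positions; e_counts: (K,) electrons in each packet.
    """
    RA, ZA = nuclei[a], charges[a]
    # Attraction toward the electronic charge: Z_A * sum_k n_k (r_k - R_A) / |r_k - R_A|^3
    d = e_points - RA
    dist3 = np.linalg.norm(d, axis=1)[:, None] ** 3
    F = ZA * np.sum(e_counts[:, None] * d / dist3, axis=0)
    # Repulsion from the other nuclei: Z_A * Z_B (R_A - R_B) / |R_A - R_B|^3
    for b, (RB, ZB) in enumerate(zip(nuclei, charges)):
        if b != a:
            s = RA - RB
            F += ZA * ZB * s / np.linalg.norm(s) ** 3
    return F

# Two protons on the z axis; nucleus 0 sits at negative z, so F_z > 0 pulls it toward nucleus 1
nuclei = np.array([[0.0, 0.0, -0.7], [0.0, 0.0, 0.7]])
charges = np.array([1.0, 1.0])
midpoint = np.zeros((1, 3))
for q in (0.1, 0.25, 0.5):
    F = force_on_nucleus(0, nuclei, charges, midpoint, np.array([q]))
    print(f"bridge charge {q:4.2f} e  ->  F_z on nucleus 0 = {F[2]:+.4f}")
```

In this crude model the force on nucleus 0 points toward its partner once q exceeds 1/4, because a charge at half the internuclear distance attracts each nucleus four times as strongly, per unit charge, as the other proton repels it.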

Despite its illustrious parentage, the Hellmann–Feynman theorem has, overall, not received the respect that it deserves. In fact, Coulson and Bell concluded in 1945, just 6 years after Feynman’s derivation, that it was invalid [14]; their argument was refuted in 1951 by Berlin [15], who also demonstrated how the theorem can be used to understand the bonding in molecules. About a decade later, Bader considerably extended Berlin’s approach [16,17,18,19,20]. In 1962, Wilson used the Hellmann–Feynman theorem to derive an exact formula for molecular energies [21]. In 1972, Slater declared the Hellmann–Feynman and the virial theorems to be “two of the most powerful theorems applicable to molecules and solids” [22]. Nevertheless, in 1966, the theorem was described as “too trivial to merit the term ‘theorem’” [23].

Many theoreticians appear to have agreed with the “trivial” label. Deb observed in 1981 that “the apparent simplicity of the H–F theorem has evoked some skepticism and suspicion” [24]. We agree with Deb. The advice of Newton (“Nature is pleased with simplicity.”) and Einstein (“Nature is the realization of the simplest conceivable mathematical ideas.”) [25] is unfortunately generally ignored. In 2005, Fernández Rico et al. noted, with respect to the Hellmann–Feynman theorem, that “the possibilities that it opens up have been scarcely exploited, and today the theorem is mostly regarded as a scientific curiosity” [26]. One exception is Richard Bader, who recognized the conceptual power of the theorem for understanding molecular properties and did exploit it, as we shall show.

Coulombic forces in molecules

Many theoreticians find the Coulombic interpretation of bonding to be unacceptable. The idea that something as simple and ancient as Coulomb’s Law (~ 1785) is sufficient to explain what holds molecules together is not met with favor, even though the derivation of Eq. (2) follows directly from the fact that the potential energy terms in a molecular Hamiltonian are purely Coulombic [7,8,9]. A Coulombic interpretation seems to ignore such revered quantum mechanical concepts as exchange, Pauli repulsion, orbital interaction, etc.

The problem is a failure to distinguish between the mathematics that produces a wave function, which in itself has no physical significance, and the electronic density, which does. Exchange and Pauli repulsion play their roles in obtaining a proper wave function and a good approximation to the electronic density; they do not correspond to physical forces [7, 8, 15, 22, 27]. Orbitals are simply mathematical constructs that provide a convenient means of expressing a molecular wave function; they are not physically real [28,29,30], as shown by the fact that one set of orbitals can be transformed into another, quite different set without affecting the total electronic density of the system. As Levine has pointed out, “there are no ‘mysterious quantum-mechanical forces’ acting in molecules” [8].
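The invariance of the density under orbital transformations can be seen directly. A minimal sketch, assuming real, orthonormal orbitals of a single Slater determinant sampled on a hypothetical grid, so that ρ = Σᵢ|φᵢ|² with one electron per orbital: mixing the orbitals with any orthogonal matrix changes every orbital but leaves ρ unchanged, because the transformation matrix cancels against its transpose.

```python
import numpy as np

rng = np.random.default_rng(1)
n_grid, n_orb = 200, 3   # hypothetical grid size and number of occupied orbitals

# Orthonormal real "orbitals" as columns, built from random vectors via a QR factorization
orbitals, _ = np.linalg.qr(rng.normal(size=(n_grid, n_orb)))
# An arbitrary orthogonal matrix that mixes the occupied orbitals among themselves
U, _ = np.linalg.qr(rng.normal(size=(n_orb, n_orb)))
new_orbitals = orbitals @ U

# One electron per orbital: rho = sum_i |phi_i|^2 at every grid point
rho_old = np.sum(orbitals ** 2, axis=1)
rho_new = np.sum(new_orbitals ** 2, axis=1)

print("largest change in any orbital value:", np.max(np.abs(new_orbitals - orbitals)))
print("largest change in the density:      ", np.max(np.abs(rho_new - rho_old)))
```

The first number is of the order of the orbital values themselves, while the second is at the level of floating-point rounding.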

Schrödinger was already aware of the problem of confusing mathematical procedure with physical reality. Bader nicely describes the evolution of Schrödinger’s thinking [31]. Initially, Schrödinger attributed physical meaning to wave functions. But by the fourth of his classic 1926 papers in Annalen der Physik [32], he had concluded that the wave function is not a function in real space, except for a one-electron system. What is real is what Schrödinger called the density of electricity, which he defined essentially by Eq. (3); it is what we now know as the average electronic density ρ(r) at a point r,

$$\rho(\mathbf{r}) = N \int \cdots \int |\Psi(\mathbf{r}, \mathbf{r}_2, \ldots, \mathbf{r}_N)|^2 \, d\mathbf{r}_2 \cdots d\mathbf{r}_N \tag{3}$$

where N is the number of electrons.
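Eq. (3) amounts to integrating |Ψ|² over all but one electron’s coordinates and multiplying by N. The sketch below does this on a grid for an assumed two-electron model in one dimension (spin ignored), with a hypothetical symmetric spatial function built from two Gaussians; the resulting ρ integrates to N = 2, as it must.

```python
import numpy as np

x = np.linspace(-8.0, 8.0, 801)   # 1D grid for a hypothetical model (atomic units)
dx = x[1] - x[0]

# Two hypothetical one-electron functions (unnormalized Gaussians)
phi_a = np.exp(-(x + 1.0) ** 2)
phi_b = np.exp(-0.5 * (x - 1.0) ** 2)

# Symmetric two-electron spatial function Psi(x1, x2): rows index x1, columns index x2
psi = np.outer(phi_a, phi_b) + np.outer(phi_b, phi_a)
psi /= np.sqrt(np.sum(psi ** 2) * dx * dx)   # normalize: double integral of |Psi|^2 equals 1

N = 2
rho = N * np.sum(psi ** 2, axis=1) * dx      # Eq. (3): integrate |Psi|^2 over x2, multiply by N

print("integral of rho:", np.sum(rho) * dx)  # equals N up to rounding
```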

An important early application of the Hellmann–Feynman theorem to chemical bonding was made by Berlin in 1951 [15]. He used the theorem and Coulomb’s Law to show how a chemical bond is strengthened or weakened by the electronic charge in different regions of a molecule. Berlin demonstrated that whereas the electronic charge in the internuclear region clearly pulls the two nuclei together and strengthens the bonding, the charge beyond each nucleus may be either bond-strengthening or bond-weakening, depending upon how close it is to the extensions of the internuclear axis.

Such reasoning can explain, for instance, why the bond in CO⁺ is stronger than in CO [33]. The most energetic electrons in CO are in the carbon lone pair. Since they are concentrated along the extension of the OC axis and are closer to the carbon than to the oxygen, they tend to pull the carbon away from the oxygen, thereby weakening the bond [34]. In forming CO⁺, one of these electrons is lost, the bond-weakening effect is diminished, and the bond becomes stronger.
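Berlin’s partition of space can be sketched with a simple sign test. For a unit of electronic charge at a point (z, x), where z runs along the internuclear axis and x is the distance from it, the function below returns the component of its Coulombic pull that draws the two nuclei together. Averaging the pulls on the two nuclei with equal weights is an assumption of this sketch for unlike nuclei; for like nuclei the result is proportional to Z(cos θ_A/r_A² + cos θ_B/r_B²), with each θ measured from the direction toward the other nucleus. Positive values mark bond-strengthening regions, negative values bond-weakening ones.

```python
def binding_function(z, x, z_a, z_b, Z_a, Z_b):
    """Pull (atomic units, per unit electronic charge at (z, x)) drawing the nuclei together.

    Nuclei lie on the z axis with z_a < z_b; x is the distance from the axis.
    Positive: bond-strengthening. Negative: bond-weakening.
    """
    r_a3 = ((z - z_a) ** 2 + x ** 2) ** 1.5
    r_b3 = ((z - z_b) ** 2 + x ** 2) ** 1.5
    pull_a = Z_a * (z - z_a) / r_a3   # positive when nucleus A is pulled toward B (+z)
    pull_b = Z_b * (z_b - z) / r_b3   # positive when nucleus B is pulled toward A (-z)
    return 0.5 * (pull_a + pull_b)    # equal-weight average (assumption for unlike nuclei)

# H2-like: protons at z = -0.7 and +0.7 bohr
for point in [(0.0, 0.0), (-1.7, 0.0), (-1.7, 3.0)]:
    print(point, binding_function(*point, -0.7, 0.7, 1.0, 1.0))

# CO-like: C (Z = 6) at z = 0, O (Z = 8) at z = 2.13 bohr; charge on the axis beyond C
print("CO lone-pair region:", binding_function(-1.0, 0.0, 0.0, 2.13, 6.0, 8.0))
```

For the H₂-like pair, charge at the midpoint is binding, charge on the axis 1 bohr beyond a nucleus is antibinding, and charge equally far beyond the nucleus but 3 bohr off the axis is binding again. The last line places charge on the axis beyond the carbon of a CO-like pair, loosely mimicking the carbon lone pair; its negative value corresponds to the bond-weakening effect described above.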

Bader et al. saw the value of this type of analysis for understanding what holds molecules together and extended it considerably to a large number of diatomic molecules [16,17,18,19,20]. They also introduced the idea of using outer contours of molecular electronic densities to define molecules’ surfaces and provide a measure of their sizes [35]. The 0.001 au contour is now widely used for this purpose.
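Locating such a contour is straightforward for a spherical density. As an illustration of our own choosing, the exact hydrogen-atom density ρ(r) = e^(−2r)/π (atomic units) falls to 0.001 au at r = ½ ln(1000/π), about 2.88 bohr or 1.52 Å. The sketch below finds the same radius by bisection, which would apply equally to any monotonically decreasing radial density supplied as a function.

```python
import math

def hydrogen_1s_density(r):
    """Exact ground-state hydrogen-atom density in atomic units: rho(r) = exp(-2r) / pi."""
    return math.exp(-2.0 * r) / math.pi

def contour_radius(density, level=0.001, lo=0.0, hi=20.0, tol=1e-12):
    """Radius at which a monotonically decreasing spherical density equals `level` (bisection)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if density(mid) > level:
            lo = mid   # still inside the contour
        else:
            hi = mid   # outside the contour
    return 0.5 * (lo + hi)

BOHR_TO_ANGSTROM = 0.529177
r = contour_radius(hydrogen_1s_density)
print(f"0.001 au contour: {r:.4f} bohr = {r * BOHR_TO_ANGSTROM:.3f} Angstrom")
```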

The work of Berlin and Bader led to numerous other studies relating electronic densities and Coulombic forces to chemical bonding. See, for example, Fernández Rico et al. [26, 36], Deb [37], Hirshfeld and Rzotkiewicz [38], and Silberbach [39].

Molecular energies

Since the negative gradient of an energy is a force, the total energy of a molecule can in principle be found by integrating the force components, provided that the electronic density is known for different positions of the nuclei. This has indeed been done, by Hurley [41] and by others, as discussed by Deb [37]. Unfortunately, the results are very sensitive to the quality of the electronic density, and thus variationally computed energies are usually more accurate. However, Bader did show, for some small molecules, that allowing the orbital exponents to vary with the internuclear separations improved the Hellmann–Feynman energies and even made them superior to those obtained from simple molecular orbital calculations [42].
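The force-integration route can be illustrated with a model curve. The sketch below assumes a Morse potential with illustrative, roughly H₂-like parameters in atomic units (assumptions, not values from the text) and integrates the corresponding F(R) = −dE/dR inward from a large separation, using E(R) − E(R_far) = ∫ F dR′ from R to R_far. It is not Hurley’s procedure; in practice F(R) would come from Eq. (2) with computed densities, which is exactly where the sensitivity to density quality enters.

```python
import math

# Illustrative Morse parameters in atomic units (assumed, roughly H2-like)
D_E, A_M, R_E = 0.1745, 1.03, 1.40

def energy(R):
    """Model potential-energy curve E(R), approaching zero as R grows."""
    return D_E * (1.0 - math.exp(-A_M * (R - R_E))) ** 2 - D_E

def force(R):
    """F(R) = -dE/dR for the model curve (negative, i.e. attractive, for R > R_E)."""
    x = math.exp(-A_M * (R - R_E))
    return -2.0 * D_E * A_M * (1.0 - x) * x

def energy_from_forces(R, R_far=40.0, n=20000):
    """E(R) - E(R_far) as the integral of F from R to R_far, by the trapezoidal rule."""
    h = (R_far - R) / n
    total = 0.5 * (force(R) + force(R_far))
    total += sum(force(R + k * h) for k in range(1, n))
    return total * h

print("binding energy from integrated forces:", -energy_from_forces(R_E))
print("well depth put into the model:        ", D_E)
```

Integrating down to R_E recovers the model well depth to within the small trapezoidal error, which is the content of the statement that integrating F(R) from R = ∞ to Rₑ gives the bonding energy.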

A very different approach was introduced by Wilson in 1962 [21]. He used the Hellmann–Feynman theorem to derive an exact expression for molecular energy in terms of the electrostatic potentials at the nuclei due to the electrons and other nuclei. These potentials are a purely Coulombic property, which can be obtained from the electronic density and the nuclear charges and positions. Subsequent versions of Wilson’s expression have followed, including extensions to atoms [43, 44]. Applying these exact formulas is challenging, but they are very important conceptually, since they show that atomic and molecular energies can be determined from electronic densities, a one-electron property, with no explicit reference to interelectronic repulsion, a two-electron property. Thus the Hellmann–Feynman theorem can be viewed as foreshadowing the Hohenberg–Kohn theorem [45], which showed that the ground-state electronic density determines all molecular properties.
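The simplest illustration of this idea (ours, not Wilson’s general formula) is a one-electron atom with nuclear charge Z, for which H = −½∇² − Z/r in atomic units. Taking λ = Z in Eq. (1) gives ∂H/∂Z = −1/r, whose expectation value is just the electrostatic potential produced at the nucleus by the electron; for the 1s state this is −⟨1/r⟩ = −Z. Integrating over the nuclear charge, with E(0) = 0, recovers the exact energy:

$$\frac{\partial E}{\partial Z} = \left\langle \Psi \left| -\frac{1}{r} \right| \Psi \right\rangle = -\left\langle \frac{1}{r} \right\rangle_{1s} = -Z, \qquad E(Z) = E(0) + \int_0^{Z} \left(-Z'\right) dZ' = -\frac{Z^2}{2}$$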

These studies stimulated the derivation of a variety of approximate atomic and molecular energy formulas, also focusing upon electrostatic potentials at nuclei, as reviewed by Levy et al. [46] and by Politzer et al. [47, 48]. The results obtained with these formulas for Hartree–Fock wave functions are often significantly better than would be anticipated. This is because whereas Hartree–Fock electronic densities are correct to first order [49], the electrostatic potentials at nuclei are correct to second order [46, 50]. Levy et al. were accordingly able to obtain even partial atomic correlation energies using Hartree–Fock electrostatic potentials at the nuclei [51], even though Hartree–Fock methodology does not include correlation. All of these relationships, whether exact or approximate, demonstrate the fundamental significance of the electrostatic potentials at nuclei [48, 52].

Dispersion

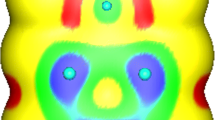

The weak interaction known as dispersion, e.g., between two argon atoms, is commonly interpreted in terms of London’s fluctuating induced dipoles [53, 54], as depicted in structure 1. However, Feynman showed, using the Hellmann–Feynman theorem, that the electronic charge of each atom is in fact slightly polarized toward the other, structure 2, and that it is “the attraction of each nucleus for the distorted charge distribution of its own electrons that gives the attractive 1/R⁷ force” [5]. The London and Feynman interpretations are clearly very different in physical terms (compare 1 and 2), although both lead to a 1/R⁷ dependence of the force.

Bader and Chandra were among the early supporters of Feynman’s view [55], and it has also been confirmed by other studies [56,57,58,59]. Computed electronic densities of the Ar–Ar system show polarization of each atom’s density toward the other, just as predicted by Feynman and shown in 2 [60,61,62]. Nevertheless, Feynman’s explanation of dispersion interactions continues to be widely ignored.

The virial and the Hellmann–Feynman theorems

There is an interesting relationship between the virial and the Hellmann–Feynman theorems. It was already demonstrated by Slater for polyatomic molecules [22], but for simplicity we will discuss it for a diatomic molecule with internuclear separation R, as did Bader [40]. In this case, within the Born–Oppenheimer approximation, the virial theorem can be written as

$$T(R) = -E(R) - R\,\frac{dE(R)}{dR}, \qquad V(R) = 2E(R) + R\,\frac{dE(R)}{dR} \tag{4}$$

in which T(R), E(R), and V(R) are the kinetic, total, and potential energies of the molecule as functions of the internuclear separation. At the equilibrium separation Rₑ, the derivatives in Eq. (4) are zero, and the theorem assumes its more familiar form, T(Rₑ) = −E(Rₑ) = −0.5V(Rₑ).

By Eq. (2), −dE/dR is the Hellmann–Feynman force F(R) exerted on a nucleus at separation R. Thus Eq. (4) can be written as

$$T(R) = -E(R) + R\,F(R), \qquad V(R) = 2E(R) - R\,F(R) \tag{5}$$

F(R) is attractive (pulling the nuclei together) for R > Rₑ and repulsive (tending to separate them) for R < Rₑ. At R = Rₑ, F(Rₑ) = 0; the net force upon any nucleus at equilibrium must be zero. Equation (2) also shows that the bonding energy can be obtained by integrating F(R) from R = ∞ to R = Rₑ.

Bader calculated the changes in T(R), V(R), and E(R) as functions of R for two contrasting diatomic molecules [40]: the very weakly bound Ar₂, and CO, which has one of the strongest covalent bonds known. Qualitatively, the plots for the two molecules are very similar. As R decreases from ∞ to Rₑ, V(R) initially becomes positive but then decreases sharply as R approaches Rₑ, reaching a negative value at Rₑ. T(R) follows the opposite pattern; it is initially negative but increases sharply to a positive value at Rₑ. This similarity, for molecules with such different bonding, supports Slater’s contention that “there is no very fundamental distinction between the van der Waals binding and covalent binding. If we refer them back to the electronic charge distribution, which as we have seen is solely responsible for the interatomic forces, we have the same type of behavior in both cases” [22]. Bader has expressed the same idea [40, 63].
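The same qualitative sign pattern can be generated from Eq. (5) alone. In the sketch below, a Morse curve with illustrative, assumed parameters (atomic units) stands in for E(R); the changes in kinetic and potential energy relative to the separated atoms then follow as ΔT = −ΔE + R·F and ΔV = 2ΔE − R·F, because the separated atoms themselves satisfy the virial theorem and R·F(R) vanishes at large R. This is a model curve, not Bader’s Ar₂ or CO data.

```python
import math

# Illustrative Morse parameters in atomic units (assumed, roughly H2-like)
D_E, A_M, R_E = 0.1745, 1.03, 1.40

def delta_energy(R):
    """Model E(R) measured from the separated atoms."""
    return D_E * (1.0 - math.exp(-A_M * (R - R_E))) ** 2 - D_E

def force(R):
    """F(R) = -dE/dR for the model curve."""
    x = math.exp(-A_M * (R - R_E))
    return -2.0 * D_E * A_M * (1.0 - x) * x

def virial_split(R):
    """Eq. (5) relative to separated atoms: dT = -dE + R*F and dV = 2*dE - R*F."""
    dE, F = delta_energy(R), force(R)
    return -dE + R * F, 2.0 * dE - R * F

for R in (6.0, 4.0, 2.5, R_E):
    dT, dV = virial_split(R)
    print(f"R = {R:4.2f} bohr   dT = {dT:+.4f}   dV = {dV:+.4f}   dE = {delta_energy(R):+.4f}")
```

At long range the model gives ΔT < 0 and ΔV > 0, whereas at R_E it gives ΔT = D_E > 0 and ΔV = −2D_E < 0, the same reversal of signs described for Bader’s plots.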

Bader’s plots for Ar₂ and CO bear directly upon a long-standing argument in the area of chemical bonding. Starting with Hellmann in 1933 [4], some theoreticians have attributed chemical bonding not to electron–nuclear Coulombic attraction, but rather to the decrease in kinetic energy as the space available to the electrons increases in the formation of a molecule [64,65,66,67,68]. The Ar₂ and CO plots show that initially the lowering of the total energy is indeed due to the decreasing kinetic energy, but as Rₑ is approached, it is the decreasing potential energy that dominates and results in a negative total energy. So which feature does one choose to emphasize? Like most academic arguments, this is not likely to be resolved in the near future.

Concluding comments

Quantum chemistry has traditionally focused, with great success, upon energy. The Hellmann–Feynman theorem should not be viewed as an alternative approach, but rather as a complementary one. Its power is conceptual: not in providing better descriptions of molecules and their behavior, but in developing insight into and understanding of their properties. Our purpose in this brief overview has been to demonstrate this conceptual role of the Hellmann–Feynman theorem, and also to point out the contributions made in this area by Richard Bader. For a more detailed recent review, see Politzer and Murray [9].

We conclude by quoting Deb: “we should do well to employ both the energy and the force formulation in our studies of molecular structure and dynamics. The former approach would generally provide more accurate numbers, while the latter should provide a simple unified basis for developing physical insights into different chemical phenomena” [37].

References

Schrödinger E (1926) Quantisierung als Eigenwertproblem. Ann Phys 80:437–490

Güttinger P (1932) Das Verhalten von Atomen im magnetischen Drehfeld. Z Phys 73:169–184

Pauli W (1933) Principles of wave mechanics, Handbuch der Physik 24. Springer, Berlin, p 162

Hellmann H (1933) Zur Rolle der kinetischen Elektronenenergie für die zwischenatomaren Kräfte. Z Phys 85:180–190

Feynman RP (1939) Force in molecules. Phys Rev 56:340–343

Hellmann H (1937) Einführung in die Quantenchemie. Deuticke, Leipzig

Hirschfelder JO, Curtiss CF, Bird RB (1954) Molecular theory of gases and liquids. Wiley, New York

Levine IN (2000) Quantum chemistry, 5th edn. Prentice Hall, Upper Saddle River, NJ

Politzer P, Murray JS (2018) The Hellmann-Feynman theorem - a perspective. J Mol Model 24:266

Lennard-Jones J, Pople JA (1951) The molecular orbital theory of chemical valency. IX. The interaction of paired electrons in chemical bonds. Proc R Soc Lond A 210:190–206

Matta CF, Bader RFW (2006) An experimentalist’s reply to “what is an atom in a molecule?” J Phys Chem A 110:6365–6371

London F (1928) Zur Quantentheorie der homöopolaren Valenzzahlen. Z Phys 46:455–477

Bader RFW (2010) The density in density functional theory. J Mol Struct (Theochem) 943:2–18

Coulson CA, Bell RP (1945) Kinetic energy, potential energy and force in molecule formation. Trans Faraday Soc 41:141–149

Berlin T (1951) Binding regions in diatomic molecules. J Chem Phys 19:208–213

Bader RFW, Jones GA (1961) The Hellmann-Feynman theorem and chemical binding. Can J Chem 39:1253–1265

Bader RFW, Henneker WH, Cade PE (1967) Molecular charge distributions and chemical binding. J Chem Phys 46:3341–3363

Bader RFW, Keaveny I, Cade PE (1967) Molecular charge distributions and chemical binding. II. First-row diatomic hydrides AH. J Chem Phys 47:3381–3402

Cade PE, Bader RFW, Henneker WH, Keaveny I (1969) Molecular charge distributions and chemical binding. IV. The second-row diatomic hydrides AH. J Chem Phys 50:5313–5333

Bader RFW, Bandrauk AD (1968) Molecular charge distributions and chemical binding. III. The isoelectronic series N2, CO, BF, and C2. BeO and LiF. J Chem Phys 49:1653–1665

Wilson EB Jr (1962) Four-dimensional electron density function. J Chem Phys 36:2232–2233

Slater JC (1972) Hellmann-Feynman and virial theorems in the Xα method. J Chem Phys 57:2389–2396

Musher JI (1966) Comment on some theorems of quantum chemistry. Am J Phys 34:267–268

Deb BM (1981) Preface. In: Deb BM (ed) The force concept in chemistry. Van Nostrand Reinhold, New York, p ix

Isaacson W (2007) Einstein: his life and universe. Simon and Schuster, New York, p 549

Fernández Rico J, López R, Ema I, Ramírez G (2005) Chemical notions from the electronic density. J Chem Theory Comput 1:1083–1095

Bader RFW (2006) Pauli repulsions exist only in the eye of the beholder. Chem - Eur J 12:2896–2901

Bachrach SM (1994) Population analysis and electron densities from quantum mechanics. In: Lipkowitz KB, Boyd DB (eds) Reviews in computational chemistry, vol 5. VCH Publishers, New York, ch 3, pp 171–227

Scerri ER (2000) Have orbitals really been observed? J Chem Educ 77:1492–1494

Cramer CJ (2002) Essentials of computational chemistry. Wiley, Chichester, UK, p 102

Bader RFW (2007) Everyman’s derivation of the theory of atoms in molecules. J Phys Chem A 111:7966–7972

Schrödinger E (1926) Quantisierung als Eigenwertproblem. Ann Phys 81:109–139

Herzberg G (1950) Molecular spectra and molecular structure, vol I. Van Nostrand, New York

Politzer P (1965) The electrostatic forces within the carbon monoxide molecule. J Phys Chem 69:2132–2134

Bader RFW, Carroll MT, Cheeseman JR, Chang C (1987) Properties of atoms in molecules. J Am Chem Soc 109:7968–7979

Fernández Rico J, López R, Ema I, Ramírez G (2002) Density and binding forces in diatomics. J Chem Phys 116:1788–1799

Deb BM (1973) The force concept in chemistry. Rev Mod Phys 45:22–43

Hirshfeld FL, Rzotkiewicz S (1974) Electrostatic binding in the first-row AH and A2 diatomic molecules. Mol Phys 27:1319–1343

Silberbach H (1991) The electron density and chemical bonding: a reinvestigation of Berlin’s theorem. J Chem Phys 94:2977–2985

Bader RFW (2010) Definition of molecular structure: by choice or by appeal to observation. J Phys Chem A 114:7431–7444

Hurley AC (1962) Virial theorem for polyatomic molecules. J Chem Phys 37:449–450

Bader RFW (1960) The use of the Hellmann-Feynman theorem to calculate molecular energies. Can J Chem 38:2117–2127

Politzer P, Parr RG (1974) Some new energy formulas for atoms and molecules. J Chem Phys 61:4258–4262

Politzer P (2004) Atomic and molecular energies as functionals of the electrostatic potential. Theor Chem Acc 111:395–399

Hohenberg P, Kohn W (1964) Inhomogeneous electron gas. Phys Rev 136:B864–B871

Levy M, Clement SC, Tal Y (1981) Correlation energies from Hartree-Fock electrostatic potentials at nuclei and generation of electrostatic potentials from asymptotic and zero-order information. In: Politzer P, Truhlar DG (eds) Chemical applications of atomic and molecular electrostatic potentials. Plenum Press, New York, pp 29–50

Politzer P (1987) Atomic and molecular energy and energy difference formulas based upon electrostatic potentials at nuclei. In: March NH, Deb BM (eds) The single-particle density in physics and chemistry. Academic Press, San Diego, pp 59–72

Politzer P, Murray JS (2002) The fundamental nature and role of the electrostatic potential in atoms and molecules. Theor Chem Acc 108:134–142

Møller C, Plesset MS (1934) Note on an approximate treatment for many-electron systems. Phys Rev 46:618–622

Cohen M (1979) On the systematic linear variation of atomic expectation values. J Phys B 12:L219–L221

Levy M, Tal Y (1980) Atomic binding energies from fundamental theorems involving the electron density, <r-1>, and the Z-1 perturbation expansion. J Chem Phys 72:3416–3417

Politzer P, Murray JS (2021) Electrostatic potentials at the nuclei of atoms and molecules. Theor Chem Acc 140:7

Eisenschitz R, London F (1930) Über das Verhältnis der van der Waalsschen Kräfte zu den homöopolaren Bindungskräften. Z Phys 60:491–527

London F (1937) The general theory of molecular forces. Trans Faraday Soc 33:8–26

Bader RFW, Chandra AK (1968) A view of bond formation in terms of molecular charge distributions. Can J Chem 46:953–966

Salem L, Wilson EB Jr (1962) Reliability of the Hellmann-Feynman theorem for approximate charge densities. J Chem Phys 36:3421–3427

Hirschfelder JO, Eliason MA (1967) Electrostatic Hellmann-Feynman theorem applied to the long-range interaction of two hydrogen atoms. J Chem Phys 47:1164–1169

Deb BM (1981) Miscellaneous applications of the Hellmann-Feynman theorem. In: Deb BM (ed) The force concept in chemistry. Van Nostrand Reinhold, New York, pp 388–417

Hunt KLC (1990) Dispersion dipoles and dispersion forces: proof of Feynman’s “conjecture” and generalization to interacting molecules of arbitrary symmetry. J Chem Phys 92:1180–1187

Thonhauser T, Cooper VR, Li S, Puzder A, Hyldgaard P, Langreth DC (2007) Van der Waals density functional: self-consistent potential and the nature of the van der Waals bond. Phys Rev B 76:125112

Clark T (2017) Halogen bonds and σ-holes. Faraday Discuss 203:9–27

Murray JS, Zadeh DH, Lane P, Politzer P (2019) The role of “excluded” electronic charge in noncovalent interactions. Mol Phys 117:2260–2266

Bader RFW (2009) Bond paths are not chemical bonds. J Phys Chem A 113:10391–10396

Ruedenberg K (1962) The physical nature of the chemical bond. Rev Mod Phys 34:326–376

Gordon MS, Jensen JH (2000) Perspective on “the physical nature of the chemical bond.” Theor Chem Acc 103:248–251

Ruedenberg K, Schmidt MW (2009) Physical understanding through variational reasoning: electron sharing and covalent bonding. J Phys Chem A 113:1954–1968

Jacobsen H (2009) Chemical bonding in view of electron charge density and kinetic energy density descriptors. J Comput Chem 30:1093–1102

Zhao L, Schwarz WHE, Frenking G (2019) The Lewis electron-pair bonding model: the physical background, one century later. Nature Rev Chem 3:35–47

Author information

Contributions

Calculations: This is a conceptual paper. Writing: P. Politzer, 75% and J. S. Murray, 25%. Draft revision: P. Politzer, 50% and J. S. Murray, 50%.

Ethics declarations

Ethics approval

None.

Consent to participate

Both P. Politzer and J. S. Murray agreed on preparing this paper.

Consent for publication

Both P. Politzer and J. S. Murray consent to the publication of this paper if the journal accepts it.

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Politzer, P., Murray, J.S. The conceptual power of the Hellmann–Feynman theorem. Struct Chem 34, 17–21 (2023). https://doi.org/10.1007/s11224-022-01961-9

DOI: https://doi.org/10.1007/s11224-022-01961-9